
Artificial Intelligence 8. Supervised and unsupervised learning
Yoshimasa Tsuruoka, Japan Advanced Institute of Science and Technology (JAIST)

Outline
• Supervised learning
• Naive Bayes classifier
• Unsupervised learning
• Clustering
• Lecture slides: http://www.jaist.ac.jp/~tsuruoka/lectures/

Supervised and unsupervised learning
• Supervised learning
  – Each instance is assigned a label
  – Classification, regression
  – Training data need to be created manually
• Unsupervised learning
  – Each instance is just a vector of attribute values
  – Clustering
  – Pattern mining

Naive Bayes classifier
Chapter 6.9 of Mitchell, T., Machine Learning (1997)
• Naive Bayes classifier
  – Outputs probabilities
  – Easy to implement
  – Assumes that features are conditionally independent given the class
  – Efficient learning and classification

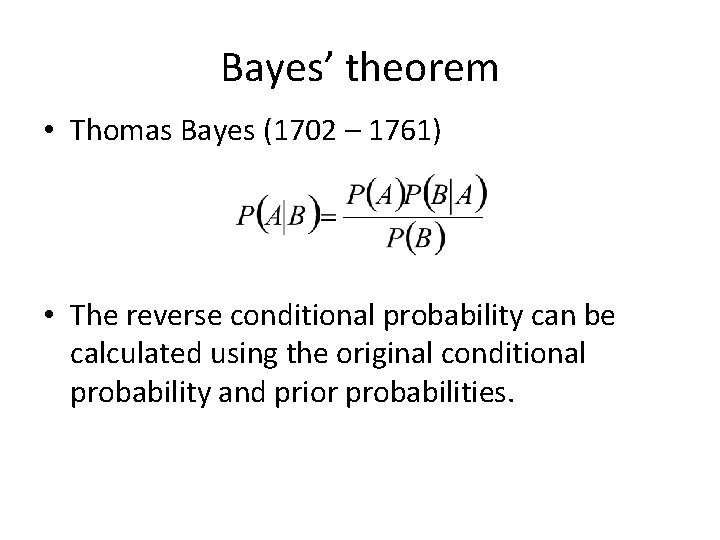

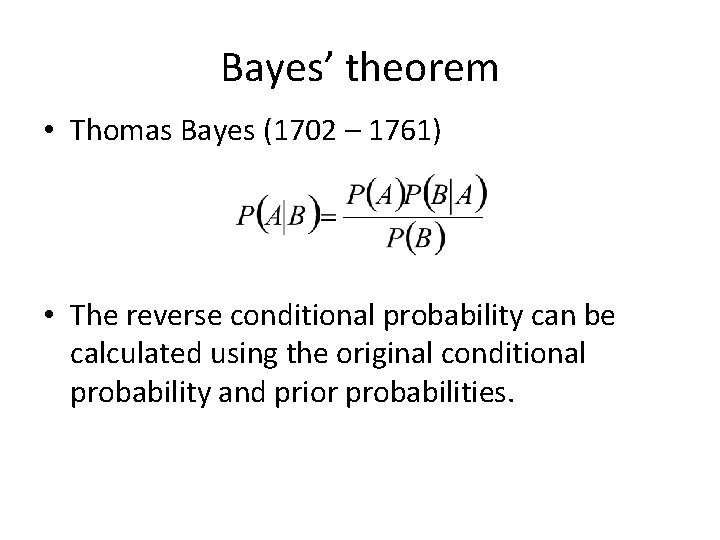

Bayes’ theorem
• Thomas Bayes (1702–1761)
• The reverse conditional probability can be calculated from the original conditional probability and the prior probabilities:
$$P(h \mid D) = \frac{P(D \mid h)\, P(h)}{P(D)}$$

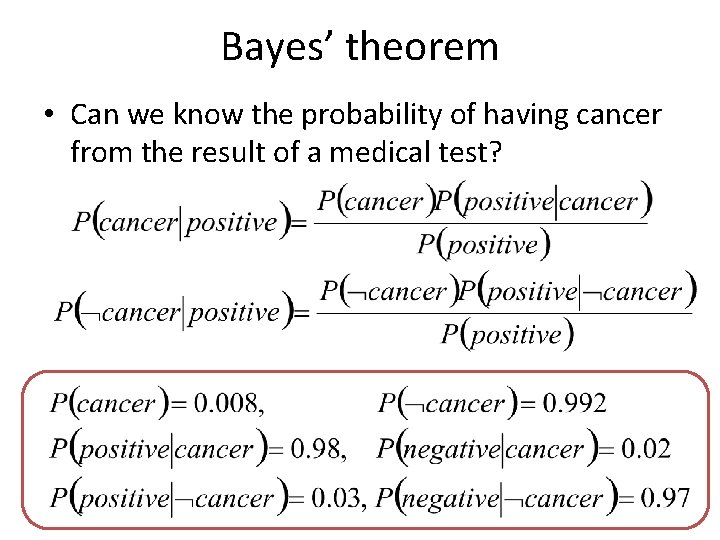

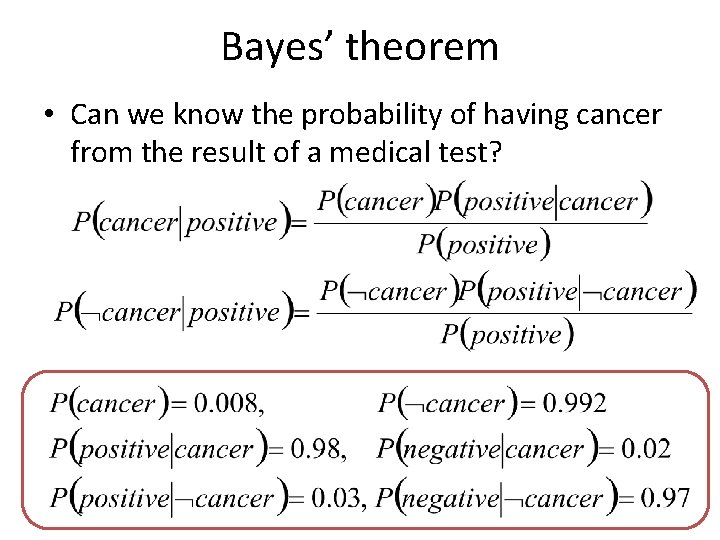

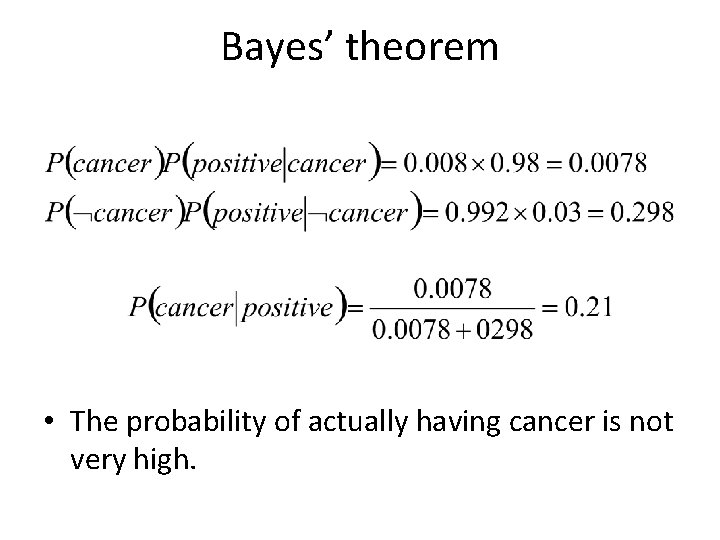

Bayes’ theorem
• Can we determine the probability of having cancer from the result of a medical test?

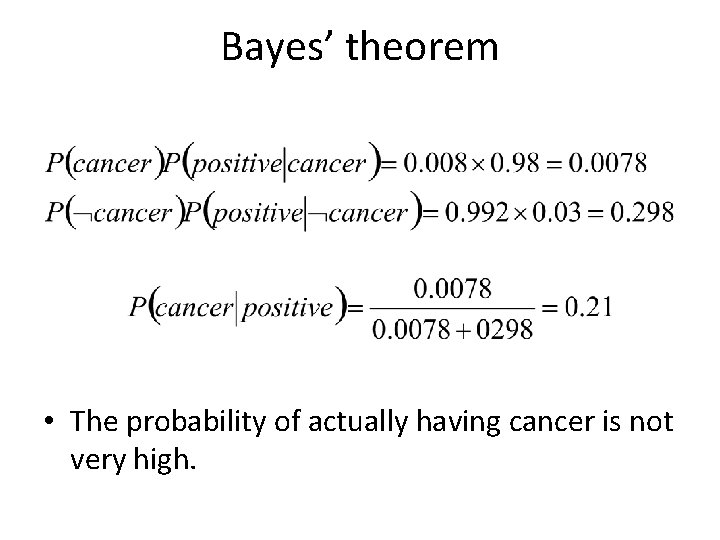

Bayes’ theorem
• Even after a positive test result, the probability of actually having cancer is not very high, because the prior probability of cancer is low.
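To make the arithmetic concrete, here is a minimal Python sketch of Bayes’ theorem applied to a positive test result. The numbers are an assumption: they are taken from the cancer example in Mitchell (1997, Section 6.2) and may differ from the figures on the slide. The helper name `posterior` is illustrative only.

```python
def posterior(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Bayes' theorem: P(h | D) = P(D | h) P(h) / P(D), where P(D) = sum_h P(D | h) P(h)."""
    joint = {h: likelihood[h] * prior[h] for h in prior}  # P(D | h) P(h)
    evidence = sum(joint.values())                         # P(D)
    return {h: p / evidence for h, p in joint.items()}

# Assumed numbers, from Mitchell's cancer example (not necessarily the slide's)
prior = {"cancer": 0.008, "no_cancer": 0.992}
# P(test = positive | h)
likelihood_positive = {"cancer": 0.98, "no_cancer": 0.03}

post = posterior(prior, likelihood_positive)
print(f"P(cancer | +) = {post['cancer']:.3f}")
```

With these numbers the posterior comes out at about 0.21: the test itself is accurate, but because P(cancer) is only 0.008, the false positives from the much larger healthy group (0.03 × 0.992) outweigh the true positives (0.98 × 0.008).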

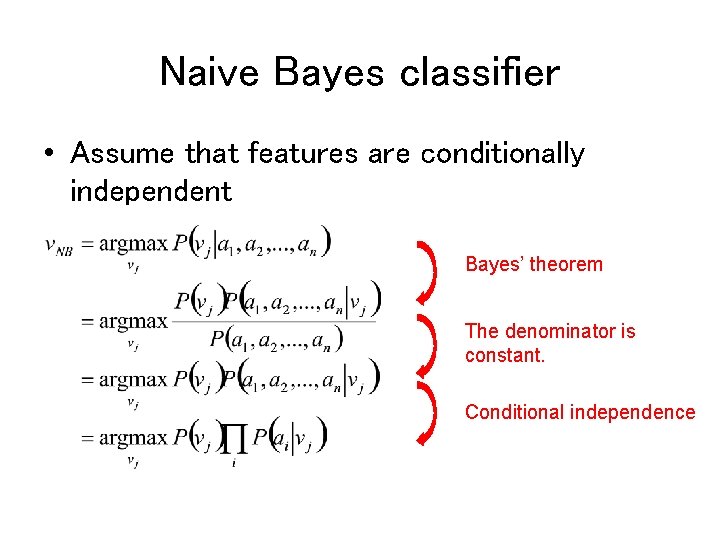

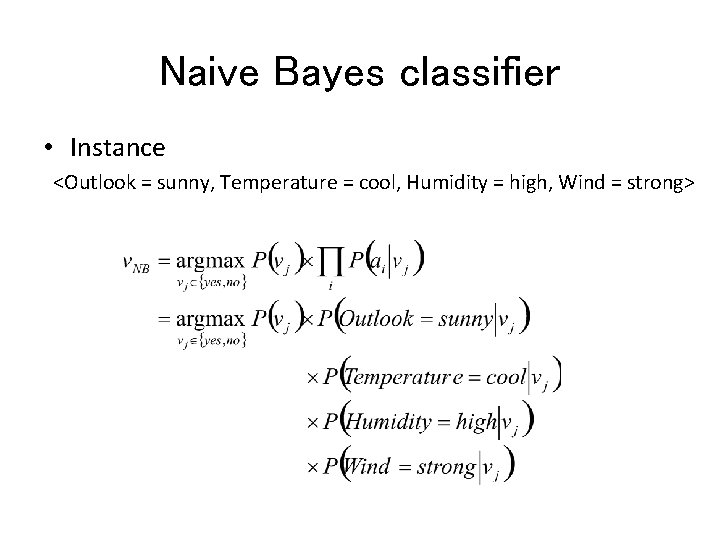

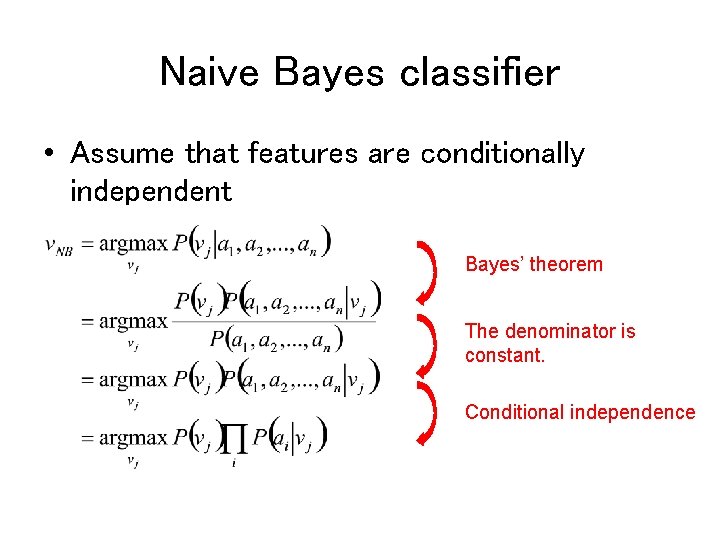

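Naive Bayes classifier
• Assume that features are conditionally independent given the class
$$v_{MAP} = \arg\max_{v_j \in V} P(v_j \mid a_1, \ldots, a_n) = \arg\max_{v_j \in V} \frac{P(a_1, \ldots, a_n \mid v_j)\, P(v_j)}{P(a_1, \ldots, a_n)} \quad \text{(Bayes' theorem)}$$
$$= \arg\max_{v_j \in V} P(a_1, \ldots, a_n \mid v_j)\, P(v_j) \quad \text{(the denominator is constant)}$$
$$v_{NB} = \arg\max_{v_j \in V} P(v_j) \prod_i P(a_i \mid v_j) \quad \text{(conditional independence)}$$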

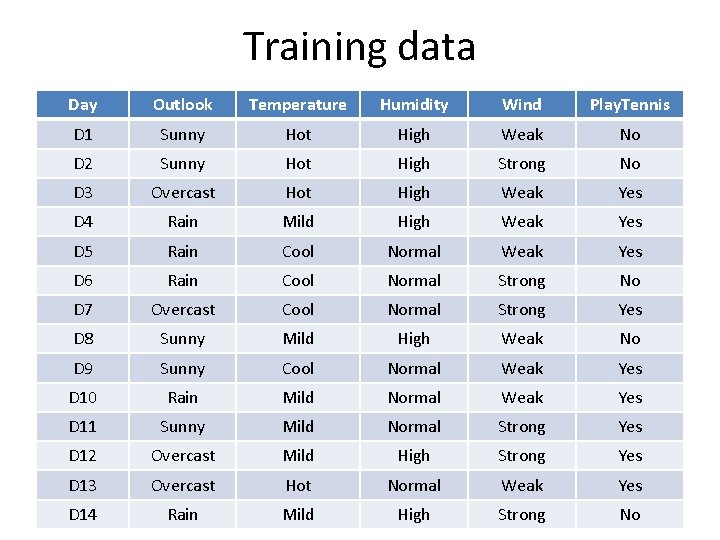

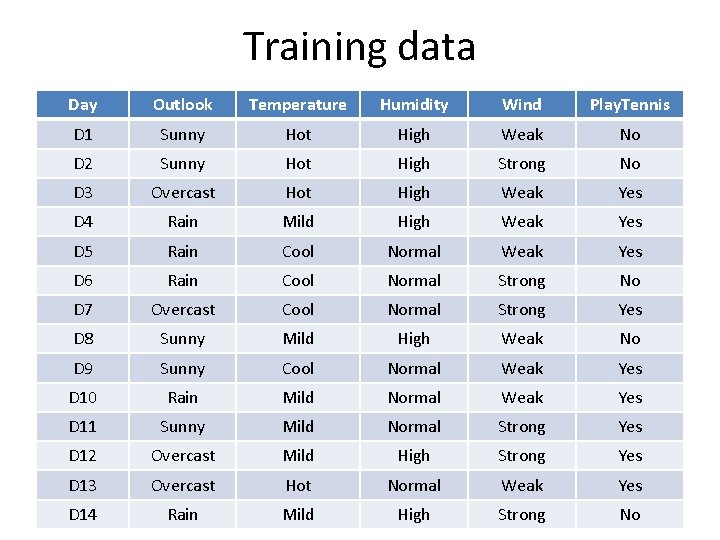

Training data

| Day | Outlook | Temperature | Humidity | Wind | PlayTennis |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

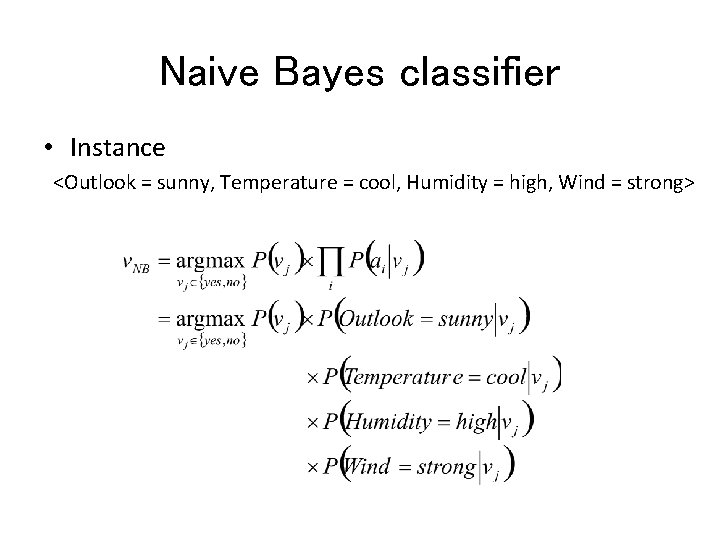

Naive Bayes classifier
• Instance to classify: <Outlook = sunny, Temperature = cool, Humidity = high, Wind = strong>

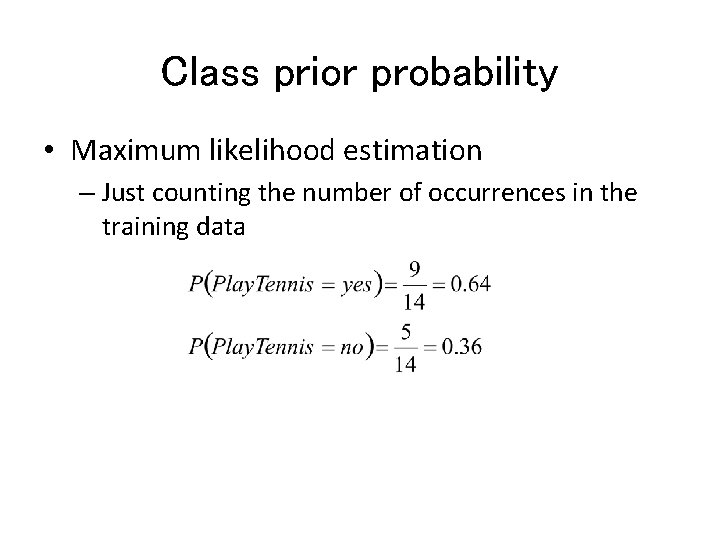

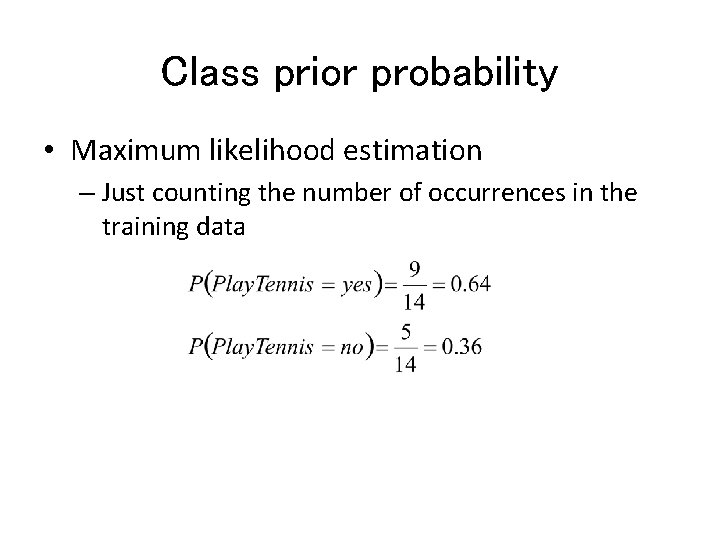

Class prior probability
• Maximum likelihood estimation
  – Simply count the occurrences of each class in the training data, e.g., P(PlayTennis = yes) = 9/14
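A minimal sketch of this counting step, assuming the PlayTennis labels are copied in day order (D1 to D14) from the training data table; `class_priors` is a hypothetical helper name, and `Fraction` is used only to keep the estimates exact.

```python
from collections import Counter
from fractions import Fraction

# PlayTennis labels for days D1..D14, copied from the training data table
labels = ["No", "No", "Yes", "Yes", "Yes", "No", "Yes",
          "No", "Yes", "Yes", "Yes", "Yes", "Yes", "No"]

def class_priors(labels):
    """Maximum likelihood estimate of P(v): (# instances of class v) / (# instances)."""
    counts = Counter(labels)
    n = len(labels)
    return {v: Fraction(c, n) for v, c in counts.items()}

priors = class_priors(labels)
print(priors)
```

This yields 9/14 for Yes and 5/14 for No.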

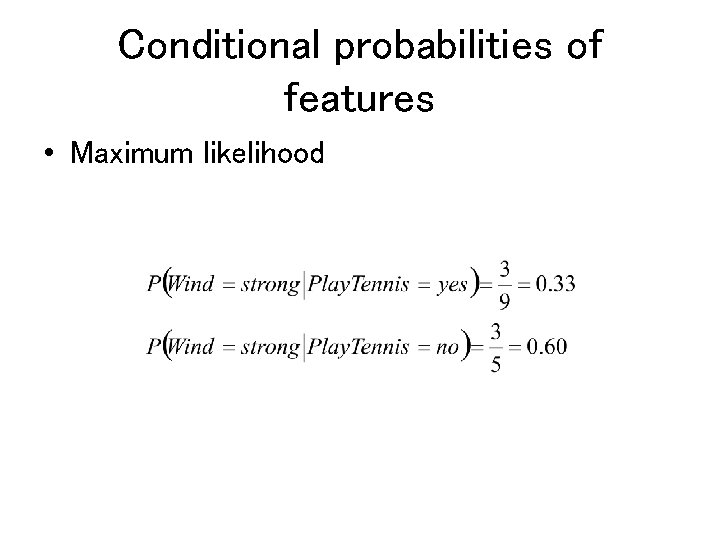

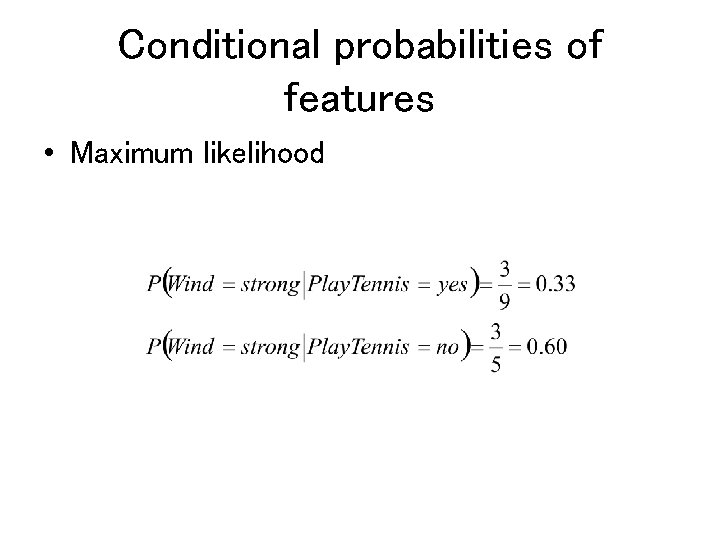

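Conditional probabilities of features
• Maximum likelihood estimation: $P(a_i \mid v_j) = n_c / n$, where $n$ is the number of training instances of class $v_j$ and $n_c$ is the number of those with feature value $a_i$
  – e.g., P(Wind = strong | PlayTennis = yes) = 3/9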
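The same counting idea applied to one feature. This sketch assumes the (Wind, PlayTennis) pairs are copied in day order from the training data table; `conditional_probs` is a hypothetical helper name.

```python
from collections import Counter
from fractions import Fraction

# (Wind, PlayTennis) for days D1..D14, copied from the training data table
wind_and_label = [
    ("Weak", "No"), ("Strong", "No"), ("Weak", "Yes"), ("Weak", "Yes"),
    ("Weak", "Yes"), ("Strong", "No"), ("Strong", "Yes"), ("Weak", "No"),
    ("Weak", "Yes"), ("Weak", "Yes"), ("Strong", "Yes"), ("Strong", "Yes"),
    ("Weak", "Yes"), ("Strong", "No"),
]

def conditional_probs(pairs):
    """MLE of P(a | v) = n_c / n: n_c counts (value a, class v) pairs, n counts class v."""
    class_counts = Counter(v for _, v in pairs)
    joint_counts = Counter(pairs)
    return {(a, v): Fraction(c, class_counts[v]) for (a, v), c in joint_counts.items()}

p = conditional_probs(wind_and_label)
print(p[("Strong", "Yes")], p[("Strong", "No")])  # prints: 1/3 3/5
```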

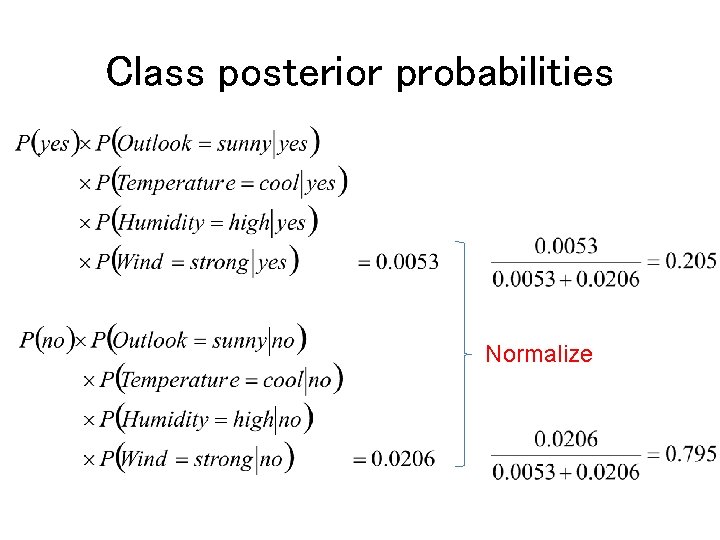

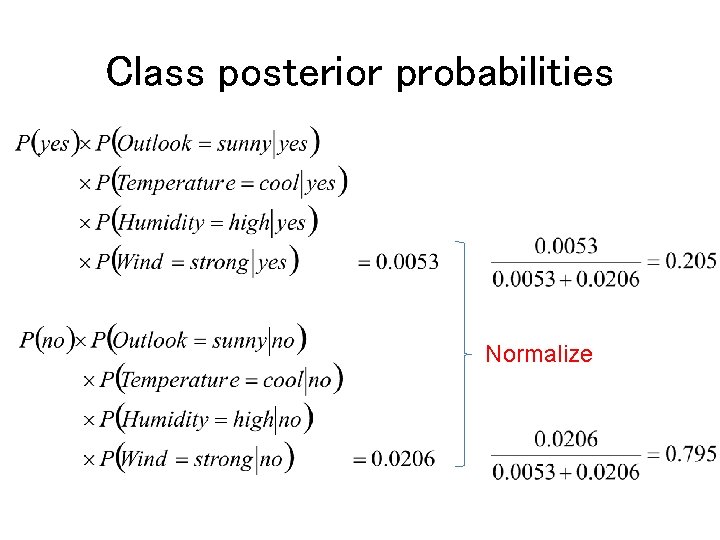

Class posterior probabilities
• Normalize the products $P(v_j) \prod_i P(a_i \mid v_j)$ so that they sum to one over all classes
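Putting these steps together, the following sketch trains the classifier on the PlayTennis table by maximum likelihood (no smoothing) and computes the normalized posterior for the instance <sunny, cool, high, strong>. It is an illustrative implementation, not code from the lecture; `train`, `posterior`, and `DATA` are hypothetical names.

```python
from collections import Counter
from fractions import Fraction

# (Outlook, Temperature, Humidity, Wind) -> PlayTennis, days D1..D14 of the table
DATA = [
    (("Sunny", "Hot", "High", "Weak"), "No"),
    (("Sunny", "Hot", "High", "Strong"), "No"),
    (("Overcast", "Hot", "High", "Weak"), "Yes"),
    (("Rain", "Mild", "High", "Weak"), "Yes"),
    (("Rain", "Cool", "Normal", "Weak"), "Yes"),
    (("Rain", "Cool", "Normal", "Strong"), "No"),
    (("Overcast", "Cool", "Normal", "Strong"), "Yes"),
    (("Sunny", "Mild", "High", "Weak"), "No"),
    (("Sunny", "Cool", "Normal", "Weak"), "Yes"),
    (("Rain", "Mild", "Normal", "Weak"), "Yes"),
    (("Sunny", "Mild", "Normal", "Strong"), "Yes"),
    (("Overcast", "Mild", "High", "Strong"), "Yes"),
    (("Overcast", "Hot", "Normal", "Weak"), "Yes"),
    (("Rain", "Mild", "High", "Strong"), "No"),
]

def train(data):
    """Count classes and (feature position, value, class) triples."""
    class_counts = Counter(label for _, label in data)
    feature_counts = Counter()
    for features, label in data:
        for i, value in enumerate(features):
            feature_counts[(i, value, label)] += 1
    return class_counts, feature_counts

def posterior(instance, class_counts, feature_counts):
    """P(v) * prod_i P(a_i | v) for each class v, normalized to sum to 1."""
    n = sum(class_counts.values())
    scores = {}
    for v, n_v in class_counts.items():
        score = Fraction(n_v, n)  # class prior P(v)
        for i, value in enumerate(instance):
            score *= Fraction(feature_counts[(i, value, v)], n_v)  # P(a_i | v), MLE
        scores[v] = score
    total = sum(scores.values())
    return {v: s / total for v, s in scores.items()}

class_counts, feature_counts = train(DATA)
post = posterior(("Sunny", "Cool", "High", "Strong"), class_counts, feature_counts)
for v, prob in post.items():
    print(v, float(prob))
```

By hand, the unnormalized scores are 9/14 · 2/9 · 3/9 · 3/9 · 3/9 = 1/189 for Yes and 5/14 · 3/5 · 1/5 · 4/5 · 3/5 = 18/875 for No, so after normalization P(No | x) = 486/611 ≈ 0.795 and the classifier predicts PlayTennis = No.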

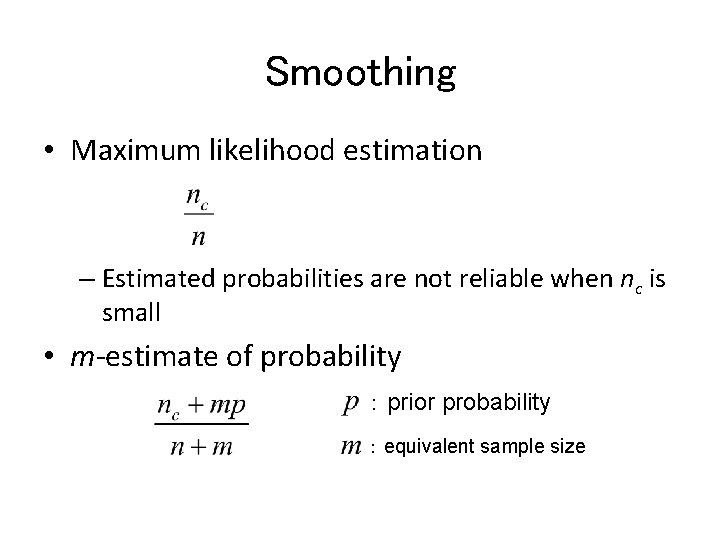

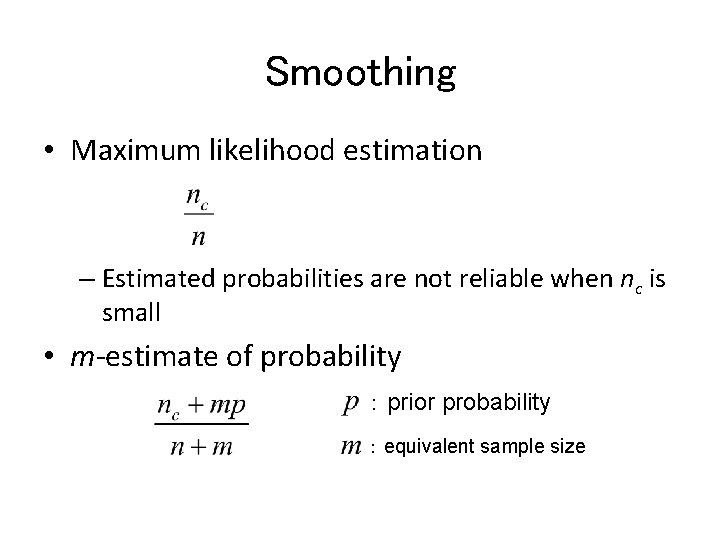

Smoothing
• Maximum likelihood estimation
  – Estimated probabilities are not reliable when $n_c$ is small (a count of zero makes the whole product zero)
• m-estimate of probability
$$\frac{n_c + m\,p}{n + m}$$
  – $p$: prior probability
  – $m$: equivalent sample size
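A sketch of the m-estimate, illustrated on a zero count from the PlayTennis table: Outlook = overcast never occurs with PlayTennis = no ($n_c = 0$, $n = 5$). The choice of a uniform prior p = 1/3 over the three Outlook values and m = 3 is an assumption made for illustration; `m_estimate` is a hypothetical helper name.

```python
from fractions import Fraction

def m_estimate(n_c: int, n: int, p: Fraction, m: int) -> Fraction:
    """m-estimate of probability: (n_c + m * p) / (n + m).

    n_c: number of instances of the class that have the feature value
    n:   number of instances of the class
    p:   prior estimate of the probability
    m:   equivalent sample size (how much weight the prior gets)
    """
    return (n_c + m * p) / (n + m)

mle = Fraction(0, 5)                               # MLE: zero, which zeroes the product
smoothed = m_estimate(0, 5, Fraction(1, 3), 3)     # assumed p = 1/3, m = 3
print(mle, smoothed)  # prints: 0 1/8
```

Setting m = 0 recovers the maximum likelihood estimate n_c / n; larger m pulls the estimate toward p.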

Text classification with a Naive Bayes classifier
• Text classification
  – Automatic classification of news articles
  – Spam filtering
  – Sentiment analysis of product reviews
  – etc.

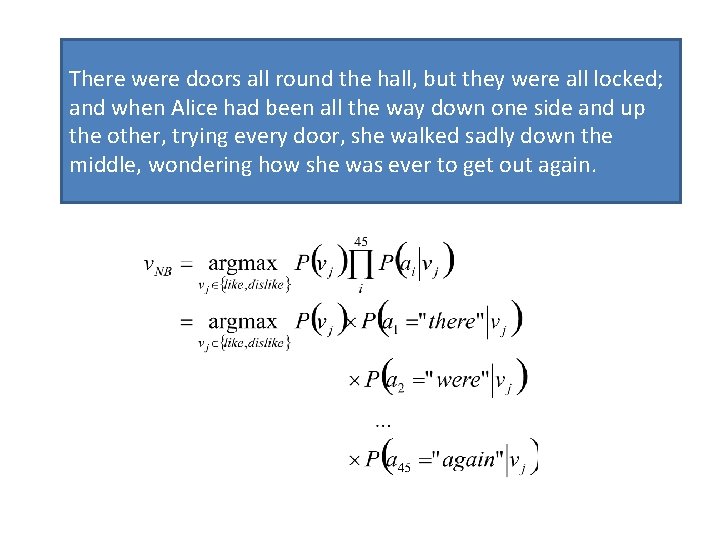

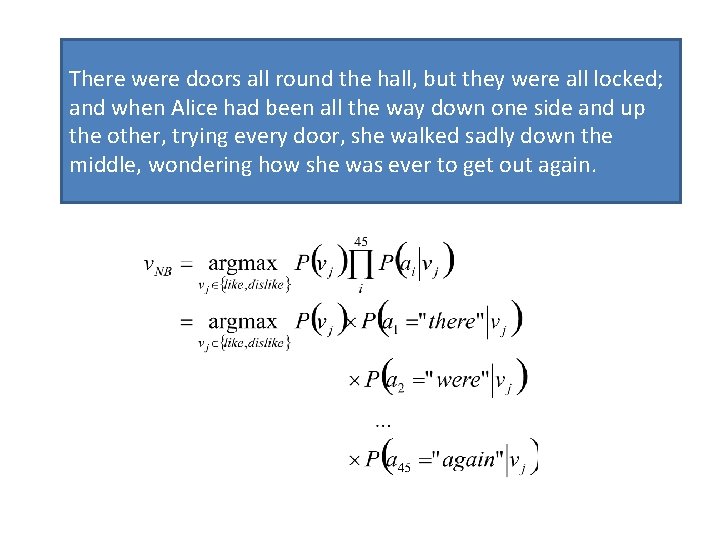

There were doors all round the hall, but they were all locked; and when Alice had been all the way down one side and up the other, trying every door, she walked sadly down the middle, wondering how she was ever to get out again.

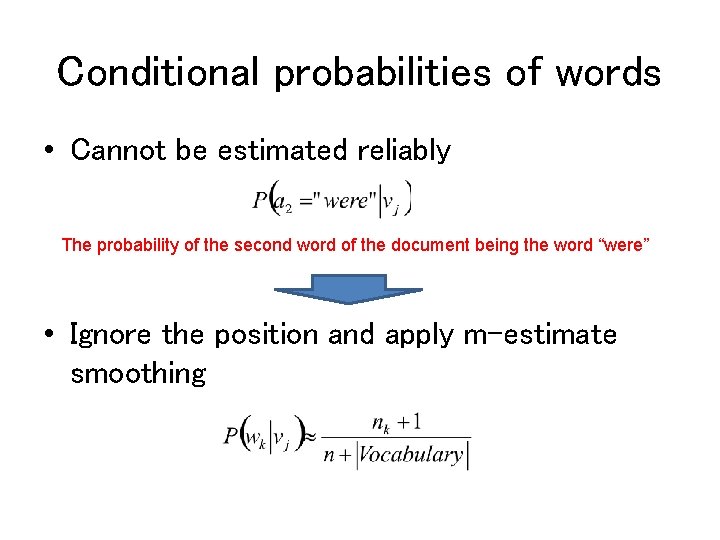

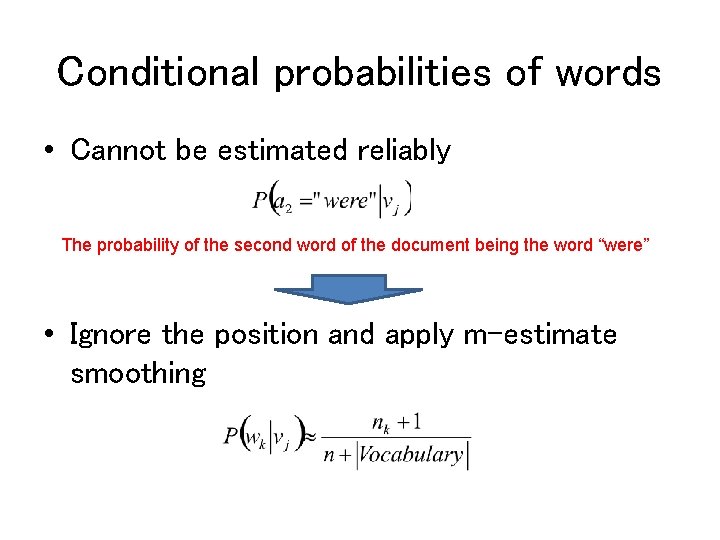

Conditional probabilities of words
• Position-specific probabilities cannot be estimated reliably
  – e.g., $P(a_2 = \text{were} \mid v_j)$: the probability that the second word of the document is the word “were”
• Ignore the position and apply m-estimate smoothing
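A minimal bag-of-words sketch of this idea. Following the choice described in Mitchell (1997), it assumes a uniform prior p = 1/|Vocabulary| and m = |Vocabulary|, which turns the m-estimate into (n_k + 1) / (n + |Vocabulary|). The function names and the toy spam data are hypothetical, for illustration only.

```python
import math
from collections import Counter

def train_text_nb(docs):
    """docs: list of (words, label) pairs. Word positions are ignored (bag of words)."""
    vocab = {w for words, _ in docs for w in words}
    label_counts = Counter(label for _, label in docs)
    priors = {v: c / len(docs) for v, c in label_counts.items()}
    word_probs = {}
    for v in label_counts:
        # Pool all words from the documents of class v
        counts = Counter(w for words, label in docs if label == v for w in words)
        n = sum(counts.values())
        # m-estimate with p = 1/|vocab| and m = |vocab|: (n_k + 1) / (n + |vocab|)
        word_probs[v] = {w: (counts[w] + 1) / (n + len(vocab)) for w in vocab}
    return priors, word_probs, vocab

def classify(words, priors, word_probs, vocab):
    """argmax over classes of log P(v) + sum of log P(w | v); unseen words are skipped."""
    def log_score(v):
        return math.log(priors[v]) + sum(
            math.log(word_probs[v][w]) for w in words if w in vocab)
    return max(priors, key=log_score)

# Hypothetical toy spam-filtering data
docs = [
    ("win money now".split(), "spam"),
    ("cheap money offer".split(), "spam"),
    ("meeting schedule for monday".split(), "ham"),
    ("lunch on monday".split(), "ham"),
]
priors, word_probs, vocab = train_text_nb(docs)
print(classify("money offer".split(), priors, word_probs, vocab))     # prints: spam
print(classify("monday meeting".split(), priors, word_probs, vocab))  # prints: ham
```

Summing log probabilities instead of multiplying probabilities is a design choice: for a document as long as the example passage, the plain product of many small per-word probabilities can underflow to zero in floating point.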

Unsupervised learning
• No “correct” output for each instance
• Clustering
  – Merging “similar” instances into a group
  – Hierarchical clustering, k-means, etc.
• Pattern mining
  – Discovering frequent patterns in a large amount of data
  – Association rules, graph mining, etc.

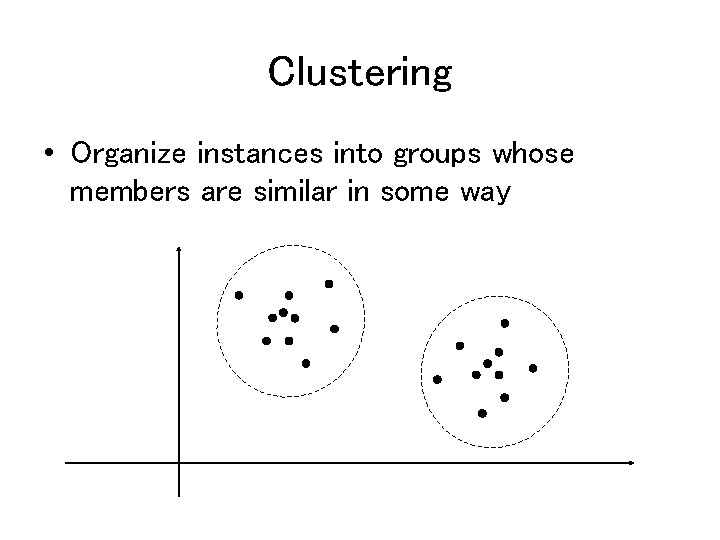

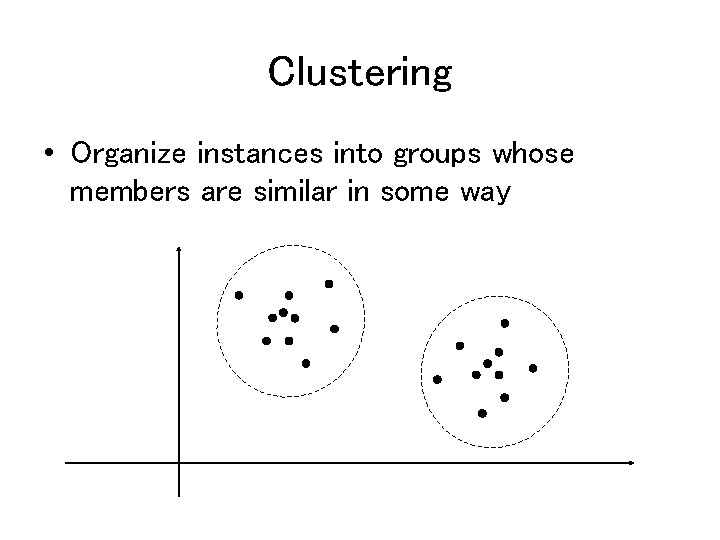

Clustering
• Organize instances into groups whose members are similar in some way

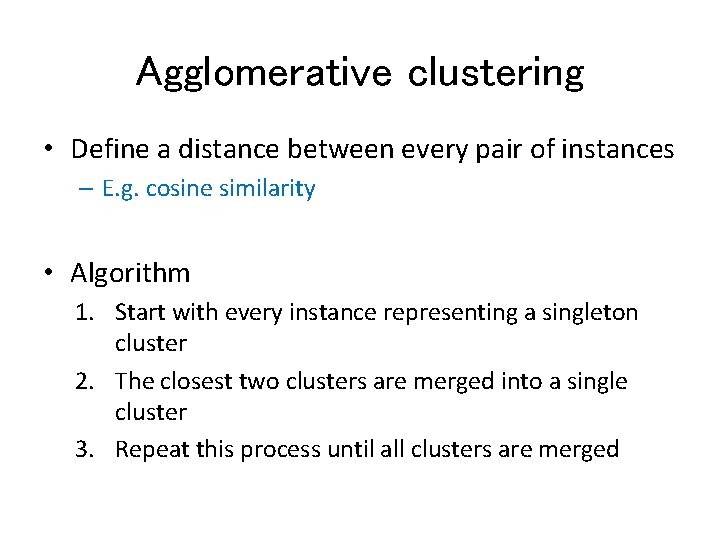

Agglomerative clustering
• Define a distance (or similarity) between every pair of instances
  – e.g., cosine similarity
• Algorithm
  1. Start with every instance as a singleton cluster
  2. Merge the two closest clusters into a single cluster
  3. Repeat step 2 until all instances are merged into one cluster
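A naive sketch of the three steps, using 1 − cosine similarity as the distance between instances. The slide does not fix how the distance between two clusters is computed at this point; this sketch assumes single link (the distance between the closest members of the two clusters). Function names and the sample points are hypothetical.

```python
import math

def cosine_distance(x, y):
    """1 - cosine similarity (assumes non-zero vectors)."""
    dot = sum(a * b for a, b in zip(x, y))
    norms = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return 1.0 - dot / norms

def agglomerative(points, dist=cosine_distance):
    """Return the merges in order, each as a pair of tuples of point indices.

    Assumption: cluster distance = single link (closest pair of members).
    """
    clusters = [(i,) for i in range(len(points))]  # 1. every instance is a singleton cluster
    merges = []
    while len(clusters) > 1:                       # 3. repeat until one cluster remains
        best = None
        for i in range(len(clusters)):             # 2. find the two closest clusters
            for j in range(i + 1, len(clusters)):
                d = min(dist(points[a], points[b])
                        for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        merges.append((clusters[i], clusters[j]))
        merged = clusters[i] + clusters[j]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (i, j)] + [merged]
    return merges

points = [(1.0, 0.0), (1.0, 0.1), (0.0, 1.0), (0.3, 1.0)]
merges = agglomerative(points)
for a, b in merges:
    print("merge", a, "+", b)
```

Each merge corresponds to one internal node of the dendrogram. Recomputing every cluster distance in every round makes this version cubic in the number of instances, which is fine for illustration but not for large data.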

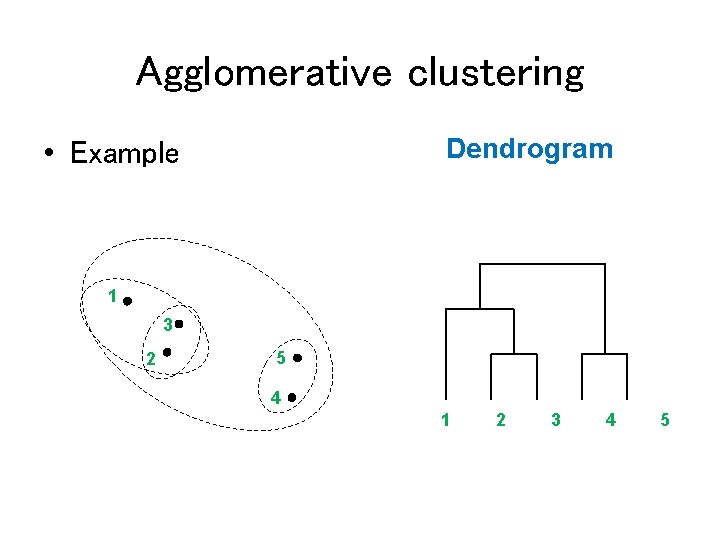

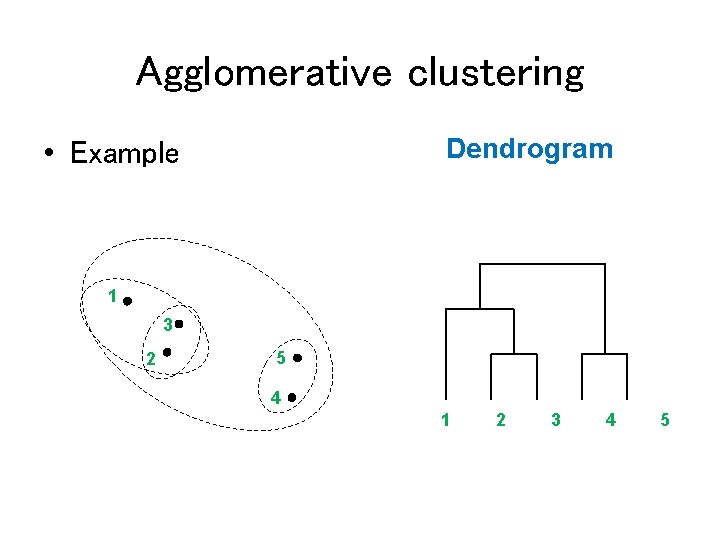

Agglomerative clustering: dendrogram
• Example (figure: dendrogram over five instances, labeled 1 to 5)

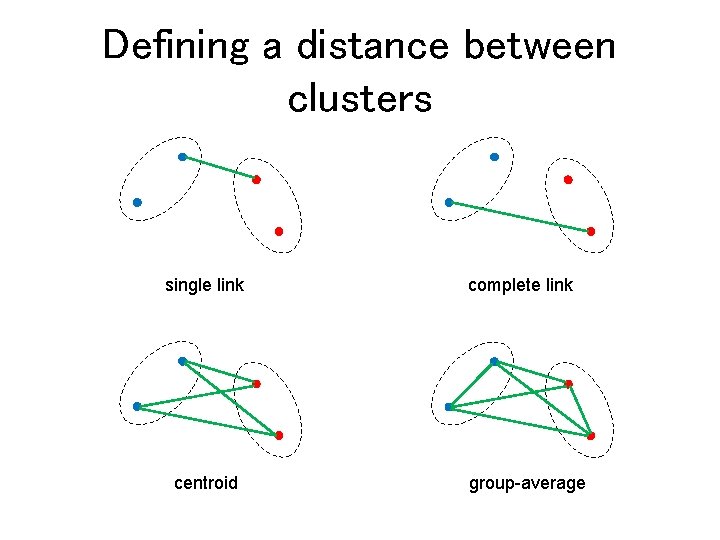

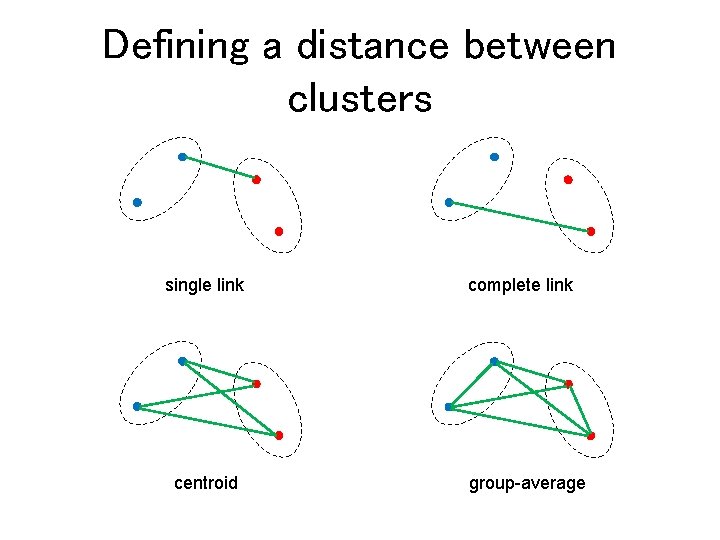

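Defining a distance between clusters
• Single link: distance between the closest pair of members
• Centroid: distance between the cluster centroids
• Complete link: distance between the farthest pair of members
• Group-average: average distance between members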
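The four cluster distances can be sketched as plain functions over lists of points, here with Euclidean distance between instances (an assumption; any instance distance could be plugged in). Note that the group-average version below averages only over cross-cluster pairs; some texts define group-average over all pairs in the merged cluster instead, so treat this as one variant. Function names and the sample clusters are hypothetical.

```python
import math
from itertools import product

def single_link(A, B, d=math.dist):
    """Distance between the closest pair of members."""
    return min(d(a, b) for a, b in product(A, B))

def complete_link(A, B, d=math.dist):
    """Distance between the farthest pair of members."""
    return max(d(a, b) for a, b in product(A, B))

def group_average(A, B, d=math.dist):
    """Average distance over all cross-cluster pairs (one variant of group-average)."""
    return sum(d(a, b) for a, b in product(A, B)) / (len(A) * len(B))

def centroid_link(A, B, d=math.dist):
    """Distance between the cluster centroids (mean vectors)."""
    ca = [sum(xs) / len(A) for xs in zip(*A)]
    cb = [sum(xs) / len(B) for xs in zip(*B)]
    return d(ca, cb)

A = [(0.0, 0.0), (1.0, 0.0)]
B = [(3.0, 0.0), (5.0, 0.0)]
for f in (single_link, complete_link, group_average, centroid_link):
    print(f.__name__, f(A, B))
# single_link 2.0, complete_link 5.0, group_average 3.5, centroid_link 3.5
```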

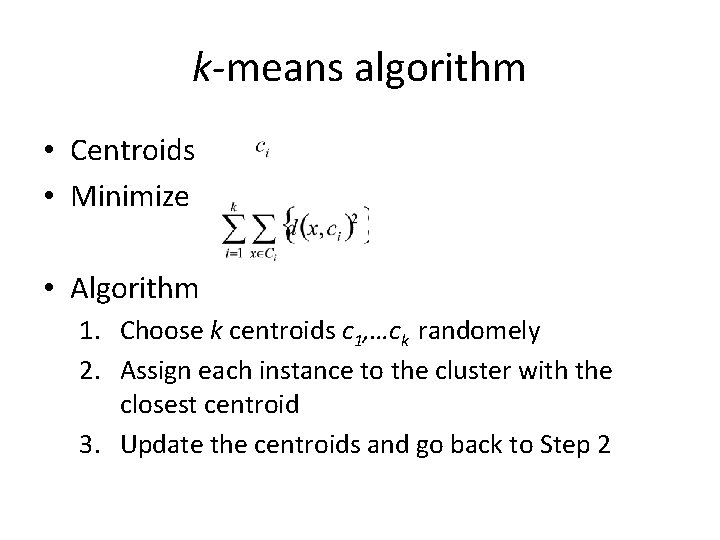

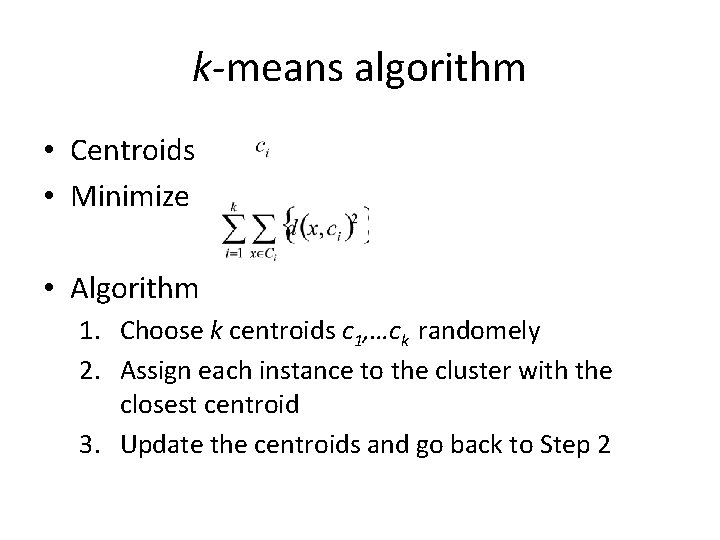

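k-means algorithm
• Centroids
$$c_j = \frac{1}{|C_j|} \sum_{x \in C_j} x$$
• Minimize
$$\sum_{j=1}^{k} \sum_{x \in C_j} \lVert x - c_j \rVert^2$$
• Algorithm
  1. Choose k centroids c_1, …, c_k randomly
  2. Assign each instance to the cluster with the closest centroid
  3. Update the centroids and go back to Step 2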
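A minimal sketch of the k-means loop in plain Python, using squared Euclidean distance. Three details are assumptions not fixed by the slide: the initial centroids are k distinct instances drawn at random, the loop stops when the centroids no longer change (or after `n_iter` rounds), and a centroid whose cluster becomes empty stays where it is. The function name and sample points are hypothetical.

```python
import random

def kmeans(points, k, n_iter=100, seed=0):
    """Return (centroids, clusters); clusters come from the last assignment step."""
    rng = random.Random(seed)
    centroids = [tuple(c) for c in rng.sample(points, k)]  # Step 1: random initial centroids
    clusters = []
    for _ in range(n_iter):
        # Step 2: assign each instance to the cluster with the closest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k),
                          key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])))
            clusters[nearest].append(p)
        # Step 3: move each centroid to the mean of its cluster
        # (assumption: an empty cluster keeps its old centroid)
        new_centroids = [
            tuple(sum(xs) / len(cl) for xs in zip(*cl)) if cl else centroids[j]
            for j, cl in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: assignments will not change
            break
        centroids = new_centroids
    return centroids, clusters

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
print(clusters)
```

Each assignment step and each centroid update can only lower (or keep) the objective above, which is why the loop settles, though possibly in a local minimum that depends on the random initial centroids.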