
Artificial Intelligence 7. Decision trees

Yoshimasa Tsuruoka, Japan Advanced Institute of Science and Technology (JAIST)

Outline
- What is a decision tree?
- How to build a decision tree
- Entropy
- Information gain
- Overfitting
- Generalization performance
- Pruning
- Lecture slides: http://www.jaist.ac.jp/~tsuruoka/lectures/

Decision trees (Chapter 3 of Mitchell, T., Machine Learning, 1997)
- Decision trees
  - Represent a disjunction of conjunctions
  - Have been successfully applied to a broad range of tasks
    - Diagnosing medical cases
    - Assessing the credit risk of loan applications
- Nice characteristics
  - Understandable to humans
  - Robust to noise

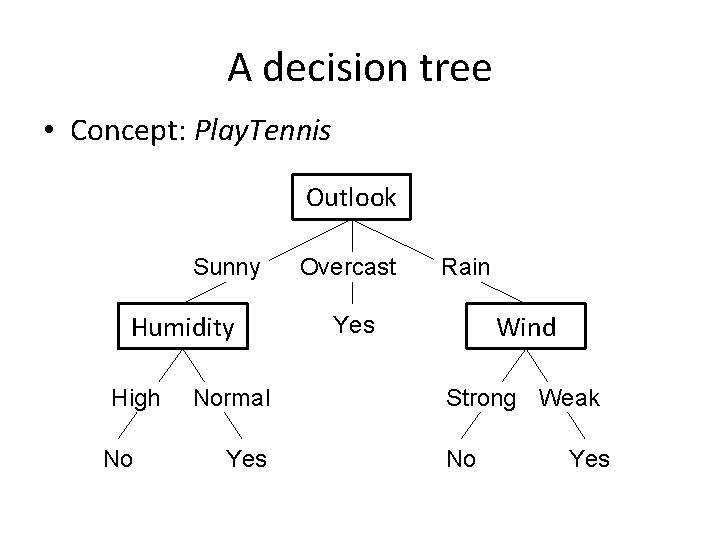

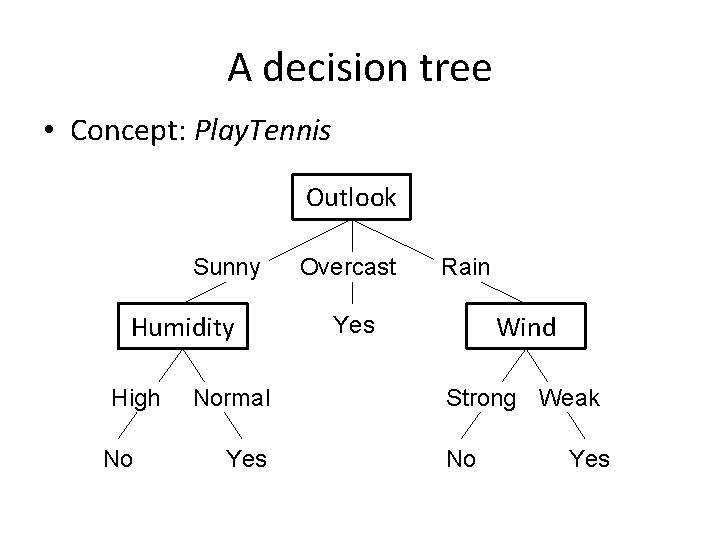

A decision tree for the concept PlayTennis
- Outlook = Sunny: test Humidity
  - Humidity = High: No
  - Humidity = Normal: Yes
- Outlook = Overcast: Yes
- Outlook = Rain: test Wind
  - Wind = Strong: No
  - Wind = Weak: Yes

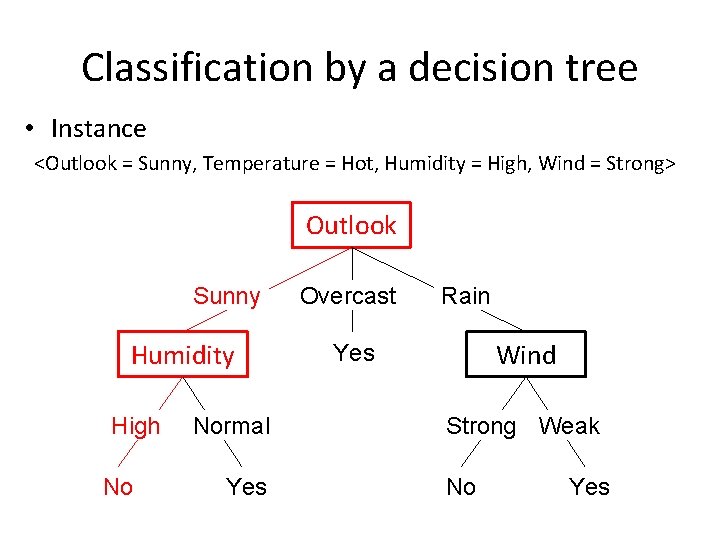

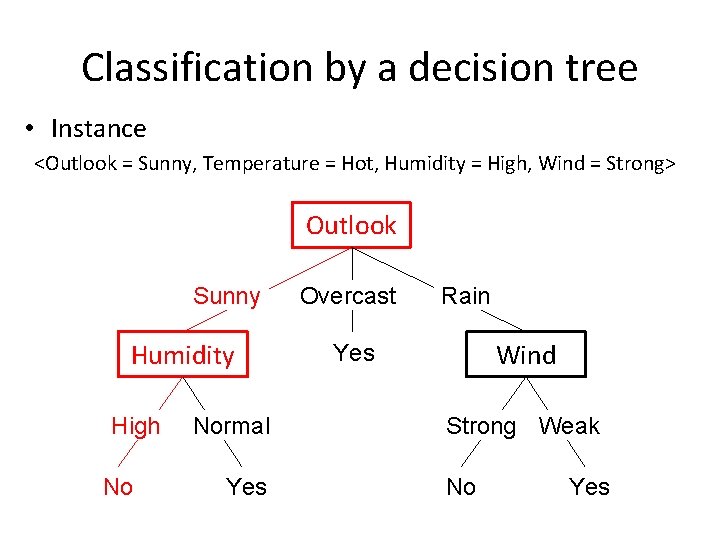

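Classification by a decision tree
- Instance: ⟨Outlook = Sunny, Temperature = Hot, Humidity = High, Wind = Strong⟩
- Starting at the root, the instance follows the branch Outlook = Sunny, then Humidity = High, and reaches the leaf No, so it is classified as PlayTennis = No. Temperature and Wind are never tested on this path.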
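The classification walk can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical encoding in which an internal node is a tuple `(attribute, {value: subtree})` and a leaf is a label string; the names `TREE` and `classify` are chosen here and do not come from the slides.

```python
# Hypothetical encoding of the PlayTennis tree:
# an internal node is (attribute, {value: subtree}); a leaf is a label string.
TREE = ("Outlook", {
    "Sunny": ("Humidity", {"High": "No", "Normal": "Yes"}),
    "Overcast": "Yes",
    "Rain": ("Wind", {"Strong": "No", "Weak": "Yes"}),
})


def classify(tree, instance):
    """Walk from the root to a leaf, following the branch that matches the instance."""
    while not isinstance(tree, str):
        attribute, branches = tree
        tree = branches[instance[attribute]]
    return tree


instance = {"Outlook": "Sunny", "Temperature": "Hot", "Humidity": "High", "Wind": "Strong"}
print(classify(TREE, instance))  # No
```

Only the attributes on the chosen path are looked up, so an instance with Outlook = Overcast needs no other attribute values at all.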

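Disjunction of conjunctions

Each path from the root to a Yes leaf of the PlayTennis tree is a conjunction of attribute tests, and the tree as a whole is the disjunction of these conjunctions:

$$(\text{Outlook} = \text{Sunny} \wedge \text{Humidity} = \text{Normal}) \vee (\text{Outlook} = \text{Overcast}) \vee (\text{Outlook} = \text{Rain} \wedge \text{Wind} = \text{Weak})$$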

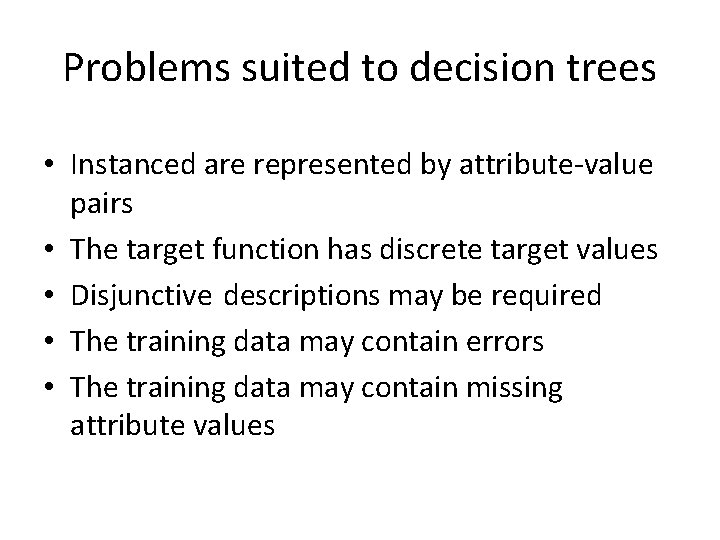

Problems suited to decision trees
- Instances are represented by attribute-value pairs
- The target function has discrete output values
- Disjunctive descriptions may be required
- The training data may contain errors
- The training data may contain missing attribute values

Training data

| Day | Outlook | Temperature | Humidity | Wind | PlayTennis |
|---|---|---|---|---|---|
| D1 | Sunny | Hot | High | Weak | No |
| D2 | Sunny | Hot | High | Strong | No |
| D3 | Overcast | Hot | High | Weak | Yes |
| D4 | Rain | Mild | High | Weak | Yes |
| D5 | Rain | Cool | Normal | Weak | Yes |
| D6 | Rain | Cool | Normal | Strong | No |
| D7 | Overcast | Cool | Normal | Strong | Yes |
| D8 | Sunny | Mild | High | Weak | No |
| D9 | Sunny | Cool | Normal | Weak | Yes |
| D10 | Rain | Mild | Normal | Weak | Yes |
| D11 | Sunny | Mild | Normal | Strong | Yes |
| D12 | Overcast | Mild | High | Strong | Yes |
| D13 | Overcast | Hot | Normal | Weak | Yes |
| D14 | Rain | Mild | High | Strong | No |

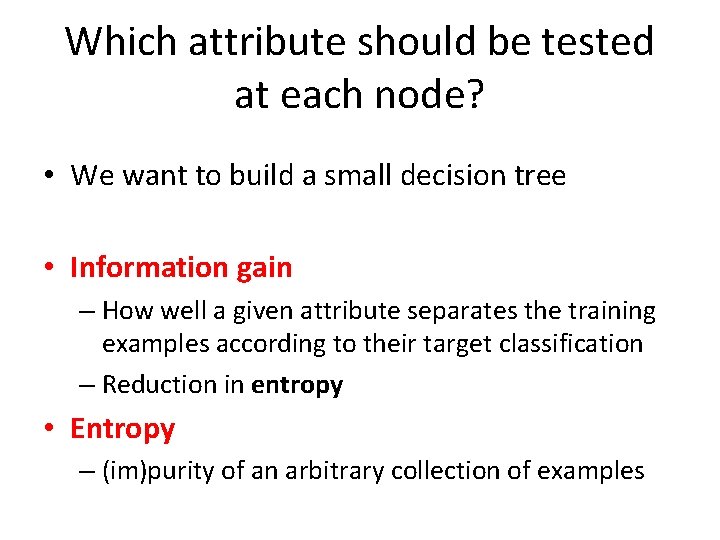

Which attribute should be tested at each node?
- We want to build a small decision tree
- Information gain
  - Measures how well a given attribute separates the training examples according to their target classification
  - Defined as a reduction in entropy
- Entropy
  - Measures the (im)purity of an arbitrary collection of examples

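Entropy

If there are only two classes, with $p_{+}$ and $p_{-}$ the proportions of positive and negative examples in a collection $S$:

$$\mathrm{Entropy}(S) = -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-}$$

In general, with $c$ classes and $p_i$ the proportion of examples in $S$ that belong to class $i$:

$$\mathrm{Entropy}(S) = -\sum_{i=1}^{c} p_i \log_2 p_i$$

By convention, $0 \log_2 0 = 0$.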
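The general entropy formula translates directly into code. A minimal sketch, assuming the collection is summarized by its per-class counts (the function name `entropy` and the count-list input are choices made here for illustration); classes with zero examples are skipped, which implements the convention $0 \log_2 0 = 0$.

```python
from math import log2


def entropy(class_counts):
    """Entropy in bits of a collection, given the number of examples in each class."""
    total = sum(class_counts)
    # Skip empty classes: by convention 0 * log2(0) = 0.
    return -sum((n / total) * log2(n / total) for n in class_counts if n > 0)


print(entropy([14, 0]))       # pure collection: 0 bits (printed as -0.0)
print(entropy([7, 7]))        # evenly split between two classes: 1.0
print(entropy([1, 1, 1, 1]))  # four equally likely classes: 2.0
```

Entropy is 0 for a pure collection and reaches its maximum, $\log_2 c$, when all $c$ classes are equally frequent.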

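Information Gain

The expected reduction in entropy achieved by splitting the training examples $S$ on an attribute $A$:

$$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v)$$

where $\mathrm{Values}(A)$ is the set of possible values of $A$ and $S_v$ is the subset of $S$ for which $A = v$.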

Example
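For instance, applying the two-class entropy formula to the 14 PlayTennis training examples in the table above, which contain 9 positive (Yes) and 5 negative (No) examples, gives:

$$\mathrm{Entropy}([9+, 5-]) = -\tfrac{9}{14}\log_2\tfrac{9}{14} - \tfrac{5}{14}\log_2\tfrac{5}{14} \approx 0.940$$

The collection is impure but close to an even split, so its entropy is close to the two-class maximum of 1.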

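Computing information gain

Two candidate attributes split the collection $S = [9+, 5-]$ as follows:
- Humidity: High → [3+, 4-], Normal → [6+, 1-]
- Wind: Weak → [6+, 2-], Strong → [3+, 3-]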
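Plugging these counts into the information-gain definition (entropies rounded to three decimals) gives a worked comparison of the two candidates:

$$\mathrm{Entropy}([3+, 4-]) \approx 0.985, \qquad \mathrm{Entropy}([6+, 1-]) \approx 0.592$$

$$\mathrm{Gain}(S, \text{Humidity}) = 0.940 - \tfrac{7}{14}(0.985) - \tfrac{7}{14}(0.592) \approx 0.15$$

$$\mathrm{Entropy}([6+, 2-]) \approx 0.811, \qquad \mathrm{Entropy}([3+, 3-]) = 1.000$$

$$\mathrm{Gain}(S, \text{Wind}) = 0.940 - \tfrac{8}{14}(0.811) - \tfrac{6}{14}(1.000) \approx 0.048$$

Humidity therefore separates the training examples better than Wind does.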

Which attribute is the best classifier?
- The one with the highest information gain
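To compare all four attributes at once, the gain can be computed for each one over the training table. A minimal sketch, assuming the table is transcribed into a list of dicts (`ROWS`, `EXAMPLES`, and `information_gain` are illustrative names chosen here):

```python
from collections import Counter
from math import log2

# PlayTennis training data, one row per day D1..D14:
# Outlook Temperature Humidity Wind PlayTennis
ROWS = """Sunny Hot High Weak No
Sunny Hot High Strong No
Overcast Hot High Weak Yes
Rain Mild High Weak Yes
Rain Cool Normal Weak Yes
Rain Cool Normal Strong No
Overcast Cool Normal Strong Yes
Sunny Mild High Weak No
Sunny Cool Normal Weak Yes
Rain Mild Normal Weak Yes
Sunny Mild Normal Strong Yes
Overcast Mild High Strong Yes
Overcast Hot Normal Weak Yes
Rain Mild High Strong No"""

ATTRIBUTES = ["Outlook", "Temperature", "Humidity", "Wind"]
TARGET = "PlayTennis"
EXAMPLES = [dict(zip(ATTRIBUTES + [TARGET], line.split())) for line in ROWS.splitlines()]


def entropy(examples):
    """Entropy of the target label over a collection of examples."""
    counts = Counter(e[TARGET] for e in examples)
    total = len(examples)
    return -sum((n / total) * log2(n / total) for n in counts.values())


def information_gain(examples, attribute):
    """Gain(S, A) = Entropy(S) - sum over values v of |S_v|/|S| * Entropy(S_v)."""
    total = len(examples)
    remainder = 0.0
    for value in sorted({e[attribute] for e in examples}):
        subset = [e for e in examples if e[attribute] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(examples) - remainder


gains = {a: information_gain(EXAMPLES, a) for a in ATTRIBUTES}
best = max(gains, key=gains.get)

if __name__ == "__main__":
    for attribute, g in sorted(gains.items(), key=lambda kv: -kv[1]):
        print(f"Gain(S, {attribute}) = {g:.3f}")
    print("Best attribute:", best)
```

Outlook has the highest gain and is selected for the root. Because the code does not round intermediate entropies, its third decimal can differ slightly from hand calculations that do.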

Splitting training data with Outlook

{D1, D2, …, D14} [9+, 5-] is split on Outlook:
- Sunny: {D1, D2, D8, D9, D11} [2+, 3-] → ? (subtree not yet determined)
- Overcast: {D3, D7, D12, D13} [4+, 0-] → Yes
- Rain: {D4, D5, D6, D10, D14} [3+, 2-] → ? (subtree not yet determined)
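Filling in the "?" branches repeats the same step recursively on each impure subset, in the style of Mitchell's ID3. The following is a simplified sketch, assuming the nested-tuple tree encoding `(attribute, {value: subtree})` and creating branches only for attribute values seen in the current subset (full ID3 also adds majority-label leaves for unseen values). All names are illustrative.

```python
from collections import Counter
from math import log2

# PlayTennis training data, one row per day D1..D14:
# Outlook Temperature Humidity Wind PlayTennis
ROWS = """Sunny Hot High Weak No
Sunny Hot High Strong No
Overcast Hot High Weak Yes
Rain Mild High Weak Yes
Rain Cool Normal Weak Yes
Rain Cool Normal Strong No
Overcast Cool Normal Strong Yes
Sunny Mild High Weak No
Sunny Cool Normal Weak Yes
Rain Mild Normal Weak Yes
Sunny Mild Normal Strong Yes
Overcast Mild High Strong Yes
Overcast Hot Normal Weak Yes
Rain Mild High Strong No"""

ATTRIBUTES = ["Outlook", "Temperature", "Humidity", "Wind"]
TARGET = "PlayTennis"
EXAMPLES = [dict(zip(ATTRIBUTES + [TARGET], line.split())) for line in ROWS.splitlines()]


def entropy(examples):
    """Entropy of the target label over a collection of examples."""
    counts = Counter(e[TARGET] for e in examples)
    total = len(examples)
    return -sum((n / total) * log2(n / total) for n in counts.values())


def gain(examples, attribute):
    """Expected reduction in entropy from splitting `examples` on `attribute`."""
    total = len(examples)
    remainder = 0.0
    for value in sorted({e[attribute] for e in examples}):
        subset = [e for e in examples if e[attribute] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(examples) - remainder


def id3(examples, attributes):
    """Grow a tree: leaf = label string, internal node = (attribute, {value: subtree})."""
    labels = Counter(e[TARGET] for e in examples)
    if len(labels) == 1 or not attributes:
        # Pure subset, or no attributes left to test: return the majority label.
        return labels.most_common(1)[0][0]
    best = max(attributes, key=lambda a: gain(examples, a))
    remaining = [a for a in attributes if a != best]
    return (best, {
        value: id3([e for e in examples if e[best] == value], remaining)
        for value in sorted({e[best] for e in examples})
    })


tree = id3(EXAMPLES, ATTRIBUTES)
print(tree)
```

On this data the recursion tests Humidity under Sunny and Wind under Rain, reproducing the PlayTennis tree shown earlier. Every branch ends in a pure leaf, so the tree classifies all 14 training examples correctly.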

Overfitting
- Growing each branch of the tree deeply enough to perfectly classify the training examples is not a good strategy.
  - The resulting tree may overfit the training data
- Overfitting
  - The tree can explain the training data very well but performs poorly on new data

Alleviating the overfitting problem
- Several approaches
  - Stop growing the tree earlier
  - Post-prune the tree
- How can we evaluate the classification performance of the tree for new data?
  - The available data are separated into two sets of examples: a training set and a validation (development) set

Validation (development) set
- Use a portion of the original training data to estimate the generalization performance.

[Figure: the original training set is divided into a training set and a validation set; the test set is separate.]
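The following Python sketch combines the hold-out idea with early stopping. It assumes two details the slides do not specify: a random 70/30 split of the PlayTennis examples, and a maximum tree depth as the stopping criterion. The depth with the best validation accuracy is kept. All names (`split`, `id3`, `max_depth`, and so on) are hypothetical.

```python
import random
from collections import Counter
from math import log2

# PlayTennis training data (D1..D14): Outlook Temperature Humidity Wind PlayTennis
ROWS = """Sunny Hot High Weak No
Sunny Hot High Strong No
Overcast Hot High Weak Yes
Rain Mild High Weak Yes
Rain Cool Normal Weak Yes
Rain Cool Normal Strong No
Overcast Cool Normal Strong Yes
Sunny Mild High Weak No
Sunny Cool Normal Weak Yes
Rain Mild Normal Weak Yes
Sunny Mild Normal Strong Yes
Overcast Mild High Strong Yes
Overcast Hot Normal Weak Yes
Rain Mild High Strong No"""
ATTRIBUTES = ["Outlook", "Temperature", "Humidity", "Wind"]
TARGET = "PlayTennis"
EXAMPLES = [dict(zip(ATTRIBUTES + [TARGET], line.split())) for line in ROWS.splitlines()]


def entropy(examples):
    counts = Counter(e[TARGET] for e in examples)
    return -sum(n / len(examples) * log2(n / len(examples)) for n in counts.values())


def gain(examples, attribute):
    remainder = 0.0
    for value in sorted({e[attribute] for e in examples}):
        subset = [e for e in examples if e[attribute] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy(examples) - remainder


def id3(examples, attributes, max_depth):
    """ID3 that stops growing after max_depth levels of tests (assumed stopping rule)."""
    labels = Counter(e[TARGET] for e in examples)
    majority = labels.most_common(1)[0][0]
    if len(labels) == 1 or not attributes or max_depth == 0:
        return majority
    best = max(attributes, key=lambda a: gain(examples, a))
    remaining = [a for a in attributes if a != best]
    branches = {v: id3([e for e in examples if e[best] == v], remaining, max_depth - 1)
                for v in sorted({e[best] for e in examples})}
    return (best, branches, majority)  # majority label is the fallback for unseen values


def classify(tree, instance):
    while not isinstance(tree, str):
        attribute, branches, majority = tree
        tree = branches.get(instance[attribute], majority)
    return tree


def accuracy(tree, examples):
    return sum(classify(tree, e) == e[TARGET] for e in examples) / len(examples)


def split(examples, validation_fraction=0.3, seed=0):
    """Shuffle a copy, then hold out a fraction of it as the validation set."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


train, validation = split(EXAMPLES)
scores = {d: accuracy(id3(train, ATTRIBUTES, d), validation) for d in range(len(ATTRIBUTES) + 1)}
best_depth = max(scores, key=scores.get)  # first maximum, i.e. the shallowest tied depth
print(scores, "-> chosen max_depth:", best_depth)
```

With only 14 examples, the four held-out examples give very noisy accuracy estimates. The sketch shows the procedure rather than a reliable result. Breaking ties toward the shallowest depth reflects the preference for small trees stated above.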