Array computers Single Instruction Stream Multiple Data Streams

- Slides: 26

Array computers

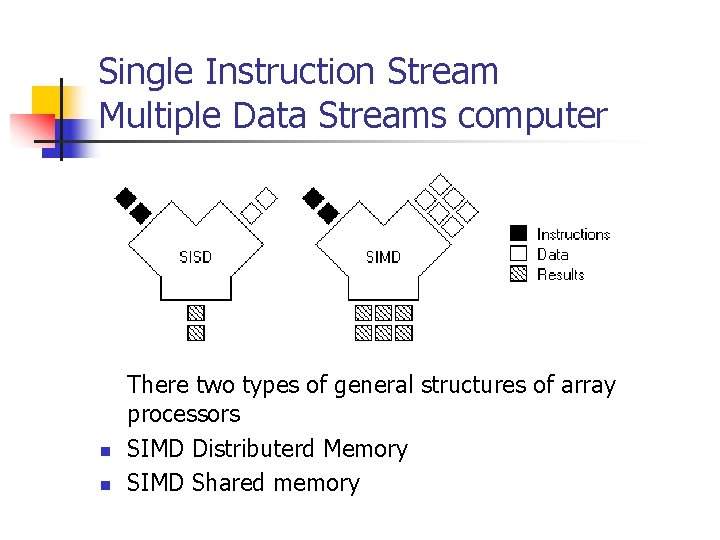

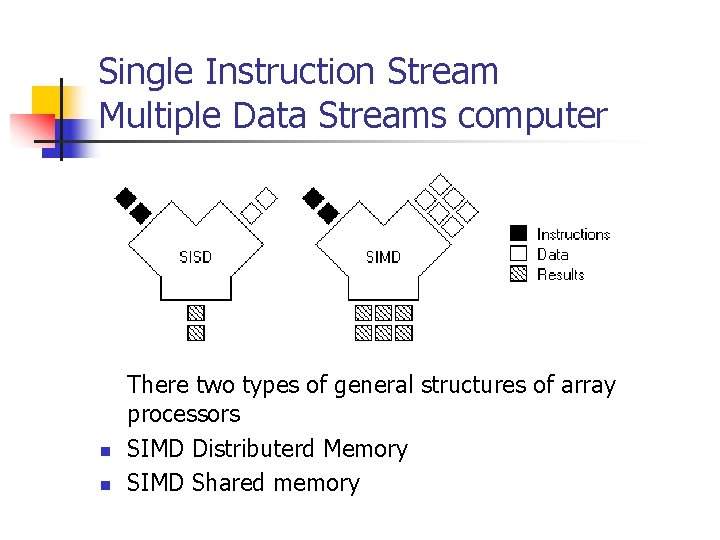

Single Instruction Stream Multiple Data Streams computer n n There two types of general structures of array processors SIMD Distributerd Memory SIMD Shared memory

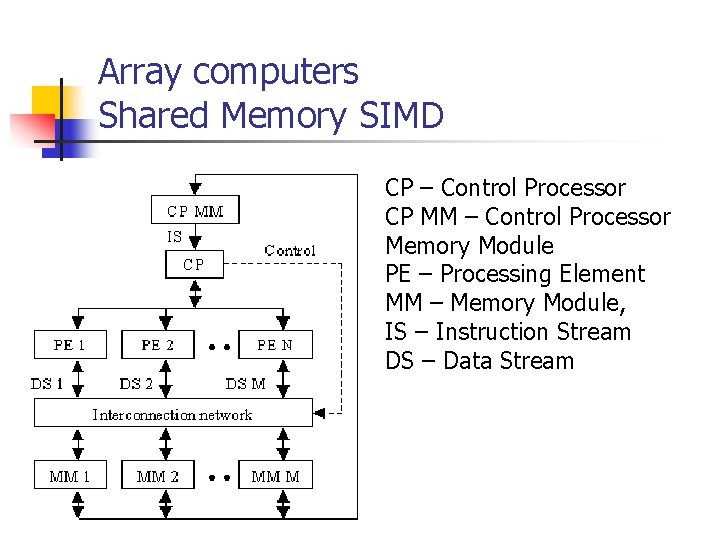

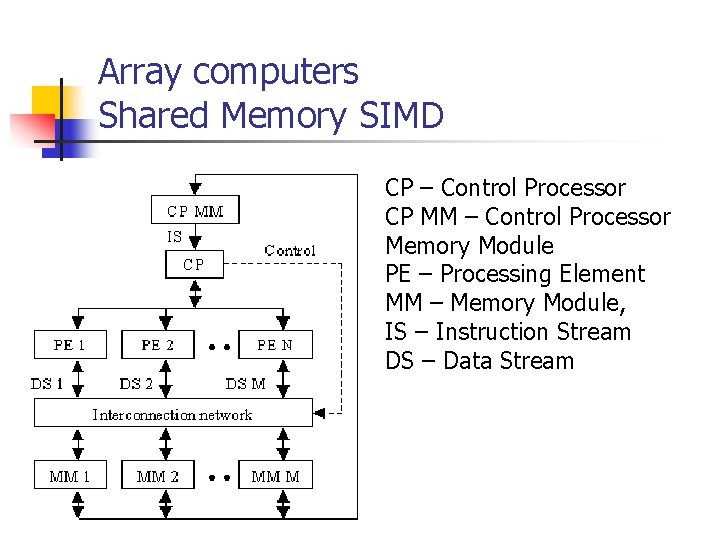

Array computers Shared Memory SIMD CP – Control Processor CP MM – Control Processor Memory Module PE – Processing Element MM – Memory Module, IS – Instruction Stream DS – Data Stream

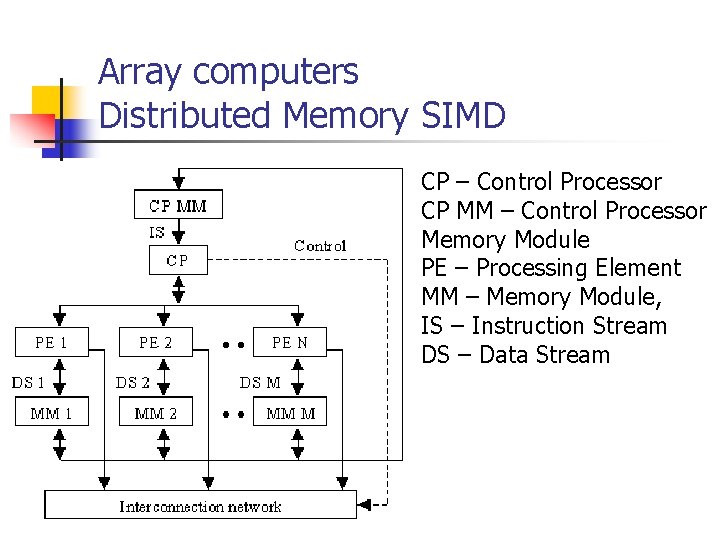

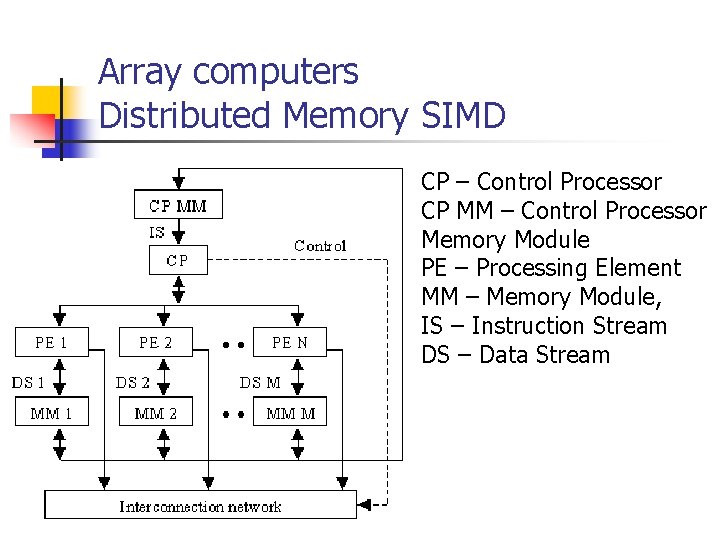

Array computers Distributed Memory SIMD CP – Control Processor CP MM – Control Processor Memory Module PE – Processing Element MM – Memory Module, IS – Instruction Stream DS – Data Stream

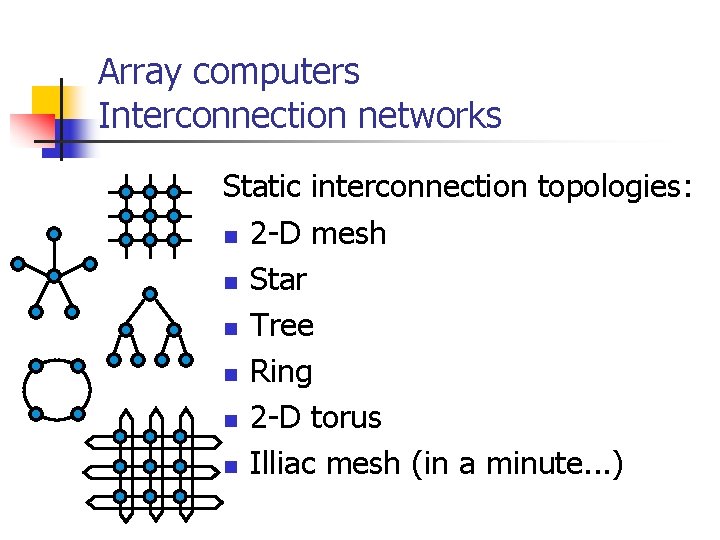

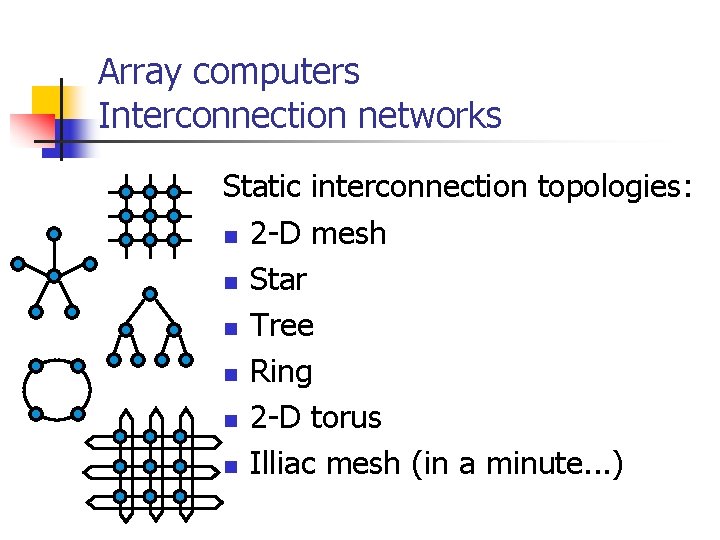

Array computers Interconnection networks Static interconnection topologies: n 2 -D mesh n Star n Tree n Ring n 2 -D torus n Illiac mesh (in a minute. . . )

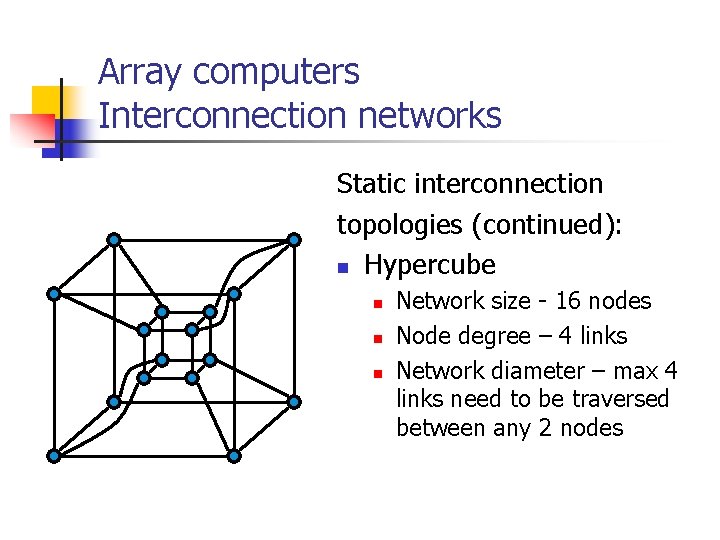

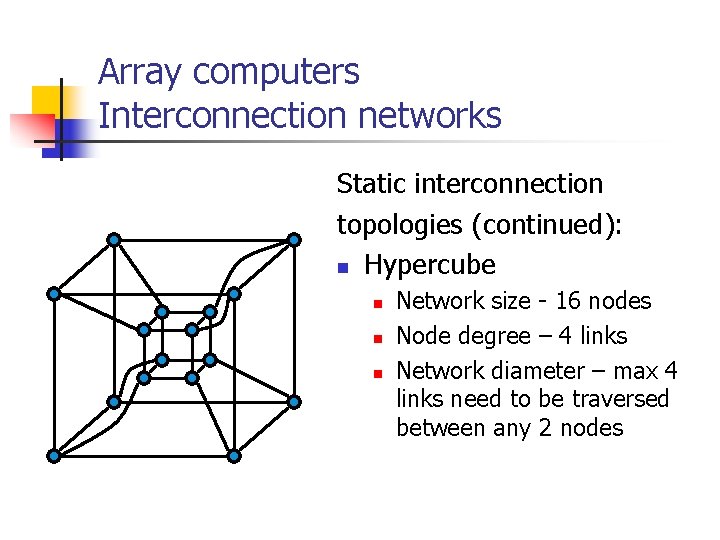

Array computers Interconnection networks Static interconnection topologies (continued): n Hypercube n n n Network size - 16 nodes Node degree – 4 links Network diameter – max 4 links need to be traversed between any 2 nodes

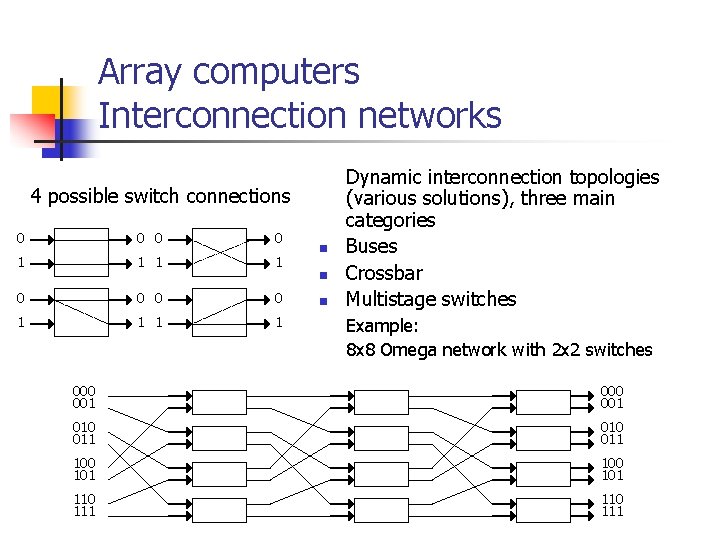

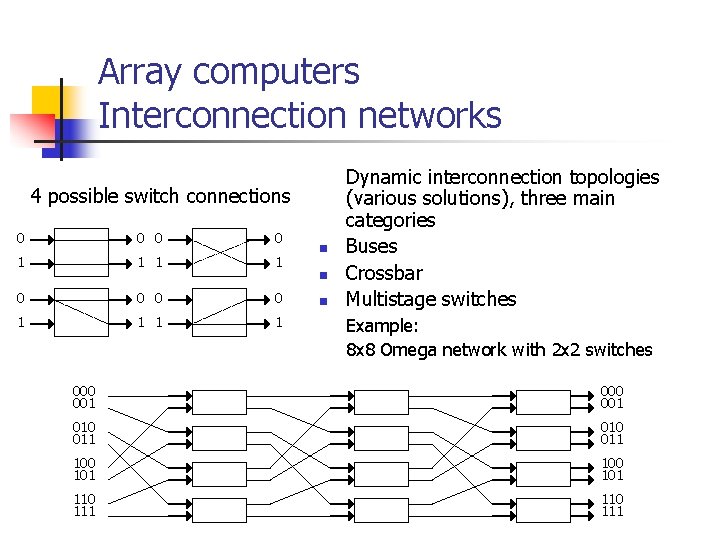

Array computers Interconnection networks 4 possible switch connections 0 0 0 0 1 1 1 1 n n n Dynamic interconnection topologies (various solutions), three main categories Buses Crossbar Multistage switches Example: 8 x 8 Omega network with 2 x 2 switches 000 001 010 011 100 101 110 111

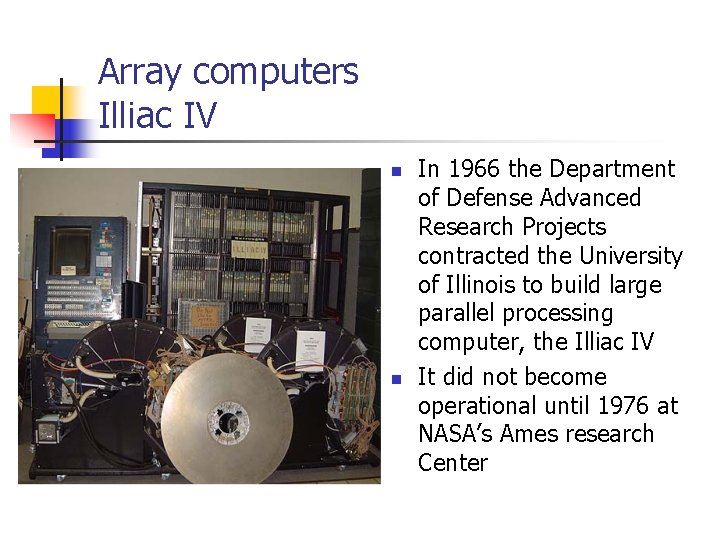

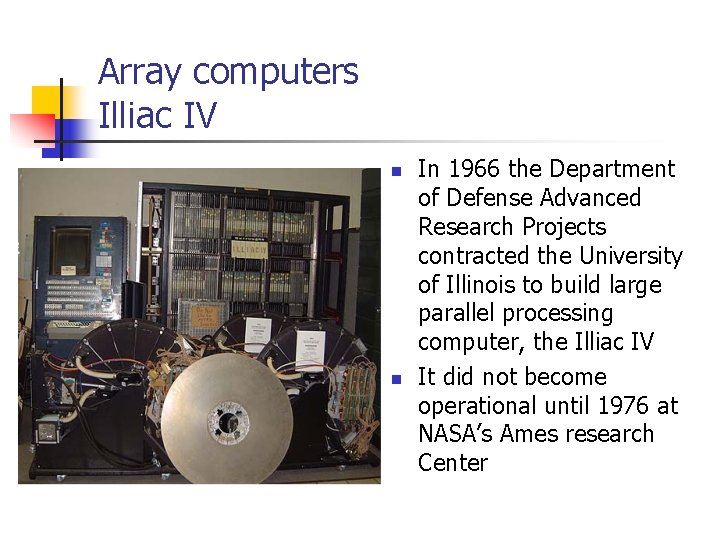

Array computers Illiac IV n n In 1966 the Department of Defense Advanced Research Projects contracted the University of Illinois to build large parallel processing computer, the Illiac IV It did not become operational until 1976 at NASA’s Ames research Center

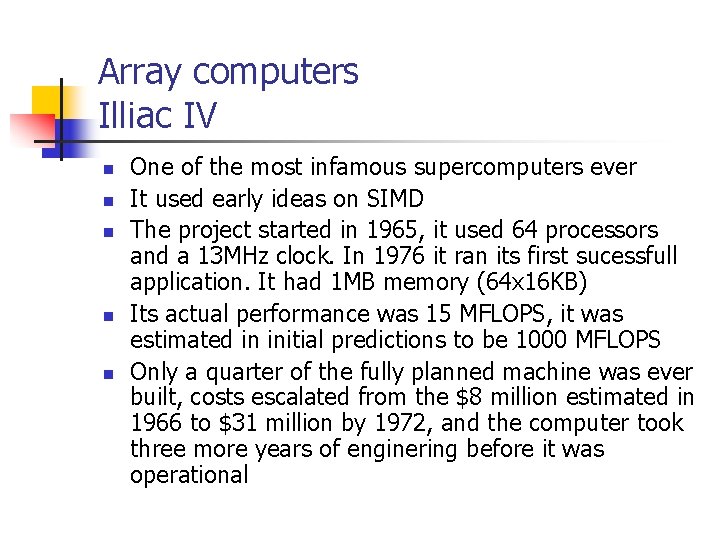

Array computers Illiac IV n n n One of the most infamous supercomputers ever It used early ideas on SIMD The project started in 1965, it used 64 processors and a 13 MHz clock. In 1976 it ran its first sucessfull application. It had 1 MB memory (64 x 16 KB) Its actual performance was 15 MFLOPS, it was estimated in initial predictions to be 1000 MFLOPS Only a quarter of the fully planned machine was ever built, costs escalated from the $8 million estimated in 1966 to $31 million by 1972, and the computer took three more years of enginering before it was operational

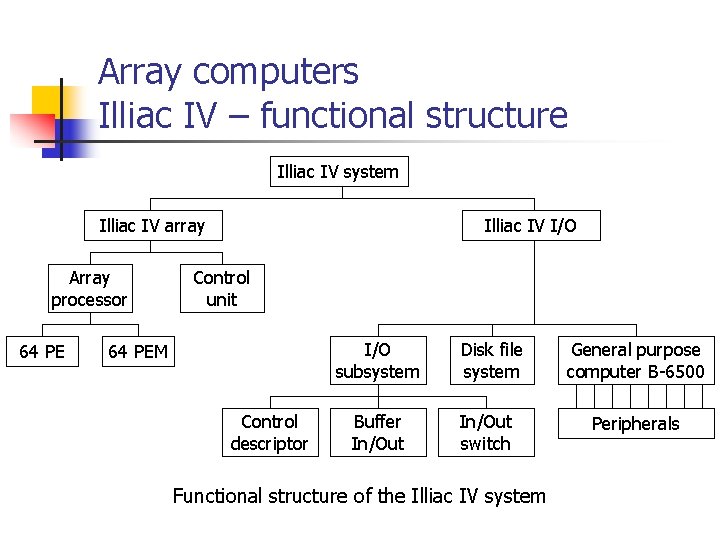

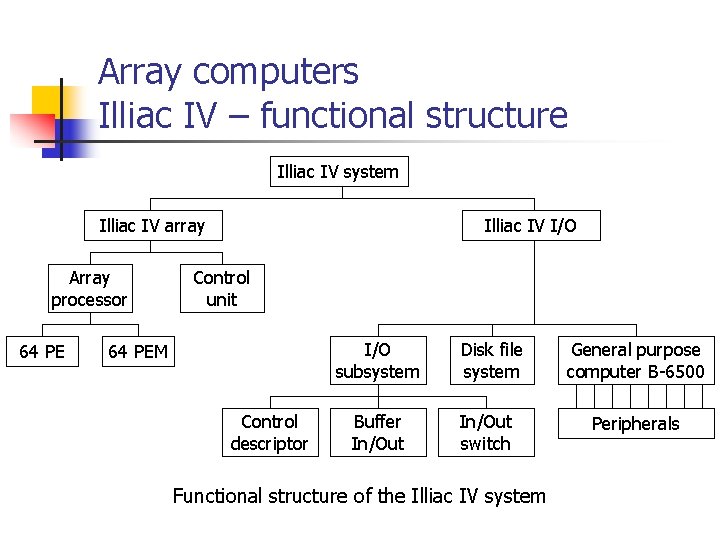

Array computers Illiac IV – functional structure Illiac IV system Illiac IV array Array processor 64 PE Illiac IV I/O Control unit I/O subsystem 64 PEM Control descriptor Buffer In/Out Disk file system In/Out switch Functional structure of the Illiac IV system General purpose computer B-6500 Peripherals

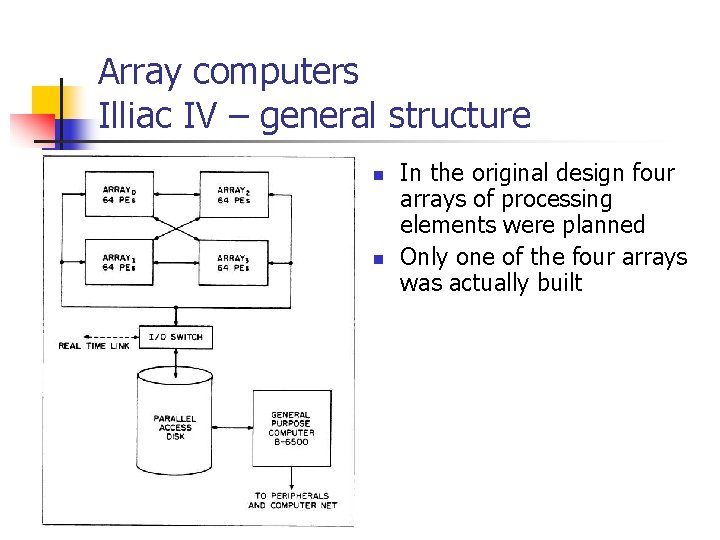

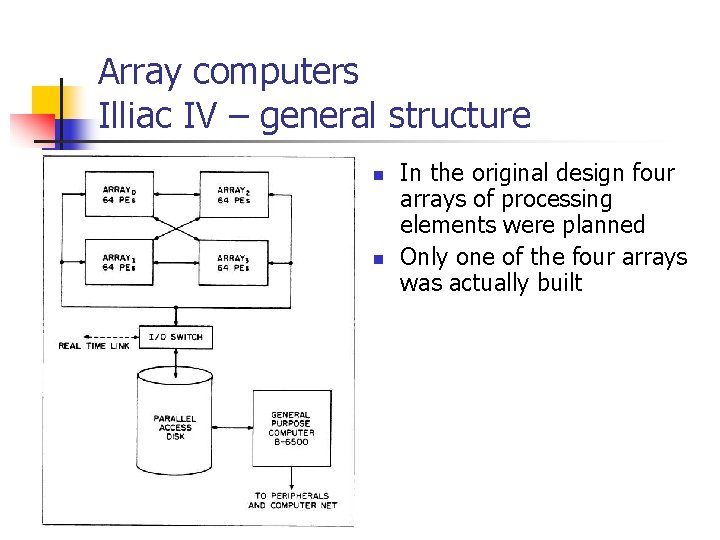

Array computers Illiac IV – general structure n n In the original design four arrays of processing elements were planned Only one of the four arrays was actually built

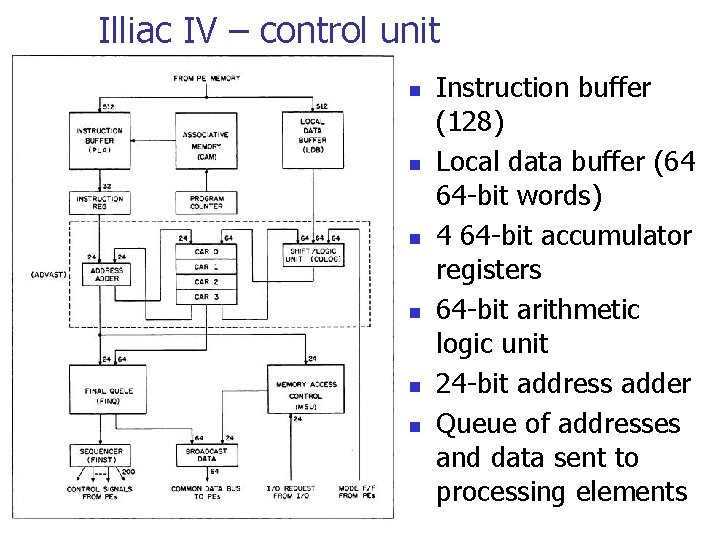

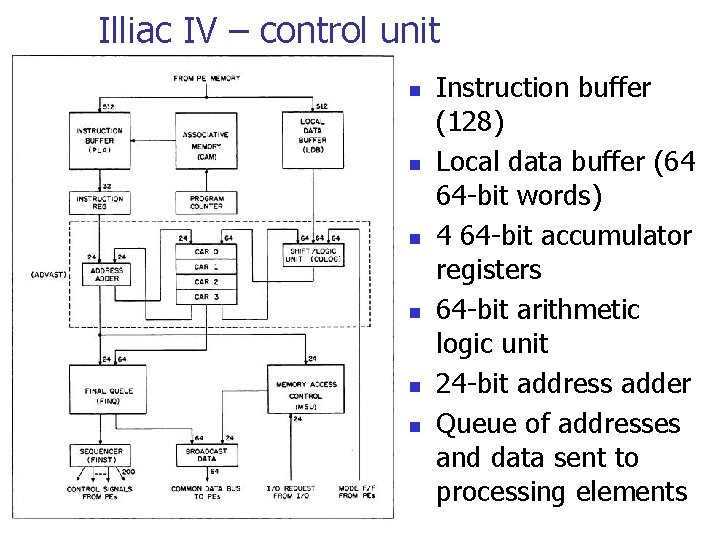

Illiac IV – control unit n n n Instruction buffer (128) Local data buffer (64 64 -bit words) 4 64 -bit accumulator registers 64 -bit arithmetic logic unit 24 -bit address adder Queue of addresses and data sent to processing elements

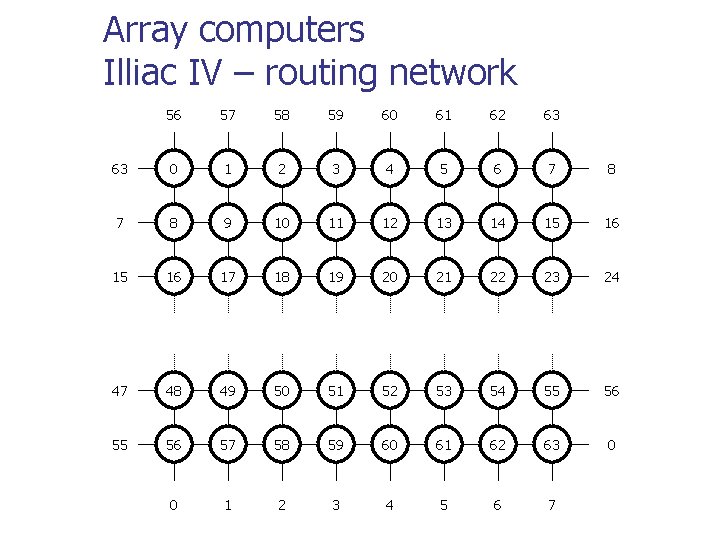

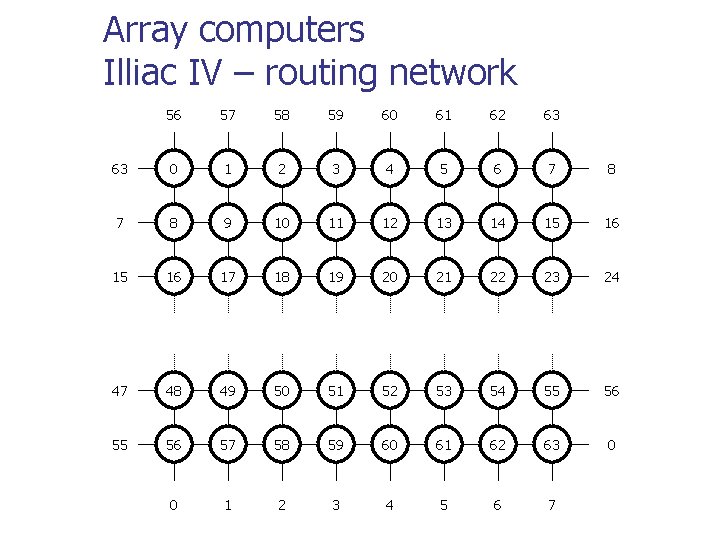

Array computers Illiac IV – routing network 56 57 58 59 60 61 62 63 63 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 0 0 1 2 3 4 5 6 7

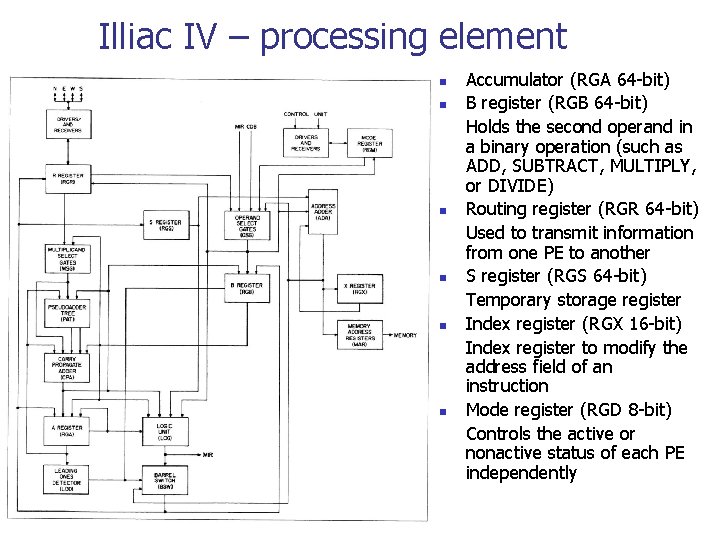

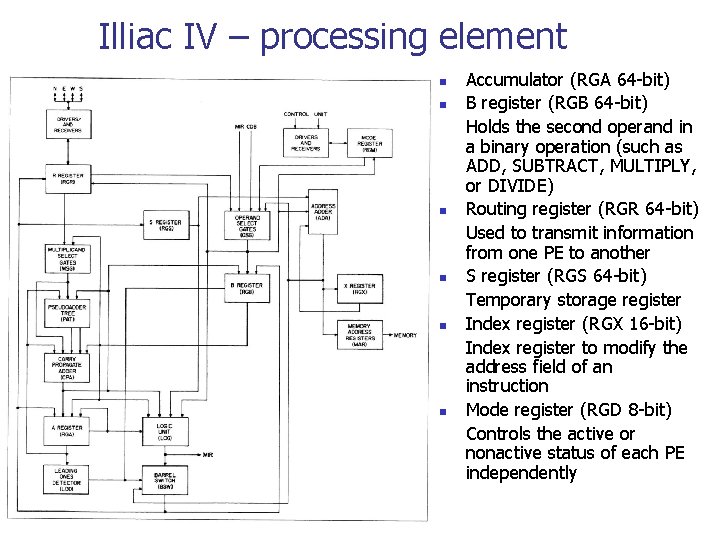

Illiac IV – processing element n n n Accumulator (RGA 64 -bit) B register (RGB 64 -bit) Holds the second operand in a binary operation (such as ADD, SUBTRACT, MULTIPLY, or DIVIDE) Routing register (RGR 64 -bit) Used to transmit information from one PE to another S register (RGS 64 -bit) Temporary storage register Index register (RGX 16 -bit) Index register to modify the address field of an instruction Mode register (RGD 8 -bit) Controls the active or nonactive status of each PE independently

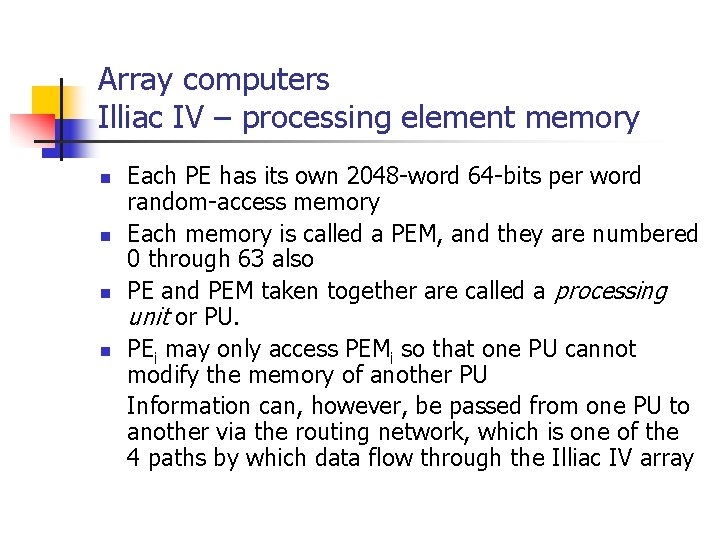

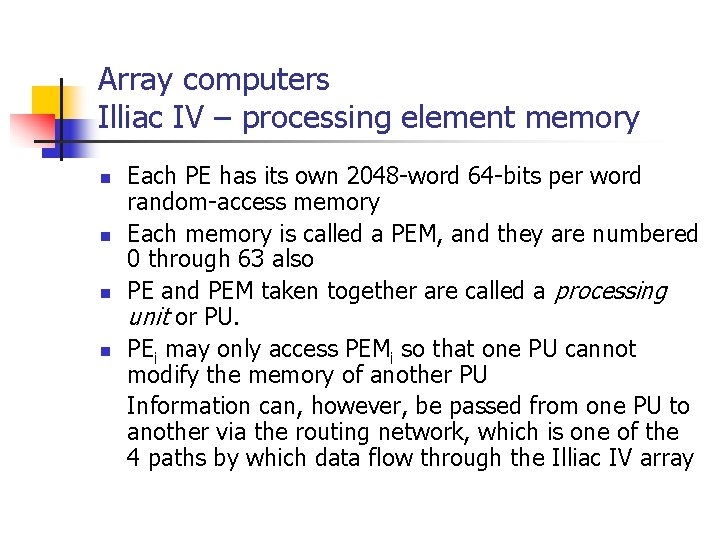

Array computers Illiac IV – processing element memory n n Each PE has its own 2048 -word 64 -bits per word random-access memory Each memory is called a PEM, and they are numbered 0 through 63 also PE and PEM taken together are called a processing unit or PU. PEi may only access PEMi so that one PU cannot modify the memory of another PU Information can, however, be passed from one PU to another via the routing network, which is one of the 4 paths by which data flow through the Illiac IV array

Array computers Illiac IV – data paths Data paths in the array of Illiac IV

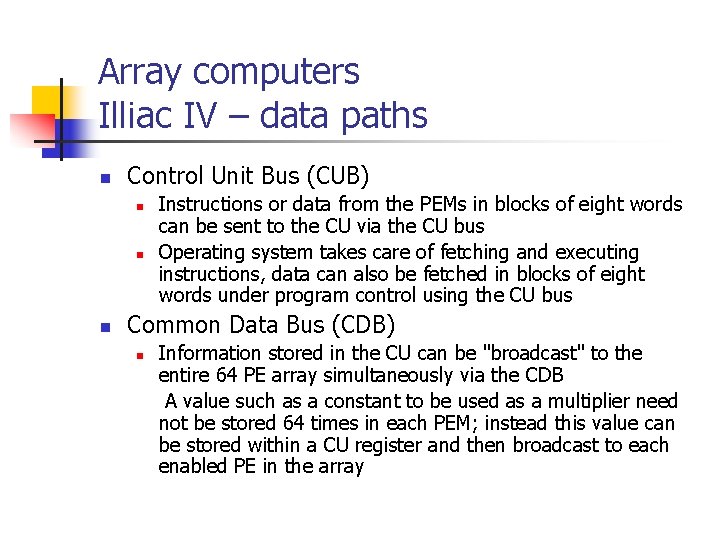

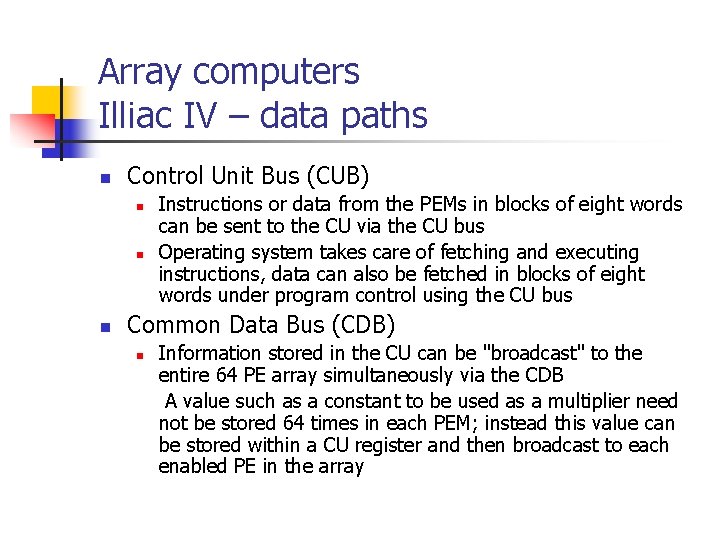

Array computers Illiac IV – data paths n Control Unit Bus (CUB) n n n Instructions or data from the PEMs in blocks of eight words can be sent to the CU via the CU bus Operating system takes care of fetching and executing instructions, data can also be fetched in blocks of eight words under program control using the CU bus Common Data Bus (CDB) n Information stored in the CU can be "broadcast" to the entire 64 PE array simultaneously via the CDB A value such as a constant to be used as a multiplier need not be stored 64 times in each PEM; instead this value can be stored within a CU register and then broadcast to each enabled PE in the array

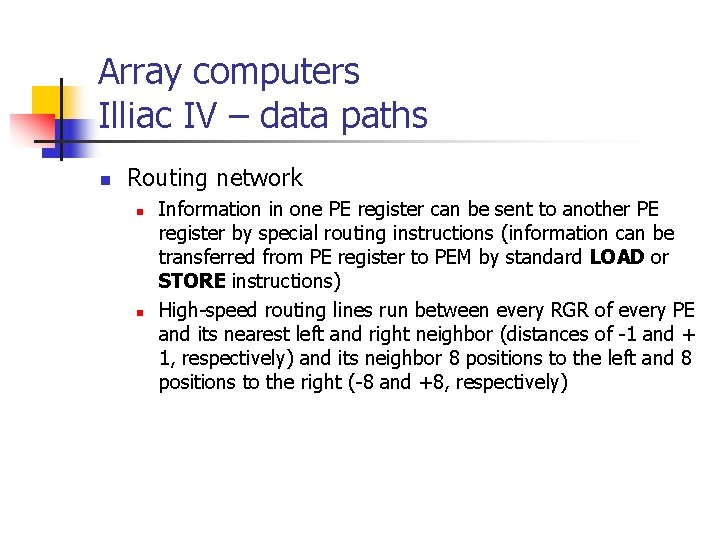

Array computers Illiac IV – data paths n Routing network n n Information in one PE register can be sent to another PE register by special routing instructions (information can be transferred from PE register to PEM by standard LOAD or STORE instructions) High-speed routing lines run between every RGR of every PE and its nearest left and right neighbor (distances of -1 and + 1, respectively) and its neighbor 8 positions to the left and 8 positions to the right (-8 and +8, respectively)

Array computers Illiac IV – data paths n Mode-bit line n n Mode-bit line consists of one line coming from the RGD of each PE in the array Mode-bit line can transmit one of the eight mode bits of each RGD in the array up to an ACAR in the CU If this bit is the bit which indicates whether or not a PE is on or off, we can transmit a "mode pattern" to an ACAR. This mode pattern reflects the status or on-offness of each PE in the array There are instructions which are executed completely within the CU that can test this mode pattern and branch on a zero or nonzero condition In this way branching in the instruction stream can occur based on the mode pattern of the entire 64 -PE array.

Array computers Programming Illiac IV n n Hardware-first design Relatively poor software support Rudimentary operating system (for example, no support for disk management) GLYPNIR (ALGOL derivative) Provided statements which identified vector arithmetic suitable for parallel implementation CFD (Computational Fluid Dynamics) Fortran based language written for work at NASA None of these languages hid the architecture of the machine very well, but they allowed the development of machine specific programs in a High Level Language.

Examples of algorithms executed on array computers n n n '*' in the array subscripts identifies that all the elements of a vector are to be operated upon simultaneously Programmer needs to be aware that only 64 processors are available, and so needs to structure the computation to make use of this Compiler will split a computation up into a number of 64 element iterations of the instruction where required

Examples of algorithms executed on array computers Time 0 2 4 6 8 7 5 3 1 Time 1 6 10 14 15 12 8 4 3 Time 2 20 25 26 23 16 11 10 13 Time 3 36 36 J=1 DO 1 I=1, 3 A(*) = A(*) + A(* + J) J = J * 2 1 CONTINUE

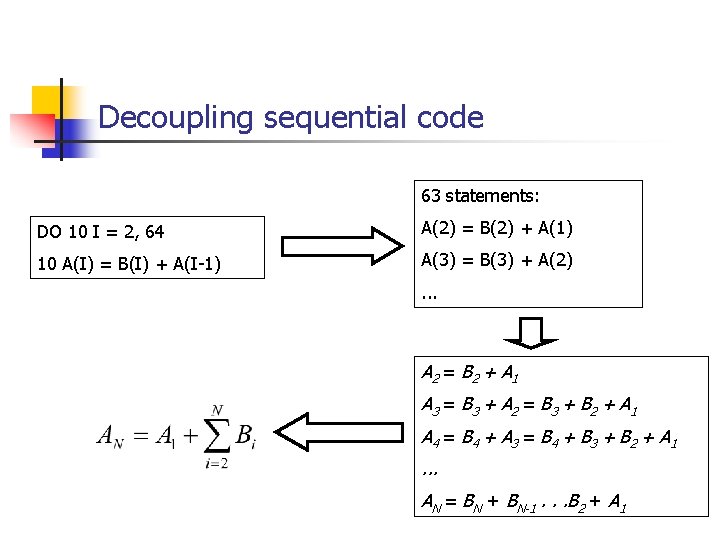

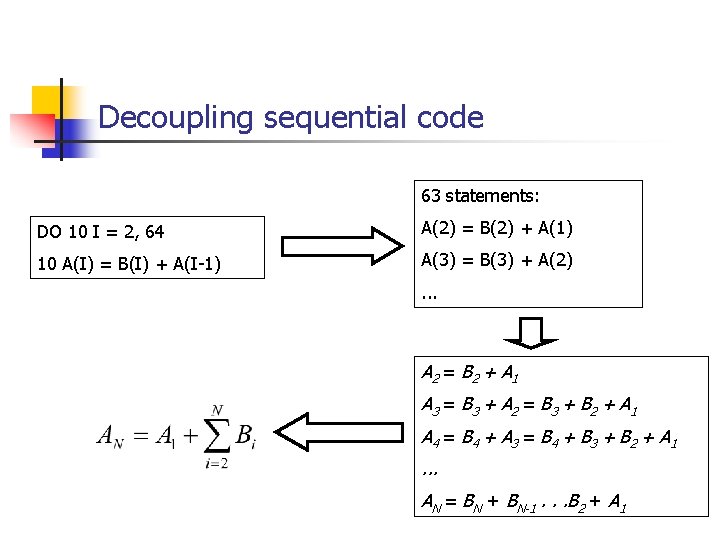

Decoupling sequential code 63 statements: DO 10 I = 2, 64 A(2) = B(2) + A(1) 10 A(I) = B(I) + A(I-1) A(3) = B(3) + A(2) . . . A 2 = B 2 + A 1 A 3 = B 3 + A 2 = B 3 + B 2 + A 1 A 4 = B 4 + A 3 = B 4 + B 3 + B 2 + A 1 . . . AN = BN + BN-1. . . B 2 + A 1

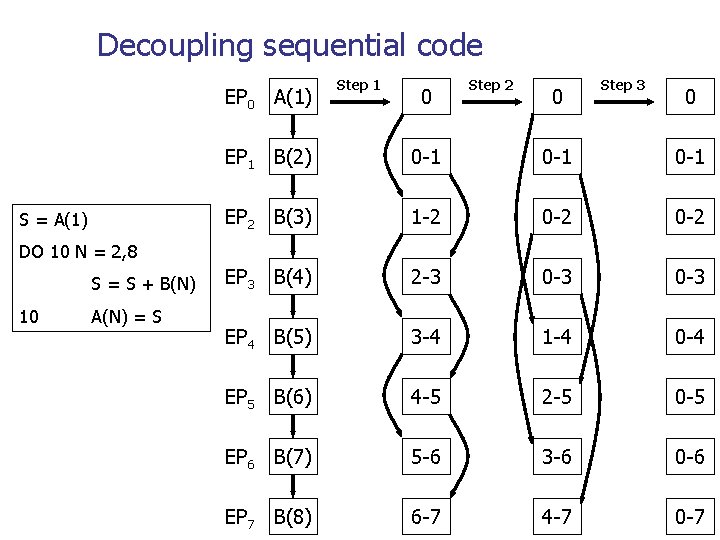

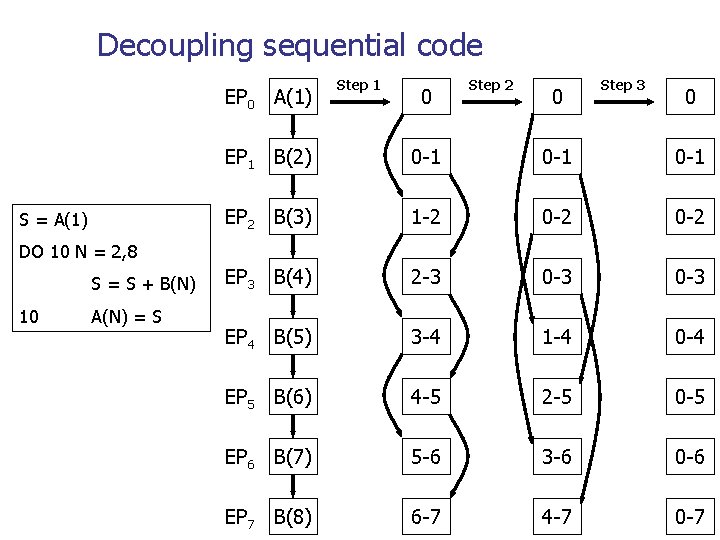

Decoupling sequential code EP 0 A(1) S = A(1) Step 1 0 Step 2 0 Step 3 0 EP 1 B(2) 0 -1 0 -1 EP 2 B(3) 1 -2 0 -2 EP 3 B(4) 2 -3 0 -3 EP 4 B(5) 3 -4 1 -4 0 -4 EP 5 B(6) 4 -5 2 -5 0 -5 EP 6 B(7) 5 -6 3 -6 0 -6 EP 7 B(8) 6 -7 4 -7 0 -7 DO 10 N = 2, 8 S = S + B(N) 10 A(N) = S

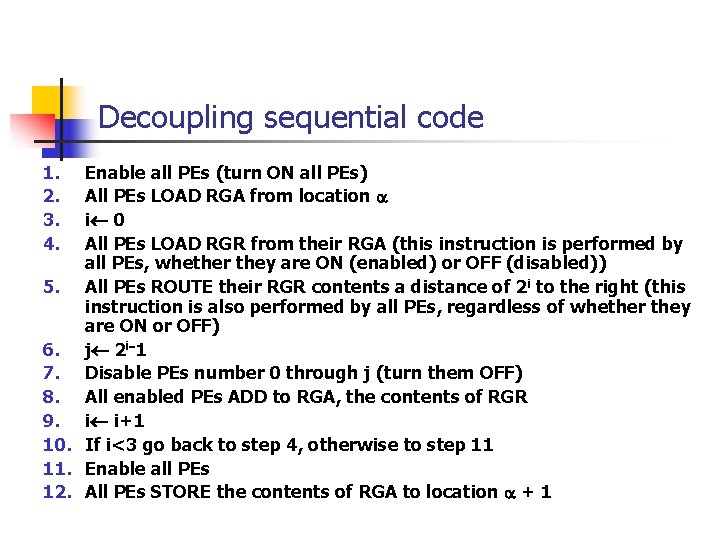

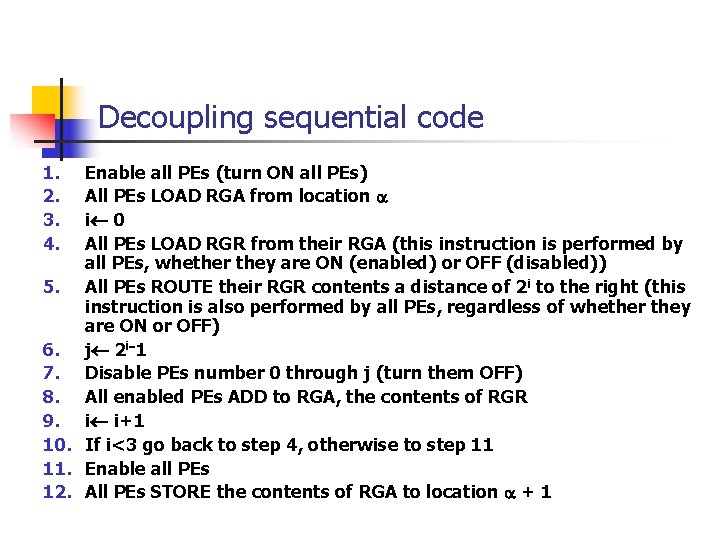

Decoupling sequential code 1. 2. 3. 4. Enable all PEs (turn ON all PEs) All PEs LOAD RGA from location a i¬ 0 All PEs LOAD RGR from their RGA (this instruction is performed by all PEs, whether they are ON (enabled) or OFF (disabled)) 5. All PEs ROUTE their RGR contents a distance of 2 i to the right (this instruction is also performed by all PEs, regardless of whether they are ON or OFF) 6. j¬ 2 i-1 7. Disable PEs number 0 through j (turn them OFF) 8. All enabled PEs ADD to RGA, the contents of RGR 9. i¬ i+1 10. If i<3 go back to step 4, otherwise to step 11 11. Enable all PEs 12. All PEs STORE the contents of RGA to location a + 1

Array computers Subsequent designs n n n BSP – Burroughs Scientific Processor (1977) MPP – Massively Parallel Processor (1983) CM-1, CM-2 Thinking Machines