Architecture For Model Composition Deployment and Runtime Orchestration

- Slides: 5

How do a Model Composition Tool, Kubeflow, Argo, and Kubernetes fit together?

Kazi Farooqui, AT&T

Acumos is a registered trademark of the Linux Foundation.

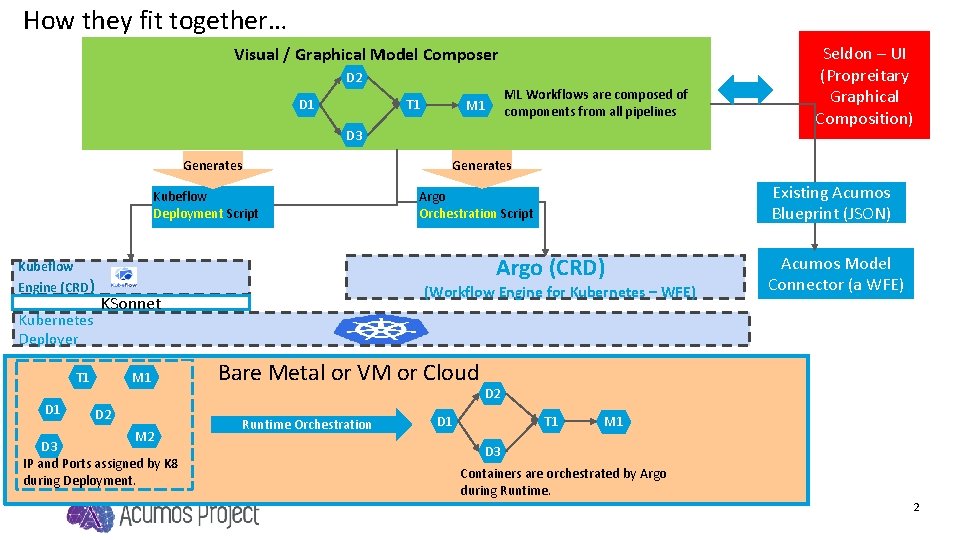

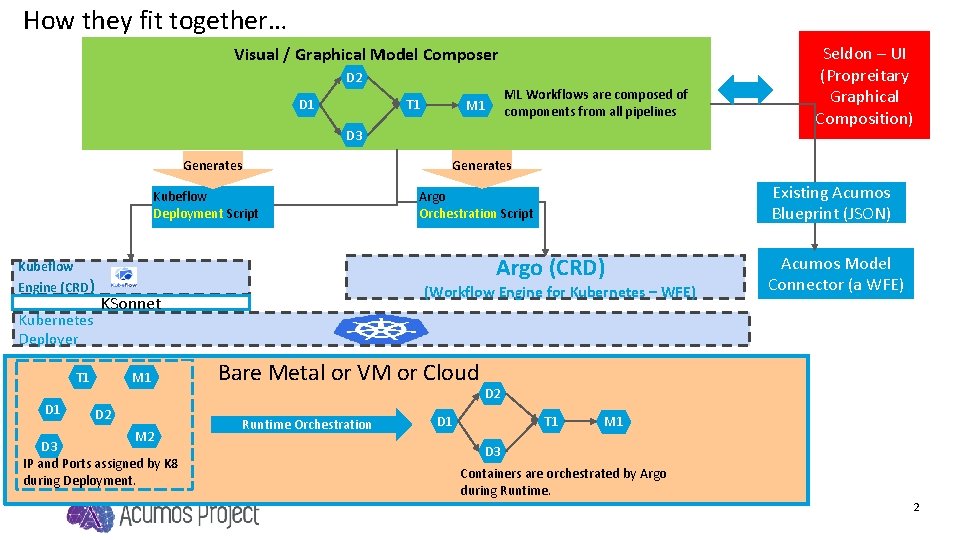

How they fit together…

Architecture diagram (labels recovered from the slide):

- Visual / Graphical Model Composer: ML workflows are composed of components from all pipelines (diagram nodes D1, D2, D3, T1, M1, M2).
- Generated artifacts: a Kubeflow deployment script (KSonnet), an Argo orchestration script (Workflow Engine for Kubernetes, WFE), and the existing Acumos blueprint (JSON).
- Deployment: the Kubernetes Deployer, with Argo (CRD) and the Kubeflow Engine (CRD). IP addresses and ports are assigned by Kubernetes during deployment, on bare metal, a VM, or the cloud.
- Runtime orchestration: containers are orchestrated by Argo during runtime. The Acumos Model Connector (a WFE) is also shown.
- Also shown: Seldon UI (proprietary graphical composition).
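The architecture slide notes that Kubernetes assigns IP addresses and ports during deployment. As a minimal sketch, assuming one composed model component (M1) is packaged as a container image that serves on port 8080, a Kubernetes Deployer could emit manifests like the following. The name `model-m1`, the image reference, and the port are hypothetical; the slide does not show the deployer's actual output.

```yaml
# Hypothetical Deployment for composed component M1.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: model-m1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: model-m1
  template:
    metadata:
      labels:
        app: model-m1          # each Pod gets its IP address from Kubernetes
    spec:
      containers:
      - name: model-m1
        image: example.org/acumos/model-m1:1.0   # hypothetical image
        ports:
        - containerPort: 8080  # assumed port of the model server
---
# Service exposing M1; no clusterIP or nodePort is specified,
# so Kubernetes assigns the Service IP and allocates a node port
# (by default from the 30000-32767 range).
apiVersion: v1
kind: Service
metadata:
  name: model-m1
spec:
  type: NodePort
  selector:
    app: model-m1
  ports:
  - port: 8080
    targetPort: 8080
```

Leaving the addresses and node port unset is what lets the same generated manifest deploy unchanged onto bare metal, VM, or cloud clusters.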

Argo: Open Source Kubernetes Workflow Engine

- Open source, container-native workflow engine.
- Defines workflows in which each step is a container.
- Models multi-step workflows either as a sequence of steps or as a graph (DAG) that captures the dependencies between tasks.
- Implemented as a Kubernetes Custom Resource Definition (CRD), an extension mechanism of Kubernetes.
- Argo workflows can be managed with kubectl and integrate natively with other Kubernetes services such as volumes, secrets, and RBAC.
- Leverages Kubernetes to run compute-intensive jobs, such as data processing or machine learning jobs, in a fraction of the time by running independent steps in parallel.
- Argo is cloud agnostic and can run on any Kubernetes cluster.
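The DAG style of workflow mentioned above can be sketched as follows. This is adapted from the "dag-diamond" example in the upstream Argo repository; the task names (`prepare-data`, `train-a`, `train-b`, `evaluate`) and the `alpine:3.7` image are illustrative assumptions, and each task only echoes its name rather than doing real ML work.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: ml-dag-          # Kubernetes appends a random suffix
spec:
  entrypoint: ml-dag
  templates:
  # Leaf template: a single container that prints its input message.
  - name: echo
    inputs:
      parameters:
      - name: message
    container:
      image: alpine:3.7
      command: [echo, "{{inputs.parameters.message}}"]
  # DAG template: dependencies, not list order, decide what runs when.
  - name: ml-dag
    dag:
      tasks:
      - name: prepare-data       # no dependencies: runs first
        template: echo
        arguments:
          parameters: [{name: message, value: prepare-data}]
      - name: train-a            # train-a and train-b run in parallel
        dependencies: [prepare-data]
        template: echo
        arguments:
          parameters: [{name: message, value: train-a}]
      - name: train-b
        dependencies: [prepare-data]
        template: echo
        arguments:
          parameters: [{name: message, value: train-b}]
      - name: evaluate           # waits for both training tasks
        dependencies: [train-a, train-b]
        template: echo
        arguments:
          parameters: [{name: message, value: evaluate}]
```

Because the manifest uses `generateName`, it can be submitted with `argo submit` or `kubectl create -f` (not `kubectl apply`, which requires a fixed `metadata.name`).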

Sample Argo Workflow Script: A YAML File

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: my-ml-workflow-   # must be lowercase; Kubernetes appends a random suffix
spec:
  entrypoint: hello-hello
  # This spec contains two templates: hello-hello and whalesay
  templates:
  - name: hello-hello
    # Instead of just running a container,
    # this template has a sequence of steps
    steps:
    - - name: hello1              # hello1 is run before the following steps
        template: whalesay
        arguments:
          parameters:
          - name: message
            value: "hello1"
    - - name: hello2a             # double dash => run after previous step
        template: whalesay
        arguments:
          parameters:
          - name: message
            value: "hello2a"
      - name: hello2b             # single dash => run in parallel with previous step
        template: whalesay
        arguments:
          parameters:
          - name: message
            value: "hello2b"
  # whalesay template (as in the upstream Argo examples): prints the message with cowsay
  - name: whalesay
    inputs:
      parameters:
      - name: message
    container:
      image: docker/whalesay
      command: [cowsay]
      args: ["{{inputs.parameters.message}}"]
```

Kubeflow Pipelines: New Open Source Project Started by Google (last week, at the time of this talk)

- Kubeflow deploys ML workflows on Kubernetes clusters (local, cloud, etc.) and manages the life cycle of the workflow components.
- Kubeflow Pipelines is a new project within Kubeflow.
- Kubeflow Pipelines is a model composition tool.
- Kubeflow Pipelines uses Argo under the hood to orchestrate Kubernetes resources.
- Kubeflow Pipelines provides a Jupyter Notebook style workbench to compose machine learning (ML) workflows.
- Kubeflow Pipelines is text-based; it does not offer a visual / graphical composition experience (https://github.com/kubeflow/pipelines/wiki, https://github.com/kubeflow/pipelines/wiki/Build-a-Pipeline).
- Authoring a pipeline is just like authoring a normal Python function. The pipeline function describes the topology of the pipeline. Each step in the pipeline is typically a ContainerOp: a simple class or function describing how to interact with a Docker container image.