ARC-H: Adaptive replacement cache management for heterogeneous storage

- Slides: 30

ARC-H: Adaptive replacement cache management for heterogeneous storage devices

Young-Jin Kim a, Jihong Kim b

a Division of Electrical and Computer Engineering, Ajou University, San 5, Woncheon-dong, Yeongtong-gu, Suwon 443-749, Republic of Korea

b School of Computer Science and Engineering, Seoul National University, 599 Gwanangno, Gwanak-gu, Seoul 151-742, Republic of Korea

Journal of Systems Architecture 58 (2012) 86–97

OUTLINE • Introduction • Background & Motivation • ARC-H Design • Experimental results • Conclusions

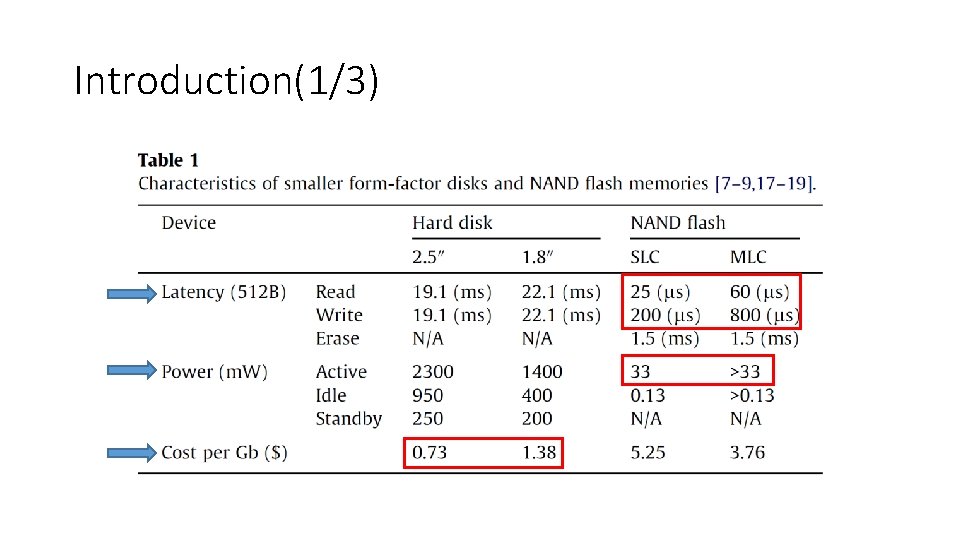

Introduction(1/3)

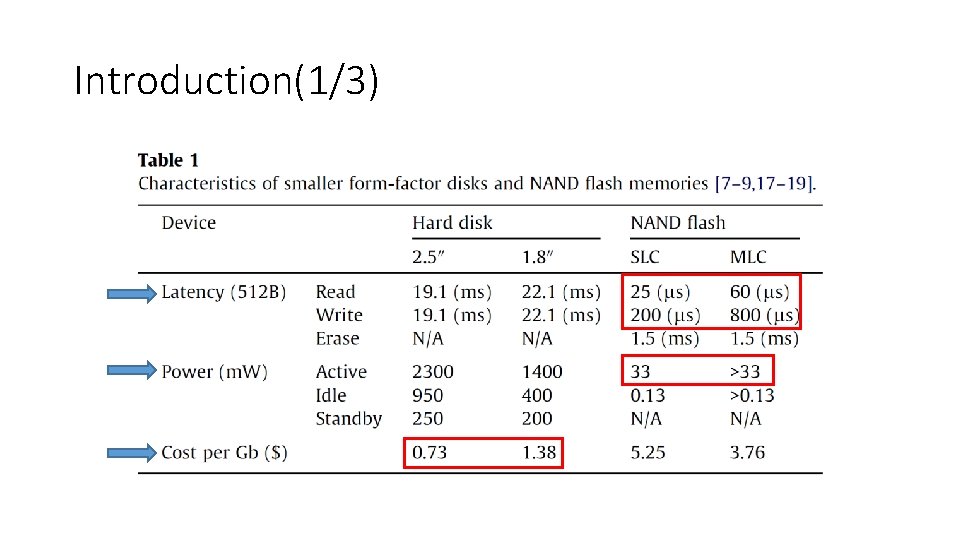

Introduction(2/3) • The fast response time of NAND flash memory and the low cost per bit of hard disks are both attractive, but the high power consumption of hard disks and the high cost per bit of flash memory are not. • Thus, there has been a lot of research on combining a hard disk and a NAND flash memory. • To use heterogeneous secondary storage devices effectively, the operating system's page cache algorithm needs to be modified to account for the different I/O cost of each device.

Introduction(3/3) • We propose a novel cache replacement algorithm called ARC-H. • ARC-H provides cache management in a manner specifically adapted to heterogeneous storage systems and fluctuating workloads. • We investigate how device and workload characteristics can be accommodated by a cache replacement algorithm, and then devise a cache algorithm that can deliver high performance in terms of total service time.

OUTLINE • Introduction • Background & Motivation • ARC-H Design • Experimental results • Conclusions

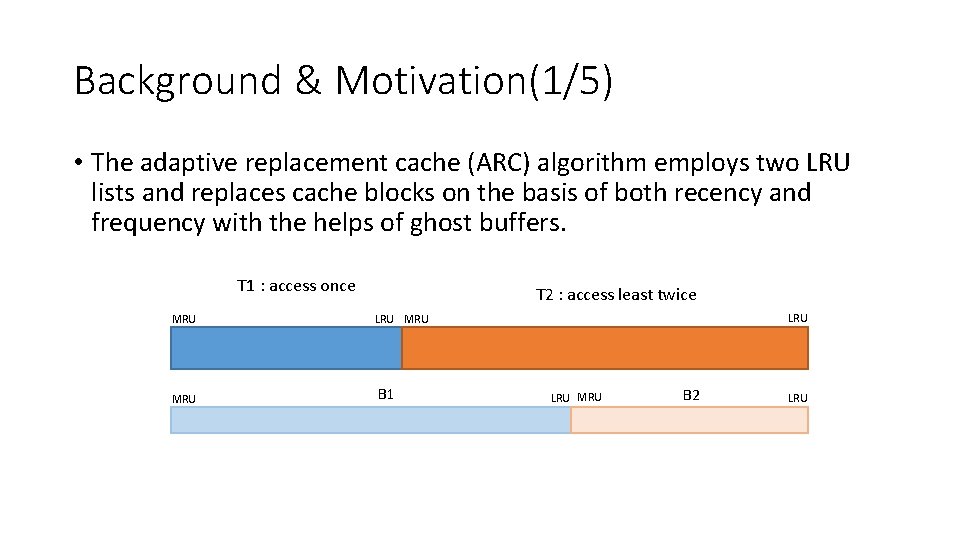

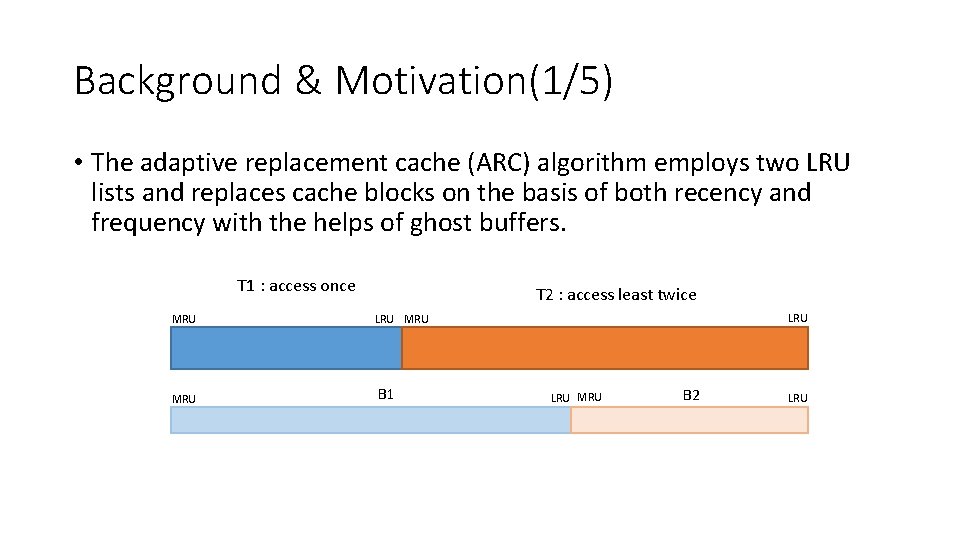

Background & Motivation(1/5) • The adaptive replacement cache (ARC) algorithm employs two LRU lists and replaces cache blocks on the basis of both recency and frequency with the help of ghost buffers. [Figure: the ARC lists. T1 holds blocks accessed once and T2 holds blocks accessed at least twice; B1 and B2 are the ghost buffers. Each list is ordered from MRU to LRU.]

Background & Motivation(2/5) • ARC achieves a higher cache hit rate than existing algorithms because it allows multiple cache blocks to be moved from an inactive LRU list (i.e., T1) to an active LRU list (i.e., T2) in a page cache when they are predicted to be accessed frequently in the near future. • To predict future workload patterns in T1 and T2, ARC considers the hit counts of the blocks in the two ghost buffers B1 and B2, respectively.
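To make the roles of T1, T2, B1 and B2 concrete, here is a minimal Python sketch of the classic ARC policy as it is commonly described in the literature. It is not the ARC-H algorithm and not the authors' code. The class name `ARC`, the method names, and the use of integer division in the adaptation step are assumptions made for illustration.

```python
from collections import OrderedDict


class ARC:
    """Sketch of classic ARC over block ids (LRU end first, MRU end last)."""

    def __init__(self, c):
        self.c = c  # cache capacity in blocks
        self.p = 0  # adaptive target size of T1
        self.t1, self.t2 = OrderedDict(), OrderedDict()  # resident blocks
        self.b1, self.b2 = OrderedDict(), OrderedDict()  # ghost entries (ids only)

    def _replace(self, in_b2):
        # Evict from T1 if it exceeds its target p, otherwise from T2.
        # The victim's id is remembered in the matching ghost buffer.
        if self.t1 and (len(self.t1) > self.p or (in_b2 and len(self.t1) == self.p)):
            victim, _ = self.t1.popitem(last=False)
            self.b1[victim] = None
        else:
            victim, _ = self.t2.popitem(last=False)
            self.b2[victim] = None

    def access(self, x):
        """Return True on a cache hit, False otherwise."""
        if x in self.t1 or x in self.t2:  # hit: promote to MRU end of T2
            self.t1.pop(x, None)
            self.t2.pop(x, None)
            self.t2[x] = None
            return True
        if x in self.b1:  # ghost hit in B1: grow the target for T1
            self.p = min(self.c, self.p + max(len(self.b2) // len(self.b1), 1))
            self._replace(False)
            del self.b1[x]
            self.t2[x] = None
            return False
        if x in self.b2:  # ghost hit in B2: shrink the target for T1
            self.p = max(0, self.p - max(len(self.b1) // len(self.b2), 1))
            self._replace(True)
            del self.b2[x]
            self.t2[x] = None
            return False
        # Miss in all four lists.
        l1 = len(self.t1) + len(self.b1)
        total = l1 + len(self.t2) + len(self.b2)
        if l1 == self.c:
            if len(self.t1) < self.c:
                self.b1.popitem(last=False)
                self._replace(False)
            else:
                self.t1.popitem(last=False)  # B1 is empty here; drop the LRU of T1
        elif total >= self.c:
            if total == 2 * self.c:
                self.b2.popitem(last=False)
            self._replace(False)
        self.t1[x] = None  # a first-time block enters at the MRU end of T1
        return False


cache = ARC(2)
results = [cache.access(x) for x in [1, 2, 1, 3, 2, 1]]
```

In this trace, only the third request is a cache hit. The later requests for blocks 2 and 1 are ghost hits, which move the target `p` without returning data from the cache.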

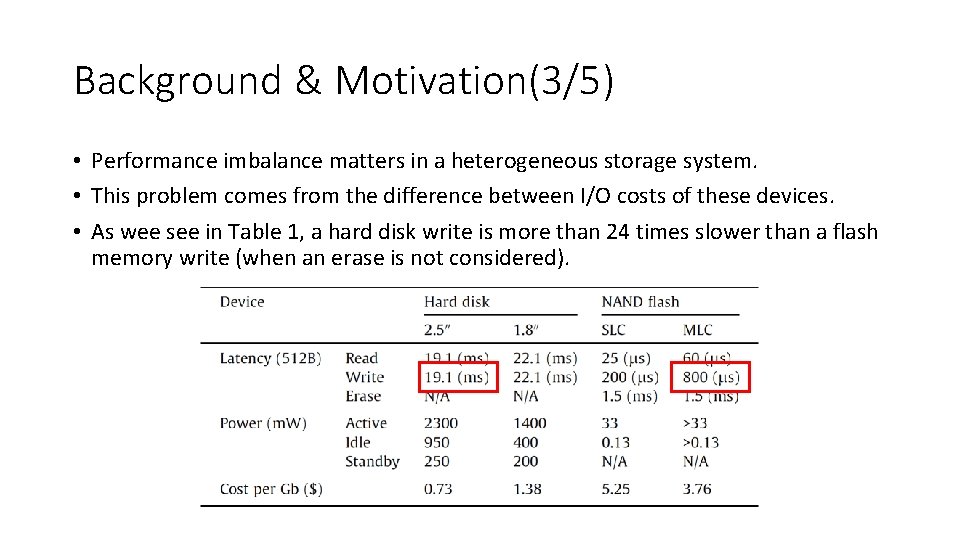

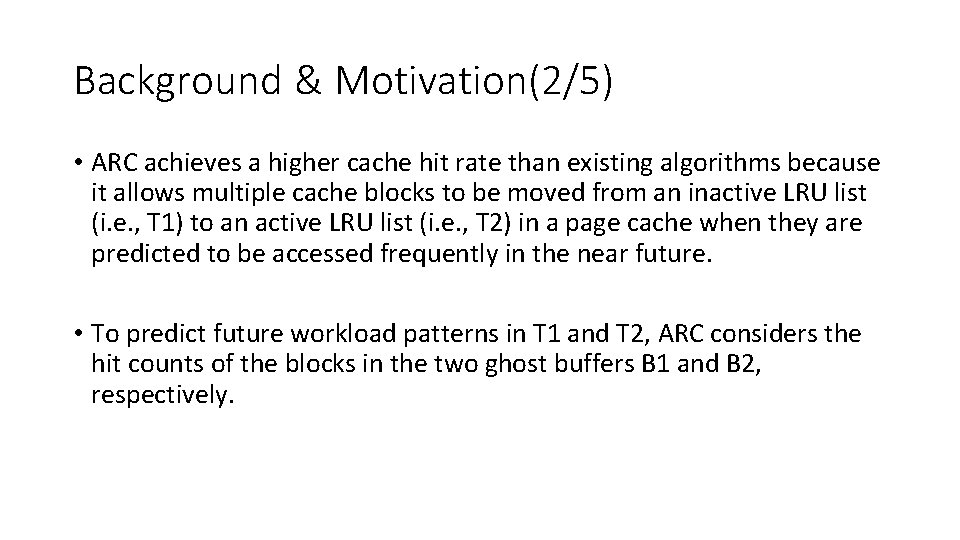

Background & Motivation(3/5) • Performance imbalance matters in a heterogeneous storage system. • This problem comes from the difference between the I/O costs of the devices. • As we see in Table 1, a hard disk write is more than 24 times slower than a flash memory write (when an erase is not considered).

Background & Motivation(4/5) • Keeping a large number of blocks that reside on the disk in the page cache will decrease the number of disk accesses, improving the response time. • In other words, retaining many blocks that belong to the flash memory in the cache may sometimes not help system performance. • Thus, a high cache hit rate does not by itself ensure fast I/O performance.
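A small worked example may help show why hit rate and service time can diverge. The sketch below uses invented request counts and invented per-miss costs in arbitrary time units. The 24:1 ratio only loosely echoes the write-latency gap quoted earlier, and none of these numbers come from Table 1. The names `MISS_COST` and `summarize` are hypothetical.

```python
# Hypothetical per-miss costs in arbitrary time units (not taken from Table 1).
MISS_COST = {"disk": 24.0, "flash": 1.0}


def summarize(requests, hits):
    """Return (hit_rate, total_miss_cost) for per-device request and hit counts."""
    total = sum(requests.values())
    hit_rate = sum(hits.values()) / total
    cost = sum((requests[d] - hits[d]) * MISS_COST[d] for d in requests)
    return hit_rate, cost


requests = {"disk": 50, "flash": 50}

# Policy A keeps mostly flash blocks in the cache.
flash_heavy = summarize(requests, {"disk": 25, "flash": 45})
# Policy B keeps mostly disk blocks in the cache.
disk_heavy = summarize(requests, {"disk": 40, "flash": 20})

print(flash_heavy)  # (0.7, 605.0)
print(disk_heavy)   # (0.6, 270.0)
```

Under these assumed numbers, the policy with the lower hit rate pays less than half the miss cost, because the misses it avoids are the expensive disk accesses.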

Background & Motivation(5/5) • We observed that accounting for the different access times of each device in a page cache is likely to reduce the total I/O cost. • But ARC has no intrinsic mechanism to deal with heterogeneous devices. • The authors’ motivation is twofold: • 1) reduce service time with a practical implementation. • 2) achieve the best possible hit rate.

OUTLINE • Introduction • Background & Motivation • ARC-H Design • Experimental results • Conclusions

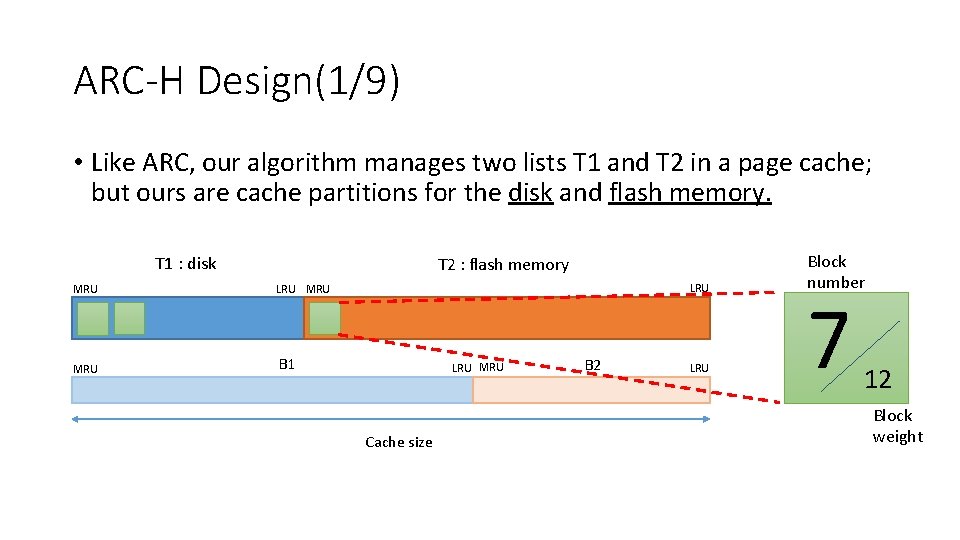

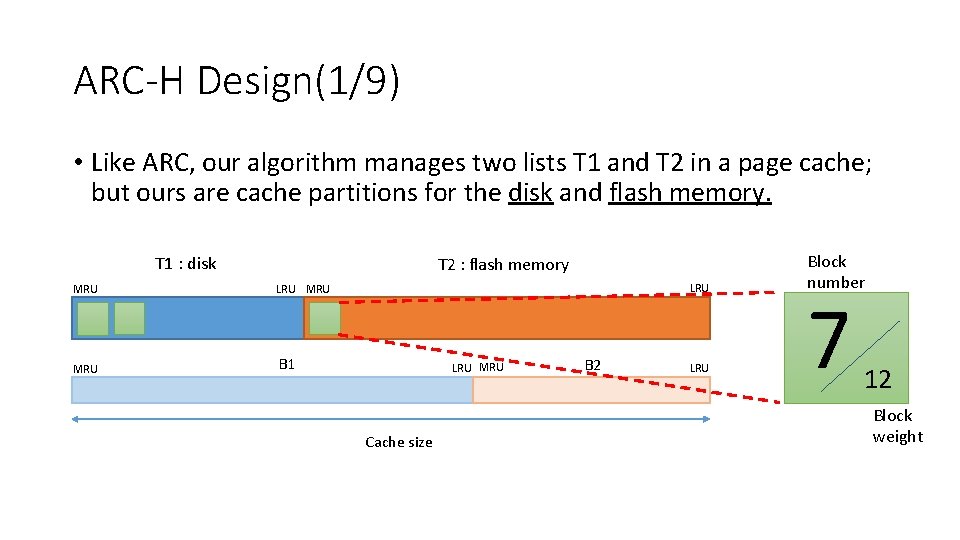

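ARC-H Design(1/9) • Like ARC, our algorithm manages two lists T1 and T2 in a page cache, but ours are cache partitions for the disk and the flash memory. [Figure: T1 is the disk partition and T2 the flash memory partition, with ghost buffers B1 and B2; each list runs from MRU to LRU and the cache size is marked. Each entry records a block number and a block weight (the example entry shows 7 and 12).]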

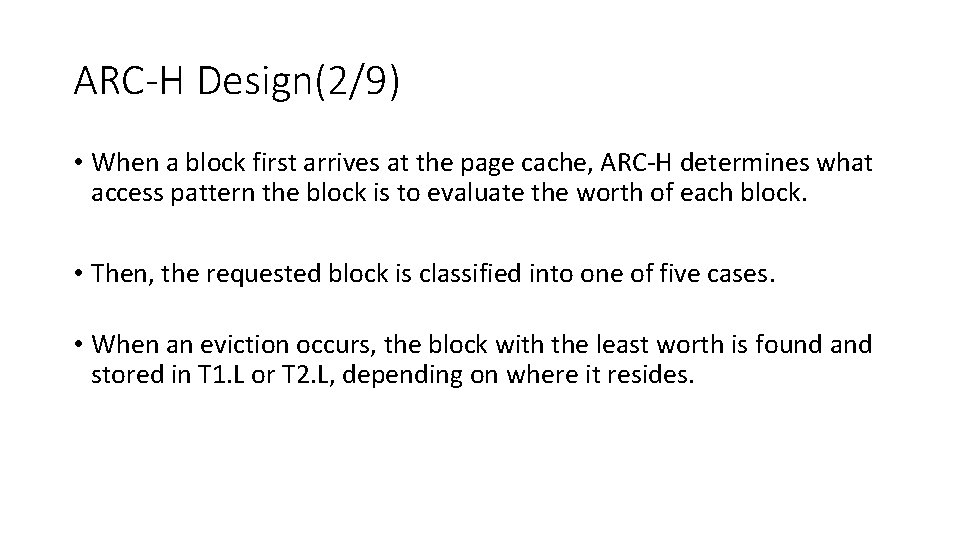

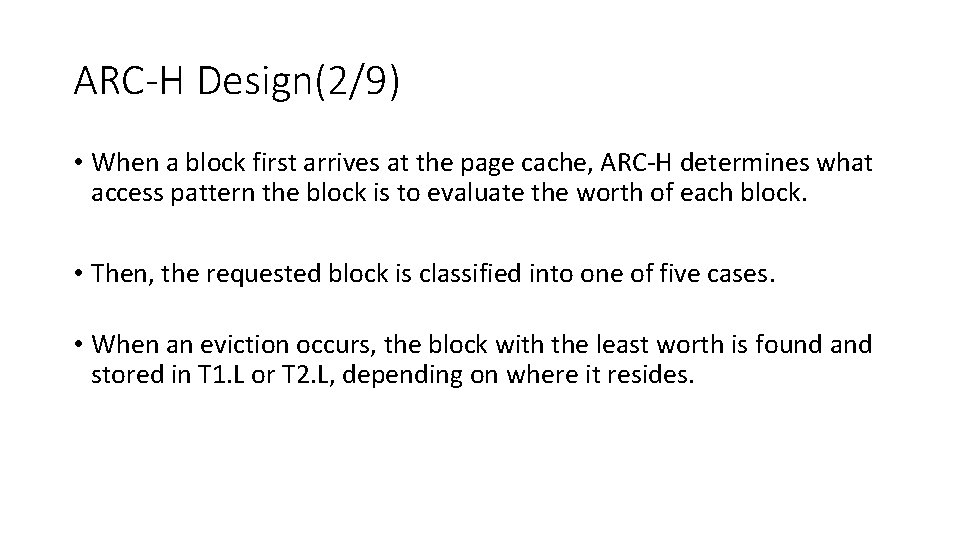

ARC-H Design(2/9) • When a block first arrives at the page cache, ARC-H determines the block's access pattern in order to evaluate its worth. • Then, the requested block is classified into one of five cases. • When an eviction occurs, the block with the least worth is found and stored in T1.L or T2.L, depending on where it resides.
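The worth evaluation described above could be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation. The `Request` type, the `PatternDetector` heuristic (a request counts as sequential when it follows the previous block number on the same device), and every weight value are hypothetical. The only property taken from the slides is the direction: sequential disk blocks and read-type flash blocks are treated as less valuable.

```python
from dataclasses import dataclass


@dataclass
class Request:
    device: str      # "disk" or "flash"
    block: int       # block number
    is_write: bool


class PatternDetector:
    """Assumed heuristic: sequential means 'previous block + 1' on the same device."""

    def __init__(self):
        self.last_block = {}

    def is_sequential(self, req):
        prev = self.last_block.get(req.device)
        self.last_block[req.device] = req.block
        return prev is not None and req.block == prev + 1


def worth(req, sequential):
    """Hypothetical weights; larger means more worth keeping in the cache."""
    if req.device == "disk":
        return 1 if sequential else 5   # random disk accesses are costly to repeat
    return 4 if req.is_write else 1     # flash reads are cheap to repeat


detector = PatternDetector()
trace = [
    Request("disk", 10, False),
    Request("disk", 11, False),
    Request("flash", 3, True),
    Request("flash", 7, False),
]
weights = [worth(r, detector.is_sequential(r)) for r in trace]
```

With a table like this, an eviction scan only needs to compare stored weights, which is what lets the cache drop low-worth blocks first.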

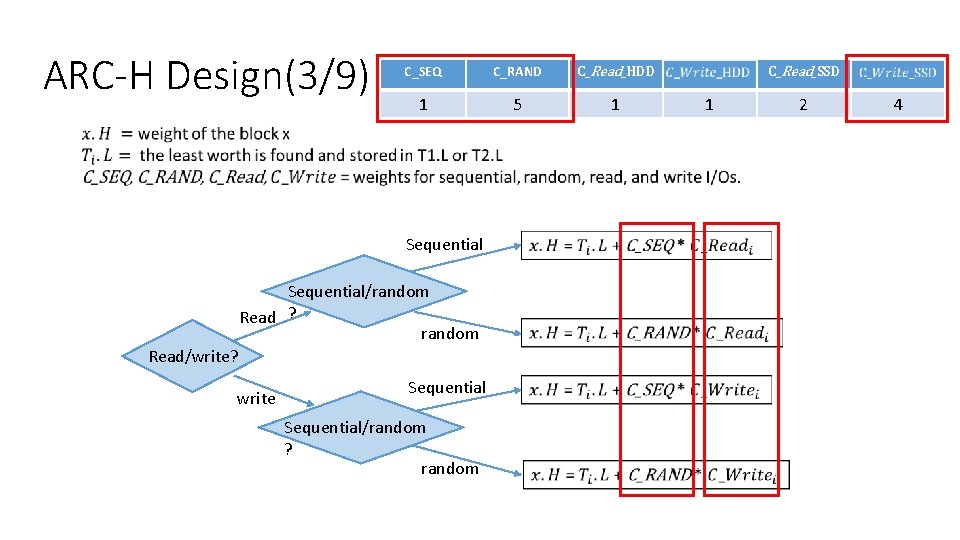

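ARC-H Design(3/9) [Figure: decision tree that assigns a block's worth from its access pattern. Decision nodes: sequential/random? and read/write?. Labels shown: C_SEQ, C_RAND, C_Read_HDD, C_Read_SSD. Values shown next to C_Read_HDD: 1, 5, 1; next to C_Read_SSD: 1, 2, 4.]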

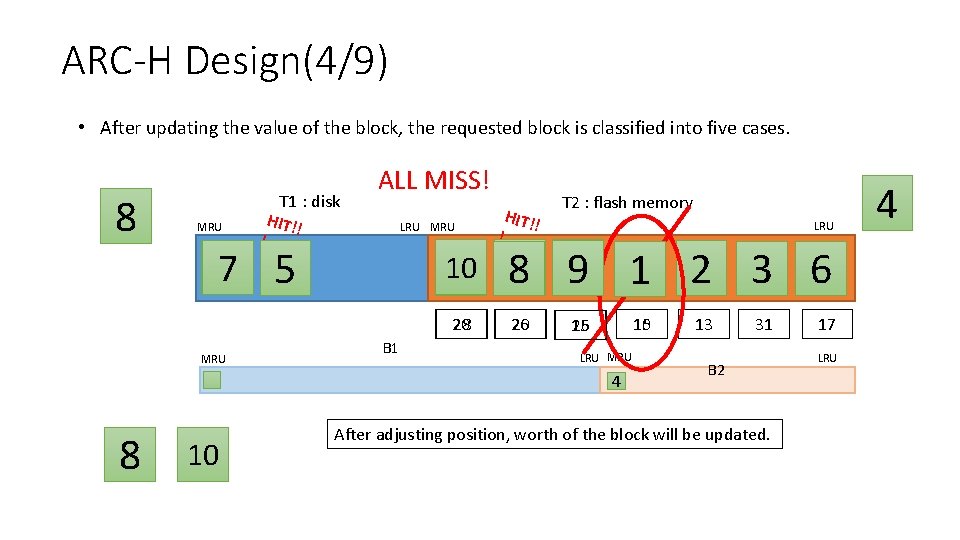

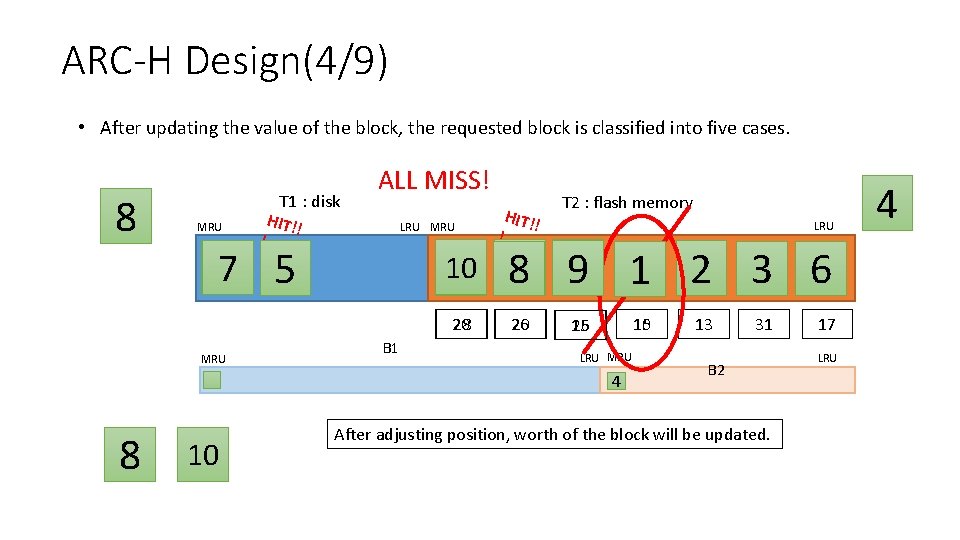

ARC-H Design(4/9) • After updating the value of the block, the requested block is classified into five cases. • After adjusting position, the worth of the block will be updated. [Figure: animated example over T1 (disk), T2 (flash memory) and the ghost buffers B1 and B2, marking hit cases and an all-miss case; the per-block values are not recoverable from the slide text.]

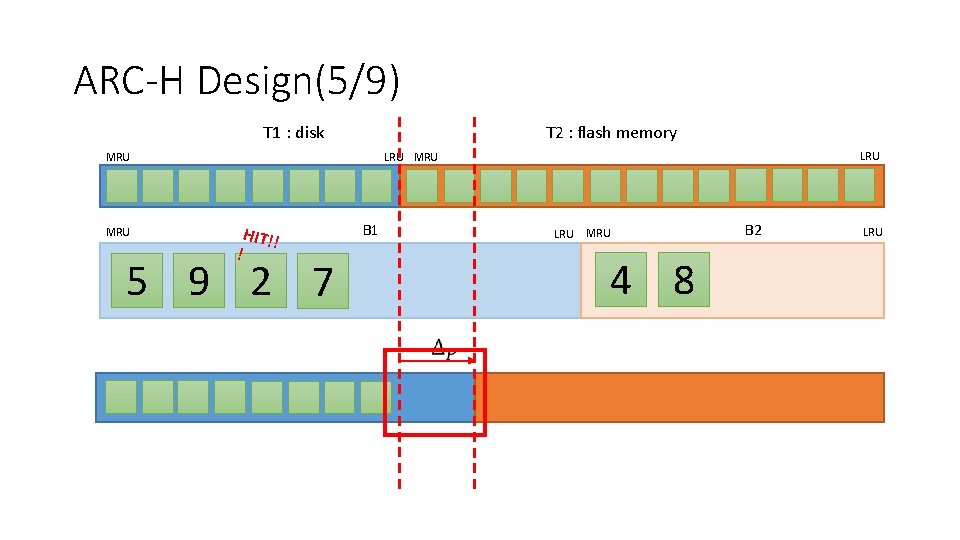

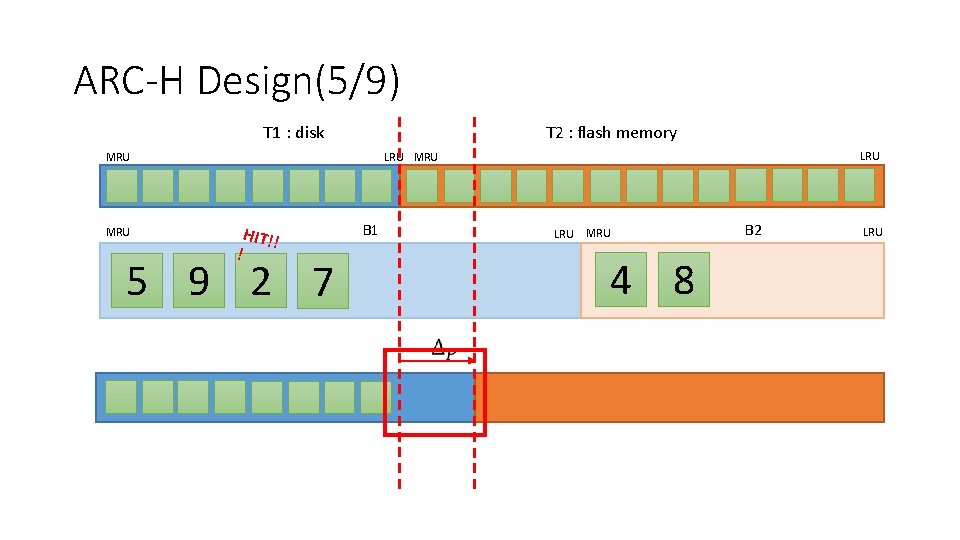

ARC-H Design(5/9) [Figure: hit example over T1 (disk), T2 (flash memory) and the ghost buffers B1 and B2, each ordered from MRU to LRU; the per-block values are not recoverable from the slide text.]

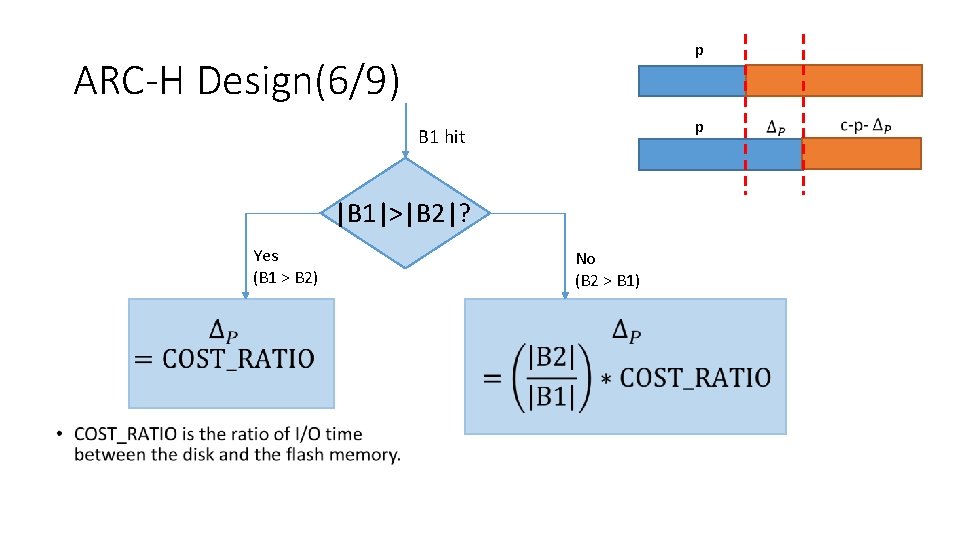

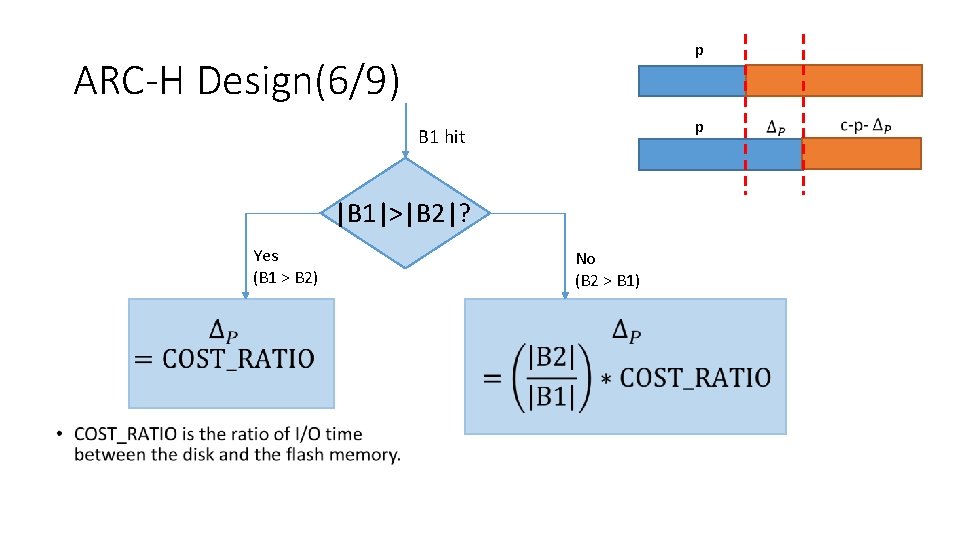

ARC-H Design(6/9) [Figure: adjusting the partition target p on a B1 hit. Decision: |B1| > |B2|? with a Yes branch (B1 > B2) and a No branch (B2 > B1).]

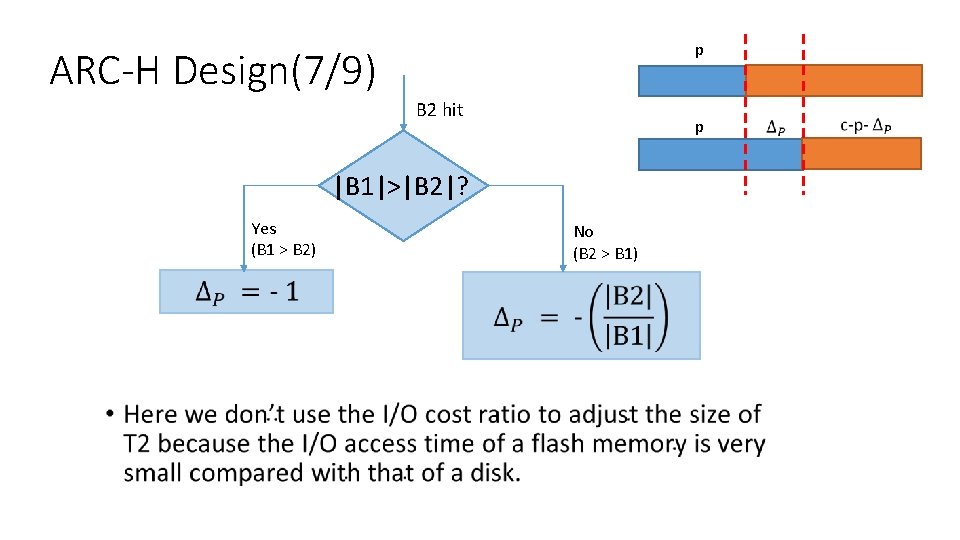

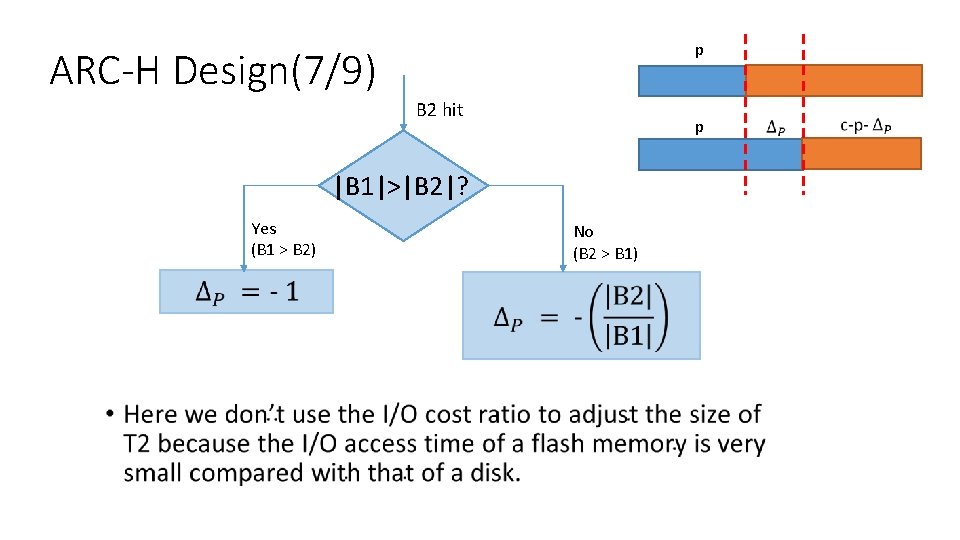

ARC-H Design(7/9) [Figure: adjusting the partition target p on a B2 hit. Decision: |B1| > |B2|? with a Yes branch (B1 > B2) and a No branch (B2 > B1).]

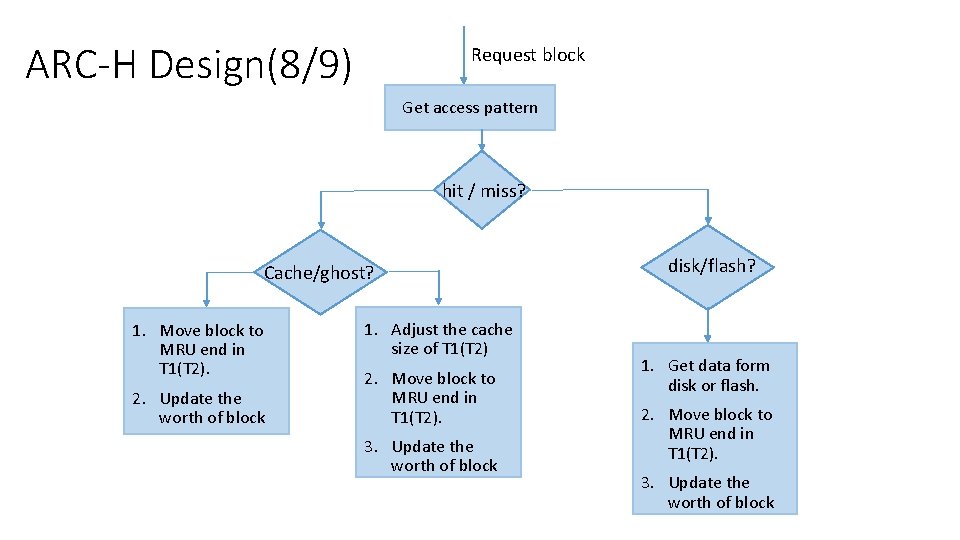

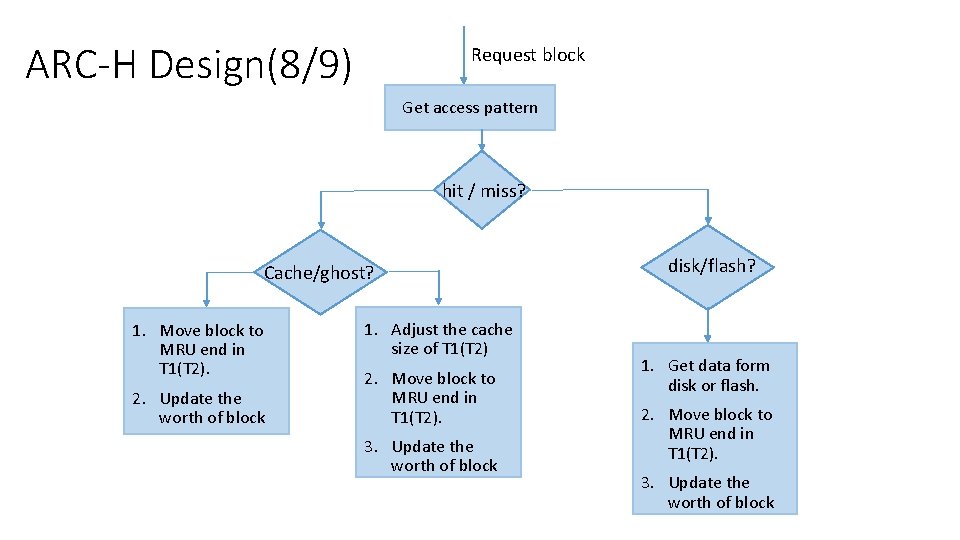

ARC-H Design(8/9) Request block → get access pattern → hit / miss? On a hit: cache/ghost? On a miss: disk/flash?
• Cache hit: 1. Move block to MRU end in T1 (T2). 2. Update the worth of the block.
• Ghost hit: 1. Adjust the cache size of T1 (T2). 2. Move block to MRU end in T1 (T2). 3. Update the worth of the block.
• Miss: 1. Get data from disk or flash. 2. Move block to MRU end in T1 (T2). 3. Update the worth of the block.
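The decision flow on this slide can be read as a three-way dispatch. The sketch below only encodes which branch a request takes and which steps the slide lists for it. It does not implement eviction or the partition adjustment, whose details are not given here. The function name `handle_request` and the use of plain sets for the four lists are assumptions.

```python
def handle_request(block, t1, t2, b1, b2):
    """Return (case, steps) for a requested block.

    t1/t2 are the cached blocks of the disk and flash partitions,
    b1/b2 the block ids remembered in the ghost buffers.
    """
    if block in t1 or block in t2:
        return "cache hit", [
            "move block to MRU end",
            "update worth",
        ]
    if block in b1 or block in b2:
        return "ghost hit", [
            "adjust cache size",
            "move block to MRU end",
            "update worth",
        ]
    return "miss", [
        "get data from disk or flash",
        "move block to MRU end",
        "update worth",
    ]


t1, t2, b1, b2 = {10, 11}, {3}, {20}, {7}
cases = [handle_request(b, t1, t2, b1, b2)[0] for b in (3, 20, 99)]
```

All three branches end with the same two steps. They differ only in the first action: none on a cache hit, a partition resize on a ghost hit, and a device read on a miss.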

ARC-H Design(9/9) • ARC-H is designed to improve the total system response of a heterogeneous mobile storage system. • ARC-H improves the total service time by • (1) adjusting the cache partition of each device, based on the ghost buffers, to increase the number of valuable blocks. • (2) evicting less valuable blocks earlier, reducing the space taken by sequential blocks for the disk and read-type blocks for the flash memory.

OUTLINE • Introduction • Background & Motivation • ARC-H Design • Experimental results • Conclusions

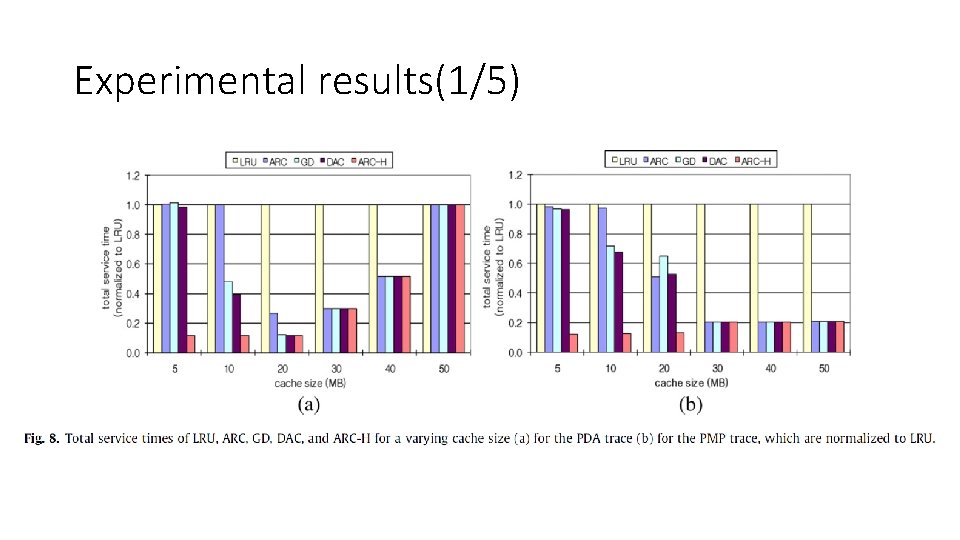

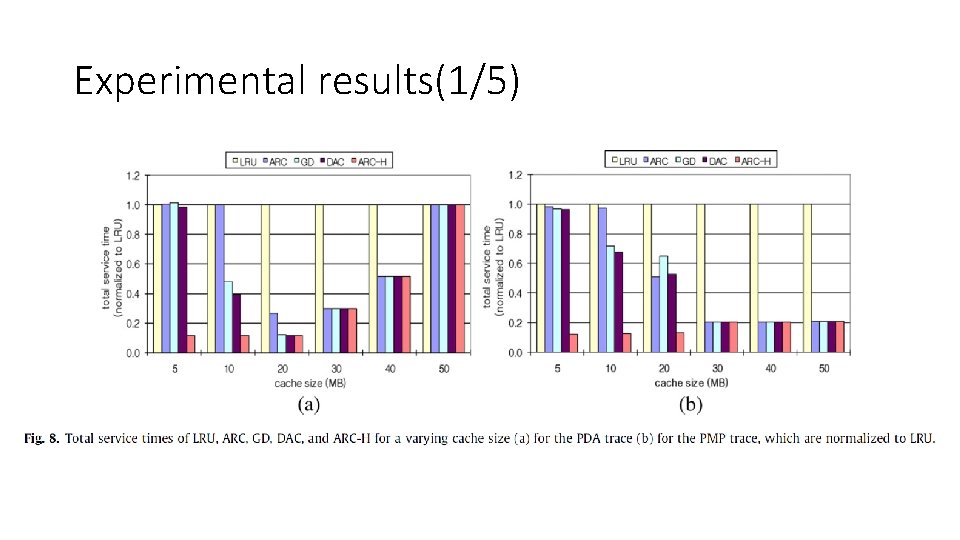

Experimental results(1/5)

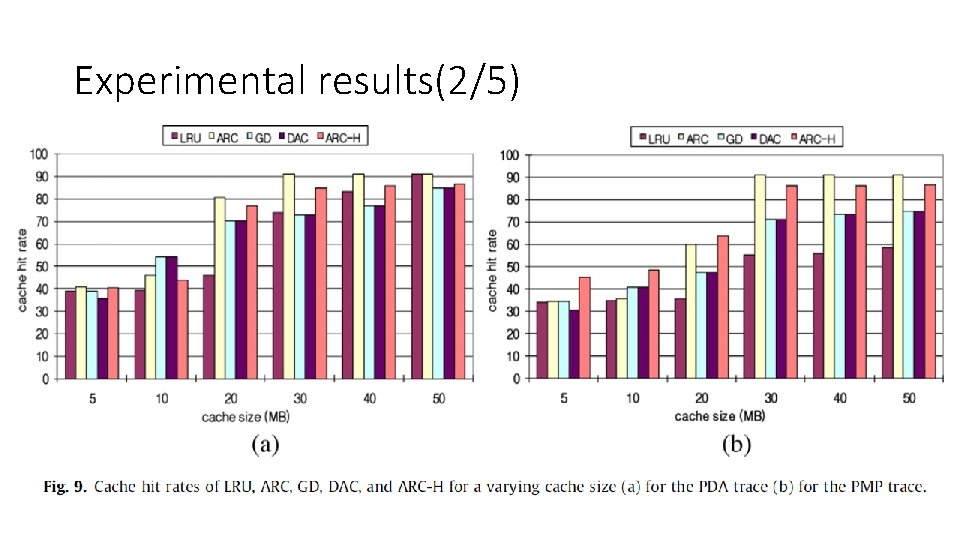

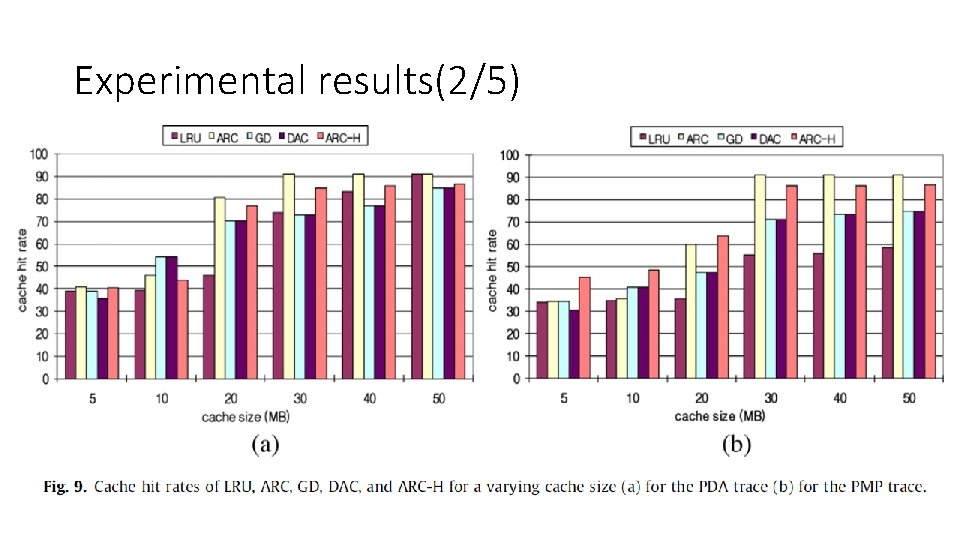

Experimental results(2/5)

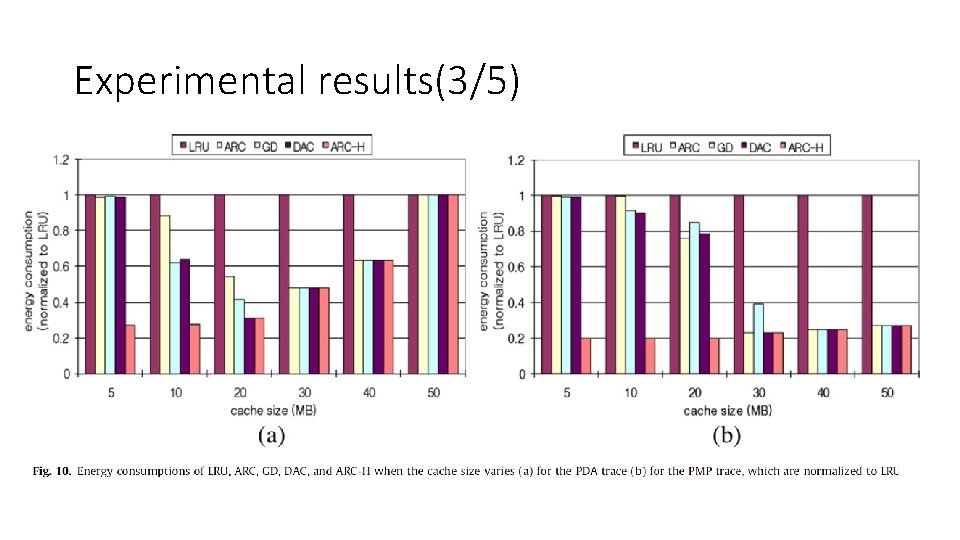

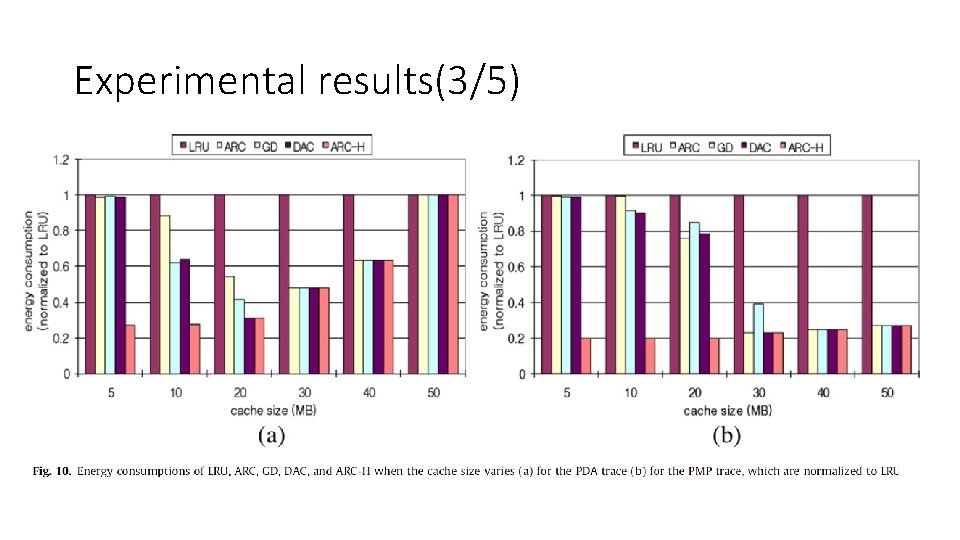

Experimental results(3/5)

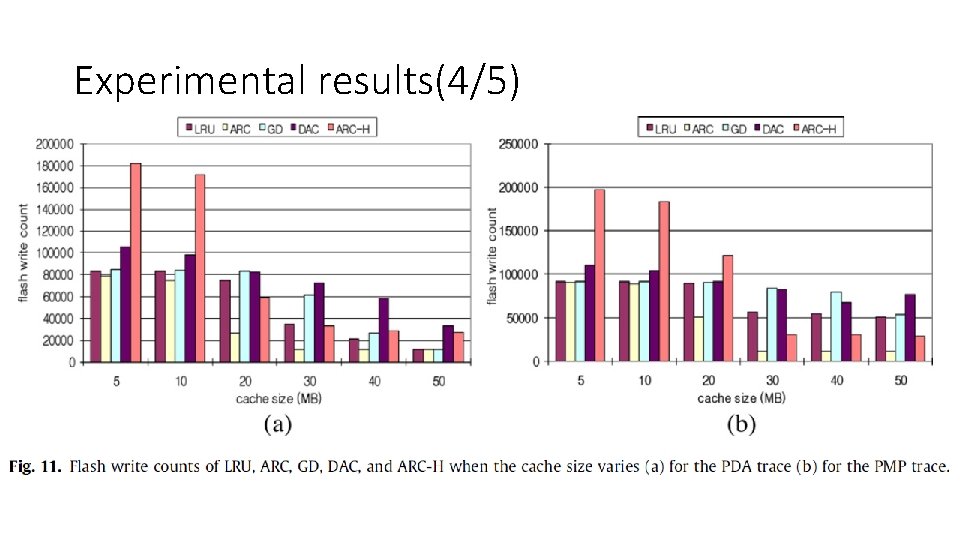

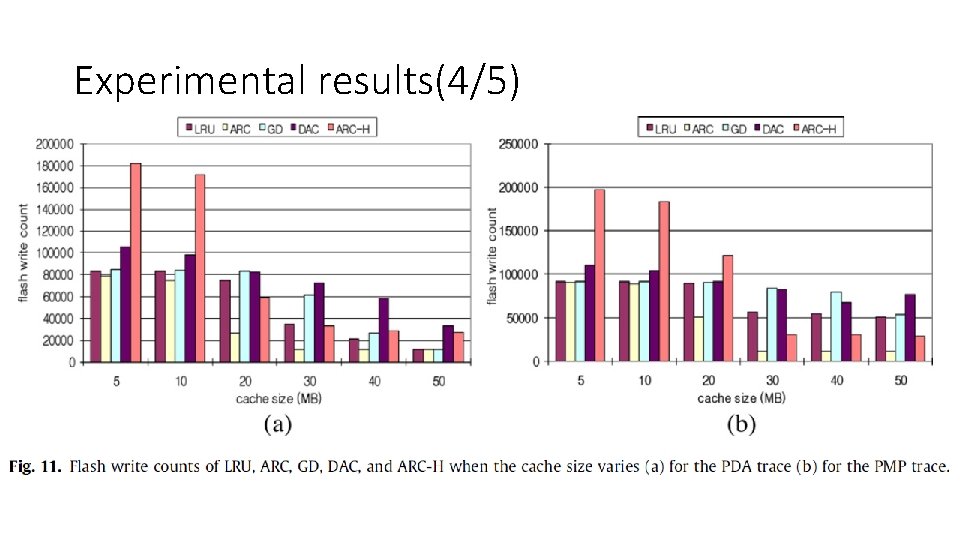

Experimental results(4/5)

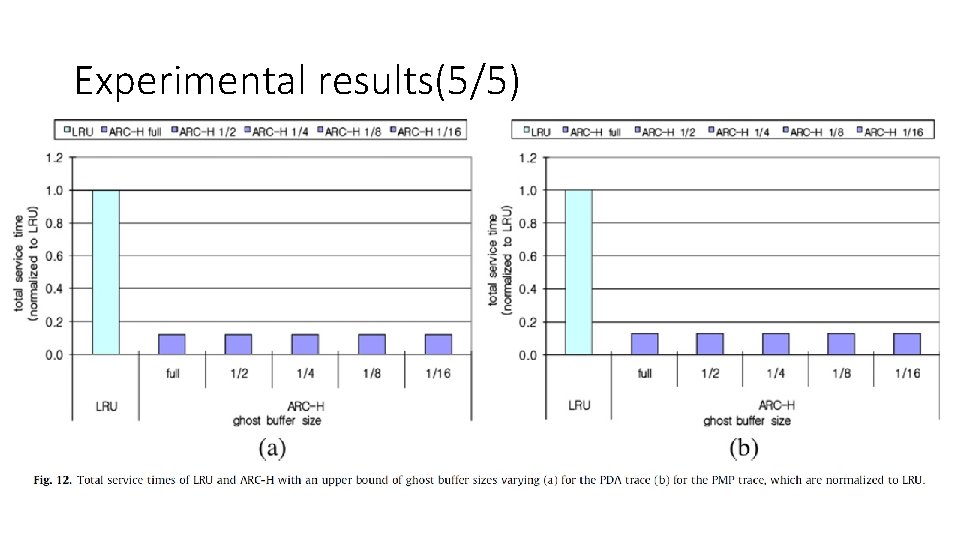

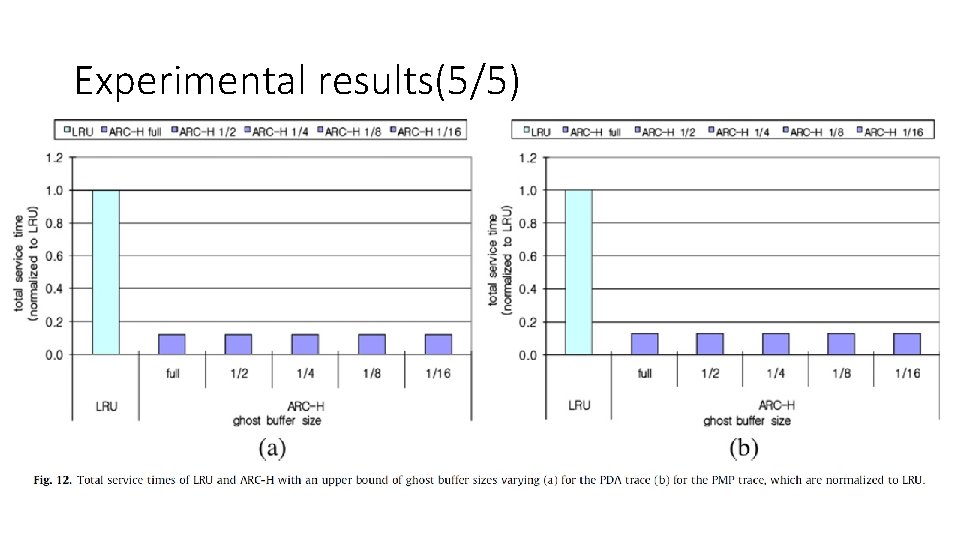

Experimental results(5/5)

OUTLINE • Introduction • Background & Motivation • ARC-H Design • Experimental results • Conclusions

Conclusions(1/1) • We have proposed a new adaptive device-aware cache replacement algorithm called ARC-H for heterogeneous storage systems. • ARC-H seeks to overcome the inability of existing cache replacement techniques to deal with the very different I/O costs of heterogeneous storage devices. • Extensive trace-driven simulations showed that the ARC-H algorithm reduced the total service time by up to 88% compared with existing caching algorithms for a cache size of 20 Mb, when a 1.8-inch hard disk and a NAND flash memory are employed. • The ARC-H algorithm reduced the energy consumption by up to 81% over existing caching algorithms.