Apache TVM and ONNX: What can ONNX do for DL Compilers (and vice versa)?

Jason Knight, CPO (OctoML). Automate efficient AI/ML ops through a unified software foundation. jknight@octoml.ai

Agenda
● Intro to TVM
● Cool results (TVM + ONNX)
● How does it work? … and in 10 minutes … Let’s go!
● OctoML’s wishlist for ONNX

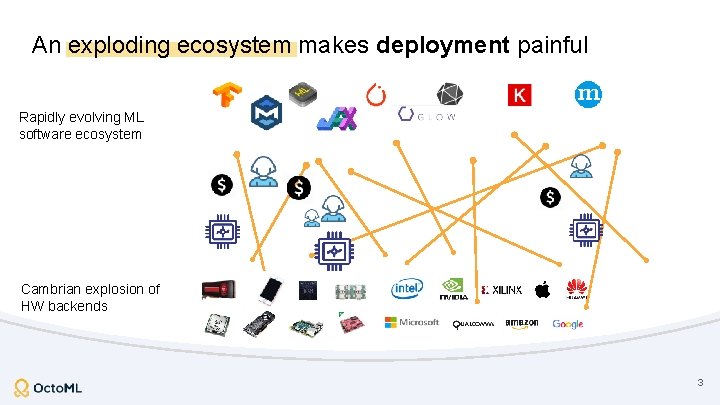

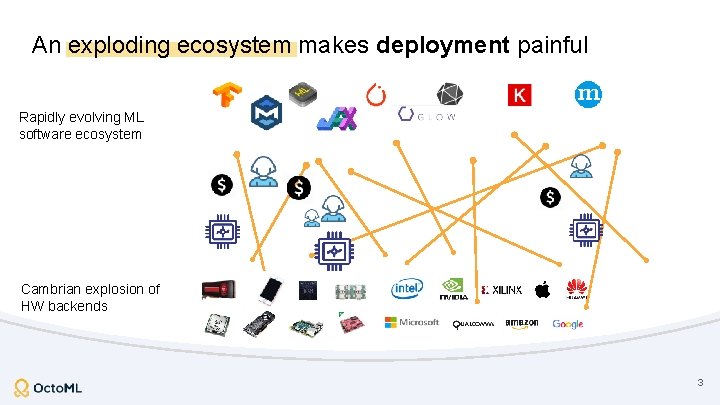

An exploding ecosystem makes deployment painful: a rapidly evolving ML software ecosystem meets a Cambrian explosion of hardware backends.

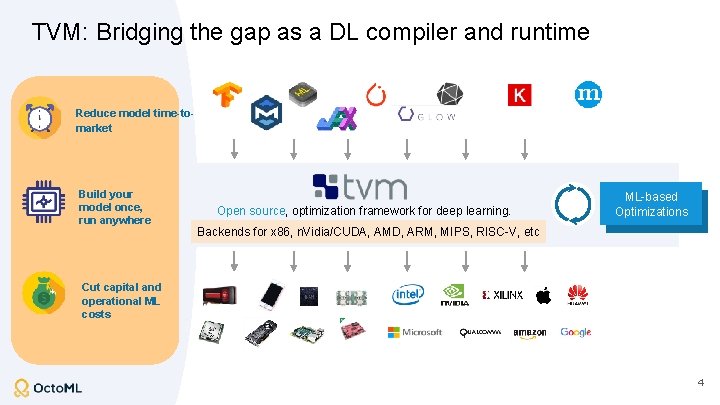

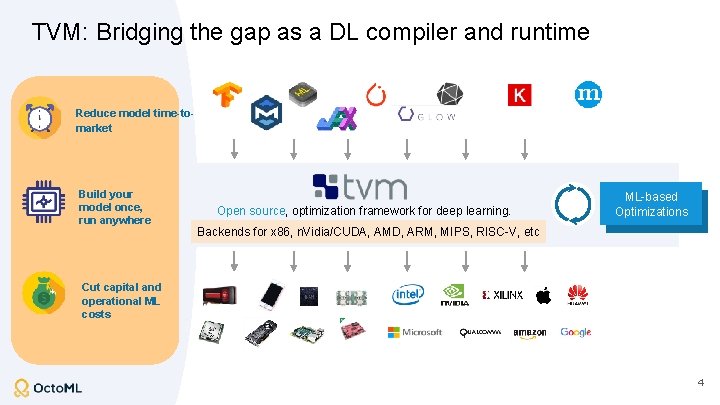

TVM: Bridging the gap as a DL compiler and runtime
● Reduce model time-to-market: build your model once, run anywhere
● Open-source optimization framework for deep learning
● ML-based optimizations
● Backends for x86, NVIDIA/CUDA, AMD, ARM, MIPS, RISC-V, etc.
● Cut capital and operational ML costs

TVM is an emerging industry-standard ML stack
● Every “Alexa” wake-up today, across all devices, uses a model optimized with TVM
● Open source: 428+ contributors from industry and academia
● “[TVM enabled] real-time on mobile CPUs for free... We are excited about the performance TVM achieves.”
● More than 85x speed-up for a speech recognition model
● Bing query understanding: 112 ms (TensorFlow) -> 34 ms (TVM)
● QnA bot: 73 ms -> 28 ms (CPU), 10.1 ms -> 5.5 ms (GPU)
● “TVM is key to ML Access on Hexagon” - Jeff Gehlhaar, VP Technology
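To make the latency figures above easier to compare, the short sketch below converts each quoted baseline/TVM pair into a speed-up factor (baseline latency divided by TVM latency). The numbers are taken directly from the slide; the dictionary labels and the `speedup` helper are illustrative names introduced here, not part of any TVM API.

```python
# Latencies quoted on the slide, in milliseconds: (baseline, TVM).
LATENCIES = {
    "Bing query understanding (TensorFlow vs. TVM)": (112.0, 34.0),
    "QnA bot, CPU": (73.0, 28.0),
    "QnA bot, GPU": (10.1, 5.5),
}


def speedup(baseline_ms, tvm_ms):
    """Hypothetical helper: how many times faster the TVM-compiled model runs."""
    return baseline_ms / tvm_ms


for name, (base_ms, tvm_ms) in LATENCIES.items():
    print(f"{name}: {speedup(base_ms, tvm_ms):.2f}x")
# Bing query understanding (TensorFlow vs. TVM): 3.29x
# QnA bot, CPU: 2.61x
# QnA bot, GPU: 1.84x
```

In other words, the Bing result corresponds to roughly a 3.3x speed-up, while the QnA bot gains about 2.6x on CPU and 1.8x on GPU.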

The power of TVM + ONNX (AKA Results)

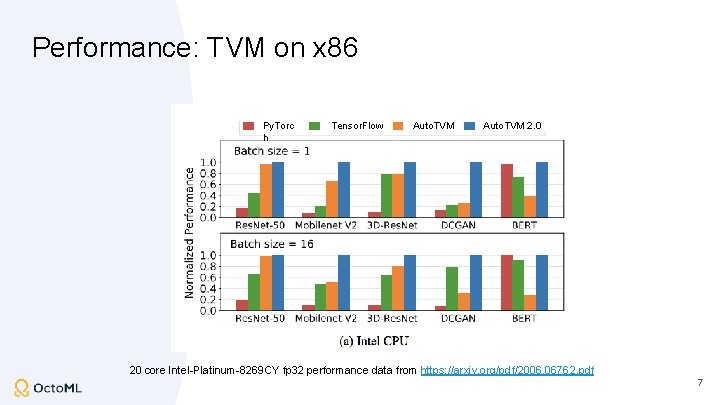

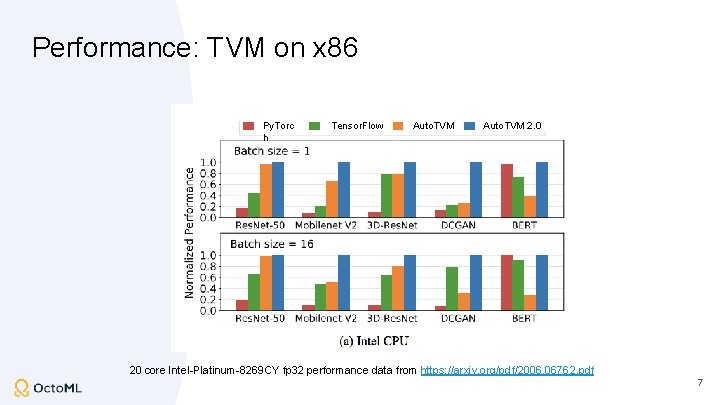

Performance: TVM on x86 (PyTorch vs. TensorFlow vs. AutoTVM 2.0). 20-core Intel Platinum 8269CY, fp32; performance data from https://arxiv.org/pdf/2006.06762.pdf

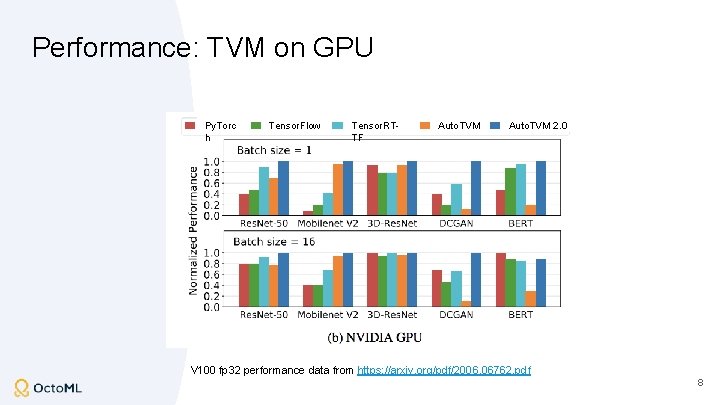

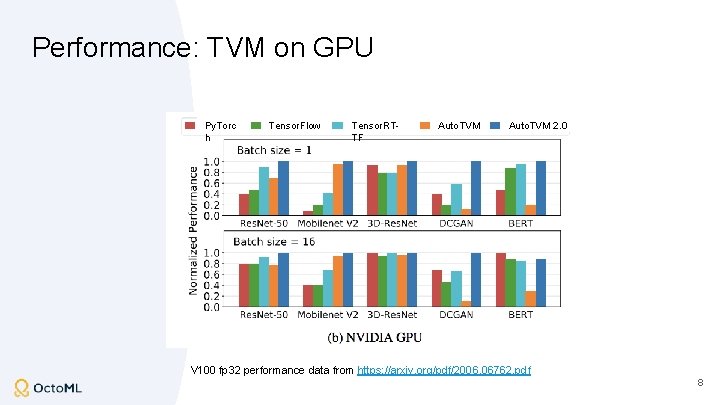

Performance: TVM on GPU (PyTorch vs. TensorFlow vs. TensorRT-TF vs. AutoTVM 2.0). V100, fp32; performance data from https://arxiv.org/pdf/2006.06762.pdf

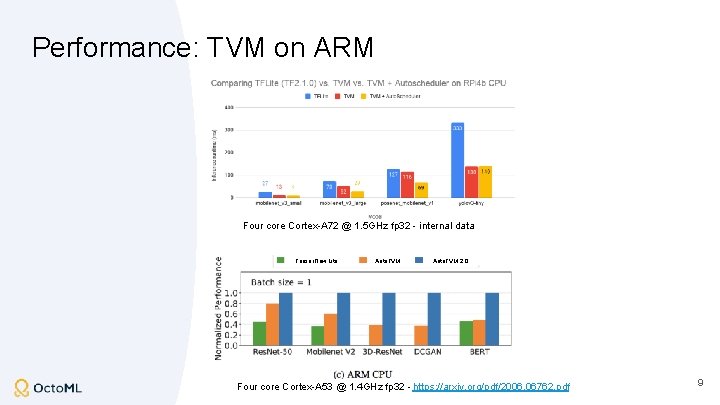

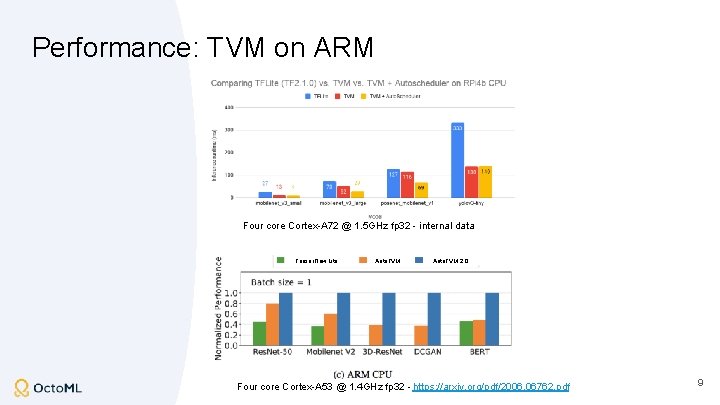

Performance: TVM on ARM (TensorFlow Lite vs. AutoTVM 2.0)
● Four-core Cortex-A72 @ 1.5 GHz, fp32: internal data
● Four-core Cortex-A53 @ 1.4 GHz, fp32: https://arxiv.org/pdf/2006.06762.pdf

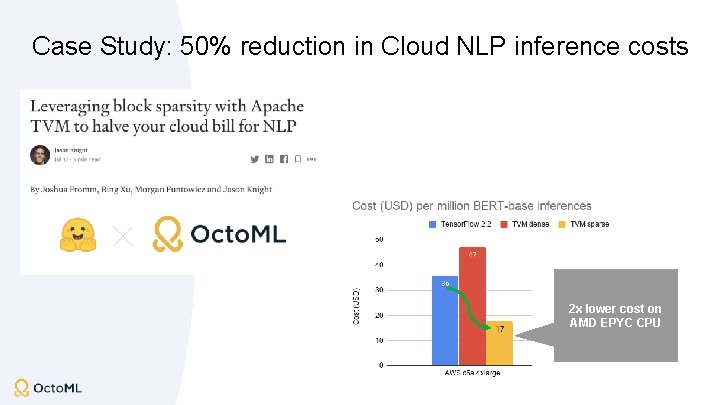

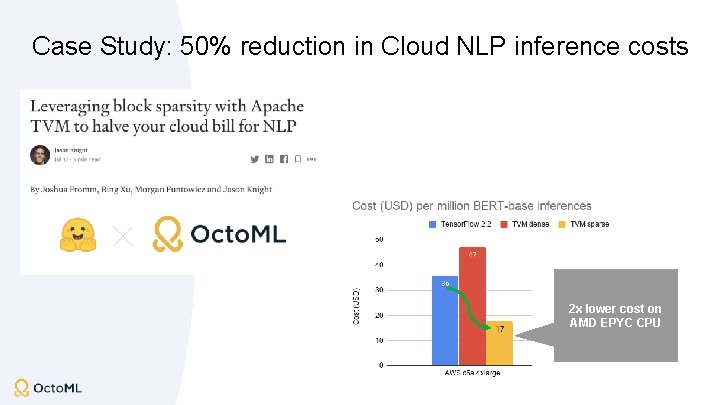

Case study: 50% reduction in cloud NLP inference costs (2x lower cost on AMD EPYC CPU)

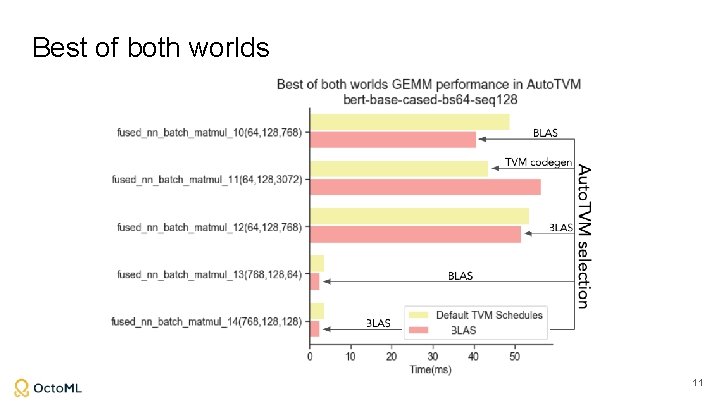

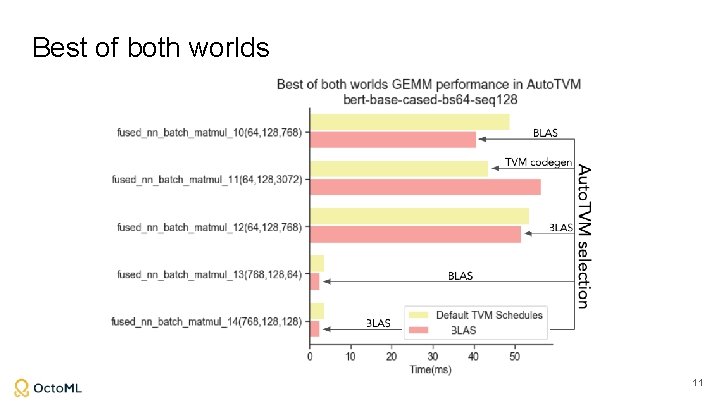

Best of both worlds

Not enough time!
● TensorCore performance (better than cuBLAS)
● Classical ML (better than XGBoost and RAPIDS)
● µTVM for TinyML: ML for microcontrollers
● Int{8, 4, 3, 2, 1} and posit quantization support
● ML in your browser: WebGPU and WASM as TVM backends
● … and more!

How does it work?

AutoTVM overview: automatically adapt to each hardware type by learning
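The idea of "adapting to hardware by learning" can be sketched as a loop: propose candidate schedule configurations, rank them with a cost model trained on the measurements collected so far, run only the most promising ones on the device, and feed the results back into the model. The sketch below is a toy under stated assumptions, not AutoTVM's implementation: AutoTVM uses a learned model such as gradient-boosted trees and explores with simulated annealing, whereas here a nearest-neighbour predictor ranks random samples, and `measure` is a hypothetical synthetic latency function standing in for compiling and timing a real kernel. All names (`measure`, `predict`, `tune`, `SPACE`) are illustrative.

```python
import random

# Hypothetical search space: (tile_x, tile_y) choices for some kernel.
SIZES = (1, 2, 4, 8, 16, 32, 64)
SPACE = [(x, y) for x in SIZES for y in SIZES]


def measure(config):
    """Hypothetical stand-in for compiling and timing a kernel on hardware.

    This synthetic "device" happens to prefer 32x8 tiles.
    """
    tile_x, tile_y = config
    return abs(tile_x - 32) + abs(tile_y - 8) + 1.0


def predict(history, config):
    """Toy cost model: latency of the nearest already-measured config."""
    if not history:
        return 0.0  # no data yet, so every candidate ties
    nearest = min(history, key=lambda c: abs(c[0] - config[0]) + abs(c[1] - config[1]))
    return history[nearest]


def tune(space, n_trials, batch=4, seed=0):
    """Measure up to n_trials configs, guided by the cost model."""
    rng = random.Random(seed)
    history = {}  # config -> measured latency (training data for the model)
    while len(history) < n_trials:
        unmeasured = [c for c in space if c not in history]
        if not unmeasured:
            break
        # Sample candidates, rank them by predicted latency, measure the best few.
        candidates = rng.sample(unmeasured, min(len(unmeasured), 16))
        candidates.sort(key=lambda c: predict(history, c))
        for config in candidates[:batch]:
            history[config] = measure(config)
    best = min(history, key=history.get)
    return best, history[best]


best, latency = tune(SPACE, n_trials=12)
print(best, latency)
```

The design point the sketch illustrates is that real measurements are expensive, so the cost model decides which few candidates deserve a hardware run; with a better model (such as the gradient-boosted trees AutoTVM uses), fewer trials are needed to approach the best configuration.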

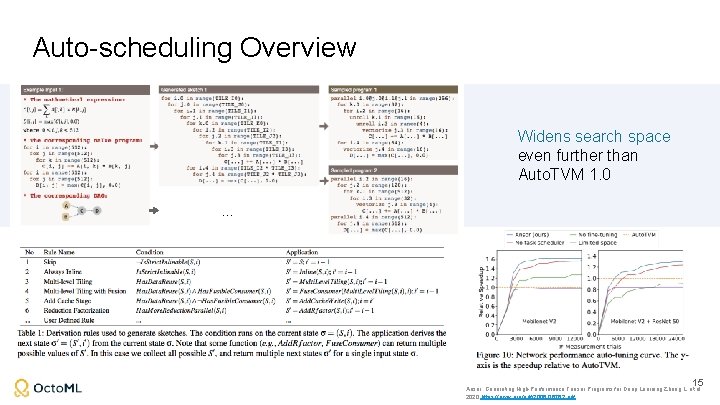

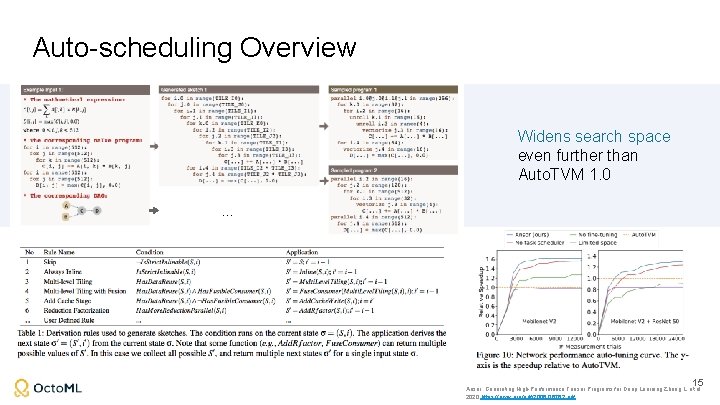

Auto-scheduling overview: widens the search space even further than AutoTVM 1.0... Ansor: Generating High-Performance Tensor Programs for Deep Learning. Zheng L, et al. 2020. https://arxiv.org/pdf/2006.06762.pdf
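To get a feel for how quickly a schedule search space grows, the sketch below counts only the tile-size choices for a 512x512x512 matrix multiply when each of its three loops is split into 2 factors versus 4 factors. This is an illustrative count under simplifying assumptions, not Ansor's actual search space, which also covers loop orders, fusion, and other structural choices; `count_splits` and `tiling_space` are hypothetical helpers introduced here.

```python
def count_splits(n, k):
    """Number of ordered ways to write n as a product of k positive integers."""
    if k == 1:
        return 1
    # Choose the first factor d (any divisor of n), then split the rest.
    return sum(count_splits(n // d, k - 1) for d in range(1, n + 1) if n % d == 0)


def tiling_space(extents, levels):
    """Tile-size combinations when every loop is split into `levels` factors."""
    total = 1
    for n in extents:
        total *= count_splits(n, levels)
    return total


MATMUL = (512, 512, 512)  # illustrative loop extents (i, j, k)
print(tiling_space(MATMUL, 2))  # 1000
print(tiling_space(MATMUL, 4))  # 10648000
```

Going from 2-level to 4-level splits multiplies the tile-size choices alone by more than four orders of magnitude, which is why a learned cost model is needed to search such spaces efficiently.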

OctoML’s wishlist for ONNX

We wish ONNX had…
● Even broader op coverage (e.g. EmbeddingBag)
● Broader non-ML (but adjacent) support:
  ○ More classical ML
  ○ GCNN/DGL
  ○ Graph workloads (GraphBLAS, Metagraph)

And on the “pie-in-the-sky” list:
● Framework integrations
  ○ PyTorch: so we don’t have to deal with TorchScript
  ○ MLIR dialect: so we can easily plug into TensorFlow (for runtime JIT)
● Quantization-aware standardization
  ○ For example: canonicalization of models coming out of quantization-aware-training pipelines

Thanks!