
- Slides: 17

Apache Lucene in LexGrid

Lucene Overview
• High-performance, full-featured text search engine library.
• Written entirely in Java.
• An open source project available for free download.
• http://lucene.apache.org/

Lucene structure overview
• Index: Contains a sequence of documents.
• Document: Is a sequence of fields.
• Field: Is a named sequence of terms.
• Term: Is a string.
  – The same string can be assigned to different fields.
  – Lucene indexes only text (Strings).

Index or Store Fields
• Index:
  – Will be used for searching.
  – Stores statistics about terms in order to make term-based search more efficient.
  – Inverted index: for a term, it can list all the documents that contain it.
• Store:
  – Not used for searching.
  – Helpful for debugging.
  – Term is stored in the index literally.
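To make the inverted-index idea concrete, here is a minimal sketch in plain Java. It is not Lucene's implementation or API; the class name `ToyInvertedIndex` and its methods are hypothetical, and the structure only illustrates how a (field, term) pair can be mapped to the documents that contain it.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

/** Toy inverted index (hypothetical): (field, term) -> ids of documents containing it. */
final class ToyInvertedIndex {
    private final Map<String, Map<String, SortedSet<Integer>>> postings = new HashMap<>();

    /** Records that the given document contains the term in the given field. */
    void add(int docId, String field, String term) {
        postings.computeIfAbsent(field, f -> new HashMap<>())
                .computeIfAbsent(term, t -> new TreeSet<>())
                .add(docId);
    }

    /** Lists every document that contains the term in the field (empty if none). */
    SortedSet<Integer> documentsContaining(String field, String term) {
        Map<String, SortedSet<Integer>> byTerm = postings.get(field);
        if (byTerm == null || !byTerm.containsKey(term)) {
            return new TreeSet<>();
        }
        return byTerm.get(term);
    }
}
```

Because the lookup is keyed by field as well as by string, the same string indexed under two fields produces two independent posting lists.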

Analyzer
• Responsible for breaking up the text in each of the document fields into individual tokens.
• Tokens are the smallest piece of information that you can search.
• You can also use a different analyzer for each field so they can be treated differently. However, at search time your search analyzer must match your indexing analyzer in order to get good results.

The Mapping
• Our indexer code reads LexGrid data from the database.
• The reader code needs to assemble the concept information so that it can call this method:
  protected void addConcept(String codingSchemeName, String codingSchemeId, String conceptCode, String propertyType, String propertyValue, Boolean isActive, String presentationFormat, String language, Boolean isPreferred, String conceptStatus, String propertyId, String degreeOfFidelity, Boolean matchIfNoContext, String representationalForm, String[] sources, String[] usageContexts, Qualifier[] qualifiers)
• Every time this method is called, it creates a Lucene document out of this information.

The Mapping (Cont.)
• The above method is called for every Presentation, Property, Definition, etc. in a concept code.
• This is all of the information from LexGrid that is currently stored in the index.
• When the Boolean parameters are indexed, they are stored as a 'T' or an 'F', if supplied.
• When constructing a field, we have to decide if it will be analyzed, stored, and indexed.
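The 'T' / 'F' convention for Boolean parameters can be sketched as a small helper. The class and method names below are hypothetical; the only behavior taken from the slides is that a supplied Boolean becomes 'T' or 'F', and an unsupplied one produces no value.

```java
/** Hypothetical helper showing the 'T' / 'F' encoding of optional Boolean parameters. */
final class BooleanFlagEncoding {
    /** Returns "T" or "F", or null when the flag was not supplied so no field is added. */
    static String encode(Boolean flag) {
        if (flag == null) {
            return null;
        }
        return flag ? "T" : "F";
    }
}
```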

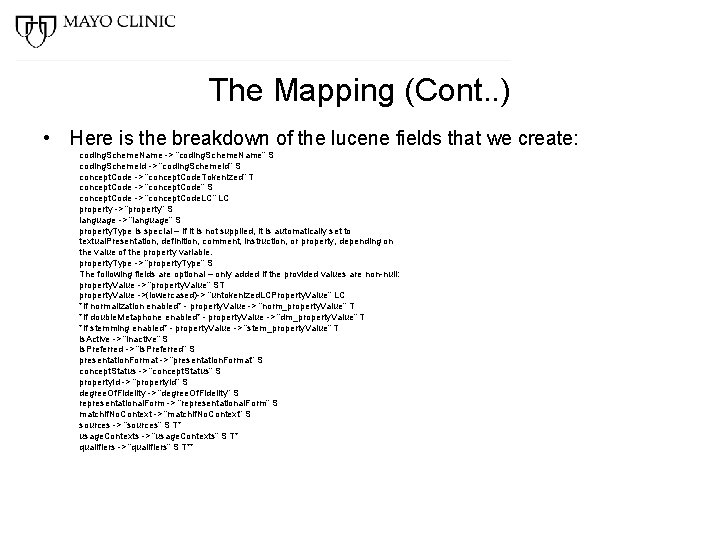

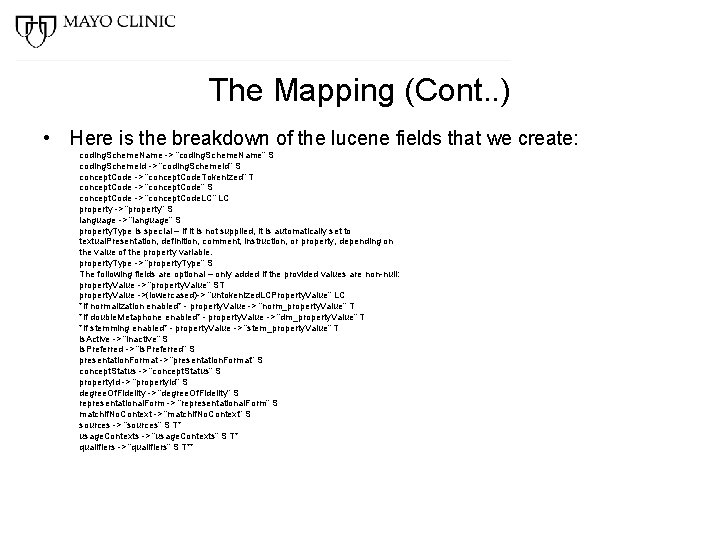

The Mapping (Cont.)
• Here is the breakdown of the Lucene fields that we create:
  codingSchemeName -> “codingSchemeName” S
  codingSchemeId -> “codingSchemeId” S
  conceptCode -> “conceptCodeTokenized” T
  conceptCode -> “conceptCode” S
  conceptCode -> “conceptCodeLC” LC
  property -> “property” S
  language -> “language” S
• propertyType is special: if it is not supplied, it is automatically set to textualPresentation, definition, comment, instruction, or property, depending on the value of the property variable.
  propertyType -> “propertyType” S
• The following fields are optional; they are only added if the provided values are non-null:
  propertyValue -> “propertyValue” S T
  propertyValue ->(lowercased)-> “untokenizedLCPropertyValue” LC
  *If normalization enabled* - propertyValue -> “norm_propertyValue” T
  *If doubleMetaphone enabled* - propertyValue -> “dm_propertyValue” T
  *If stemming enabled* - propertyValue -> “stem_propertyValue” T
  isActive -> “isActive” S
  isPreferred -> “isPreferred” S
  presentationFormat -> “presentationFormat” S
  conceptStatus -> “conceptStatus” S
  propertyId -> “propertyId” S
  degreeOfFidelity -> “degreeOfFidelity” S
  representationalForm -> “representationalForm” S
  matchIfNoContext -> “matchIfNoContext” S
  sources -> “sources” S T*
  usageContexts -> “usageContexts” S T*
  qualifiers -> “qualifiers” S T**

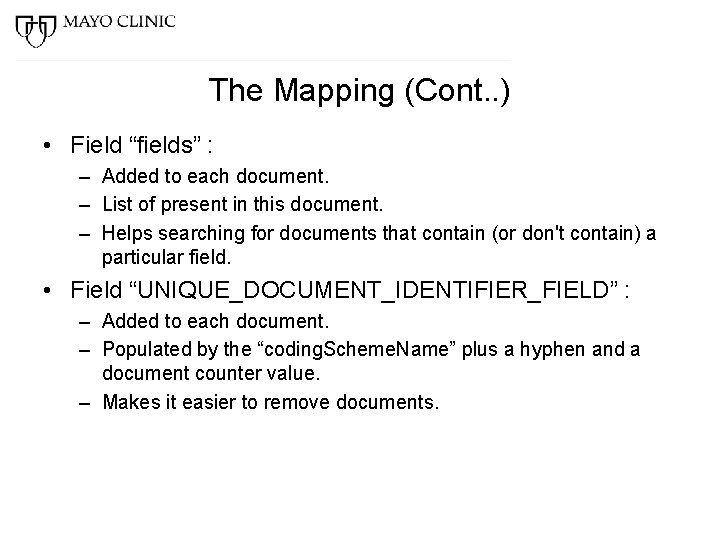

The Mapping (Cont.)
• Field “fields”:
  – Added to each document.
  – List of the fields present in this document.
  – Helps searching for documents that contain (or don't contain) a particular field.
• Field “UNIQUE_DOCUMENT_IDENTIFIER_FIELD”:
  – Added to each document.
  – Populated by the “codingSchemeName” plus a hyphen and a document counter value.
  – Makes it easier to remove documents.
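The two bookkeeping fields can be sketched as follows. This is an illustration only: the document is modeled as a plain map rather than a Lucene document, the class `DocumentBookkeeping` is hypothetical, and both the space-separated layout of the “fields” value and a counter starting at 0 are assumptions the slides do not specify.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical sketch of adding the "fields" and unique-identifier fields to a document. */
final class DocumentBookkeeping {
    // Assumption: the counter starts at 0; the slides only mention "a document counter value".
    private int documentCounter = 0;

    /** Returns a copy of the document (field name -> value) with both bookkeeping fields added. */
    Map<String, String> withBookkeeping(String codingSchemeName, Map<String, String> fields) {
        Map<String, String> doc = new LinkedHashMap<>(fields);
        // "fields": names of the fields present in this document (space-separated by assumption).
        doc.put("fields", String.join(" ", fields.keySet()));
        // Unique identifier: codingSchemeName, a hyphen, then the document counter value.
        doc.put("UNIQUE_DOCUMENT_IDENTIFIER_FIELD", codingSchemeName + "-" + documentCounter++);
        return doc;
    }
}
```

With an identifier of this shape, a single document can be targeted for removal by matching one exact value.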

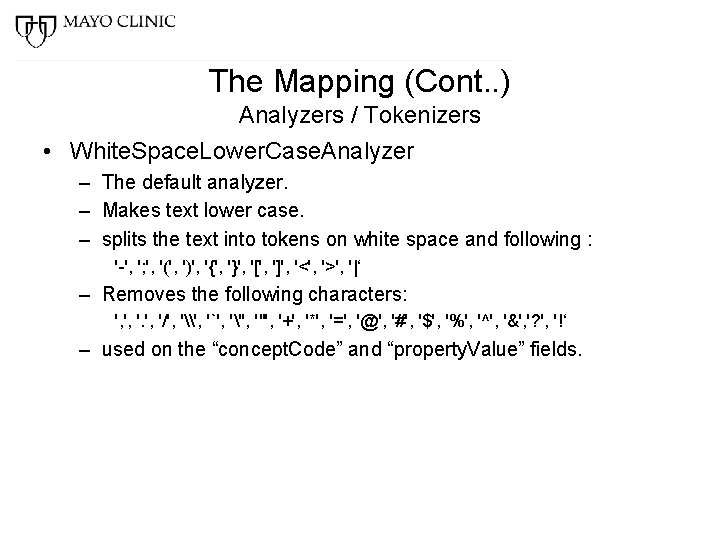

The Mapping (Cont.) Analyzers / Tokenizers
• WhiteSpaceLowerCaseAnalyzer
  – The default analyzer.
  – Makes text lower case.
  – Splits the text into tokens on white space and the following characters: '-', ';', '(', ')', '{', '}', '[', ']', '<', '>', '|'
  – Removes the following characters: ',', '/', '\', '`', ''', '"', '+', '*', '=', '@', '#', '$', '%', '^', '&', '?', '!'
  – Used on the “conceptCode” and “propertyValue” fields.
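The behavior described for the WhiteSpaceLowerCaseAnalyzer can be approximated in plain Java. The sketch below is not the LexGrid class and does not use Lucene's Analyzer API; `WhiteSpaceLowerCaseSketch` is a hypothetical name, and the order of operations (lower-casing first, then splitting and removing characters in one pass) is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical stand-in that mimics the splitting and removal rules listed above. */
final class WhiteSpaceLowerCaseSketch {
    private static final String SPLIT_CHARS = "-;(){}[]<>|";
    private static final String REMOVED_CHARS = ",/\\`'\"+*=@#$%^&?!";

    /** Lower-cases the text, splits on white space and SPLIT_CHARS, and drops REMOVED_CHARS. */
    static List<String> tokenize(String text) {
        List<String> tokens = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (char c : text.toLowerCase(Locale.ROOT).toCharArray()) {
            if (Character.isWhitespace(c) || SPLIT_CHARS.indexOf(c) >= 0) {
                flush(current, tokens);      // token boundary
            } else if (REMOVED_CHARS.indexOf(c) < 0) {
                current.append(c);           // keep everything that is not a removed character
            }
        }
        flush(current, tokens);
        return tokens;
    }

    /** Emits the pending token, if any, and resets the buffer. */
    private static void flush(StringBuilder current, List<String> tokens) {
        if (current.length() > 0) {
            tokens.add(current.toString());
            current.setLength(0);
        }
    }
}
```

For example, `tokenize("Heart-Attack (Acute), NOS!")` yields the tokens `heart`, `attack`, `acute`, `nos`.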

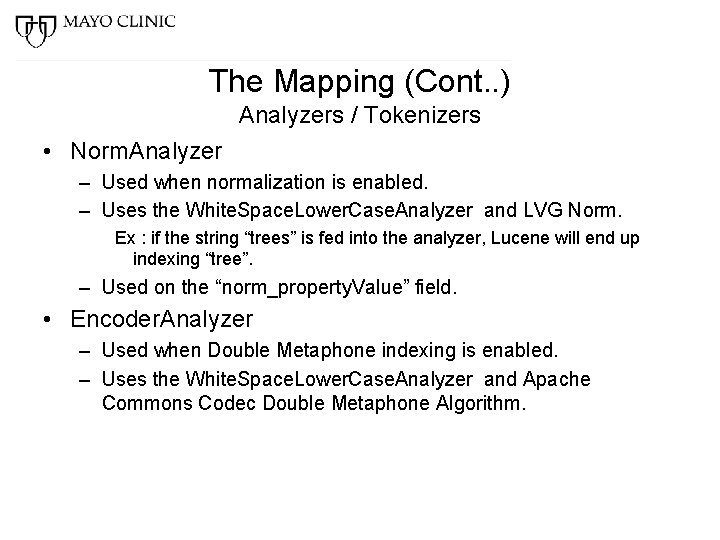

The Mapping (Cont.) Analyzers / Tokenizers
• NormAnalyzer
  – Used when normalization is enabled.
  – Uses the WhiteSpaceLowerCaseAnalyzer and LVG Norm. Example: if the string “trees” is fed into the analyzer, Lucene will end up indexing “tree”.
  – Used on the “norm_propertyValue” field.
• EncoderAnalyzer
  – Used when Double Metaphone indexing is enabled.
  – Uses the WhiteSpaceLowerCaseAnalyzer and the Apache Commons Codec Double Metaphone algorithm.

Index Usage in LexBIG
• Restrictions on CodedNodeSets are turned into Lucene queries.
• Supported Queries:
  – LuceneQuery
  – DoubleMetaphoneLuceneQuery
  – StemmedLuceneQuery
  – StartsWith
  – ExactMatch
  – Contains
  – RegExp

Index Usage in LexBIG (Cont.)
• Simple Queries:
  – Queries are constructed using a default field, term value, and Analyzer based on the user-specified query.
  – For example, if you specify 'activeOnly', we add a section to the query which requires the “isActive” field to have a value of 'T'.
  – Nearly all of the untokenized fields are handled this way.
  – “startsWith” and “exactMatch” queries are also handled this way.
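As a rough illustration of the 'activeOnly' example, the sketch below writes the query out in Lucene's textual query syntax, where a leading `+` marks a required clause and `field:term` restricts a term to a field. How LexBIG actually assembles its queries is not shown in the slides; the class `SimpleQuerySketch` is hypothetical, and the term is assumed to contain no characters that need escaping.

```java
/** Hypothetical sketch: a simple query expressed in Lucene's textual query syntax. */
final class SimpleQuerySketch {
    /** Builds a required field:term clause and, if requested, the isActive restriction. */
    static String build(String field, String term, boolean activeOnly) {
        StringBuilder query = new StringBuilder();
        query.append('+').append(field).append(':').append(term);
        if (activeOnly) {
            // 'activeOnly' adds a section requiring the "isActive" field to be 'T'.
            query.append(" +isActive:T");
        }
        return query.toString();
    }
}
```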

Index Usage in LexBIG (Cont.)
• Complex Queries:
  – User queries containing boolean logic, embedded wild cards, etc.
  – Rely on the Lucene QueryParser, which is given the appropriate Analyzer and field for the selected search algorithm.
  – For example, for normalized search, we feed the matchText into a QueryParser with the NormAnalyzer, and the “norm_propertyValue” field set as the default field. For the “LuceneQuery” match algorithm, we provide the WhiteSpaceLowerCaseAnalyzer and the “propertyValue” field.
  – Wild card and fuzzy searches are supported.
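The pairing of analyzer and default field per search algorithm can be pictured as a lookup table. The sketch below only records the two pairings stated on this slide; the class `MatchAlgorithmSettings` and the key "normalized" are hypothetical names, and analyzers are represented by name only rather than as Lucene objects.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical record of which analyzer and default field go with a match algorithm. */
final class MatchAlgorithmSettings {
    final String analyzerName;
    final String defaultField;

    MatchAlgorithmSettings(String analyzerName, String defaultField) {
        this.analyzerName = analyzerName;
        this.defaultField = defaultField;
    }

    /** Returns the two pairings described on the slide, keyed by algorithm name. */
    static Map<String, MatchAlgorithmSettings> defaults() {
        Map<String, MatchAlgorithmSettings> settings = new LinkedHashMap<>();
        settings.put("LuceneQuery",
                new MatchAlgorithmSettings("WhiteSpaceLowerCaseAnalyzer", "propertyValue"));
        // "normalized" is an illustrative key; the slide does not name this algorithm.
        settings.put("normalized",
                new MatchAlgorithmSettings("NormAnalyzer", "norm_propertyValue"));
        return settings;
    }
}
```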

Index Usage in LexBIG (Cont.)
• Result from Lucene:
  – BitSet (an array of bits, each either 1 or 0) with one bit per Lucene document. Each bit is set to 1 if the document matched the query, or to 0 if it did not.
  – We take advantage of the boundary documents.
  – The combination of the boundary bitSet and the user query bitSet gives all the matching unique concept code data.
  – Additional restrictions on a codedNodeSet are resolved to a bitSet in the same way as above, and then the bitSets are AND'ed together.
  – The BitSet is resolved into a CodeHolder object (contains only the conceptCode, codingScheme, and version, plus the score if requested), which can be used for ‘union’, ‘intersection’ and ‘difference’.
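The AND step can be shown with the standard `java.util.BitSet` class. This is a minimal sketch of combining per-restriction bit sets; the class name `BitSetRestrictions` is hypothetical, and the handling of boundary documents is left out because the slides do not describe it in detail.

```java
import java.util.BitSet;

/** Hypothetical sketch of AND'ing restriction bit sets, one bit per document. */
final class BitSetRestrictions {
    /** Bit i stays set in the result only if document i matched every restriction. */
    static BitSet intersect(BitSet first, BitSet... others) {
        BitSet result = (BitSet) first.clone(); // copy so the inputs are left untouched
        for (BitSet other : others) {
            result.and(other);
        }
        return result;
    }
}
```

If one query matched documents 0, 2 and 3 and another matched documents 2, 3 and 5, the intersection has only bits 2 and 3 set.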

Index Usage in LexBIG (Cont.)
– If the entire result is to be returned at once:
  • Each of the items in the CodeHolder is resolved into a ResolvedConceptReference; this is done through a series of SQL calls.
– If asked for an iterator:
  • An Iterator object is created which holds the CodeHolder.
  • Individual ResolvedConceptReferences are resolved from the SQL Server as needed.
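The difference between the two modes is eager versus on-demand resolution. The generic sketch below illustrates the iterator mode only; `LazyResolvingIterator` is a hypothetical class, the list stands in for the CodeHolder, and the resolver function stands in for the SQL lookups, none of which reflect the actual LexBIG types.

```java
import java.util.Iterator;
import java.util.List;
import java.util.function.Function;

/** Hypothetical iterator that resolves each held code only when it is requested. */
final class LazyResolvingIterator<C, R> implements Iterator<R> {
    private final Iterator<C> codes;
    private final Function<C, R> resolver;

    /** Holds the codes and a resolver (a stand-in for the SQL lookup); resolves nothing yet. */
    LazyResolvingIterator(List<C> codeHolder, Function<C, R> resolver) {
        this.codes = codeHolder.iterator();
        this.resolver = resolver;
    }

    @Override
    public boolean hasNext() {
        return codes.hasNext();
    }

    /** Resolves exactly one code per call, so unvisited items never trigger a lookup. */
    @Override
    public R next() {
        return resolver.apply(codes.next());
    }
}
```

A caller that stops after the first few results pays only for the lookups it actually consumed.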

Questions?