Apache Hive The Apache Hive data warehouse software

![*Drop database Syntax: DROP [IF EXISTS] database_name [RESTRICT|CASCADE]; Example: hive> Drop database employ; OK *Drop database Syntax: DROP [IF EXISTS] database_name [RESTRICT|CASCADE]; Example: hive> Drop database employ; OK](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-13.jpg)

![Drop Table DROP TABLE [IF EXISTS] table_name [PURGE]; DROP TABLE removes metadata and data Drop Table DROP TABLE [IF EXISTS] table_name [PURGE]; DROP TABLE removes metadata and data](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-22.jpg)

![HIVE DML(Data Manipulation language) Loading data in to table LOAD DATA [LOCAL] INPATH 'path HIVE DML(Data Manipulation language) Loading data in to table LOAD DATA [LOCAL] INPATH 'path](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-24.jpg)

![-Dropping view Use the following syntax to drop a view: DROP VIEW [IF EXISTS] -Dropping view Use the following syntax to drop a view: DROP VIEW [IF EXISTS]](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-35.jpg)

- Slides: 72

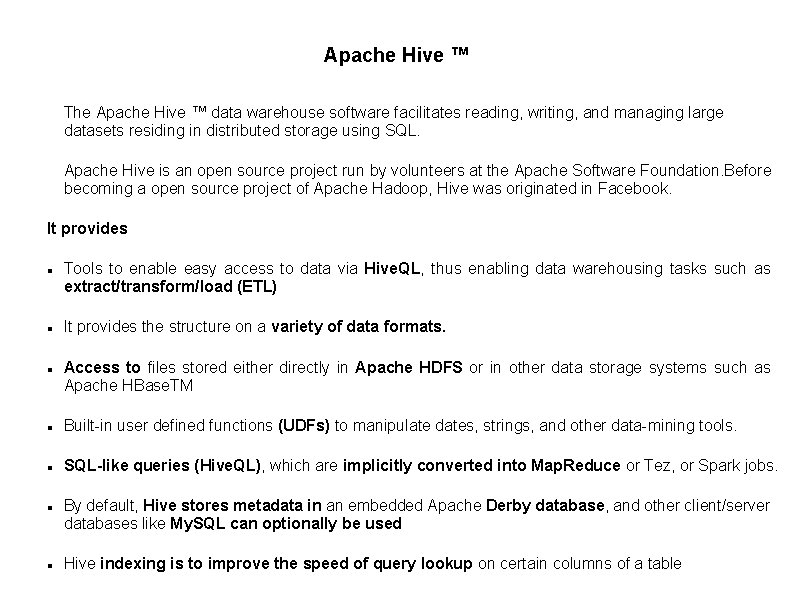

Apache Hive ™ The Apache Hive ™ data warehouse software facilitates reading, writing, and managing large datasets residing in distributed storage using SQL. Apache Hive is an open source project run by volunteers at the Apache Software Foundation. Before becoming a open source project of Apache Hadoop, Hive was originated in Facebook. It provides Tools to enable easy access to data via Hive. QL, thus enabling data warehousing tasks such as extract/transform/load (ETL) It provides the structure on a variety of data formats. Access to files stored either directly in Apache HDFS or in other data storage systems such as Apache HBase. TM Built-in user defined functions (UDFs) to manipulate dates, strings, and other data-mining tools. SQL-like queries (Hive. QL), which are implicitly converted into Map. Reduce or Tez, or Spark jobs. By default, Hive stores metadata in an embedded Apache Derby database, and other client/server databases like My. SQL can optionally be used Hive indexing is to improve the speed of query lookup on certain columns of a table

Difference between pig Pig hadoop and Hive hadoop have a similar goal- they are tools that ease the complexity of writing complex java Map. Reduce programs However, when to use Pig Latin and when to use Hive. QL is the question NO PIG HIVE 1 Pig is data flow language HIVE is declarative language(Hive. QL) 2 Pig is used for programming Mainly used for creating reports 3 PIG is best for semi structured data Hive is best for structured Data 4 Pig does not have any metadata Hive defines tables schema before + stores database and schema is defined while schema information in database loading data 5 No web interface It has HWI(Hive web interface) 6 Doesn't support JDBC Provides support for JDBC 7 Schema is created implicitly Schema should mentioned explictly 8 Suitable for programmers and researchers Suitable for data analysts 9 Pig Hadoop Component operates on the client side of any cluster. Hive Hadoop Component operates on the server side of any cluster 10 Pig can be installed easily over Hive Need some configuration to setup

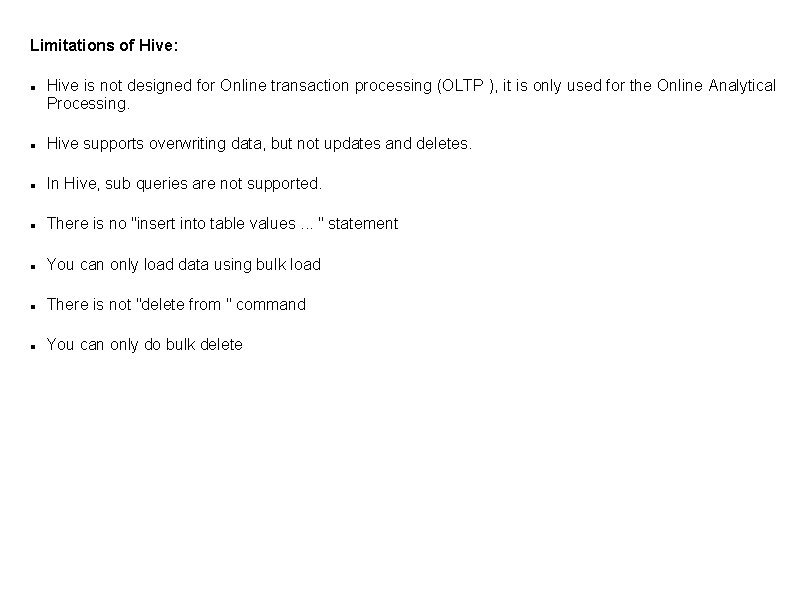

Limitations of Hive: Hive is not designed for Online transaction processing (OLTP ), it is only used for the Online Analytical Processing. Hive supports overwriting data, but not updates and deletes. In Hive, sub queries are not supported. There is no "insert into table values. . . " statement You can only load data using bulk load There is not "delete from " command You can only do bulk delete

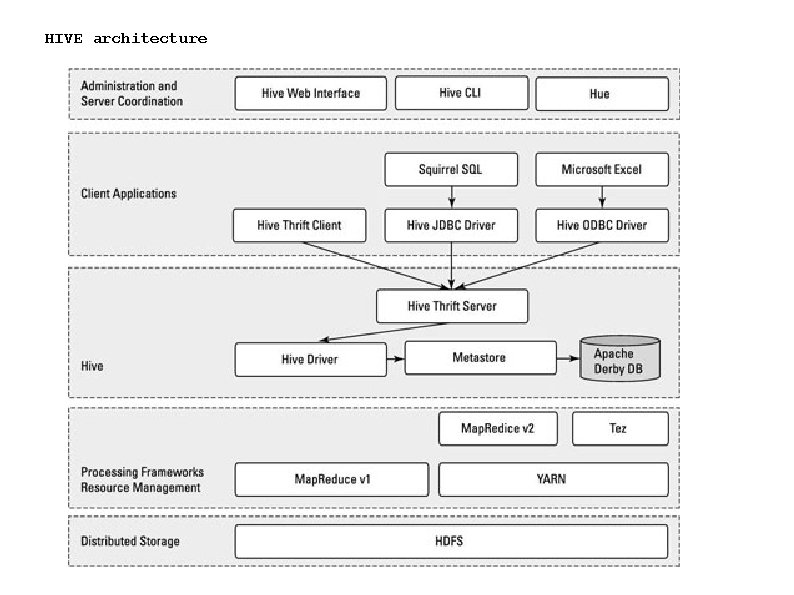

HIVE architecture

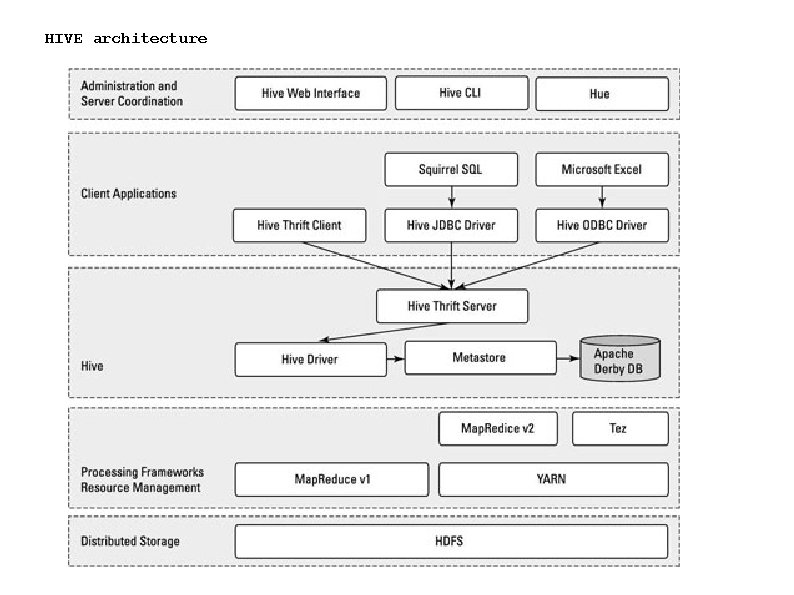

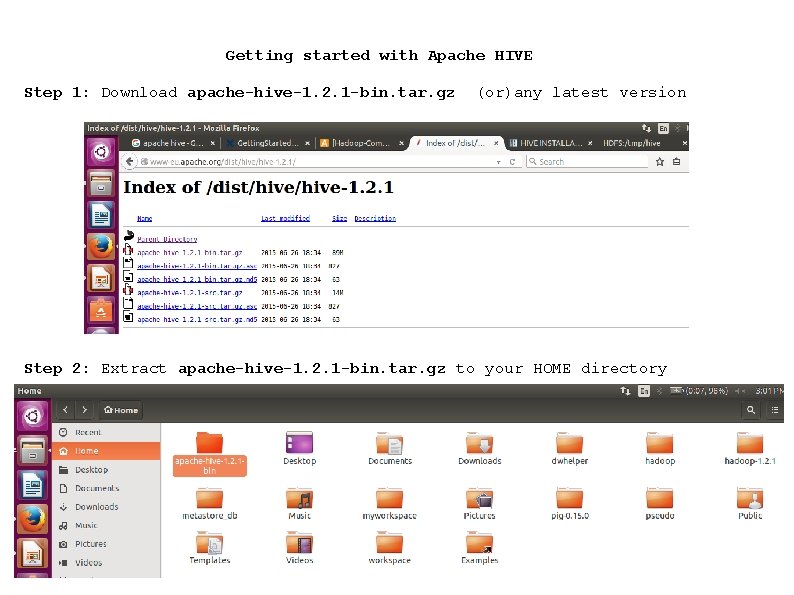

Getting started with Apache HIVE Step 1: Download apache-hive-1. 2. 1 -bin. tar. gz (or)any latest version Step 2: Extract apache-hive-1. 2. 1 -bin. tar. gz to your HOME directory

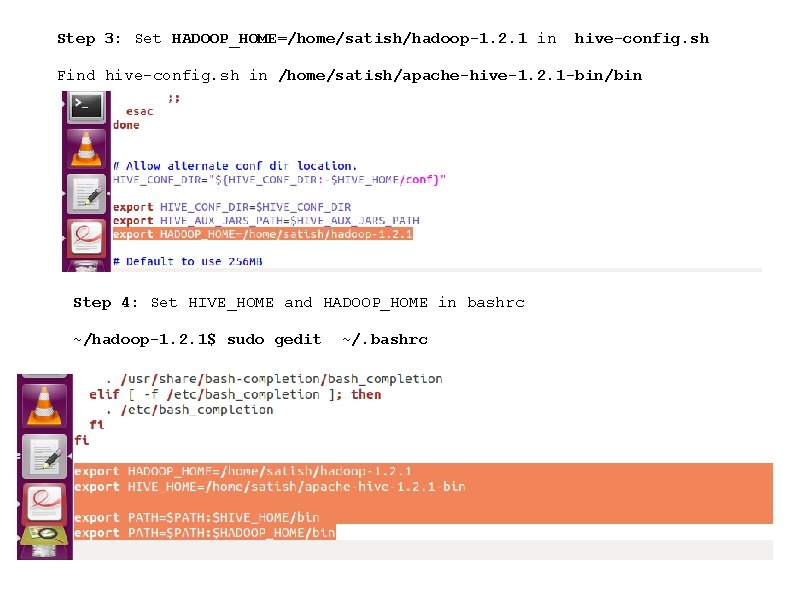

Step 3: Set HADOOP_HOME=/home/satish/hadoop-1. 2. 1 in hive-config. sh Find hive-config. sh in /home/satish/apache-hive-1. 2. 1 -bin/bin Step 4: Set HIVE_HOME and HADOOP_HOME in bashrc ~/hadoop-1. 2. 1$ sudo gedit ~/. bashrc

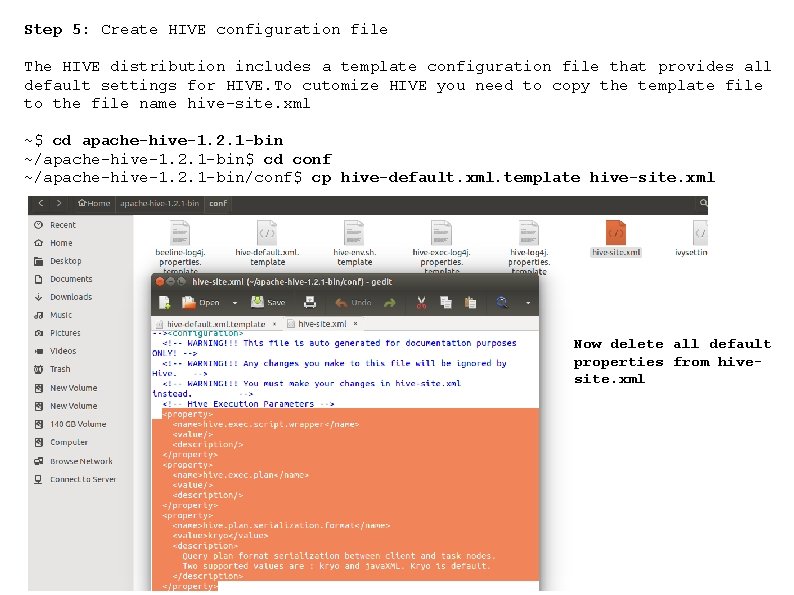

Step 5: Create HIVE configuration file The HIVE distribution includes a template configuration file that provides all default settings for HIVE. To cutomize HIVE you need to copy the template file to the file name hive-site. xml ~$ cd apache-hive-1. 2. 1 -bin ~/apache-hive-1. 2. 1 -bin$ cd conf ~/apache-hive-1. 2. 1 -bin/conf$ cp hive-default. xml. template hive-site. xml Now delete all default properties from hivesite. xml

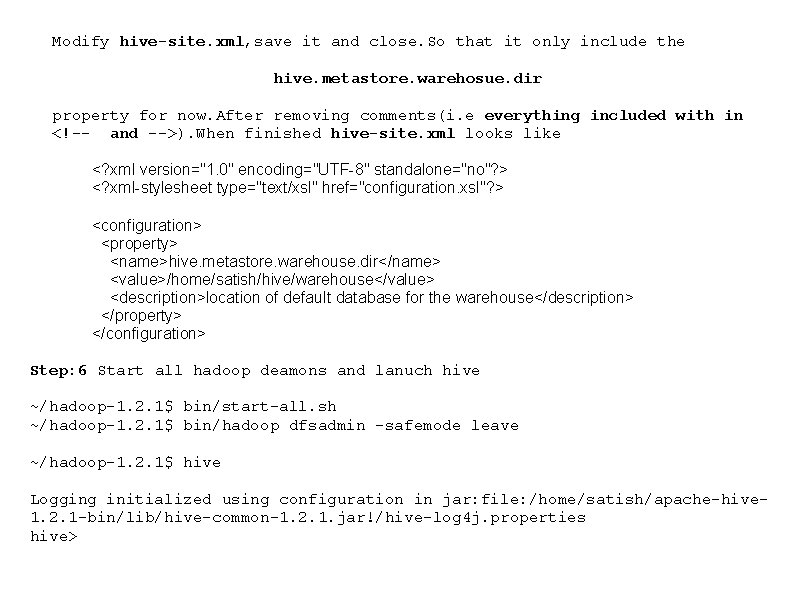

Modify hive-site. xml, save it and close. So that it only include the hive. metastore. warehosue. dir property for now. After removing comments(i. e everything included with in <!-- and -->). When finished hive-site. xml looks like <? xml version="1. 0" encoding="UTF-8" standalone="no"? > <? xml-stylesheet type="text/xsl" href="configuration. xsl"? > <configuration> <property> <name>hive. metastore. warehouse. dir</name> <value>/home/satish/hive/warehouse</value> <description>location of default database for the warehouse</description> </property> </configuration> Step: 6 Start all hadoop deamons and lanuch hive ~/hadoop-1. 2. 1$ bin/start-all. sh ~/hadoop-1. 2. 1$ bin/hadoop dfsadmin -safemode leave ~/hadoop-1. 2. 1$ hive Logging initialized using configuration in jar: file: /home/satish/apache-hive 1. 2. 1 -bin/lib/hive-common-1. 2. 1. jar!/hive-log 4 j. properties hive>

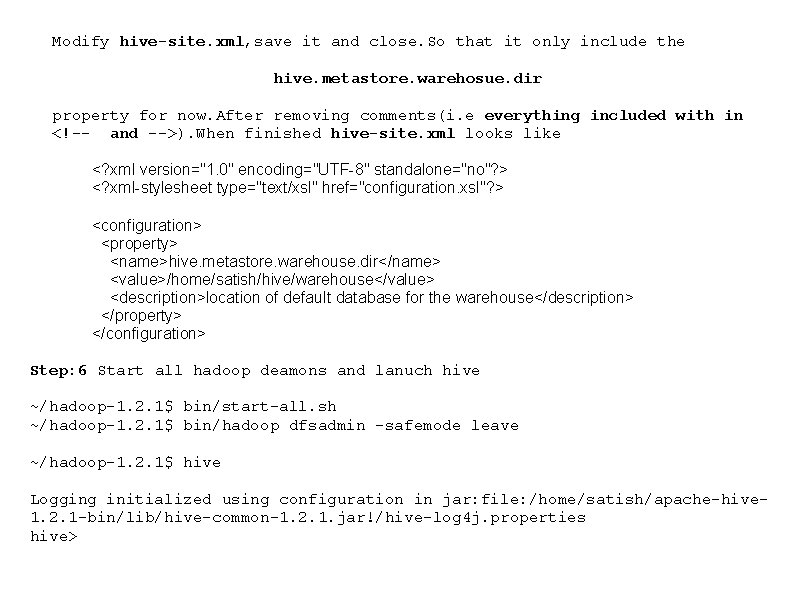

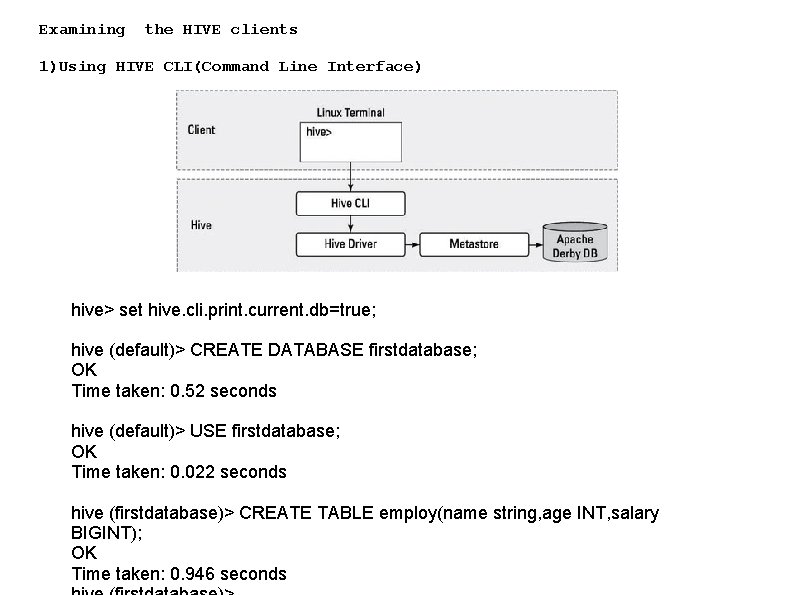

Examining the HIVE clients 1)Using HIVE CLI(Command Line Interface) hive> set hive. cli. print. current. db=true; hive (default)> CREATE DATABASE firstdatabase; OK Time taken: 0. 52 seconds hive (default)> USE firstdatabase; OK Time taken: 0. 022 seconds hive (firstdatabase)> CREATE TABLE employ(name string, age INT, salary BIGINT); OK Time taken: 0. 946 seconds

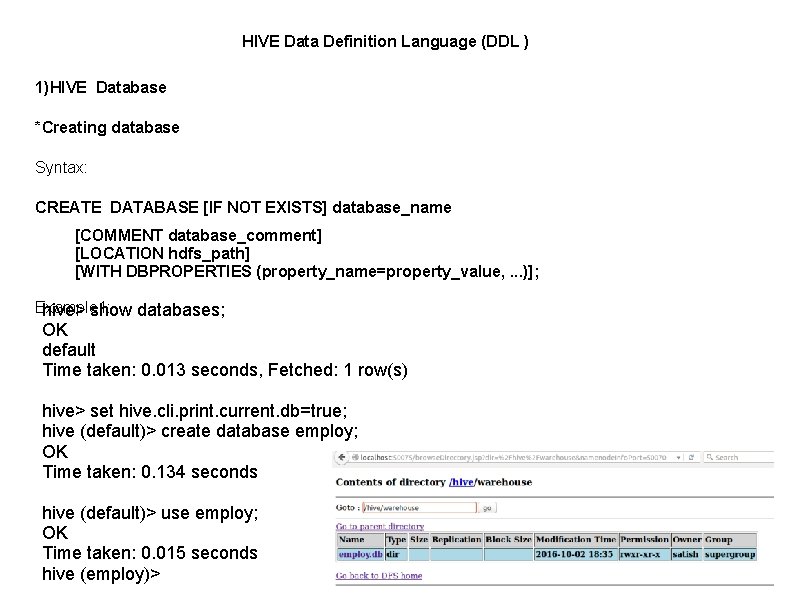

HIVE Data Definition Language (DDL ) 1)HIVE Database *Creating database Syntax: CREATE DATABASE [IF NOT EXISTS] database_name [COMMENT database_comment] [LOCATION hdfs_path] [WITH DBPROPERTIES (property_name=property_value, . . . )]; Example 1: hive> show databases; OK default Time taken: 0. 013 seconds, Fetched: 1 row(s) hive> set hive. cli. print. current. db=true; hive (default)> create database employ; OK Time taken: 0. 134 seconds hive (default)> use employ; OK Time taken: 0. 015 seconds hive (employ)>

Example 2: hive (default)> create database IF NOT EXISTS employ > COMMENT 'Database for employees' > LOCATION '/hive/warehouse' > with DBPROPERTIES ('creator'='ram', 'city'='vijayawada'); OK Time taken: 0. 014 seconds Trying same database again hive (default)> create database employ > COMMENT 'Database for employees' > LOCATION '/hive/warehouse' > with DBPROPERTIES ('creator'='ram', 'city'='vijayawada'); FAILED: Execution Error, return code 1 from org. apache. hadoop. hive. ql. exec. DDLTask. Database employ already exists hive (default)> show databases; OK default employ Time taken: 0. 012 seconds, Fetched: 2 row(s)

![Drop database Syntax DROP IF EXISTS databasename RESTRICTCASCADE Example hive Drop database employ OK *Drop database Syntax: DROP [IF EXISTS] database_name [RESTRICT|CASCADE]; Example: hive> Drop database employ; OK](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-13.jpg)

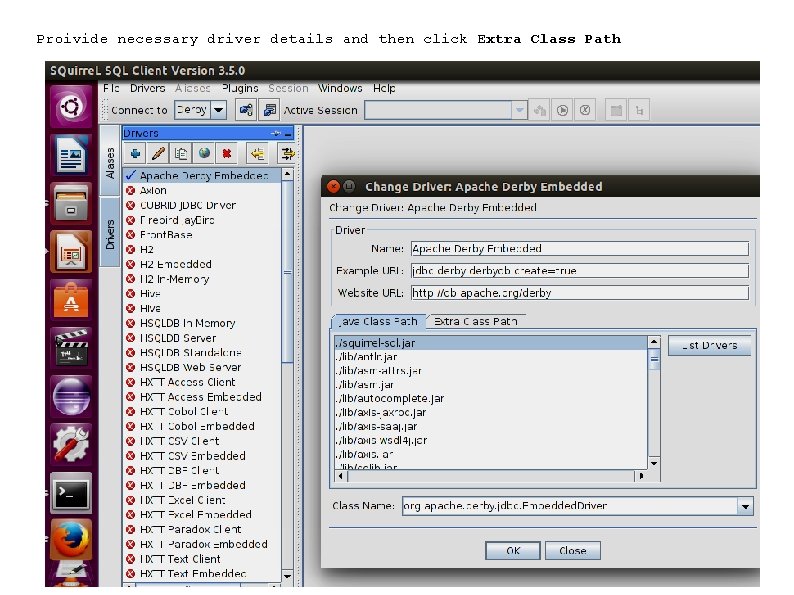

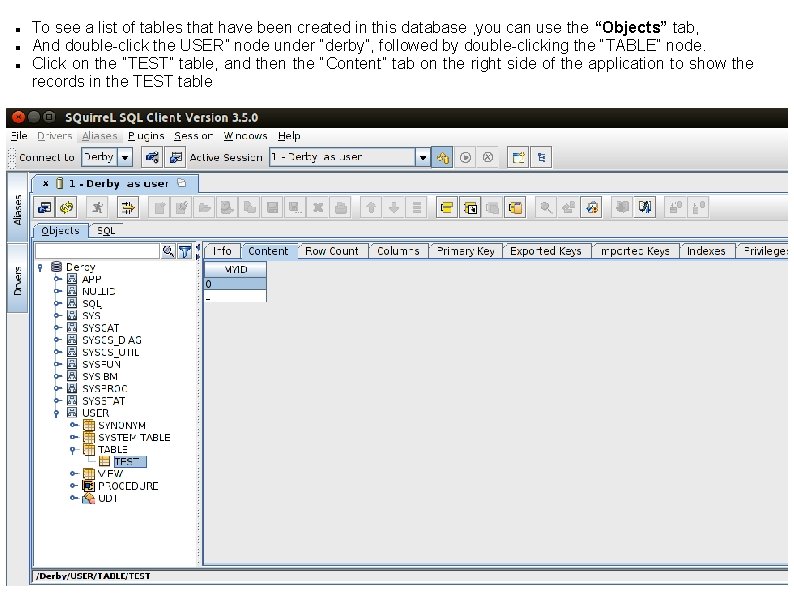

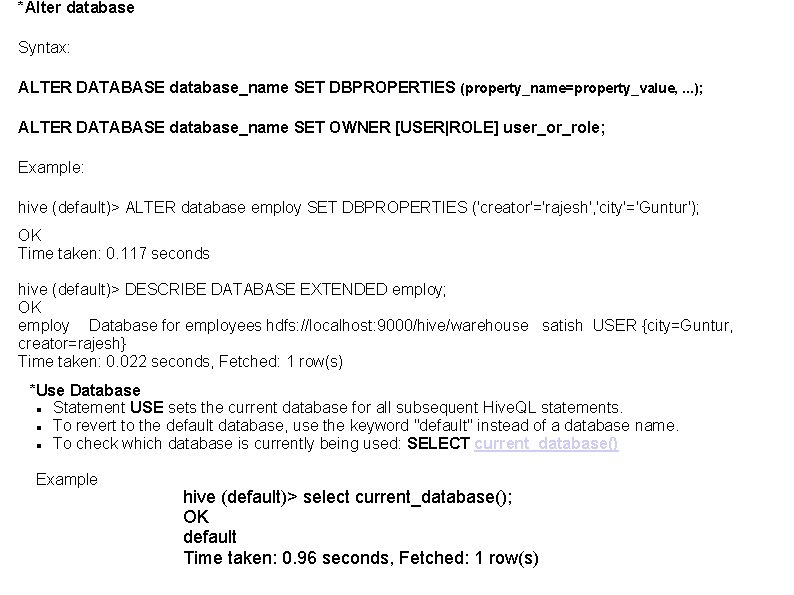

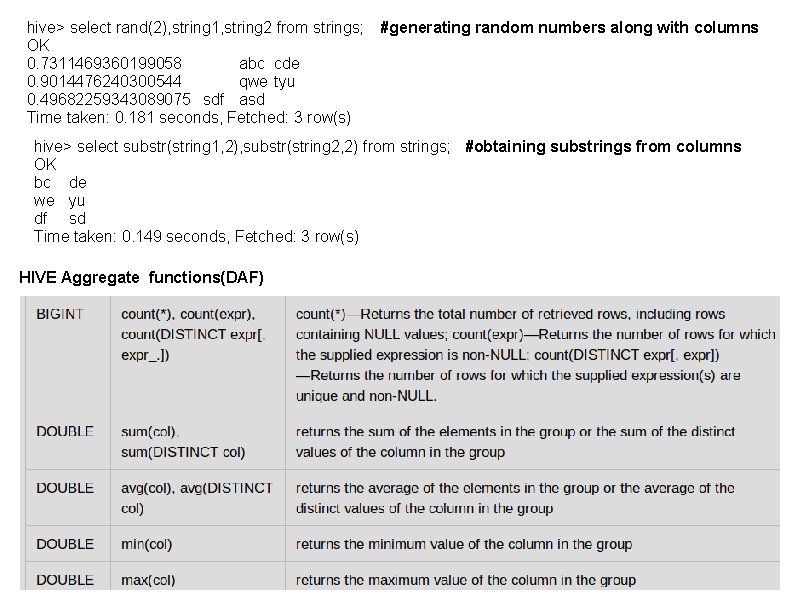

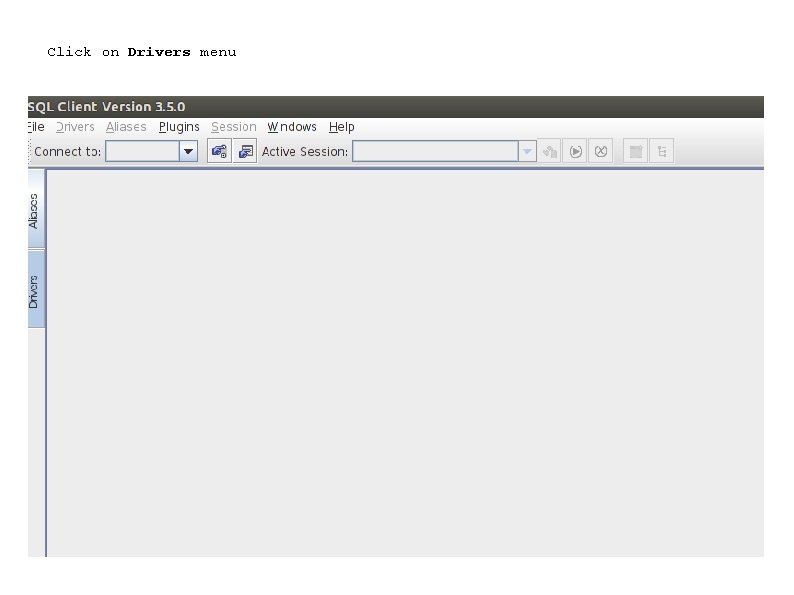

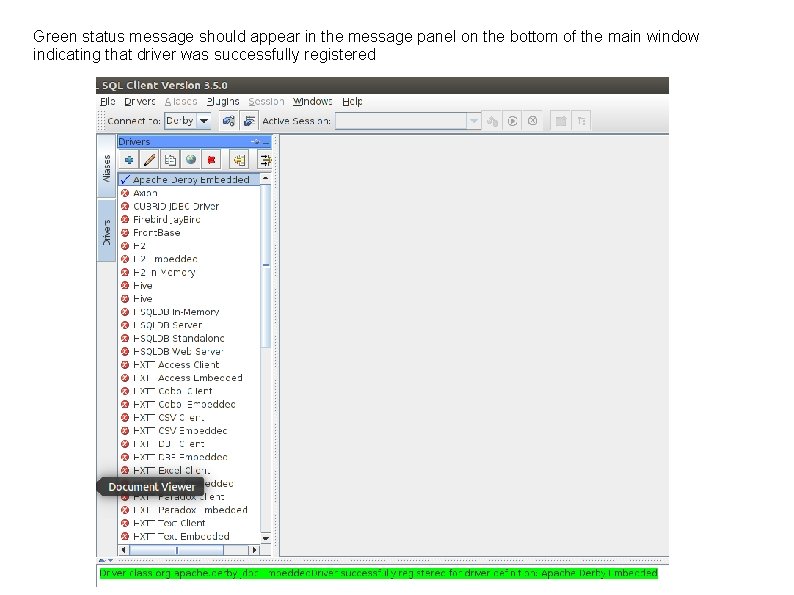

*Drop database Syntax: DROP [IF EXISTS] database_name [RESTRICT|CASCADE]; Example: hive> Drop database employ; OK Time taken: 1. 416 seconds hive> show databases; OK default Time taken: 0. 25 seconds, Fetched: 1 row(s) Hive> The default behavior is RESTRICT, where DROP DATABASE will fail if the database is not empty. To drop the tables in the database as well, use DROP DATABASE db_name CASCADE *Describe database hive> DESCRIBE DATABASE EXTENDED employ; OK employ Database for employees hdfs: //localhost: 9000/hive/warehouse {city=vijayawada, creator=ram} Time taken: 0. 018 seconds, Fetched: 1 row(s) satish USER

*Alter database Syntax: ALTER DATABASE database_name SET DBPROPERTIES (property_name=property_value, . . . ); ALTER DATABASE database_name SET OWNER [USER|ROLE] user_or_role; Example: hive (default)> ALTER database employ SET DBPROPERTIES ('creator'='rajesh', 'city'='Guntur'); OK Time taken: 0. 117 seconds hive (default)> DESCRIBE DATABASE EXTENDED employ; OK employ Database for employees hdfs: //localhost: 9000/hive/warehouse satish USER {city=Guntur, creator=rajesh} Time taken: 0. 022 seconds, Fetched: 1 row(s) *Use Database Statement USE sets the current database for all subsequent Hive. QL statements. To revert to the default database, use the keyword "default" instead of a database name. To check which database is currently being used: SELECT current_database() Example hive (default)> select current_database(); OK default Time taken: 0. 96 seconds, Fetched: 1 row(s)

hive (default)> use employ; OK Time taken: 0. 017 seconds hive (employ)> select current_database(); OK employ Time taken: 0. 115 seconds, Fetched: 1 row(s) hive (employ)> If you have a lot of databases, you can restrict the ones listed using a regular expression, The following example lists only those databases that start with the letter e and end with any other characters (the. * part): hive (employ)> show databases LIKE 'e. *'; OK employ Time taken: 0. 136 seconds, Fetched: 1 row(s) hive (employ)>

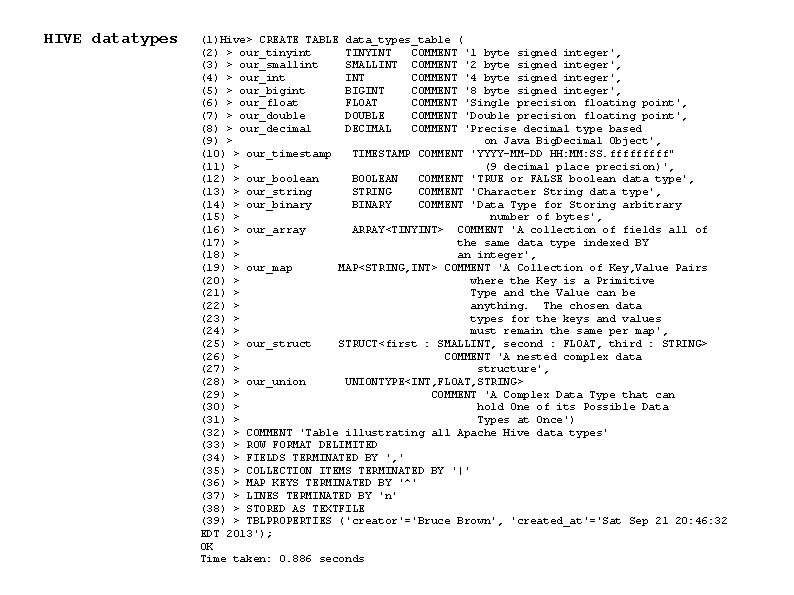

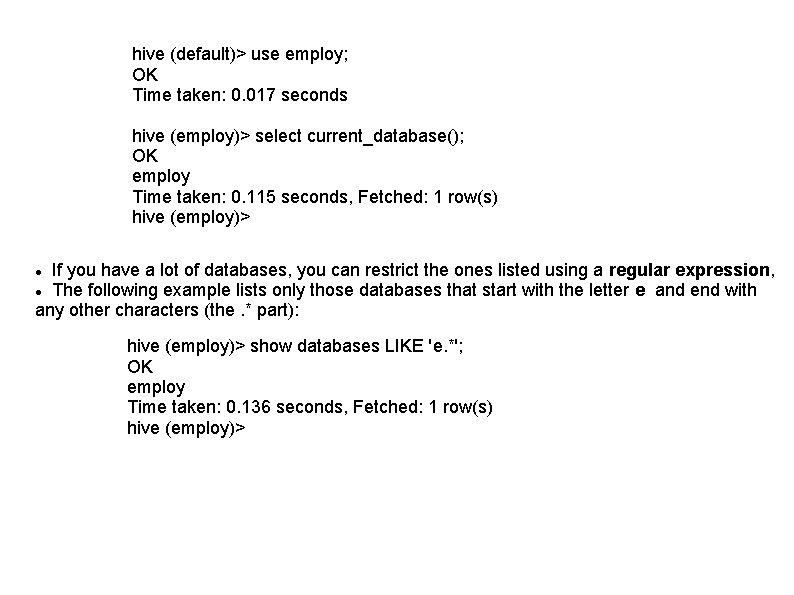

HIVE datatypes (1)Hive> CREATE TABLE data_types_table ( (2) > our_tinyint TINYINT COMMENT '1 byte signed integer', (3) > our_smallint SMALLINT COMMENT '2 byte signed integer', (4) > our_int INT COMMENT '4 byte signed integer', (5) > our_bigint BIGINT COMMENT '8 byte signed integer', (6) > our_float FLOAT COMMENT 'Single precision floating point', (7) > our_double DOUBLE COMMENT 'Double precision floating point', (8) > our_decimal DECIMAL COMMENT 'Precise decimal type based (9) > on Java Big. Decimal Object', (10) > our_timestamp TIMESTAMP COMMENT 'YYYY-MM-DD HH: MM: SS. fffff" (11) > (9 decimal place precision)', (12) > our_boolean BOOLEAN COMMENT 'TRUE or FALSE boolean data type', (13) > our_string STRING COMMENT 'Character String data type', (14) > our_binary BINARY COMMENT 'Data Type for Storing arbitrary (15) > number of bytes', (16) > our_array ARRAY<TINYINT> COMMENT 'A collection of fields all of (17) > the same data type indexed BY (18) > an integer', (19) > our_map MAP<STRING, INT> COMMENT 'A Collection of Key, Value Pairs (20) > where the Key is a Primitive (21) > Type and the Value can be (22) > anything. The chosen data (23) > types for the keys and values (24) > must remain the same per map', (25) > our_struct STRUCT<first : SMALLINT, second : FLOAT, third : STRING> (26) > COMMENT 'A nested complex data (27) > structure', (28) > our_union UNIONTYPE<INT, FLOAT, STRING> (29) > COMMENT 'A Complex Data Type that can (30) > hold One of its Possible Data (31) > Types at Once') (32) > COMMENT 'Table illustrating all Apache Hive data types' (33) > ROW FORMAT DELIMITED (34) > FIELDS TERMINATED BY ', ' (35) > COLLECTION ITEMS TERMINATED BY '|' (36) > MAP KEYS TERMINATED BY '^' (37) > LINES TERMINATED BY 'n' (38) > STORED AS TEXTFILE (39) > TBLPROPERTIES ('creator'='Bruce Brown', 'created_at'='Sat Sep 21 20: 46: 32 EDT 2013'); OK Time taken: 0. 886 seconds

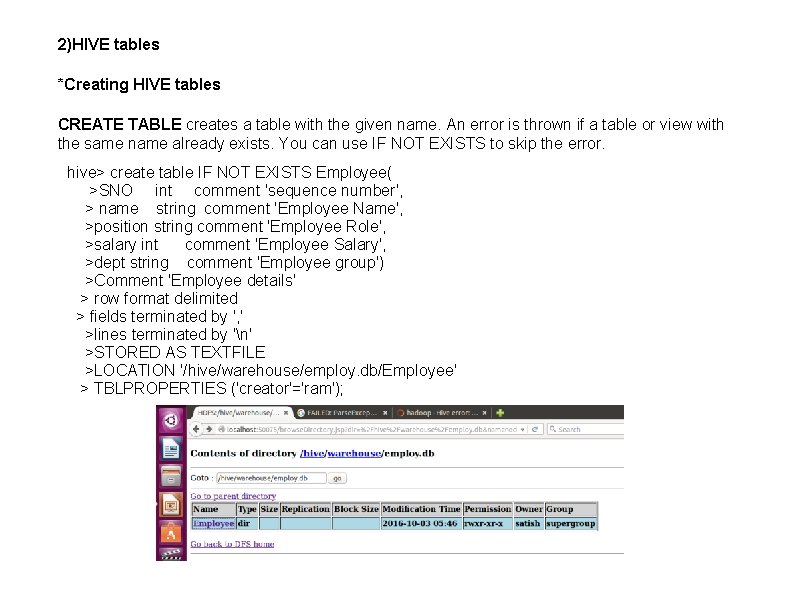

2)HIVE tables *Creating HIVE tables CREATE TABLE creates a table with the given name. An error is thrown if a table or view with the same name already exists. You can use IF NOT EXISTS to skip the error. hive> create table IF NOT EXISTS Employee( >SNO int comment 'sequence number', > name string comment 'Employee Name', >position string comment 'Employee Role', >salary int comment 'Employee Salary', >dept string comment 'Employee group') >Comment 'Employee details' > row format delimited > fields terminated by ', ' >lines terminated by 'n' >STORED AS TEXTFILE >LOCATION '/hive/warehouse/employ. db/Employee' > TBLPROPERTIES ('creator'='ram');

The default TEXTFILE format for your HIVE table are slower to process and they consume lots of disk space unless you compress them. For these reasons, Apcahe Hive community came up with several choices for storing our tables on the HDFS TEXTFILE Use STORED AS TEXTFILE if the data needs to be stored as plain text files. Default file format for HIVE reccords. Alphanumeric characters from the unicode are used to store you data SEQUENCEFILE Use STORED AS SEQUENCEFILE if the data needs to be compressed. The format for binary file composed of key/value pairs RCFILE(Record Columnar FILE) Store records in a column-oriented fashion rather than row oriented fashion. Use RCFILE format if you have large number of cloumns , but only a few columns are typicaly used ORC(Optimized Row Columnar FILE) A format that has significant optimizaions to improve HIVE reads and writes and the processing of tables INPUTFORMAT and OUTPUTFORMAT Lets you to specify the java class that will used to read an write data from the HIVE table The statement like 'STORED as TEXTFILE' is used so that you don't have to specify both INPUTFORMAT and OUTPUTFORMAT for every CREATE TABLE statement

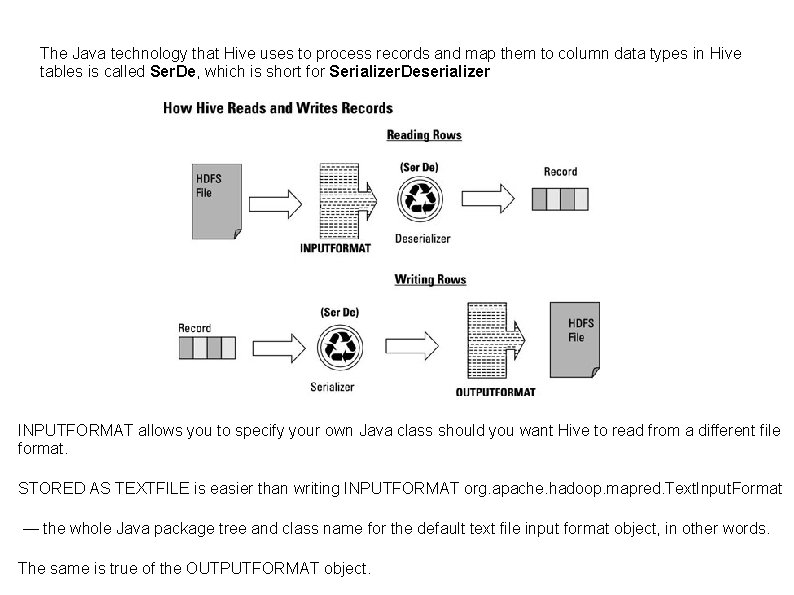

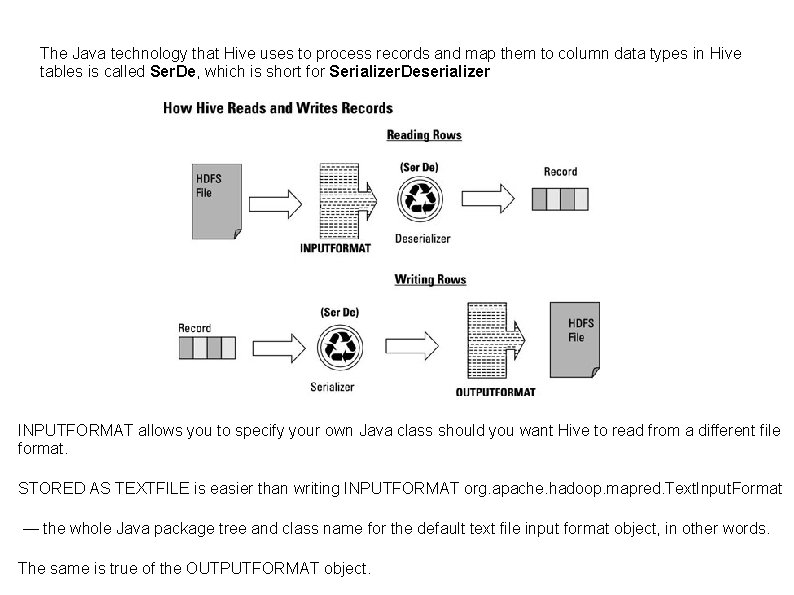

The Java technology that Hive uses to process records and map them to column data types in Hive tables is called Ser. De, which is short for Serializer. Deserializer INPUTFORMAT allows you to specify your own Java class should you want Hive to read from a different file format. STORED AS TEXTFILE is easier than writing INPUTFORMAT org. apache. hadoop. mapred. Text. Input. Format — the whole Java package tree and class name for the default text file input format object, in other words. The same is true of the OUTPUTFORMAT object.

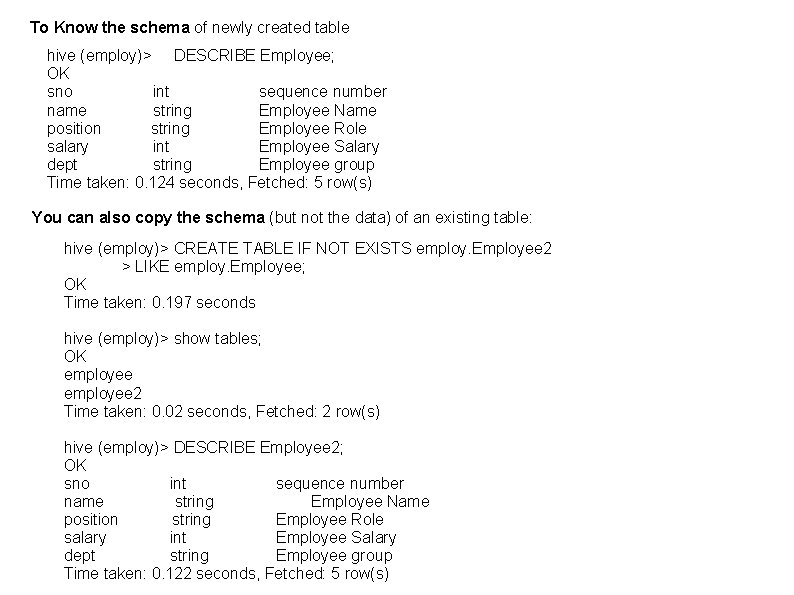

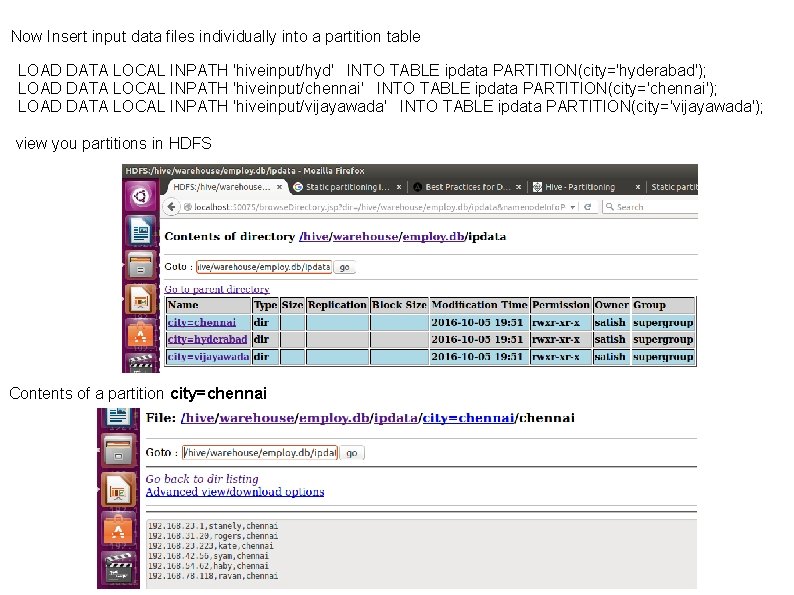

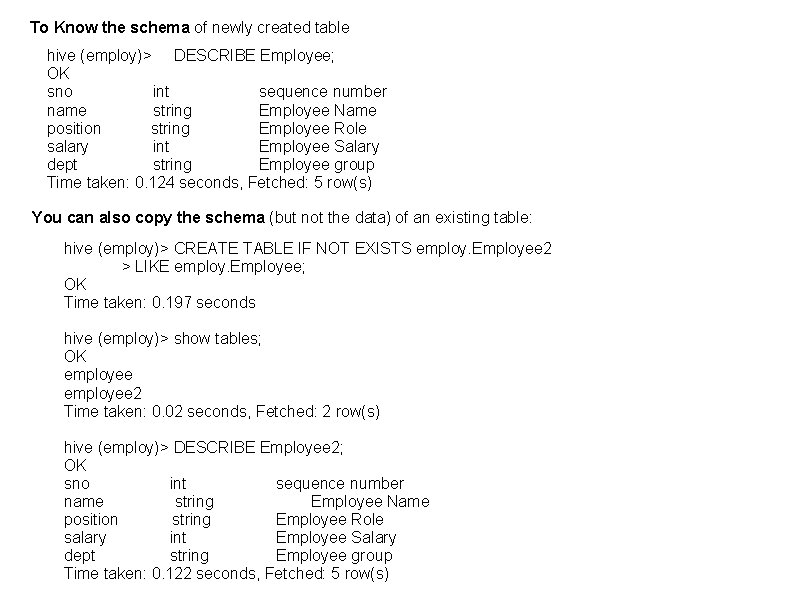

To Know the schema of newly created table hive (employ)> DESCRIBE Employee; OK sno int sequence number name string Employee Name position string Employee Role salary int Employee Salary dept string Employee group Time taken: 0. 124 seconds, Fetched: 5 row(s) You can also copy the schema (but not the data) of an existing table: hive (employ)> CREATE TABLE IF NOT EXISTS employ. Employee 2 > LIKE employ. Employee; OK Time taken: 0. 197 seconds hive (employ)> show tables; OK employee 2 Time taken: 0. 02 seconds, Fetched: 2 row(s) hive (employ)> DESCRIBE Employee 2; OK sno int sequence number name string Employee Name position string Employee Role salary int Employee Salary dept string Employee group Time taken: 0. 122 seconds, Fetched: 5 row(s)

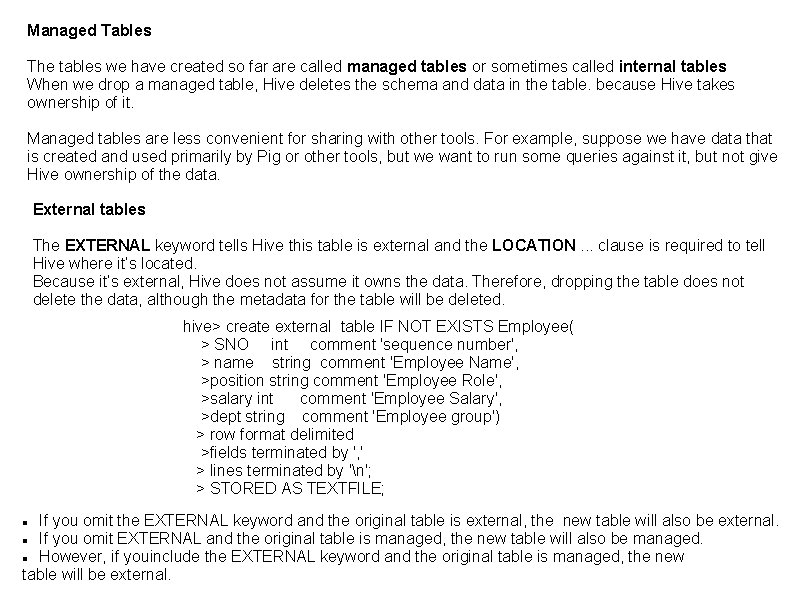

Managed Tables The tables we have created so far are called managed tables or sometimes called internal tables When we drop a managed table, Hive deletes the schema and data in the table. because Hive takes ownership of it. Managed tables are less convenient for sharing with other tools. For example, suppose we have data that is created and used primarily by Pig or other tools, but we want to run some queries against it, but not give Hive ownership of the data. External tables The EXTERNAL keyword tells Hive this table is external and the LOCATION. . . clause is required to tell Hive where it’s located. Because it’s external, Hive does not assume it owns the data. Therefore, dropping the table does not delete the data, although the metadata for the table will be deleted. hive> create external table IF NOT EXISTS Employee( > SNO int comment 'sequence number', > name string comment 'Employee Name', >position string comment 'Employee Role', >salary int comment 'Employee Salary', >dept string comment 'Employee group') > row format delimited >fields terminated by ', ' > lines terminated by 'n'; > STORED AS TEXTFILE; If you omit the EXTERNAL keyword and the original table is external, the new table will also be external. If you omit EXTERNAL and the original table is managed, the new table will also be managed. However, if youinclude the EXTERNAL keyword and the original table is managed, the new table will be external.

![Drop Table DROP TABLE IF EXISTS tablename PURGE DROP TABLE removes metadata and data Drop Table DROP TABLE [IF EXISTS] table_name [PURGE]; DROP TABLE removes metadata and data](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-22.jpg)

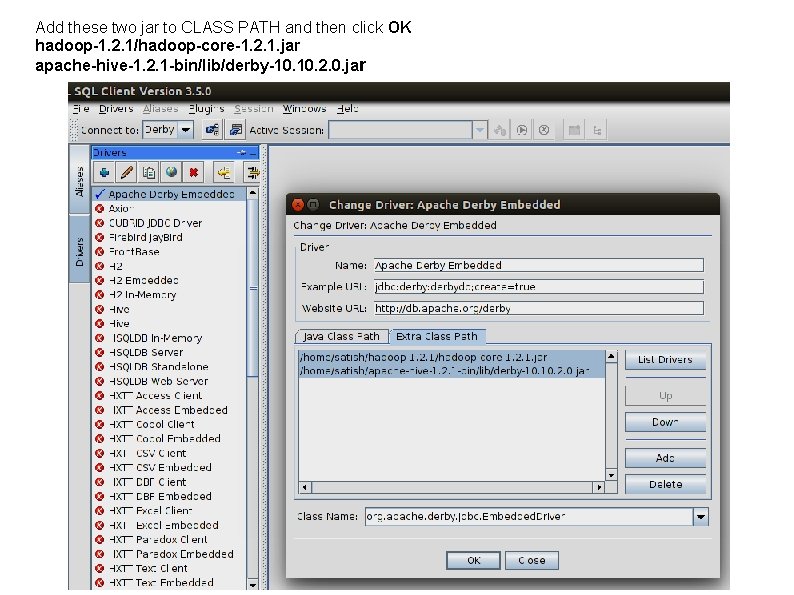

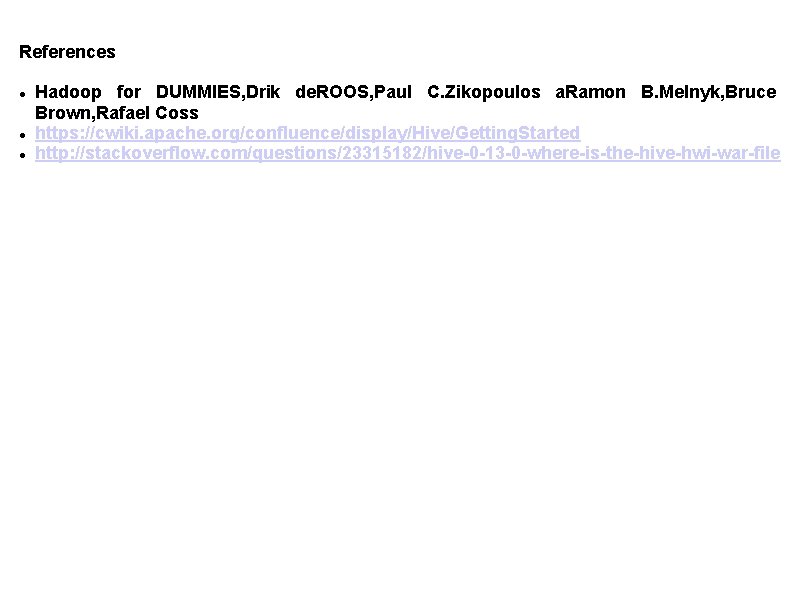

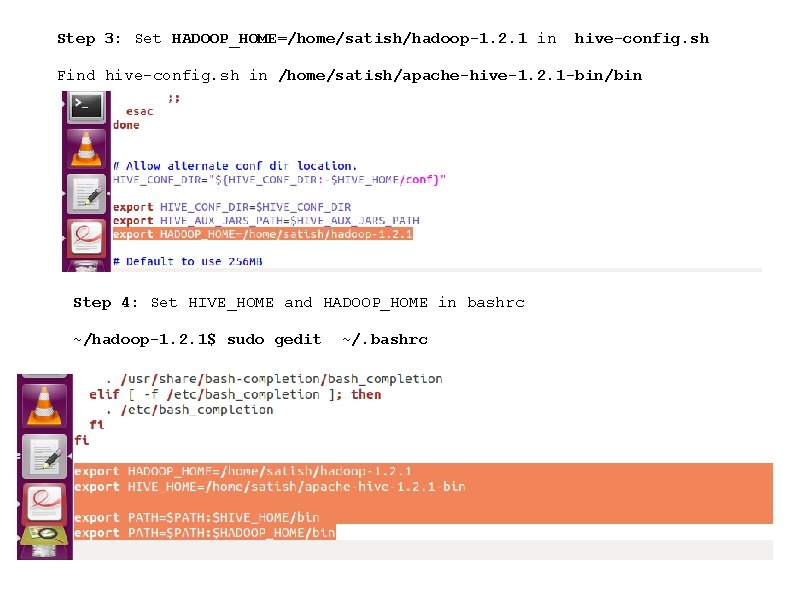

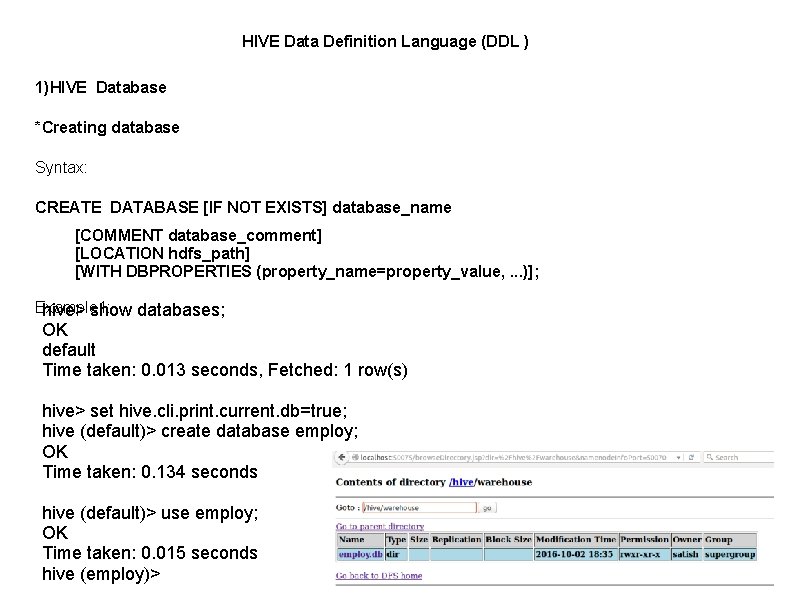

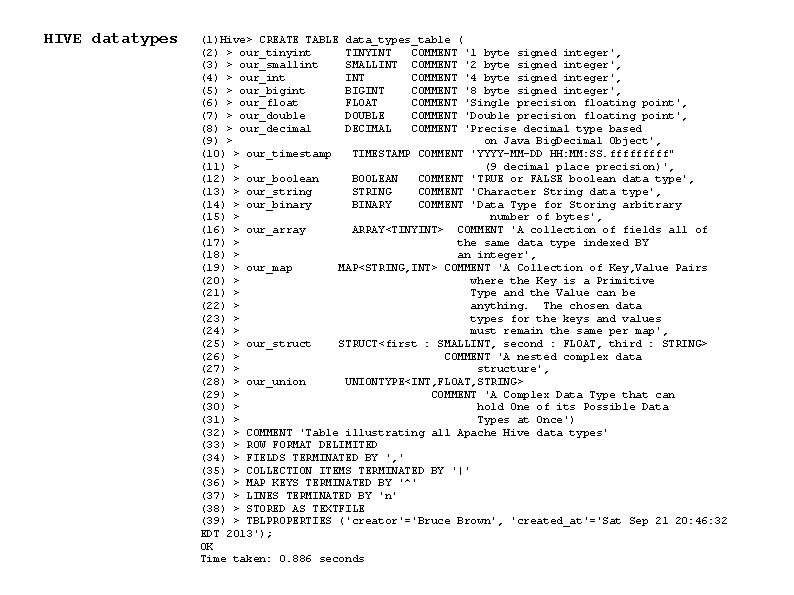

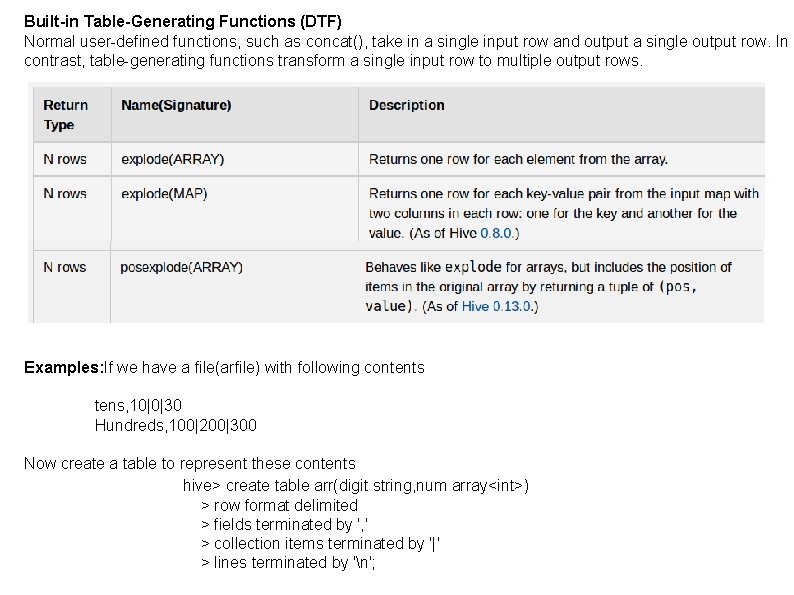

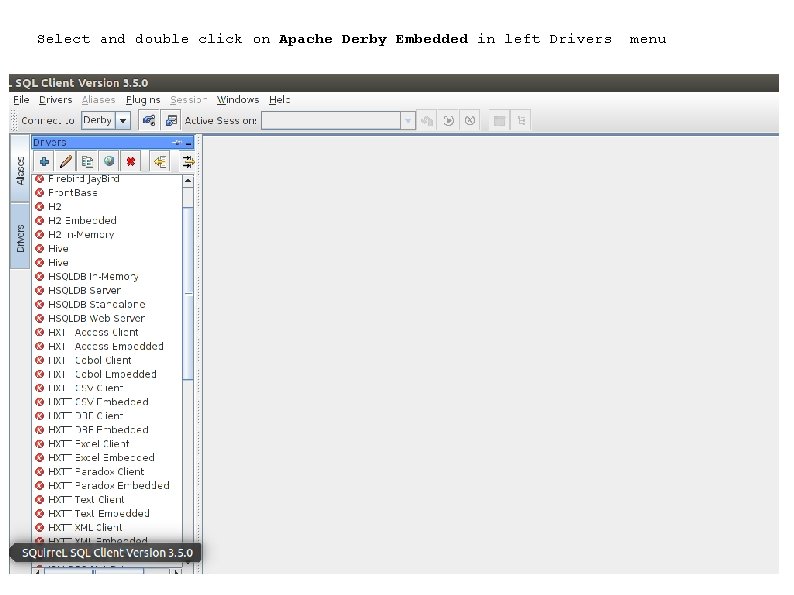

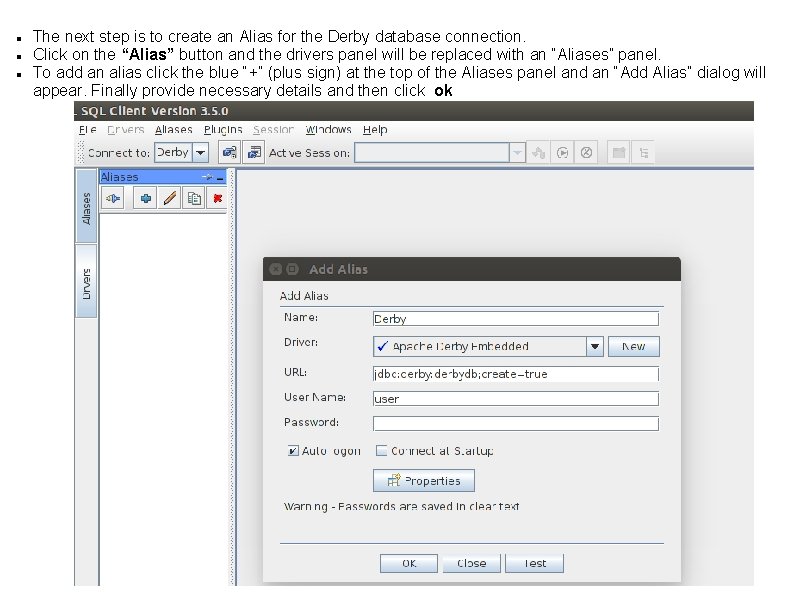

Drop Table DROP TABLE [IF EXISTS] table_name [PURGE]; DROP TABLE removes metadata and data for this table. The data is actually moved to the. Trash/Current directory if Trash is configured When dropping an EXTERNAL table, data in the table will NOT be deleted from the file system. When dropping a table referenced by views, no warning is given (the views are left dangling as invalid and must be dropped or recreated by the user). If PURGE is specified, the table data does not go to the. Trash/Current directory and so cannot be retrieved in the event of a mistaken DROP. Example: hive (employ)> DROP table IF EXISTS employee 2; OK Time taken: 0. 305 seconds Truncate Table TRUNCATE TABLE table_name [PARTITION partition_spec]; partition_spec: : (partition_column = partition_col_value, . . . ) Removes all rows from a table or partition(s). The rows will be trashed if the filesystem Trash is enabled, otherwise they are deleted

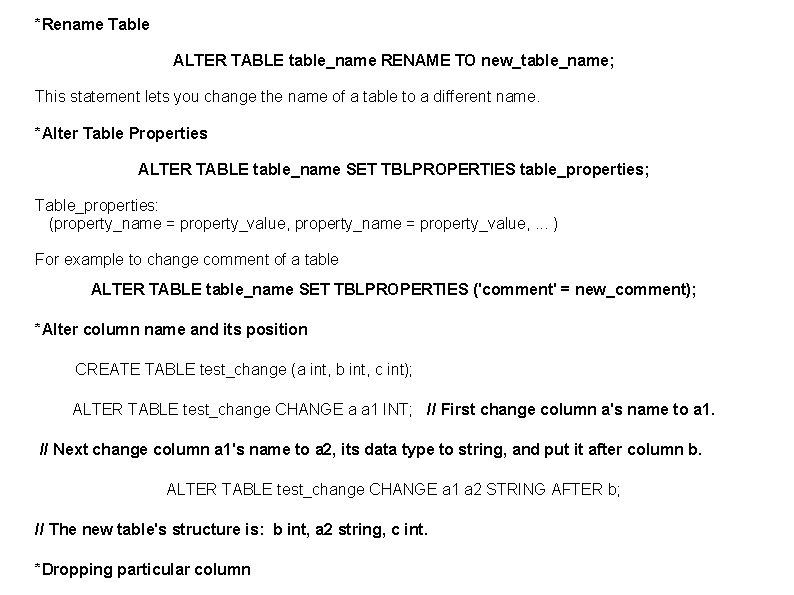

*Rename Table ALTER TABLE table_name RENAME TO new_table_name; This statement lets you change the name of a table to a different name. *Alter Table Properties ALTER TABLE table_name SET TBLPROPERTIES table_properties; Table_properties: (property_name = property_value, . . . ) For example to change comment of a table ALTER TABLE table_name SET TBLPROPERTIES ('comment' = new_comment); *Alter column name and its position CREATE TABLE test_change (a int, b int, c int); ALTER TABLE test_change CHANGE a a 1 INT; // First change column a's name to a 1. // Next change column a 1's name to a 2, its data type to string, and put it after column b. ALTER TABLE test_change CHANGE a 1 a 2 STRING AFTER b; // The new table's structure is: b int, a 2 string, c int. *Dropping particular column

![HIVE DMLData Manipulation language Loading data in to table LOAD DATA LOCAL INPATH path HIVE DML(Data Manipulation language) Loading data in to table LOAD DATA [LOCAL] INPATH 'path](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-24.jpg)

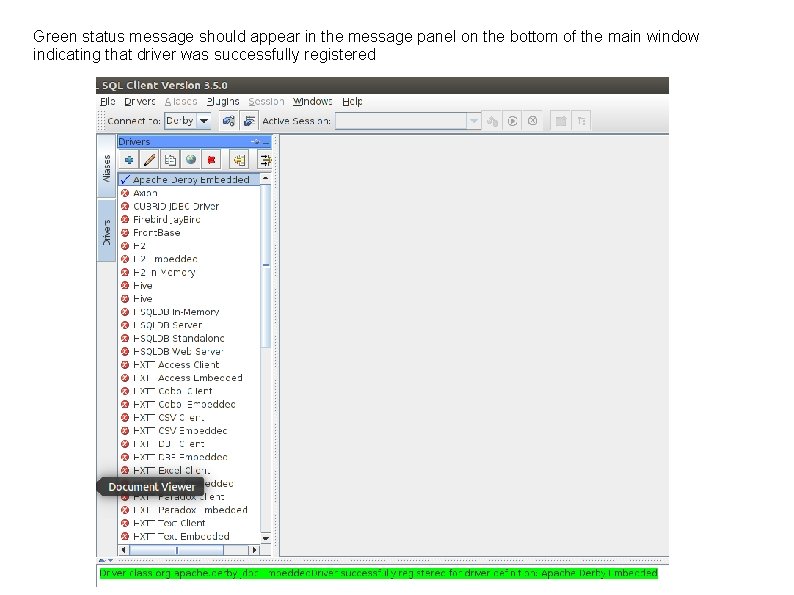

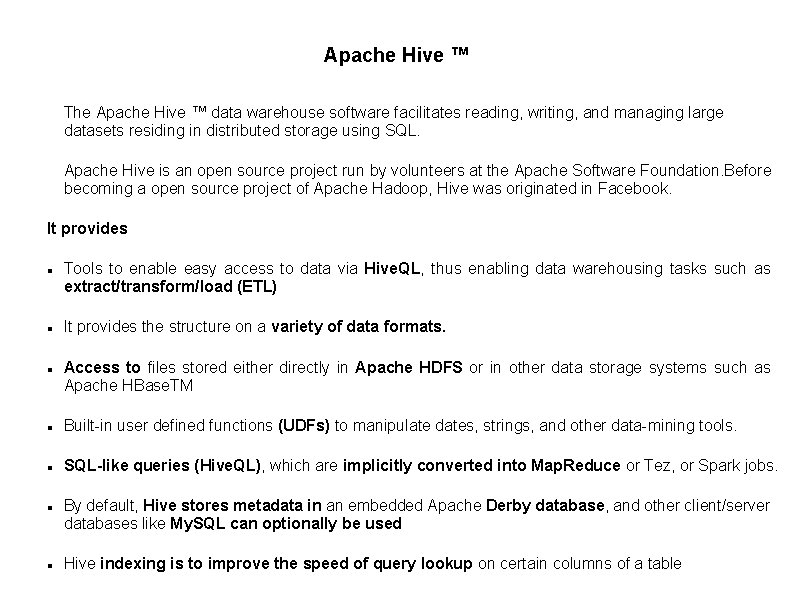

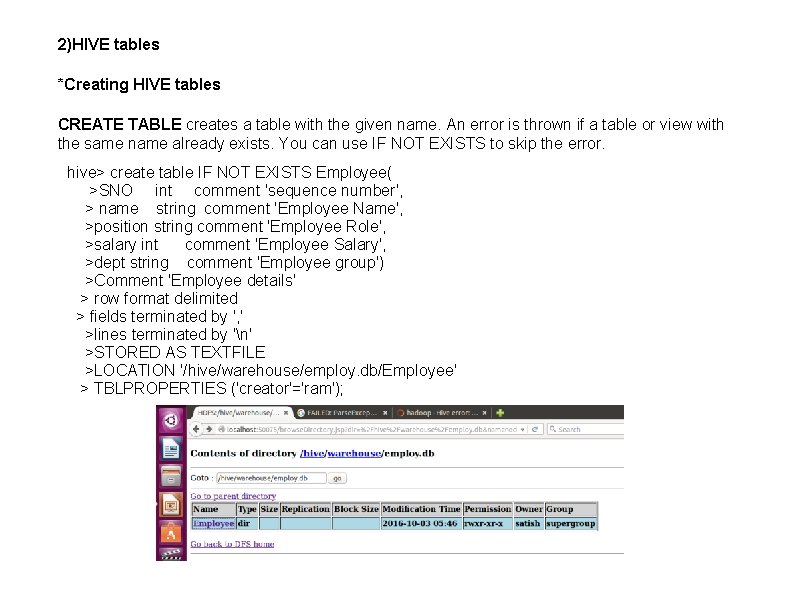

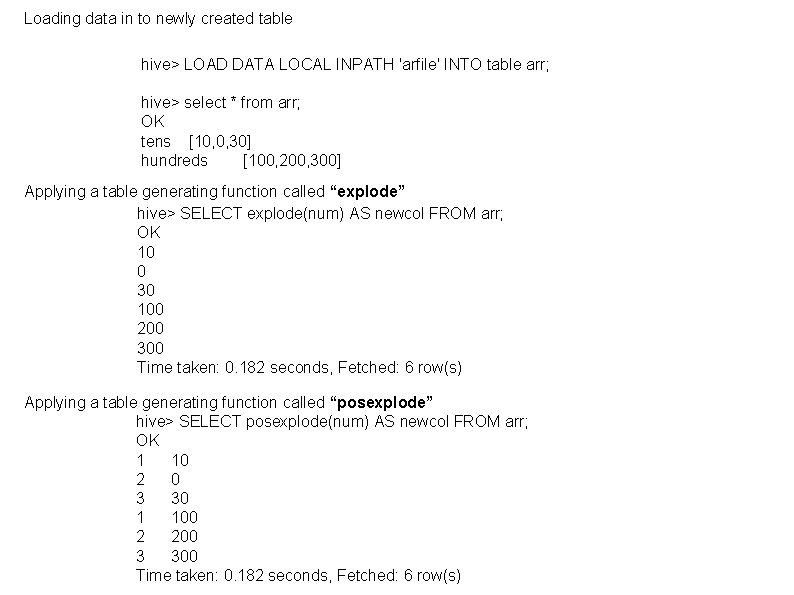

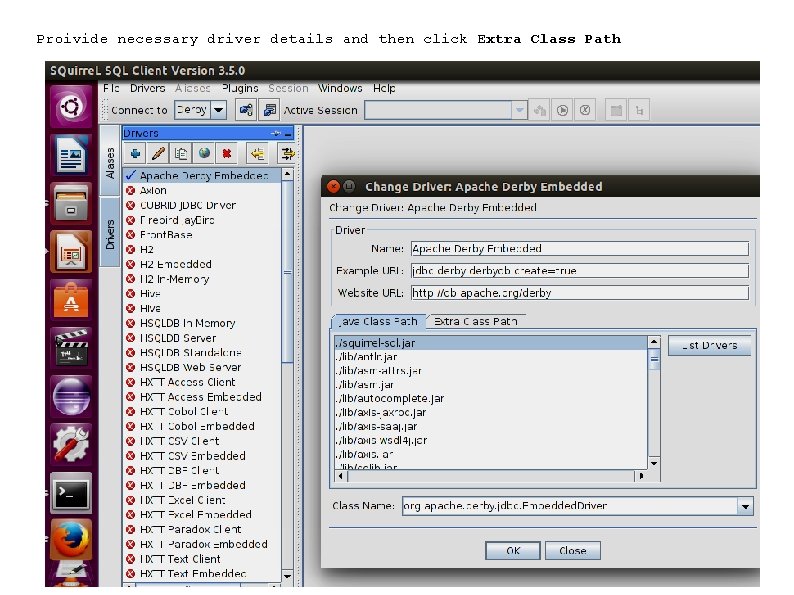

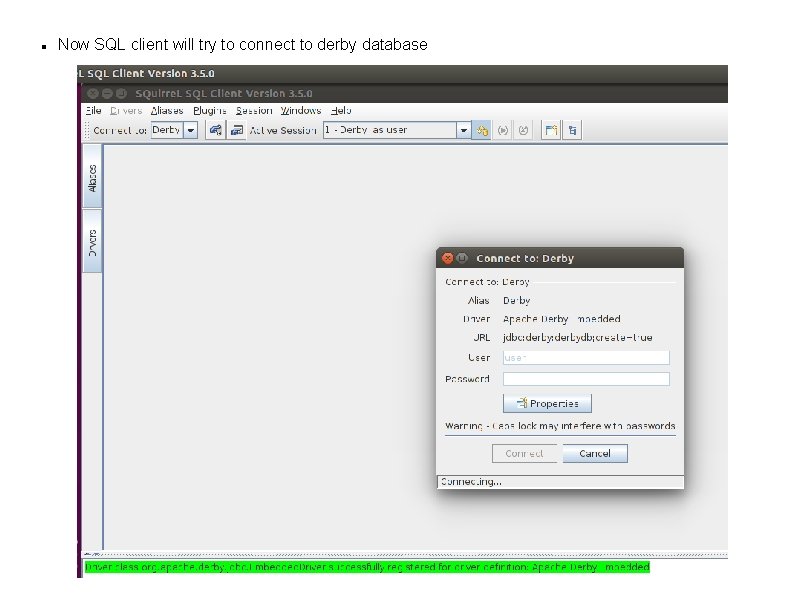

HIVE DML(Data Manipulation language) Loading data in to table LOAD DATA [LOCAL] INPATH 'path to file' [OVERWRITE] INTO TABLE table_name Example: Consider text file in local filesystem with following contents

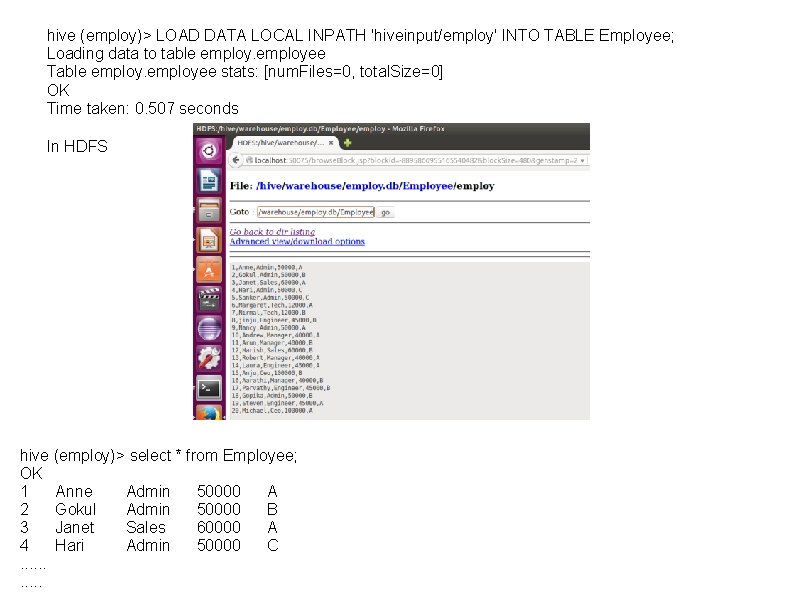

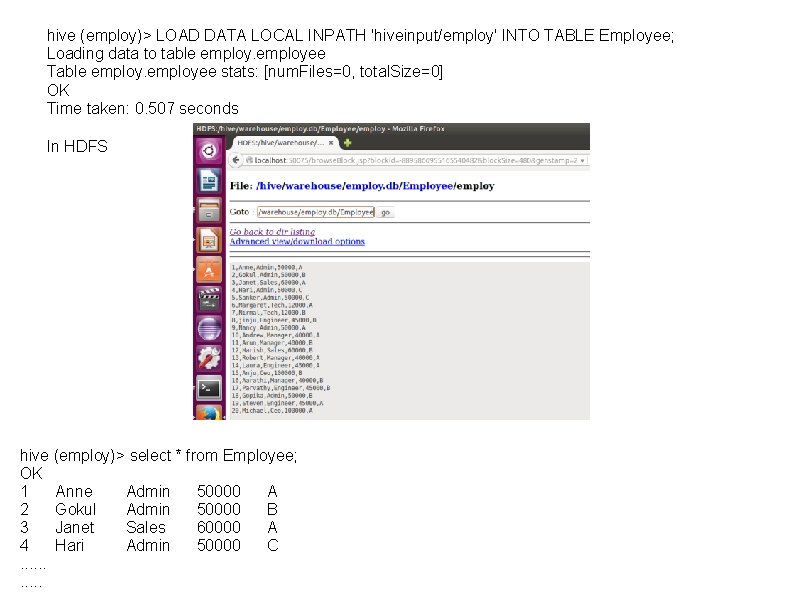

hive (employ)> LOAD DATA LOCAL INPATH 'hiveinput/employ' INTO TABLE Employee; Loading data to table employee Table employee stats: [num. Files=0, total. Size=0] OK Time taken: 0. 507 seconds In HDFS hive (employ)> select * from Employee; OK 1 Anne Admin 50000 A 2 Gokul Admin 50000 B 3 Janet Sales 60000 A 4 Hari Admin 50000 C. . .

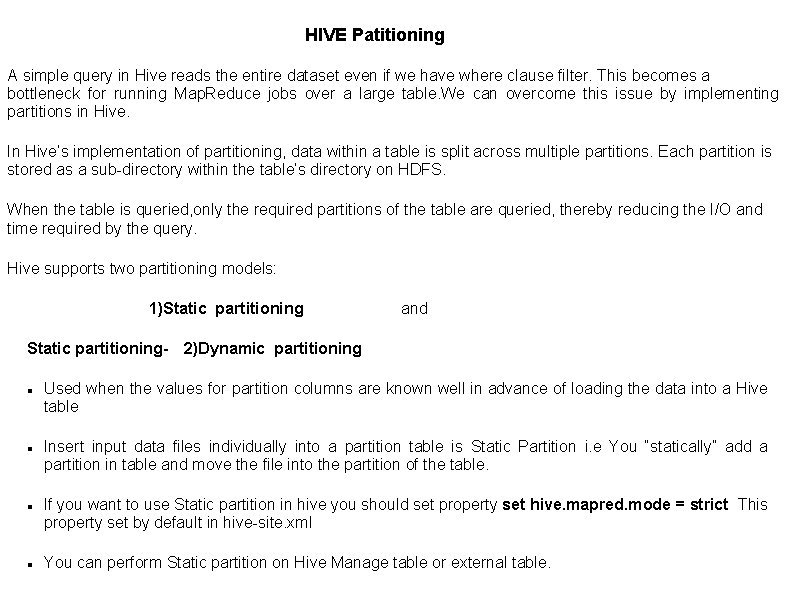

HIVE Patitioning A simple query in Hive reads the entire dataset even if we have where clause filter. This becomes a bottleneck for running Map. Reduce jobs over a large table. We can overcome this issue by implementing partitions in Hive. In Hive’s implementation of partitioning, data within a table is split across multiple partitions. Each partition is stored as a sub-directory within the table’s directory on HDFS. When the table is queried, only the required partitions of the table are queried, thereby reducing the I/O and time required by the query. Hive supports two partitioning models: 1)Static partitioning and Static partitioning- 2)Dynamic partitioning Used when the values for partition columns are known well in advance of loading the data into a Hive table Insert input data files individually into a partition table is Static Partition i. e You “statically” add a partition in table and move the file into the partition of the table. If you want to use Static partition in hive you should set property set hive. mapred. mode = strict This property set by default in hive-site. xml You can perform Static partition on Hive Manage table or external table.

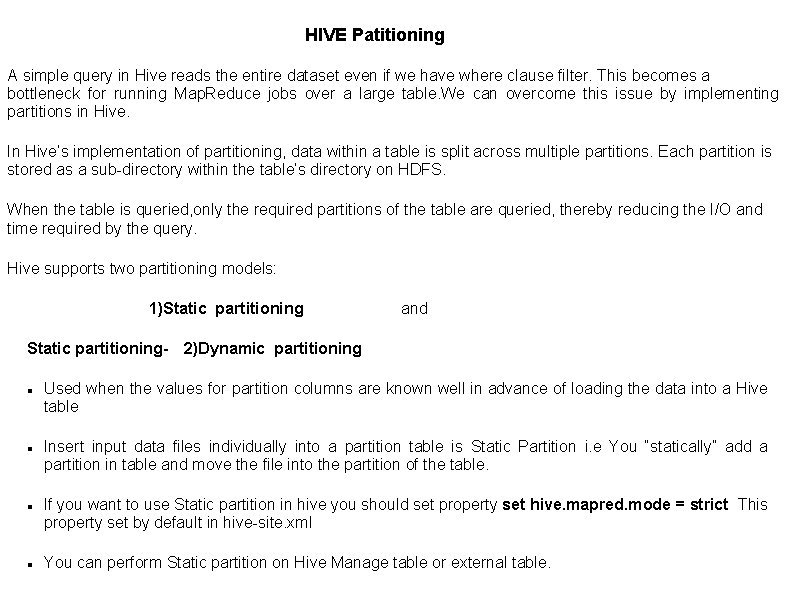

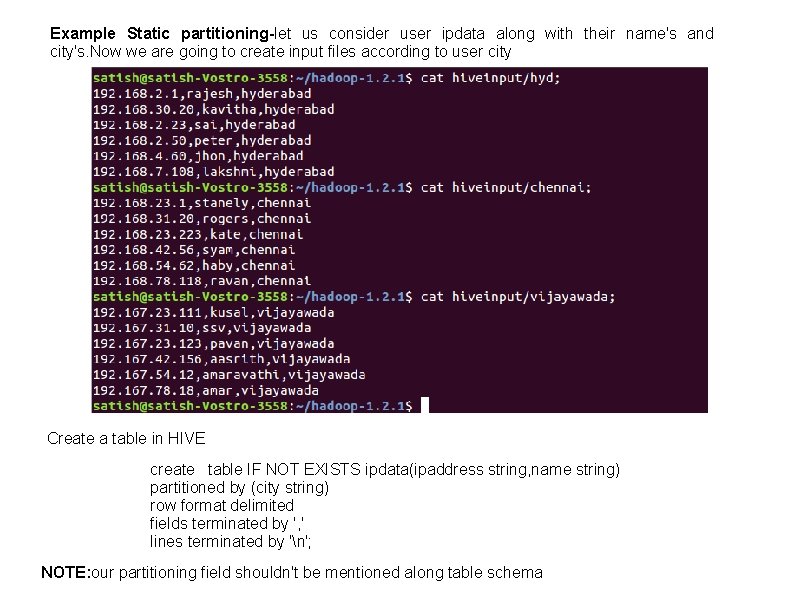

Example Static partitioning-let us consider user ipdata along with their name's and city's. Now we are going to create input files according to user city Create a table in HIVE create table IF NOT EXISTS ipdata(ipaddress string, name string) partitioned by (city string) row format delimited fields terminated by ', ' lines terminated by 'n'; NOTE: our partitioning field shouldn't be mentioned along table schema

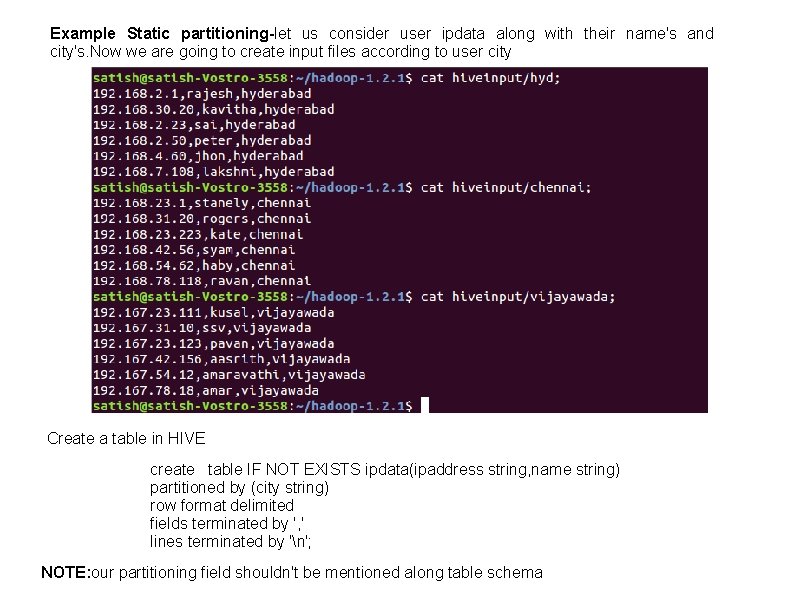

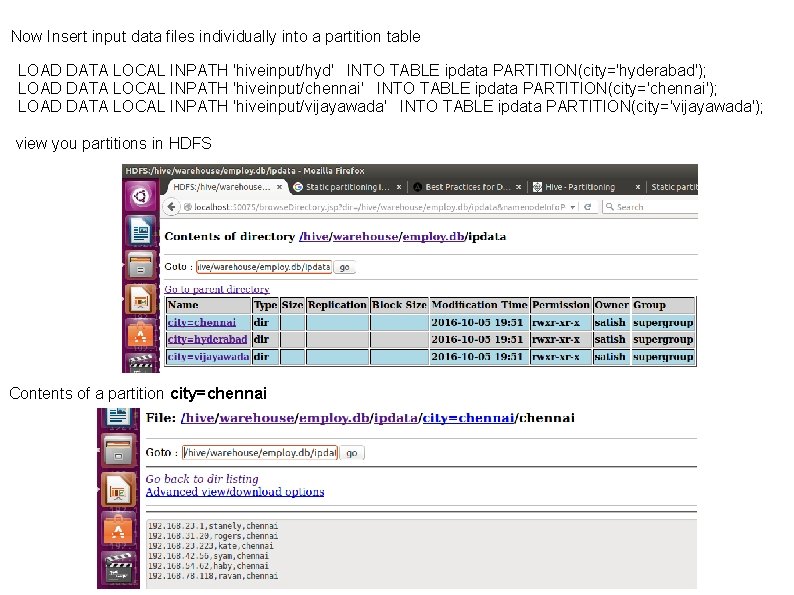

Now Insert input data files individually into a partition table LOAD DATA LOCAL INPATH 'hiveinput/hyd' INTO TABLE ipdata PARTITION(city='hyderabad'); LOAD DATA LOCAL INPATH 'hiveinput/chennai' INTO TABLE ipdata PARTITION(city='chennai'); LOAD DATA LOCAL INPATH 'hiveinput/vijayawada' INTO TABLE ipdata PARTITION(city='vijayawada'); view you partitions in HDFS Contents of a partition city=chennai

To Know the schema of newly created table hive (employ)> DESCRIBE Employee; OK sno int sequence number name string Employee Name position string Employee Role salary int Employee Salary dept string Employee group Time taken: 0. 124 seconds, Fetched: 5 row(s) You can also copy the schema (but not the data) of an existing table: hive (employ)> CREATE TABLE IF NOT EXISTS employ. Employee 2 > LIKE employ. Employee; OK Time taken: 0. 197 seconds hive (employ)> show tables; OK employee 2 Time taken: 0. 02 seconds, Fetched: 2 row(s) hive (employ)> DESCRIBE Employee 2; OK sno int sequence number name string Employee Name position string Employee Role salary int Employee Salary dept string Employee group Time taken: 0. 122 seconds, Fetched: 5 row(s)

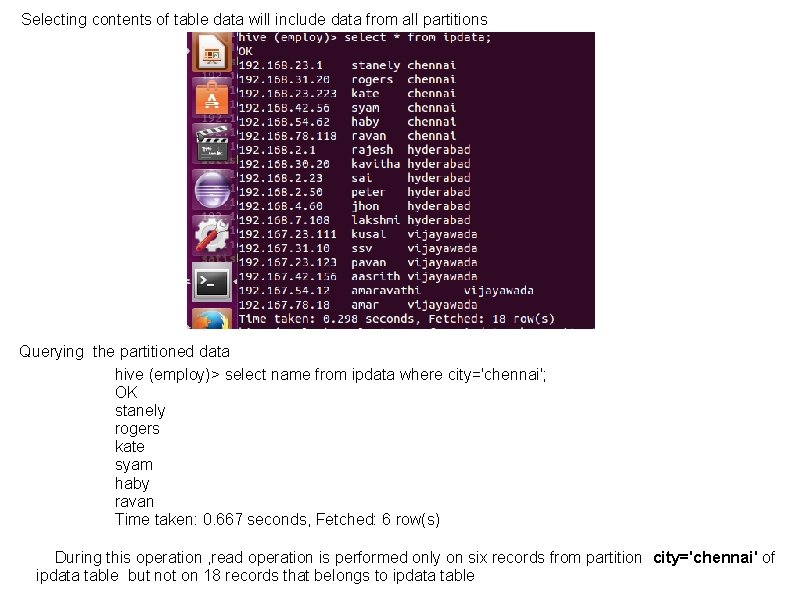

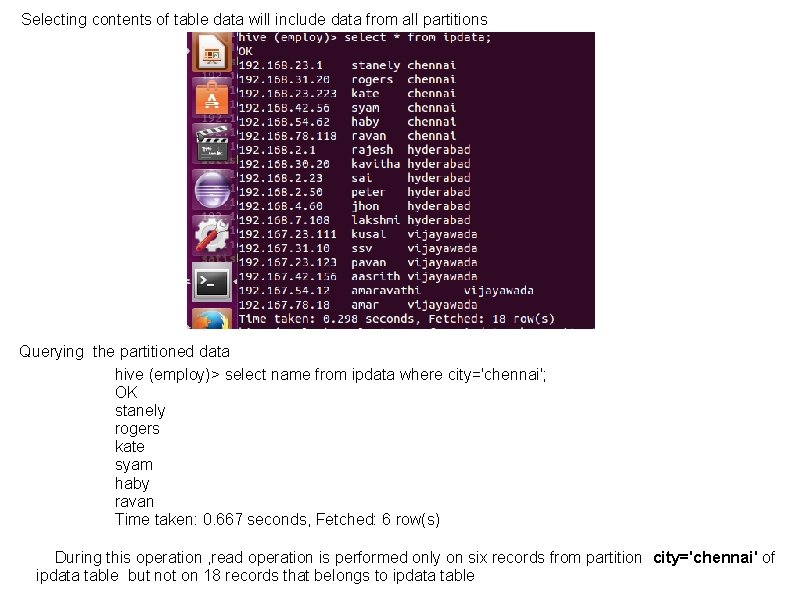

Selecting contents of table data will include data from all partitions Querying the partitioned data hive (employ)> select name from ipdata where city='chennai'; OK stanely rogers kate syam haby ravan Time taken: 0. 667 seconds, Fetched: 6 row(s) During this operation , read operation is performed only on six records from partition city='chennai' of ipdata table but not on 18 records that belongs to ipdata table

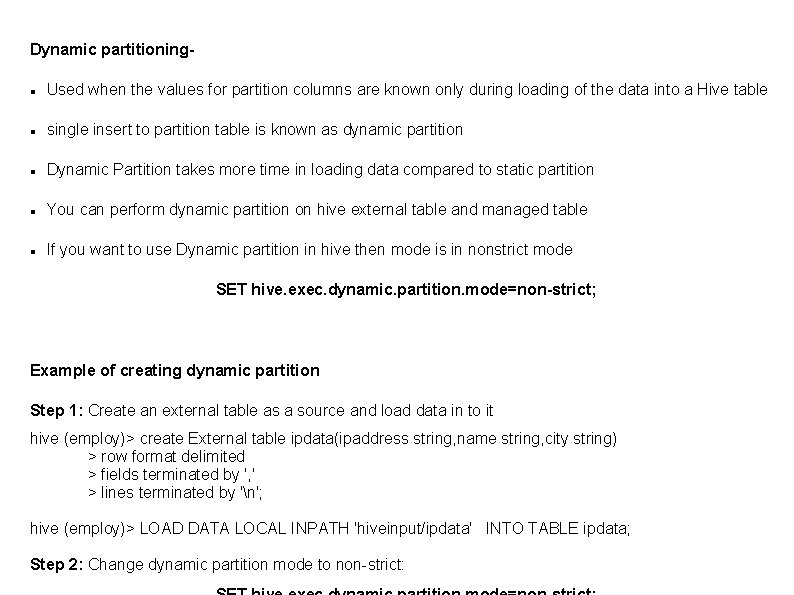

Dynamic partitioning Used when the values for partition columns are known only during loading of the data into a Hive table single insert to partition table is known as dynamic partition Dynamic Partition takes more time in loading data compared to static partition You can perform dynamic partition on hive external table and managed table If you want to use Dynamic partition in hive then mode is in nonstrict mode SET hive. exec. dynamic. partition. mode=non-strict; Example of creating dynamic partition Step 1: Create an external table as a source and load data in to it hive (employ)> create External table ipdata(ipaddress string, name string, city string) > row format delimited > fields terminated by ', ' > lines terminated by 'n'; hive (employ)> LOAD DATA LOCAL INPATH 'hiveinput/ipdata' INTO TABLE ipdata; Step 2: Change dynamic partition mode to non-strict:

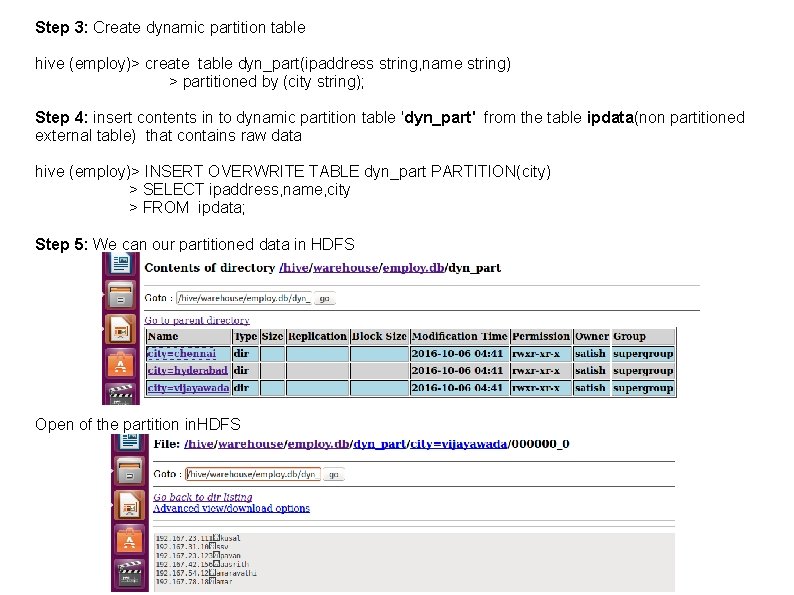

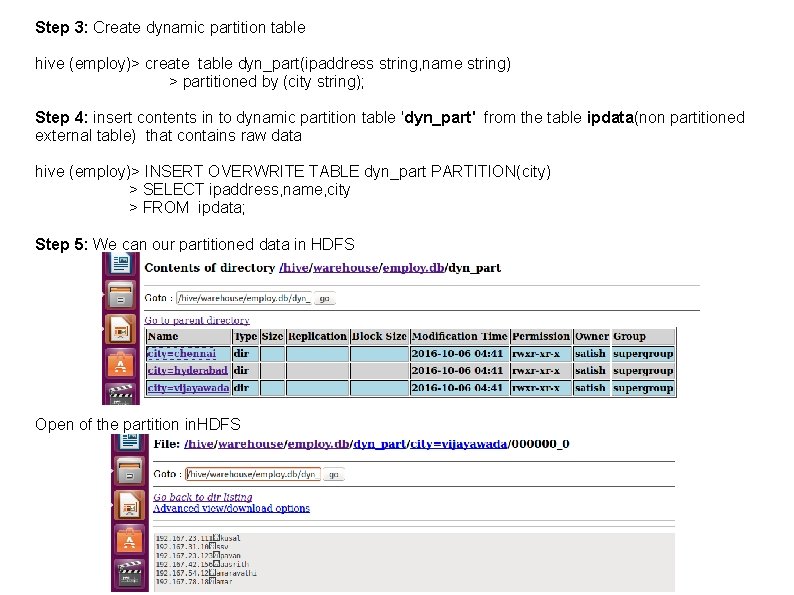

Step 3: Create dynamic partition table hive (employ)> create table dyn_part(ipaddress string, name string) > partitioned by (city string); Step 4: insert contents in to dynamic partition table 'dyn_part' from the table ipdata(non partitioned external table) that contains raw data hive (employ)> INSERT OVERWRITE TABLE dyn_part PARTITION(city) > SELECT ipaddress, name, city > FROM ipdata; Step 5: We can our partitioned data in HDFS Open of the partition in. HDFS

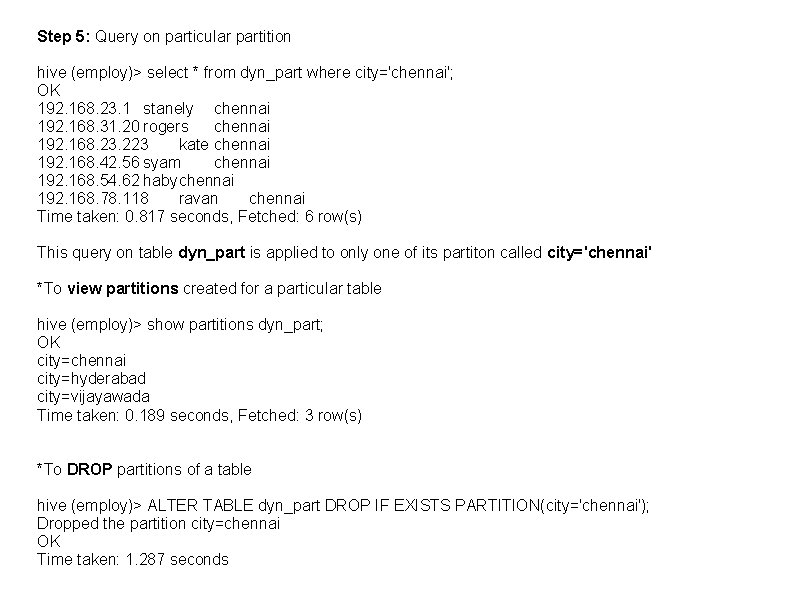

Step 5: Query on particular partition hive (employ)> select * from dyn_part where city='chennai'; OK 192. 168. 23. 1 stanely chennai 192. 168. 31. 20 rogers chennai 192. 168. 23. 223 kate chennai 192. 168. 42. 56 syam chennai 192. 168. 54. 62 habychennai 192. 168. 78. 118 ravan chennai Time taken: 0. 817 seconds, Fetched: 6 row(s) This query on table dyn_part is applied to only one of its partiton called city='chennai' *To view partitions created for a particular table hive (employ)> show partitions dyn_part; OK city=chennai city=hyderabad city=vijayawada Time taken: 0. 189 seconds, Fetched: 3 row(s) *To DROP partitions of a table hive (employ)> ALTER TABLE dyn_part DROP IF EXISTS PARTITION(city='chennai'); Dropped the partition city=chennai OK Time taken: 1. 287 seconds

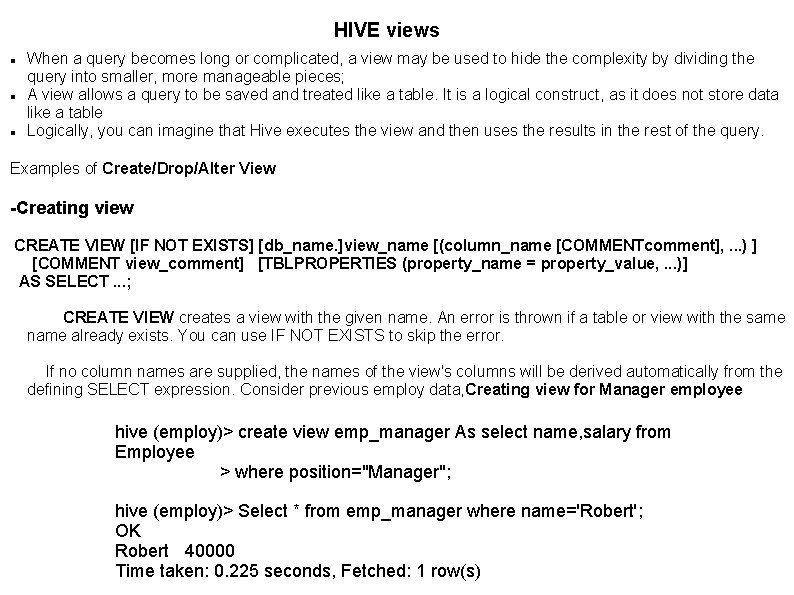

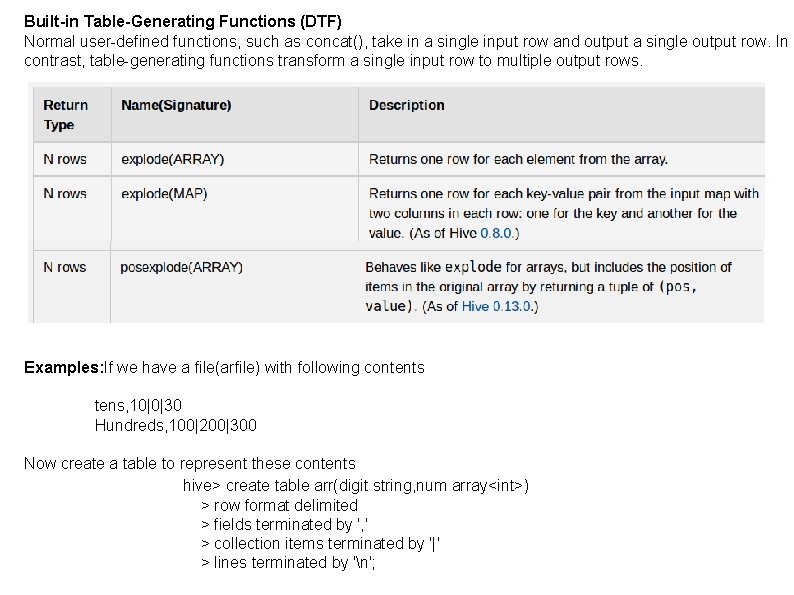

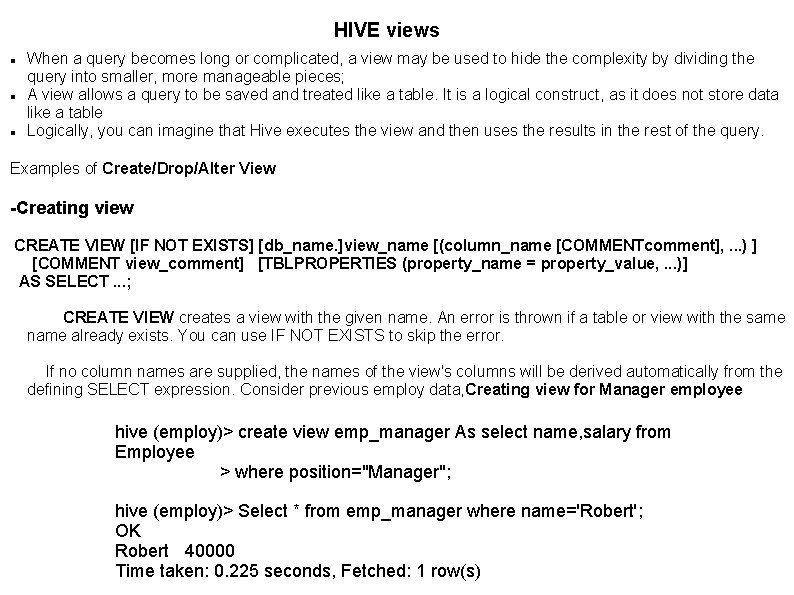

HIVE views When a query becomes long or complicated, a view may be used to hide the complexity by dividing the query into smaller, more manageable pieces; A view allows a query to be saved and treated like a table. It is a logical construct, as it does not store data like a table Logically, you can imagine that Hive executes the view and then uses the results in the rest of the query. Examples of Create/Drop/Alter View -Creating view CREATE VIEW [IF NOT EXISTS] [db_name. ]view_name [(column_name [COMMENTcomment], . . . ) ] [COMMENT view_comment] [TBLPROPERTIES (property_name = property_value, . . . )] AS SELECT. . . ; CREATE VIEW creates a view with the given name. An error is thrown if a table or view with the same name already exists. You can use IF NOT EXISTS to skip the error. If no column names are supplied, the names of the view's columns will be derived automatically from the defining SELECT expression. Consider previous employ data, Creating view for Manager employee hive (employ)> create view emp_manager As select name, salary from Employee > where position="Manager"; hive (employ)> Select * from emp_manager where name='Robert'; OK Robert 40000 Time taken: 0. 225 seconds, Fetched: 1 row(s)

![Dropping view Use the following syntax to drop a view DROP VIEW IF EXISTS -Dropping view Use the following syntax to drop a view: DROP VIEW [IF EXISTS]](https://slidetodoc.com/presentation_image_h/22f8a86fb6017570d4cbaa56acd48d30/image-35.jpg)

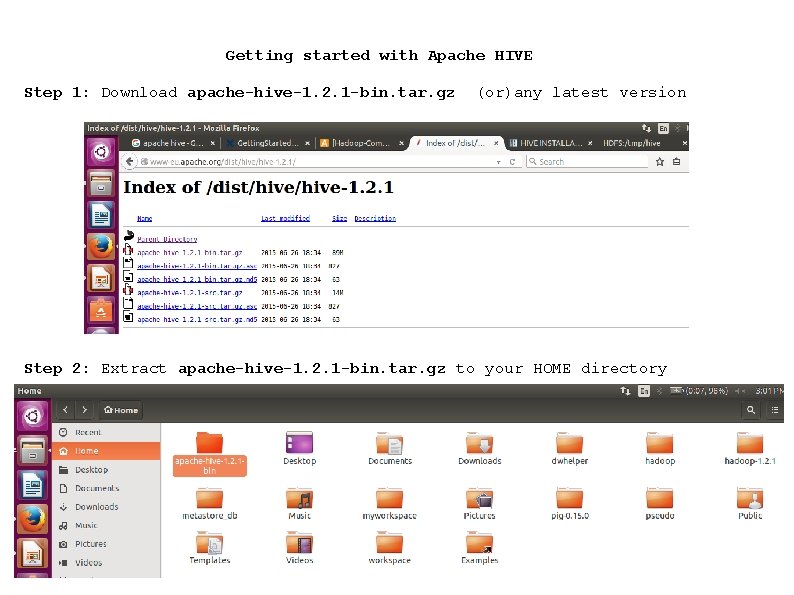

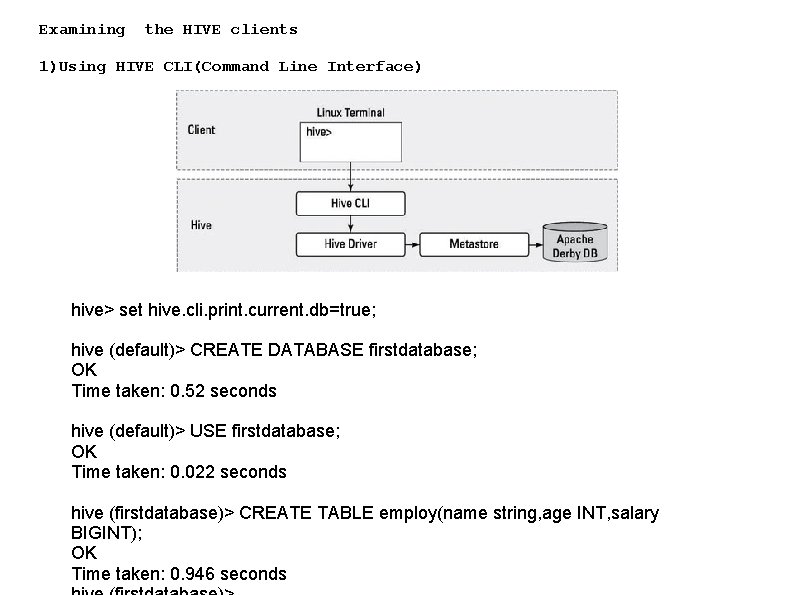

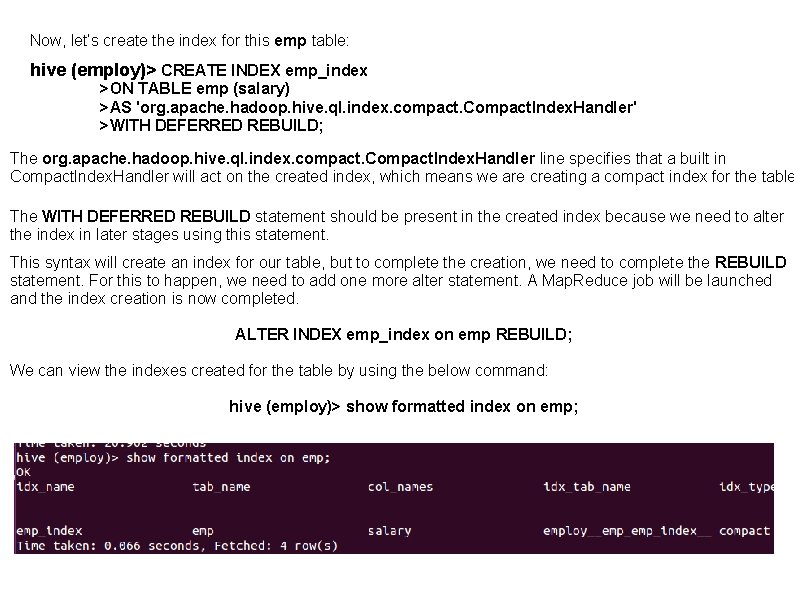

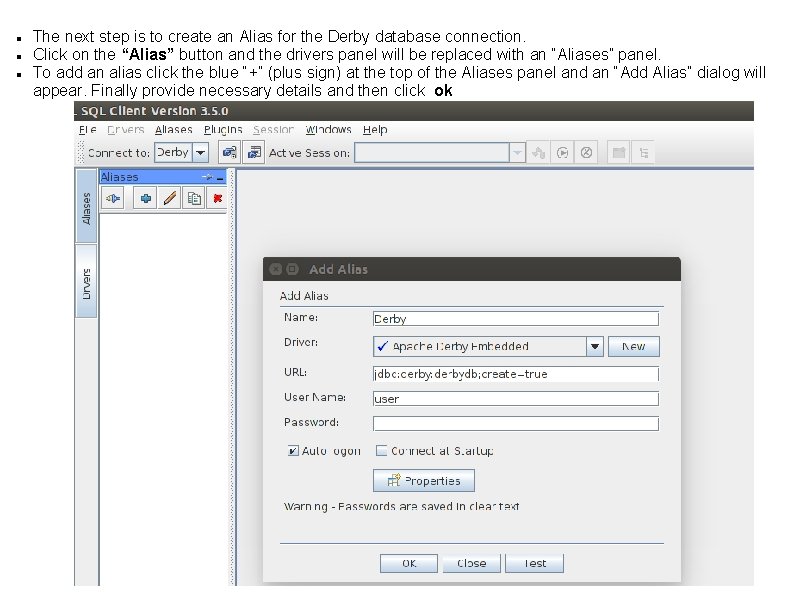

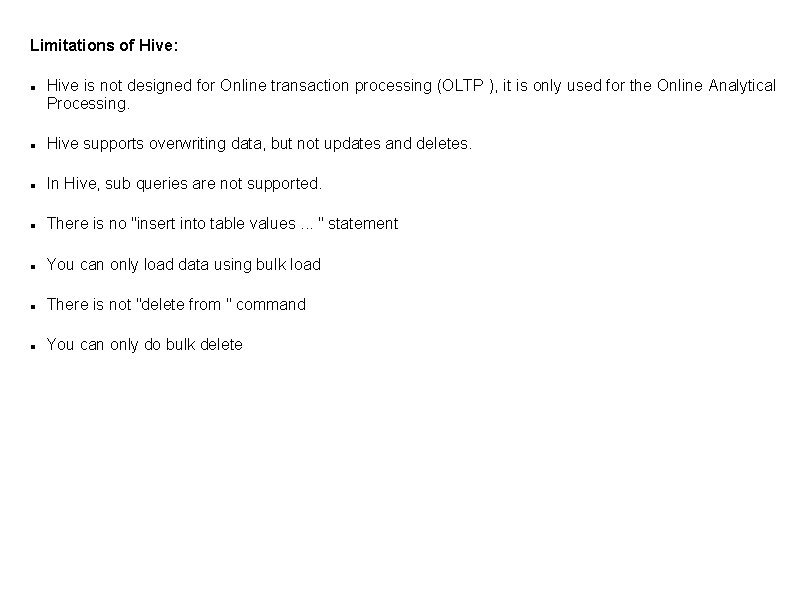

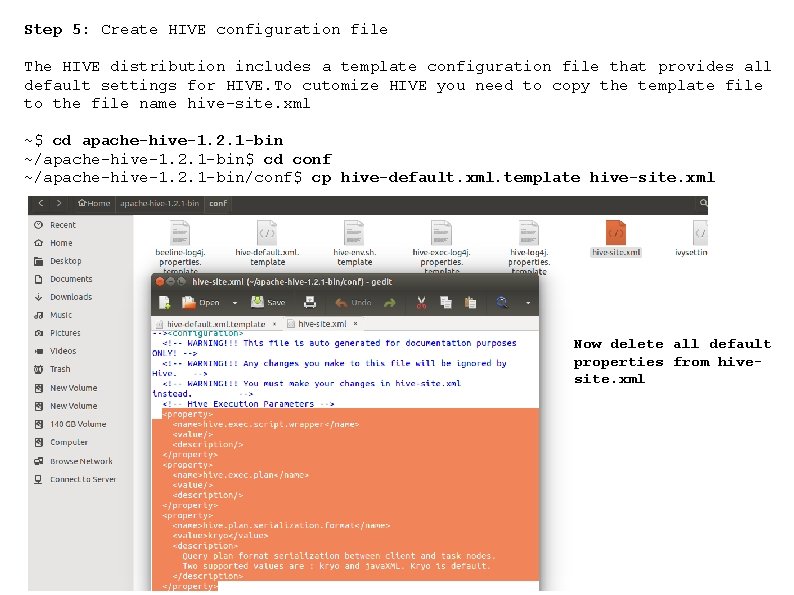

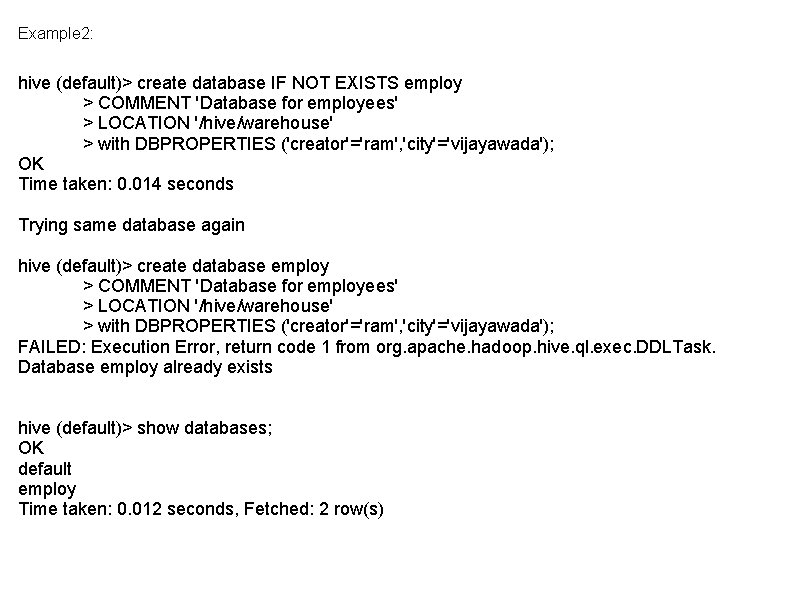

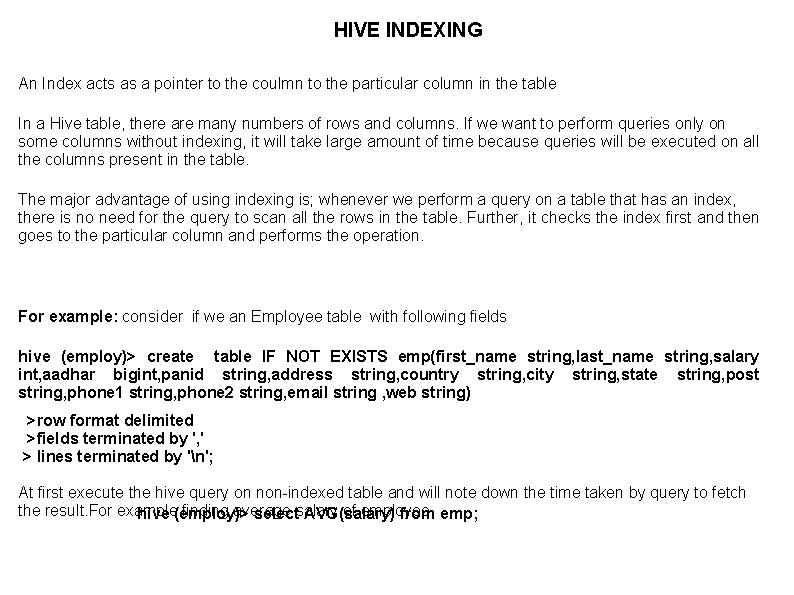

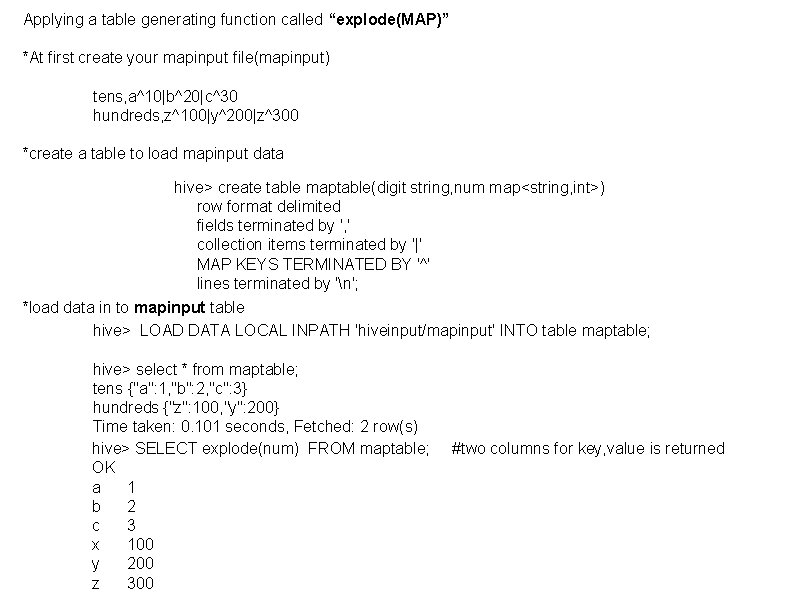

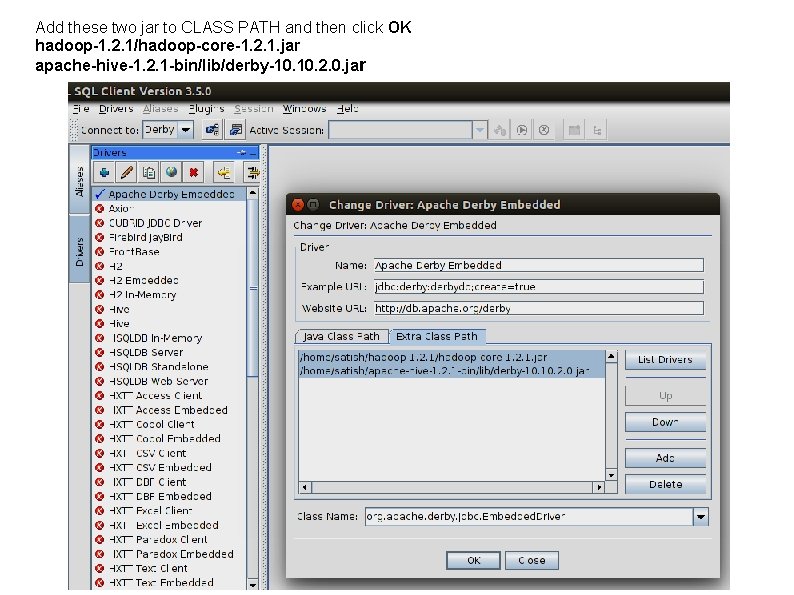

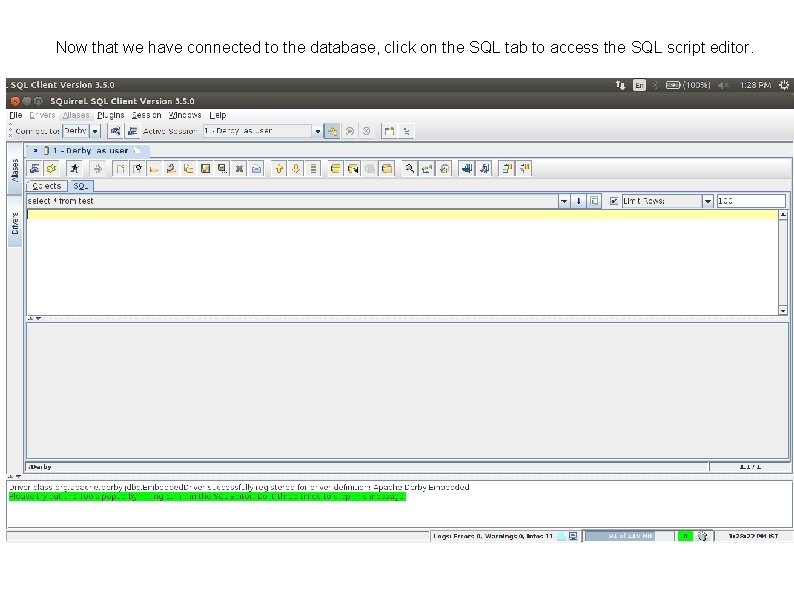

-Dropping view Use the following syntax to drop a view: DROP VIEW [IF EXISTS] [db_name. ]view_name; DROP VIEW removes metadata for the specified view. (It is illegal to use DROP TABLE on a view. ) The following query drops a view named as emp_manager hive> DROP VIEW emp_manager; Alter View Properties ALTER VIEW [db_name. ]view_name SET TBLPROPERTIES table_properties; table_properties: : (property_name = property_value, . . . ) As with ALTER TABLE, you can use this statement to add your own metadata to a view. Example: ALTER VIEW employ. emp_manager SET TBLPROPERTIES('Creator'='name');

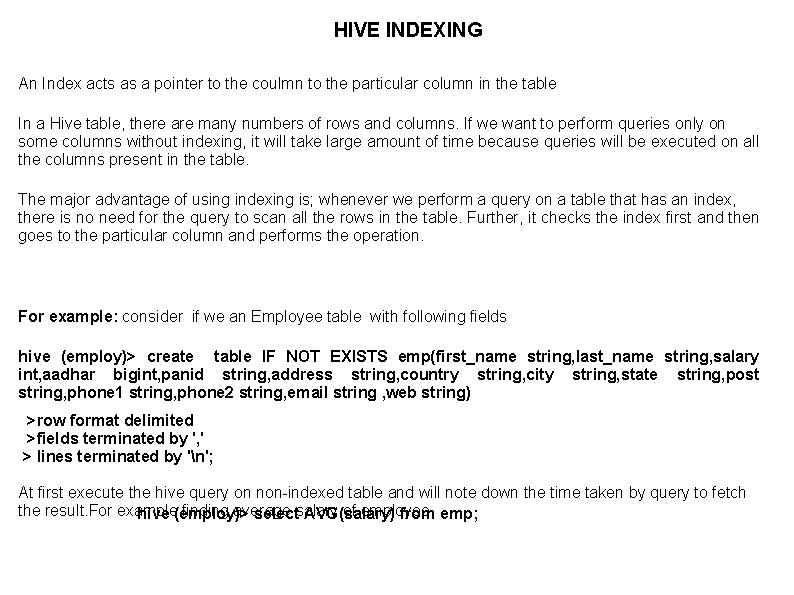

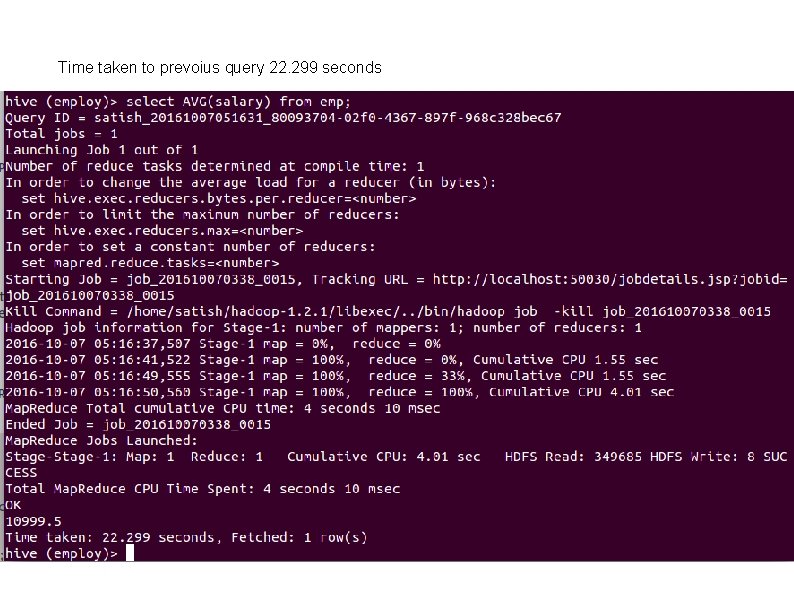

HIVE INDEXING An Index acts as a pointer to the coulmn to the particular column in the table In a Hive table, there are many numbers of rows and columns. If we want to perform queries only on some columns without indexing, it will take large amount of time because queries will be executed on all the columns present in the table. The major advantage of using indexing is; whenever we perform a query on a table that has an index, there is no need for the query to scan all the rows in the table. Further, it checks the index first and then goes to the particular column and performs the operation. For example: consider if we an Employee table with following fields hive (employ)> create table IF NOT EXISTS emp(first_name string, last_name string, salary int, aadhar bigint, panid string, address string, country string, city string, state string, post string, phone 1 string, phone 2 string, email string , web string) >row format delimited >fields terminated by ', ' > lines terminated by 'n'; At first execute the hive query on non-indexed table and will note down the time taken by query to fetch the result. For example finding average salary of employee hive (employ)> select AVG(salary) from emp;

Time taken to prevoius query 22. 299 seconds

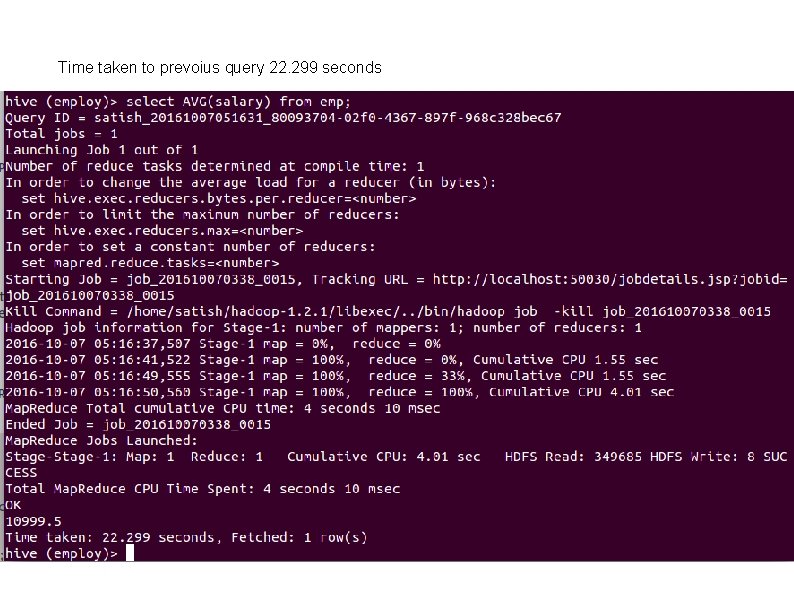

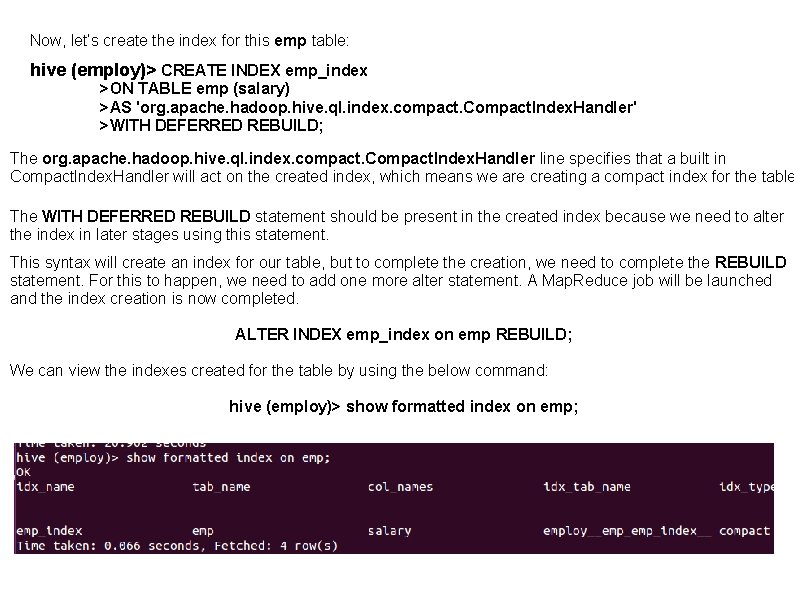

Now, let’s create the index for this emp table: hive (employ)> CREATE INDEX emp_index >ON TABLE emp (salary) >AS 'org. apache. hadoop. hive. ql. index. compact. Compact. Index. Handler' >WITH DEFERRED REBUILD; The org. apache. hadoop. hive. ql. index. compact. Compact. Index. Handler line specifies that a built in Compact. Index. Handler will act on the created index, which means we are creating a compact index for the table The WITH DEFERRED REBUILD statement should be present in the created index because we need to alter the index in later stages using this statement. This syntax will create an index for our table, but to complete the creation, we need to complete the REBUILD statement. For this to happen, we need to add one more alter statement. A Map. Reduce job will be launched and the index creation is now completed. ALTER INDEX emp_index on emp REBUILD; We can view the indexes created for the table by using the below command: hive (employ)> show formatted index on emp;

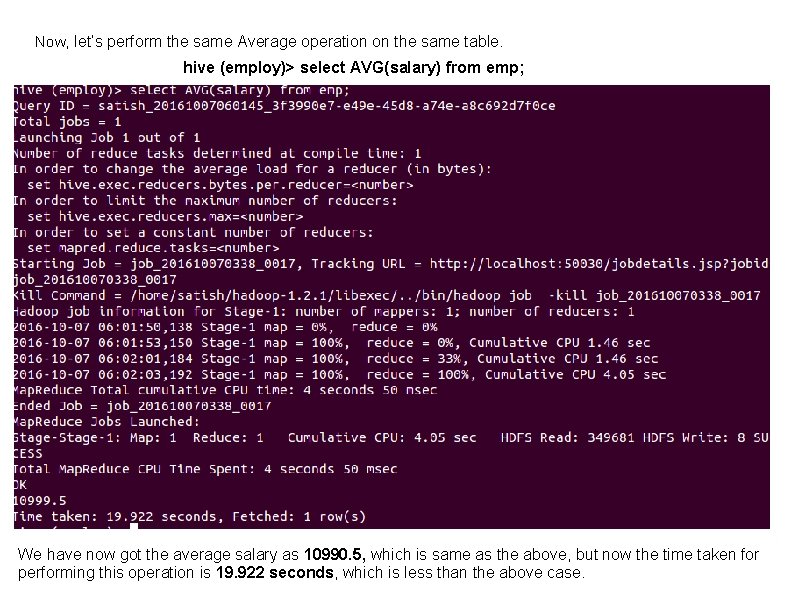

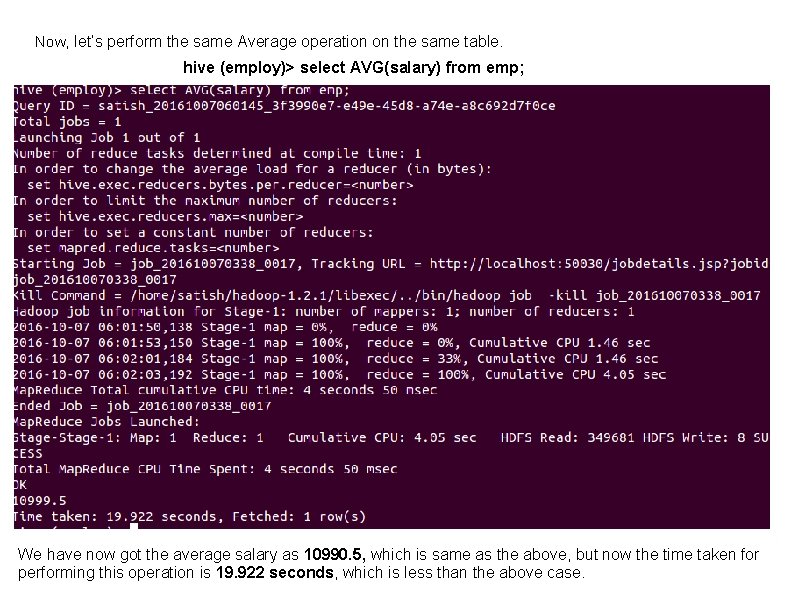

Now, let’s perform the same Average operation on the same table. hive (employ)> select AVG(salary) from emp; We have now got the average salary as 10990. 5, which is same as the above, but now the time taken for performing this operation is 19. 922 seconds, which is less than the above case.

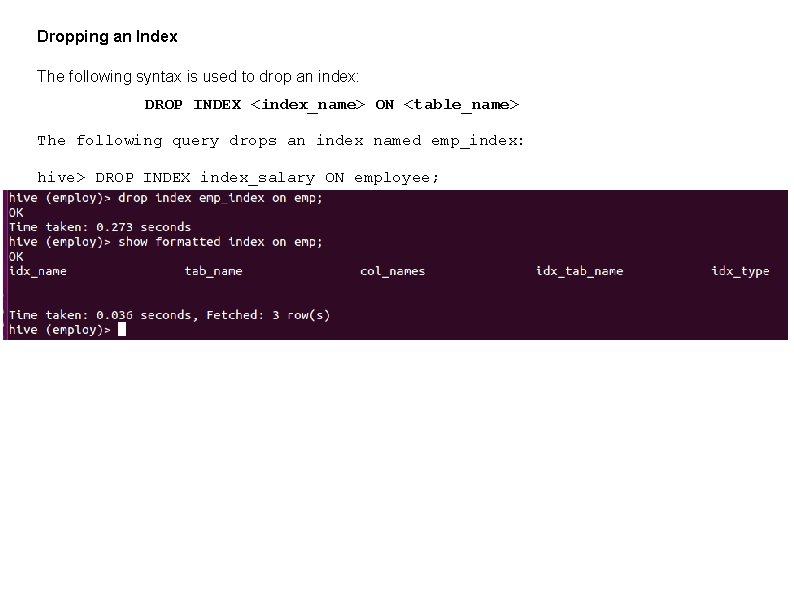

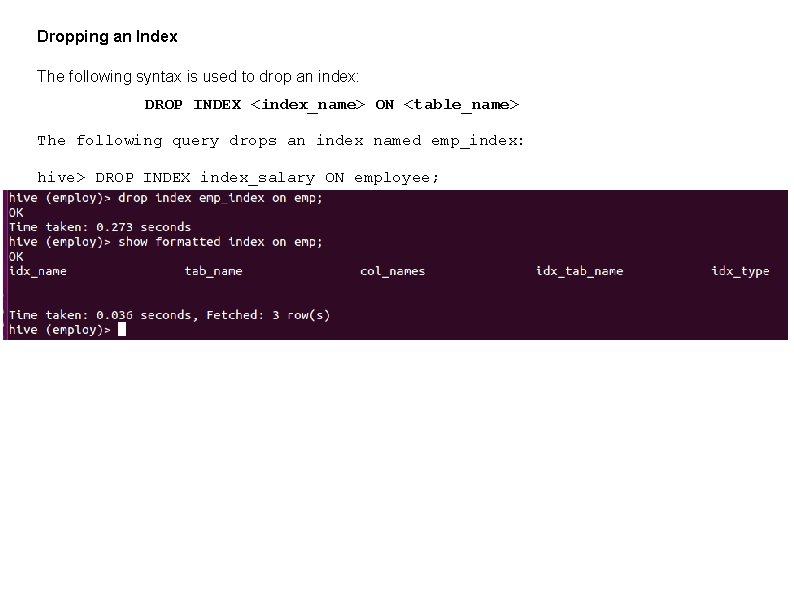

Dropping an Index The following syntax is used to drop an index: DROP INDEX <index_name> ON <table_name> The following query drops an index named emp_index: hive> DROP INDEX index_salary ON employee;

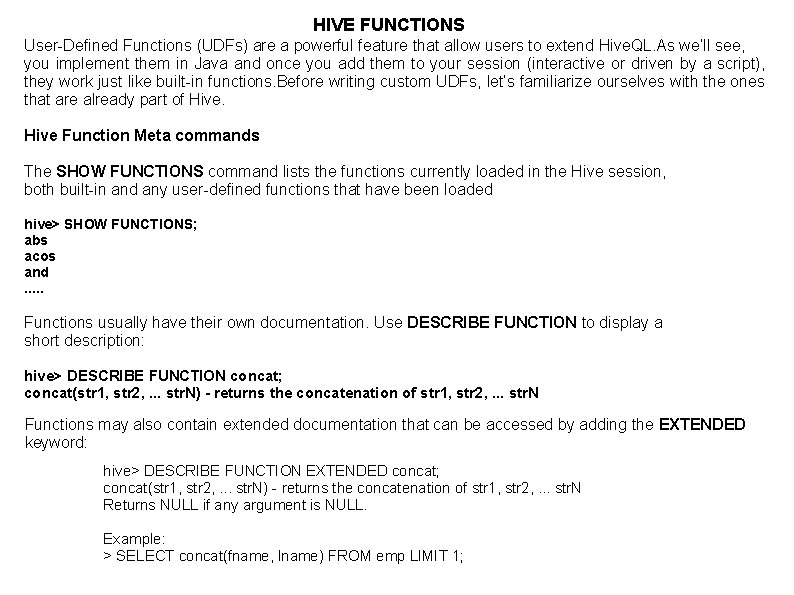

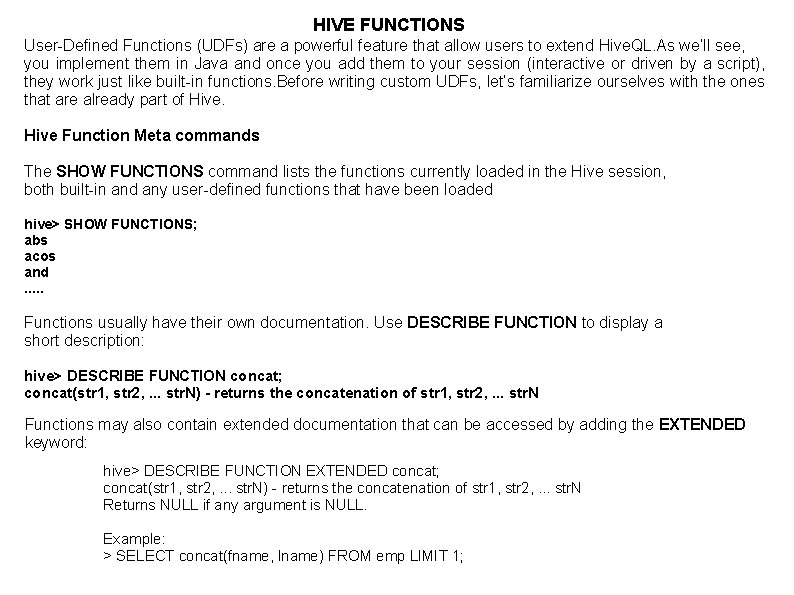

HIVE FUNCTIONS User-Defined Functions (UDFs) are a powerful feature that allow users to extend Hive. QL. As we’ll see, you implement them in Java and once you add them to your session (interactive or driven by a script), they work just like built-in functions. Before writing custom UDFs, let’s familiarize ourselves with the ones that are already part of Hive Function Meta commands The SHOW FUNCTIONS command lists the functions currently loaded in the Hive session, both built-in and any user-defined functions that have been loaded hive> SHOW FUNCTIONS; abs acos and. . . Functions usually have their own documentation. Use DESCRIBE FUNCTION to display a short description: hive> DESCRIBE FUNCTION concat; concat(str 1, str 2, . . . str. N) - returns the concatenation of str 1, str 2, . . . str. N Functions may also contain extended documentation that can be accessed by adding the EXTENDED keyword: hive> DESCRIBE FUNCTION EXTENDED concat; concat(str 1, str 2, . . . str. N) - returns the concatenation of str 1, str 2, . . . str. N Returns NULL if any argument is NULL. Example: > SELECT concat(fname, lname) FROM emp LIMIT 1;

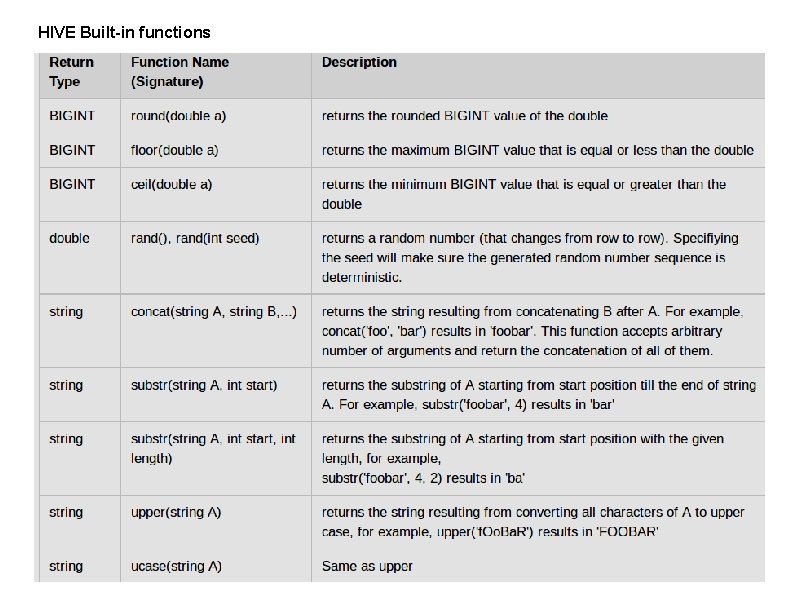

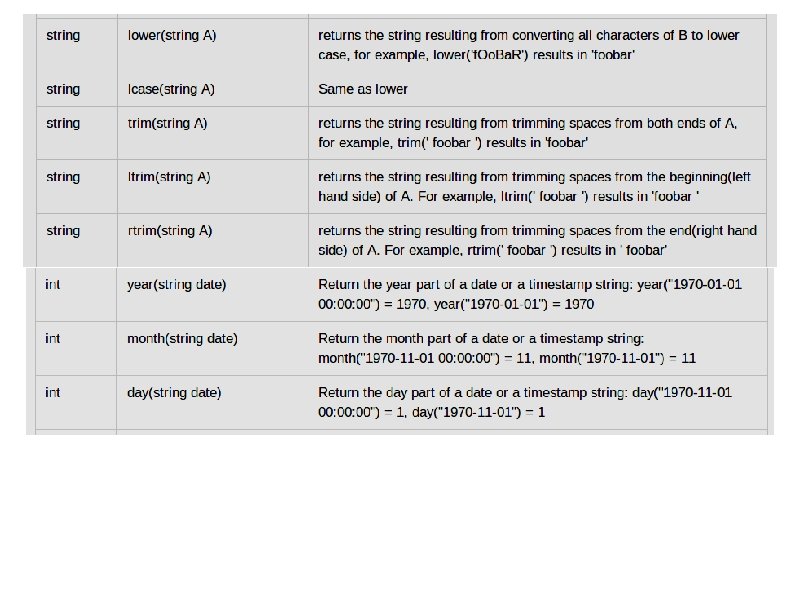

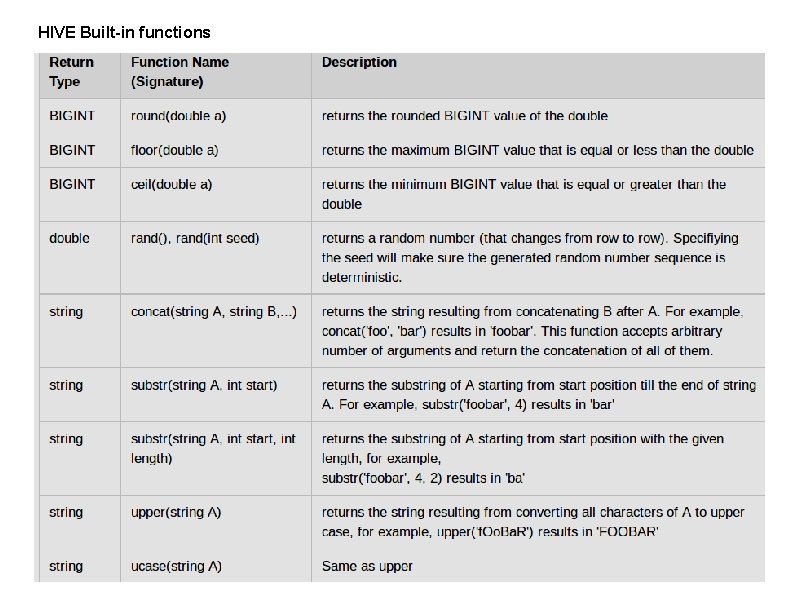

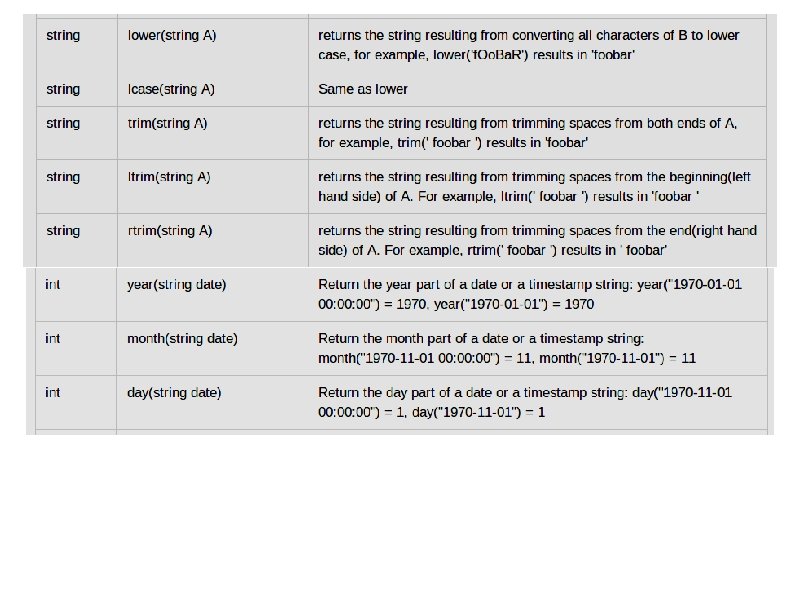

HIVE Built-in functions

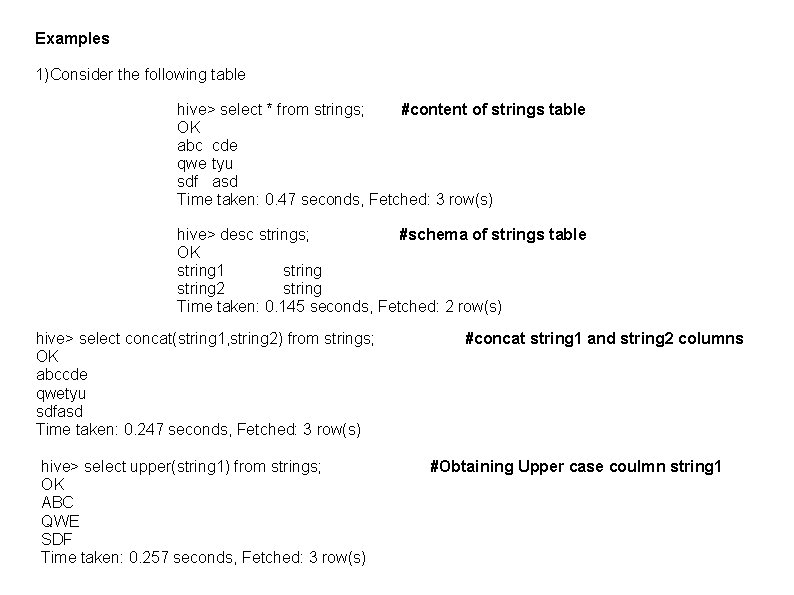

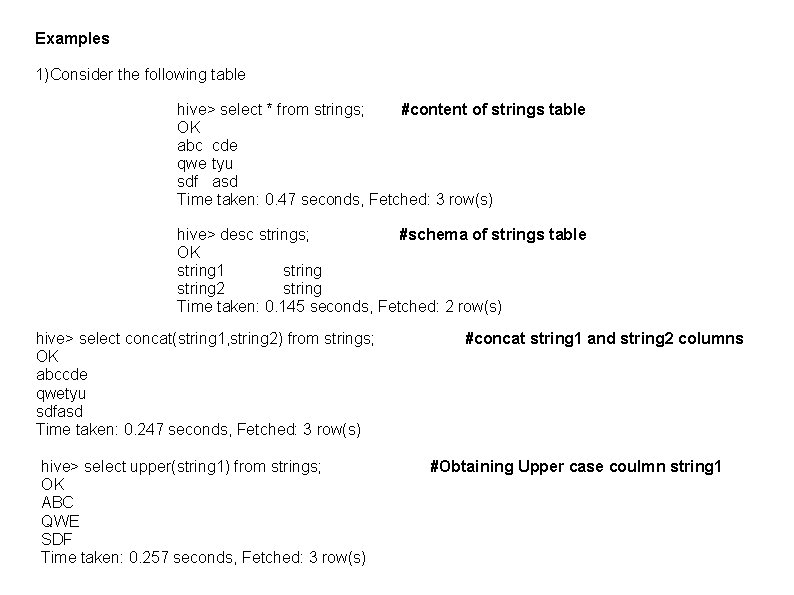

Examples 1)Consider the following table hive> select * from strings; #content of strings table OK abc cde qwe tyu sdf asd Time taken: 0. 47 seconds, Fetched: 3 row(s) hive> desc strings; #schema of strings table OK string 1 string 2 string Time taken: 0. 145 seconds, Fetched: 2 row(s) hive> select concat(string 1, string 2) from strings; OK abccde qwetyu sdfasd Time taken: 0. 247 seconds, Fetched: 3 row(s) hive> select upper(string 1) from strings; OK ABC QWE SDF Time taken: 0. 257 seconds, Fetched: 3 row(s) #concat string 1 and string 2 columns #Obtaining Upper case coulmn string 1

hive> select rand(2), string 1, string 2 from strings; #generating random numbers along with columns OK 0. 7311469360199058 abc cde 0. 9014476240300544 qwe tyu 0. 49682259343089075 sdf asd Time taken: 0. 181 seconds, Fetched: 3 row(s) hive> select substr(string 1, 2), substr(string 2, 2) from strings; #obtaining substrings from columns OK bc de we yu df sd Time taken: 0. 149 seconds, Fetched: 3 row(s) HIVE Aggregate functions(DAF)

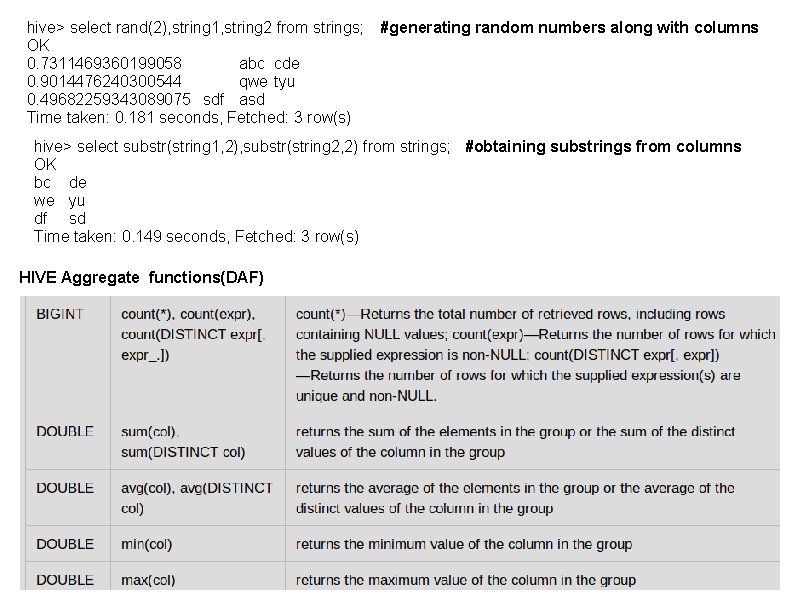

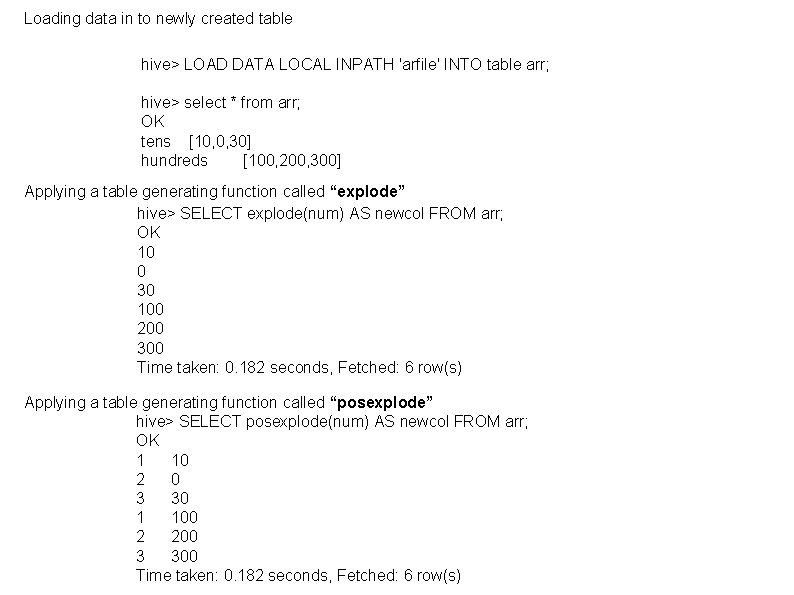

Built-in Table-Generating Functions (DTF) Normal user-defined functions, such as concat(), take in a single input row and output a single output row. In contrast, table-generating functions transform a single input row to multiple output rows. Examples: If we have a file(arfile) with following contents tens, 10|0|30 Hundreds, 100|200|300 Now create a table to represent these contents hive> create table arr(digit string, num array<int>) > row format delimited > fields terminated by ', ' > collection items terminated by '|' > lines terminated by 'n';

Loading data in to newly created table hive> LOAD DATA LOCAL INPATH 'arfile' INTO table arr; hive> select * from arr; OK tens [10, 0, 30] hundreds [100, 200, 300] Applying a table generating function called “explode” hive> SELECT explode(num) AS newcol FROM arr; OK 10 0 30 100 200 300 Time taken: 0. 182 seconds, Fetched: 6 row(s) Applying a table generating function called “posexplode” hive> SELECT posexplode(num) AS newcol FROM arr; OK 1 10 2 0 3 30 1 100 2 200 3 300 Time taken: 0. 182 seconds, Fetched: 6 row(s)

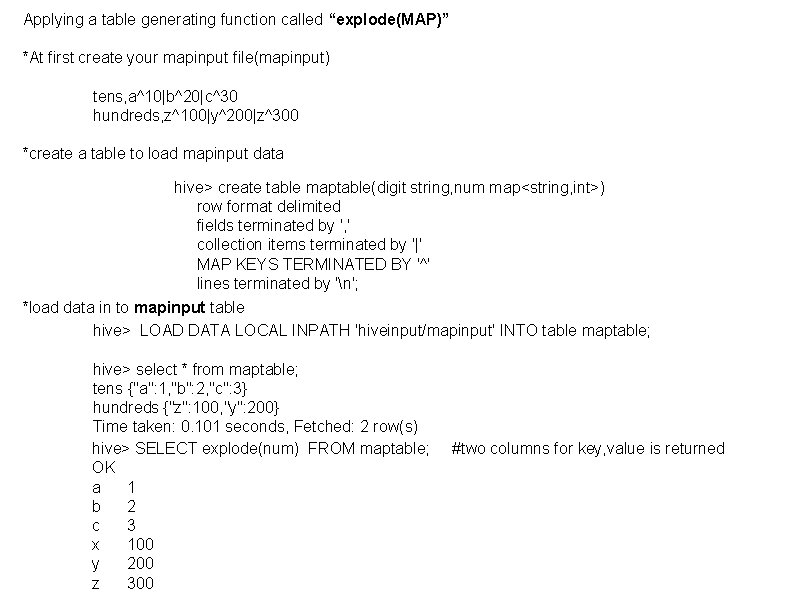

Applying a table generating function called “explode(MAP)” *At first create your mapinput file(mapinput) tens, a^10|b^20|c^30 hundreds, z^100|y^200|z^300 *create a table to load mapinput data hive> create table maptable(digit string, num map<string, int>) row format delimited fields terminated by ', ' collection items terminated by '|' MAP KEYS TERMINATED BY '^' lines terminated by 'n'; *load data in to mapinput table hive> LOAD DATA LOCAL INPATH 'hiveinput/mapinput' INTO table maptable; hive> select * from maptable; tens {"a": 1, "b": 2, "c": 3} hundreds {"z": 100, "y": 200} Time taken: 0. 101 seconds, Fetched: 2 row(s) hive> SELECT explode(num) FROM maptable; OK a 1 b 2 c 3 x 100 y 200 z 300 #two columns for key, value is returned

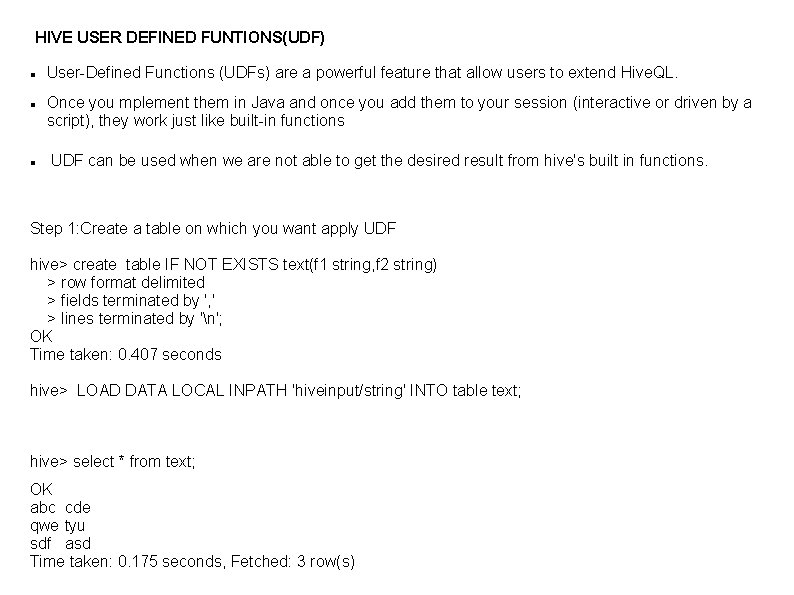

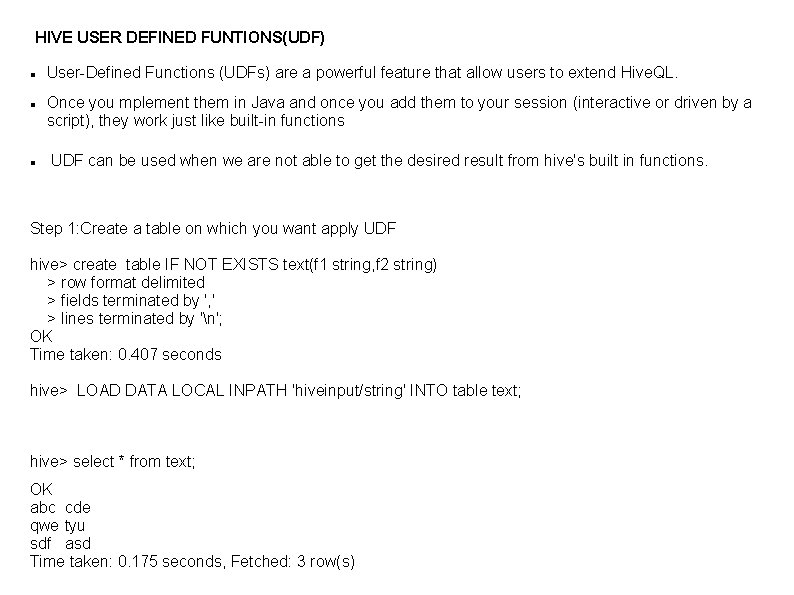

HIVE USER DEFINED FUNTIONS(UDF) User-Defined Functions (UDFs) are a powerful feature that allow users to extend Hive. QL. Once you mplement them in Java and once you add them to your session (interactive or driven by a script), they work just like built-in functions UDF can be used when we are not able to get the desired result from hive's built in functions. Step 1: Create a table on which you want apply UDF hive> create table IF NOT EXISTS text(f 1 string, f 2 string) > row format delimited > fields terminated by ', ' > lines terminated by 'n'; OK Time taken: 0. 407 seconds hive> LOAD DATA LOCAL INPATH 'hiveinput/string' INTO table text; hive> select * from text; OK abc cde qwe tyu sdf asd Time taken: 0. 175 seconds, Fetched: 3 row(s)

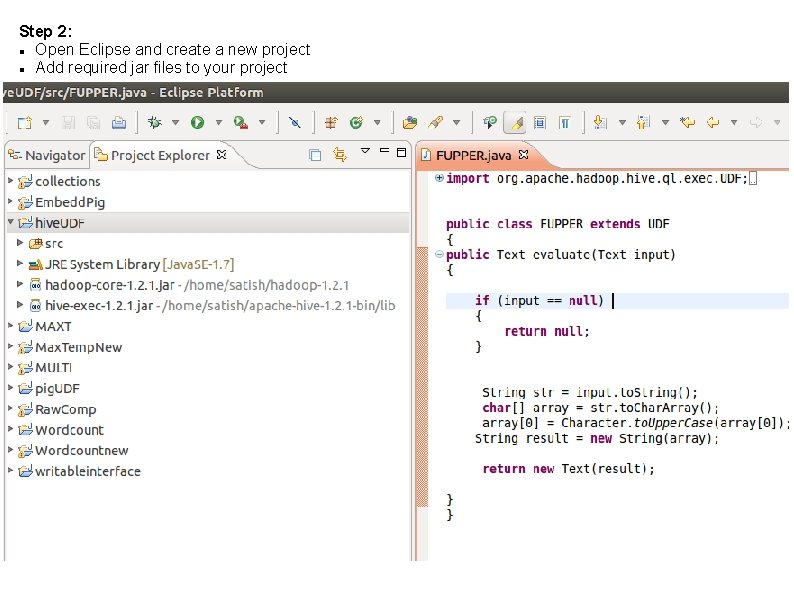

Step 2: Open Eclipse and create a new project Add required jar files to your project

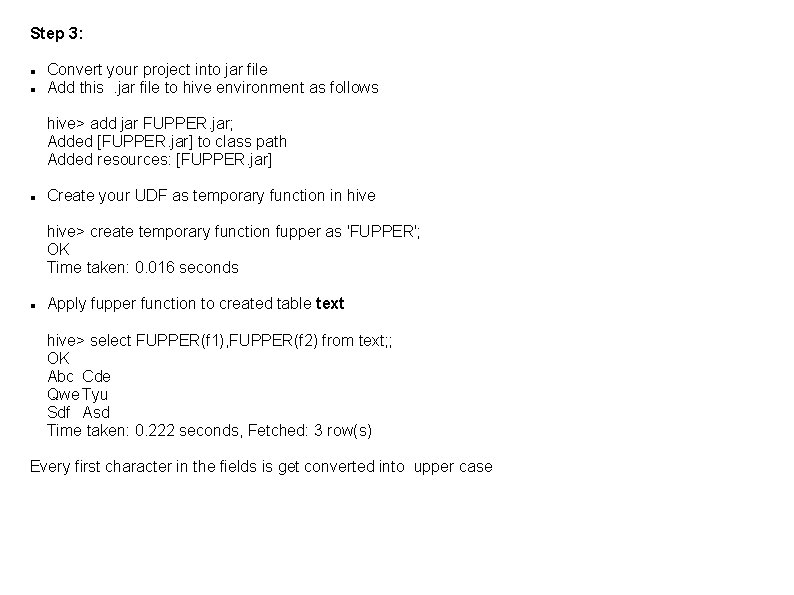

Step 3: Convert your project into jar file Add this. jar file to hive environment as follows hive> add jar FUPPER. jar; Added [FUPPER. jar] to class path Added resources: [FUPPER. jar] Create your UDF as temporary function in hive> create temporary function fupper as 'FUPPER'; OK Time taken: 0. 016 seconds Apply fupper function to created table text hive> select FUPPER(f 1), FUPPER(f 2) from text; ; OK Abc Cde Qwe Tyu Sdf Asd Time taken: 0. 222 seconds, Fetched: 3 row(s) Every first character in the fields is get converted into upper case

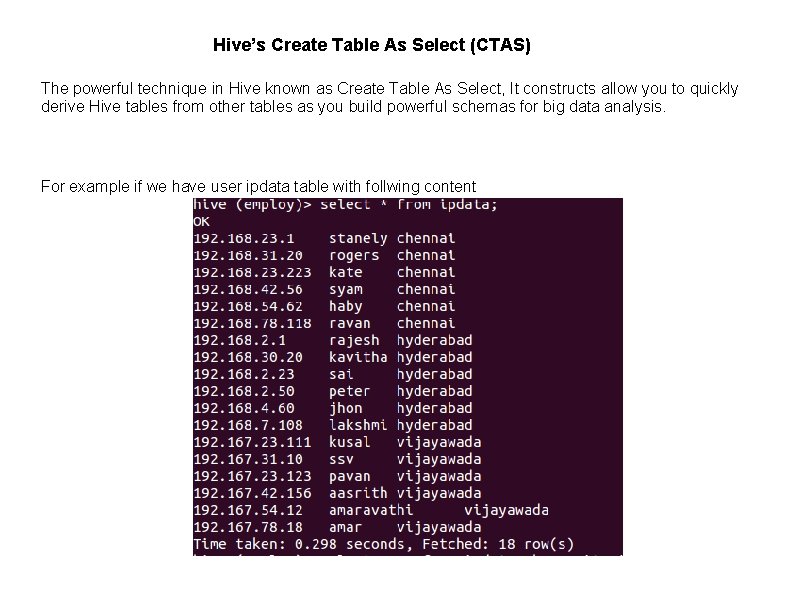

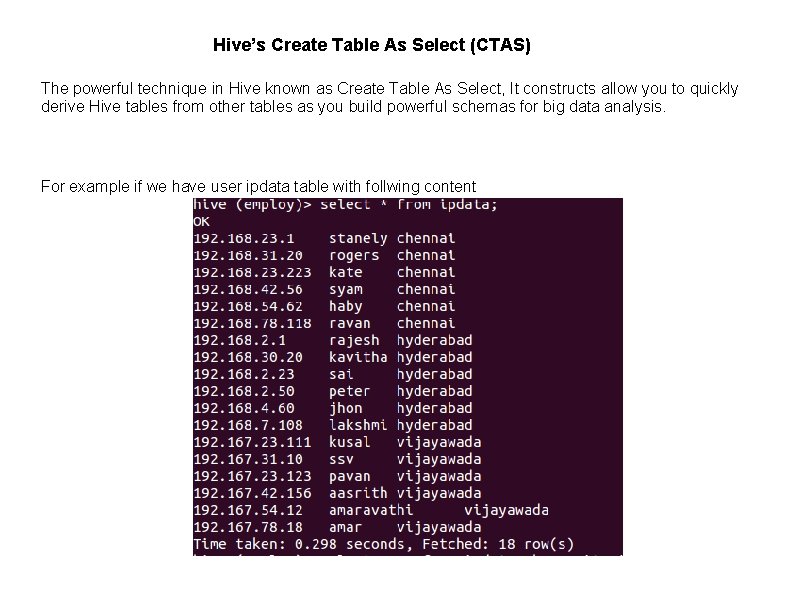

Hive’s Create Table As Select (CTAS) The powerful technique in Hive known as Create Table As Select, It constructs allow you to quickly derive Hive tables from other tables as you build powerful schemas for big data analysis. For example if we have user ipdata table with follwing content

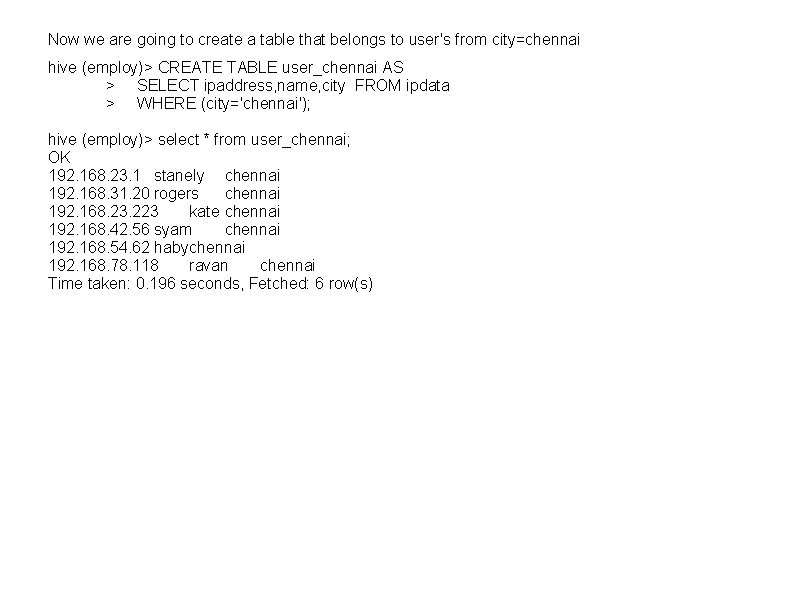

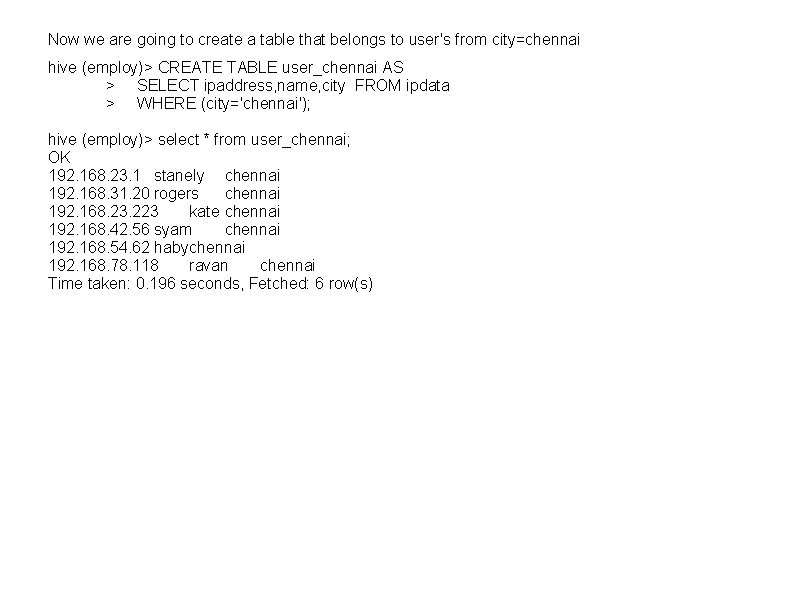

Now we are going to create a table that belongs to user's from city=chennai hive (employ)> CREATE TABLE user_chennai AS > SELECT ipaddress, name, city FROM ipdata > WHERE (city='chennai'); hive (employ)> select * from user_chennai; OK 192. 168. 23. 1 stanely chennai 192. 168. 31. 20 rogers chennai 192. 168. 23. 223 kate chennai 192. 168. 42. 56 syam chennai 192. 168. 54. 62 habychennai 192. 168. 78. 118 ravan chennai Time taken: 0. 196 seconds, Fetched: 6 row(s)

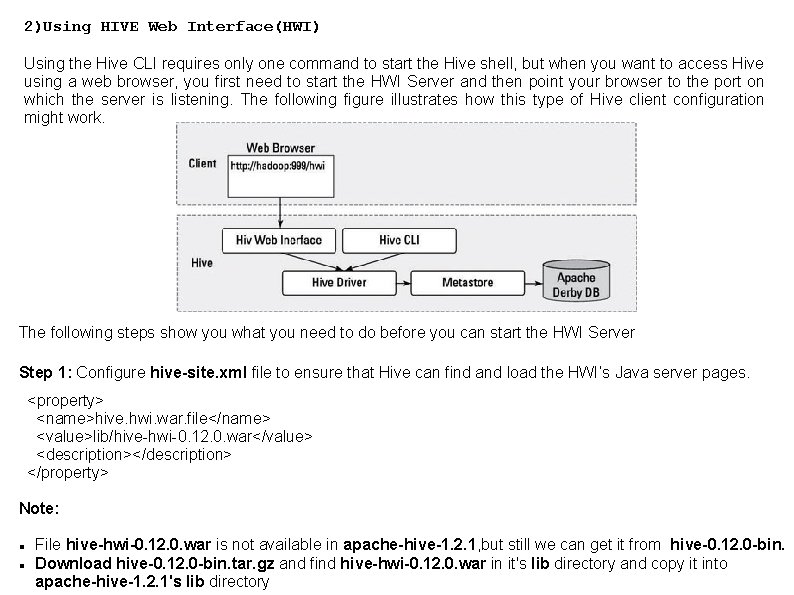

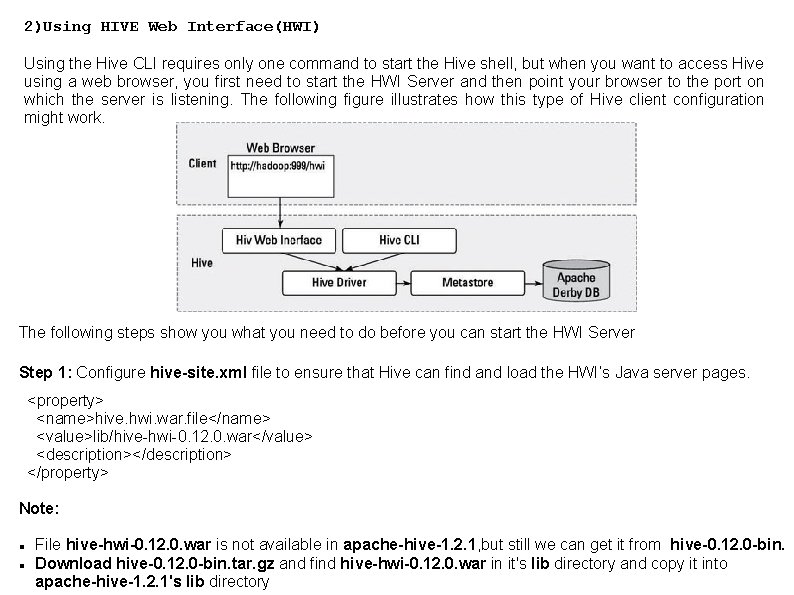

2)Using HIVE Web Interface(HWI) Using the Hive CLI requires only one command to start the Hive shell, but when you want to access Hive using a web browser, you first need to start the HWI Server and then point your browser to the port on which the server is listening. The following figure illustrates how this type of Hive client configuration might work. The following steps show you what you need to do before you can start the HWI Server Step 1: Configure hive-site. xml file to ensure that Hive can find and load the HWI’s Java server pages. <property> <name>hive. hwi. war. file</name> <value>lib/hive-hwi-0. 12. 0. war</value> <description></description> </property> Note: File hive-hwi-0. 12. 0. war is not available in apache-hive-1. 2. 1, but still we can get it from hive-0. 12. 0 -bin. Download hive-0. 12. 0 -bin. tar. gz and find hive-hwi-0. 12. 0. war in it's lib directory and copy it into apache-hive-1. 2. 1's lib directory

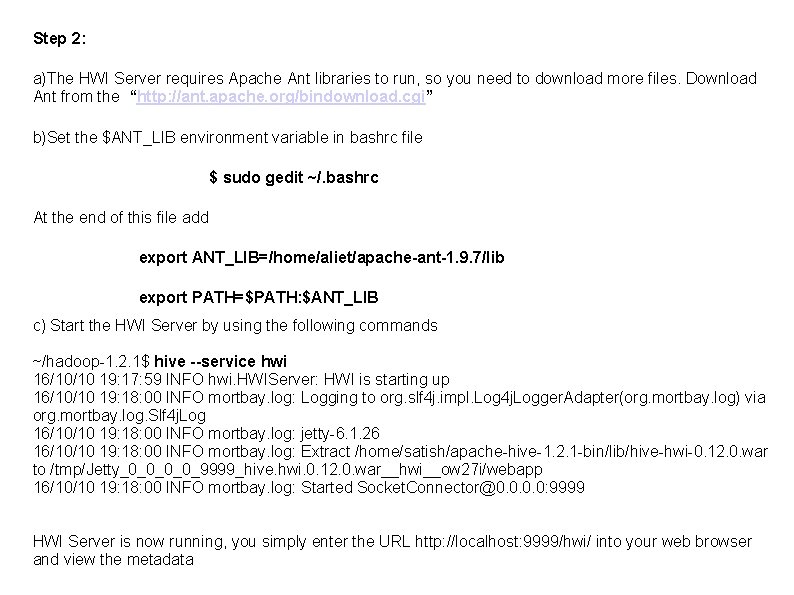

Step 2: a)The HWI Server requires Apache Ant libraries to run, so you need to download more files. Download Ant from the “http: //ant. apache. org/bindownload. cgi” b)Set the $ANT_LIB environment variable in bashrc file $ sudo gedit ~/. bashrc At the end of this file add export ANT_LIB=/home/aliet/apache-ant-1. 9. 7/lib export PATH=$PATH: $ANT_LIB c) Start the HWI Server by using the following commands ~/hadoop-1. 2. 1$ hive --service hwi 16/10/10 19: 17: 59 INFO hwi. HWIServer: HWI is starting up 16/10/10 19: 18: 00 INFO mortbay. log: Logging to org. slf 4 j. impl. Log 4 j. Logger. Adapter(org. mortbay. log) via org. mortbay. log. Slf 4 j. Log 16/10/10 19: 18: 00 INFO mortbay. log: jetty-6. 1. 26 16/10/10 19: 18: 00 INFO mortbay. log: Extract /home/satish/apache-hive-1. 2. 1 -bin/lib/hive-hwi-0. 12. 0. war to /tmp/Jetty_0_0_9999_hive. hwi. 0. 12. 0. war__hwi__ow 27 i/webapp 16/10/10 19: 18: 00 INFO mortbay. log: Started Socket. Connector@0. 0: 9999 HWI Server is now running, you simply enter the URL http: //localhost: 9999/hwi/ into your web browser and view the metadata

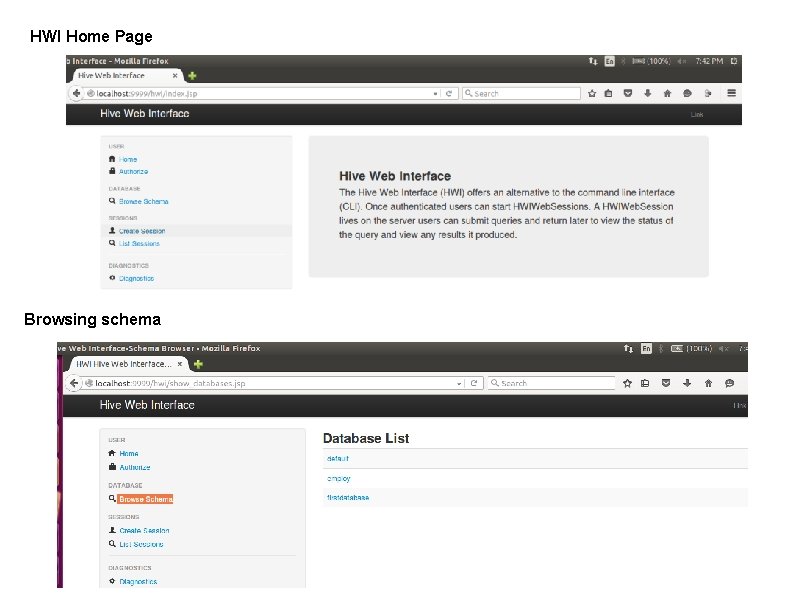

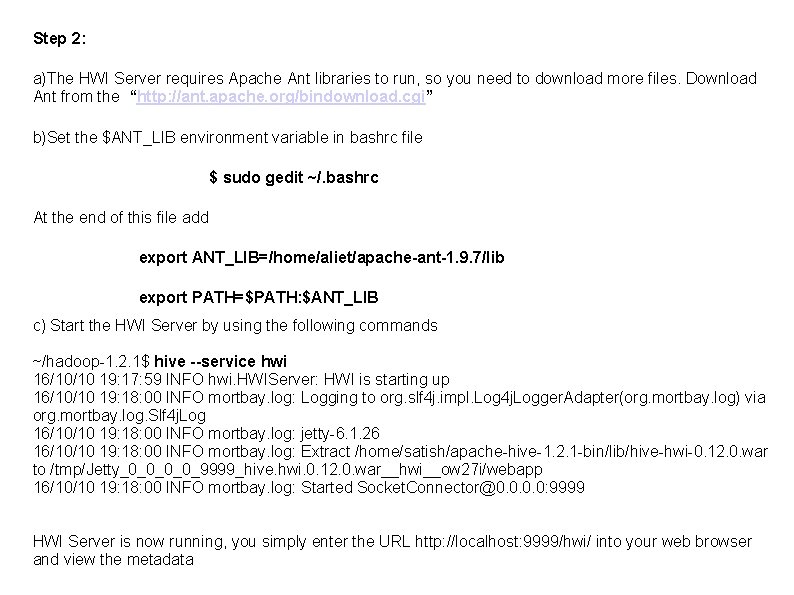

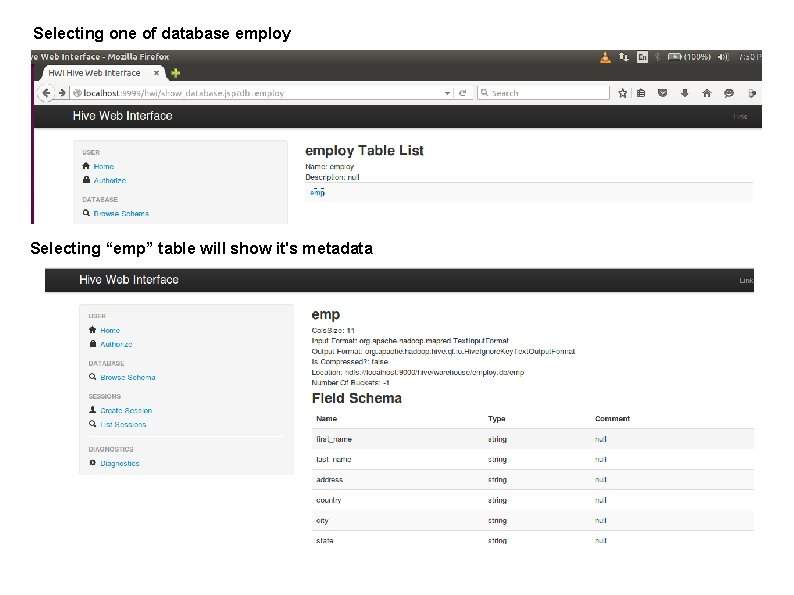

HWI Home Page Browsing schema

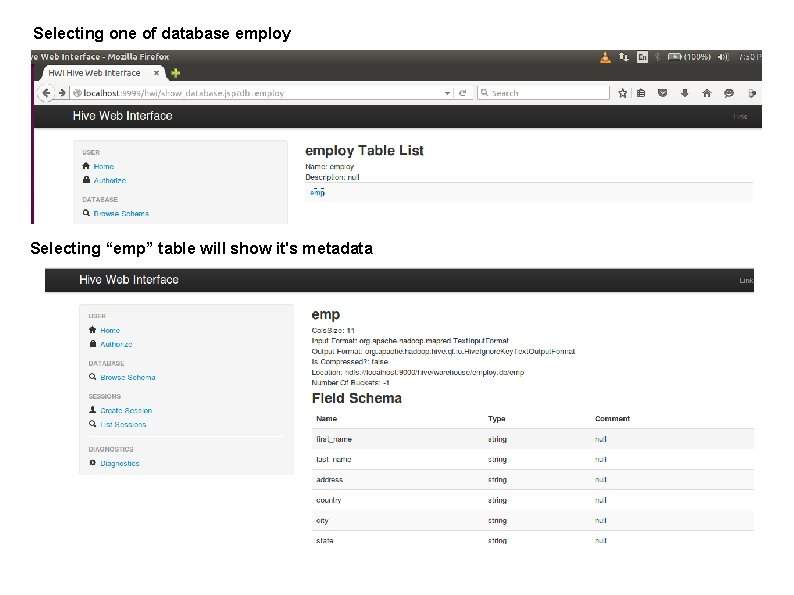

Selecting one of database employ Selecting “emp” table will show it's metadata

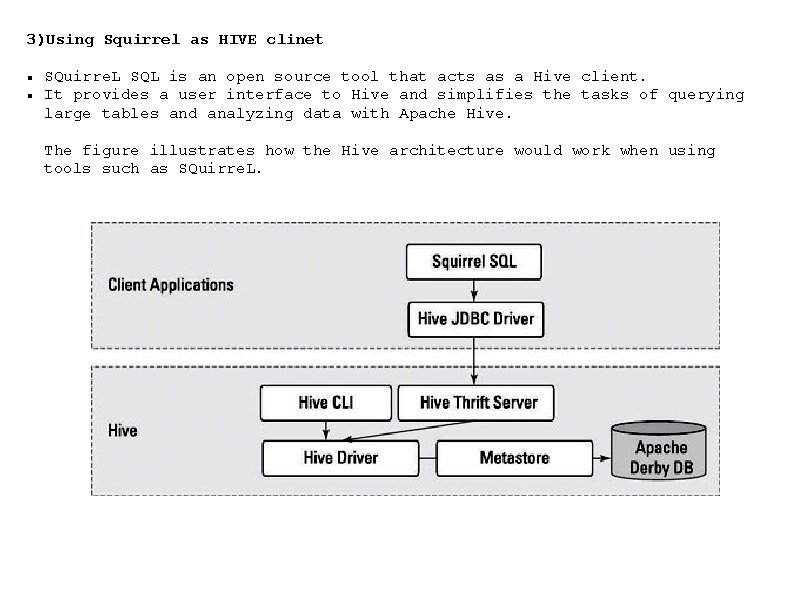

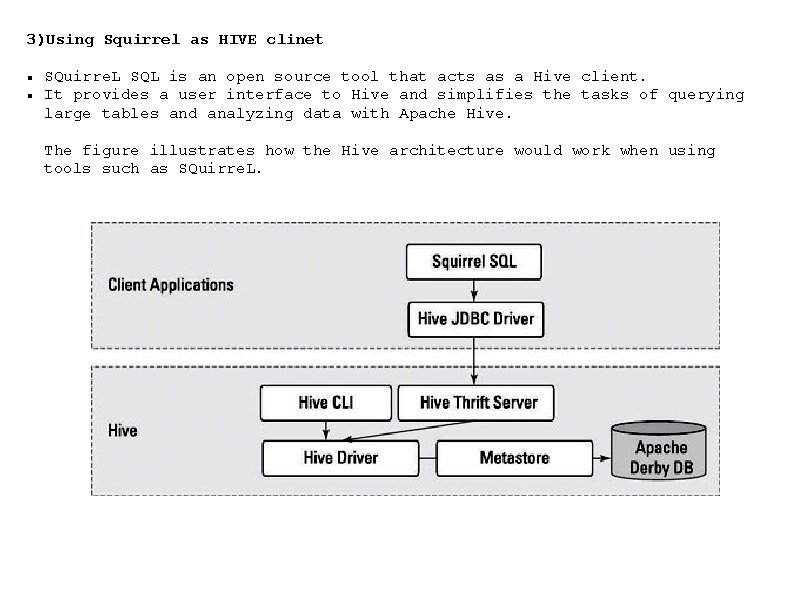

3)Using Squirrel as HIVE clinet SQuirre. L SQL is an open source tool that acts as a Hive client. It provides a user interface to Hive and simplifies the tasks of querying large tables and analyzing data with Apache Hive. The figure illustrates how the Hive architecture would work when using tools such as SQuirre. L.

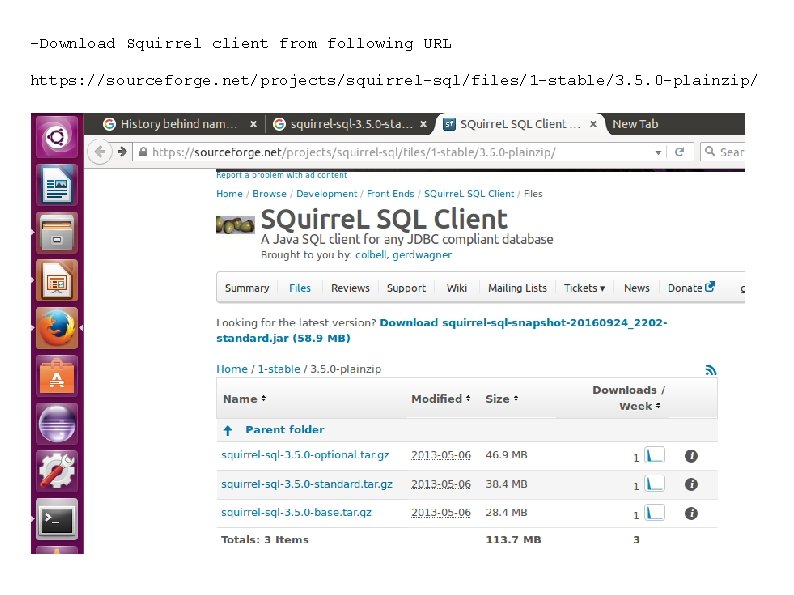

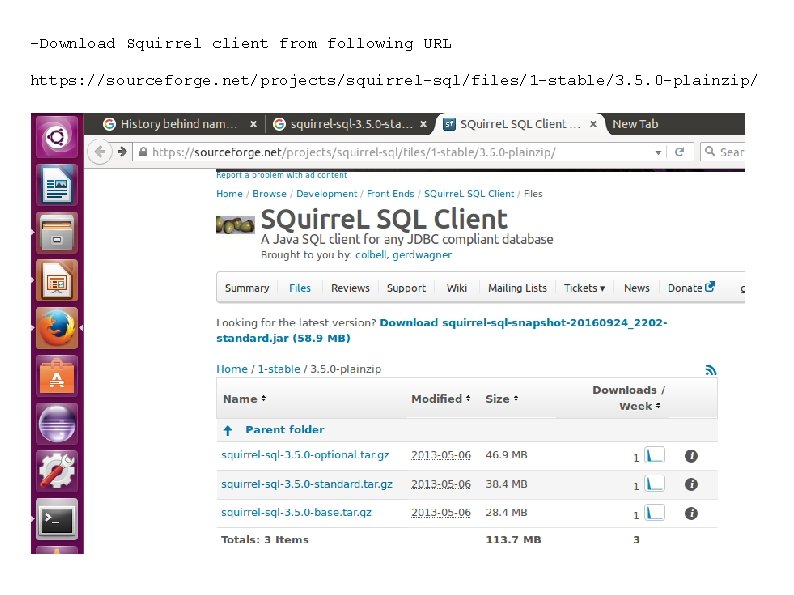

-Download Squirrel client from following URL https: //sourceforge. net/projects/squirrel-sql/files/1 -stable/3. 5. 0 -plainzip/

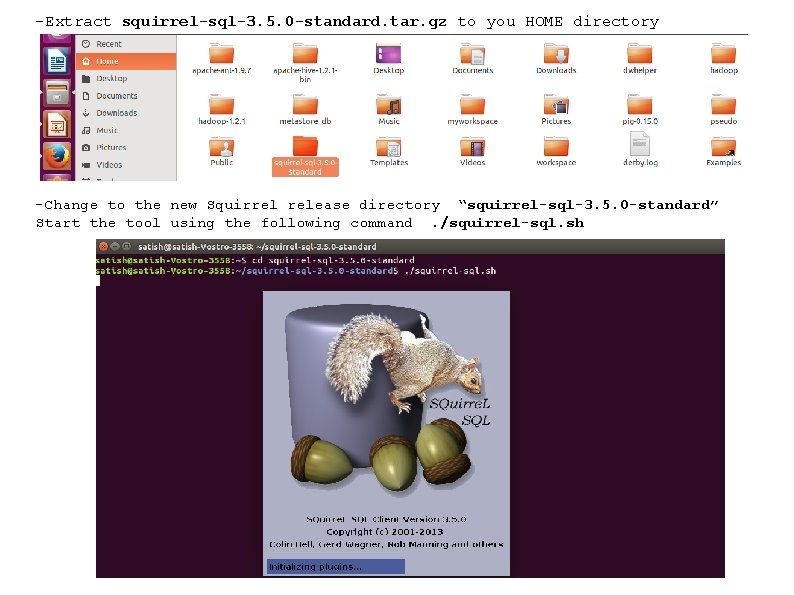

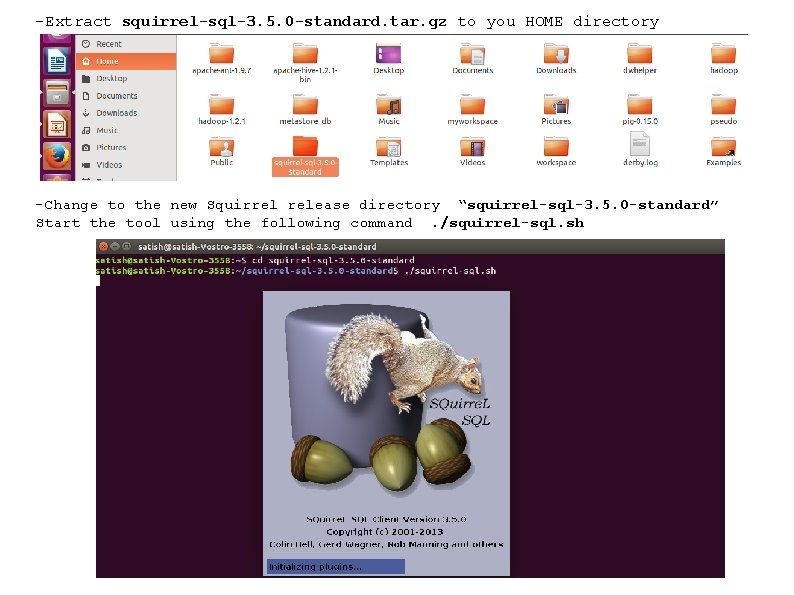

-Extract squirrel-sql-3. 5. 0 -standard. tar. gz to you HOME directory -Change to the new Squirrel release directory “squirrel-sql-3. 5. 0 -standard” Start the tool using the following command. /squirrel-sql. sh

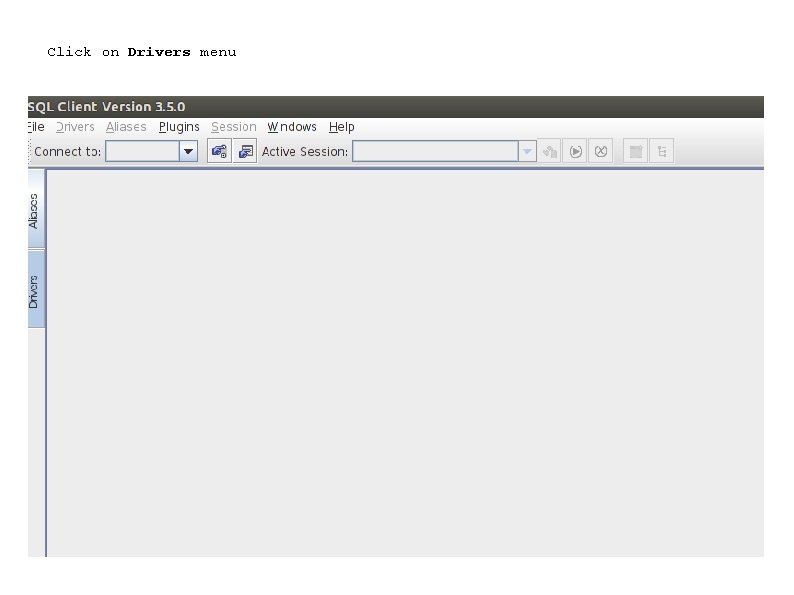

Click on Drivers menu

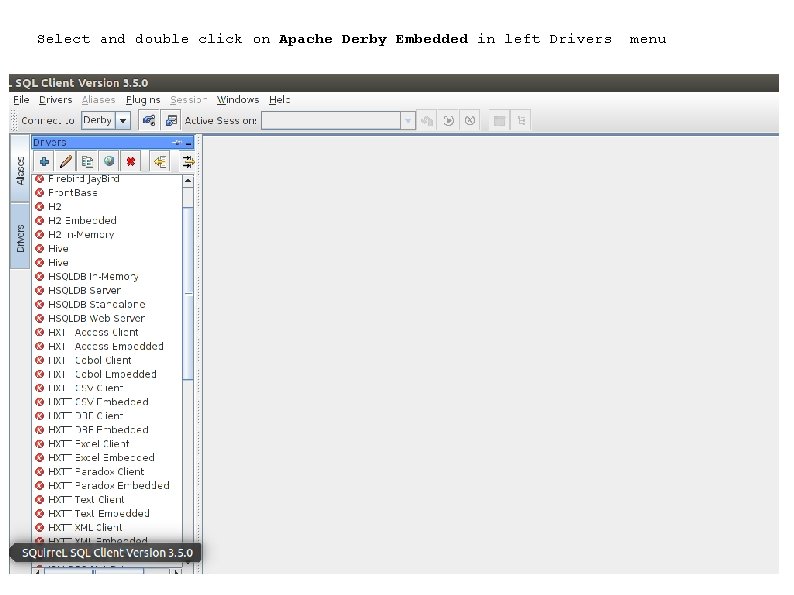

Select and double click on Apache Derby Embedded in left Drivers menu

Proivide necessary driver details and then click Extra Class Path

Add these two jar to CLASS PATH and then click OK hadoop-1. 2. 1/hadoop-core-1. 2. 1. jar apache-hive-1. 2. 1 -bin/lib/derby-10. 2. 0. jar

Green status message should appear in the message panel on the bottom of the main window indicating that driver was successfully registered

The next step is to create an Alias for the Derby database connection. Click on the “Alias” button and the drivers panel will be replaced with an “Aliases” panel. To add an alias click the blue “+” (plus sign) at the top of the Aliases panel and an “Add Alias” dialog will appear. Finally provide necessary details and then click ok

Now SQL client will try to connect to derby database

Now that we have connected to the database, click on the SQL tab to access the SQL script editor.

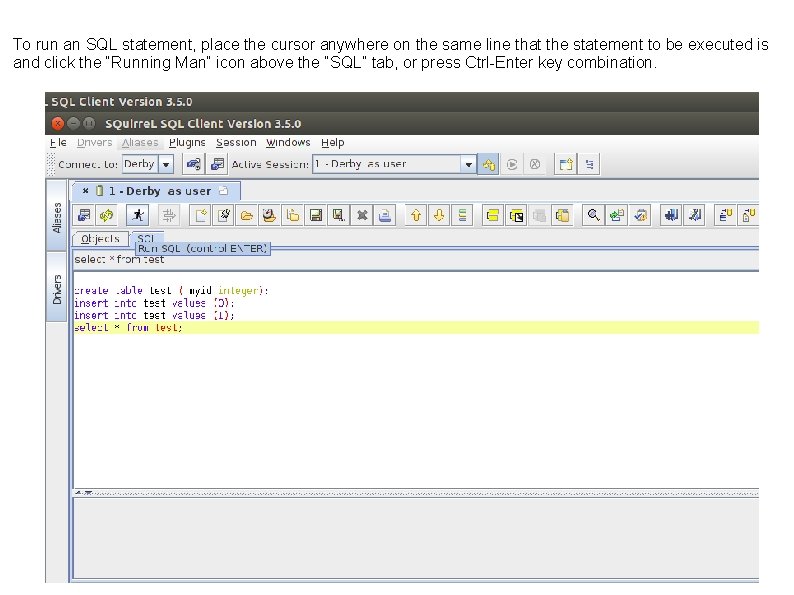

To run an SQL statement, place the cursor anywhere on the same line that the statement to be executed is and click the “Running Man” icon above the “SQL” tab, or press Ctrl-Enter key combination.

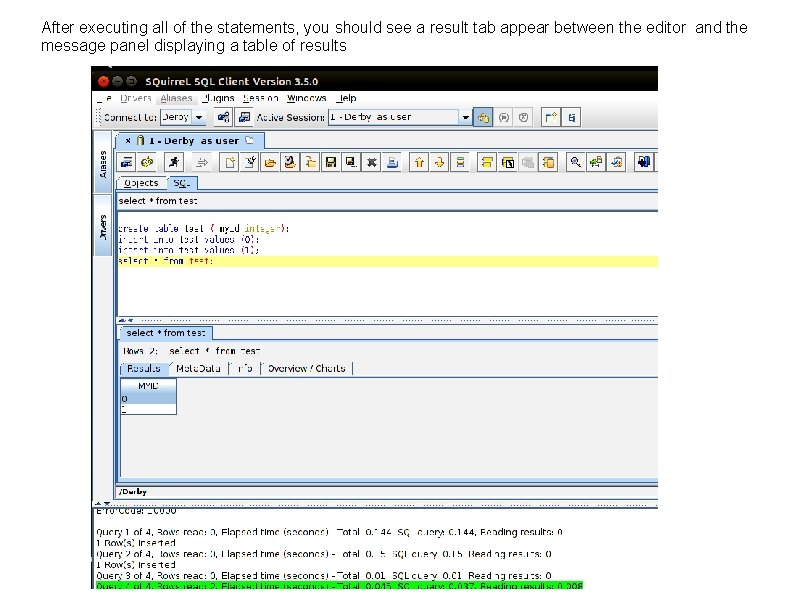

After executing all of the statements, you should see a result tab appear between the editor and the message panel displaying a table of results

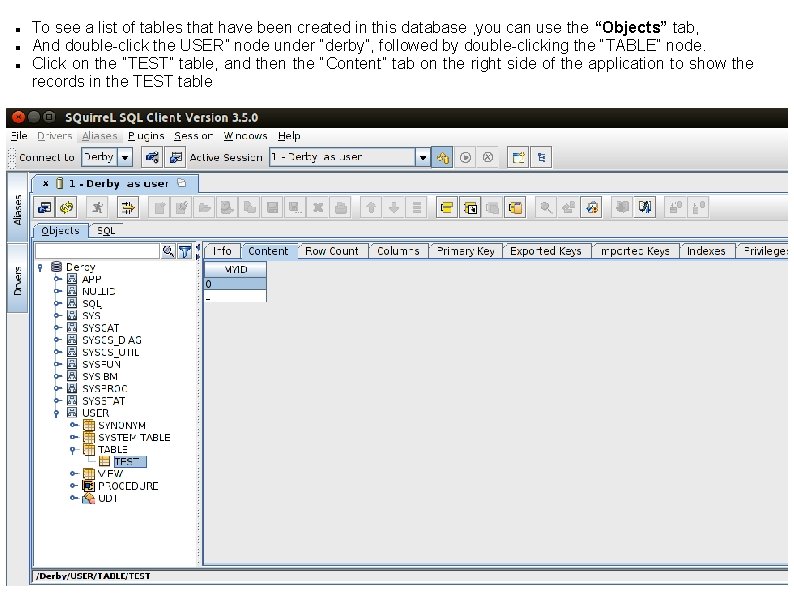

To see a list of tables that have been created in this database , you can use the “Objects” tab, And double-click the USER” node under “derby”, followed by double-clicking the “TABLE” node. Click on the “TEST” table, and then the “Content” tab on the right side of the application to show the records in the TEST table

References Hadoop for DUMMIES, Drik de. ROOS, Paul C. Zikopoulos a. Ramon B. Melnyk, Bruce Brown, Rafael Coss https: //cwiki. apache. org/confluence/display/Hive/Getting. Started http: //stackoverflow. com/questions/23315182/hive-0 -13 -0 -where-is-the-hive-hwi-war-file