
- Slides: 47

Apache Flink™: Counting elements in streams. Kostas Tzoumas (@kostas_tzoumas)

Introduction

Data streaming is becoming increasingly popular.*

\* Biggest understatement of 2016.

Streaming technology is enabling the obvious: continuous processing of data that is continuously produced.

Streaming is the next programming paradigm for data applications, and you need to start thinking in terms of streams.

Counting

Continuous counting
- A seemingly simple application, but generally an unsolved problem
- E.g., count visitors, impressions, interactions, clicks, etc.
- Aggregations and OLAP cube operations are generalizations of counting

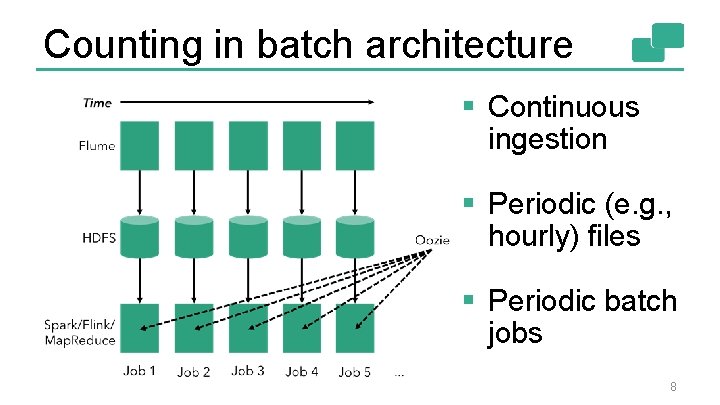

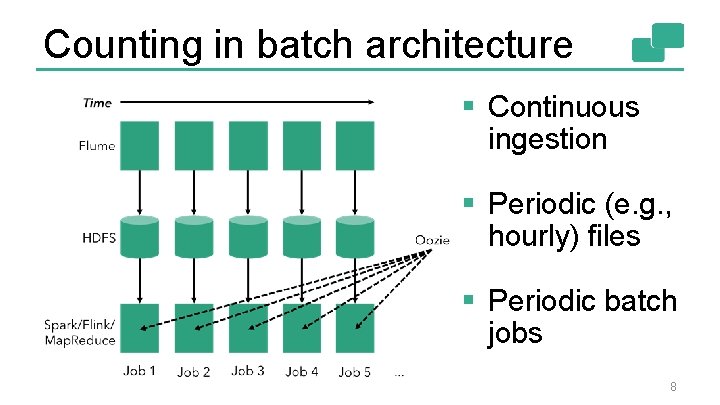

Counting in batch architecture
- Continuous ingestion
- Periodic (e.g., hourly) files
- Periodic batch jobs

Problems with batch architecture
- High latency
- Too many moving parts
- Implicit treatment of time
- Out-of-order event handling
- Implicit batch boundaries

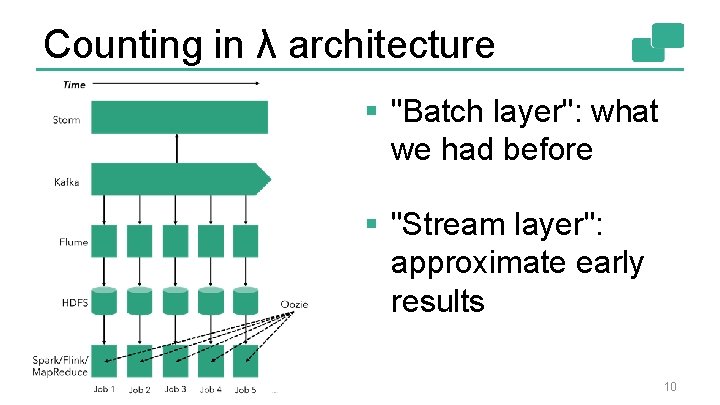

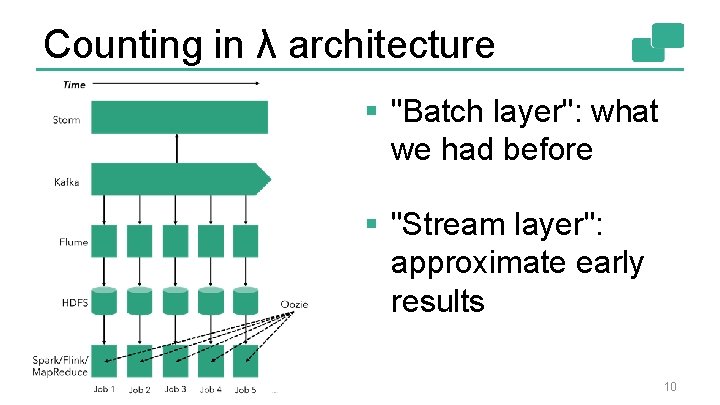

Counting in λ architecture
- "Batch layer": what we had before
- "Stream layer": approximate early results

Problems with batch and λ
- Way too many moving parts (and code duplication)
- Implicit treatment of time
- Out-of-order event handling
- Implicit batch boundaries

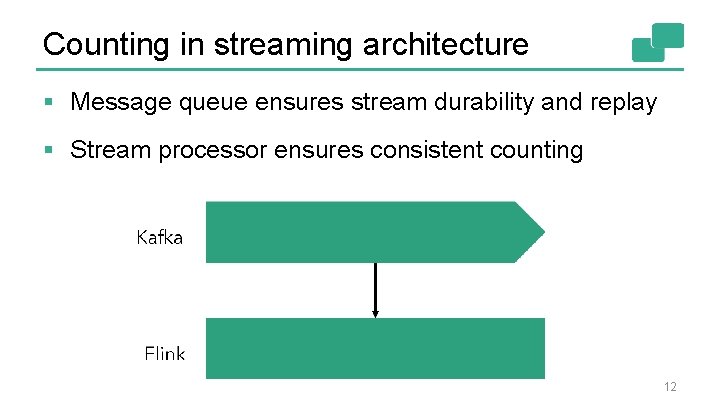

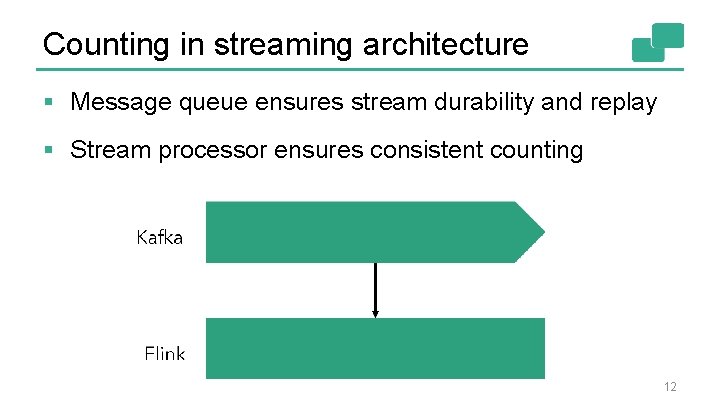

Counting in streaming architecture
- Message queue ensures stream durability and replay
- Stream processor ensures consistent counting

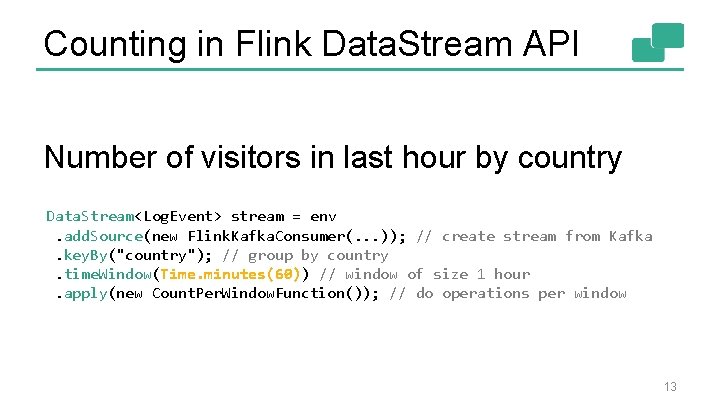

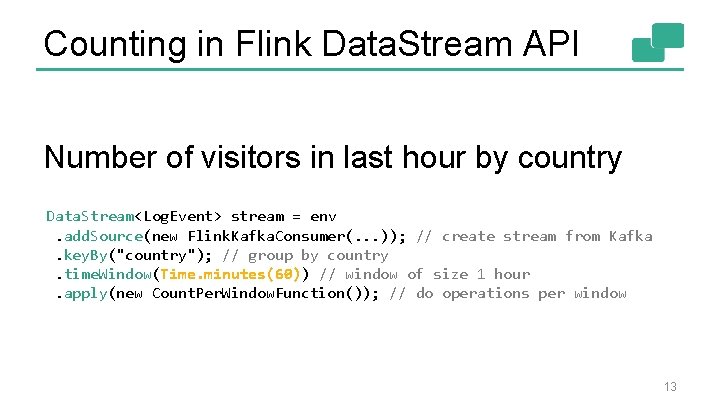

Counting in Flink DataStream API
Number of visitors in last hour by country

    DataStream<LogEvent> stream = env
        .addSource(new FlinkKafkaConsumer(...)) // create stream from Kafka
        .keyBy("country")                       // group by country
        .timeWindow(Time.minutes(60))           // window of size 1 hour
        .apply(new CountPerWindowFunction());   // do operations per window

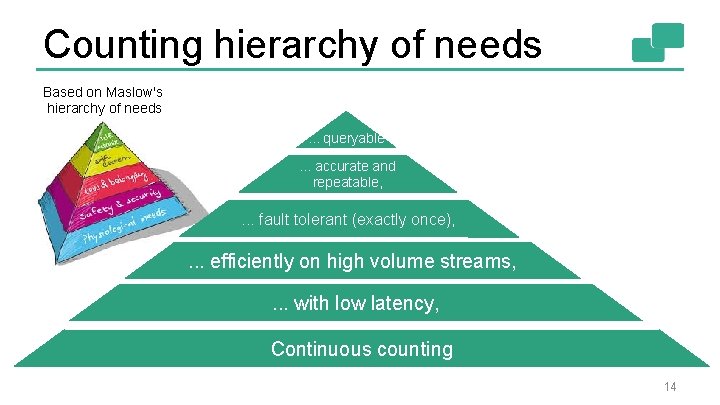

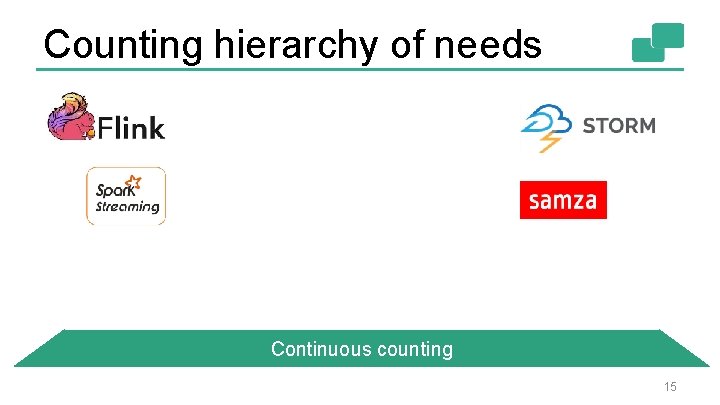

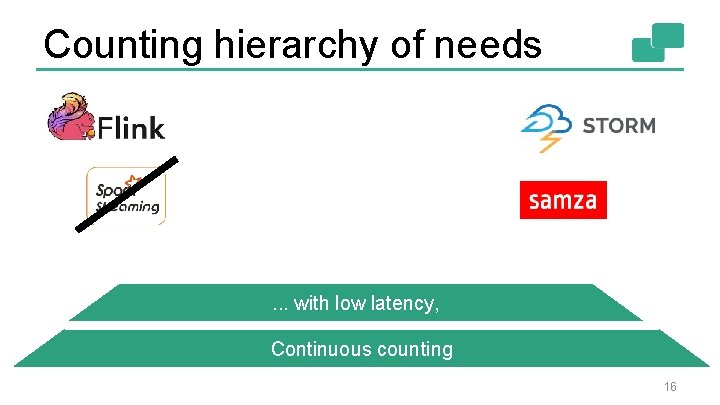

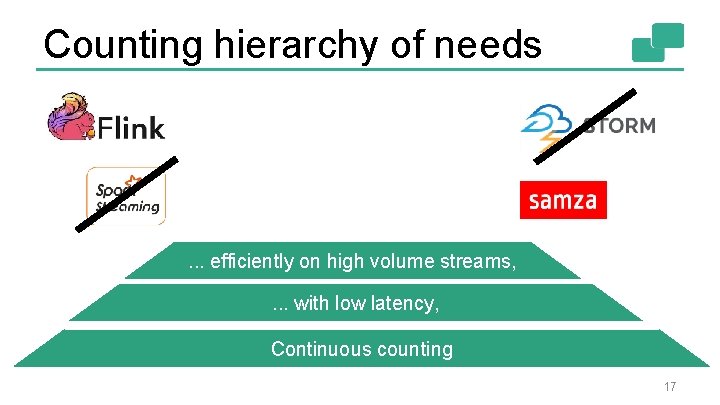

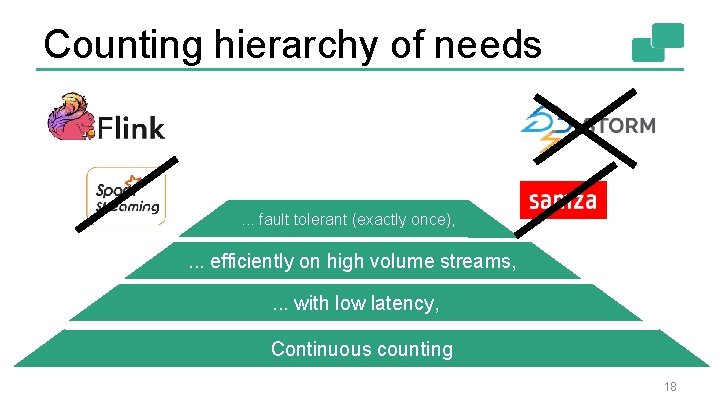

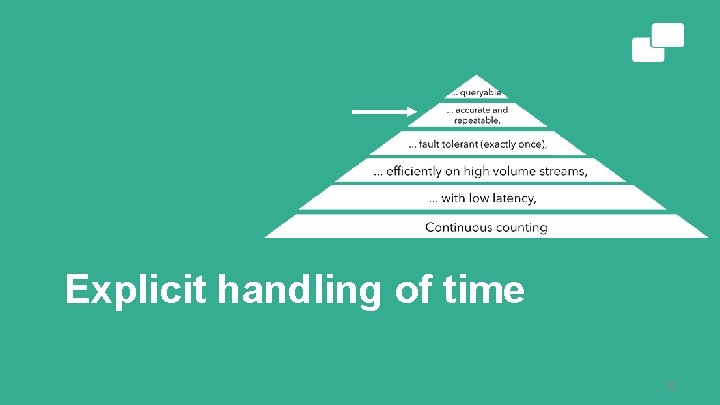

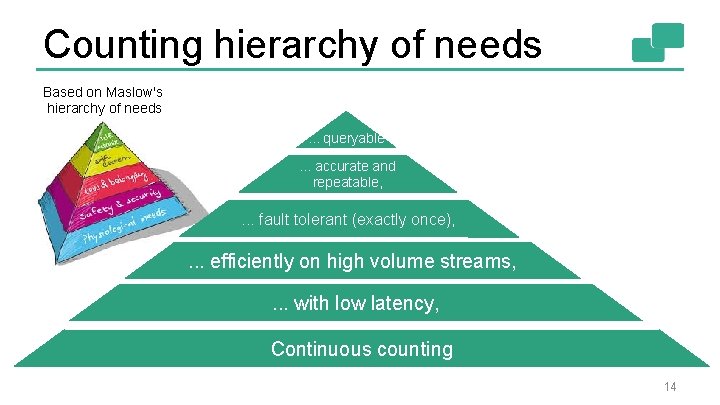

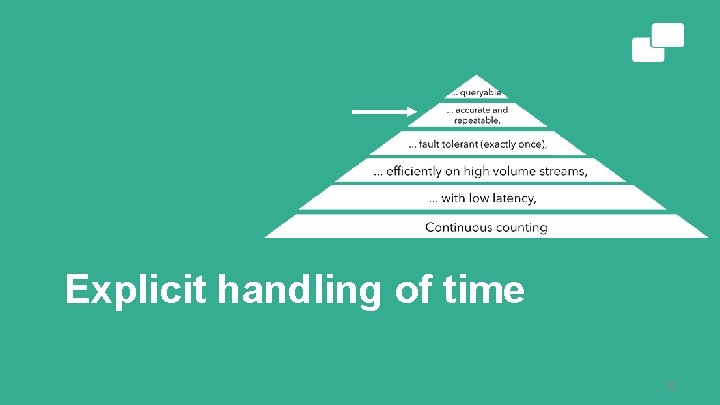

Counting hierarchy of needs (based on Maslow's hierarchy of needs), from base to top:
- Continuous counting
- ... with low latency,
- ... efficiently on high-volume streams,
- ... fault tolerant (exactly once),
- ... accurate and repeatable,
- ... queryable

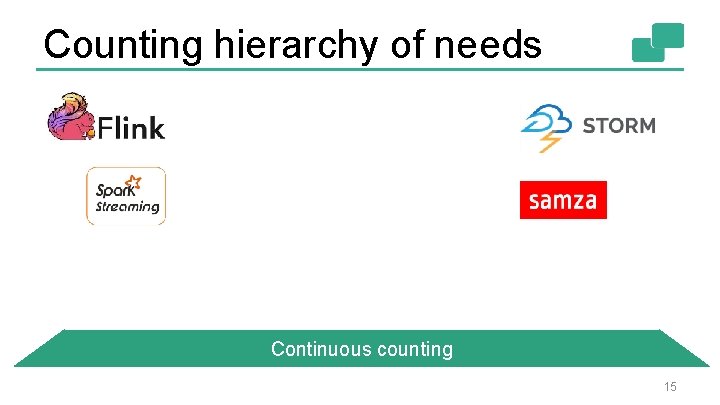

Counting hierarchy of needs: continuous counting

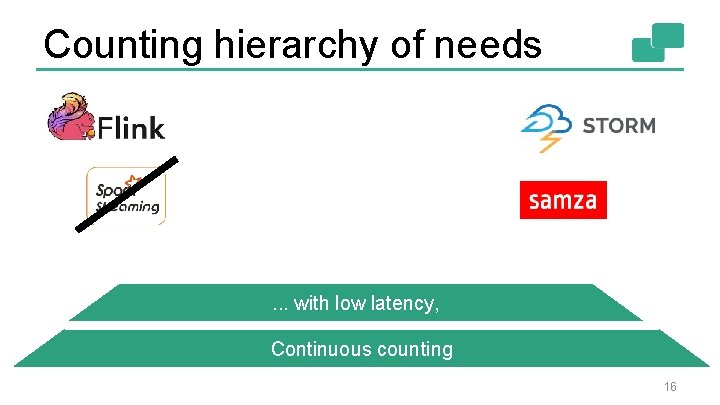

Counting hierarchy of needs: continuous counting, with low latency

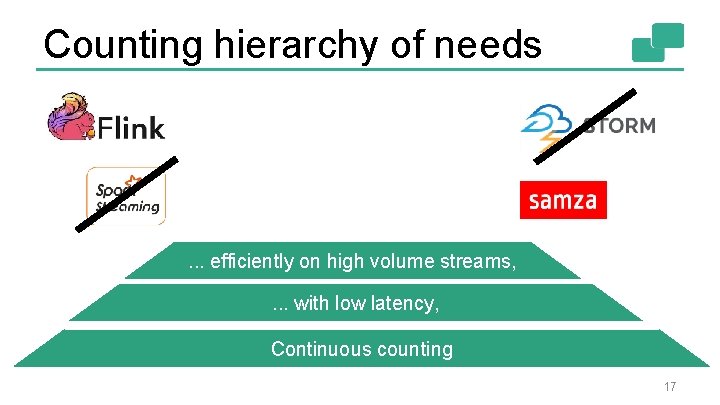

Counting hierarchy of needs: continuous counting, with low latency, efficiently on high-volume streams

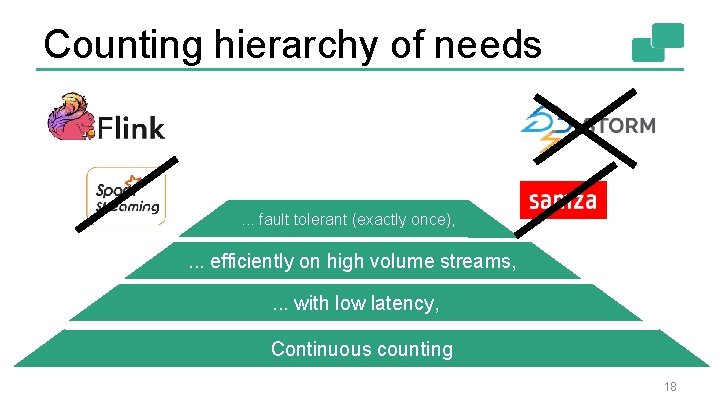

Counting hierarchy of needs: continuous counting, with low latency, efficiently on high-volume streams, fault tolerant (exactly once)

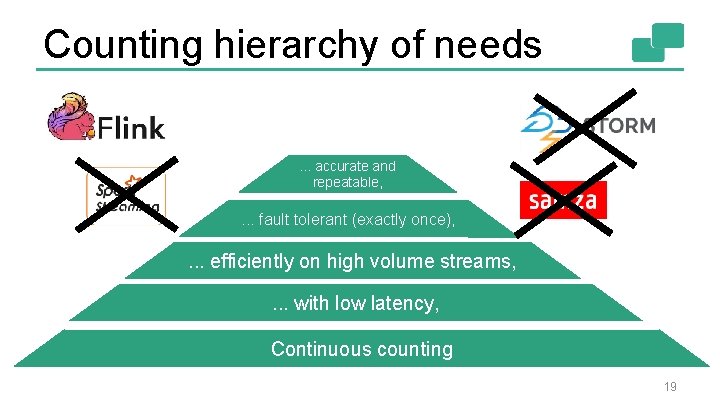

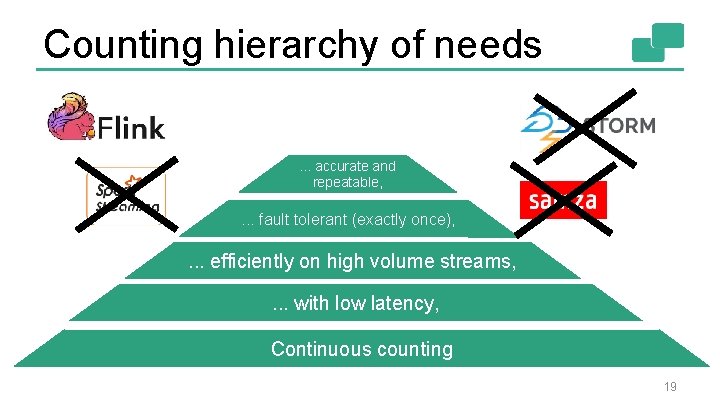

Counting hierarchy of needs: continuous counting, with low latency, efficiently on high-volume streams, fault tolerant (exactly once), accurate and repeatable

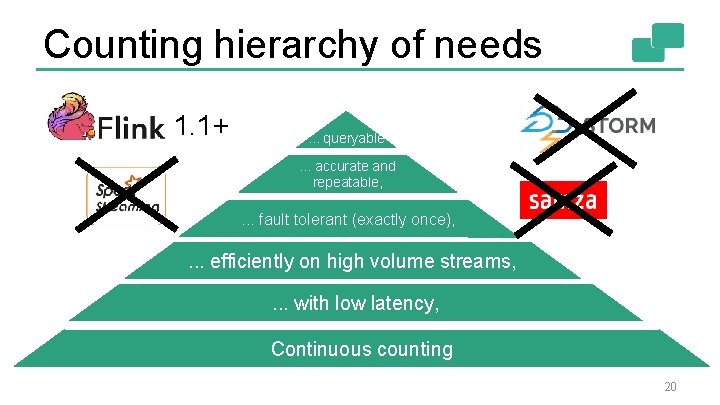

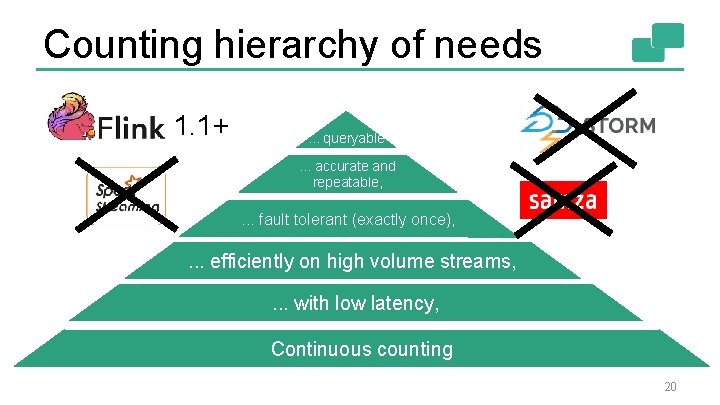

Counting hierarchy of needs: continuous counting, with low latency, efficiently on high-volume streams, fault tolerant (exactly once), accurate and repeatable, queryable (1.1+)

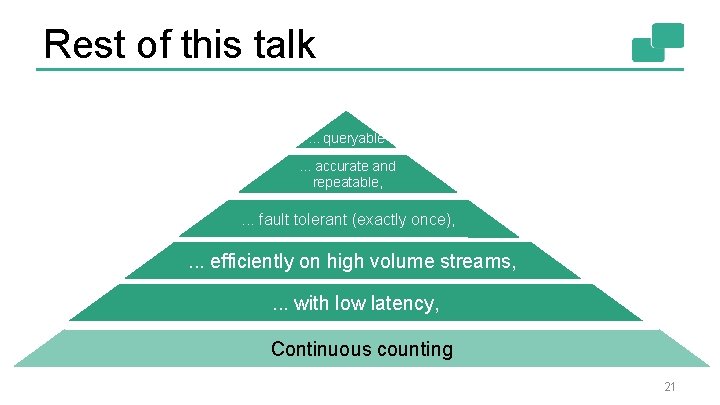

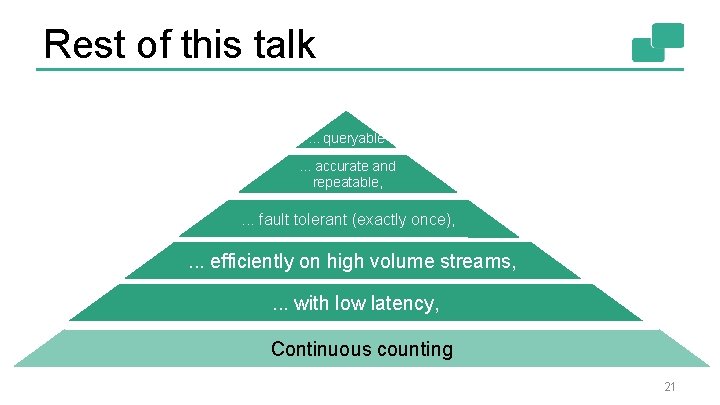

Rest of this talk: continuous counting, with low latency, efficiently on high-volume streams, fault tolerant (exactly once), accurate and repeatable, queryable

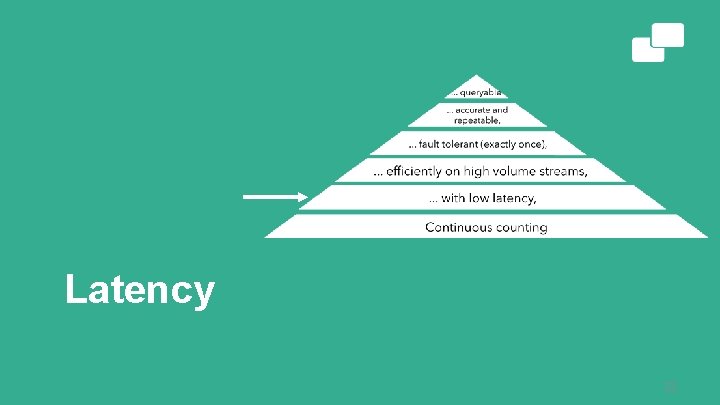

Latency

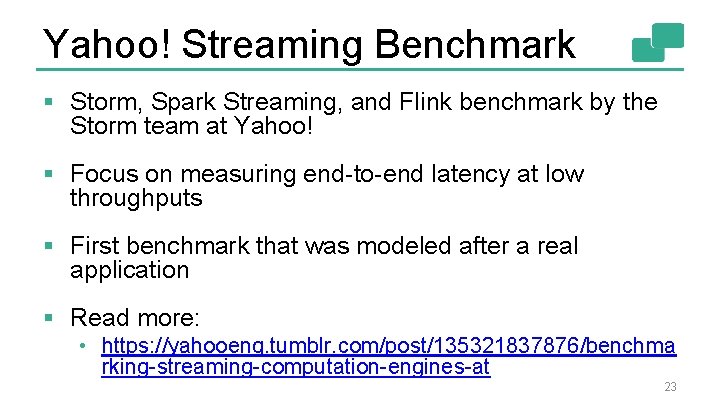

Yahoo! Streaming Benchmark
- Storm, Spark Streaming, and Flink benchmark by the Storm team at Yahoo!
- Focus on measuring end-to-end latency at low throughputs
- First benchmark that was modeled after a real application
- Read more: https://yahooeng.tumblr.com/post/135321837876/benchmarking-streaming-computation-engines-at

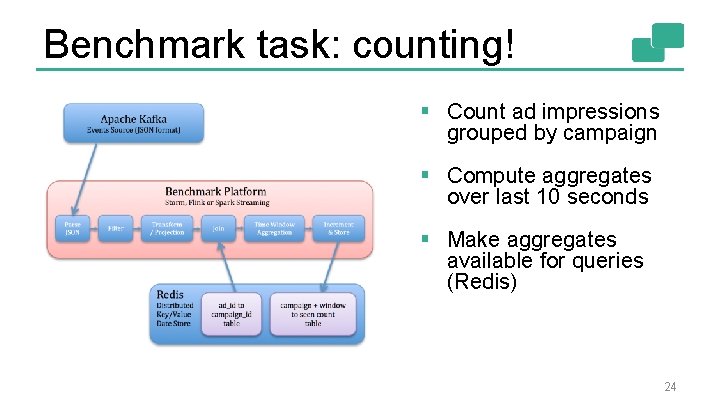

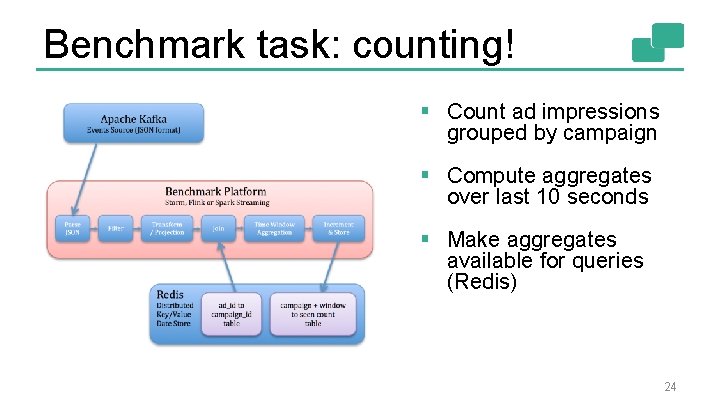

Benchmark task: counting!
- Count ad impressions grouped by campaign
- Compute aggregates over last 10 seconds
- Make aggregates available for queries (Redis)
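The benchmark task above can be sketched in plain Java, without any streaming engine. This is a minimal illustration only: it assumes tumbling (non-overlapping) 10-second windows, non-negative millisecond timestamps, and an in-memory map standing in for the query store. The class and method names (`CampaignWindowCounter`, `record`, `count`) are hypothetical and are not taken from the benchmark code.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: counts ad impressions per campaign in 10-second tumbling windows.
final class CampaignWindowCounter {
    static final long WINDOW_MILLIS = 10_000L;

    // window start (ms) -> (campaign -> impression count)
    private final Map<Long, Map<String, Long>> counts = new TreeMap<>();

    // Assigns the impression to the window containing its timestamp and increments the count.
    void record(String campaign, long timestampMillis) {
        long windowStart = timestampMillis - (timestampMillis % WINDOW_MILLIS);
        counts.computeIfAbsent(windowStart, w -> new TreeMap<>())
              .merge(campaign, 1L, Long::sum);
    }

    // Stands in for "make aggregates available for queries"; returns 0 for unknown keys.
    long count(long windowStart, String campaign) {
        Map<String, Long> perCampaign = counts.get(windowStart);
        return perCampaign == null ? 0L : perCampaign.getOrDefault(campaign, 0L);
    }
}
```

For example, impressions for campaign "a" at 1,000 ms and 9,999 ms fall into the window starting at 0, while one at 10,000 ms opens the next window.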

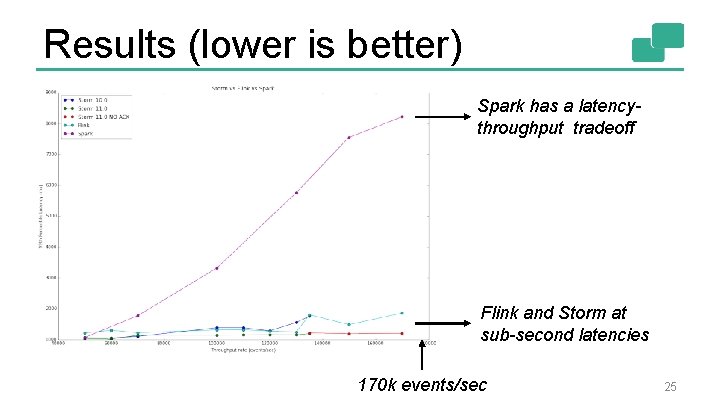

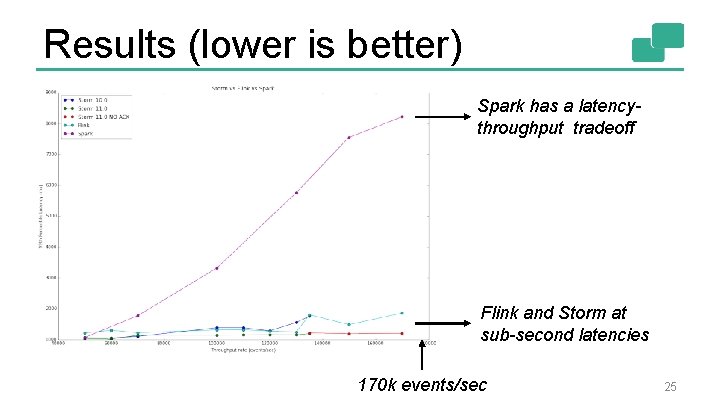

Results (lower is better)
- Spark has a latency-throughput tradeoff
- Flink and Storm at sub-second latencies
- Chart label: 170k events/sec

Efficiency and scalability

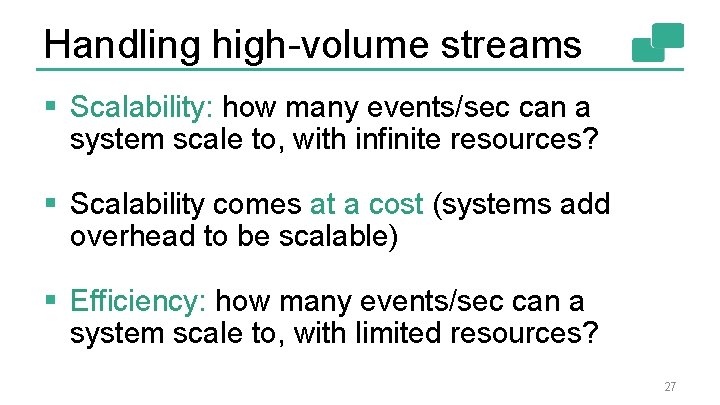

Handling high-volume streams
- Scalability: how many events/sec can a system scale to, with infinite resources?
- Scalability comes at a cost (systems add overhead to be scalable)
- Efficiency: how many events/sec can a system scale to, with limited resources?

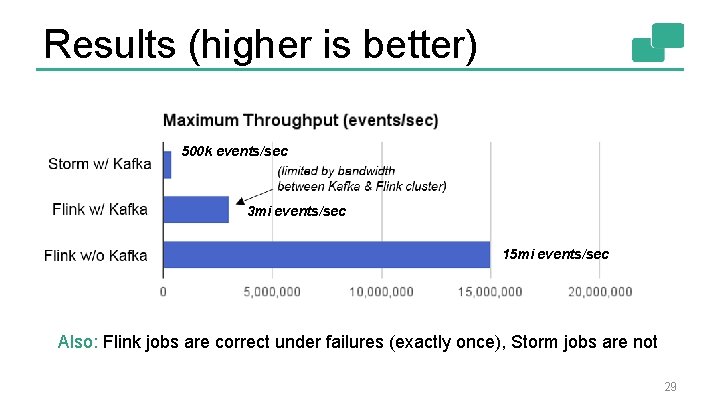

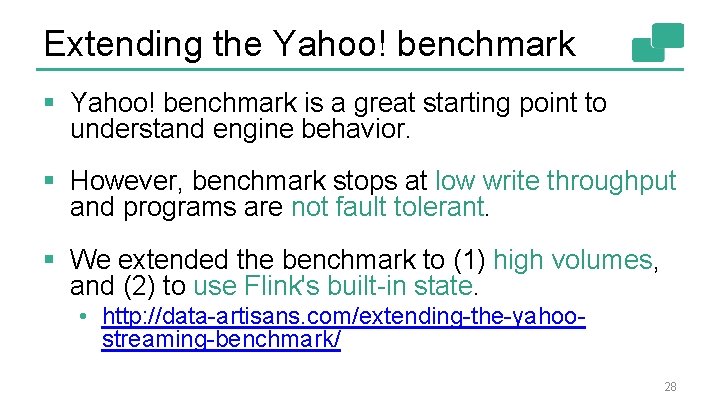

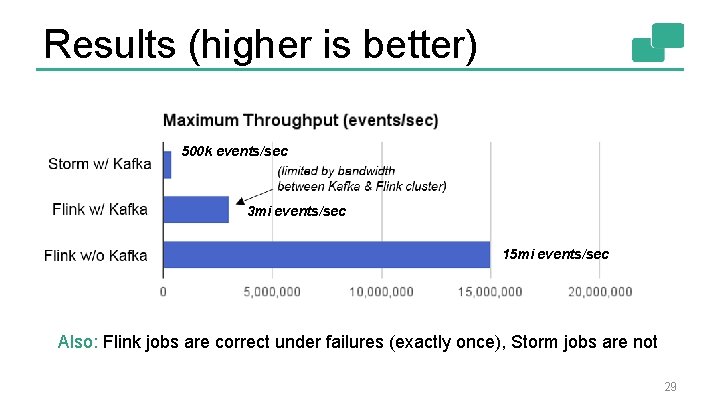

Extending the Yahoo! benchmark
- The Yahoo! benchmark is a great starting point to understand engine behavior.
- However, the benchmark stops at low write throughput, and the programs are not fault tolerant.
- We extended the benchmark to (1) high volumes, and (2) use Flink's built-in state.
  - http://data-artisans.com/extending-the-yahoo-streaming-benchmark/

Results (higher is better)
- Chart labels: 500k events/sec, 3 million events/sec, 15 million events/sec
- Also: Flink jobs are correct under failures (exactly once), Storm jobs are not

Fault tolerance and repeatability

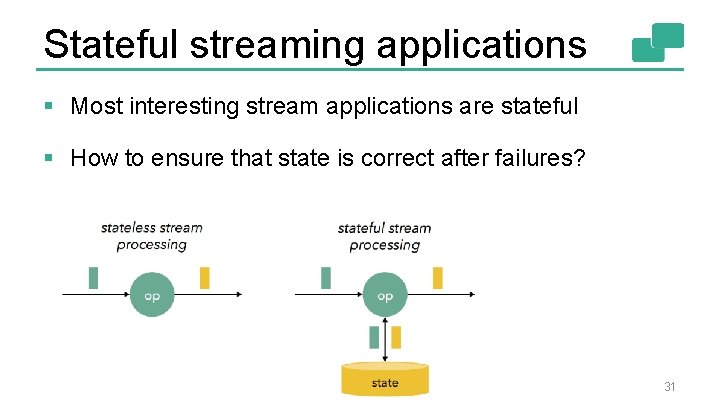

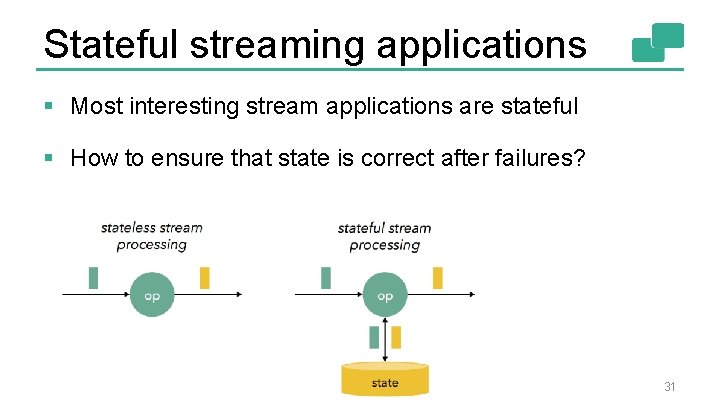

Stateful streaming applications
- Most interesting stream applications are stateful
- How to ensure that state is correct after failures?

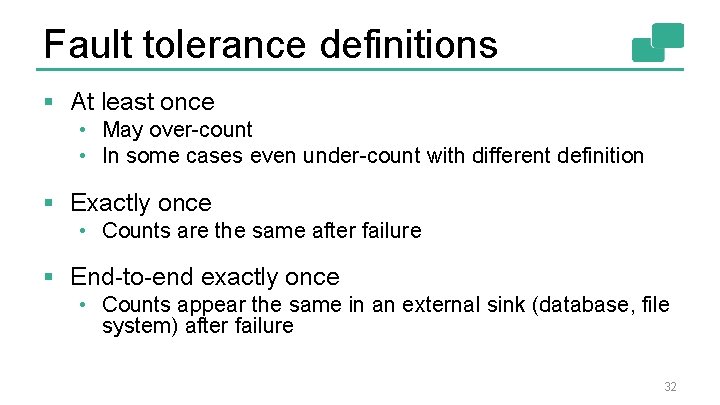

Fault tolerance definitions
- At least once
  - May over-count
  - In some cases even under-count with a different definition
- Exactly once
  - Counts are the same after failure
- End-to-end exactly once
  - Counts appear the same in an external sink (database, file system) after failure
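The difference between the first two definitions can be shown with a toy model. This sketch is not Flink's checkpointing mechanism: it assumes a single counter, a replayable source, one simulated failure, and one snapshot taken before that failure. All names (`ReplayCountDemo`, `countWithFailure`, and the parameters) are hypothetical.

```java
// Toy model of counting with one failure and a replayable source (illustration only).
final class ReplayCountDemo {
    /**
     * Counts totalEvents events, with a simulated failure after failAt events.
     * The last snapshot was taken after snapshotAt events, and on recovery the
     * source replays everything from that position.
     *
     * restoreCount = true  : the count is reset to the snapshot value together
     *                        with the source position (exactly once).
     * restoreCount = false : the count keeps its pre-failure value while events
     *                        are replayed (at least once, may over-count).
     */
    static long countWithFailure(int totalEvents, int snapshotAt, int failAt, boolean restoreCount) {
        if (snapshotAt < 0 || snapshotAt > failAt || failAt > totalEvents) {
            throw new IllegalArgumentException("need 0 <= snapshotAt <= failAt <= totalEvents");
        }
        long count = 0;
        long snapshotCount = 0;
        // First attempt: process events until the simulated failure.
        for (int i = 0; i < failAt; i++) {
            count++;
            if (i + 1 == snapshotAt) {
                snapshotCount = count; // state captured at the snapshot position
            }
        }
        // Recovery: optionally roll the state back to the snapshot.
        if (restoreCount) {
            count = snapshotCount;
        }
        // The source replays from the snapshot position to the end of the stream.
        for (int i = snapshotAt; i < totalEvents; i++) {
            count++;
        }
        return count;
    }
}
```

With 10 events, a snapshot after 4 and a failure after 7, the at-least-once variant counts the three events between snapshot and failure twice, while the variant that restores state and source position together counts each event once.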

Fault tolerance in Flink
- Flink guarantees exactly once
- End-to-end exactly once supported with specific sources and sinks
  - E.g., Kafka → Flink → HDFS
- Internally, Flink periodically takes consistent snapshots of the state without ever stopping the computation

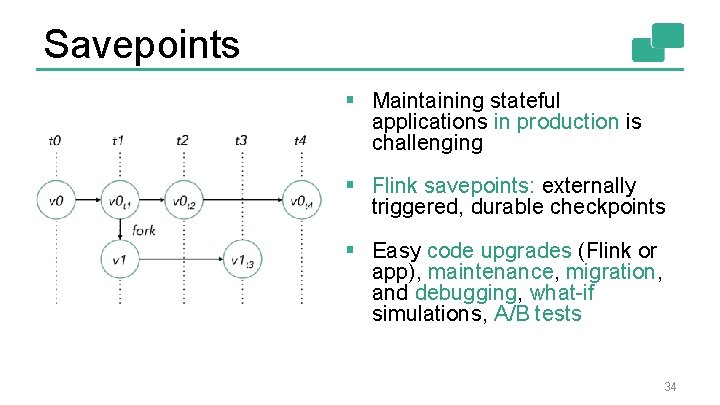

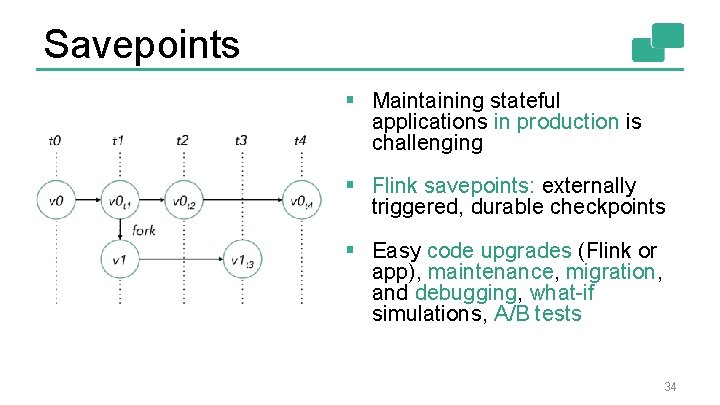

Savepoints
- Maintaining stateful applications in production is challenging
- Flink savepoints: externally triggered, durable checkpoints
- Easy code upgrades (Flink or app), maintenance, migration, debugging, what-if simulations, and A/B tests

Explicit handling of time

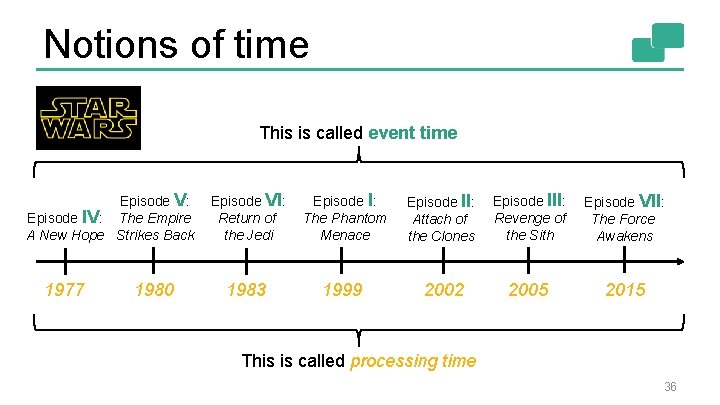

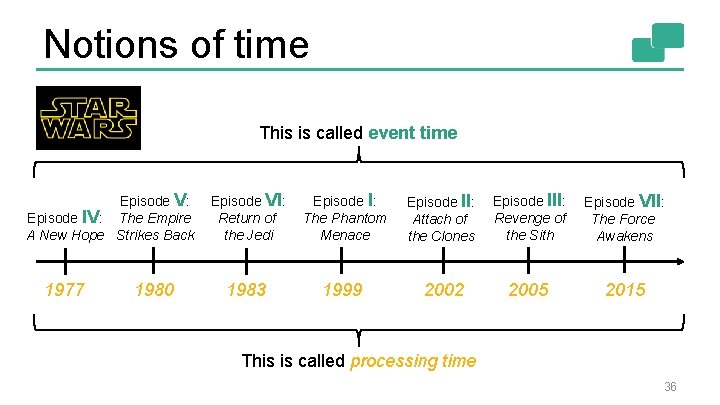

Notions of time
- 1977: Episode IV: A New Hope
- 1980: Episode V: The Empire Strikes Back
- 1983: Episode VI: Return of the Jedi
- 1999: Episode I: The Phantom Menace
- 2002: Episode II: Attack of the Clones
- 2005: Episode III: Revenge of the Sith
- 2015: Episode VII: The Force Awakens
- The episode numbers: this is called event time
- The release years: this is called processing time

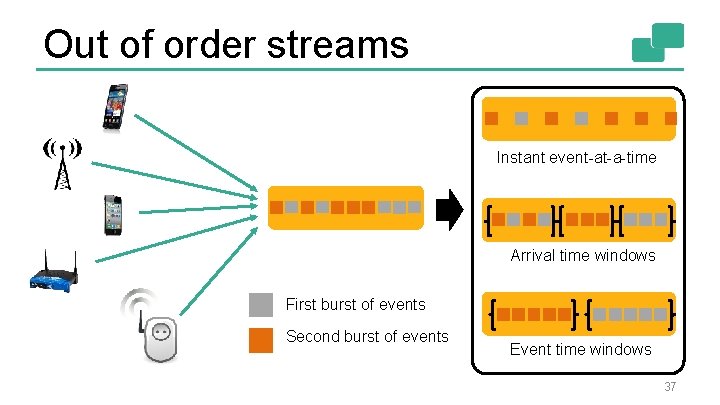

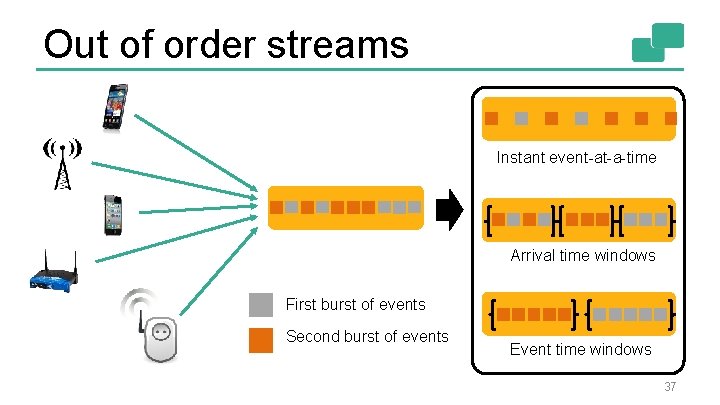

Out of order streams

[Figure: a first burst and a second burst of events, processed in three ways: instant event-at-a-time, arrival time windows, and event time windows]

Why event time
- Most stream processors are limited to processing/arrival time; Flink can operate on event time as well
- Benefits of event time
  - Accurate results for out-of-order data
  - Sessions and unaligned windows
  - Time travel (backstreaming)
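The first benefit, accurate results for out-of-order data, can be illustrated with a small plain-Java sketch. It assumes each event carries both the time it happened and the time it arrived, and it simply groups by one or the other. Watermarks, lateness handling, and window triggering are deliberately left out. The names `TimeNotionDemo`, `Event`, and `countPerWindow` are hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

// Illustration only: the same events counted by event time and by arrival time.
final class TimeNotionDemo {
    // Hypothetical event: when it happened versus when the processor saw it.
    static final class Event {
        final long eventTimeMillis;
        final long arrivalTimeMillis;

        Event(long eventTimeMillis, long arrivalTimeMillis) {
            this.eventTimeMillis = eventTimeMillis;
            this.arrivalTimeMillis = arrivalTimeMillis;
        }
    }

    // Returns window start (ms) -> number of events, using the chosen notion of time.
    static Map<Long, Long> countPerWindow(Event[] events, long windowMillis, boolean useEventTime) {
        Map<Long, Long> counts = new TreeMap<>();
        for (Event e : events) {
            long t = useEventTime ? e.eventTimeMillis : e.arrivalTimeMillis;
            long windowStart = t - (t % windowMillis);
            counts.merge(windowStart, 1L, Long::sum);
        }
        return counts;
    }
}
```

If an event that happened at 9 s only arrives at 12 s, arrival-time windows of 10 s attribute it to the second window, whereas event-time windows keep it in the first window, where it belongs.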

What's coming up in Flink

Evolution of streaming in Flink
- Flink 0.9 (Jun 2015): DataStream API in beta, exactly-once guarantees via checkpointing
- Flink 0.10 (Nov 2015): Event time support, windowing mechanism based on Dataflow/Beam model, graduated DataStream API, high availability, state interface, new/updated connectors (Kafka, NiFi, Elastic, ...), improved monitoring
- Flink 1.0 (Mar 2016): DataStream API stability, out-of-core state, savepoints, CEP library, improved monitoring, Kafka 0.9 support

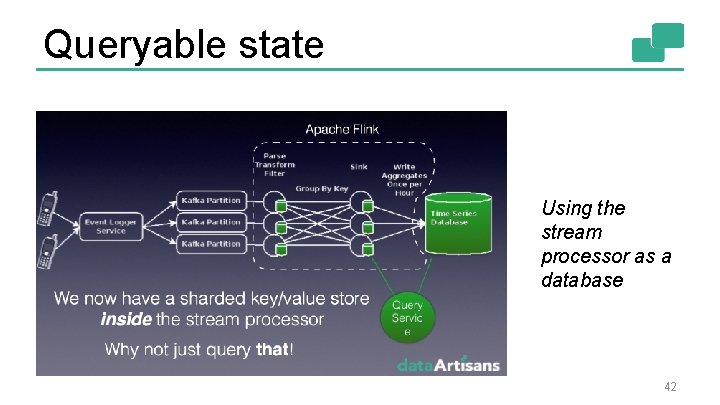

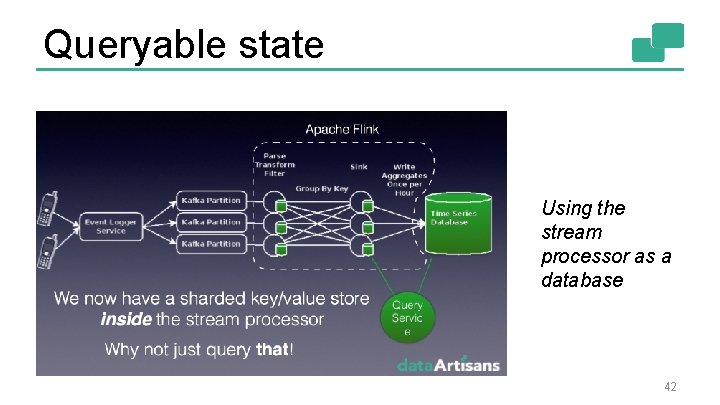

Upcoming features
- SQL: ongoing work in collaboration with Apache Calcite
- Dynamic scaling: adapt resources to stream volume, historical stream processing
- Queryable state: ability to query the state inside the stream processor
- Mesos support
- More sources and sinks (e.g., Kinesis, Cassandra)

Queryable state: using the stream processor as a database

Closing

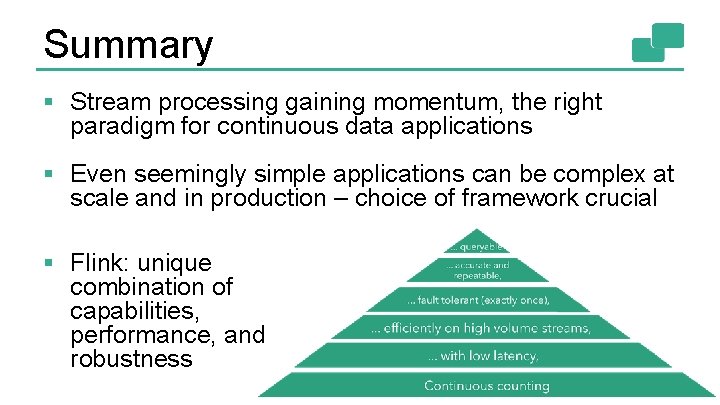

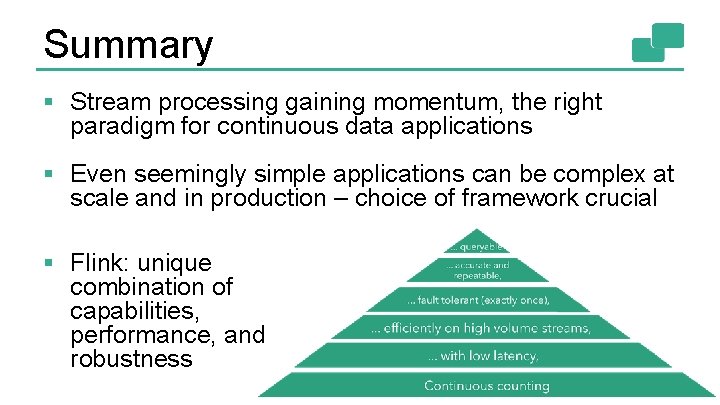

Summary
- Stream processing is gaining momentum as the right paradigm for continuous data applications
- Even seemingly simple applications can be complex at scale and in production, so the choice of framework is crucial
- Flink: unique combination of capabilities, performance, and robustness

Flink in the wild
- 30 billion events daily
- 2 billion events in ten 1 Gb machines
- Picked Flink for "Saiki" data integration & distribution platform at [company logo]
- See talks by [company logos]

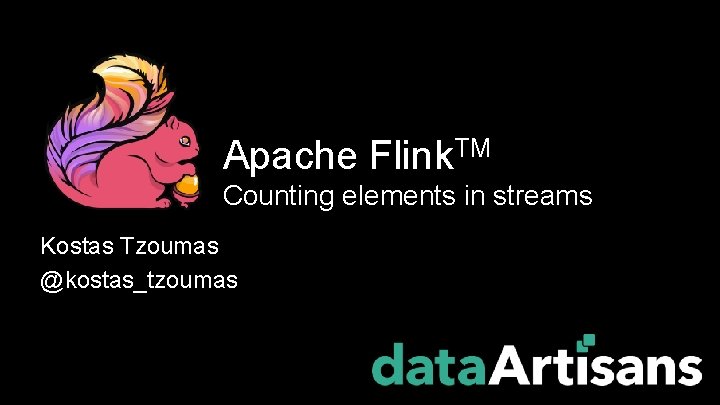

Join the community!
- Follow: @ApacheFlink, @dataArtisans
- Read: flink.apache.org/blog, data-artisans.com/blog
- Subscribe: (news | user | dev)@flink.apache.org

2 meetups next week in Bay Area!
What's new in Flink 1.0 & recent performance benchmarks with Flink
http://www.meetup.com/Bay-Area-Apache-Flink-Meetup/
- April 5, San Francisco
- April 6, San Jose