Anatomy of a High-Performance Many-Threaded Matrix Multiplication

- Slides: 33

Tyler M. Smith, Robert A. van de Geijn, Mikhail Smelyanskiy, Jeff Hammond, Field G. Van Zee

Introduction
- Shared-memory parallelism for GEMM
- Many-threaded architectures require more sophisticated methods of parallelism
- Explore the opportunities for parallelism and explain which ones we exploit
- Need finer-grained parallelism

Outline
- GotoBLAS approach (current section)
- Opportunities for Parallelism
- Many-threaded Results

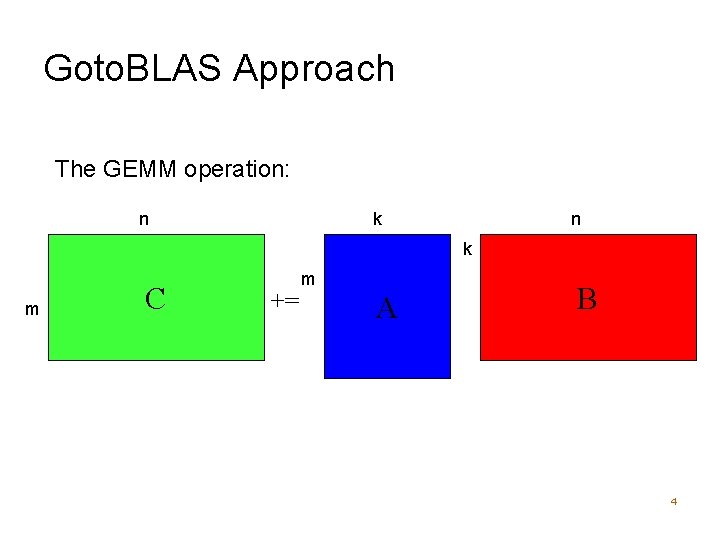

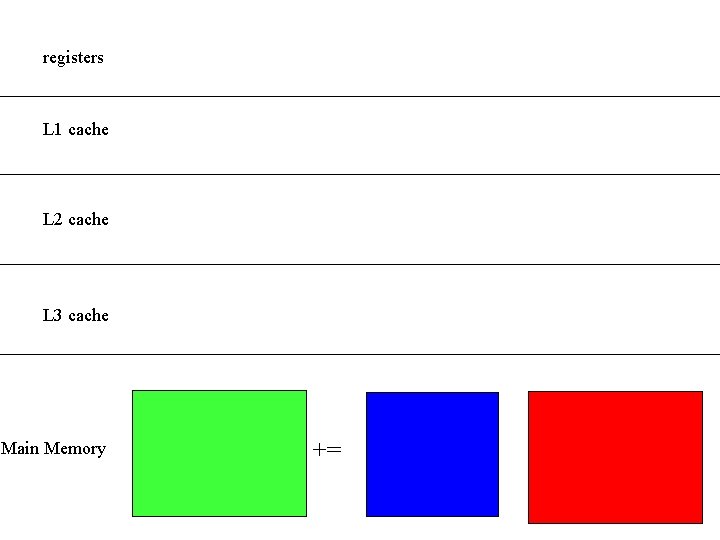

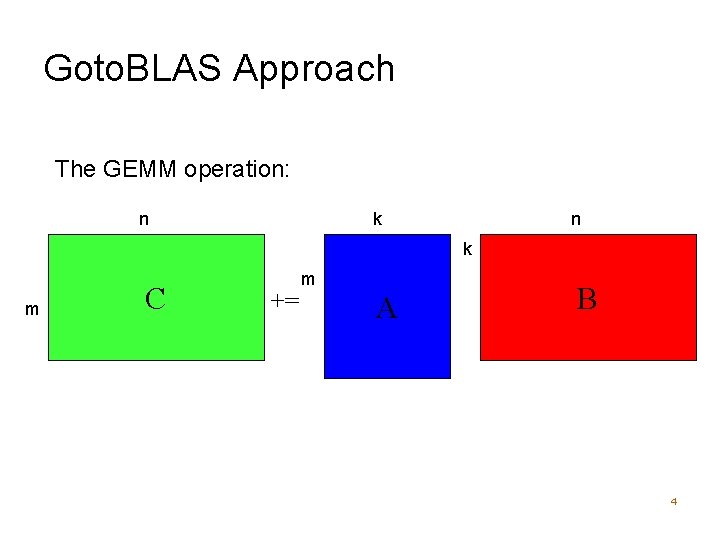

GotoBLAS Approach
The GEMM operation: C += AB, where C is m × n, A is m × k, and B is k × n.
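As a baseline, the operation can be written as a triple loop. The following pure-Python sketch (the name `gemm_ref` is hypothetical; matrices are lists of lists) only pins down the dimension conventions: C is m × n, A is m × k, B is k × n.

```python
def gemm_ref(C, A, B):
    """Reference GEMM: C += A B, with C m x n, A m x k, B k x n."""
    m, k, n = len(A), len(B), len(B[0])
    for i in range(m):
        for j in range(n):
            acc = C[i][j]
            for p in range(k):              # inner product of row i of A and column j of B
                acc += A[i][p] * B[p][j]
            C[i][j] = acc
    return C


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[0, 0], [0, 0]]
print(gemm_ref(C, A, B))  # [[19, 22], [43, 50]]
```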

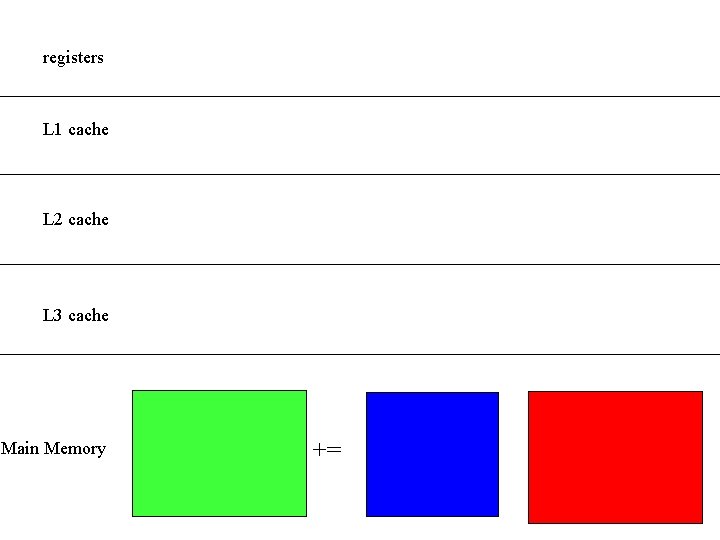

[Figure: the memory hierarchy (registers, L1 cache, L2 cache, L3 cache, main memory) beside the update C += AB]

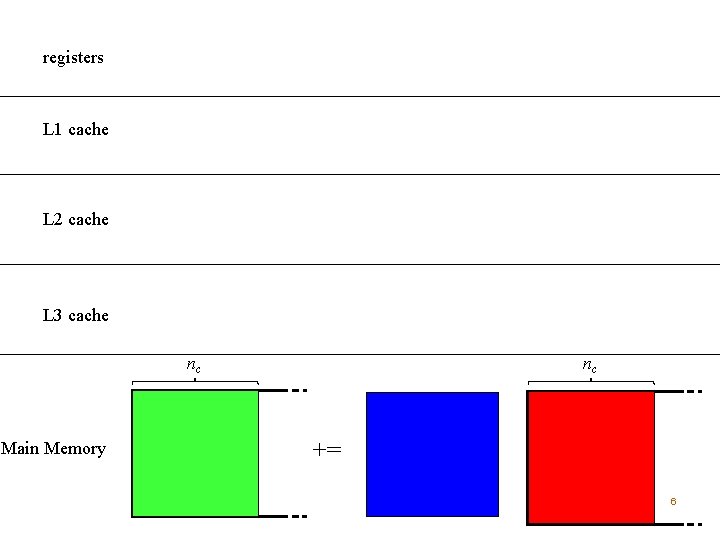

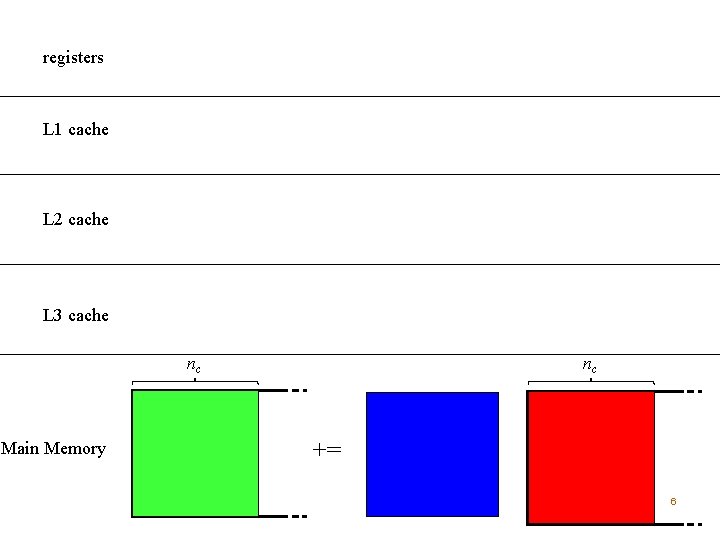

[Figure: memory hierarchy; C and B are partitioned into column panels of width nc]

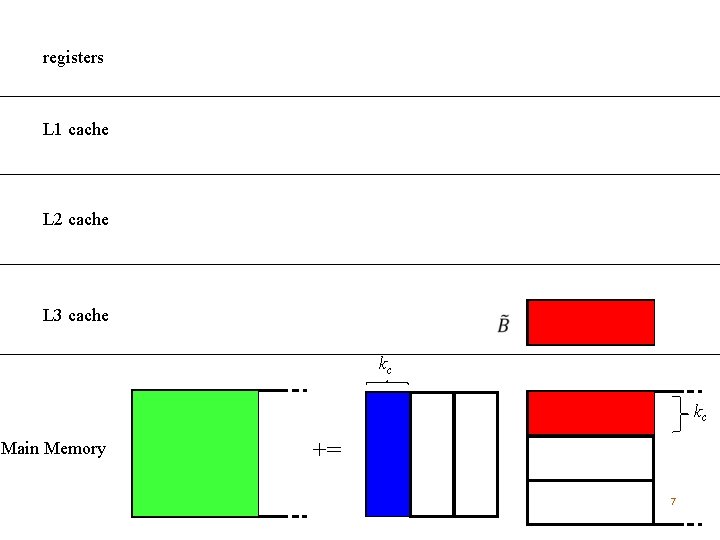

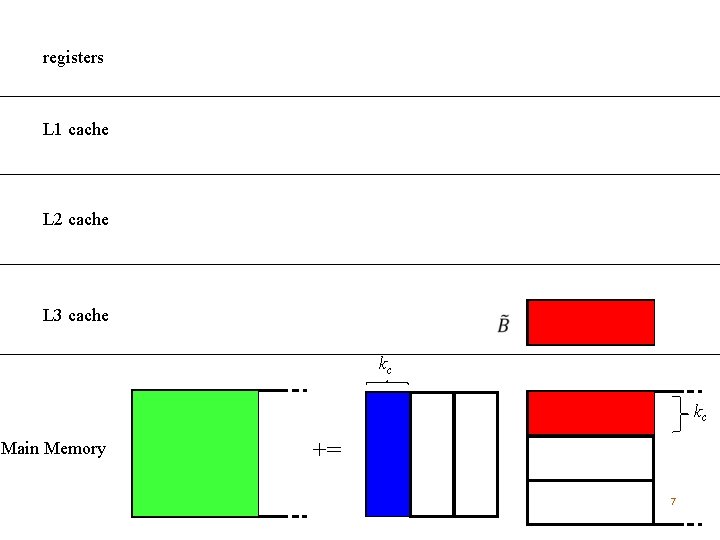

[Figure: memory hierarchy; A and the current panel of B are partitioned along k into slices of depth kc]

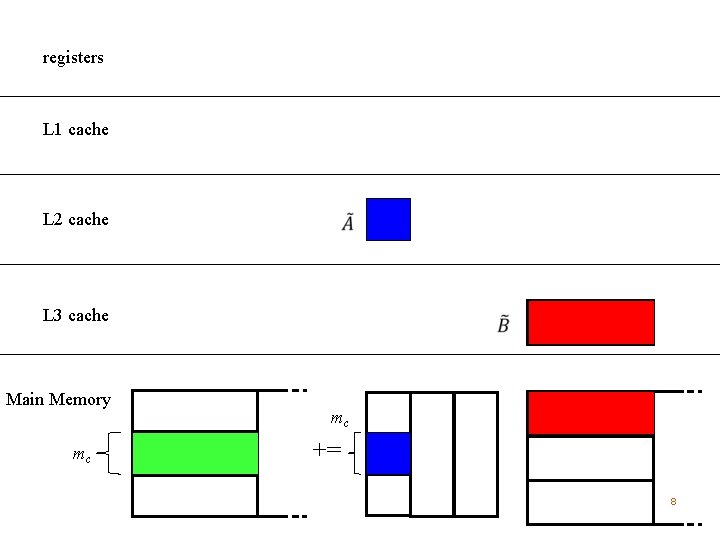

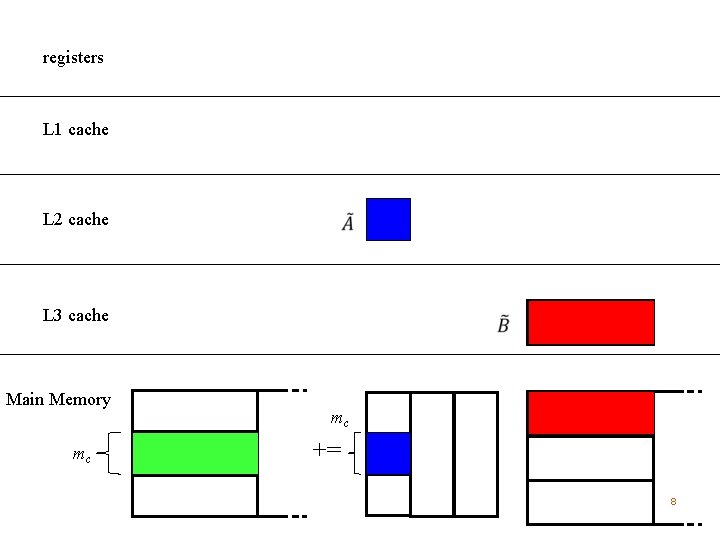

[Figure: memory hierarchy; the current slice of A is partitioned into blocks of mc rows]

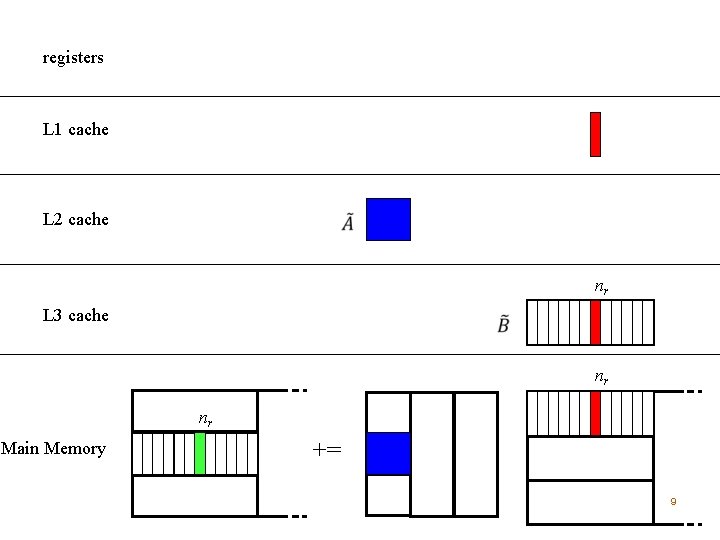

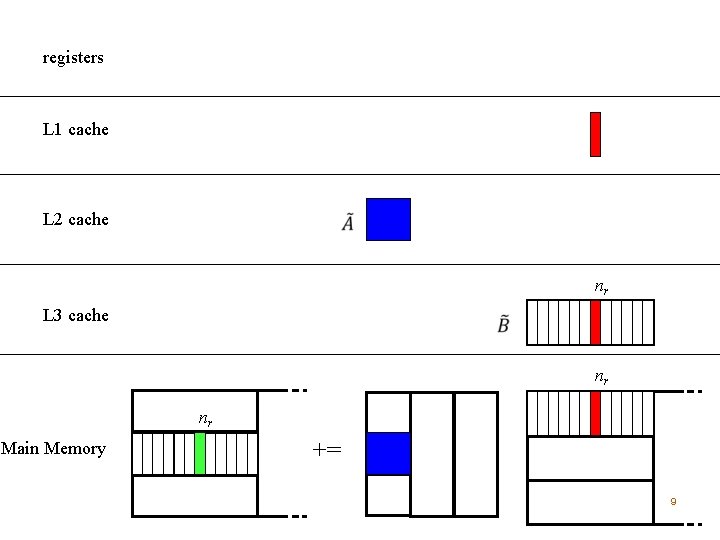

[Figure: memory hierarchy; the current panel of B is partitioned into micro-panels of width nr]

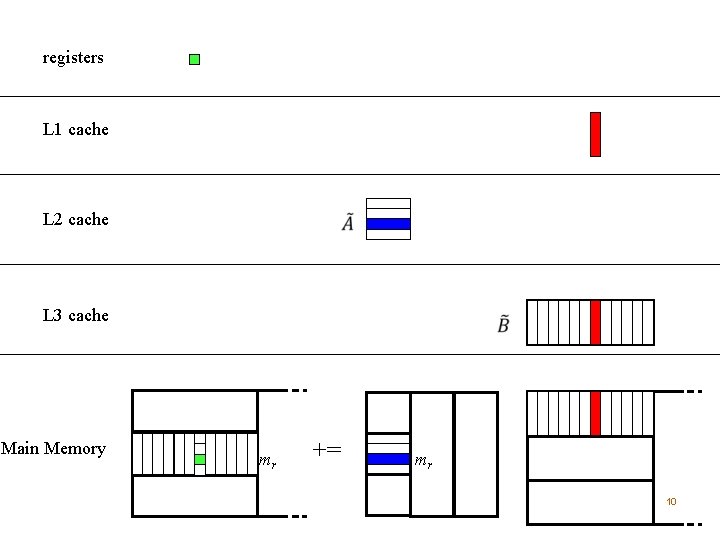

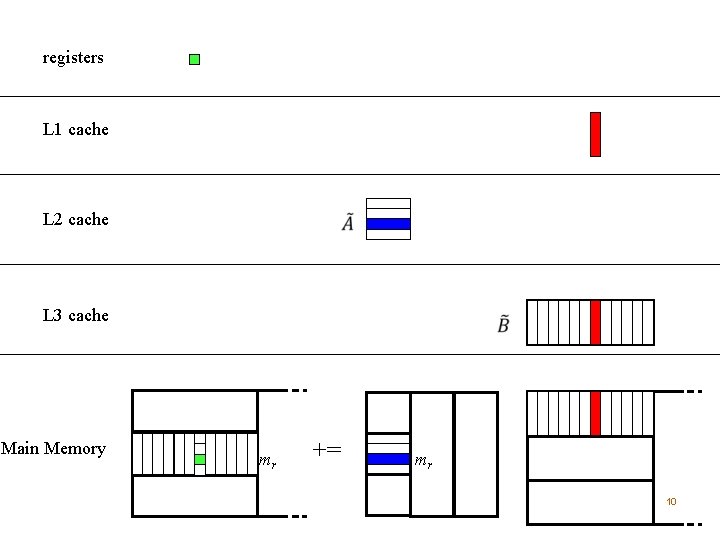

[Figure: memory hierarchy; the current block of A is partitioned into micro-panels of height mr]

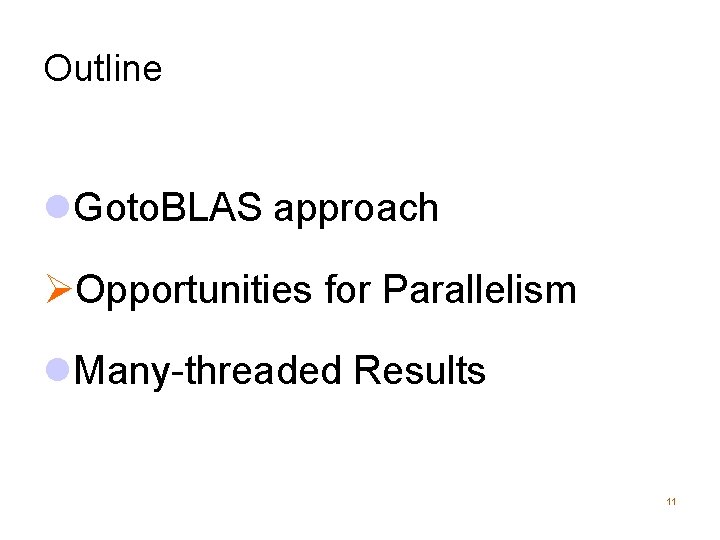

Outline
- GotoBLAS approach
- Opportunities for Parallelism (current section)
- Many-threaded Results

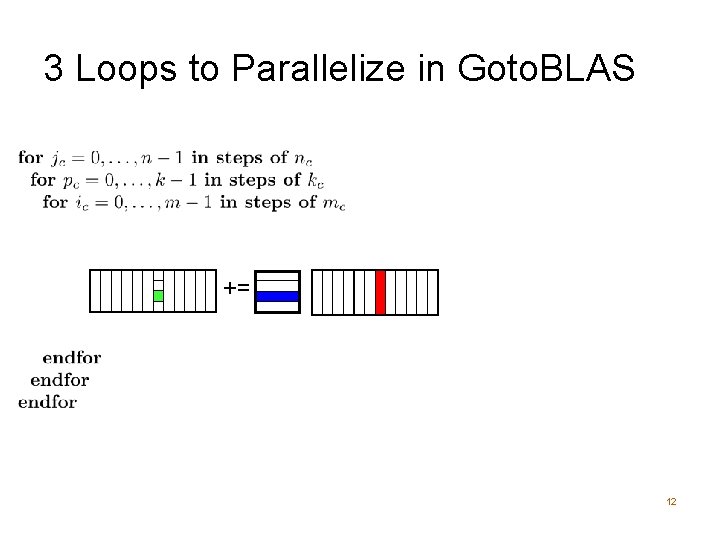

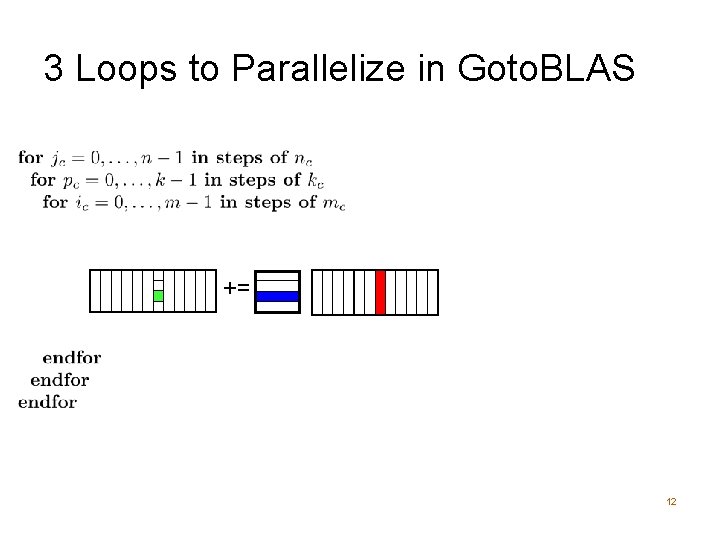

3 Loops to Parallelize in GotoBLAS

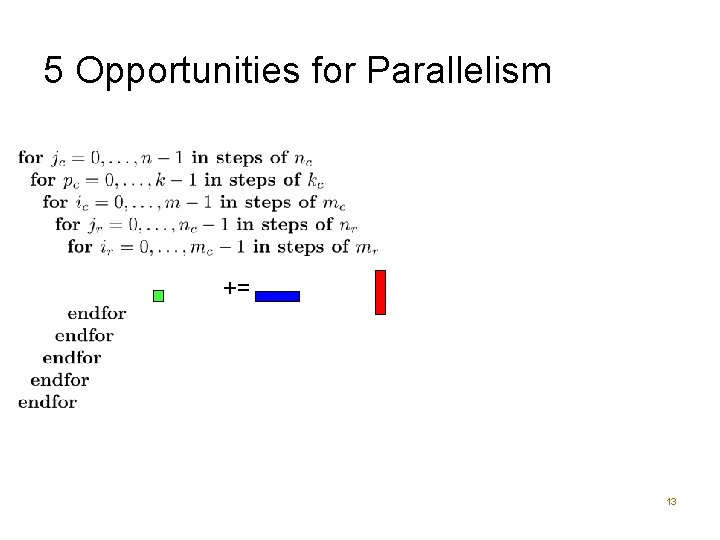

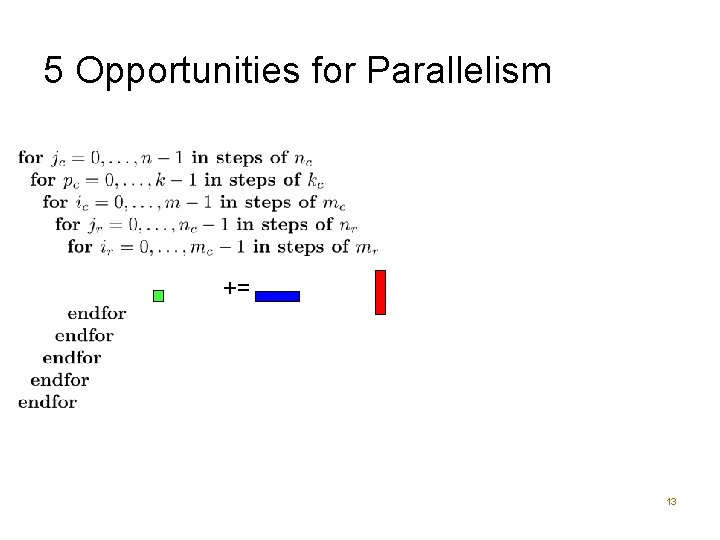

5 Opportunities for Parallelism

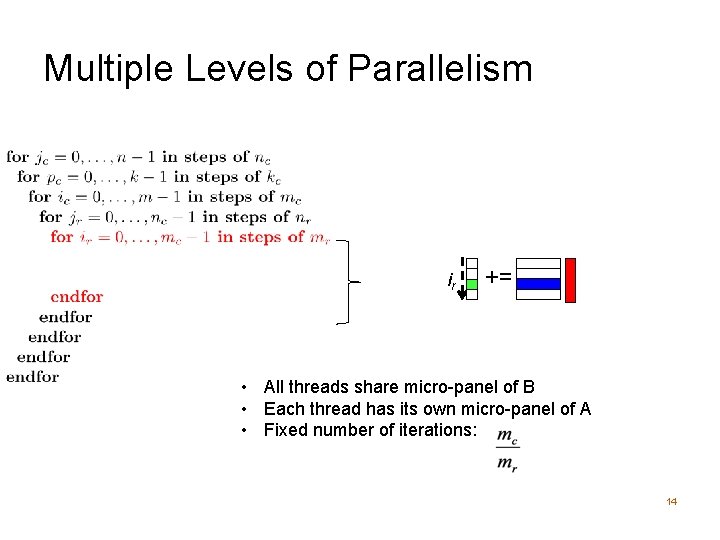

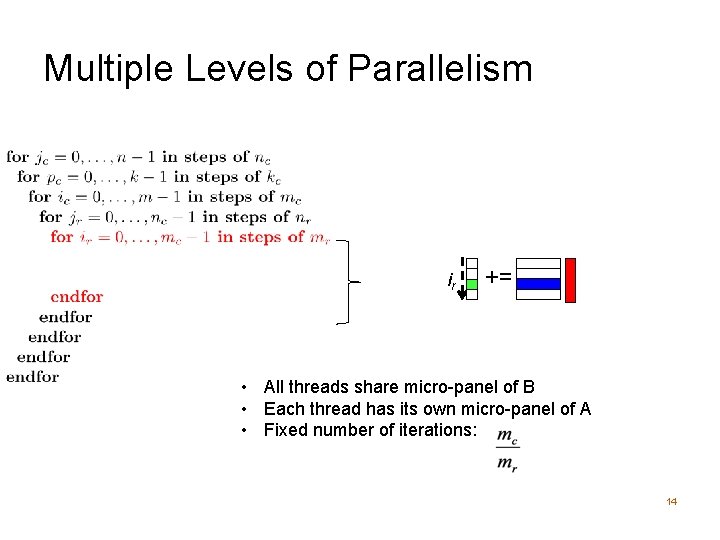

Multiple Levels of Parallelism: the ir loop
- All threads share the micro-panel of B
- Each thread has its own micro-panel of A
- Fixed number of iterations: mc/mr
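A small sketch of what "fixed number of iterations" means for the ir loop: the count depends only on the block sizes mc and mr, not on the problem size. The helper names and the round-robin split are hypothetical, and the block sizes in the usage lines are illustrative values, not tuned ones.

```python
def ir_iteration_count(mc, mr):
    """Iterations of the ir loop over one packed mc x kc block of A.

    Depends only on the block sizes, never on the problem size m."""
    return -(-mc // mr)  # ceiling division


def ir_offsets_for_thread(mc, mr, nthreads, tid):
    """Row offsets (within the packed block of A) of the mr-tall micro-panels
    that thread `tid` computes, using a round-robin split.

    Every thread multiplies its own micro-panels of A by the same shared
    micro-panel of B, so no two threads write the same rows of C."""
    return list(range(tid * mr, mc, nthreads * mr))


# Hypothetical block sizes, chosen only for illustration.
mc, mr, nthreads = 96, 8, 4
print(ir_iteration_count(mc, mr))  # 12
for tid in range(nthreads):
    print(tid, ir_offsets_for_thread(mc, mr, nthreads, tid))
```

With 12 iterations and 4 threads each thread gets exactly 3 micro-panels; the same split applies however large m is.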

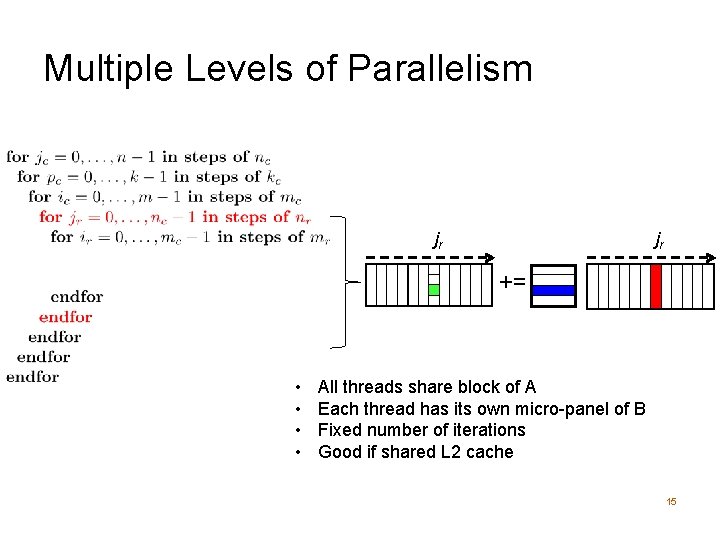

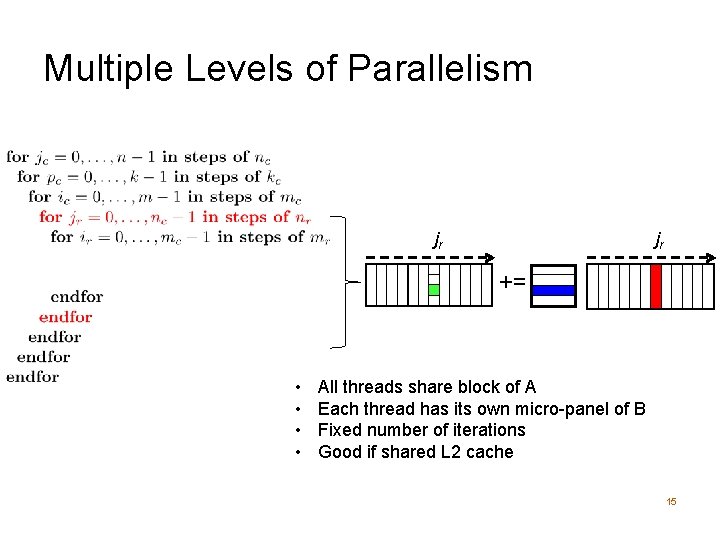

Multiple Levels of Parallelism: the jr loop
- All threads share the block of A
- Each thread has its own micro-panel of B
- Fixed number of iterations
- Good if threads share an L2 cache
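The jr split can be sketched with real threads: every thread reads the same packed block of A, takes its own nr-wide micro-panels of B, and writes a disjoint set of columns of C, so no lock is needed. This is a hypothetical helper (`jr_loop_parallel`) using `concurrent.futures.ThreadPoolExecutor`; because of CPython's global interpreter lock it illustrates the division of work, not a speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def jr_loop_parallel(C, Ap, Bp, nr, nthreads):
    """C (mb x nb) += Ap (mb x kb) @ Bp (kb x nb), splitting the jr loop.

    All threads read the shared block Ap; each thread handles its own
    nr-wide micro-panels of Bp, i.e. ceil(nb / nr) iterations in total,
    and writes only the matching columns of C."""
    mb, kb, nb = len(Ap), len(Bp), len(Bp[0])

    def work(tid):
        # Round-robin over micro-panels: jr = tid*nr, (tid+nthreads)*nr, ...
        for jr in range(tid * nr, nb, nthreads * nr):
            for j in range(jr, min(jr + nr, nb)):
                for i in range(mb):
                    C[i][j] += sum(Ap[i][p] * Bp[p][j] for p in range(kb))

    with ThreadPoolExecutor(max_workers=nthreads) as pool:
        list(pool.map(work, range(nthreads)))  # list() surfaces worker exceptions
    return C
```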

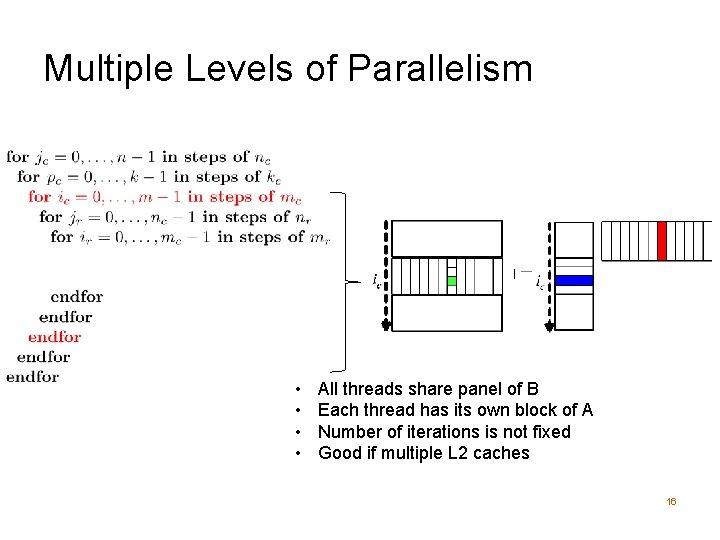

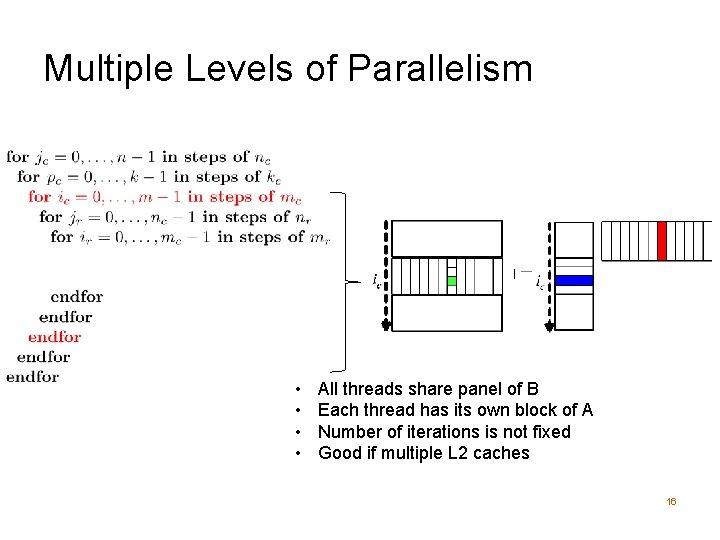

Multiple Levels of Parallelism: the mc loop
- All threads share the panel of B
- Each thread has its own block of A
- Number of iterations is not fixed
- Good if there are multiple L2 caches
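Because the mc loop runs ceil(m/mc) times, how well it can be divided among threads depends on m. The sketch below (hypothetical helper names; mc = 96 is an illustrative value) computes the fraction of thread time spent on useful blocks under an even split, ignoring that the last block may be smaller.

```python
def ic_iterations(m, mc):
    """Iterations of the loop over mc-tall blocks of A: grows with m."""
    return -(-m // mc)  # ceiling division


def ic_load_balance(m, mc, nthreads):
    """Useful work / available thread time when blocks are split evenly:
    total blocks / (nthreads * blocks on the busiest thread)."""
    blocks = ic_iterations(m, mc)
    busiest = -(-blocks // nthreads)
    return blocks / (nthreads * busiest)


# Small m: only 3 blocks for 4 threads, so one thread sits idle.
print(ic_iterations(200, 96), ic_load_balance(200, 96, 4))  # 3 0.75
```

For larger m the ratio approaches 1, which is why this loop offers less dependable parallelism than the ir and jr loops for small problems.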

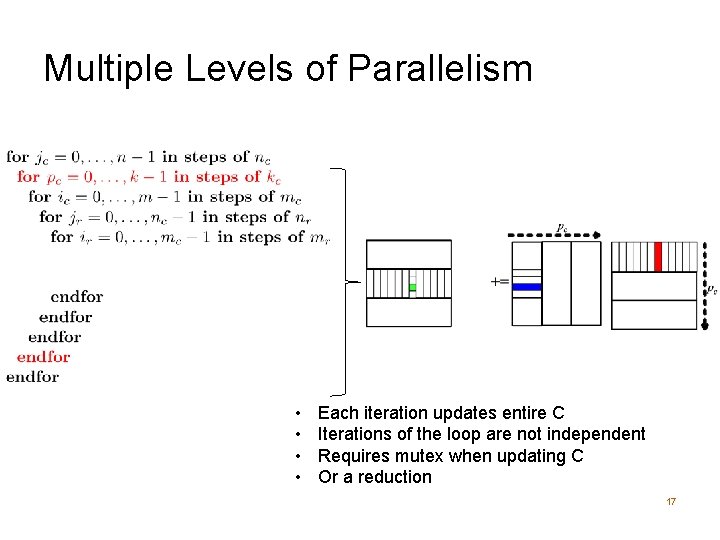

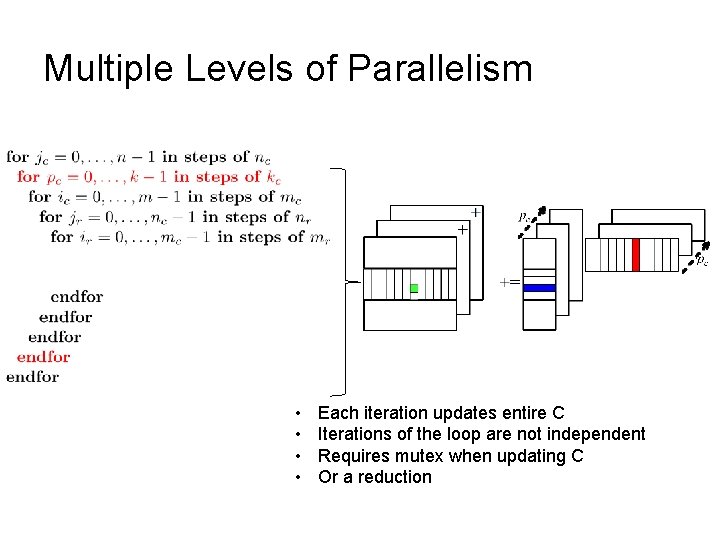

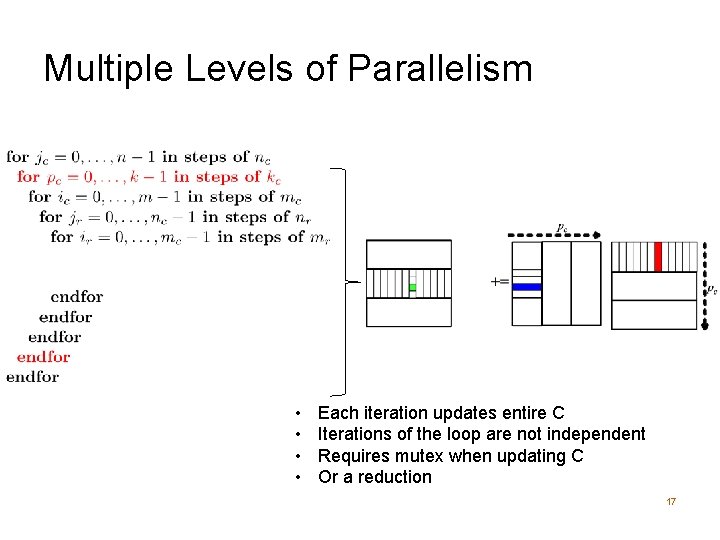

Multiple Levels of Parallelism: the kc loop
- Each iteration updates the entire C
- Iterations of the loop are not independent
- Requires a mutex when updating C, or a reduction
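The reduction alternative can be sketched as follows: each thread accumulates the contributions of its own kc slices into a private copy of C, and the copies are summed into C afterwards. The helper name `pc_loop_with_reduction` is hypothetical, and Python threads are used only to show the structure, not to gain speed.

```python
from concurrent.futures import ThreadPoolExecutor


def pc_loop_with_reduction(C, A, B, kc, nthreads):
    """C += A B with the loop over kc-deep slices split across threads.

    Every slice contributes to all of C, so instead of locking C each
    thread writes to a private buffer that is reduced into C at the end."""
    m, k, n = len(A), len(B), len(B[0])
    slices = list(range(0, k, kc))

    def work(tid):
        Cpriv = [[0] * n for _ in range(m)]        # thread-private partial C
        for pc in slices[tid::nthreads]:           # this thread's kc slices
            for p in range(pc, min(pc + kc, k)):
                for i in range(m):
                    a = A[i][p]
                    for j in range(n):
                        Cpriv[i][j] += a * B[p][j]
        return Cpriv

    with ThreadPoolExecutor(max_workers=nthreads) as pool:
        partials = list(pool.map(work, range(nthreads)))

    for Cpriv in partials:                         # the reduction step
        for i in range(m):
            for j in range(n):
                C[i][j] += Cpriv[i][j]
    return C
```

The price of avoiding a mutex is one m × n workspace per thread plus the final summation pass.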

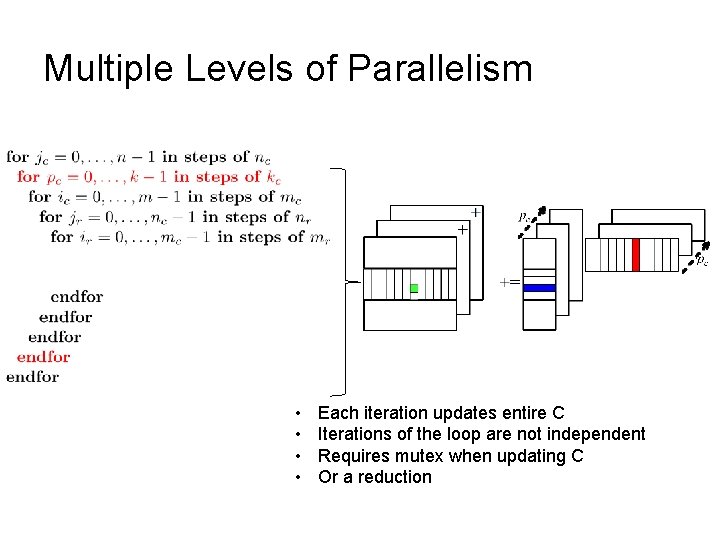


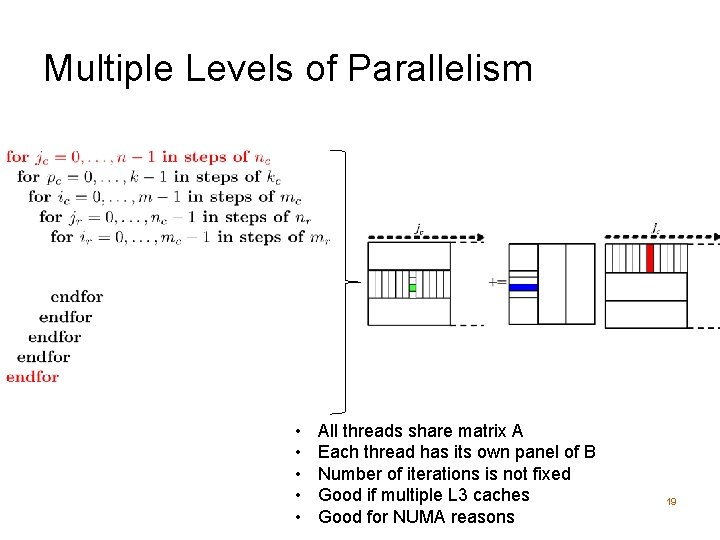

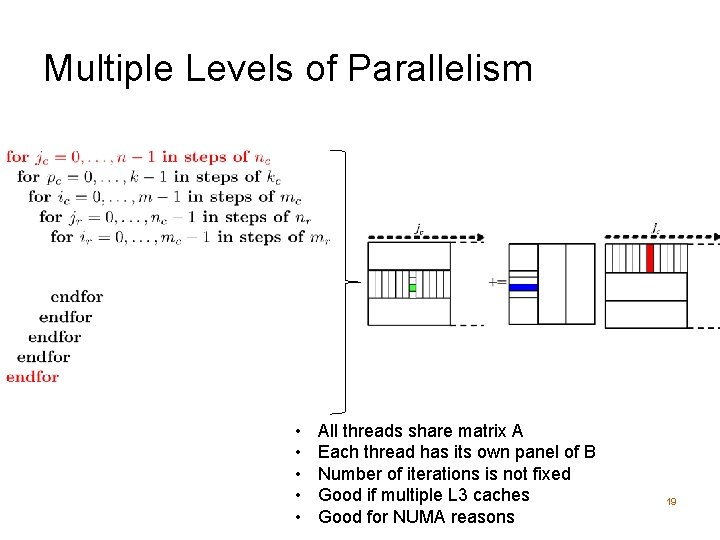

Multiple Levels of Parallelism: the nc loop
- All threads share the matrix A
- Each thread has its own panel of B
- Number of iterations is not fixed
- Good if there are multiple L3 caches
- Good for NUMA reasons
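A sketch of splitting the nc loop: the number of nc-wide panels grows with n, and each thread packs and owns its panels of B (and the matching columns of C) while reading all of A. The helper `jc_panels_for_thread` is hypothetical; a contiguous split is used here so each thread's share of B and C forms one column range.

```python
def jc_panels_for_thread(n, nc, nthreads, tid):
    """Column ranges [start, end) of the nc-wide panels owned by thread `tid`.

    The panel count ceil(n / nc) depends on n, so it is not fixed.
    Threads beyond the number of panels receive an empty list."""
    npanels = -(-n // nc)                  # ceiling division
    per = -(-npanels // nthreads)          # panels per thread
    first = tid * per
    last = min(first + per, npanels)
    return [(p * nc, min((p + 1) * nc, n)) for p in range(first, last)]


# Illustrative sizes: n = 1000, nc = 256, two threads.
print(jc_panels_for_thread(1000, 256, 2, 1))  # [(512, 768), (768, 1000)]
```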

Outline
- GotoBLAS approach
- Opportunities for Parallelism
- Many-threaded Results (current section)

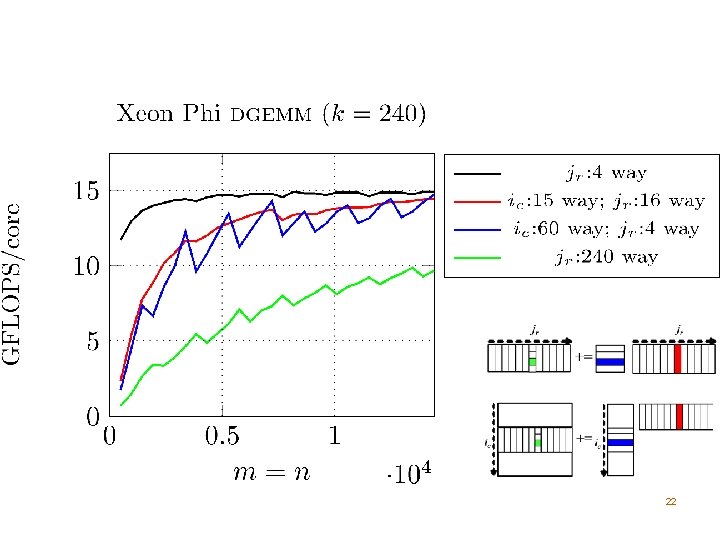

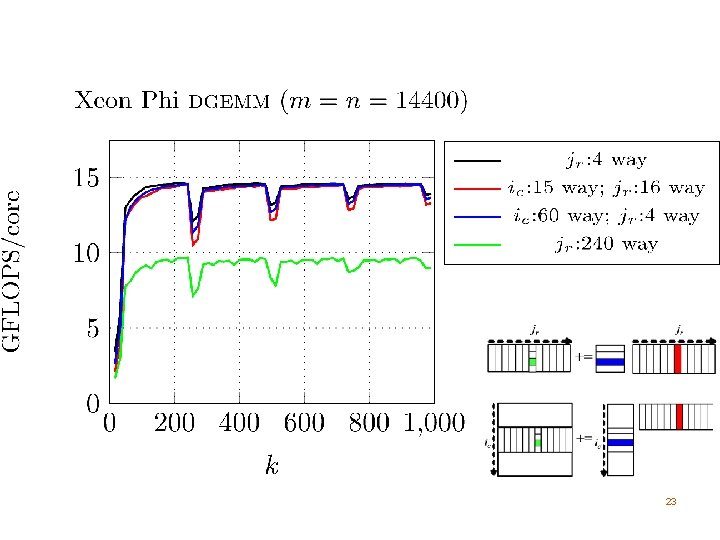

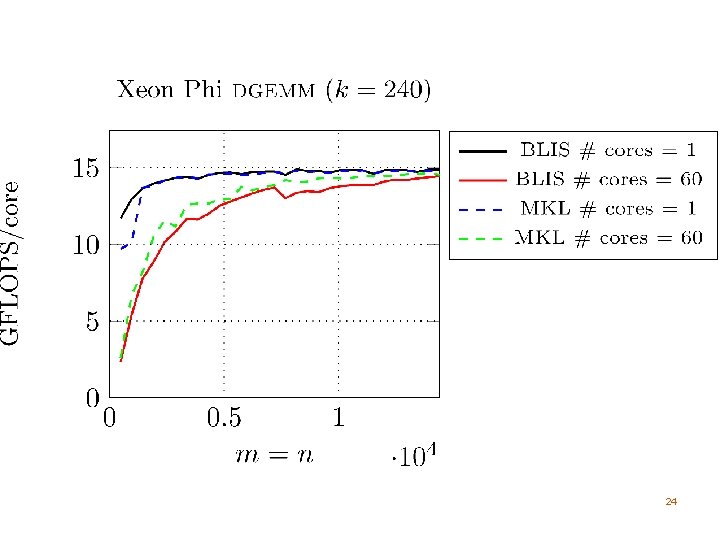

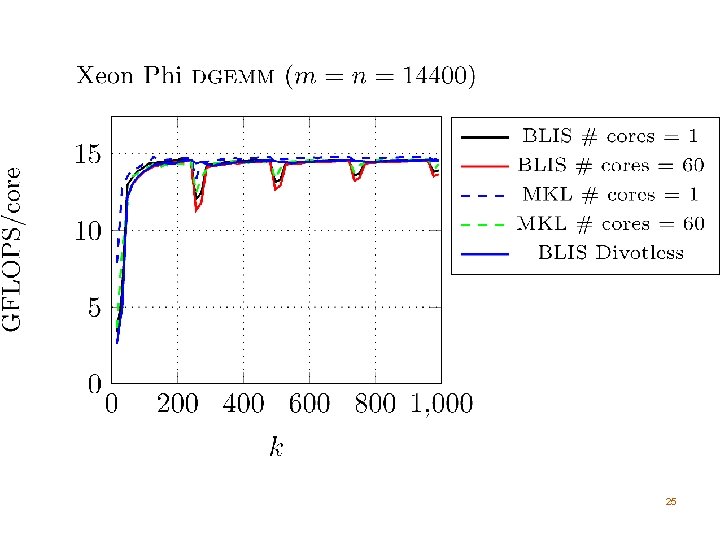

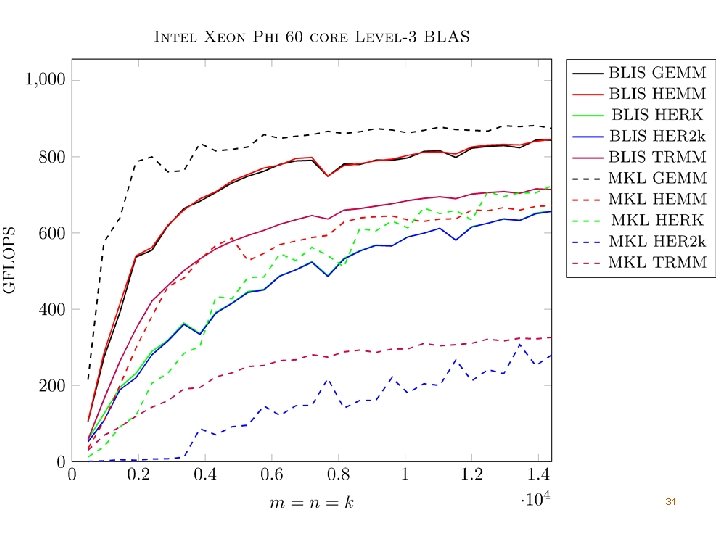

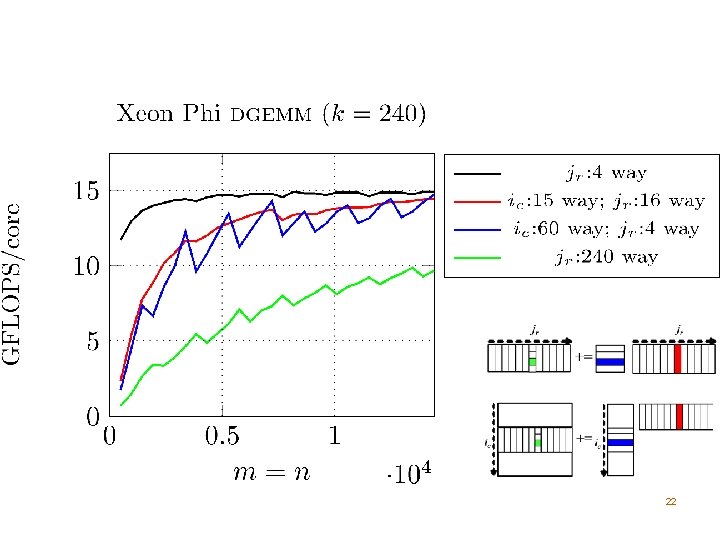

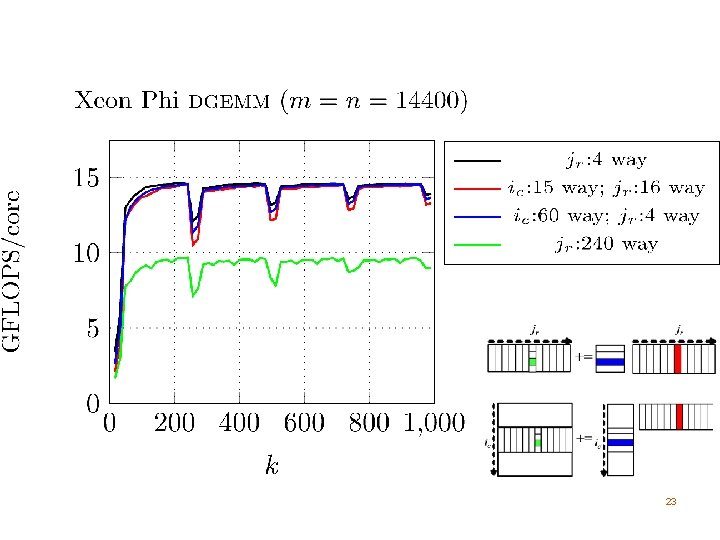

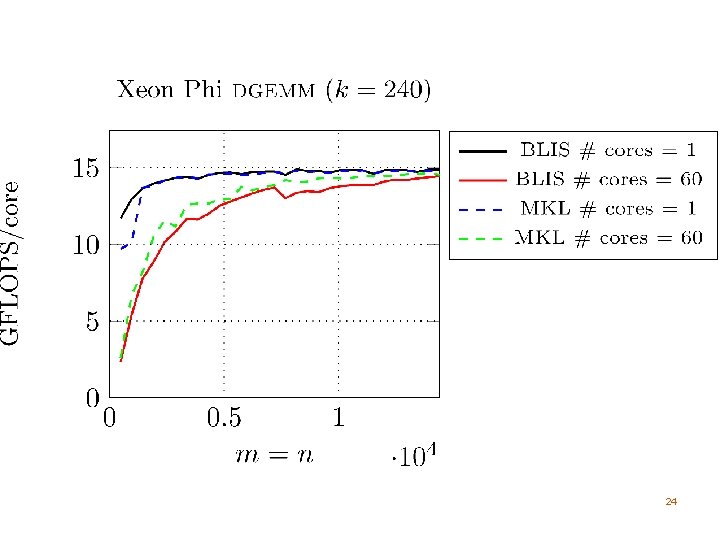

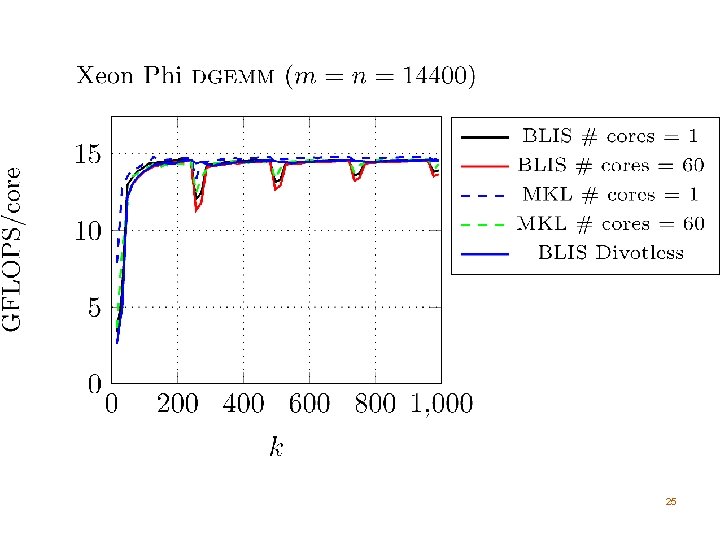

Intel Xeon Phi
- Many threads
  - 60 cores, 4 threads per core
  - Need to use more than 2 threads per core to utilize the FPU
- We do not block for the L1 cache
  - Difficult to amortize the cost of updating C with 4 threads sharing an L1 cache
  - We treat part of the L2 cache as a virtual L1
- Each core has its own L2 cache
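With 60 cores and 4 threads per core there are 240 hardware threads to distribute across loops. The sketch below shows one mapping consistent with the guidelines on the earlier slides (jr when threads share an L2 cache, the mc loop when there are multiple L2 caches): cores split the mc loop and the 4 threads of a core split the jr loop. It is not necessarily the configuration behind the measured results; the helper `thread_work`, the thread pinning, and the block sizes are all hypothetical.

```python
def thread_work(tid, ncores, threads_per_core, m, mc, nc, nr):
    """Return (ic_offsets, jr_offsets) for global thread `tid`.

    Hypothetical mapping: cores (separate L2 caches) split the loop over
    mc-tall blocks of A; the threads of one core (sharing its L2) split the
    jr loop over nr-wide micro-panels of the shared panel of B.
    Assumes threads are pinned so that tid // threads_per_core is the core."""
    core, hw = divmod(tid, threads_per_core)
    ic_offsets = list(range(core * mc, m, ncores * mc))
    jr_offsets = list(range(hw * nr, nc, threads_per_core * nr))
    return ic_offsets, jr_offsets


NCORES, TPC = 60, 4                  # Xeon Phi: 60 cores, 4 threads per core
print(NCORES * TPC)                  # 240

# Hypothetical problem and block sizes, for illustration only.
print(thread_work(5, NCORES, TPC, m=12000, mc=120, nc=240, nr=30))  # ([120, 7320], [30, 150])
```

Threads on the same core receive the same blocks of A, so those blocks are loaded once into that core's L2 cache and reused by all four threads.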


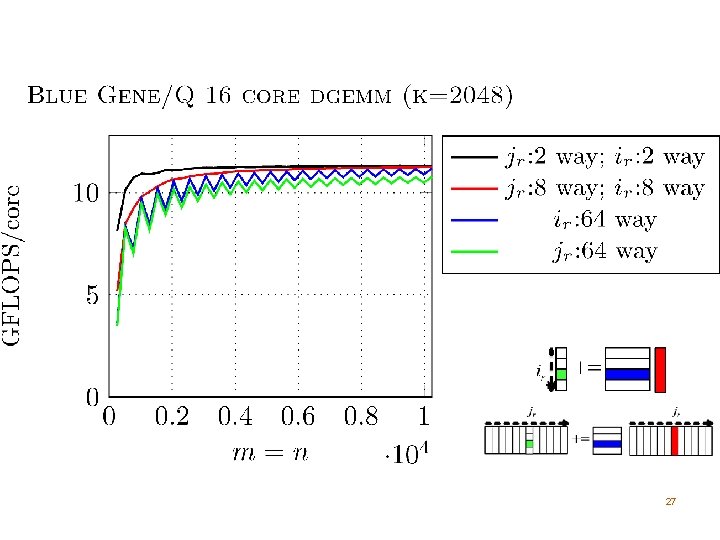

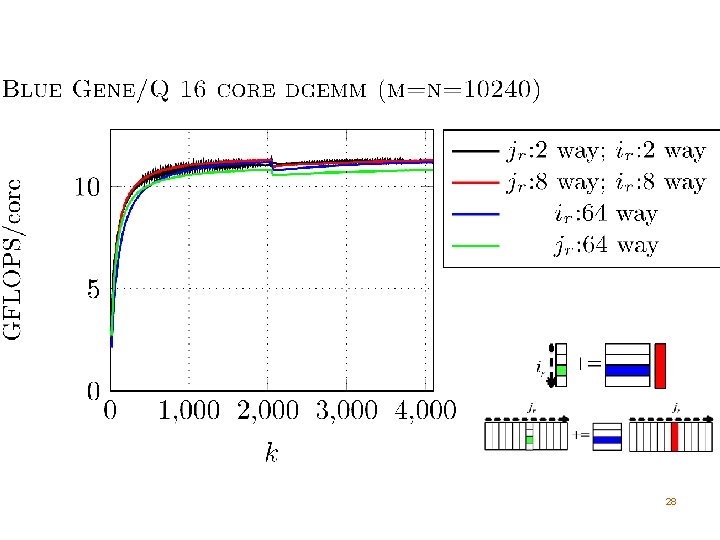

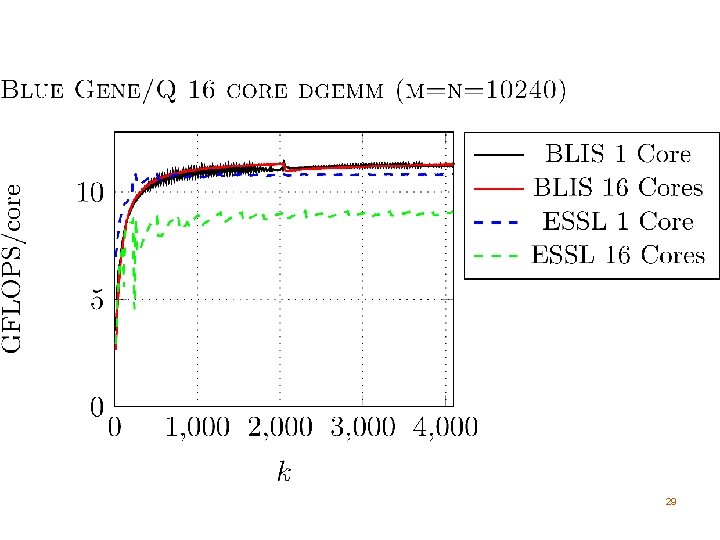

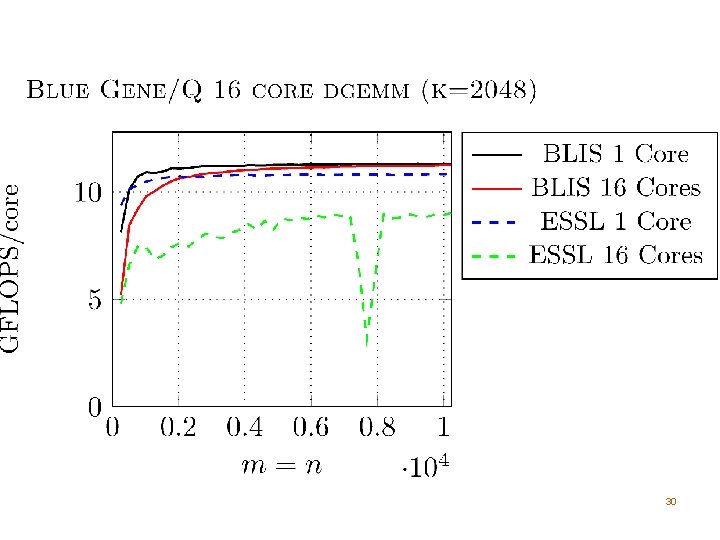

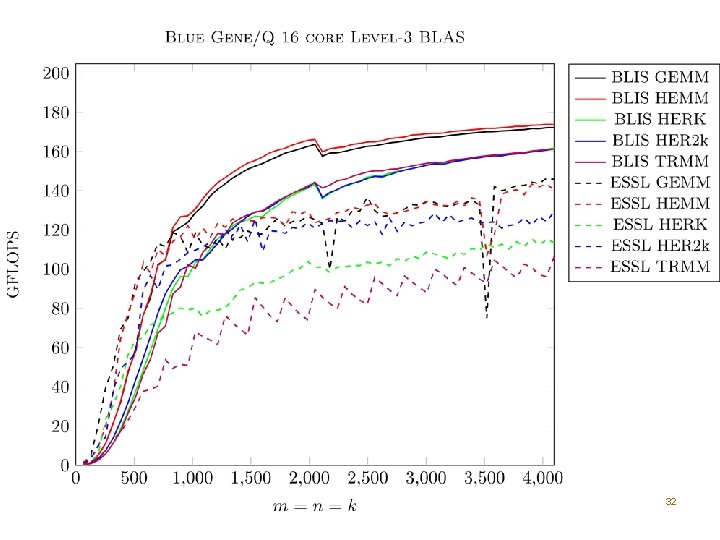

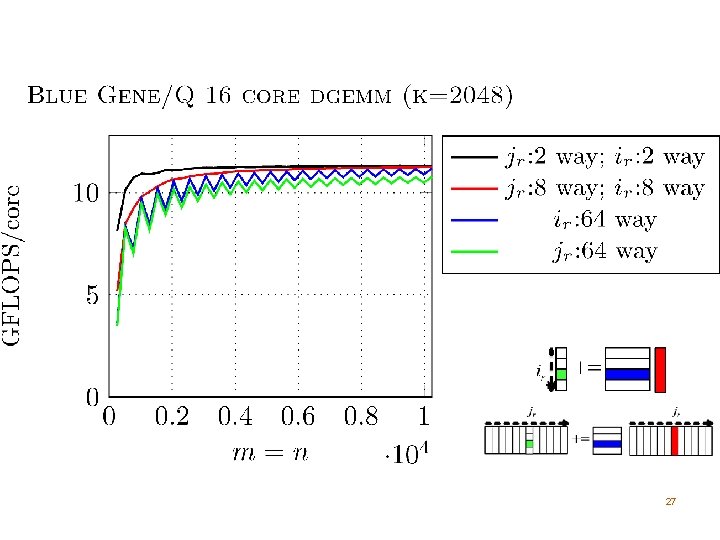

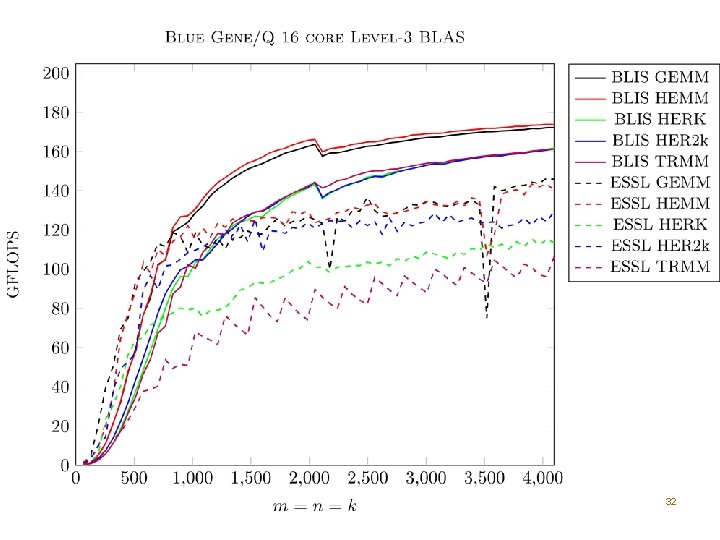

IBM Blue Gene/Q
- (Not quite as) many threads
  - 16 cores, 4 threads per core
  - Need to use more than 2 threads per core to utilize the FPU
- We do not block for the L1 cache
  - Difficult to amortize the cost of updating C with 4 threads sharing an L1 cache
  - We treat part of the L2 cache as a virtual L1
- Single large, shared L2 cache


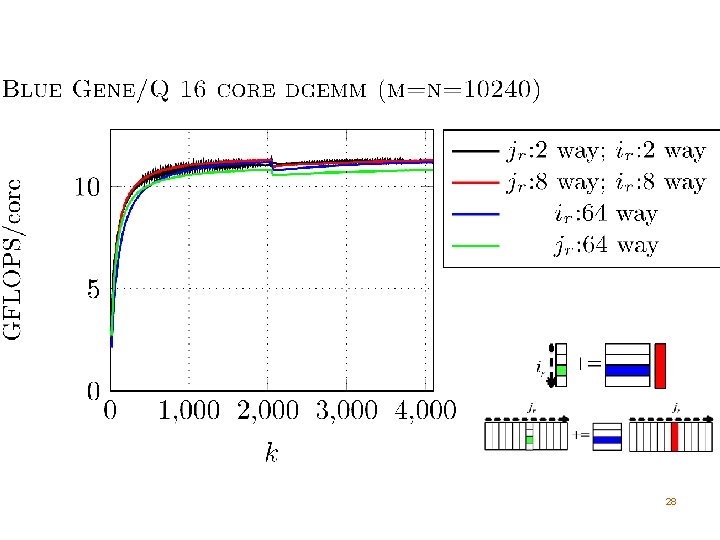


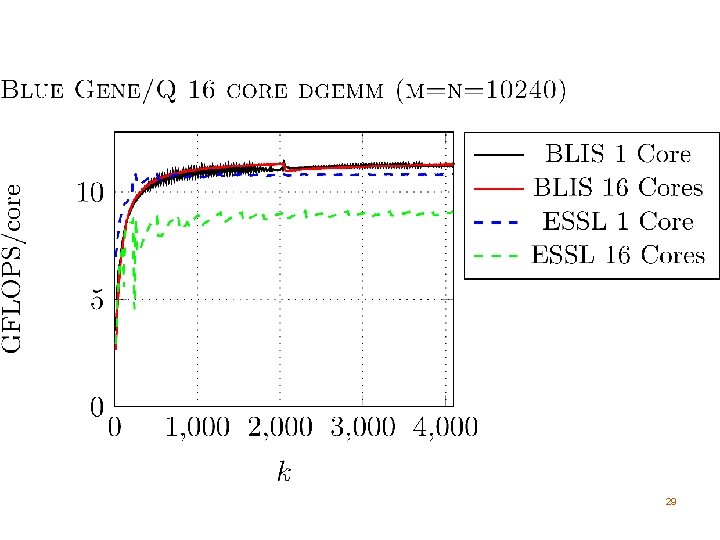


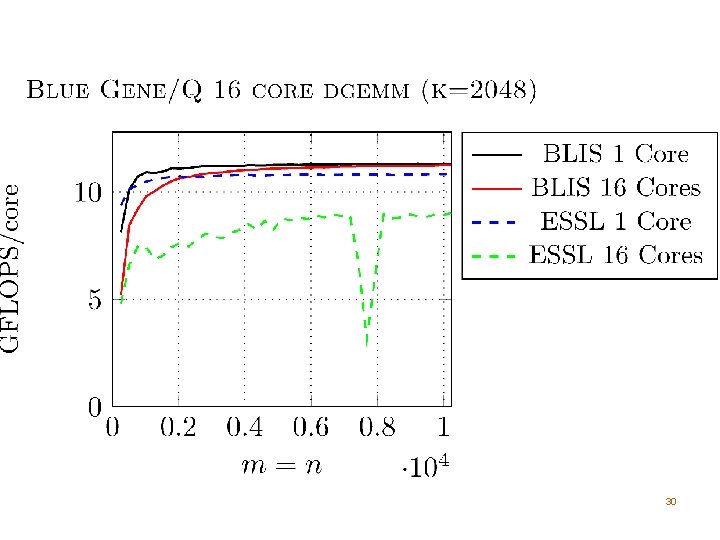


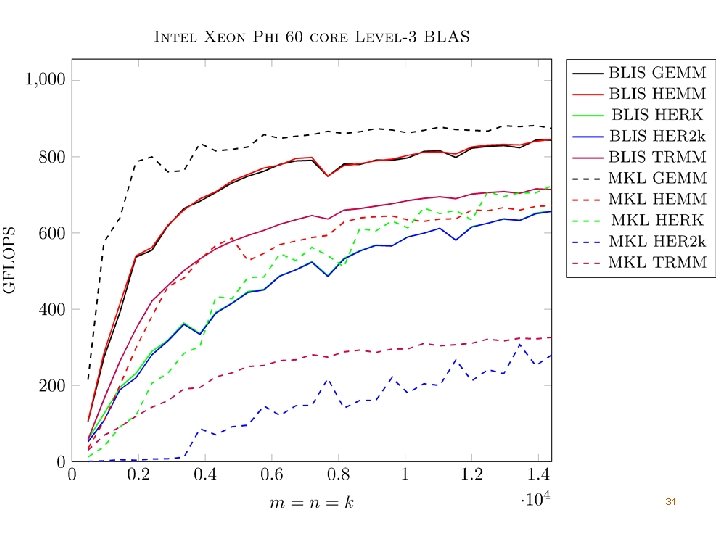


Thank You
- Questions?
- Source code available at: code.google.com/p/blis/