Analyzing and Interpreting Child Outcomes Data Christina Kasprzak

- Slides: 110

Christina Kasprzak, Austin, Texas, November 2010

Objective for the day: To share with you ideas and resources for use in training and technical assistance (TA) that will help districts analyze and use COSF data

Agenda • Looking at data: in general, national, state, and regional • Follow-up discussion about assessment tools • Communicating data results • Public reporting requirements • Framework for a quality outcomes system

Recap from March • Assessment (more debrief on this after lunch) – No assessment was created for this outcomes process – Best practices on assessment = multiple data sources – Types of assessment, including pros and cons – Benefits of limiting assessments for COSF – Selecting tools for the COSF process – Activity: reviewing assessment tools and identifying strengths, weaknesses, and how each fits with the COSF process

Recap from March • Promoting Data Quality: ECO Training Materials and Activities – COSF refresher training – Quality review of COSF team discussion – Involving families in the outcomes process – Written child example – Reviewing a COSF for quality

Why do a good job with COSF data? It’s hard to change attitudes! What motivates people? Altruistic? Fear? Logic? Money?

Why do a good job with COSF data? Altruistic: Because you believe child and family outcomes are why you do your job! Fear: Because you can look bad (to the state, and to the public via public reporting)! Logic: Because a program should be accountable for the results of its services! Money: Because the federal Office of Management and Budget (OMB) is using the data to make decisions, so federal dollars are at stake!

Why do a good job with COSF data? • Today’s focus on ‘looking at data’ will give you more tools and resources for changing attitudes!

Looking at Data

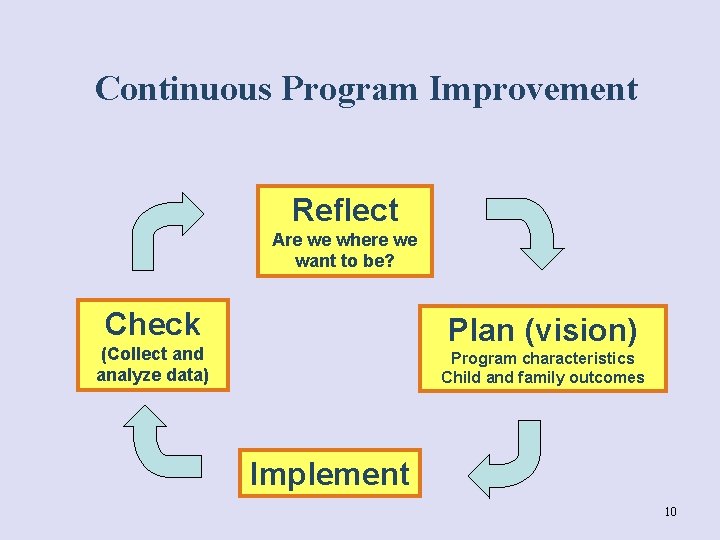

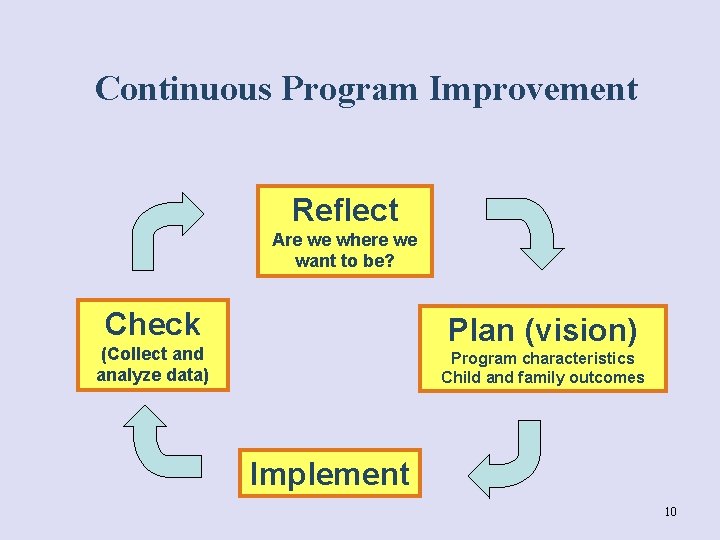

Continuous Program Improvement: a cycle of Plan (vision) → Implement → Check (collect and analyze data on program characteristics and on child and family outcomes) → Reflect (Are we where we want to be?)

Using data for program improvement = EIA: Evidence, Inference, Action

Evidence • Evidence refers to the numbers, such as “45% of children in category b” • The numbers are not debatable

Inference • How do you interpret the numbers? • What can you conclude from the numbers? • Does the evidence mean good news? Bad news? News we can’t interpret? • To reach an inference, sometimes we analyze data in other ways (ask for more evidence)

Inference • Inference is debatable: even reasonable people can reach different conclusions • Stakeholders can help put meaning on the numbers • Early on, the inference may be more a question of the quality of the data

Action • Given the inference from the numbers, what should be done? • Recommendations or action steps • Action can be debatable, and often is • Another role for stakeholders • Again, early on the action might have to do with improving the quality of the data

Promoting quality data through data analysis

Promoting quality data through data analysis • Examine the data for inconsistencies • If/when you find something strange, look for other data that might help explain it • Is the variation caused by something other than bad data?

The validity of your data is questionable if the overall pattern in the data looks “strange”: – Compared to what you expect – Compared to other data – Compared to similar states/regions/school districts

Let’s look at some data…

Remember: Part C & 619 Child Outcomes (see cheat sheet) 1. Positive social-emotional skills (including social relationships); 2. Acquisition and use of knowledge and skills (including early language/communication [and early literacy]); and 3. Use of appropriate behaviors to meet their needs

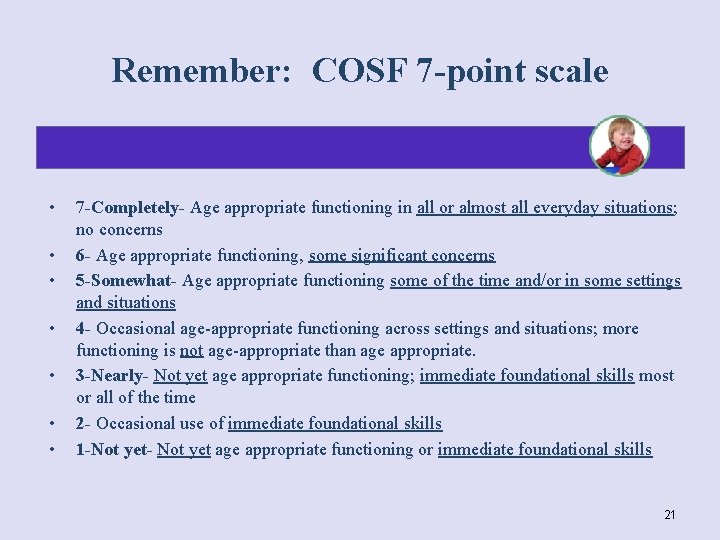

Remember: COSF 7-point scale
- 7 (Completely): Age-appropriate functioning in all or almost all everyday situations; no concerns
- 6: Age-appropriate functioning, some significant concerns
- 5 (Somewhat): Age-appropriate functioning some of the time and/or in some settings and situations
- 4: Occasional age-appropriate functioning across settings and situations; more functioning is not age-appropriate than age-appropriate
- 3 (Nearly): Not yet age-appropriate functioning; immediate foundational skills most or all of the time
- 2: Occasional use of immediate foundational skills
- 1 (Not yet): Not yet age-appropriate functioning or immediate foundational skills

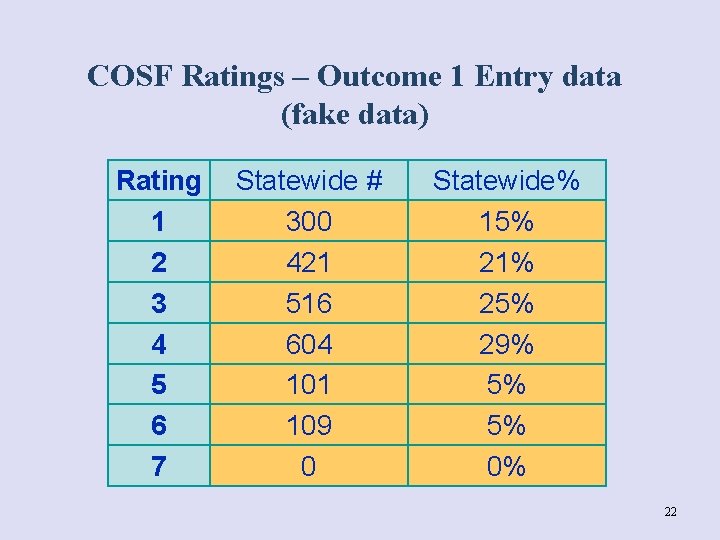

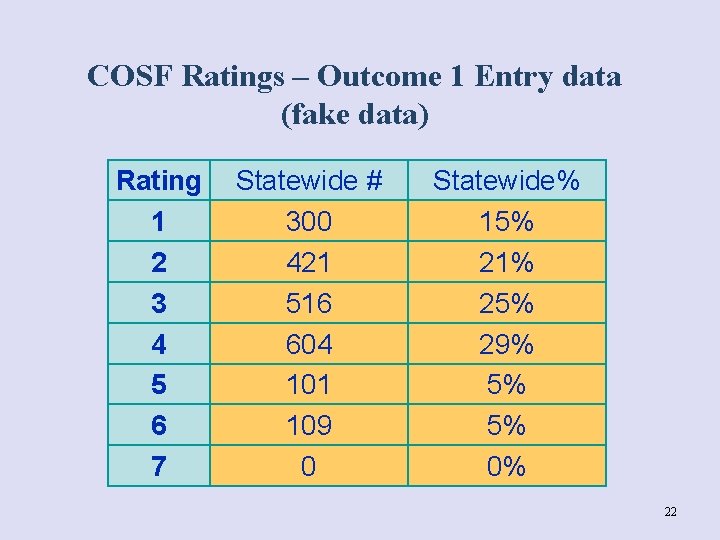

COSF Ratings – Outcome 1 Entry data (fake data)

| Rating | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| Statewide # | 300 | 421 | 516 | 604 | 101 | 109 | 0 |
| Statewide % | 15% | 21% | 25% | 29% | 5% | 5% | 0% |
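Each percentage in the table is that rating's count divided by the statewide total of children rated (2,051 here). A minimal Python sketch of this frequency-distribution arithmetic, using the fake counts above; the helper name `percent_distribution` is hypothetical, and rounding half up to whole percents is an assumption about how the slide rounded.

```python
from decimal import Decimal, ROUND_HALF_UP

def percent_distribution(counts):
    """Return each count as a whole-number percentage of the total (rounded half up)."""
    total = sum(counts)
    return [
        int((Decimal(100) * n / total).quantize(Decimal("1"), rounding=ROUND_HALF_UP))
        for n in counts
    ]

# Fake Outcome 1 entry counts for COSF ratings 1..7, from the table above.
entry_counts = [300, 421, 516, 604, 101, 109, 0]
print(sum(entry_counts))                   # 2051 children rated
print(percent_distribution(entry_counts))  # [15, 21, 25, 29, 5, 5, 0]
```

`Decimal` is used instead of the built-in `round()` because `round()` rounds exact halves to the nearest even number, which can differ from the half-up rounding most readers expect.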

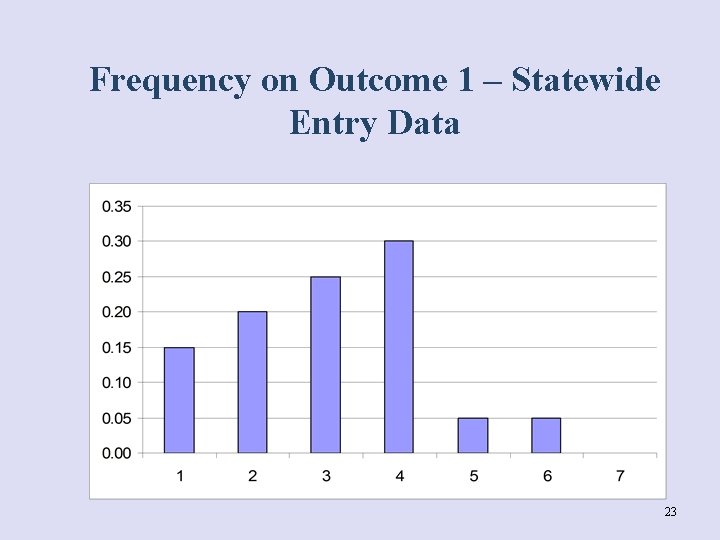

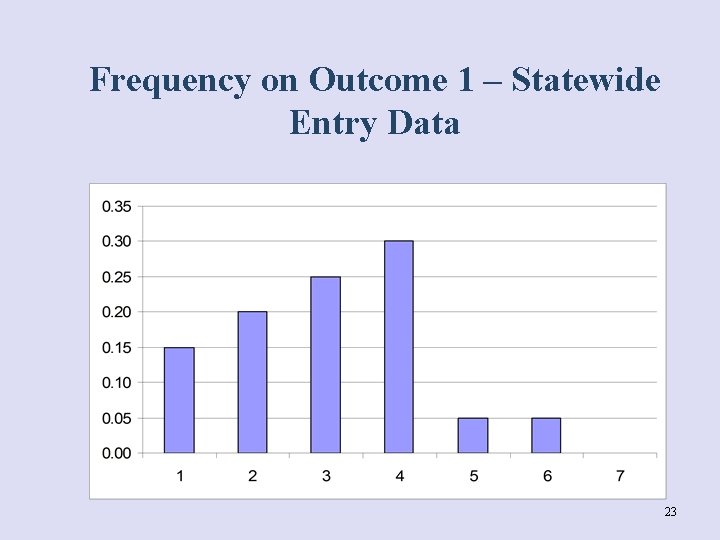

Frequency on Outcome 1 – Statewide Entry Data

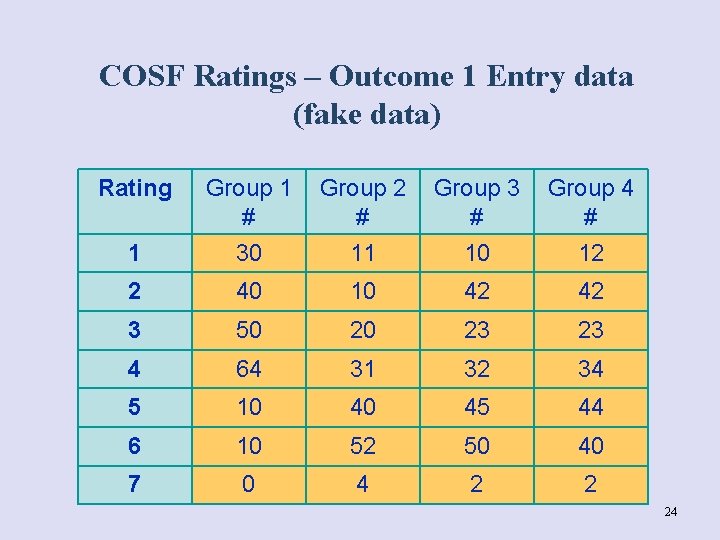

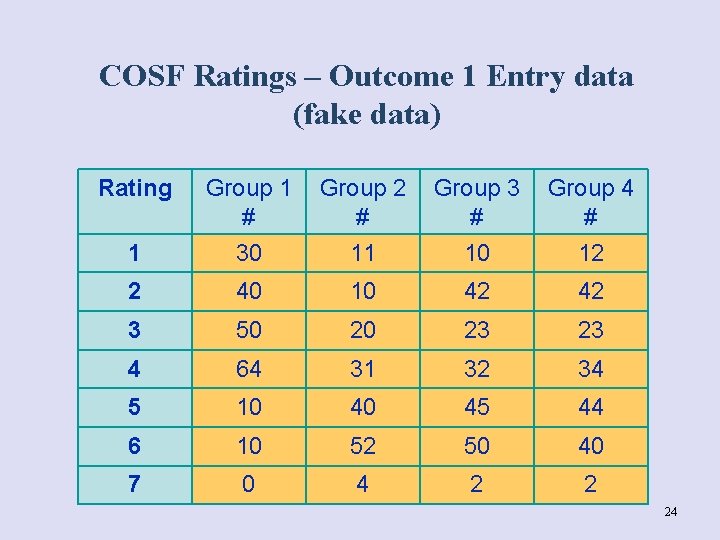

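COSF Ratings – Outcome 1 Entry data (fake data)

| Rating | Group 1 # | Group 2 # | Group 3 # | Group 4 # |
|---|---|---|---|---|
| 1 | 30 | 11 | 10 | 12 |
| 2 | 40 | 10 | 42 | 42 |
| 3 | 50 | 20 | 23 | 23 |
| 4 | 64 | 31 | 32 | 34 |
| 5 | 10 | 40 | 45 | 44 |
| 6 | 10 | 52 | 50 | 40 |
| 7 | 0 | 4 | 2 | 2 |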

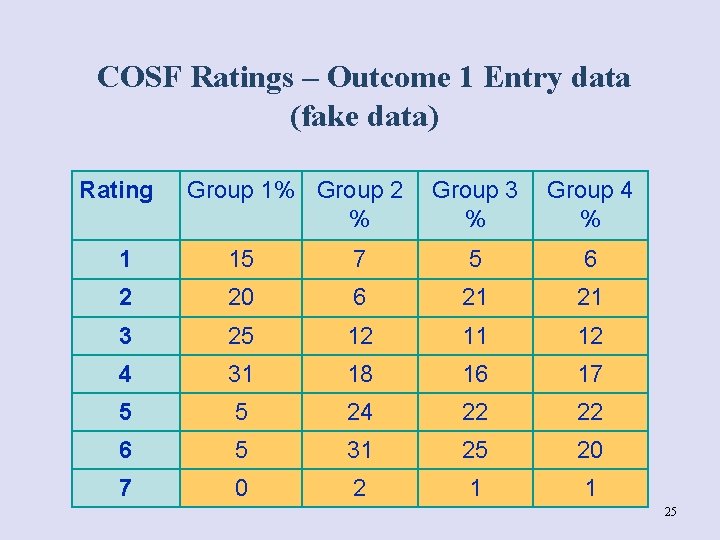

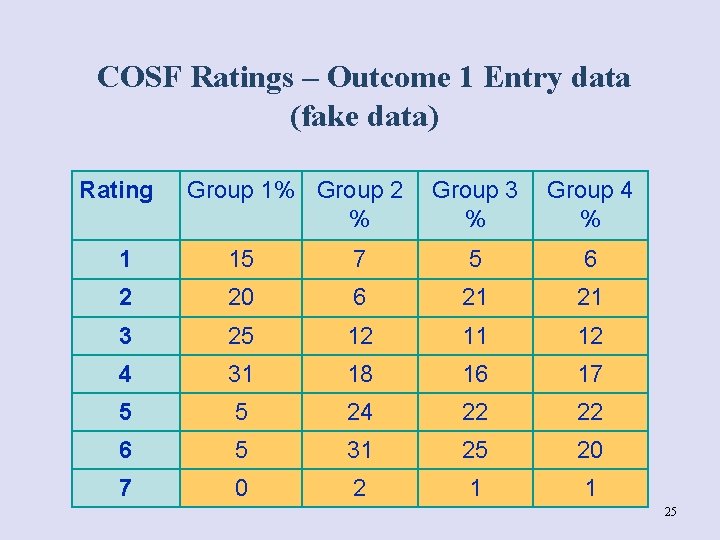

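COSF Ratings – Outcome 1 Entry data (fake data)

| Rating | Group 1 % | Group 2 % | Group 3 % | Group 4 % |
|---|---|---|---|---|
| 1 | 15 | 7 | 5 | 6 |
| 2 | 20 | 6 | 21 | 21 |
| 3 | 25 | 12 | 11 | 12 |
| 4 | 31 | 18 | 16 | 17 |
| 5 | 5 | 24 | 22 | 22 |
| 6 | 5 | 31 | 25 | 20 |
| 7 | 0 | 2 | 1 | 1 |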
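The groups differ in size (204, 168, 204, and 197 children in the fake count table), so comparing raw counts can mislead; the percentage table divides each count by its own group's total. A sketch of that step in Python, reusing the fake counts; `to_percent` is a hypothetical helper and half-up rounding is an assumption.

```python
from decimal import Decimal, ROUND_HALF_UP

def to_percent(n, total):
    """Whole-number percentage, rounded half up (assumed rounding rule)."""
    return int((Decimal(100) * n / total).quantize(Decimal("1"), rounding=ROUND_HALF_UP))

# Fake Outcome 1 entry counts: one list per group, for COSF ratings 1..7.
groups = {
    "Group 1": [30, 40, 50, 64, 10, 10, 0],
    "Group 2": [11, 10, 20, 31, 40, 52, 4],
    "Group 3": [10, 42, 23, 32, 45, 50, 2],
    "Group 4": [12, 42, 23, 34, 44, 40, 2],
}

group_percents = {}
for name, counts in groups.items():
    total = sum(counts)  # each group's percentages use that group's own total
    group_percents[name] = [to_percent(n, total) for n in counts]
    print(name, total, group_percents[name])
```

Percentages within each group make the shapes of the distributions comparable; for example, Group 1 is concentrated at ratings 1 to 4, while Groups 2 to 4 have more children at 5 and 6.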

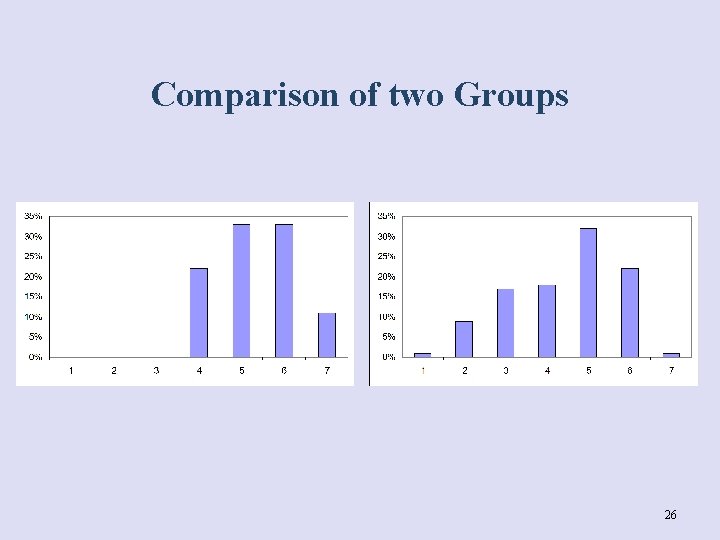

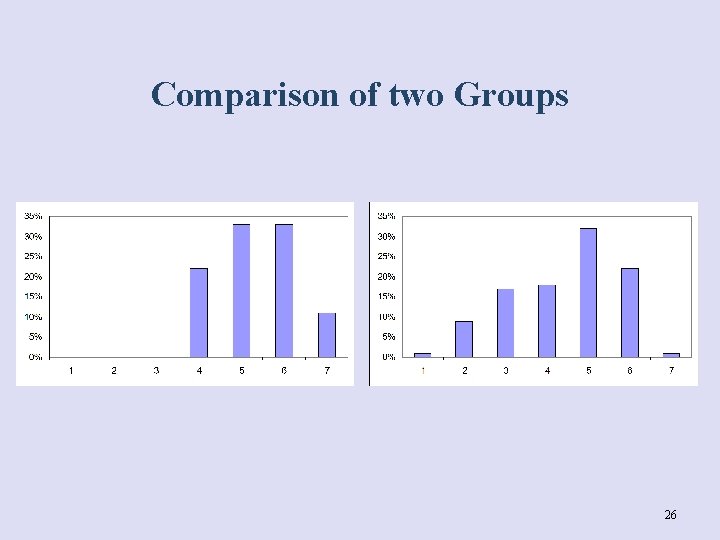

Comparison of Two Groups

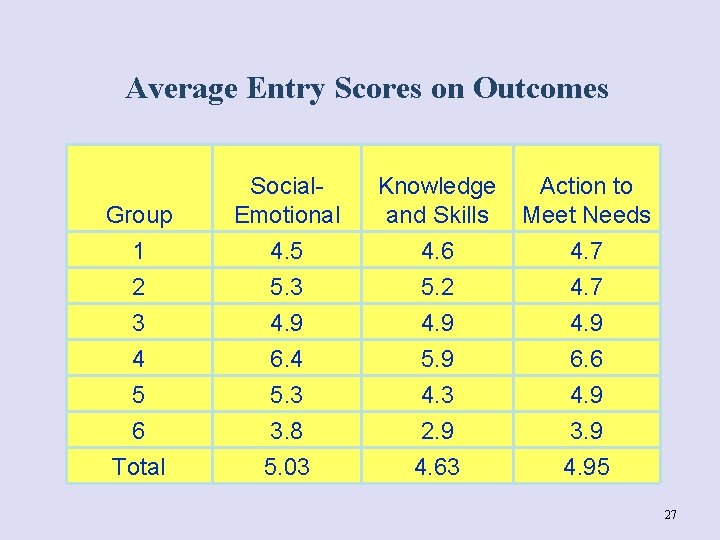

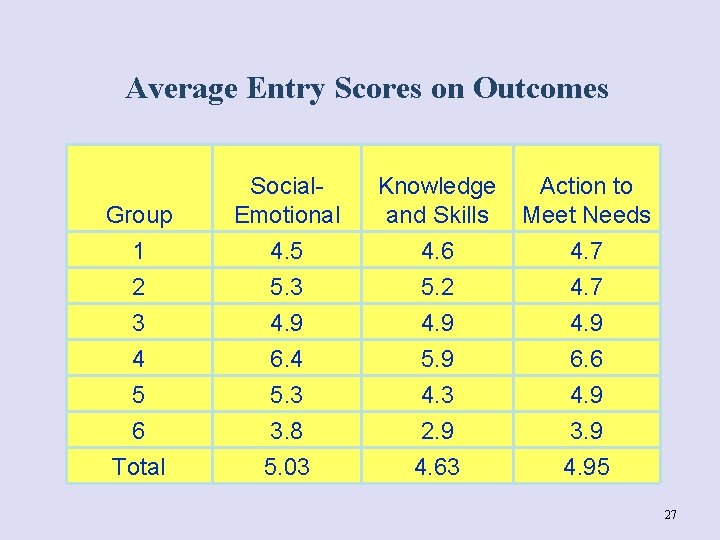

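Average Entry Scores on Outcomes

| Outcome | Group 1 | Group 2 | Group 3 | Group 4 | Group 5 | Group 6 | Total |
|---|---|---|---|---|---|---|---|
| Social-Emotional | 4.5 | 5.3 | 4.9 | 6.4 | 5.3 | 3.8 | 5.03 |
| Knowledge and Skills | 4.6 | 5.2 | 4.9 | 5.9 | 4.3 | 2.9 | 4.63 |
| Action to Meet Needs | 4.7 | 4.7 | 4.9 | 6.6 | 4.9 | 3.9 | 4.95 |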
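The Total column appears to be the simple (unweighted) mean of the six group averages: for Social-Emotional, (4.5 + 5.3 + 4.9 + 6.4 + 5.3 + 3.8) / 6 ≈ 5.03. A short Python sketch that reproduces the Social-Emotional and Knowledge and Skills totals under that assumption:

```python
from statistics import mean

# Average entry ratings for Groups 1-6 (fake data) from the table above.
group_averages = {
    "Social-Emotional": [4.5, 5.3, 4.9, 6.4, 5.3, 3.8],
    "Knowledge and Skills": [4.6, 5.2, 4.9, 5.9, 4.3, 2.9],
}

# ASSUMPTION: "Total" is the unweighted mean of the group averages,
# so each group counts equally regardless of how many children it has.
totals = {outcome: round(mean(values), 2) for outcome, values in group_averages.items()}
print(totals)  # {'Social-Emotional': 5.03, 'Knowledge and Skills': 4.63}
```

If the groups serve very different numbers of children, the average over all children (each group average weighted by its group size) can differ noticeably from this unweighted Total.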

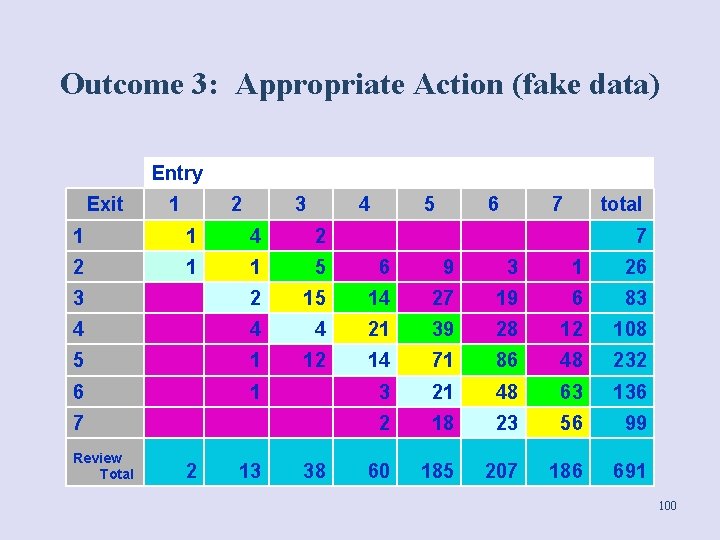

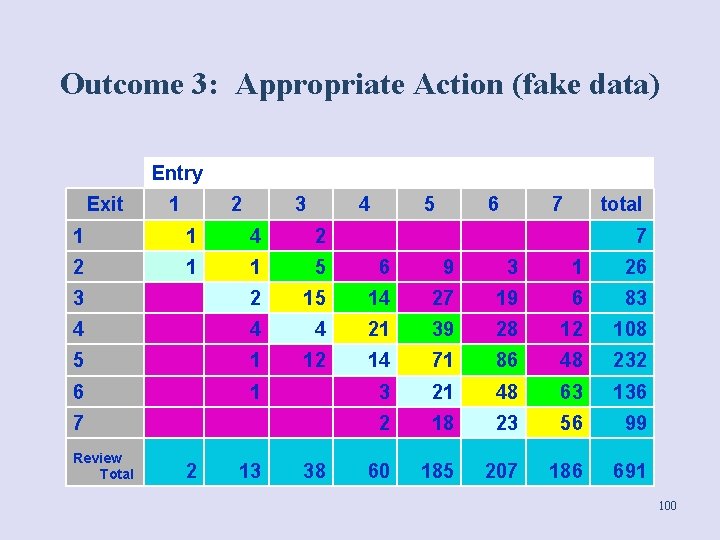

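Outcome 3: Appropriate Action (fake data): entry rating (rows) by exit rating (columns)

| Entry rating | Exit 1 | Exit 2 | Exit 3 | Exit 4 | Exit 5 | Exit 6 | Exit 7 | Total |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 4 | 2 | | | | | 7 |
| 2 | 1 | 1 | 5 | 6 | 9 | 3 | 1 | 26 |
| 3 | | 2 | 15 | 14 | 27 | 19 | 6 | 83 |
| 4 | | 4 | 4 | 21 | 39 | 28 | 12 | 108 |
| 5 | | 1 | 12 | 14 | 71 | 86 | 48 | 232 |
| 6 | | | 1 | 3 | 21 | 48 | 63 | 136 |
| 7 | | | | 2 | 18 | 23 | 56 | 99 |
| Total | 2 | 13 | 38 | 60 | 185 | 207 | 186 | 691 |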
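A table like this is a cross-tabulation of each child's entry rating against the same child's exit rating. A minimal sketch of building one from child-level records with only the Python standard library; the records below are hypothetical, not the slide's data, and flagging children rated lower at exit than at entry is an illustrative data-quality check rather than a rule stated in the source.

```python
from collections import Counter

RATINGS = range(1, 8)  # COSF 7-point scale

def crosstab(pairs):
    """Count children by (entry, exit) rating and compute row and column totals."""
    cells = Counter(pairs)  # missing (entry, exit) pairs count as 0
    table = {e: {x: cells[(e, x)] for x in RATINGS} for e in RATINGS}
    row_totals = {e: sum(row.values()) for e, row in table.items()}
    col_totals = {x: sum(table[e][x] for e in RATINGS) for x in RATINGS}
    return table, row_totals, col_totals

# Hypothetical child-level records: (entry rating, exit rating).
records = [(2, 1), (2, 4), (3, 5), (3, 5), (5, 6), (6, 6), (7, 5)]
table, row_totals, col_totals = crosstab(records)

# Children rated lower at exit than at entry: a possible flag for data review.
declined = sum(1 for entry, exit_ in records if exit_ < entry)

print(row_totals[3], col_totals[5], declined)  # 2 3 2
```

Row totals answer "how were children distributed at entry?", column totals answer the same question at exit, and the cells show individual movement on the scale.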

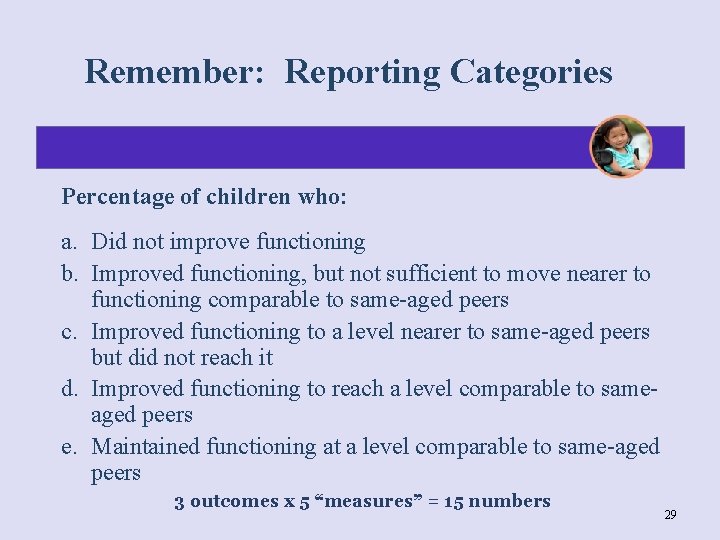

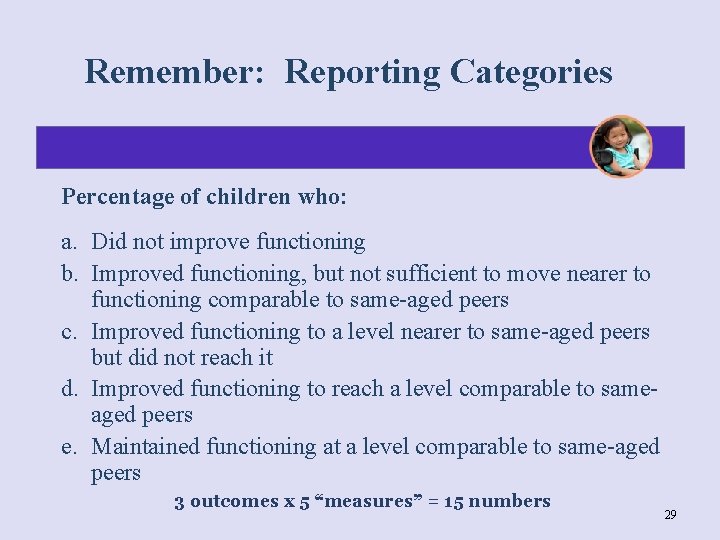

Remember: Reporting Categories
Percentage of children who:
- a. Did not improve functioning
- b. Improved functioning, but not sufficient to move nearer to functioning comparable to same-aged peers
- c. Improved functioning to a level nearer to same-aged peers but did not reach it
- d. Improved functioning to reach a level comparable to same-aged peers
- e. Maintained functioning at a level comparable to same-aged peers

3 outcomes × 5 “measures” = 15 numbers
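Each child's category is derived from the entry and exit COSF ratings plus whether the child showed any new skills or behaviors. The function below is a simplified sketch of that conversion. The rules it encodes are an assumption based on the commonly described ECO approach (a: no new skills; e: rated 6 or higher at both entry and exit; d: below 6 at entry, 6 or higher at exit; c: moved up but still below 6; b: otherwise), so check official ECO guidance before relying on it.

```python
def osep_category(entry, exit_, new_skills):
    """Map entry/exit COSF ratings (1-7) to an OSEP progress category 'a'-'e'.

    ASSUMPTION: simplified version of the commonly described ECO rules.
    new_skills: did the child show any new skills or behaviors by exit?
    """
    if not (1 <= entry <= 7 and 1 <= exit_ <= 7):
        raise ValueError("COSF ratings must be between 1 and 7")
    if not new_skills:
        return "a"  # did not improve functioning
    if entry >= 6 and exit_ >= 6:
        return "e"  # maintained functioning comparable to same-aged peers
    if exit_ >= 6:
        return "d"  # entered below age expectations, reached age level
    if exit_ > entry:
        return "c"  # moved nearer to same-aged peers but did not reach them
    return "b"      # improved, but not enough to move nearer to peers

print([osep_category(e, x, True) for e, x in [(3, 5), (4, 6), (6, 7), (4, 4)]])
# ['c', 'd', 'e', 'b']
```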

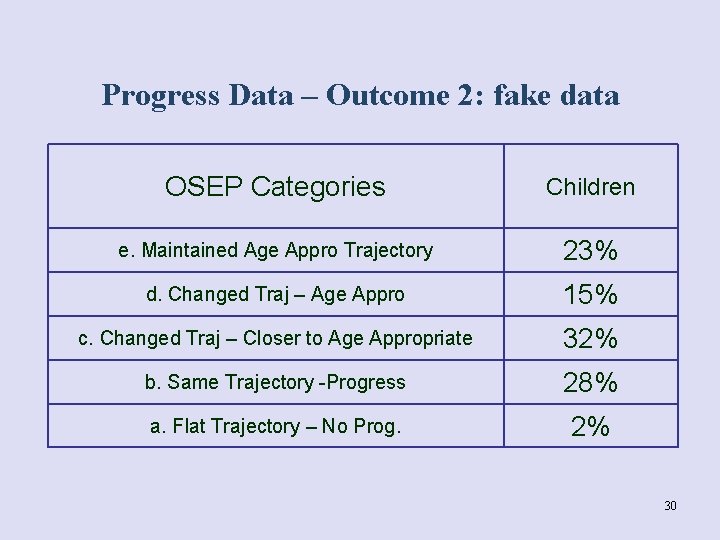

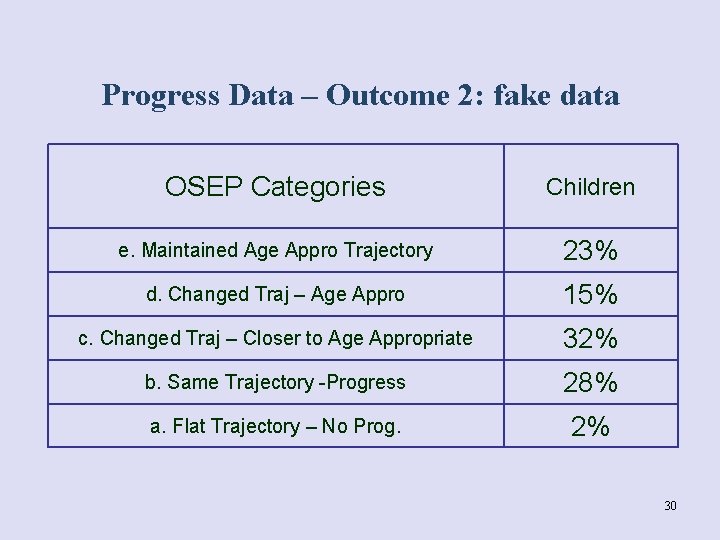

Progress Data – Outcome 2 (fake data)

| OSEP category | Children |
|---|---|
| e. Maintained age-appropriate trajectory | 23% |
| d. Changed trajectory: age-appropriate | 15% |
| c. Changed trajectory: closer to age-appropriate | 32% |
| b. Same trajectory: progress | 28% |
| a. Flat trajectory: no progress | 2% |

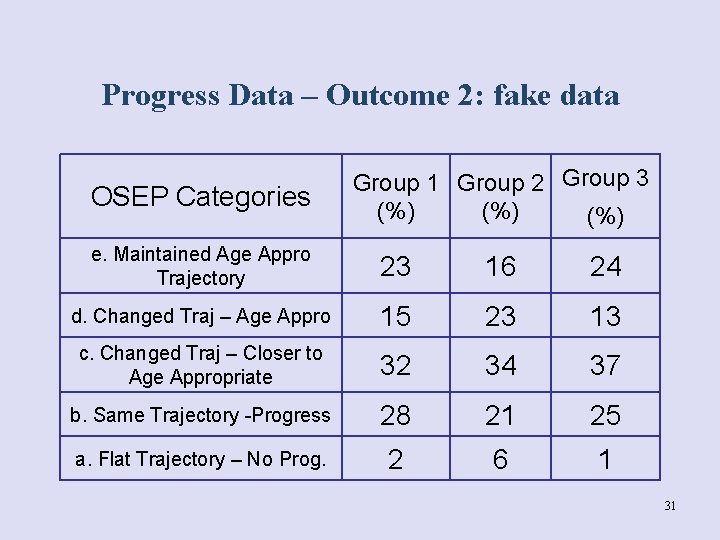

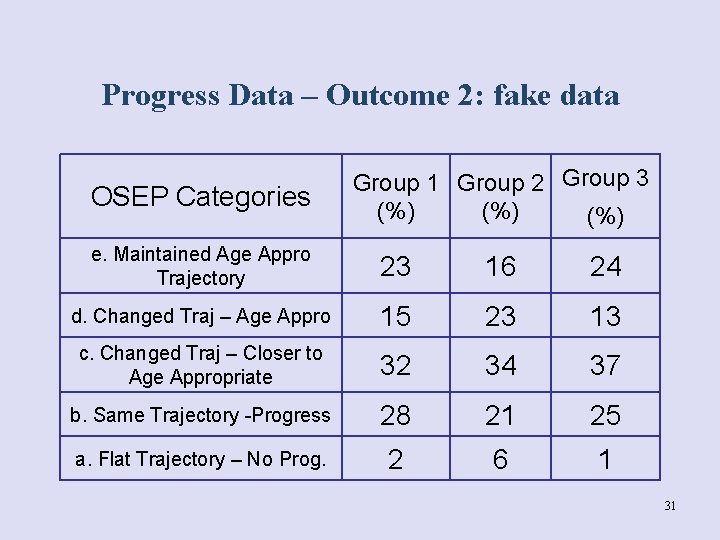

Progress Data – Outcome 2 (fake data)

| OSEP category | Group 1 (%) | Group 2 (%) | Group 3 (%) |
|---|---|---|---|
| e. Maintained age-appropriate trajectory | 23 | 16 | 24 |
| d. Changed trajectory: age-appropriate | 15 | 23 | 13 |
| c. Changed trajectory: closer to age-appropriate | 32 | 34 | 37 |
| b. Same trajectory: progress | 28 | 21 | 25 |
| a. Flat trajectory: no progress | 2 | 6 | 1 |

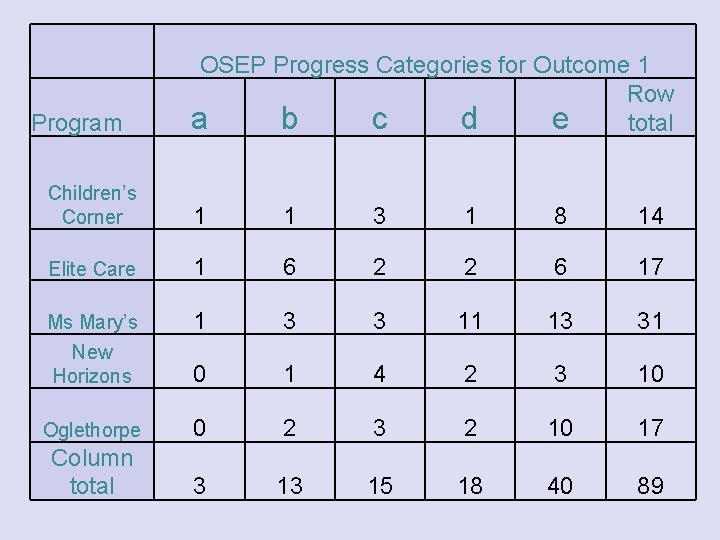

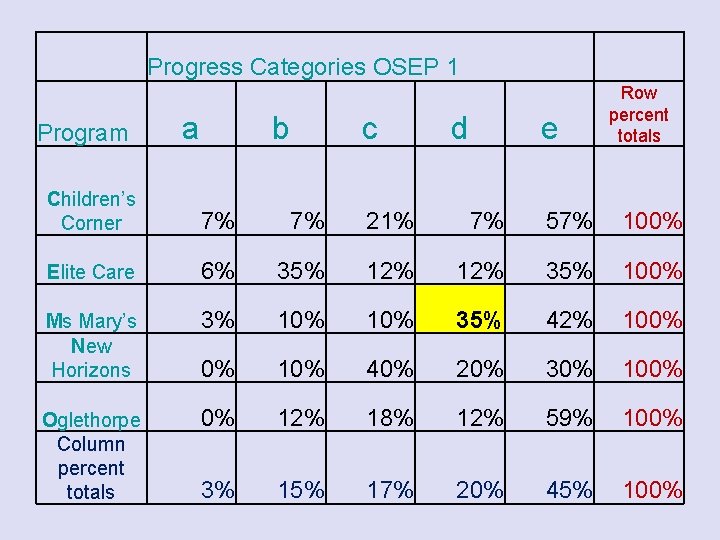

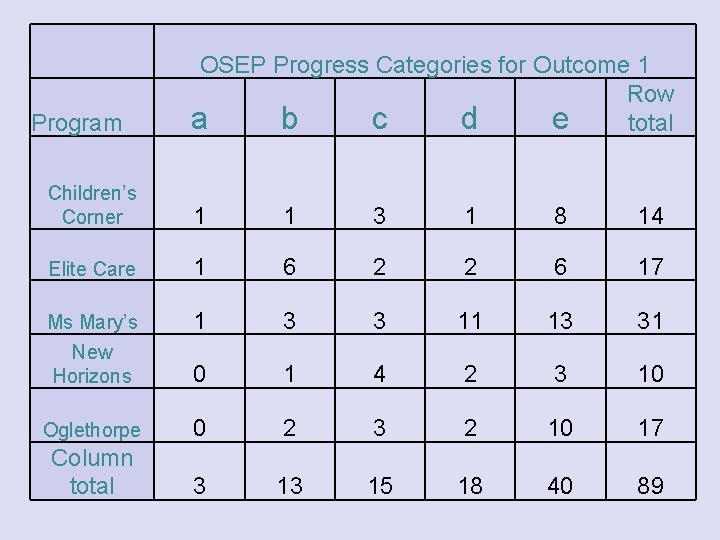

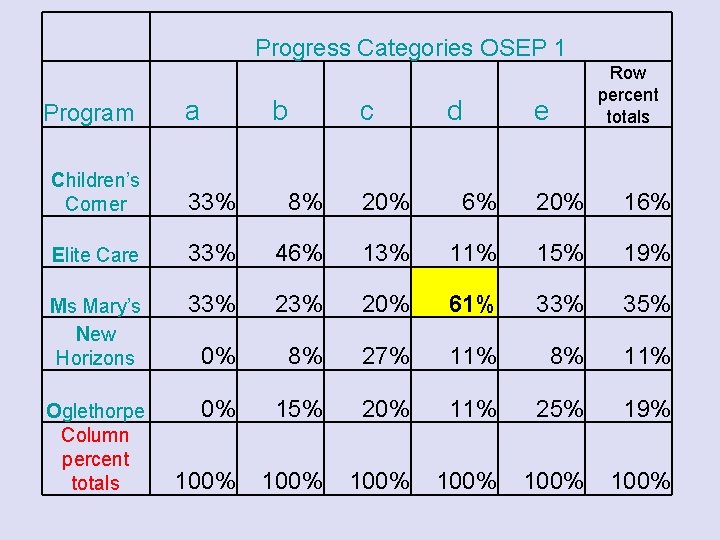

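OSEP Progress Categories for Outcome 1, by program (counts)

| Program | a | b | c | d | e | Row total |
|---|---|---|---|---|---|---|
| Children’s Corner | 1 | 1 | 3 | 1 | 8 | 14 |
| Elite Care | 1 | 6 | 2 | 2 | 6 | 17 |
| Ms Mary’s | 1 | 3 | 3 | 11 | 13 | 31 |
| New Horizons | 0 | 1 | 4 | 2 | 3 | 10 |
| Oglethorpe | 0 | 2 | 3 | 2 | 10 | 17 |
| Column total | 3 | 13 | 15 | 18 | 40 | 89 |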

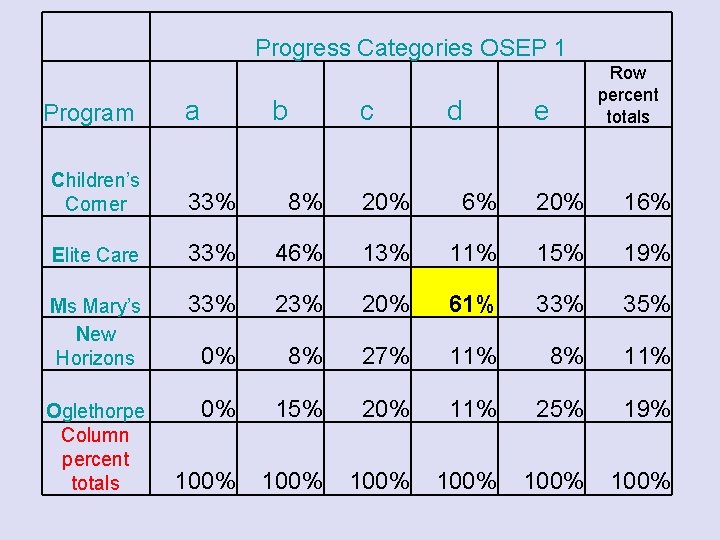

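OSEP Progress Categories for Outcome 1, by program (column percents)

| Program | a | b | c | d | e | Row percent totals |
|---|---|---|---|---|---|---|
| Children’s Corner | 33% | 8% | 20% | 6% | 20% | 16% |
| Elite Care | 33% | 46% | 13% | 11% | 15% | 19% |
| Ms Mary’s | 33% | 23% | 20% | 61% | 33% | 35% |
| New Horizons | 0% | 8% | 27% | 11% | 8% | 11% |
| Oglethorpe | 0% | 15% | 20% | 11% | 25% | 19% |
| Column percent totals | 100% | 100% | 100% | 100% | 100% | 100% |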

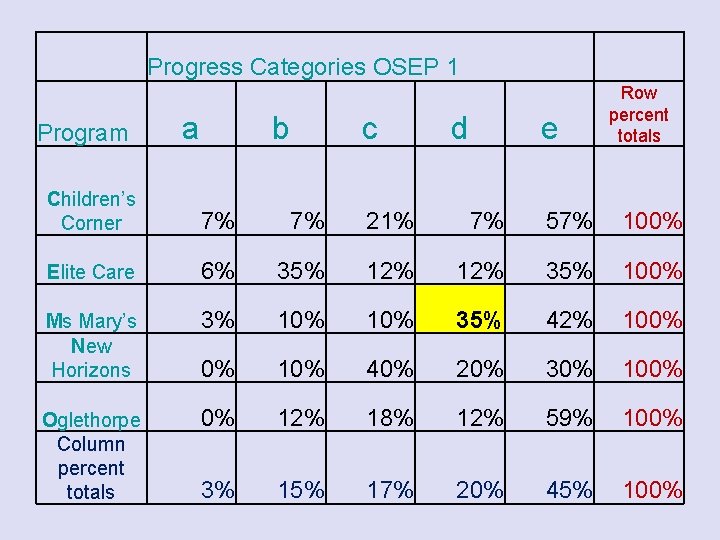

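OSEP Progress Categories for Outcome 1, by program (row percents)

| Program | a | b | c | d | e | Row percent totals |
|---|---|---|---|---|---|---|
| Children’s Corner | 7% | 7% | 21% | 7% | 57% | 100% |
| Elite Care | 6% | 35% | 12% | 12% | 35% | 100% |
| Ms Mary’s | 3% | 10% | 10% | 35% | 42% | 100% |
| New Horizons | 0% | 10% | 40% | 20% | 30% | 100% |
| Oglethorpe | 0% | 12% | 18% | 12% | 59% | 100% |
| Column percent totals | 3% | 15% | 17% | 20% | 45% | 100% |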
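Row percents divide each cell by its program's total (how one program's children are spread across categories a to e); column percents divide by the category total (which programs a category's children come from). A Python sketch computing both from the fake counts, under the assumption that values are rounded half up to whole percents; names such as `pct` and `row_pct` are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

CATEGORIES = "abcde"

# Fake counts of children by program and OSEP progress category (Outcome 1).
counts = {
    "Children's Corner": [1, 1, 3, 1, 8],
    "Elite Care": [1, 6, 2, 2, 6],
    "Ms Mary's": [1, 3, 3, 11, 13],
    "New Horizons": [0, 1, 4, 2, 3],
    "Oglethorpe": [0, 2, 3, 2, 10],
}

def pct(n, total):
    """Whole-number percentage, rounded half up."""
    return int((Decimal(100) * n / total).quantize(Decimal("1"), rounding=ROUND_HALF_UP))

# Row percents: each program's children spread across categories a-e.
row_pct = {prog: [pct(n, sum(row)) for n in row] for prog, row in counts.items()}

# Column percents: each category's children spread across programs.
col_totals = [sum(row[i] for row in counts.values()) for i in range(len(CATEGORIES))]
col_pct = {prog: [pct(n, col_totals[i]) for i, n in enumerate(row)]
           for prog, row in counts.items()}

# Distribution across categories for all programs combined.
all_programs_pct = [pct(t, sum(col_totals)) for t in col_totals]

d = CATEGORIES.index("d")
print(row_pct["Ms Mary's"][d], all_programs_pct[d])  # 35 20
```

The printed pair is the comparison made on the next slide: 35% of Ms Mary’s children in category d versus 20% across all programs.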

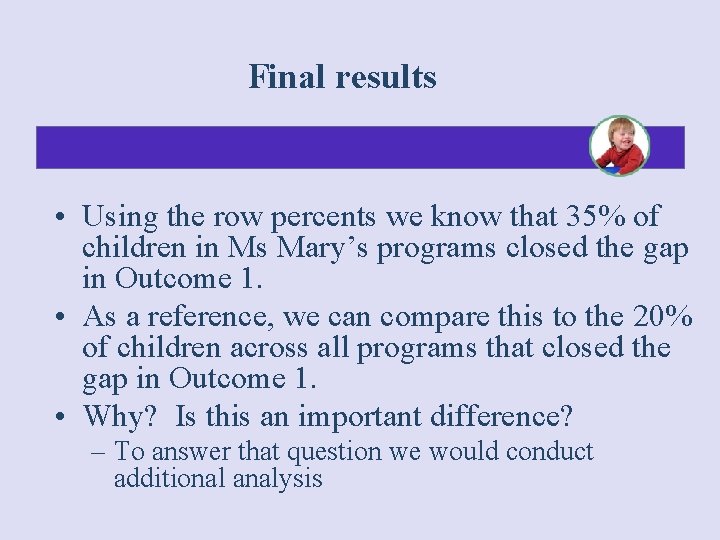

Final results • Using the row percents, we know that 35% of children in Ms Mary’s program closed the gap (category d) in Outcome 1. • As a reference, we can compare this to the 20% of children across all programs who closed the gap in Outcome 1. • Why the difference? Is it an important difference? – To answer those questions, we would conduct additional analysis

Questions to ask • Do the data make sense? – Am I surprised? Do I believe the data? Some of the data? All of the data? • If the data are reasonable (or when they become reasonable), what might they tell us?

Examining COSF data at one time point • One group – Frequency distribution – Tables – Graphs • Comparing groups – Graphs – Averages

What we’ve looked at. Do outcomes vary by: • Unit/District/Program? • Rating at entry? • Amount of movement on the scale? • Percentage in the various progress categories?

What else might you want to look at? Do outcomes vary by child/family variables or by service variables, e.g.: • Services received? • Age at entry to service? • Type of services received? • Family outcomes? • Education level of parent?

Activity 1: Reviewing sample data

Small Groups • Break into small groups of about 5 • Walk through the state example, answering questions as you go • Whole group: share highlights of your conversations

Application: How could you use this type of data discussion in your training and TA? What experiences or resources do you have with discussing outcomes data in your training and TA?

Summary Statements

Origin of the Summary Statements • States reported on the OSEP Progress Categories for a few years • States knew they would be asked to set targets • Using the progress categories would require setting 15 targets…

Origin of the Summary Statements • ECO prepared papers with options • Convened stakeholders • Extensive discussion about pros and cons of various summary statements • See Options and ECO Recommendations for Summary Statements for Target Setting on the ECO web site: http://www.fpg.unc.edu/~eco/assets/pdfs/summary_of_target_setting-2.pdf

Summary Statements 1. Of those children who entered the program below age expectations in each Outcome, the percent who substantially increased their rate of growth by the time they turned 6 years of age or exited the program. 2. The percent of children who were functioning within age expectations in each Outcome by the time they turned 6 years of age or exited the program.

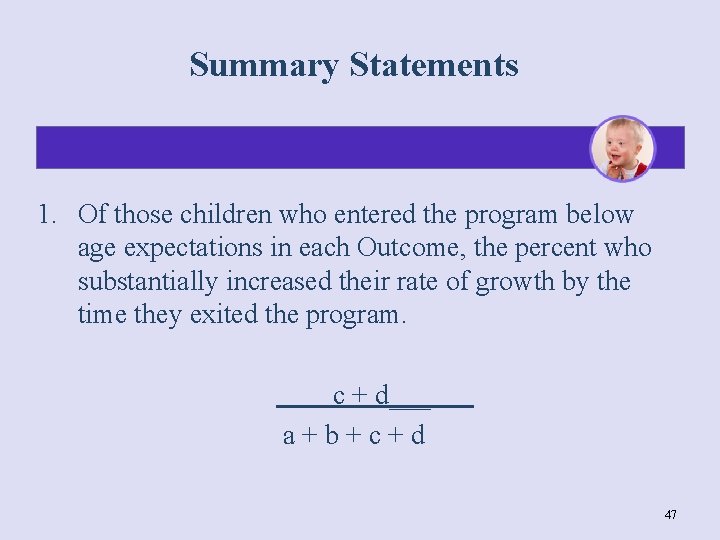

Summary Statements 1. Of those children who entered the program below age expectations in each Outcome, the percent who substantially increased their rate of growth by the time they exited the program: $\frac{c + d}{a + b + c + d}$
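Worked example: applying Summary Statement 1 to the fake Outcome 2 progress data shown earlier (a = 2%, b = 28%, c = 32%, d = 15%) gives (32 + 15) / (2 + 28 + 32 + 15) = 47 / 77 ≈ 61%. Category e is left out of both numerator and denominator because those children entered at age expectations. Category percentages can stand in for counts because the common total cancels. A minimal Python sketch; the function name is hypothetical.

```python
def summary_statement_1(a, b, c, d):
    """Percent of children who entered below age expectations (a + b + c + d)
    who substantially increased their rate of growth (c + d)."""
    below_at_entry = a + b + c + d
    if below_at_entry == 0:
        raise ValueError("no children entered below age expectations")
    return 100 * (c + d) / below_at_entry

# Fake Outcome 2 progress data (percent of children in categories a-d).
print(round(summary_statement_1(a=2, b=28, c=32, d=15), 1))  # 61.0
```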

Other Ways to Think about Summary Statement 1 • How many children changed growth trajectories during their time in the program? • Percent of the children who entered the program below age expectations who made greater-than-expected gains, i.e., made substantial increases in their rates of growth and changed their growth trajectories

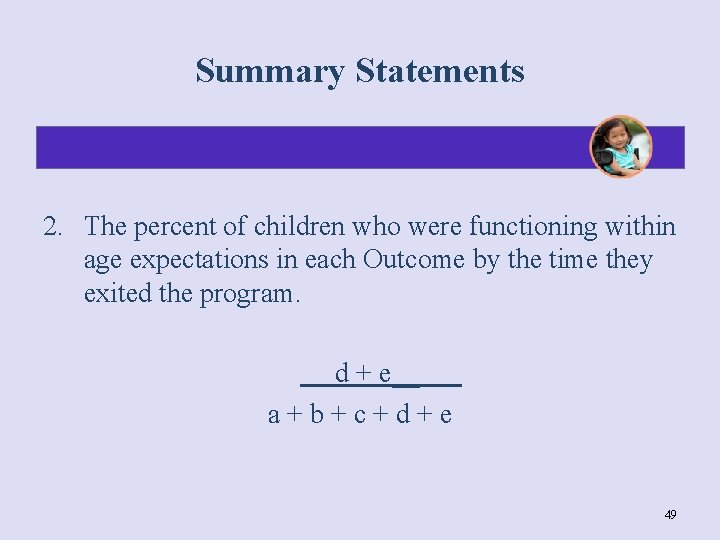

Summary Statements 2. The percent of children who were functioning within age expectations in each Outcome by the time they exited the program: $\frac{d + e}{a + b + c + d + e}$
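Worked example with the same fake Outcome 2 data (a = 2%, b = 28%, c = 32%, d = 15%, e = 23%): (15 + 23) / 100 = 38%. Unlike Summary Statement 1, the denominator includes every child, category e included. A minimal Python sketch; the function name is hypothetical.

```python
def summary_statement_2(a, b, c, d, e):
    """Percent of all children (a-e) who exited functioning within age expectations (d + e)."""
    total = a + b + c + d + e
    if total == 0:
        raise ValueError("no children in the progress data")
    return 100 * (d + e) / total

# Fake Outcome 2 progress data (percent of children in categories a-e).
print(summary_statement_2(a=2, b=28, c=32, d=15, e=23))  # 38.0
```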

Other Ways to Think about Summary Statement 2 • How many children were functioning like same-aged peers when they left the program? • Percent of the children who were functioning at age expectations in this outcome area when they exited the program, including those who: – started out behind and caught up, and – entered and exited at age level

The connection: COSF ratings → OSEP categories → Summary Statements

National and Texas Data

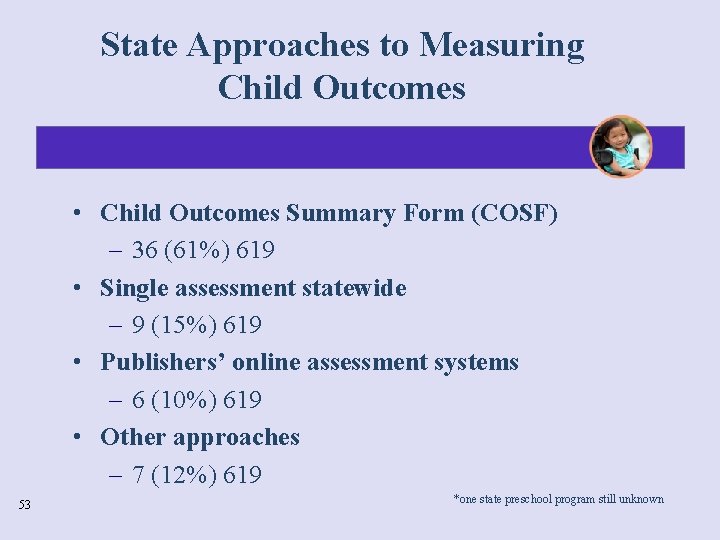

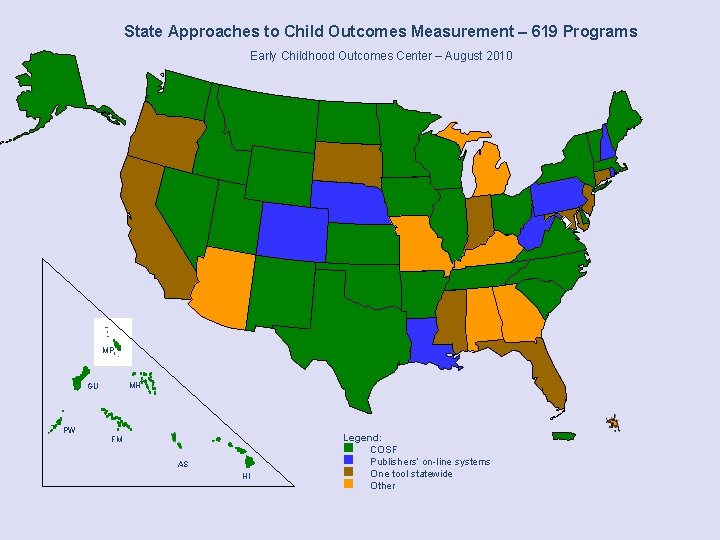

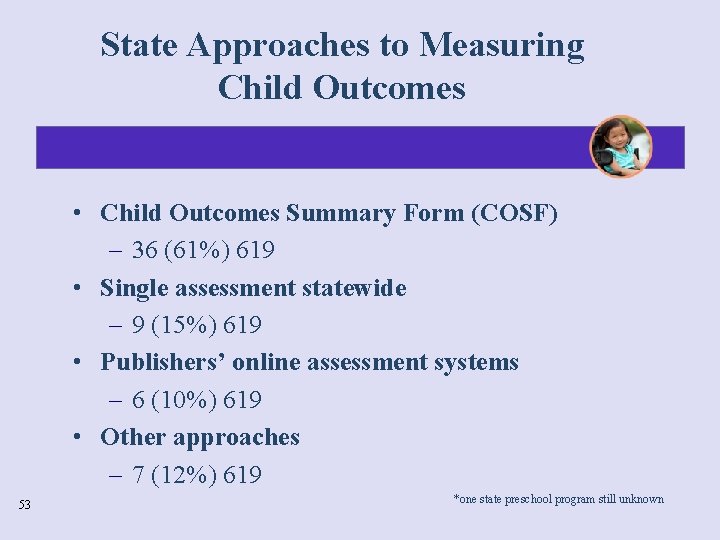

State Approaches to Measuring Child Outcomes (619 programs*) • Child Outcomes Summary Form (COSF): 36 (61%) • Single assessment statewide: 9 (15%) • Publishers’ online assessment systems: 6 (10%) • Other approaches: 7 (12%) *One state preschool program still unknown

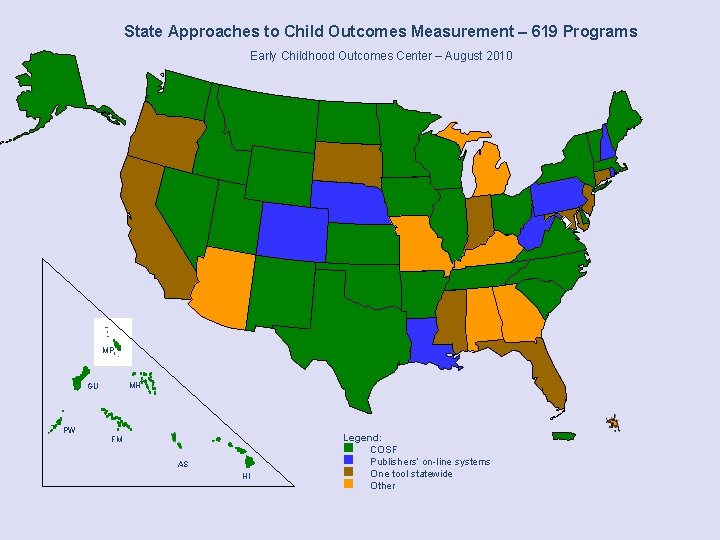

Map: State Approaches to Child Outcomes Measurement – 619 Programs (Early Childhood Outcomes Center, August 2010). Legend: COSF; Publishers’ online systems; One tool statewide; Other

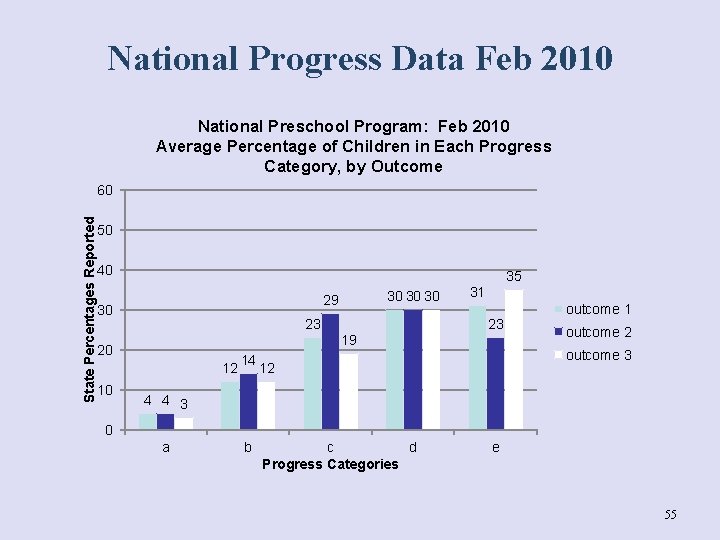

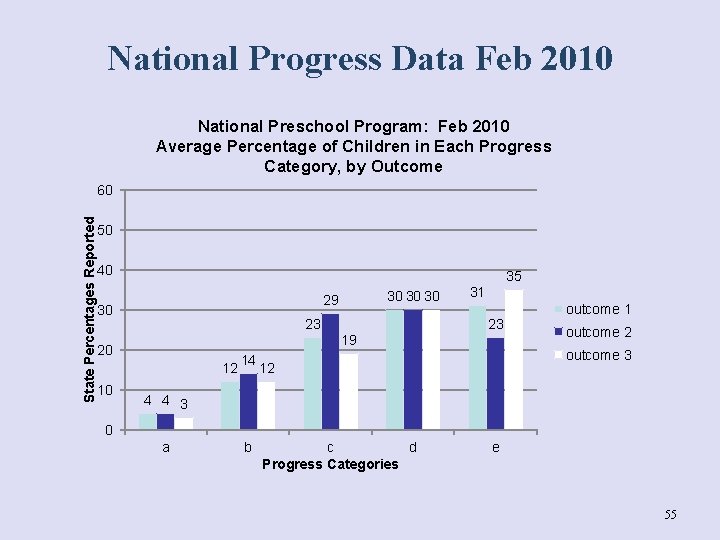

Chart: National Progress Data, Feb 2010. National Preschool Program: average percentage of children in each progress category (a–e), by outcome (outcomes 1–3), from state-reported percentages

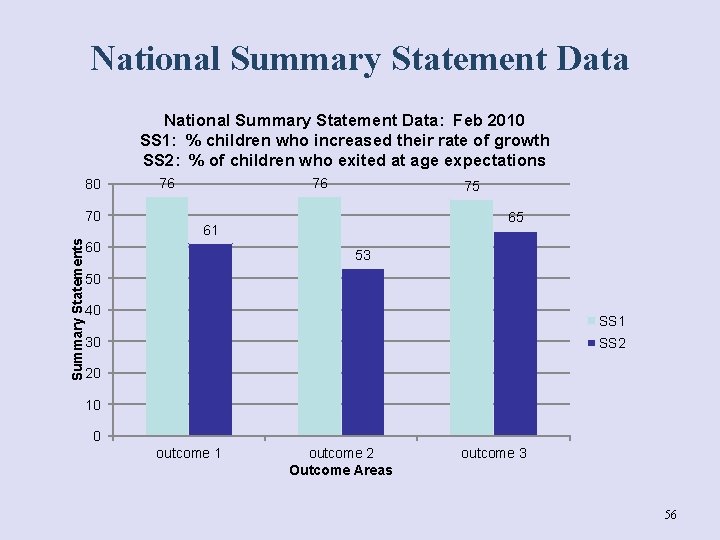

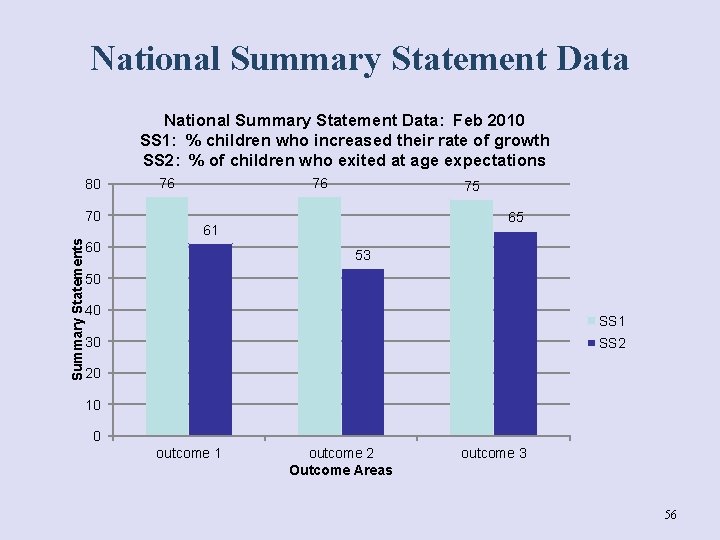

Chart: National Summary Statement Data, Feb 2010. SS 1: % of children who increased their rate of growth; SS 2: % of children who exited at age expectations; by outcome area (outcomes 1–3)

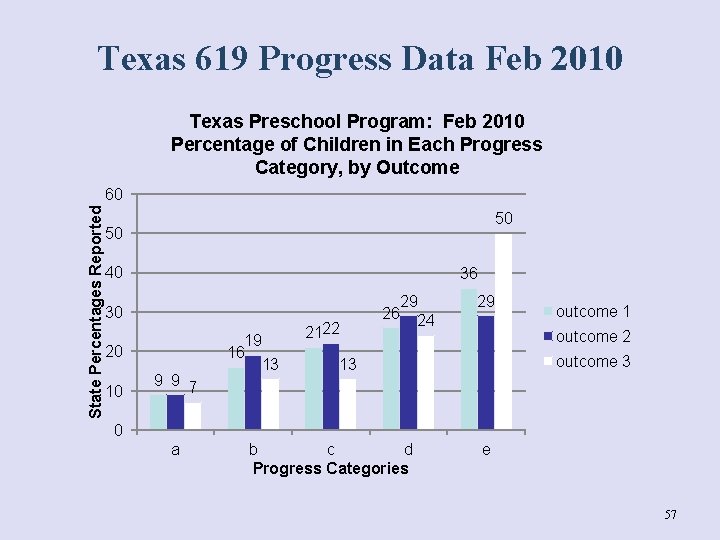

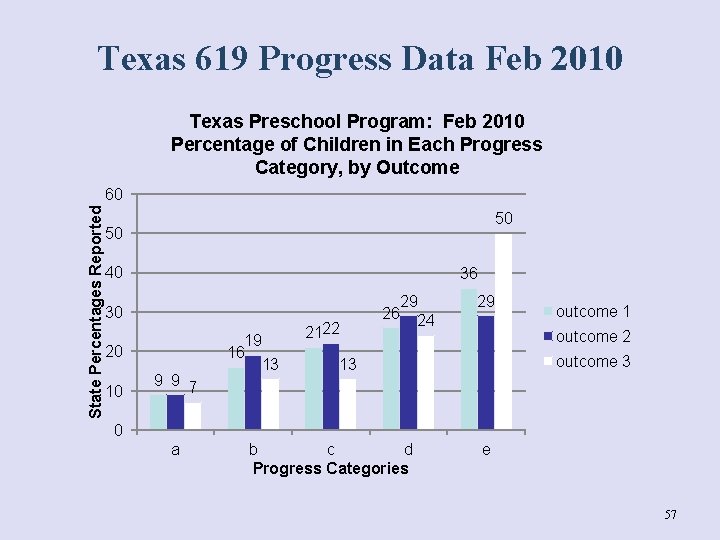

Chart: Texas 619 Progress Data, Feb 2010. Texas Preschool Program: percentage of children in each progress category (a–e), by outcome (outcomes 1–3), from state-reported percentages

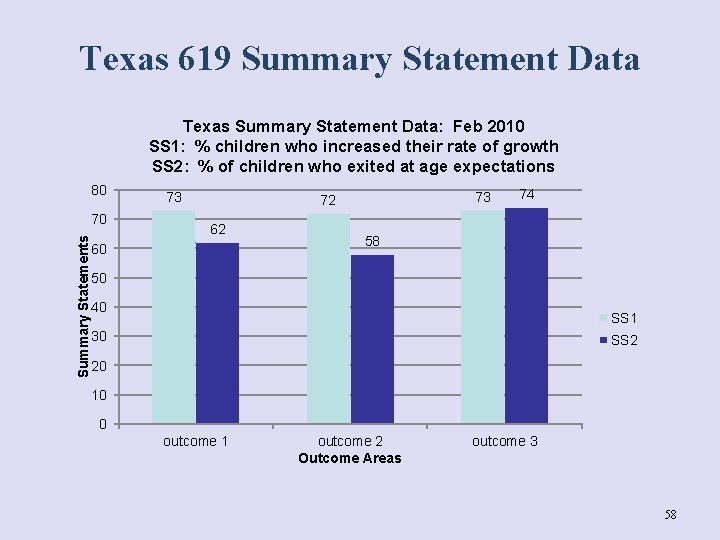

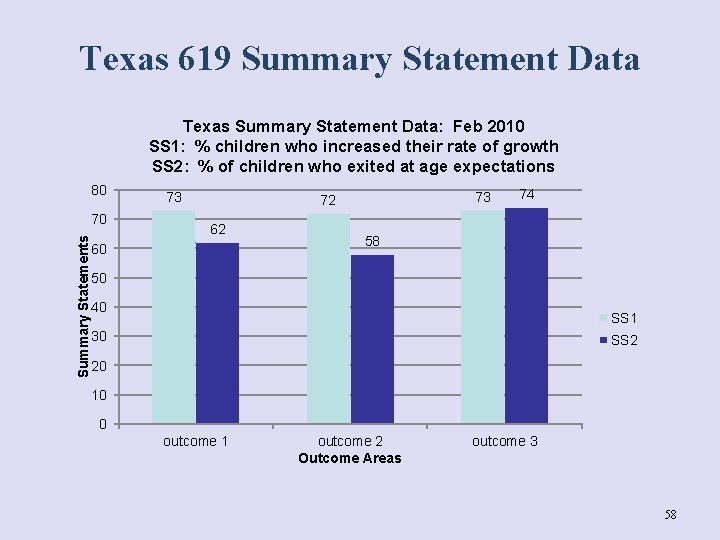

Chart: Texas 619 Summary Statement Data, Feb 2010. SS 1: % of children who increased their rate of growth; SS 2: % of children who exited at age expectations; by outcome area (outcomes 1–3)

Activity 2: Texas statewide and regional data

Small Group Instructions: 1. Review Texas statewide data 2. Review regional data (comparing regions to one another and to the state) 3. Discuss: – What surprises you about the data? – What questions do the data raise? – What additional data collection or analysis would you do to dig deeper? 4. “Gallery Walk”: Record your small group’s best ideas on a sheet to be posted and shared with the whole group

Application: How could you use this type of activity in your training and TA? What experiences or resources do you have with discussing outcomes data in your training and TA?

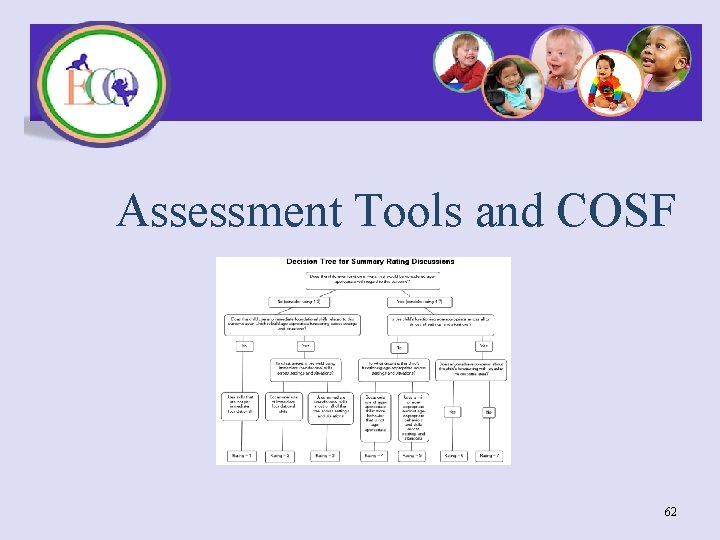

Assessment Tools and COSF

Recap from March: Assessment • Assessment (more debrief on this after lunch) • No assessment created for this outcomes process • Best practices on assessment = multiple data sources • Types of assessment, including pros and cons • Benefits of limiting assessments for COSF • Selecting tools for the COSF process • Activity: reviewing assessment tools and identifying strengths, weaknesses, and how each fits with the COSF process

Selecting and implementing good formal assessments as an essential component of good child outcomes measurement. Assessment considerations in reporting child outcomes data: a. No assessment developed for this purpose b. No ‘perfect’ assessment c. Formal assessment is one piece of information d. Formal assessment can provide consistency across teachers/providers, programs, and the state e. Formal assessment can ground teachers/providers in age expectations

DEC recommended practices on early childhood assessment 1. Professionals and families collaborate in planning and implementing assessment. 2. Assessment is individualized and appropriate for the child and family. 3. Assessment provides useful information for intervention. 4. Professionals share information in respectful and useful ways. 5. Professionals meet legal and procedural requirements and meet recommended practice guidelines.

Types of Assessment • Norm-referenced instrument • Criterion-referenced instrument • Curriculum-based instrument • Direct observation • Progress monitoring • Parent or professional report (and any combination of the above)

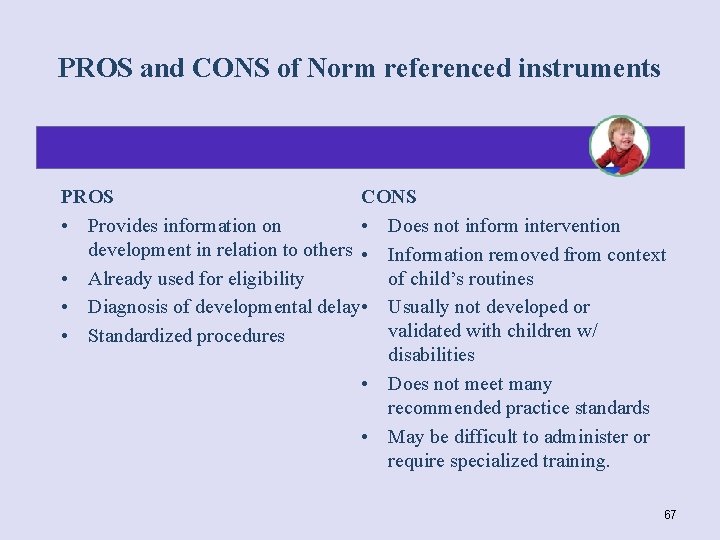

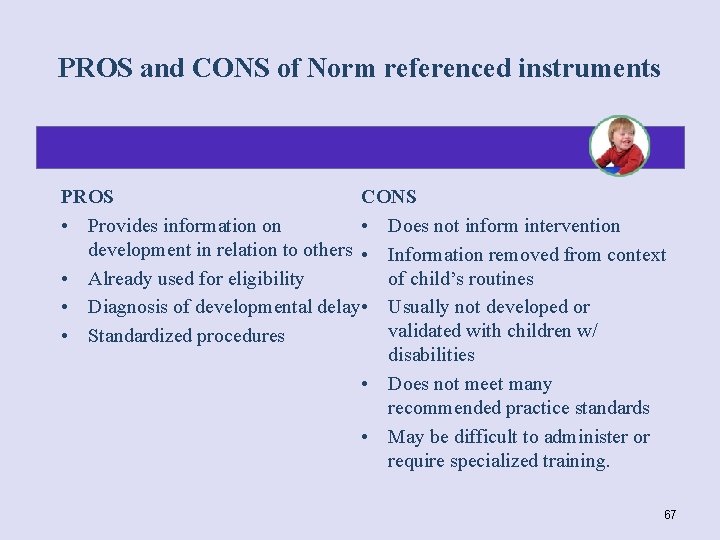

PROS and CONS of Norm-referenced instruments PROS • Provides information on development in relation to others • Already used for eligibility • Diagnosis of developmental delay • Standardized procedures CONS • Does not inform intervention • Information removed from the context of the child’s routines • Usually not developed or validated with children with disabilities • Does not meet many recommended practice standards • May be difficult to administer or require specialized training

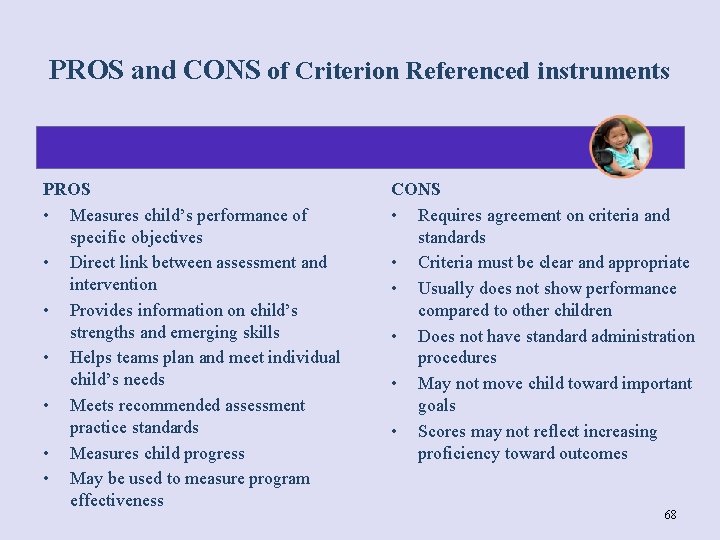

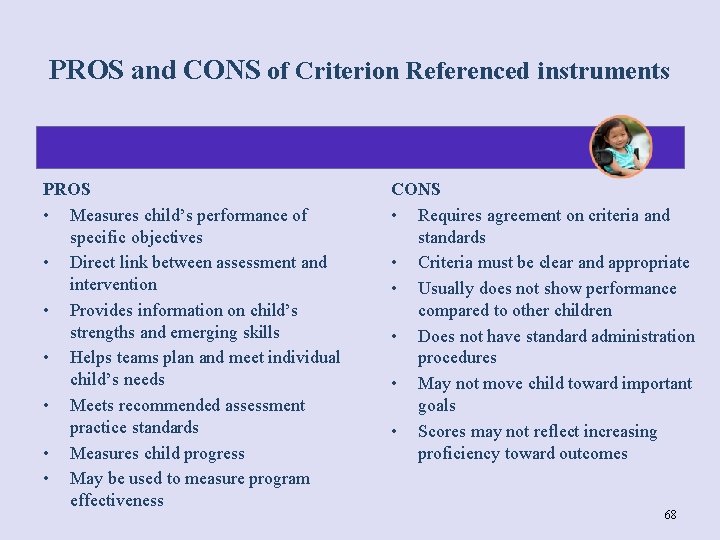

PROS and CONS of Criterion-referenced instruments PROS • Measures child’s performance of specific objectives • Direct link between assessment and intervention • Provides information on child’s strengths and emerging skills • Helps teams plan and meet individual child’s needs • Meets recommended assessment practice standards • Measures child progress • May be used to measure program effectiveness CONS • Requires agreement on criteria and standards • Criteria must be clear and appropriate • Usually does not show performance compared to other children • Does not have standard administration procedures • May not move child toward important goals • Scores may not reflect increasing proficiency toward outcomes

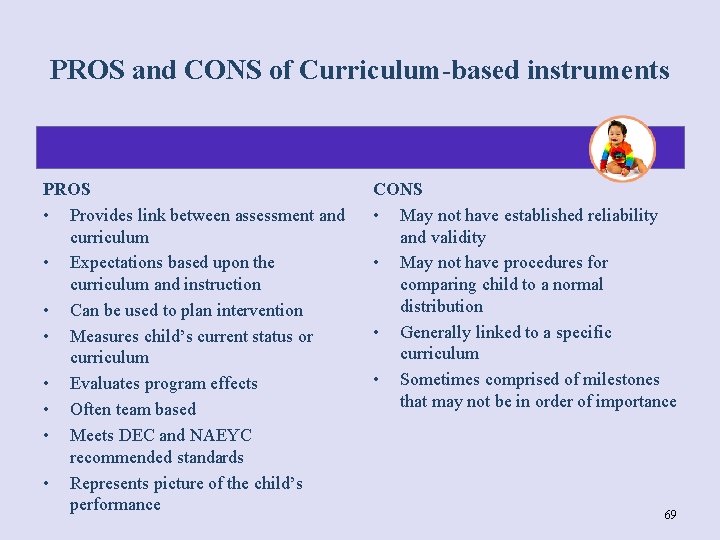

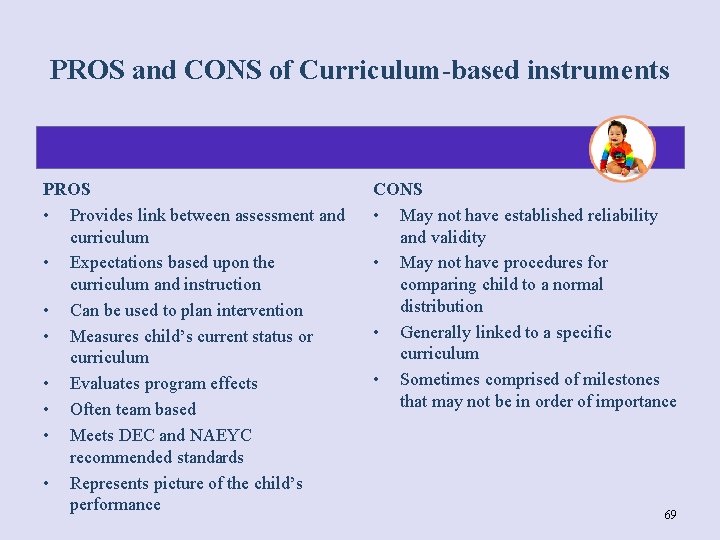

PROS and CONS of Curriculum-based instruments PROS • Provides link between assessment and curriculum • Expectations based upon the curriculum and instruction • Can be used to plan intervention • Measures child’s current status or curriculum • Evaluates program effects • Often team based • Meets DEC and NAEYC recommended standards • Represents picture of the child’s performance CONS • May not have established reliability and validity • May not have procedures for comparing child to a normal distribution • Generally linked to a specific curriculum • Sometimes made up of milestones that may not be in order of importance

Benefits of limiting assessment tools used for COSF • Ensure use of quality assessments as a foundation for COSF • Increase consistency across individuals and programs (ensure the quality of the data) • Reduce the cost and resources it takes to train and support many tools • Other benefits?

What criteria to consider when selecting tools for use with COSF • How well does it cover the 3 outcome areas? • How functional is the information collected about the child? • Does the instrument allow a child to show their skills and behaviors in natural settings and situations? • Does the instrument incorporate observation, parent input, or other sources? • Is the instrument limited to an ideal testing situation?

How’s it going? Successes? Challenges? Next steps?

Activity 3: Reviewing data on assessments used with COSFs

Small Group Instructions: 1. Review data on assessments used with COSFs 2. Discuss: – What do the data say? What stands out for you? – What might these data mean? What questions do they raise? What next steps might be taken? 3. Share back with the whole group

Application: How could you use an activity like this in your training and TA? What experiences or resources do you have about assessment that you already use in your training and TA?

Communicating Effectively with the Media and Public about Child Outcomes Data

Being prepared… • How will we talk about the child outcomes data with: – The media – State legislators – State agency heads – Families – Early intervention and 619 providers – State advisory councils – Other key stakeholders in your state

Being prepared means… • Thinking ahead about how to talk about the data • Writing out the specific messages you want to make (an internal ‘talking points’ memo) • Developing a 1–2 page fact sheet that summarizes the findings and your messages • Using public dissemination opportunities to get out key messages that will educate the public about your programs and their benefits

Being prepared means thinking about… • What audiences? • What do you want each audience to know about your program (including any recent changes in eligibility, system, etc.)? • What do you want each audience to know about the data?

Being prepared means… • Identifying key spokespersons • Being thoroughly familiar with your state’s data • Practicing your talking points with individuals who are not familiar with the program

Crafting the messages: Set the context • Provide the context (federal reporting) • Use the ECO Center Q&A document** to explain: – What the child outcomes are – Why we are measuring and reporting outcomes – The ultimate goal: to enable young children to be active and successful participants during the early childhood years and in the future in a variety of settings, in their homes with their families, in child care, preschool or school programs, and in the community

Crafting the messages: Summary Statement #1 • Of those children who entered the program below age expectations in Outcome __, the percent who substantially increased their rate of growth by the time they turned 3/6 years of age or exited the program • Share the numbers; describe them in simple ways: – “Nearly two-thirds of the children made greater than expected progress while in the program.”

Crafting the messages: Summary Statement #2 • The percent of children who were functioning within age expectations in Outcome __ by the time they turned 3/6 years of age or exited the program • Share the numbers; describe them in simple ways: – “About half of the children were functioning like same-age peers when they left the program.”

Key issues in messaging the data… • How do we look ahead and become thoroughly prepared to present and explain the child outcomes data?

Anticipate Questions • What are 3 questions that different audiences may ask you about the child outcomes data? – Families – Legislators – Agency heads – State or local councils/boards – The media

Making the message understandable… How do you make the message easily understandable for the public? • Use “plain speak” • Don’t be repetitive • Explain how your data relate to the average person in your state • What are you saying about how the children are doing? • Discuss in terms of what is important to all families

Describe the numbers in simple ways… – “Nearly half the children made greater than expected progress while they were in the program.” – “About two-thirds of the children were performing like same-age peers when they left the program.” You can talk about more than the two Summary Statements.

Give YOUR interpretation of the numbers… • “We see these data as good news…” • “We are pleased that the data show that children in these programs are making progress between the time they enter and leave these programs…” • “Many children are catching up with peers in the same age group…”

Share other key messages to educate your audiences… • “These programs serve many different children…” • “Some children have mild delays or problems in one area only. These are children who can ‘catch up.’” • “Other children have more significant disabilities; some make substantial progress and others make less progress.”

Link messages to broader EC issues… • Point out how the program is helping get children ready for school. • Note that there is a lot of policy attention and research about the cost-effectiveness of early learning programs.

Think ahead about messages that might or might not work… • What are some messages that have worked for you in the past? • What are some messages that didn’t work so well, or were misinterpreted by the media, the public, or other key audiences?

If the data show possible problems… • Get out in front of the data, and note the problem areas: – “We see large differences in the data in different regions…” • Then, offer interpretations and note that you are trying to understand such differences: – “We are trying to understand these variations. They may have to do with differences in the children being served or in ways the data are being collected…”

Preparing a response… • Find the main message you want to communicate • Translate the main message into a simple statement about the data • Use quotes to explain the meaning of the data; give an interpretation – Include a quote by a state official – Include a quote by a program or provider – Include quote(s) from parent(s)

End any messaging by returning to the big-picture message… “The goal of these programs is for children to be active and successful participants now and in the future.”

Activity 4: Prepare to answer questions from different audiences

Small Group Instructions: 1. Identify 3 key questions that different audiences may ask about child outcomes data 2. Choose one key question to focus on for creating a response 3. Discuss how you might use data to respond to the question. What are the messages you want to send? 4. Share back with the whole group

Application: How could you use the messaging materials in your training and TA? What similar experiences or resources do you have that you already use in your training and TA?

Public Reporting

Public Reporting • Requirements • Timelines • Expectations

Wrap Up Day 1

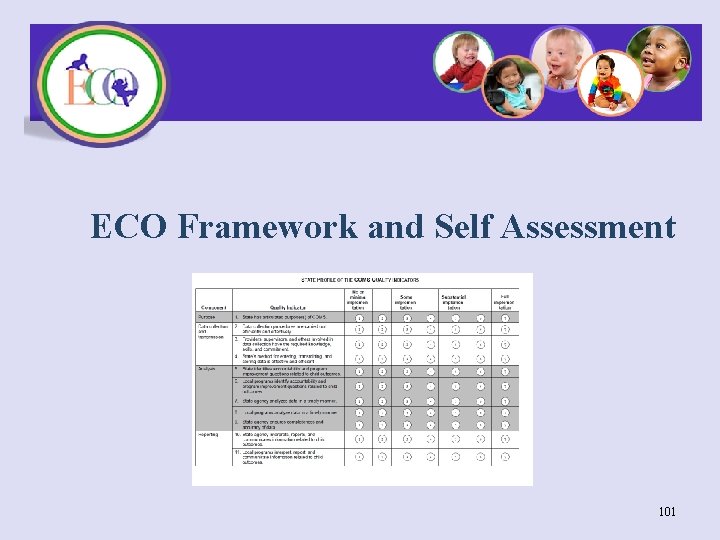

ECO Framework and Self Assessment

Purpose of the ECO Framework • Designed to identify key components that make up a quality outcomes measurement system • Designed to be used by state agencies to assess progress toward full implementation of a child outcomes measurement system

Components measured • Purpose • Data collection and transmission • Analysis • Reporting • Using data • Evaluation • Cross-system coordination

Self-Assessment Scale: 1 = No or minimal implementation; 3 = Some implementation; 5 = Substantial implementation; 7 = Full implementation (effective, efficient)

Activity 5: ECO Framework and Self Assessment

Small Group Instructions: 1. Break into 6 groups, each assigned a focus: – Data collection and transmission – Analysis – Reporting AND Using data 2. Discuss and complete the self-assessment area assigned to your group. 3. Share back with the whole group: • How is Texas doing in this area? • How are regions/districts doing in this area?

Application: How could you use the framework and/or self-assessment in your training and TA? What similar experiences or resources do you have that you already use in your training and TA?

Needs Assessment

Keeping our eye on the prize: High-quality services for children and families that will lead to good outcomes.

Find more resources at: http://www.the-eco-center.org