Analysis of Virtualization Technologies for High Performance Computing

- Slides: 25

Analysis of Virtualization Technologies for High Performance Computing Environments

Andrew J. Younge, Robert Henschel, James T. Brown, Gregor von Laszewski, Judy Qiu, Geoffrey C. Fox

Indiana University Bloomington

https://portal.futuregrid.org

Outline

- Introduction
- Related work
- Feature comparison
- FutureGrid experimental setup
- Performance comparison
  - HPCC
  - SPEC
- Discussion

Introduction

- What is virtualization?
  - A method of partitioning a physical computer into multiple “virtual” computers, each acting independently as if it were running directly on hardware.
- What is a hypervisor?
  - A technique used to run multiple operating systems simultaneously on a single resource.
  - Also called a Virtual Machine Monitor (VMM).
- What is a virtual machine?
  - A software implementation of a machine that executes as if it were running directly on a physical resource.
- Why does it matter?
  - Cloud computing!

Motivation

- Most “Cloud” deployments rely on virtualization.
  - Amazon EC2, GoGrid, Azure, Rackspace Cloud …
  - Nimbus, Eucalyptus, OpenNebula, OpenStack …
- A number of virtualization tools, or hypervisors, are available today.
  - Xen, KVM, VMware, VirtualBox, Hyper-V …
- These hypervisors need to be compared for use within the scientific computing community.

Current Hypervisors

Hypervisors

- Evaluate the Xen, KVM, and VirtualBox hypervisors against native hardware.
  - Common, well documented
  - Open source, open architecture
  - Relatively mature and stable
- VMware hypervisors cannot be benchmarked due to proprietary licensing issues.

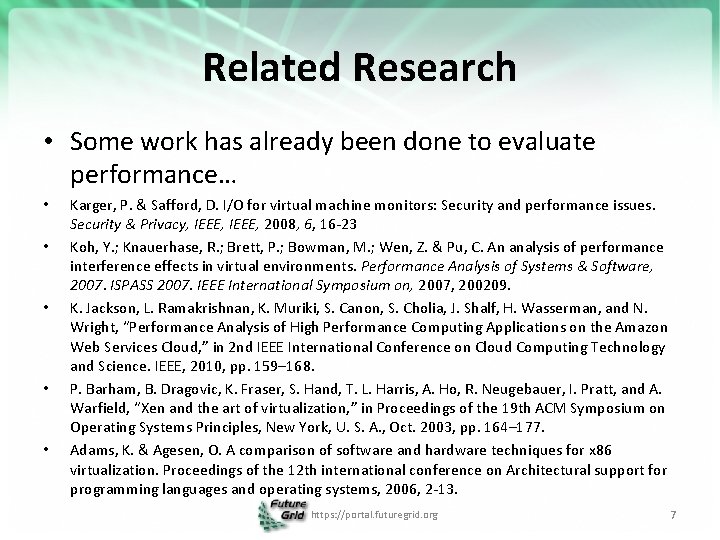

Related Research

Some work has already been done to evaluate performance:

- Karger, P. & Safford, D. I/O for virtual machine monitors: Security and performance issues. Security & Privacy, IEEE, 2008, 6, 16-23.
- Koh, Y.; Knauerhase, R.; Brett, P.; Bowman, M.; Wen, Z. & Pu, C. An analysis of performance interference effects in virtual environments. Performance Analysis of Systems & Software, 2007. ISPASS 2007. IEEE International Symposium on, 2007, 200-209.
- K. Jackson, L. Ramakrishnan, K. Muriki, S. Canon, S. Cholia, J. Shalf, H. Wasserman, and N. Wright, “Performance Analysis of High Performance Computing Applications on the Amazon Web Services Cloud,” in 2nd IEEE International Conference on Cloud Computing Technology and Science. IEEE, 2010, pp. 159–168.
- P. Barham, B. Dragovic, K. Fraser, S. Hand, T. L. Harris, A. Ho, R. Neugebauer, I. Pratt, and A. Warfield, “Xen and the art of virtualization,” in Proceedings of the 19th ACM Symposium on Operating Systems Principles, New York, U.S.A., Oct. 2003, pp. 164–177.
- Adams, K. & Agesen, O. A comparison of software and hardware techniques for x86 virtualization. Proceedings of the 12th international conference on Architectural support for programming languages and operating systems, 2006, 2-13.

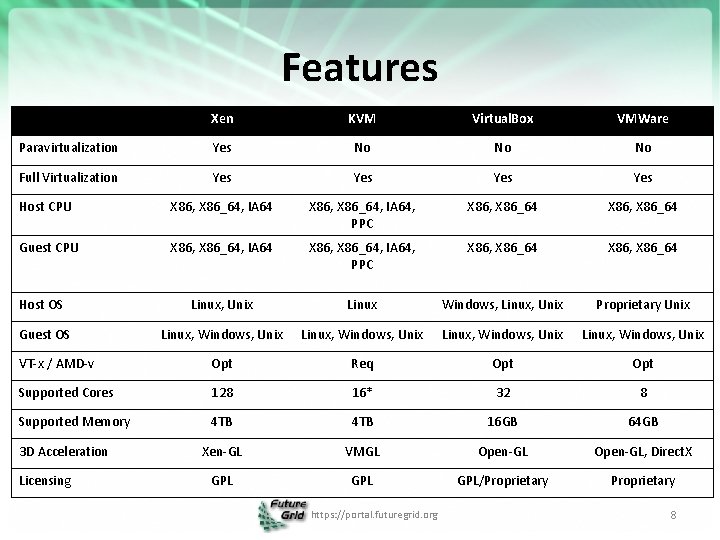

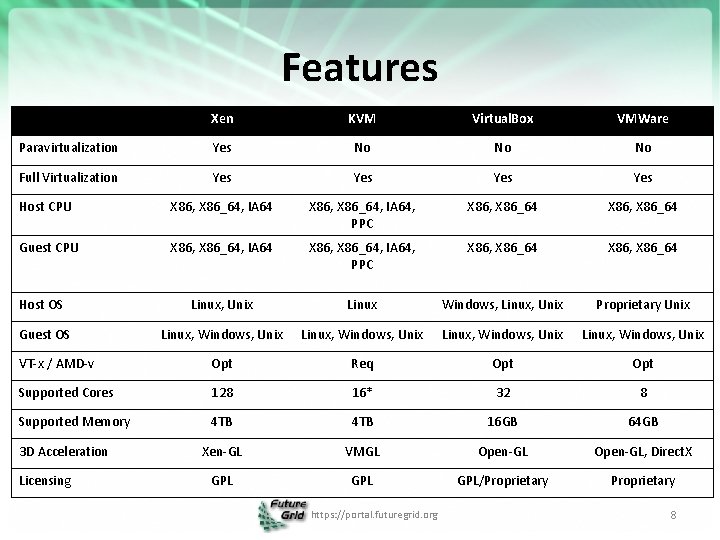

Features

Values are listed in the column order Xen; KVM; VirtualBox; VMware. Rows with fewer than four values are reproduced as given.

- Paravirtualization: Yes; No; No; No
- Full virtualization: Yes; Yes
- Host CPU: x86, x86_64, IA64, PPC; x86, x86_64
- Guest CPU: x86, x86_64, IA64, PPC; x86, x86_64
- Host OS: Linux, Unix; Linux; Windows, Linux, Unix; Proprietary Unix
- Guest OS: Linux, Windows, Unix
- VT-x / AMD-v: Opt; Req; Opt
- Supported cores: 128; 16*; 32; 8
- Supported memory: 4 TB; 16 GB; 64 GB
- 3D acceleration: Xen-GL; VMGL; Open-GL, DirectX
- Licensing: GPL; GPL/Proprietary

Usability

- KVM and VirtualBox are trivial to install and deploy.
  - Xen requires a special kernel, leading to more complications.
  - VMware ESX runs as a standalone hypervisor.
- All are supported under the libvirt API.
  - Used by many IaaS frameworks.
- Xen and VirtualBox have nice CLIs; VMware has an advanced web-based GUI.
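As an illustration of the common libvirt layer, the sketch below maps each evaluated hypervisor to a libvirt connection URI and lists the guests it manages. The helper names `libvirt_uri` and `list_guests` are hypothetical, the URIs follow the forms given in the libvirt driver documentation and may differ between libvirt versions, and `list_guests` assumes the `libvirt-python` bindings are installed on the host.

```python
# Hypothetical mapping from hypervisor name to a libvirt connection URI.
# The exact URI form can vary with the libvirt version in use.
LIBVIRT_URIS = {
    "kvm": "qemu:///system",
    "xen": "xen:///system",
    "virtualbox": "vbox:///session",
}


def libvirt_uri(hypervisor: str) -> str:
    """Return the libvirt connection URI for a hypervisor name."""
    key = hypervisor.strip().lower()
    if key not in LIBVIRT_URIS:
        raise ValueError(f"no libvirt URI known for {hypervisor!r}")
    return LIBVIRT_URIS[key]


def list_guests(hypervisor: str) -> list:
    """List guest names through libvirt (needs libvirt-python installed)."""
    import libvirt  # imported lazily so the URI helper works without it

    conn = libvirt.openReadOnly(libvirt_uri(hypervisor))
    try:
        return [dom.name() for dom in conn.listAllDomains()]
    finally:
        conn.close()
```

Because only the URI changes, the same `list_guests` call can be pointed at a KVM, Xen, or VirtualBox host, which is the property that IaaS frameworks rely on.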

Performance Analysis

- To assess various performance metrics, benchmarks are needed. They should:
  - Provide a fair, apples-to-apples comparison between hypervisors.
  - Allow comparison with other benchmark submissions on different machines.
  - Give reproducible and verifiable results.
  - Follow open standards, with no special optimizations or tricks available (hopefully).

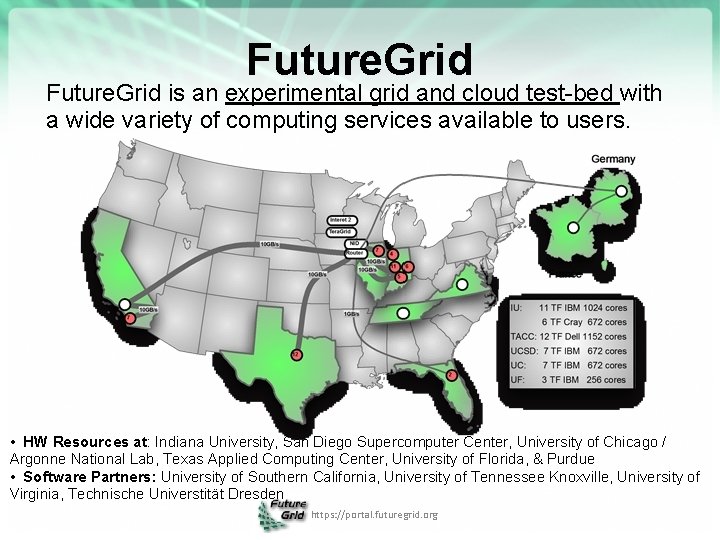

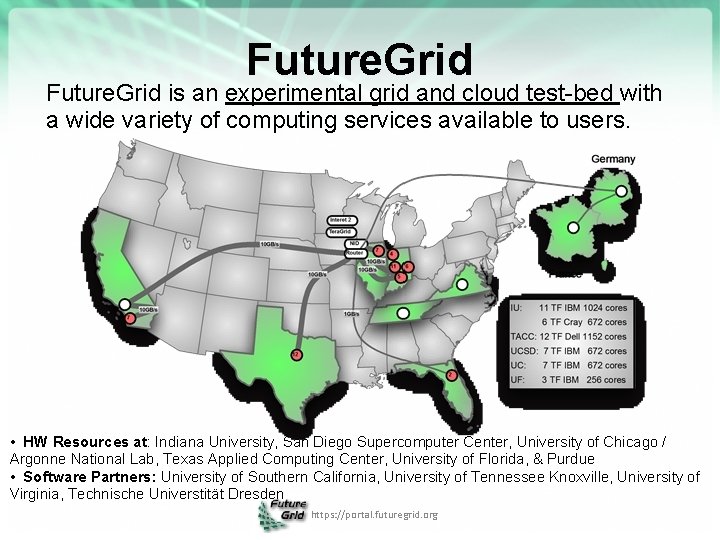

FutureGrid is an experimental grid and cloud test-bed with a wide variety of computing services available to users.

- Hardware resources at: Indiana University, San Diego Supercomputer Center, University of Chicago / Argonne National Lab, Texas Advanced Computing Center, University of Florida, and Purdue
- Software partners: University of Southern California, University of Tennessee Knoxville, University of Virginia, Technische Universität Dresden

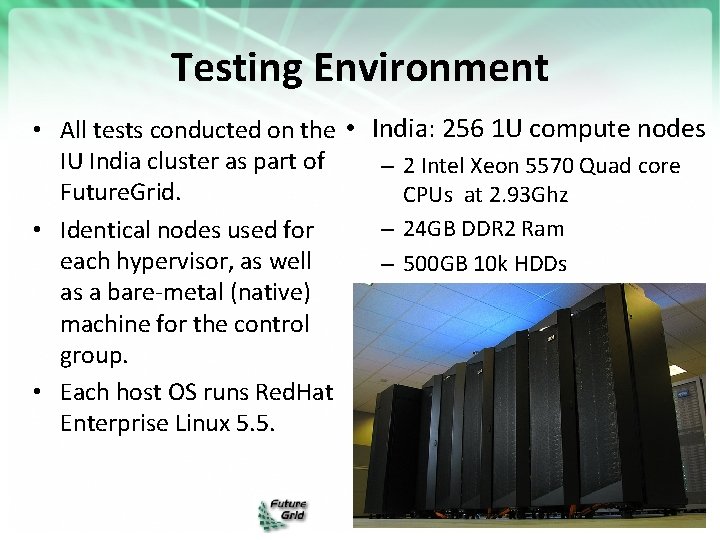

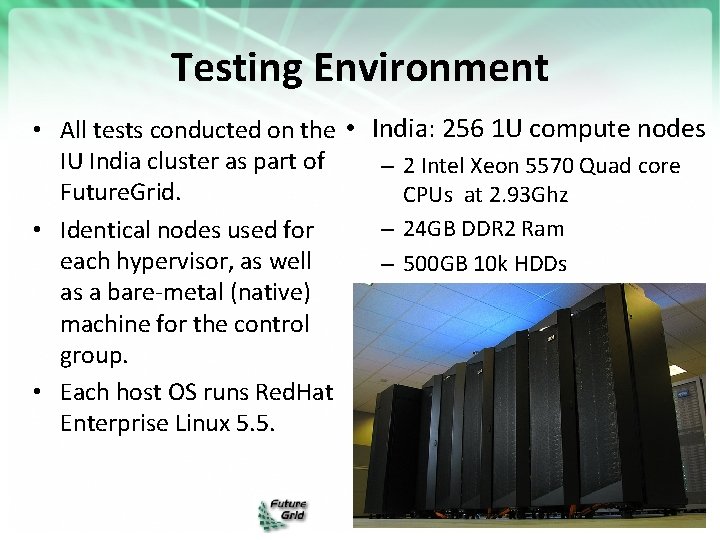

Testing Environment

- All tests conducted on the IU India cluster as part of FutureGrid.
- Identical nodes used for each hypervisor, as well as a bare-metal (native) machine for the control group.
- Each host OS runs Red Hat Enterprise Linux 5.5.
- India: 256 1U compute nodes
  - 2 Intel Xeon 5570 quad-core CPUs at 2.93 GHz
  - 24 GB DDR2 RAM
  - 500 GB 10k HDDs
  - InfiniBand DDR 20 Gb/s
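Linpack results are usually read against a node's theoretical peak. The sketch below works through that arithmetic for the India node specification (2 sockets, 4 cores each, 2.93 GHz). The figure of 4 double-precision floating-point operations per core per cycle is an assumption about the Xeon 5570 that is not stated on the slide, and the function name is illustrative.

```python
def theoretical_peak_gflops(sockets, cores_per_socket, clock_ghz, flops_per_cycle):
    """Peak double-precision GFLOPS for one node."""
    return sockets * cores_per_socket * clock_ghz * flops_per_cycle


# Node values from the slide; flops_per_cycle=4 is an assumption.
peak = theoretical_peak_gflops(
    sockets=2, cores_per_socket=4, clock_ghz=2.93, flops_per_cycle=4
)
print(f"Theoretical peak per node: {peak:.2f} GFLOPS")
```

Under these assumptions a single node peaks at about 93.76 GFLOPS, which gives a ceiling for interpreting both native and virtualized Linpack numbers.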

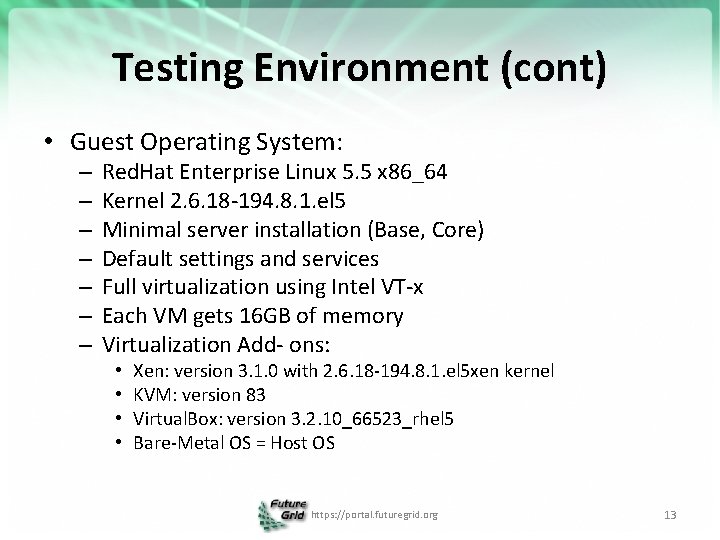

Testing Environment (cont.)

- Guest operating system:
  - Red Hat Enterprise Linux 5.5 x86_64
  - Kernel 2.6.18-194.8.1.el5
  - Minimal server installation (Base, Core)
  - Default settings and services
  - Full virtualization using Intel VT-x
  - Each VM gets 16 GB of memory
- Virtualization add-ons:
  - Xen: version 3.1.0 with 2.6.18-194.8.1.el5 xen kernel
  - KVM: version 83
  - VirtualBox: version 3.2.10_66523_rhel5
- Bare-metal OS = host OS
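Full virtualization with Intel VT-x depends on the host CPU exposing the hardware extension. On Linux this shows up as the `vmx` flag (Intel VT-x) or the `svm` flag (AMD-V) in `/proc/cpuinfo`. The sketch below parses that text; the function name and the sample input are illustrative and not taken from the test cluster.

```python
def hw_virt_extensions(cpuinfo_text: str) -> set:
    """Return which of 'vmx' (Intel VT-x) or 'svm' (AMD-V) appear in the CPU flags."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # Flag lines look like "flags\t\t: fpu vme ... vmx ..."
        if line.startswith("flags") and ":" in line:
            flags.update(line.split(":", 1)[1].split())
    return flags & {"vmx", "svm"}


# Illustrative input, not output captured from an India node.
sample = "processor\t: 0\nflags\t\t: fpu vme de pse vmx sse sse2\n"
print(hw_virt_extensions(sample))
```

On a real host the input would come from reading `/proc/cpuinfo`; an empty result suggests the extension is absent or disabled in firmware, in which case a hypervisor that requires it cannot start guests.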

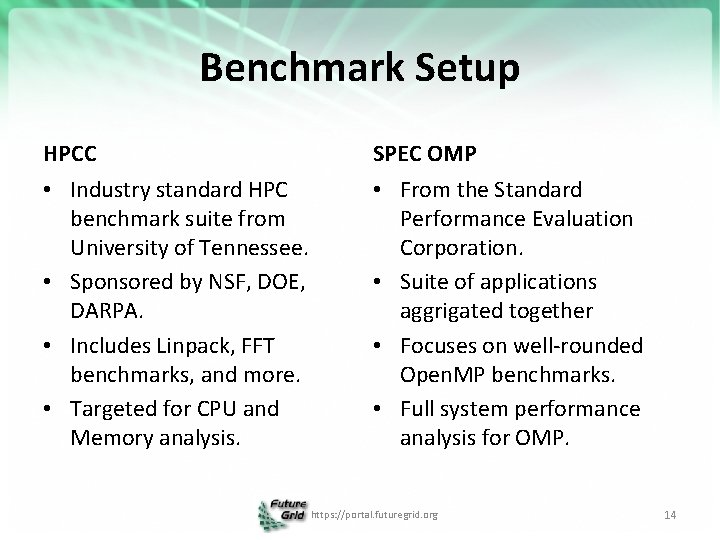

Benchmark Setup

HPCC

- Industry-standard HPC benchmark suite from the University of Tennessee.
- Sponsored by NSF, DOE, DARPA.
- Includes Linpack, FFT benchmarks, and more.
- Targeted for CPU and memory analysis.

SPEC OMP

- From the Standard Performance Evaluation Corporation.
- Suite of applications aggregated together.
- Focuses on well-rounded OpenMP benchmarks.
- Full system performance analysis for OMP.


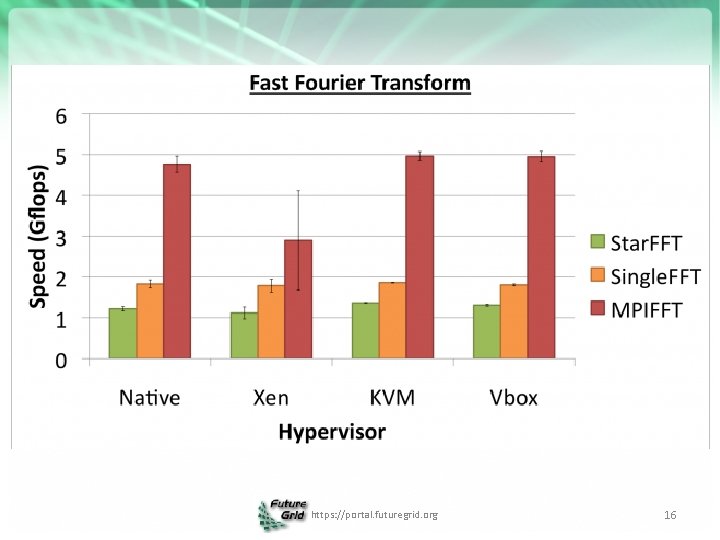


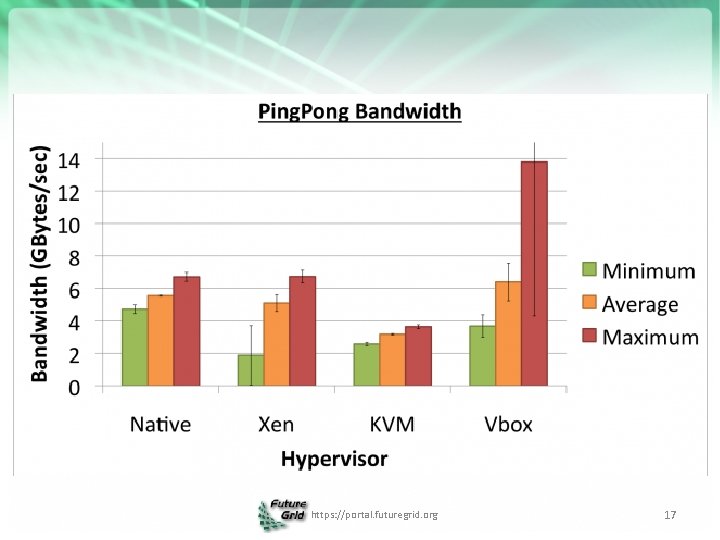

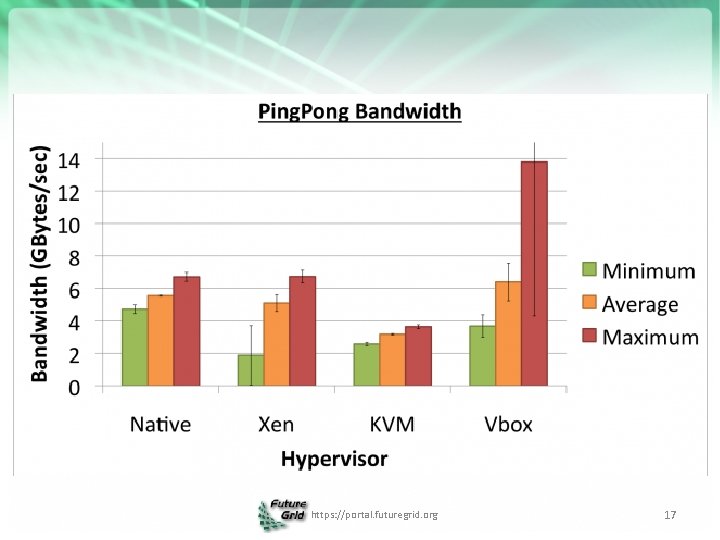


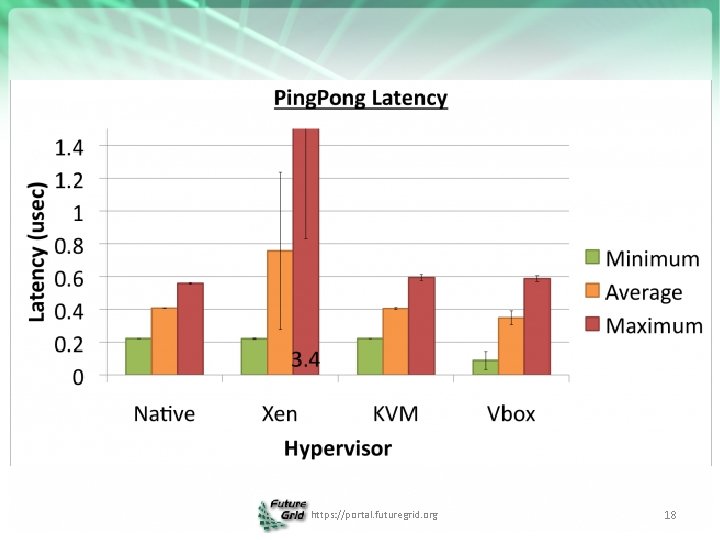

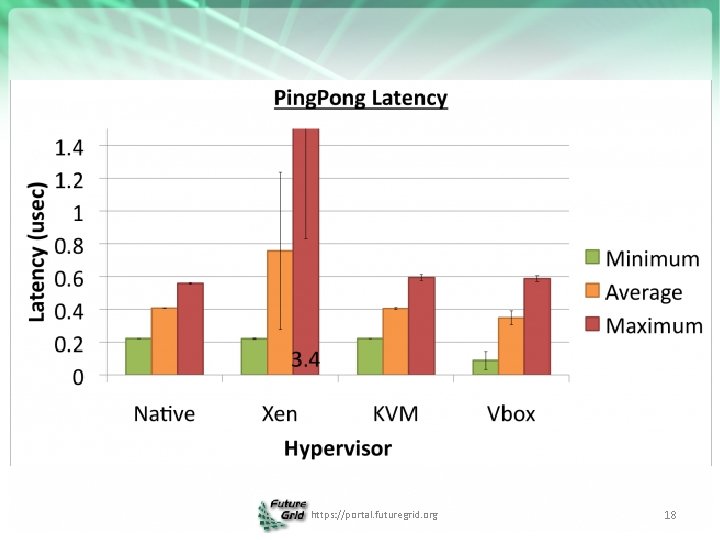


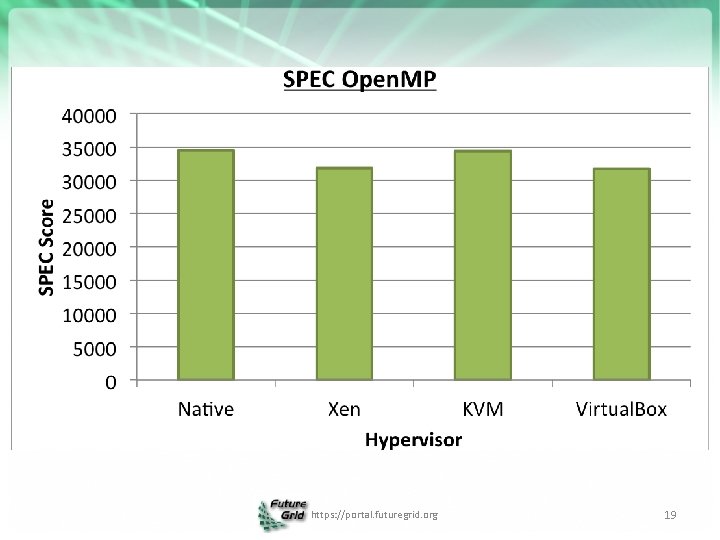

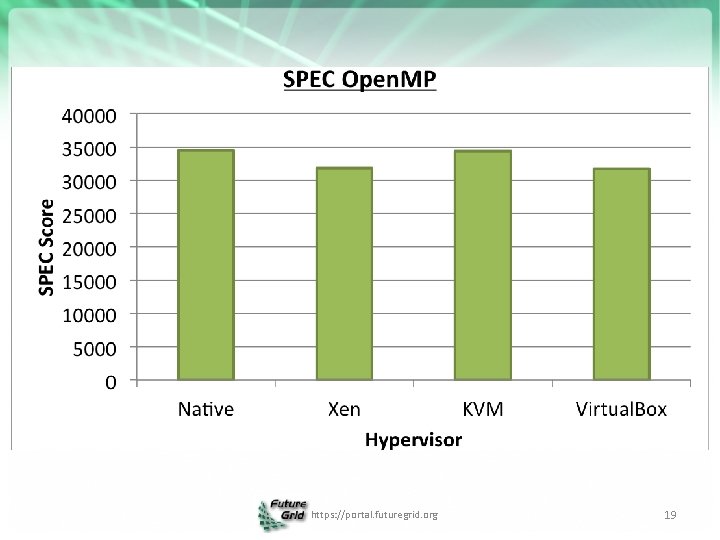


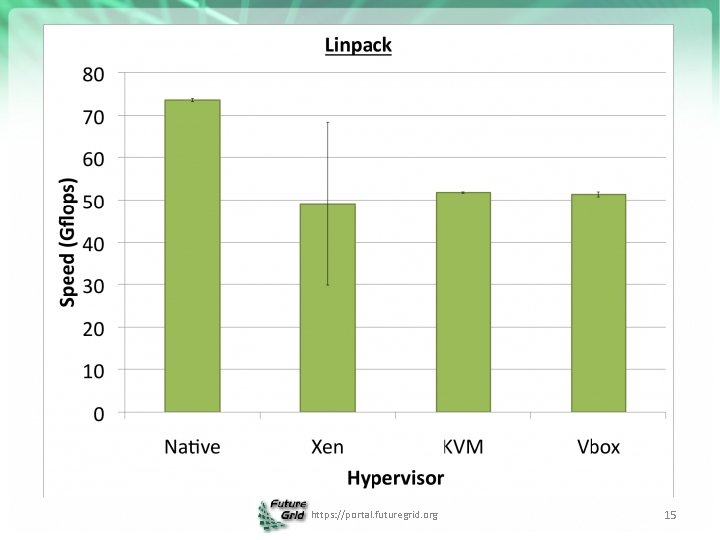

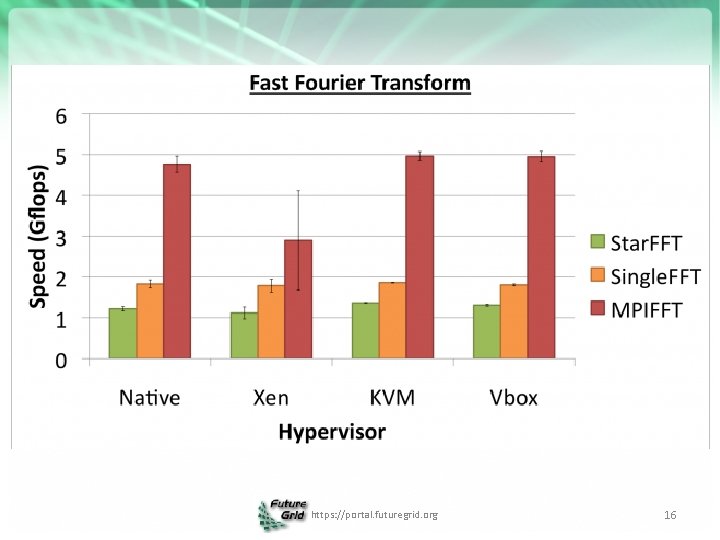

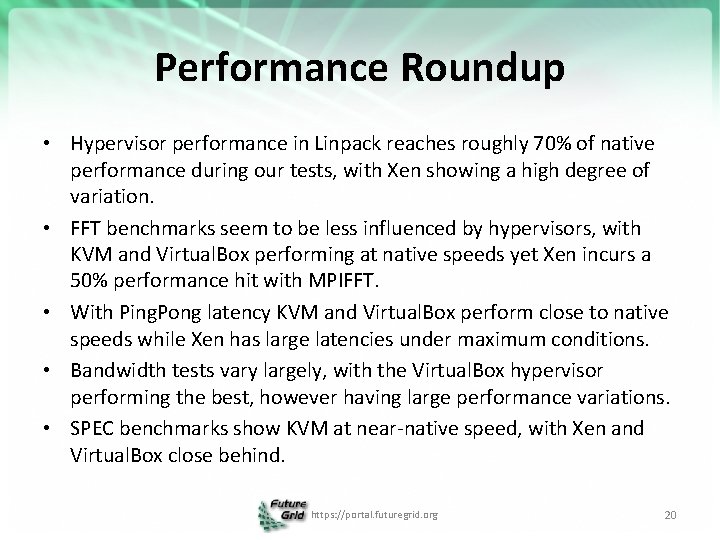

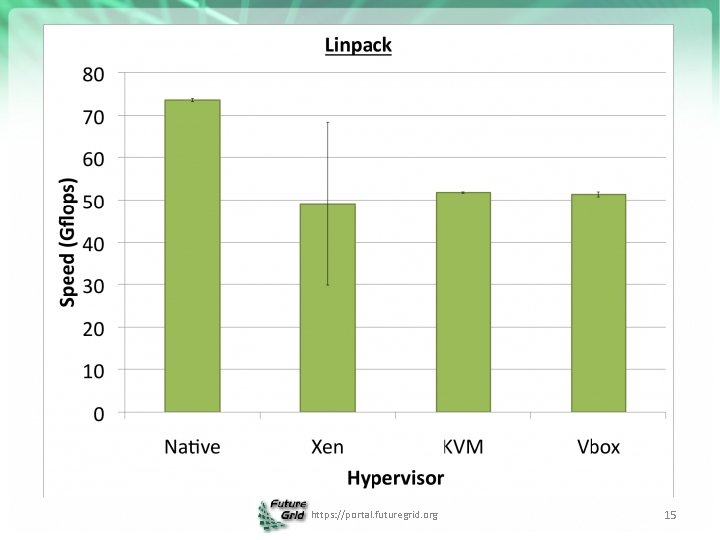

Performance Roundup

- Hypervisor performance in Linpack reaches roughly 70% of native performance in our tests, with Xen showing a high degree of variation.
- FFT benchmarks seem to be less influenced by hypervisors: KVM and VirtualBox perform at native speeds, while Xen incurs a 50% performance hit with MPIFFT.
- For PingPong latency, KVM and VirtualBox perform close to native speeds, while Xen has large latencies under maximum conditions.
- Bandwidth results vary widely; VirtualBox performs best but shows large performance variations.
- SPEC benchmarks show KVM at near-native speed, with Xen and VirtualBox close behind.
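Statements such as "percent of native" and "high degree of variation" can be made concrete with two simple statistics over repeated runs: the ratio of mean guest throughput to mean native throughput, and the coefficient of variation across runs. The sketch below shows one way to compute them. The function names are illustrative and the numbers are placeholders, not measurements from this study.

```python
from statistics import mean, stdev


def relative_performance(native_runs, guest_runs):
    """Mean guest throughput as a fraction of mean native throughput."""
    return mean(guest_runs) / mean(native_runs)


def coefficient_of_variation(runs):
    """Sample standard deviation divided by the mean (needs at least 2 runs)."""
    return stdev(runs) / mean(runs)


# Placeholder throughput values (higher is better); NOT results from the slides.
native = [10.0, 10.0, 10.0]
guest = [8.0, 7.0, 9.0]

print(f"relative performance: {relative_performance(native, guest):.0%}")
print(f"guest run-to-run variation: {coefficient_of_variation(guest):.1%}")
```

For latency-style metrics, where lower is better, the ratio would be inverted before comparing against native.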

Conclusion

- Big question: Is cloud computing viable for scientific high performance computing?
  - Our answer is “Yes” (for the most part).
- Features: All hypervisors are similar.
- Performance: KVM is fastest across most benchmarks, with VirtualBox close behind.
- Overall, we have found KVM to be the best hypervisor choice for HPC.
  - Currently moving to KVM for all of FutureGrid.

THANK YOU!

Virtual Machines

Feature Roundup

- All hypervisors evaluated have an acceptable level of features for x86 virtualization.
- Xen provides the best expandability, supporting up to 128 CPUs and 4 TB of RAM.
  - The CPU limit for KVM can be removed.
  - VirtualBox needs to add support for >16 GB RAM.
- All have API plugins to allow for simplified IaaS usage.

Need to learn more from a performance comparison…

Benchmarks

- SPEC benchmarks
  - specCPU 2006: CPU-bound benchmark
  - specMPI 2007: MPI (CPU, memory, network)
  - specOMP 2001: OpenMP (CPU, memory, IPC)
  - specVIRT 2010: CPU, memory, disk I/O, network
- HPCC benchmarks
  - HPL (Linpack), DGEMM, STREAM, PTRANS, RandomAccess, FFT, etc.