Analysis of Trained CNN (Receptive Field & Weights of Network)

- Slides: 18

Analysis of Trained CNN (Receptive Field & Weights of Network)
Bukweon Kim

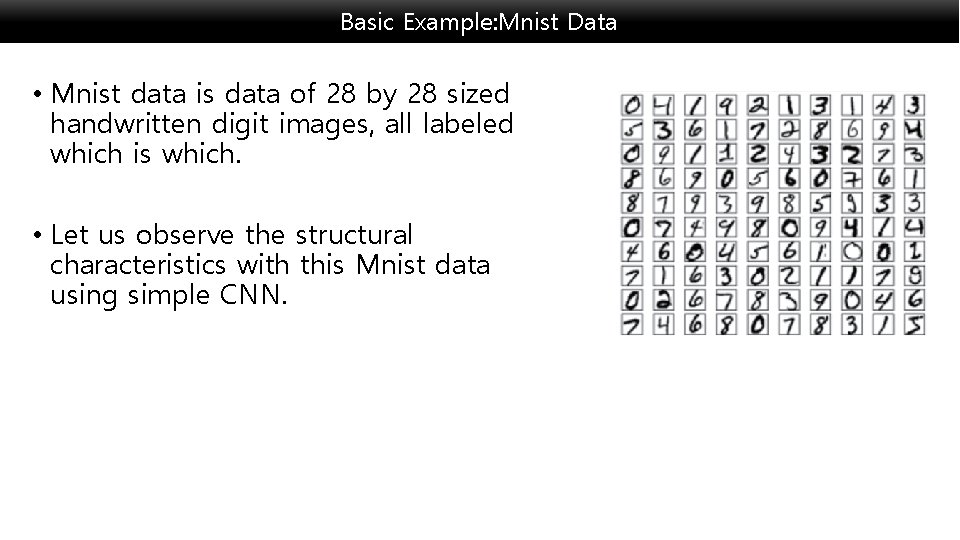

Basic Example: MNIST Data
• MNIST is a dataset of 28 × 28 handwritten digit images, each labeled with the digit it shows.
• Let us observe the structural characteristics of a simple CNN trained on this MNIST data.

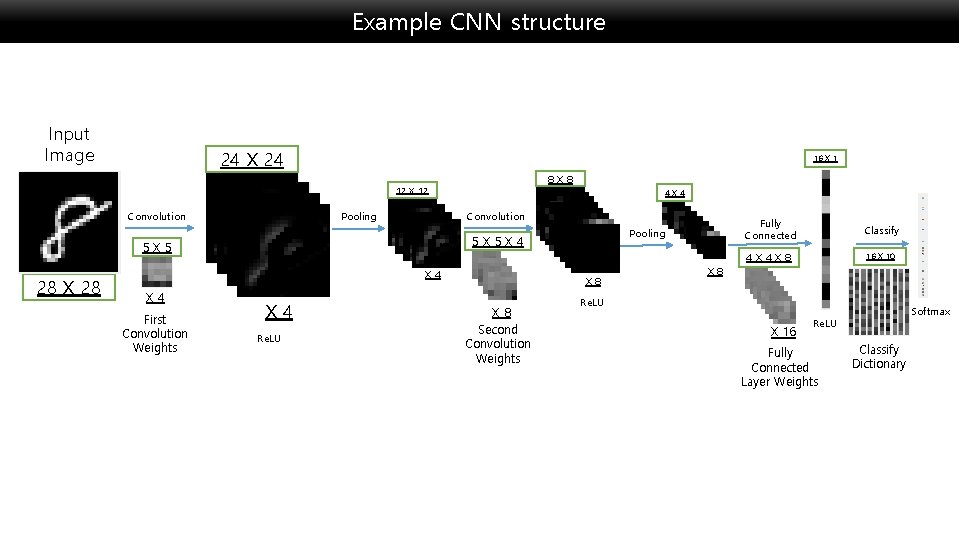

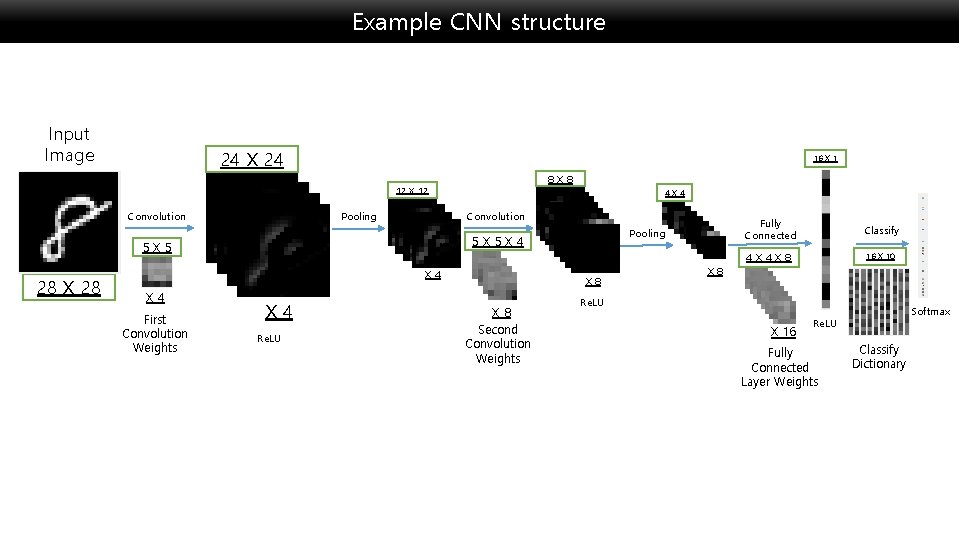

Example CNN structure
• Input image: 28 × 28
• First convolution with ReLU (weights 5 × 5, × 4): output 24 × 24, × 4
• Pooling: output 12 × 12, × 4
• Second convolution with ReLU (weights 5 × 5 × 4, × 8): output 8 × 8, × 8
• Pooling: output 4 × 4, × 8
• Fully connected layer with ReLU (weights 4 × 4 × 8, × 16): output 16 × 1
• Classify (dictionary 16 × 10), followed by softmax
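The layer sizes on this slide can be traced with a minimal NumPy sketch. It assumes valid (unpadded) convolutions, 2 × 2 max pooling with stride 2 (inferred from 24 × 24 becoming 12 × 12), no bias terms, and random weights; the function names are hypothetical and this is not the author's implementation. ReLU and max pooling commute, so their order does not change the result.

```python
import numpy as np

def conv_valid(x, w):
    """Valid cross-correlation. x: (H, W, Cin), w: (k, k, Cin, Cout)."""
    k = w.shape[0]
    H, W, _ = x.shape
    out = np.zeros((H - k + 1, W - k + 1, w.shape[3]))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = x[i:i + k, j:j + k, :]
            # Inner product of the patch with every filter at once.
            out[i, j, :] = np.tensordot(patch, w, axes=([0, 1, 2], [0, 1, 2]))
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool_2x2(x):
    """2 x 2 max pooling with stride 2 (assumed pooling size)."""
    H, W, C = x.shape
    return x.reshape(H // 2, 2, W // 2, 2, C).max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def forward(image, w1, w2, w_fc, w_cls):
    """Return class probabilities and the shape after each stage."""
    shapes = [image.shape]
    h = max_pool_2x2(relu(conv_valid(image, w1)))   # 28x28 -> 24x24 -> 12x12
    shapes.append(h.shape)
    h = max_pool_2x2(relu(conv_valid(h, w2)))       # 12x12 -> 8x8 -> 4x4
    shapes.append(h.shape)
    h = relu(h.reshape(-1) @ w_fc)                  # 4*4*8 = 128 -> 16 signals
    shapes.append(h.shape)
    probs = softmax(h @ w_cls)                      # 16 -> 10 digits
    shapes.append(probs.shape)
    return probs, shapes

rng = np.random.default_rng(0)
image = rng.random((28, 28, 1))                     # stand-in for an MNIST digit
w1 = rng.standard_normal((5, 5, 1, 4)) * 0.1        # first convolution weights
w2 = rng.standard_normal((5, 5, 4, 8)) * 0.1        # second convolution weights
w_fc = rng.standard_normal((4 * 4 * 8, 16)) * 0.1   # fully connected weights
w_cls = rng.standard_normal((16, 10)) * 0.1         # classify dictionary
probs, shapes = forward(image, w1, w2, w_fc, w_cls)
print(shapes)
```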

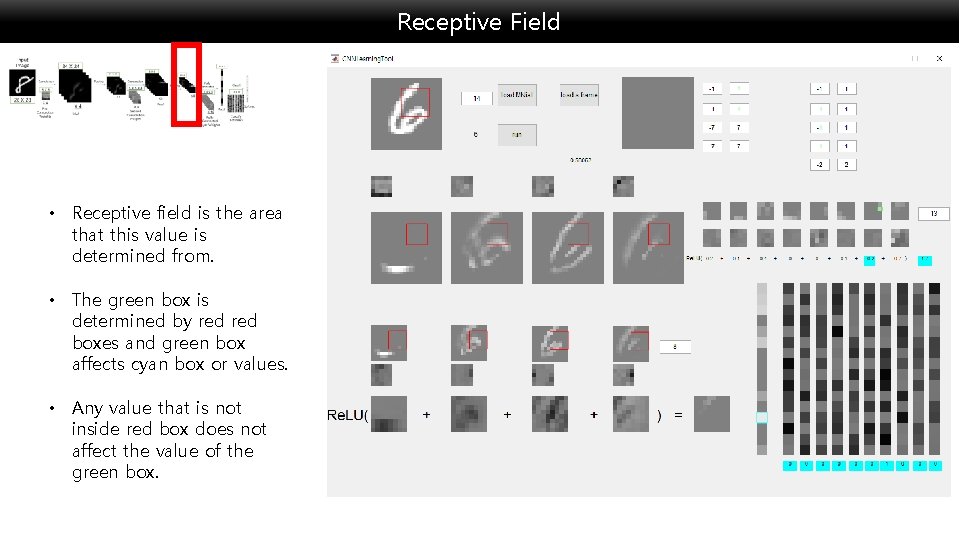

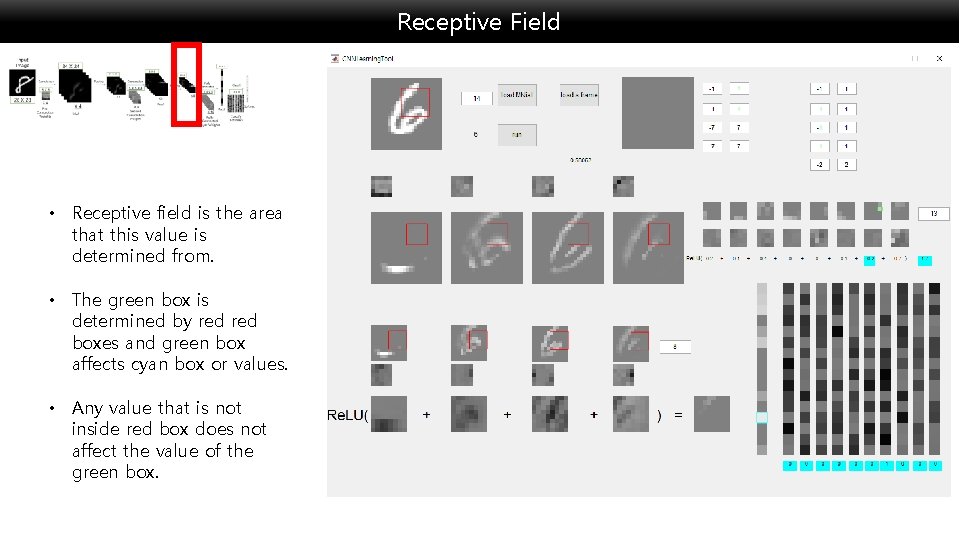

Receptive Field
• The receptive field of a value is the input area from which that value is determined.
• The green box is determined by the red boxes, and the green box in turn affects the cyan box (or values).
• Any value outside the red box does not affect the value of the green box.
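As a rough illustration, the receptive field size of each layer in the example network can be computed with the usual recurrence: the field grows by (kernel size - 1) times the current step between neighboring values. The sketch below assumes 5 × 5 convolutions with stride 1, 2 × 2 pooling with stride 2, and treats the fully connected layer as a 4 × 4 kernel; `receptive_fields` is a hypothetical helper name.

```python
def receptive_fields(layers):
    """layers: list of (name, kernel_size, stride). Returns (name, size, step)."""
    size, step = 1, 1  # one input pixel sees itself; neighbors are 1 pixel apart
    result = []
    for name, kernel, stride in layers:
        size = size + (kernel - 1) * step   # field widens by (k - 1) steps
        step = step * stride                # spacing between outputs, in input pixels
        result.append((name, size, step))
    return result

layers = [
    ("conv1", 5, 1),
    ("pool1", 2, 2),   # assumed 2 x 2 pooling
    ("conv2", 5, 1),
    ("pool2", 2, 2),   # assumed 2 x 2 pooling
    ("fc", 4, 1),      # fully connected over the 4 x 4 map
]
fields = receptive_fields(layers)
for name, size, step in fields:
    print(f"{name}: receptive field {size} x {size}, step {step}")
# conv1: 5, pool1: 6, conv2: 14, pool2: 16, fc: 28
```

Under these assumptions each value after the second pooling sees a 16 × 16 region of the image, and neighboring values are 4 input pixels apart; the fully connected layer sees the whole 28 × 28 image.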

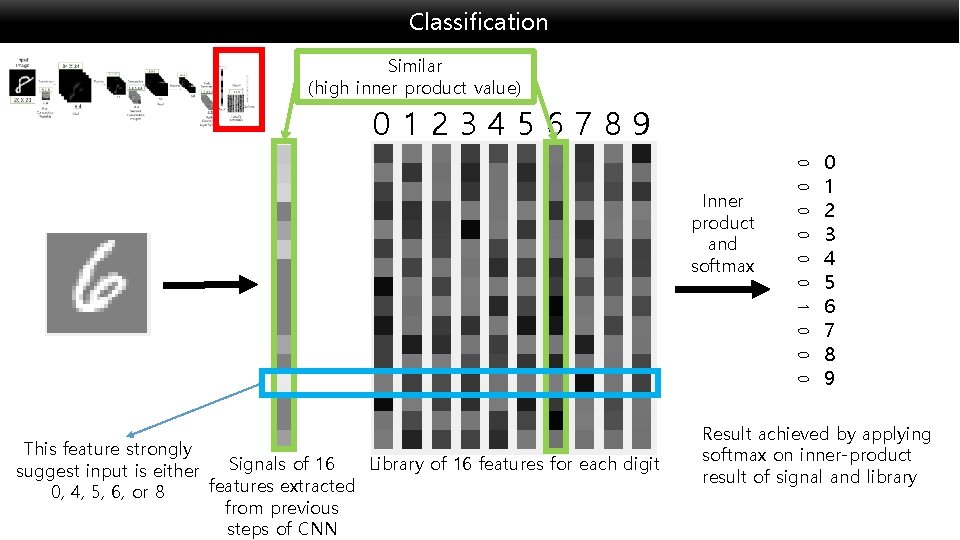

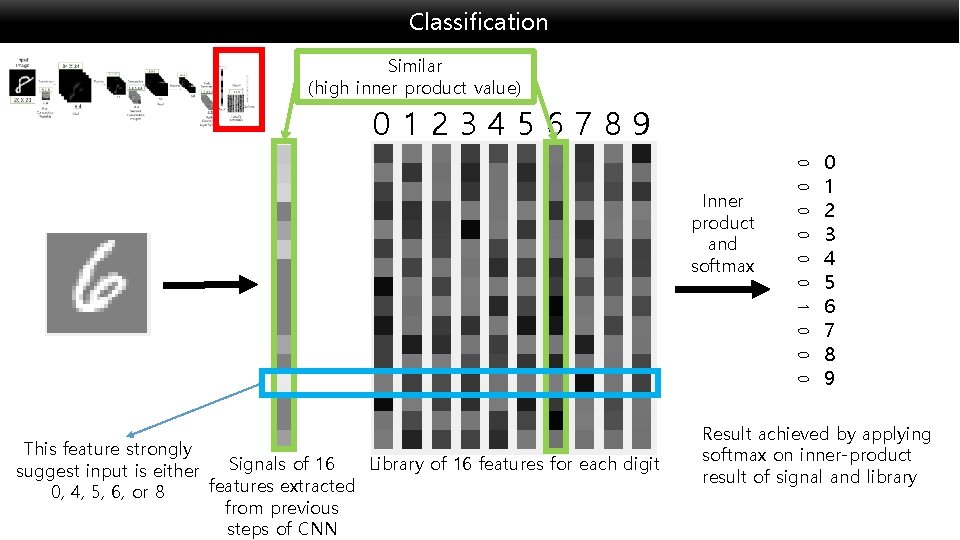

Classification
• Signals of 16 features are extracted from the previous steps of the CNN.
• A library holds 16 features for each digit (0 1 2 3 4 5 6 7 8 9).
• The signal is compared with the library by inner product; a similar pair gives a high inner-product value.
• Figure annotation: this feature strongly suggests the input is either 0, 4, 5, 6, or 8.
• The result is obtained by applying softmax to the inner products of the signal and the library.
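The classification step can be sketched as an inner product between the 16-value signal and a 16 × 10 library, followed by softmax. The library below is random with unit-norm columns, purely for illustration; `classify` is a hypothetical name and the values are not the trained weights.

```python
import numpy as np

def classify(signal, library):
    """signal: (16,), library: (16, 10). Returns predicted digit and probabilities."""
    scores = signal @ library              # one inner product per digit
    e = np.exp(scores - scores.max())      # numerically stable softmax
    probs = e / e.sum()
    return int(np.argmax(probs)), probs

rng = np.random.default_rng(0)
library = rng.standard_normal((16, 10))
library /= np.linalg.norm(library, axis=0)   # unit-norm column per digit (assumption)

# A signal identical to the library entry for 6 has the highest inner product with it.
signal = library[:, 6].copy()
digit, probs = classify(signal, library)
print(digit, probs.round(3))
```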

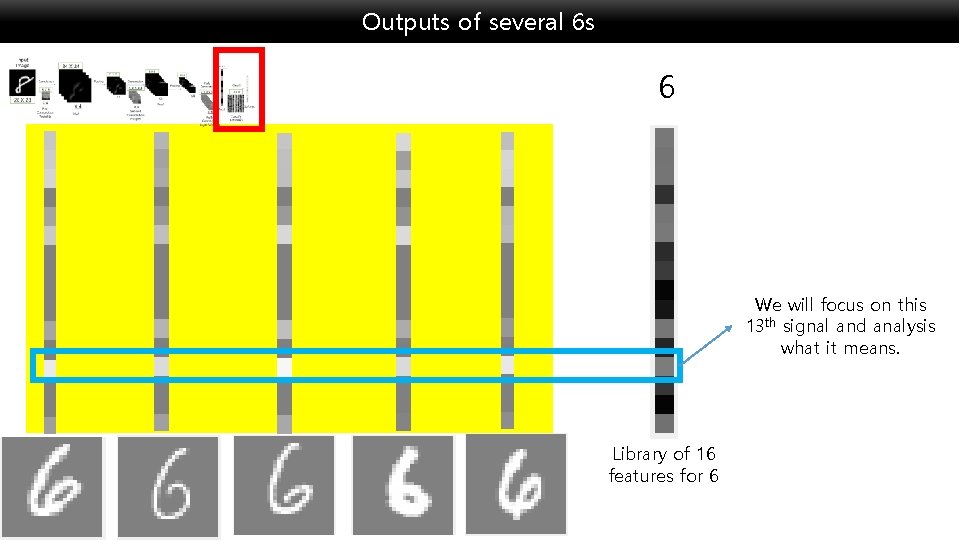

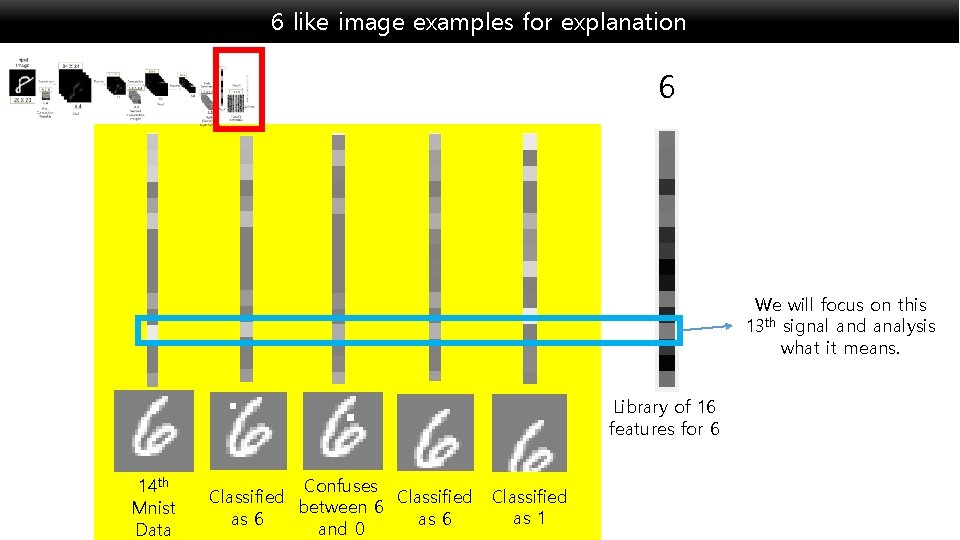

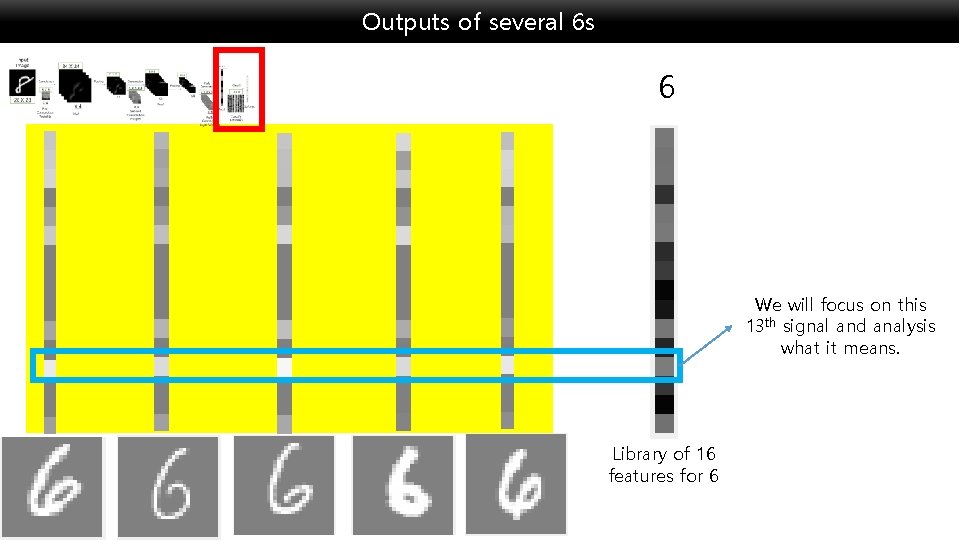

Outputs of several 6s
• We will focus on this 13th signal and analyze what it means.
• Figure label: library of 16 features for 6.

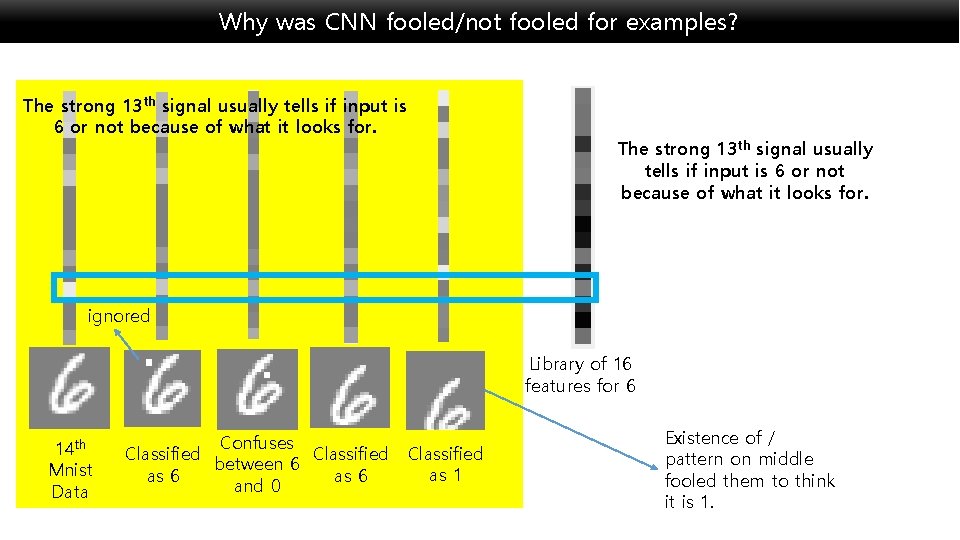

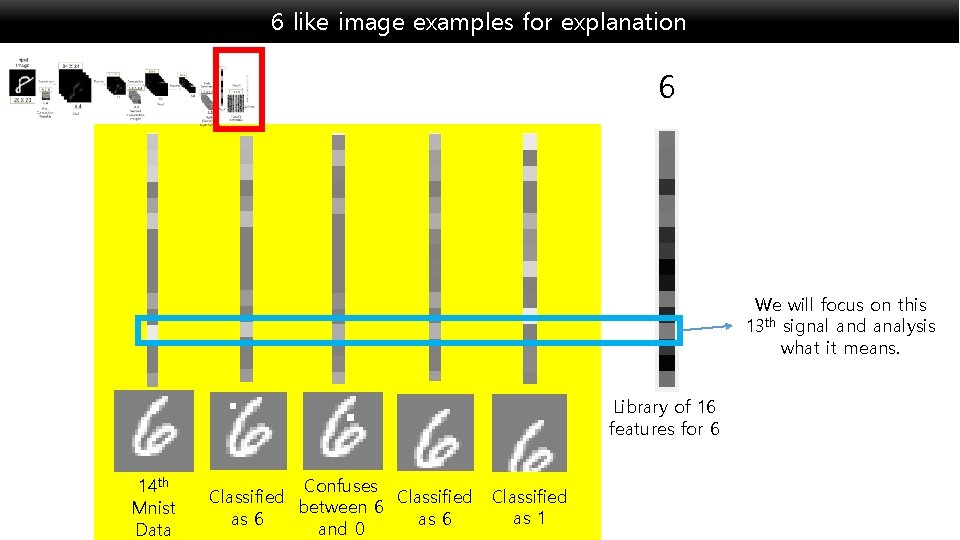

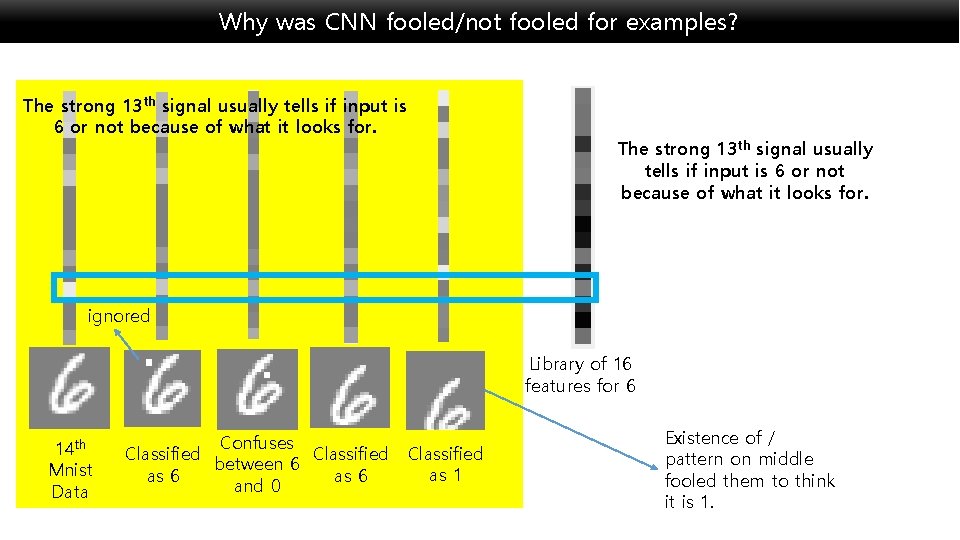

6-like image examples for explanation
• We will focus on this 13th signal and analyze what it means.
• Figure labels: library of 16 features for 6; 14th MNIST data; classified as 6; confuses between 6 and 0; classified as 1.

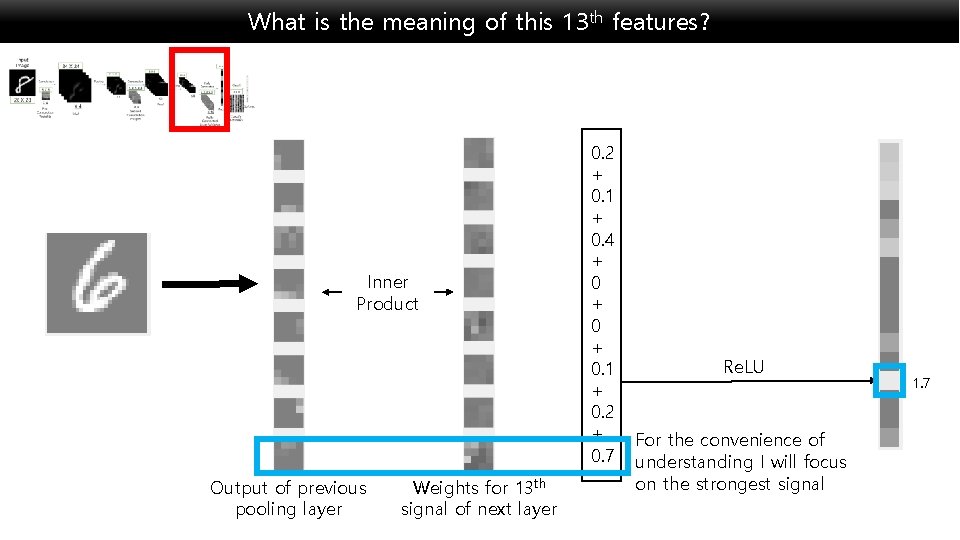

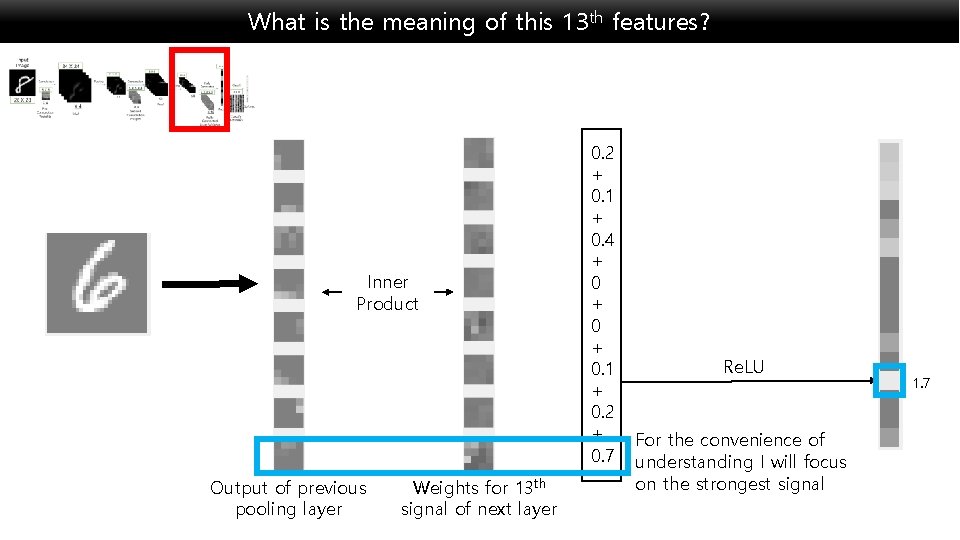

What is the meaning of this 13th feature?
• The 13th signal is the inner product of the output of the previous pooling layer with the weights for the 13th signal of the next layer, followed by ReLU.
• Per-map contributions: 0.2 + 0.1 + 0.4 + 0 + 0.1 + 0.2 + 0.7 = 1.7
• For ease of understanding, I will focus on the strongest signal.
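The sum on this slide can be read as a per-map decomposition of one fully connected unit. The sketch below uses the contribution values from the slide and, separately, shows how such contributions could be obtained from a pooled output and a weight block; the array names and the random data are assumptions for illustration only.

```python
import numpy as np

# Per-map contributions as listed on the slide.
contributions = np.array([0.2, 0.1, 0.4, 0.0, 0.1, 0.2, 0.7])
pre_activation = contributions.sum()
signal = max(pre_activation, 0.0)             # ReLU
strongest = int(np.argmax(contributions))     # index of the largest contribution
print(round(float(signal), 2), strongest)

# How contributions arise: one inner product per feature map, then a sum.
rng = np.random.default_rng(0)
pooled = rng.random((4, 4, 8))                # hypothetical pooling output
weights = rng.standard_normal((4, 4, 8))      # hypothetical weights for one signal
per_map = (pooled * weights).sum(axis=(0, 1)) # 8 inner products, one per map
full = float(np.sum(pooled * weights))        # the full inner product
```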

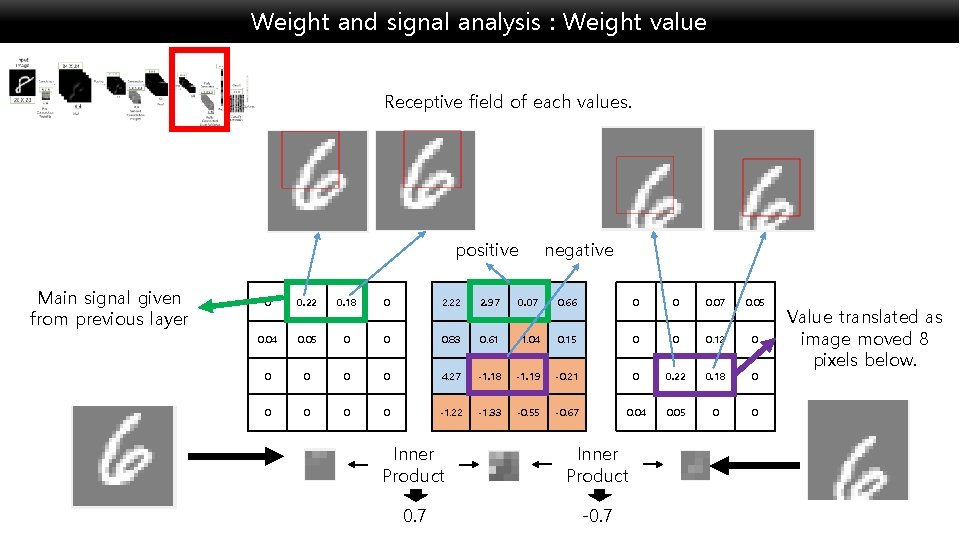

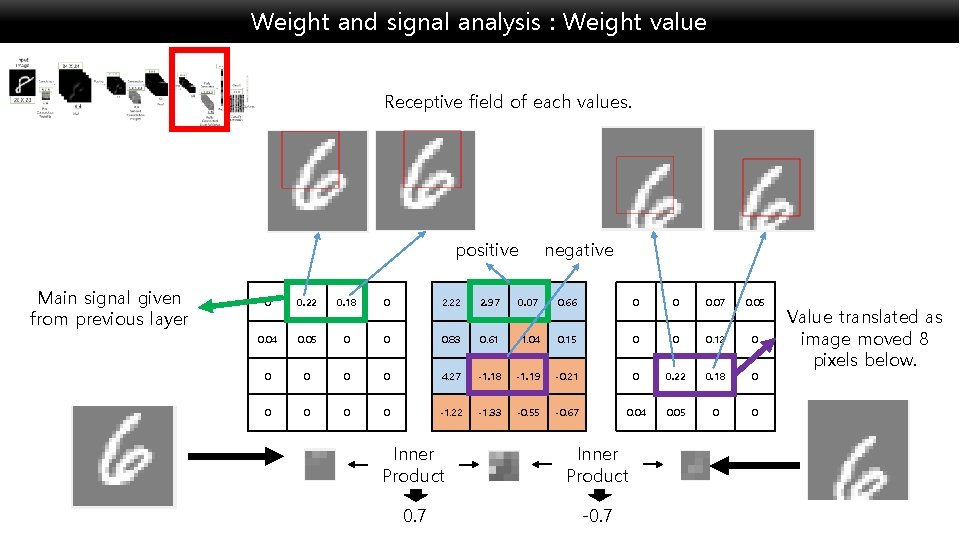

Weight and signal analysis: Weight value
• Each value has its own receptive field. Weight colors run from negative to positive.
• Main signal given from the previous layer:
  0, 0.22, 0.18, 0
  0.04, 0.05, 0, 0
  0, 0
  0, 0
• Weight values:
  2.22, 2.97, 0.07, 0.66
  0.83, 0.61, -1.04, 0.15
  4.27, -1.18, -1.19, -0.21
  -1.22, -1.33, -0.55, -0.67
• Signal translated as the image is moved 8 pixels down:
  0, 0, 0.07, 0.05
  0, 0, 0.12, 0
  0, 0.22, 0.18, 0
  0.04, 0.05, 0, 0
• Inner product with the weights: 0.7 for the original signal, -0.7 for the translated signal.
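The two inner products on this slide can be checked numerically. The sketch below reads the slide's numbers as three 4 × 4 arrays (original signal, weights, translated signal); entries the slide does not show in the last two rows of the original signal are assumed to be 0.

```python
import numpy as np

signal = np.array([
    [0.00, 0.22, 0.18, 0.00],
    [0.04, 0.05, 0.00, 0.00],
    [0.00, 0.00, 0.00, 0.00],   # entries not shown on the slide assumed 0
    [0.00, 0.00, 0.00, 0.00],   # entries not shown on the slide assumed 0
])
weights = np.array([
    [ 2.22,  2.97,  0.07,  0.66],
    [ 0.83,  0.61, -1.04,  0.15],
    [ 4.27, -1.18, -1.19, -0.21],
    [-1.22, -1.33, -0.55, -0.67],
])
# Signal after the image is moved 8 pixels down (2 cells at 4 pixels per cell).
translated = np.array([
    [0.00, 0.00, 0.07, 0.05],
    [0.00, 0.00, 0.12, 0.00],
    [0.00, 0.22, 0.18, 0.00],
    [0.04, 0.05, 0.00, 0.00],
])

before = float(np.sum(signal * weights))
after = float(np.sum(translated * weights))
print(round(before, 1), round(after, 1))  # 0.7 -0.7
```

The same pattern gives a positive response where it meets the positive weights in the upper rows and a negative response once it lands on the negative weights in the lower rows.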

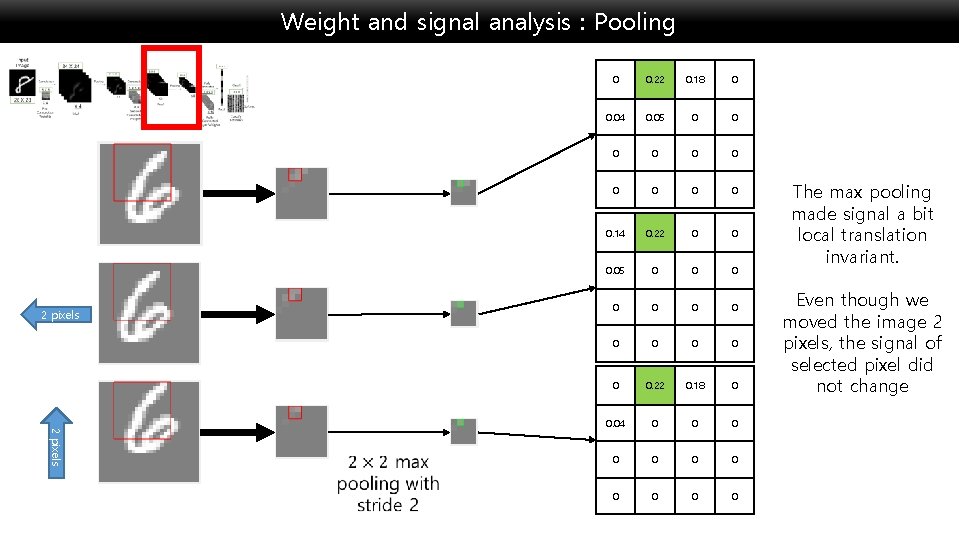

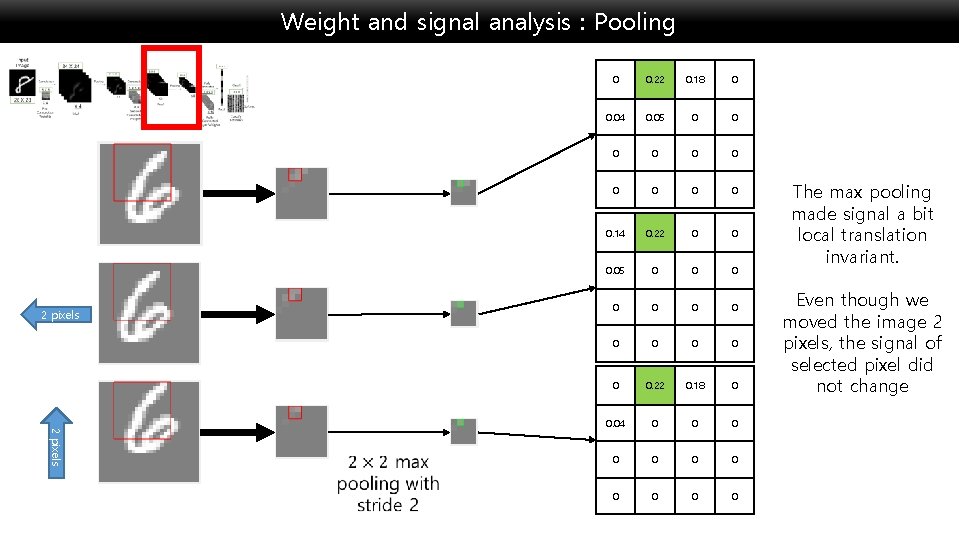

Weight and signal analysis: Pooling
• Figure: signal maps as the image is moved by 2 pixels (values shown: 0, 0.22, 0.18, 0, 0.04, 0.05, 0, 0, 0.14, 0.22, 0, 0, 0.05, 0, 0, 0, 0.22, 0.18, 0, 0.04, 0, 0, 0).
• Max pooling makes the signal somewhat invariant to local translation.
• Even though we moved the image by 2 pixels, the signal at the selected position did not change.
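A small sketch of why max pooling gives only local translation invariance, assuming 2 × 2 pooling with stride 2 and a toy map with a single peak (the map itself is hypothetical): a shift that keeps the peak inside the same pooling window leaves the pooled map unchanged, while a larger shift moves it.

```python
import numpy as np

def max_pool_2x2(x):
    """2 x 2 max pooling with stride 2 on a 2-D map."""
    H, W = x.shape
    return x.reshape(H // 2, 2, W // 2, 2).max(axis=(1, 3))

x = np.zeros((4, 4))
x[0, 0] = 0.22                        # single peak in the top-left pooling window

shift_1 = np.roll(x, 1, axis=1)       # peak moves to (0, 1): same pooling window
shift_2 = np.roll(x, 2, axis=1)       # peak moves to (0, 2): next pooling window

same_after_small_shift = np.array_equal(max_pool_2x2(x), max_pool_2x2(shift_1))
same_after_large_shift = np.array_equal(max_pool_2x2(x), max_pool_2x2(shift_2))
print(same_after_small_shift, same_after_large_shift)  # True False
```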

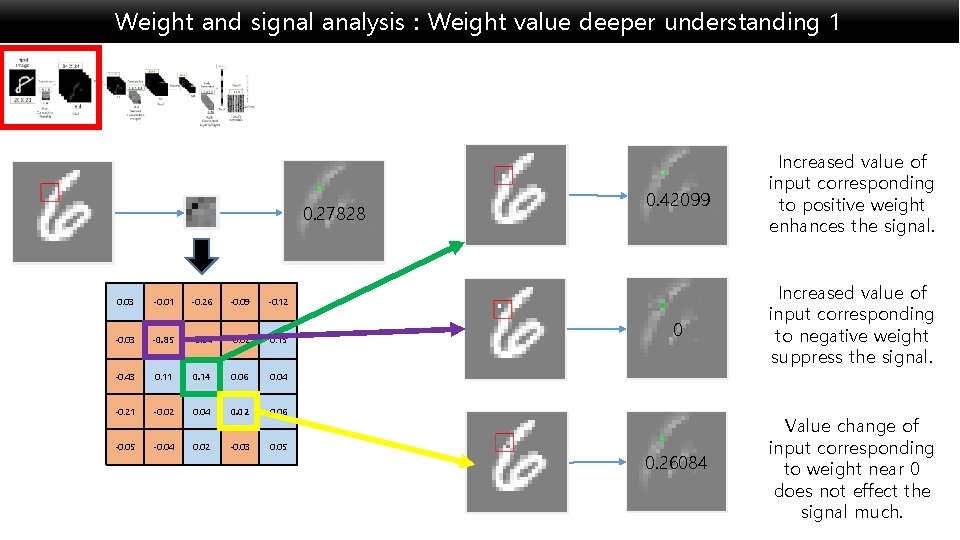

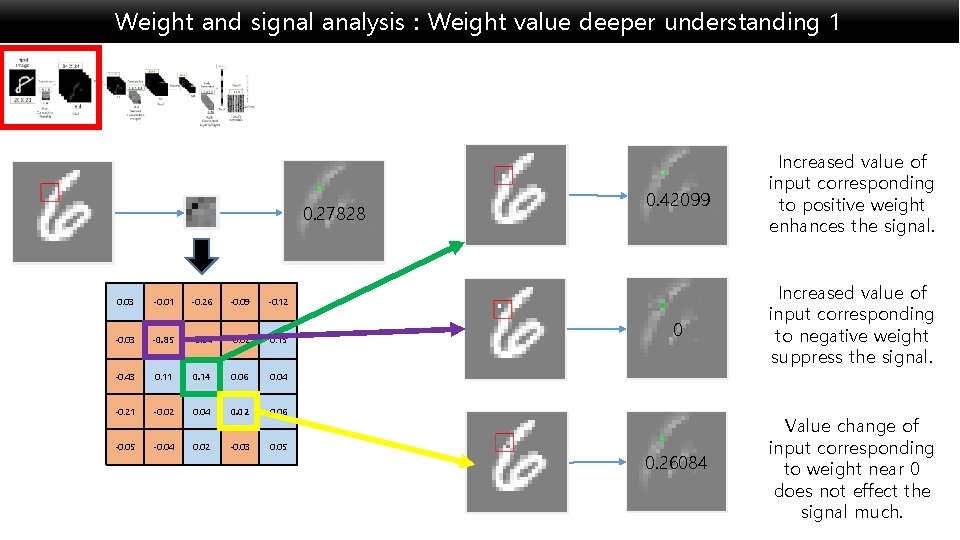

Weight and signal analysis: Weight value, deeper understanding 1
• Weight values (5 × 5):
  0.03, -0.01, -0.26, -0.09, -0.12
  -0.03, -0.85, -0.04, -0.02, 0.15
  -0.43, 0.11, 0.14, 0.06, 0.04
  -0.21, -0.02, 0.04, 0.02, 0.06
  -0.05, -0.04, 0.02, -0.03, 0.05
• Signal shown for the reference input: 0.27828
• Signal 0.42099: increasing the value of an input that corresponds to a positive weight enhances the signal.
• Signal 0: increasing the value of an input that corresponds to a negative weight suppresses the signal.
• Signal 0.26084: changing the value of an input that corresponds to a weight near 0 does not affect the signal much.
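The three effects can be reproduced with the 5 × 5 weights from this slide (read row by row, which is an assumption about the layout) and a hypothetical input patch. The patch, the omitted bias, and the helper name `respond` are illustrative choices, so the outputs differ from the values on the slide.

```python
import numpy as np

weights = np.array([
    [ 0.03, -0.01, -0.26, -0.09, -0.12],
    [-0.03, -0.85, -0.04, -0.02,  0.15],
    [-0.43,  0.11,  0.14,  0.06,  0.04],
    [-0.21, -0.02,  0.04,  0.02,  0.06],
    [-0.05, -0.04,  0.02, -0.03,  0.05],
])

def respond(patch):
    """Inner product with the filter followed by ReLU (bias omitted)."""
    return max(float(np.sum(patch * weights)), 0.0)

# Hypothetical patch: active only where three positive weights sit.
patch = np.zeros((5, 5))
patch[1, 4] = patch[2, 1] = patch[2, 2] = 1.0
base = respond(patch)                       # 0.15 + 0.11 + 0.14

enhanced_patch = patch.copy()
enhanced_patch[2, 2] += 1.0                 # positive weight 0.14
enhanced = respond(enhanced_patch)

suppressed_patch = patch.copy()
suppressed_patch[1, 1] += 1.0               # negative weight -0.85
suppressed = respond(suppressed_patch)

neutral_patch = patch.copy()
neutral_patch[0, 1] += 1.0                  # weight near zero, -0.01
neutral = respond(neutral_patch)

print(round(base, 2), round(enhanced, 2), round(suppressed, 2), round(neutral, 2))
```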

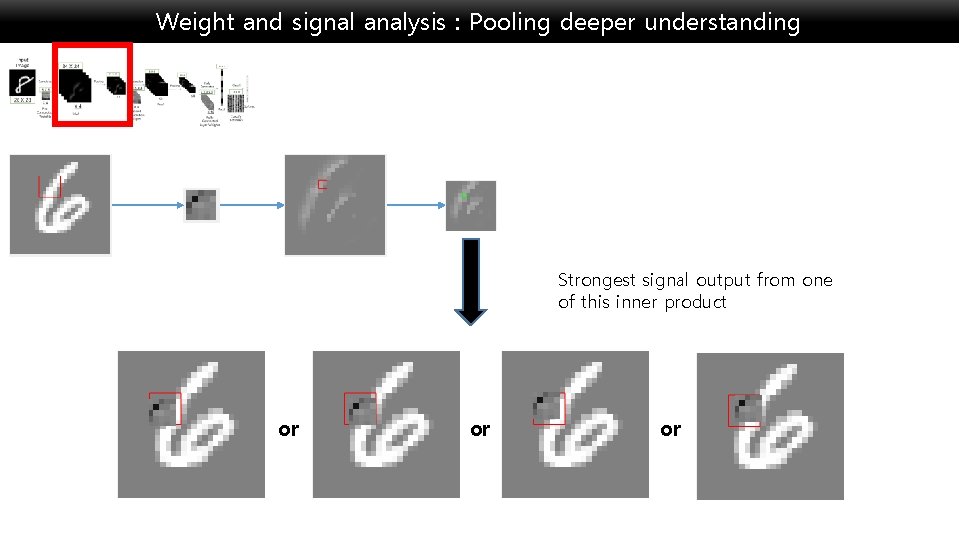

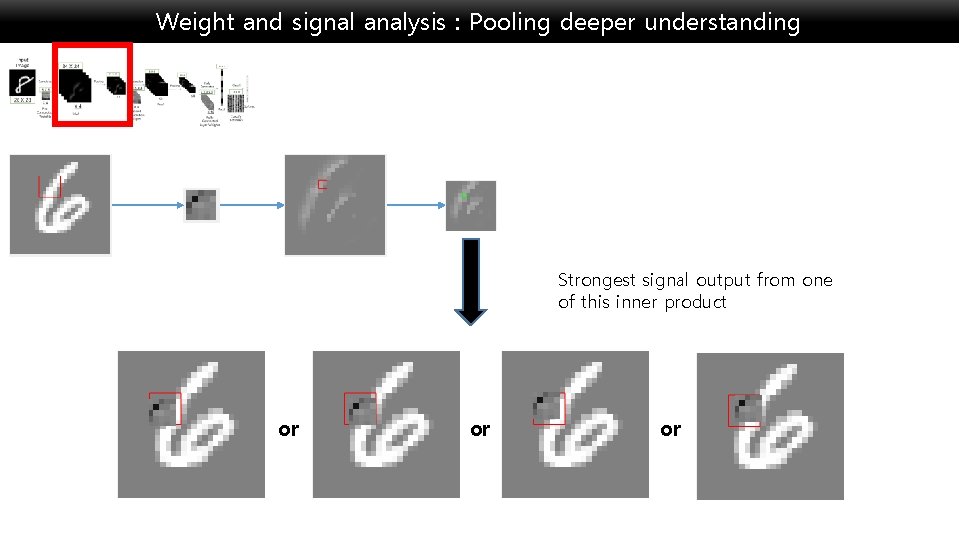

Weight and signal analysis: Pooling, deeper understanding
• The pooled value is the strongest signal output among these inner products: it comes from the first, or the second, or the third, or the fourth.
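In other words, a max-pooled value after a convolution is the largest of the inner products taken at neighboring positions. A minimal sketch, assuming a 5 × 5 filter and one 2 × 2 pooling window, with random data standing in for real signals:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.random((6, 6))                 # hypothetical input region
w = rng.standard_normal((5, 5))        # hypothetical 5 x 5 filter

# Valid convolution of a 6 x 6 region with a 5 x 5 filter gives a 2 x 2 map.
conv = np.zeros((2, 2))
for i in range(2):
    for j in range(2):
        conv[i, j] = np.sum(x[i:i + 5, j:j + 5] * w)

pooled = conv.max()                    # one 2 x 2 max-pooling window

# The same value, written as "this inner product or this one or ...".
four_products = [
    np.sum(x[0:5, 0:5] * w),
    np.sum(x[0:5, 1:6] * w),
    np.sum(x[1:6, 0:5] * w),
    np.sum(x[1:6, 1:6] * w),
]
strongest = max(four_products)
print(np.isclose(pooled, strongest))
```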

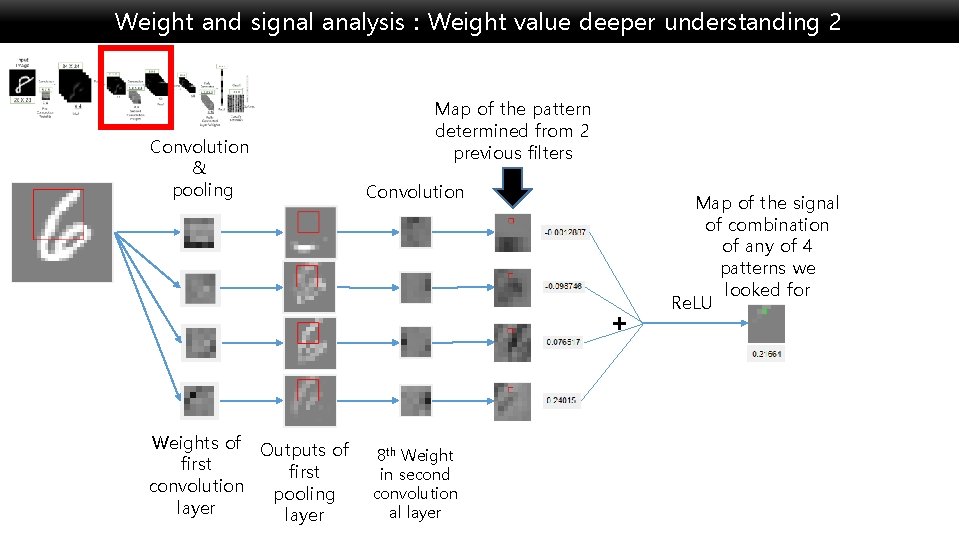

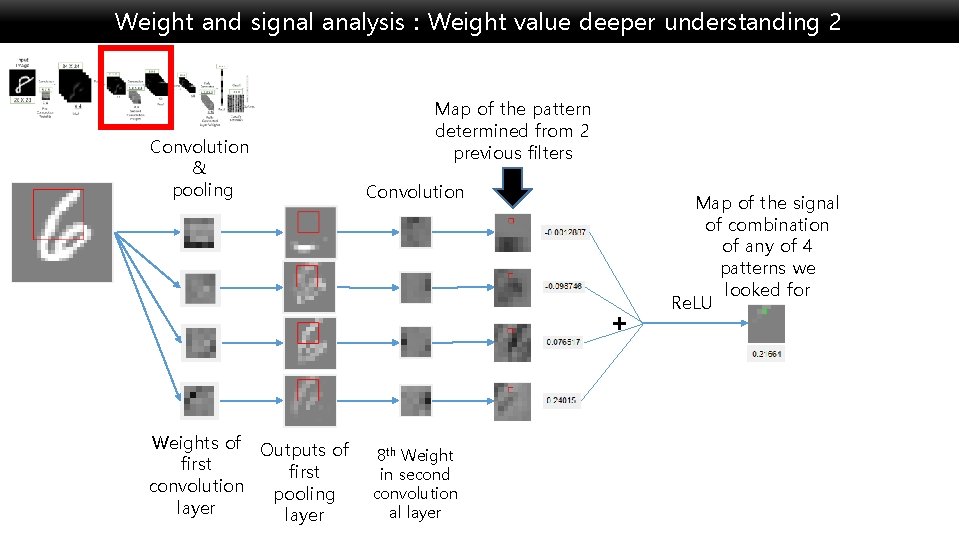

Weight and signal analysis: Weight value, deeper understanding 2
• Figure labels: outputs of first convolution + pooling layer; weights of the 8th weight in the second convolutional layer; convolution & pooling; ReLU.
• Map of the pattern determined from the 2 previous filters.
• Map of the signal of a combination of any of the 4 patterns we looked for.

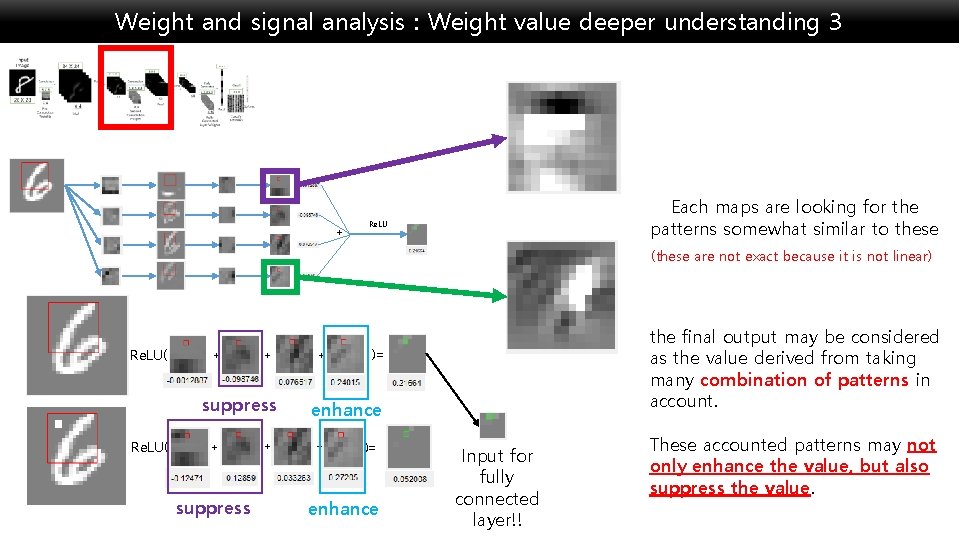

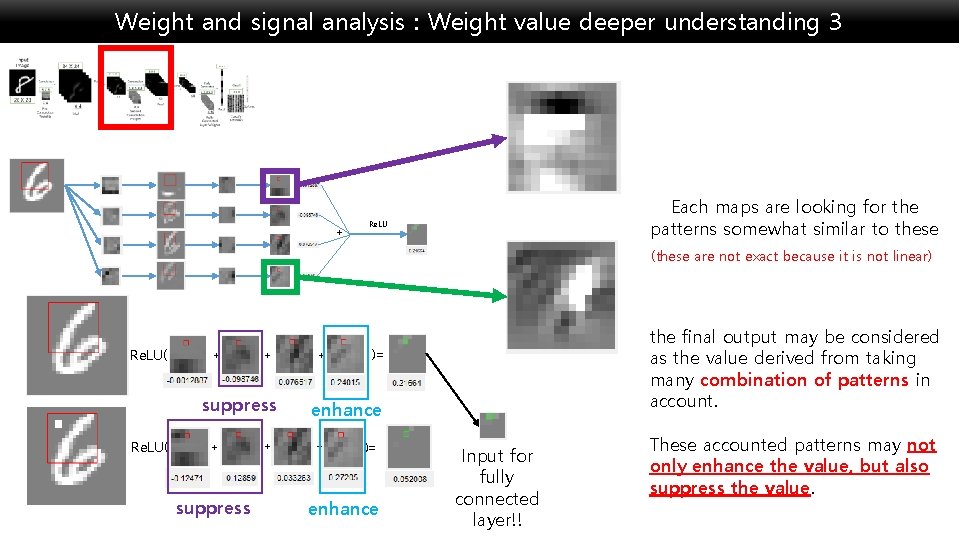

Weight and signal analysis: Weight value, deeper understanding 3
• Each map is looking for patterns somewhat similar to these (they are not exact because the network is not linear).
• Figure: ReLU( pattern + pattern + ... ) = output, where some patterns enhance and others suppress the value. The output is the input for the fully connected layer.
• The final output may be considered as the value derived from taking many combinations of patterns into account.
• These patterns may not only enhance the value, but also suppress it.

Why was the CNN fooled or not fooled by the examples?
• The strong 13th signal usually tells whether the input is a 6 or not, because of the pattern it looks for.
• Figure labels: ignored; library of 16 features for 6; 14th MNIST data; classified as 6; confuses between 6 and 0; classified as 1.
• The existence of a "/" pattern in the middle fooled the network into thinking the input is a 1.

Conclusion
• A CNN with ReLU looks for combinations of patterns as it gets deeper.
• The pooling layer tells the CNN that we are looking for features that are invariant to local translation.
• Deeper layers allow the network to look for more complex combinations of patterns. They also allow wider invariance for local patterns.
• By knowing exactly what the CNN looks for, we gain a deeper understanding of how the CNN works and of what it can and cannot do.

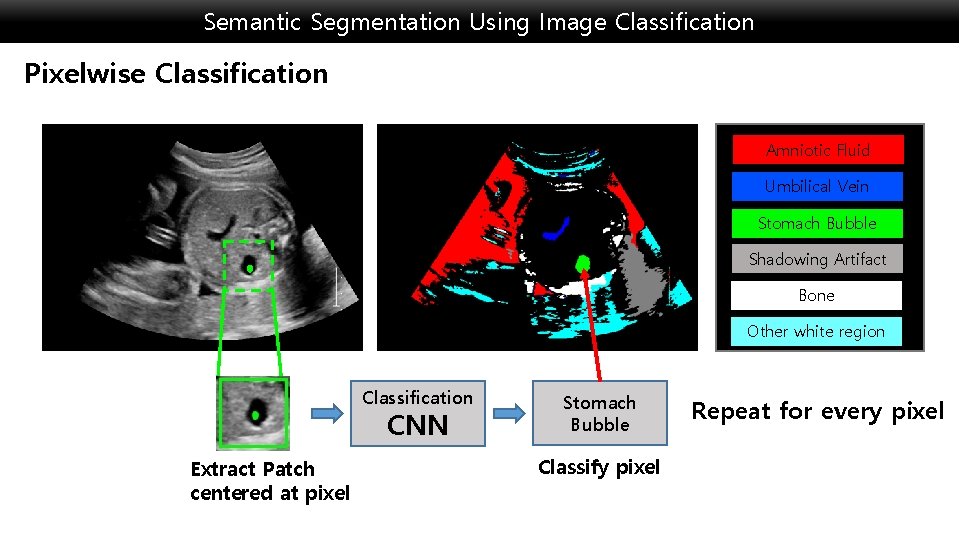

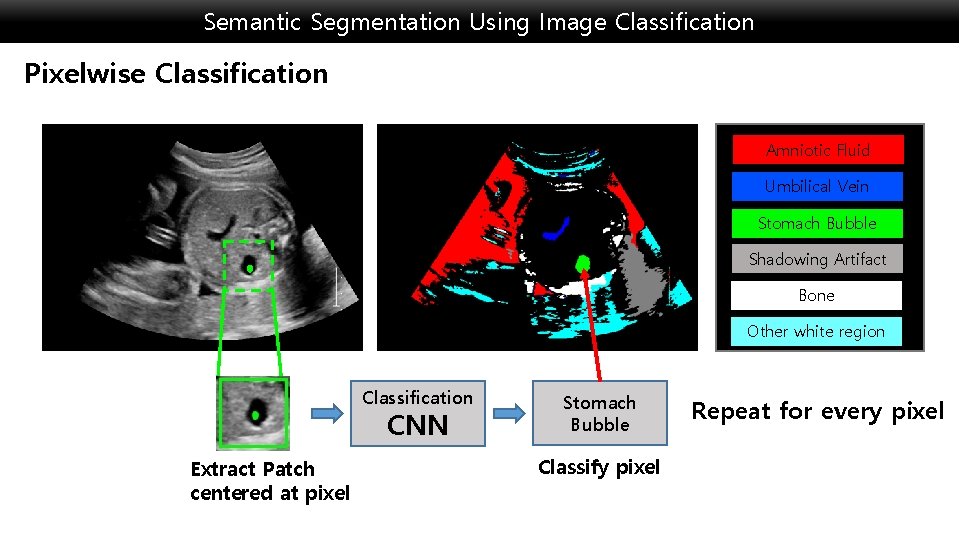

Semantic Segmentation Using Image Classification
• Pixelwise classification classes: Amniotic Fluid, Umbilical Vein, Stomach Bubble, Shadowing Artifact, Bone, Other white region.
• Extract a patch centered at a pixel.
• Pass the patch to the classification CNN.
• Classify the pixel (for example, as Stomach Bubble).
• Repeat for every pixel.
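The patch-based procedure can be sketched as a loop over pixels. Everything below is a stand-in under stated assumptions: `segment` and `toy_classifier` are hypothetical names, the patch size and reflect padding are arbitrary choices, and the toy classifier replaces the trained classification CNN.

```python
import numpy as np

CLASSES = ["Amniotic Fluid", "Umbilical Vein", "Stomach Bubble",
           "Shadowing Artifact", "Bone", "Other white region"]

def segment(image, classify_patch, patch_size=5):
    """Label every pixel by classifying the patch centered on it."""
    half = patch_size // 2
    padded = np.pad(image, half, mode="reflect")   # so border pixels get full patches
    labels = np.zeros(image.shape, dtype=int)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            patch = padded[r:r + patch_size, c:c + patch_size]
            labels[r, c] = classify_patch(patch)   # index into CLASSES
    return labels

def toy_classifier(patch):
    """Stand-in for the CNN: bright patches -> class 5, dark patches -> class 0."""
    return 5 if patch.mean() > 0.5 else 0

image = np.zeros((8, 8))
image[:, 4:] = 1.0                                 # bright right half
labels = segment(image, toy_classifier)
print(CLASSES[labels[0, 0]], "|", CLASSES[labels[0, 7]])
```

Classifying one patch per pixel is simple but repeats a lot of computation for overlapping patches, which is the main cost of this design.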

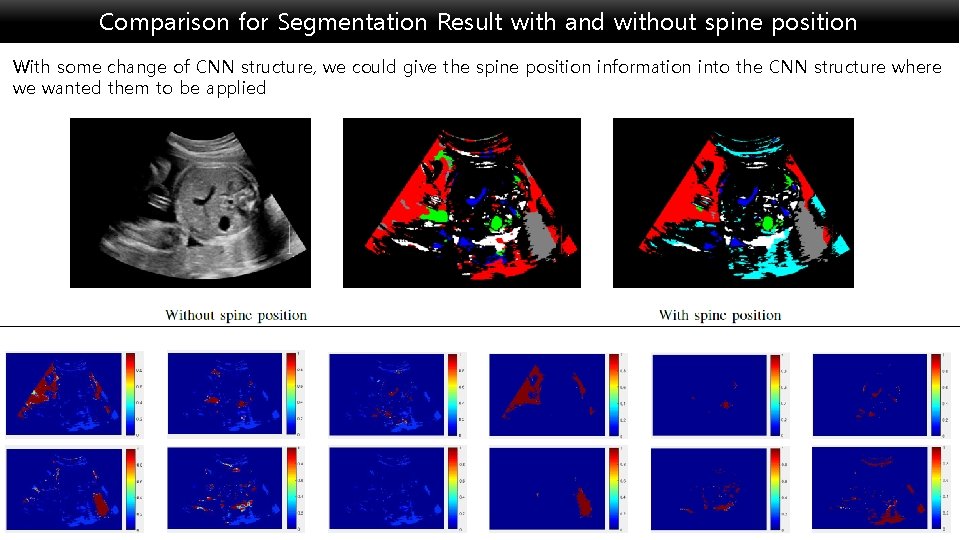

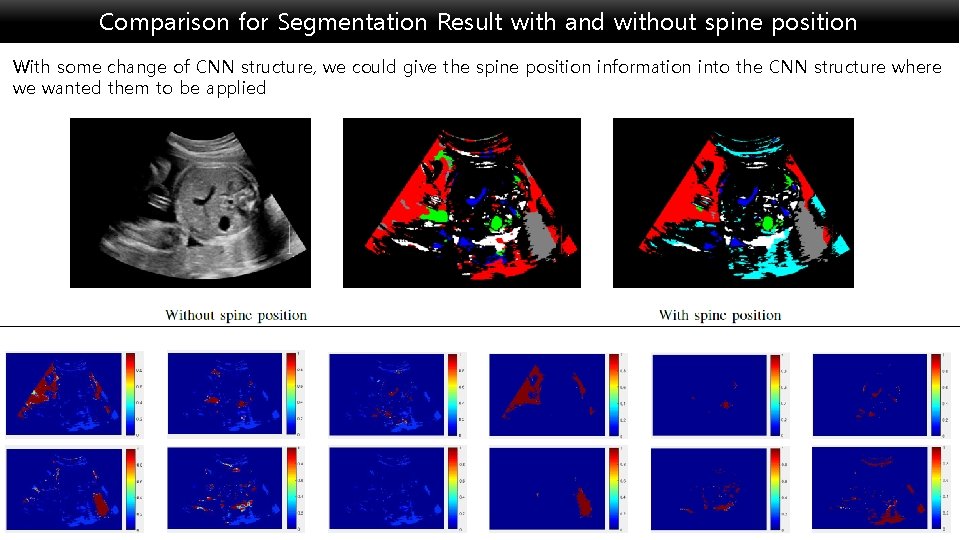

Comparison of segmentation results with and without spine position
• With some changes to the CNN structure, we could feed the spine position information into the CNN at the point where we wanted it to be applied.