Analysis of Cilk A Formal Model for Cilk

- Slides: 42

Analysis of Cilk

A Formal Model for Cilk

- A thread: a maximal sequence of instructions in a procedure instance (at run time!) not containing spawn, sync, or return
- For a given computation, define a dag
- Threads are vertices
- Continuation edges within procedures
- Spawn edges
- Initial & final threads (in main)

Work & Critical Path

- Threads are sequential: work = running time
- Define $T_P$ = running time on $P$ processors
- Then $T_1$ = work in the computation
- And $T_\infty$ = critical-path length, the longest path in the dag

Lower Bounds on $T_P$

- $T_P \ge T_1 / P$ (no miracles in the model, but they do happen occasionally)
- $T_P \ge T_\infty$ (dependencies limit parallelism)
- Speedup is $T_1 / T_P$
- (Asymptotic) linear speedup means a speedup of $\Theta(P)$
- Parallelism is $T_1 / T_\infty$ (average work available at every step along the critical path)

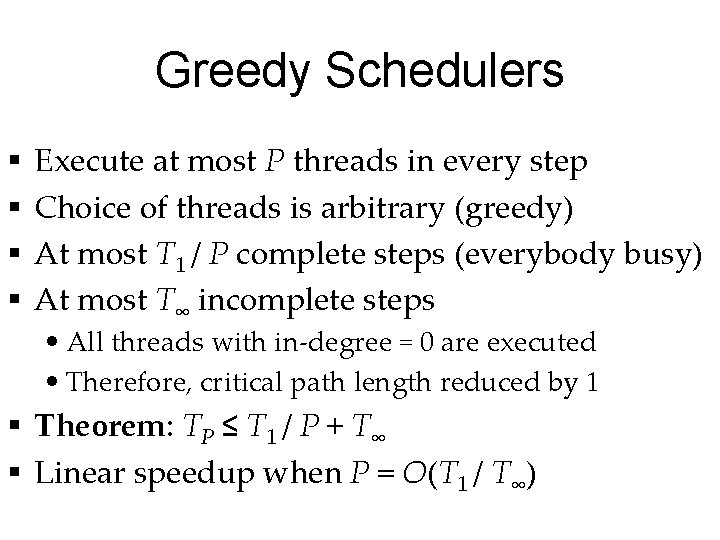

Greedy Schedulers

- Execute at most $P$ threads in every step
- Choice of threads is arbitrary (greedy)
- At most $T_1 / P$ complete steps (everybody busy)
- At most $T_\infty$ incomplete steps
  - All threads with in-degree = 0 are executed
  - Therefore, the critical-path length is reduced by 1
- Theorem: $T_P \le T_1 / P + T_\infty$
- Linear speedup when $P = O(T_1 / T_\infty)$
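
The greedy bound can be checked on a toy dag. The sketch below is not Cilk's scheduler: it assumes unit-time threads, a dag given as a dictionary of successor lists, and a hypothetical helper `greedy_steps`; the example dag is made up for illustration.

```python
def greedy_steps(dag, p):
    """Steps a greedy scheduler needs on p processors (unit-time threads).

    dag maps every thread to the list of its successors.
    """
    indeg = {v: 0 for v in dag}
    for succs in dag.values():
        for w in succs:
            indeg[w] += 1
    ready = [v for v in dag if indeg[v] == 0]
    steps = 0
    while ready:
        # Arbitrary (greedy) choice: run at most p of the ready threads.
        running, ready = ready[:p], ready[p:]
        for v in running:
            for w in dag[v]:
                indeg[w] -= 1
                if indeg[w] == 0:
                    ready.append(w)  # becomes runnable in a later step
        steps += 1
    return steps


# Illustrative dag: s forks a, b, c and the chain d -> e; everything joins in t.
dag = {"s": ["a", "b", "c", "d"], "a": ["t"], "b": ["t"], "c": ["t"],
       "d": ["e"], "e": ["t"], "t": []}
t1 = greedy_steps(dag, 1)            # work: one thread per step
tinf = greedy_steps(dag, len(dag))   # enough processors: critical-path length
t2 = greedy_steps(dag, 2)
```

Here the work is 7 and the critical path is 4, so any greedy schedule on 2 processors must take between 4 and 7/2 + 4 steps.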

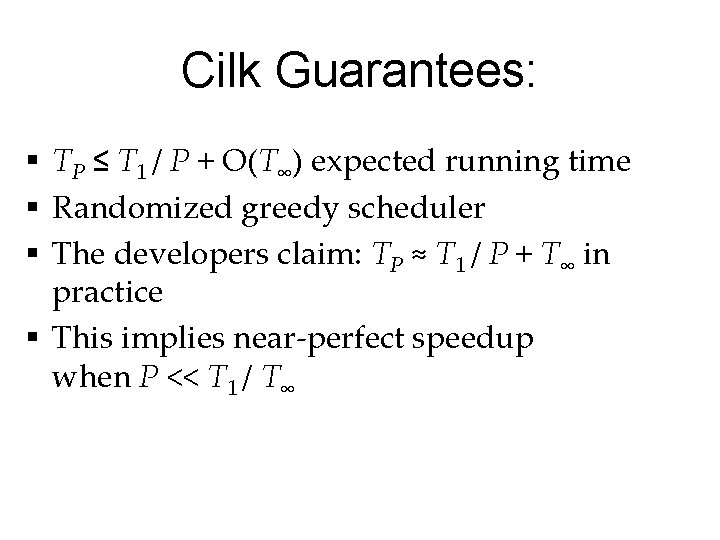

Cilk Guarantees:

- $T_P \le T_1 / P + O(T_\infty)$ expected running time
- Randomized greedy scheduler
- The developers claim: $T_P \approx T_1 / P + T_\infty$ in practice
- This implies near-perfect speedup when $P \ll T_1 / T_\infty$

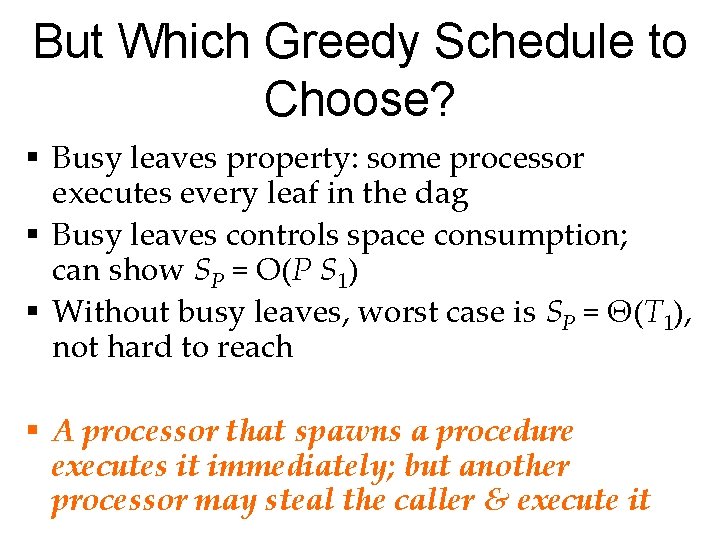

But Which Greedy Schedule to Choose?

- Busy-leaves property: every leaf in the dag has some processor executing it
- Busy leaves controls space consumption; one can show $S_P = O(P \cdot S_1)$, where $S_P$ is the space used on $P$ processors
- Without busy leaves, the worst case is $S_P = \Theta(T_1)$, which is not hard to reach
- A processor that spawns a procedure executes it immediately, but another processor may steal the caller and execute it

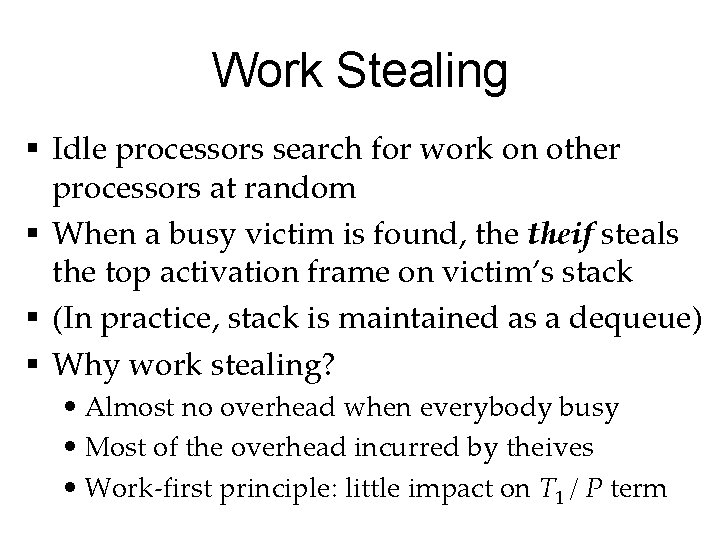

Work Stealing

- Idle processors search for work on other processors at random
- When a busy victim is found, the thief steals the top activation frame on the victim’s stack
- (In practice, the stack is maintained as a deque)
- Why work stealing?
  - Almost no overhead when everybody is busy
  - Most of the overhead is incurred by thieves
  - Work-first principle: little impact on the $T_1 / P$ term
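
The deque discipline can be sketched as follows. This is only an illustration: the class `Worker` and its method names are hypothetical, "top" is taken to mean the oldest frame, and the synchronization between owner and thief that a real runtime needs is omitted.

```python
from collections import deque


class Worker:
    """One processor's ready deque; index 0 is the top (oldest frame)."""

    def __init__(self):
        self.frames = deque()

    def push(self, frame):
        # The owner pushes newly spawned frames at the bottom.
        self.frames.append(frame)

    def pop(self):
        # The owner also pops at the bottom, like an ordinary call stack.
        return self.frames.pop() if self.frames else None

    def steal_from(self, victim):
        # A thief removes the victim's top frame and makes it its own work.
        if victim.frames:
            self.push(victim.frames.popleft())
            return True
        return False
```

The owner never touches the top of its own deque, so when everybody is busy the deque behaves like a plain stack; only thieves pay for the extra end.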

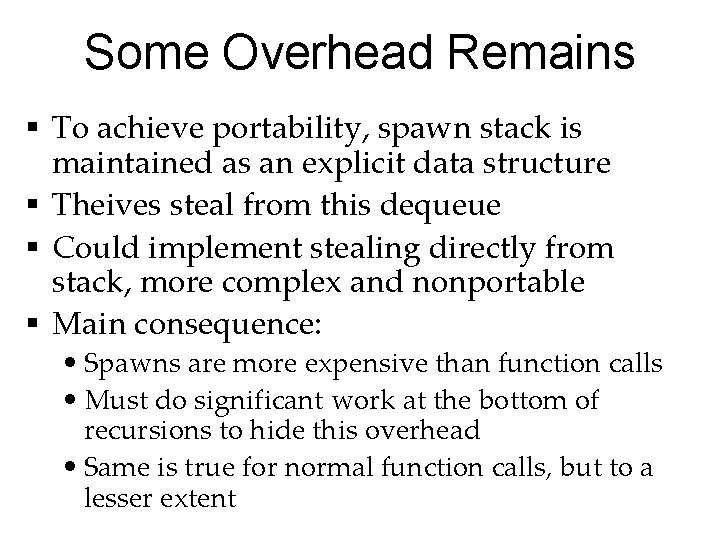

Some Overhead Remains

- To achieve portability, the spawn stack is maintained as an explicit data structure
- Thieves steal from this deque
- Stealing directly from the stack could be implemented, but it is more complex and nonportable
- Main consequences:
  - Spawns are more expensive than function calls
  - Must do significant work at the bottom of recursions to hide this overhead
  - The same is true for normal function calls, but to a lesser extent

More Cilk Features

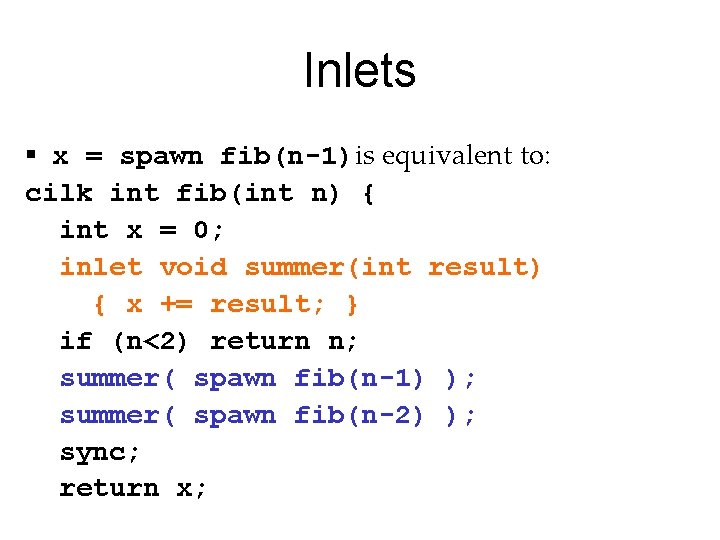

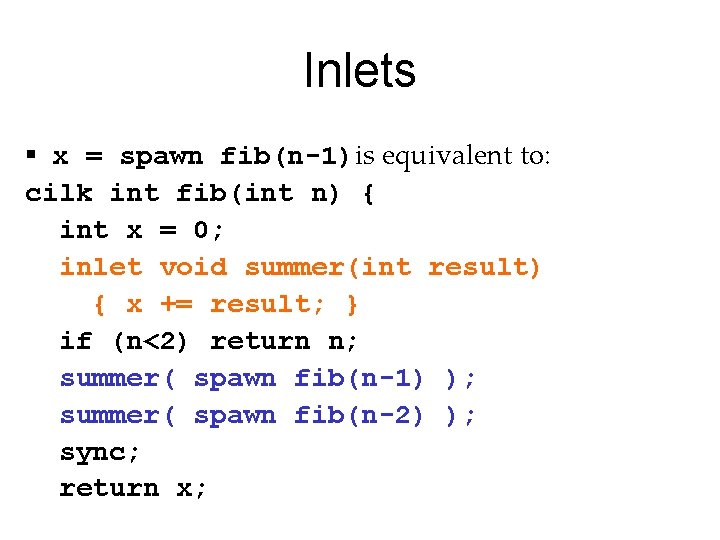

Inlets

x = spawn fib(n-1) is equivalent to:

    cilk int fib(int n)
    {
        int x = 0;
        inlet void summer(int result) { x += result; }
        if (n < 2) return n;
        summer( spawn fib(n-1) );
        summer( spawn fib(n-2) );
        sync;
        return x;
    }

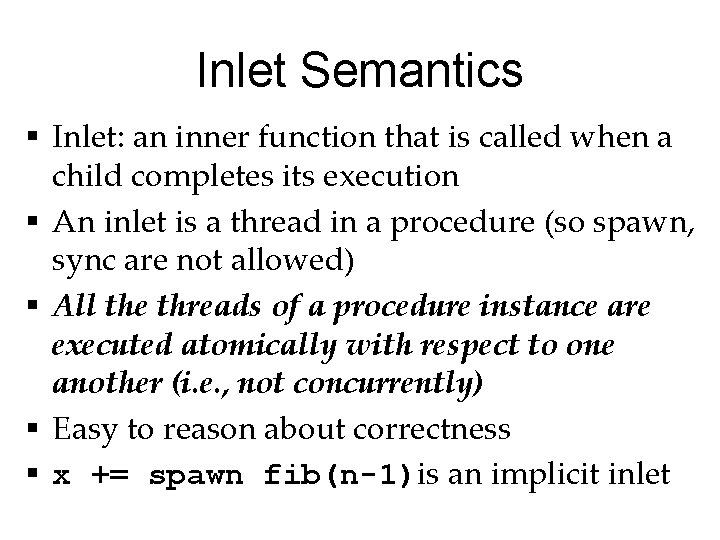

Inlet Semantics

- Inlet: an inner function that is called when a child completes its execution
- An inlet is a thread in a procedure (so spawn and sync are not allowed)
- All the threads of a procedure instance are executed atomically with respect to one another (i.e., not concurrently)
- Easy to reason about correctness
- x += spawn fib(n-1) is an implicit inlet

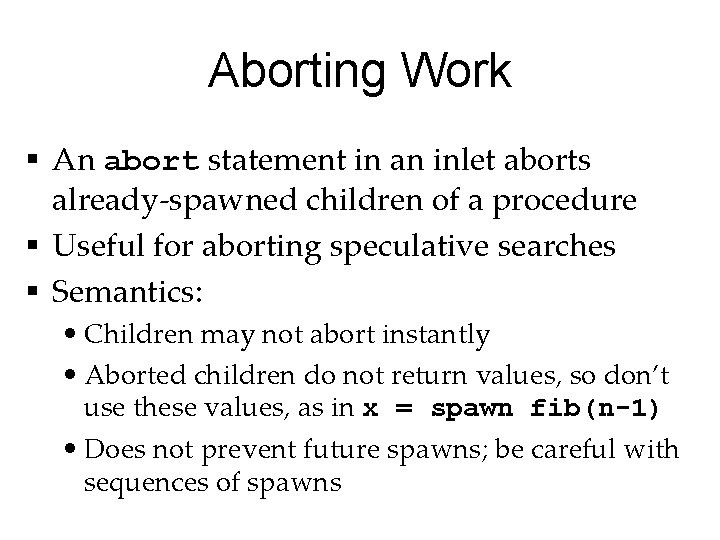

Aborting Work

- An abort statement in an inlet aborts already-spawned children of a procedure
- Useful for aborting speculative searches
- Semantics:
  - Children may not abort instantly
  - Aborted children do not return values, so don’t use these values, as in x = spawn fib(n-1)
  - Does not prevent future spawns; be careful with sequences of spawns

The SYNCHED Built-In Variable

- True only if no children are currently executing
- False if some children may be executing now
- Useful for skipping space and work overheads whose only purpose is to shorten the critical path, when there is no need for them

Cilk’s Memory Model

- Memory operations of two threads are guaranteed to be ordered only if there is a dependence path between them (ancestor-descendant relationship)
- Unordered threads may see inconsistent views of memory

Locks

- Mutual-exclusion variables
- Memory operations that a thread performs before releasing a lock are seen by other threads after they acquire the lock
- Using locks invalidates all the performance guarantees that Cilk provides
- In short, Cilk supports locks, but don’t use them unless you must

Useful but Obsolete

- Cilk as a library
  - Can call Cilk procedures from C, C++, Fortran
  - Necessary for building general-purpose C libraries
- Cilk on clusters with distributed memory
  - Programmer sees the same shared-memory model
  - Used an interesting memory-consistency protocol to support the shared-memory view
  - Was performance ever good enough?

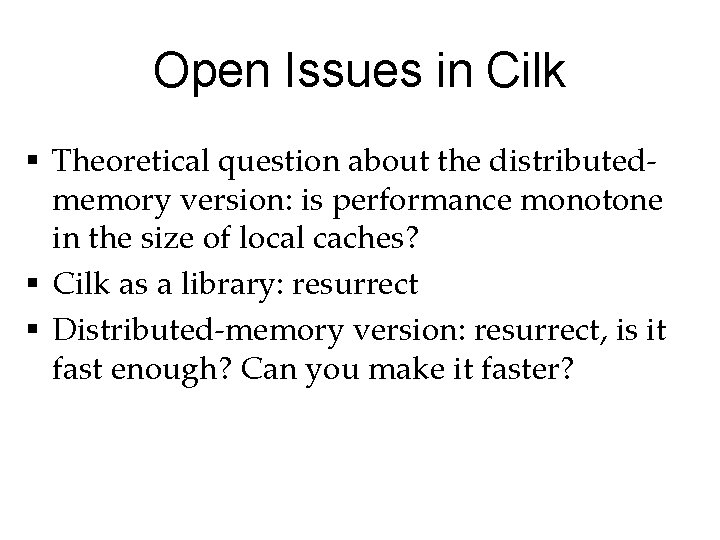

Some Open Problems

Perhaps good enough for a thesis

Open Issues in Cilk

- Theoretical question about the distributed-memory version: is performance monotone in the size of local caches?
- Cilk as a library: resurrect
- Distributed-memory version: resurrect; is it fast enough? Can you make it faster?

Parallel Merge Sort in Cilk

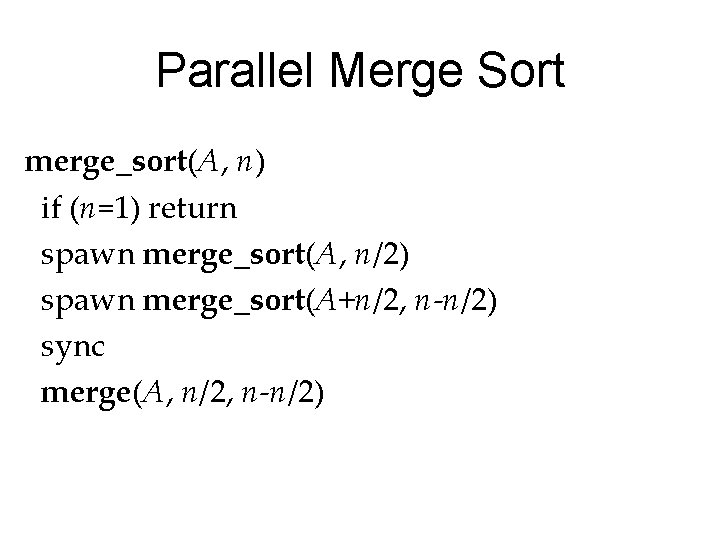

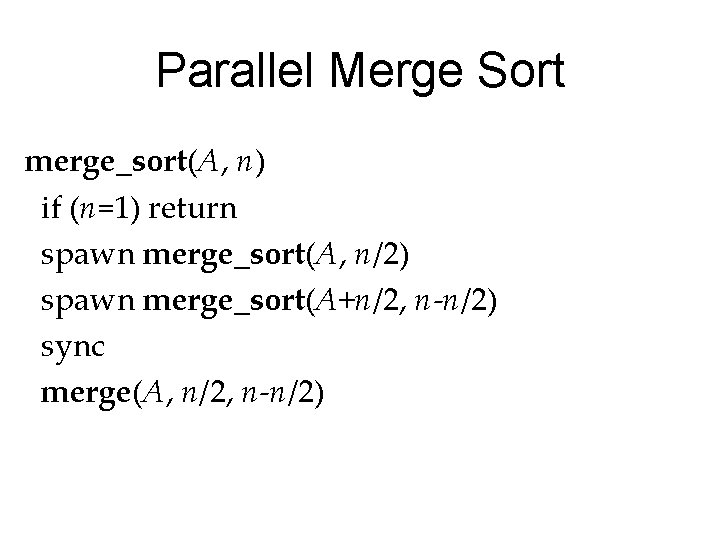

Parallel Merge Sort

    merge_sort(A, n)
        if (n = 1) return
        spawn merge_sort(A, n/2)
        spawn merge_sort(A+n/2, n-n/2)
        sync
        merge(A, n/2, n-n/2)

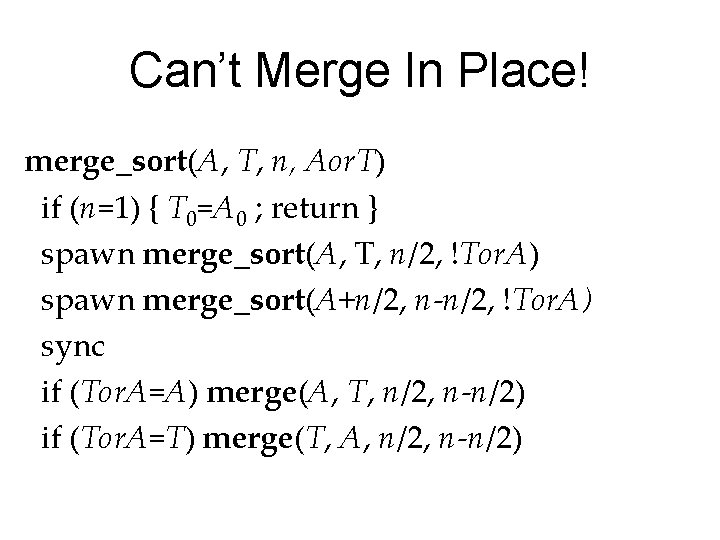

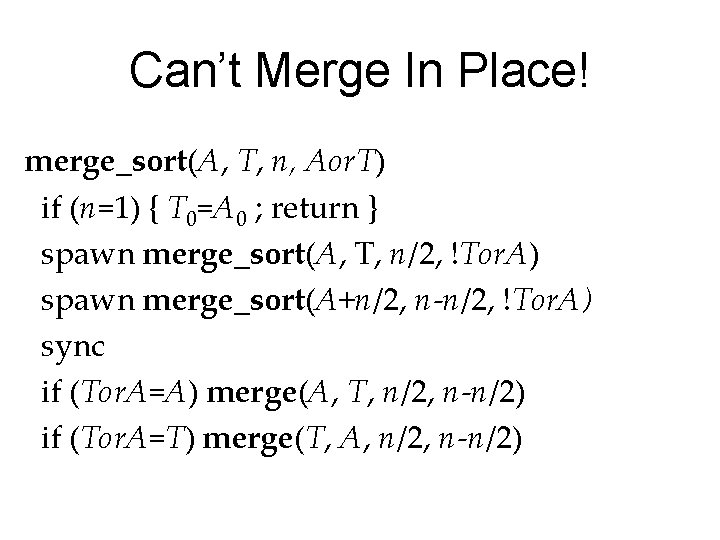

Can’t Merge In Place!

    merge_sort(A, T, n, TorA)
        if (n = 1) { T[0] = A[0]; return }
        spawn merge_sort(A, T, n/2, !TorA)
        spawn merge_sort(A+n/2, T+n/2, n-n/2, !TorA)
        sync
        if (TorA = A) merge(A, T, n/2, n-n/2)
        if (TorA = T) merge(T, A, n/2, n-n/2)
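
The alternation between the array and the temporary can be traced with a sequential sketch. It assumes index ranges instead of pointer arithmetic, a boolean `into_t` in place of the TorA flag, and a hypothetical helper `merge_into`; the two recursive calls are where Cilk would spawn.

```python
def merge_into(dst, src, lo, mid, hi):
    """Merge sorted src[lo:mid] and src[mid:hi] into dst[lo:hi]."""
    i, j = lo, mid
    for k in range(lo, hi):
        if i < mid and (j >= hi or src[i] <= src[j]):
            dst[k] = src[i]
            i += 1
        else:
            dst[k] = src[j]
            j += 1


def merge_sort(a, t, lo, hi, into_t):
    """Sort a[lo:hi]; the result ends up in t if into_t, otherwise in a."""
    if hi - lo == 1:
        t[lo] = a[lo]  # after the copy both arrays hold the one-element run
        return
    mid = lo + (hi - lo) // 2
    # In Cilk these two calls would be spawned, followed by a sync.
    merge_sort(a, t, lo, mid, not into_t)
    merge_sort(a, t, mid, hi, not into_t)
    if into_t:
        merge_into(t, a, lo, mid, hi)  # children left their runs in a
    else:
        merge_into(a, t, lo, mid, hi)  # children left their runs in t


data = [5, 2, 9, 1, 5, 6, 3]
tmp = [None] * len(data)
merge_sort(data, tmp, 0, len(data), False)  # sorted result lands in data
```

Each level of the recursion flips the flag, so every merge reads from one array and writes to the other.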

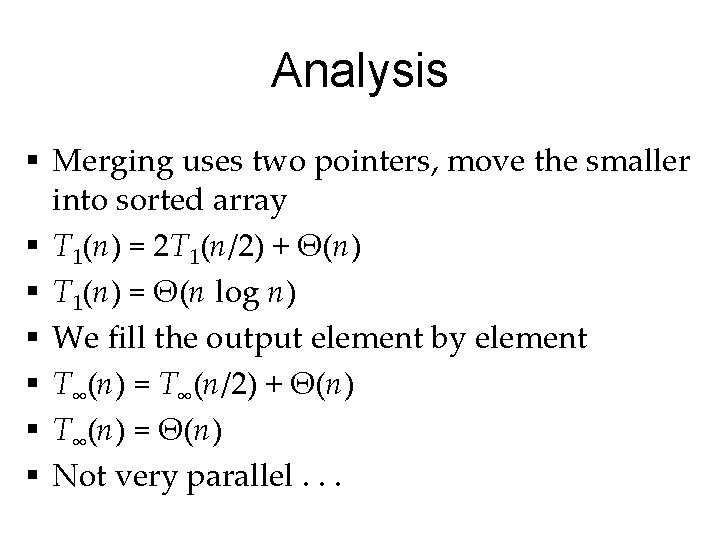

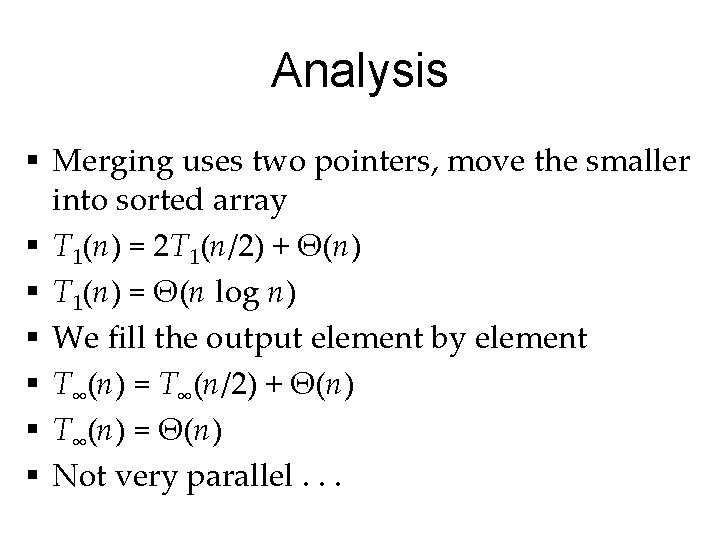

Analysis

- Merging uses two pointers and moves the smaller element into the sorted array
- $T_1(n) = 2 T_1(n/2) + \Theta(n)$
- $T_1(n) = \Theta(n \log n)$
- We fill the output element by element
- $T_\infty(n) = T_\infty(n/2) + \Theta(n)$
- $T_\infty(n) = \Theta(n)$
- Not very parallel...

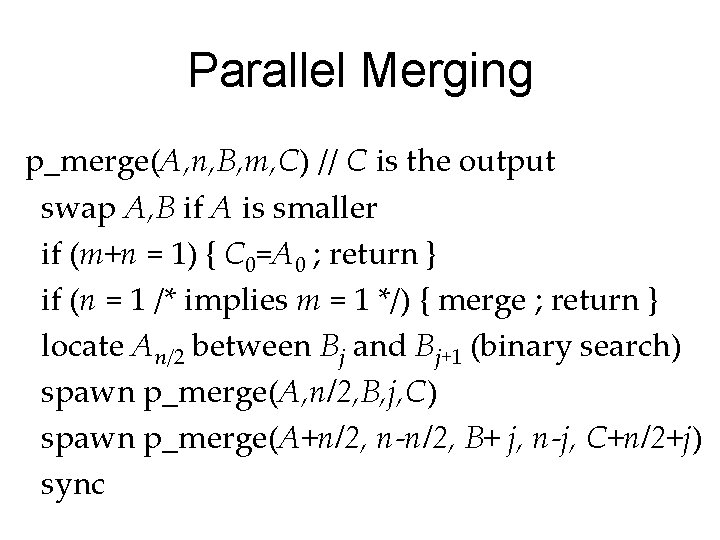

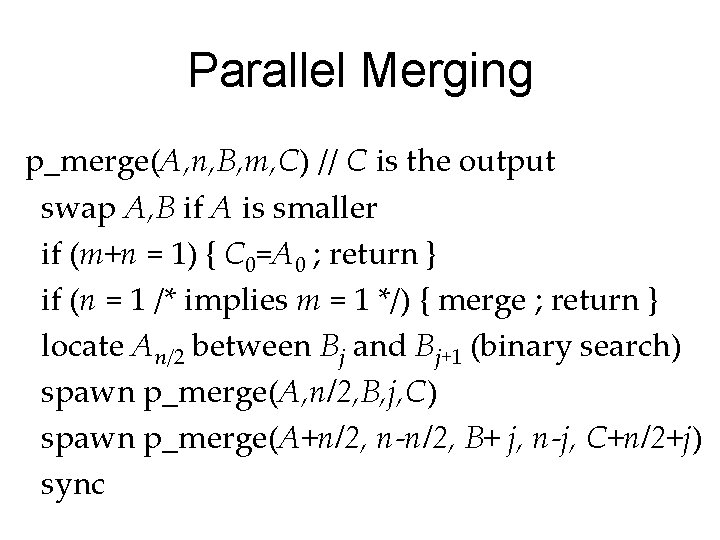

Parallel Merging

    p_merge(A, n, B, m, C)   // C is the output
        swap A, B if A is the smaller array (n < m)
        if (m+n = 1) { C[0] = A[0]; return }
        if (n = 1 /* implies m = 1 */) { merge; return }
        locate A[n/2] between B[j] and B[j+1] (binary search)
        spawn p_merge(A, n/2, B, j, C)
        spawn p_merge(A+n/2, n-n/2, B+j, m-j, C+n/2+j)
        sync
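
The divide step can be tried out sequentially. The sketch assumes Python lists and slices instead of pointers (slicing copies data, which the Cilk version avoids), uses `bisect_left` for the binary search, and runs the two recursive calls one after the other where Cilk would spawn them.

```python
from bisect import bisect_left


def p_merge(a, b, out, lo=0):
    """Merge sorted lists a and b into out[lo : lo + len(a) + len(b)]."""
    if len(a) < len(b):
        a, b = b, a              # make a the larger input
    n = len(a)
    if n == 0:
        return                   # both inputs are empty
    if n == 1:                   # then b has at most one element
        pair = sorted(a + b)
        out[lo:lo + len(pair)] = pair
        return
    half = n // 2
    j = bisect_left(b, a[half])  # everything in b[:j] is smaller than a[half]
    # In Cilk both calls would be spawned, followed by a sync.
    p_merge(a[:half], b[:j], out, lo)
    p_merge(a[half:], b[j:], out, lo + half + j)


a, b = [1, 4, 7, 10, 13], [2, 3, 8, 20]
c = [None] * (len(a) + len(b))
p_merge(a, b, c)                 # c now holds the merged sequence
```

The two subproblems write to disjoint parts of the output, which is what makes spawning them safe.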

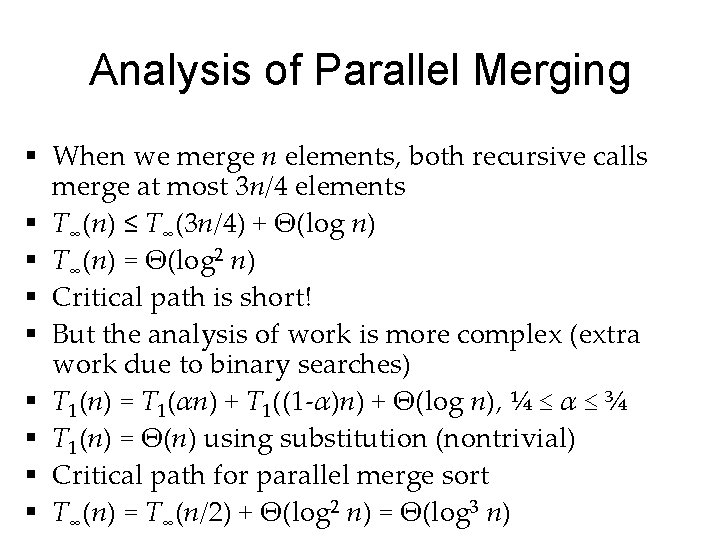

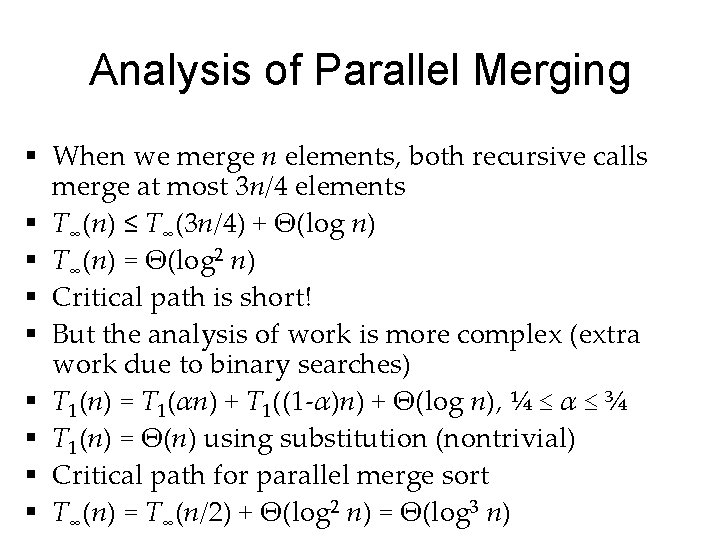

Analysis of Parallel Merging

- When we merge $n$ elements, both recursive calls merge at most $3n/4$ elements
- $T_\infty(n) \le T_\infty(3n/4) + \Theta(\log n)$
- $T_\infty(n) = \Theta(\log^2 n)$
- Critical path is short!
- But the analysis of work is more complex (extra work due to binary searches)
- $T_1(n) = T_1(\alpha n) + T_1((1-\alpha) n) + \Theta(\log n)$, $1/4 \le \alpha \le 3/4$
- $T_1(n) = \Theta(n)$ using substitution (nontrivial)
- Critical path for parallel merge sort:
  - $T_\infty(n) = T_\infty(n/2) + \Theta(\log^2 n) = \Theta(\log^3 n)$
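
The two critical-path bounds follow by unrolling the recurrences. The derivation below is a sketch that assumes $n$ is a power of 2 for the sort and writes $c$ for the constant hidden in the $\Theta(\log n)$ term; the superscripts "merge" and "sort" are labels added here to tell the two recurrences apart.

```latex
% Parallel merge: at most \log_{4/3} n levels, each costing at most c \log n.
T_\infty^{\mathrm{merge}}(n)
  \le \sum_{i=0}^{\log_{4/3} n} c \log\!\left( (3/4)^i n \right)
  \le c \log n \, \left( \log_{4/3} n + 1 \right)
  = O(\log^2 n)

% Parallel merge sort: level i merges runs of total size n / 2^i.
% Substituting k = \log_2 (n / 2^i) turns the sum into a sum of squares.
T_\infty^{\mathrm{sort}}(n)
  = \sum_{i=0}^{\log_2 n} \Theta\!\left( \log^2 (n / 2^i) \right)
  = \sum_{k=0}^{\log_2 n} \Theta(k^2)
  = \Theta(\log^3 n)
```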

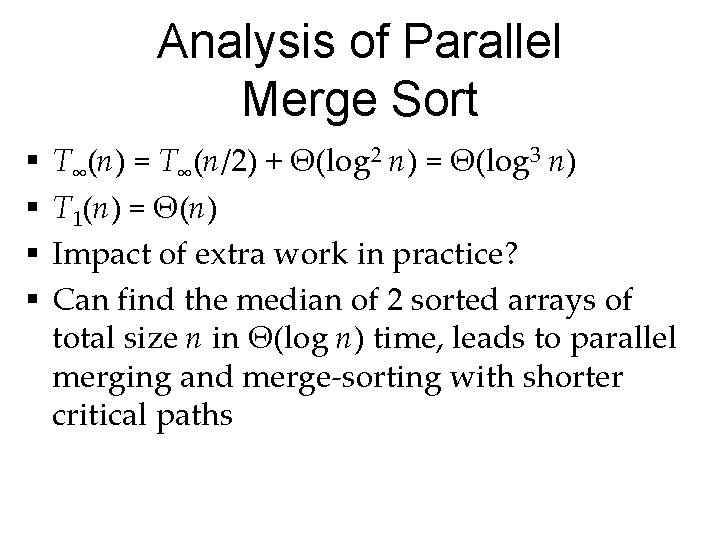

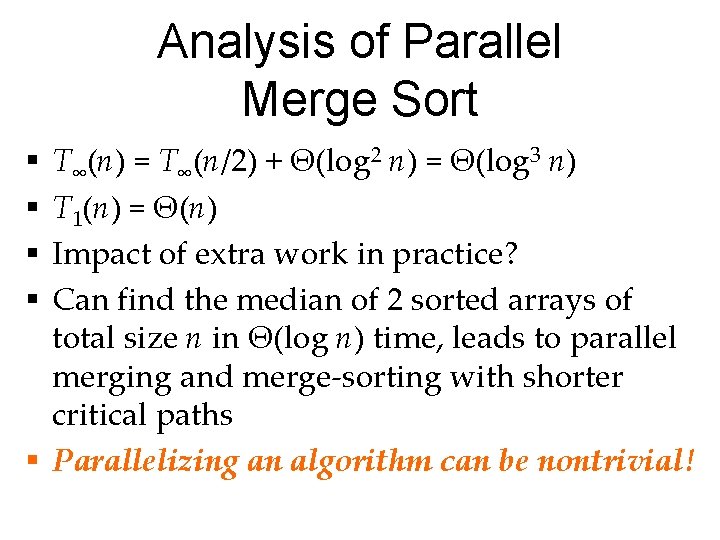

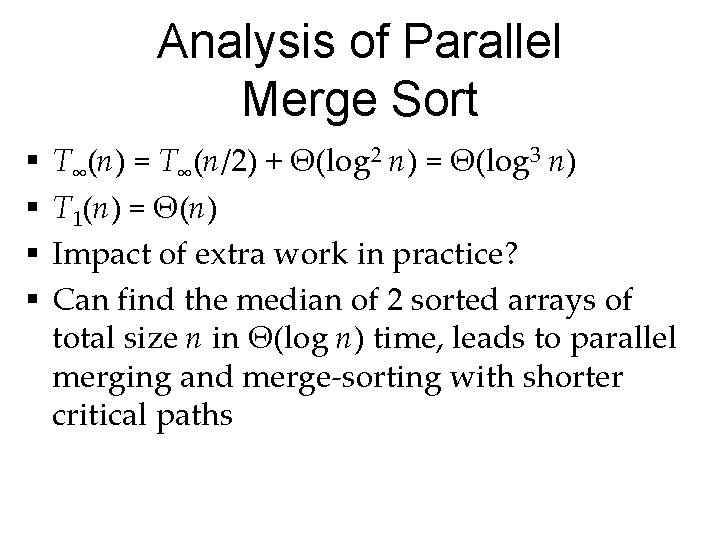


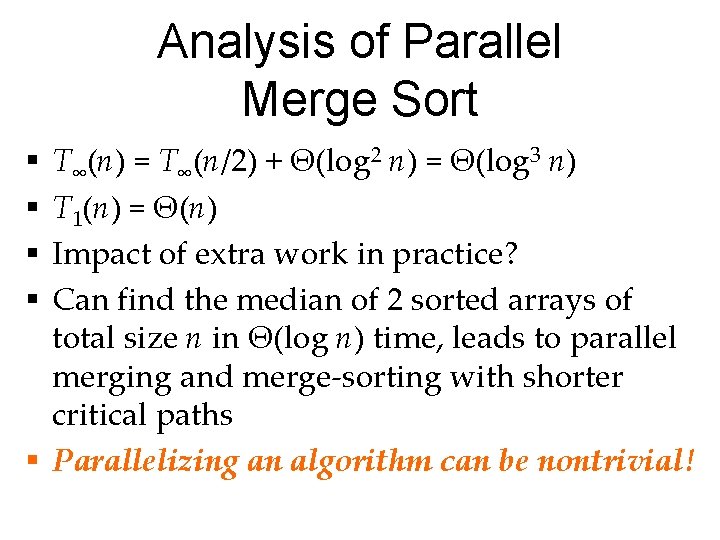

Analysis of Parallel Merge Sort

- $T_\infty(n) = T_\infty(n/2) + \Theta(\log^2 n) = \Theta(\log^3 n)$
- $T_1(n) = \Theta(n \log n)$, since each parallel merge does $\Theta(n)$ work
- Impact of the extra work in practice?
- One can find the median of 2 sorted arrays of total size $n$ in $\Theta(\log n)$ time, which leads to parallel merging and merge sorting with shorter critical paths
- Parallelizing an algorithm can be nontrivial!

Cache-Efficient Sorting

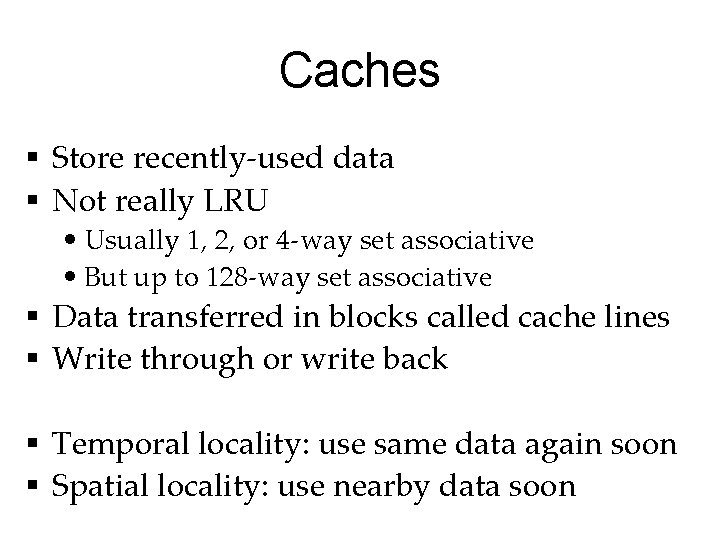

Caches

- Store recently used data
- Not really LRU
  - Usually 1-, 2-, or 4-way set associative
  - But up to 128-way set associative
- Data is transferred in blocks called cache lines
- Write through or write back
- Temporal locality: use the same data again soon
- Spatial locality: use nearby data soon

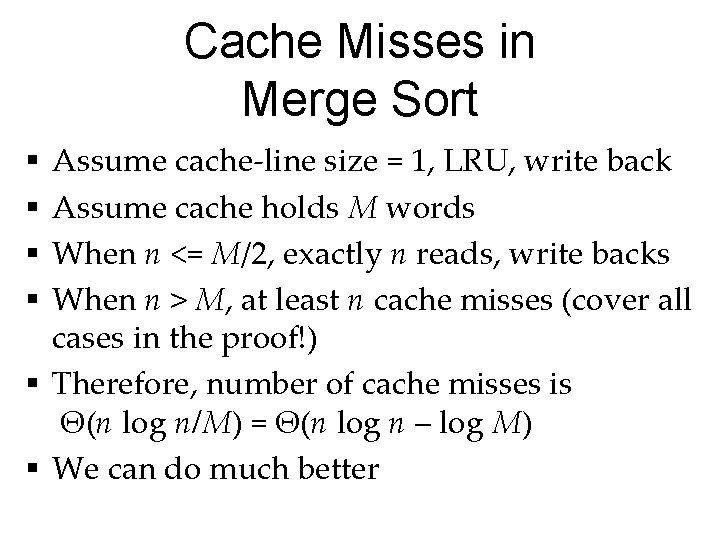

Cache Misses in Merge Sort

- Assume cache-line size = 1, LRU, write back
- Assume the cache holds $M$ words
- When $n \le M/2$, exactly $n$ reads and write-backs
- When $n > M$, at least $n$ cache misses (cover all cases in the proof!)
- Therefore, the number of cache misses is $\Theta(n \log (n/M)) = \Theta(n (\log n - \log M))$
- We can do much better

The Key Idea

- Merge $M/2$ sorted runs into one, not 2 into 1
- Keep one element from each run in a heap, together with a run label
- Extract the min, move it to the sorted run, insert another element from the same run into the heap
- Reading from the sorted runs and writing to the sorted output evicts elements of the heap from the cache, but this costs only $O(n)$ cache misses
- $\Theta(n \log_M n) = \Theta(n \log n / \log M)$
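
The heap-driven merge can be sketched with Python's `heapq`. The function name `multiway_merge` is hypothetical, and the sketch ignores the cache entirely; it only shows the extract-min and refill loop.

```python
import heapq


def multiway_merge(runs):
    """Merge sorted lists using a heap of (value, run label, position)."""
    heap = [(run[0], label, 0) for label, run in enumerate(runs) if run]
    heapq.heapify(heap)
    out = []
    while heap:
        value, label, pos = heapq.heappop(heap)  # extract the min
        out.append(value)                        # move it to the output run
        if pos + 1 < len(runs[label]):           # refill from the same run
            heapq.heappush(heap, (runs[label][pos + 1], label, pos + 1))
    return out


merged = multiway_merge([[1, 5, 9], [2, 2, 8], [], [0, 7]])
```

The heap never holds more than one element per run, so its size is bounded by the number of runs rather than by $n$.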

This is Poly-Merge Sort

- Optimal in terms of cache misses
- Can adapt to long cache lines, sorting on disks, etc.
- Originally invented for sorting on tapes on a machine with several tape drives
- Often, $\Theta(n \log n / \log M)$ is really $\Theta(n)$ in practice
- Example:
  - 32 KB cache, 4+4-byte elements
  - 4096-way merges
  - Can sort 64 MB of data in 1 merge, 256 GB in 2 merges
  - But more merges with long cache lines
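
The numbers in the example can be reproduced with a few lines of arithmetic. The initial run size is an assumption made here (runs sorted in half the cache, 16 KB each); the slide does not state it, but it is the value that makes the 64 MB and 256 GB figures come out.

```python
KB, MB, GB = 2**10, 2**20, 2**30

cache_bytes = 32 * KB
element_bytes = 4 + 4                      # 4-byte key plus 4-byte payload
fan_in = cache_bytes // element_bytes      # elements that fit in the cache

initial_run = cache_bytes // 2             # assumption: runs sorted in half the cache
after_one_merge = fan_in * initial_run     # bytes sortable with one merge pass
after_two_merges = fan_in * after_one_merge
```

With these assumptions the fan-in is 4096, one merge pass covers 64 MB, and two passes cover 256 GB.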

From Quick Sort To Sample Sort

- Normal quick sort incurs the same number of cache misses as normal merge sort
- Key idea
  - Choose a large random sample, $\Theta(M)$ elements
  - Sort the samples
  - Classify all the elements using binary searches
  - Determine the size of the intervals
  - Partition
  - Recursively sort the intervals
- The number of cache misses is probably similar to that of the multiway merge sort
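
The steps of the key idea can be sketched as follows. The function name, the tiny default `sample_size`, and the `cutoff` are illustrative choices, not values from the slides; the sketch appends directly to buckets instead of first counting interval sizes, and it falls back to the built-in sort when the sample fails to split the input.

```python
import random
from bisect import bisect_right


def sample_sort(items, sample_size=4, cutoff=8, rng=None):
    """Sort by classifying elements against a sorted random sample."""
    rng = rng or random.Random(0)
    if len(items) <= cutoff:
        return sorted(items)                  # small inputs: sort directly
    splitters = sorted(rng.sample(items, sample_size))
    buckets = [[] for _ in range(sample_size + 1)]
    for x in items:
        # Binary search finds the interval that x belongs to.
        buckets[bisect_right(splitters, x)].append(x)
    if max(len(b) for b in buckets) == len(items):
        return sorted(items)                  # sample did not split the input
    out = []
    for bucket in buckets:                    # intervals are already in order
        out.extend(sample_sort(bucket, sample_size, cutoff, rng))
    return out
```

In the cache-efficient setting the sample would have $\Theta(M)$ elements, so that the sorted sample and one output position per interval stay in cache during classification.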

Distributed-Memory Sorting

Issues in Sample Sort

- Main idea: partition the input into $P$ intervals, classify the elements, send the elements in the $i$th interval to processor $i$, sort locally
- Most of the communication happens in one global all-to-all phase
- Load balancing: intervals must be similar in size
- How do we sort the sample?

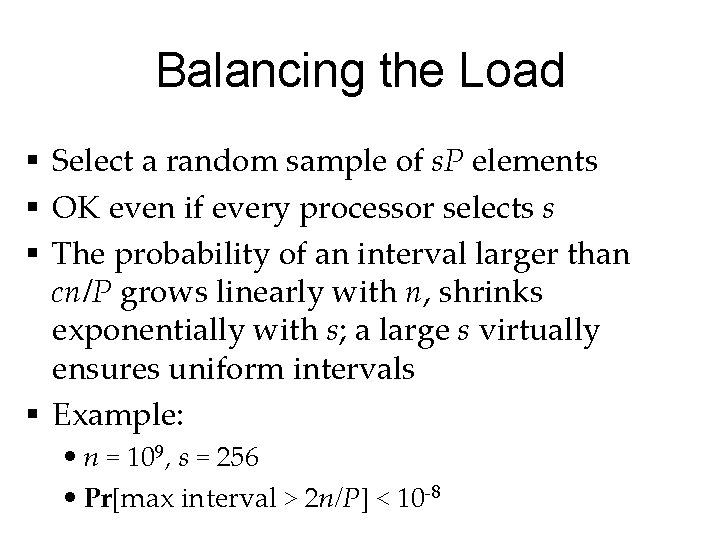

Balancing the Load

- Select a random sample of $sP$ elements
- OK even if every processor selects $s$
- The probability of an interval larger than $cn/P$ grows linearly with $n$ and shrinks exponentially with $s$; a large $s$ virtually ensures uniform intervals
- Example:
  - $n = 10^9$, $s = 256$
  - $\Pr[\text{max interval} > 2n/P] < 10^{-8}$
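
The effect of oversampling can be observed in a small simulation. This is a single-process sketch with a hypothetical function `max_interval`; it assumes uniformly random keys, a sample drawn without replacement, and splitters taken as every $s$-th element of the sorted sample.

```python
import random
from bisect import bisect_right


def max_interval(n, p, s, seed=0):
    """Size of the largest of p intervals chosen from a sample of s*p keys."""
    rng = random.Random(seed)
    keys = [rng.random() for _ in range(n)]
    sample = sorted(rng.sample(keys, s * p))
    splitters = [sample[i * s] for i in range(1, p)]  # every s-th sample key
    counts = [0] * p
    for x in keys:
        counts[bisect_right(splitters, x)] += 1       # classify into an interval
    return max(counts)


largest = max_interval(n=20000, p=8, s=256)
balanced = largest < 2 * 20000 // 8   # compare against the 2n/P threshold
```

Rerunning with a small `s` (say 2) makes the largest interval noticeably less predictable, which is the behaviour the probability bound describes.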

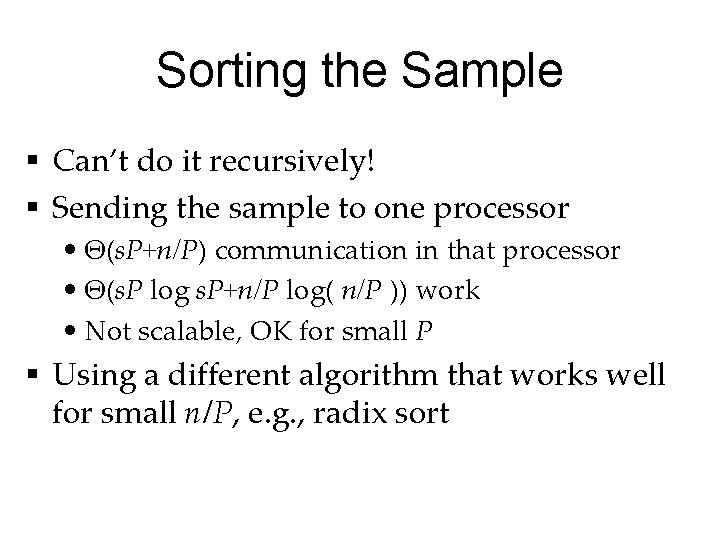

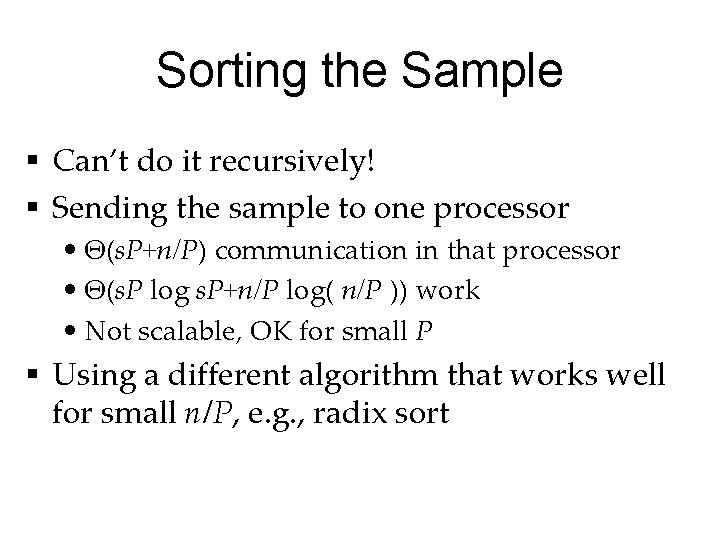

Sorting the Sample

- Can’t do it recursively!
- Sending the sample to one processor
  - $\Theta(sP + n/P)$ communication in that processor
  - $\Theta(sP \log (sP) + (n/P) \log (n/P))$ work
  - Not scalable, OK for small $P$
- Using a different algorithm that works well for small $n/P$, e.g., radix sort

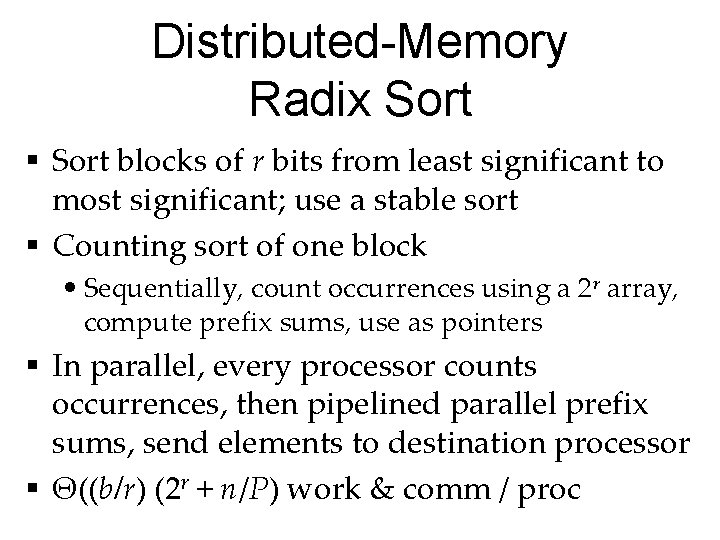

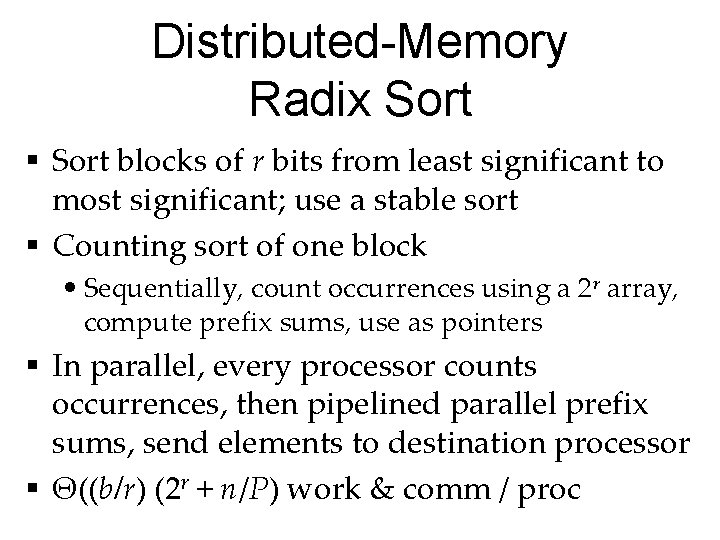

Distributed-Memory Radix Sort

- Sort blocks of $r$ bits from least significant to most significant; use a stable sort
- Counting sort of one block
  - Sequentially, count occurrences using a $2^r$-entry array, compute prefix sums, use them as pointers
- In parallel, every processor counts occurrences, then pipelined parallel prefix sums, then elements are sent to their destination processors
- $\Theta((b/r)(2^r + n/P))$ work and communication per processor, for $b$-bit keys
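
The sequential counting-sort pass can be sketched as follows. It covers only the single-processor case, assumes non-negative integer keys of at most `bits` bits, and uses hypothetical names; the parallel prefix sums and the all-to-all exchange are not shown.

```python
def radix_sort(keys, bits=32, r=8):
    """LSD radix sort: one stable counting sort per block of r bits."""
    mask = (1 << r) - 1
    for shift in range(0, bits, r):
        counts = [0] * (1 << r)
        for k in keys:                       # count occurrences of each digit
            counts[(k >> shift) & mask] += 1
        total = 0
        for d in range(1 << r):              # exclusive prefix sums
            counts[d], total = total, total + counts[d]
        out = [0] * len(keys)
        for k in keys:                       # prefix sums act as write pointers
            d = (k >> shift) & mask
            out[counts[d]] = k
            counts[d] += 1
        keys = out
    return keys


result = radix_sort([170, 45, 75, 90, 802, 24, 2, 66])
```

Each pass costs time proportional to $2^r + n$, and there are $b/r$ passes, which matches the shape of the bound above with $P = 1$.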

Odds and Ends

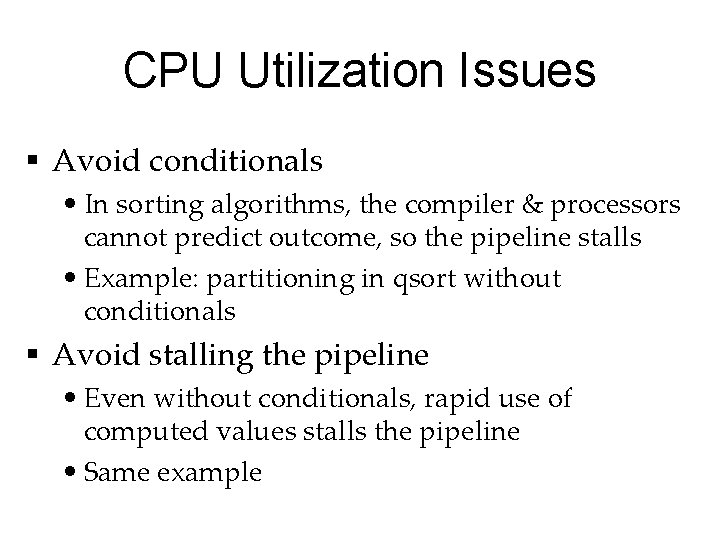

CPU Utilization Issues

- Avoid conditionals
  - In sorting algorithms, the compiler and processor cannot predict the outcome, so the pipeline stalls
  - Example: partitioning in qsort without conditionals
- Avoid stalling the pipeline
  - Even without conditionals, rapid use of computed values stalls the pipeline
  - Same example
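
One way to partition without a data-dependent branch is to swap unconditionally and advance the boundary by the result of the comparison. The sketch below only illustrates the data flow: the function name is hypothetical, this may not be the variant the slides had in mind, and the Python interpreter itself still branches, so any pipeline benefit would only appear in compiled code.

```python
def partition_branchless(a, pivot):
    """Move elements smaller than pivot to the front; return their count."""
    i = 0
    for j in range(len(a)):
        x = a[j]
        a[j] = a[i]          # unconditional swap of a[i] and a[j]
        a[i] = x
        i += x < pivot       # comparison result (0 or 1) advances the boundary
    return i


values = [5, 1, 4, 2, 3, 3, 0]
k = partition_branchless(values, 3)   # values[:k] < 3 <= values[k:]
```

The loop body has no `if`; the only control flow is the loop counter, whose outcome is easy to predict.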

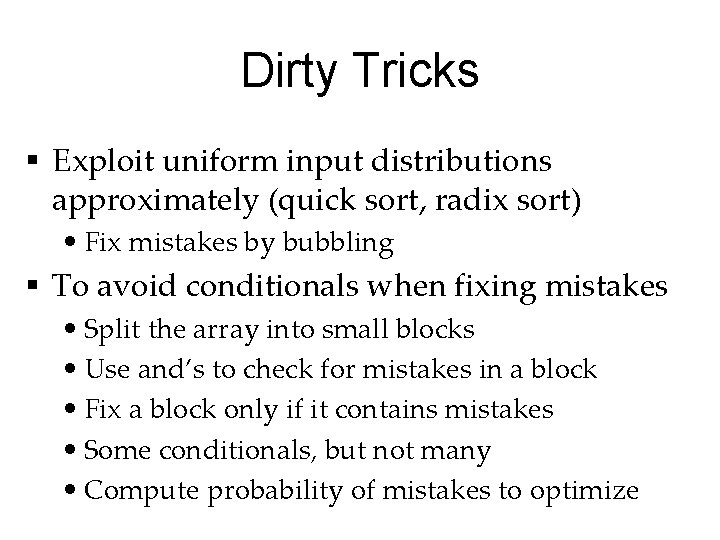

Dirty Tricks

- Exploit uniform input distributions approximately (quick sort, radix sort)
  - Fix mistakes by bubbling
- To avoid conditionals when fixing mistakes
  - Split the array into small blocks
  - Use and’s to check for mistakes in a block
  - Fix a block only if it contains mistakes
  - Some conditionals, but not many
  - Compute the probability of mistakes to optimize

The Exercise

- Get sort.cilk; more instructions are inside
- Convert the sequential merge sort into a parallel merge sort
  - Make it as fast as possible as long as it is a parallel merge sort (e.g., make the bottom of the recursion fast)
- Convert the fast sort into the fastest parallel sort you can
- Submit files in your home directory, plus one page with output on 1 and 2 procs and a possible explanation (on one side of the page)