Analysis experience at GSIAF, Marian Ivanov

- Slides: 15


HEP data analysis

- Typical HEP data analysis (physics analysis, calibration, alignment), like any statistical algorithm, needs continuous algorithm refinement cycles.
- The refinement cycles should (optimally) take seconds to minutes.
- Parallelism is the only way to analyze HEP data in a reasonable time.

Data analysis (calibration, alignment)

- Where do we run?
  - DAQ farm (calibration)
  - HLT farm (calibration, alignment)
  - Prompt data processing (calib, align, reco, analysis) with PROOF
  - Batch analysis on the Grid infrastructure

Tuning of statistical algorithms

- For our analysis it is often not enough to study just histograms.
  - A deeper understanding of correlations between variables is needed. To study correlations on large statistics, ROOT TTrees can be used.
  - In the case of a non-trivial processing algorithm, intermediate steps might be needed.
  - It should be possible to analyze (debug) the intermediate results of such a non-trivial algorithm.
- Process function:
  1. Preprocess data (can be CPU expensive, e.g. track refitting)
     - optionally store the preprocessed data in TTrees
  2. Histogram and/or fit and/or fill matrices with preprocessed data
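The two-step process function can be sketched in plain C++ as follows. This is a minimal illustration only, not the code used at GSIAF: `RawTrack`, `Residual`, `Histogram`, `Preprocess` and this `Process` signature are all hypothetical names, and a `std::vector` stands in for the TTree that would hold the optional intermediate results.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical raw input: one measured and one reference position per track.
struct RawTrack {
    double measuredY;
    double referenceY;
};

// Hypothetical preprocessed quantity kept for later inspection.
struct Residual {
    double deltaY;
};

// Fixed-range histogram with equal-width bins; out-of-range values are dropped.
class Histogram {
public:
    Histogram(std::size_t nBins, double lo, double hi)
        : fLo(lo), fHi(hi), fCounts(nBins, 0) {
        assert(nBins > 0 && hi > lo);
    }
    void Fill(double x) {
        if (x < fLo || x >= fHi) return;
        std::size_t bin =
            static_cast<std::size_t>((x - fLo) / (fHi - fLo) * fCounts.size());
        if (bin >= fCounts.size()) bin = fCounts.size() - 1;  // guard against rounding
        ++fCounts[bin];
    }
    long Count(std::size_t bin) const { return fCounts.at(bin); }

private:
    double fLo;
    double fHi;
    std::vector<long> fCounts;
};

// Step 1: the (possibly CPU expensive) preprocessing; here only a subtraction.
Residual Preprocess(const RawTrack& t) {
    return Residual{t.measuredY - t.referenceY};
}

// Steps 1 and 2 together. If `store` is non-null, the intermediate results
// are kept so that they can be examined (debugged) afterwards.
void Process(const std::vector<RawTrack>& tracks, Histogram& h,
             std::vector<Residual>* store = nullptr) {
    for (const RawTrack& t : tracks) {
        const Residual r = Preprocess(t);
        if (store) store->push_back(r);
        h.Fill(r.deltaY);
    }
}
```

Keeping the store optional means the same function serves both the tuning phase, where the intermediate values are inspected, and the production phase, where only the histogram is needed.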

Component model

- The algorithmic part of our analysis and calibration software should be independent of the running environment.
  - Example: TPC calibration classes (components) (run and tuned Offline; used in HLT, DAQ and Offline)
- Analysis and calibration code should be written following a component-based model.
  - TSelector (for PROOF) and AliAnalysisTask (see presentation of Andreas Morsch) are just simple wrappers.

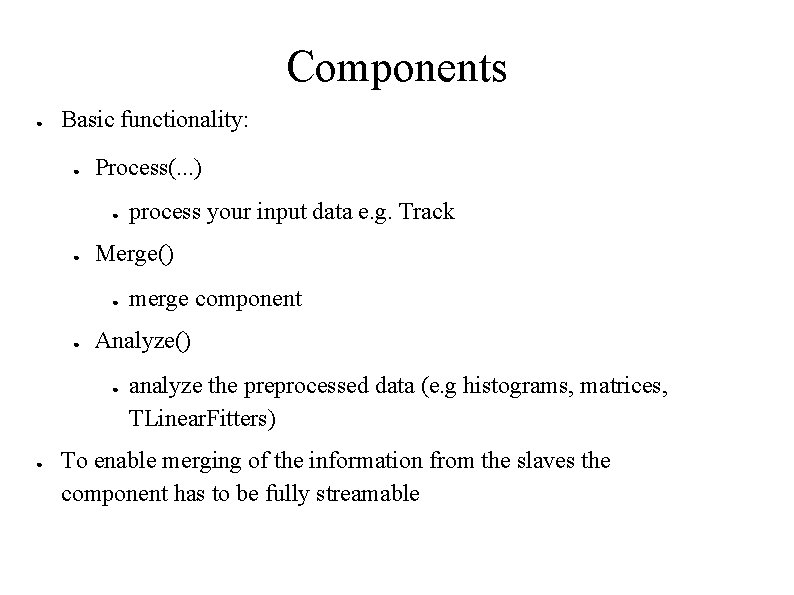

Components

- Basic functionality:
  - Process(...): process your input data, e.g. a track
  - Merge(): merge components
  - Analyze(): analyze the preprocessed data (e.g. histograms, matrices, TLinearFitters)
- To enable merging of the information from the slaves, the component has to be fully streamable.

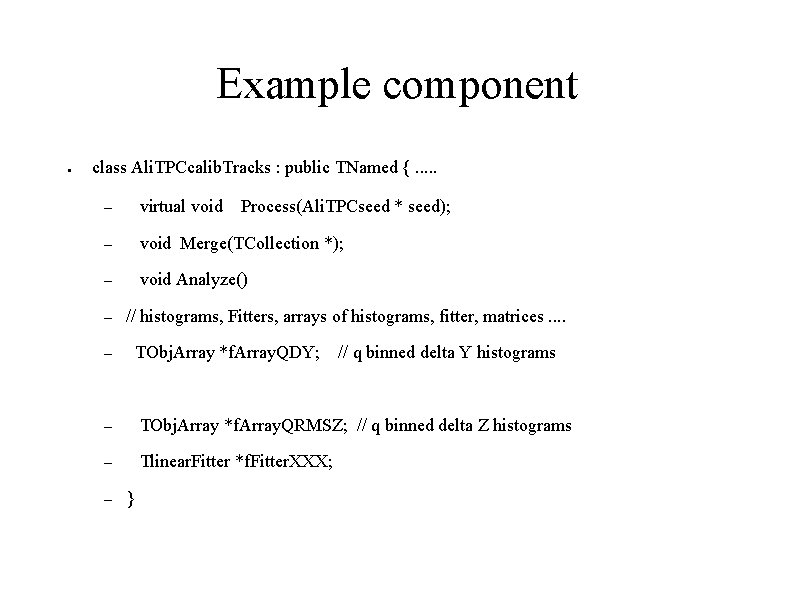

Example component

- class AliTPCcalibTracks : public TNamed { ...
  - virtual void Process(AliTPCseed *seed);
  - void Merge(TCollection *);
  - void Analyze();
  - // histograms, fitters, arrays of histograms, fitters, matrices ...
  - TObjArray *fArrayQDY; // q binned delta Y histograms
  - TObjArray *fArrayQRMSZ; // q binned delta Z histograms
  - TLinearFitter *fFitterXXX;
  - }
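To make the Process / Merge / Analyze pattern concrete without depending on ROOT, here is a small self-contained sketch. `ResidualComponent` is a hypothetical stand-in, not an AliRoot class, and its `Merge` takes a `std::vector` instead of a `TCollection`; the point is only that the accumulated state is additive, so partial results from several workers can be combined before the final analysis.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a calibration component. It accumulates the
// sums needed for a mean, so partial results can simply be added.
class ResidualComponent {
public:
    // Process one input value (e.g. one track residual).
    void Process(double residual) {
        ++fN;
        fSum += residual;
        fAnalyzed = false;
    }

    // Merge the partial results collected by other instances (workers).
    void Merge(const std::vector<ResidualComponent>& others) {
        for (const ResidualComponent& o : others) {
            fN += o.fN;
            fSum += o.fSum;
        }
        fAnalyzed = false;
    }

    // Analyze the accumulated data: compute the mean residual.
    // Returns false if nothing has been processed yet.
    bool Analyze() {
        if (fN == 0) return false;
        fMean = fSum / static_cast<double>(fN);
        fAnalyzed = true;
        return true;
    }

    long Entries() const { return fN; }

    // Only meaningful after a successful Analyze().
    double Mean() const {
        assert(fAnalyzed);
        return fMean;
    }

private:
    long fN = 0;
    double fSum = 0.0;
    double fMean = 0.0;
    bool fAnalyzed = false;
};
```

A typical use would be one instance per worker, each calling `Process` on its share of the input, followed by a single `Merge` and `Analyze` on a collecting instance.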

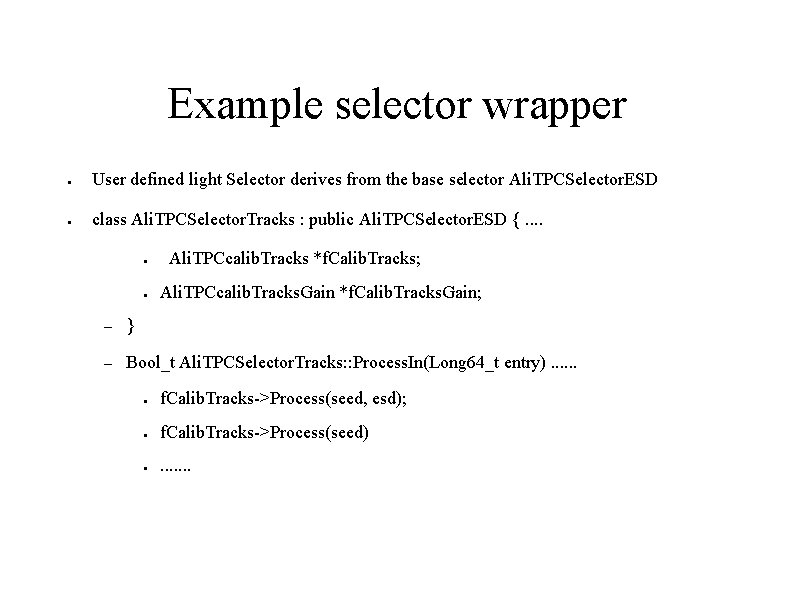

Example selector wrapper

- A user-defined light selector derives from the base selector AliTPCSelectorESD:
  - class AliTPCSelectorTracks : public AliTPCSelectorESD { ...
    - AliTPCcalibTracks *fCalibTracks;
    - AliTPCcalibTracksGain *fCalibTracksGain;
    - }
  - Bool_t AliTPCSelectorTracks::ProcessIn(Long64_t entry) ...
    - fCalibTracks->Process(seed, esd);
    - fCalibTracks->Process(seed)
    - ...
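The idea of a thin wrapper, one that handles input access and forwards each entry to the components without containing any algorithm itself, might look like this in plain C++. All names here (`Event`, `TrackCounter`, `ChargeSum`, `SelectorWrapper`, `ProcessEntry`) are hypothetical and chosen for illustration; they do not reproduce the AliRoot selector interface.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical event record.
struct Event {
    int nTracks;
    double charge;
};

// Two hypothetical components; each knows nothing about where events come from.
class TrackCounter {
public:
    void Process(const Event& e) {
        assert(e.nTracks >= 0);
        fTracks += e.nTracks;
    }
    long Tracks() const { return fTracks; }

private:
    long fTracks = 0;
};

class ChargeSum {
public:
    void Process(const Event& e) { fCharge += e.charge; }
    double Charge() const { return fCharge; }

private:
    double fCharge = 0.0;
};

// The wrapper: owns the input and the components, contains no algorithm.
class SelectorWrapper {
public:
    explicit SelectorWrapper(const std::vector<Event>& input) : fInput(input) {}

    // Per-entry callback: fetch the entry and hand it to every component.
    // Returns false if the entry number is out of range.
    bool ProcessEntry(std::size_t entry) {
        if (entry >= fInput.size()) return false;
        const Event& e = fInput[entry];
        fCounter.Process(e);
        fCharge.Process(e);
        return true;
    }

    const TrackCounter& Counter() const { return fCounter; }
    const ChargeSum& Charge() const { return fCharge; }

private:
    std::vector<Event> fInput;  // copied, so the wrapper is self-contained
    TrackCounter fCounter;
    ChargeSum fCharge;
};
```

Because the components only see `Event` objects, the same component classes could be driven by a different wrapper in another running environment.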

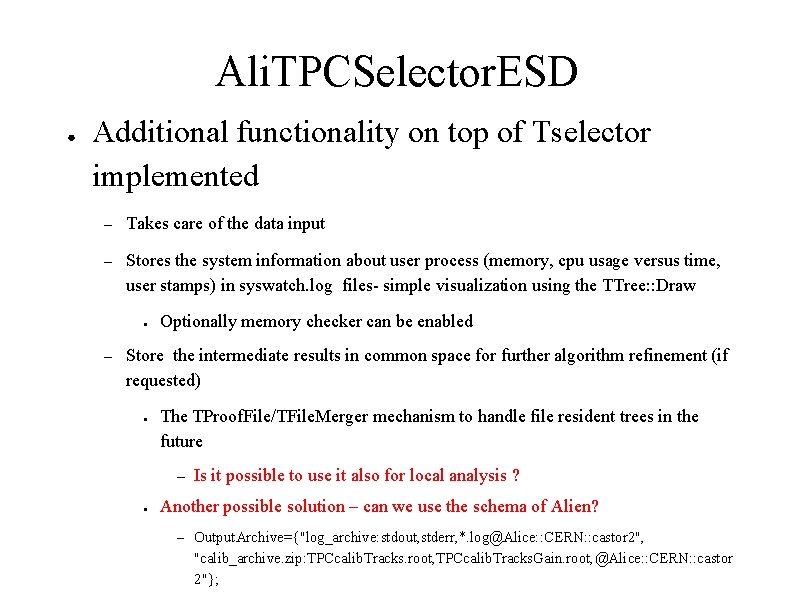

AliTPCSelectorESD

- Additional functionality implemented on top of TSelector:
  - Takes care of the data input
  - Stores the system information about the user process (memory, CPU usage versus time, user stamps) in syswatch.log files; simple visualization using TTree::Draw
  - Optionally, a memory checker can be enabled
  - Stores the intermediate results in a common space for further algorithm refinement (if requested)
    - The TProofFile/TFileMerger mechanism to handle file-resident trees in the future
      - Is it possible to use it also for local analysis?
    - Another possible solution: can we use the schema of Alien?
      - OutputArchive={"log_archive:stdout,stderr,*.log@Alice::CERN::castor2", "calib_archive.zip:TPCcalibTracks.root,TPCcalibTracksGain.root,@Alice::CERN::castor2"};
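As an illustration of the system-information log, the sketch below records user stamps together with time and memory readings and writes them out as plain columns. It is an assumption about what such a log could look like, not the actual syswatch.log format: `WatchRecord`, `SysWatchLog` and the column names are hypothetical, and the readings are passed in by the caller because obtaining them is platform specific.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// One row of the hypothetical log: a user stamp plus resource readings.
struct WatchRecord {
    std::string stamp;  // user-defined label without whitespace
    double elapsedSec;  // time since the start of processing
    double memoryMB;    // memory in use at the stamp
};

class SysWatchLog {
public:
    // Append one record; the caller supplies the readings.
    void Stamp(const std::string& stamp, double elapsedSec, double memoryMB) {
        assert(elapsedSec >= 0.0 && memoryMB >= 0.0);
        fRecords.push_back(WatchRecord{stamp, elapsedSec, memoryMB});
    }

    // Label of the stamp that follows the largest memory increase,
    // or an empty string if memory never increased.
    std::string LargestJump() const {
        std::string label;
        double best = 0.0;
        for (std::size_t i = 1; i < fRecords.size(); ++i) {
            const double jump = fRecords[i].memoryMB - fRecords[i - 1].memoryMB;
            if (jump > best) {
                best = jump;
                label = fRecords[i].stamp;
            }
        }
        return label;
    }

    // Serialize as space-separated columns with a header line.
    std::string ToText() const {
        std::ostringstream out;
        out << "stamp elapsedSec memoryMB\n";
        for (const WatchRecord& r : fRecords) {
            out << r.stamp << ' ' << r.elapsedSec << ' ' << r.memoryMB << '\n';
        }
        return out.str();
    }

private:
    std::vector<WatchRecord> fRecords;
};
```

A flat column layout of this kind is convenient because one column can be plotted against another, for example memory versus elapsed time, to spot the processing step where consumption grows.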

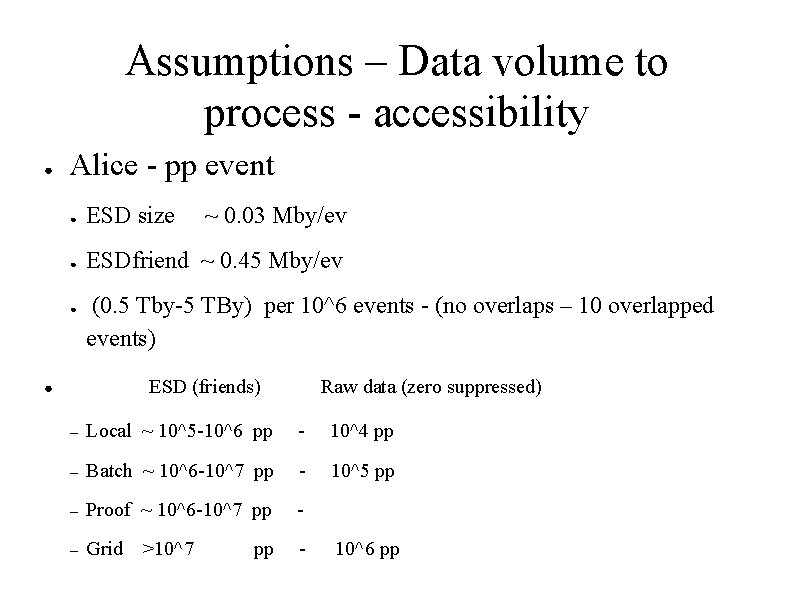

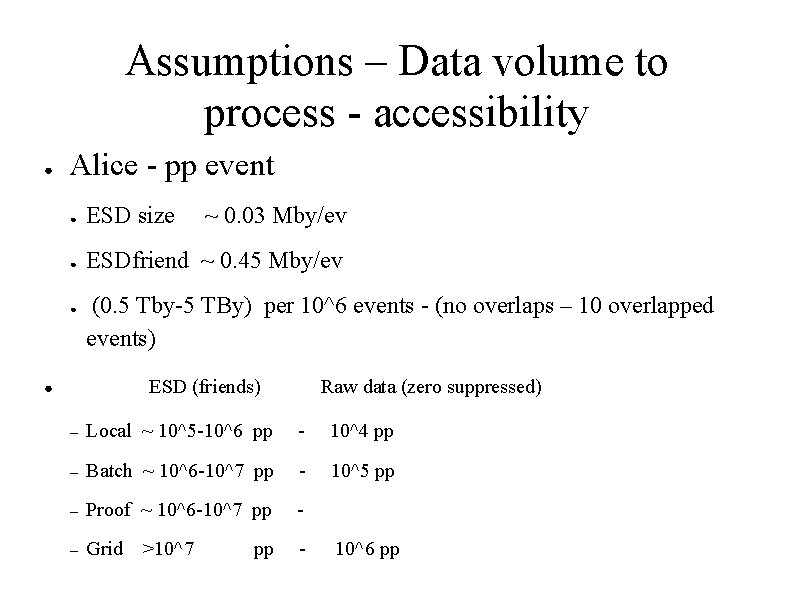

Assumptions: data volume to process, accessibility

- Alice pp event
  - ESD size ~ 0.03 MB/ev
  - ESDfriend ~ 0.45 MB/ev (0.5 TB to 5 TB per 10^6 events, from no overlaps to 10 overlapped events)

| | ESD (friends) | Raw data (zero suppressed) |
|---|---|---|
| Local | ~ 10^5 - 10^6 pp | 10^4 pp |
| Batch | ~ 10^6 - 10^7 pp | 10^5 pp |
| Proof | ~ 10^6 - 10^7 pp | - |
| Grid | > 10^7 pp | 10^6 pp |
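As a rough cross-check of the quoted range, assuming decimal units (1 TB = 10^6 MB) and assuming that the ESDfriend volume scales linearly with the number of overlapped events (the slide does not state this explicitly):

$$
0.45\ \mathrm{MB/ev} \times 10^{6}\ \mathrm{ev} = 4.5 \times 10^{5}\ \mathrm{MB} = 0.45\ \mathrm{TB} \approx 0.5\ \mathrm{TB}
$$

$$
10 \times 0.45\ \mathrm{TB} = 4.5\ \mathrm{TB} \approx 5\ \mathrm{TB}
$$

Under the same assumptions, the smaller per-event size of 0.03 MB/ev corresponds to about 0.03 TB (30 GB) per 10^6 events.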

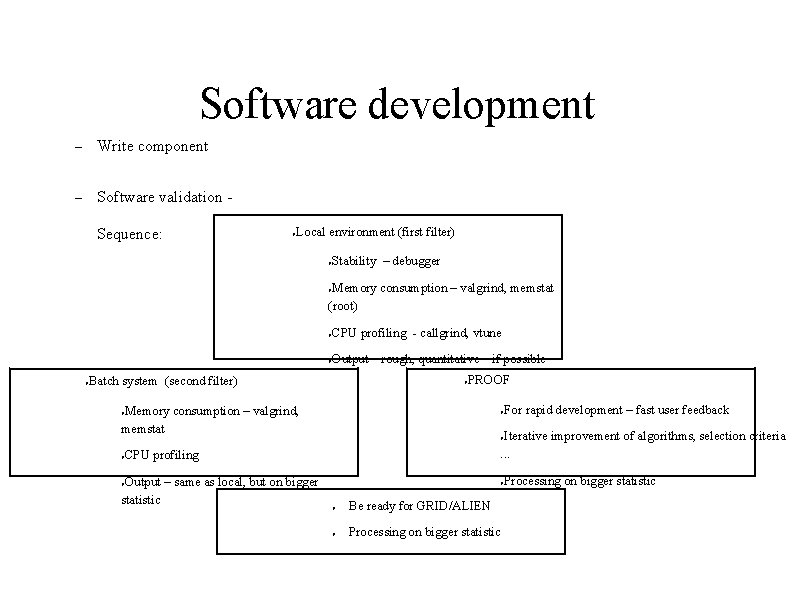

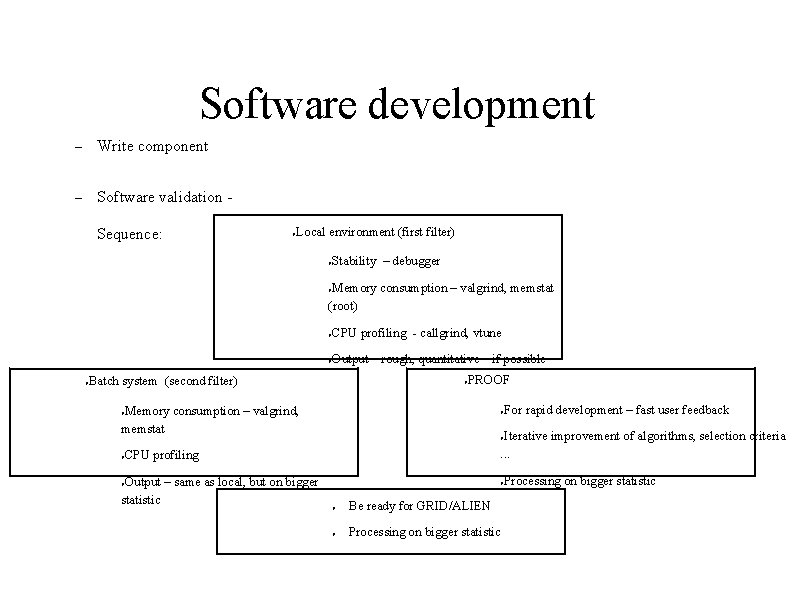

Software development

- Write component
- Software validation sequence:
  - Local environment (first filter)
    - Stability: debugger
    - Memory consumption: valgrind, memstat (root)
    - CPU profiling: callgrind, vtune
    - Output: rough, quantitative if possible
  - Batch system (second filter)
    - Memory consumption: valgrind, memstat
    - CPU profiling
    - Output: same as local, but on bigger statistics
  - PROOF
    - For rapid development: fast user feedback
    - Iterative improvement of algorithms, selection criteria ...
  - Be ready for GRID/ALIEN
    - Processing on bigger statistics

Proof experience (0)

- It is impossible to write code without bugs.
- Only privileged users can use a debugger directly on the PROOF slaves and/or master.
- For normal users the code has to be debugged locally.
  - ==> It would be nice if the code running locally and on PROOF could be the same.
    - It is (almost) the case now.
    - Input lists? TProofFile?
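One way to picture "the same code locally and on PROOF" is a component that gives identical results whether it sees all the input at once or the input is split into chunks and the partial results are merged. The sketch below is an illustration under that assumption: `CountComponent`, `RunLocal` and `RunSplit` are hypothetical names, and the split is executed sequentially as a stand-in for parallel workers.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical component: counts entries per bin. Integer counts are exact,
// so the result does not depend on the order in which parts are merged.
class CountComponent {
public:
    explicit CountComponent(std::size_t nBins) : fCounts(nBins, 0) {
        assert(nBins > 0);
    }
    void Process(std::size_t bin) {
        if (bin < fCounts.size()) ++fCounts[bin];
    }
    void Merge(const CountComponent& other) {
        assert(other.fCounts.size() == fCounts.size());
        for (std::size_t i = 0; i < fCounts.size(); ++i) {
            fCounts[i] += other.fCounts[i];
        }
    }
    const std::vector<long>& Counts() const { return fCounts; }

private:
    std::vector<long> fCounts;
};

// Local mode: a single component sees every entry.
CountComponent RunLocal(const std::vector<std::size_t>& input, std::size_t nBins) {
    CountComponent c(nBins);
    for (std::size_t bin : input) c.Process(bin);
    return c;
}

// Split mode: the input is cut into contiguous chunks, each chunk is
// processed by its own component, and the partial results are merged.
CountComponent RunSplit(const std::vector<std::size_t>& input, std::size_t nBins,
                        std::size_t nWorkers) {
    assert(nWorkers > 0);
    CountComponent total(nBins);
    const std::size_t chunk = (input.size() + nWorkers - 1) / nWorkers;
    for (std::size_t w = 0; w < nWorkers; ++w) {
        const std::size_t begin = std::min(w * chunk, input.size());
        const std::size_t end = std::min(begin + chunk, input.size());
        CountComponent part(nBins);
        for (std::size_t i = begin; i < end; ++i) part.Process(input[i]);
        total.Merge(part);
    }
    return total;
}
```

If the two modes agree on a small local sample, a bug seen in the distributed run can usually be reproduced and debugged locally with the same component code.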

Proof experience (1)

- Debugging on PROOF is not trivial.
- We tried dumping important system information (memory, CPU, ...) into a special log file. Analyzing these log files as TTrees really helps to understand the processing problems.

Proof experience (2)

- In our approach the users can generate files with preprocessed information.
- To allow users to control those files, we had to create a way to interact with XRD in a "file system manner": AliXRDProofToolkit
  - Generate the list of files, similar to the find command
  - Check the consistency of the data from the list and reject corrupted files
- Corrupted files are one of our biggest problems, as the network at GSI is less stable than at CERN.
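The two toolkit steps, building a file list and rejecting corrupted entries, can be sketched on plain strings. This does not reproduce the toolkit's interface and does not talk to XRD: `EndsWith`, `SelectFiles`, `CheckedList` and `CheckFiles` are hypothetical helpers, and the health check is a caller-supplied function so that any real test (opening the file, reading a tree) could be plugged in.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Returns true if `name` ends with `suffix`.
bool EndsWith(const std::string& name, const std::string& suffix) {
    return name.size() >= suffix.size() &&
           name.compare(name.size() - suffix.size(), suffix.size(), suffix) == 0;
}

// "find"-like step: keep only the paths that end with the wanted suffix.
std::vector<std::string> SelectFiles(const std::vector<std::string>& all,
                                     const std::string& suffix) {
    std::vector<std::string> selected;
    for (const std::string& path : all) {
        if (EndsWith(path, suffix)) selected.push_back(path);
    }
    return selected;
}

// Result of the consistency step.
struct CheckedList {
    std::vector<std::string> good;
    std::vector<std::string> rejected;
};

// Consistency step: split the list using a caller-supplied health check.
CheckedList CheckFiles(const std::vector<std::string>& files,
                       const std::function<bool(const std::string&)>& isHealthy) {
    assert(static_cast<bool>(isHealthy));
    CheckedList result;
    for (const std::string& path : files) {
        if (isHealthy(path)) {
            result.good.push_back(path);
        } else {
            result.rejected.push_back(path);
        }
    }
    return result;
}
```

Keeping the rejected list, rather than silently dropping files, makes it possible to report which inputs were lost and to retry them later.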

Conclusion

- PROOF is easy to use and well suited for our needs.
- We have observed some problems, but they are usually fixed fast.
- We use it successfully for development of calibration components.
- Further development of tools to simplify debugging on PROOF is very welcome.