Analysing Computational Thinking and Computer Programming Processes

- Slides: 25

Analysing Computational Thinking and Computer Programming Processes
DIGITAL TECHNOLOGIES RESEARCH GROUP AT MACQUARIE UNIVERSITY
6 December 2017
Matt Bower, Kay-Dennis Boom, Jennifer Lai

How do we know…

- Playing with robots and online programming environments can be fun, but how do we know whether it is developing students’ computational thinking capabilities?
- Which pedagogical strategies actually help students become better computer programmers and problem solvers using technology?

Determining indicators of computational thinking KAY-DENNIS BOOM, MATT BOWER, JENS SIEMON

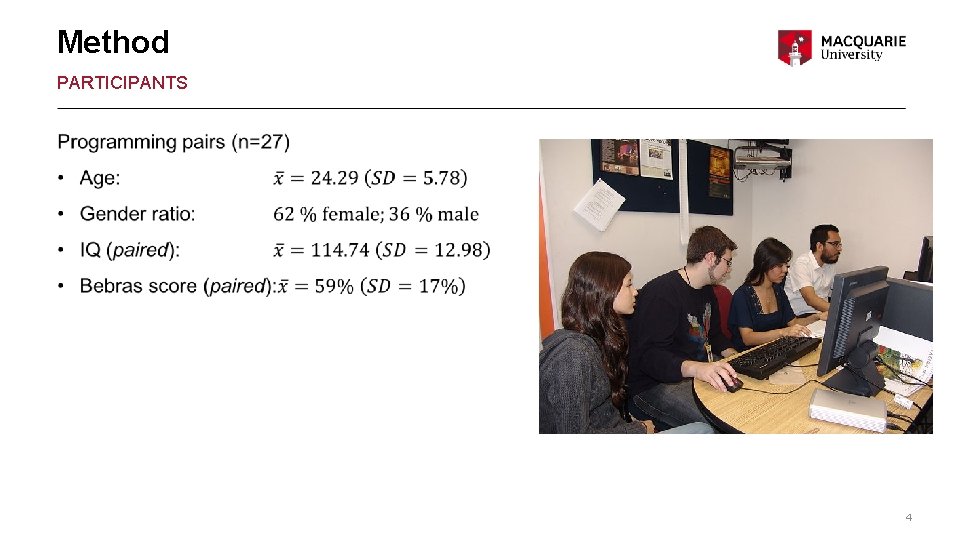

Method
PARTICIPANTS

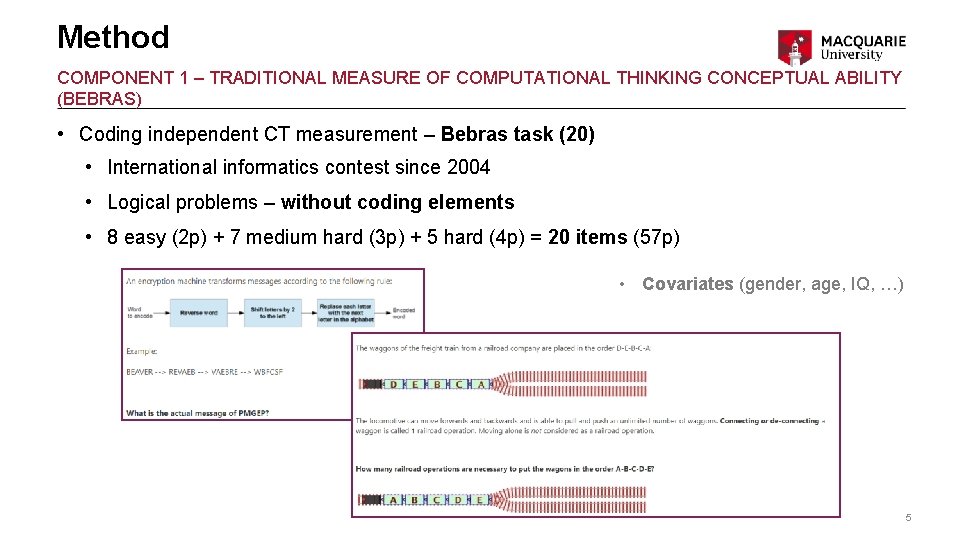

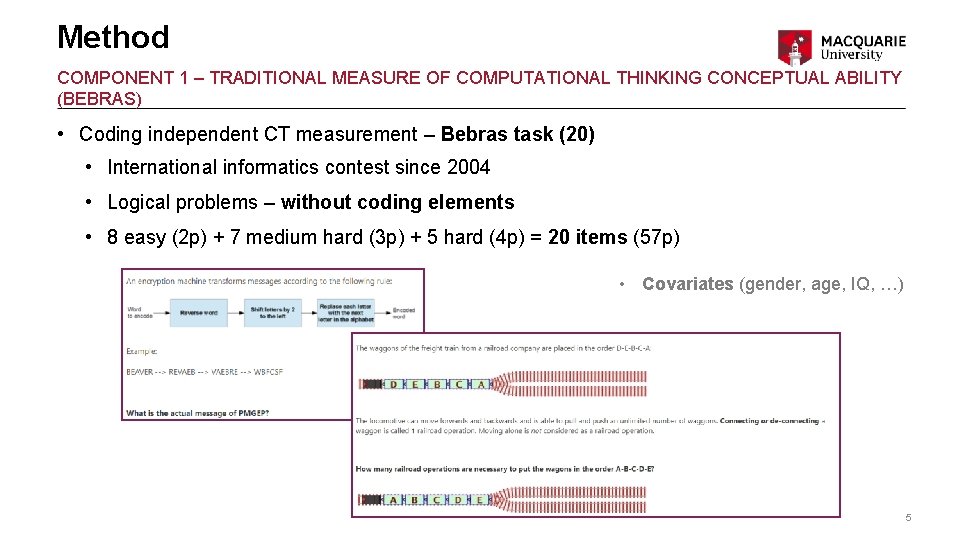

Method
COMPONENT 1 – TRADITIONAL MEASURE OF COMPUTATIONAL THINKING CONCEPTUAL ABILITY (BEBRAS)

- Coding-independent CT measurement: Bebras tasks (20 items)
- Bebras is an international informatics contest that has run since 2004
- Logical problems without coding elements
- 8 easy (2 points each) + 7 medium-hard (3 points each) + 5 hard (4 points each) = 20 items (57 points)
- Covariates (gender, age, IQ, …)
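The scoring arithmetic above can be checked with a short sketch. The item counts and point values come from the slide; the function names and the assumption that wrong answers carry no penalty are illustrative only and are not stated in the source.

```python
# Bebras item structure as stated on the slide: level -> (number of items, points per item).
ITEM_STRUCTURE = {"easy": (8, 2), "medium_hard": (7, 3), "hard": (5, 4)}


def total_items(structure=ITEM_STRUCTURE):
    """Number of items across all difficulty levels."""
    return sum(count for count, _ in structure.values())


def max_score(structure=ITEM_STRUCTURE):
    """Maximum achievable score: items times points, summed over levels."""
    return sum(count * points for count, points in structure.values())


def bebras_score(correct_by_level, structure=ITEM_STRUCTURE):
    """Score for a participant given the number of correct answers per level.

    ASSUMPTION: wrong or skipped answers score zero (no penalty); the slide
    does not say how incorrect answers were treated.
    """
    score = 0
    for level, n_correct in correct_by_level.items():
        count, points = structure[level]
        if not 0 <= n_correct <= count:
            raise ValueError(f"{level}: expected 0..{count} correct, got {n_correct}")
        score += n_correct * points
    return score


if __name__ == "__main__":
    print(total_items(), max_score())
    print(bebras_score({"easy": 8, "medium_hard": 4, "hard": 1}))
```

The totals reproduce the 20 items and 57 points given on the slide.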

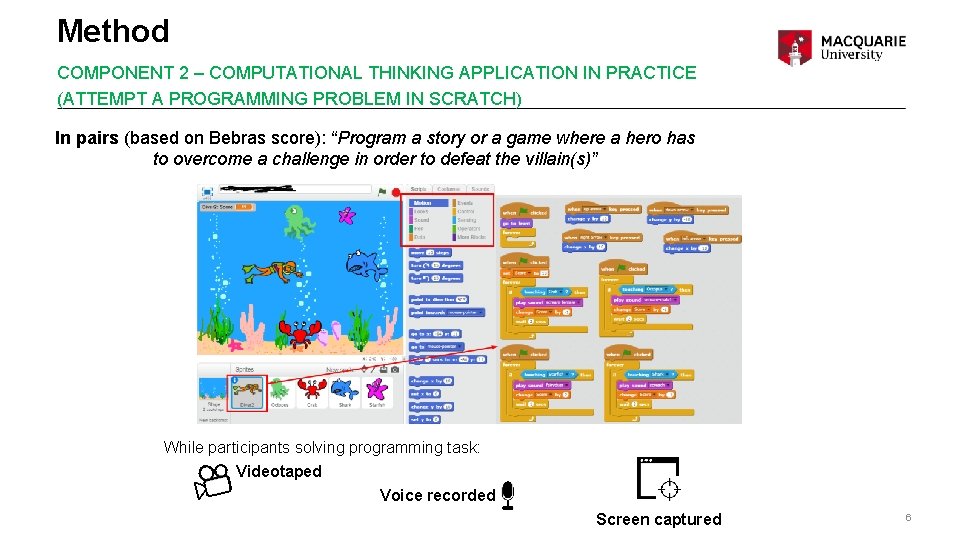

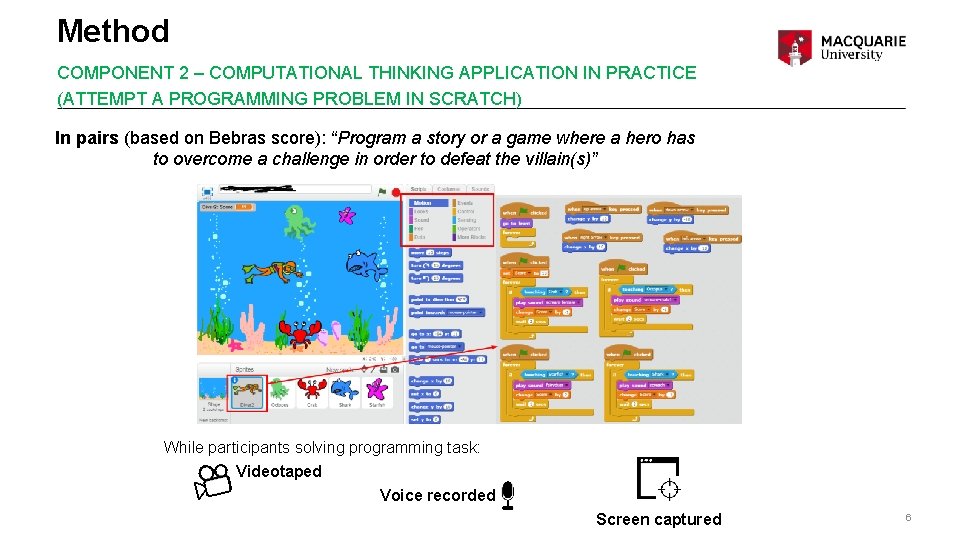

Method
COMPONENT 2 – COMPUTATIONAL THINKING APPLICATION IN PRACTICE (ATTEMPT A PROGRAMMING PROBLEM IN SCRATCH)

In pairs (based on Bebras score): “Program a story or a game where a hero has to overcome a challenge in order to defeat the villain(s)”

While participants solved the programming task, they were:

- Videotaped
- Voice recorded
- Screen captured

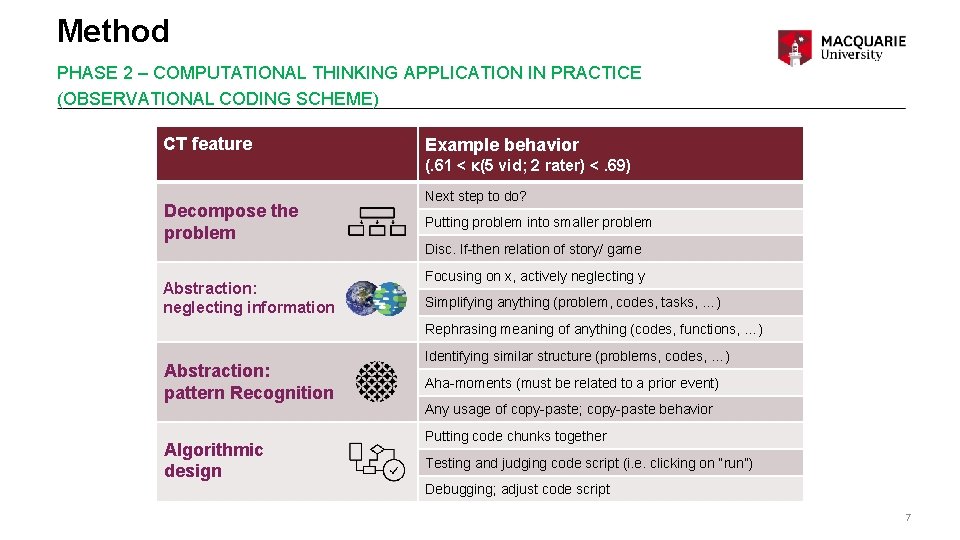

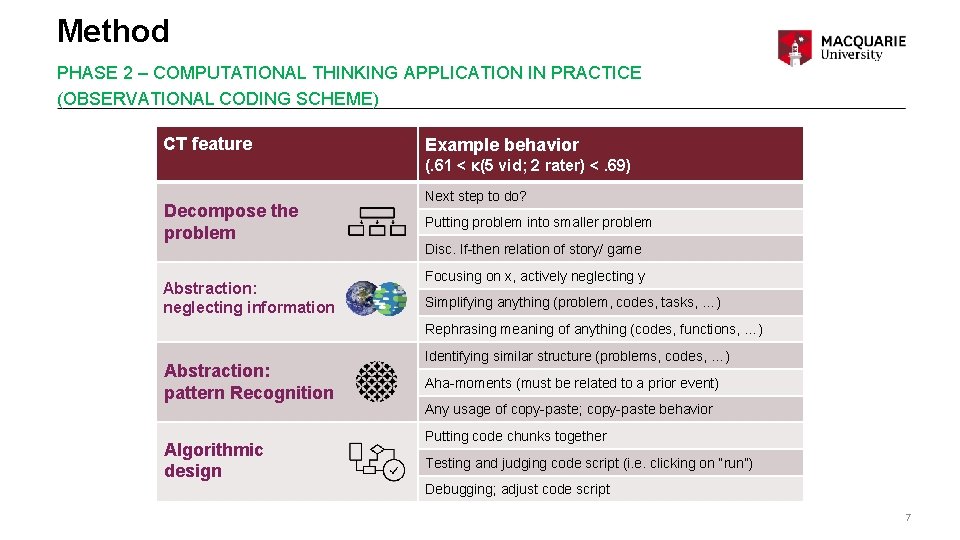

Method
PHASE 2 – COMPUTATIONAL THINKING APPLICATION IN PRACTICE (OBSERVATIONAL CODING SCHEME)

Inter-rater agreement: .61 < κ < .69 (5 videos; 2 raters)

| CT feature | Example behaviour |
| --- | --- |
| Decompose the problem | Next step to do?; putting problem into smaller problems; disc. if-then relation of story/game |
| Abstraction: neglecting information | Focusing on x, actively neglecting y; simplifying anything (problem, codes, tasks, …); rephrasing meaning of anything (codes, functions, …) |
| Abstraction: pattern recognition | Identifying similar structure (problems, codes, …); aha-moments (must be related to a prior event); any usage of copy-paste |
| Algorithmic design | Putting code chunks together; testing and judging code script (i.e. clicking on “run”); debugging; adjusting code script |
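The slide reports agreement between two raters as κ. A minimal sketch of how such a value can be computed is given here, assuming the statistic is Cohen's kappa (the slide does not name the variant) and using made-up category labels rather than the study's data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same sequence of segments.

    kappa = (p_observed - p_expected) / (1 - p_expected), where p_expected is
    the chance agreement implied by each rater's marginal label frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty set of segments")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = freq_a.keys() | freq_b.keys()
    expected = sum(freq_a[label] * freq_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label throughout; kappa is undefined.
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical labels for four video segments (not data from the study).
    a = ["decompose", "decompose", "algorithm", "algorithm"]
    b = ["decompose", "algorithm", "algorithm", "algorithm"]
    print(cohens_kappa(a, b))
```

In the hypothetical example the raters agree on three of four segments, chance agreement is 0.5, and kappa is therefore 0.5.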

Method
PHASE 2 – COMPUTATIONAL THINKING APPLICATION IN PRACTICE (CODING IN INTERACT)

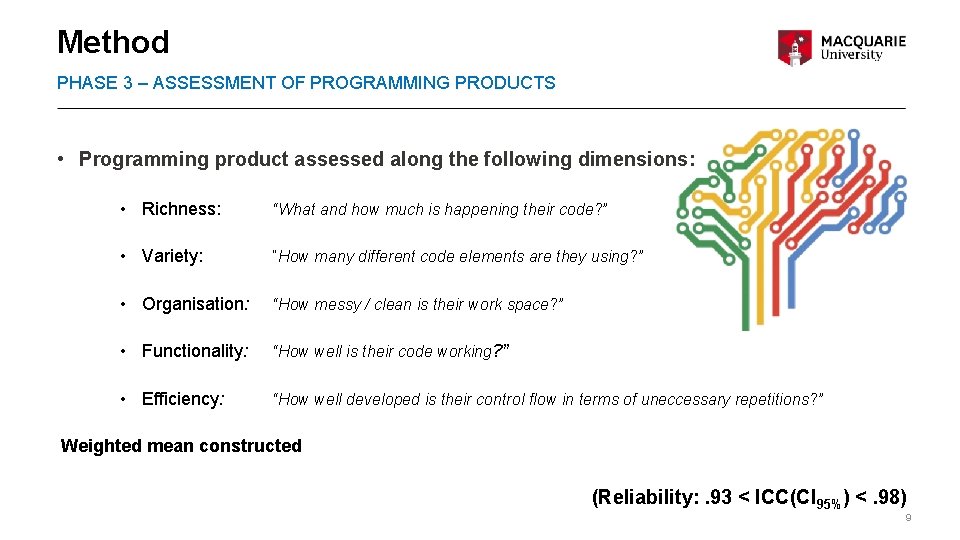

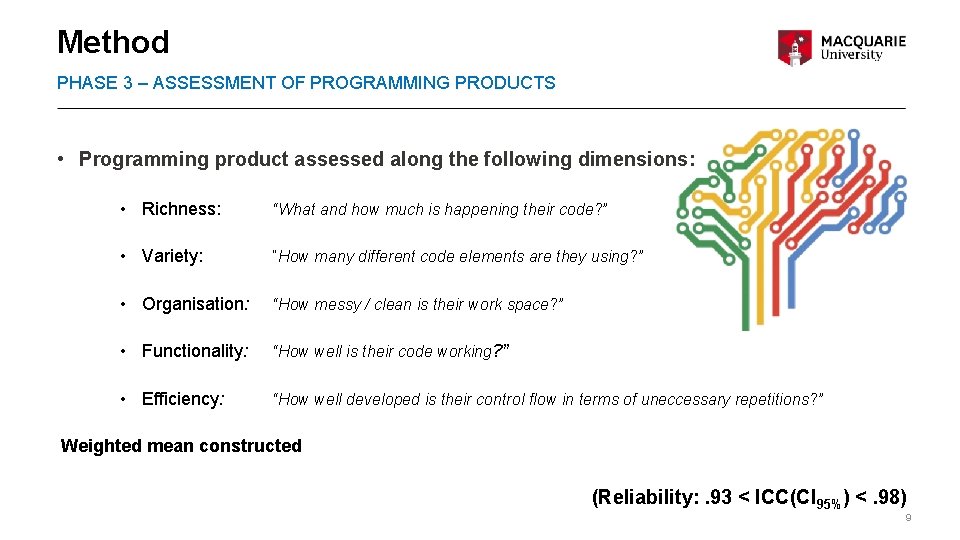

Method
PHASE 3 – ASSESSMENT OF PROGRAMMING PRODUCTS

The programming product was assessed along the following dimensions:

- Richness: “What and how much is happening in their code?”
- Variety: “How many different code elements are they using?”
- Organisation: “How messy / clean is their work space?”
- Functionality: “How well is their code working?”
- Efficiency: “How well developed is their control flow in terms of unnecessary repetitions?”

A weighted mean was constructed (reliability: .93 < ICC (95% CI) < .98).
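The weighted mean over the five dimensions could be computed along these lines. The slide does not give the weights or the rating scale, so the weights, scores, and function name here are purely hypothetical placeholders.

```python
DIMENSIONS = ("richness", "variety", "organisation", "functionality", "efficiency")


def weighted_mean(scores, weights):
    """Weighted mean of the five product dimensions.

    ASSUMPTION: the weights are placeholders; the source does not state
    which weights the authors used.
    """
    missing = [d for d in DIMENSIONS if d not in scores or d not in weights]
    if missing:
        raise ValueError(f"Missing dimensions: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    if total_weight <= 0:
        raise ValueError("Weights must sum to a positive value")
    return sum(scores[d] * weights[d] for d in DIMENSIONS) / total_weight


if __name__ == "__main__":
    # Hypothetical ratings for one pair's Scratch project (not study data).
    scores = {"richness": 4, "variety": 1, "organisation": 1,
              "functionality": 1, "efficiency": 1}
    # Hypothetical weights: richness counted twice as heavily as the rest.
    weights = {"richness": 2, "variety": 1, "organisation": 1,
               "functionality": 1, "efficiency": 1}
    print(weighted_mean(scores, weights))
```

With equal weights the function reduces to the ordinary mean of the five ratings.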

Results
COMPUTATIONAL THINKING APPLICATION IN PRACTICE

How is computational thinking applied in practice when solving a programming problem?

Out of 40 min, participants spent...

| CT behaviour | Mean | Min – Max |
| --- | --- | --- |
| Decompose the problem | 03 min 06 sec | 00 min 24 sec – 09 min 03 sec |
| Abst.: neglecting information | - | - |
| Abst.: pattern recognition | 00 min 34 sec (pairs = 17) | 00 min 04 sec – 01 min 30 sec |
| Algorithmic design | 14 min 59 sec | 04 min 09 sec – 24 min 25 sec |
| = CT behavioural (total) | 18 min 28 sec | 06 min 18 sec – 28 min 10 sec |
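To put these durations in proportion, the mean times can be expressed as a share of the 40-minute session. The sketch below uses the mean values from the slide; the helper names are illustrative.

```python
def to_seconds(minutes, seconds):
    """Convert a (minutes, seconds) duration to seconds."""
    return minutes * 60 + seconds


SESSION_SECONDS = to_seconds(40, 0)

# Mean durations (minutes, seconds) as reported on the slide.
MEAN_TIMES = {
    "Decompose the problem": (3, 6),
    "Abst.: pattern recognition": (0, 34),
    "Algorithmic design": (14, 59),
    "CT behavioural (total)": (18, 28),
}


def share_of_session(minutes, seconds, session_seconds=SESSION_SECONDS):
    """Fraction of the session taken up by the given duration."""
    return to_seconds(minutes, seconds) / session_seconds


if __name__ == "__main__":
    for behaviour, (m, s) in MEAN_TIMES.items():
        print(f"{behaviour}: {share_of_session(m, s):.1%} of the session")
```

On these figures, observable CT behaviour accounts for a little under half of the session on average, most of it algorithmic design.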

Results
COMPUTATIONAL THINKING CONCEPTUAL ABILITY VS PROGRAMMING PRODUCT

What is the relationship between computational thinking conceptual ability and quality of programming products?

Correlation between Bebras score (CT without coding elements) and programming ability:

| Programming ability | r |
| --- | --- |
| Richness | .39* |
| Variety | .26 |
| Organisation | .05 |
| Functionality | .29* |
| Efficiency | .24 |
| = Weighted mean | .30* |

\* Indicates p < .05

SMALL POSITIVE CORRELATION
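The r values reported in these results are correlation coefficients. As a minimal sketch, assuming they are Pearson correlations (the slide does not name the coefficient), r can be computed as follows; the example scores are invented for illustration and are not the study's data.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("Need two samples of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("Correlation is undefined when a sample has zero variance")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical Bebras scores and product ratings for five pairs.
    bebras = [22, 31, 35, 40, 48]
    product = [2.1, 2.0, 3.4, 2.9, 3.8]
    print(round(pearson_r(bebras, product), 2))
```

Whether a given r is significant at p < .05 also depends on the sample size, which is why the same magnitude can be starred in one analysis and not in another.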

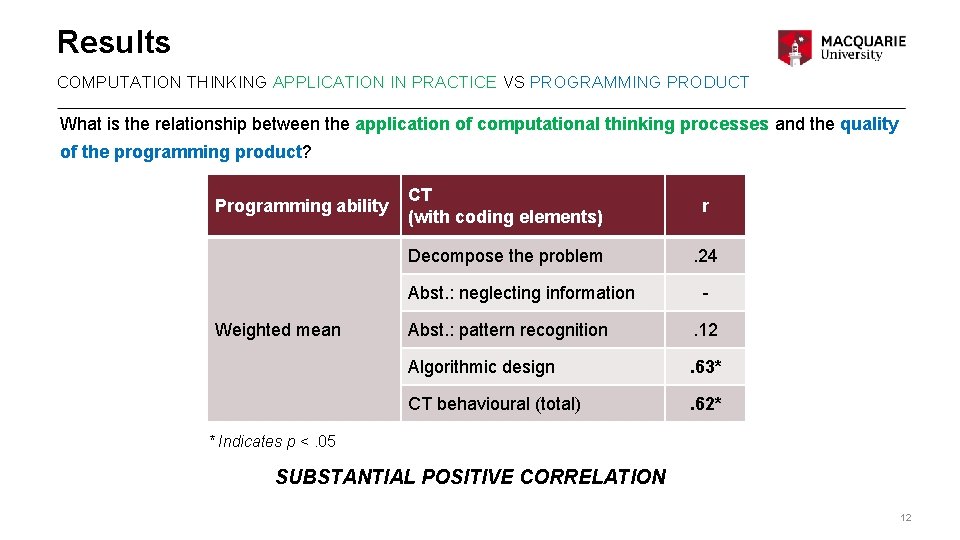

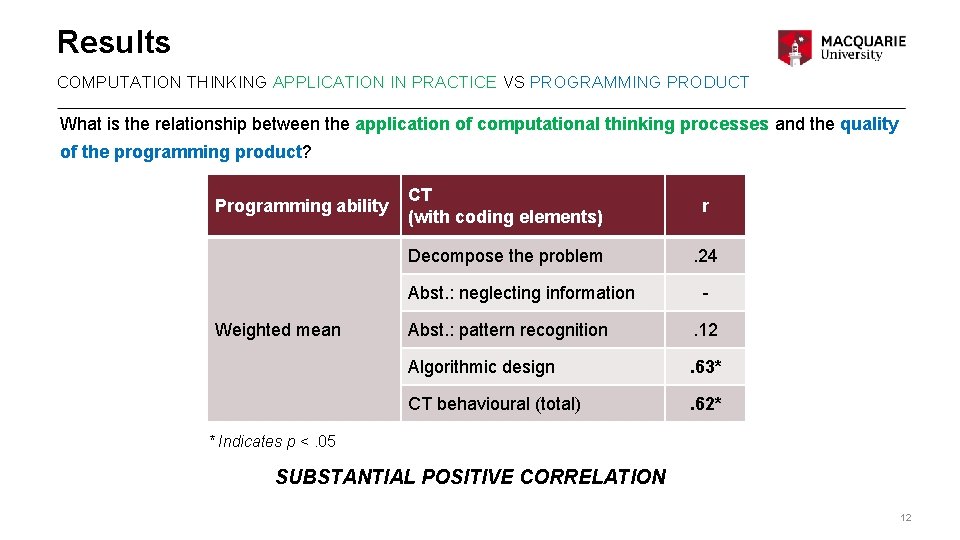

Results
COMPUTATIONAL THINKING APPLICATION IN PRACTICE VS PROGRAMMING PRODUCT

What is the relationship between the application of computational thinking processes and the quality of the programming product?

Correlation between CT behaviours (with coding elements) and programming ability (weighted mean):

| CT (with coding elements) | r |
| --- | --- |
| Decompose the problem | .24 |
| Abst.: neglecting information | - |
| Abst.: pattern recognition | .12 |
| Algorithmic design | .63* |
| CT behavioural (total) | .62* |

\* Indicates p < .05

SUBSTANTIAL POSITIVE CORRELATION

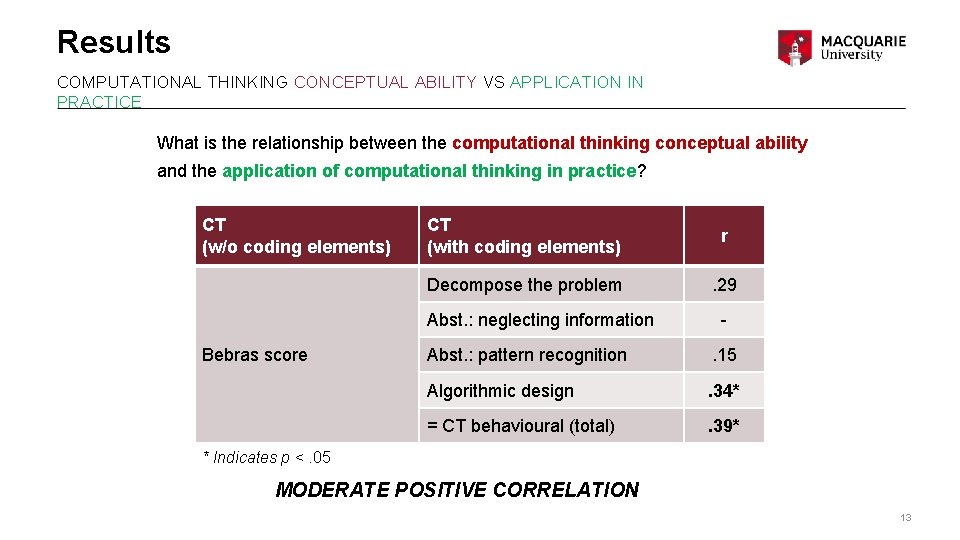

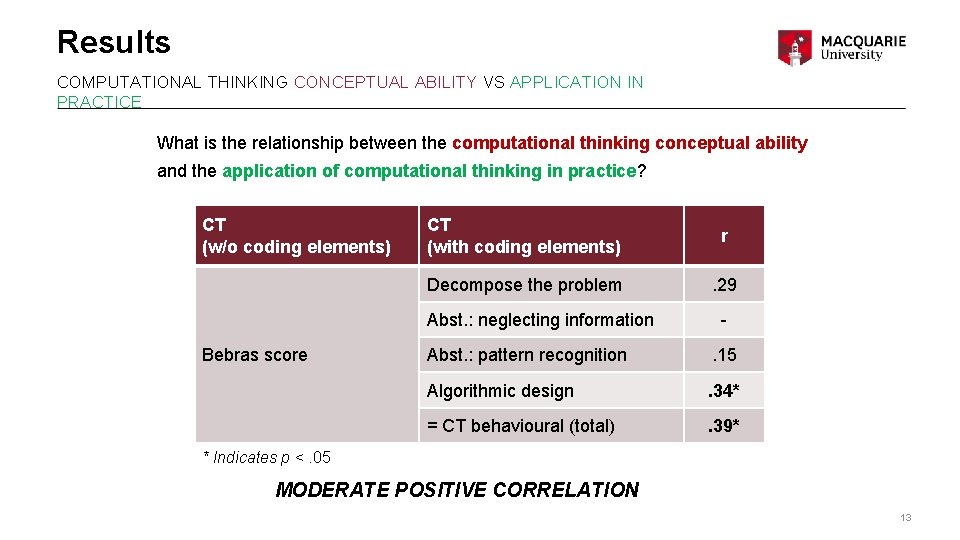

Results
COMPUTATIONAL THINKING CONCEPTUAL ABILITY VS APPLICATION IN PRACTICE

What is the relationship between computational thinking conceptual ability and the application of computational thinking in practice?

Correlation between Bebras score (CT without coding elements) and CT behaviours (with coding elements):

| CT (with coding elements) | r |
| --- | --- |
| Decompose the problem | .29 |
| Abst.: neglecting information | - |
| Abst.: pattern recognition | .15 |
| Algorithmic design | .34* |
| = CT behavioural (total) | .39* |

\* Indicates p < .05

MODERATE POSITIVE CORRELATION

Summary
IN SUMMARY

- Indicators of CT (conceptual, practice, product) were satisfactorily reliable
- Small but significant positive correlation between CT conceptual ability and quality of programming product
- Substantial significant positive correlation between CT application in practice and quality of programming product
- Moderate significant positive correlation between CT conceptual ability and CT application in practice
- This research indicates that we need to focus on observations of computational thinking in practice as the best predictor of ability to solve problems using computers
- Limitation: only one programming context (cohort, task, environment) was examined
- Further research is needed to examine how different tasks, programming environments and pedagogical strategies may influence computational thinking application in practice

Validating a theoretical framework for analysing computer programming processes MATT BOWER, JENNIFER LAI, JENS SIEMON, GARRY FALLOON

Research problem

- How do we describe and analyse the process by which people write computer programs?
- This is important for understanding how teachers might better support the development of computer programming capabilities.
- Activity Theory has been used as a framework for analysing how activities are undertaken within social constructivist contexts.
- Here the Subject is pairs of students working together, the Object is the computer program, and the tools (instruments) that subjects can use to develop the program are computers, integrated development environments, etc.
- Activity Theory does not encompass any of the specific computer programming processes that people undertake.

Figure 1. The elements of an activity system and their interrelationships (Engeström, 1987)

Computational thinking & notional machine

- Computational Thinking: Solving problems, designing systems, and understanding human behaviour, by drawing on the concepts fundamental to computer science (Wing, 2006)
- Notional Machine: The notional machine is an abstract version of the computer, “an idealised, conceptual computer whose properties are implied by the constructs in the programming language employed” (du Boulay et al., 1989)
- These concepts can be used to theoretically ground how people go about performing computer programming processes
- Theory should represent reality, so the model requires empirical validation

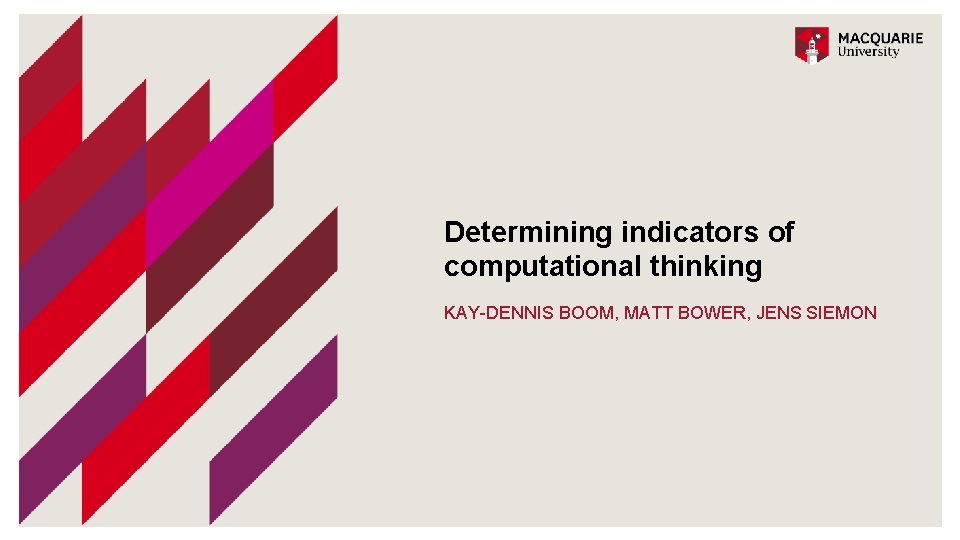

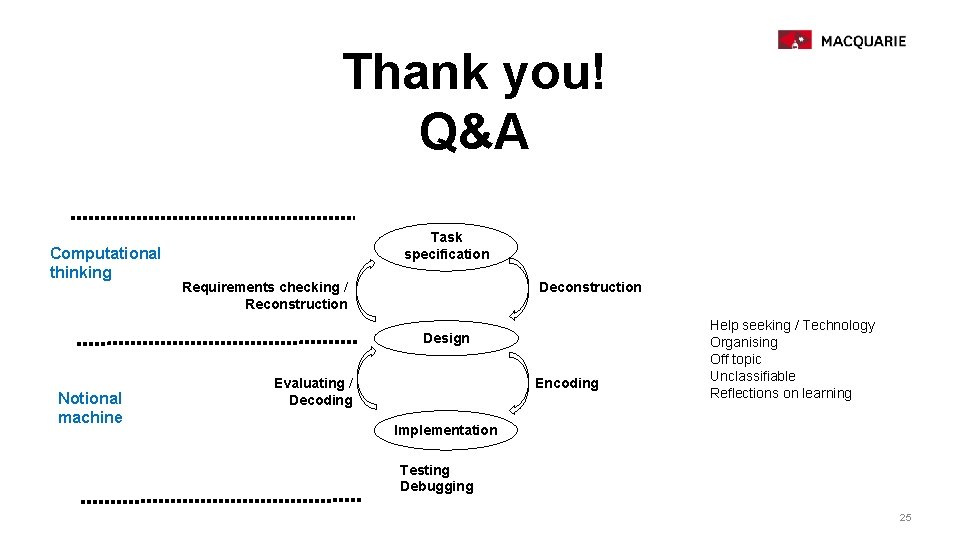

Research model

- Thematic analysis using an informed grounded theory approach, starting with an initial framework.

Initial framework (labels from the slide diagram): Computational thinking; Task specification; Requirements checking; Reconstruction; Design; Notional machine; Encoding; Evaluating; Decoding; Help seeking; Technology; Organising; Off topic; Unclassifiable; Reflections on learning; Implementation; Testing; Debugging

Methodology

- Data were collected from 10 pairs of students completing a Scratch programming activity:
  - 5 pairs of pre-service teachers with little experience
  - 5 pairs of third-year computing students
- Task: program a story game where a hero has to overcome a challenge in order to defeat the villain(s).
- Each pair spent approximately 40 minutes on the task.

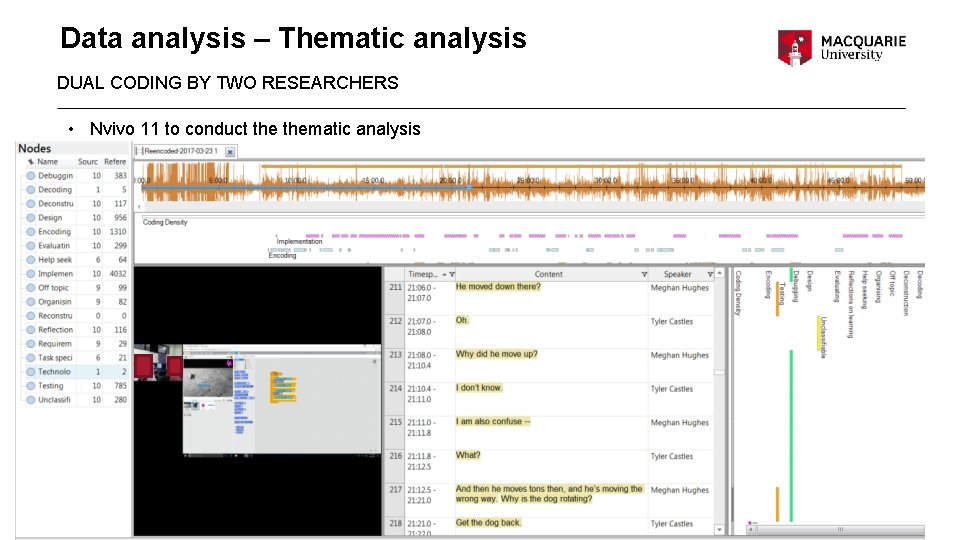

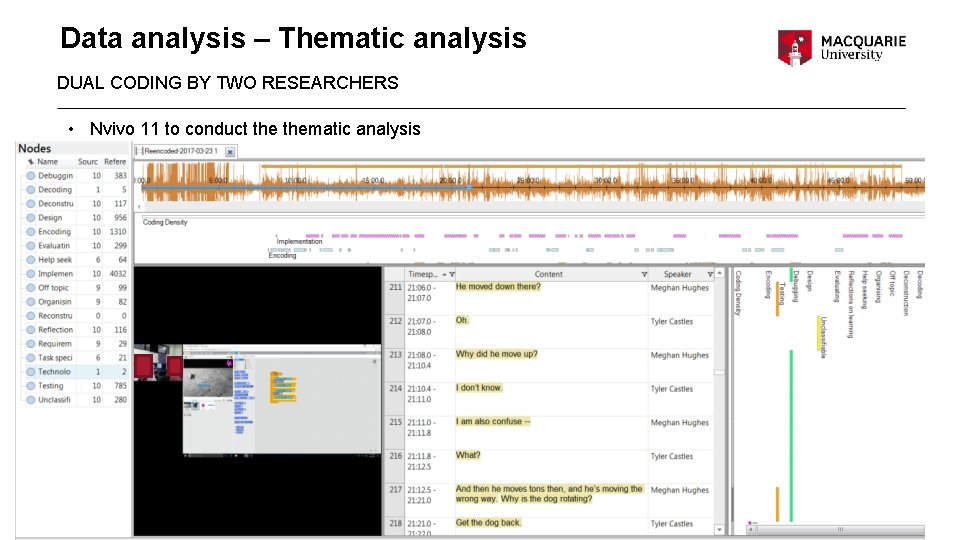

Data analysis – Thematic analysis
DUAL CODING BY TWO RESEARCHERS

- NVivo 11 was used to conduct the thematic analysis

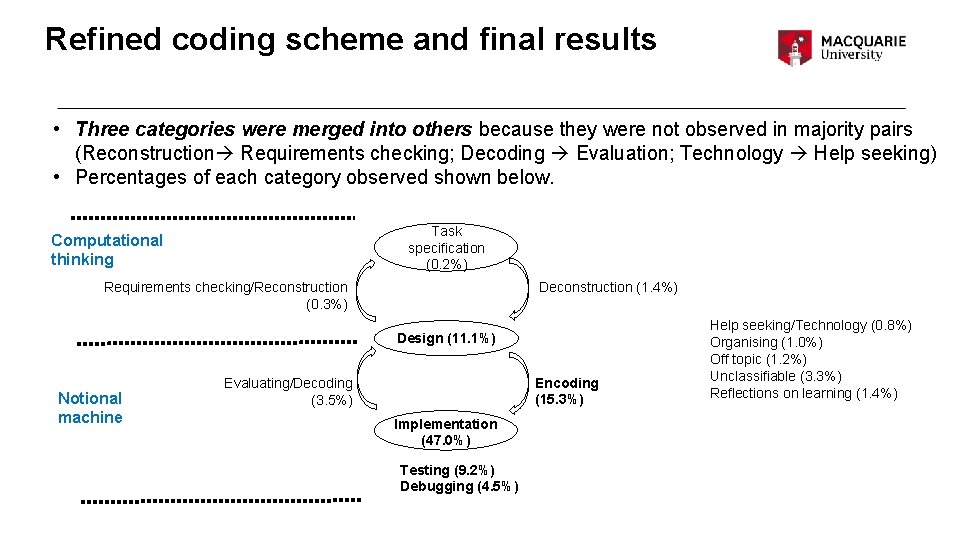

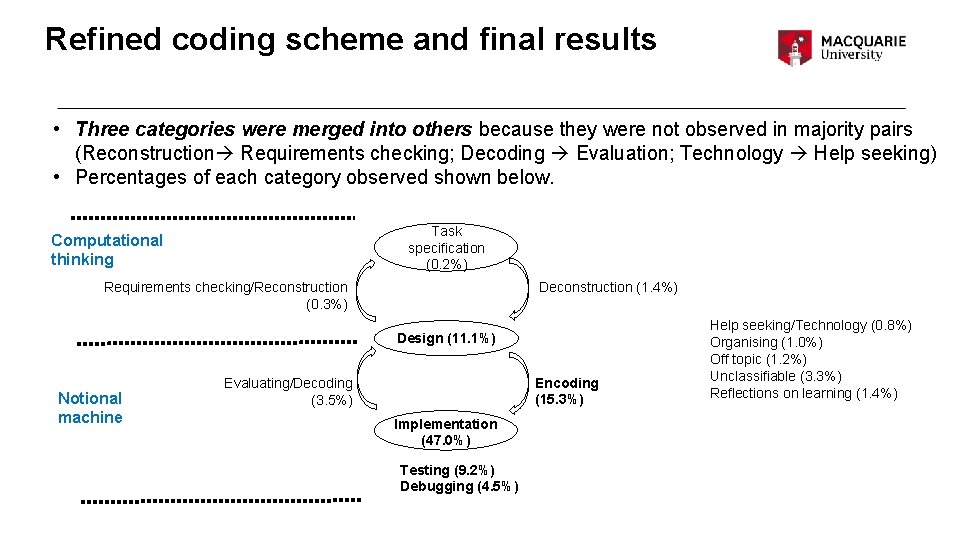

Refined coding scheme and final results

- Three categories were merged into others because they were not observed in the majority of pairs (Reconstruction → Requirements checking; Decoding → Evaluation; Technology → Help seeking)
- The percentage of each category observed is shown below. The diagram also carries the grouping labels Computational thinking and Notional machine.

| Category | Observed |
| --- | --- |
| Task specification | 0.2% |
| Requirements checking/Reconstruction | 0.3% |
| Deconstruction | 1.4% |
| Design | 11.1% |
| Evaluating/Decoding | 3.5% |
| Encoding | 15.3% |
| Implementation | 47.0% |
| Testing | 9.2% |
| Debugging | 4.5% |
| Help seeking/Technology | 0.8% |
| Organising | 1.0% |
| Off topic | 1.2% |
| Unclassifiable | 3.3% |
| Reflections on learning | 1.4% |

Some significant differences between computing and education students

- Chi-square test: χ² = 154.47, df = 13, p < 2.2e-16
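The reported df = 13 is consistent with a chi-square test on a 14-category by 2-cohort contingency table, since df = (rows − 1)(columns − 1). The sketch below shows how the statistic and degrees of freedom could be computed under that assumption; the counts are invented and the function name is illustrative, since the slide gives only the test result.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table.

    `table` is a list of rows (one per coding category), each row a list of
    observed counts (one per cohort).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            if expected == 0:
                raise ValueError("Expected count of zero; drop empty rows or columns")
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return statistic, df


if __name__ == "__main__":
    # Hypothetical counts of coded segments: 14 categories x 2 cohorts
    # (computing students, education students). Not the study's data.
    counts = [[3, 2], [4, 5], [30, 12], [120, 110], [50, 25], [160, 150], [520, 430],
              [95, 90], [45, 48], [4, 14], [6, 15], [12, 13], [30, 38], [8, 22]]
    statistic, df = chi_square_statistic(counts)
    print(round(statistic, 2), df)
```

Turning the statistic into a p-value requires the chi-square distribution function, which is left out here to keep the sketch dependency-free.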

Framework was able to show that

1. Computing students placed relatively greater focus on deconstructing the problem
2. Computing students placed relatively greater focus on evaluating the program
3. Education students placed relatively greater focus on help seeking
4. Computing students placed relatively greater focus on implementation
5. Education students placed relatively greater focus on reflections on learning
6. Organising was not significant (p = 0.0067), but the very low value may indicate that, with a larger sample, education pairs tended to dedicate more attention to groupwork

Summary and future work

- The study has empirically validated a theoretical framework for describing computer programming processes and shows, using a single contrast, how it can be used to perform educational analysis
- Further research could investigate how the programming process differs for:
  - Different tasks (e.g. more complex specifications)
  - Different languages (e.g. C++, Python, Blockly)
  - Different programming environments (e.g. visual vs. text interfaces)
  - Different cohorts (e.g. children, experts, gender)
  - Different teacher interventions (e.g. forms of scaffolding and modelling)

Thank you! Q&A