An Overview of MIMD Architectures 12142021 courseeleg 652

- Slides: 29

An Overview of MIMD Architectures 12/14/2021 courseeleg 652 -04 FTopic 1 b. ppt 1

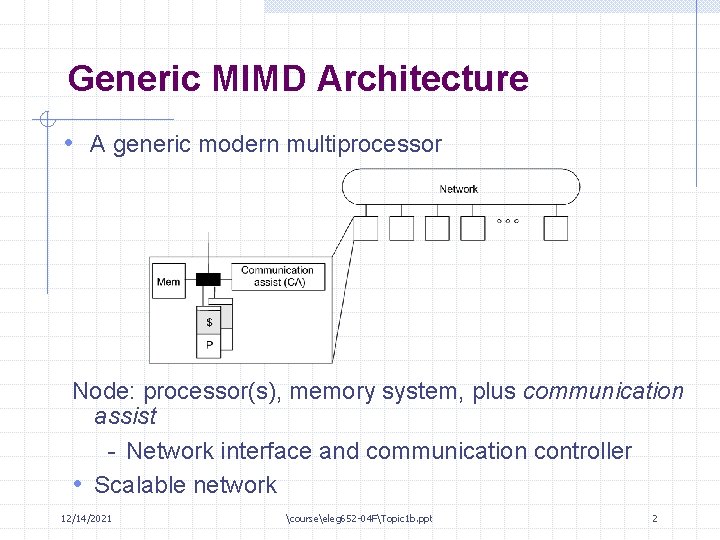

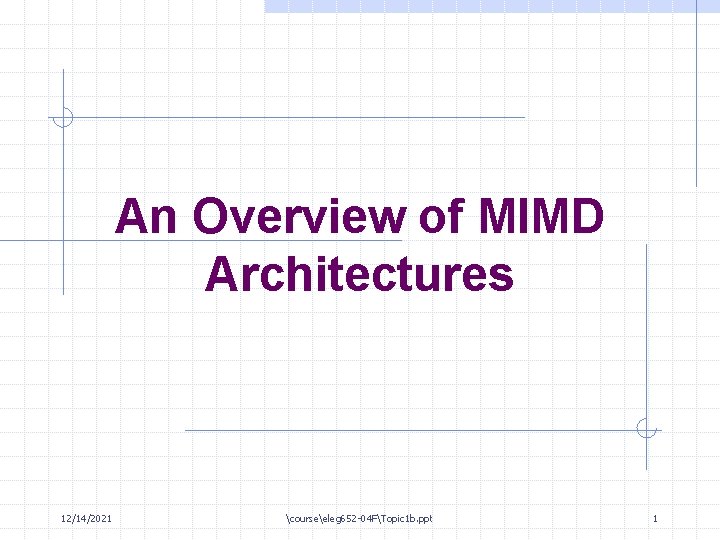

Generic MIMD Architecture

- A generic modern multiprocessor: nodes connected by a scalable network
- Node: processor(s), memory system, plus a communication assist
  - Network interface and communication controller
- Scalable network

Classification

- Shared memory model vs. distributed memory model

Distributed Memory MIMD Machines (Multicomputers, MPPs, clusters, etc.)

- Message-passing programming models
- Interconnection networks
- Generations/history:
  - 1983-87: Cosmic Cube, iPSC/1, iPSC/2 (software routing)
  - 1988-92: mesh-connected (hardware routing), Intel Paragon
  - 1993-99: CM-5, IBM SP
  - 1996 onward: clusters

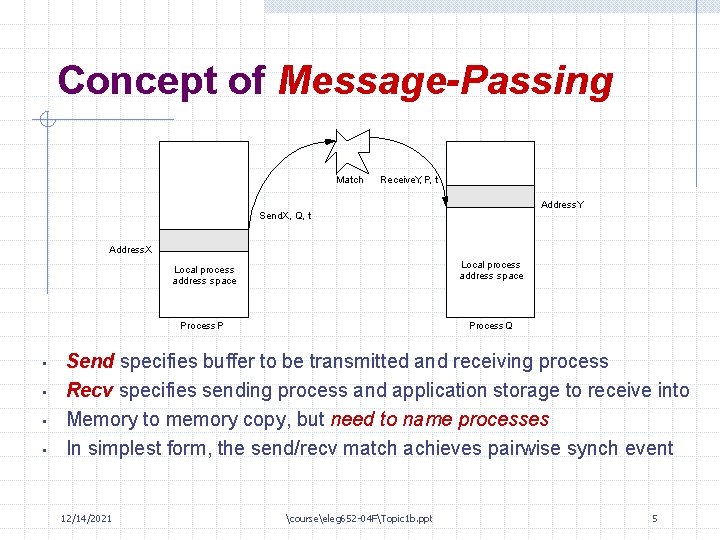

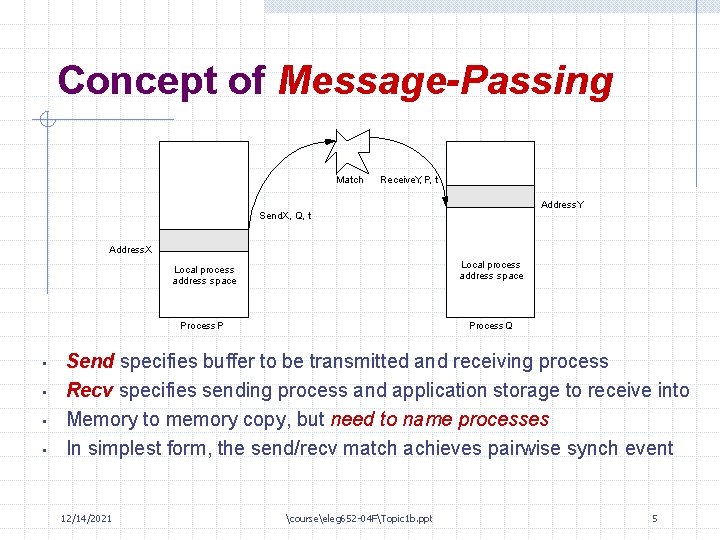

Concept of Message-Passing

(Figure: process P executes Send X, Q, t from address X in its local address space; process Q executes Receive Y, P, t into address Y in its local address space; the send and the receive match.)

- Send specifies the buffer to be transmitted and the receiving process
- Recv specifies the sending process and the application storage to receive into
- Memory-to-memory copy, but processes must be named
- In the simplest form, the send/recv match achieves a pairwise synchronization event
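A minimal sketch of send/recv matching, assuming a single shared-memory Python process stands in for the network. The `Network` class and its method names are hypothetical. Messages are matched on (source process, destination process, tag), mirroring "Send X, Q, t" and "Receive Y, P, t". This version buffers at the destination, so only the receiver blocks; a fully synchronous send would also wait for the matching receive.

```python
import threading
from collections import defaultdict, deque


class Network:
    """Hypothetical in-process stand-in for the interconnect.

    Messages are matched on (source process, destination process, tag).
    """

    def __init__(self):
        self._cv = threading.Condition()
        self._pending = defaultdict(deque)  # (src, dst, tag) -> queued payloads

    def send(self, src, dst, tag, buf):
        with self._cv:
            # Copy the sender's buffer: a memory-to-memory transfer, so later
            # writes to buf in the sender's address space do not affect the message.
            self._pending[(src, dst, tag)].append(list(buf))
            self._cv.notify_all()

    def recv(self, dst, src, tag):
        with self._cv:
            key = (src, dst, tag)
            # Block until a matching send has arrived: the synchronization event.
            while not self._pending[key]:
                self._cv.wait()
            return self._pending[key].popleft()


# Process Q waits for a message from P with tag 7; process P sends buffer x.
net = Network()
received = []
q = threading.Thread(target=lambda: received.append(net.recv("Q", "P", 7)))
q.start()
x = [1, 2, 3]
net.send("P", "Q", 7, x)
x[0] = 99          # does not change the message already sent
q.join()
print(received)    # [[1, 2, 3]]
```

Naming both processes and a tag is what lets the receiver pick the right message out of everything buffered for it; a send with a different tag simply stays queued.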

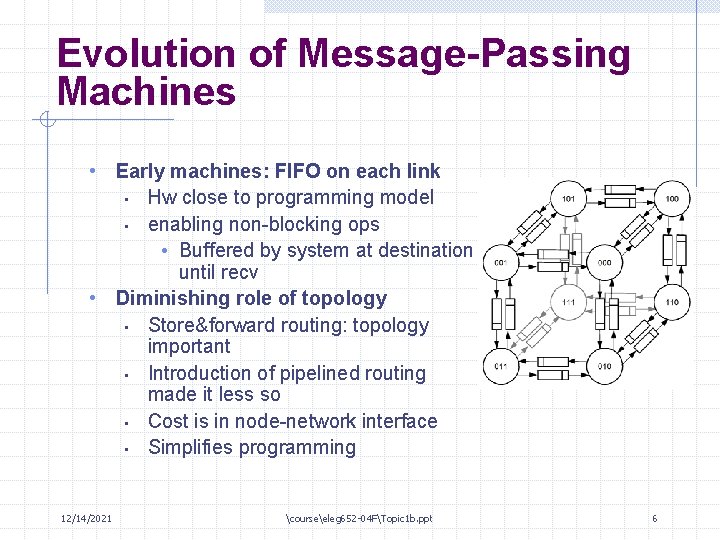

Evolution of Message-Passing Machines

- Early machines: FIFO on each link
  - Hardware close to the programming model
  - Enabling non-blocking operations
  - Messages buffered by the system at the destination until the recv
- Diminishing role of topology
  - Store-and-forward routing: topology important
  - Introduction of pipelined routing made it less so
  - Cost is in the node-network interface
  - Simplifies programming
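Why pipelined routing weakened the role of topology can be seen from a first-order latency model. This is a common textbook simplification, not taken from the slides: it ignores software overhead and contention, and the message size, hop count, link bandwidth, and per-router delay below are hypothetical.

```python
def store_and_forward_latency(msg_bytes, hops, bw):
    """Each router receives the whole message before forwarding it."""
    return hops * (msg_bytes / bw)


def cut_through_latency(msg_bytes, hops, bw, router_delay):
    """Header is pipelined through the routers; the body streams behind it."""
    return msg_bytes / bw + hops * router_delay


# Hypothetical parameters: 1 KB message, 10 hops, 100 MB/s links, 50 ns per router.
sf = store_and_forward_latency(1024, 10, 100e6)
ct = cut_through_latency(1024, 10, 100e6, 50e-9)
print(f"store-and-forward: {sf * 1e6:.2f} us, cut-through: {ct * 1e6:.2f} us")
# store-and-forward: 102.40 us, cut-through: 10.74 us
```

With store-and-forward the hop count multiplies the whole transfer time, so distance (and hence topology) dominates; with pipelined routing each extra hop only adds a small router delay.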

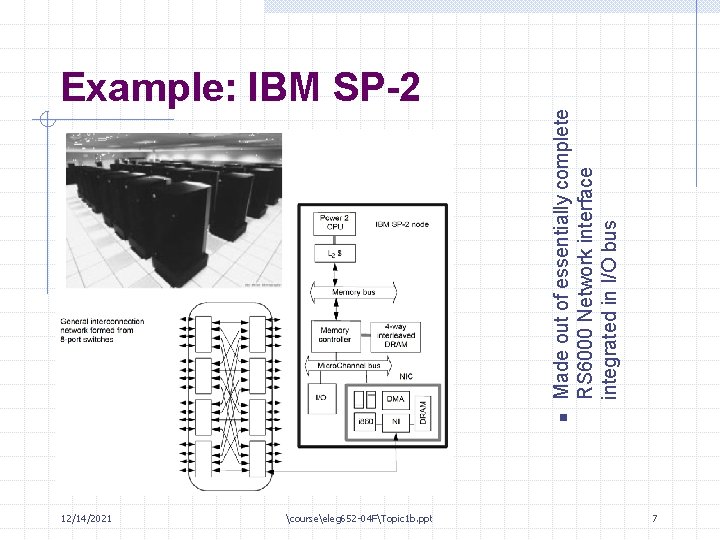

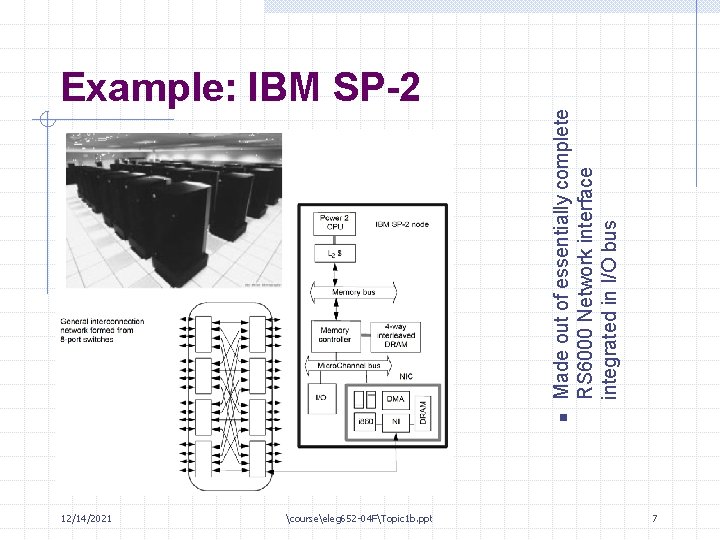

Example: IBM SP-2

- Made out of essentially complete RS/6000 workstations
- Network interface integrated in the I/O bus

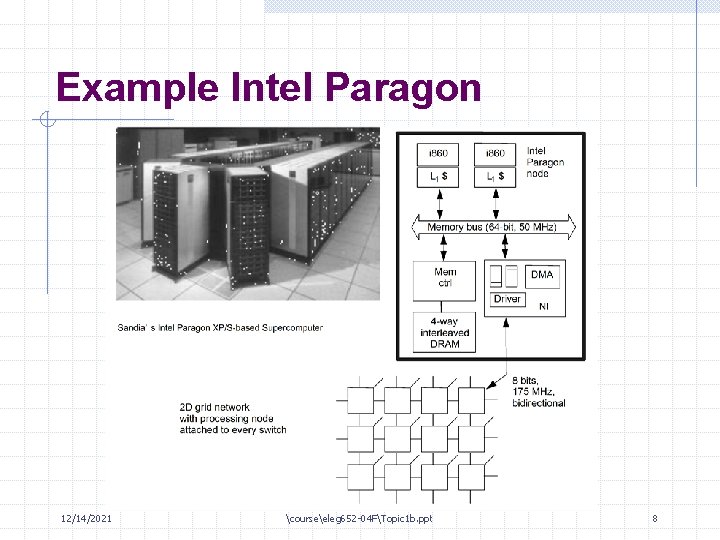

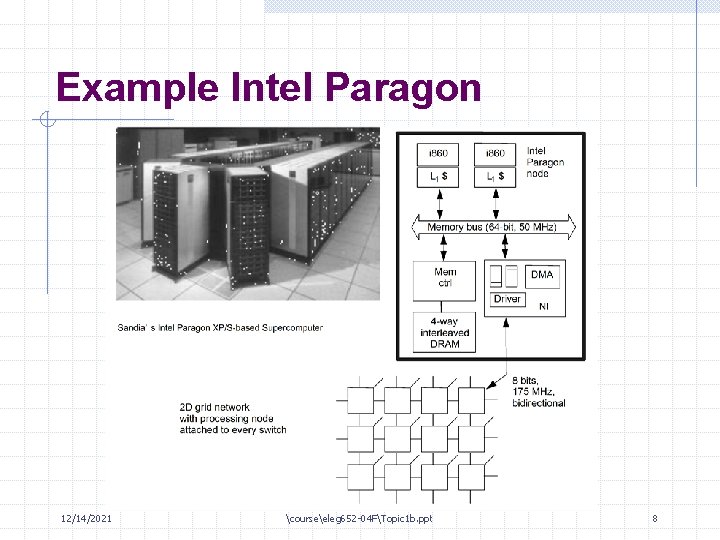

Example: Intel Paragon

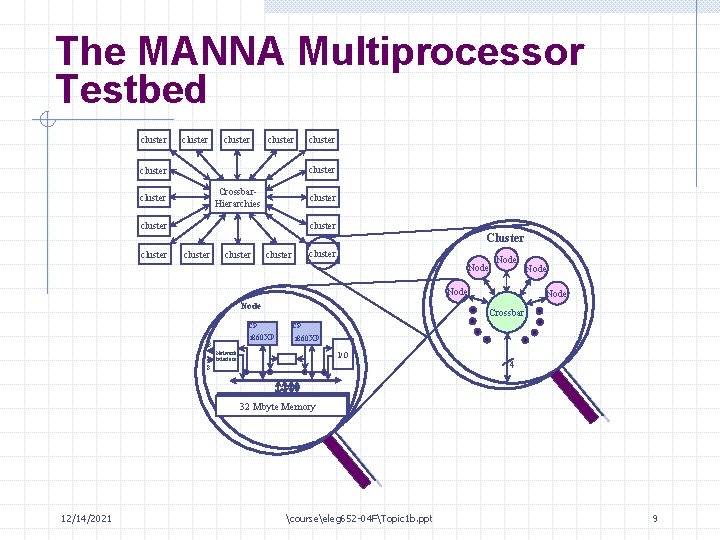

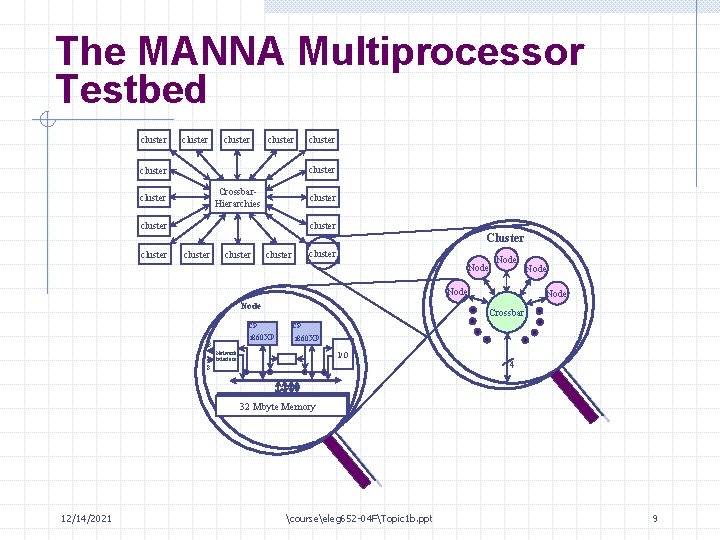

The MANNA Multiprocessor Testbed

(Figure: clusters connected through crossbar hierarchies; within a cluster, nodes are connected by a crossbar; each node has two i860 XP compute processors (CP), a network interface, I/O, and 32 MB of memory.)

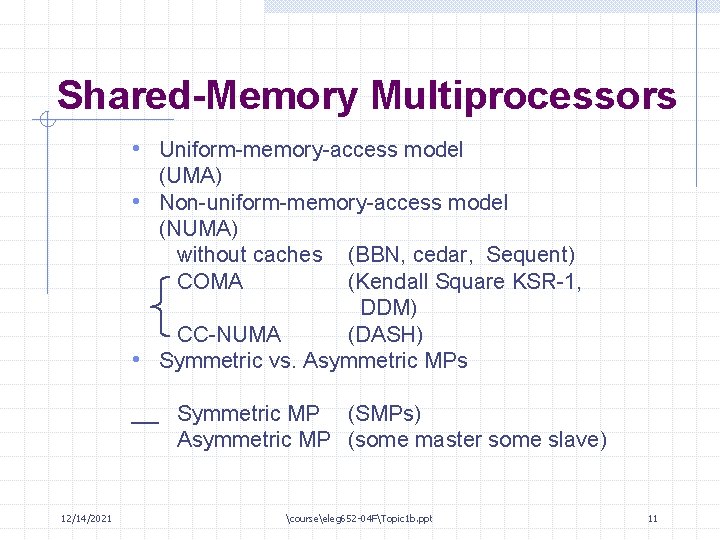

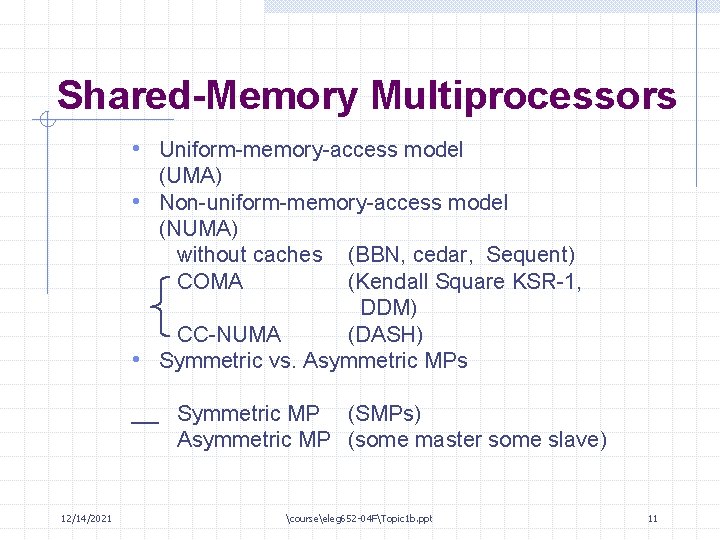

Shared-Memory Multiprocessors

- Uniform-memory-access model (UMA)
- Non-uniform-memory-access model (NUMA)
  - Without caches (BBN, Cedar, Sequent)
  - COMA (Kendall Square KSR-1, DDM)
  - CC-NUMA (DASH)
- Symmetric vs. asymmetric MPs
  - Symmetric MPs (SMPs)
  - Asymmetric MPs (some master, some slave processors)

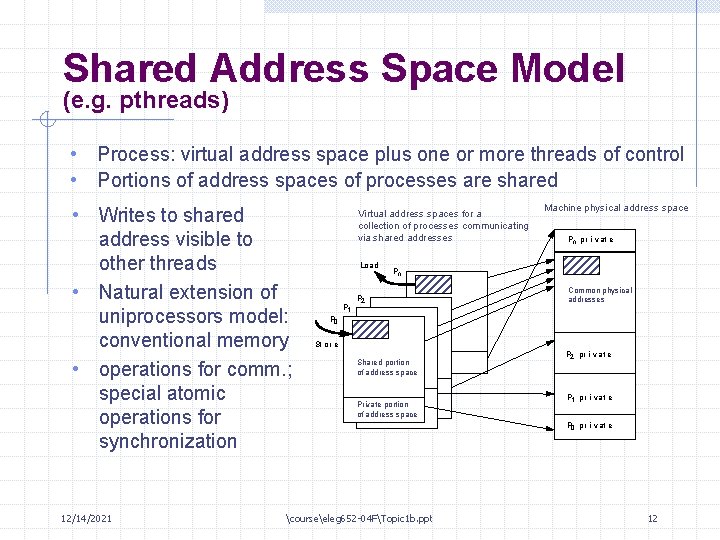

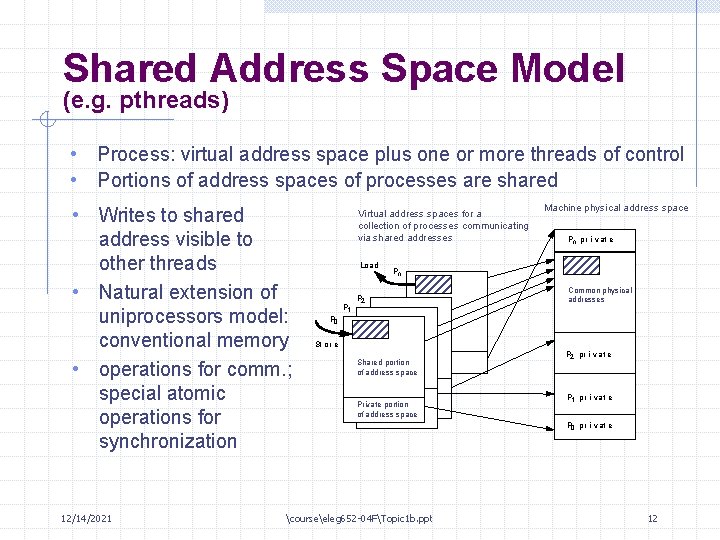

Shared Address Space Model (e.g. pthreads)

- Process: virtual address space plus one or more threads of control
- Portions of the address spaces of processes are shared
- Writes to a shared address are visible to other threads
- Natural extension of the uniprocessor model: conventional memory operations for communication; special atomic operations for synchronization

(Figure: virtual address spaces for a collection of processes P0 to Pn communicating via shared addresses. Each virtual address space has a private portion and a shared portion; loads and stores to the shared portion map to common physical addresses in the machine physical address space.)
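A minimal sketch of the model using Python threads in place of pthreads (an assumption made for consistency; the point is the programming model, not speed). Each thread accumulates into a private local variable, then communicates with an ordinary store to a shared variable, using a lock as the synchronization operation. The function names are hypothetical.

```python
import threading

total = 0                      # shared: visible to every thread in the process
total_lock = threading.Lock()  # stands in for the special synchronization operations


def worker(values):
    global total
    partial = 0                # private: each thread has its own local variable
    for v in values:
        partial += v           # ordinary loads/stores on private data
    with total_lock:           # synchronize before updating shared data
        total += partial       # a plain store to shared memory is the communication


def parallel_sum(data, num_threads=4):
    global total
    total = 0
    chunk = (len(data) + num_threads - 1) // num_threads
    threads = [threading.Thread(target=worker, args=(data[i * chunk:(i + 1) * chunk],))
               for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


print(parallel_sum(list(range(1, 101))))  # 5050
```

No explicit send or receive appears anywhere: communication is implicit in the store to `total`, which is exactly what distinguishes this model from message passing.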

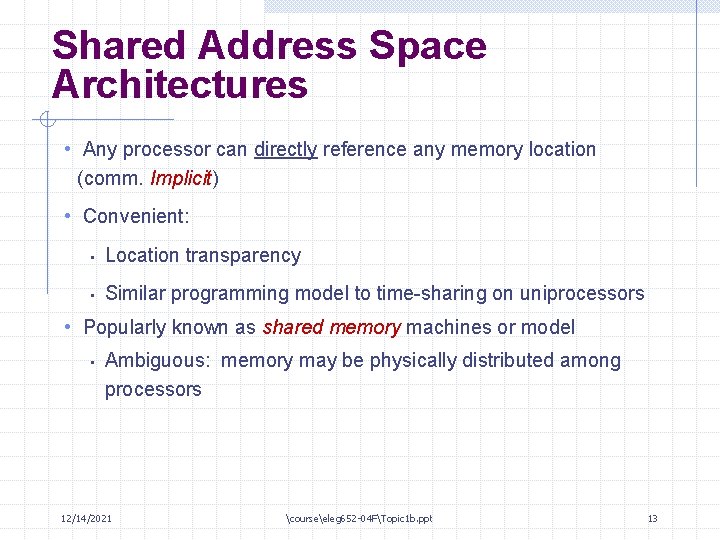

Shared Address Space Architectures

- Any processor can directly reference any memory location (communication is implicit)
- Convenient:
  - Location transparency
  - Programming model similar to time-sharing on uniprocessors
- Popularly known as shared-memory machines or the shared-memory model
  - Ambiguous: memory may be physically distributed among processors

Shared-Memory Parallel Computers (late 1990s to early 2000s)

- SMPs (Intel Quad, Sun SMPs)
- Supercomputers
  - Cray T3E
  - Convex 2000
  - SGI Origin/Onyx
- Tera Computers

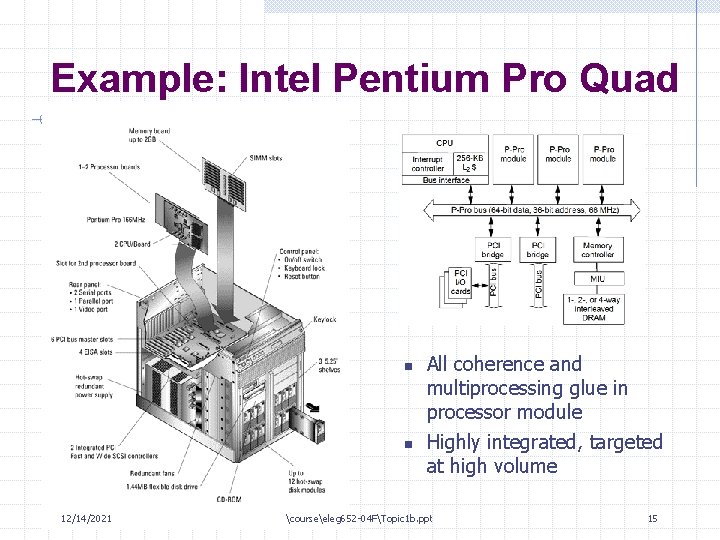

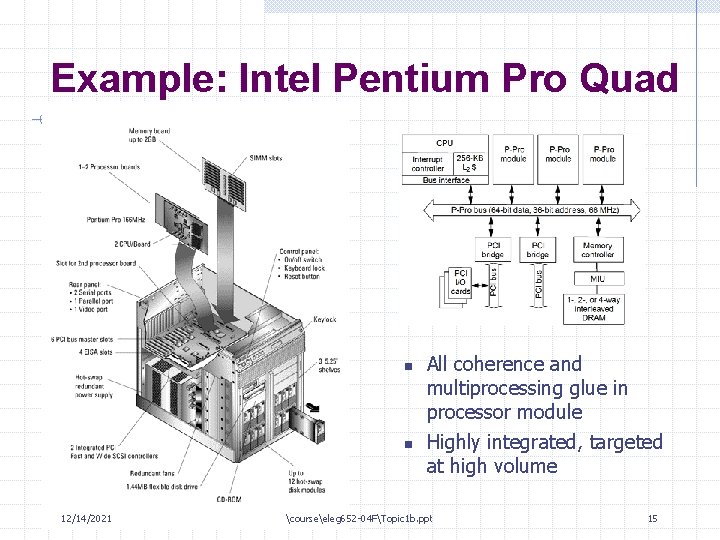

Example: Intel Pentium Pro Quad

- All coherence and multiprocessing glue is in the processor module
- Highly integrated, targeted at high volume

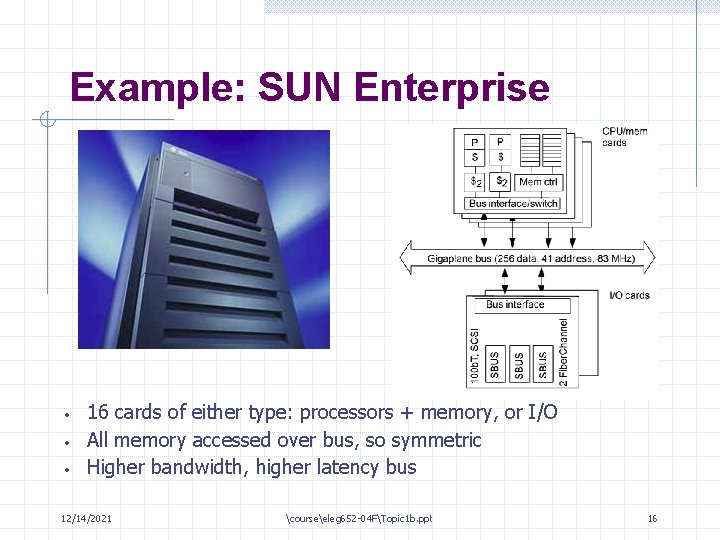

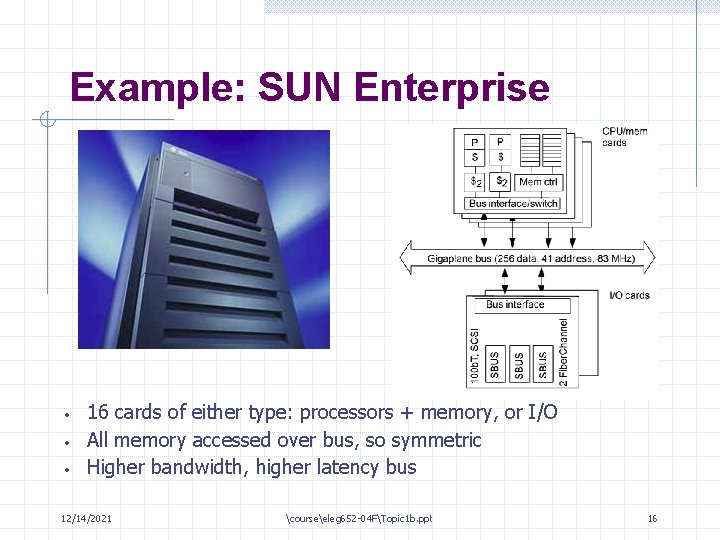

Example: Sun Enterprise

- 16 cards of either type: processors + memory, or I/O
- All memory is accessed over the bus, so the machine is symmetric
- Higher-bandwidth, higher-latency bus
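Bus-based SMPs like these keep private caches coherent by having every cache snoop the shared bus. The slides do not say which protocol these machines use, so the sketch below is a generic three-state MSI invalidation protocol for a single one-word cache line, not the protocol of any machine named here (real implementations use richer variants). All class and method names are hypothetical.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    S = "Shared"
    I = "Invalid"


class Bus:
    """Shared bus plus main memory for one cache line; every cache snoops it."""

    def __init__(self, memory_value=0):
        self.memory = memory_value
        self.caches = []

    def bus_read(self, requester):
        # BusRd: a Modified owner flushes its data and drops to Shared.
        for c in self.caches:
            if c is not requester and c.state is State.M:
                self.memory = c.value
                c.state = State.S
        return self.memory

    def bus_read_exclusive(self, requester):
        # BusRdX: every other copy is invalidated (a dirty one is flushed first).
        for c in self.caches:
            if c is not requester and c.state is not State.I:
                if c.state is State.M:
                    self.memory = c.value
                c.state = State.I
                c.value = None


class Cache:
    def __init__(self, name, bus):
        self.name = name
        self.state = State.I
        self.value = None
        self.bus = bus
        bus.caches.append(self)

    def read(self):
        if self.state is State.I:          # read miss
            self.value = self.bus.bus_read(self)
            self.state = State.S
        return self.value

    def write(self, value):
        if self.state is not State.M:      # need exclusive ownership first
            self.bus.bus_read_exclusive(self)
            self.state = State.M
        self.value = value


bus = Bus(memory_value=0)
p0, p1 = Cache("P0", bus), Cache("P1", bus)
p0.write(5)                    # P0: M, P1: I
print(p1.read())               # 5  (P0 flushes and drops to S)
p1.write(7)                    # P1: M, P0 invalidated
print(p0.read(), bus.memory)   # 7 7
```

Because every transaction is visible on the single bus, each cache can react locally; this is the property that stops scaling once the bus is replaced by a general network.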

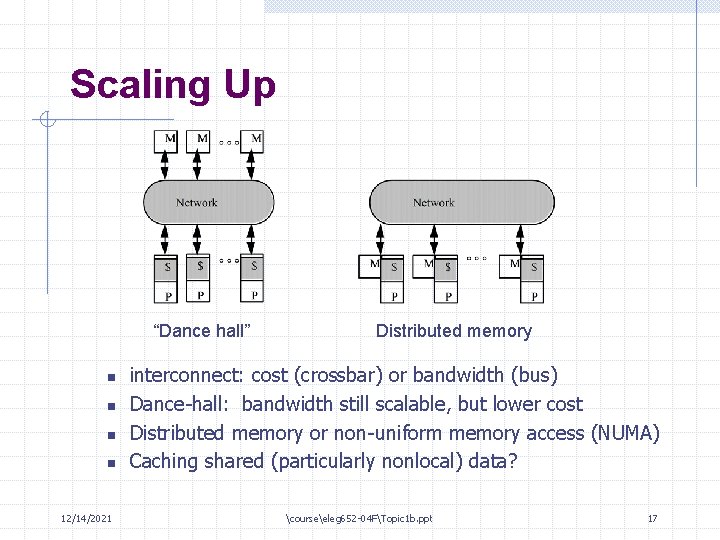

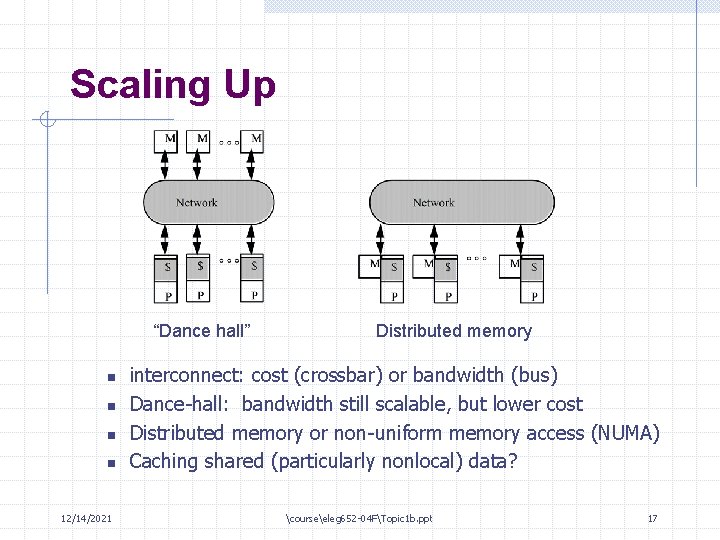

Scaling Up

(Figures: "dance hall" organization and distributed-memory organization.)

- The interconnect is the limit: cost (crossbar) or bandwidth (bus)
- Dance hall: bandwidth is still scalable, but at lower cost
- Distributed memory, or non-uniform memory access (NUMA)
- Caching shared (particularly nonlocal) data?
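The cost of non-uniform access can be summarized as an expected latency per reference. This is a standard weighted-average model; the 100 ns local and 400 ns remote latencies are hypothetical. Caching nonlocal data helps precisely because it turns remote references into local hits, raising the effective local fraction.

```python
def average_access_time(local_fraction, t_local_ns, t_remote_ns):
    """Expected latency of one memory reference on a NUMA machine."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be in [0, 1]")
    return local_fraction * t_local_ns + (1.0 - local_fraction) * t_remote_ns


# Hypothetical latencies: 100 ns local, 400 ns remote.
for f in (0.5, 0.9, 0.99):
    print(f"{f:.2f} local -> {average_access_time(f, 100, 400):.1f} ns")
# 0.50 local -> 250.0 ns
# 0.90 local -> 130.0 ns
# 0.99 local -> 103.0 ns
```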

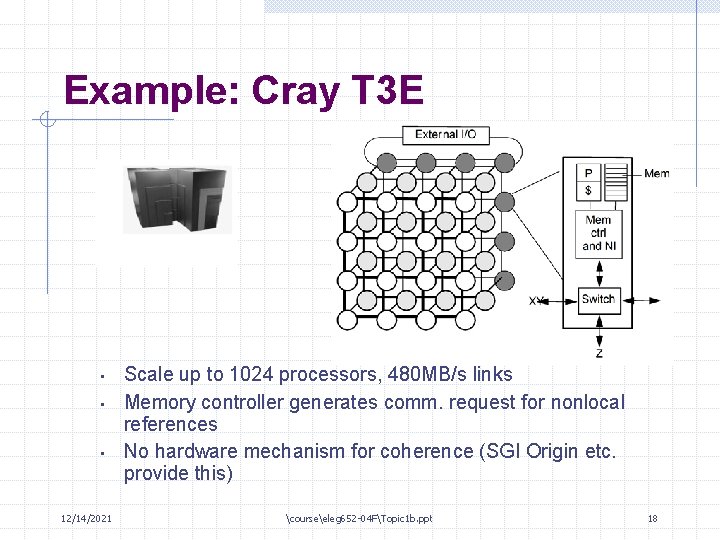

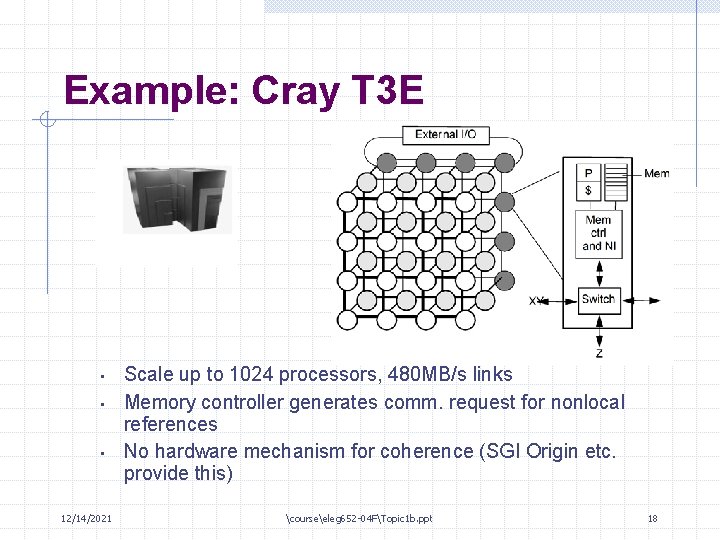

Example: Cray T3E

- Scales up to 1024 processors, with 480 MB/s links
- Memory controller generates a communication request for nonlocal references
- No hardware mechanism for coherence (SGI Origin etc. provide this)

Multithreaded Shared-Memory MIMD

- "Time-sharing" one instruction processing unit, in a pipelined fashion, among all instruction streams

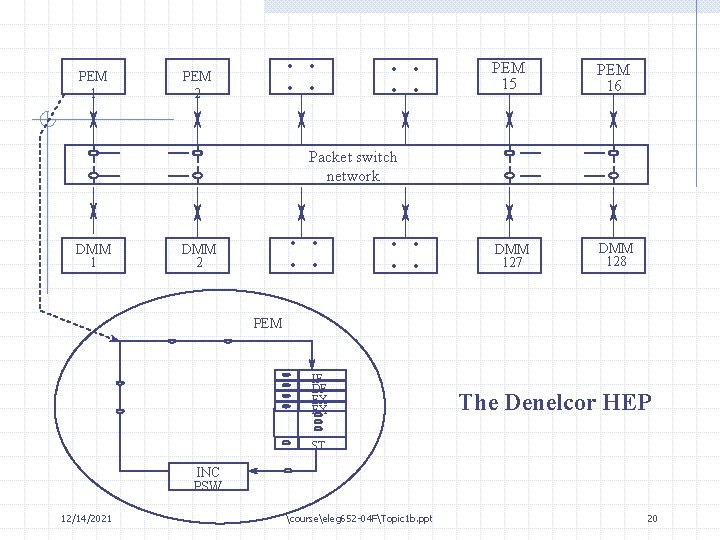

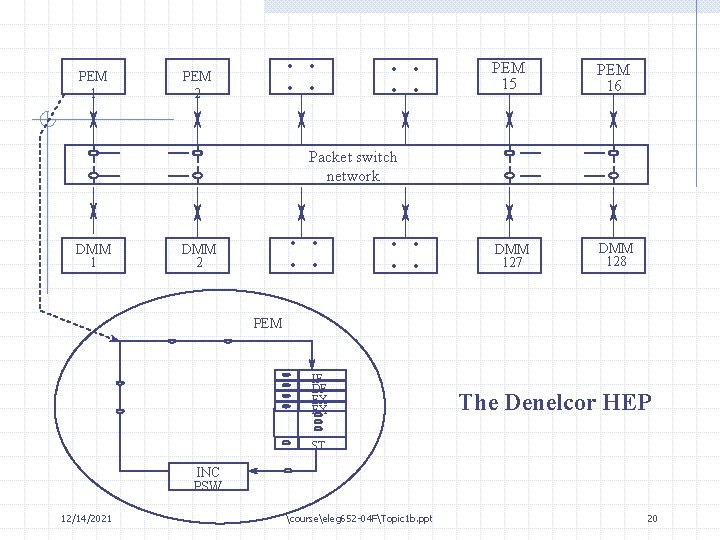

The Denelcor HEP

(Figure: process execution modules PEM 1 to PEM 16 and data memory modules DMM 1 to DMM 128 connected by a packet-switch network; an inset shows the PEM instruction pipeline.)

Denelcor HEP

- Many instruction streams share a single processing unit
- 16 PEMs + 128 DMMs (64 bits/DMM)
- Packet-switching network
- I-stream creation is under program control (50 I-streams)
- Programmability: SISAL, Fortran
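A minimal sketch of how interleaving instruction streams keeps one pipeline busy. Assumptions, not taken from the slides: streams are picked round-robin, a stream may not issue again until its previous instruction has left the pipeline, and the pipeline is 8 stages deep. Memory latency, which the HEP's many I-streams also hide, is ignored here. Function names are hypothetical.

```python
from collections import deque


def issue_trace(instr_counts, pipeline_depth):
    """Simulate a pipeline shared round-robin by several instruction streams.

    instr_counts: number of instructions in each stream.
    A stream may issue again only pipeline_depth cycles after its last issue,
    so there are no hazards within a stream.
    Returns, per cycle, the index of the stream that issued, or None for a bubble.
    """
    remaining = list(instr_counts)
    ready_at = [0] * len(remaining)      # earliest cycle each stream may issue
    order = deque(range(len(remaining)))
    trace, cycle = [], 0
    while any(remaining):
        issued = None
        for _ in range(len(order)):
            s = order[0]
            order.rotate(-1)             # move s to the back: round-robin
            if remaining[s] > 0 and ready_at[s] <= cycle:
                issued = s
                remaining[s] -= 1
                ready_at[s] = cycle + pipeline_depth
                break
        trace.append(issued)
        cycle += 1
    return trace


def utilization(trace):
    return sum(s is not None for s in trace) / len(trace)


# Assumed 8-stage pipeline, 4 instructions per stream.
for n in (1, 4, 8):
    print(f"{n} streams -> utilization {utilization(issue_trace([4] * n, 8)):.2f}")
# 1 streams -> utilization 0.16
# 4 streams -> utilization 0.57
# 8 streams -> utilization 1.00
```

Once there are at least as many ready streams as pipeline stages, an instruction issues every cycle even though no single stream runs faster.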

Tera MTA (1990)

- A shared-memory LIW multiprocessor
- 128 fine-grained threads, each with 32 registers, to tolerate functional-unit, synchronization, and memory latency
- Explicit-dependence lookahead increases single-thread concurrency
- Synchronization uses full/empty bits
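Full/empty-bit synchronization can be modeled in software as a word plus one state bit. This is a sketch only: on the MTA the bit is kept per memory word in hardware, while here a condition variable does the waiting, and the class and method names are hypothetical. The assumed semantics: `write_ef` waits until the word is empty, stores, and marks it full; `read_fe` waits until it is full, loads, and marks it empty.

```python
import threading


class FullEmptyWord:
    """Software model of a memory word tagged with a full/empty bit."""

    def __init__(self):
        self._cv = threading.Condition()
        self._full = False
        self._value = None

    def write_ef(self, value):
        with self._cv:
            while self._full:            # wait until the word is empty
                self._cv.wait()
            self._value = value
            self._full = True
            self._cv.notify_all()

    def read_fe(self):
        with self._cv:
            while not self._full:        # wait until the word is full
                self._cv.wait()
            self._full = False
            self._cv.notify_all()
            return self._value


# Producer/consumer handoff through a single word: no separate lock or flag.
cell = FullEmptyWord()
consumed = []
consumer = threading.Thread(target=lambda: consumed.extend(cell.read_fe() for _ in range(5)))
consumer.start()
for i in range(5):
    cell.write_ef(i)
consumer.join()
print(consumed)  # [0, 1, 2, 3, 4]
```

Attaching the synchronization state to the data word itself is what makes fine-grained producer/consumer handoffs cheap: the data and the "ready" signal travel together.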

CM-5: Scalable Massively Parallel Supercomputer for the 1990s

- 10^12 floating-point operations per second (teraflops)
- 64,000 powerful RISC microprocessors working together
- Scalable: performance grows transparently
- Universal: supports a vast variety of application domains
- Highly reliable: sustained performance for large jobs requiring weeks or months to run
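Dividing the teraflops target by the 64,000 processors gives the rate each processor would have to sustain, assuming perfectly balanced work:

$$
\frac{10^{12}\ \text{FLOP/s}}{64{,}000\ \text{processors}} = 1.5625 \times 10^{7}\ \text{FLOP/s} \approx 15.6\ \text{MFLOPS per processor}
$$

Communication and load imbalance are ignored, so in practice each processor would need a somewhat higher peak rate to reach the aggregate target.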

Future Trend of MIMD Computers

- Program execution models: beyond the SPMD model
- Hybrid architecture: provide both shared-memory and message-passing
- Efficient mechanisms for latency AND bandwidth management (the "memory wall" problem)

Shared Memory Architecture Examples (2000 to now)

- Sun's Wildfire Architecture (Hennessy & Patterson, section 6.11, page 622)
- Intel Xeon Multithreaded Architecture
- SGI Onyx 3000
- IBM p690
- Others

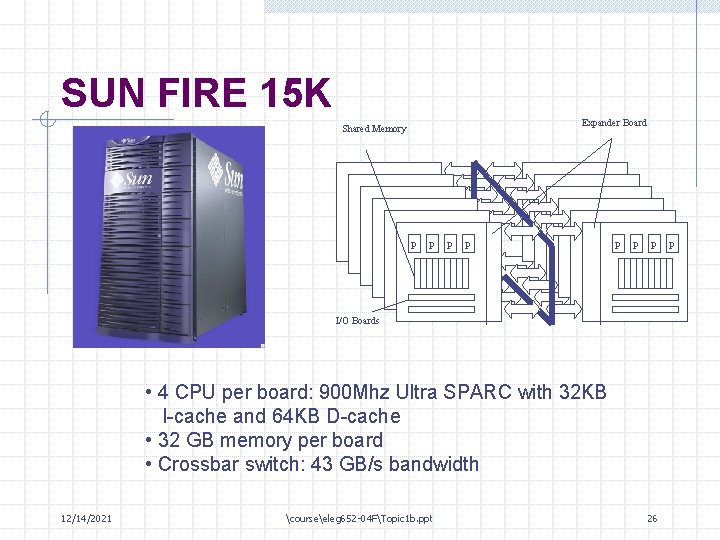

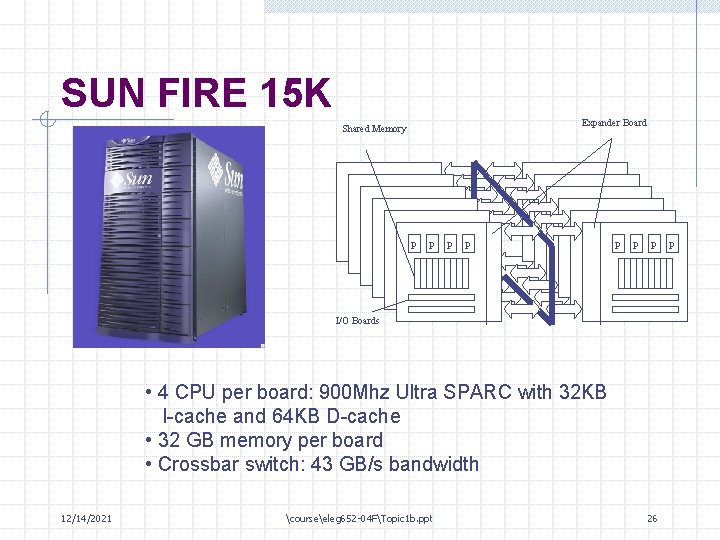

Sun Fire 15K

(Figure: expander board connecting four processors (p), shared memory, and I/O boards.)

- 4 CPUs per board: 900 MHz UltraSPARC with 32 KB I-cache and 64 KB D-cache
- 32 GB memory per board
- Crossbar switch: 43 GB/s bandwidth

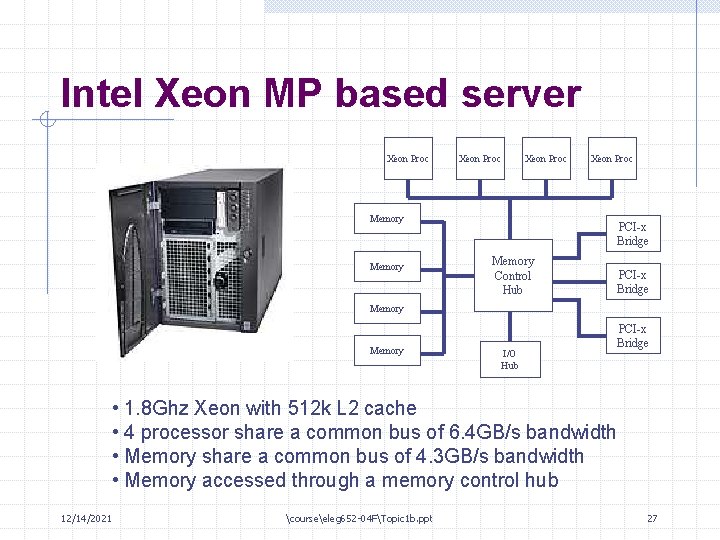

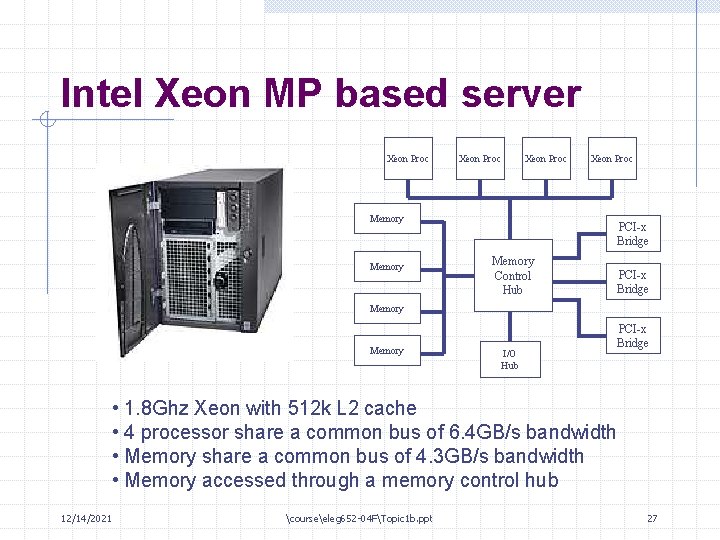

Intel Xeon MP-Based Server

(Figure: Xeon processors on a shared processor bus; a memory control hub connects them to memory and to an I/O hub with PCI-X bridges.)

- 1.8 GHz Xeon with 512 KB L2 cache
- 4 processors share a common bus with 6.4 GB/s bandwidth
- Memory shares a common bus with 4.3 GB/s bandwidth
- Memory is accessed through a memory control hub
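Splitting the two shared buses evenly among the four processors gives the per-processor share when all of them are generating traffic at once, assuming perfectly fair arbitration:

$$
\frac{6.4\ \text{GB/s}}{4} = 1.6\ \text{GB/s per processor (processor bus)}, \qquad \frac{4.3\ \text{GB/s}}{4} \approx 1.08\ \text{GB/s per processor (memory bus)}
$$

For traffic that misses in the caches and goes to memory, the memory bus is the tighter limit.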

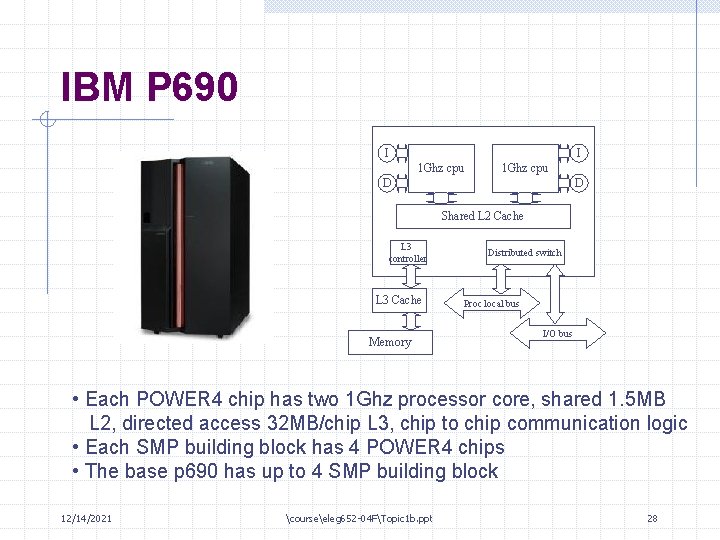

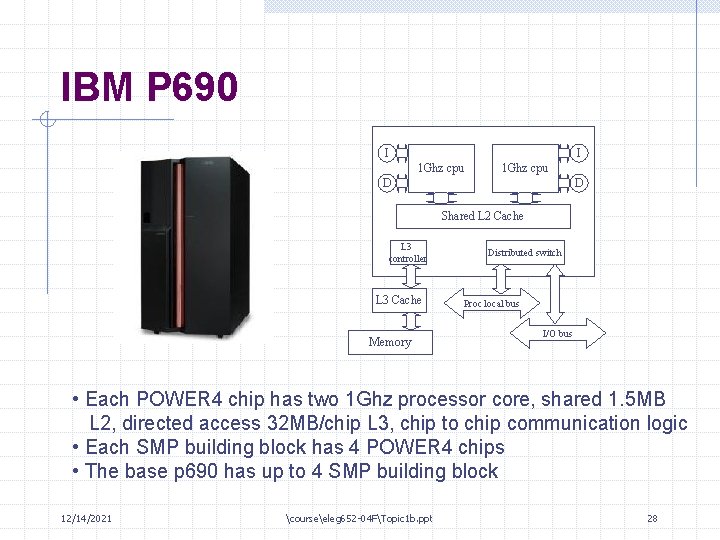

IBM p690

(Figure: POWER4 chip with two 1 GHz CPUs, each with I- and D-caches, a shared L2 cache, an L3 controller with L3 cache and memory, a distributed switch, a processor local bus, and an I/O bus.)

- Each POWER4 chip has two 1 GHz processor cores, a shared 1.5 MB L2, a directly accessed 32 MB L3 per chip, and chip-to-chip communication logic
- Each SMP building block has 4 POWER4 chips
- The base p690 has up to 4 SMP building blocks
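Multiplying out the building-block hierarchy gives the size of a maximal base p690:

$$
2\ \frac{\text{cores}}{\text{chip}} \times 4\ \frac{\text{chips}}{\text{building block}} \times 4\ \text{building blocks} = 32\ \text{processor cores}
$$

$$
16\ \text{chips} \times 32\ \frac{\text{MB L3}}{\text{chip}} = 512\ \text{MB of L3 cache in total}
$$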

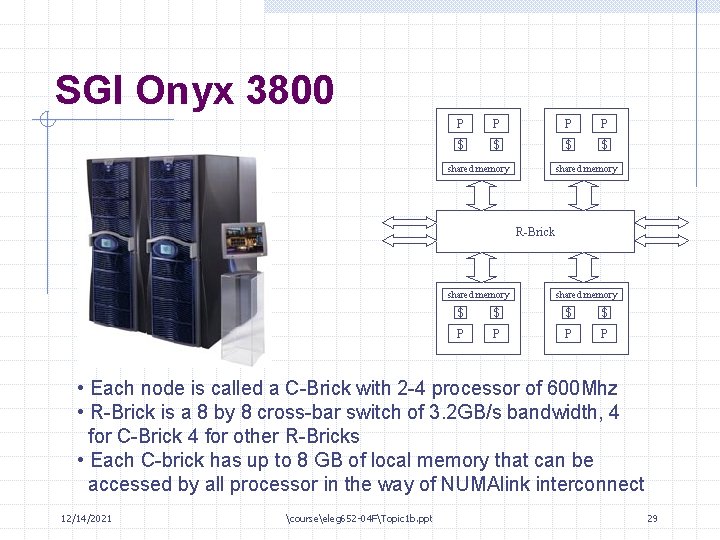

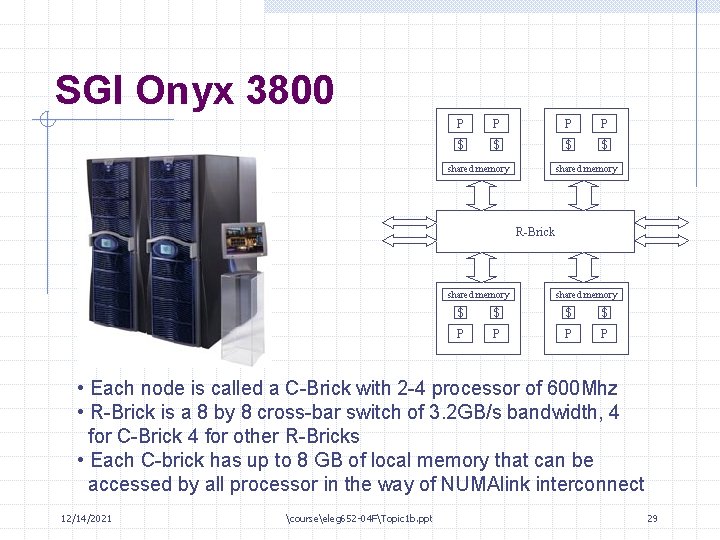

SGI Onyx 3800

(Figure: two C-bricks, each with processors (P), caches ($), and shared memory, connected through an R-brick.)

- Each node, called a C-brick, has 2 to 4 processors running at 600 MHz
- An R-brick is an 8x8 crossbar switch with 3.2 GB/s bandwidth: 4 ports for C-bricks and 4 for other R-bricks
- Each C-brick has up to 8 GB of local memory, which all processors can access through the NUMAlink interconnect
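From the port split of one R-brick, the processors and memory it can connect directly follow, assuming all four C-brick ports are populated:

$$
4\ \text{C-bricks} \times (2 \text{ to } 4)\ \frac{\text{processors}}{\text{C-brick}} = 8 \text{ to } 16\ \text{processors per R-brick}
$$

$$
4\ \text{C-bricks} \times 8\ \text{GB} = 32\ \text{GB of local memory per R-brick (maximum)}
$$

Larger systems come from linking R-bricks through their remaining 4 ports, so memory on other R-bricks is reached over more crossbar hops, which is what makes the machine NUMA.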

Recent High-End MIMD Parallel Architecture Projects

- ASCI Projects (USA)
  - ASCI Blue
  - ASCI Red
  - ASCI Blue Mountain
- HTMT Project (USA)
- The Earth Simulator (Japan)
- HPCS architectures (USA)