An Introduction to the V3VEE Project

- Slides: 55

An Introduction to the V3VEE Project and the Palacios Virtual Machine Monitor
Peter A. Dinda
Department of Electrical Engineering and Computer Science
Northwestern University
http://v3vee.org

Overview
• We are building a new, public, open-source VMM for modern architectures
  – You can download it now!
• We are leveraging it for research in HPC, systems, architecture, and teaching
• You can join us

Outline
• V3VEE Project
• Why a new VMM?
• Palacios VMM
• Research leveraging Palacios
  – Virtualization for HPC
  – Virtual Passthrough I/O
  – Adaptation for Multicore and HPC
  – Overlay Networking for HPC
  – Alternative Paging
• Participation

V3VEE Overview
• Goal: Create a new, public, open-source virtual machine monitor (VMM) framework for modern x86/x64 architectures (those with hardware virtualization support) that permits the compile-time/run-time composition of VMMs with structures optimized for…
  – High performance computing research and use
  – Computer architecture research
  – Experimental computer systems research
  – Teaching
• Community resource development project (NSF CRI)
• X-Stack exascale systems software research (DOE)

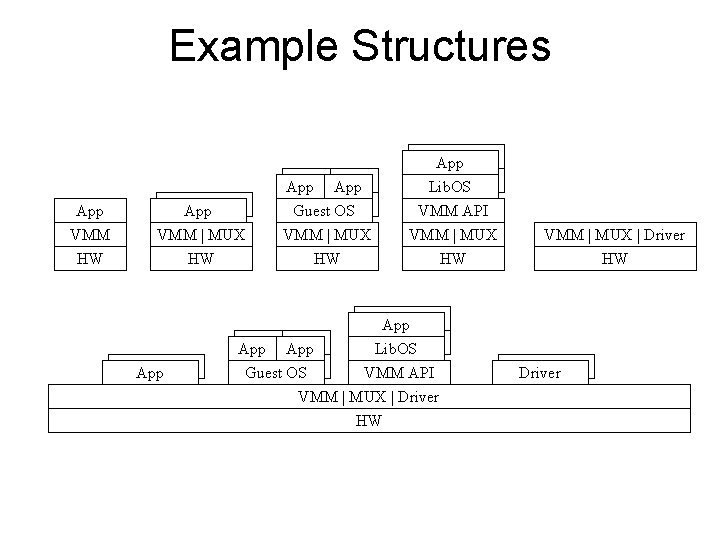

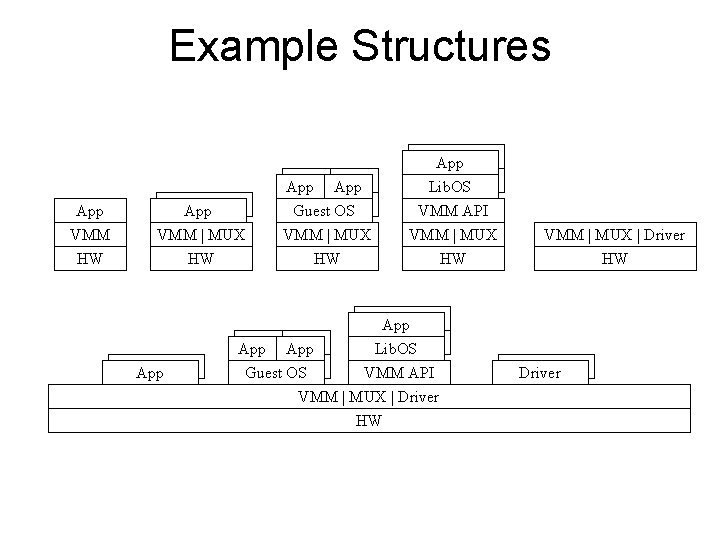

Example Structures
[figure: example VMM structures composed from applications (App), guest OSes, library OSes (Lib. OS), a VMM with optional multiplexing (MUX), a VMM API, and drivers, layered over hardware (HW)]

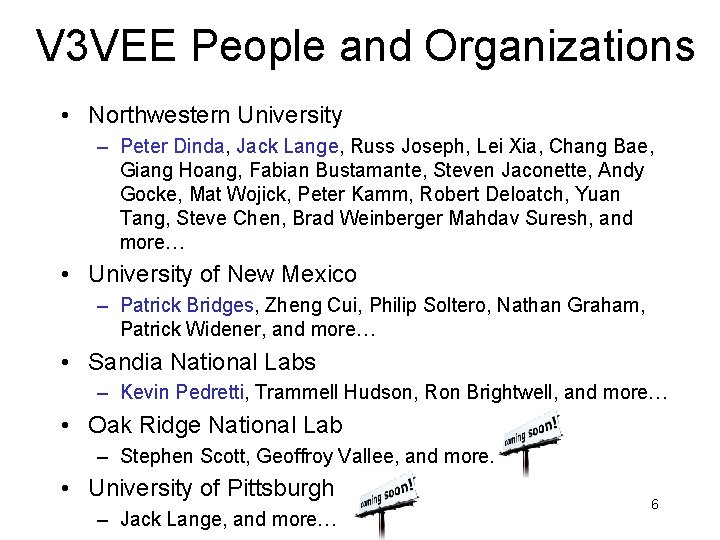

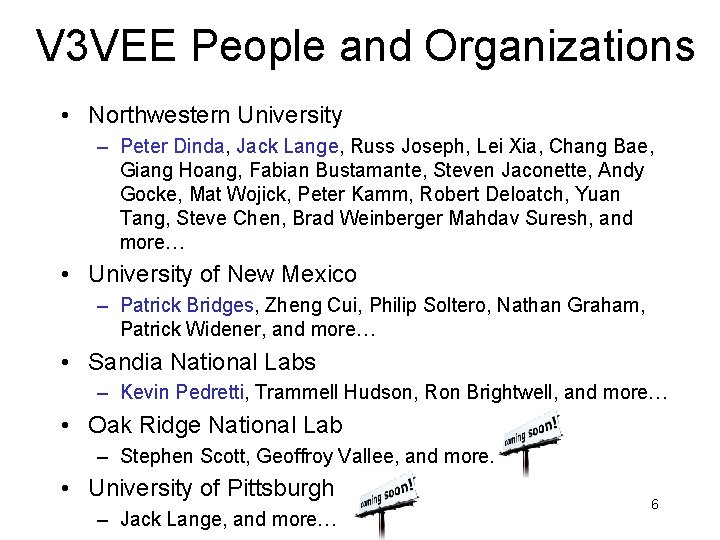

V3VEE People and Organizations
• Northwestern University
  – Peter Dinda, Jack Lange, Russ Joseph, Lei Xia, Chang Bae, Giang Hoang, Fabian Bustamante, Steven Jaconette, Andy Gocke, Mat Wojick, Peter Kamm, Robert Deloatch, Yuan Tang, Steve Chen, Brad Weinberger, Madhav Suresh, and more…
• University of New Mexico
  – Patrick Bridges, Zheng Cui, Philip Soltero, Nathan Graham, Patrick Widener, and more…
• Sandia National Labs
  – Kevin Pedretti, Trammell Hudson, Ron Brightwell, and more…
• Oak Ridge National Lab
  – Stephen Scott, Geoffroy Vallee, and more…
• University of Pittsburgh
  – Jack Lange, and more…

Support (2006-2013)

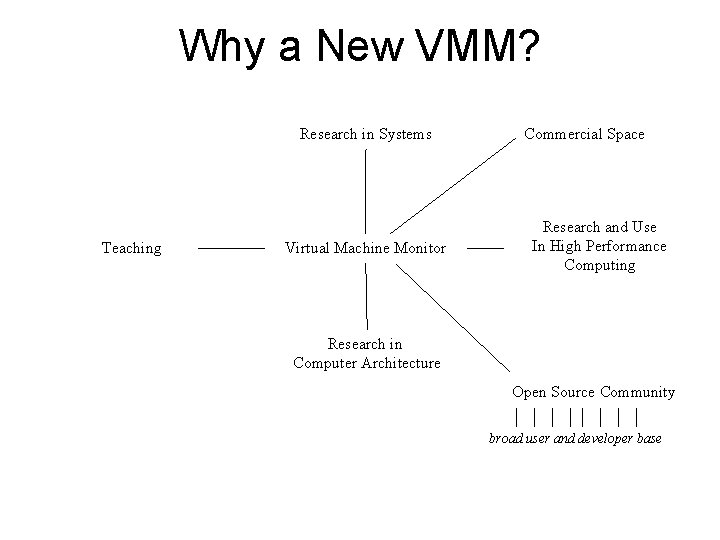

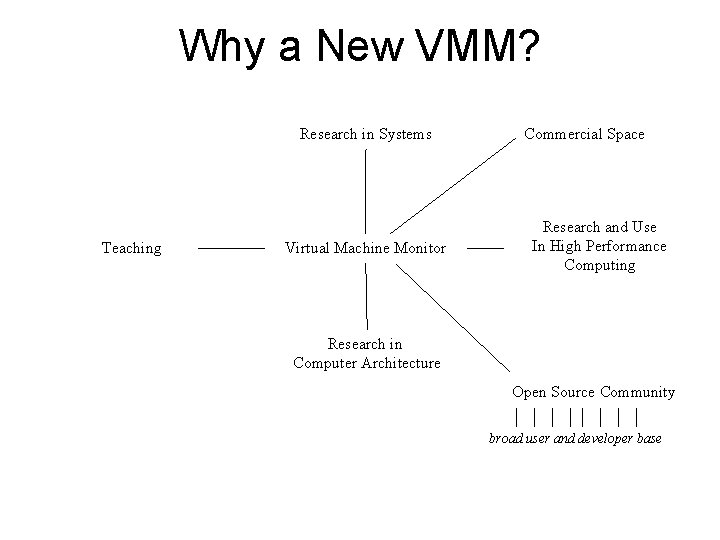

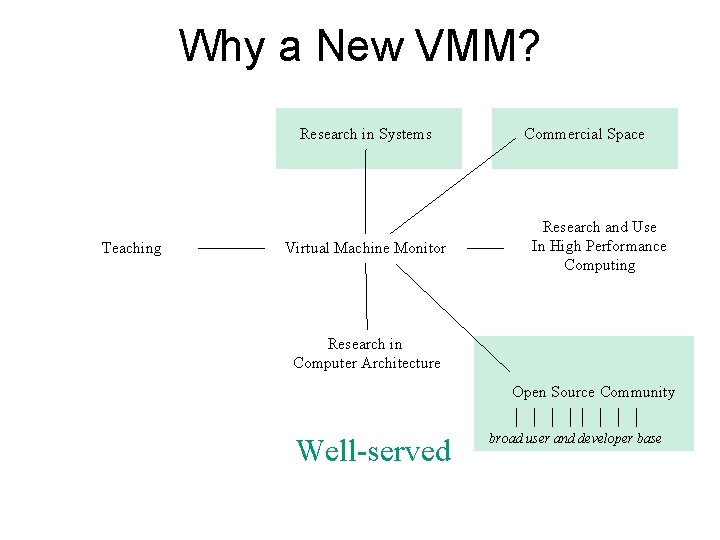

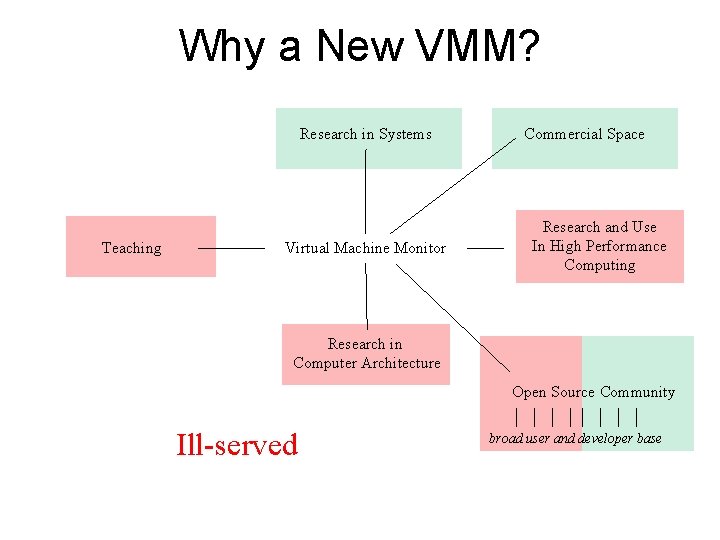

Why a New VMM?
[figure: a virtual machine monitor at the center, surrounded by the communities it could serve: research in systems, teaching, research in computer architecture, research and use in high performance computing, the commercial space, and an open source community with a broad user and developer base]

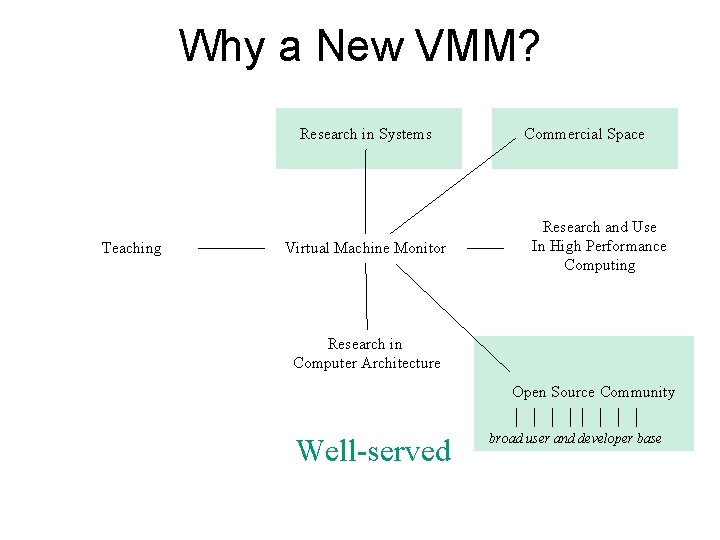

Why a New VMM? (continued)
[figure: the same diagram, adding the label “Well-served”]

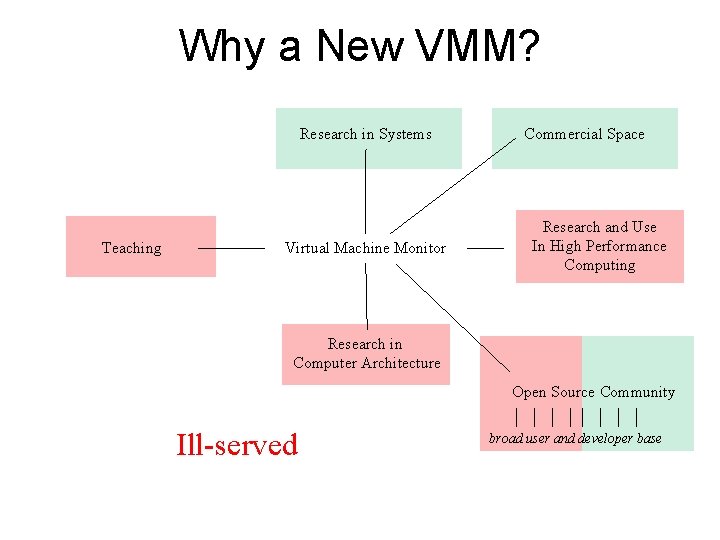

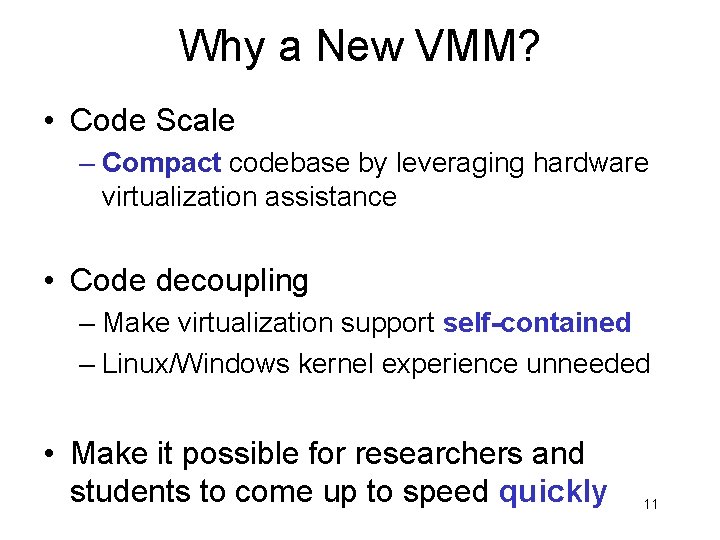

Why a New VMM? (continued)
[figure: the same diagram, adding the label “Ill-served”]

Why a New VMM?
• Code scale
  – Compact codebase by leveraging hardware virtualization assistance
• Code decoupling
  – Make virtualization support self-contained
  – Linux/Windows kernel experience unneeded
• Make it possible for researchers and students to come up to speed quickly

Why a New VMM?
• Freedom
  – BSD license for flexibility
• Raw performance at large scales

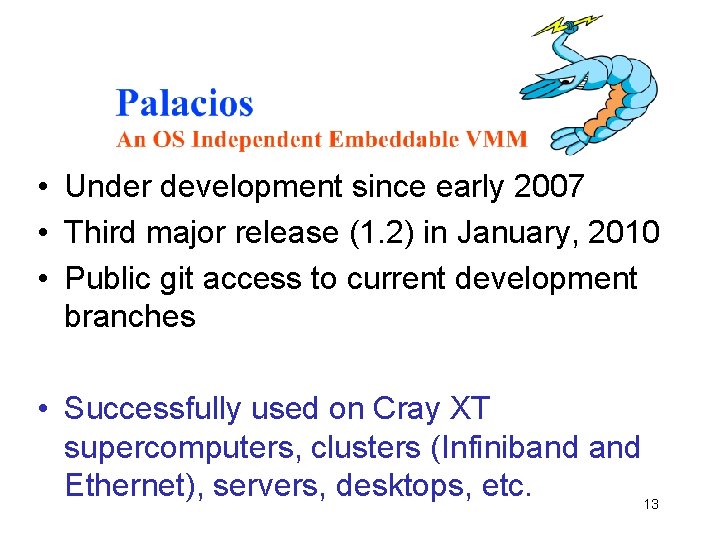

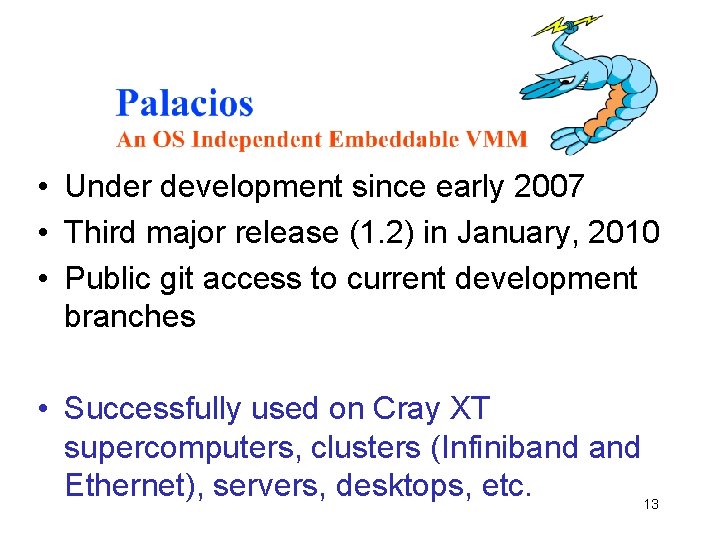

• Under development since early 2007
• Third major release (1.2) in January 2010
• Public git access to current development branches
• Successfully used on Cray XT supercomputers, clusters (InfiniBand, Ethernet), servers, desktops, etc.

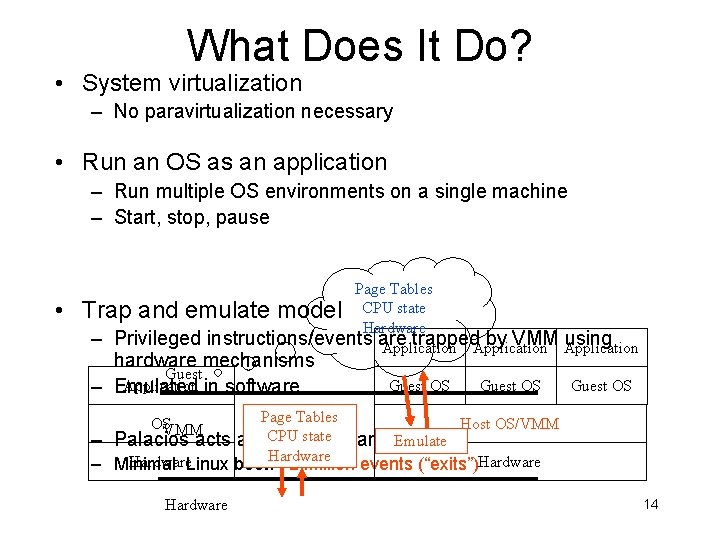

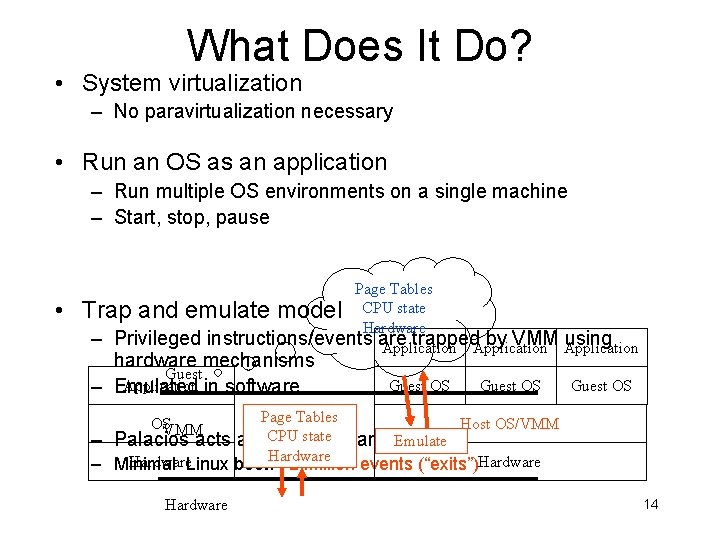

What Does It Do?
• System virtualization
  – No paravirtualization necessary
• Run an OS as an application
  – Run multiple OS environments on a single machine
  – Start, stop, pause
• Trap and emulate model
  – Privileged instructions/events are trapped by the VMM using hardware mechanisms
  – Emulated in software
  – Palacios acts as an event handler loop
• Linux boot: ~2 million events (“exits”)
[figure: applications and a guest OS, with the guest’s page tables and CPU state, running on the VMM (Palacios) above the hardware]
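The trap-and-emulate model can be pictured as a loop: enter the guest, wait for the hardware to hand back control on a privileged event (an “exit”), emulate it, and re-enter. The C fragment below is a minimal sketch of that loop under stated assumptions: the exit reasons, `struct guest_state`, `enter_guest`, and `run_guest` are hypothetical names, not Palacios code, and the real hardware entry (VMRUN on AMD SVM, VMLAUNCH/VMRESUME on Intel VT-x) is replaced by a scripted list of exits so the loop can run in user space.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical exit reasons; real SVM and VT-x exit codes differ. */
enum exit_reason { EXIT_IO, EXIT_CPUID, EXIT_HLT, EXIT_SHUTDOWN };

/* Hypothetical per-guest state; counters stand in for real emulation work. */
struct guest_state {
    uint64_t rip;         /* guest instruction pointer */
    uint64_t io_exits;
    uint64_t cpuid_exits;
    uint64_t hlt_exits;
};

/* Stand-in for VMRUN/VMRESUME: "runs" the guest until the next scripted exit. */
static enum exit_reason enter_guest(const enum exit_reason *script, size_t *pos)
{
    return script[(*pos)++];
}

/* Event handler loop: enter guest, dispatch on the exit reason, emulate, repeat.
   Returns the number of exits handled before the guest shut down. */
size_t run_guest(struct guest_state *g, const enum exit_reason *script)
{
    size_t pos = 0, handled = 0;
    for (;;) {
        enum exit_reason why = enter_guest(script, &pos);
        if (why == EXIT_SHUTDOWN)
            break;
        switch (why) {
        case EXIT_IO:    g->io_exits++;    break; /* would emulate the port access */
        case EXIT_CPUID: g->cpuid_exits++; break; /* would return virtual CPUID values */
        case EXIT_HLT:   g->hlt_exits++;   break; /* would yield the core to the host */
        default:         assert(0 && "unexpected exit reason");
        }
        g->rip += 1; /* placeholder: real code advances by the instruction's length */
        handled++;
    }
    return handled;
}
```

For example, a script of `{ EXIT_IO, EXIT_CPUID, EXIT_IO, EXIT_HLT, EXIT_SHUTDOWN }` makes `run_guest` handle four exits before stopping. A Linux boot generates on the order of two million such exits, which is why the cost of each trip through this loop matters.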

Leveraging Modern Hardware
• Requires and makes extensive use of modern x86/x64 virtualization support
  – AMD SVM (AMD-V)
  – Intel VT-x
• Nested paging support in addition to several varieties of shadow paging
• Straightforward passthrough PCI
• IOMMU/PCI-SIG support in progress

Design Choices
• Minimal interface
  – Suitable for use with a lightweight kernel (LWK)
• Compile- and run-time configurability
  – Create a VMM tailored to specific environments
• Low noise
  – No deferred work
• Contiguous memory pre-allocation
  – No surprising memory system behavior
• Passthrough resources and resource partitioning
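One consequence of contiguous memory pre-allocation is that the VMM’s guest-physical to host-physical mapping can be a bounds check plus an offset, with no demand paging to cause surprises. A minimal sketch, assuming guest memory is backed by one contiguous host region; `struct guest_mem` and `gpa_to_hpa` are hypothetical names:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical descriptor: guest physical memory [0, size) is backed by
   one contiguous host physical region starting at host_base. */
struct guest_mem {
    uint64_t host_base; /* host physical address of guest physical address 0 */
    uint64_t size;      /* bytes of guest physical memory */
};

/* Translate a guest physical address; returns false if it is out of range.
   No page faults, no lazy allocation: the mapping never changes at run time. */
bool gpa_to_hpa(const struct guest_mem *m, uint64_t gpa, uint64_t *hpa)
{
    assert(m != NULL && hpa != NULL);
    if (gpa >= m->size)
        return false;
    *hpa = m->host_base + gpa;
    return true;
}
```

The design choice trades flexibility (no overcommit, no ballooning) for predictability, which is the point of the “low noise” goal in HPC settings.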

Embeddability
• Palacios compiles to a static library
• A clean, well-defined host OS interface allows Palacios to be embedded in different host OSes
  – Palacios adds virtualization support to an OS
  – Palacios + lightweight kernel = traditional “type-I” (“bare metal”) VMM
• Current embeddings
  – Kitten, GeekOS, Minix 3, Linux [partial]
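Embedding works when the VMM reaches the host only through a small, explicit interface. The sketch below shows that idea as a table of function pointers the host OS fills in at initialization; `struct host_hooks`, its fields, `vmm_init`, `vmm_alloc`, and `vmm_free` are hypothetical and illustrate the pattern, not Palacios’s actual host interface.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h> /* malloc/free, usable as hooks when testing in user space */

/* Hypothetical host OS interface: the only host services the VMM library uses. */
struct host_hooks {
    void *(*alloc)(size_t bytes);
    void  (*release)(void *ptr);
    void  (*log)(const char *msg); /* optional; may be NULL */
};

static const struct host_hooks *host; /* set once by vmm_init */

/* Called by the embedding host OS (e.g. an LWK) before creating any VM. */
int vmm_init(const struct host_hooks *hooks)
{
    if (hooks == NULL || hooks->alloc == NULL || hooks->release == NULL)
        return -1;
    host = hooks;
    if (host->log != NULL)
        host->log("vmm: initialized");
    return 0;
}

/* VMM-internal code allocates only through the host's hooks. */
void *vmm_alloc(size_t bytes)
{
    assert(host != NULL);
    return host->alloc(bytes);
}

void vmm_free(void *ptr)
{
    assert(host != NULL);
    host->release(ptr);
}
```

In a user-space test, the C library’s `malloc` and `free` already have matching signatures, so `struct host_hooks h = { malloc, free, NULL };` is enough to call `vmm_init(&h)`; an LWK such as Kitten would instead pass its own allocator.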

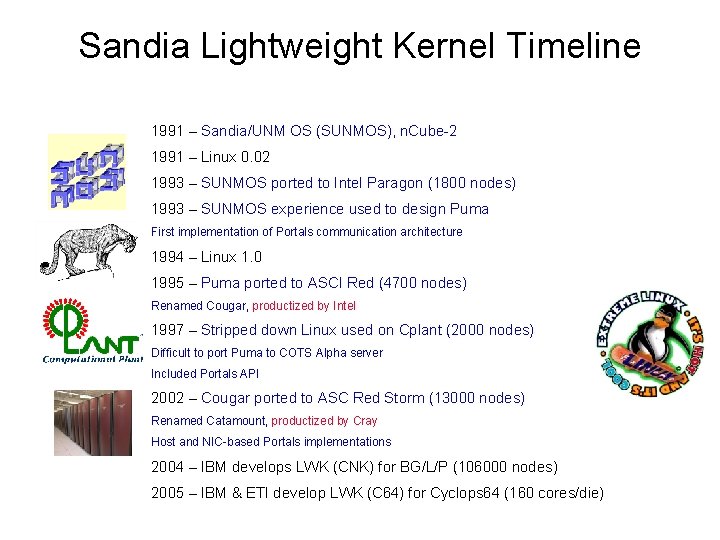

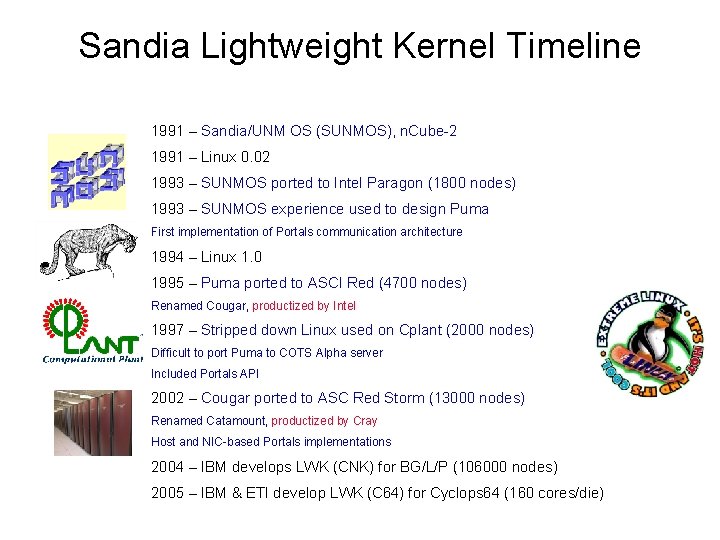

Sandia Lightweight Kernel Timeline
• 1991 – Sandia/UNM OS (SUNMOS), nCube-2
• 1991 – Linux 0.02
• 1993 – SUNMOS ported to Intel Paragon (1800 nodes)
• 1993 – SUNMOS experience used to design Puma
  – First implementation of Portals communication architecture
• 1994 – Linux 1.0
• 1995 – Puma ported to ASCI Red (4700 nodes)
  – Renamed Cougar, productized by Intel
• 1997 – Stripped-down Linux used on Cplant (2000 nodes)
  – Difficult to port Puma to COTS Alpha server
  – Included Portals API
• 2002 – Cougar ported to ASC Red Storm (13000 nodes)
  – Renamed Catamount, productized by Cray
  – Host and NIC-based Portals implementations
• 2004 – IBM develops LWK (CNK) for BG/L/P (106000 nodes)
• 2005 – IBM & ETI develop LWK (C64) for Cyclops64 (160 cores/die)

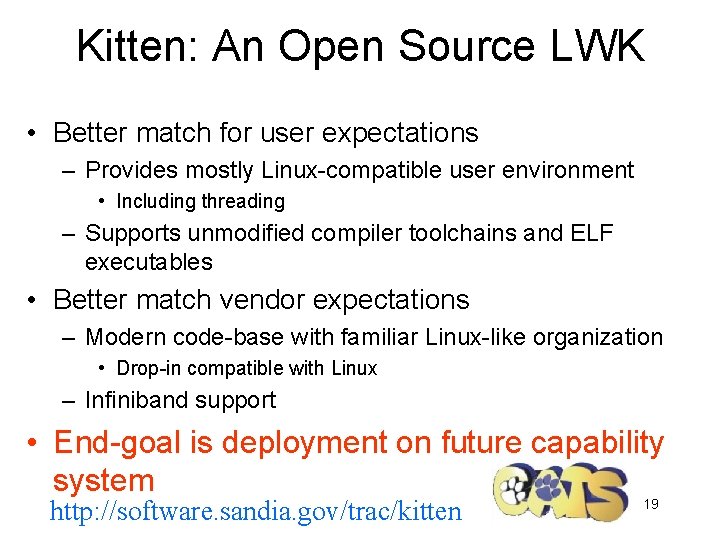

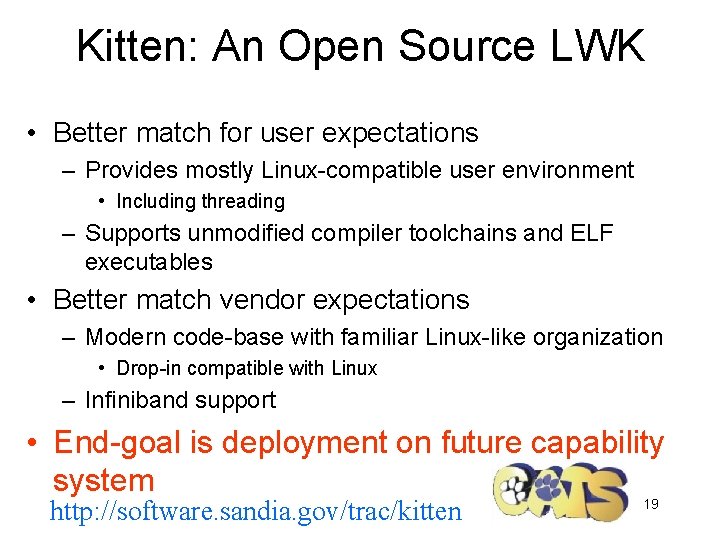

Kitten: An Open Source LWK
• Better match for user expectations
  – Provides mostly Linux-compatible user environment, including threading
  – Supports unmodified compiler toolchains and ELF executables
• Better match for vendor expectations
  – Modern code-base with familiar Linux-like organization
• Drop-in compatible with Linux
  – InfiniBand support
• End-goal is deployment on future capability system
http://software.sandia.gov/trac/kitten

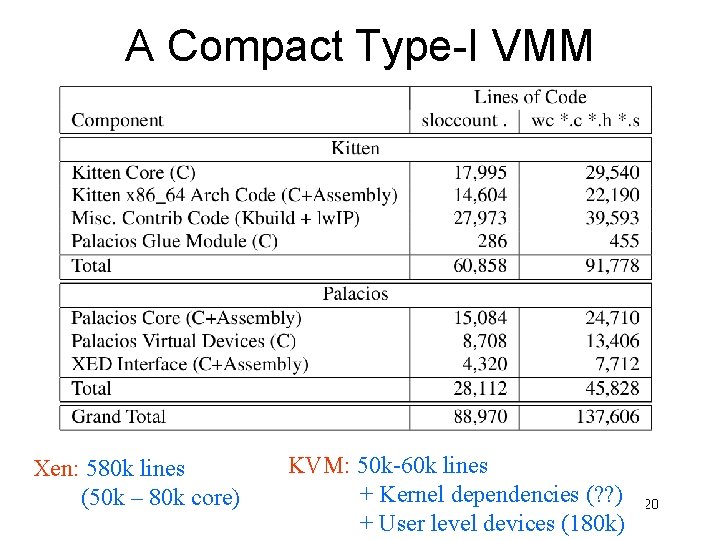

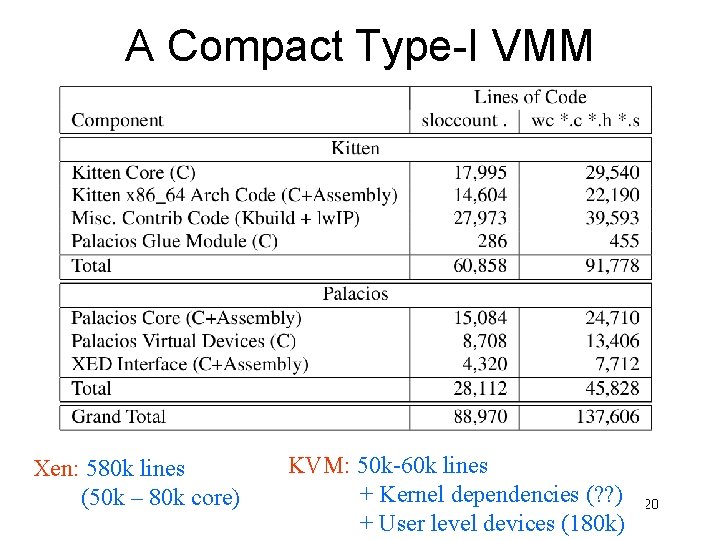

A Compact Type-I VMM
• Xen: 580k lines (50k–80k core)
• KVM: 50k–60k lines + kernel dependencies (??) + user-level devices (180k)


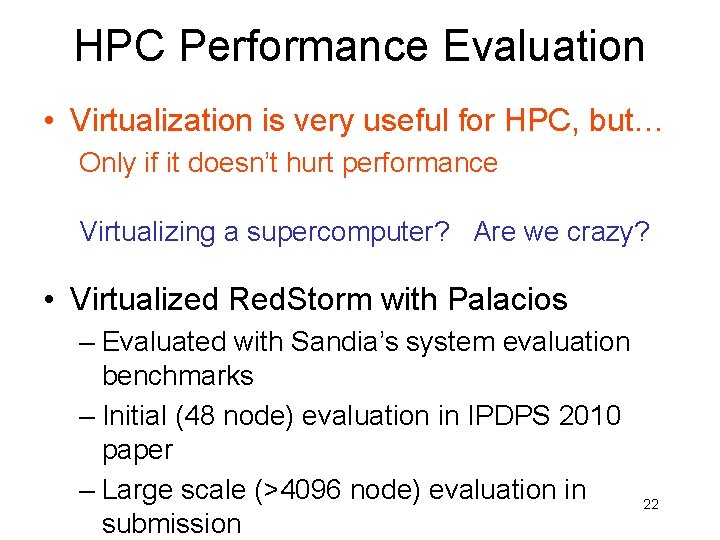

HPC Performance Evaluation
• Virtualization is very useful for HPC, but… only if it doesn’t hurt performance
  – Virtualizing a supercomputer? Are we crazy?
• Virtualized Red Storm with Palacios
  – Evaluated with Sandia’s system evaluation benchmarks
  – Initial (48 node) evaluation in IPDPS 2010 paper
  – Large scale (>4096 node) evaluation in submission

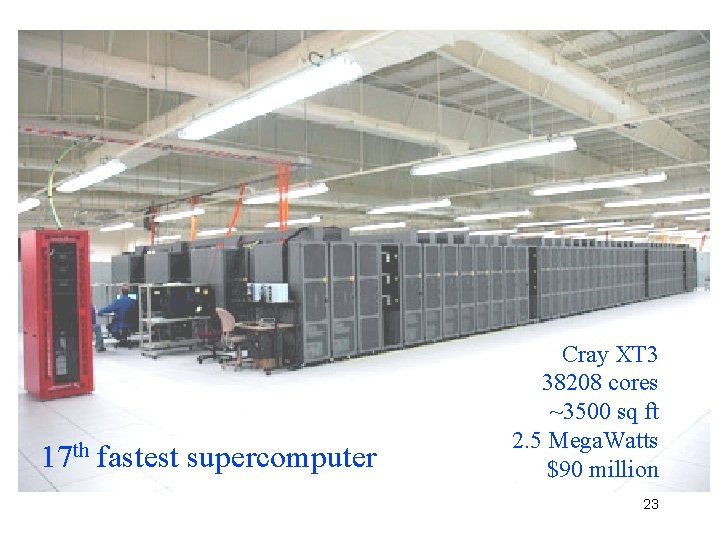

• 17th fastest supercomputer
• Cray XT3
• 38208 cores
• ~3500 sq ft
• 2.5 MegaWatts
• $90 million

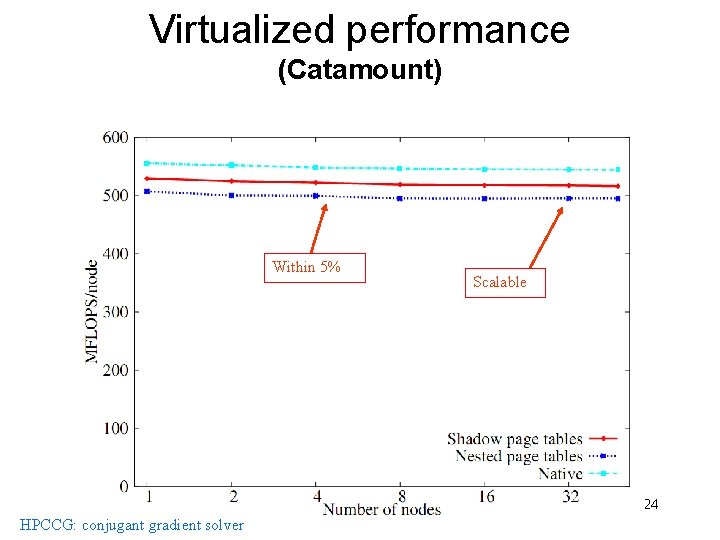

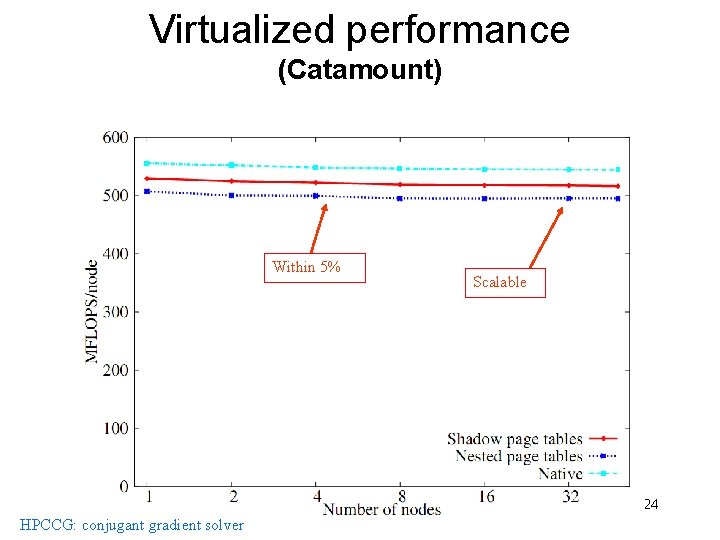

Virtualized Performance (Catamount)
• Within 5%
• Scalable
[figure: HPCCG, a conjugate gradient solver]

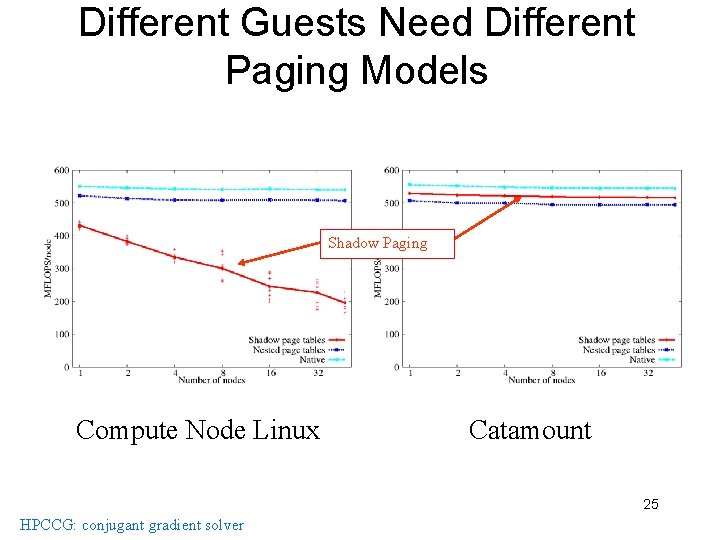

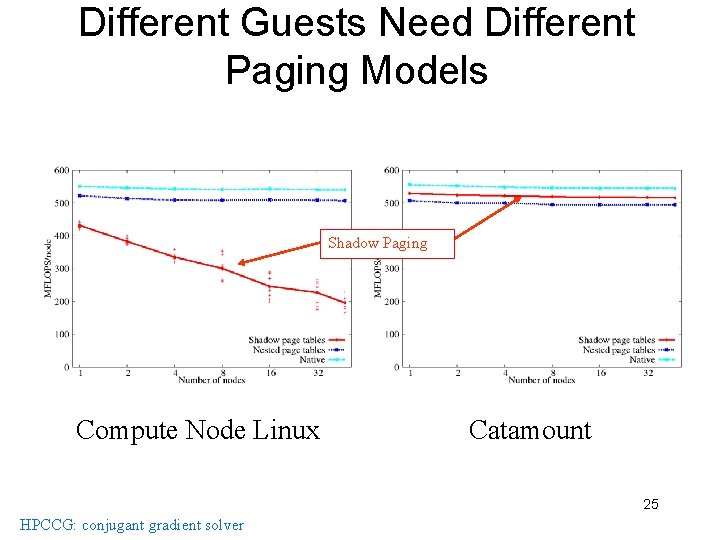

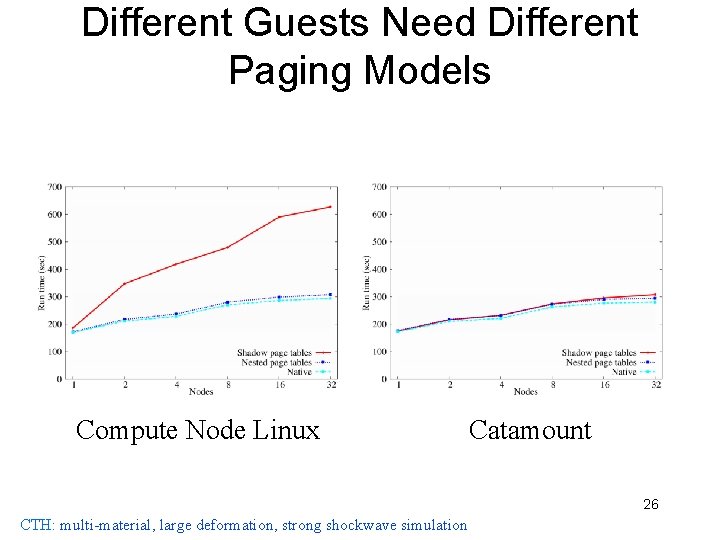

Different Guests Need Different Paging Models
[figure: HPCCG (conjugate gradient solver) with Compute Node Linux and Catamount guests; panel label: Shadow Paging]

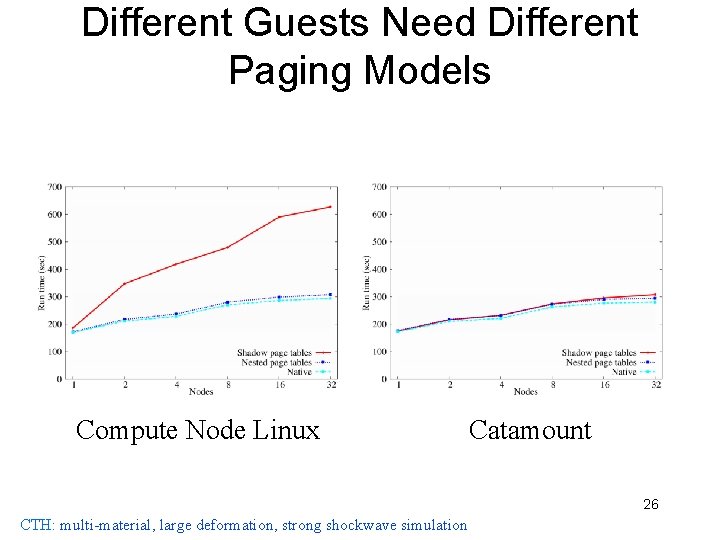

Different Guests Need Different Paging Models
[figure: CTH (multi-material, large deformation, strong shockwave simulation) with Compute Node Linux and Catamount guests]

Large Scale Study
• Evaluation on full Red Storm system
  – 12 hours of dedicated system time on full machine
  – Largest virtualization performance scaling study to date
• Measured performance at exponentially increasing scales
  – Up to 4096 nodes
• Publicity
  – New York Times
  – Slashdot
  – HPCWire
  – Communications of the ACM
  – PC World

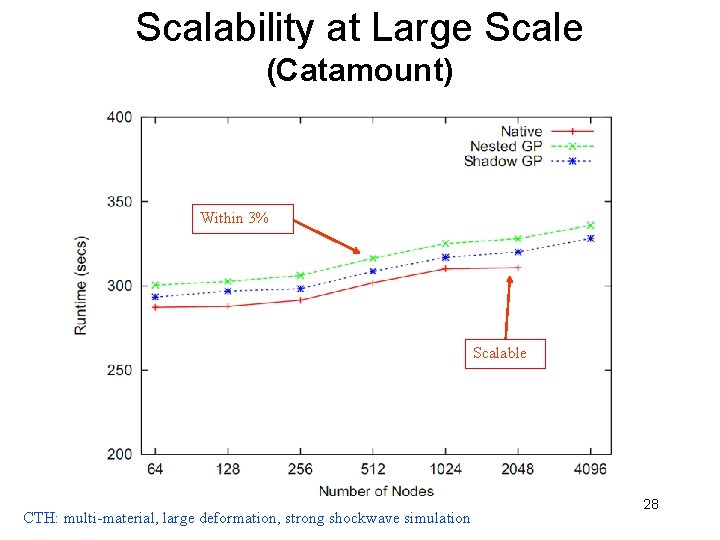

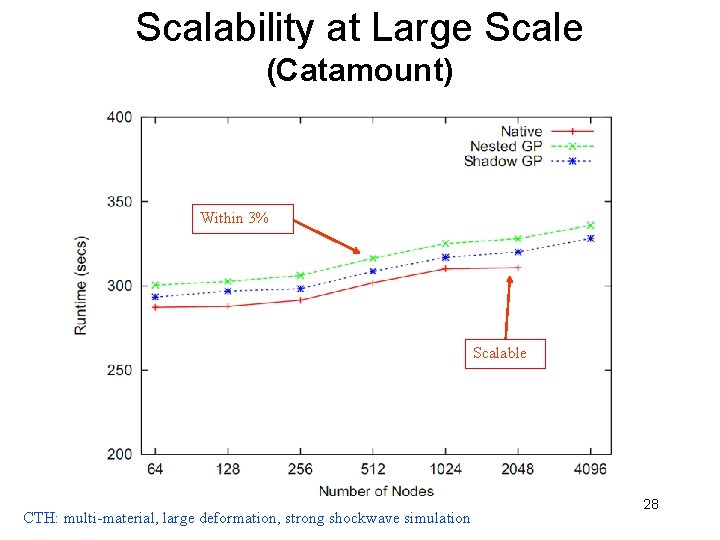

Scalability at Large Scale (Catamount)
• Within 3%
• Scalable
[figure: CTH, a multi-material, large deformation, strong shockwave simulation]

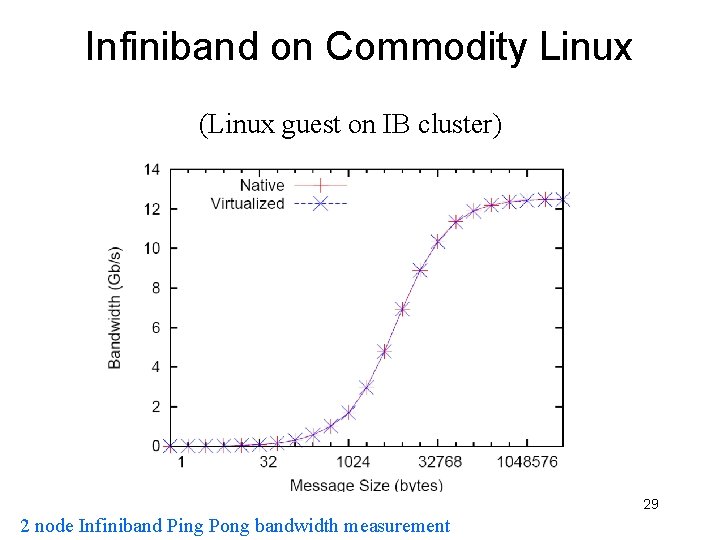

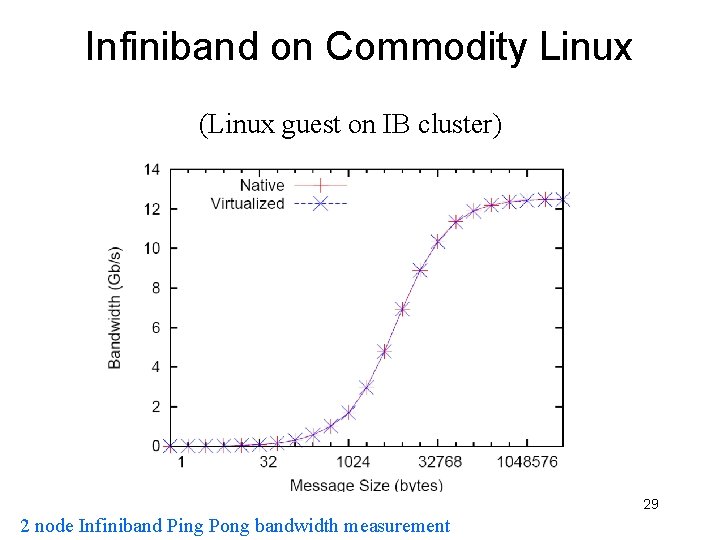

InfiniBand on Commodity Linux (Linux guest on IB cluster)
[figure: 2-node InfiniBand ping-pong bandwidth measurement]

Observations
• Virtualization can scale on the fastest machines in the world running tightly coupled parallel applications
• Best virtualization approach depends on the guest OS and the workload
  – Paging models, I/O models, scheduling models, etc.
• Useful for VMM to have asynchronous and synchronous access to guest knowledge
  – Symbiotic Virtualization (Lange’s thesis)

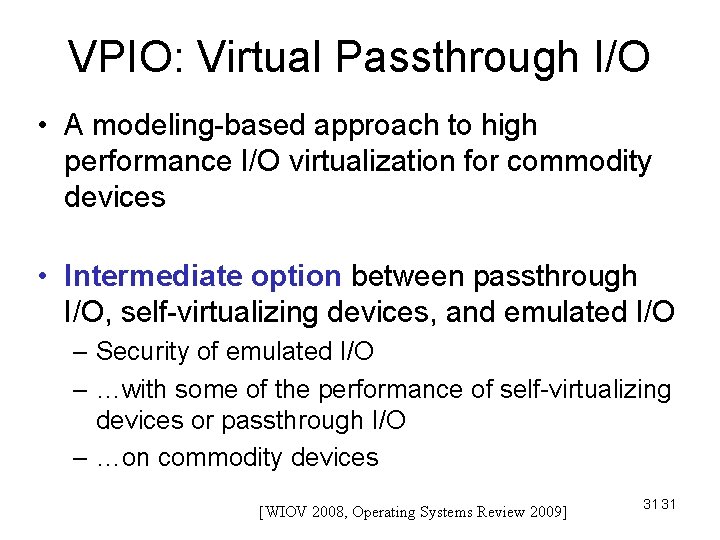

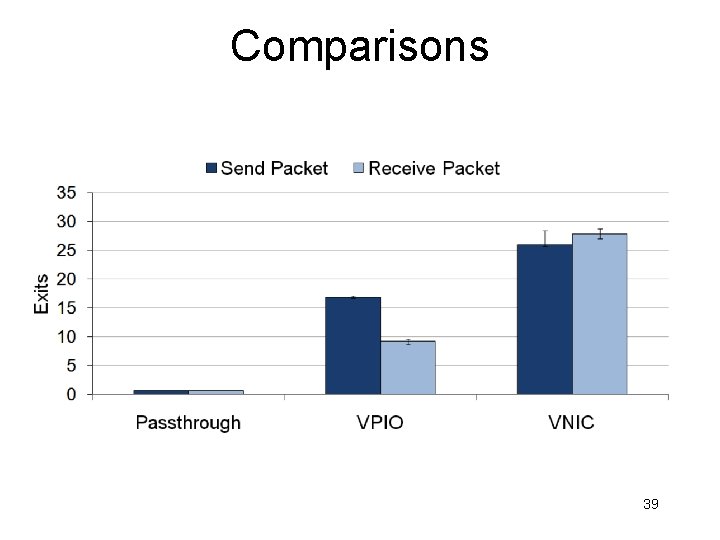

VPIO: Virtual Passthrough I/O
• A modeling-based approach to high performance I/O virtualization for commodity devices
• Intermediate option between passthrough I/O, self-virtualizing devices, and emulated I/O
  – Security of emulated I/O
  – …with some of the performance of self-virtualizing devices or passthrough I/O
  – …on commodity devices
[WIOV 2008, Operating Systems Review 2009]

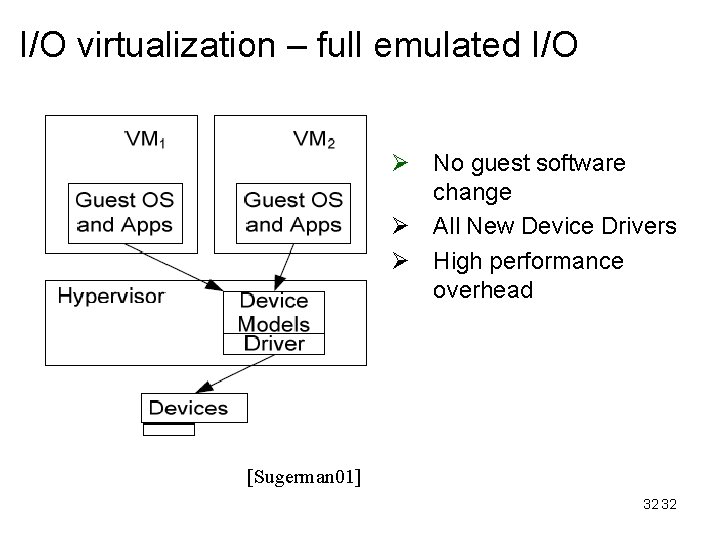

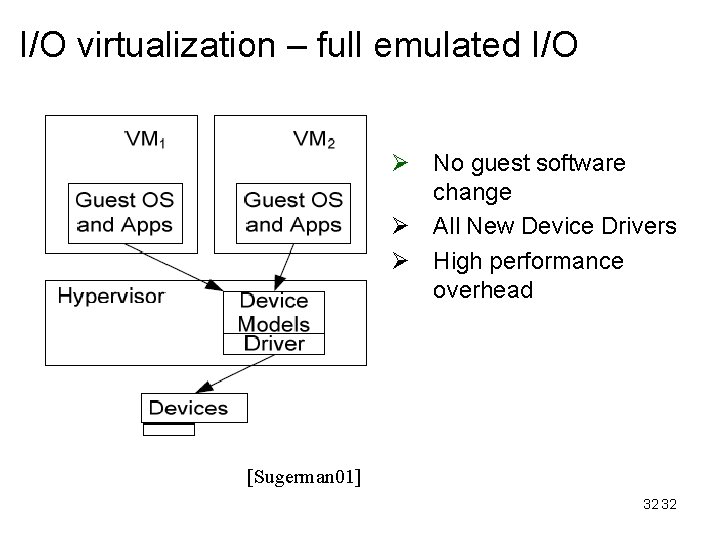

I/O virtualization – full emulated I/O
• No guest software change
• All new device drivers
• High performance overhead
[Sugerman 01]

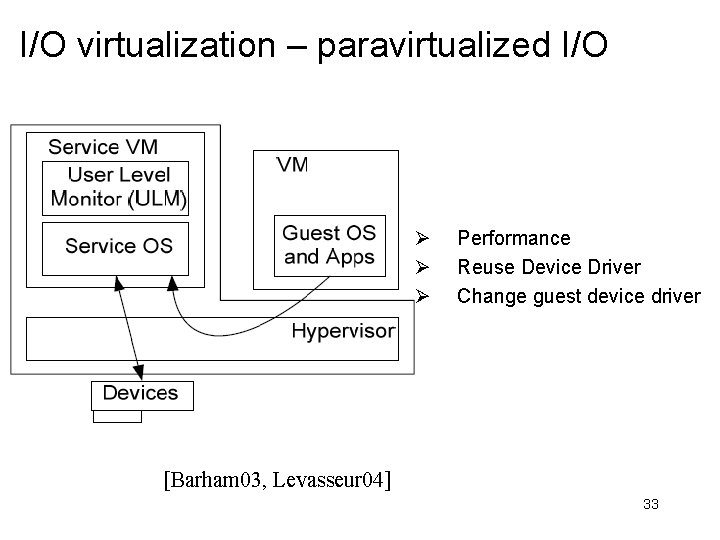

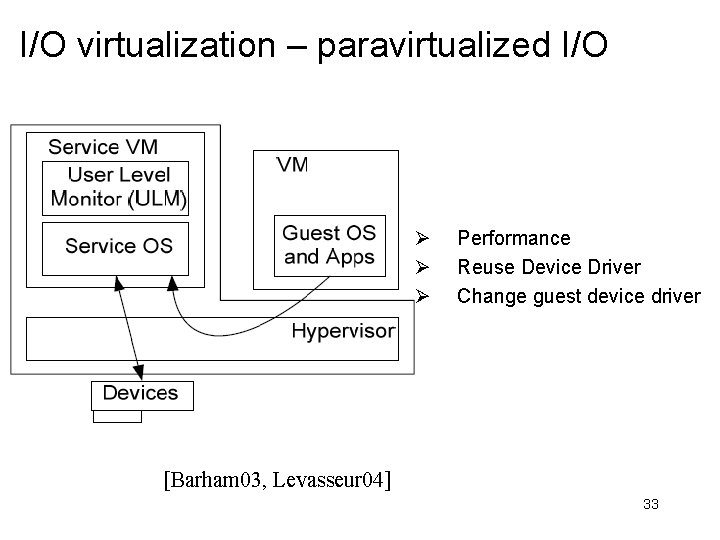

I/O virtualization – paravirtualized I/O
• Performance
• Reuse device driver
• Change guest device driver
[Barham 03, LeVasseur 04]

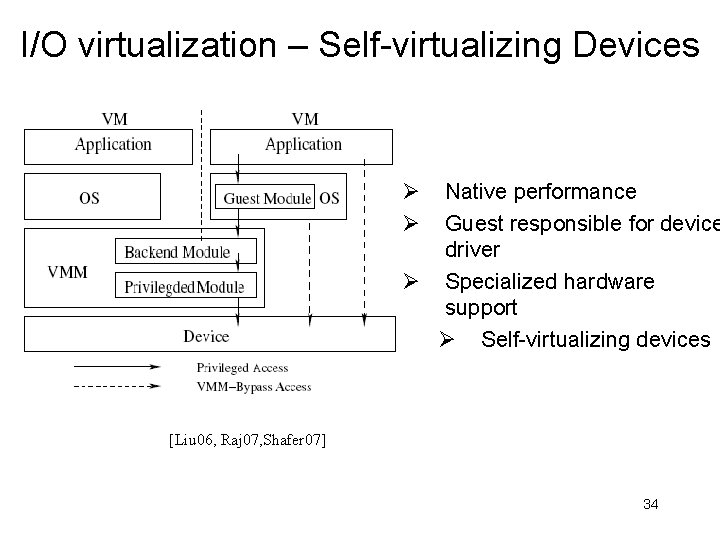

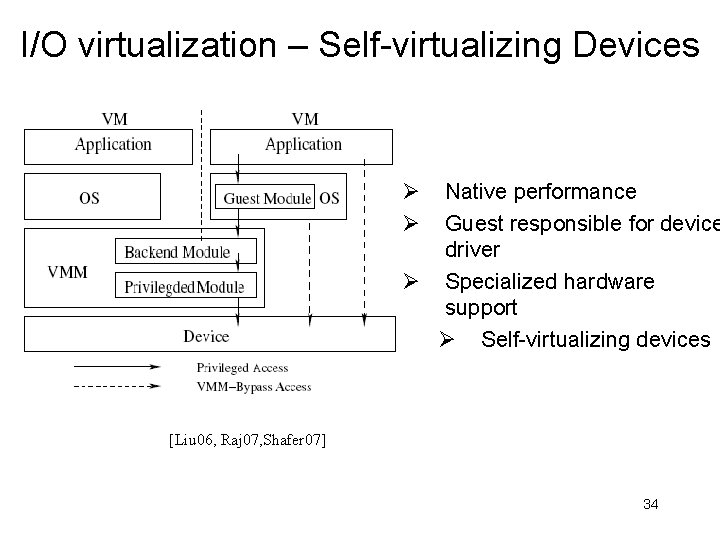

I/O virtualization – Self-virtualizing Devices
• Native performance
• Guest responsible for device driver
• Specialized hardware support
• Self-virtualizing devices [Liu 06, Raj 07, Shafer 07]

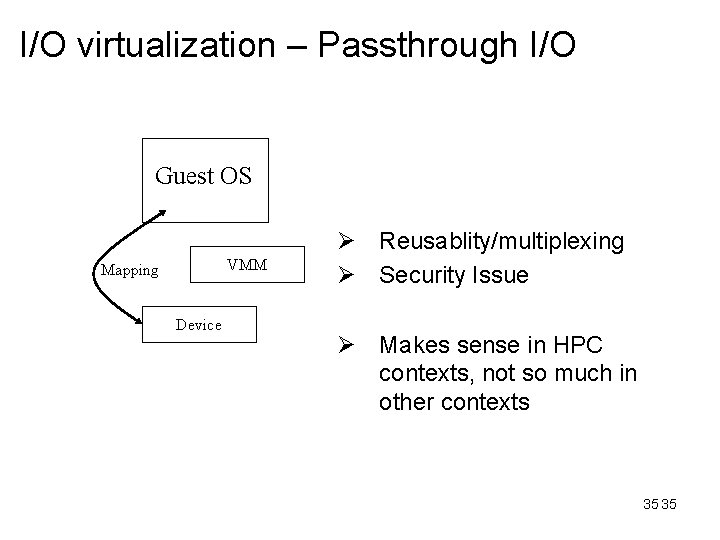

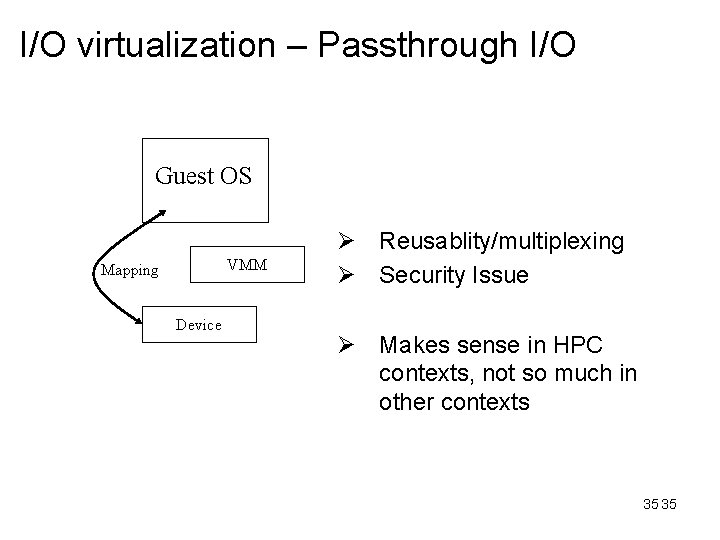

I/O virtualization – Passthrough I/O
[figure: the VMM maps the device directly into the guest OS]
• Reusability/multiplexing
• Security issues
• Makes sense in HPC contexts, not so much in other contexts

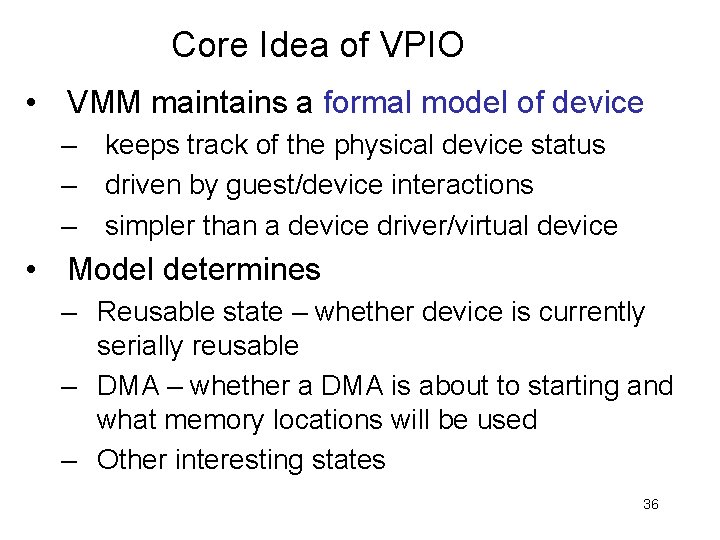

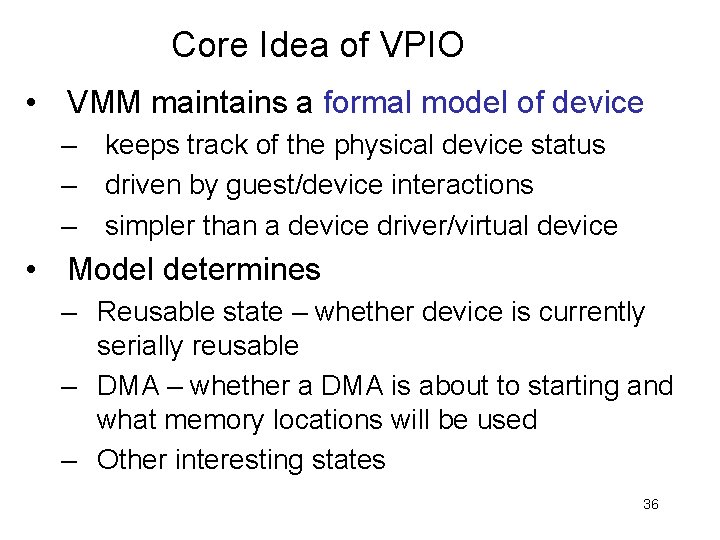

Core Idea of VPIO
• VMM maintains a formal model of the device
  – Keeps track of the physical device status
  – Driven by guest/device interactions
  – Simpler than a device driver/virtual device
• Model determines
  – Reusable state: whether the device is currently serially reusable
  – DMA: whether a DMA is about to start and what memory locations will be used
  – Other interesting states
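The device model can be thought of as a small state machine that the VMM advances on each observed guest/device interaction and then queries for the properties listed above. The sketch below is a hypothetical toy transmit path (events `EV_WRITE_TX_ADDR`, `EV_WRITE_TX_LEN`, `EV_WRITE_TX_GO`, and a completion interrupt), not the NE2K model or the DMM’s actual interface. It also shows one use of DMA prediction: checking that the announced buffer lies inside the guest’s memory before the transfer proceeds.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical toy NIC model states. */
enum dev_state { DEV_IDLE, DEV_TX_SETUP, DEV_TX_DMA, DEV_TX_BUSY };

/* Hypothetical interactions observed through hooked I/O and interrupts. */
enum dev_event { EV_WRITE_TX_ADDR, EV_WRITE_TX_LEN, EV_WRITE_TX_GO, EV_IRQ_TX_DONE };

struct dev_model {
    enum dev_state state;
    uint64_t dma_addr; /* guest physical buffer the device will read */
    uint32_t dma_len;
};

/* Advance the model on one observed guest/device interaction. */
void model_step(struct dev_model *m, enum dev_event ev, uint64_t value)
{
    switch (ev) {
    case EV_WRITE_TX_ADDR:
        m->dma_addr = value;
        m->state = DEV_TX_SETUP;
        break;
    case EV_WRITE_TX_LEN:
        m->dma_len = (uint32_t)value;
        if (m->state == DEV_TX_SETUP)
            m->state = DEV_TX_DMA; /* address and length known: DMA is imminent */
        break;
    case EV_WRITE_TX_GO:
        if (m->state == DEV_TX_DMA)
            m->state = DEV_TX_BUSY;
        break;
    case EV_IRQ_TX_DONE:
        m->state = DEV_IDLE; /* completion interrupt: nothing in flight */
        break;
    }
}

/* Serially reusable: no operation in flight, so the device could be handed off. */
bool model_reusable(const struct dev_model *m) { return m->state == DEV_IDLE; }

/* DMA prediction: set up but not yet started. */
bool model_dma_imminent(const struct dev_model *m) { return m->state == DEV_TX_DMA; }

/* Security check before the DMA proceeds: buffer must lie in guest memory.
   Written to avoid overflow in dma_addr + dma_len. */
bool model_dma_in_bounds(const struct dev_model *m, uint64_t guest_mem_size)
{
    return m->dma_len <= guest_mem_size && m->dma_addr <= guest_mem_size - m->dma_len;
}
```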

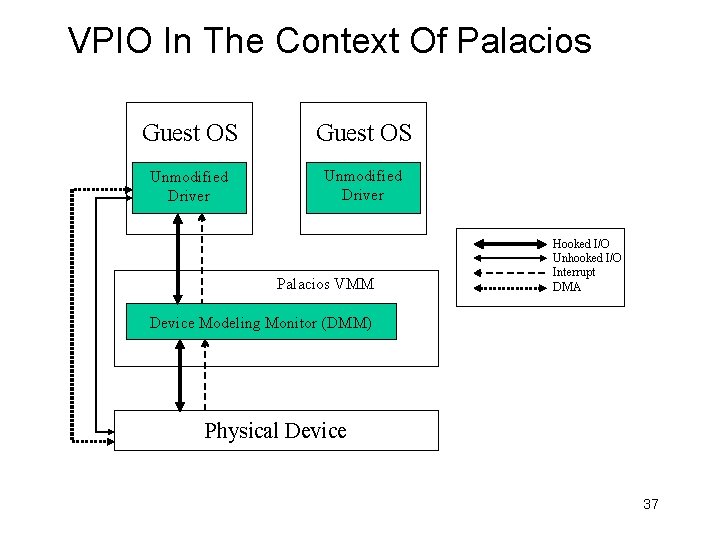

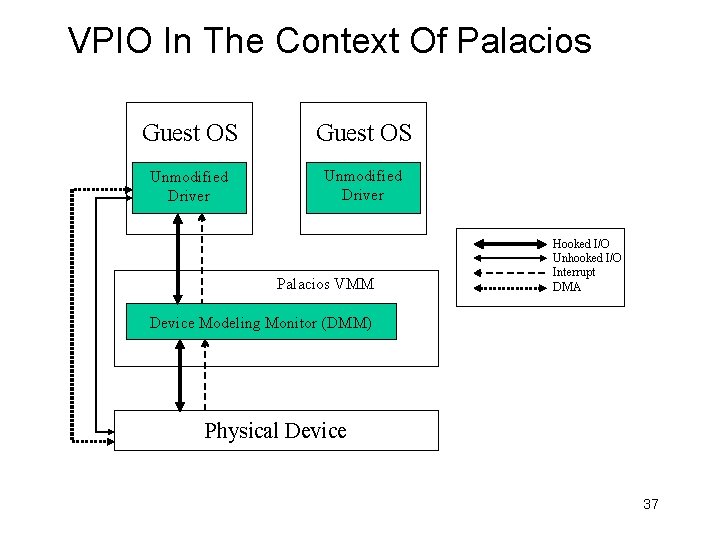

VPIO in the Context of Palacios
[figure: an unmodified driver in the guest OS drives the physical device; Palacios passes unhooked I/O through directly, while hooked I/O, interrupts, and DMA are observed by the Device Modeling Monitor (DMM)]
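The split between hooked and unhooked I/O can be sketched as a per-port dispatch table: a port with a registered hook is routed through the model before reaching the device, while an unhooked port goes straight through. In this hypothetical sketch, `struct io_map`, `io_hook_port`, `io_write`, `count_hook`, and `device_outb` are illustrative names. On real hardware an unhooked port would not trap at all, because the VMM leaves it open in the I/O permission bitmap, so the explicit direct path here only stands in for that.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NUM_PORTS 65536 /* x86 I/O port space */

/* Hypothetical hook: observes a guest port write; nonzero return vetoes it. */
typedef int (*io_hook_fn)(uint16_t port, uint8_t value, void *priv);

struct io_map {
    io_hook_fn hook[NUM_PORTS]; /* NULL means unhooked */
    void *priv[NUM_PORTS];
};

/* Stand-in for the physical device write; counts writes for illustration. */
static unsigned device_writes;
static void device_outb(uint16_t port, uint8_t value)
{
    (void)port;
    (void)value;
    device_writes++;
}

void io_hook_port(struct io_map *map, uint16_t port, io_hook_fn fn, void *priv)
{
    map->hook[port] = fn;
    map->priv[port] = priv;
}

/* Example hook standing in for a device model: counts observed writes. */
int count_hook(uint16_t port, uint8_t value, void *priv)
{
    (void)port;
    (void)value;
    (*(unsigned *)priv)++;
    return 0;
}

/* Guest port write: hooked ports update the model first, then reach the device. */
int io_write(struct io_map *map, uint16_t port, uint8_t value)
{
    io_hook_fn fn = map->hook[port];
    if (fn != NULL) {
        int rc = fn(port, value, map->priv[port]);
        if (rc != 0)
            return rc; /* model vetoed the access */
    }
    device_outb(port, value);
    return 0;
}
```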

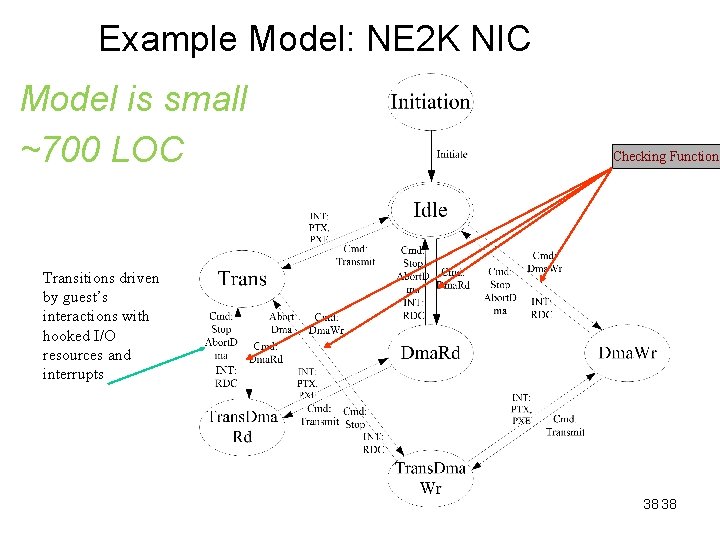

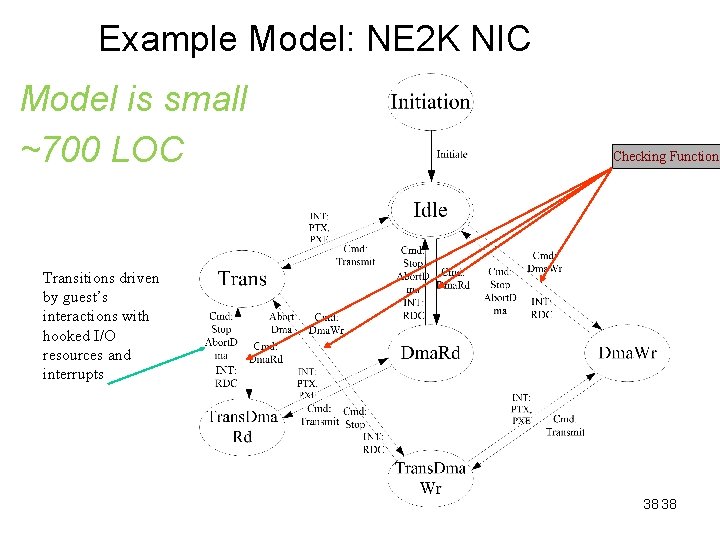

Example Model: NE2K NIC
• Model is small: ~700 LOC
[figure: the model’s state machine and checking function; transitions are driven by the guest’s interactions with hooked I/O resources and interrupts]

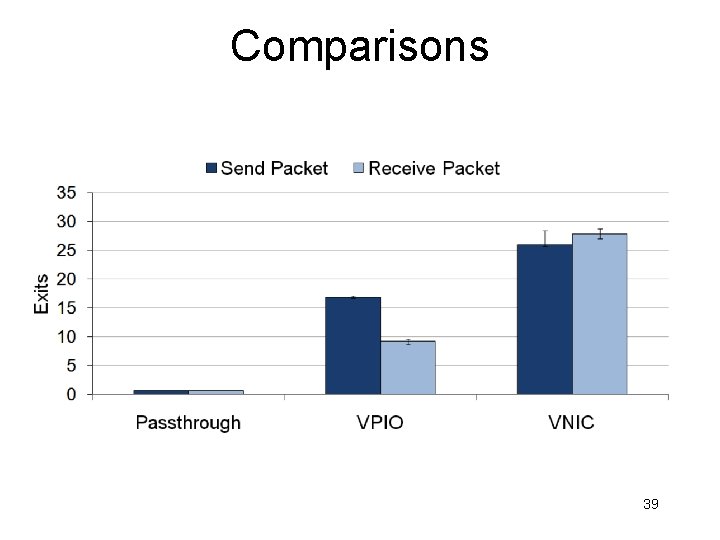

Comparisons

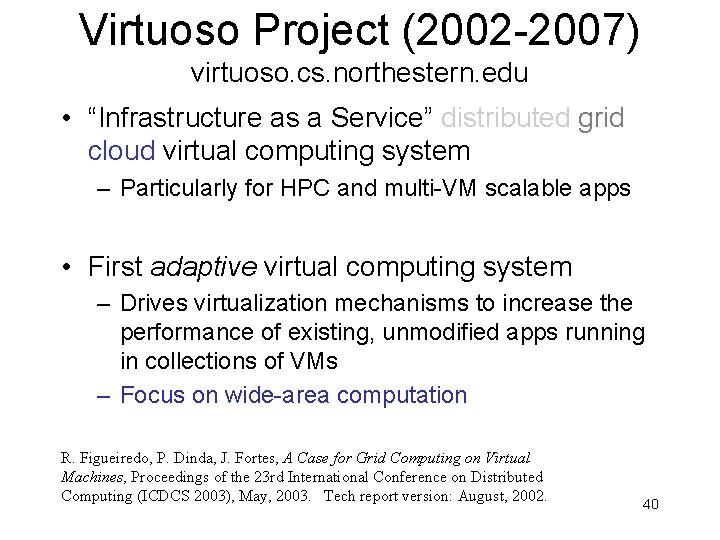

Virtuoso Project (2002-2007)
virtuoso.cs.northwestern.edu
• “Infrastructure as a Service” distributed/grid/cloud virtual computing system
  – Particularly for HPC and multi-VM scalable apps
• First adaptive virtual computing system
  – Drives virtualization mechanisms to increase the performance of existing, unmodified apps running in collections of VMs
  – Focus on wide-area computation
R. Figueiredo, P. Dinda, J. Fortes, A Case for Grid Computing on Virtual Machines, Proceedings of the 23rd International Conference on Distributed Computing Systems (ICDCS 2003), May 2003. Tech report version: August 2002.

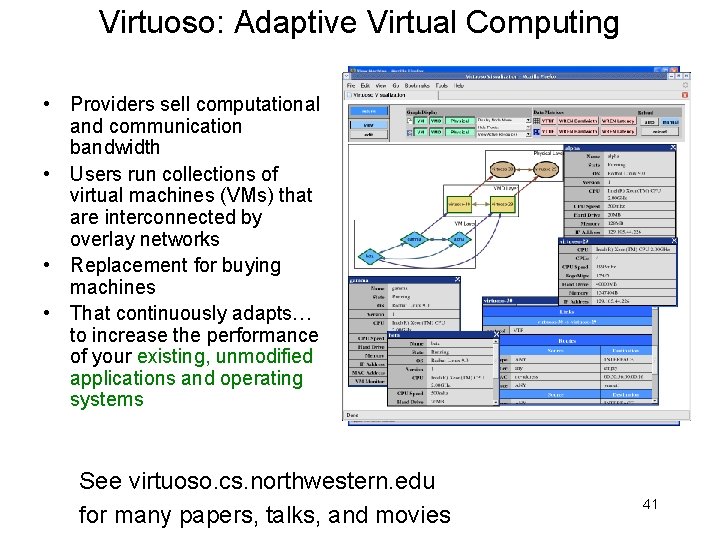

Virtuoso: Adaptive Virtual Computing
• Providers sell computational and communication bandwidth
• Users run collections of virtual machines (VMs) that are interconnected by overlay networks
• Replacement for buying machines
• That continuously adapts… to increase the performance of your existing, unmodified applications and operating systems
See virtuoso.cs.northwestern.edu for many papers, talks, and movies

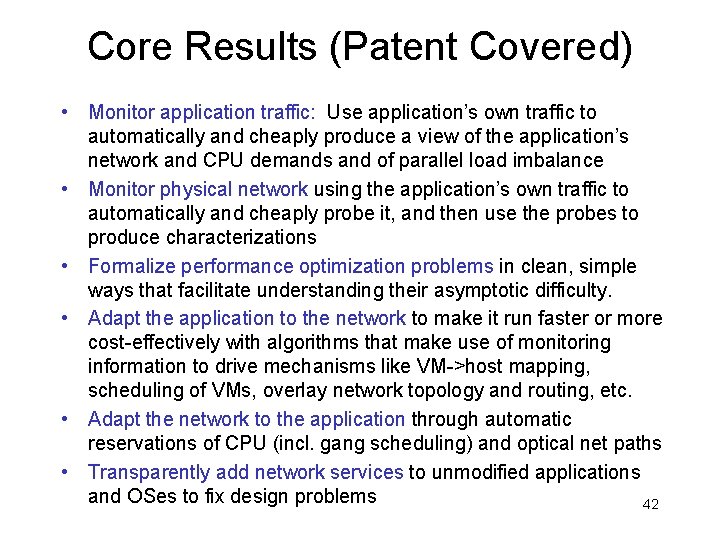

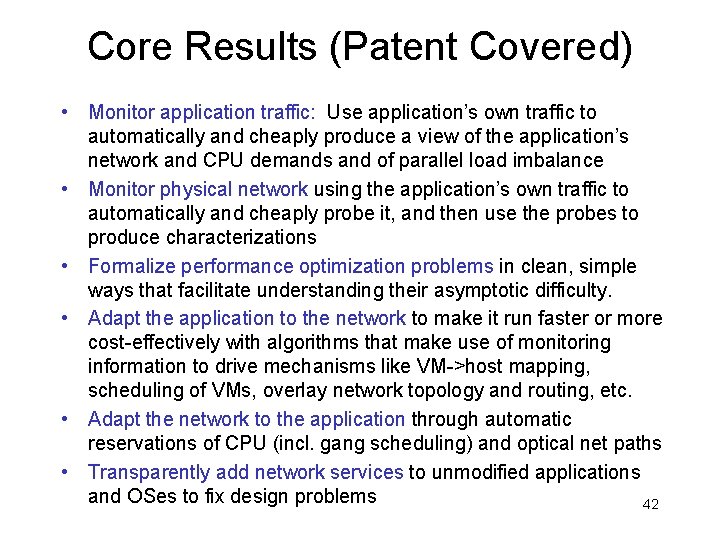

Core Results (Patent Covered)
• Monitor application traffic: use the application’s own traffic to automatically and cheaply produce a view of the application’s network and CPU demands and of parallel load imbalance
• Monitor the physical network: use the application’s own traffic to automatically and cheaply probe it, and then use the probes to produce characterizations
• Formalize performance optimization problems in clean, simple ways that facilitate understanding their asymptotic difficulty
• Adapt the application to the network to make it run faster or more cost-effectively, with algorithms that use monitoring information to drive mechanisms like VM->host mapping, scheduling of VMs, overlay network topology and routing, etc.
• Adapt the network to the application through automatic reservations of CPU (incl. gang scheduling) and optical net paths
• Transparently add network services to unmodified applications and OSes to fix design problems

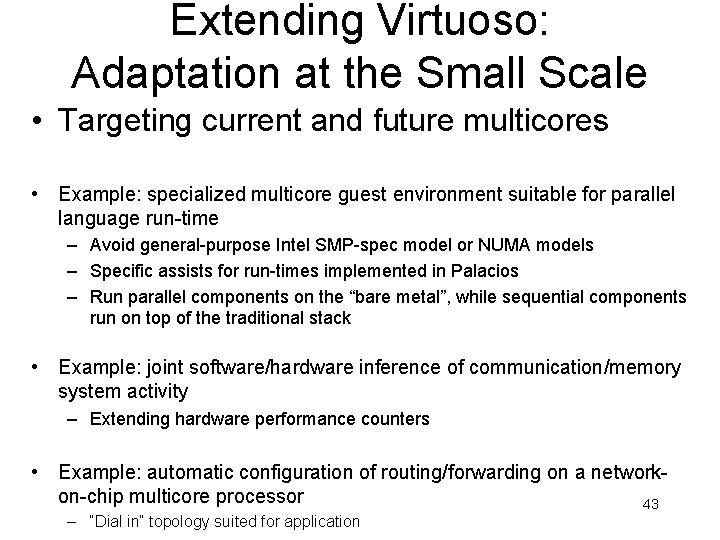

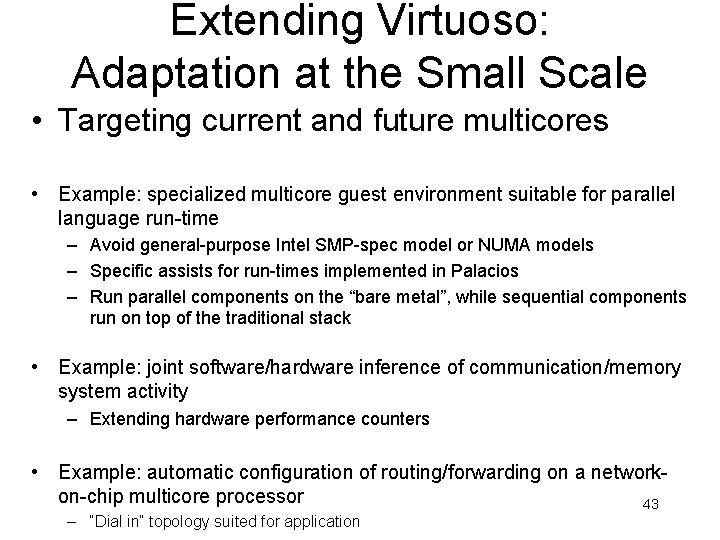

Extending Virtuoso: Adaptation at the Small Scale
• Targeting current and future multicores
• Example: specialized multicore guest environment suitable for parallel language run-time
  – Avoid general-purpose Intel SMP-spec model or NUMA models
  – Specific assists for run-times implemented in Palacios
  – Run parallel components on the “bare metal”, while sequential components run on top of the traditional stack
• Example: joint software/hardware inference of communication/memory system activity
  – Extending hardware performance counters
• Example: automatic configuration of routing/forwarding on a network-on-chip multicore processor
  – “Dial in” topology suited for application

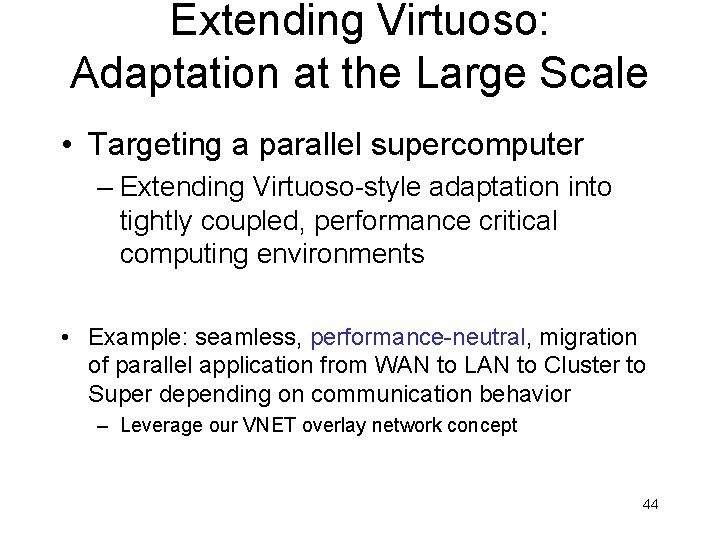

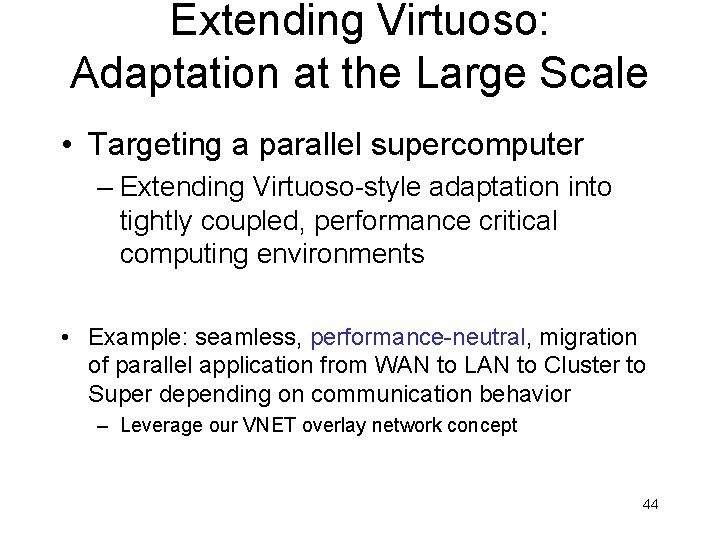

Extending Virtuoso: Adaptation at the Large Scale
• Targeting a parallel supercomputer
  – Extending Virtuoso-style adaptation into tightly coupled, performance-critical computing environments
• Example: seamless, performance-neutral migration of a parallel application from WAN to LAN to cluster to supercomputer, depending on communication behavior
  – Leverage our VNET overlay network concept

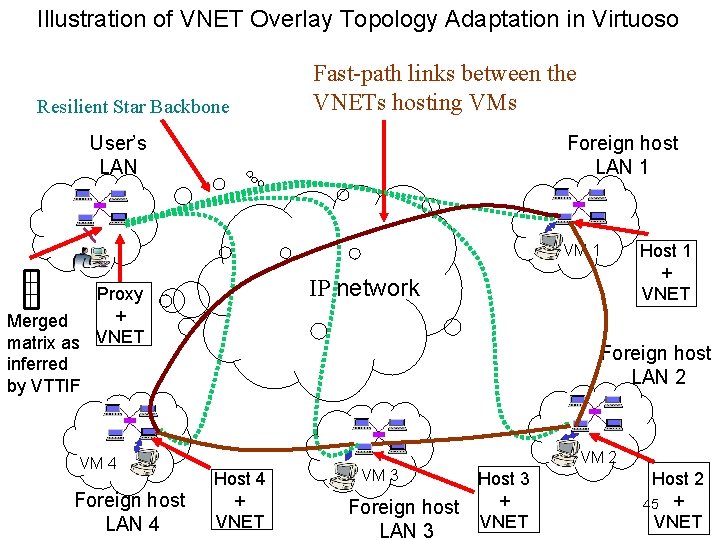

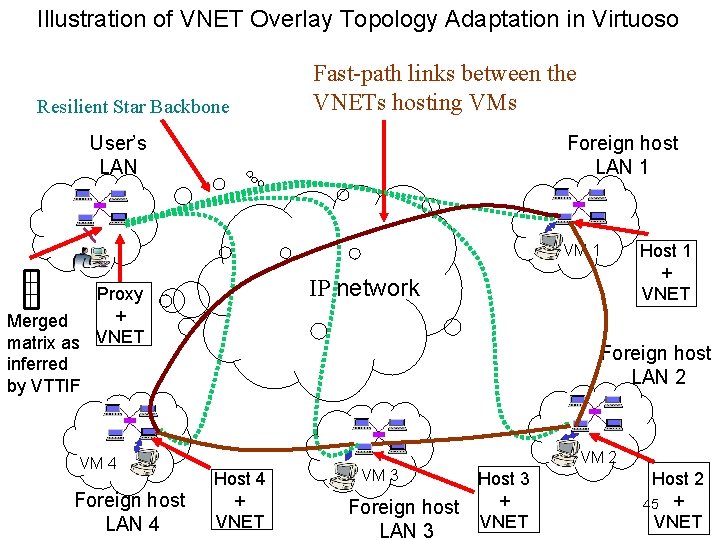

Illustration of VNET Overlay Topology Adaptation in Virtuoso
[figure: VMs 1–4 run on hosts (each with VNET) in foreign-host LANs 1–4, connected across an IP network to a proxy + VNET on the user’s LAN by a resilient star backbone; fast-path links are added between the VNETs hosting the VMs, based on the merged traffic matrix inferred by VTTIF]
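The traffic inference behind this adaptation can be approximated as: accumulate the bytes the overlay forwards between each pair of VMs into a matrix, then promote the heaviest pairs to fast-path links. The sketch below is a hypothetical simplification in that spirit, not VTTIF’s actual algorithm; the fixed `NVM`, `struct traffic`, `traffic_observe`, `choose_fast_paths`, and the rule “keep every pair carrying at least `fraction` of the heaviest pair’s bytes” are all assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NVM 4 /* number of VMs in this example */

/* Traffic matrix: bytes observed from VM i to VM j by the overlay. */
struct traffic { uint64_t bytes[NVM][NVM]; };

/* Record one frame the overlay forwarded (the VMs' own traffic, no probes). */
void traffic_observe(struct traffic *t, int src, int dst, uint64_t len)
{
    assert(src >= 0 && src < NVM && dst >= 0 && dst < NVM);
    t->bytes[src][dst] += len;
}

/* Choose fast-path links: undirected pairs whose combined traffic is at least
   `fraction` of the heaviest pair. Writes pairs to out; returns the count. */
size_t choose_fast_paths(const struct traffic *t, double fraction,
                         int out[][2], size_t max_out)
{
    uint64_t max = 0;
    for (int i = 0; i < NVM; i++)
        for (int j = i + 1; j < NVM; j++) {
            uint64_t b = t->bytes[i][j] + t->bytes[j][i];
            if (b > max)
                max = b;
        }
    if (max == 0)
        return 0;
    size_t n = 0;
    for (int i = 0; i < NVM; i++)
        for (int j = i + 1; j < NVM; j++) {
            uint64_t b = t->bytes[i][j] + t->bytes[j][i];
            if ((double)b >= fraction * (double)max && n < max_out) {
                out[n][0] = i;
                out[n][1] = j;
                n++;
            }
        }
    return n;
}
```

A real system would also decay old observations and respect per-host link budgets; the threshold rule here only shows how a traffic matrix turns into a candidate topology.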

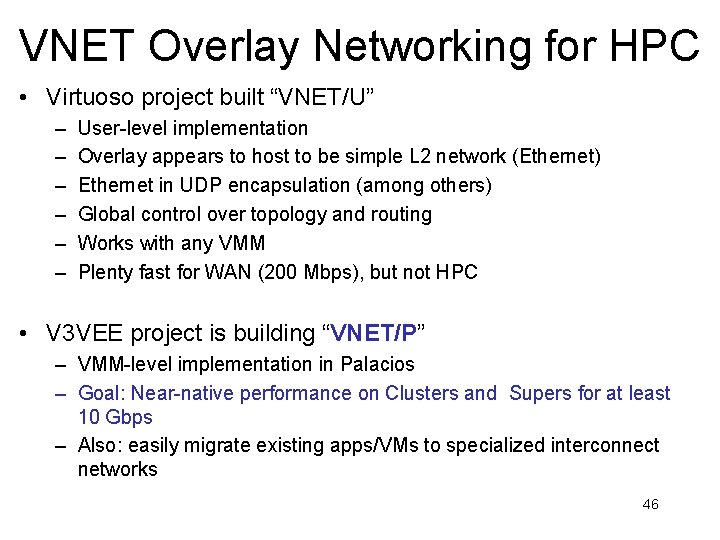

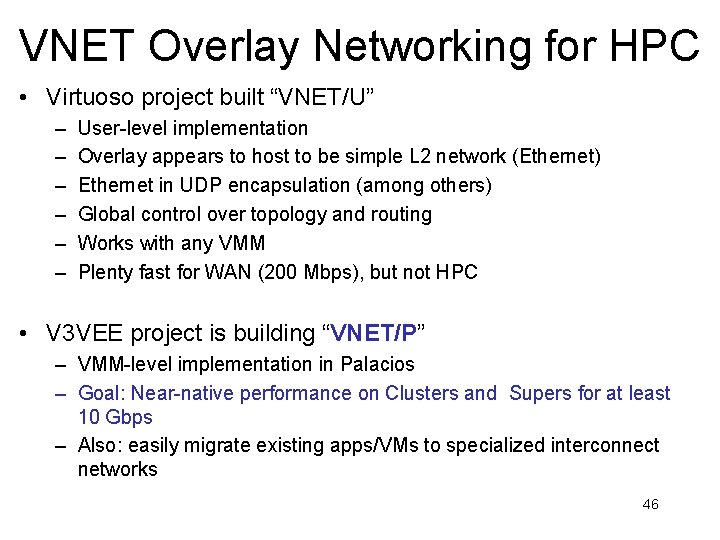

VNET Overlay Networking for HPC
• Virtuoso project built “VNET/U”
  – User-level implementation
  – Overlay appears to host to be simple L2 network (Ethernet)
  – Ethernet in UDP encapsulation (among others)
  – Global control over topology and routing
  – Works with any VMM
  – Plenty fast for WAN (200 Mbps), but not HPC
• V3VEE project is building “VNET/P”
  – VMM-level implementation in Palacios
  – Goal: near-native performance on clusters and supers for at least 10 Gbps
  – Also: easily migrate existing apps/VMs to specialized interconnect networks
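Ethernet-in-UDP encapsulation amounts to prefixing each captured Ethernet frame with a small header and sending the result as a UDP payload to the peer’s VNET. The sketch below assumes a hypothetical 6-byte header (a 4-byte magic value and a 2-byte frame length, big-endian); it is not VNET’s actual wire format, and `encap_frame`/`decap_frame` are illustrative names. Only payload construction is shown, so it runs without sockets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ENCAP_MAGIC 0x564E4554u /* "VNET"; hypothetical header, not VNET's wire format */
#define ENCAP_HDR   6           /* 4-byte magic + 2-byte frame length */

static void put_be32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24); p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);  p[3] = (uint8_t)v;
}
static void put_be16(uint8_t *p, uint16_t v) { p[0] = (uint8_t)(v >> 8); p[1] = (uint8_t)v; }
static uint32_t get_be32(const uint8_t *p)
{
    return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 | (uint32_t)p[2] << 8 | p[3];
}
static uint16_t get_be16(const uint8_t *p) { return (uint16_t)(p[0] << 8 | p[1]); }

/* Wrap an Ethernet frame into a UDP payload buffer; returns payload length, or 0. */
size_t encap_frame(const uint8_t *frame, size_t frame_len, uint8_t *out, size_t out_cap)
{
    if (frame_len > UINT16_MAX || out_cap < ENCAP_HDR + frame_len)
        return 0;
    put_be32(out, ENCAP_MAGIC);
    put_be16(out + 4, (uint16_t)frame_len);
    memcpy(out + ENCAP_HDR, frame, frame_len);
    return ENCAP_HDR + frame_len;
}

/* Unwrap a received UDP payload; returns a pointer to the frame, or NULL. */
const uint8_t *decap_frame(const uint8_t *payload, size_t len, size_t *frame_len)
{
    if (len < ENCAP_HDR || get_be32(payload) != ENCAP_MAGIC)
        return NULL;
    size_t n = get_be16(payload + 4);
    if (n > len - ENCAP_HDR)
        return NULL; /* truncated payload */
    *frame_len = n;
    return payload + ENCAP_HDR;
}
```

The buffer produced by `encap_frame` would then be handed to a UDP socket (for example with POSIX `sendto`), and the receiver would call `decap_frame` before injecting the frame into the destination VM’s virtual NIC. Doing this per-packet work in a user-level daemon is one reason a VMM-level implementation such as VNET/P is attractive for HPC.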

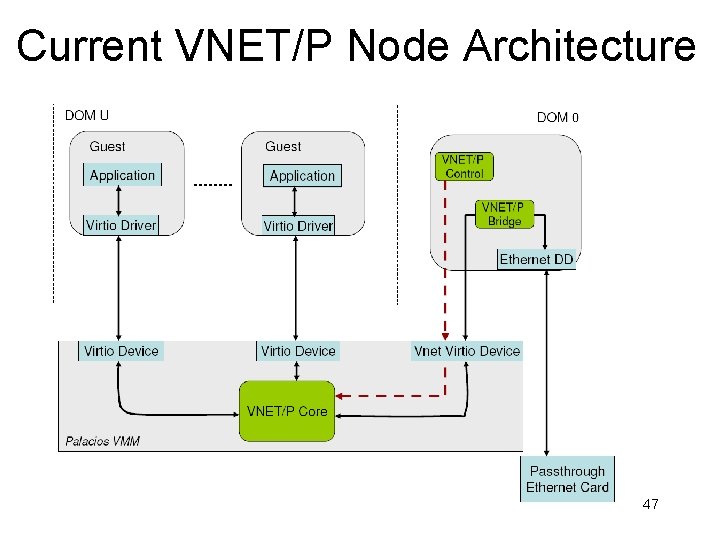

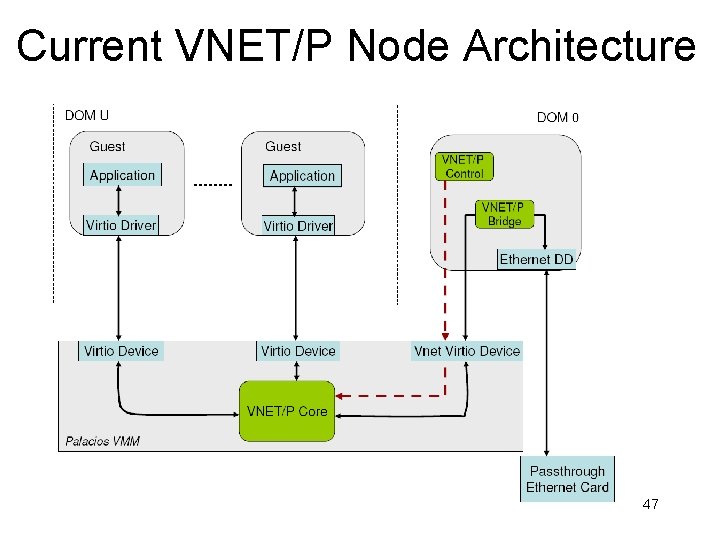

Current VNET/P Node Architecture

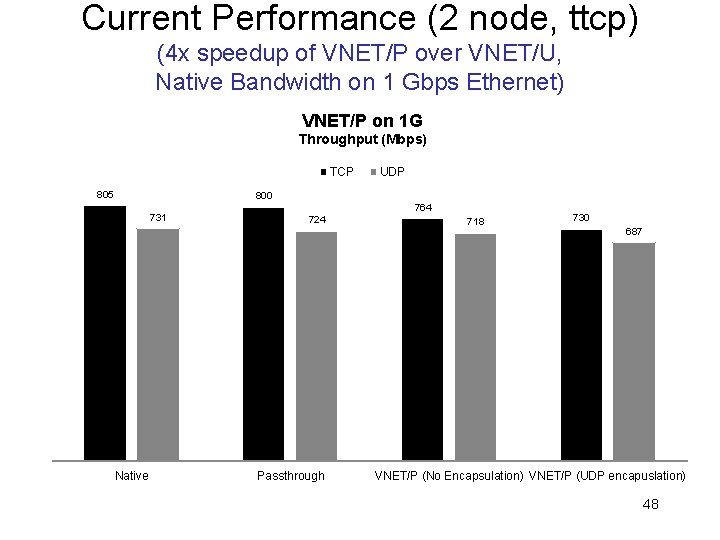

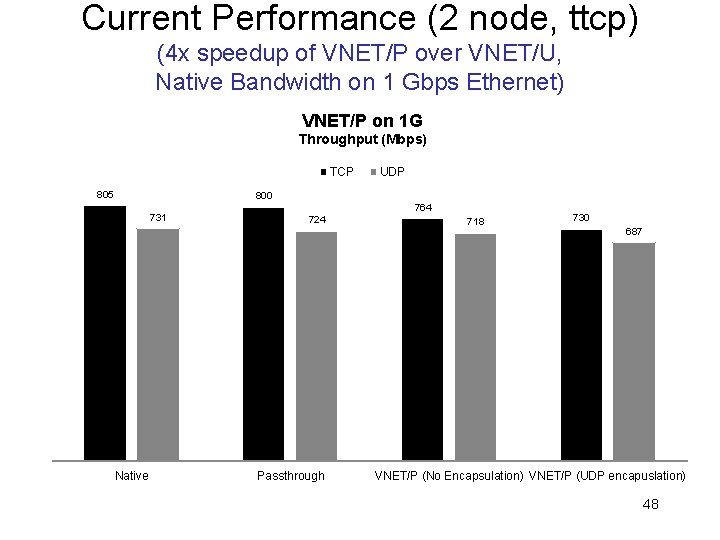

Current Performance (2 node, ttcp)
(4x speedup of VNET/P over VNET/U; native bandwidth on 1 Gbps Ethernet)
[figure: VNET/P on 1G, TCP and UDP throughput (Mbps) for Native, Passthrough, VNET/P (no encapsulation), and VNET/P (UDP encapsulation); plotted values range from 687 to 805 Mbps]

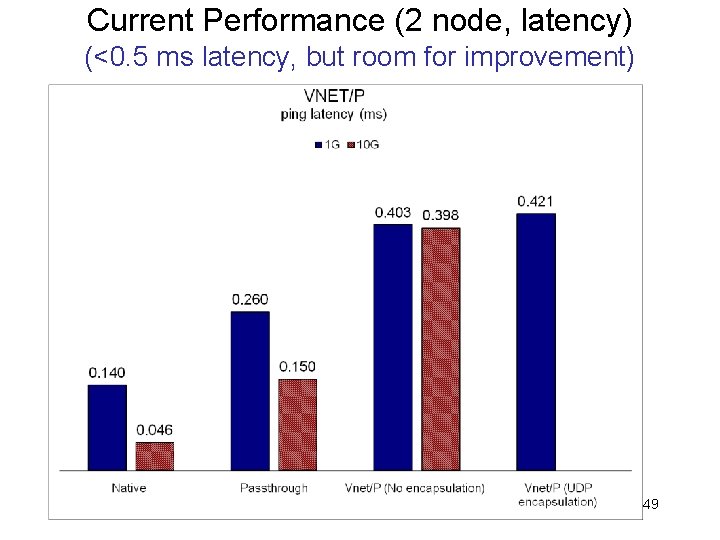

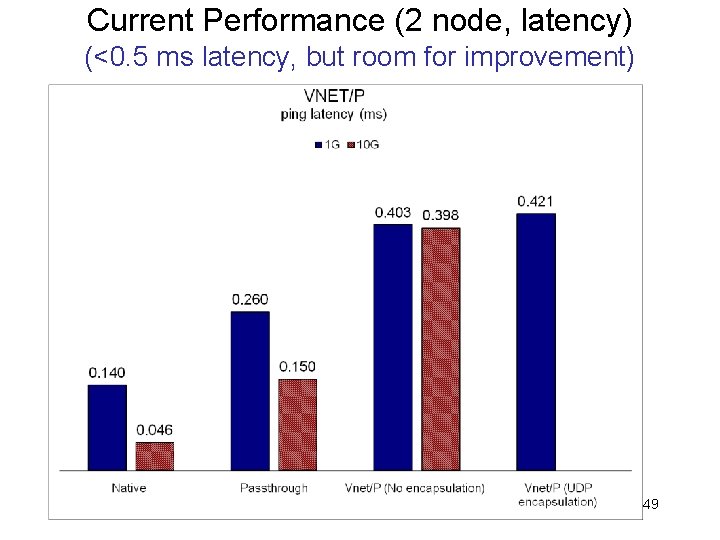

Current Performance (2 node, latency)
(<0.5 ms latency, but room for improvement)

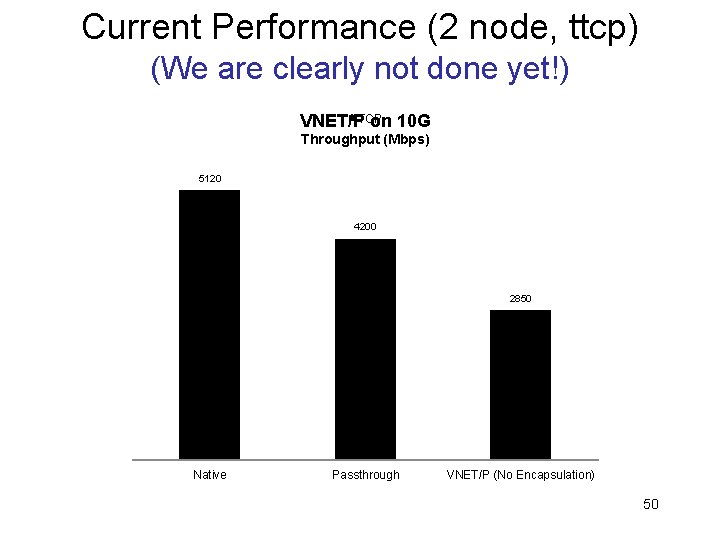

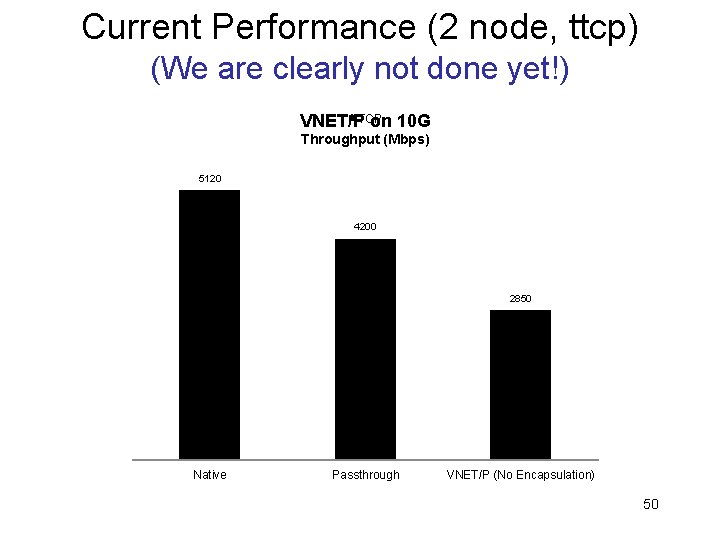

Current Performance (2 node, ttcp)
(We are clearly not done yet!)
VNET/P TCP on 10G, throughput (Mbps):
• Native: 5120
• Passthrough: 4200
• VNET/P (No Encapsulation): 2850

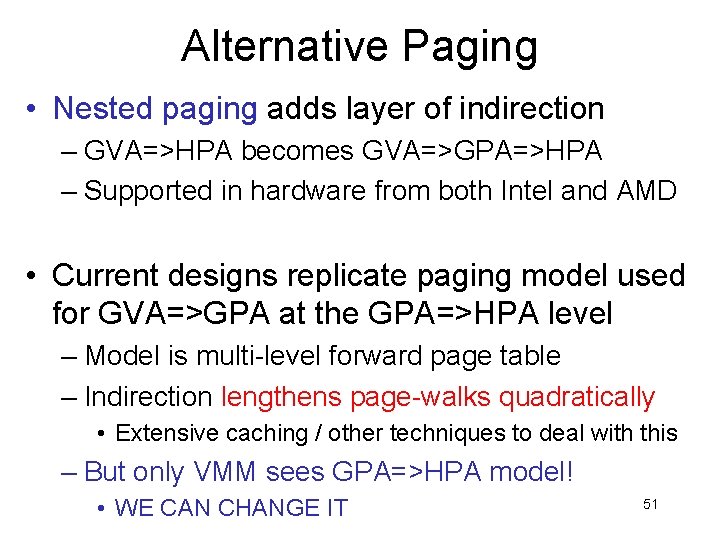

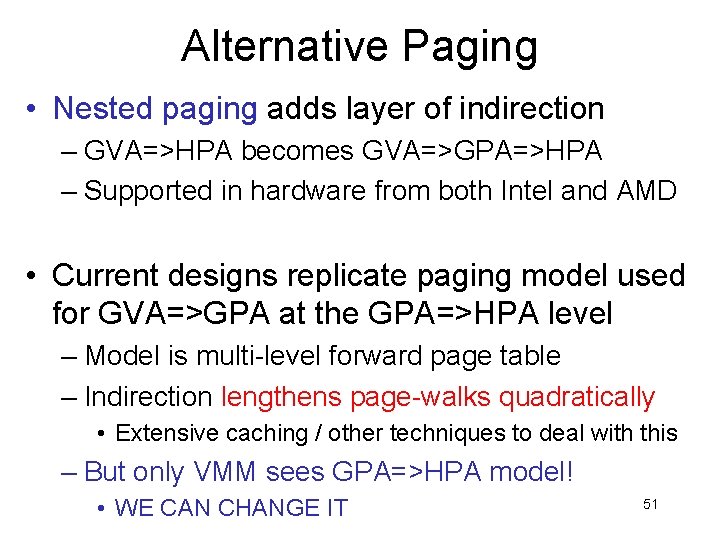

Alternative Paging
• Nested paging adds a layer of indirection
  – GVA=>HPA becomes GVA=>GPA=>HPA
  – Supported in hardware from both Intel and AMD
• Current designs replicate the paging model used for GVA=>GPA at the GPA=>HPA level
  – Model is a multi-level forward page table
  – Indirection lengthens page walks quadratically
• Extensive caching and other techniques deal with this
  – But only the VMM sees the GPA=>HPA model!
• WE CAN CHANGE IT
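The quadratic growth can be made concrete by counting memory references on a TLB miss, assuming no caching of intermediate translations. With an n-level guest table and an m-level nested table, each of the n guest page-table pointers is a guest-physical address that needs an m-step nested walk before the guest entry itself can be read, and the final data address needs one more nested walk: n(m+1) + m = (n+1)(m+1) - 1 references. For 4-level tables on both sides that is 24, versus 4 for a native walk. The helper names below are hypothetical:

```c
#include <assert.h>

/* References for a native walk of an n-level table: one per level. */
unsigned native_walk_refs(unsigned n)
{
    return n;
}

/* Worst-case references for a two-dimensional nested walk with no walk caches:
   n guest levels, each needing an m-level nested walk plus one read of the
   guest entry, then one more m-level nested walk for the final data GPA.
   n*(m+1) + m == (n+1)*(m+1) - 1 */
unsigned nested_walk_refs(unsigned n, unsigned m)
{
    assert(n > 0 && m > 0);
    return n * (m + 1) + m;
}
```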

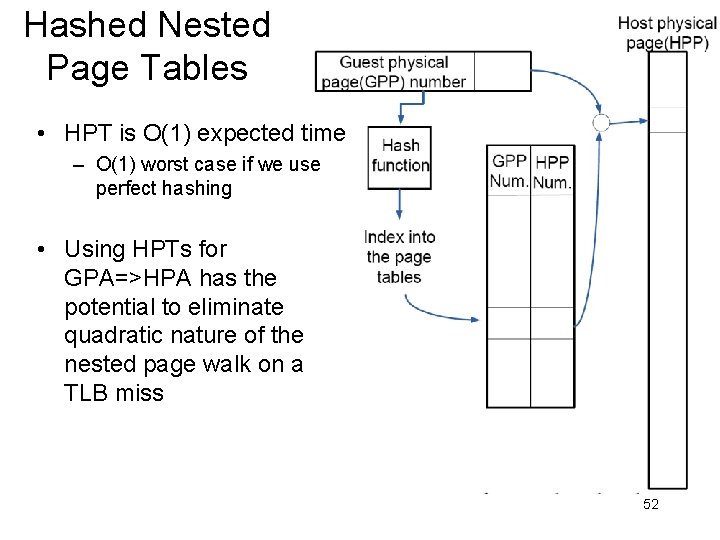

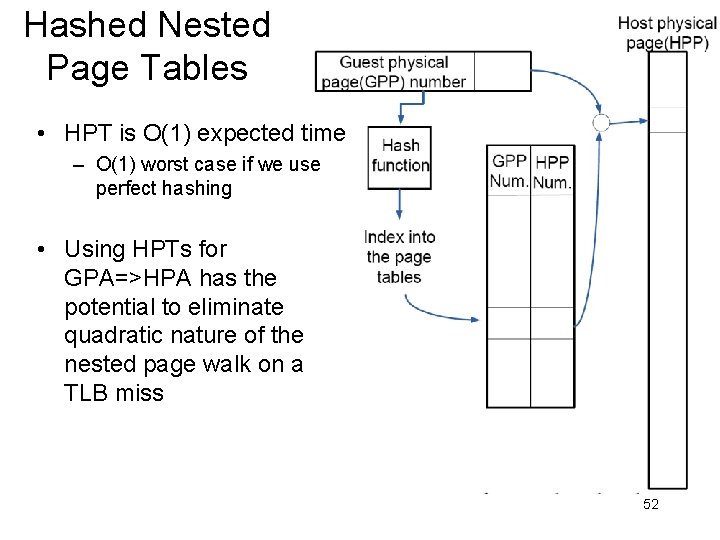

Hashed Nested Page Tables
• HPT lookup takes O(1) expected time
  – O(1) worst case if we use perfect hashing
• Using HPTs for GPA=>HPA has the potential to eliminate the quadratic nature of the nested page walk on a TLB miss
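A hashed GPA=>HPA table can be sketched as an open-addressing hash table keyed by guest frame number. This is a hypothetical illustration assuming 4 KiB pages, a fixed 1024-slot table, multiplicative hashing, and linear probing; it gives expected O(1) lookups, not the worst-case O(1) that perfect hashing would. If each lookup costs about one memory reference, a 4-level guest walk needs roughly 4 × 2 + 1 = 9 references, compared with 4 × 5 + 4 = 24 through a 4-level nested table. `struct hpt`, `hpt_map`, and `hpt_translate` are illustrative names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define HPT_SLOTS 1024u      /* power of two; hypothetical size */
#define HPT_EMPTY UINT64_MAX /* marks an unused slot */

struct hpt_entry { uint64_t gfn, hfn; }; /* guest frame -> host frame */
struct hpt { struct hpt_entry slot[HPT_SLOTS]; };

/* Multiplicative (Fibonacci) hashing, masked to the table size. */
static uint32_t hpt_hash(uint64_t gfn)
{
    return (uint32_t)((gfn * 0x9E3779B97F4A7C15ull) >> 32) & (HPT_SLOTS - 1);
}

void hpt_init(struct hpt *t)
{
    for (uint32_t i = 0; i < HPT_SLOTS; i++)
        t->slot[i].gfn = HPT_EMPTY;
}

/* Insert or update a mapping with linear probing; false if the table is full. */
bool hpt_map(struct hpt *t, uint64_t gfn, uint64_t hfn)
{
    assert(gfn != HPT_EMPTY);
    uint32_t i = hpt_hash(gfn);
    for (uint32_t p = 0; p < HPT_SLOTS; p++, i = (i + 1) & (HPT_SLOTS - 1)) {
        if (t->slot[i].gfn == HPT_EMPTY || t->slot[i].gfn == gfn) {
            t->slot[i].gfn = gfn;
            t->slot[i].hfn = hfn;
            return true;
        }
    }
    return false;
}

/* Translate a GPA to an HPA (same page offset); false on a miss.
   No deletions are supported, so an empty slot safely ends the probe chain. */
bool hpt_translate(const struct hpt *t, uint64_t gpa, uint64_t *hpa)
{
    uint64_t gfn = gpa >> 12; /* 4 KiB pages assumed */
    uint32_t i = hpt_hash(gfn);
    for (uint32_t p = 0; p < HPT_SLOTS; p++, i = (i + 1) & (HPT_SLOTS - 1)) {
        if (t->slot[i].gfn == HPT_EMPTY)
            return false;
        if (t->slot[i].gfn == gfn) {
            *hpa = (t->slot[i].hfn << 12) | (gpa & 0xFFF);
            return true;
        }
    }
    return false;
}
```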

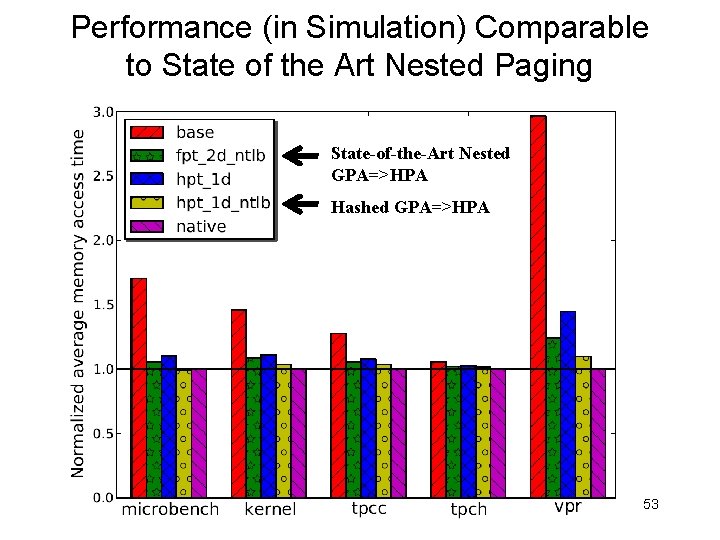

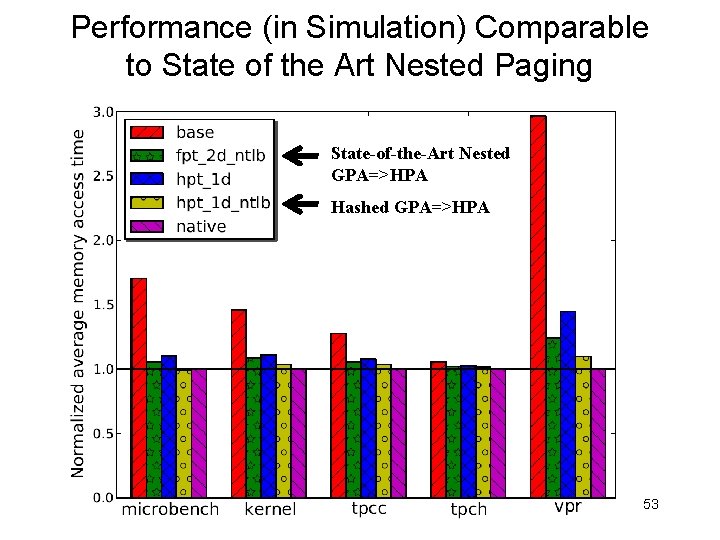

Performance (in Simulation)
• Comparable to state-of-the-art nested paging
[figure: state-of-the-art nested GPA=>HPA vs. hashed GPA=>HPA]

Conclusion
• The V3VEE Project is building a new, public, open-source VMM for modern architectures
  – It’s small so that you can easily come up to speed on it
  – It enables research and use that might not be possible without easy access to VMM internals
• You can join us!

For More Information
• Peter Dinda
  – http://pdinda.org
• V3VEE Project
  – http://v3vee.org
  – Including downloads and git access