An introduction to the CAP quality survey

- Slides: 30

Presenter: name & time. Resource based on the work of Liesbeth Baartman.

Content of the training:
- CAP: what is it, and what is the theory behind it?
- Quality: explanation and discussion
- Self-evaluation: process and experiences
- CAP quality measurement questionnaire: short demonstration and instructions for filling out the survey

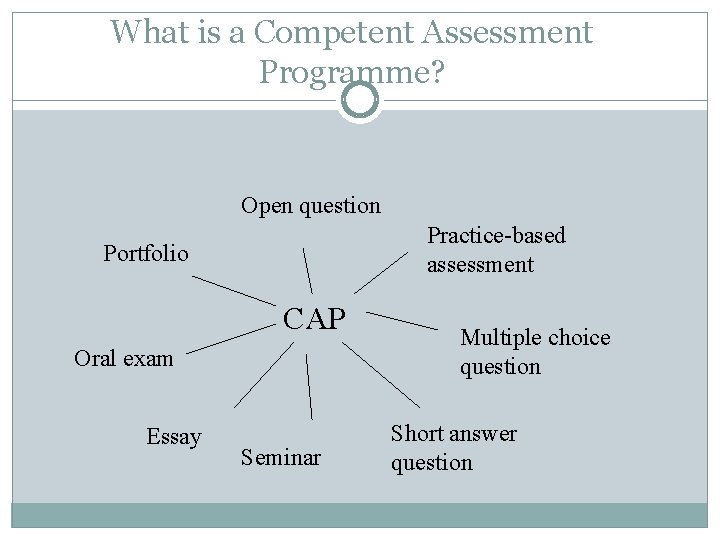

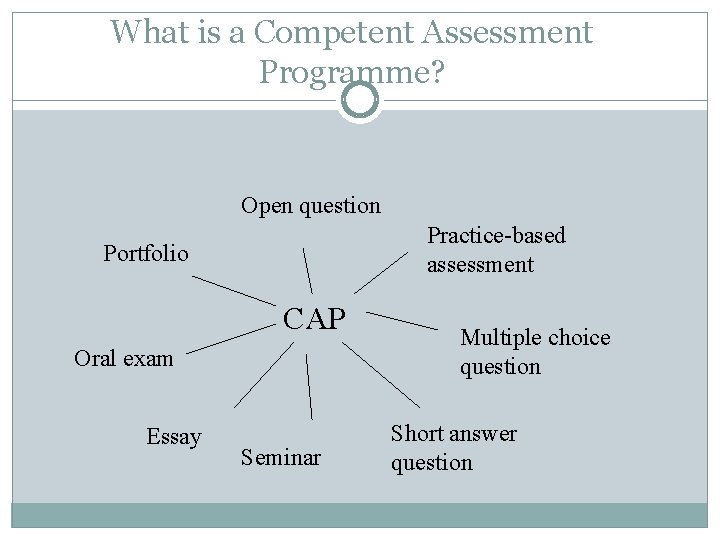

What is a Competency Assessment Programme (CAP)?

A CAP combines methods such as open questions, practice-based assessment, portfolios, oral exams, essays, seminars, multiple-choice questions and short-answer questions.

CAP: a mix of methods
- Competency is too complex to be assessed by one single method.
- Complementary: various assessment methods are combined, and the mix as a whole is suitable for measuring competencies.
- "Traditional" and "new" methods are combined.
- The mix depends on the context.
- Formative and/or summative?

However, a CAP is not always easy to describe and demonstrate in training.

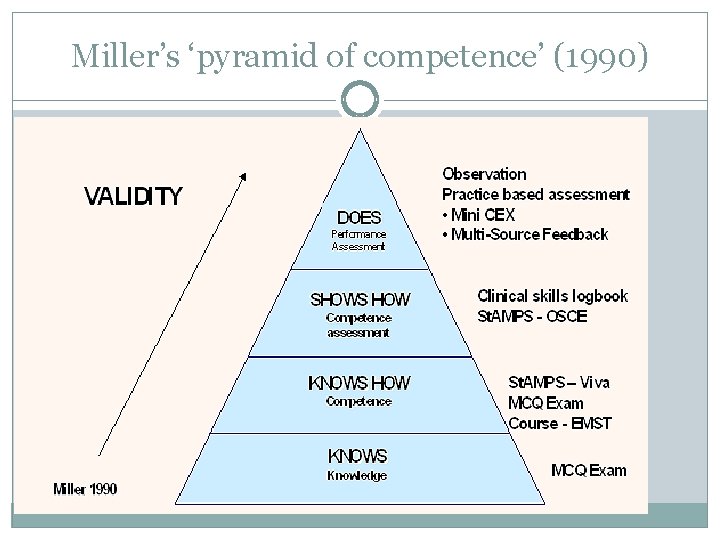

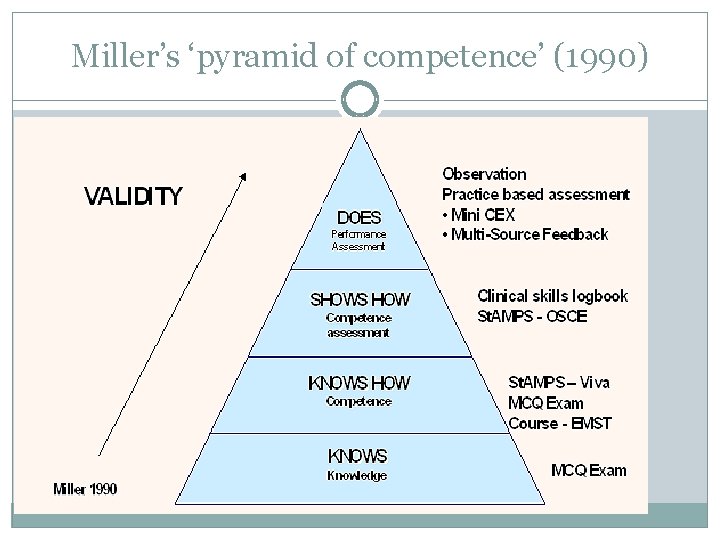

Miller’s ‘pyramid of competence’ (1990)

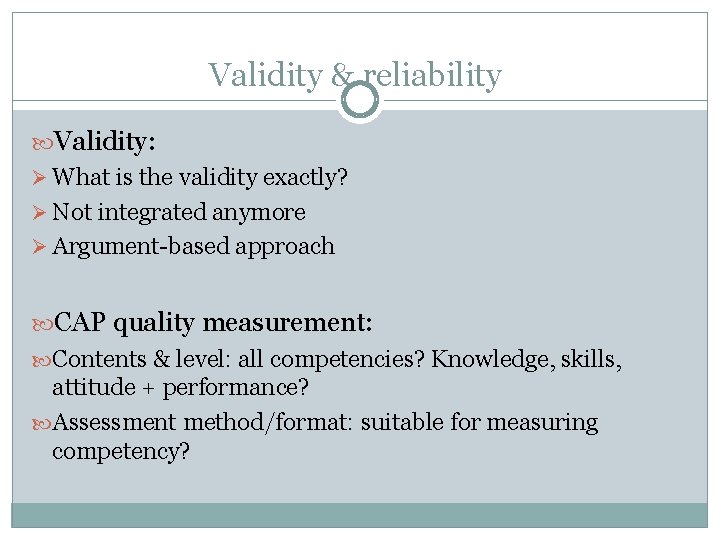

Validity & reliability

Validity:
- What exactly is validity?
- Not integrated anymore
- Argument-based approach

CAP quality measurement:
- Content & level: are all competencies covered? Knowledge, skills, attitudes + performance?
- Assessment method/format: is it suitable for measuring the competency?

Validity & reliability

Reliability:
- Competence/performance varies depending on the task.
- Standardization does not necessarily lead to higher reliability.
- Assessment relies on human judgment.
- The information is often qualitative.

Key: the result should be independent of the specific assessor or circumstances, which is supported by:
- Instruction/training of assessors
- Multiple assessors and different tests

Additional new quality criteria

Formative function:
- Does the test provide good feedback?
- Do students learn something from the test?
- Does the test have a positive impact on learning?
- Self-/peer-assessment

CAP quality measurement: meaningfulness / self-assessment / educational consequences

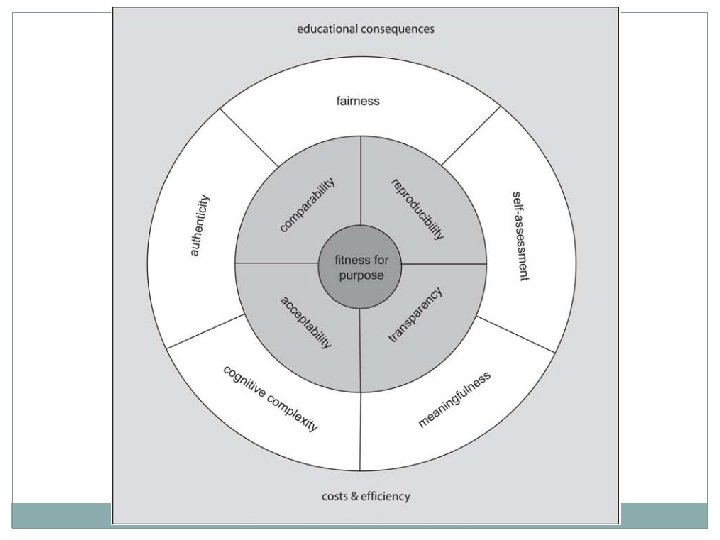

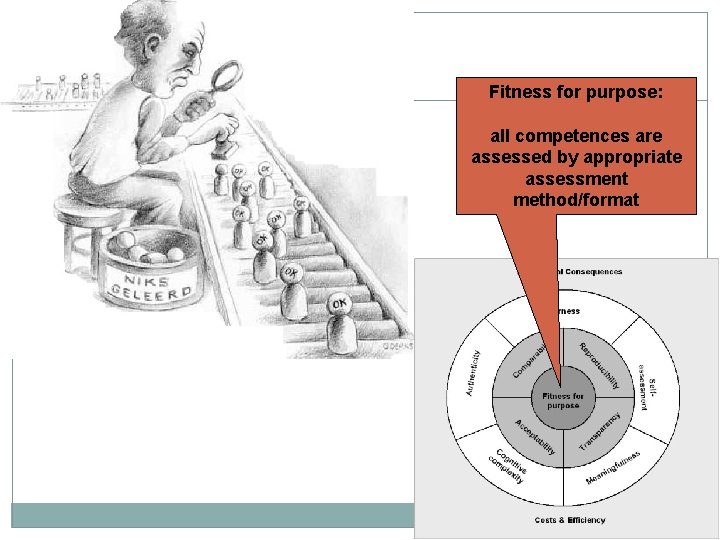

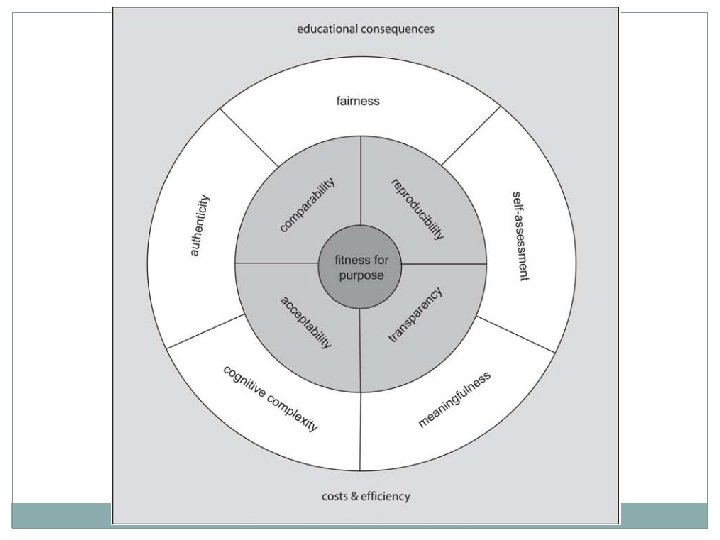

Fitness for purpose: all competencies are assessed by an appropriate assessment method/format.

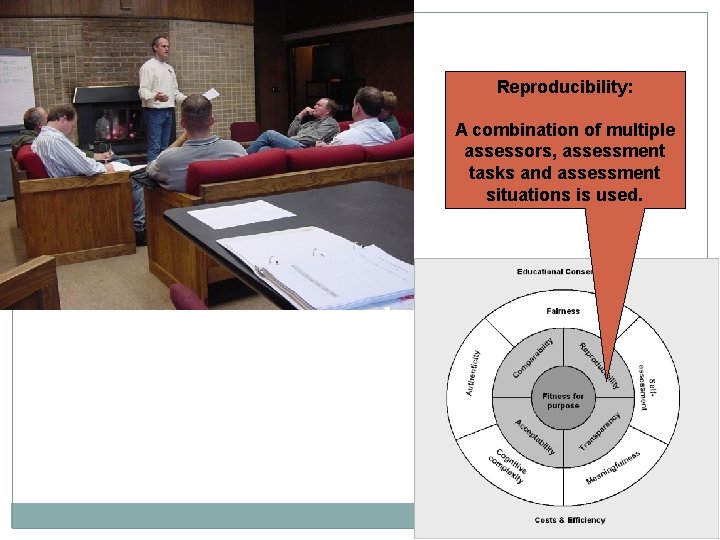

Reproducibility: A combination of multiple assessors, assessment tasks and assessment situations is used.

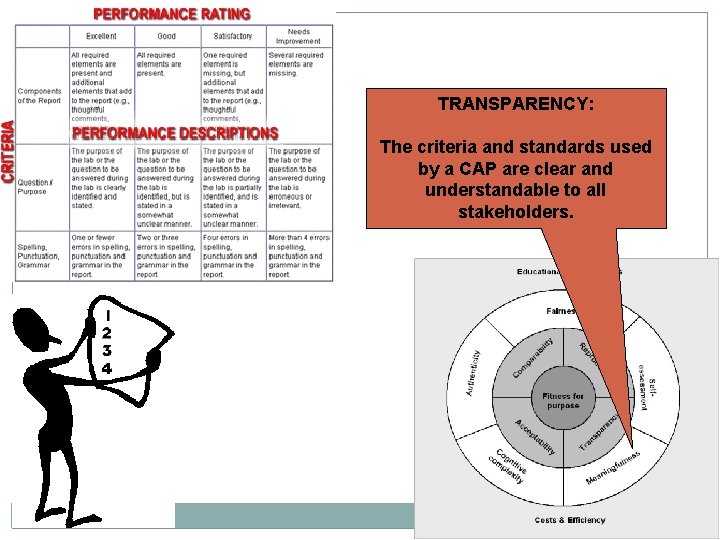

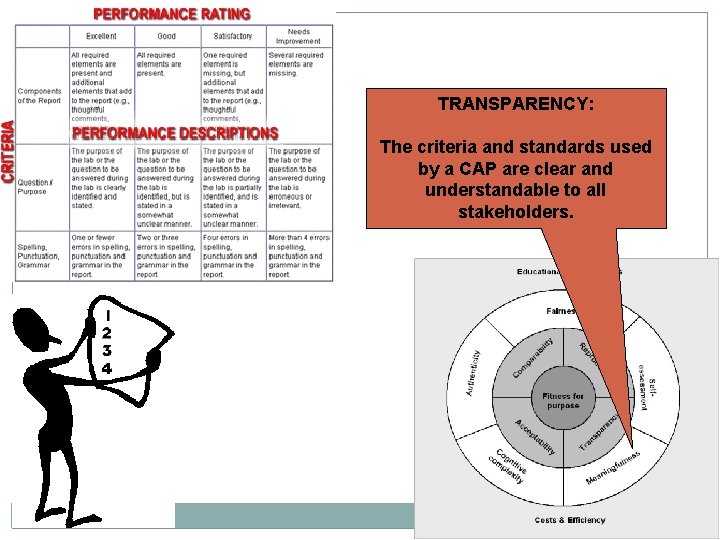

Transparency: the criteria and standards used by a CAP are clear and understandable to all stakeholders.

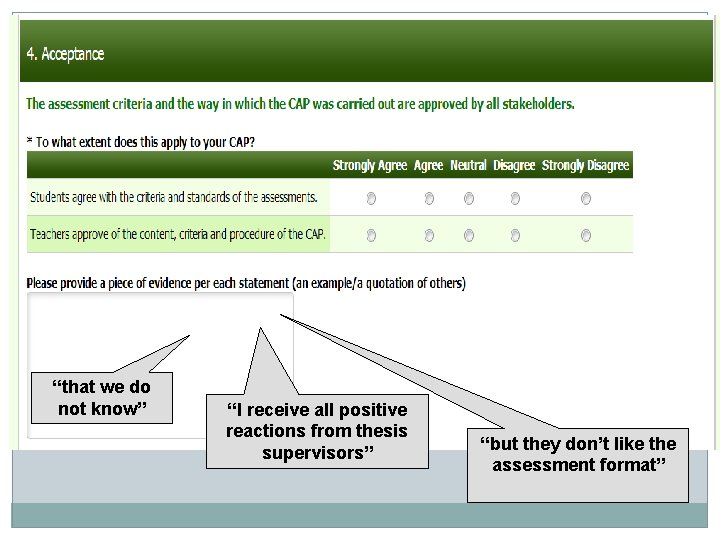

Acceptability: do the stakeholders agree with the design and implementation of the assessment?

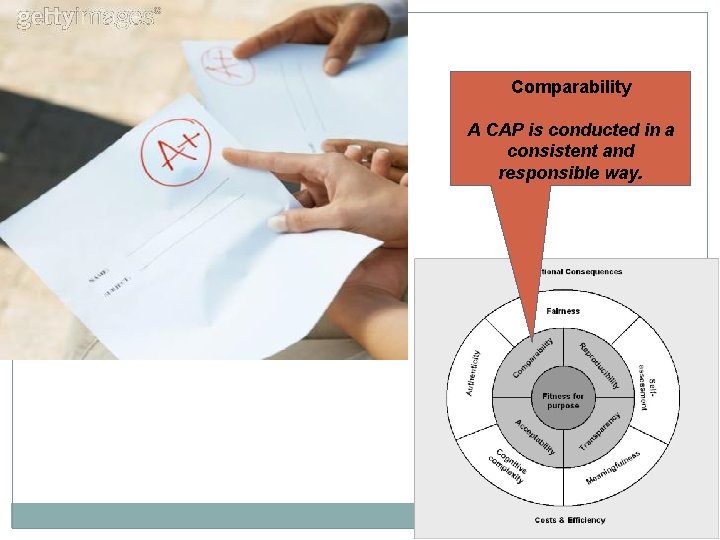

Comparability: a CAP is conducted in a consistent and responsible way.

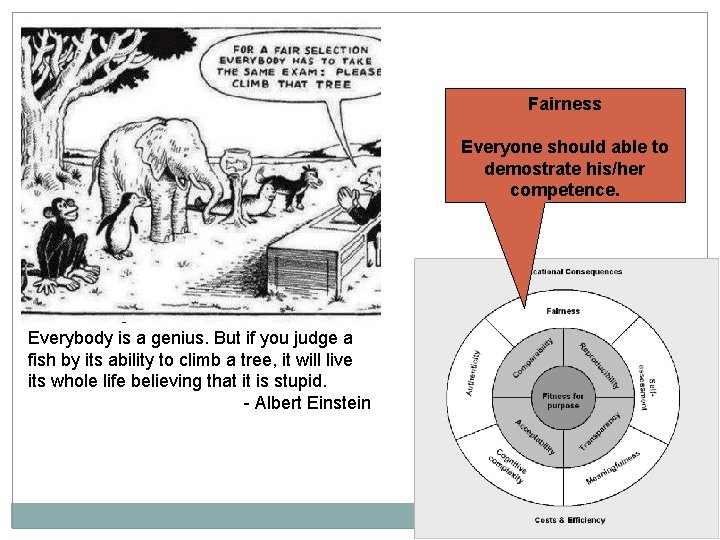

Fairness: everyone should be able to demonstrate his/her competence.

"Everybody is a genius. But if you judge a fish by its ability to climb a tree, it will live its whole life believing that it is stupid." (commonly attributed to Albert Einstein)

Fitness for self-assessment/reflection: the CAP stimulates self-reflection and the formulation of one's own learning goals.

Meaningfulness: assessment feedback should help one learn.

Cognitive complexity: the assessment methods should allow students to demonstrate different levels of cognitive skills and thinking processes.

Authenticity: the assessment tasks should reflect professional tasks.

Educational consequences: the CAP has a positive influence on teaching and learning.

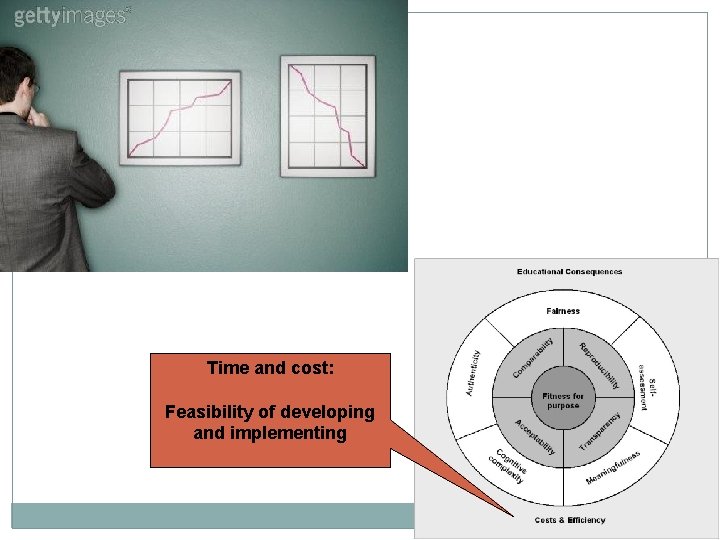

Time and cost: the feasibility of developing and implementing the CAP.

Self-evaluation

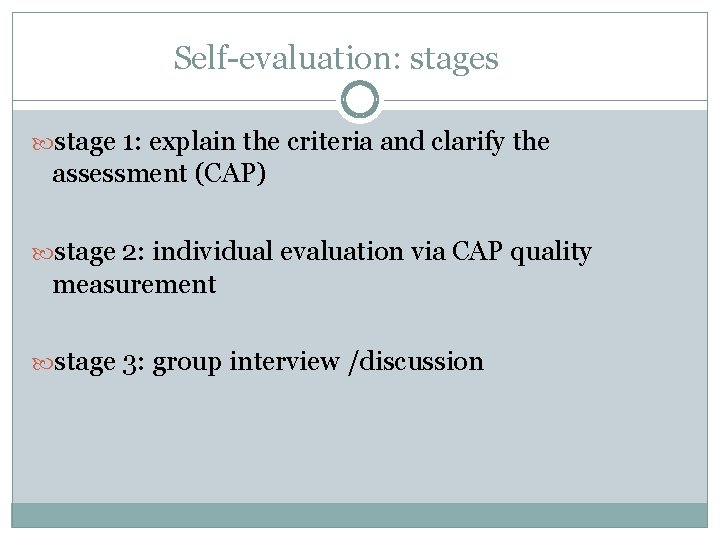

Self-evaluation: stages
- Stage 1: explain the criteria and clarify the assessment (CAP)
- Stage 2: individual evaluation using the CAP quality measurement questionnaire
- Stage 3: group interview/discussion

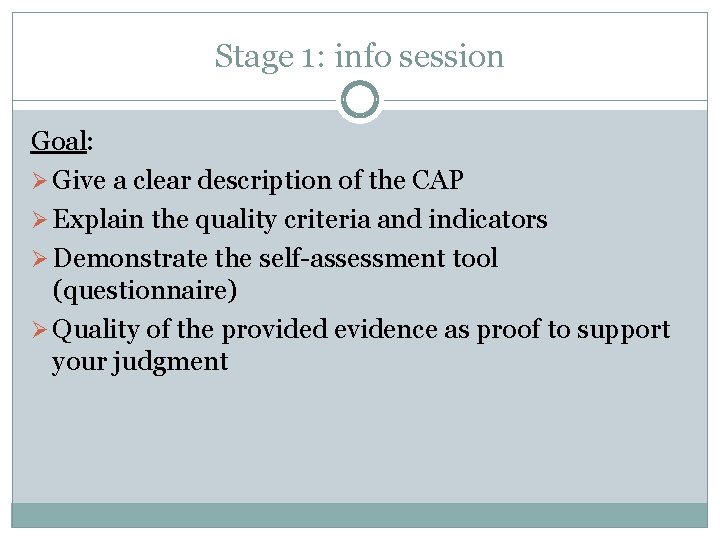

Stage 1: info session

Goals:
- Give a clear description of the CAP
- Explain the quality criteria and indicators
- Demonstrate the self-assessment tool (questionnaire)
- Discuss the quality of the evidence needed to support your judgment

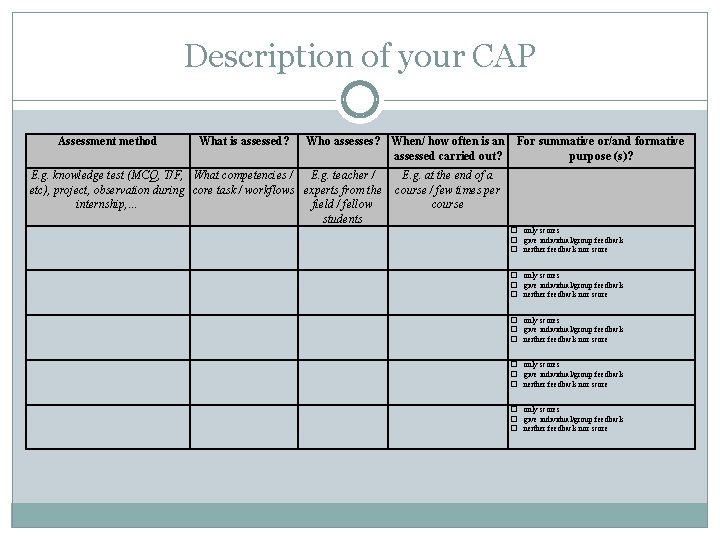

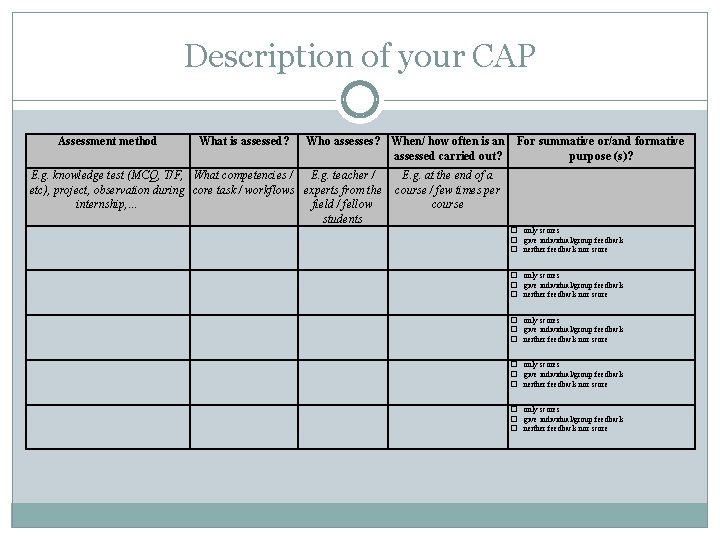

Description of your CAP

| Assessment method | What is assessed? | Who assesses? | When/how often is an assessment carried out? | For summative and/or formative purpose(s)? |
|---|---|---|---|---|
| E.g. knowledge test (MCQ, T/F, etc.), project, observation during the internship, … | What competencies / core tasks / workflows? | E.g. teacher / experts from the field / fellow students | E.g. at the end of a course / a few times per course | ☐ only scores ☐ give individual/group feedback ☐ neither feedback nor score |
| | | | | ☐ only scores ☐ give individual/group feedback ☐ neither feedback nor score |

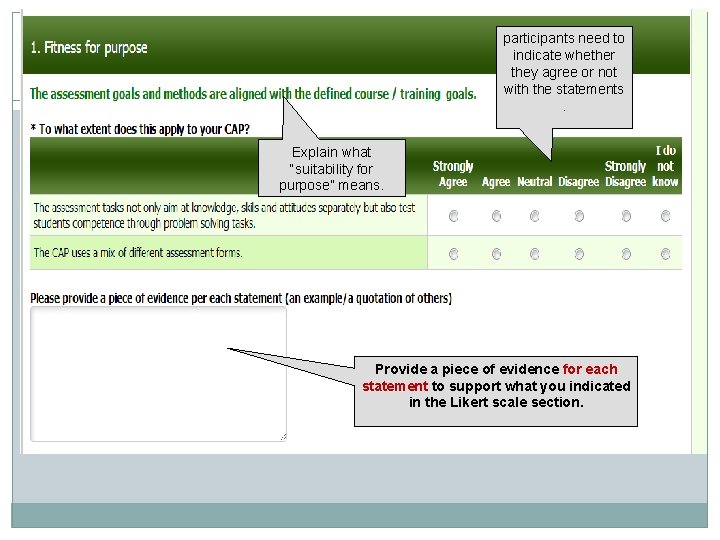

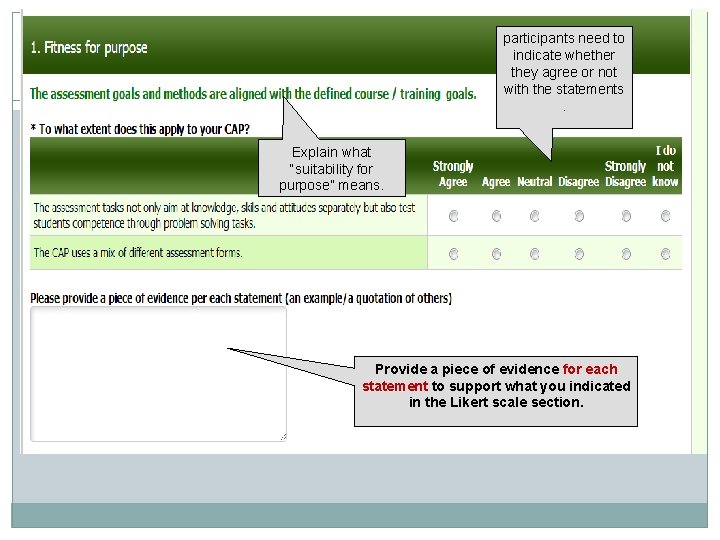

Participants indicate whether they agree or disagree with each statement. Explain what "fitness for purpose" means. For each statement, provide a piece of evidence to support the rating you gave on the Likert scale.

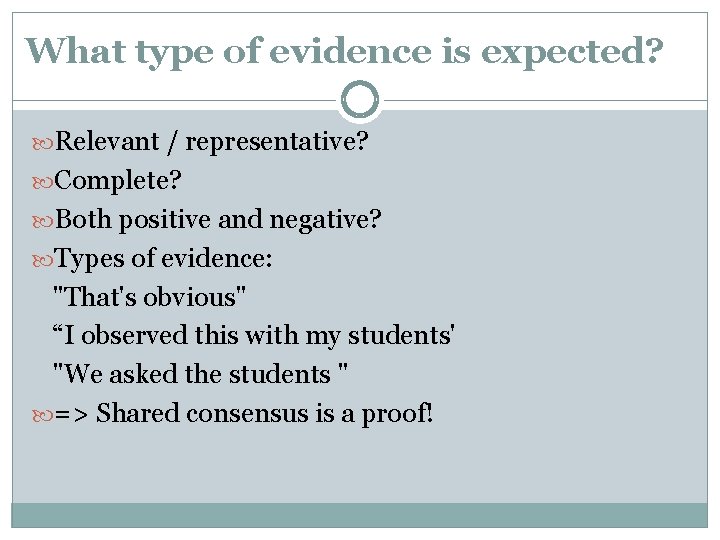

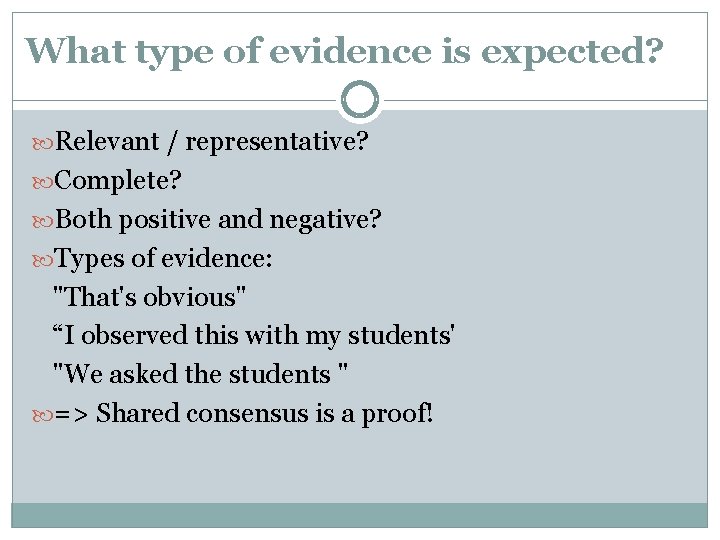

What type of evidence is expected?
- Relevant/representative?
- Complete?
- Both positive and negative?

Types of evidence:
- "That's obvious"
- "I observed this with my students"
- "We asked the students"

=> Shared consensus counts as proof!

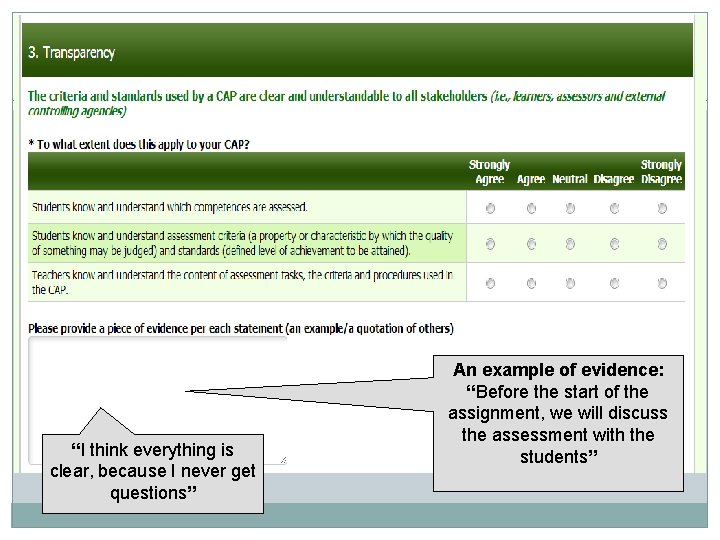

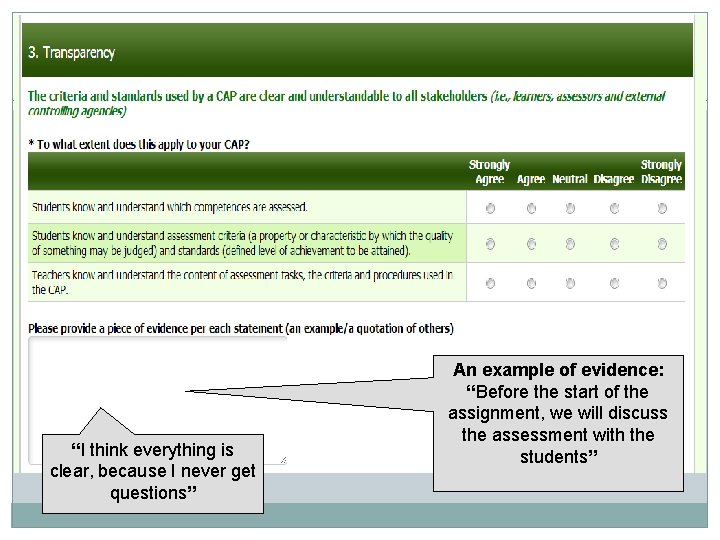

“I think everything is clear, because I never get questions.”

An example of evidence: “Before the start of the assignment, we will discuss the assessment with the students.”

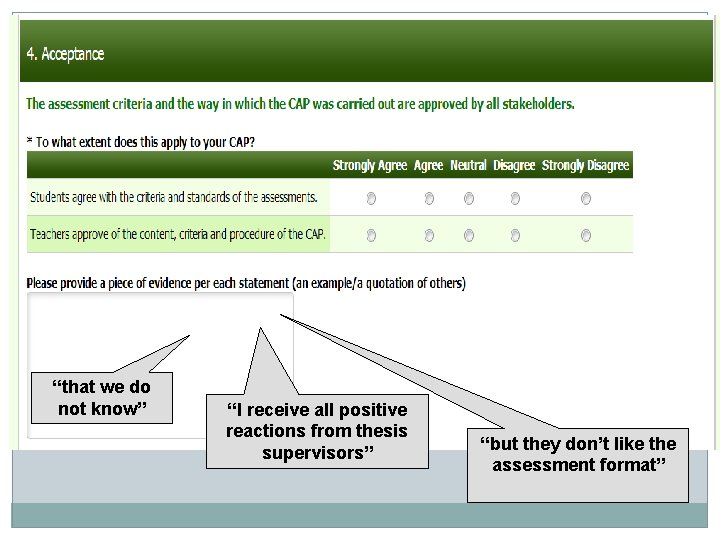

- “That we do not know.”
- “I receive only positive reactions from thesis supervisors…”
- “…but they don’t like the assessment format.”

Next time…
- Discuss the interpretation of the CAP quality measurement
- Prepare the group interviews
- Carry out the group interviews