An Improved Model for Live Migration in Data Centre Simulators

- Slides: 27

An Improved Model for Live Migration in Data Centre Simulators • Vincenzo De Maio, Gabor Kecskemeti, Radu Prodan • Distributed and Parallel Systems, University of Innsbruck

Summary • Introduction – Motivation – Background • Model • Design • Implementation • Evaluation • Conclusion and future work

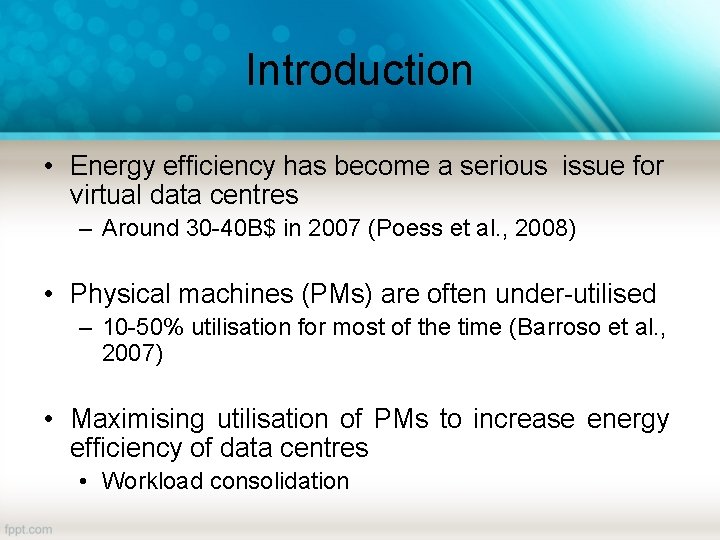

Introduction • Energy efficiency has become a serious issue for virtual data centres – Around 30-40 B$ in 2007 (Poess et al., 2008) • Physical machines (PMs) are often under-utilised – 10-50% utilisation for most of the time (Barroso et al., 2007) • Maximising utilisation of PMs to increase energy efficiency of data centres • Workload consolidation

Workload consolidation • Mapping VMs on a reduced subset of PMs

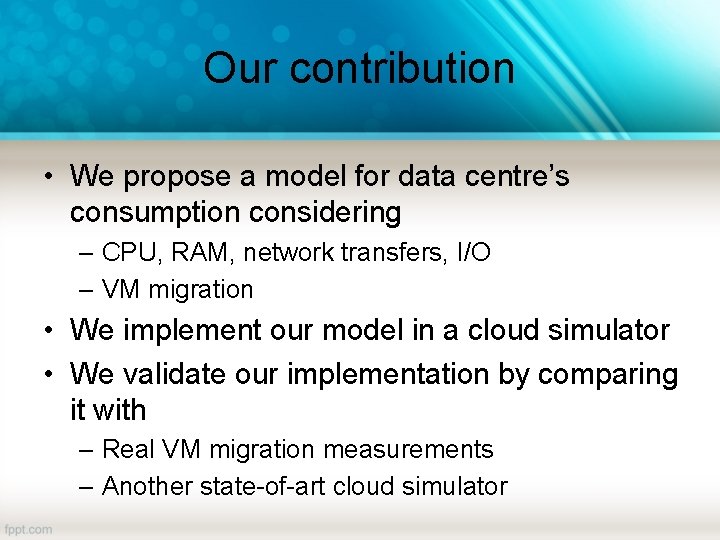

Consolidation: pros and cons • PROs: – Increases utilisation of PMs – The new mapping may allow unutilised PMs to be shut down, increasing energy efficiency • CONs: – Drawbacks in performance and energy consumption are possible – The benefits of a new VM-PM mapping need to be predicted

Motivation • Evaluating which VM consolidation policy is more efficient – Difficult to perform experiments in real Cloud infrastructures • Cloud Simulation

Cloud simulation • We need – Energy prediction models • CPU, network transfers, I/O • VM Migration – Simulation framework

Our contribution • We propose a model for data centre energy consumption considering – CPU, RAM, network transfers, I/O – VM migration • We implement our model in a cloud simulator • We validate our implementation by comparing it with – Real VM migration measurements – Another state-of-the-art cloud simulator

Summary • Introduction – Motivation – Background • Model • Design • Evaluation • Implementation • Conclusion and future work

![CPU model: power draw vs CPU usage](https://slidetodoc.com/presentation_image_h2/d0eca4609d2c73945b96600d72f18334/image-10.jpg)

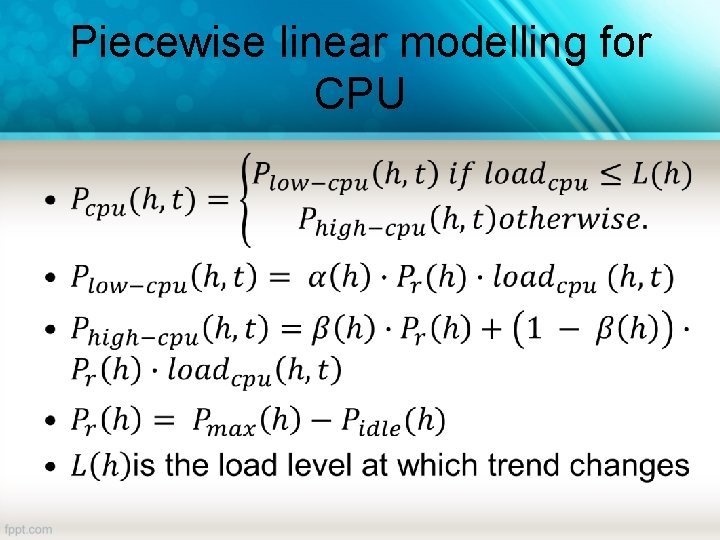

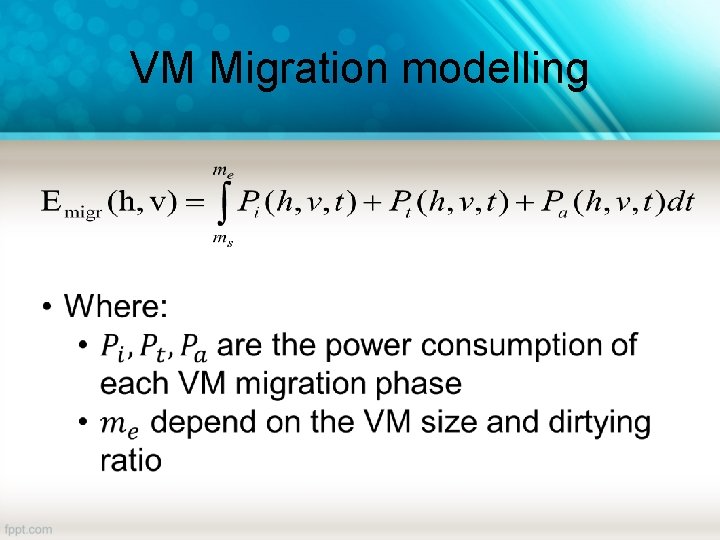

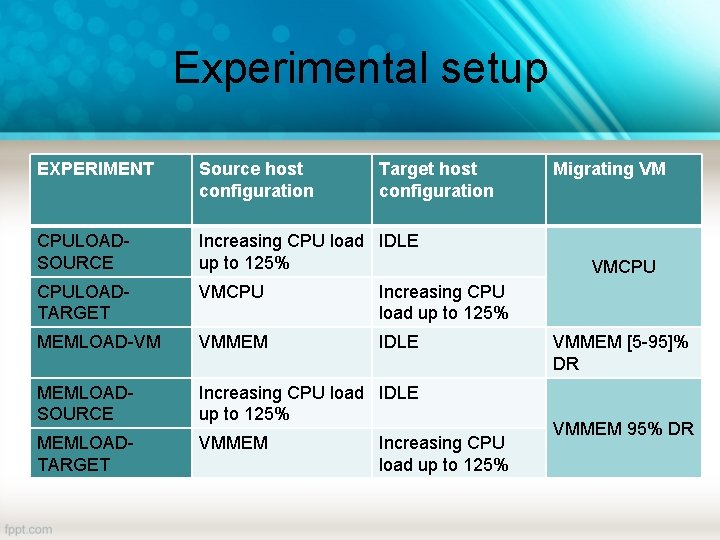

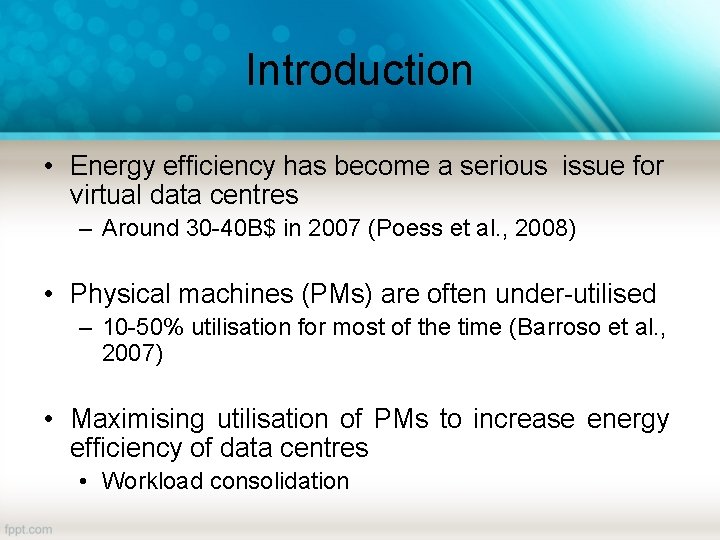

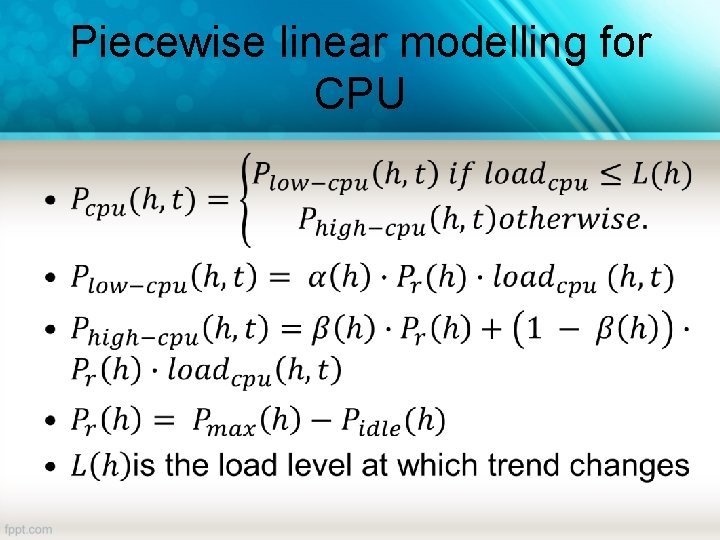

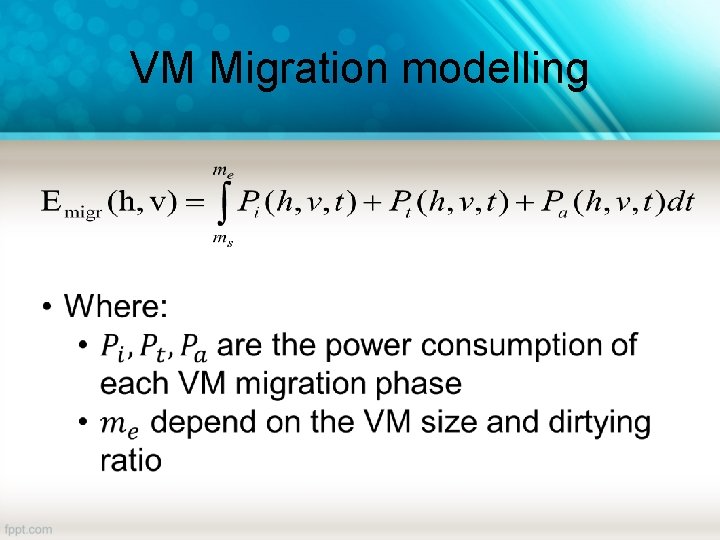

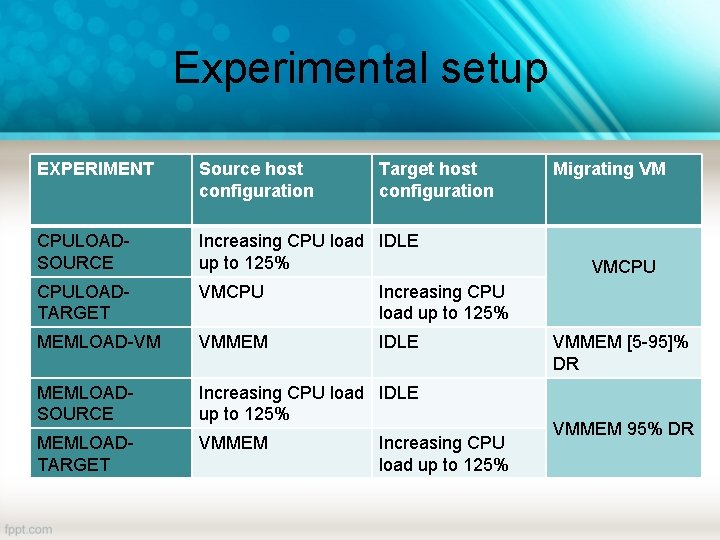

CPU model • Figure: power draw vs usage, CPU (y-axis: power [W], up to 900; x-axis: CPU load, up to 100%; series: AMD Opteron, Intel Xeon) • Strong change of trend at the same level of load

Piecewise linear modelling for CPU •

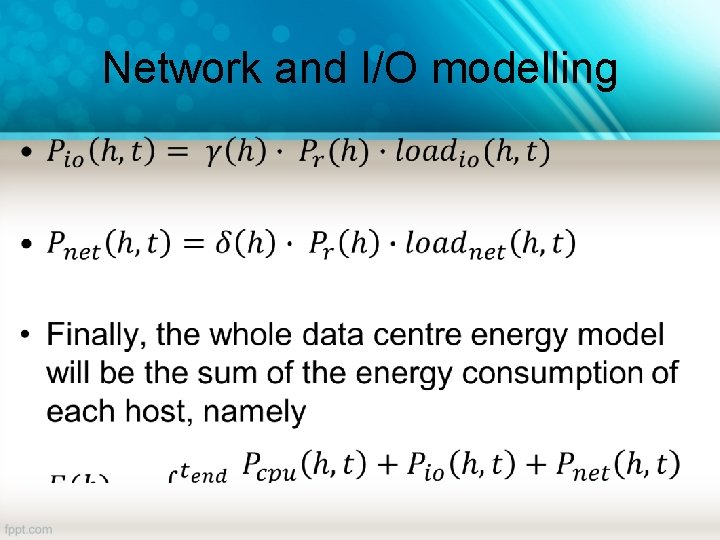

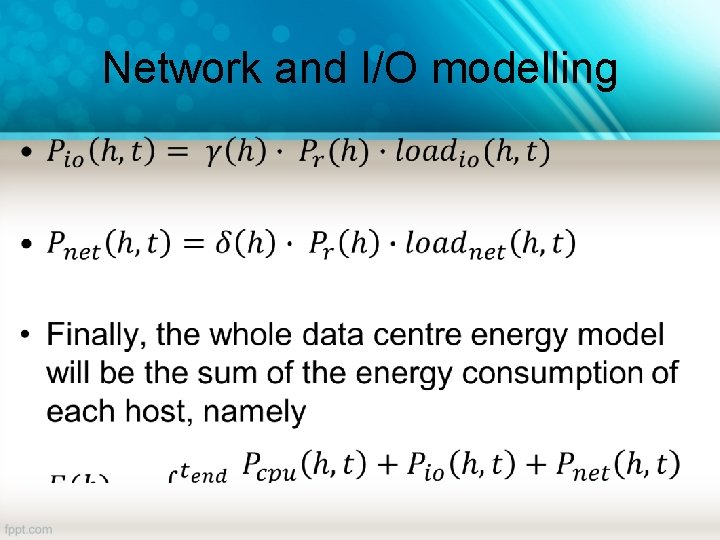
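The piecewise linear idea for the CPU can be sketched as follows. This is a minimal illustration, not the authors' formulation: it assumes exactly two linear segments that meet at a single load breakpoint, and the function name, the parameter names and all wattage values are hypothetical.

```python
def piecewise_linear_power(load, p_idle, p_break, p_max, break_load):
    """Power draw [W] for a CPU load in [0, 1], using two linear segments.

    Assumes 0 < break_load < 1. All parameters are illustrative assumptions.
    """
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be between 0 and 1")
    if load <= break_load:
        # Low-load segment: from idle power up to the power at the breakpoint.
        return p_idle + (p_break - p_idle) * load / break_load
    # High-load segment: from the breakpoint power up to full-load power.
    return p_break + (p_max - p_break) * (load - break_load) / (1.0 - break_load)


# Hypothetical parameters, chosen only for illustration (not measured values).
PARAMS = dict(p_idle=500.0, p_break=800.0, p_max=900.0, break_load=0.5)

for load in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"{load:.0%}: {piecewise_linear_power(load, **PARAMS):.1f} W")
```

The breakpoint plays the role of the "strong change of trend" seen in the measurements: a single line fitted over the whole load range cannot follow a slope that changes at that point, whereas two segments can.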

Network and I/O modelling •

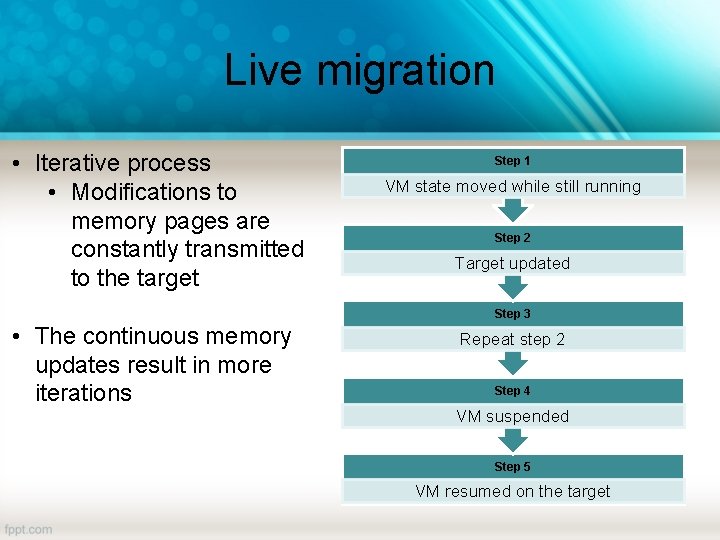

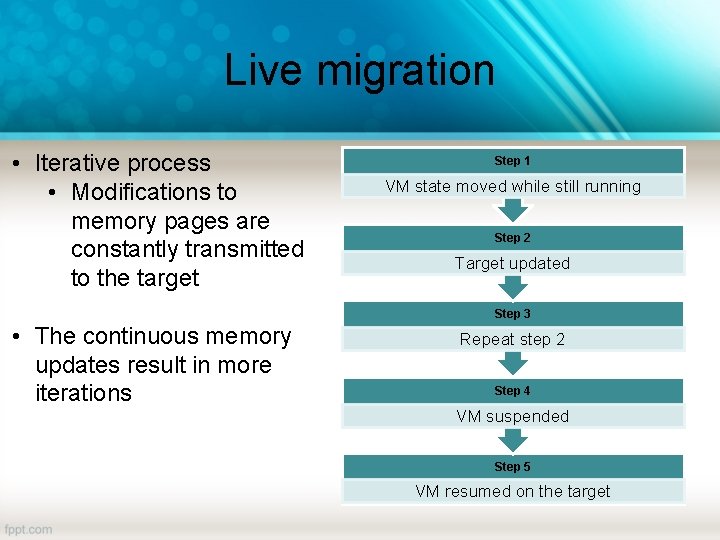

Live migration • Iterative process • Modifications to memory pages are constantly transmitted to the target • The continuous memory updates result in more iterations • Step 1: VM state moved while still running • Step 2: Target updated • Step 3: Repeat step 2 • Step 4: VM suspended • Step 5: VM resumed on the target
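The iterative process can be illustrated with a small simulation. This is a simplified sketch under stated assumptions, not the model from the slides: it assumes that a fixed fraction of the pages sent in one round is dirtied again during that round, that iteration stops below a hypothetical size threshold or after a hypothetical round cap, and that bandwidth is constant. All names and values are illustrative.

```python
def simulate_precopy(mem_mb, bandwidth_mb_s, dirty_fraction,
                     max_rounds=30, stop_mb=50.0):
    """Return (rounds, total_mb_sent, downtime_s) for a simplified pre-copy run.

    dirty_fraction: assumed share of the pages sent in a round that are
    dirtied again while that round is in progress (0 <= dirty_fraction < 1).
    """
    to_send = mem_mb      # Step 1: the full VM state is sent while the VM runs.
    total_sent = 0.0
    rounds = 0
    while rounds < max_rounds and to_send > stop_mb:
        total_sent += to_send
        rounds += 1
        # Steps 2-3: pages dirtied during this round are re-sent in the next one.
        to_send = to_send * dirty_fraction
    # Steps 4-5: the VM is suspended, the remaining pages are sent,
    # and the VM is resumed on the target.
    total_sent += to_send
    downtime_s = to_send / bandwidth_mb_s
    return rounds, total_sent, downtime_s


# Hypothetical 4 GiB VM over a 100 MB/s link, low vs high dirtying.
low = simulate_precopy(4096.0, 100.0, dirty_fraction=0.05)
high = simulate_precopy(4096.0, 100.0, dirty_fraction=0.95)
print("low dirtying :", low)
print("high dirtying:", high)
```

With these assumed values the low-dirtying run converges after 2 rounds, while the high-dirtying run reaches the cap of 30 rounds and still has a large remainder to send during the suspension, which matches the observation that continuous memory updates lead to more iterations.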

Migration energy phases • Normal execution phase – Each actor performs its normal operation (no migration decision taken yet) • Initiation phase – From the issuing of the migration request to the actual start of the transfer • Transfer phase – The state of the VM is transferred over the network • Service activation phase – The VM is started on the target and the source releases the resources previously owned by the VM

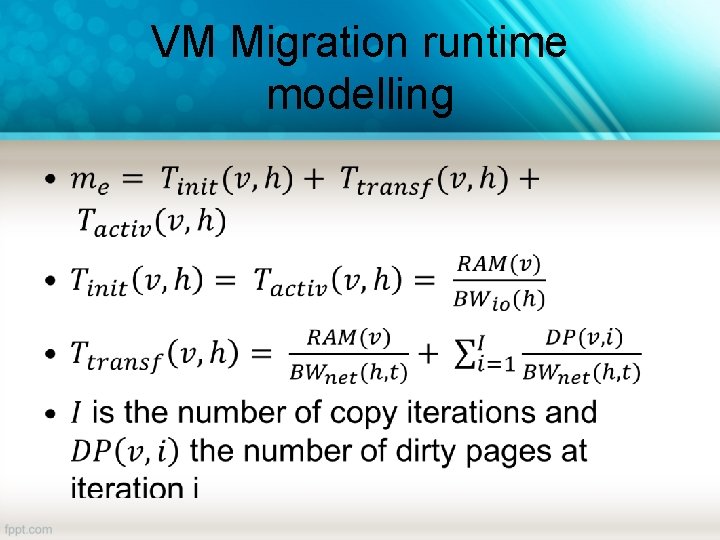

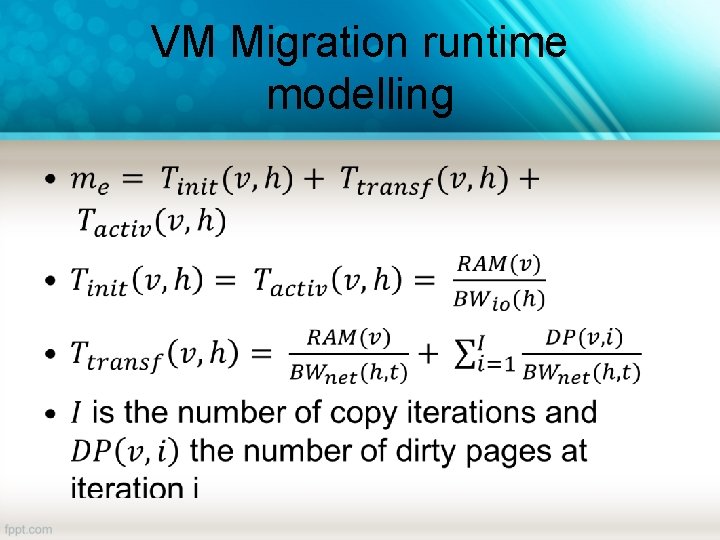

VM Migration modelling

Energy model • Initiation Phase • Transfer Phase • Activation Phase
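One natural way to read this three-phase decomposition is as a sum of per-phase energies, each being the power drawn over the duration of the phase. The notation below is an assumption introduced for illustration (the slide's own equations are not recoverable from the transcript): $P(t)$ is the instantaneous power draw and $t_{\phi}^{\mathrm{start}}$, $t_{\phi}^{\mathrm{end}}$ delimit phase $\phi$.

```latex
E_{\mathrm{migration}} = E_{\mathrm{initiation}} + E_{\mathrm{transfer}} + E_{\mathrm{activation}},
\qquad
E_{\phi} = \int_{t_{\phi}^{\mathrm{start}}}^{t_{\phi}^{\mathrm{end}}} P(t)\,\mathrm{d}t
\quad \text{for } \phi \in \{\mathrm{initiation}, \mathrm{transfer}, \mathrm{activation}\}
```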

VM Migration runtime modelling •

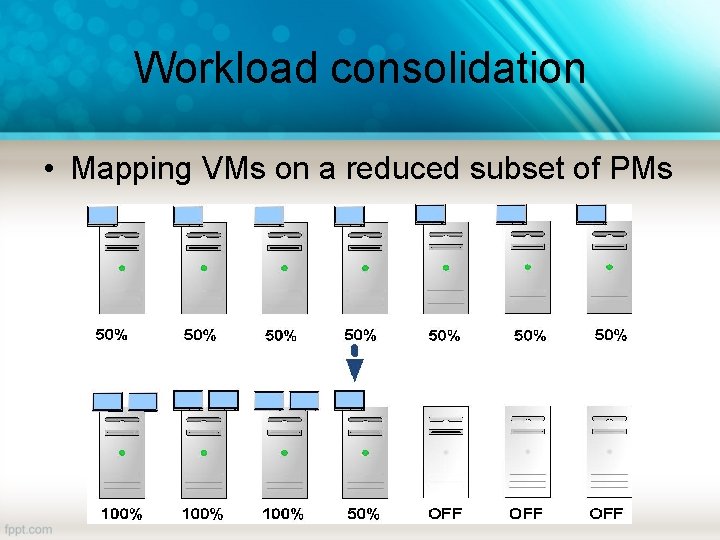

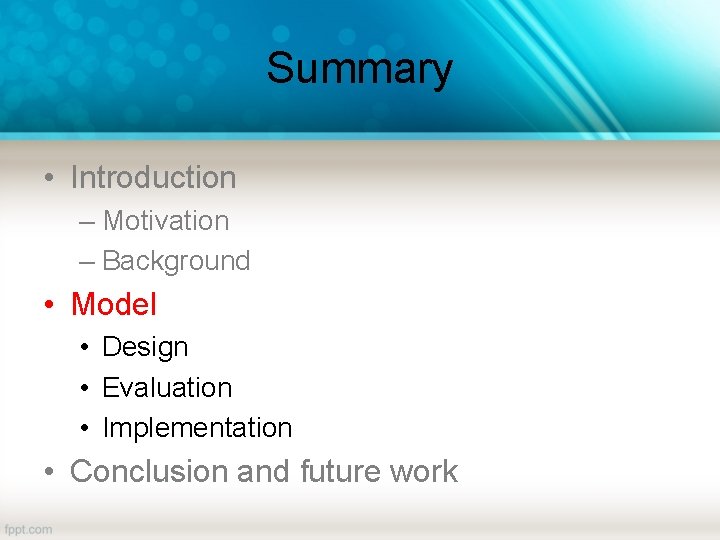

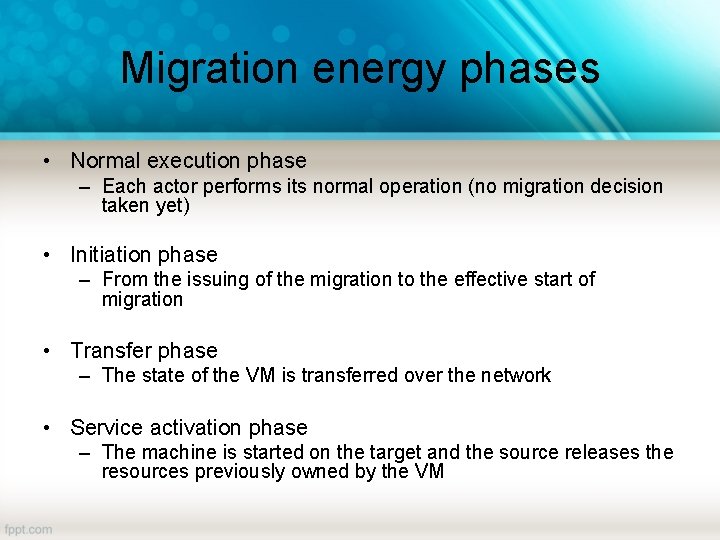

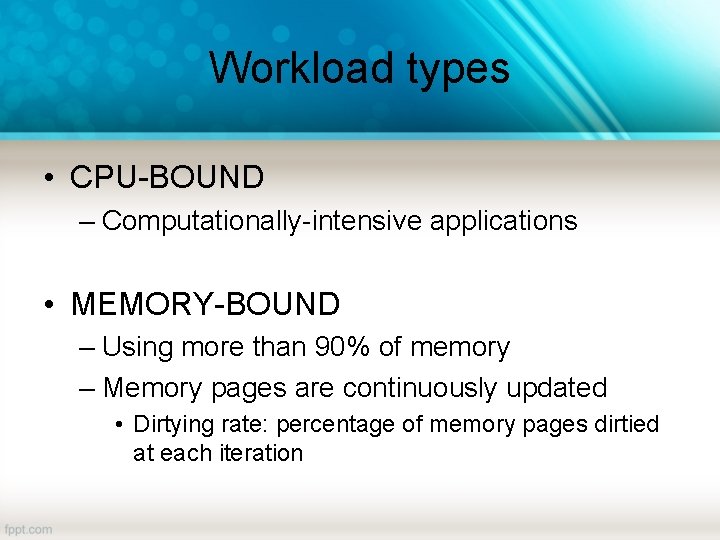

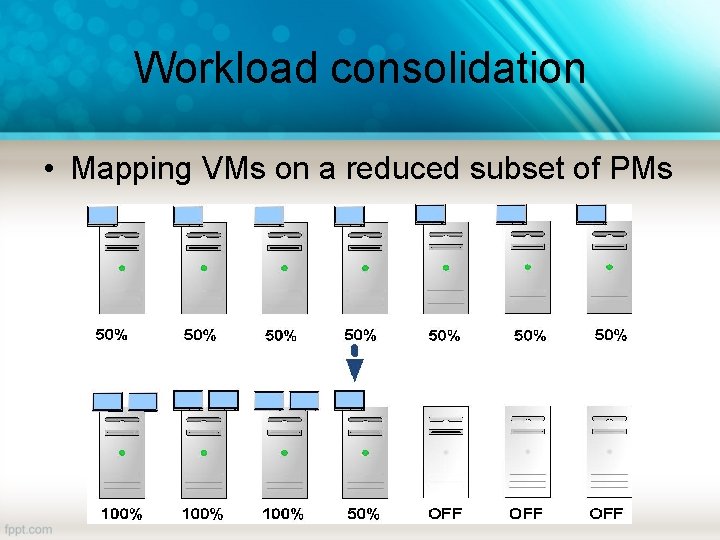

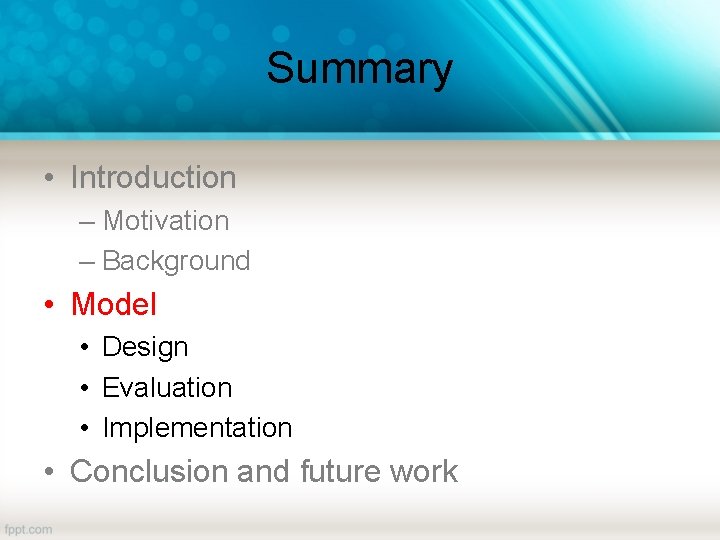

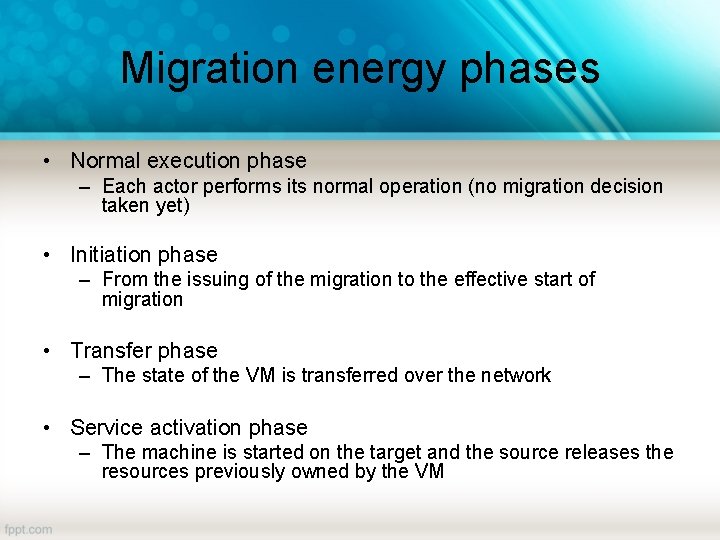

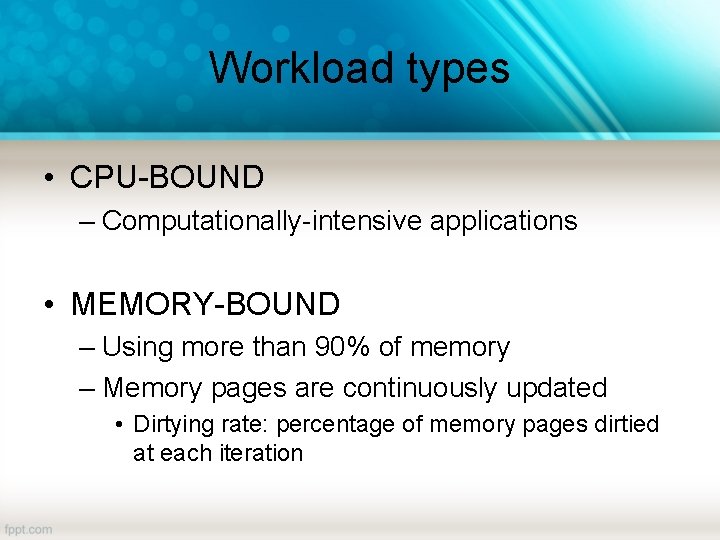

Workload types • CPU-BOUND – Computationally-intensive applications • MEMORY-BOUND – Using more than 90% of memory – Memory pages are continuously updated • Dirtying rate: percentage of memory pages dirtied at each iteration

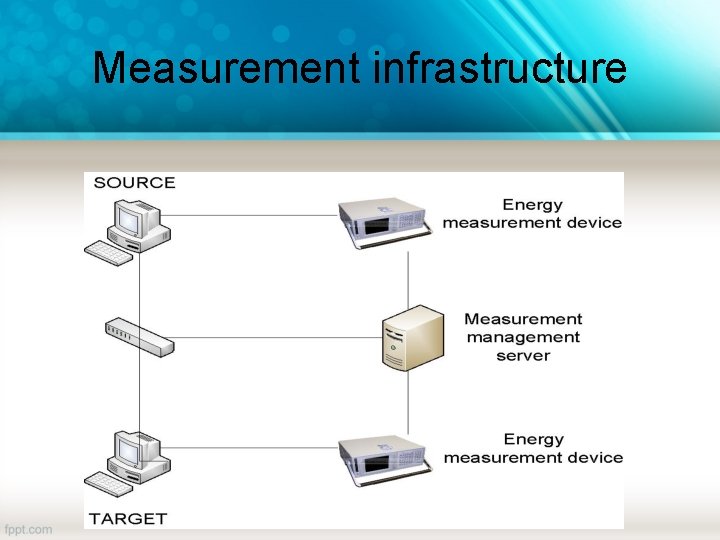

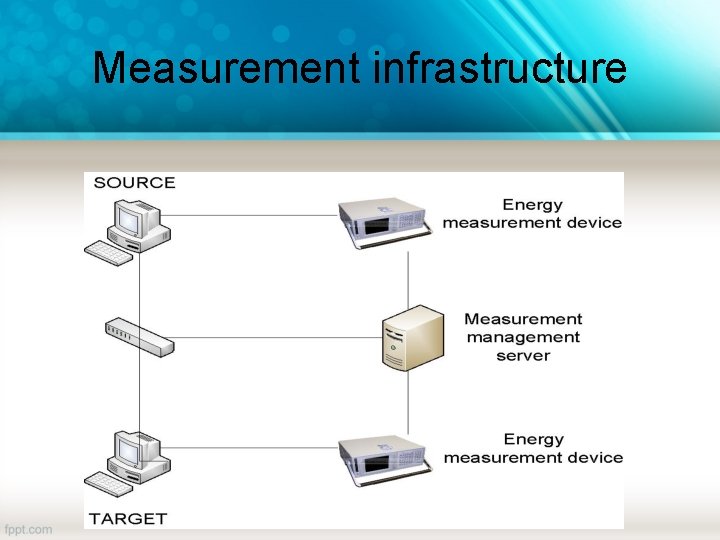

![Experimental setup: VM instances](https://slidetodoc.com/presentation_image_h2/d0eca4609d2c73945b96600d72f18334/image-19.jpg)

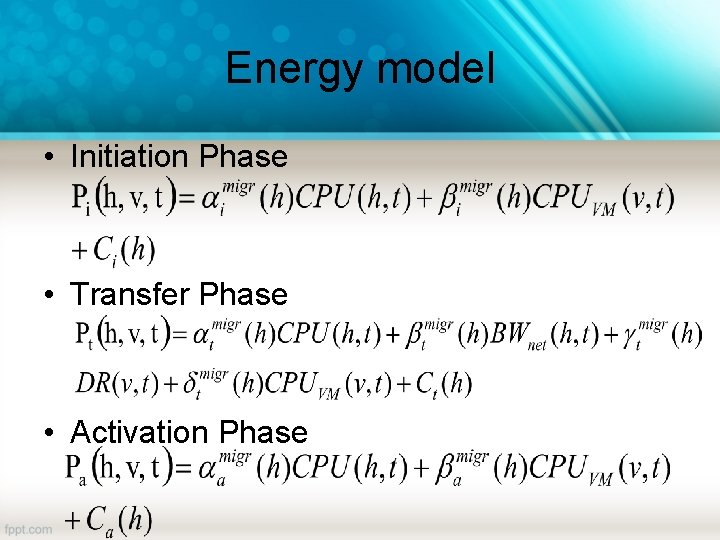

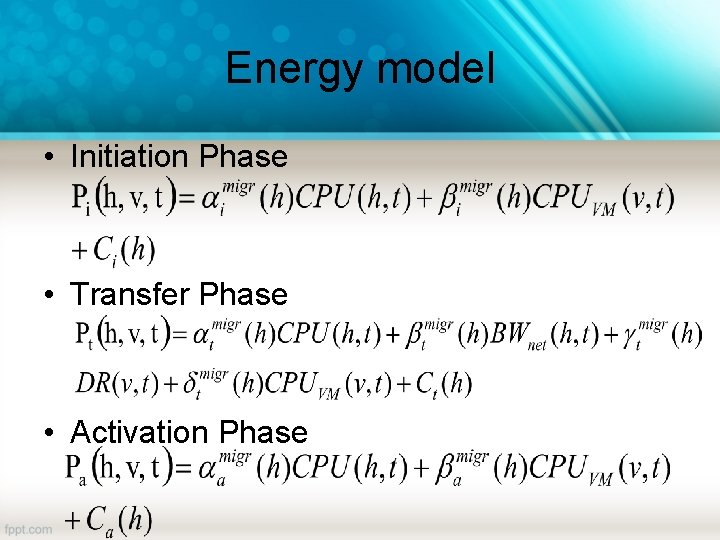

Experimental setup • VM instances (columns: instance name, CPU [%], memory [%], dirtying rate [%]) – VMCPU: 100, 5, 5 – VMMEM: 100, [5-95]

Experimental setup

| Experiment | Source host configuration | Target host configuration | Migrating VM |
|---|---|---|---|
| CPULOAD-SOURCE | Increasing CPU load up to 125% | IDLE | VMCPU |
| CPULOAD-TARGET | VMCPU | Increasing CPU load up to 125% | VMCPU |
| MEMLOAD-VM | VMMEM | IDLE | VMMEM, [5-95]% DR |
| MEMLOAD-SOURCE | Increasing CPU load up to 125% | IDLE | VMMEM, 95% DR |
| MEMLOAD-TARGET | VMMEM | Increasing CPU load up to 125% | VMMEM, 95% DR |

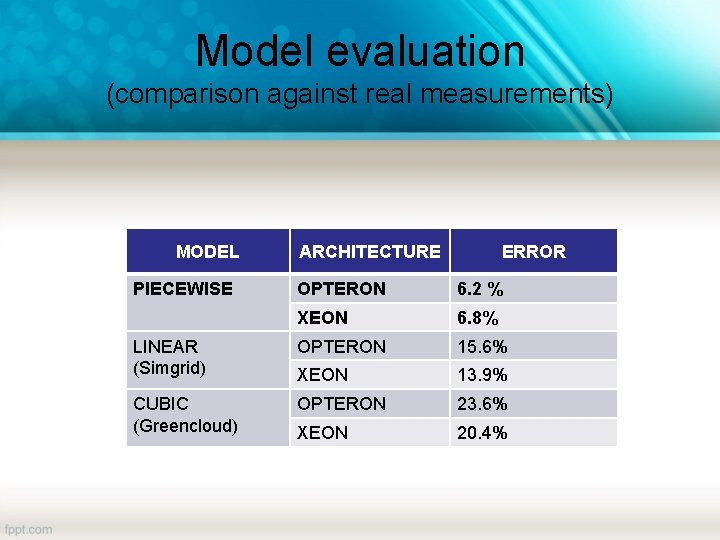

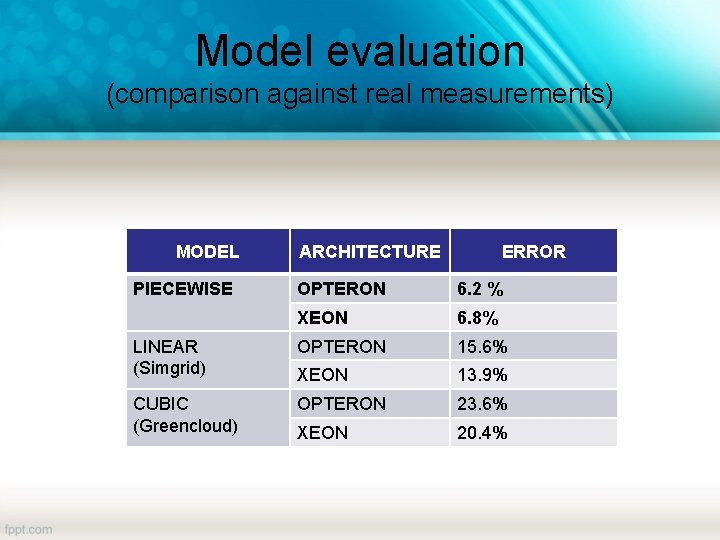

Measurement infrastructure

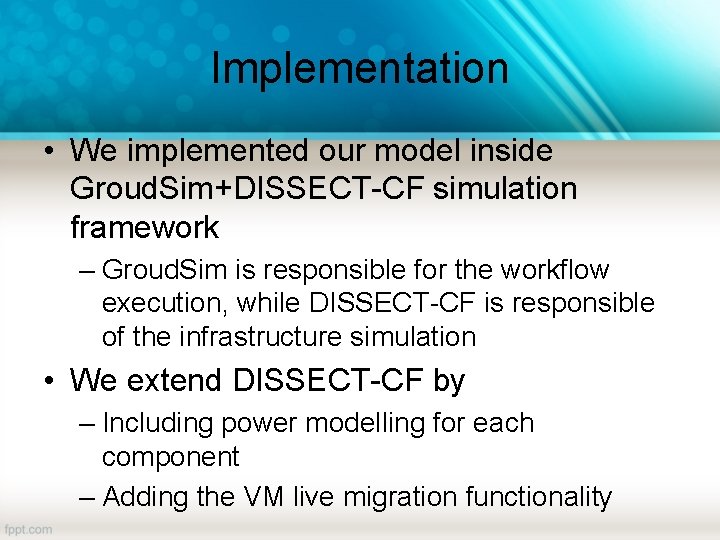

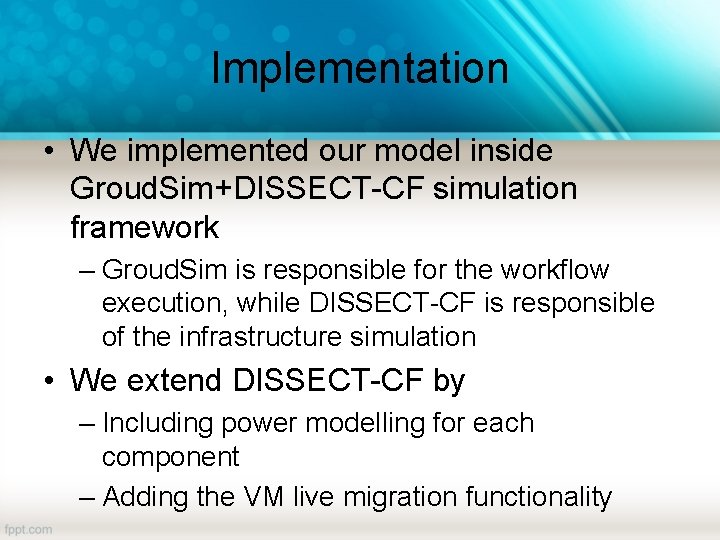

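Model evaluation (comparison against real measurements)

| Model | Architecture | Error |
|---|---|---|
| Piecewise | Opteron | 6.2% |
| Piecewise | Xeon | 6.8% |
| Linear (SimGrid) | Opteron | 15.6% |
| Linear (SimGrid) | Xeon | 13.9% |
| Cubic (GreenCloud) | Opteron | 23.6% |
| Cubic (GreenCloud) | Xeon | 20.4% |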

Implementation • We implemented our model inside the GroudSim+DISSECT-CF simulation framework – GroudSim is responsible for the workflow execution, while DISSECT-CF is responsible for the infrastructure simulation • We extend DISSECT-CF by – Including power modelling for each component – Adding the VM live migration functionality

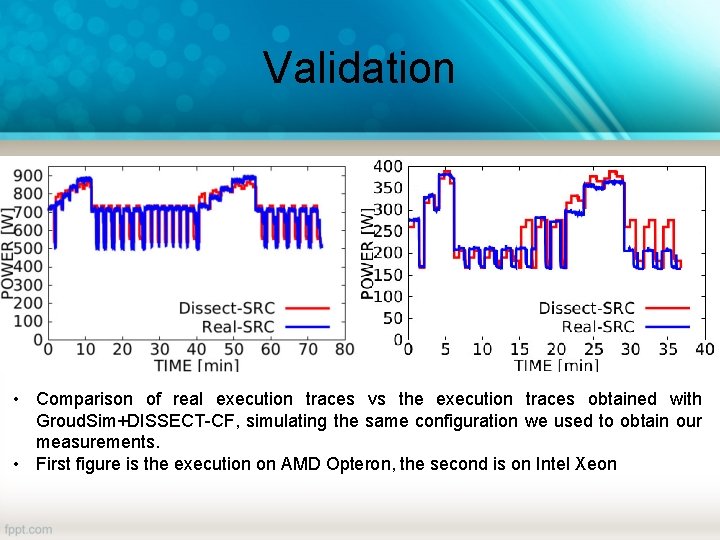

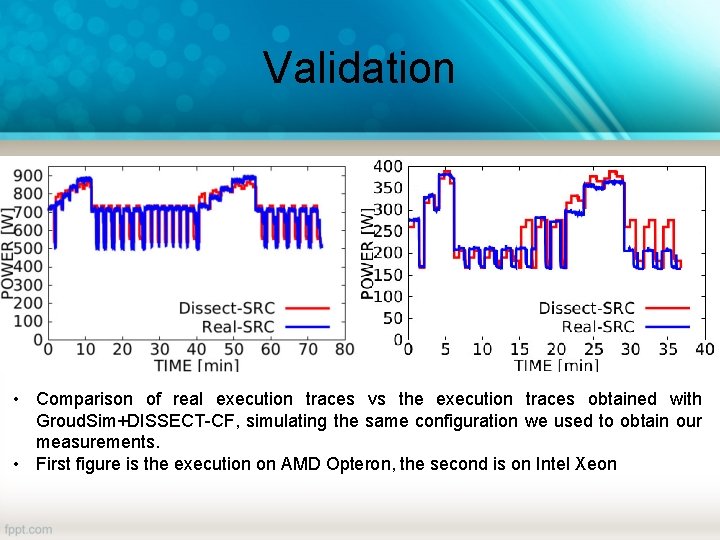

Validation • Comparison of real execution traces vs the execution traces obtained with GroudSim+DISSECT-CF, simulating the same configuration we used to obtain our measurements • The first figure shows the execution on AMD Opteron, the second on Intel Xeon

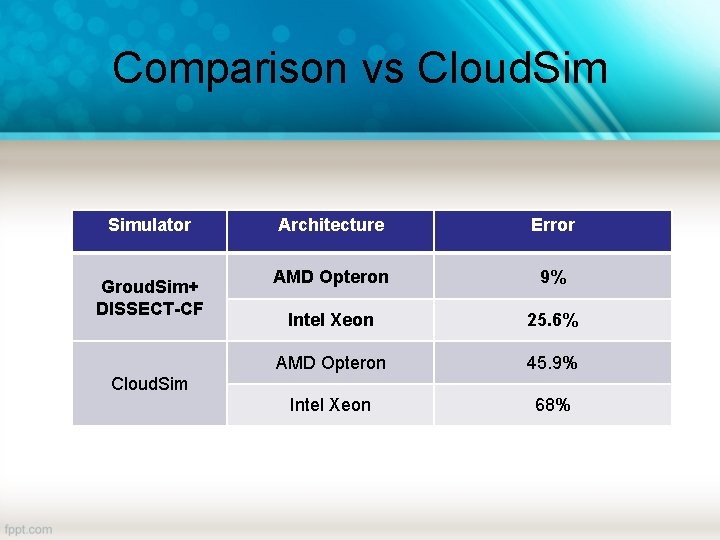

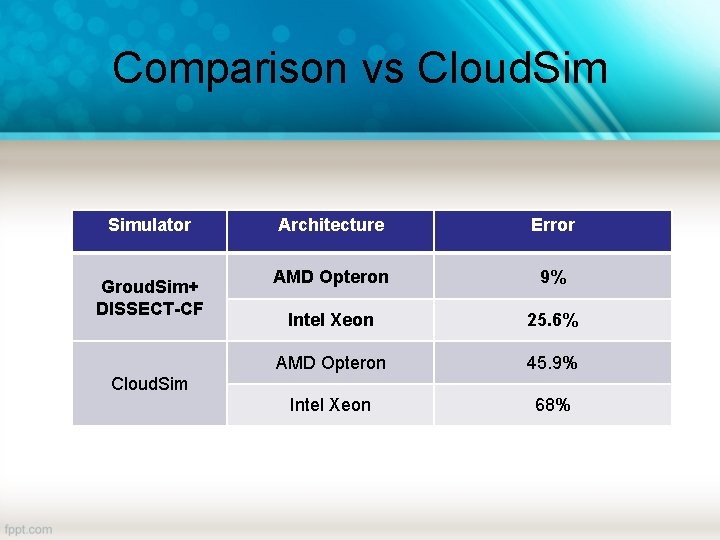

Comparison vs CloudSim

| Simulator | Architecture | Error |
|---|---|---|
| GroudSim+DISSECT-CF | AMD Opteron | 9% |
| GroudSim+DISSECT-CF | Intel Xeon | 25.6% |
| CloudSim | AMD Opteron | 45.9% |
| CloudSim | Intel Xeon | 68% |

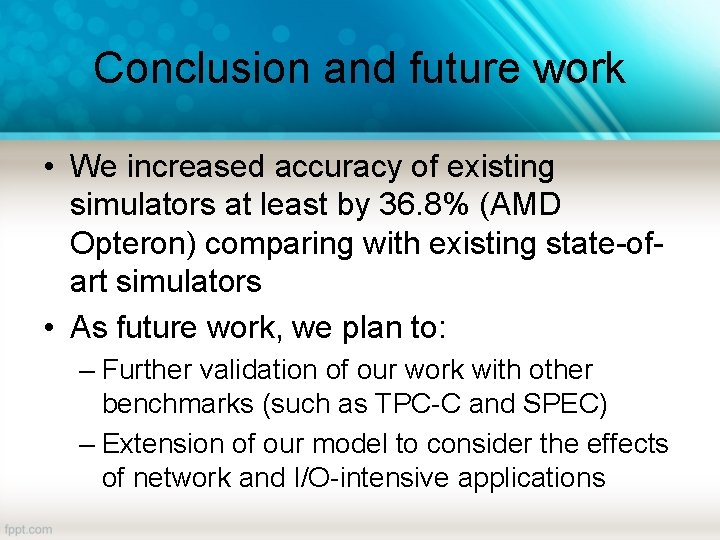

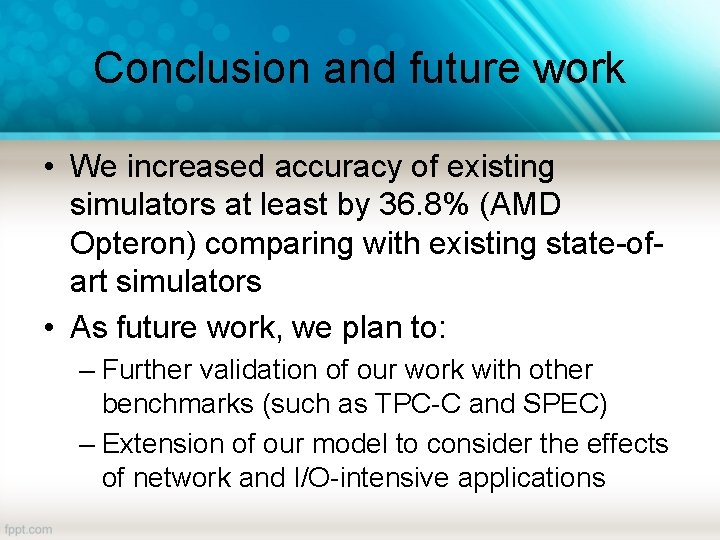

Conclusion and future work • We improved accuracy by at least 36.8% (AMD Opteron) compared with existing state-of-the-art simulators • As future work, we plan to: – Further validate our work with other benchmarks (such as TPC-C and SPEC) – Extend our model to consider the effects of network- and I/O-intensive applications

Thanks for your attention! vincenzo@dps.uibk.ac.at