An Approach to Measure Java Code Quality in Reuse Environment


An Approach to Measure Java Code Quality in Reuse Environment

Aline Timóteo; advisor: Silvio Meira; UFPE, Federal University of Pernambuco; alt.timoteo@gmail.com

Summary
- Motivation
- Background
  - Metrics
- An Approach to Measure Java Code Quality
- Main Contributions
- Status

Motivation

Motivation
- Reuse benefits
  - Productivity
  - Cost
  - Quality
- Reuse is a competitive advantage!
- Reuse environment [Frakes, 1994]
  - Process
  - Metrics
  - Tools
    - Repository
    - Search engine
    - Domain tools
    - …

![Problem](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-5.jpg)

Problem
- Component repositories promote reuse success [Griss, 1994]
- Must artifact quality be assured by the organization that maintains a repository? [Seacord, 1999]
- How can the reuse of low-quality artifacts be minimized?

Background

Metrics
- “Software metrics is a method to quantify attributes in software processes, products and projects” [Daskalantonakis, 1992]
- Metrics timeline
  - Age 1: before 1991, when the main focus was on metrics based on code complexity
  - Age 2: after 1992, when the main focus was on metrics based on object-oriented (OO) concepts

- Age 1: Complexity
- Age 2: Object Oriented

![Most Referenced Metrics](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-9.jpg)

Most Referenced Metrics
- LOC
- Cyclomatic Complexity [McCabe, 1976]
- Chidamber and Kemerer Metrics [Chidamber, 1994]
- Lorenz and Kidd Metrics [Lorenz, 1994]
- MOOD Metrics [Brito, 1994]
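LOC heads the list, but it has several competing definitions. As a minimal sketch, assuming LOC counts non-blank lines that are not comment-only lines, a counter over Java source lines could look like this. The class name `LocCounter` is hypothetical, and comment detection is a simple prefix heuristic rather than a real parser.

```java
import java.util.List;

/**
 * Hypothetical LOC counter: counts non-blank lines that are not comment-only.
 * Comment-only lines are detected by their leading "//", "/*" or "*" (a heuristic).
 */
final class LocCounter {

    private LocCounter() {}

    static int countLoc(List<String> sourceLines) {
        int loc = 0;
        for (String line : sourceLines) {
            String trimmed = line.strip();
            if (trimmed.isEmpty()) {
                continue; // blank line
            }
            if (trimmed.startsWith("//") || trimmed.startsWith("/*") || trimmed.startsWith("*")) {
                continue; // comment-only line (heuristic)
            }
            loc++;
        }
        return loc;
    }

    public static void main(String[] args) {
        List<String> src = List.of(
            "class Demo {",
            "    /**",
            "     * Starts a worker thread",
            "     */",
            "    void start() {",
            "",
            "        new Thread(() -> {}).start();",
            "    }",
            "}");
        System.out.println(countLoc(src)); // prints 5
    }
}
```

Counting rules like these are exactly the kind of "different set of metrics implemented" problem discussed next: two tools with different comment rules report different LOC for the same file.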

![Problems related to Metrics](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-10.jpg)

Problems related to Metrics [Ince, 1988 and Briand, 2002]
- Metrics validation
  - Theoretical validation
    - Measurement goal
    - Experimental hypothesis
    - Environment or context
  - Empirical validation
- Metrics automation
  - Different sets of metrics implemented
  - Poor documentation
  - Quality attributes x metrics

An Approach to Measure Java Code Quality

An Approach to Measure Java Code Quality
- Quality Attributes x Metrics
- Metrics Selection and Specification
- Quality Attributes Measurement

![Quality in a Reuse Environment (ISO 9126)](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-13.jpg)

Quality in a Reuse Environment [Etzkorn, 2001]
- ISO 9126

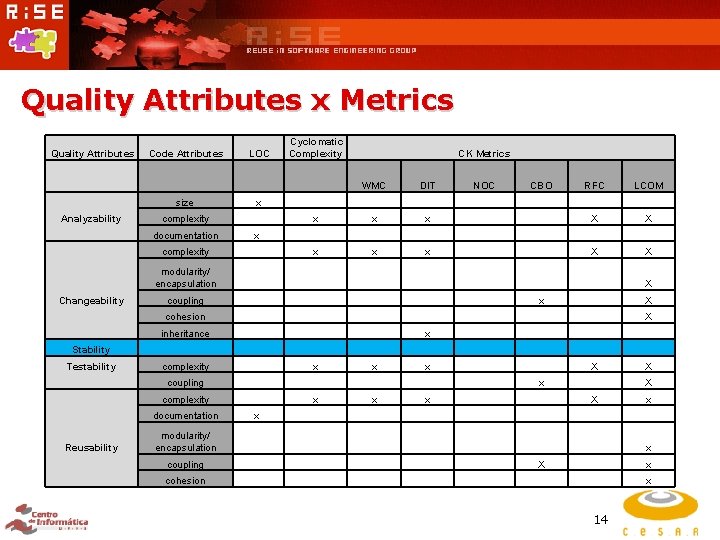

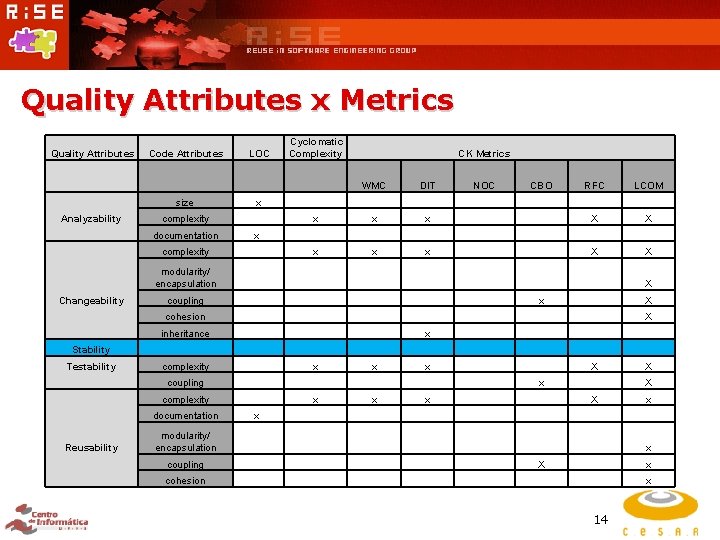

Quality Attributes x Metrics

Metrics considered: LOC, Cyclomatic Complexity, and the CK metrics WMC, DIT, NOC, CBO, RFC and LCOM.

Quality attributes and the code attributes used to assess them:
- Analyzability: size, complexity, documentation
- Changeability: complexity, modularity/encapsulation, coupling, cohesion, inheritance
- Stability
- Testability: complexity, coupling
- Reusability: complexity, documentation, modularity/encapsulation, coupling, cohesion

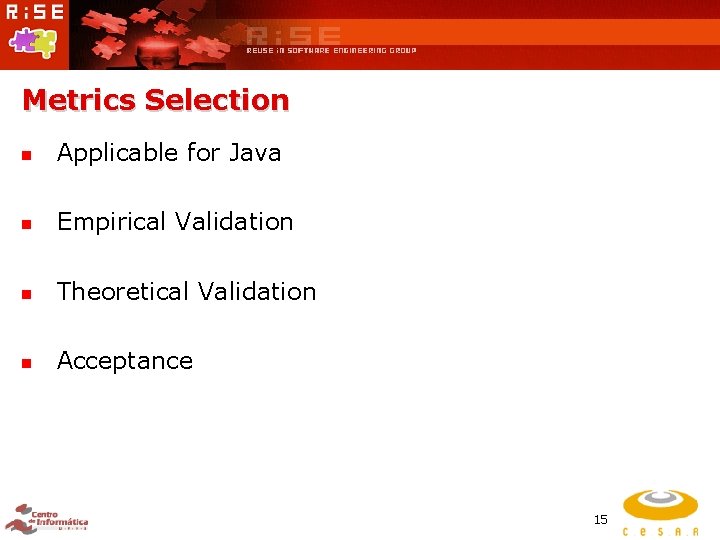

Metrics Selection
- Applicable to Java
- Empirical validation
- Theoretical validation
- Acceptance

![Metrics Selection: McCabe Metric](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-16.jpg)

Metrics Selection
- McCabe Metric [McCabe, 1976]
  - Theoretical validation, according to graph theory
  - Technology independence
  - Empirical validation
  - Acceptance [Refactorit, 2001; Metrics, 2005; JHawk, 2007]
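McCabe's metric rests on graph theory: for a control-flow graph with E edges, N nodes and P connected components, V(G) = E - N + 2P. The sketch below only shows that arithmetic; building the control-flow graph from Java source is not shown, and the class and method names are hypothetical.

```java
/**
 * Sketch of McCabe's cyclomatic complexity: V(G) = E - N + 2P,
 * where E = edges, N = nodes, P = connected components (1 for a single method).
 */
final class CyclomaticComplexity {

    private CyclomaticComplexity() {}

    static int fromGraph(int edges, int nodes, int components) {
        if (edges < 0 || nodes <= 0 || components <= 0) {
            throw new IllegalArgumentException("invalid control-flow graph size");
        }
        return edges - nodes + 2 * components;
    }

    /** Shortcut for a single structured method with binary decisions: decision points + 1. */
    static int fromDecisionPoints(int decisionPoints) {
        return decisionPoints + 1;
    }

    public static void main(String[] args) {
        // One if/else: nodes {entry, then, else, exit} -> N = 4;
        // edges entry->then, entry->else, then->exit, else->exit -> E = 4.
        System.out.println(fromGraph(4, 4, 1));    // prints 2
        System.out.println(fromDecisionPoints(1)); // prints 2
    }
}
```

Both forms agree for structured single-entry, single-exit methods, which is why tools usually count decision points instead of building the full graph.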

![Metrics Selection: CK Metrics](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-17.jpg)

Metrics Selection
- CK Metrics [Chidamber, 1994]
  - Theoretical validation
  - Developed in an OO context
  - Empirical validation [Briand, 1994; Chidamber, 1998; Tang, 1999]
  - Acceptance [Refactorit, 2001; Metrics, 2005; JHawk, 2007]
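Among the CK metrics, Depth of Inheritance Tree (DIT) is the easiest to illustrate in Java, because the superclass chain is available through reflection with `Class.getSuperclass()`. This is a sketch under one assumed convention, that `java.lang.Object` has depth 0 (tools differ on this), and the class name is hypothetical.

```java
/**
 * Sketch: Depth of Inheritance Tree (DIT) via reflection.
 * Assumed convention: java.lang.Object has DIT 0, so a class that
 * directly extends Object has DIT 1.
 */
final class DepthOfInheritance {

    private DepthOfInheritance() {}

    static int dit(Class<?> type) {
        if (type.isInterface() || type.isPrimitive()) {
            throw new IllegalArgumentException("DIT is only defined here for classes: " + type);
        }
        int depth = 0;
        // Walk up the superclass chain until Object's (null) superclass is reached.
        for (Class<?> c = type.getSuperclass(); c != null; c = c.getSuperclass()) {
            depth++;
        }
        return depth;
    }

    public static void main(String[] args) {
        // Integer -> Number -> Object
        System.out.println(dit(Integer.class)); // prints 2
    }
}
```

Reflection only sees compiled classes on the classpath; NOC, CBO and RFC need a whole-system view (all subclasses, all call sites), which is why those are usually computed from source or bytecode analysis instead.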

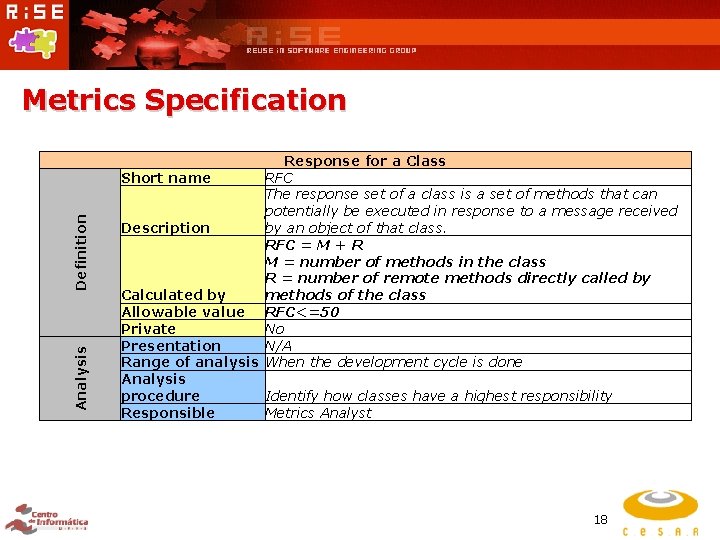

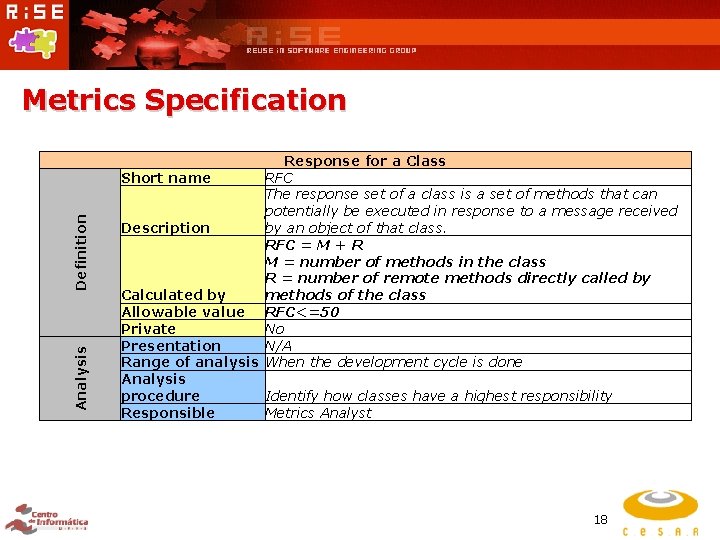

Metrics Specification

| Field | Value |
|---|---|
| Metric | Response for a Class |
| Short name | RFC |
| Description | The response set of a class is the set of methods that can potentially be executed in response to a message received by an object of that class. |
| Calculated by | RFC = M + R, where M = number of methods in the class and R = number of remote methods directly called by methods of the class |
| Allowable value | RFC <= 50 |
| Private | No |
| Presentation | N/A |
| Range of analysis | When the development cycle is done |
| Analysis procedure | Identify which classes have the highest responsibility |
| Responsible | Metrics Analyst |
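The RFC specification translates almost directly into code. This sketch assumes the method signatures declared in the class (M) and the distinct remote methods they call (R) have already been extracted; both are modelled as sets because the response set is a set. `ResponseForClass` and the signatures in `main` are hypothetical.

```java
import java.util.Set;

/**
 * Sketch of the RFC specification: RFC = M + R, allowable when RFC <= 50.
 * M and R are assumed to be pre-extracted sets of method signatures;
 * extracting them from source or bytecode is not shown.
 */
final class ResponseForClass {

    /** Upper bound from the specification's "Allowable value" field. */
    static final int ALLOWABLE_MAX = 50;

    private ResponseForClass() {}

    /** M = methods declared in the class, R = distinct remote methods they call directly. */
    static int rfc(Set<String> classMethods, Set<String> remoteMethodsCalled) {
        return classMethods.size() + remoteMethodsCalled.size();
    }

    static boolean isAllowable(int rfc) {
        return rfc <= ALLOWABLE_MAX;
    }

    public static void main(String[] args) {
        // Hypothetical class with two methods calling three distinct remote methods.
        Set<String> m = Set.of("open()", "close()");
        Set<String> r = Set.of("Socket.connect()", "Socket.close()", "Logger.info()");
        int value = rfc(m, r);
        System.out.println(value + " allowable=" + isAllowable(value)); // prints "5 allowable=true"
    }
}
```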

Quality Attributes Measurement (QAM)
- QAM = the number of metrics that have an allowable value
  - Heuristic: QAM >= number of metrics / 2
- Example: quality attribute Testability
  - Code attributes: complexity, coupling
  - CK metrics: WMC, DIT, CBO, RFC, LCOM
  - Max Testability = 5
  - Min Testability = 2.5, so 2.5 <= QAM <= 5
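The QAM count and its heuristic can be sketched as follows. Each metric is modelled with an upper allowable limit: that matches RFC <= 50 from the specification, but it is an assumption for the other metrics, and every limit except RFC's is a hypothetical placeholder. The type names are illustrative.

```java
import java.util.List;

/**
 * Sketch of Quality Attributes Measurement (QAM):
 * QAM = number of metrics whose value is within its allowable limit;
 * heuristic threshold: QAM >= numberOfMetrics / 2.
 */
final class QualityAttributeMeasurement {

    /** A measured metric and its assumed upper allowable limit. */
    record Measurement(String metric, double value, double allowableMax) {
        boolean isAllowable() {
            return value <= allowableMax;
        }
    }

    private QualityAttributeMeasurement() {}

    static int qam(List<Measurement> measurements) {
        int count = 0;
        for (Measurement m : measurements) {
            if (m.isAllowable()) {
                count++;
            }
        }
        return count;
    }

    static boolean meetsHeuristic(List<Measurement> measurements) {
        // Divide as double so five metrics give a minimum of 2.5.
        return qam(measurements) >= measurements.size() / 2.0;
    }

    public static void main(String[] args) {
        List<Measurement> testability = List.of(
            new Measurement("WMC", 14, 20),   // hypothetical limit
            new Measurement("DIT", 3, 5),     // hypothetical limit
            new Measurement("CBO", 9, 14),    // hypothetical limit
            new Measurement("RFC", 53, 50),   // limit from the RFC specification
            new Measurement("LCOM", 80, 75)); // hypothetical limit
        System.out.println(qam(testability) + " " + meetsHeuristic(testability)); // prints "3 true"
    }
}
```

Since QAM is a count, a five-metric attribute such as Testability passes the 2.5 threshold from QAM = 3 upward.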

Approach Automation

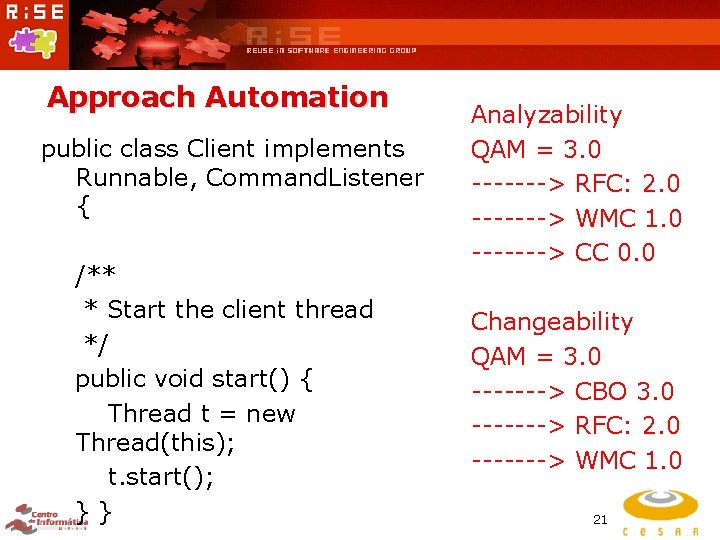

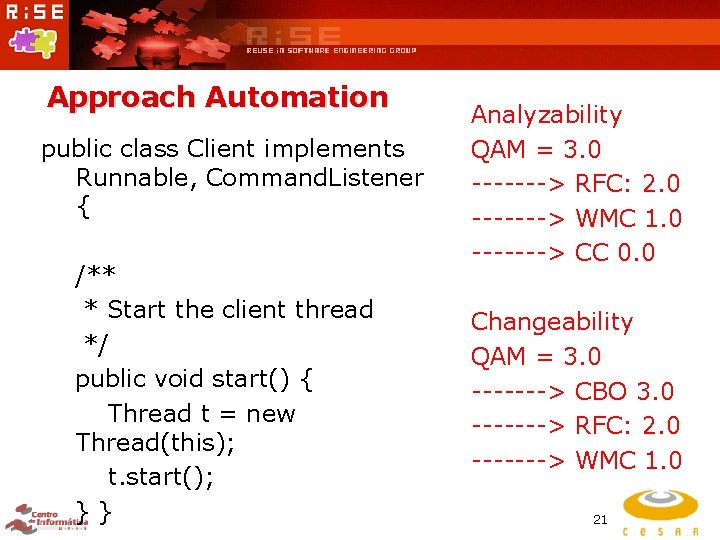

Approach Automation

```java
public class Client implements Runnable, CommandListener {
    /**
     * Start the client thread
     */
    public void start() {
        Thread t = new Thread(this);
        t.start();
    }
}
```

```
Analyzability QAM = 3.0
-------> RFC: 2.0
-------> WMC 1.0
-------> CC 0.0
Changeability QAM = 3.0
-------> CBO 3.0
-------> RFC: 2.0
-------> WMC 1.0
```

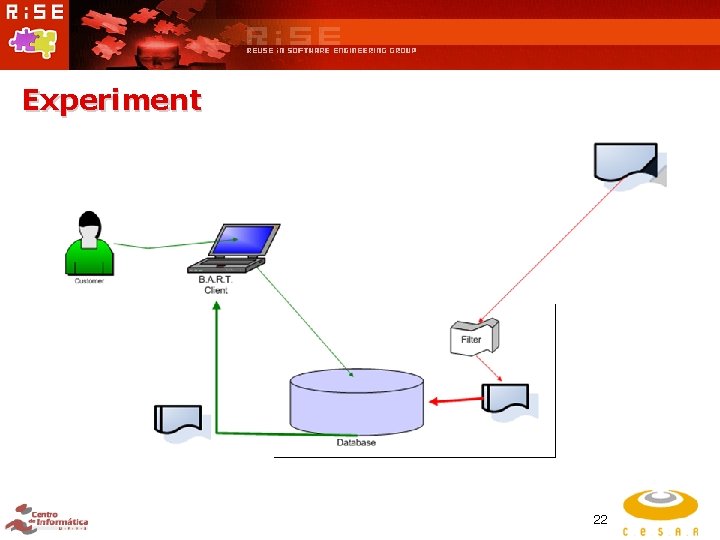

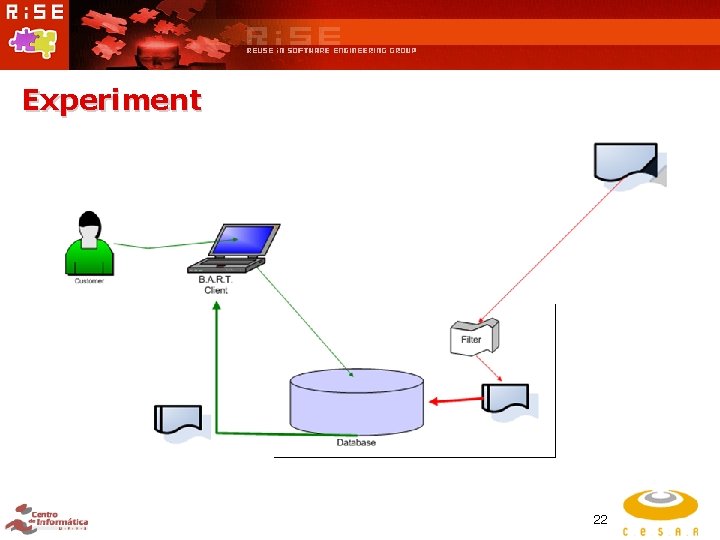

Experiment

Experiment
- Main question
  - Is the quality of the retrieved components better?
- Compare B.A.R.T. search results
  - Results before introducing the filter
  - Results after introducing the filter
- Apply a questionnaire to customers
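The "before" and "after" result sets differ only by a quality filter. Here is a hypothetical sketch of such a filter that keeps components whose QAM meets the QAM >= number of metrics / 2 heuristic. `SearchResult` and `QualityFilter` are illustrative types, not part of B.A.R.T., and the component names are made up.

```java
import java.util.List;

/**
 * Hypothetical quality filter over repository search results:
 * keeps components whose QAM meets the heuristic QAM >= metricCount / 2.
 */
final class QualityFilter {

    /** Illustrative search hit carrying a precomputed QAM. */
    record SearchResult(String component, int qam, int metricCount) {
        boolean passes() {
            return qam >= metricCount / 2.0;
        }
    }

    private QualityFilter() {}

    /** Returns the passing results, preserving the original ranking order. */
    static List<SearchResult> apply(List<SearchResult> results) {
        return results.stream().filter(SearchResult::passes).toList();
    }

    public static void main(String[] args) {
        List<SearchResult> before = List.of(
            new SearchResult("ParserA", 4, 5),
            new SearchResult("ParserB", 2, 5),
            new SearchResult("ParserC", 3, 5));
        List<SearchResult> after = apply(before);
        System.out.println(before.size() + " -> " + after.size()); // prints "3 -> 2"
    }
}
```

Filtering rather than re-ranking keeps the comparison simple: every "after" result also appears in the "before" list, so any difference in questionnaire answers can be attributed to the removed low-QAM components.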

Main Contributions
- Introduce quality analysis in a repository
  - Reduce the propagation of code problems
  - Higher reliability

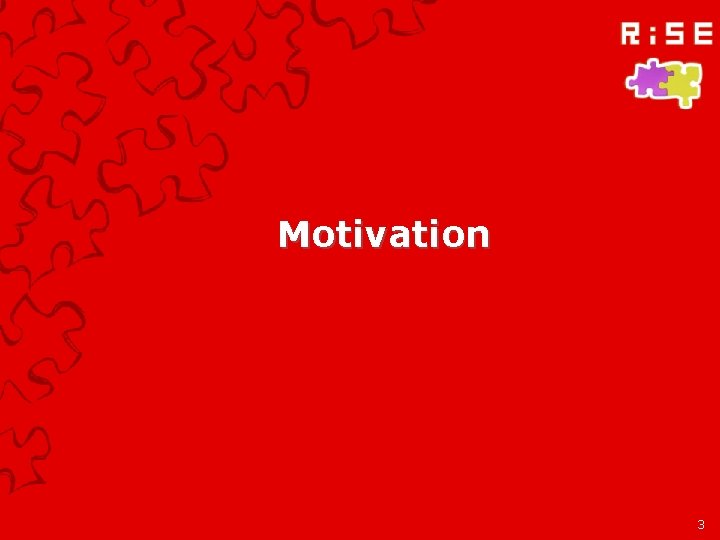

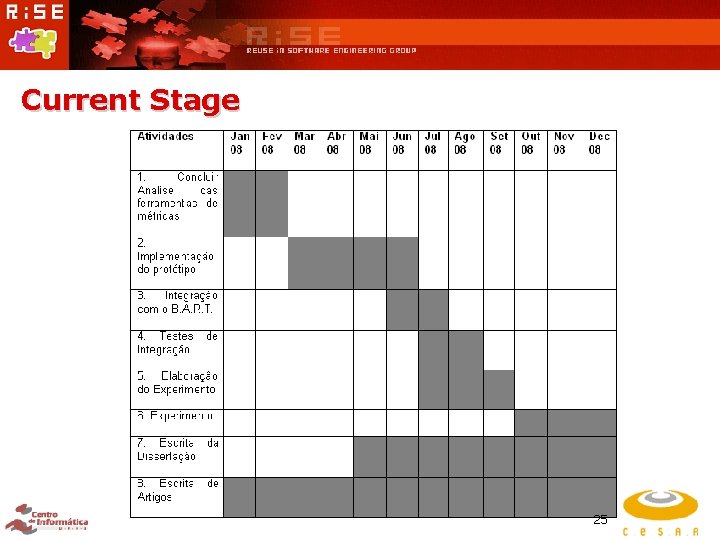

Current Stage

![References](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-26.jpg)

References
- [Frakes, 1994] W. B. Frakes and S. Isoda, "Success Factors of Systematic Software Reuse," IEEE Software, vol. 11, pp. 14-19, 1994.
- [Griss, 1994] M. L. Griss, "Software Reuse Experience at Hewlett-Packard," presented at the 16th International Conference on Software Engineering (ICSE), Sorrento, Italy, 1994.
- [Garcia, 2006] V. C. Garcia, D. Lucrédio, F. A. Durão, E. C. R. Santos, E. S. Almeida, R. P. M. Fortes, and S. R. L. Meira, "From Specification to Experimentation: A Software Component Search Engine Architecture," presented at the 9th International Symposium on Component-Based Software Engineering (CBSE 2006), Mälardalen University, Västerås, Sweden, 2006.
- [Etzkorn, 2001] L. H. Etzkorn, W. E. Hughes Jr., and C. G. Davis, "Automated reusability quality analysis of OO legacy software," Information & Software Technology, vol. 43, no. 5, pp. 295-308, 2001.
- [Daskalantonakis, 1992] M. K. Daskalantonakis, "A Practical View of Software Measurement and Implementation Experiences Within Motorola," IEEE Transactions on Software Engineering, vol. 18, pp. 998-1010, 1992.
- [McCabe, 1976] T. J. McCabe, "A Complexity Measure," IEEE Transactions on Software Engineering, vol. SE-2, pp. 308-320, 1976.
- [Chidamber, 1994] S. R. Chidamber and C. F. Kemerer, "A Metrics Suite for Object Oriented Design," IEEE Transactions on Software Engineering, vol. 20, pp. 476-493, Piscataway, USA, 1994.
- [Lorenz, 1994] M. Lorenz and J. Kidd, "Object-Oriented Software Metrics: A Practical Guide," Englewood Cliffs, New Jersey, USA, 1994.
- [Brito, 1994] A. F. Brito and R. Carapuça, "Object-Oriented Software Engineering: Measuring and controlling the development process," 4th International Conference on Software Quality, USA, 1994.
- [Ince, 1988] D. C. Ince and M. J. Sheppard, "System design metrics: a review and perspective," Second IEE/BCS Conference, Liverpool, UK, pp. 23-27, 1988.
- [Briand, 2002] L. C. Briand, S. Morasca, and V. R. Basili, "An Operational Process for Goal-Driven Definition of Measures," IEEE Transactions on Software Engineering, vol. 28, pp. 1106-1125, 2002.
- [Morasca, 1989] S. Morasca, L. C. Briand, V. R. Basili, E. J. Weyuker, M. V. Zelkowitz, B. Kitchenham, S. Lawrence Pfleeger, and N. Fenton, "Towards a framework for software measurement validation," IEEE Transactions on Software Engineering, vol. 23, pp. 187-189, 1995.
- [Seacord, 1999] R. C. Seacord, "Software engineering component repositories," Technical report, Software Engineering Institute (SEI), 1999.

![References (continued)](https://slidetodoc.com/presentation_image/ca82ff9232f9d2775cc20911d7efdbae/image-27.jpg)

- [Refactorit, 2001] RefactorIt tool, online, last update: 01/2008, available: http://www.aqris.com/display/ap/RefactorIt
- [Jdepend, 2005] JDepend tool, online, last update: 03/2006, available: http://www.clarkware.com/software/JDepend.html
- [Metrics, 2005] Metrics Eclipse Plugin, online, last update: 07/2005, available: http://sourceforge.net/projects/metrics
- [JHawk, 2007] JHawk Eclipse Plugin, online, last update: 03/2007, available: http://www.virtualmachinery.com/jhawkprod.htm
