An Analysis of Parallel Mixing with Attacker-Controlled Inputs

![Theorem 1 Pr[s 1 -> s 1] = (1 -0)/((2 -1)) = 1 M Theorem 1 Pr[s 1 -> s 1] = (1 -0)/((2 -1)) = 1 M](https://slidetodoc.com/presentation_image/5a7fd6e10f72fa7e284a3343c30d10d7/image-9.jpg)

![Anonymity Metrics • Anon [Golle and Juels ‘ 04] • Entropy [SD’ 02, DSCP’ Anonymity Metrics • Anon [Golle and Juels ‘ 04] • Entropy [SD’ 02, DSCP’](https://slidetodoc.com/presentation_image/5a7fd6e10f72fa7e284a3343c30d10d7/image-10.jpg)

- Slides: 19

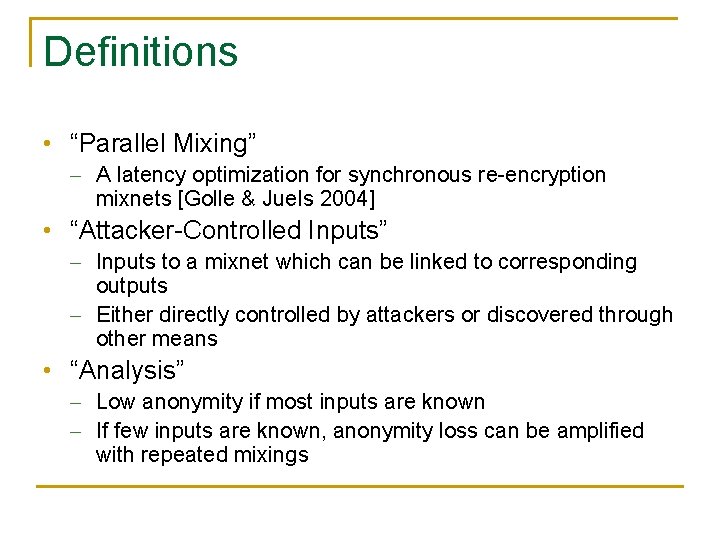

An Analysis of Parallel Mixing with Attacker-Controlled Inputs
Nikita Borisov, formerly of UC Berkeley

Definitions

- “Parallel mixing”: a latency optimization for synchronous re-encryption mixnets [Golle & Juels 2004]
- “Attacker-controlled inputs”: inputs to a mixnet that can be linked to their corresponding outputs
  - Either directly controlled by attackers or discovered through other means
- “Analysis”:
  - Anonymity is low if most inputs are known
  - If few inputs are known, the anonymity loss can be amplified by repeated mixings

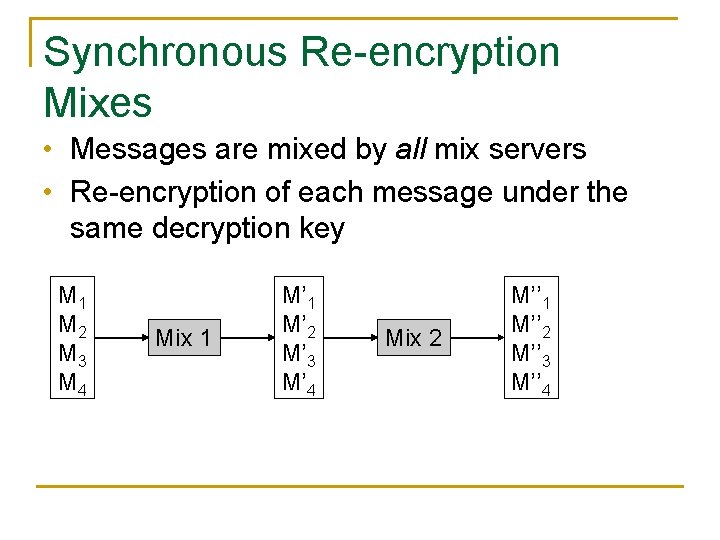

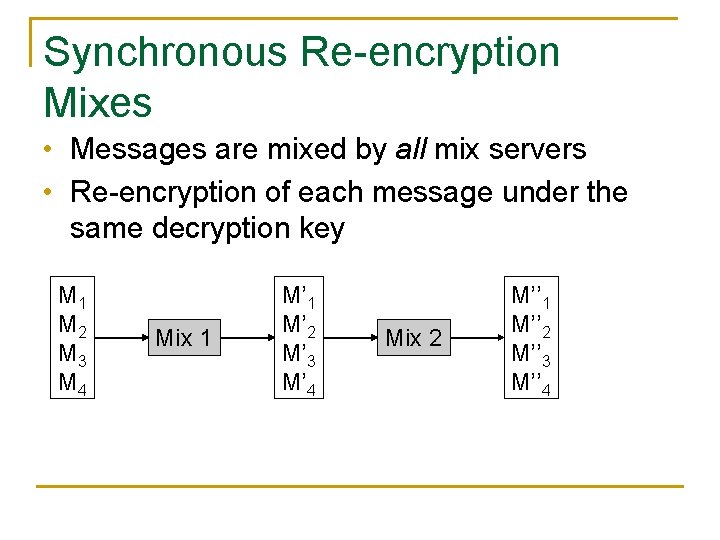

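Synchronous Re-encryption Mixes

- Every message is mixed by all mix servers in turn
- Each mix re-encrypts every message; the re-encrypted ciphertexts remain decryptable under the same decryption key

[Figure: inputs M1 through M4 pass through Mix 1, producing re-encrypted outputs M′, and then through Mix 2, producing M″.]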
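
To make re-encryption concrete, here is a minimal sketch of a two-mix synchronous cascade using textbook ElGamal over a toy group. The group parameters (P = 1019, Q = 509, G = 4) and every function name are our own illustrative assumptions, far too small for real use. The point is only that each mix changes every ciphertext while the single secret key still decrypts all of them.

```python
import random

# Assumption: toy parameters. P = 2*Q + 1 is a safe prime and G = 4
# generates the subgroup of order Q. Far too small for real security.
P, Q, G = 1019, 509, 4


def keygen(rng):
    x = rng.randrange(1, Q)            # secret decryption key
    return x, pow(G, x, P)             # (secret x, public key h = G^x)


def encrypt(h, m, rng):
    r = rng.randrange(1, Q)
    return (pow(G, r, P), m * pow(h, r, P) % P)


def reencrypt(h, c, rng):
    # Multiply by a fresh encryption of 1: new randomness, same plaintext,
    # still decryptable with the same secret key.
    a, b = c
    s = rng.randrange(1, Q)
    return (a * pow(G, s, P) % P, b * pow(h, s, P) % P)


def decrypt(x, c):
    a, b = c
    return b * pow(a, -x, P) % P       # b / a^x (modular inverse needs Python 3.8+)


def mix(h, batch, rng):
    # One mix server: re-encrypt every ciphertext, then secretly shuffle.
    out = [reencrypt(h, c, rng) for c in batch]
    rng.shuffle(out)
    return out


def run_cascade(plaintexts, num_mixes=2, seed=None):
    rng = random.Random(seed)
    x, h = keygen(rng)
    batch = [encrypt(h, m, rng) for m in plaintexts]
    for _ in range(num_mixes):         # Mix 1, then Mix 2, ...
        batch = mix(h, batch, rng)
    return sorted(decrypt(x, c) for c in batch)


if __name__ == "__main__":
    msgs = [pow(v, 2, P) for v in (2, 3, 5, 7)]   # encode as subgroup elements
    print(run_cascade(msgs, seed=1) == sorted(msgs))  # True
```

The outputs can only be recovered as a set. After shuffling, the order no longer reveals which input produced which output.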

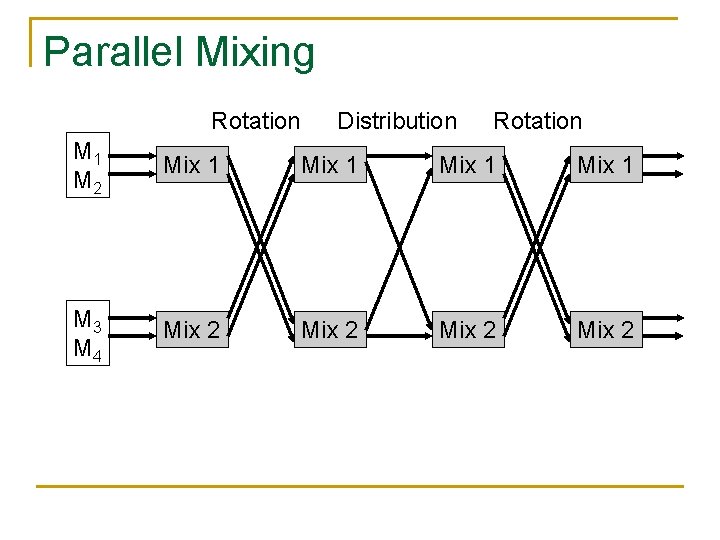

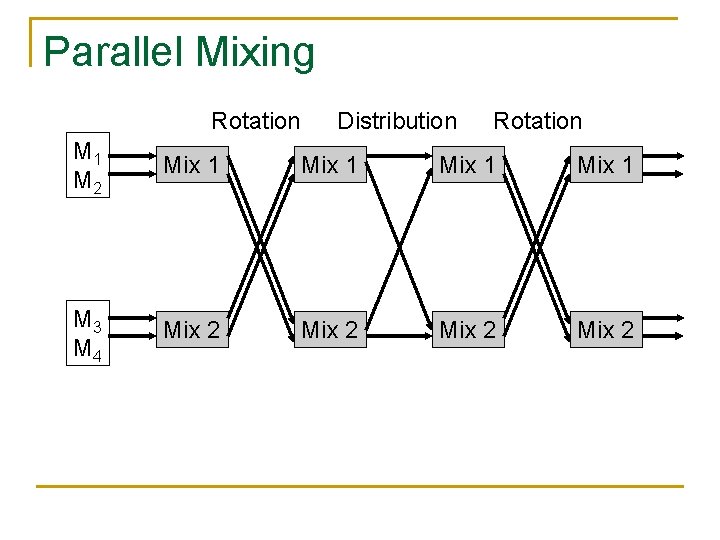

Parallel Mixing

[Figure: rotation, then distribution, then rotation. Inputs M1 and M2 start in the batch at Mix 1, and M3 and M4 start in the batch at Mix 2.]
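
The rotation and distribution steps can be sketched as a plaintext simulation that only tracks which batch each message sits in. This is our own simplified model, not Golle and Juels's specification. It assumes the following:

- N is divisible by M².
- A rotation means every mix shuffles the batch it holds and passes it to the next mix.
- Distribution means every mix shuffles its batch and sends one equal slice to each mix.
- The number of rotations on each side is a free parameter.

The name `parallel_mix` and its parameters are hypothetical.

```python
import random


def parallel_mix(inputs, num_mixes, rotations, rng=random):
    """Hypothetical helper: simulate batch movement in parallel mixing.

    Returns (input_batches, output_batches) so the effect of the
    distribution step can be inspected.
    """
    n = len(inputs)
    if n % (num_mixes * num_mixes):
        raise ValueError("need N divisible by M*M so slices are equal")
    size = n // num_mixes              # batch size N/M
    part = size // num_mixes           # slice size N/M^2

    # Initial public permutation, then cut into M batches of N/M.
    order = list(inputs)
    rng.shuffle(order)
    batches = [order[i * size:(i + 1) * size] for i in range(num_mixes)]
    input_batches = [list(b) for b in batches]

    def rotate(bs):
        # Assumption: each mix shuffles its batch, then hands it to the next mix.
        for b in bs:
            rng.shuffle(b)
        return bs[-1:] + bs[:-1]

    def distribute(bs):
        # Assumption: each mix shuffles its batch and sends slice k to mix k.
        new = [[] for _ in range(num_mixes)]
        for b in bs:
            rng.shuffle(b)
            for k in range(num_mixes):
                new[k].extend(b[k * part:(k + 1) * part])
        return new

    for _ in range(rotations):
        batches = rotate(batches)
    batches = distribute(batches)
    for _ in range(rotations):
        batches = rotate(batches)
    return input_batches, batches


if __name__ == "__main__":
    ins, outs = parallel_mix(range(16), num_mixes=2, rotations=1,
                             rng=random.Random(7))
    # Every input batch sends exactly N/M^2 = 4 messages to every output batch.
    print([[len(set(i) & set(o)) for o in outs] for i in ins])  # [[4, 4], [4, 4]]
```

Note the fixed N/M² flow between each pair of batches. It holds no matter how the mixes shuffle, and it is the structure an observer can exploit once some messages are known.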

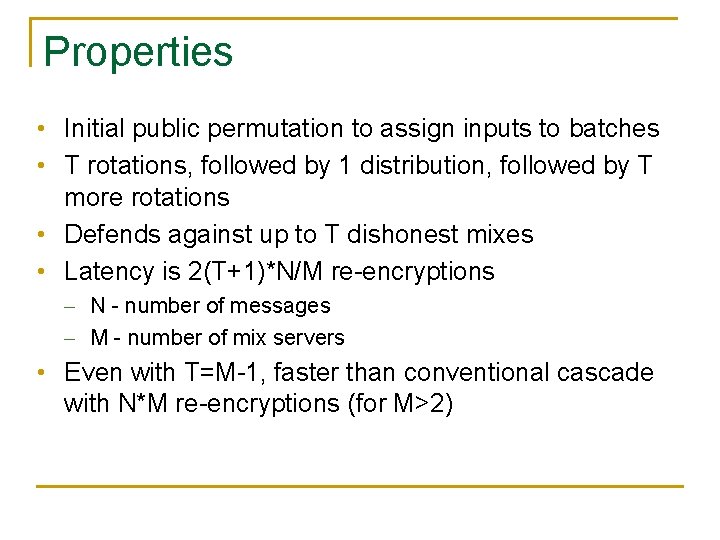

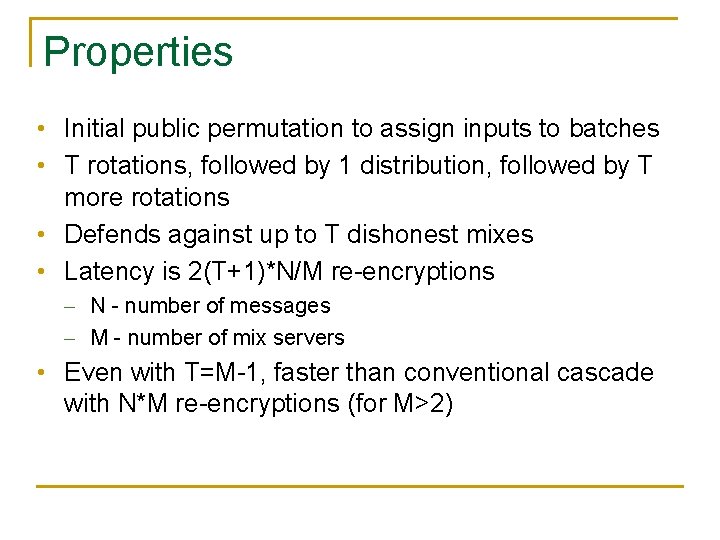

Properties

- An initial public permutation assigns inputs to batches
- T rotations, followed by 1 distribution, followed by T more rotations
- Defends against up to T dishonest mixes
- Latency is 2(T+1)*N/M re-encryptions
  - N: number of messages
  - M: number of mix servers
- Even with T = M-1, faster than a conventional cascade, which needs N*M re-encryptions (for M > 2)
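
The latency comparison in the last two bullets is easy to check numerically. The helper names below are ours. The formulas are exactly the ones on the slide: 2(T+1)*N/M for parallel mixing and N*M for a cascade. With T = M-1 the parallel latency becomes 2N, which beats N*M exactly when M > 2. N = 1008 is borrowed from the simulations later in the talk purely as an example value.

```python
from fractions import Fraction


def parallel_latency(n, m, t):
    # Slide formula: 2(T+1) * N/M re-encryptions (exact rational arithmetic).
    return 2 * (t + 1) * Fraction(n, m)


def cascade_latency(n, m):
    # Conventional cascade: each of the M mixes re-encrypts all N messages.
    return n * m


if __name__ == "__main__":
    n = 1008
    for m in (2, 3, 4):
        t = m - 1                          # tolerate the maximum T = M-1
        print(m, parallel_latency(n, m, t), cascade_latency(n, m))
    # 2 2016 2016   <- equal when M = 2
    # 3 2016 3024
    # 4 2016 4032
```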

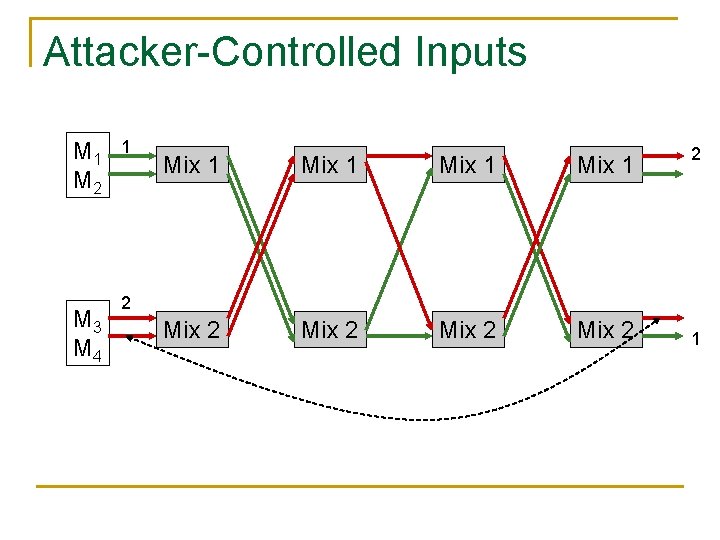

Attacker-Controlled Inputs

[Figure: inputs M1 through M4 mixed in parallel by Mix 1 and Mix 2.]

Overview

- Introduction
- Analysis methods
- Analysis results
- Multiple-round analysis
- Open problems
- Conclusions

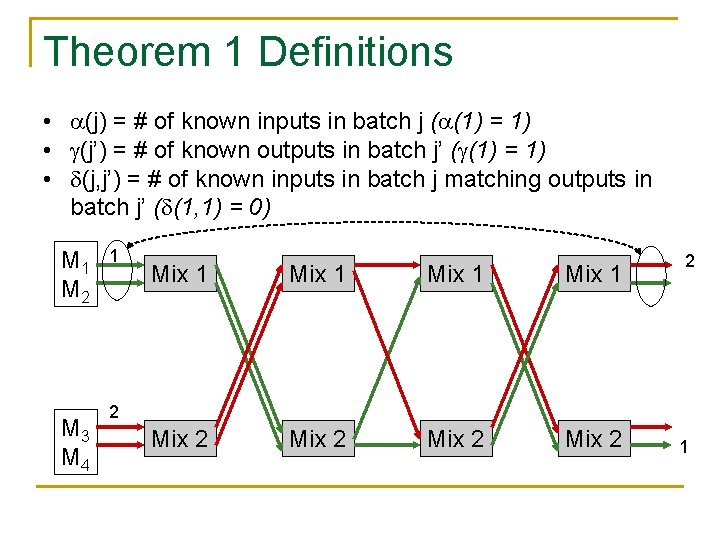

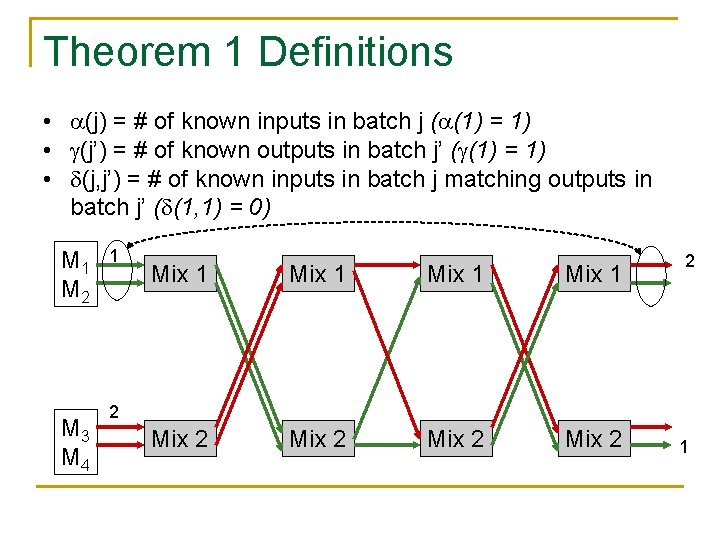

Theorem 1 Definitions

- Number of known inputs in input batch j (in the example, input batch 1 has 1)
- Number of known outputs in output batch j′ (in the example, output batch 1 has 1)
- Number of known inputs in batch j whose outputs lie in batch j′ (in the example, 0 for j = j′ = 1)
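
Given a scenario, the three counts are simple tallies. In this sketch, a scenario is described by the following:

- Two hypothetical dictionaries, mapping each message to its input batch and to its output batch (0-indexed).
- The set of messages the attacker can link.

The names `known_counts`, `in_batch` and `out_batch` are ours. The example scenario is also hypothetical, chosen so that each input batch sends N/M² = 1 message to each output batch and so that it reproduces the counts quoted above for batch 1.

```python
from collections import Counter


def known_counts(known, in_batch, out_batch):
    """Tally known inputs per input batch, known outputs per output batch,
    and known (input batch, output batch) pairs. Missing keys read as 0."""
    known_in = Counter(in_batch[s] for s in known)
    known_out = Counter(out_batch[s] for s in known)
    known_pairs = Counter((in_batch[s], out_batch[s]) for s in known)
    return known_in, known_out, known_pairs


if __name__ == "__main__":
    # Hypothetical 4-message, 2-mix scenario; slide batch "1" is index 0 here.
    in_batch = {"M1": 0, "M2": 0, "M3": 1, "M4": 1}
    out_batch = {"M1": 1, "M2": 0, "M3": 1, "M4": 0}
    kin, kout, kpairs = known_counts({"M1", "M4"}, in_batch, out_batch)
    print(kin[0], kout[0], kpairs[(0, 0)])   # 1 1 0
```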

Theorem 1

$\Pr[s_1 \to s'_1] = \frac{1 - 0}{(2 - 1)(2 - 1)} = 1$
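
The worked example fits the following reading of Theorem 1, which we state as an assumption rather than a quotation. It presumes that every input batch sends exactly N/M² messages to every output batch and that honest mixes make all consistent assignments equally likely. Under those conditions, an unknown input $s$ in batch $j$ reaches a particular unknown output $s'$ in batch $j'$ with probability

$$\Pr[s \to s'] = \frac{N/M^2 - c(j,j')}{\bigl(N/M - a(j)\bigr)\bigl(N/M - b(j')\bigr)},$$

where $a(j)$, $b(j')$ and $c(j,j')$ are our own labels for the number of known inputs in batch $j$, the number of known outputs in batch $j'$, and the number of known inputs in $j$ whose outputs lie in $j'$. With N = 4 and M = 2 this gives the $(1-0)/((2-1)(2-1))$ of the example. The function name below is ours.

```python
from fractions import Fraction


def link_probability(n, m, known_in_j, known_out_jp, known_pairs_jjp):
    """Probability that an unknown input in batch j maps to one specific
    unknown output in batch j' (our reading of Theorem 1).

    Requires at least one unknown input in j and one unknown output in j'.
    """
    per_pair = n // (m * m)       # messages flowing from batch j to batch j'
    unknown_in = n // m - known_in_j
    unknown_out = n // m - known_out_jp
    return Fraction(per_pair - known_pairs_jjp, unknown_in * unknown_out)


if __name__ == "__main__":
    # Slide example: N = 4, M = 2, one known input and output, no known pair.
    print(link_probability(4, 2, 1, 1, 0))   # 1
```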

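Anonymity Metrics

- Anon [Golle and Juels ’04]
- Entropy [SD’02, DSCP’02]
- Either metric can be computed using Theorem 1
  - This requires, for every pair of batches j, j′, the number of known inputs in j, the number of known outputs in j′, and the number of known inputs in j whose outputs lie in j′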
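
This sketches the entropy metric. The function computes the Shannon entropy, in bits, of where one unknown input in batch j ends up. It assumes that each input batch sends exactly N/M² messages to each output batch and that all consistent assignments are equally likely. Under those assumptions, every unknown output in batch j′ receives the same probability. The function and argument names are ours.

```python
import math


def input_entropy(n, m, j, known_in, known_out, known_pairs):
    """Entropy (bits) of where an unknown input in batch j ends up.

    known_in / known_out map batch -> count; known_pairs maps (j, j') -> count.
    Missing keys count as zero. Assumes batch j has an unknown input.
    """
    size = n // m                  # batch size N/M
    per_pair = n // (m * m)        # messages from batch j to each batch j'
    unknown_in = size - known_in.get(j, 0)
    h = 0.0
    for jp in range(m):
        unknown_out = size - known_out.get(jp, 0)
        if unknown_out == 0:
            continue
        p = (per_pair - known_pairs.get((j, jp), 0)) / (unknown_in * unknown_out)
        if p > 0:
            # all unknown_out outputs in batch j' share the same probability p
            h -= unknown_out * p * math.log2(p)
    return h


if __name__ == "__main__":
    print(input_entropy(4, 2, 0, {}, {}, {}))      # 2.0: uniform over 4 outputs
    print(input_entropy(4, 2, 0, {0: 1, 1: 1}, {0: 1, 1: 1},
                        {(0, 1): 1, (1, 0): 1}))   # 0.0: fully linked
```

The value 2**h is often read as an effective anonymity-set size.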

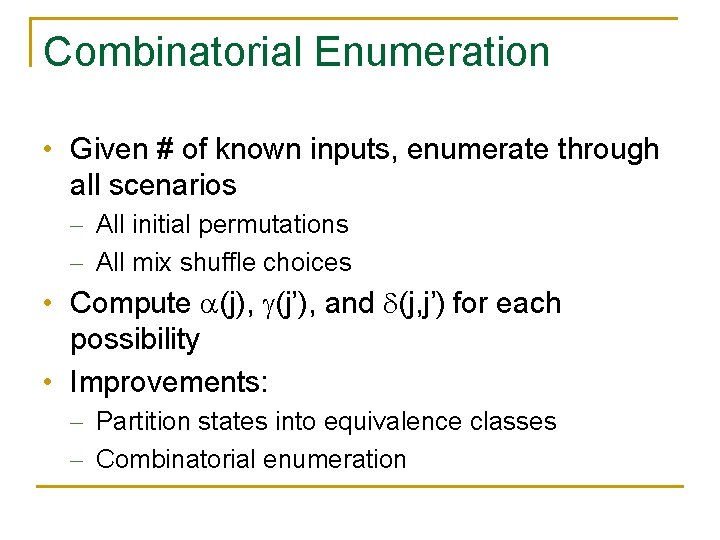

Scenarios

- Given a scenario:
  - Number of known inputs
  - Distribution of known inputs among input batches
  - Distribution of known outputs among output batches
- We can compute:
  - The per-batch counts of known inputs, known outputs, and known input-output pairs
  - The anonymity metrics
- What is a typical scenario?
  - We need the distribution of the anonymity metrics across scenarios

Combinatorial Enumeration

- Given the number of known inputs, enumerate all scenarios
  - All initial permutations
  - All mix shuffle choices
- Compute the per-batch counts of known inputs, known outputs, and known input-output pairs for each possibility
- Improvements:
  - Partition states into equivalence classes
  - Combinatorial enumeration
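
This sketches one way to realize the equivalence-class idea, under our own modeling assumptions. With honest mixes and a random initial permutation, every message lands in a uniformly random (input batch, output batch) cell, and each cell holds exactly N/M² messages. The k known messages therefore occupy a uniformly random k-subset of the N slots. The metrics depend only on how many known messages fall in each cell. It is therefore enough to enumerate count matrices, each weighted by a product of binomial coefficients, which is a multivariate hypergeometric distribution. The function name is hypothetical, and this is not necessarily how the original analysis enumerated states.

```python
from fractions import Fraction
from itertools import product
from math import comb


def count_matrix_distribution(n, m, k):
    """Map each M x M matrix of known-message counts (flattened row-major,
    rows = input batches, columns = output batches) to its probability."""
    cap = n // (m * m)             # slots per (input batch, output batch) cell
    total = comb(n, k)             # equally likely placements of k known messages
    dist = {}
    for counts in product(range(cap + 1), repeat=m * m):
        if sum(counts) != k:
            continue
        ways = 1
        for c in counts:
            ways *= comb(cap, c)
        dist[counts] = Fraction(ways, total)
    return dist


if __name__ == "__main__":
    dist = count_matrix_distribution(18, 3, 4)   # 3 mixes, 18 inputs, 4 known
    print(len(dist), sum(dist.values()))         # 414 1
```

The probabilities sum to 1 by Vandermonde's identity. Row sums give the known inputs per batch, and column sums give the known outputs per batch.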

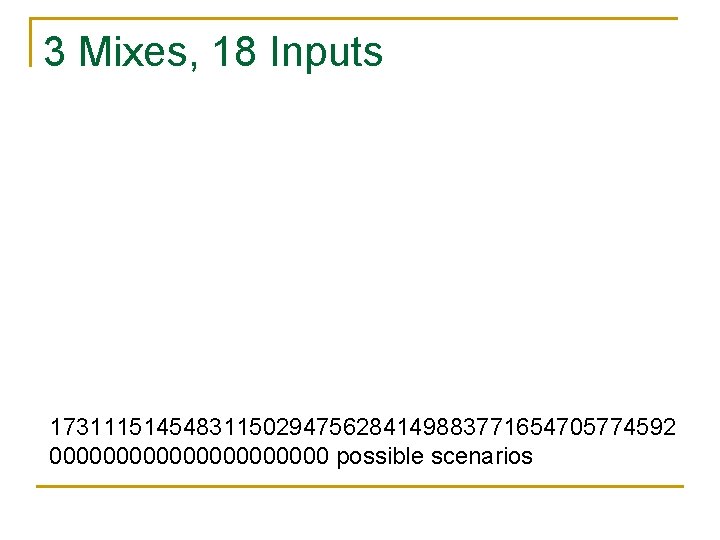

3 mixes, 18 inputs: 1731115145483115029475628414988377165470577459200000000000 possible scenarios

Sampling

- Full enumeration is still impractical for large systems
- Instead, we use sampling:
  - Given a number of known inputs, simulate a random scenario
  - Compute the per-batch counts and the anonymity metrics
  - Repeat
- This gives a sampled distribution of the metrics
  - It misses the tail of the distribution, but we only care about typical scenarios
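
Here is a minimal Monte Carlo sketch of the sampling approach. It models each random scenario as a uniformly random placement of messages into (input batch, output batch) cells of N/M² slots each, and it takes messages 0..k-1 as the known ones. For each sample it records the smallest destination entropy among the unknown inputs. The following are our assumptions:

- The choice of that minimum as the summary statistic.
- All names.
- M = 4 in the usage line, which is arbitrary.

```python
import math
import random
from collections import Counter


def sample_min_entropy(n, m, k, trials, rng=random):
    """Sampled distribution of the minimum destination entropy (bits) over
    unknown inputs, for k < n known messages."""
    size, cap = n // m, n // (m * m)
    # One entry per message slot: (input batch, output batch).
    cells = [(j, jp) for j in range(m) for jp in range(m) for _ in range(cap)]
    samples = []
    for _ in range(trials):
        rng.shuffle(cells)             # message i is placed in cells[i]
        known = cells[:k]              # assumption: messages 0..k-1 are known
        kin = Counter(j for j, _ in known)
        kout = Counter(jp for _, jp in known)
        kpair = Counter(known)
        worst = math.inf
        for j in {j for j, _ in cells[k:]}:   # batches with an unknown input
            h = 0.0
            for jp in range(m):
                u_out = size - kout[jp]
                if u_out == 0:
                    continue
                p = (cap - kpair[(j, jp)]) / ((size - kin[j]) * u_out)
                if p > 0:
                    h -= u_out * p * math.log2(p)
            worst = min(worst, h)
        samples.append(worst)
    return samples


if __name__ == "__main__":
    # 1008 inputs, 900 unknown; M = 4 is an arbitrary illustrative choice.
    s = sample_min_entropy(1008, 4, 108, trials=200, rng=random.Random(0))
    print(min(s), sum(s) / len(s))
```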

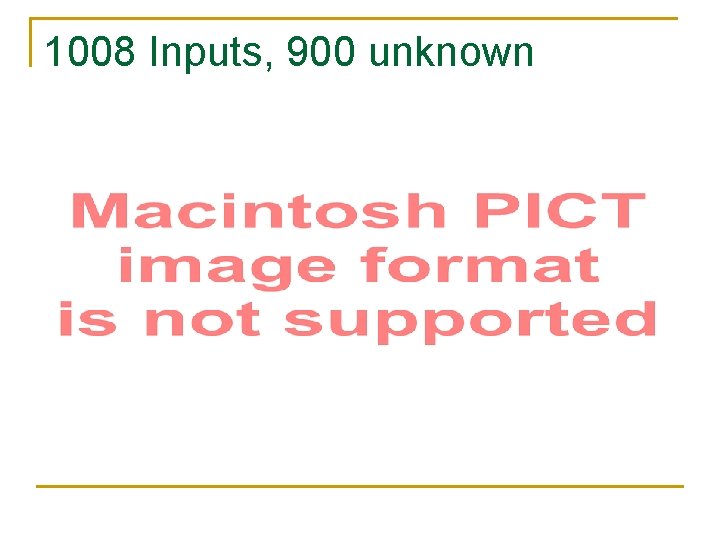

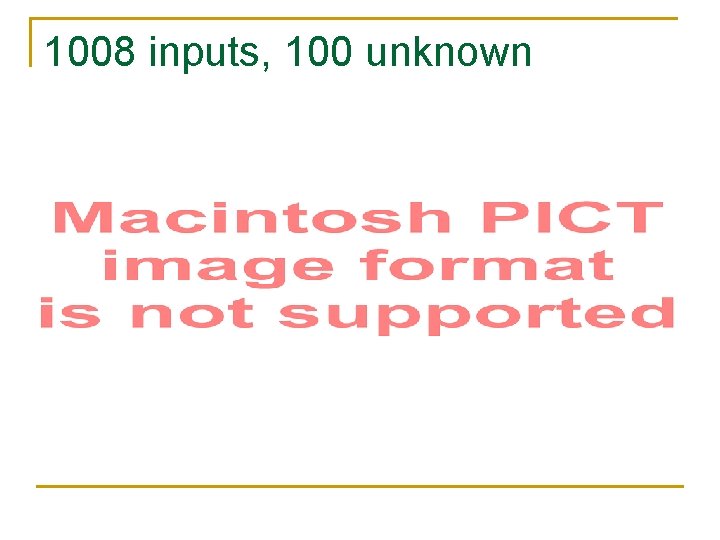

1008 inputs, 900 unknown

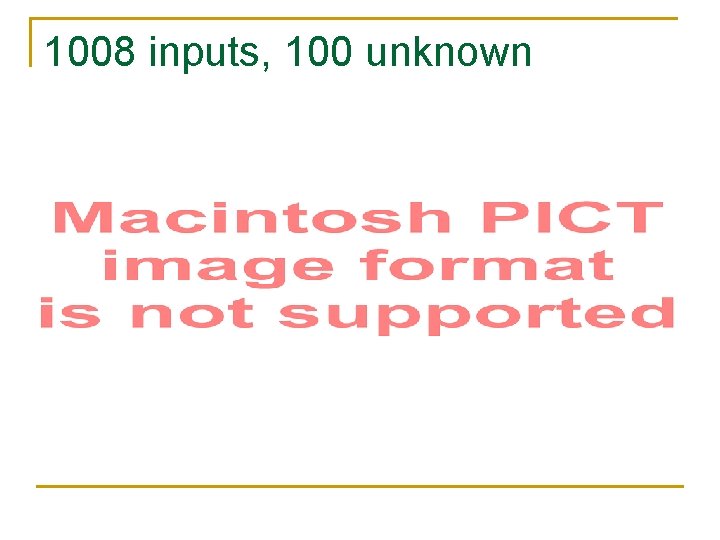

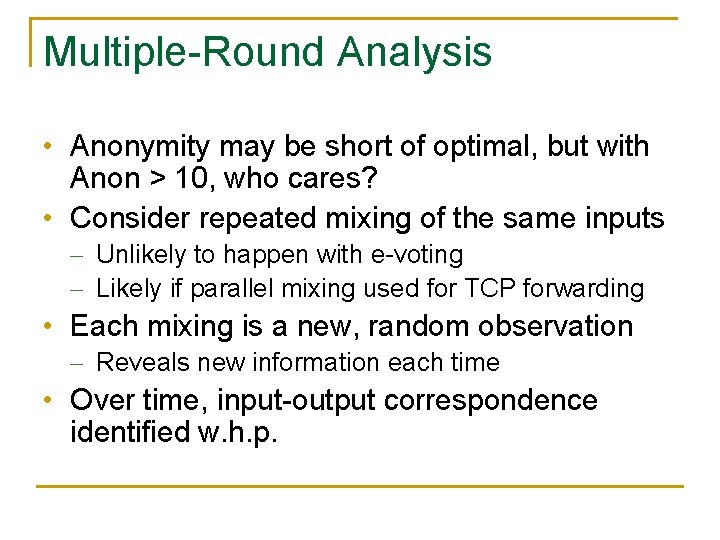

1008 inputs, 100 unknown

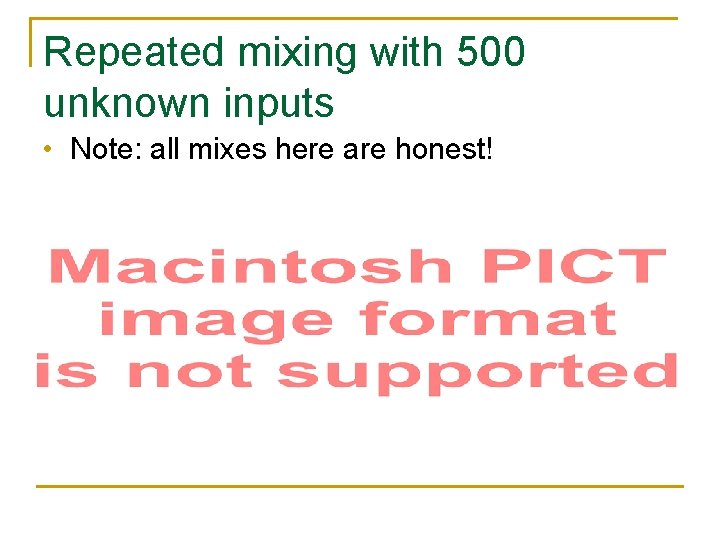

Multiple-Round Analysis

- Anonymity may be short of optimal, but with Anon > 10, who cares?
- Consider repeated mixing of the same inputs
  - Unlikely to happen with e-voting
  - Likely if parallel mixing is used for TCP forwarding
- Each mixing is a new, random observation
  - It reveals new information each time
- Over time, the input-output correspondence is identified with high probability
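
The effect of repeated mixing can be illustrated with a small simulation built on hypothetical assumptions:

- Each message keeps a fixed, recognizable destination across rounds, as with TCP forwarding.
- The attacker controls the same k messages every round.
- All mixes are honest.
- Rounds are independent.

The attacker multiplies the per-round link probabilities for one unknown input and renormalizes. This naive-Bayes style combination is our choice, not necessarily the original analysis. The parameters in the usage line (N = 64, M = 4, 48 known) are arbitrary.

```python
import random
from collections import Counter


def repeated_mixing(n, m, k, rounds, rng=random):
    """Posterior over destinations of unknown message k after `rounds` mixings.

    Assumption: messages 0..k-1 are attacker-controlled, and message d's
    output is recognizable as destination d in every round.
    """
    size, cap = n // m, n // (m * m)
    slots = [(j, jp) for j in range(m) for jp in range(m) for _ in range(cap)]
    target = k                                  # first unknown message
    weight = {d: 1.0 for d in range(k, n)}      # uniform prior over unknowns
    for _ in range(rounds):
        rng.shuffle(slots)      # fresh placement: slots[i] = (in, out) batch of i
        kin = Counter(slots[i][0] for i in range(k))
        kout = Counter(slots[i][1] for i in range(k))
        kpair = Counter(slots[i] for i in range(k))
        jt = slots[target][0]                   # target's input batch this round
        for d in weight:
            jd = slots[d][1]                    # where destination d emerges
            p = (cap - kpair[(jt, jd)]) / ((size - kin[jt]) * (size - kout[jd]))
            weight[d] *= p
        total = sum(weight.values())            # > 0: the true link is never ruled out
        weight = {d: w / total for d, w in weight.items()}
    return weight


if __name__ == "__main__":
    for r in (1, 10, 100):
        post = repeated_mixing(64, 4, 48, r, random.Random(r))
        print(r, round(post[48], 3))            # probability on the true destination
```

In this model, a wrong destination is ruled out in any round where every message between the target's input batch and that destination's output batch is attacker-controlled. Such rounds keep recurring, so the posterior concentrates on the true destination even though no mix misbehaves.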

Repeated mixing with 500 unknown inputs

- Note: all mixes here are honest!

Conclusions

- Parallel mixing reveals information when attackers control some inputs
  - This is a big problem if most inputs are controlled
  - When fewer inputs are known, repeated mixings may still be a problem
- The problem exists even if all mixes are honest
- Statistical approximations should be checked by simulations