
An Adaptive Framework for Oversubscription Management in CPU-GPU Unified Memory
DATE 2021
Debashis Ganguly, Rami Melhem, Jun Yang

De Facto for General-Purpose Computing

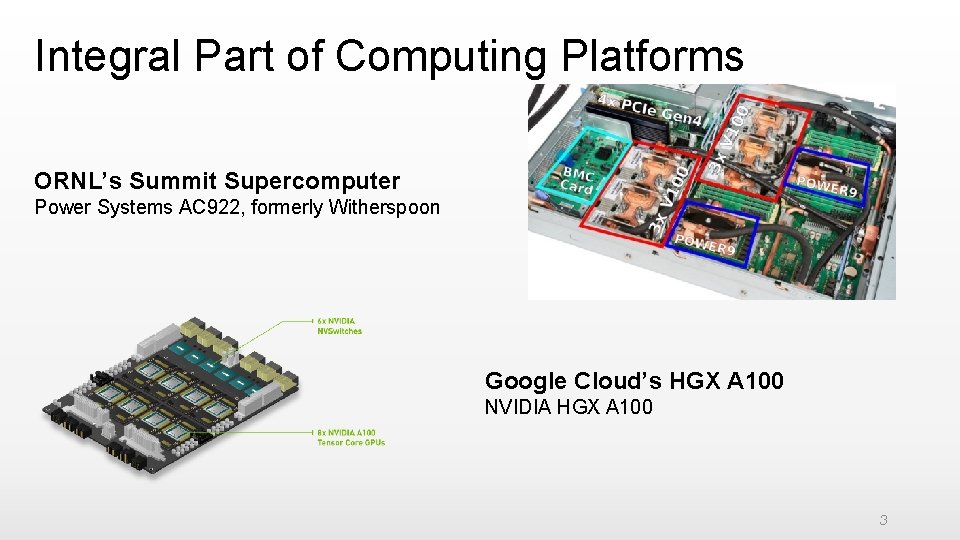

Integral Part of Computing Platforms
• ORNL’s Summit Supercomputer: Power Systems AC922, formerly Witherspoon
• Google Cloud’s HGX A100: NVIDIA HGX A100

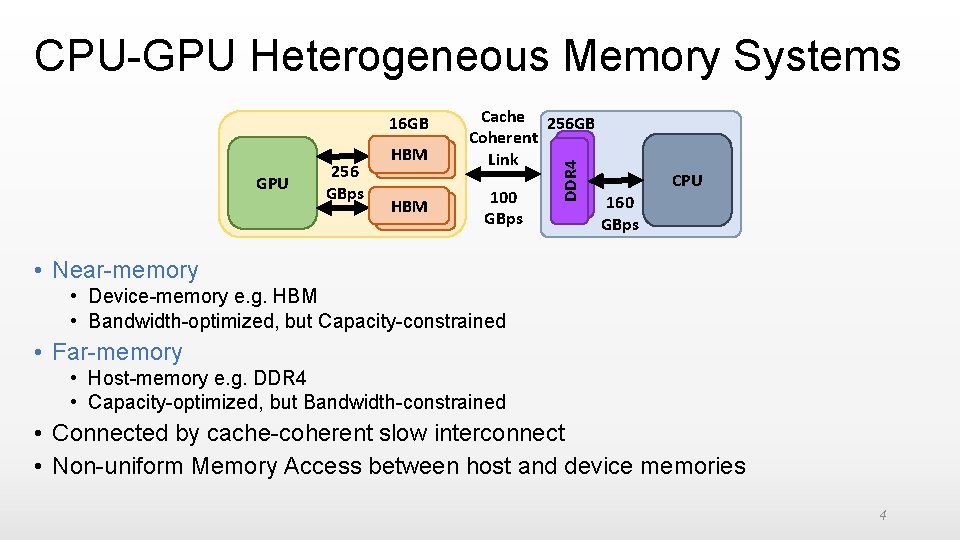

CPU-GPU Heterogeneous Memory Systems
[Diagram labels, in extraction order: GPU, 256 GBps, HBM, Cache, 256 GB, Coherent Link, 100 GBps, DDR4, 16 GB, CPU, 160 GBps]
• Near-memory
  • Device memory, e.g. HBM
  • Bandwidth-optimized, but capacity-constrained
• Far-memory
  • Host memory, e.g. DDR4
  • Capacity-optimized, but bandwidth-constrained
• Connected by a slow cache-coherent interconnect
• Non-uniform memory access between host and device memories

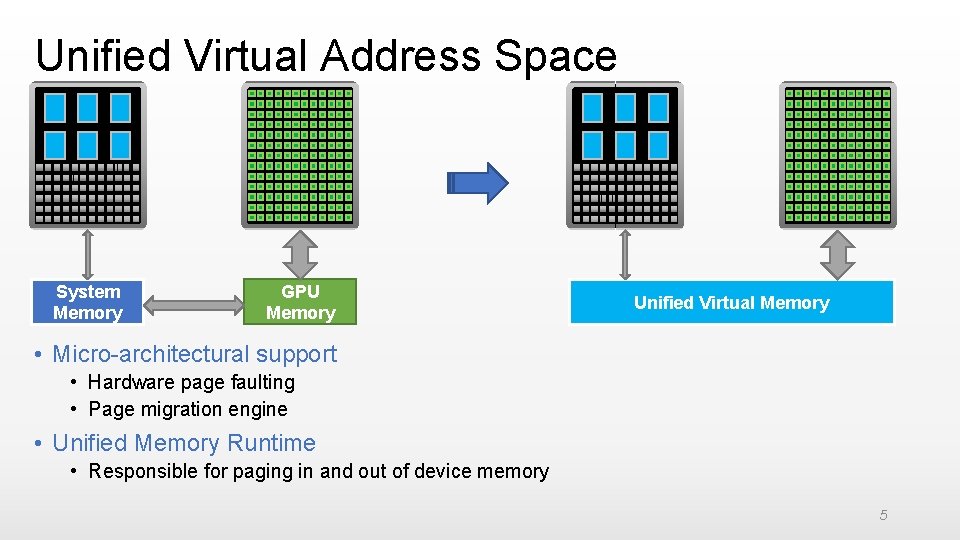

Unified Virtual Address Space
[Diagram: System Memory and GPU Memory under a single Unified Virtual Memory]
• Micro-architectural support
  • Hardware page faulting
  • Page migration engine
• Unified Memory Runtime
  • Responsible for paging in and out of device memory

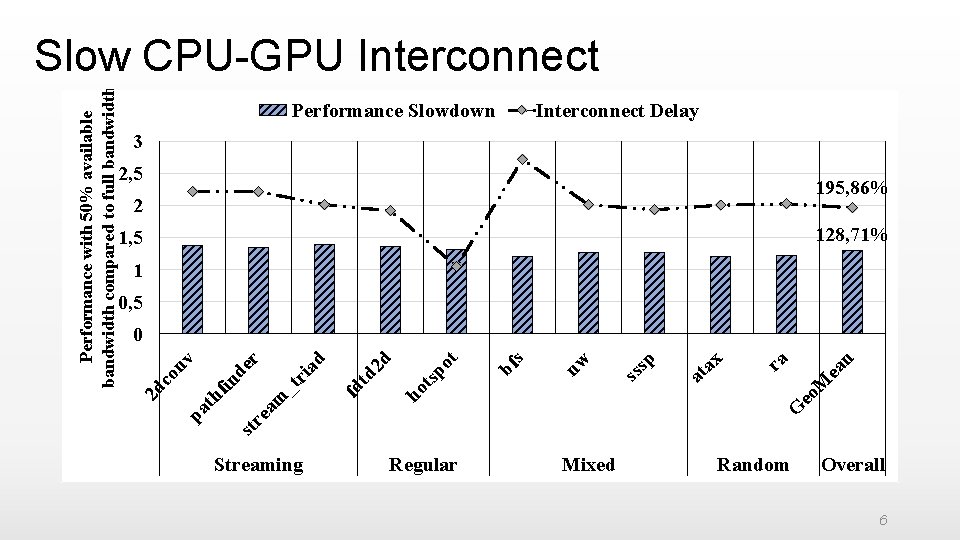

Slow CPU-GPU Interconnect Delay
[Figure: performance slowdown with 50% available bandwidth compared to full bandwidth, per benchmark, grouped as Streaming, Regular, Mixed, Random, and Overall (GeoMean)]

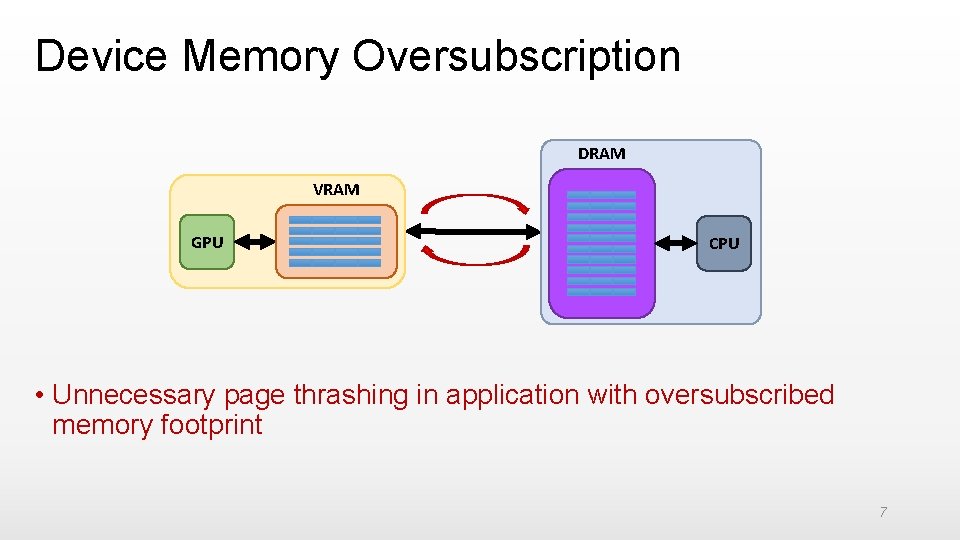

Device Memory Oversubscription
[Diagram labels: DRAM, VRAM, GPU, CPU]
• Unnecessary page thrashing in applications with an oversubscribed memory footprint

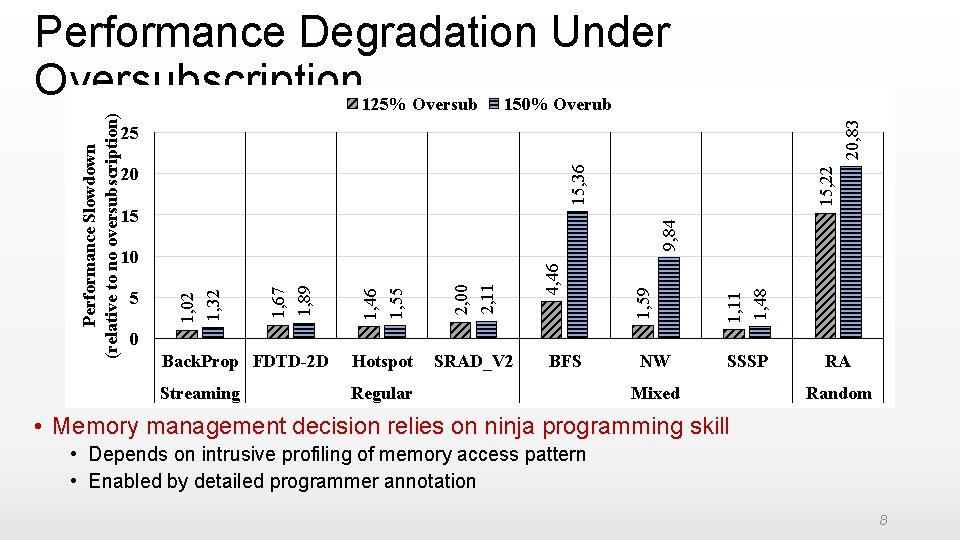
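
The thrashing described on this slide can be illustrated with a toy model. The sketch below is not the Unified Memory runtime: it assumes a plain LRU eviction policy, page-granular migration, and a workload that sweeps its working set linearly several times. The names `count_faults`, `capacity`, and `passes` are hypothetical.

```python
from collections import OrderedDict


def count_faults(num_pages, capacity, passes):
    """Count page faults for repeated linear sweeps under LRU eviction.

    Assumption: LRU stands in for the runtime's eviction policy here.
    """
    resident = OrderedDict()  # pages in device memory, oldest first
    faults = 0
    for _ in range(passes):
        for page in range(num_pages):
            if page in resident:
                resident.move_to_end(page)  # hit: mark as most recently used
            else:
                faults += 1  # miss: page must migrate from host memory
                if len(resident) >= capacity:
                    resident.popitem(last=False)  # evict least recently used
                resident[page] = None
    return faults


capacity = 100  # device memory size in pages (hypothetical)
fits = count_faults(num_pages=100, capacity=capacity, passes=4)
oversub = count_faults(num_pages=125, capacity=capacity, passes=4)
print(fits, oversub)  # prints "100 500"
```

With a working set that fits, only the first sweep faults. At 125% of capacity, LRU evicts each page shortly before it is needed again, so every access in every sweep faults.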

Performance Degradation Under Oversubscription
[Figure: performance slowdown relative to no oversubscription, at 125% and 150% oversubscription. Benchmarks: BackProp, FDTD-2D, Hotspot, SRAD_V2, BFS, NW, SSSP, RA. Categories: Streaming, Regular, Mixed, Random]
• Memory management decisions rely on ninja programming skill
• Depends on intrusive profiling of the memory access pattern
• Enabled by detailed programmer annotation

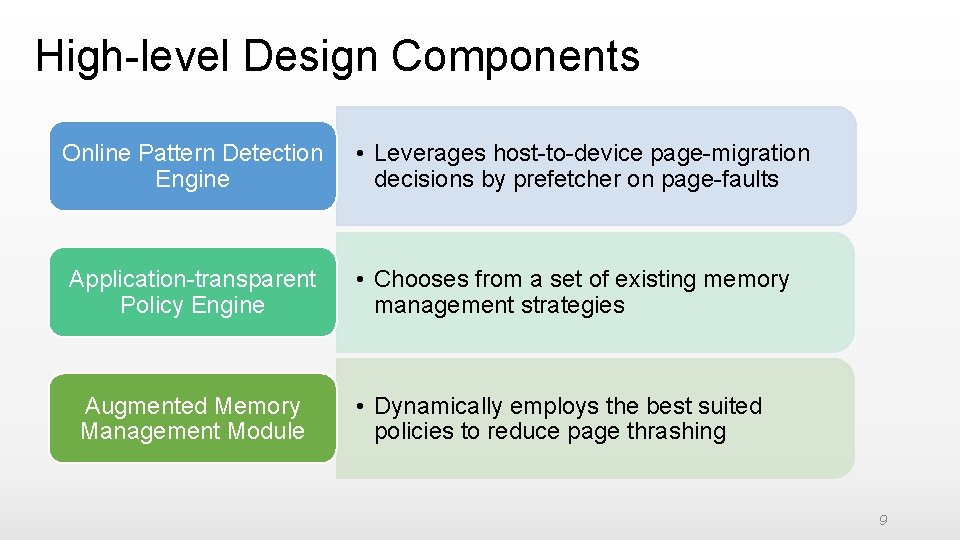

High-level Design Components
[Diagram blocks: Online Pattern Detection Engine, Application-transparent Policy Engine, Augmented Memory Management Module]
• Leverages host-to-device page-migration decisions made by the prefetcher on page faults
• Chooses from a set of existing memory management strategies
• Dynamically employs the best-suited policies to reduce page thrashing

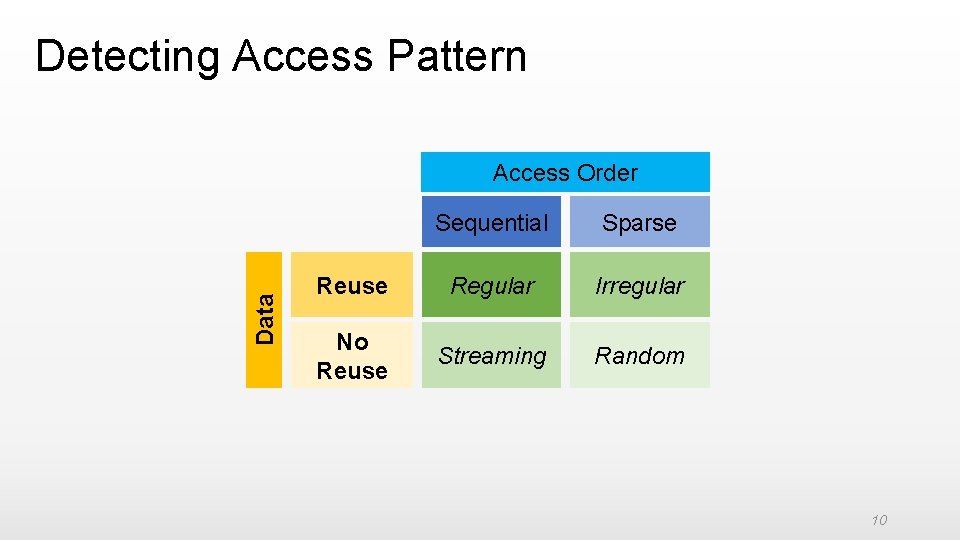

Detecting Access Pattern

| Data access order | Sequential | Sparse |
|---|---|---|
| Reuse | Regular | Irregular |
| No reuse | Streaming | Random |

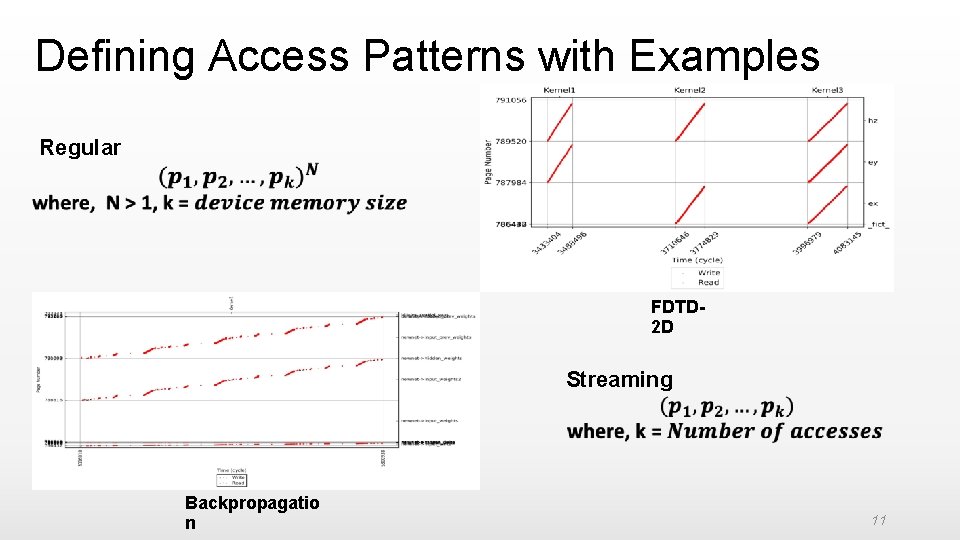

Defining Access Patterns with Examples
• Regular: FDTD-2D
• Streaming: Backpropagation

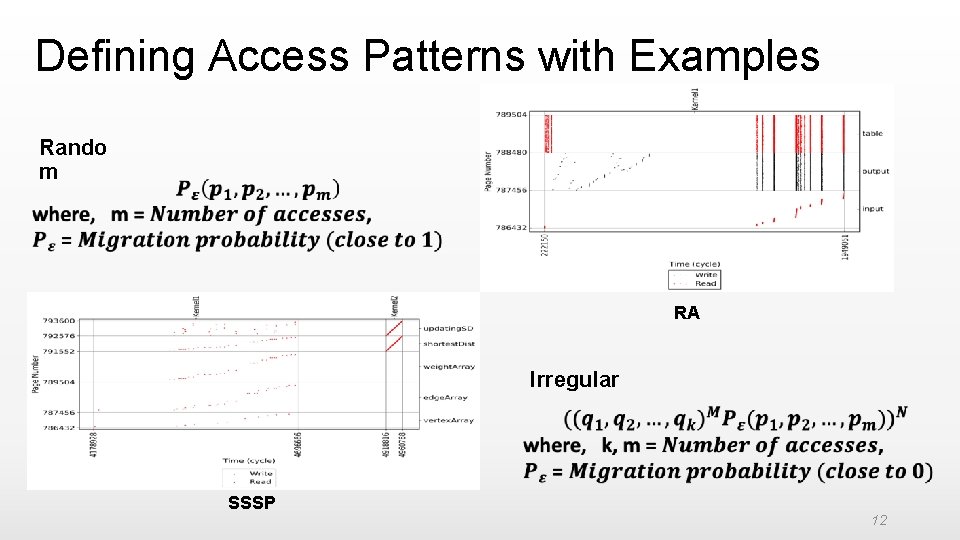

Defining Access Patterns with Examples
• Random: RA
• Irregular: SSSP

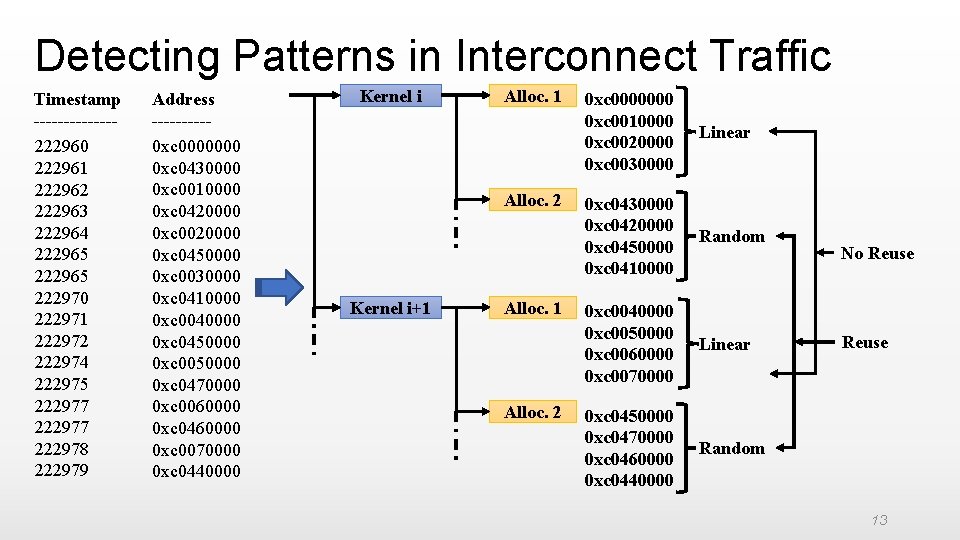

Detecting Patterns in Interconnect Traffic
Trace timestamps: 222960, 222961, 222962, 222963, 222964, 222965, 222970, 222971, 222972, 222974, 222975, 222977, 222978, 222979
Trace addresses (in order): 0xc0000000, 0xc0430000, 0xc0010000, 0xc0420000, 0xc0020000, 0xc0450000, 0xc0030000, 0xc0410000, 0xc0040000, 0xc0450000, 0xc0050000, 0xc0470000, 0xc0060000, 0xc0460000, 0xc0070000, 0xc0440000
Diagram labels: Kernel i, Kernel i+1; Alloc. 1, Alloc. 2; No Reuse

| Addresses after grouping by allocation | Order |
|---|---|
| 0xc0000000, 0xc0010000, 0xc0020000, 0xc0030000 | Linear |
| 0xc0430000, 0xc0420000, 0xc0450000, 0xc0410000 | Random |
| 0xc0040000, 0xc0050000, 0xc0060000, 0xc0070000 | Linear |
| 0xc0450000, 0xc0470000, 0xc0460000, 0xc0440000 | Random |

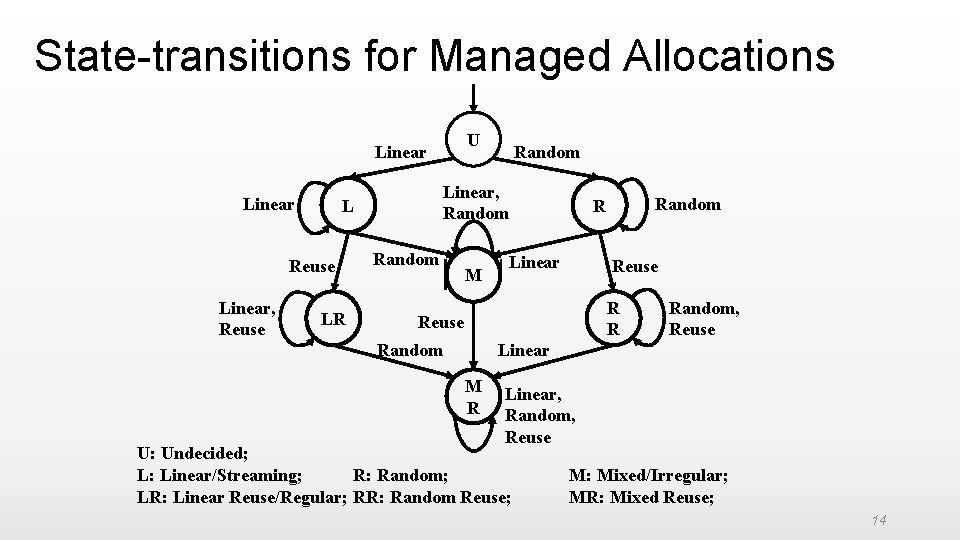
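
The linear-versus-random labeling on this slide can be sketched as a check on the address deltas within each group. This is an illustrative sketch, not the authors' detection engine: `classify_order` is a hypothetical helper, and the 64 KB stride (`0x10000`) is inferred from the linear groups in the trace rather than stated on the slide.

```python
def classify_order(addresses, stride=0x10000):
    """Label a fault sequence 'linear' if every step advances by `stride`.

    Assumption: a fixed 64 KB stride, read off the slide's trace.
    """
    deltas = [b - a for a, b in zip(addresses, addresses[1:])]
    if deltas and all(d == stride for d in deltas):
        return "linear"
    return "random"


# The four address groups shown on the slide, in order of appearance.
groups = [
    [0xC0000000, 0xC0010000, 0xC0020000, 0xC0030000],
    [0xC0430000, 0xC0420000, 0xC0450000, 0xC0410000],
    [0xC0040000, 0xC0050000, 0xC0060000, 0xC0070000],
    [0xC0450000, 0xC0470000, 0xC0460000, 0xC0440000],
]
labels = [classify_order(g) for g in groups]
print(labels)  # prints ['linear', 'random', 'linear', 'random']
```

A real detector would first have to separate the interleaved trace by managed allocation, which requires the allocation bounds; the groups are hard-coded here to keep the sketch short.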

State Transitions for Managed Allocations
[State-transition diagram over the states U, L, R, LR, RR, M, MR; edges are labeled with the observations Linear, Random, and Reuse]
U: Undecided; L: Linear/Streaming; R: Random; LR: Linear Reuse/Regular; RR: Random Reuse; M: Mixed/Irregular; MR: Mixed Reuse

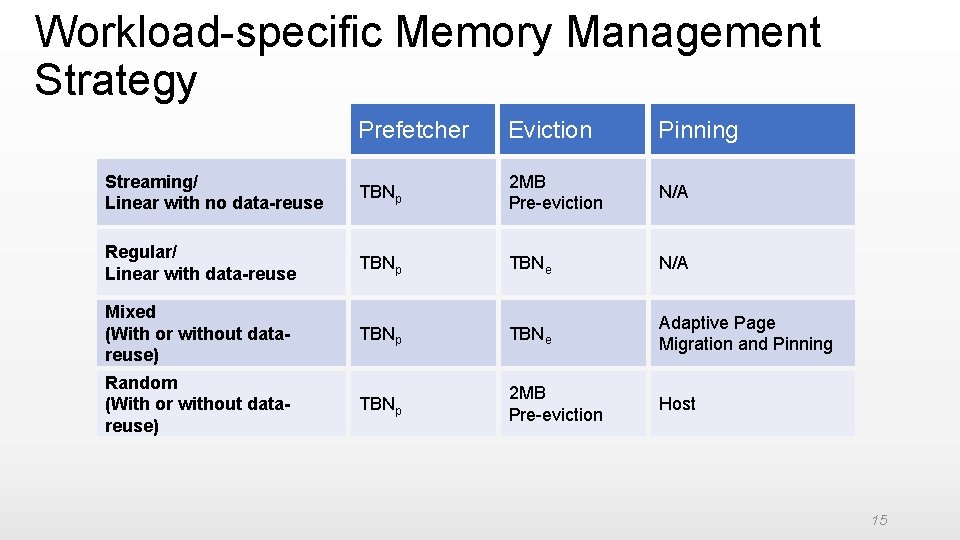
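
A per-allocation state machine of this kind can be sketched as a lookup table. The state names come from the slide's legend, but the transition table below is an assumption: it is one plausible reading of the diagram, whose edges did not survive extraction, and should not be taken as the authors' exact automaton. `TRANSITIONS` and `advance` are hypothetical names.

```python
# Assumed transitions: state -> {observation -> next state}.
# Observations are 'linear', 'random', or 'reuse'.
TRANSITIONS = {
    "U": {"linear": "L", "random": "R"},
    "L": {"linear": "L", "reuse": "LR", "random": "M"},
    "R": {"random": "R", "reuse": "RR", "linear": "M"},
    "LR": {"linear": "LR", "reuse": "LR", "random": "MR"},
    "RR": {"random": "RR", "reuse": "RR", "linear": "MR"},
    "M": {"linear": "M", "random": "M", "reuse": "MR"},
    "MR": {"linear": "MR", "random": "MR", "reuse": "MR"},
}


def advance(state, observations):
    """Feed a sequence of observations through the assumed automaton."""
    for obs in observations:
        # Observations with no listed edge leave the state unchanged.
        state = TRANSITIONS[state].get(obs, state)
    return state


print(advance("U", ["linear", "linear", "reuse"]))  # prints "LR"
print(advance("U", ["linear", "random"]))  # prints "M"
```

Under this reading, an allocation only moves toward more general states (for example from L to M, never back), so a single random access is enough to stop treating it as streaming.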

Workload-specific Memory Management Strategy

| Access pattern | Prefetcher | Eviction | Pinning |
|---|---|---|---|
| Streaming / Linear with no data reuse | TBNp | 2 MB pre-eviction | N/A |
| Regular / Linear with data reuse | TBNp | TBNe | N/A |
| Mixed (with or without data reuse) | TBNp | TBNe | Adaptive page migration and pinning |
| Random (with or without data reuse) | TBNp | 2 MB pre-eviction | Host |

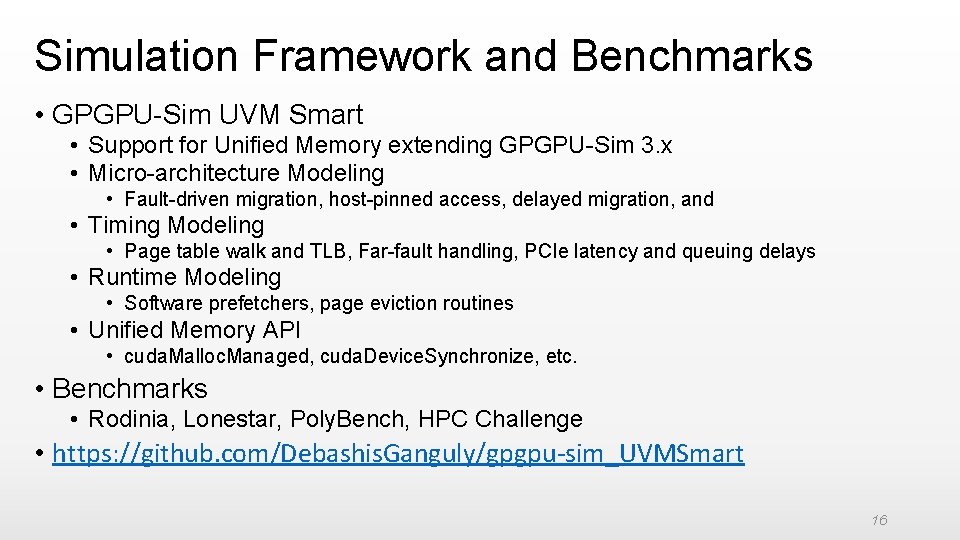
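
The strategy table on this slide maps naturally onto a lookup keyed by detected pattern. The sketch below only encodes the table's entries; the names `Strategy`, `POLICY`, and `select_strategy` are hypothetical and do not come from the simulator's code.

```python
from typing import NamedTuple


class Strategy(NamedTuple):
    prefetcher: str
    eviction: str
    pinning: str


# Entries transcribed from the slide's table.
POLICY = {
    "streaming": Strategy("TBNp", "2 MB pre-eviction", "N/A"),
    "regular": Strategy("TBNp", "TBNe", "N/A"),
    "mixed": Strategy("TBNp", "TBNe", "adaptive page migration and pinning"),
    "random": Strategy("TBNp", "2 MB pre-eviction", "host"),
}


def select_strategy(pattern):
    """Return the strategy for a detected access pattern (case-insensitive)."""
    return POLICY[pattern.lower()]


chosen = select_strategy("Random")
print(chosen.eviction, "|", chosen.pinning)  # prints "2 MB pre-eviction | host"
```

Keeping the policy as data rather than branching logic makes it easy to see that all four patterns share the prefetcher and differ only in eviction and pinning.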

Simulation Framework and Benchmarks
• GPGPU-Sim UVMSmart
  • Support for Unified Memory extending GPGPU-Sim 3.x
• Micro-architecture Modeling
  • Fault-driven migration, host-pinned access, delayed migration, and
• Timing Modeling
  • Page table walk and TLB, far-fault handling, PCIe latency and queuing delays
• Runtime Modeling
  • Software prefetchers, page eviction routines
• Unified Memory API
  • cudaMallocManaged, cudaDeviceSynchronize, etc.
• Benchmarks
  • Rodinia, Lonestar, PolyBench, HPC Challenge
• https://github.com/DebashisGanguly/gpgpu-sim_UVMSmart

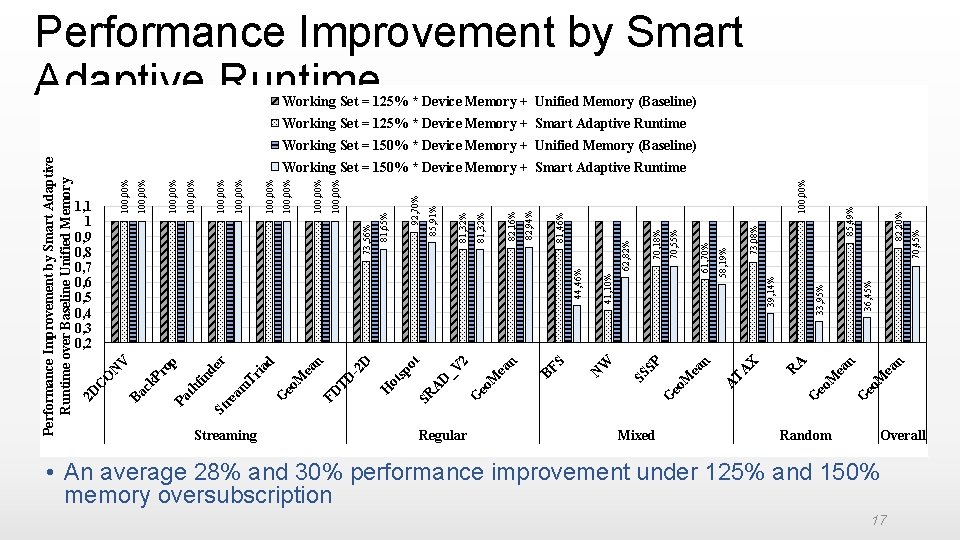

Performance Improvement by Smart Adaptive Runtime
[Figure: performance improvement by Smart Adaptive Runtime over baseline Unified Memory. Series: Unified Memory (Baseline) and Smart Adaptive Runtime, each at a working set of 125% and 150% of device memory. Benchmarks: 2DCONV, BackProp, Pathfinder, Stream Triad, FDTD-2D, Hotspot, SRAD_V2, BFS, NW, SSSP, ATAX, RA, with a GeoMean per category. Categories: Streaming, Regular, Mixed, Random, Overall]
• An average 28% and 30% performance improvement under 125% and 150% memory oversubscription, respectively

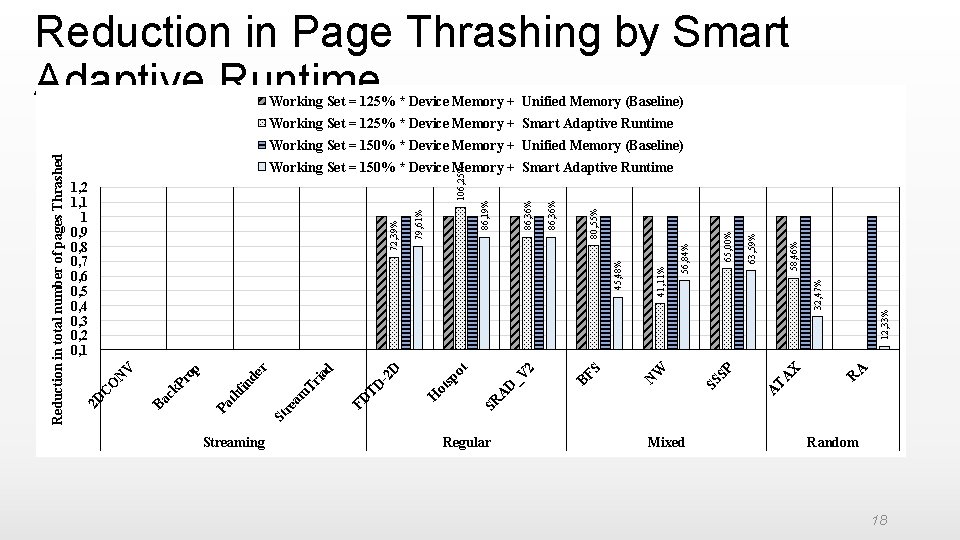

Reduction in Page Thrashing by Smart Adaptive Runtime
[Figure: reduction in total number of pages thrashed. Series: Unified Memory (Baseline) and Smart Adaptive Runtime, each at a working set of 125% and 150% of device memory. Benchmarks: 2DCONV, BackProp, Pathfinder, Stream Triad, FDTD-2D, Hotspot, SRAD_V2, BFS, NW, SSSP, ATAX, RA. Categories: Streaming, Regular, Mixed, Random]

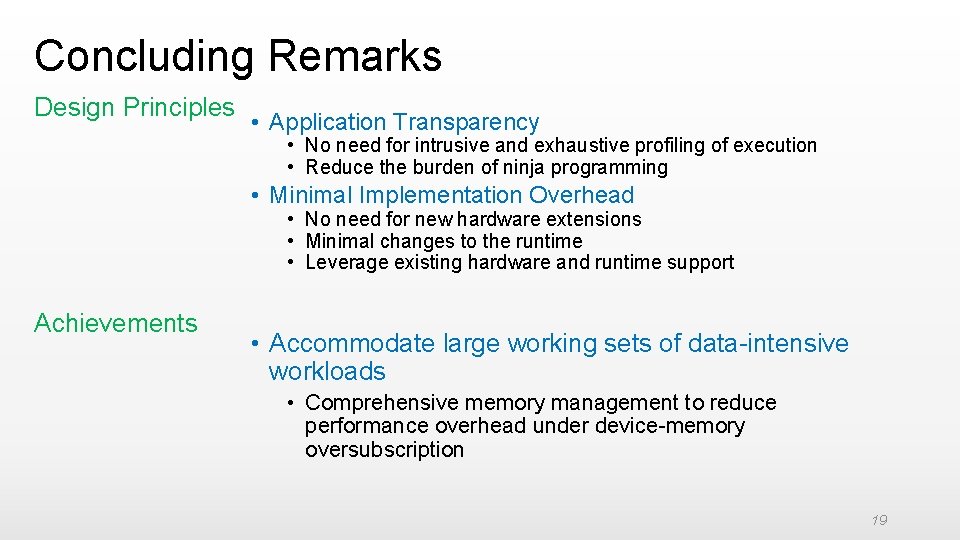

Concluding Remarks
Design Principles
• Application Transparency
  • No need for intrusive and exhaustive profiling of execution
  • Reduce the burden of ninja programming
• Minimal Implementation Overhead
  • No need for new hardware extensions
  • Minimal changes to the runtime
  • Leverage existing hardware and runtime support
Achievements
• Accommodate large working sets of data-intensive workloads
• Comprehensive memory management to reduce performance overhead under device-memory oversubscription

An Adaptive Framework for Oversubscription Management in CPU-GPU Unified Memory
Debashis Ganguly, Ph.D.
debashis@cs.pitt.edu
https://people.cs.pitt.edu/~debashis/
