AMD Opteron Architecture and Software Infrastructure Tim Wilkens


AMD Opteron Architecture and Software Infrastructure
Tim Wilkens, Ph.D., Member of Technical Staff, tim.wilkens@amd.com
6/6/2021 Computation Products Group

Agenda
- Architecture – Opteron, Itanium, Xeon EMT
- ACML – AMD's superior equivalent of MKL
- Compilers – PGI performance enhancements
- Performance – delivering on the promise
- Summary and Closing Points

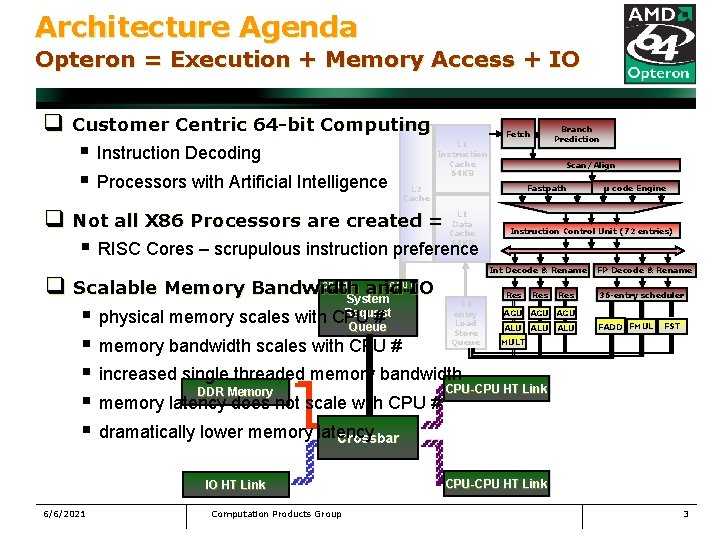

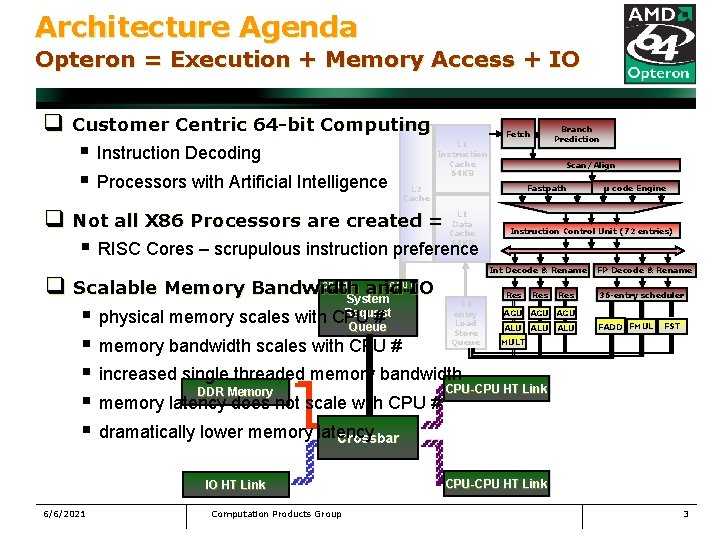

Architecture Agenda: Opteron = Execution + Memory Access + IO
- Customer Centric 64-bit Computing
  - Instruction Decoding
  - Processors with Artificial Intelligence
- Not all x86 Processors are created equal
  - RISC Cores – scrupulous instruction preference
- Scalable Memory Bandwidth and IO
  - physical memory scales with CPU #
  - memory bandwidth scales with CPU #
  - increased single-threaded memory bandwidth
  - memory latency does not scale with CPU #
  - dramatically lower memory latency

Figure: Opteron processor block diagram (branch prediction, fetch, 64 KB L1 instruction cache, scan/align, fastpath and microcode engine, 72-entry instruction control unit, integer and FP decode & rename, reservation stations, 36-entry FP scheduler, AGU/ALU units, integer multiplier, FADD/FMUL/FST pipes, 64 KB L1 data cache, 44-entry load/store queue, L2 cache, system request queue, crossbar, DDR memory, CPU-CPU and IO HyperTransport links)

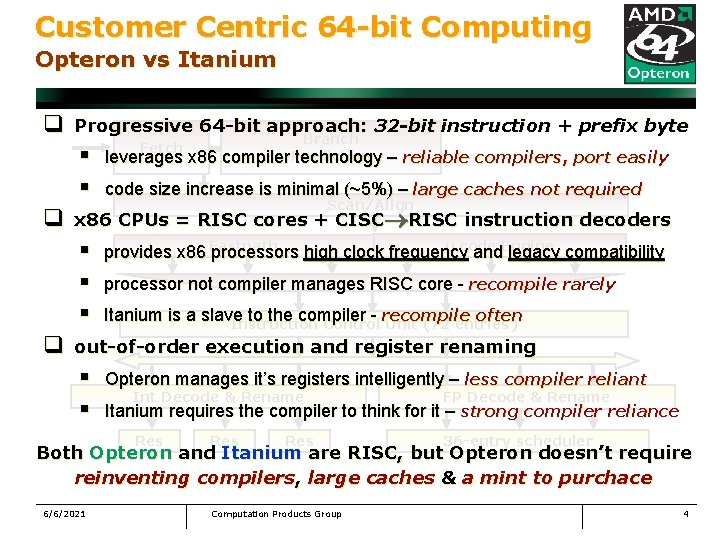

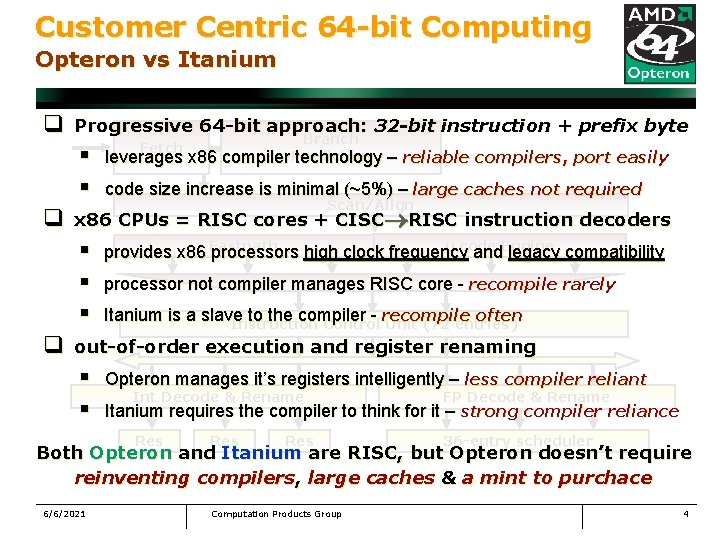

Customer Centric 64-bit Computing: Opteron vs Itanium
- Progressive 64-bit approach: 32-bit instruction + prefix byte
  - leverages x86 compiler technology – reliable compilers, port easily
  - code size increase is minimal (~5%) – large caches not required
- x86 CPUs = RISC cores + CISC-to-RISC instruction decoders
  - provides x86 processors high clock frequency and legacy compatibility
  - processor, not compiler, manages the RISC core – recompile rarely
  - Itanium is a slave to the compiler – recompile often
- Out-of-order execution and register renaming
  - Opteron manages its registers intelligently – less compiler reliant
  - Itanium requires the compiler to think for it – strong compiler reliance
- Both Opteron and Itanium are RISC, but Opteron doesn't require reinventing compilers, large caches & a mint to purchase

Figure: Opteron front end (branch prediction, fetch, scan/align, fastpath and microcode engine, 72-entry instruction control unit, integer and FP decode & rename, reservation stations, 36-entry scheduler)
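You can see the "32-bit instruction + prefix byte" approach directly in the instruction encoding. The sketch below uses a hypothetical helper that handles only register-to-register ADD (opcode 01 /r). It shows that the 64-bit form is the 32-bit encoding plus one REX byte, and that the same byte is how registers r8 to r15 are reached. It illustrates the encoding rule and is not a general assembler.

```c
#include <stdint.h>

/* Register numbers follow the x86-64 encoding order:
 * rax=0, rcx=1, rdx=2, rbx=3, rsp=4, rbp=5, rsi=6, rdi=7, r8..r15=8..15. */

/* REX prefix layout: 0100WRXB.
 * W=1 selects 64-bit operand size; R, X and B extend the ModRM.reg,
 * SIB.index and ModRM.rm fields so they can name r8..r15. */
static uint8_t rex_prefix(int w, int r, int x, int b) {
    return (uint8_t)(0x40 | (w << 3) | (r << 2) | (x << 1) | b);
}

/* Encode register-to-register "ADD dst, src" (opcode 01 /r).
 * Writes at most 3 bytes to out and returns the encoded length. */
static int encode_add_rr(int dst, int src, int is64, uint8_t out[3]) {
    int n = 0;
    int r = (src >> 3) & 1;  /* high bit of the source, carried in ModRM.reg */
    int b = (dst >> 3) & 1;  /* high bit of the destination, carried in ModRM.rm */
    if (is64 || r || b)      /* REX is only needed for 64-bit width or r8..r15 */
        out[n++] = rex_prefix(is64, r, 0, b);
    out[n++] = 0x01;                                            /* ADD r/m, r */
    out[n++] = (uint8_t)(0xC0 | ((src & 7) << 3) | (dst & 7));  /* ModRM, mod=11 */
    return n;
}
```

For example, `add eax, ebx` encodes as 01 D8, `add rax, rbx` as 48 01 D8, and `add r8, r9` as 4D 01 C8. Each 64-bit form costs exactly one extra byte.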

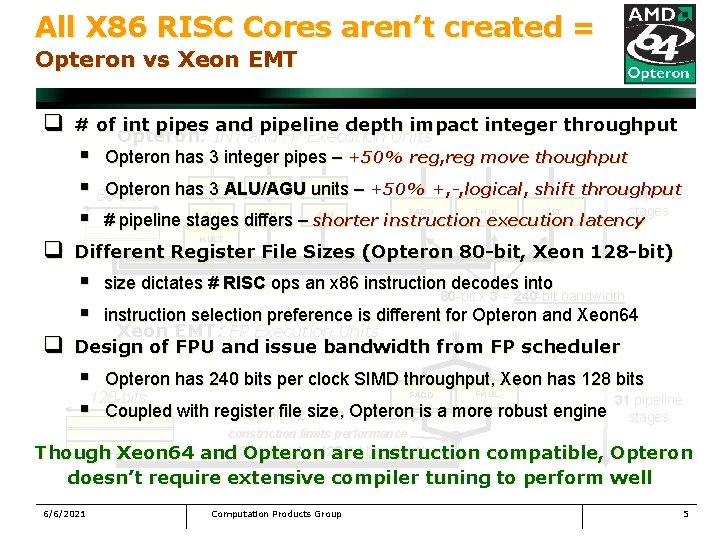

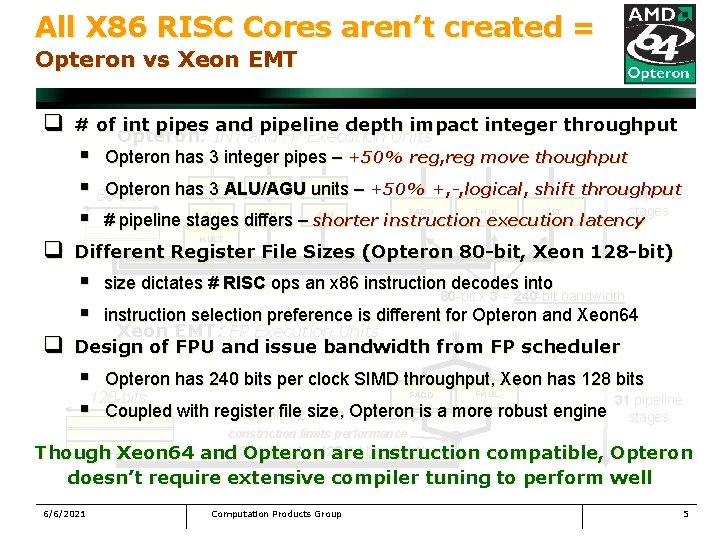

All x86 RISC Cores aren't created equal: Opteron vs Xeon EMT
- # of integer pipes and pipeline depth impact integer throughput
  - Opteron has 3 integer pipes – +50% reg, reg move throughput
  - Opteron has 3 ALU/AGU units – +50% +, -, logical, shift throughput
  - # of pipeline stages differs – shorter instruction execution latency
- Different register file sizes (Opteron 80-bit, Xeon 128-bit)
  - size dictates the # of RISC ops an x86 instruction decodes into
  - instruction selection preference is different for Opteron and Xeon 64
- Design of the FPU and issue bandwidth from the FP scheduler
  - Opteron has 240 bits per clock of SIMD throughput, Xeon has 128 bits
  - coupled with register file size, Opteron is a more robust engine
- Though Xeon 64 and Opteron are instruction compatible, Opteron doesn't require extensive compiler tuning to perform well

Figure: execution units. Opteron INT and FP execution units: 12 pipeline stages; AGU, ALU, FADD, FMUL, FST, MULT; 80-bit × 3 = 240-bit bandwidth. Xeon EMT FP execution units: 31 pipeline stages; FADD, FMUL; 80-bit × 2 = 160 bits, constriction limits performance; 128-bit × 1 = 128-bit bandwidth.

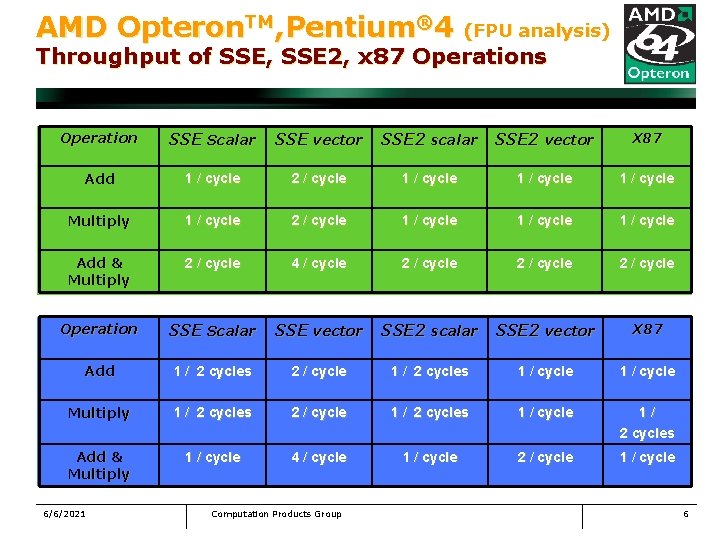

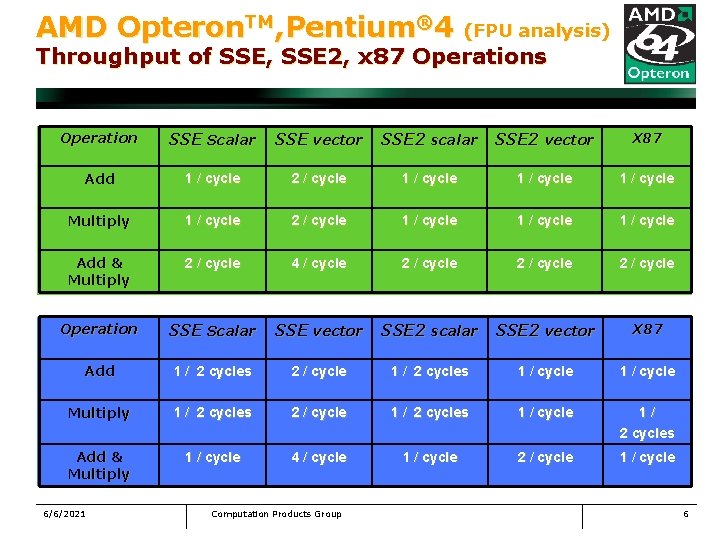

AMD Opteron™, Pentium® 4 (FPU analysis): Throughput of SSE, SSE2, x87 Operations

AMD Opteron:

| Operation | SSE scalar | SSE vector | SSE2 scalar, SSE2 vector, x87 |
|---|---|---|---|
| Add | 1 / cycle | 2 / cycle | 1 / cycle |
| Multiply | 1 / cycle | 2 / cycle | 1 / cycle |
| Add & Multiply | 2 / cycle | 4 / cycle | 2 / cycle |

Pentium 4:

| Operation | SSE scalar | SSE vector | SSE2 scalar | SSE2 vector | x87 |
|---|---|---|---|---|---|
| Add | 1 / 2 cycles | 2 / cycle | 1 / 2 cycles | 1 / cycle | |
| Multiply | 1 / 2 cycles | 2 / cycle | 1 / 2 cycles | 1 / cycle | 1 / 2 cycles |
| Add & Multiply | 1 / cycle | 4 / cycle | 1 / cycle | 2 / cycle | 1 / cycle |
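The tables translate into peak rates with simple arithmetic. This sketch makes two assumptions. First, the entries are floating-point results per cycle. Second, adds and multiplies issue to separate pipes, so the combined rate is their sum; this matches every complete Add & Multiply row above. Under those assumptions, peak rate is clock frequency times the combined rate. The function names are hypothetical.

```c
/* Combined add+multiply rate, assuming the two operations use
 * separate pipes and can issue in the same cycle. */
static double combined_rate(double add_per_cycle, double mul_per_cycle) {
    return add_per_cycle + mul_per_cycle;
}

/* Peak rate in GFLOPS = clock (GHz) x floating-point results per cycle. */
static double peak_gflops(double clock_ghz, double results_per_cycle) {
    return clock_ghz * results_per_cycle;
}
```

Two worked cases:

- **Opteron, 2.2 GHz, SSE2:** 2.2 × (1 + 1) = 4.4 GFLOPS, whether the code is scalar or vector.
- **3.2 GHz core using the Pentium 4 rates** (the Xeon EMT clock used on the FFT slides): SSE2 vector code peaks at 3.2 × (1 + 1) = 6.4 GFLOPS, while SSE2 scalar code peaks at 3.2 × (0.5 + 0.5) = 3.2 GFLOPS.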

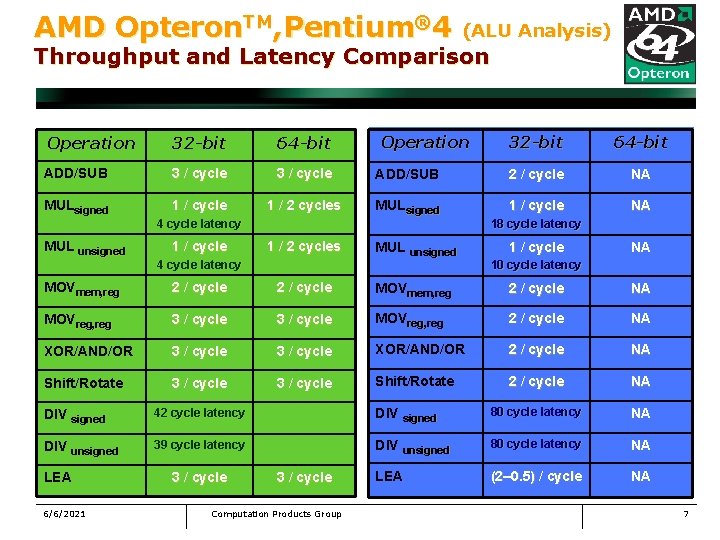

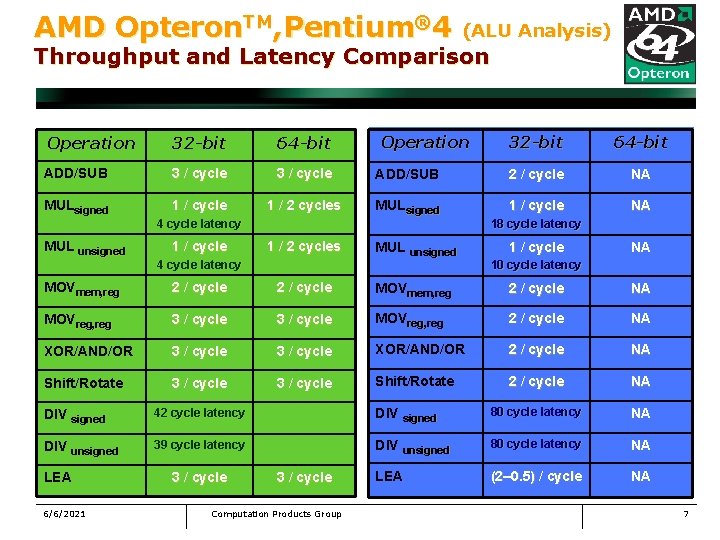

AMD Opteron™, Pentium® 4 (ALU Analysis): Throughput and Latency Comparison

| Operation | AMD Opteron | Pentium 4 (32-bit; all 64-bit entries NA) |
|---|---|---|
| ADD/SUB | 3 / cycle | 2 / cycle |
| MUL signed | 32-bit: 1 / cycle; 64-bit: 1 / 2 cycles, 4-cycle latency | 1 / cycle, 18-cycle latency |
| MUL unsigned | 32-bit: 1 / cycle; 64-bit: 1 / 2 cycles, 4-cycle latency | 1 / cycle, 10-cycle latency |
| MOV mem, reg | | 2 / cycle |
| MOV reg, reg | 3 / cycle | 2 / cycle |
| XOR/AND/OR | 3 / cycle | 2 / cycle |
| Shift/Rotate | 3 / cycle | 2 / cycle |
| DIV signed | 42-cycle latency | 80-cycle latency |
| DIV unsigned | 39-cycle latency | 80-cycle latency |
| LEA | 3 / cycle | (2–0.5) / cycle |
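The table mixes two different limits:

- **Throughput:** operations issued per cycle.
- **Latency:** cycles until a result is available.

A rough model, assuming nothing else limits execution, shows which one matters. A chain of dependent operations is latency-bound, while independent operations overlap and are limited by issue rate. The function names below are hypothetical.

```c
/* Dependent chain: each operation waits for the previous result. */
static double dependent_cycles(long n, double latency_cycles) {
    return (double)n * latency_cycles;
}

/* Independent operations: limited only by how many issue per cycle. */
static double independent_cycles(long n, double ops_per_cycle) {
    return (double)n / ops_per_cycle;
}
```

Applying the model to the table:

- **Dependent 64-bit multiplies on Opteron:** 1,000 of them take about 1,000 × 4 = 4,000 cycles.
- **Independent 64-bit multiplies on Opteron:** 1,000 of them are issue-limited at 1 per 2 cycles, about 2,000 cycles.
- **Signed divides:** a chain of 1,000 costs about 42,000 cycles on Opteron versus 80,000 on Pentium 4.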

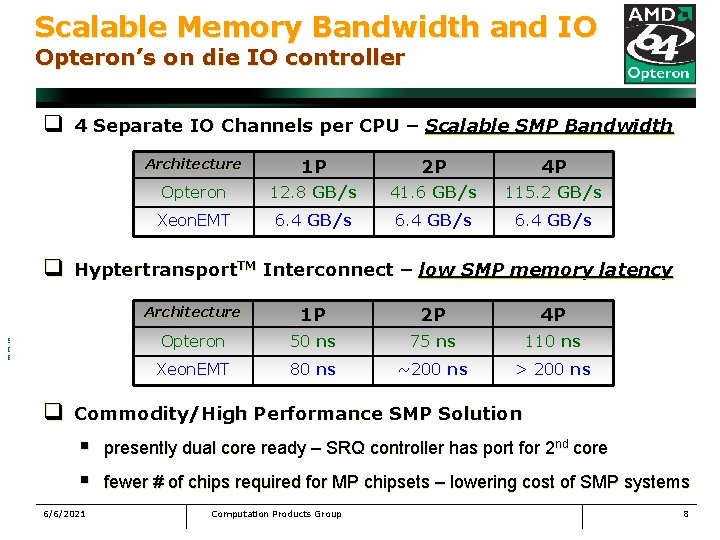

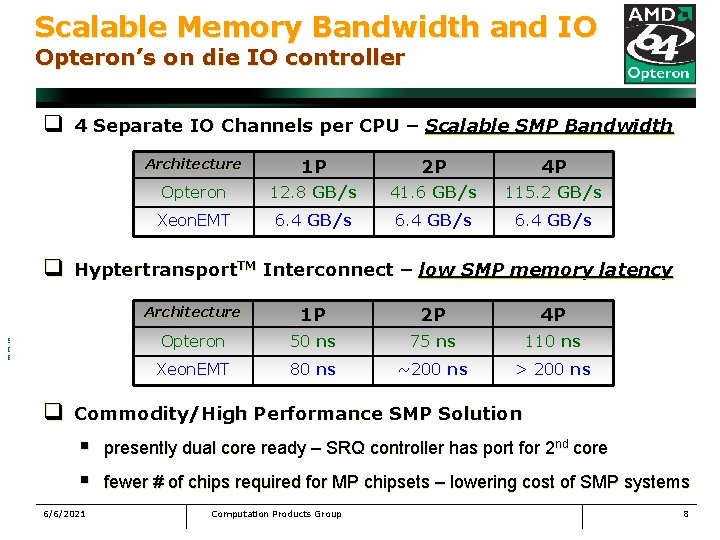

Scalable Memory Bandwidth and IO: Opteron's on-die IO controller
- 4 separate IO channels per CPU – scalable SMP bandwidth
- Commodity/high-performance SMP solution
  - HyperTransport™ interconnect – low SMP memory latency
  - fewer # of chips required for MP chipsets – lowering the cost of SMP systems
- Opteron architecture is 1.6 GT/s ready; the SRQ (system request queue) controller has a port for a 2nd core

Figure: AMD Opteron processor server vs Intel Xeon MP processor server.
- **Opteron side:**
  - each processor has its own 144-bit DDR memory at 6.4 GB/s, and memory capacity scales with the number of processors
  - HyperTransport™ buses (6.4–8.0 GB/s, 1.6–2.0 GT/s) enable glueless CPU expansion for up to 8-way servers and glueless I/O to PCI-X bridges
  - the HyperTransport link has ample bandwidth for I/O devices
  - separate memory and I/O paths eliminate most bus contention
- **Xeon side:**
  - a maximum of four processors per memory controller hub
  - FSB bandwidth (6.4 GB/s) is shared across all four processors, and I/O and memory share the FSB
  - limited memory bandwidth is shared by all processors
  - a maximum of three PCI-X bridges per memory controller hub
- **Other labels:**
  - aggregate bandwidths of 6.4, 12.8, 25.6–28.8, 41.6 and 115.2 GB/s across 1P, 2P and 4P configurations
  - memory latencies ranging from 50 ns to more than 200 ns
  - key: memory traffic, I/O traffic, IPC traffic; bandwidth bottleneck where link bandwidth < I/O bridge bandwidth
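Under the slide's model, the scaling difference is arithmetic:

- Each Opteron socket adds its own 144-bit DDR controller (6.4 GB/s), so aggregate bandwidth grows with the socket count.
- The Xeon MP front-side bus (6.4 GB/s) is divided among its processors.

This sketch assumes ideal scaling and ignores remote (NUMA) accesses and HyperTransport traffic. The function names are hypothetical.

```c
/* Each socket contributes its own memory controller's bandwidth. */
static double opteron_aggregate_gbs(int sockets, double per_socket_gbs) {
    return sockets * per_socket_gbs;
}

/* A shared front-side bus is split evenly among the CPUs on it. */
static double shared_fsb_per_cpu_gbs(int cpus, double fsb_gbs) {
    return fsb_gbs / cpus;
}
```

With 6.4 GB/s per socket, 1P, 2P and 4P Opteron systems reach 6.4, 12.8 and 25.6 GB/s. Four Xeon MP processors sharing a 6.4 GB/s FSB average 1.6 GB/s each.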

ACML 2.1 Agenda
- Features
- BLAS, LAPACK, FFT Performance
- OpenMP Performance
- ACML 2.5 Snapshot – Soon to be released

Acknowledgments

Work carried out in collaboration with the Numerical Algorithms Group (NAG). Below is a list of contributors for each facet of ACML.
- NAG Project Manager: Mick Pont
- AMD Compiler/OS Support: Chip Freitag
- BLAS/LAPACK Arch/Optimization: Ed Smyth, Tim Wilkens
- FFT Arch/Optimization: Lawrence Mulholland, Tim Wilkens, Themos Tsikas

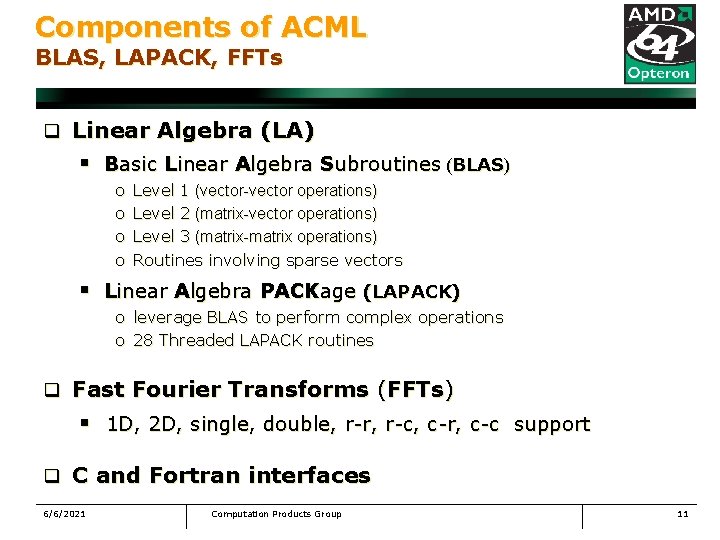

Components of ACML: BLAS, LAPACK, FFTs
- Linear Algebra (LA)
  - Basic Linear Algebra Subroutines (BLAS)
    - Level 1 (vector-vector operations)
    - Level 2 (matrix-vector operations)
    - Level 3 (matrix-matrix operations)
    - Routines involving sparse vectors
  - Linear Algebra PACKage (LAPACK)
    - leverages BLAS to perform complex operations
    - 28 threaded LAPACK routines
- Fast Fourier Transforms (FFTs)
  - 1D, 2D, single, double, r-r, r-c, c-r, c-c support
- C and Fortran interfaces
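Level 3 BLAS routines such as DGEMM compute C = alpha*A*B + beta*C. The unoptimized C reference below covers only the no-transpose case. It shows the operation and the column-major, leading-dimension conventions that BLAS interfaces use. `dgemm_ref` is a hypothetical name, not an ACML entry point; ACML's assembly-tuned kernels compute the same result far faster.

```c
#include <stddef.h>

/* Reference DGEMM, no transpose: C = alpha*A*B + beta*C.
 * A is m x k, B is k x n, C is m x n, all column-major:
 * element (i, j) of A lives at a[i + j*lda], and likewise for B and C. */
static void dgemm_ref(int m, int n, int k, double alpha,
                      const double *a, int lda,
                      const double *b, int ldb,
                      double beta, double *c, int ldc) {
    for (int j = 0; j < n; ++j) {
        for (int i = 0; i < m; ++i) {
            double sum = 0.0;
            for (int p = 0; p < k; ++p)
                sum += a[i + (size_t)p * lda] * b[p + (size_t)j * ldb];
            /* As in BLAS, C is not read when beta == 0, so stale NaNs are not propagated. */
            double cij = (beta == 0.0) ? 0.0 : beta * c[i + (size_t)j * ldc];
            c[i + (size_t)j * ldc] = alpha * sum + cij;
        }
    }
}
```

With A = [[1, 2], [3, 4]] stored as {1, 3, 2, 4} and B = [[5, 6], [7, 8]] stored as {5, 7, 6, 8}, a call with alpha = 1 and beta = 0 leaves C = {19, 43, 22, 50}. That is [[19, 22], [43, 50]] in column-major order.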

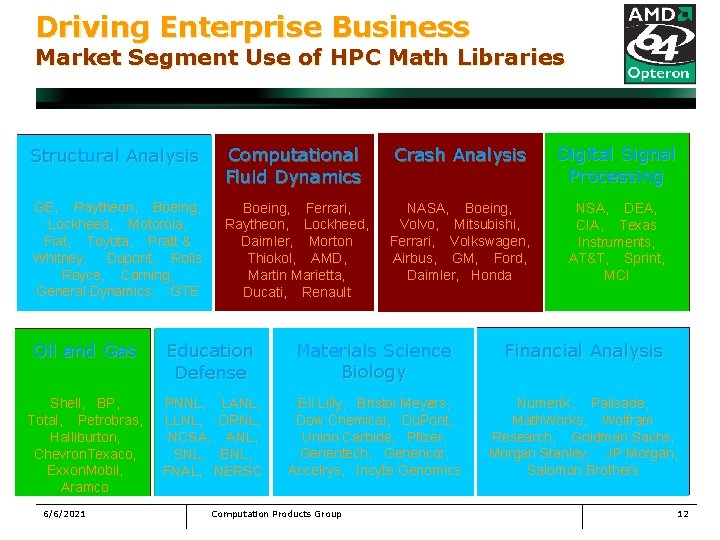

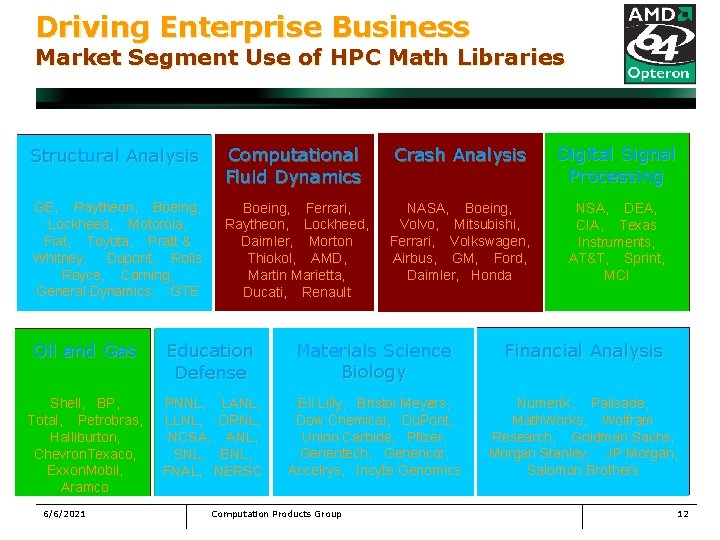

Driving Enterprise Business: Market Segment Use of HPC Math Libraries
- Structural Analysis: GE, Raytheon, Boeing, Lockheed, Motorola, Fiat, Toyota, Pratt & Whitney, DuPont, Rolls Royce, Corning, General Dynamics, GTE
- Computational Fluid Dynamics: Boeing, Ferrari, Raytheon, Lockheed, Daimler, Morton Thiokol, AMD, Martin Marietta, Ducati, Renault
- Crash Analysis: NASA, Boeing, Volvo, Mitsubishi, Ferrari, Volkswagen, Airbus, GM, Ford, Daimler, Honda
- Digital Signal Processing: NSA, DEA, CIA, Texas Instruments, AT&T, Sprint, MCI
- Oil and Gas: Shell, BP, Total, Petrobras, Halliburton, ChevronTexaco, ExxonMobil, Aramco
- Education and Defense: PNNL, LANL, LLNL, ORNL, NCSA, ANL, SNL, BNL, FNAL, NERSC
- Materials Science and Biology: Eli Lilly, Bristol-Myers, Dow Chemical, DuPont, Union Carbide, Pfizer, Genentech, Genencor, Accelrys, Incyte Genomics
- Financial Analysis: NumeriX, Palisade, MathWorks, Wolfram Research, Goldman Sachs, Morgan Stanley, JP Morgan, Salomon Brothers

Connecting HPC and you: How HPC impacts our daily lives
- Gaming – real-world realism
  - water surfaces, physics gaming engines
- Rendered Movies
  - modeling real clothing surfaces (PDEs)
- Medical Procedures
  - CAT scan imaging, cancer radiation therapy
- Airline Flight Schedules
  - minimizing equations of constraint (fuel, food, time, etc.)
- National Security
  - voice analysis and authentication, weapons simulation

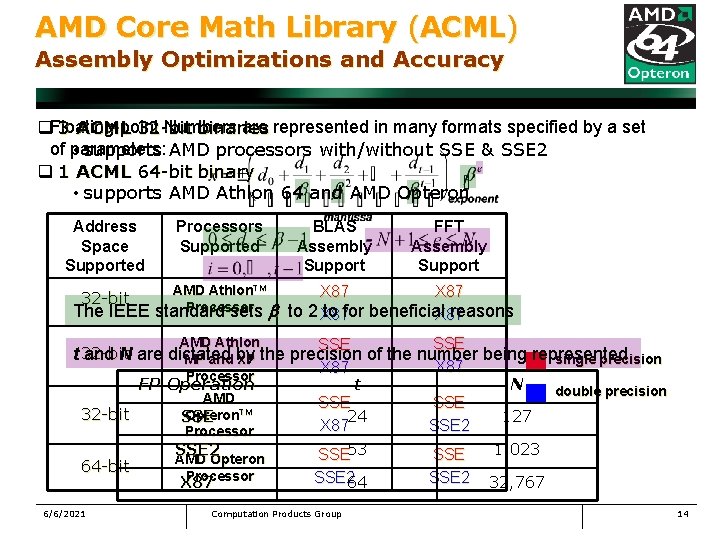

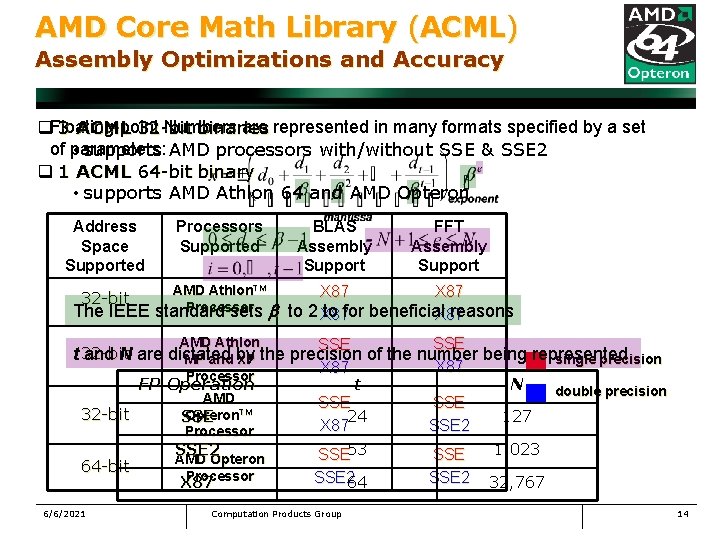

AMD Core Math Library (ACML): Assembly Optimizations and Accuracy
- 3 ACML 32-bit binaries
  - support AMD processors with/without SSE & SSE2
- 1 ACML 64-bit binary
  - supports AMD Athlon 64 and AMD Opteron
- Floating-point numbers are represented in many formats specified by a set of parameters
  - the IEEE standard sets b = 2 for beneficial reasons
  - t and N are dictated by the precision of the number being represented

| Precision | t | N |
|---|---|---|
| Single | 24 | 127 |
| Double | 53 | 1,023 |
| x87 extended | 64 | 16,383 |

Table: ACML supported processors by address space (32-bit: AMD Athlon processor, AMD Athlon MP and XP, AMD Opteron processor; 64-bit: AMD Opteron processor), with BLAS and FFT assembly support spanning x87, SSE and SSE2.
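You can check the t and N values for single and double precision against the C `<float.h>` macros. This sketch assumes N denotes the largest unbiased exponent. C's `*_MAX_EXP` macros count exponents for a significand in [0.5, 1), so each is one larger than N. The struct and function names are hypothetical.

```c
#include <float.h>

/* Model parameters: radix b, significand digits t, largest exponent N. */
struct fp_params { int b, t, N; };

static struct fp_params single_params(void) {
    struct fp_params p = { FLT_RADIX, FLT_MANT_DIG, FLT_MAX_EXP - 1 };
    return p;
}

static struct fp_params double_params(void) {
    struct fp_params p = { FLT_RADIX, DBL_MANT_DIG, DBL_MAX_EXP - 1 };
    return p;
}
```

On IEEE hardware these return (2, 24, 127) and (2, 53, 1023). The x87 extended format is less portable to query: `LDBL_MANT_DIG` is 64 with GCC on x86 Linux but 53 with Microsoft's compiler, which maps `long double` to double.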

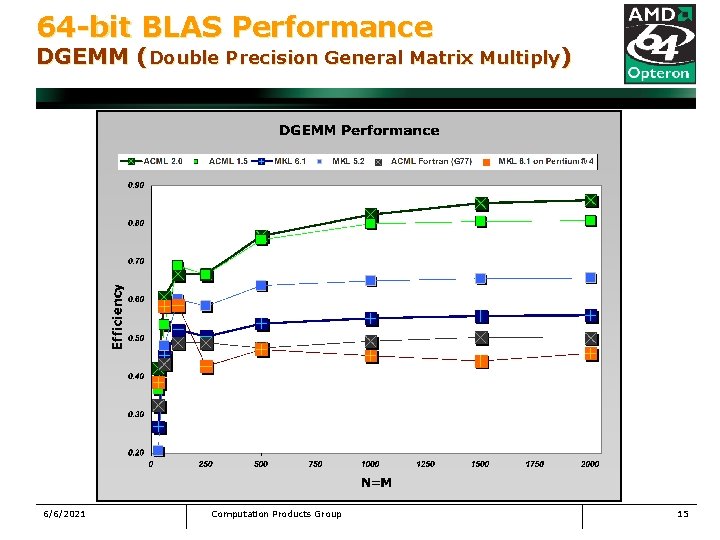

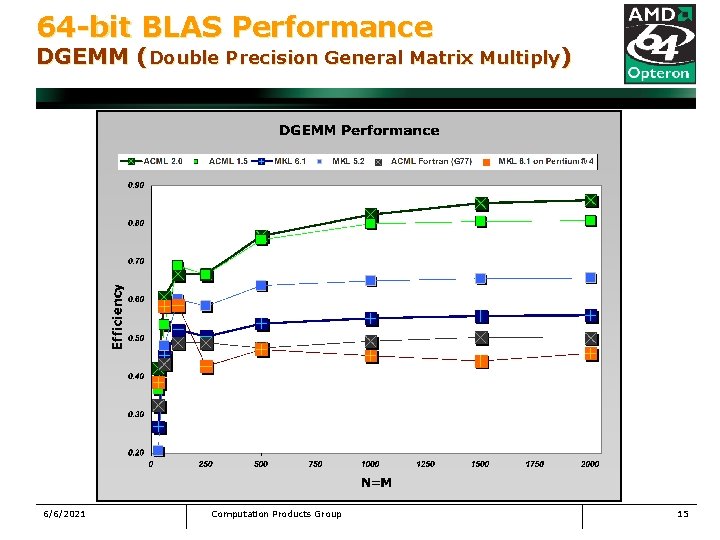

64-bit BLAS Performance: DGEMM (Double Precision General Matrix Multiply)

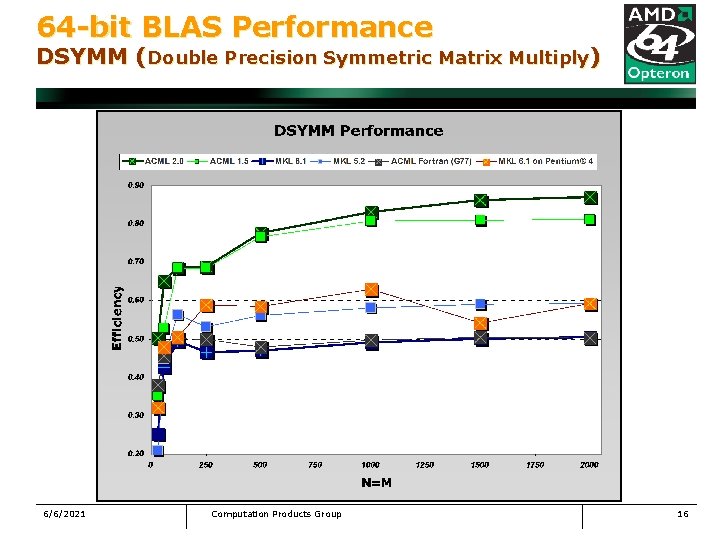

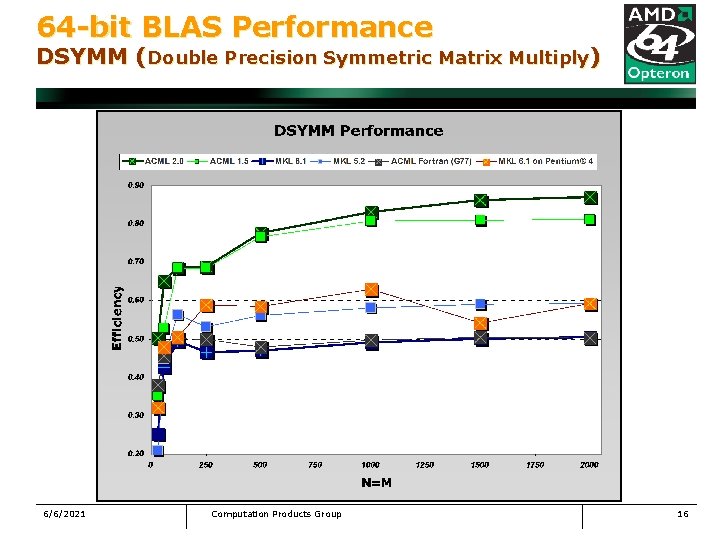

64-bit BLAS Performance: DSYMM (Double Precision Symmetric Matrix Multiply)

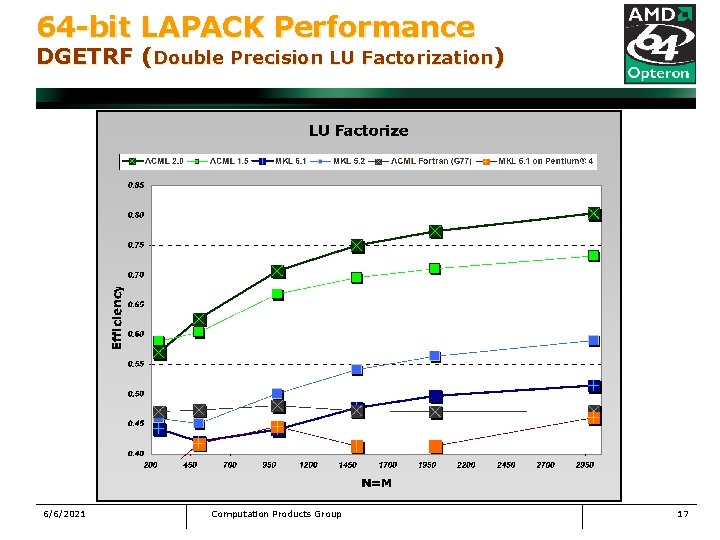

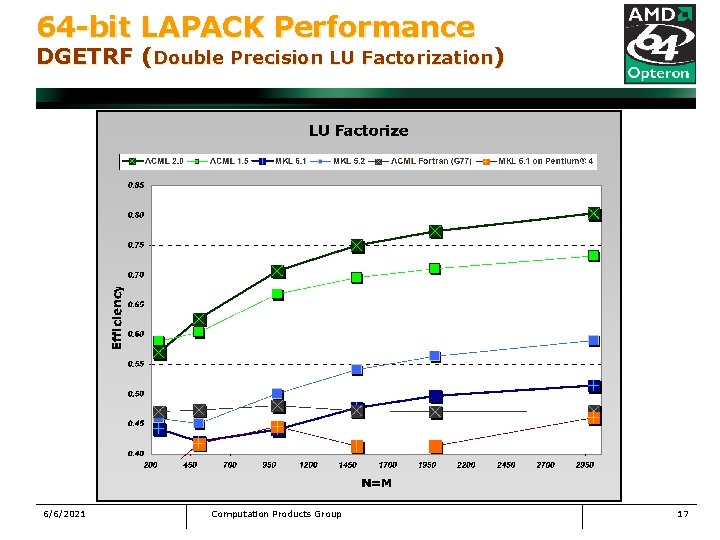

64-bit LAPACK Performance: DGETRF (Double Precision LU Factorization)

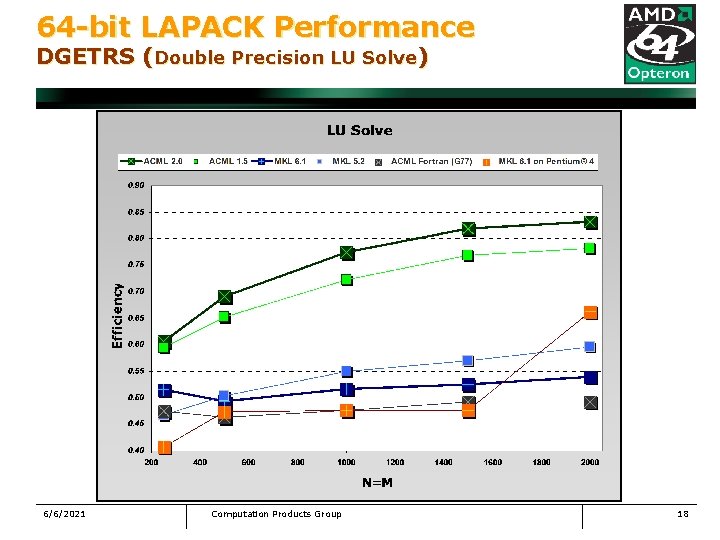

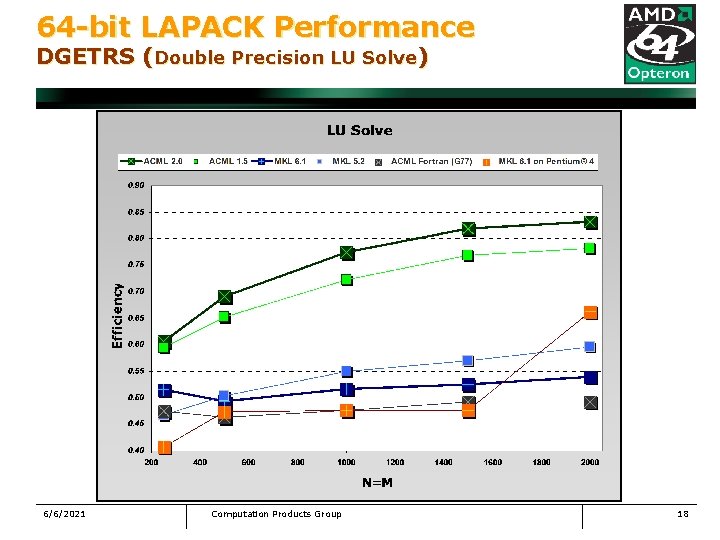

64-bit LAPACK Performance: DGETRS (Double Precision LU Solve)

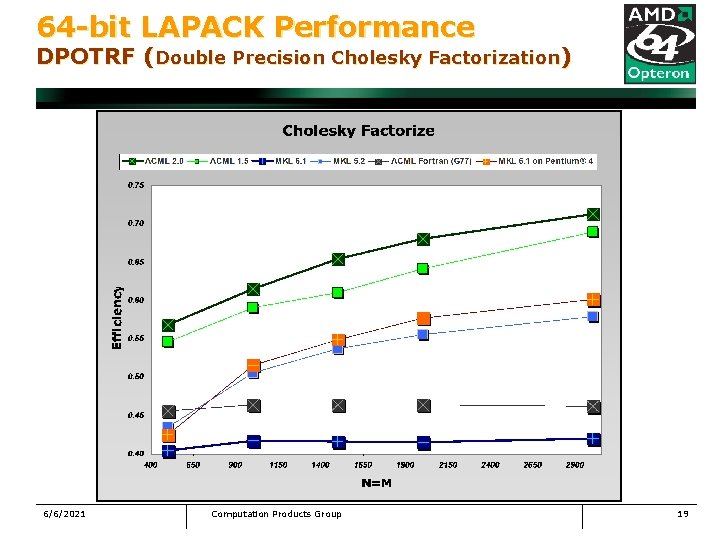

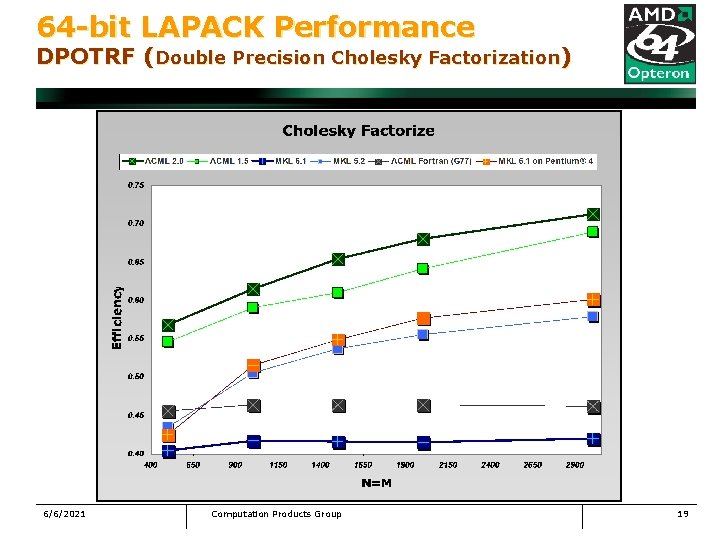

64-bit LAPACK Performance: DPOTRF (Double Precision Cholesky Factorization)

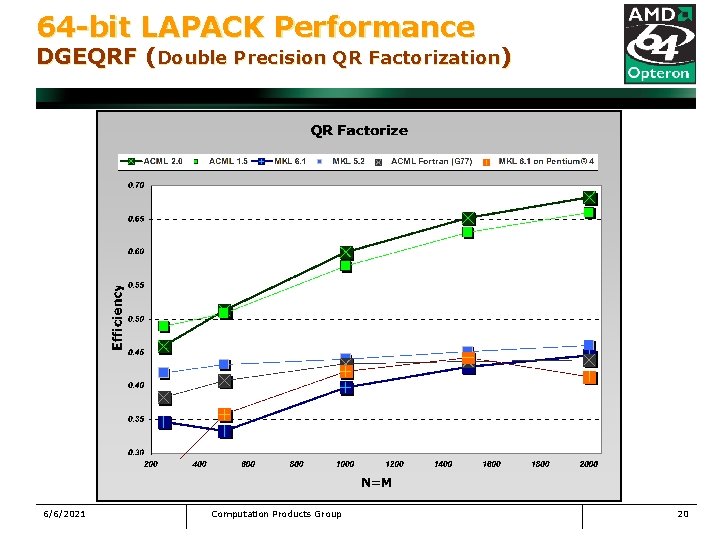

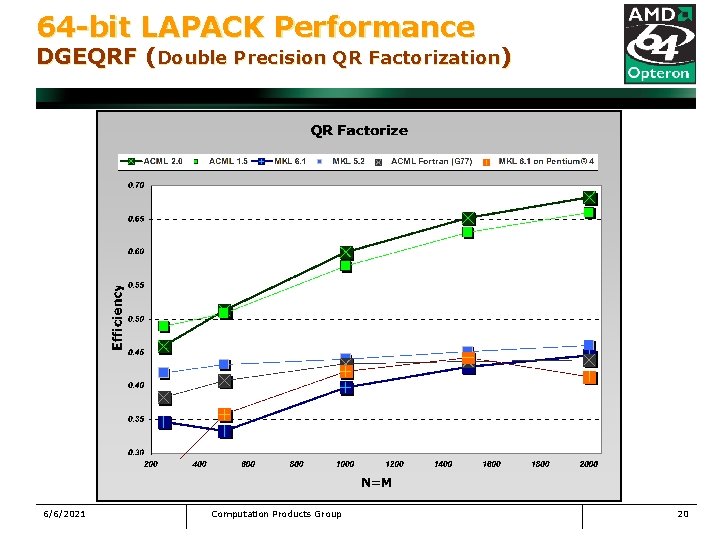

64-bit LAPACK Performance: DGEQRF (Double Precision QR Factorization)

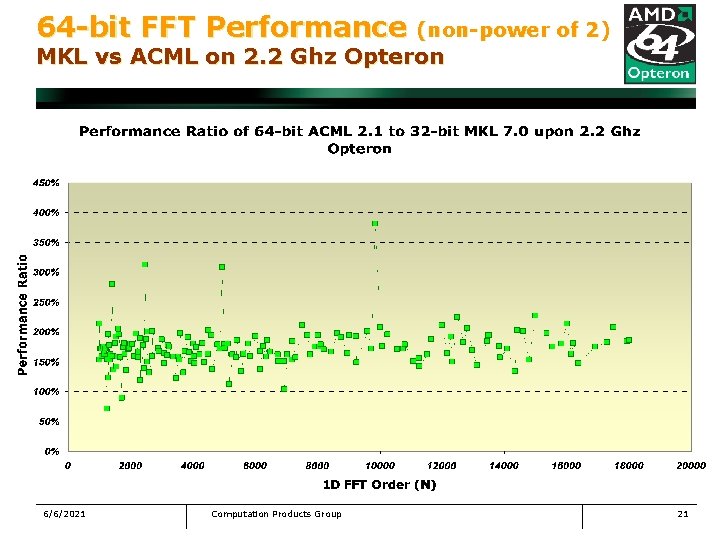

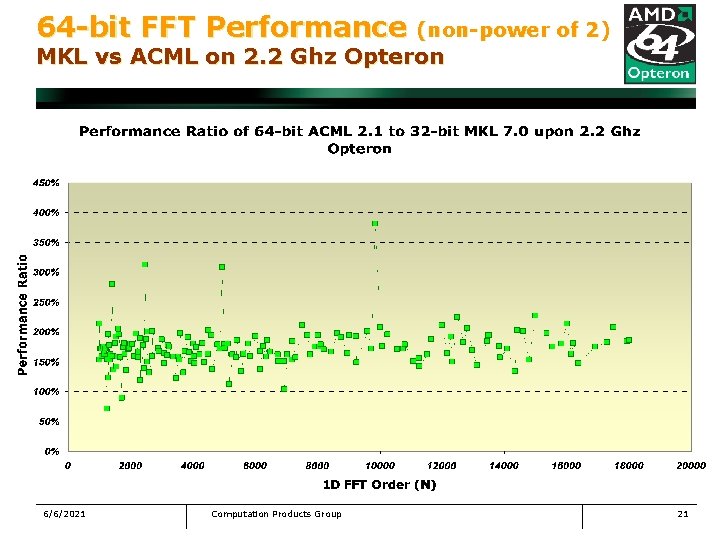

64-bit FFT Performance (non-power of 2): MKL vs ACML on 2.2 GHz Opteron

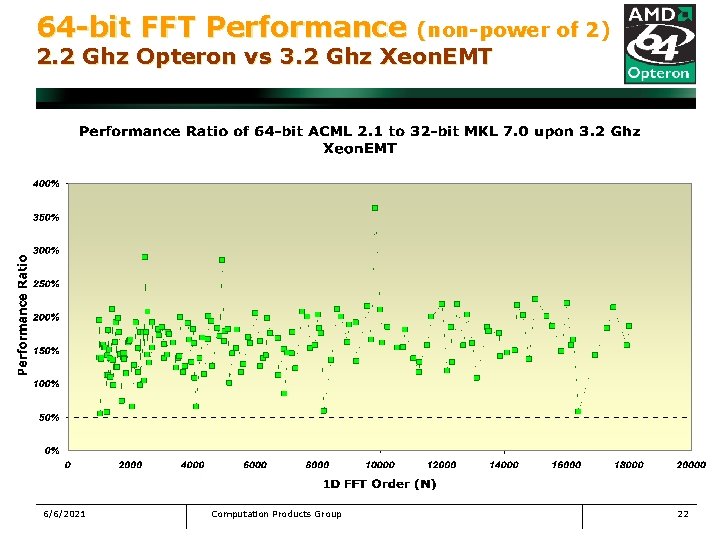

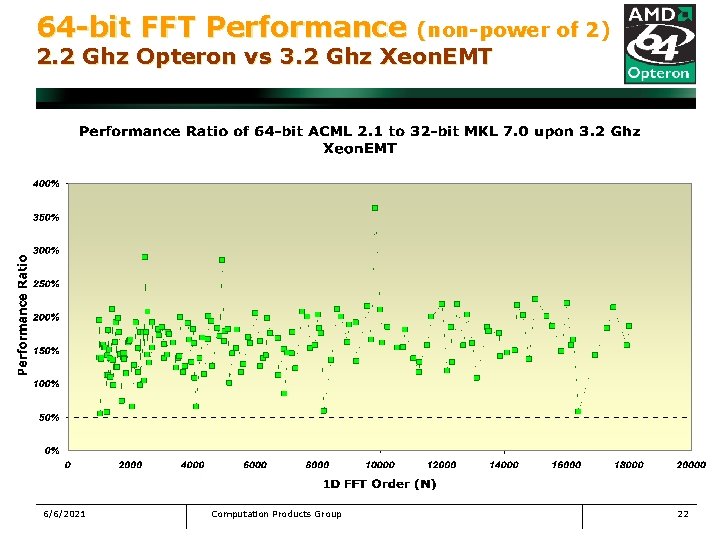

64-bit FFT Performance (non-power of 2): 2.2 GHz Opteron vs 3.2 GHz Xeon EMT

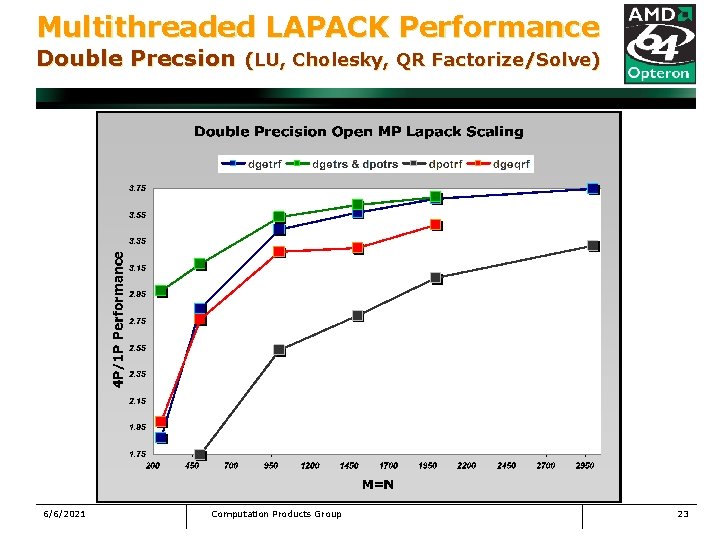

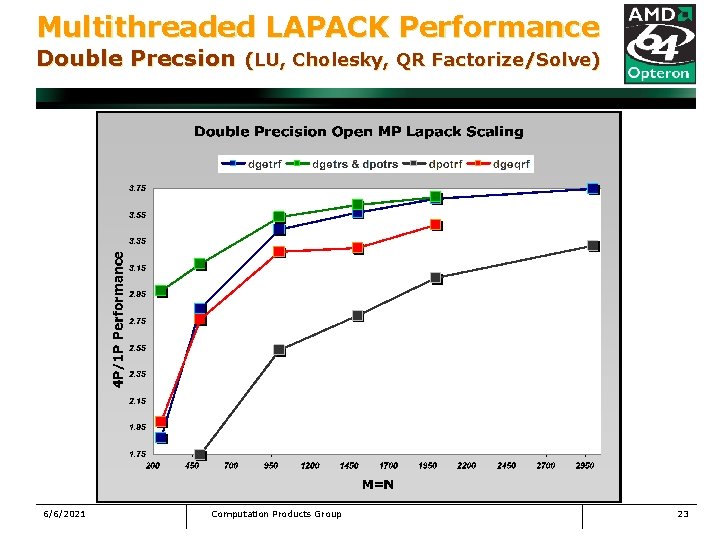

Multithreaded LAPACK Performance: Double Precision (LU, Cholesky, QR Factorize/Solve)

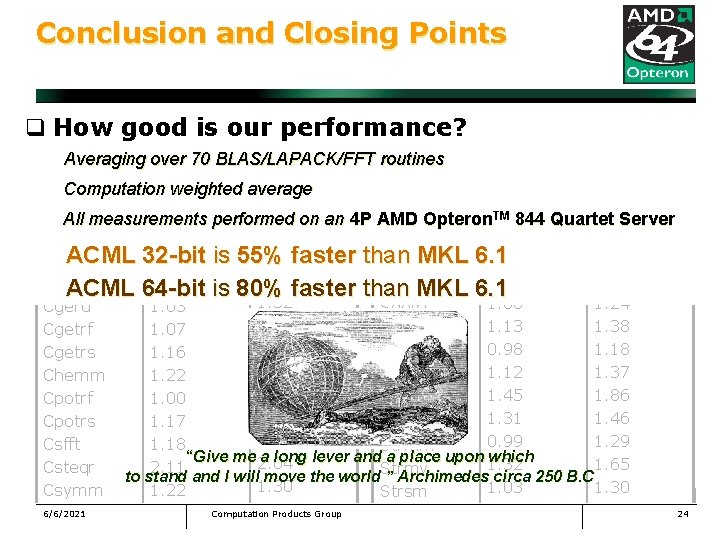

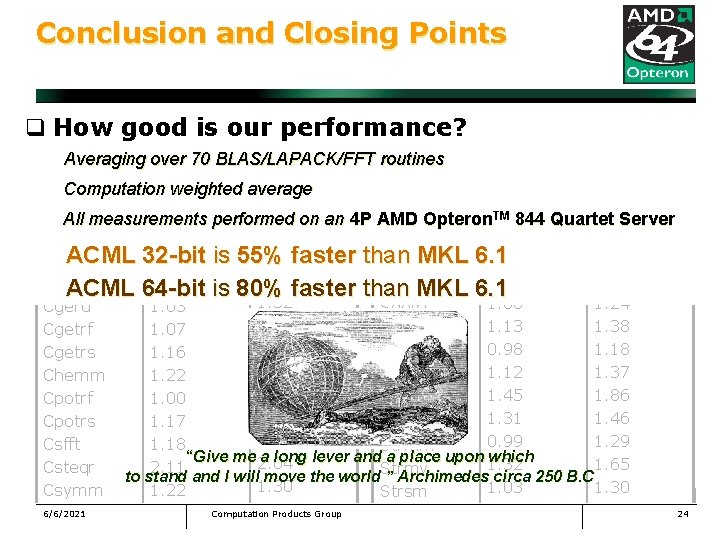

Conclusion and Closing Points
- How good is our performance?
  - ACML 32-bit is 55% faster than MKL 6.1
  - ACML 64-bit is 80% faster than MKL 6.1
  - All measurements performed on a 4P AMD Opteron™ 844 Quartet server

Table: ACML 32 and ACML 64 performance relative to MKL 6.1, averaged over 70 BLAS/LAPACK/FFT routines and as a computation-weighted average. Per-routine ratios are given for cbdsqr, sbdsqr, cfft1d, scfft, cgemm, sgemm, cgemv, sgemv, cgeqrf, sgeqrf, cgerc, sger, cgeru, sgetrf, cgetrf, sgetrs, cgetrs, spotrf, chemm, spotrs, cpotrf, ssteqr, cpotrs, ssymm, csfft, strmm, csteqr, strmv, csymm, strsm, ctrmm and strsv.

"Give me a long lever and a place upon which to stand, and I will move the world." – Archimedes, circa 250 B.C.
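The summary percentages are speedups: "X% faster" means t_MKL / t_ACML = 1 + X/100, so 55% and 80% correspond to 1.55 and 1.80. The slide does not define its "computation weighted average". The sketch below assumes one plausible definition, total reference time over total new time, so time-consuming routines count for more. The function names are hypothetical.

```c
/* Speedup of a new implementation over a reference for one routine. */
static double speedup(double t_ref, double t_new) {
    return t_ref / t_new;
}

/* One possible computation-weighted average (an assumption, not
 * necessarily the slide's method): ratio of summed run times. */
static double weighted_speedup(const double *t_ref, const double *t_new, int n) {
    double sum_ref = 0.0, sum_new = 0.0;
    for (int i = 0; i < n; ++i) {
        sum_ref += t_ref[i];
        sum_new += t_new[i];
    }
    return sum_ref / sum_new;
}
```

Consider two routines taking 4 s and 1 s with the reference library, and 2 s and 1 s with the new one. The weighted speedup is 5/3 ≈ 1.67, while the plain mean of the per-routine speedups (2.0 and 1.0) is 1.5.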

64-bit ACML 2.5 Snapshot: Small DGEMM Enhancements

Compiler Ecosystem: PGI, Pathscale, GNU, Absoft, Intel, Microsoft and SUN

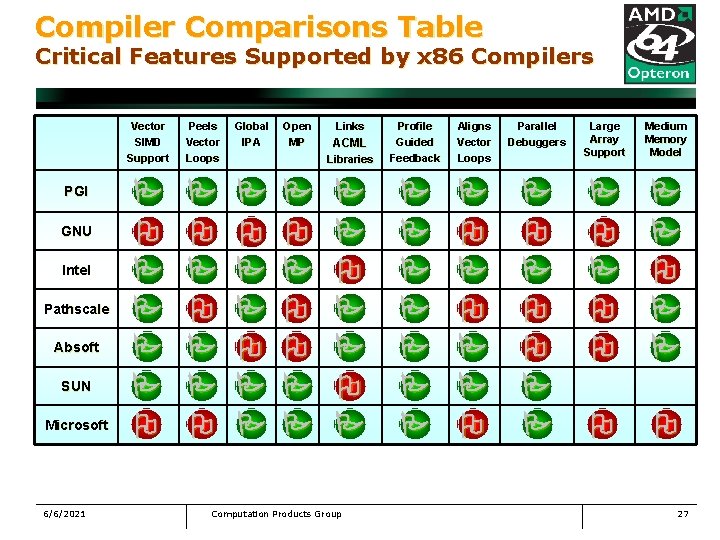

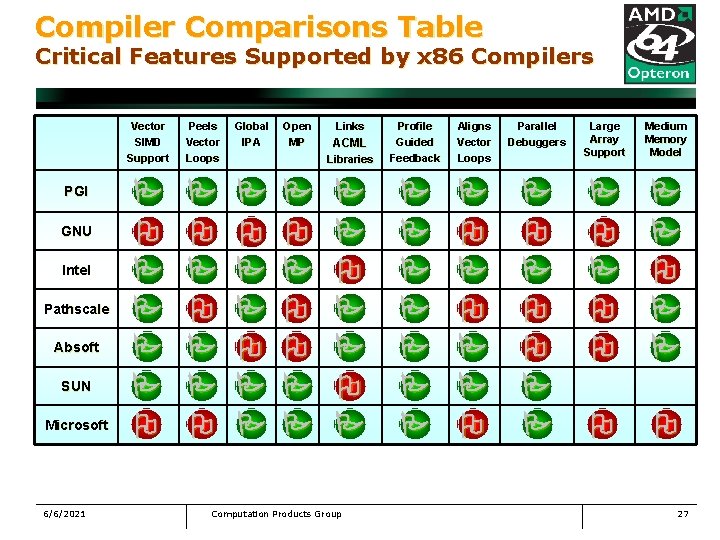

Compiler Comparisons Table: Critical Features Supported by x86 Compilers

Table: feature support matrix for PGI, GNU, Intel, Pathscale, Absoft, SUN and Microsoft. Features compared: vector SIMD support, peeling vector loops, aligning vector loops, global IPA, OpenMP, parallel debuggers, linking ACML libraries, profile-guided feedback, large array support and the medium memory model.

Tuning Performance with Compilers: Maintaining Stability while Optimizing
- STEP 0: Build the application using the following procedure:
  - compile all files with the most aggressive optimization flags: -tp k8-64 -fastsse
  - if compilation fails or the application doesn't run properly, turn off vectorization: -tp k8-64 -fast -Mscalarsse
  - if problems persist, compile at a low optimization level: -tp k8-64 -O1 (or -O0)
- STEP 1: Profile the binary and determine the performance-critical routines
- STEP 2: Repeat STEP 0 on the performance-critical functions, one at a time, and run the binary after each step to check stability

Tuning Memory IO Bandwidth: Optimizing large streaming operations
- 2 methods of writing to memory in x86/x86-64:
  - traditional memory stores cause write allocates to cache (e.g. movq %rax, (%rdi); movsd %xmm0, (%rdi); movapd %xmm0, (%rdi))
    1. the cache line to be modified is read into cache
    2. the line is modified in cache and written back to memory when a new line is loaded in its place
    3. to write N bytes, 2N bytes of bandwidth are generated
  - non-temporal stores bypass the cache and write directly to memory
    1. there is no write allocate to cache: to write N bytes, N bytes of bandwidth are generated
    2. the data is not backed up into cache, so do not use them with often-reused data
- Use non-temporal stores only in functions that write L2/2 bytes of data or more (at least half the L2 cache size); such writes normally have little cache-reuse value.
- Group all eligible routines into a common file so as to simplify the compilation procedure. Enable non-temporal stores in the PGI compiler with the -Mnontemporal compiler option.
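This is a minimal sketch of non-temporal stores written by hand in C. It assumes an x86-64 compiler with SSE2 intrinsics (`<emmintrin.h>`):

- `_mm_stream_pd` emits MOVNTPD, which writes a 16-byte pair of doubles without allocating a cache line.
- `_mm_sfence` orders these weakly ordered stores before later stores.

The function name `stream_fill` is hypothetical. With PGI, the -Mnontemporal option is the compiler-driven way to get non-temporal stores.

```c
#include <emmintrin.h>  /* SSE2 intrinsics; also provides _mm_sfence via xmmintrin.h */
#include <stddef.h>

/* Fill dst[0..n) with value using streaming (non-temporal) stores.
 * dst must be 16-byte aligned; stepping by 2 doubles keeps it aligned. */
static void stream_fill(double *dst, size_t n, double value) {
    __m128d v = _mm_set1_pd(value);
    size_t i = 0;
    for (; i + 2 <= n; i += 2)
        _mm_stream_pd(dst + i, v);  /* MOVNTPD: bypasses the cache, no write allocate */
    for (; i < n; ++i)
        dst[i] = value;             /* ordinary store for an odd-length tail */
    _mm_sfence();                   /* make the streaming stores globally ordered */
}
```

Following the guideline above, reserve this pattern for buffers of at least half the L2 size. For small or soon-reread arrays, an ordinary store loop keeps the data in cache and is usually the better choice.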

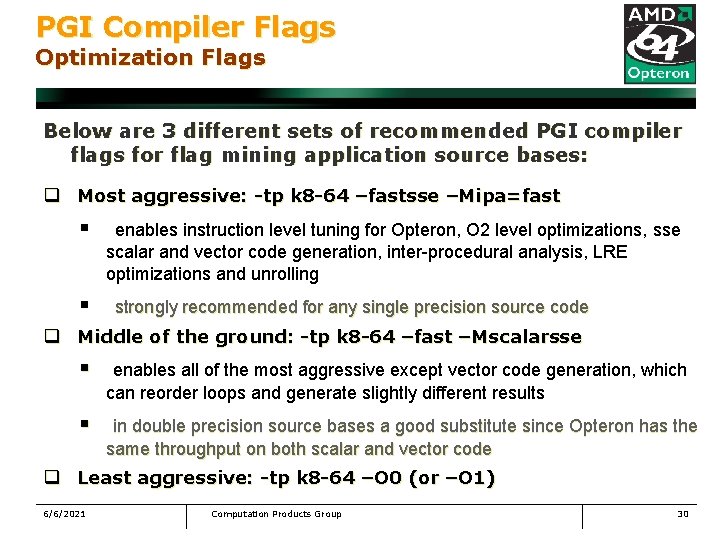

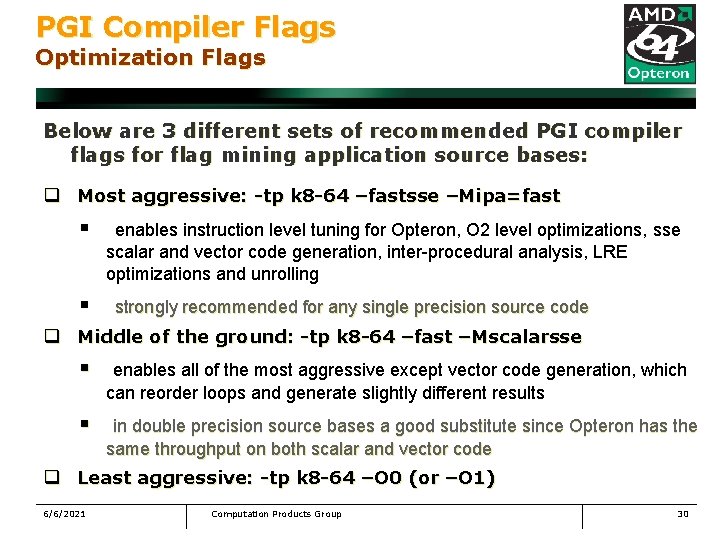

PGI Compiler Flags: Optimization Flags

Below are 3 different sets of recommended PGI compiler flags for flag mining application source bases:
- Most aggressive: -tp k8-64 -fastsse -Mipa=fast
  - enables instruction-level tuning for Opteron, O2-level optimizations, SSE scalar and vector code generation, inter-procedural analysis, LRE optimizations and unrolling
  - strongly recommended for any single-precision source code
- Middle ground: -tp k8-64 -fast -Mscalarsse
  - enables everything in the most aggressive set except vector code generation, which can reorder loops and generate slightly different results
  - a good substitute in double-precision source bases, since Opteron has the same throughput on both scalar and vector code
- Least aggressive: -tp k8-64 -O0 (or -O1)
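Vectorization can change results because a 2-wide SSE2 loop keeps separate partial sums for even and odd elements. That reassociates floating-point addition. The sketch below mimics both orders in scalar C; the function names are hypothetical. It assumes IEEE double arithmetic as performed by SSE2 on x86-64; x87 extended-precision intermediates can give a different serial result.

```c
#include <stddef.h>

/* Left-to-right sum: the order the source code specifies. */
static double sum_serial(const double *x, size_t n) {
    double s = 0.0;
    for (size_t i = 0; i < n; ++i)
        s += x[i];
    return s;
}

/* Two interleaved partial sums, like a 2-wide vectorized loop:
 * even and odd elements accumulate separately, then are combined. */
static double sum_two_lanes(const double *x, size_t n) {
    double lane0 = 0.0, lane1 = 0.0;
    size_t i = 0;
    for (; i + 2 <= n; i += 2) {
        lane0 += x[i];
        lane1 += x[i + 1];
    }
    if (i < n)
        lane0 += x[i];  /* odd-length tail */
    return lane0 + lane1;
}
```

For {1e16, 1.0, -1e16, 1.0} the two functions disagree:

- **Left to right:** the sum is 1.0, because 1e16 + 1.0 rounds back to 1e16 (adjacent doubles there are 2 apart).
- **Two lanes:** the sum is 2.0.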

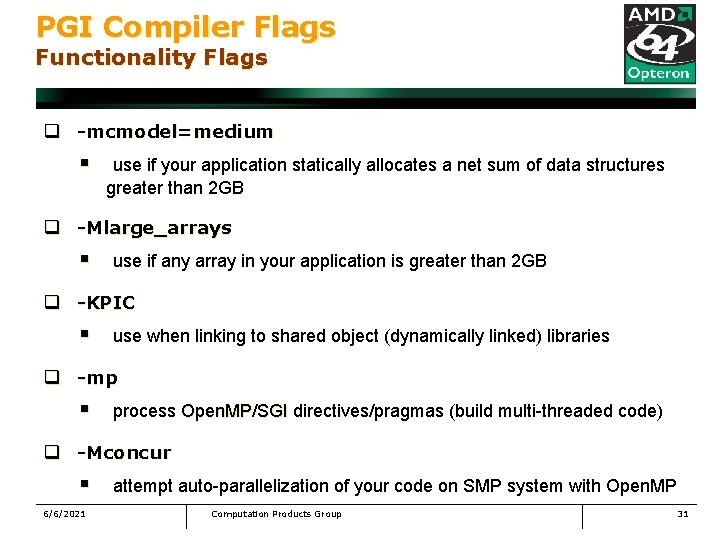

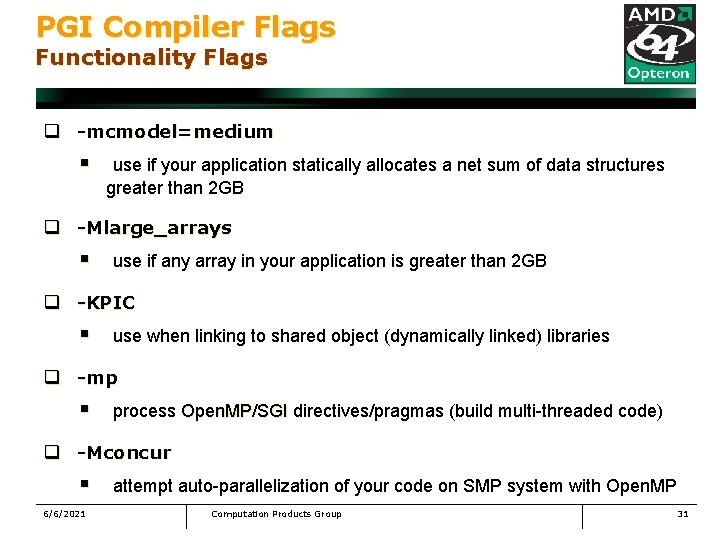

PGI Compiler Flags: Functionality Flags
- -mcmodel=medium: use if your application statically allocates data structures totaling more than 2 GB
- -Mlarge_arrays: use if any array in your application is larger than 2 GB
- -KPIC: use when linking to shared object (dynamically linked) libraries
- -mp: process OpenMP and SGI directives/pragmas (build multi-threaded code)
- -Mconcur: attempt auto-parallelization of your code on an SMP system with OpenMP
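The -mp flag tells the PGI compiler to honor OpenMP directives; GCC's equivalent is -fopenmp. Below is a minimal C sketch using a hypothetical function `dot`. When compiled with the flag, the loop iterations are divided among threads, and the reduction clause combines each thread's private partial sum. Without the flag, the pragma is ignored and the same code runs serially.

```c
/* Dot product parallelized with an OpenMP directive. */
static double dot(const double *x, const double *y, long n) {
    double sum = 0.0;
#pragma omp parallel for reduction(+:sum)
    for (long i = 0; i < n; ++i)
        sum += x[i] * y[i];  /* each thread accumulates a private copy of sum */
    return sum;
}
```

Because each thread sums a different subset, the parallel result can differ from the serial one in the last bits. This is the same reassociation effect that vectorization has.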

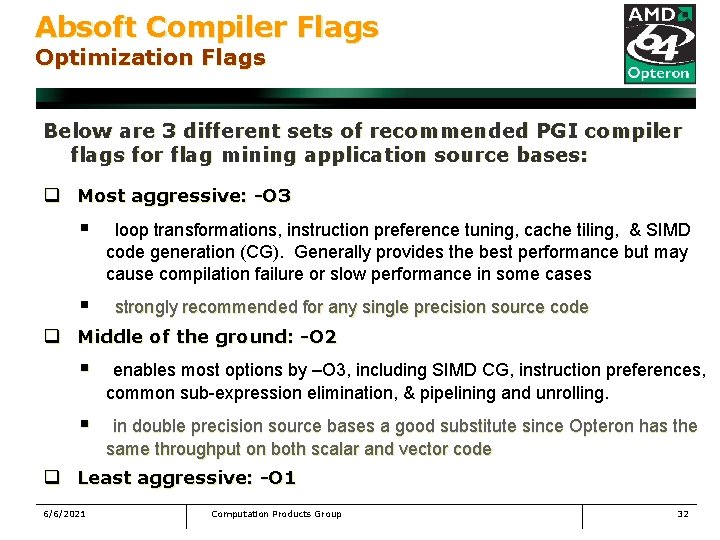

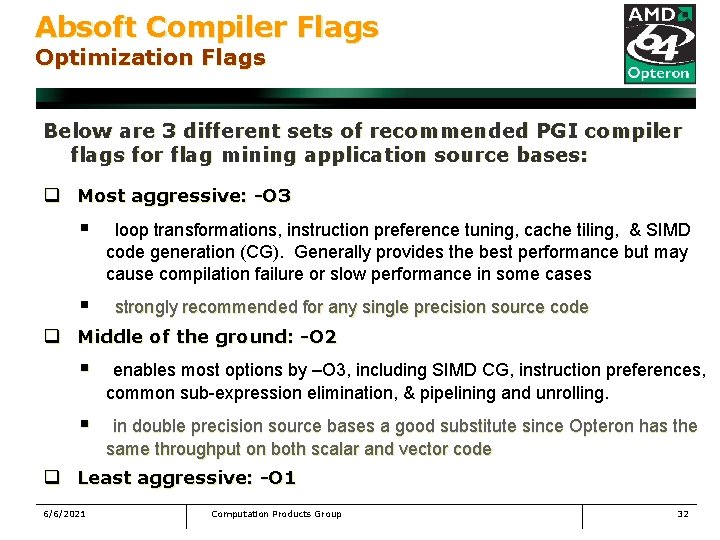

Absoft Compiler Flags: Optimization Flags

Below are 3 different sets of recommended Absoft compiler flags for flag mining application source bases:
- Most aggressive: -O3
  - loop transformations, instruction preference tuning, cache tiling, & SIMD code generation (CG); generally provides the best performance but may cause compilation failure or slow performance in some cases
  - strongly recommended for any single-precision source code
- Middle ground: -O2
  - enables most of the -O3 options, including SIMD CG, instruction preferences, common sub-expression elimination, & pipelining and unrolling
  - a good substitute in double-precision source bases, since Opteron has the same throughput on both scalar and vector code
- Least aggressive: -O1

Absoft Compiler Flags: Functionality Flags
- -mcmodel=medium: use if your application statically allocates data structures totaling more than 2 GB
- -g77: enables full compatibility with g77-produced objects and libraries (this option is required to link to the GNU ACML libraries)
- -fpic: use when linking to shared object (dynamically linked) libraries
- -safefp: performs certain floating-point operations in a slower manner that avoids overflow and underflow and assures proper handling of NaNs
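The slide describes -safefp only in general terms. As a hypothetical illustration of "slower but overflow-safe" arithmetic (not Absoft's actual implementation), compare two ways of averaging two doubles:

- The naive form adds first, so large inputs overflow to infinity.
- The scaled form halves each input first, at the cost of an extra multiply. With subnormal inputs it can lose the lowest bit.

All function names are hypothetical.

```c
#include <float.h>

/* Naive midpoint: a + b overflows to +inf when both are near DBL_MAX. */
static double midpoint_naive(double a, double b) {
    return (a + b) / 2.0;
}

/* Scaled midpoint: halve each operand first so the sum stays in range. */
static double midpoint_scaled(double a, double b) {
    return a * 0.5 + b * 0.5;
}

/* True when x overflowed to +/-infinity (plain comparisons, no libm needed). */
static int overflowed(double x) {
    return x > DBL_MAX || x < -DBL_MAX;
}
```

For a = b = 1e308, the naive version returns +inf while the scaled version returns 1e308.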

Pathscale Compiler Flags: Optimization Flags
- Most aggressive: -Ofast
  - equivalent to -O3 -ipa -OPT:Ofast -fno-math-errno
- Aggressive: -O3
  - optimizations for highest-quality code, enabled at the cost of compile time
  - some generally beneficial optimizations included may hurt performance
- Reasonable: -O2
  - extensive conservative optimizations
  - optimizations almost always beneficial
  - faster compile time
  - avoids changes which affect floating-point accuracy

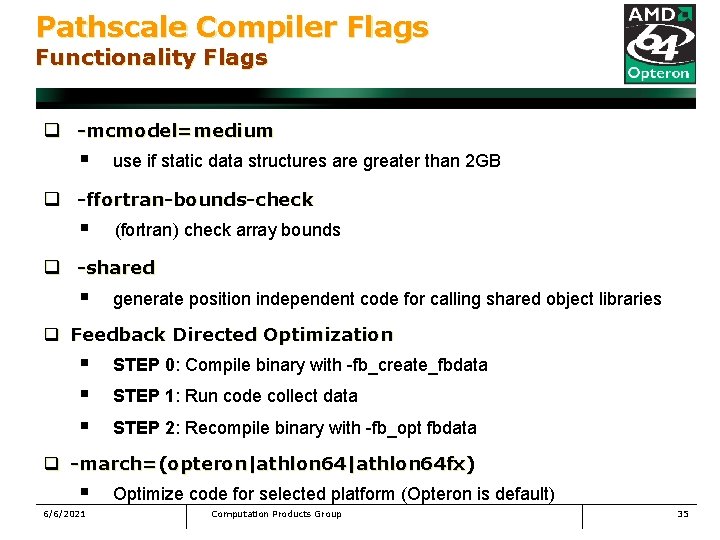

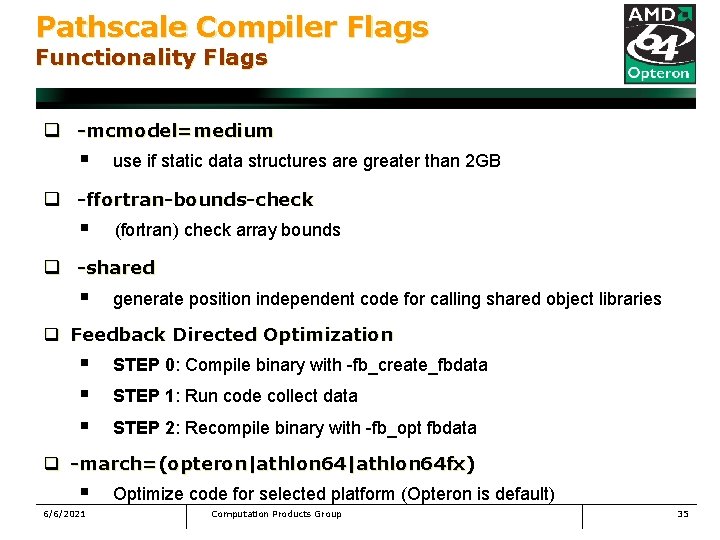

Pathscale Compiler Flags: Functionality Flags
- -mcmodel=medium: use if static data structures are larger than 2 GB
- -ffortran-bounds-check: (Fortran) check array bounds
- -shared: generate position-independent code for calling shared object libraries
- Feedback Directed Optimization
  - STEP 0: Compile the binary with -fb_create fbdata
  - STEP 1: Run the code to collect data
  - STEP 2: Recompile the binary with -fb_opt fbdata
- -march=(opteron|athlon64fx): optimize code for the selected platform (Opteron is the default)

Microsoft Compiler Flags: Optimization Flags
- Recommended flags: /O2 /Ob2 /GL /fp:fast
  - /O2 turns on several general optimizations & /Ob2 enables inline expansion
  - /GL enables inter-procedural (whole-program) optimizations
  - /fp:fast allows the compiler to use a fast floating-point model
- Feedback Directed Optimization
  - STEP 0: Build the binary with /LTCG:PGI
  - STEP 1: Run the code to collect data
  - STEP 2: Rebuild the binary with /LTCG:PGO
- Turn off Buffer Overrun Checking
  - By default the compiler uses /GS to check for buffer overruns. Turning off checking by specifying /GS- may result in additional performance.

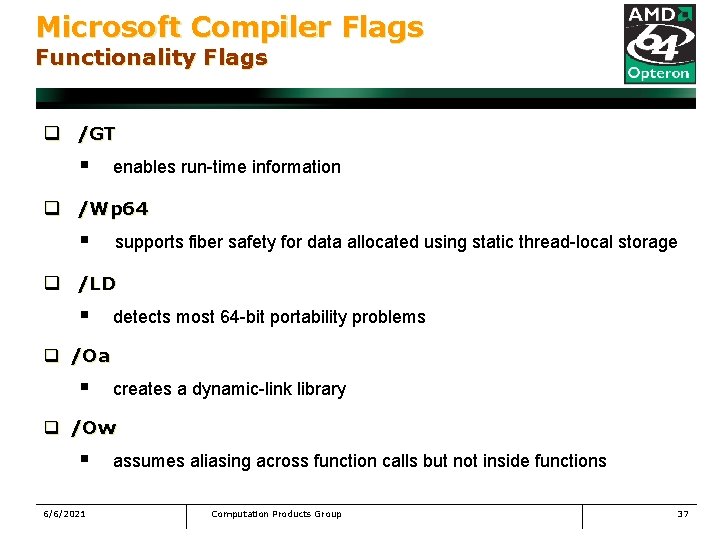

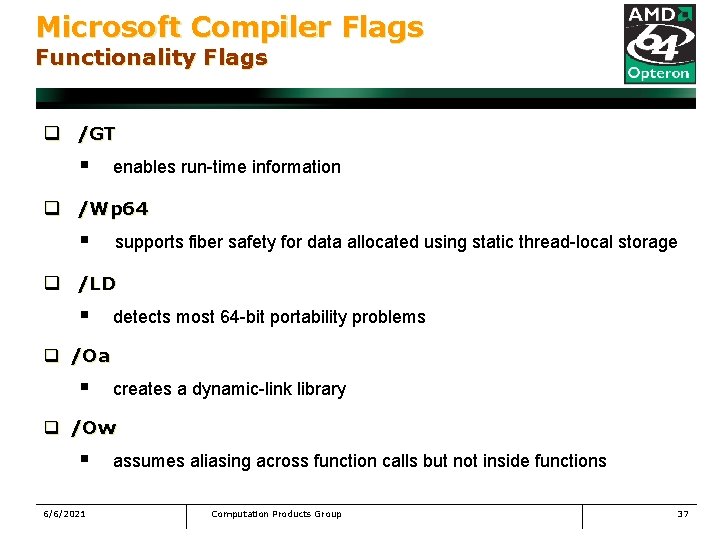

Microsoft Compiler Flags: Functionality Flags
- /GR: enables run-time type information
- /GT: supports fiber safety for data allocated using static thread-local storage
- /Wp64: detects most 64-bit portability problems
- /LD: creates a dynamic-link library
- /Oa: assumes no aliasing
- /Ow: assumes aliasing across function calls but not inside functions

64-Bit Operating Systems: Recommendations and Status
- SUSE SLES 9 with the latest Service Pack available
  - has technology for supporting the latest AMD processor features
  - widest breadth of NUMA support, enabled by default
  - OProfile system profiler installable as an RPM and modularized
  - complete support for static & dynamically linked 32-bit binaries
- Red Hat Enterprise Server 3.0 Service Pack 2 or later
  - NUMA feature support not as complete as that of SUSE SLES 9
  - OProfile installable as an RPM, but installation is not modularized and may require a kernel rebuild if the RPM version isn't satisfactory
  - only SP2 or later has complete 32-bit shared object library support (a requirement to run all 32-bit binaries in 64-bit)
  - the POSIX threading library changed between 2.1 and 3.0, which may require users to rebuild applications

AMD64 ISV Performance: Fluent, LS-DYNA, STAR-CD, Ansys

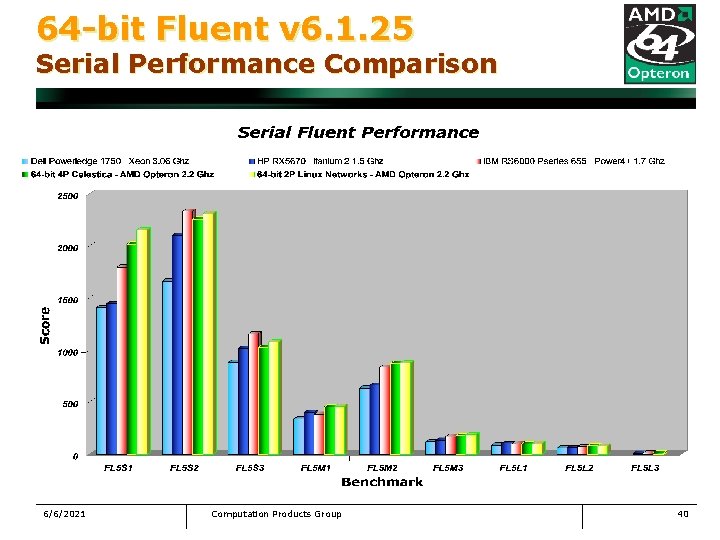

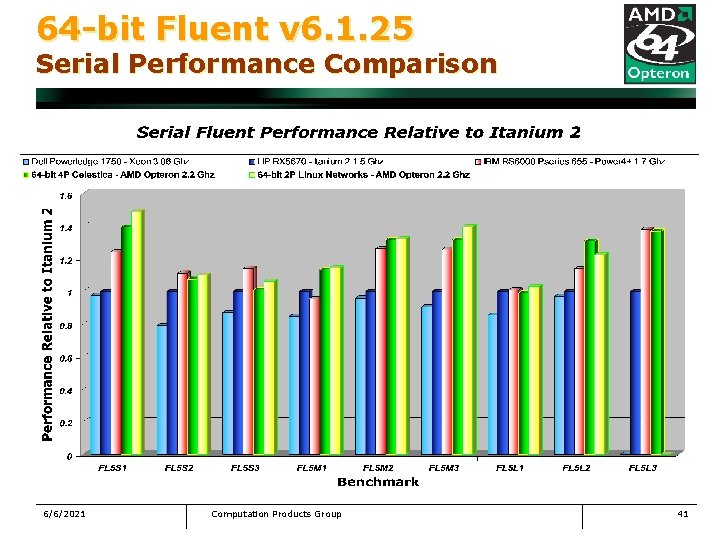

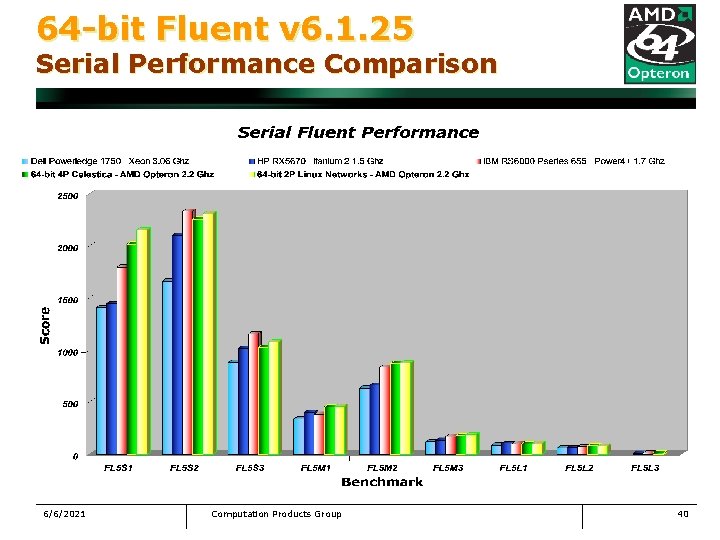

64-bit Fluent v6.1.25 Serial Performance Comparison

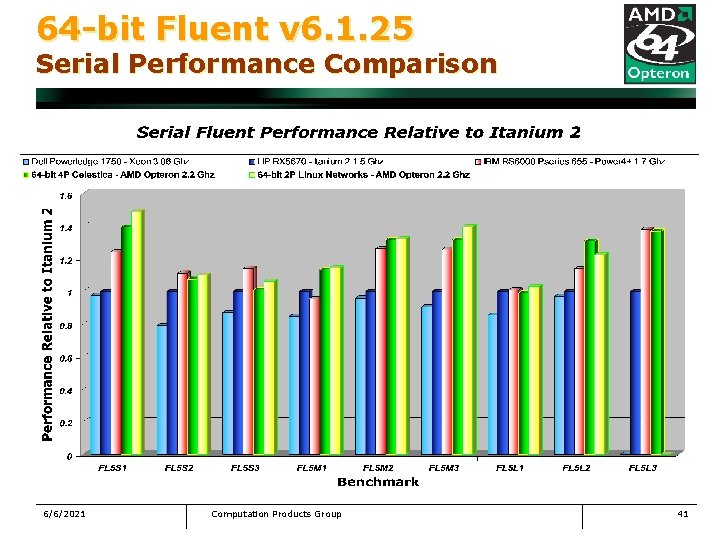

64-bit Fluent v6.1.25 Serial Performance Comparison

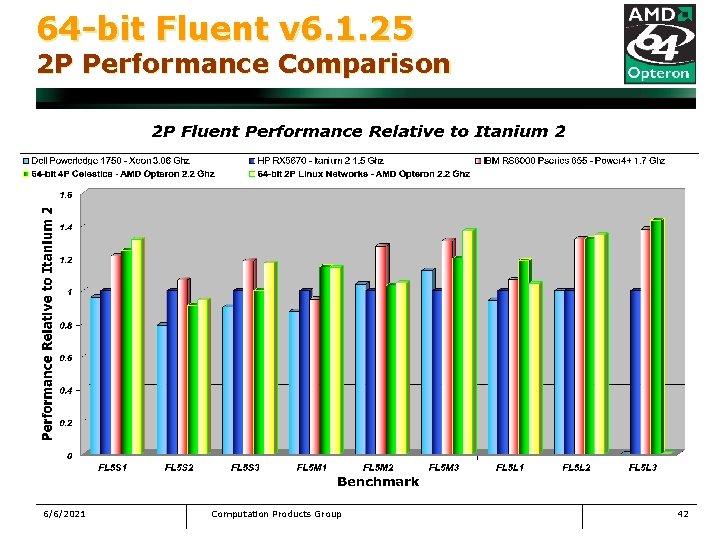

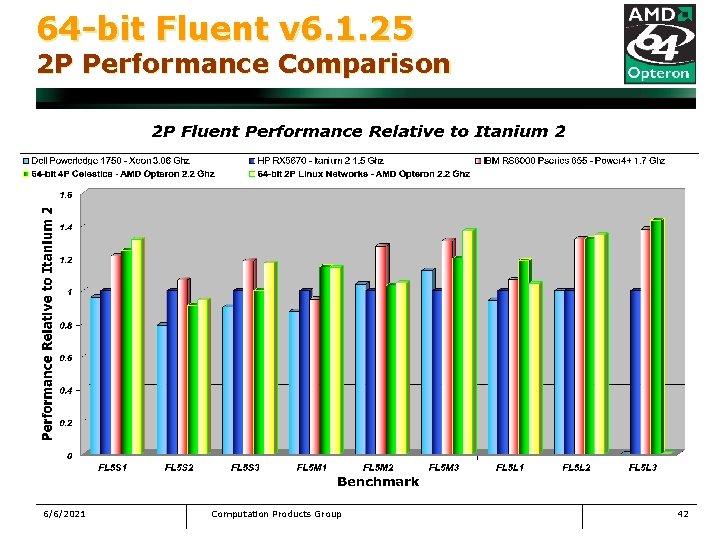

64-bit Fluent v6.1.25 2P Performance Comparison

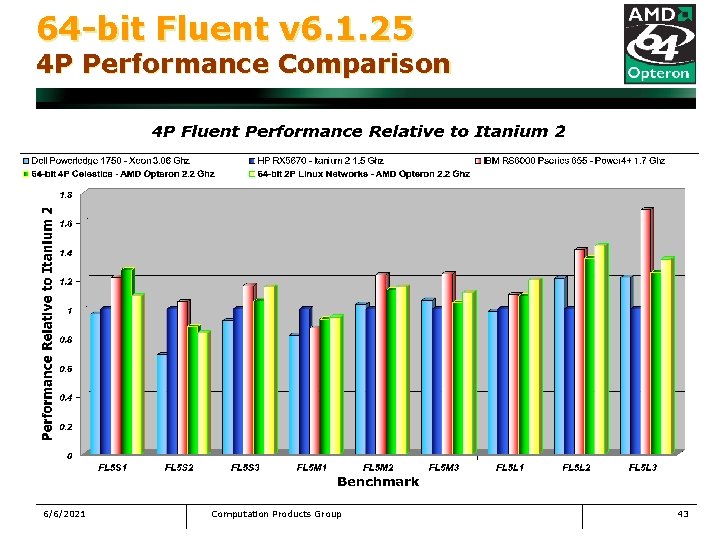

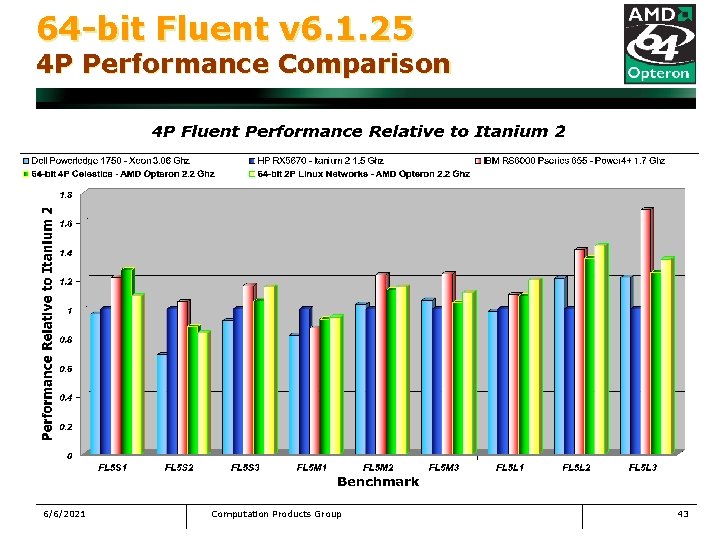

64-bit Fluent v6.1.25 4P Performance Comparison

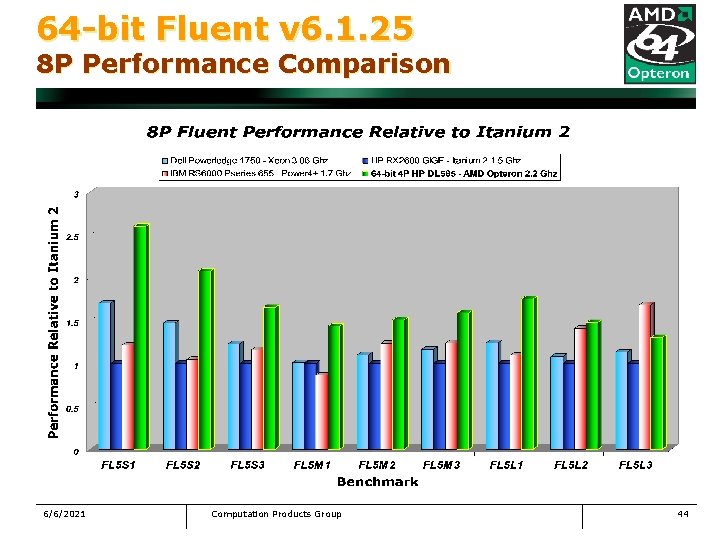

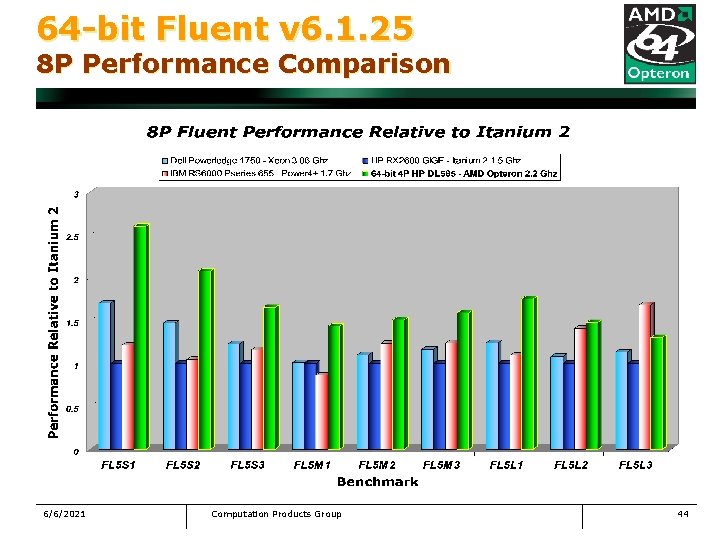

64-bit Fluent v6.1.25 8P Performance Comparison

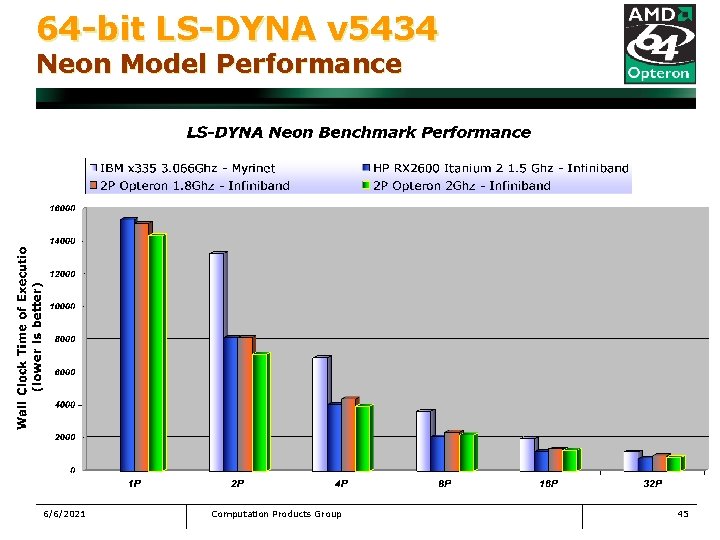

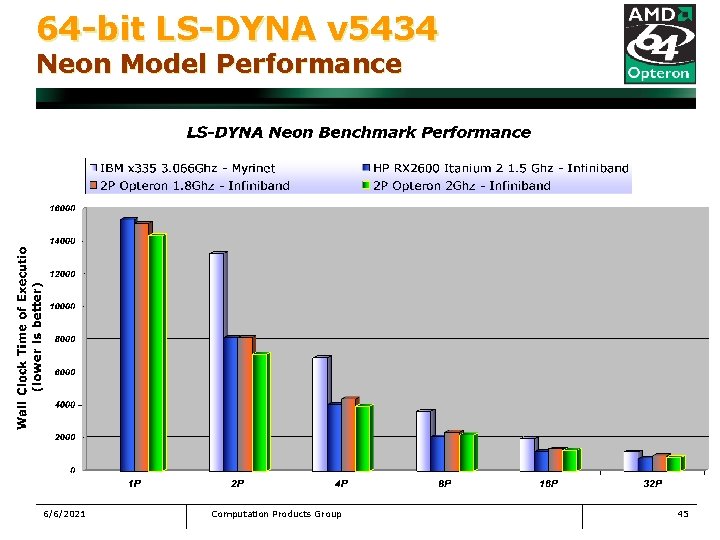

64-bit LS-DYNA v5434 Neon Model Performance

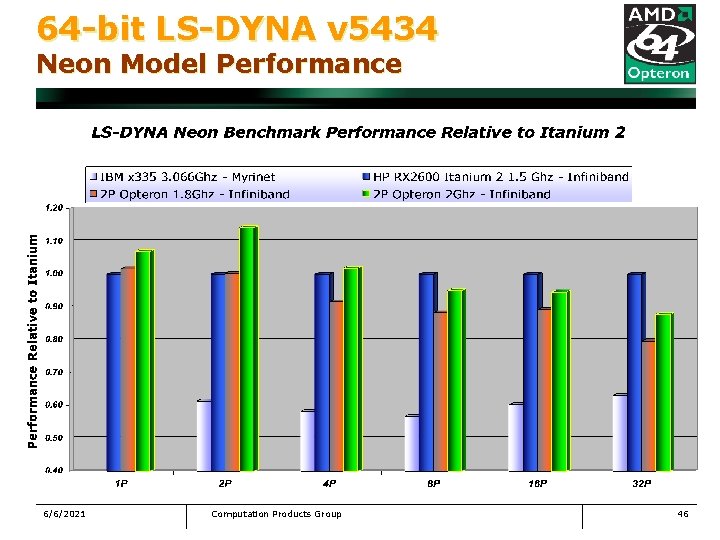

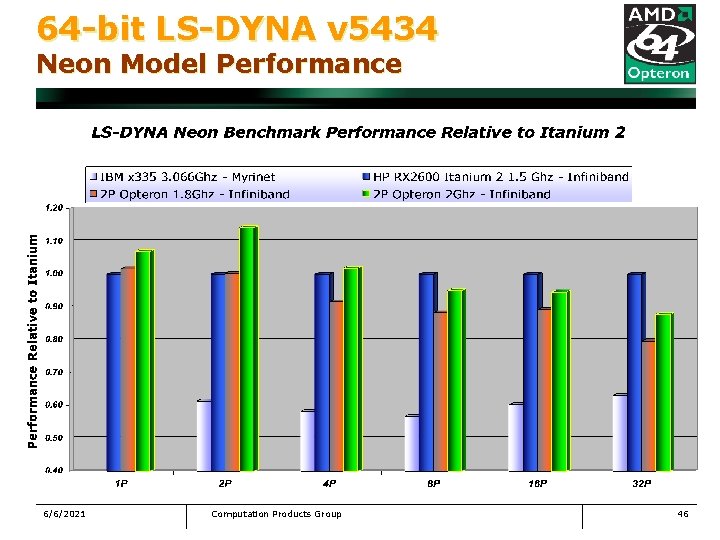

64-bit LS-DYNA v5434 Neon Model Performance

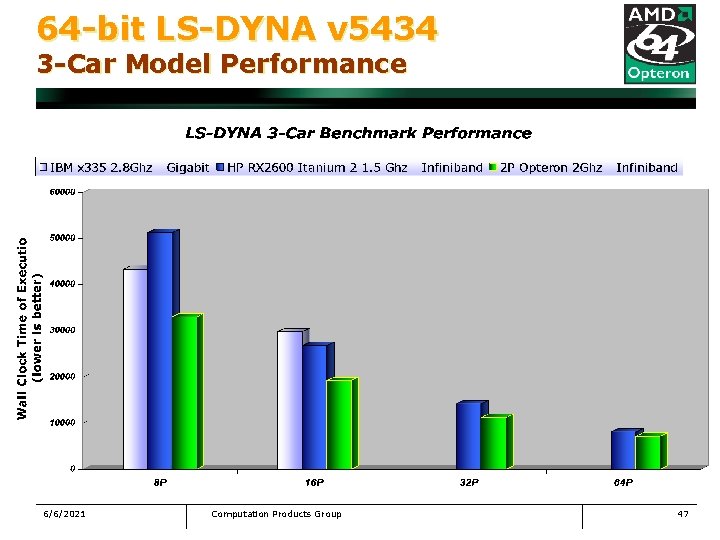

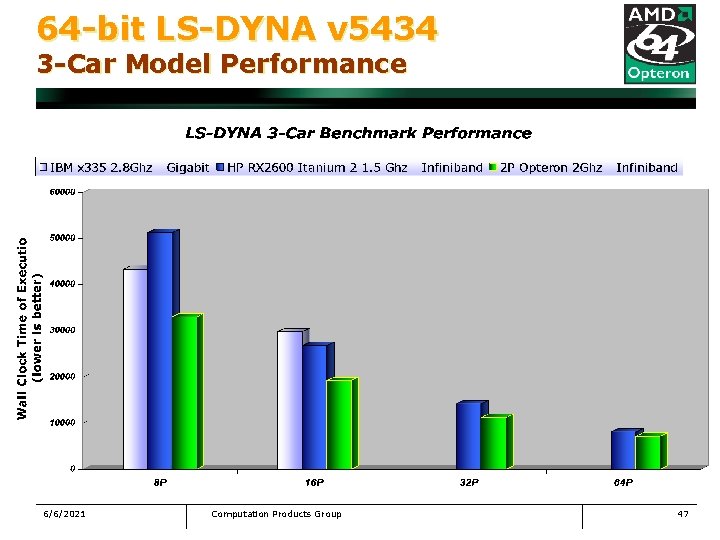

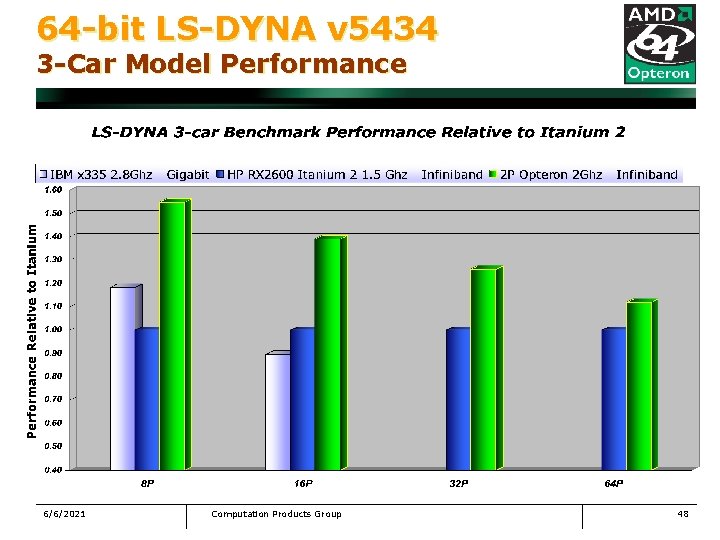

64-bit LS-DYNA v5434 3-Car Model Performance

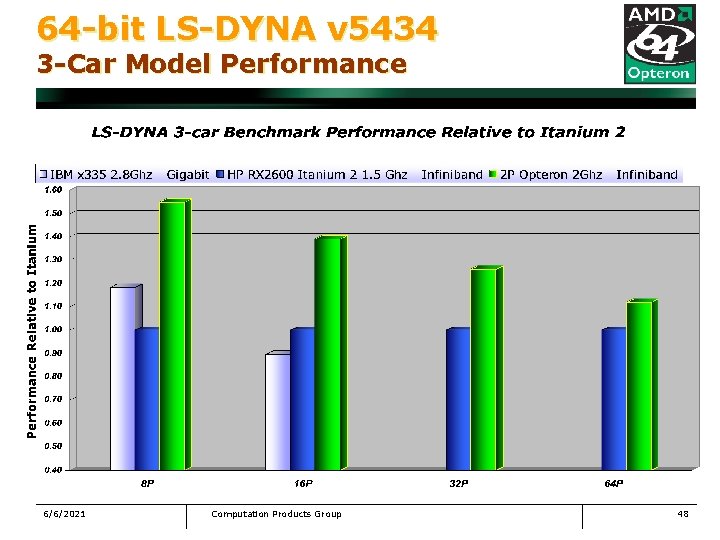

64-bit LS-DYNA v5434 3-Car Model Performance

Trademark Attribution

AMD, the AMD Arrow Logo, AMD Opteron and combinations thereof are trademarks of Advanced Micro Devices, Inc. HyperTransport is a licensed trademark of the HyperTransport Technology Consortium. Other product names used in this presentation are for identification purposes only and may be trademarks of their respective companies.