Alternative Assessment: Portfolios, Open-ended Questions, Role-playing, Student Logs

- Slides: 18

Alternative Assessment • Portfolios • Open-ended questions • Role-playing • Student logs or journals • Interviews • Exhibitions • Event Tasks • Observation • Individual or group projects

Alternative or Authentic Assessment “…designed to take place in a real-life setting, one that is less contrived and artificial than traditional forms of testing.”

Authentic Assessment Is thought to be performance-based, requiring students to demonstrate specific skills and competencies rather than simply selecting one of several predetermined answers to a question.

Technical Quality of Alternative Assessment • Reliability • Validity • Fairness

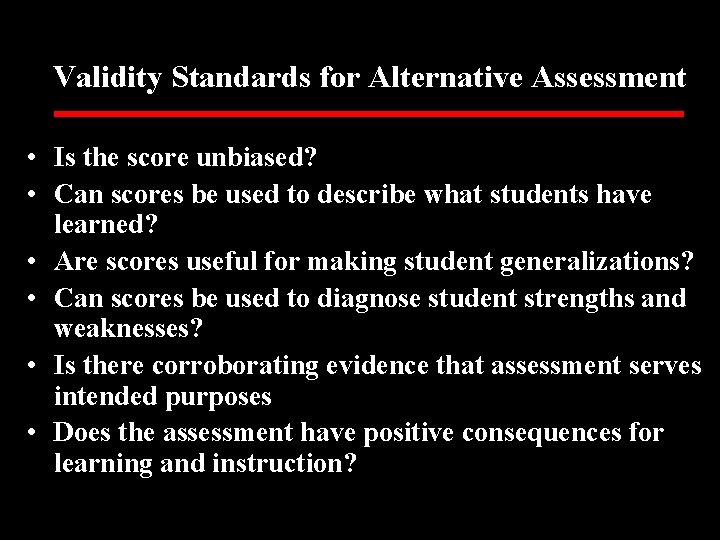

Validity Standards for Alternative Assessment • Is the score unbiased? • Can scores be used to describe what students have learned? • Are scores useful for making student generalizations? • Can scores be used to diagnose student strengths and weaknesses? • Is there corroborating evidence that the assessment serves its intended purposes? • Does the assessment have positive consequences for learning and instruction?

Establishing Criteria for Judging Alternative Assessments • Checklist: Be specific • Analytic assessment: Observe component “pieces” when analyzing performance • Holistic assessment: Evaluate the overall quality of the performance

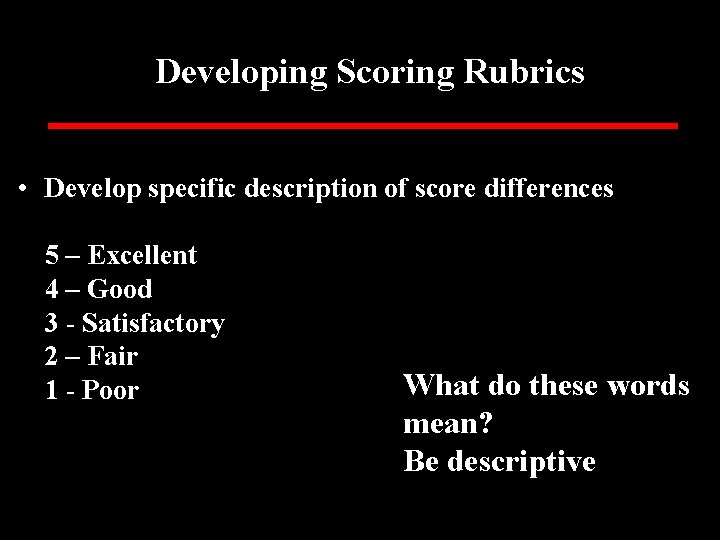

Developing Scoring Rubrics • Develop specific description of score differences • 5 – Excellent • 4 – Good • 3 – Satisfactory • 2 – Fair • 1 – Poor • What do these words mean? Be descriptive

Alternative Assessment Techniques • Observation • Individual or Group Projects • Portfolios • Exhibitions • Student Logs or Journals

Guidelines for Developing Alternative Assessments • Determining Purpose • Defining the Target • Selecting the Appropriate Assessment Task • Setting Performance Criteria • Determining the Quality of the Assessment

Tips for Improving Alternative Assessment • Ensure assessment is congruent with intended outcomes and instructional practices • Recognize that observation is a legitimate method of assessment • Use an assessment procedure appropriate for the use of the results • Use authentic assessment in realistic settings • Design clear, explicit scoring rubrics • Provide scoring rubrics to students • Be objective! • Record immediately after observation • Use multiple observations • Supplement observations with other evidence of achievement

Some Questions to Consider Is the assessment task… • matched to the outcome goals? • meaningful to students, parents, and teachers? • fair and free from bias? • authentic? • measurable? • feasible?

Grading: A Summative Evaluation

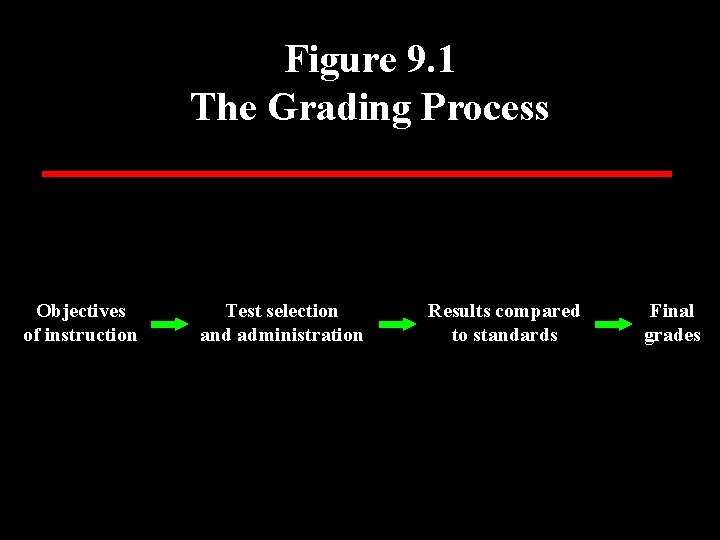

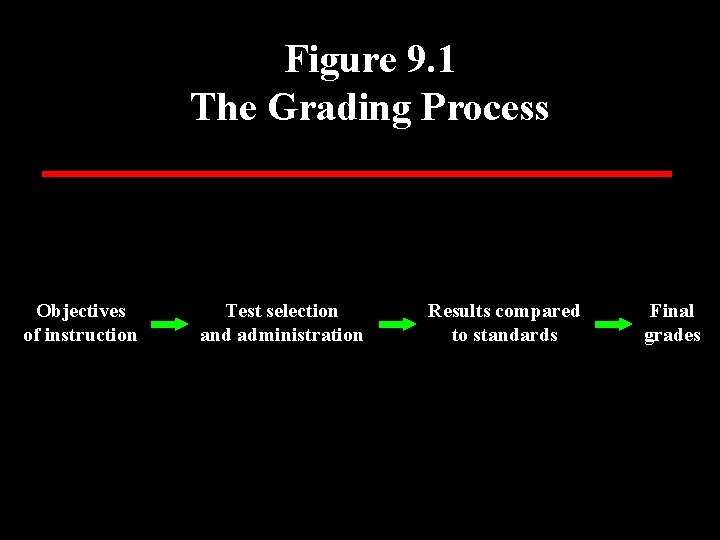

Figure 9.1 The Grading Process • Objectives of instruction • Test selection and administration • Results compared to standards • Final grades

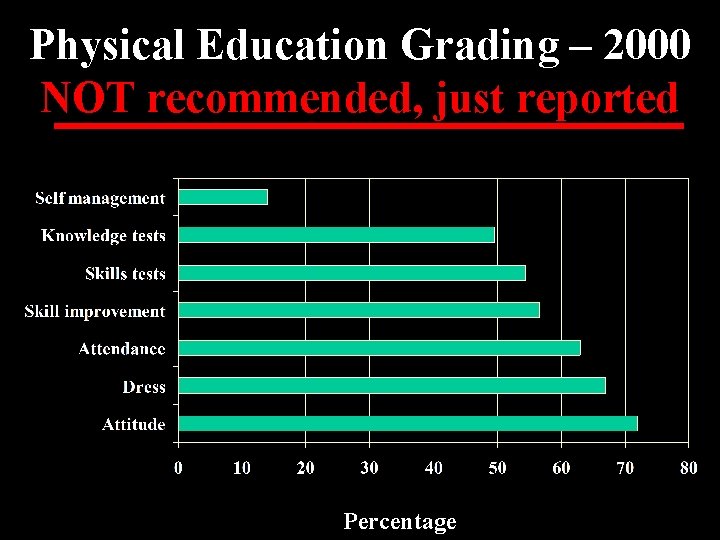

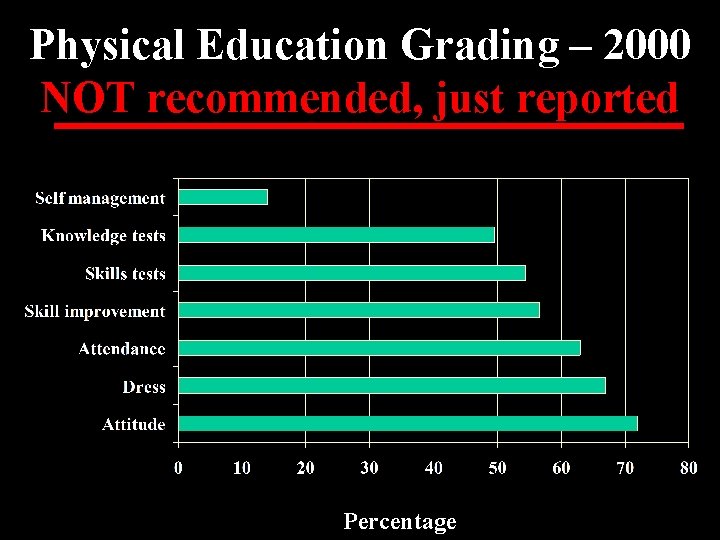

Physical Education Grading – 2000 (NOT recommended, just reported) • Percentage

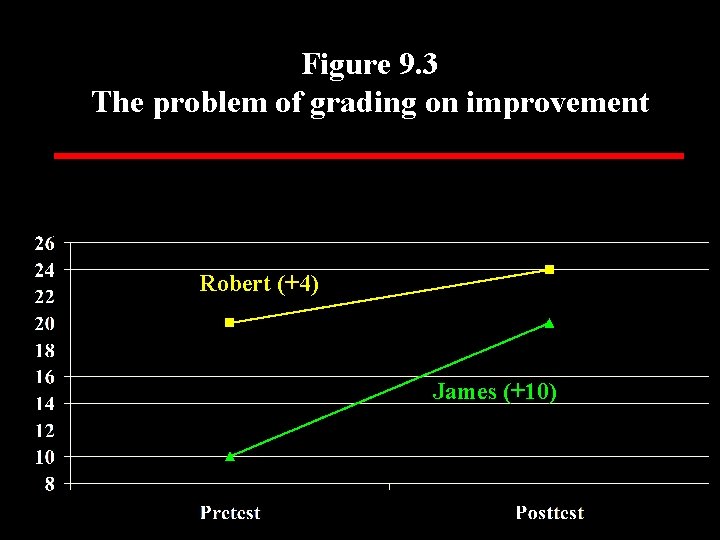

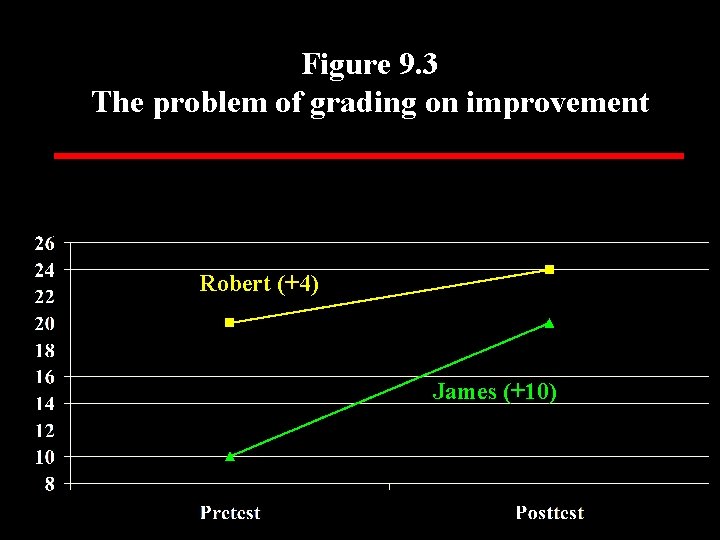

Figure 9.3 The problem of grading on improvement: Robert (+4), James (+10)

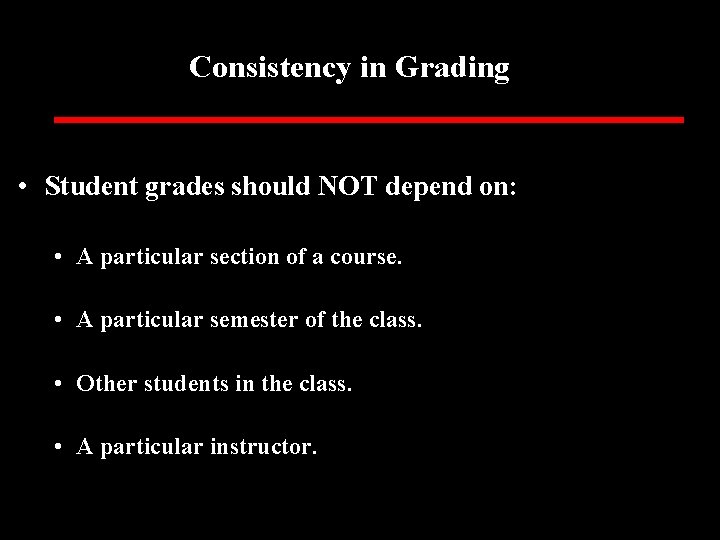

Consistency in Grading • Student grades should NOT depend on: • A particular section of a course. • A particular semester of the class. • Other students in the class. • A particular instructor.

Making Grading Fair, Reliable, and Valid • Determine defensible objectives • Ability group students (to maximize engagement) • Construct tests which reflect objectivity • No test is perfectly reliable • Grades should reflect status, not improvement • Don't use grades to reward good effort • Consider grades as measurements, not evaluations

Grading Mechanics • Determine and Weight Course Objectives • Measure the Degree of Attainment of Course Objectives • Obtain a Composite Score (do NOT get hung up here): Rank Method, Normalizing Method, or Standard Score Method • Convert Composite Scores to a Grade
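
The grading mechanics above can be illustrated with a minimal sketch of one of the listed options, the Standard Score Method. It assumes that "standard score" means a z-score computed within the class, that objective weights sum to 1, and that letter grades come from fixed cutoffs on the composite. The objective names, raw scores, weights, and cutoffs are all hypothetical and are not taken from the slides.

```python
from statistics import mean, pstdev


def z_scores(raw):
    """Convert raw scores to standard (z) scores within the class."""
    m = mean(raw)
    sd = pstdev(raw)  # population SD: the class is treated as the whole group
    if sd == 0:
        return [0.0 for _ in raw]  # everyone scored the same
    return [(x - m) / sd for x in raw]


def composite_scores(scores_by_objective, weights):
    """Weighted sum of z-scores per student across all objectives."""
    n_students = len(next(iter(scores_by_objective.values())))
    totals = [0.0] * n_students
    for objective, raw in scores_by_objective.items():
        for i, z in enumerate(z_scores(raw)):
            totals[i] += weights[objective] * z
    return totals


def to_grade(composite, cutoffs, lowest="F"):
    """Map a composite score to a letter using descending (minimum, letter) cutoffs."""
    for minimum, letter in cutoffs:
        if composite >= minimum:
            return letter
    return lowest


# Hypothetical class of three students measured on two objectives.
scores = {
    "skill_test": [10, 20, 30],
    "written_test": [80, 70, 90],
}
weights = {"skill_test": 0.6, "written_test": 0.4}   # assumed weights
cutoffs = [(1.0, "A"), (0.0, "B"), (-1.0, "C")]      # assumed cutoffs

composites = composite_scores(scores, weights)
grades = [to_grade(c, cutoffs) for c in composites]
```

Standardizing each measure before weighting keeps a test with a large raw-score spread from dominating the composite, so the stated weights are the weights that actually apply.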