All or Nothing: The Challenge of Hardware Offload

- Slides: 10

Dan Daly, December 5, 2018

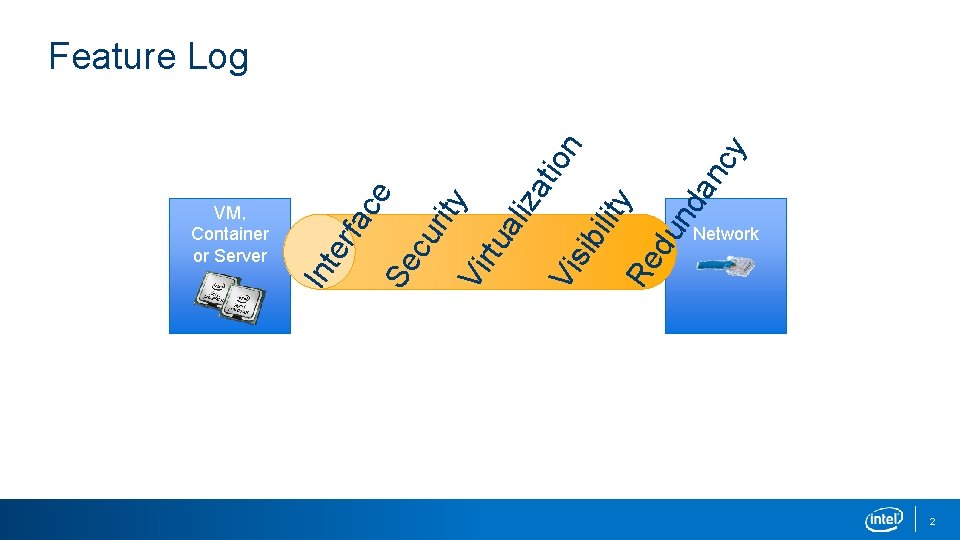

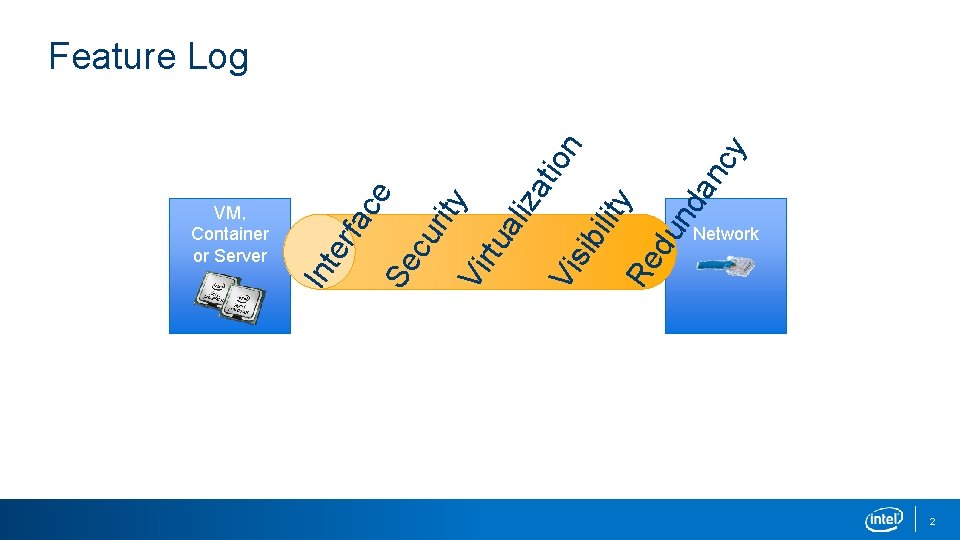

Feature Log

Figure: between the VM, container or server and the network sit the interface, security, visibility, virtualization and redundancy features.

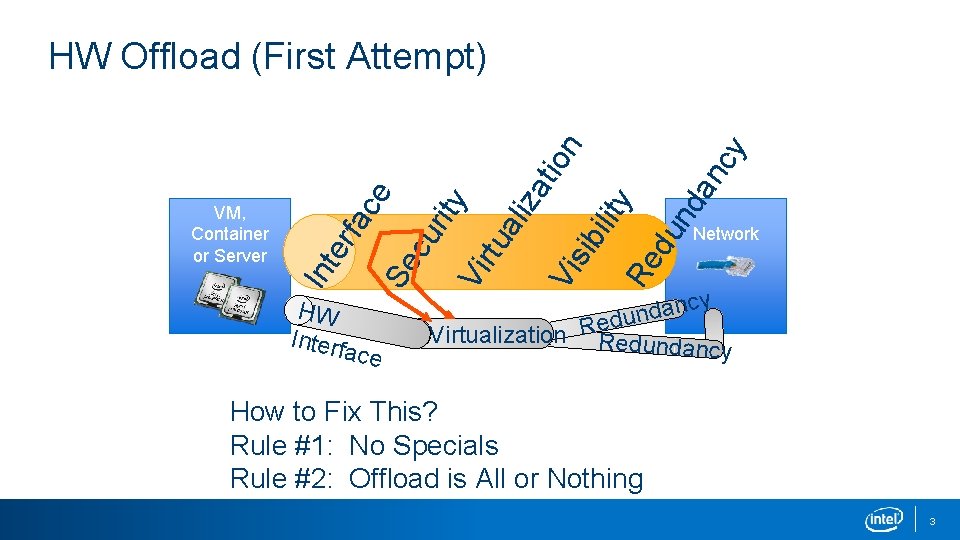

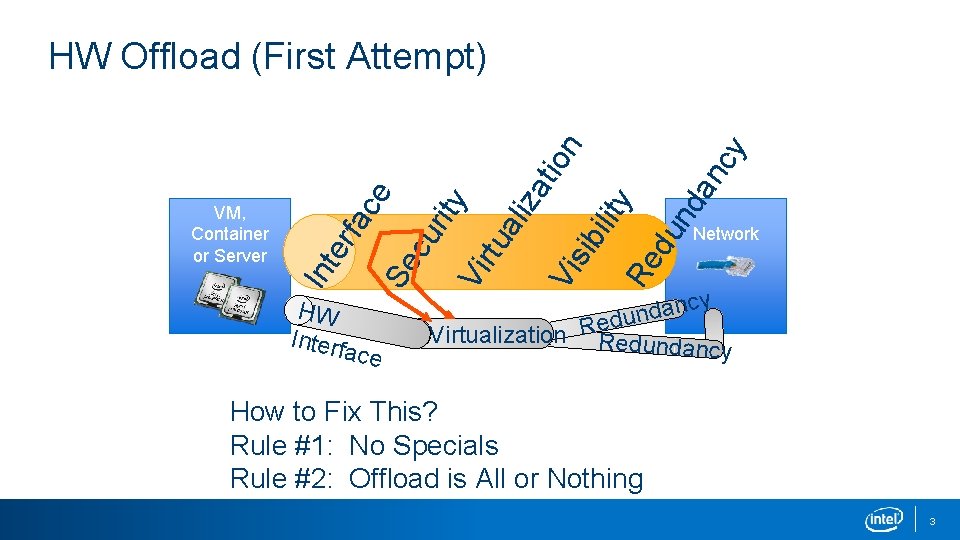

HW Offload (First Attempt)

Figure: the same feature stack (interface, security, visibility, virtualization, redundancy) between the VM, container or server and the network, with part of it (HW interface, virtualization, redundancy) duplicated in hardware.

How to fix this?

- Rule #1: No specials
- Rule #2: Offload is all or nothing
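
Rule #2 can be read as a per-flow placement decision: a flow runs entirely in hardware or entirely in software, never half and half. The sketch below is only an illustration of that rule; the action names in `HW_SUPPORTED_ACTIONS` and the `Flow` type are hypothetical, not any real driver's capability list.

```python
from dataclasses import dataclass

# Hypothetical capability set: the actions this particular NIC can execute.
HW_SUPPORTED_ACTIONS = frozenset({"output", "vxlan_encap", "vxlan_decap", "ct", "count"})


@dataclass(frozen=True)
class Flow:
    match: str                 # hypothetical match description
    actions: tuple[str, ...]   # every action the flow needs


def place_flow(flow: Flow, hw_actions: frozenset = HW_SUPPORTED_ACTIONS) -> str:
    """Return where the whole flow runs: 'hw' or 'sw'.

    All or nothing: the flow is offloaded only if every action it needs is
    supported in hardware; otherwise the entire flow stays in software.
    """
    if all(action in hw_actions for action in flow.actions):
        return "hw"
    return "sw"


# A decap-and-forward flow is fully supported, so it goes to hardware.
print(place_flow(Flow("in_port=1", ("vxlan_decap", "output"))))
# One unsupported (hypothetical) action keeps the whole flow in software.
print(place_flow(Flow("in_port=1", ("ct", "sflow_sample", "output"))))
```

The point of refusing partial placement is that the software and hardware data planes never disagree about which features apply to a given flow.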

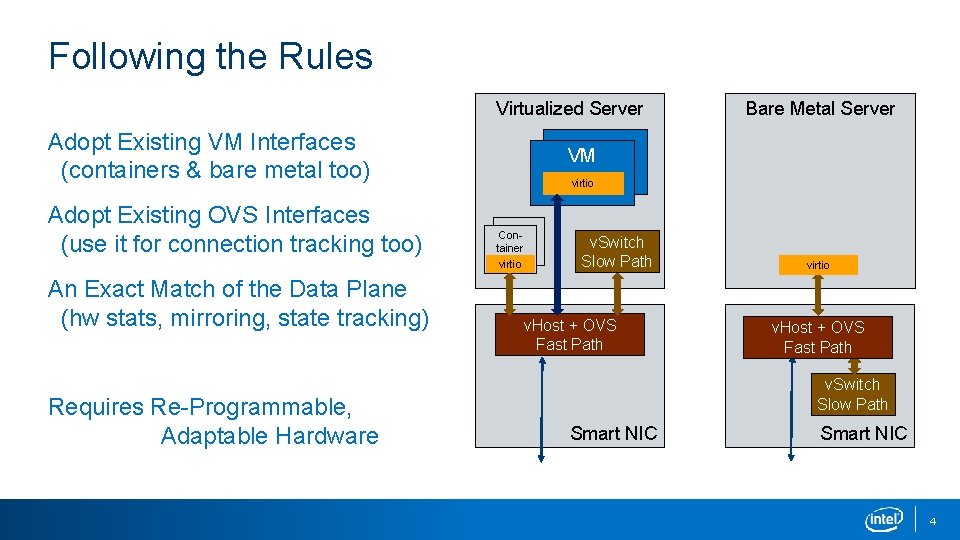

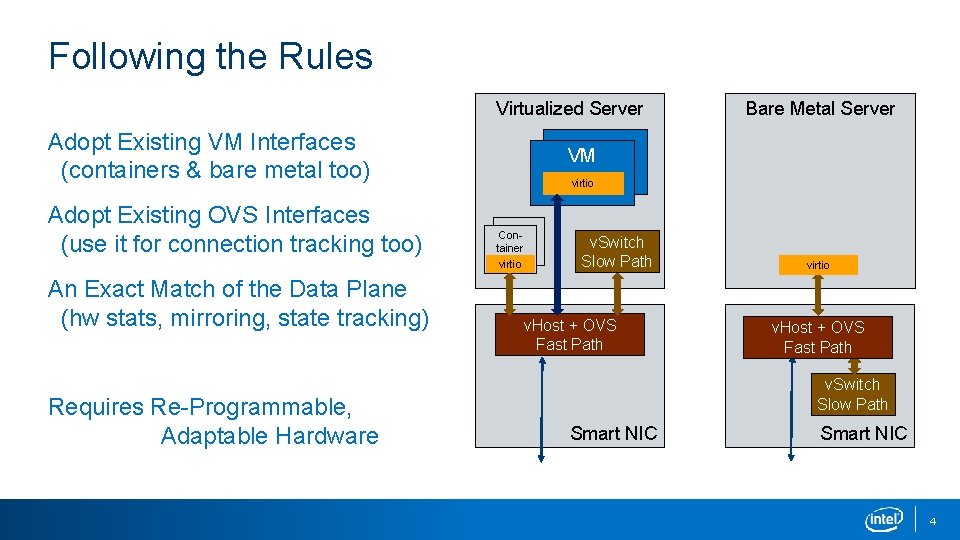

Following the Rules

- Adopt existing VM interfaces (containers and bare metal too)
- Adopt existing OVS interfaces (use them for connection tracking too)
- An exact match of the data plane (hardware stats, mirroring, state tracking)
- Requires re-programmable, adaptable hardware

Figure: a virtualized server (VM and container over virtio) and a bare metal server (virtio), each with a vSwitch slow path and a vHost + OVS fast path on the Smart NIC.
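
One concrete consequence of "an exact match of the data plane" is statistics: a flow forwarded partly in software (for example before it was offloaded) and partly in hardware must still report a single set of counters. The sketch below assumes per-flow packets, bytes, last-used time and OR-ed TCP flags, loosely modeled on what OVS keeps per datapath flow; the `FlowStats` type and `merge` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowStats:
    packets: int = 0
    bytes: int = 0
    last_used_ms: int = 0  # 0 means "never used"
    tcp_flags: int = 0     # bitwise OR of all TCP flags seen


def merge(sw: FlowStats, hw: FlowStats) -> FlowStats:
    """Combine software and hardware counters into the flow's reported stats."""
    return FlowStats(
        packets=sw.packets + hw.packets,              # counts add up
        bytes=sw.bytes + hw.bytes,
        last_used_ms=max(sw.last_used_ms, hw.last_used_ms),  # most recent use wins
        tcp_flags=sw.tcp_flags | hw.tcp_flags,        # union of flags seen
    )


sw_side = FlowStats(packets=3, bytes=300, last_used_ms=1000, tcp_flags=0x02)      # SYN
hw_side = FlowStats(packets=10, bytes=15000, last_used_ms=5000, tcp_flags=0x10)   # ACK
print(merge(sw_side, hw_side))
```

If the hardware half were ignored, a busy offloaded flow would look idle to the software side, which typically relies on last-used time to age out flows.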

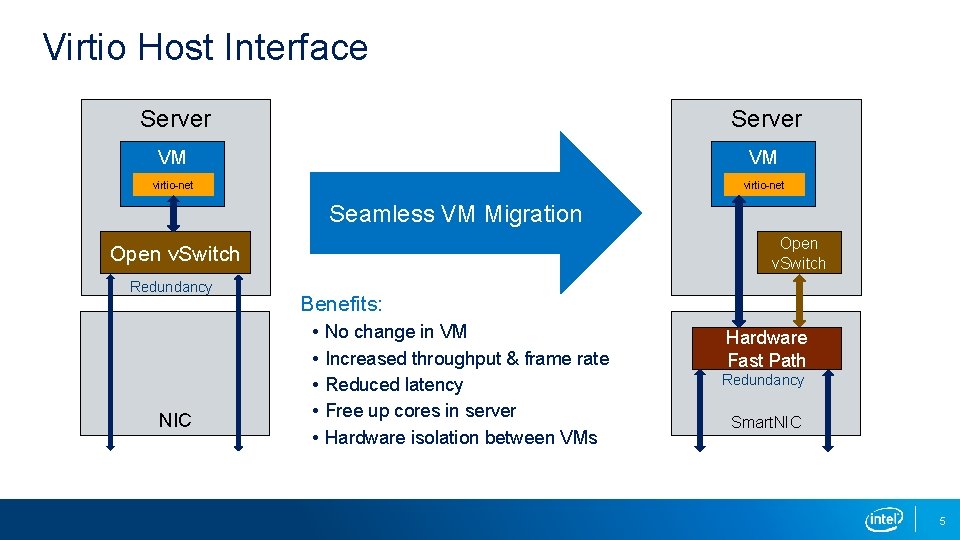

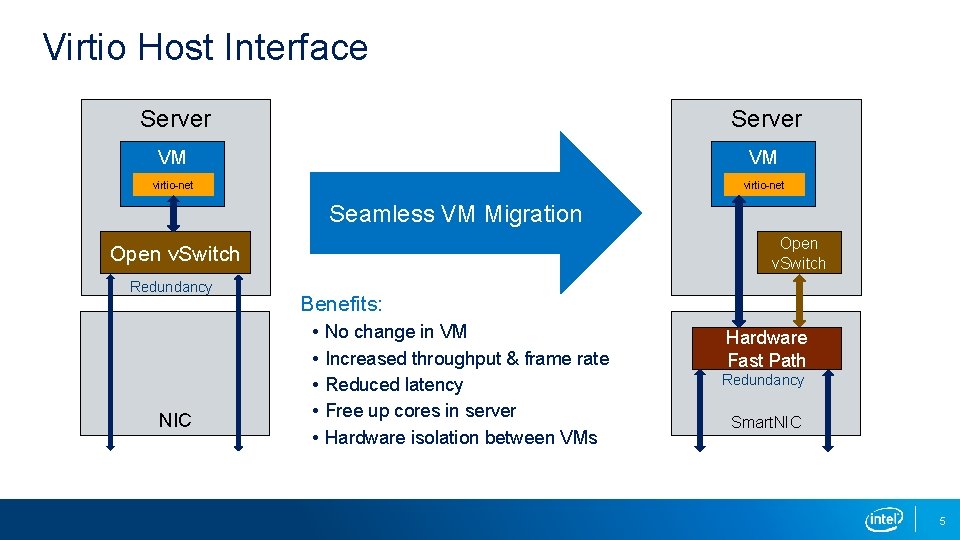

Virtio Host Interface

Figure: a server whose VMs attach through virtio-net to Open vSwitch, with a Smart NIC providing the hardware fast path; callouts for seamless VM migration and redundancy.

Benefits:

- No change in VM
- Increased throughput and frame rate
- Reduced latency
- Frees up cores in the server
- Hardware isolation between VMs
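
"No change in VM" holds because the guest's virtio-net driver places buffers in virtqueues whose layout is fixed by the virtio specification, so a NIC that parses the same descriptors can serve the guest directly. The sketch below packs and unpacks the 16-byte split-virtqueue descriptor from the virtio 1.x spec; the slides do not say whether the Smart NIC uses split or packed rings, and the buffer addresses and lengths in the example are made up.

```python
import struct

# Split-virtqueue descriptor (virtio 1.x, little-endian):
#   le64 addr, le32 len, le16 flags, le16 next  -> 16 bytes total
VIRTQ_DESC = struct.Struct("<QIHH")
VIRTQ_DESC_F_NEXT = 1   # buffer continues in the descriptor indexed by `next`
VIRTQ_DESC_F_WRITE = 2  # buffer is device-writable (e.g. a receive buffer)


def pack_desc(addr: int, length: int, flags: int = 0, next_idx: int = 0) -> bytes:
    """Encode one descriptor as the guest driver would write it."""
    return VIRTQ_DESC.pack(addr, length, flags, next_idx)


def unpack_desc(raw: bytes) -> tuple[int, int, int, int]:
    """Decode one descriptor as the device (here, the NIC) would read it."""
    return VIRTQ_DESC.unpack(raw)


# A transmit packet posted as two chained buffers (hypothetical addresses):
# descriptor 0 holds a header and points to descriptor 1, which holds the payload.
table = pack_desc(0x1000, 12, VIRTQ_DESC_F_NEXT, 1) + pack_desc(0x2000, 1500)
for i in range(len(table) // VIRTQ_DESC.size):
    print(i, unpack_desc(table[i * VIRTQ_DESC.size:(i + 1) * VIRTQ_DESC.size]))
```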

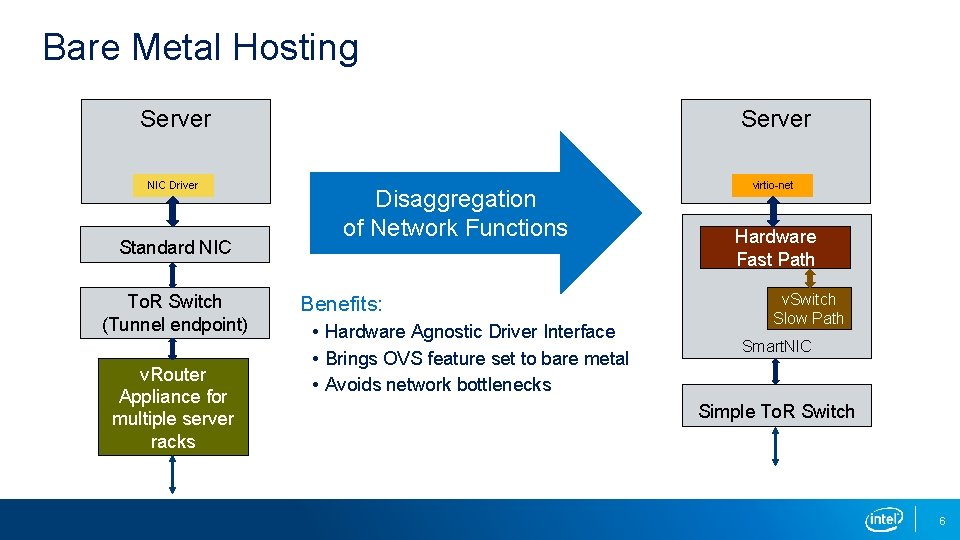

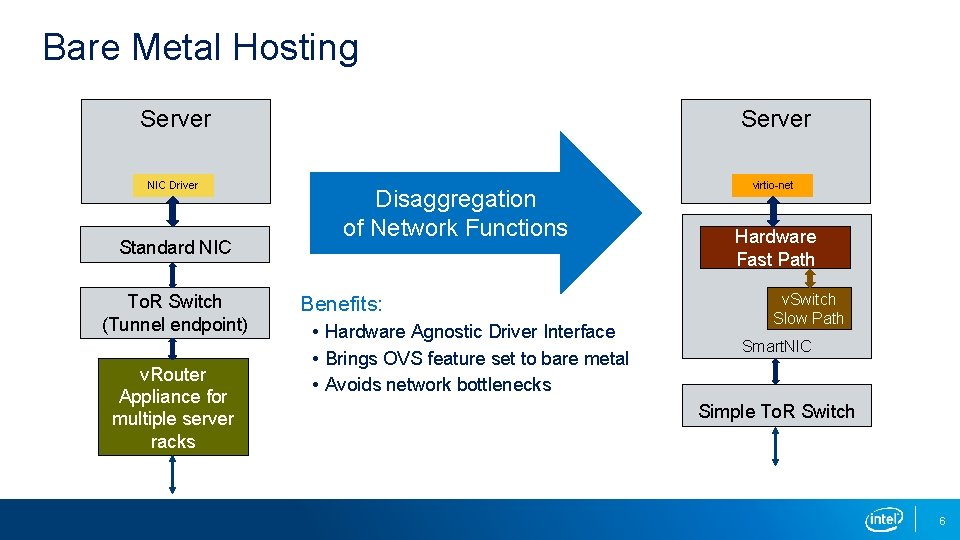

Bare Metal Hosting

Disaggregation of network functions.

Figure: the server runs virtio-net as its NIC driver. Compared: a standard NIC behind a ToR switch acting as tunnel endpoint, with a vRouter appliance for multiple server racks, versus a Smart NIC (hardware fast path, vSwitch slow path) behind a simple ToR switch.

Benefits:

- Hardware-agnostic driver interface
- Brings the OVS feature set to bare metal
- Avoids network bottlenecks
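
With a Smart NIC, the tunnel endpoint role can move from the ToR switch to the server edge. Assuming VXLAN as the tunnel type (a vxlan device appears in the kernel pipeline on the architecture slide, but this slide does not name the protocol), the per-packet header the endpoint adds is the 8-byte VXLAN header from RFC 7348. This sketch builds and parses only that header, not the outer Ethernet/IP/UDP headers.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the VXLAN header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < (1 << 24):
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def vxlan_vni(header: bytes) -> int:
    """Extract the VNI from a received VXLAN header."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return word1 >> 8


hdr = vxlan_header(5000)
print(hdr.hex(), vxlan_vni(hdr))
```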

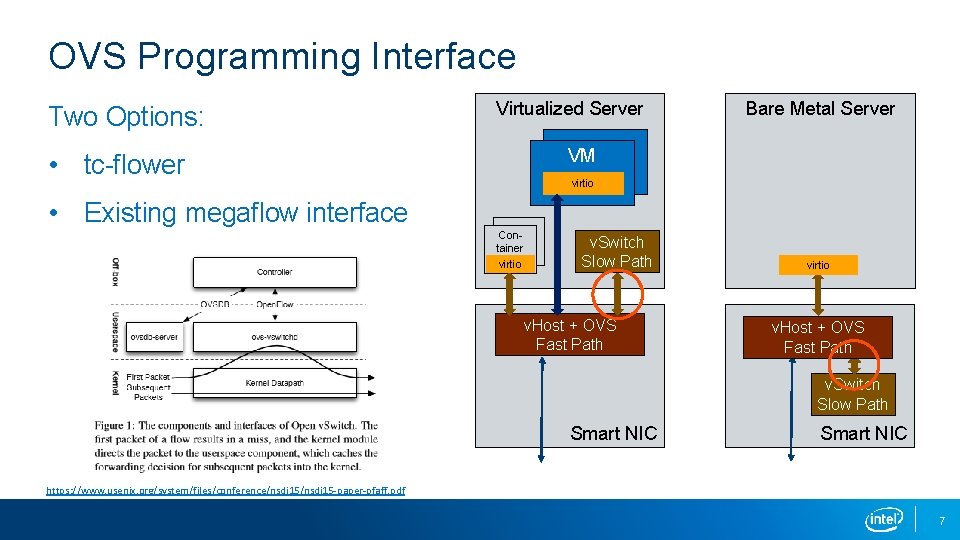

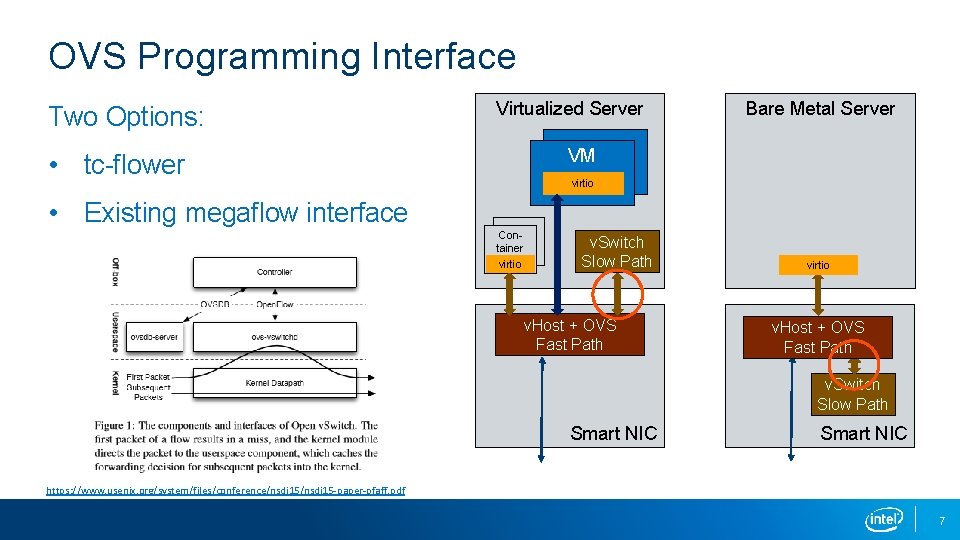

OVS Programming Interface

Two options:

- tc-flower
- Existing megaflow interface

Figure: a virtualized server (VM and container over virtio) and a bare metal server (virtio), each with a vSwitch slow path and a vHost + OVS fast path on the Smart NIC.

Reference: https://www.usenix.org/system/files/conference/nsdi15-paper-pfaff.pdf
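
The megaflow interface refers to the OVS megaflow cache described in the referenced NSDI paper: wildcarded entries looked up by tuple space search, with one exact-match hash table per mask. The sketch below is a toy version; the field list and the tuple-of-booleans mask format are hypothetical simplifications (real masks are bitwise, per field).

```python
from typing import Optional

# Hypothetical flow key layout: one value per header field.
FIELDS = ("in_port", "eth_type", "ip_src", "ip_dst", "ip_proto", "tp_dst")


class MegaflowCache:
    """Tuple space search: one exact-match dict per distinct mask."""

    def __init__(self) -> None:
        # mask (one bool per field) -> {masked key -> actions}
        self.tables: dict[tuple, dict[tuple, str]] = {}

    @staticmethod
    def _apply(mask: tuple, key: tuple) -> tuple:
        # Wildcarded fields are replaced by None so they never affect the match.
        return tuple(v if m else None for v, m in zip(key, mask))

    def insert(self, mask: tuple, key: tuple, actions: str) -> None:
        self.tables.setdefault(mask, {})[self._apply(mask, key)] = actions

    def lookup(self, key: tuple) -> Optional[str]:
        # Probe each per-mask table; returning the first hit is only safe
        # because megaflows are installed so that they do not overlap.
        for mask, table in self.tables.items():
            hit = table.get(self._apply(mask, key))
            if hit is not None:
                return hit
        return None


cache = MegaflowCache()
# Match only in_port, eth_type and ip_dst; wildcard everything else.
mask = (True, True, False, True, False, False)
cache.insert(mask, (1, 0x0800, "10.0.0.1", "10.0.0.2", 6, 80), "output:2")
print(cache.lookup((1, 0x0800, "10.9.9.9", "10.0.0.2", 17, 53)))
```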

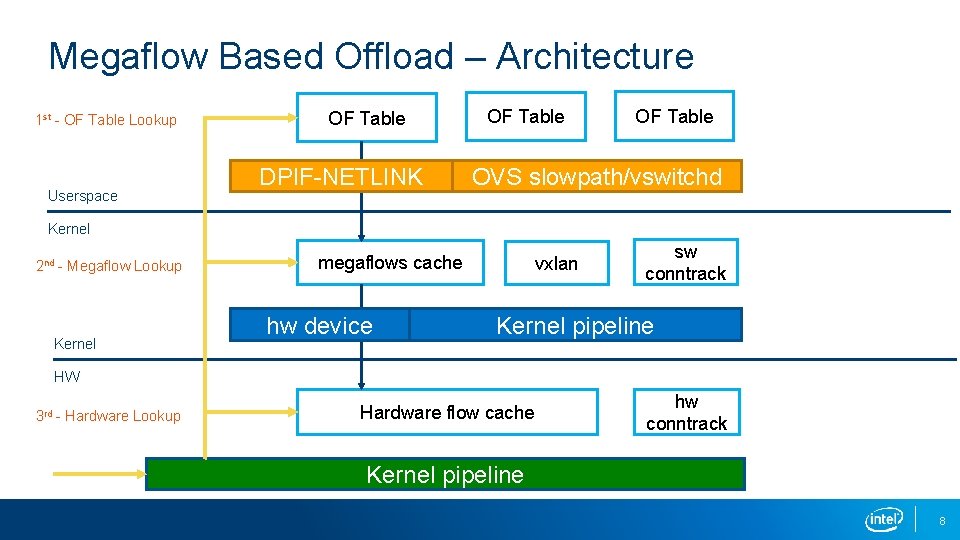

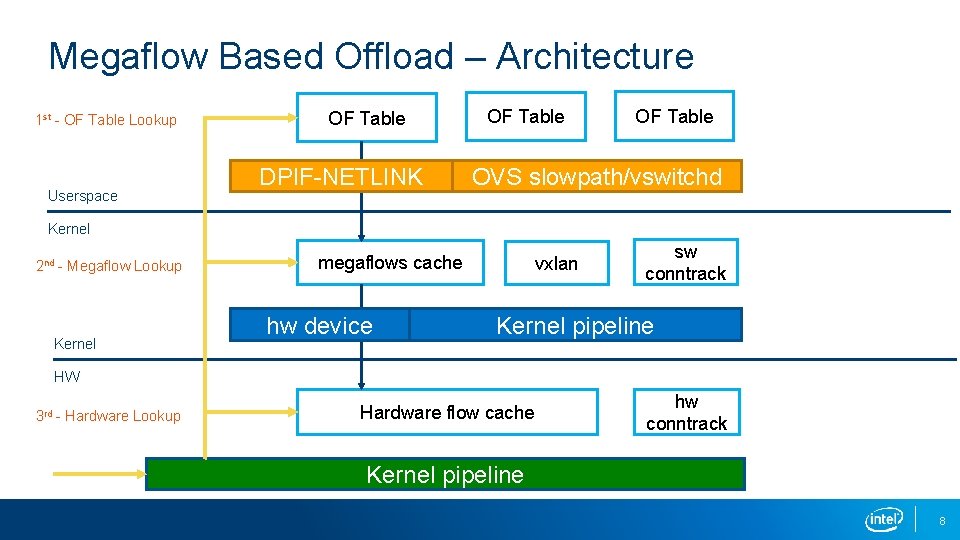

Megaflow-Based Offload: Architecture

- 1st: OF table lookup (userspace: OF tables in the OVS slow path, vswitchd, reaching the kernel through dpif-netlink)
- 2nd: megaflow lookup (kernel: megaflow cache, vxlan device, hw device, software conntrack in the kernel pipeline)
- 3rd: hardware lookup (HW: hardware flow cache and hardware conntrack, mirroring the kernel pipeline)
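
The three levels behave like a cache hierarchy. The first packet of a flow is resolved by the OF table lookup in userspace, which installs a megaflow in the kernel cache and, in this design, pushes it to the hardware flow cache; later packets hit hardware first. The sketch below is hypothetical: it uses exact keys instead of masked megaflows for brevity, and its "offload if there is room" policy is an assumption, not something the slide specifies.

```python
from typing import Callable

Key = tuple  # hypothetical flow key, e.g. a 5-tuple


class OffloadPipeline:
    """Hardware flow cache -> kernel megaflow cache -> userspace OF tables."""

    def __init__(self, of_tables: Callable[[Key], str], hw_capacity: int) -> None:
        self.of_tables = of_tables           # slow path: full OpenFlow lookup
        self.megaflows: dict[Key, str] = {}  # kernel megaflow cache
        self.hw_cache: dict[Key, str] = {}   # hardware flow cache
        self.hw_capacity = hw_capacity
        self.upcalls = 0

    def process(self, key: Key) -> tuple[str, str]:
        """Return (actions, level that handled the packet)."""
        if key in self.hw_cache:
            return self.hw_cache[key], "hw"
        if key in self.megaflows:
            return self.megaflows[key], "kernel"
        # Miss everywhere: upcall to userspace, which computes the actions,
        # installs them in the kernel and, if there is room, in hardware.
        self.upcalls += 1
        actions = self.of_tables(key)
        self.megaflows[key] = actions
        if len(self.hw_cache) < self.hw_capacity:
            self.hw_cache[key] = actions
        return actions, "userspace"


pipe = OffloadPipeline(lambda key: "output:2", hw_capacity=1)
for k in [("a",), ("a",), ("b",), ("b",)]:
    print(k, pipe.process(k))
```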

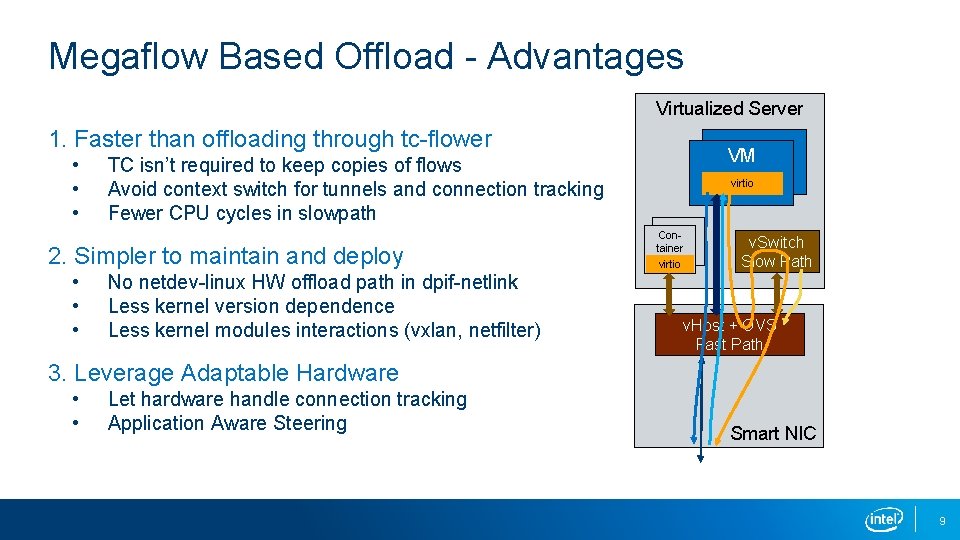

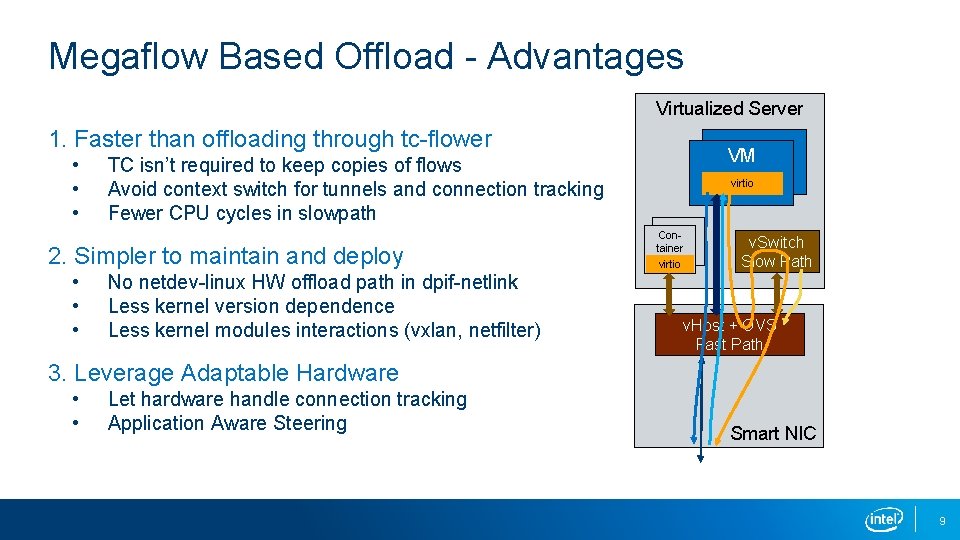

Megaflow-Based Offload: Advantages

1. Faster than offloading through tc-flower
   - TC isn't required to keep copies of flows
   - Avoids a context switch for tunnels and connection tracking
   - Fewer CPU cycles in the slow path
2. Simpler to maintain and deploy
   - No netdev-linux HW offload path in dpif-netlink
   - Less kernel version dependence
   - Fewer kernel module interactions (vxlan, netfilter)
3. Leverages adaptable hardware
   - Lets hardware handle connection tracking
   - Application-aware steering

Figure: a virtualized server (VM and container over virtio) with a vSwitch slow path and a vHost + OVS fast path on the Smart NIC.
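
Letting hardware handle connection tracking means the NIC must keep per-connection state that both directions of traffic map to. The sketch below shows only that minimal state: a connection is "new" until a reply is seen, then "established". It is a simplified illustration with hypothetical names; real connection trackers (such as Linux netfilter conntrack) also follow TCP state, timeouts, NAT and zones, none of which are modeled here.

```python
from dataclasses import dataclass

FiveTuple = tuple[str, int, str, int, int]  # (src_ip, src_port, dst_ip, dst_port, proto)


@dataclass
class Conn:
    origin: FiveTuple         # direction of the first packet seen
    reply_seen: bool = False  # set once traffic flows the other way


class ConnTracker:
    def __init__(self) -> None:
        self.table: dict[FiveTuple, Conn] = {}  # keyed by the original direction

    @staticmethod
    def _reverse(t: FiveTuple) -> FiveTuple:
        src_ip, src_port, dst_ip, dst_port, proto = t
        return (dst_ip, dst_port, src_ip, src_port, proto)

    def track(self, t: FiveTuple) -> str:
        """Classify a packet's 5-tuple as 'new' or 'established'."""
        conn = self.table.get(t) or self.table.get(self._reverse(t))
        if conn is None:
            self.table[t] = Conn(origin=t)
            return "new"
        if t != conn.origin:
            conn.reply_seen = True  # packet travels in the reply direction
        return "established" if conn.reply_seen else "new"


ct = ConnTracker()
fwd = ("10.0.0.1", 40000, "10.0.0.2", 80, 6)
rev = ("10.0.0.2", 80, "10.0.0.1", 40000, 6)
print(ct.track(fwd), ct.track(rev), ct.track(fwd))
```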

An Exact Match of the Data Plane

Some software is extremely hard to change:

- Anything in a customer VM
- Kernel upgrades are no fun

At the same time, vSwitch requirements are moving extremely fast:

- Tunneling, connection tracking, telemetry, mirroring, failover

Intel approach:

- Build programmable, adaptable hardware (see next session)