
ALICE TPC CRU
Ken Oyama, Nagasaki Institute of Applied Science
Mar. 16, 2020, mini workshop in Tamachi
JP17H02903, JP16K13808, JP17K18783

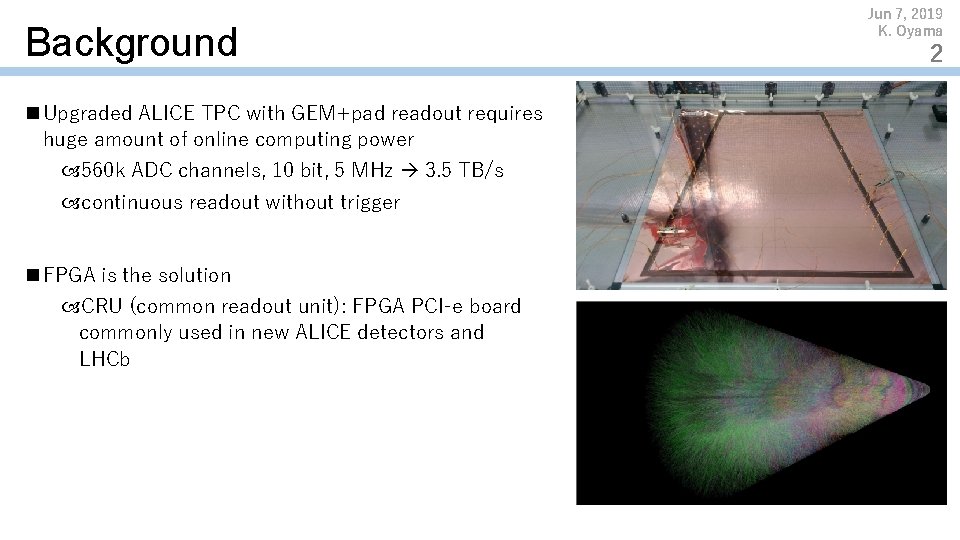

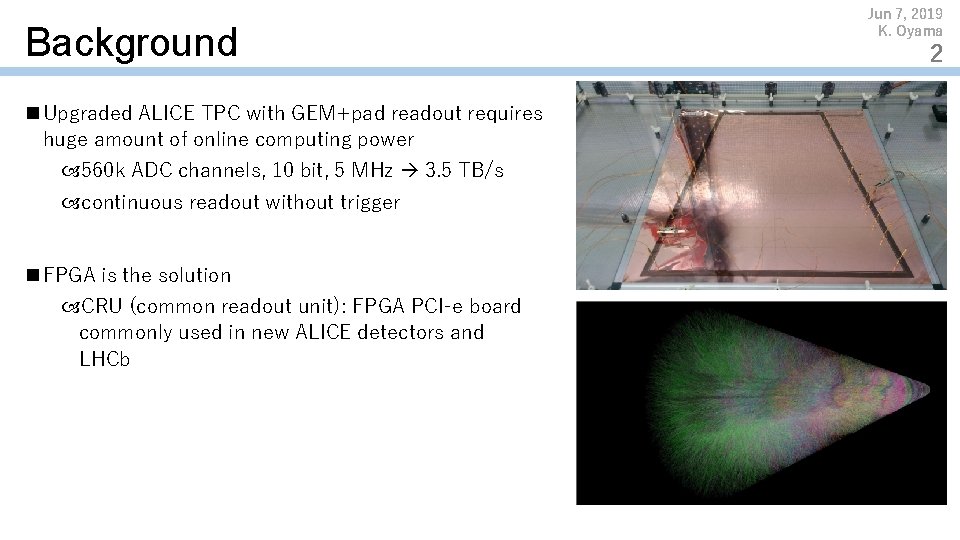

Background
- The upgraded ALICE TPC with GEM + pad readout requires a huge amount of online computing power
  - 560 k ADC channels, 10 bit, 5 MHz
  - 3.5 TB/s continuous readout without a trigger
- FPGAs are the solution
  - CRU (Common Readout Unit): an FPGA PCIe board commonly used in the new ALICE detectors and in LHCb

Jun 7, 2019, K. Oyama
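
As a quick cross-check, the aggregate rate follows from channel count x sample width x sampling frequency. This minimal sketch assumes raw 10-bit samples with no framing or protocol overhead, which the slide does not state explicitly; the function name is illustrative.

```python
def raw_rate_bps(n_channels: int, bits_per_sample: int, sample_rate_hz: int) -> int:
    """Raw data rate in bit/s (assumption: uncompressed samples, no framing overhead)."""
    return n_channels * bits_per_sample * sample_rate_hz

rate = raw_rate_bps(560_000, 10, 5_000_000)
print(rate / 1e12, "Tbps")      # 28.0 Tbps
print(rate / 8 / 1e12, "TB/s")  # 3.5 TB/s
```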

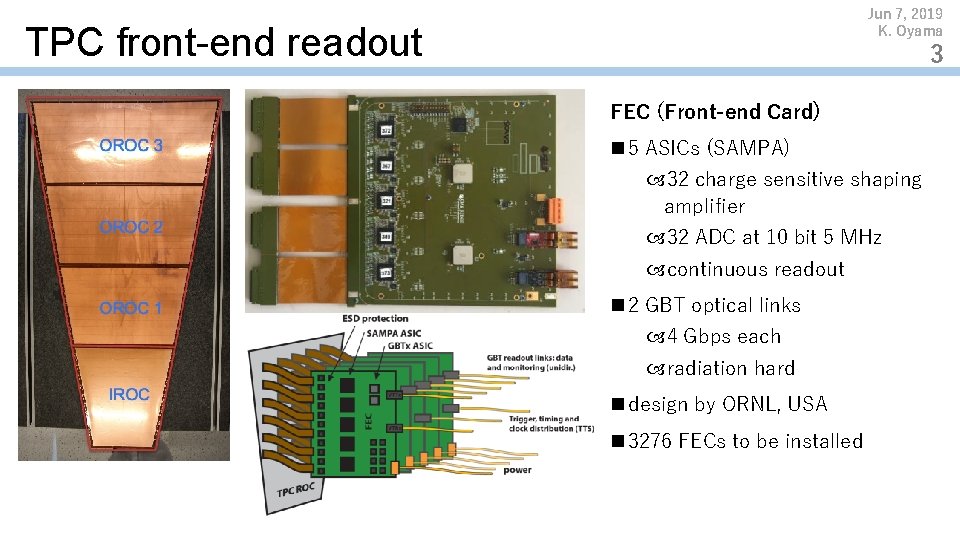

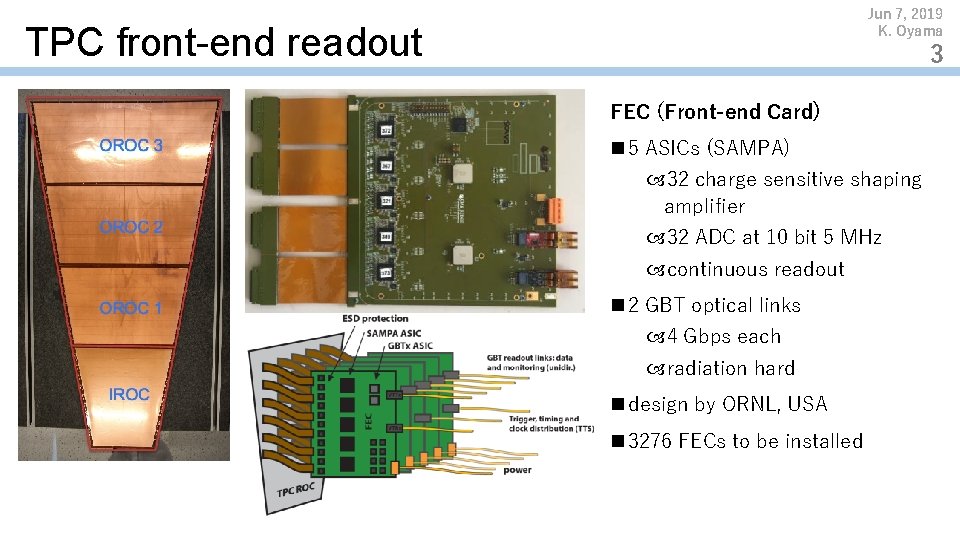

TPC front-end readout
- FEC (Front-End Card)
  - 5 ASICs (SAMPA), each with 32 charge-sensitive shaping amplifiers and 32 ADCs at 10 bit, 5 MHz, continuous readout
  - 2 GBT optical links, 4 Gbps each, radiation hard
  - designed by ORNL, USA
- 3276 FECs to be installed

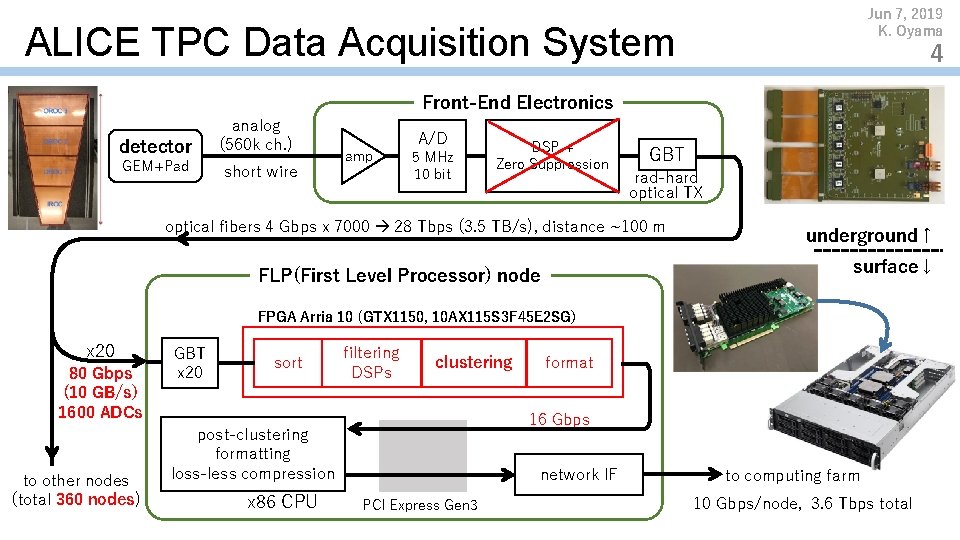

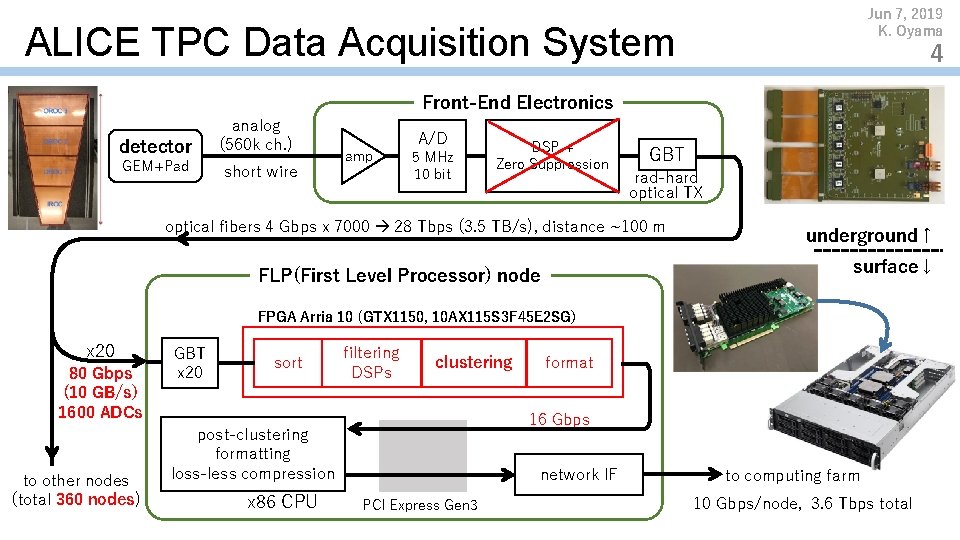

ALICE TPC Data Acquisition System
- Front-end electronics (underground, on the detector)
  - GEM + pad detector, analog signals (560 k channels), short wires to the amplifiers
  - A/D conversion at 5 MHz, 10 bit
  - DSP + zero suppression
  - GBT rad-hard optical transmitters
- Optical fibers: 4 Gbps x 7000 = 28 Tbps (3.5 TB/s), over ~100 m from underground to the surface
- FLP (First Level Processor) node on the surface (360 nodes in total)
  - FPGA: Arria 10 (GX 1150, 10AX115S3F45E2SG), receiving GBT x 20 = 80 Gbps (10 GB/s), i.e. 1600 ADCs
  - FPGA processing: sorting, filtering DSPs, clustering (16 Gbps after clustering), post-clustering formatting, loss-less compression
  - x86 CPU: formatting and network interface, connected to the FPGA via PCI Express Gen 3
- To the computing farm: 10 Gbps/node, 3.6 Tbps in total
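
The per-node numbers above are mutually consistent: 1600 ADCs at 10 bit and 5 MHz exactly fill 20 GBT links at 4 Gbps, and the 10 Gbps output per node implies a data reduction of about 8x inside the FLP. A small bookkeeping sketch, assuming raw 10-bit samples without GBT framing overhead (the function name and dictionary keys are hypothetical):

```python
def flp_budget(adcs=1600, bits=10, sample_rate_hz=5_000_000,
               gbt_links=20, gbt_gbps=4, output_gbps=10, n_nodes=360):
    """Per-node data budget of one FLP (assumes raw samples, no GBT framing overhead)."""
    input_gbps = adcs * bits * sample_rate_hz / 1e9
    return {
        "input_gbps": input_gbps,                      # data produced by the ADCs
        "link_capacity_gbps": gbt_links * gbt_gbps,    # what the GBT links can carry
        "reduction_factor": input_gbps / output_gbps,  # data reduction inside the node
        "total_output_tbps": n_nodes * output_gbps / 1000,
    }

print(flp_budget())
# {'input_gbps': 80.0, 'link_capacity_gbps': 80, 'reduction_factor': 8.0, 'total_output_tbps': 3.6}
```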

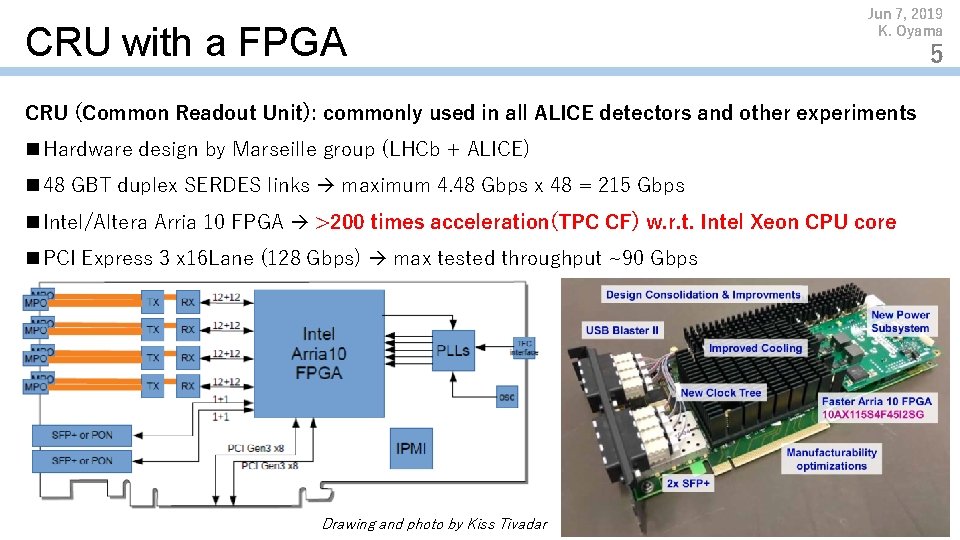

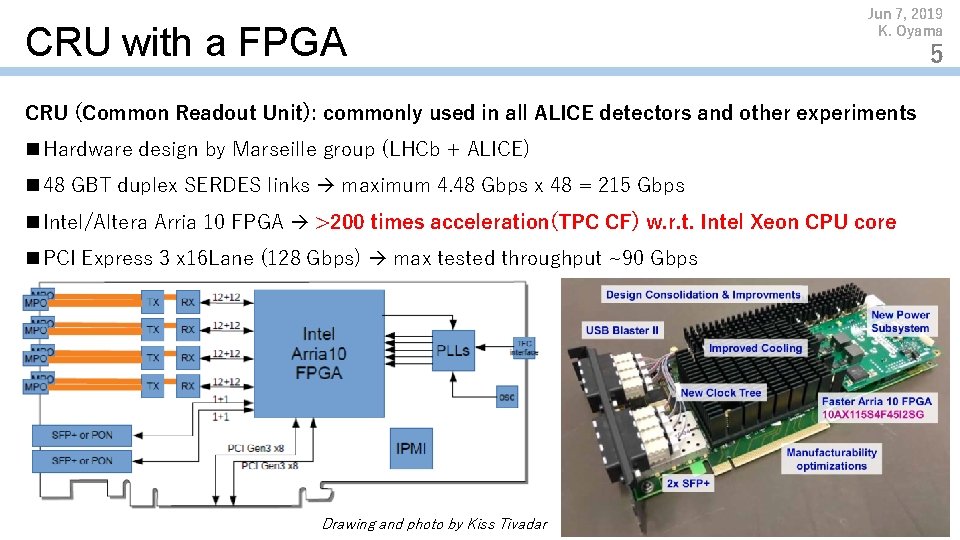

CRU with an FPGA
- CRU (Common Readout Unit): commonly used in all ALICE detectors and other experiments
- Hardware design by the Marseille group (LHCb + ALICE)
- 48 GBT duplex SERDES links, maximum 4.48 Gbps x 48 = 215 Gbps
- Intel/Altera Arria 10 FPGA: >200 times acceleration (TPC cluster finder) w.r.t. an Intel Xeon CPU core
- PCI Express Gen 3 x 16 lanes (128 Gbps), maximum tested throughput ~90 Gbps
- Currently at the evaluation stage of the final version

Drawing and photo by Kiss Tivadar

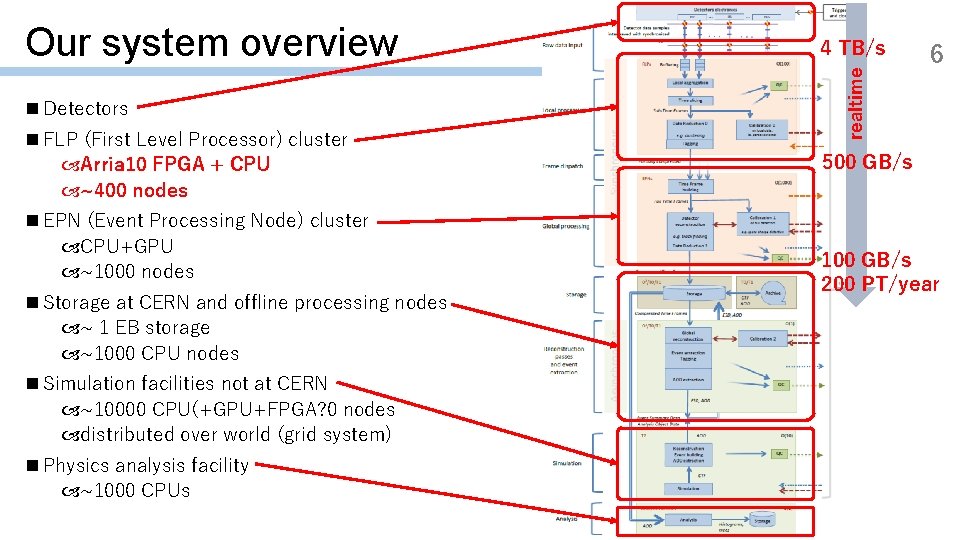

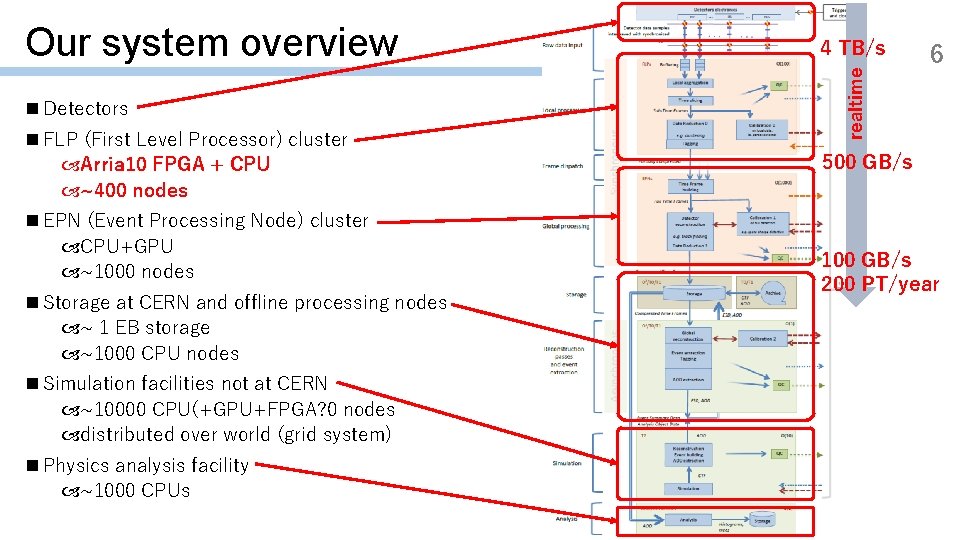

Our system overview
- Detectors
- FLP (First Level Processor) cluster: Arria 10 FPGA + CPU, ~400 nodes
- EPN (Event Processing Node) cluster: CPU + GPU, ~1000 nodes
- Storage at CERN and offline processing nodes: ~1 EB storage, ~1000 CPU nodes
- Simulation facilities outside CERN: ~10000 CPU (+GPU+FPGA?) nodes distributed over the world (grid system)
- Physics analysis facility: ~1000 CPUs
- Data rates along the chain (figure): 4 TB/s (real time), 500 GB/s, 100 GB/s, 200 PB/year

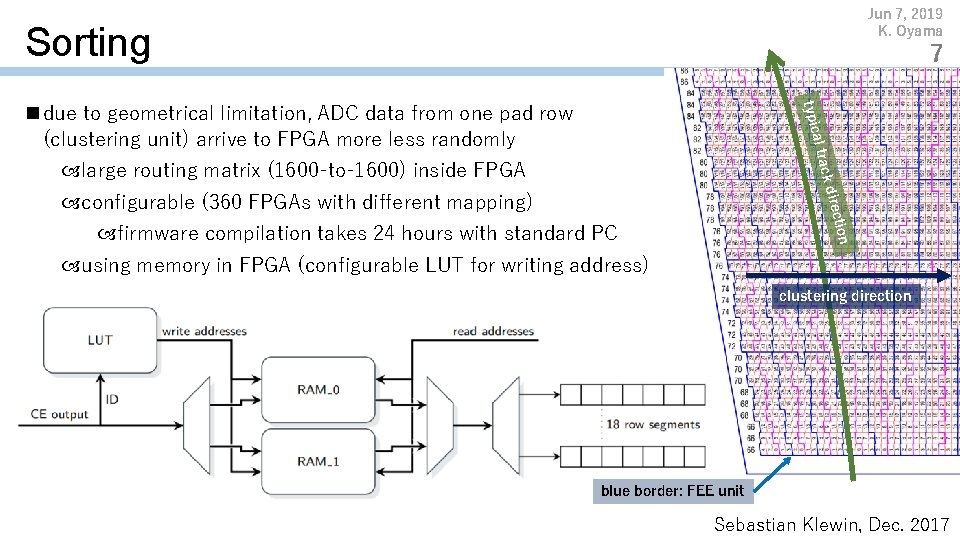

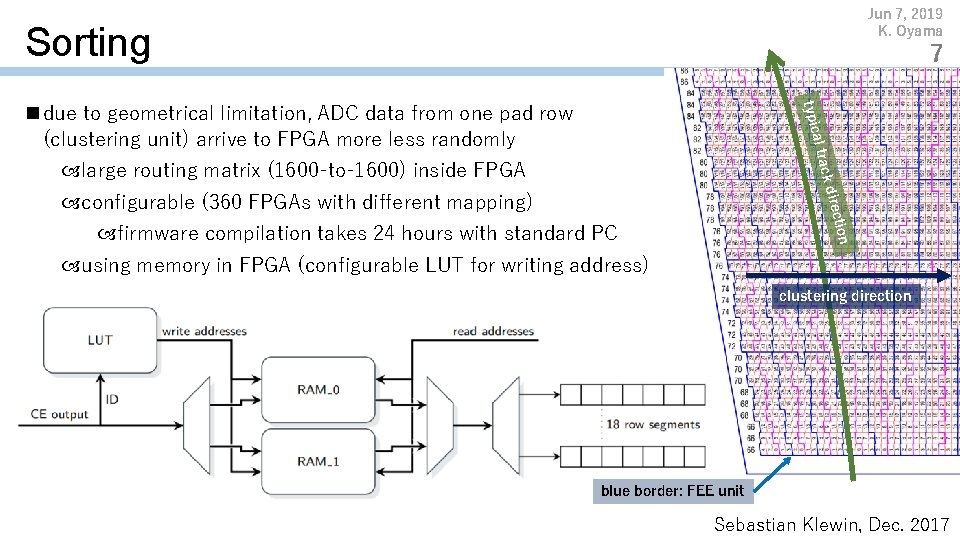

Sorting
- Due to geometrical limitations, ADC data from one pad row (clustering unit) arrive at the FPGA more or less randomly
  - this would need a large routing matrix (1600-to-1600) inside the FPGA
- The mapping must be configurable (360 FPGAs with different mappings)
  - one firmware compilation takes 24 hours on a standard PC
  - solution: use memory in the FPGA (configurable LUT for the write address)

Figure labels: typical track direction, clustering direction; blue border: FEE unit (Sebastian Klewin, Dec. 2017)
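
The LUT approach can be illustrated with a behavioural model: instead of a hard-wired routing matrix, each incoming sample is written to a memory address taken from a table, and the memory is then read out sequentially in pad order. Only the table differs between FPGAs, so it can be loaded at configuration time instead of being compiled into 360 different firmwares. This is a software sketch, not the firmware; the function name and the flat input index (link x channels per link + channel) are assumptions for illustration.

```python
from typing import List, Sequence

def sort_time_bin(samples: Sequence[int], write_lut: Sequence[int]) -> List[int]:
    """Hypothetical model of LUT-based sorting for one time bin.

    samples[i]   : i-th ADC value in arrival order (link * channels_per_link + channel)
    write_lut[i] : memory address (position within the pad row) for input i
    """
    if sorted(write_lut) != list(range(len(samples))):
        raise ValueError("write_lut must be a permutation of 0..len(samples)-1")
    memory = [0] * len(samples)
    for i, value in enumerate(samples):
        memory[write_lut[i]] = value  # the "routing" is only a configurable write address
    return memory  # sequential read-out yields the samples in pad order

# Two links x three channels; another FPGA would just load a different table
arrived = [10, 11, 12, 20, 21, 22]
lut = [3, 0, 4, 1, 5, 2]
print(sort_time_bin(arrived, lut))  # [11, 20, 22, 10, 12, 21]
```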

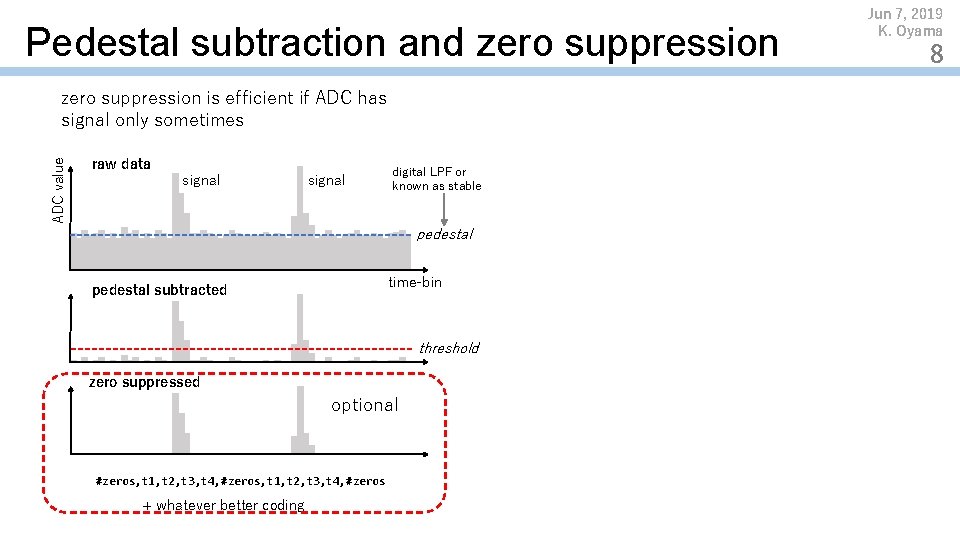

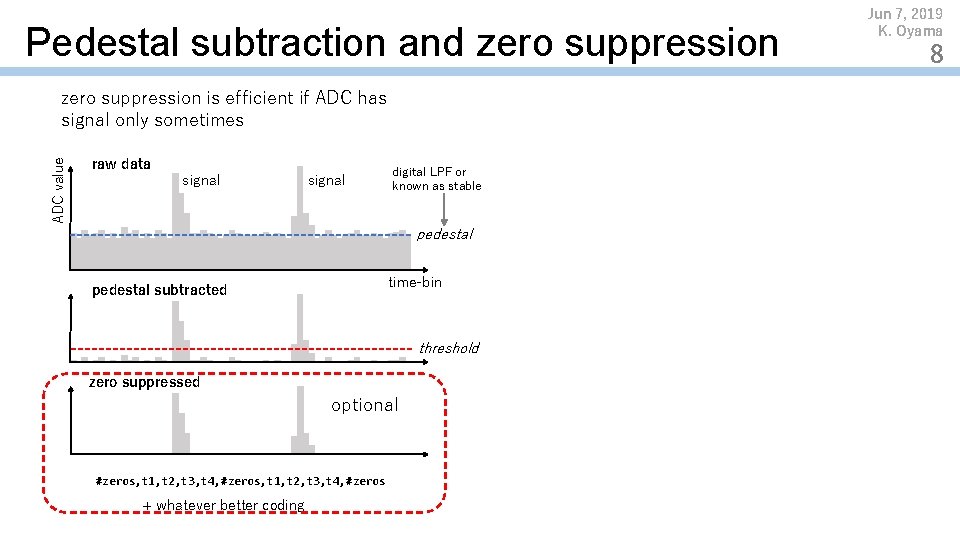

Pedestal subtraction and zero suppression
- Zero suppression is efficient if the ADC has a signal only occasionally
- Steps (figure: ADC value vs. time bin)
  - raw data: signal on top of a pedestal; the pedestal comes from a digital LPF or is known to be stable
  - pedestal subtracted
  - threshold applied, giving the zero-suppressed data
- Optional encoding: #zeros, t1, t2, t3, t4, #zeros, ... or any better coding
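
A behavioural sketch of the three steps for one channel: pedestal subtraction, threshold, and a run-length style encoding. It assumes a constant pedestal (the "known to be stable" case, not the digital LPF), and the `(#zeros, [samples])` pair format is a hypothetical concrete form of the "#zeros, t1, t2, t3, t4, #zeros" coding above; trailing zeros are left implicit.

```python
from typing import List, Sequence, Tuple

Encoded = List[Tuple[int, List[int]]]

def zero_suppress(raw: Sequence[int], pedestal: int, threshold: int) -> Encoded:
    """Subtract a constant pedestal, drop samples below threshold, encode as (#zeros, [samples])."""
    encoded: Encoded = []
    zeros, burst = 0, []
    for adc in raw:
        value = adc - pedestal
        if value >= threshold:
            burst.append(value)          # sample kept
        else:
            if burst:                    # a burst just ended: emit it with its leading zero count
                encoded.append((zeros, burst))
                zeros, burst = 0, []
            zeros += 1
    if burst:
        encoded.append((zeros, burst))
    return encoded

def decode(encoded: Encoded, n_samples: int) -> List[int]:
    """Rebuild the zero-suppressed waveform; trailing zeros are implicit."""
    out: List[int] = []
    for zeros, burst in encoded:
        out.extend([0] * zeros)
        out.extend(burst)
    return out + [0] * (n_samples - len(out))

raw_samples = [50, 51, 49, 50, 62, 80, 71, 55, 50, 50, 49, 66, 50]
enc = zero_suppress(raw_samples, pedestal=50, threshold=3)
print(enc)  # [(4, [12, 30, 21, 5]), (3, [16])]
```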

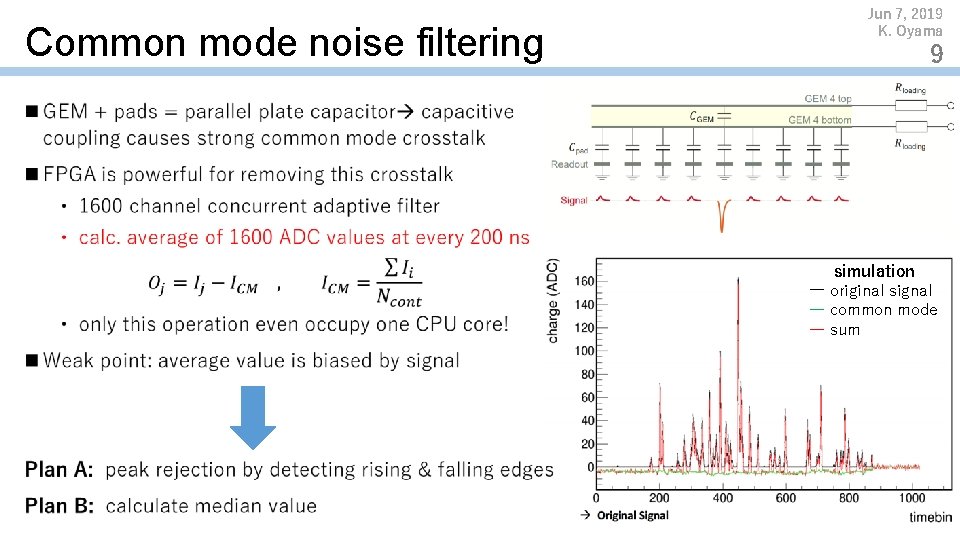

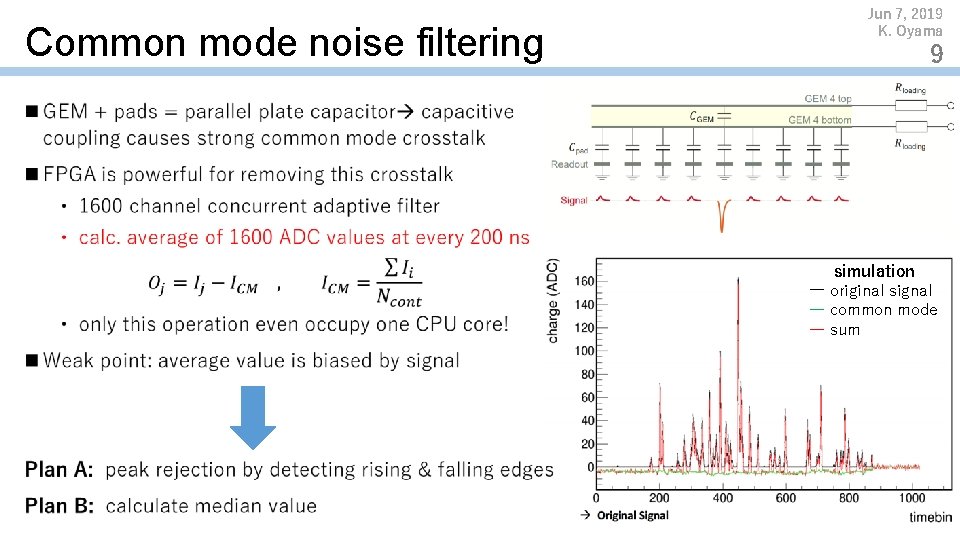

Common mode noise filtering
- Simulation (figure legend): original signal, common mode, sum

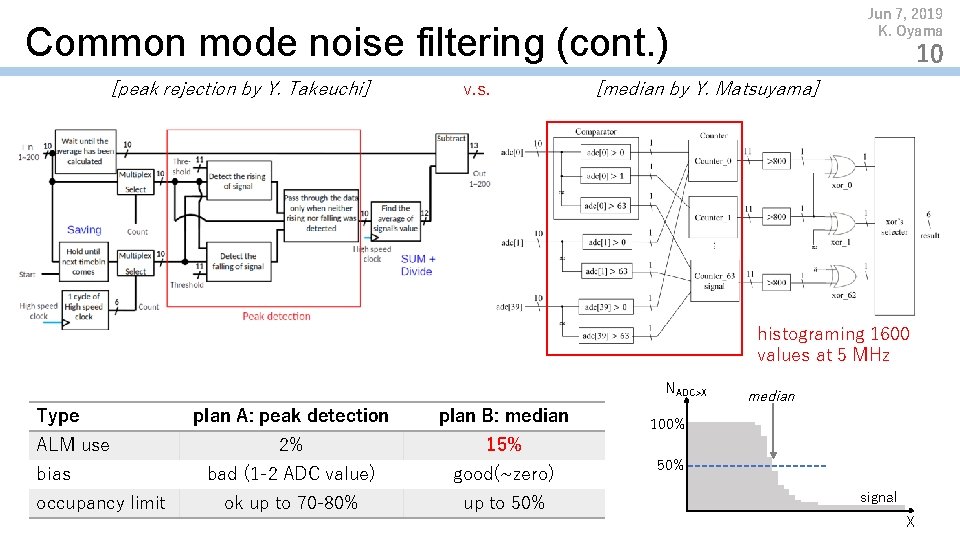

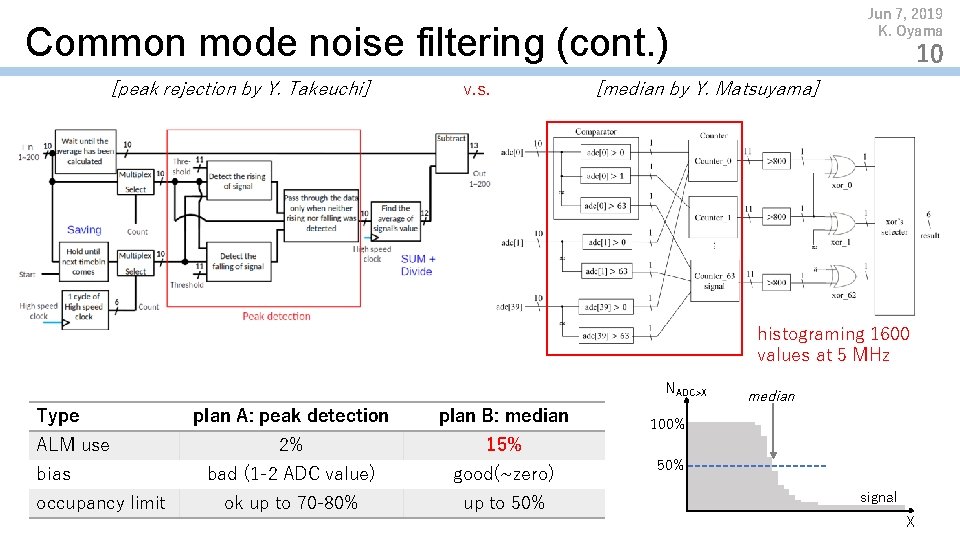

Common mode noise filtering (cont.)
- Plan A: peak rejection (by Y. Takeuchi) vs. plan B: median (by Y. Matsuyama)
- Histogramming 1600 values at 5 MHz

| Type | ALM use | Bias | Occupancy limit |
|---|---|---|---|
| Plan A: peak detection | 2% | bad (1-2 ADC values) | OK up to 70-80% |
| Plan B: median | 15% | good (~zero) | up to 50% |

Figure: N(ADC > X) vs. X, with the median at the 50% level
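
The occupancy limits in the table can be illustrated with a toy model: for one time bin, the common-mode offset is estimated from all channels either as the most populated histogram bin (plan A) or as the median (plan B). With idealised integer samples and no random noise (so the bias difference is not reproduced), the sketch shows that once more than half of the channels carry signal, the median lands on the signal while the histogram peak still finds the baseline. The function names are hypothetical and this is not the firmware algorithm.

```python
from collections import Counter
from statistics import median_low
from typing import Sequence

def common_mode_peak(samples: Sequence[int]) -> int:
    """Plan A (sketch): histogram the ADC values and return the most populated bin."""
    counts = Counter(samples)
    return max(counts, key=lambda v: (counts[v], -v))  # ties broken toward the lower value

def common_mode_median(samples: Sequence[int]) -> int:
    """Plan B (sketch): median over all channels of the time bin."""
    return median_low(samples)

low_occ = [3, 3, 3, 3, 3, 3, 3, 53, 63, 43]      # 30% of channels with signal
high_occ = [3, 3, 3, 3, 40, 50, 60, 45, 55, 65]  # 60% of channels with signal
print(common_mode_peak(low_occ), common_mode_median(low_occ))    # 3 3
print(common_mode_peak(high_occ), common_mode_median(high_occ))  # 3 40
```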

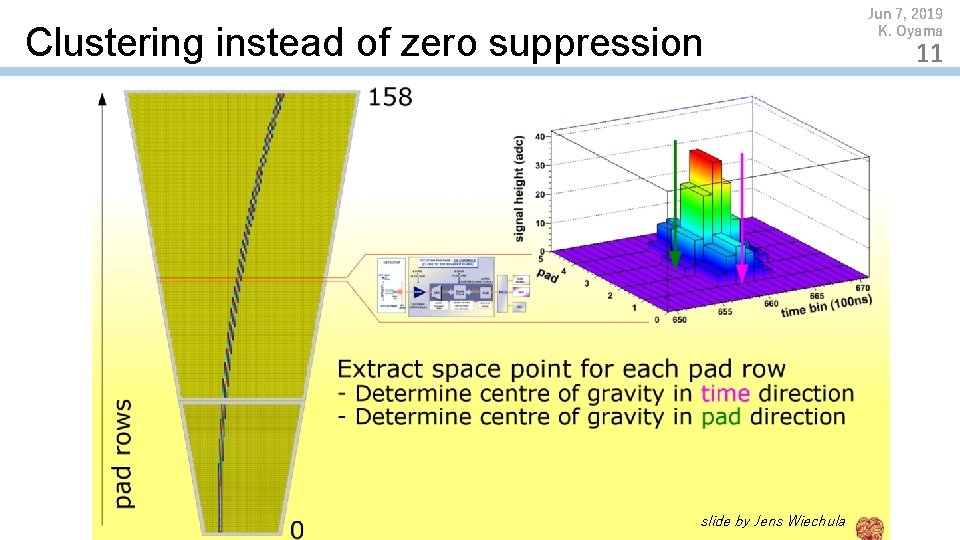

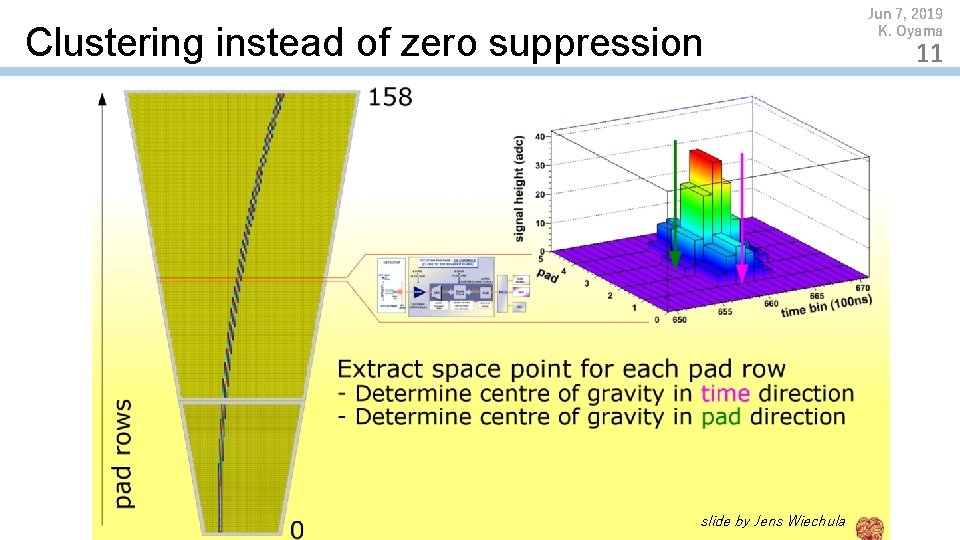

Clustering instead of zero suppression (slide by Jens Wiechula)

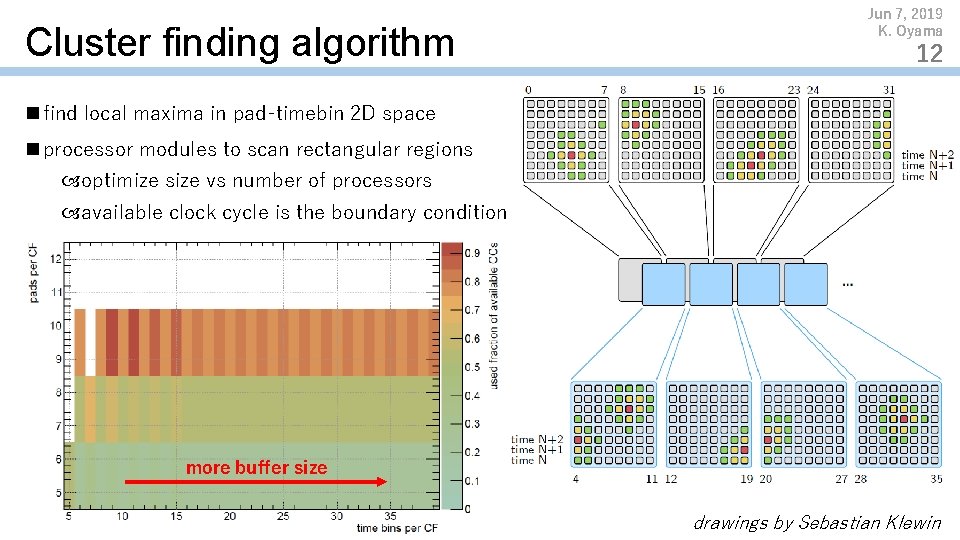

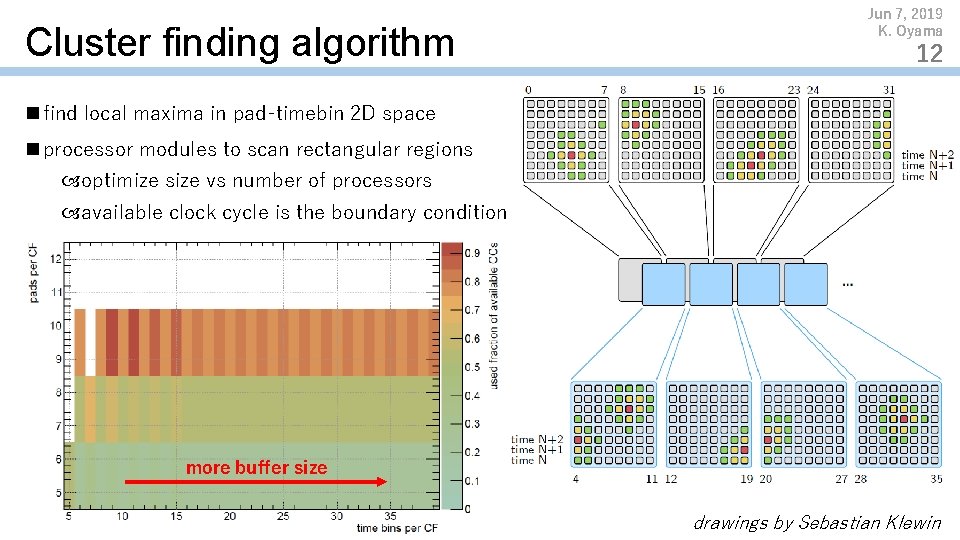

Cluster finding algorithm
- Find local maxima in the pad-time-bin 2D space
- Processor modules scan rectangular regions
  - optimize the region size vs. the number of processors available
  - the available clock cycles are the boundary condition
  - larger regions need more buffer

Drawings by Sebastian Klewin
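
A plain software version of the local-maximum search may help to fix ideas. This sketch scans the whole pad x time-bin map sequentially rather than with parallel processor modules; the threshold and the tie-breaking rule (on a flat top only the last cell in scan order is kept) are assumptions, not necessarily what the firmware does.

```python
from typing import List, Sequence, Tuple

def find_local_maxima(q: Sequence[Sequence[int]], threshold: int) -> List[Tuple[int, int]]:
    """Return (pad, time_bin) of local maxima in a pad x time-bin charge map.

    A cell is a peak if its charge is >= threshold and no 8-neighbour is larger.
    Ties: the cell must be strictly larger than neighbours later in row-major
    scan order, so on a flat top only the last cell in scan order is kept.
    """
    n_pad, n_time = len(q), len(q[0])
    peaks = []
    for p in range(n_pad):
        for t in range(n_time):
            c = q[p][t]
            if c < threshold:
                continue
            is_peak = True
            for dp in (-1, 0, 1):
                for dt in (-1, 0, 1):
                    pp, tt = p + dp, t + dt
                    if (dp, dt) == (0, 0) or not (0 <= pp < n_pad and 0 <= tt < n_time):
                        continue
                    nb = q[pp][tt]
                    later = (dp, dt) > (0, 0)  # neighbour comes later in scan order
                    if nb > c or (later and nb == c):
                        is_peak = False
            if is_peak:
                peaks.append((p, t))
    return peaks

charge = [
    [0, 0, 0, 0, 0, 0],
    [0, 2, 5, 2, 0, 0],
    [0, 3, 9, 4, 0, 0],
    [0, 1, 3, 2, 0, 7],
    [0, 0, 0, 0, 0, 3],
]
print(find_local_maxima(charge, threshold=3))  # [(2, 2), (3, 5)]
```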

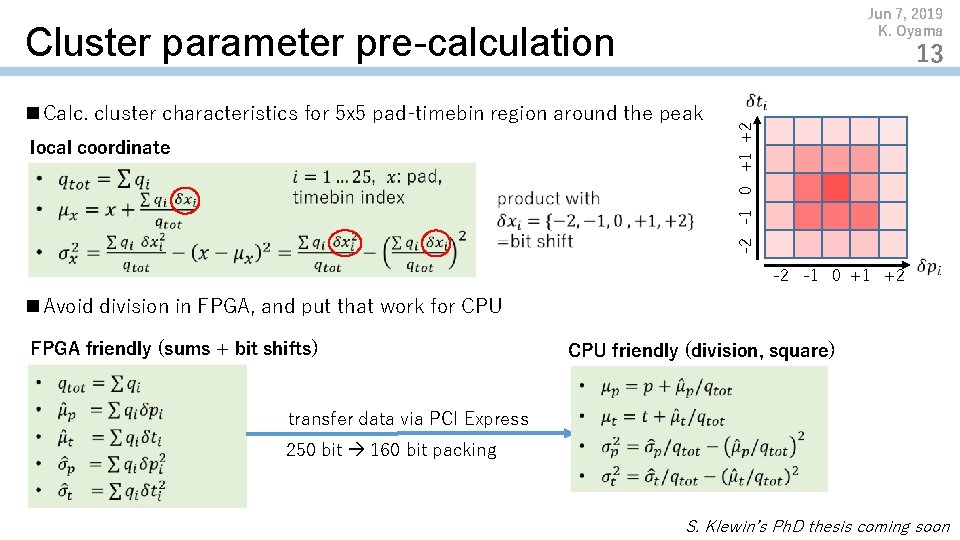

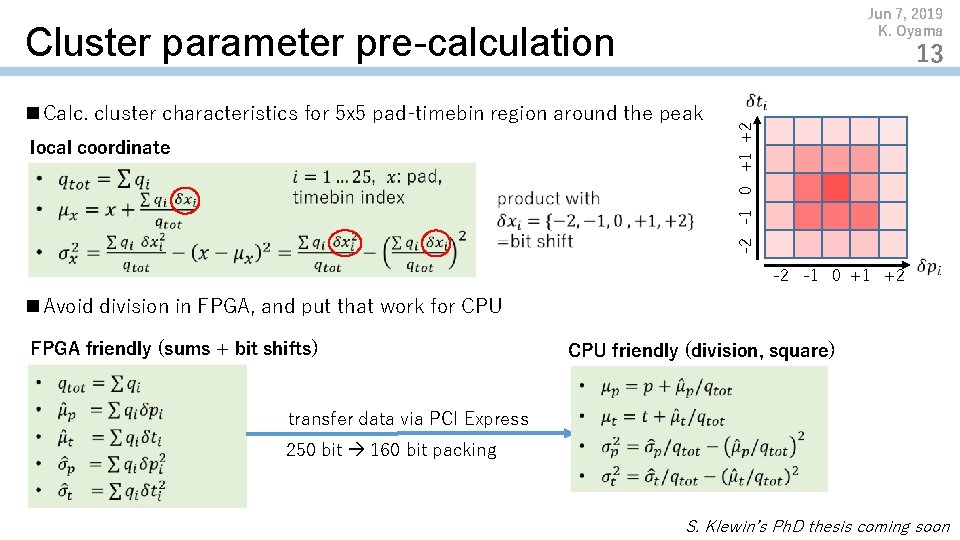

Cluster parameter pre-calculation
- Calculate the cluster characteristics for the 5 x 5 pad-time-bin region around the peak (local coordinates -2, -1, 0, +1, +2 in both directions)
- Avoid division in the FPGA and leave that work to the CPU
  - FPGA friendly: sums + bit shifts
  - CPU friendly: division, square
- Transfer the data via PCI Express: 250 bit, packed into 160 bit

S. Klewin's PhD thesis coming soon
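
The FPGA/CPU split can be sketched as two functions: an FPGA-side step that produces only integer sums, where the small constant weights (±1, ±2, 4) reduce to additions and bit shifts, and a CPU-side step that does the divisions. The choice of moments (total charge, first and second moments in pad and time) is an assumption for illustration; the slide does not list the exact quantities or the 160-bit packing format.

```python
from typing import Sequence, Tuple

SHIFT = {1: 0, 2: 1, 4: 2}  # weight -> left-shift amount

def shift_mul(q: int, k: int) -> int:
    """Multiply by a small constant weight in {-2, -1, 0, 1, 2, 4} using shifts only."""
    if k == 0:
        return 0
    r = q << SHIFT[abs(k)]
    return -r if k < 0 else r

def fpga_sums(window: Sequence[Sequence[int]]) -> Tuple[int, int, int, int, int]:
    """FPGA-friendly part (sketch): integer sums over a 5x5 window, adds and shifts only.
    window[i][j]: i = pad index, j = time-bin index; local coordinates are i-2, j-2."""
    q_tot = sp = st = spp = stt = 0
    for i in range(5):
        for j in range(5):
            q, dp, dt = window[i][j], i - 2, j - 2
            q_tot += q
            sp += shift_mul(q, dp)
            st += shift_mul(q, dt)
            spp += shift_mul(q, dp * dp)  # dp*dp is a constant per cell in hardware
            stt += shift_mul(q, dt * dt)
    return q_tot, sp, st, spp, stt

def cpu_moments(q_tot: int, sp: int, st: int, spp: int, stt: int):
    """CPU-friendly part: divisions give the centroid and variance in pad and time."""
    mean_p, mean_t = sp / q_tot, st / q_tot
    return mean_p, mean_t, spp / q_tot - mean_p ** 2, stt / q_tot - mean_t ** 2

window = [[0] * 5 for _ in range(5)]
window[2][2] = 8  # peak
window[2][3] = 4  # dp = 0, dt = +1
window[3][2] = 4  # dp = +1, dt = 0
sums = fpga_sums(window)
print(sums, cpu_moments(*sums))  # (16, 4, 4, 4, 4) (0.25, 0.25, 0.1875, 0.1875)
```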

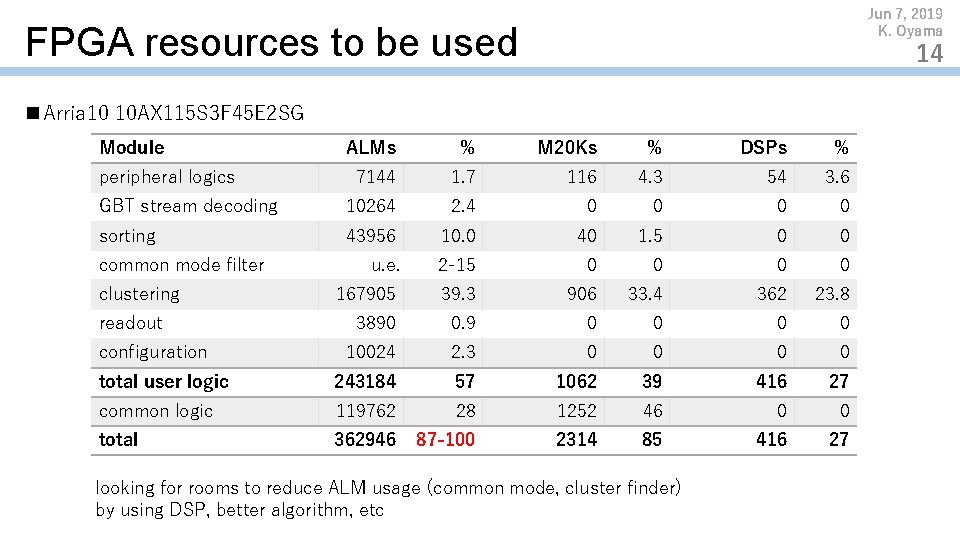

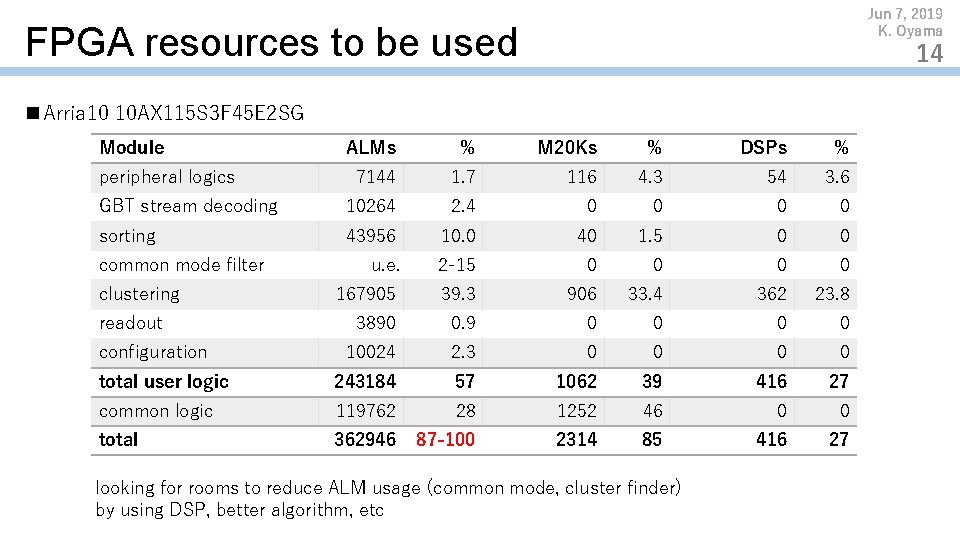

FPGA resources to be used
- Arria 10 10AX115S3F45E2SG

| Module | ALMs | % | M20Ks | % | DSPs | % |
|---|---|---|---|---|---|---|
| peripheral logics | 7144 | 1.7 | 116 | 4.3 | 54 | 3.6 |
| GBT stream decoding | 10264 | 2.4 | 0 | 0 | - | - |
| sorting | 43956 | 10.0 | 40 | 1.5 | 0 | 0 |
| common mode filter | u.e. | 2-15 | 0 | 0 | - | - |
| clustering | 167905 | 39.3 | 906 | 33.4 | 362 | 23.8 |
| readout | 3890 | 0.9 | 0 | 0 | - | - |
| configuration | 10024 | 2.3 | 0 | 0 | - | - |
| total user logic | 243184 | 57 | 1062 | 39 | 416 | 27 |
| common logic | 119762 | 28 | 1252 | 46 | 0 | 0 |
| total | 362946 | 87-100 | 2314 | 85 | 416 | 27 |

- Looking for room to reduce ALM usage (common mode filter, cluster finder) by using DSPs, a better algorithm, etc.
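
The percentages in the table match the capacity of the Arria 10 GX 1150 (427,200 ALMs, 2,713 M20K blocks, 1,518 DSP blocks, as given in Intel's Arria 10 device overview). The sketch below recomputes the clustering row and shows that the 87-100% ALM range is the ~85% already counted plus the 2-15% estimate for the common mode filter. The dictionary layout and function name are illustrative only.

```python
CAPACITY = {"ALM": 427_200, "M20K": 2_713, "DSP": 1_518}  # Arria 10 GX 1150 (10AX115)

def utilization(usage):
    """Percentage of device capacity used per resource type, rounded to 0.1 %."""
    return {res: round(100.0 * n / CAPACITY[res], 1) for res, n in usage.items()}

clustering = {"ALM": 167_905, "M20K": 906, "DSP": 362}
print(utilization(clustering))  # {'ALM': 39.3, 'M20K': 33.4, 'DSP': 23.8}

# Total user logic + common logic, without the common mode filter (2-15 % still to add)
known_alm_pct = 100.0 * (243_184 + 119_762) / CAPACITY["ALM"]
print(round(known_alm_pct + 2), round(known_alm_pct + 15))  # 87 100
```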

More online processing (question by T. Gunji)
- 2D deconvolution
- Tracking within a sector?
- Distortion correction using a high-resolution digital current monitor
  - a different data source: 144 channels (144 GEM4-top channels) x 1 kHz x 17 bit
  - numerical calculations (Poisson solver, Langevin) or machine learning if the numerical calculation is too heavy

Clustering in other devices?
- Estimation by D. Rohr (https://indico.cern.ch/event/849606/)
  - CPU: terribly slow (no solution)
  - GPU clusterizer (OpenCL 2.0 C) works on simulated data: 250 GPUs needed for 50 kHz Pb-Pb, but this did not include
    - deconvolution
    - noise suppression
    - PCIe transfer
    - decoding of zero-suppressed data
  - including all needed algorithms, 500 GPUs (at a price of 700-1000 EUR each) is the safe number
    - more servers (63-125 more) to host 4 GPUs each, 200 EUR per server
    - cost range: 300 k to 750 k EUR (30 M to 75 M JPY)
    - increases the network bandwidth from FLP to EPN (InfiniBand)
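
The slide does not show how the cost range was derived. Assuming it spans the 250-GPU baseline (63 servers) at 700 EUR per GPU and the 500-GPU safe number (125 servers) at 1000 EUR per GPU, the quoted 300 k-750 k EUR range is reproduced with a per-server cost of about 2000 EUR; with 200 EUR per server the same model gives roughly 188 k-525 k EUR. A minimal sketch of that hypothetical cost model:

```python
import math

def cost_estimate(n_gpus: int, gpu_price: int, server_price: int, gpus_per_server: int = 4) -> int:
    """Hypothetical cost model in EUR: GPUs plus the extra servers needed to host them."""
    n_servers = math.ceil(n_gpus / gpus_per_server)  # 250 -> 63, 500 -> 125
    return n_gpus * gpu_price + n_servers * server_price

print(cost_estimate(250, 700, 2000), cost_estimate(500, 1000, 2000))  # 301000 750000
print(cost_estimate(250, 700, 200), cost_estimate(500, 1000, 200))    # 187600 525000
```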

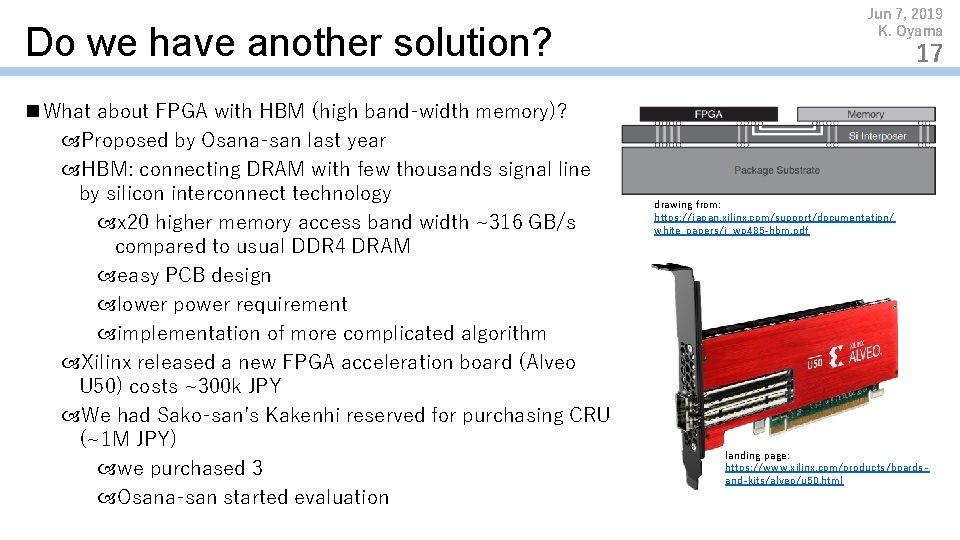

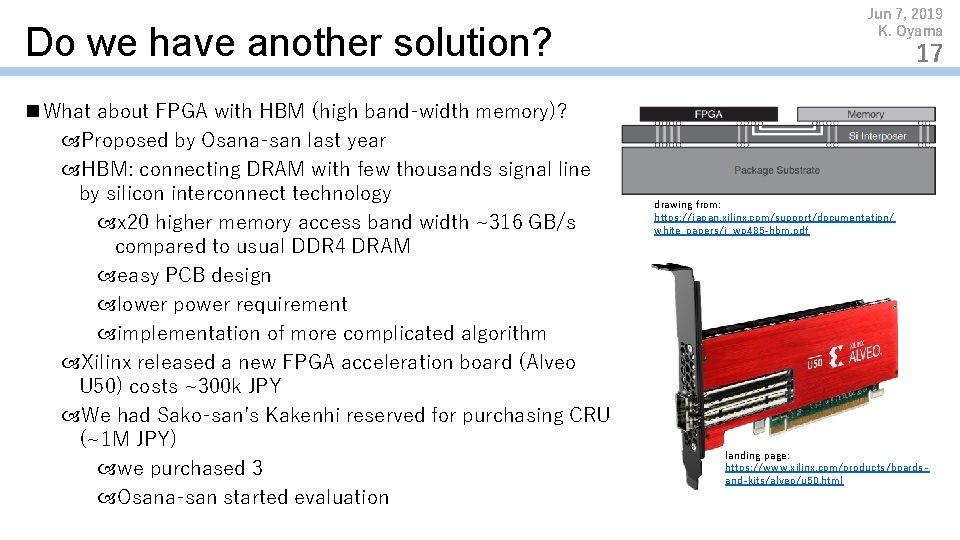

Do we have another solution?
- What about an FPGA with HBM (high-bandwidth memory)? Proposed by Osana-san last year
  - HBM: DRAM connected through a few thousand signal lines using silicon interconnect technology
  - x20 higher memory access bandwidth (~316 GB/s) compared to usual DDR4 DRAM
  - easy PCB design
  - lower power requirement
  - allows implementation of more complicated algorithms
- Xilinx released a new FPGA acceleration board (Alveo U50) costing ~300 k JPY
  - we had Sako-san's KAKENHI reserved for purchasing CRUs (~1 M JPY), and we purchased 3
  - Osana-san started the evaluation

Drawing from: https://japan.xilinx.com/support/documentation/white_papers/j_wp485-hbm.pdf
Landing page: https://www.xilinx.com/products/boards-and-kits/alveo/u50.html

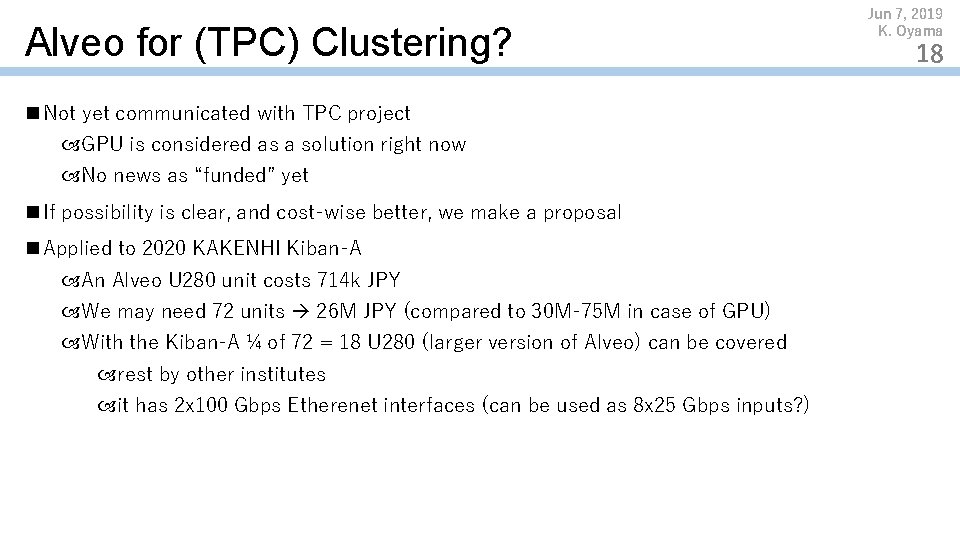

Alveo for (TPC) clustering?
- Not yet communicated with the TPC project
  - GPUs are considered the solution right now
  - no news about them being "funded" yet
- If the possibility is clear and it is better cost-wise, we will make a proposal
- Applied for 2020 KAKENHI Kiban-A
  - an Alveo U280 unit costs 714 k JPY
  - we may need 72 units: 26 M JPY (compared to 30 M-75 M JPY in the case of GPUs)
  - with the Kiban-A, ¼ of 72 = 18 U280 (the larger version of Alveo) can be covered; the rest by other institutes
  - it has 2 x 100 Gbps Ethernet interfaces (can they be used as 8 x 25 Gbps inputs?)

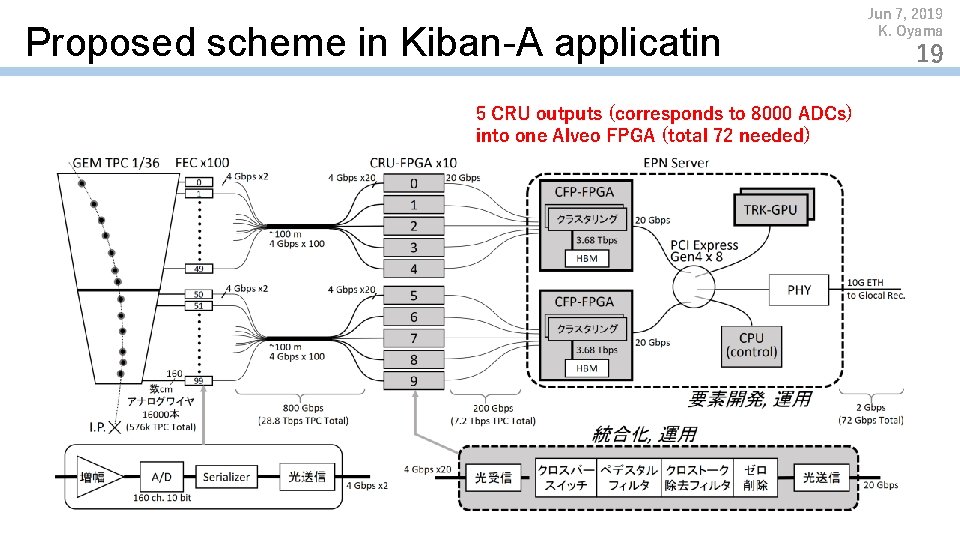

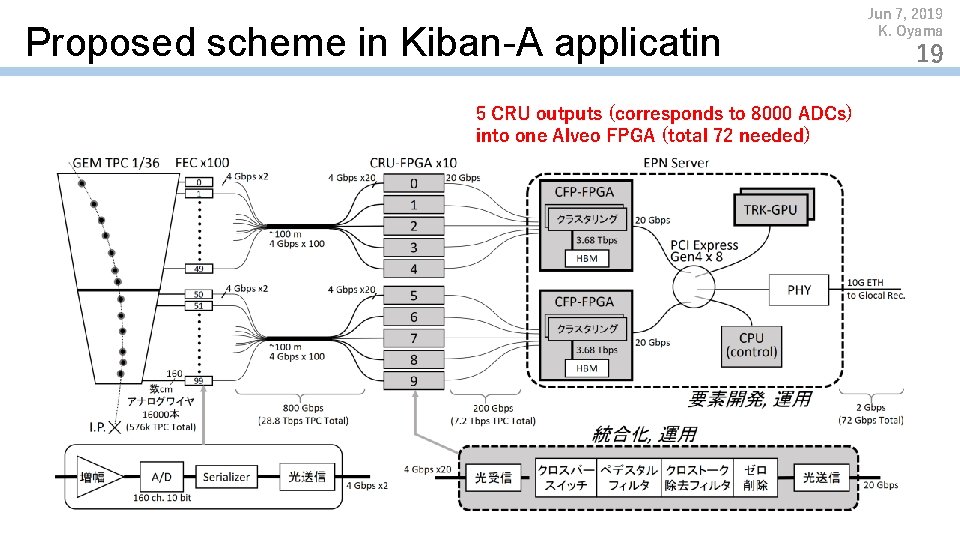

Proposed scheme in the Kiban-A application
- 5 CRU outputs (corresponding to 8000 ADCs) into one Alveo FPGA (72 needed in total)

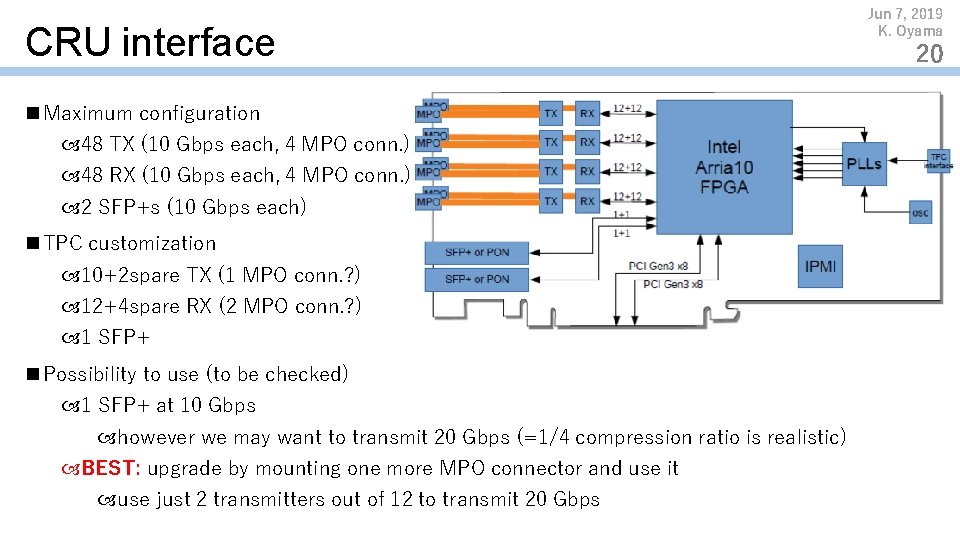

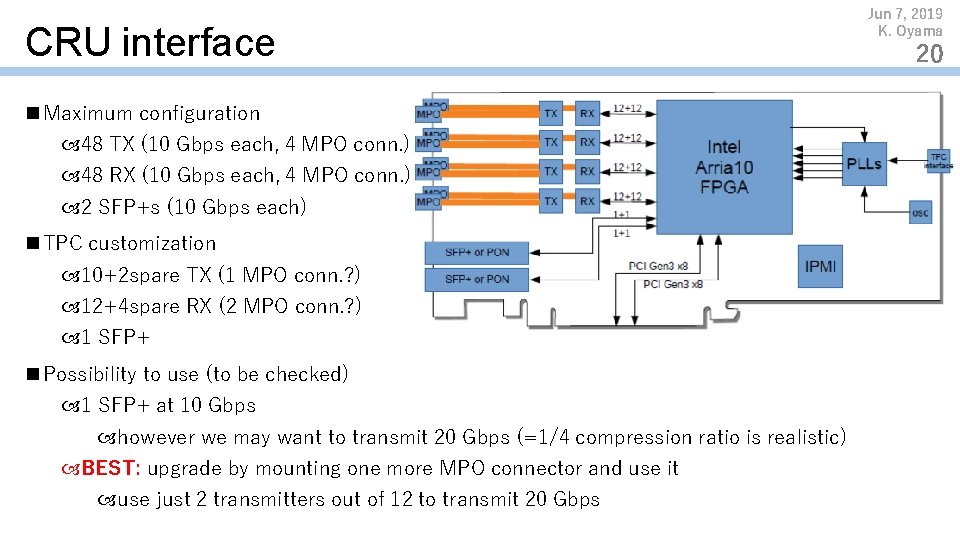

CRU interface
- Maximum configuration
  - 48 TX (10 Gbps each, 4 MPO connectors)
  - 48 RX (10 Gbps each, 4 MPO connectors)
  - 2 SFP+ (10 Gbps each)
- TPC customization
  - 10 + 2 spare TX (1 MPO connector?)
  - 12 + 4 spare RX (2 MPO connectors?)
  - 1 SFP+
- Possibilities to use (to be checked)
  - 1 SFP+ at 10 Gbps; however, we may want to transmit 20 Gbps (a compression ratio of 1/4 is realistic)
  - BEST: upgrade by mounting one more MPO connector and use just 2 of its 12 transmitters to send 20 Gbps
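
The 20 Gbps figure follows from the input of one CRU: 1600 ADCs (5 CRUs correspond to 8000 ADCs) at 10 bit and 5 MHz give 80 Gbps, and a compression ratio of 1/4 leaves 20 Gbps. At 10 Gbps per transmitter that needs two links, which is why one SFP+ is not enough. Under the same assumption, five CRUs would feed about 100 Gbps into one Alveo. A minimal sketch of the arithmetic (function name hypothetical):

```python
import math

def cru_output_links(input_gbps: float = 80.0, compression: float = 0.25,
                     link_gbps: float = 10.0):
    """Compressed output rate of one CRU and the number of transmitters it needs."""
    output_gbps = input_gbps * compression
    return output_gbps, math.ceil(output_gbps / link_gbps)

out_gbps, n_tx = cru_output_links()
print(out_gbps, n_tx)  # 20.0 2
print(5 * out_gbps)    # 100.0 Gbps into one Alveo fed by 5 CRUs
```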

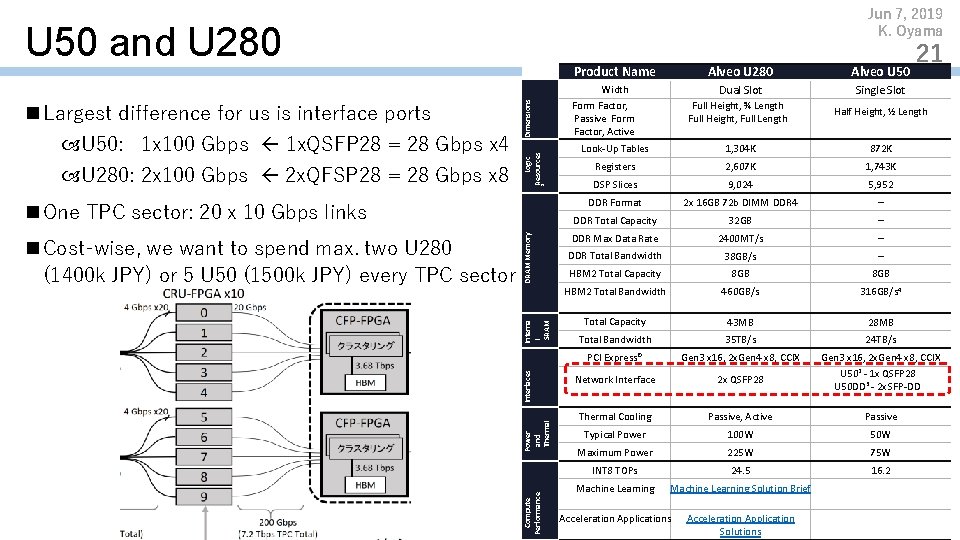

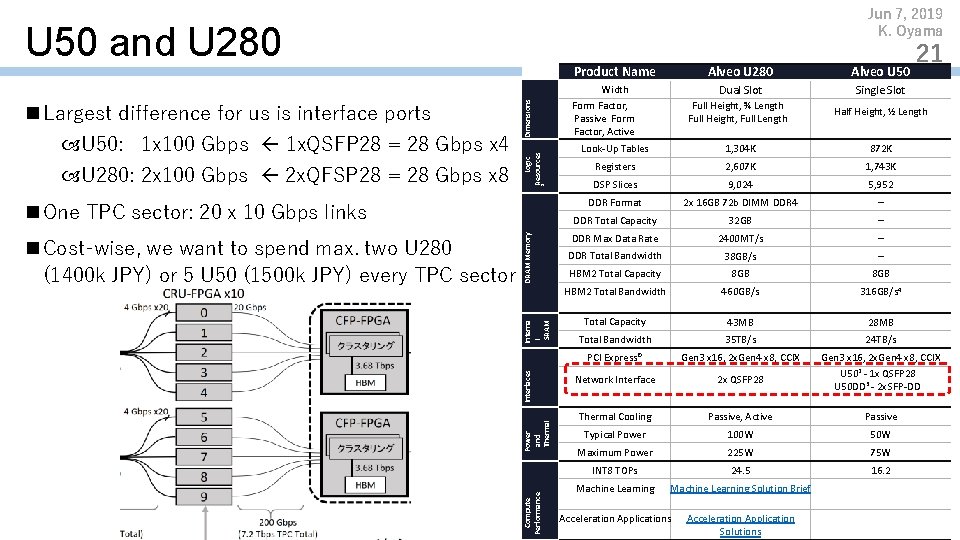

U50 and U280
- U280: 2 x 100 Gbps (2 x QSFP28 = 28 Gbps x 8)
- U50: 1 x 100 Gbps (1 x QSFP28 = 28 Gbps x 4)
- One TPC sector: 20 x 10 Gbps links
- Cost-wise, we want to spend at most two U280 (1400 k JPY) or 5 U50 (1500 k JPY) per TPC sector

| Product name | Alveo U280 | Alveo U50 |
|---|---|---|
| Width | Dual slot | Single slot |
| Form factor, passive | Full height, ¾ length | Half height, ½ length |
| Form factor, active | Full height, full length | - |
| Look-up tables | 1,304 K | 872 K |
| Registers | 2,607 K | 1,743 K |
| DSP slices | 9,024 | 5,952 |
| DDR format | 2 x 16 GB 72b DIMM DDR4 | - |
| DDR total capacity | 32 GB | - |
| DDR max data rate | 2400 MT/s | - |
| DDR total bandwidth | 38 GB/s | - |
| HBM2 total capacity | 8 GB | 8 GB |
| HBM2 total bandwidth | 460 GB/s | 316 GB/s |
| Internal SRAM total capacity | 43 MB | 28 MB |
| Internal SRAM total bandwidth | 35 TB/s | 24 TB/s |
| PCI Express | Gen3 x16, 2x Gen4 x8, CCIX | Gen3 x16, 2x Gen4 x8, CCIX |
| Network interface | 2 x QSFP28 | U50: 1 x QSFP28; U50DD: 2 x SFP-DD |
| Thermal cooling | Passive, active | Passive |
| Typical power | 100 W | 50 W |
| Maximum power | 225 W | 75 W |
| INT8 TOPs | 24.5 | 16.2 |

- The largest difference for us is the interface ports

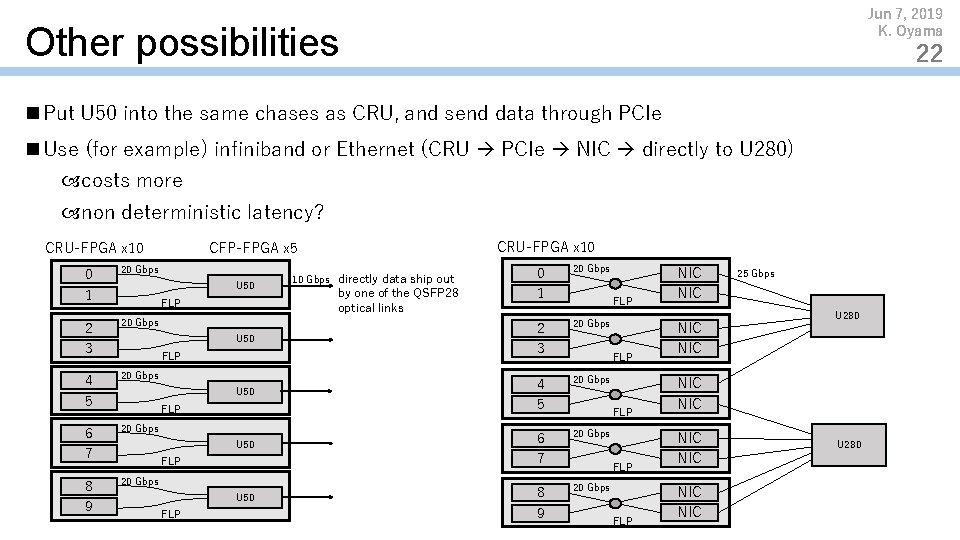

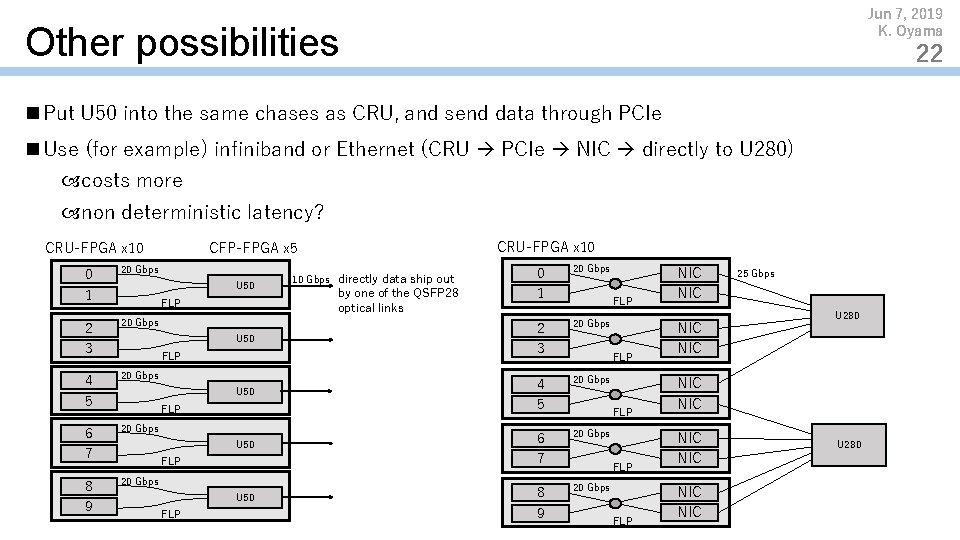

Other possibilities
- Put the U50 into the same chassis as the CRU and send data through PCIe
- Use (for example) InfiniBand or Ethernet (from the CRU via PCIe to a NIC, directly to a U280)
  - costs more
  - non-deterministic latency?

Figure labels: CRU-FPGA links 0-9 paired into 20 Gbps streams; U50 in the FLP, with data shipped out directly (10 Gbps) by one of the QSFP28 optical links; alternatively, FLP NICs sending at 25 Gbps to NICs of the U280 units

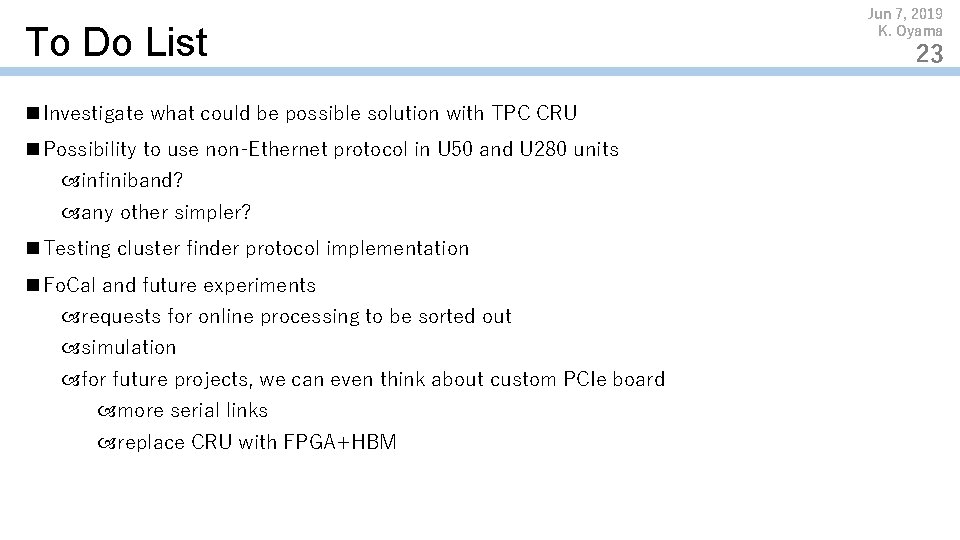

To-do list
- Investigate what could be a possible solution with the TPC CRU
- Possibility to use a non-Ethernet protocol in the U50 and U280 units: InfiniBand? anything simpler?
- Testing the cluster finder protocol implementation
- FoCal and future experiments: requests for online processing to be sorted out
  - simulation
  - for future projects, we can even think about a custom PCIe board with more serial links, replacing the CRU with FPGA + HBM

Paper
- Independently from the TPC project, it may make sense to prepare a paper
- Very general detector data processing acceleration
  - stencil calculation for TPC-like detectors (not necessarily the ALICE TPC)
  - calorimeter-like detectors
  - performance