
Algorithms for IR: Ranking

The big fight: find the best ranking...

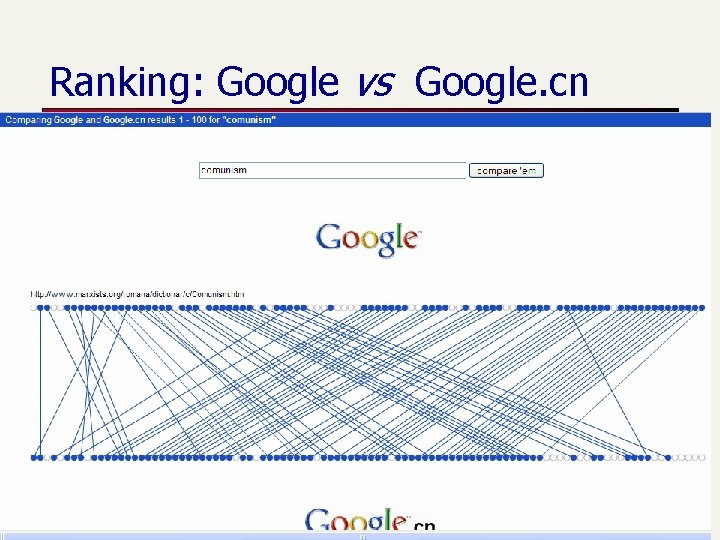

Ranking: Google vs. Google.cn

Algorithms for IR: Text-based Ranking (1st generation)

Similarity between binary vectors
- A document is a binary vector: X, Y ∈ {0, 1}^D
- Score: overlap measure

What’s wrong?

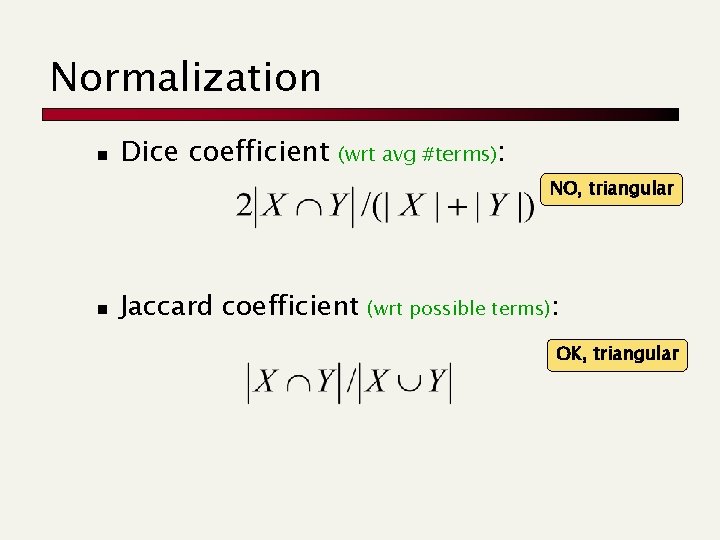

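Normalization
- Dice coefficient (normalizes w.r.t. the average number of terms): its distance form does NOT satisfy the triangle inequality
- Jaccard coefficient (normalizes w.r.t. the possible terms): its distance form satisfies the triangle inequality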
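The three scores can be compared on a small example. This is a minimal sketch that treats each document as a set of terms (equivalent to a binary vector); the function names and the toy sets are illustrative assumptions, not taken from the slides.

```python
def overlap(x: set, y: set) -> int:
    """Raw overlap: number of shared terms (not normalized)."""
    return len(x & y)


def dice(x: set, y: set) -> float:
    """Dice coefficient: overlap divided by the average set size."""
    if not x and not y:
        return 0.0
    return 2 * len(x & y) / (len(x) + len(y))


def jaccard(x: set, y: set) -> float:
    """Jaccard coefficient: overlap divided by the size of the union."""
    union = x | y
    return len(x & y) / len(union) if union else 0.0


# Classic counterexample for the triangle inequality on distances 1 - sim.
X, Y, Z = {"a"}, {"b"}, {"a", "b"}

dice_xy = 1 - dice(X, Y)                           # 1.0
dice_via_z = (1 - dice(X, Z)) + (1 - dice(Z, Y))   # 1/3 + 1/3
jac_xy = 1 - jaccard(X, Y)                         # 1.0
jac_via_z = (1 - jaccard(X, Z)) + (1 - jaccard(Z, Y))  # 1/2 + 1/2

print("Dice violates the triangle inequality:", dice_xy > dice_via_z)
print("Jaccard respects it here:", jac_xy <= jac_via_z)
```

Going from X to Y through Z is shorter than going directly under the Dice distance, which is why Dice is marked "NO" above.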

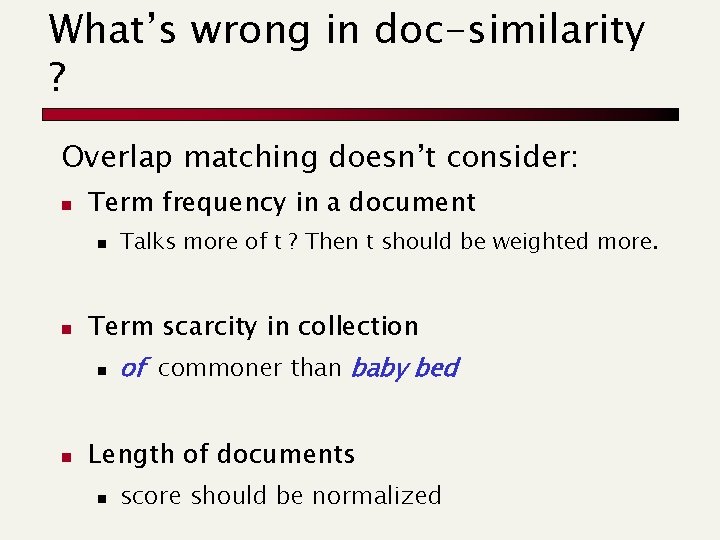

What’s wrong in doc-similarity?

Overlap matching doesn’t consider:
- Term frequency in a document
  - Talks more of t? Then t should be weighted more.
- Term scarcity in the collection
  - "of" is commoner than "baby bed"
- Length of documents
  - The score should be normalized

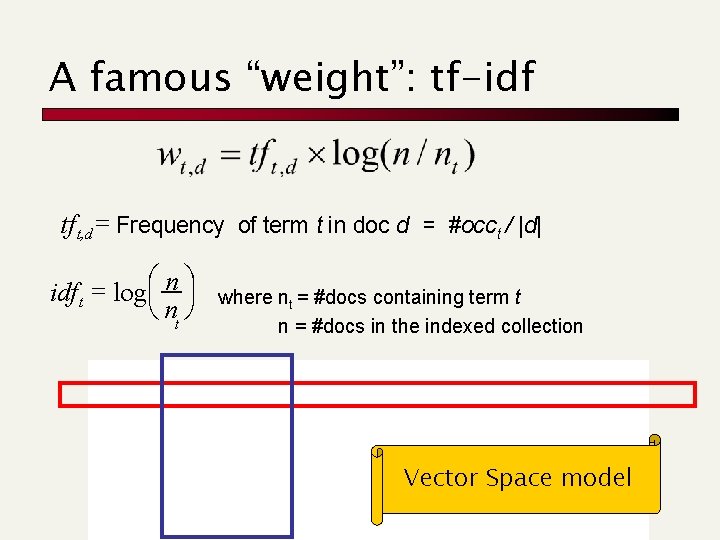

A famous “weight”: tf-idf
- $\mathrm{tf}_{t,d}$ = frequency of term t in doc d = $\#occ_t / |d|$
- $\mathrm{idf}_t = \log\left(\frac{n}{n_t}\right)$, where $n_t$ = #docs containing term t and $n$ = #docs in the indexed collection

Vector Space model
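The two definitions above can be sketched in a few lines. Assumptions: documents are already tokenized lists of terms, the weight of a term is the product tf × idf, and the logarithm is natural (the slide does not fix the base); `tf_idf` is a hypothetical helper name.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    tf  = occurrences of the term in the doc / doc length
    idf = log(n / n_t), with n = #docs and n_t = #docs containing the term
    (natural log is an assumption; any base only rescales the weights).
    """
    n = len(docs)
    # Document frequency: count each term once per document.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append(
            {t: (c / len(doc)) * math.log(n / df[t]) for t, c in counts.items()}
        )
    return weights


docs = [["baby", "bed", "of"], ["of", "the"], ["of", "baby"]]
for w in tf_idf(docs):
    print(w)
```

In this toy collection "of" occurs in every document, so its idf is log(1) = 0 and it contributes nothing to any score, which matches the "term scarcity" intuition.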

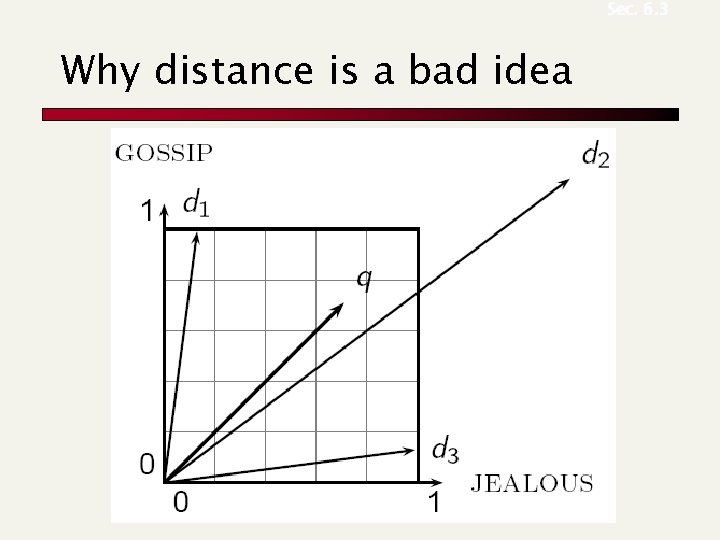

Sec. 6.3 Why distance is a bad idea

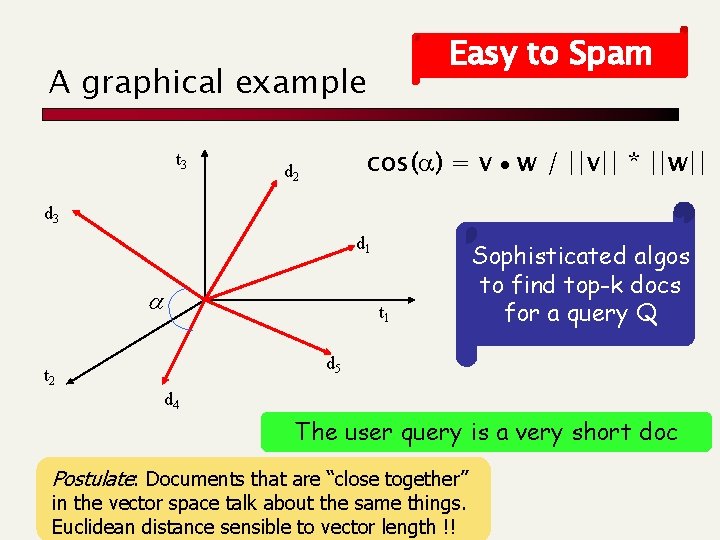

A graphical example

[Figure: documents d1 ... d5 drawn as vectors in the term space with axes t1, t2, t3; α is the angle between two vectors v and w]

$$\cos(\alpha) = \frac{v \cdot w}{\|v\| \, \|w\|}$$

- Postulate: documents that are “close together” in the vector space talk about the same things.
- Euclidean distance is sensitive to vector length!
- The user query is a very short doc.
- Sophisticated algorithms are needed to find the top-k docs for a query Q.
- Easy to spam.
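A small numeric check of the length-sensitivity remark. This sketch assumes a toy 3-term vector `d` and a second document obtained by appending `d` to itself, so every term count doubles; both documents talk about the same things, yet only the cosine says so.

```python
import math


def dot(v, w):
    return sum(a * b for a, b in zip(v, w))


def norm(v):
    return math.sqrt(dot(v, v))


def cosine(v, w):
    """cos(alpha) = v . w / (||v|| * ||w||)."""
    return dot(v, w) / (norm(v) * norm(w))


def euclidean(v, w):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(v, w)))


d = [3.0, 1.0, 0.0]            # hypothetical term-frequency vector
d_twice = [2 * x for x in d]   # the same text repeated twice

print("euclidean:", euclidean(d, d_twice))  # grows with the length difference
print("cosine:   ", cosine(d, d_twice))     # stays at (about) 1
```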

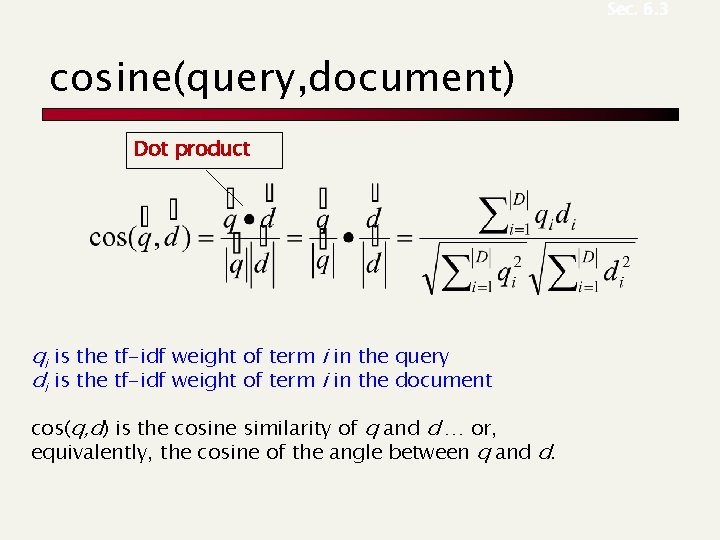

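Sec. 6.3 cosine(query, document)

$$\cos(q, d) = \frac{q \cdot d}{\|q\| \, \|d\|} = \frac{\sum_{i} q_i d_i}{\sqrt{\sum_{i} q_i^2} \, \sqrt{\sum_{i} d_i^2}}$$

- The numerator $q \cdot d$ is the dot product.
- $q_i$ is the tf-idf weight of term i in the query.
- $d_i$ is the tf-idf weight of term i in the document.
- $\cos(q, d)$ is the cosine similarity of q and d or, equivalently, the cosine of the angle between q and d.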

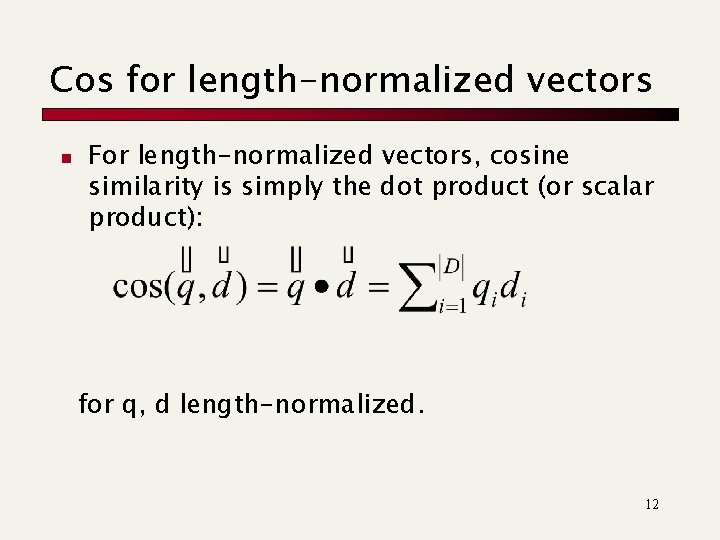

Cos for length-normalized vectors
- For length-normalized vectors, cosine similarity is simply the dot product (or scalar product): $\cos(q, d) = q \cdot d = \sum_{i} q_i d_i$, for q, d length-normalized.

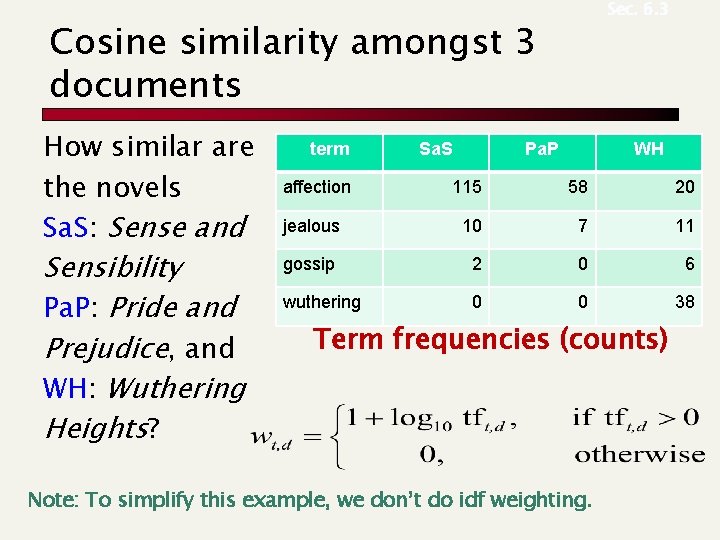

Sec. 6.3 Cosine similarity amongst 3 documents

How similar are the novels SaS: Sense and Sensibility, PaP: Pride and Prejudice, and WH: Wuthering Heights?

Term frequencies (counts):

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 115 | 58 | 20 |
| jealous | 10 | 7 | 11 |
| gossip | 2 | 0 | 6 |
| wuthering | 0 | 0 | 38 |

Note: To simplify this example, we don’t do idf weighting.

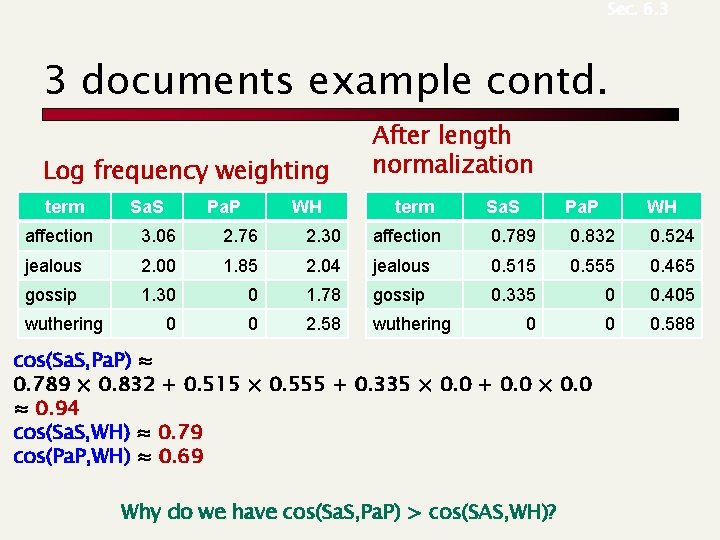

Sec. 6.3 3 documents example contd.

Log frequency weighting:

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 3.06 | 2.76 | 2.30 |
| jealous | 2.00 | 1.85 | 2.04 |
| gossip | 1.30 | 0 | 1.78 |
| wuthering | 0 | 0 | 2.58 |

After length normalization:

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 0.789 | 0.832 | 0.524 |
| jealous | 0.515 | 0.555 | 0.465 |
| gossip | 0.335 | 0 | 0.405 |
| wuthering | 0 | 0 | 0.588 |

- cos(SaS, PaP) ≈ 0.789 × 0.832 + 0.515 × 0.555 + 0.335 × 0.0 + 0.0 × 0.0 ≈ 0.94
- cos(SaS, WH) ≈ 0.79
- cos(PaP, WH) ≈ 0.69

Why do we have cos(SaS, PaP) > cos(SaS, WH)?
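The numbers in this example can be reproduced with a short script. It assumes the log frequency weight is 1 + log10(tf) for tf > 0 and 0 otherwise, which is consistent with the weighted values shown (for instance 1 + log10(115) ≈ 3.06); the variable names are illustrative.

```python
import math

# Raw term counts from the example.
counts = {
    "SaS": {"affection": 115, "jealous": 10, "gossip": 2, "wuthering": 0},
    "PaP": {"affection": 58, "jealous": 7, "gossip": 0, "wuthering": 0},
    "WH": {"affection": 20, "jealous": 11, "gossip": 6, "wuthering": 38},
}


def log_weight(tf):
    """Assumed weighting: 1 + log10(tf) if the term occurs, else 0."""
    return 1 + math.log10(tf) if tf > 0 else 0.0


def normalize(vec):
    """Divide every component by the Euclidean length of the vector."""
    length = math.sqrt(sum(w * w for w in vec.values()))
    return {t: w / length for t, w in vec.items()}


vectors = {
    name: normalize({t: log_weight(tf) for t, tf in tfs.items()})
    for name, tfs in counts.items()
}


def cos(a, b):
    """For length-normalized vectors the cosine is just the dot product."""
    return sum(vectors[a][t] * vectors[b][t] for t in vectors[a])


for a, b in [("SaS", "PaP"), ("SaS", "WH"), ("PaP", "WH")]:
    print(f"cos({a}, {b}) = {cos(a, b):.2f}")
```

The two Austen novels share their high-weight terms, while "wuthering" takes a large share of WH's length and lowers its similarity to both.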

Vector spaces and other operators
- Vector space OK for bag-of-words queries
  - Clean metaphor for similar-document queries
- Not a good combination with operators: Boolean, wild-card, positional, proximity
- First generation of search engines
  - Invented before “spamming” web search

Algorithms for IR: Top-k retrieval

Sec. 7.1.1 Speed-up top-k retrieval
- Computing the cosine is costly
- Find a set A of contenders, with K < |A| << N
  - A does not necessarily contain the top K, but has many docs from among the top K
  - Return the top K docs in A, according to the score
- The same approach is also used for other (non-cosine) scoring functions
- Will look at several schemes following this

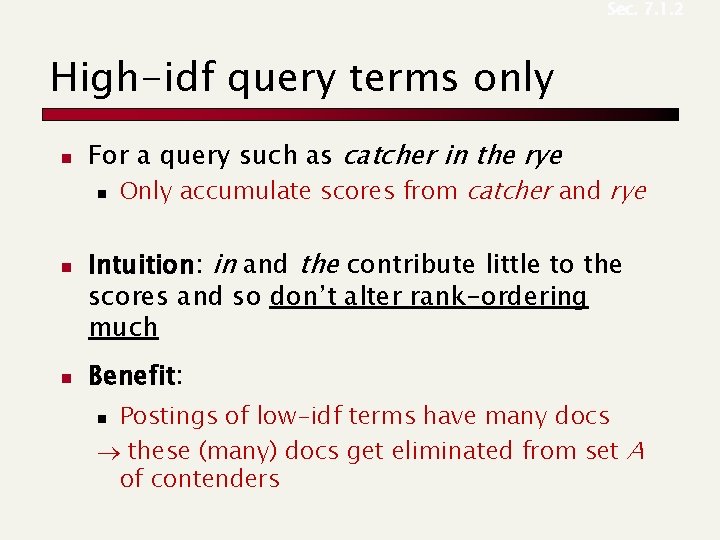

Sec. 7.1.2 Index elimination
- Consider docs containing at least one query term. Hence this means…
- Take this further:
  1. Only consider high-idf query terms
  2. Only consider docs containing many query terms

Sec. 7.1.2 High-idf query terms only
- For a query such as catcher in the rye
  - Only accumulate scores from catcher and rye
- Intuition: in and the contribute little to the scores and so don’t alter rank-ordering much
- Benefit:
  - Postings of low-idf terms have many docs, so these (many) docs get eliminated from set A of contenders

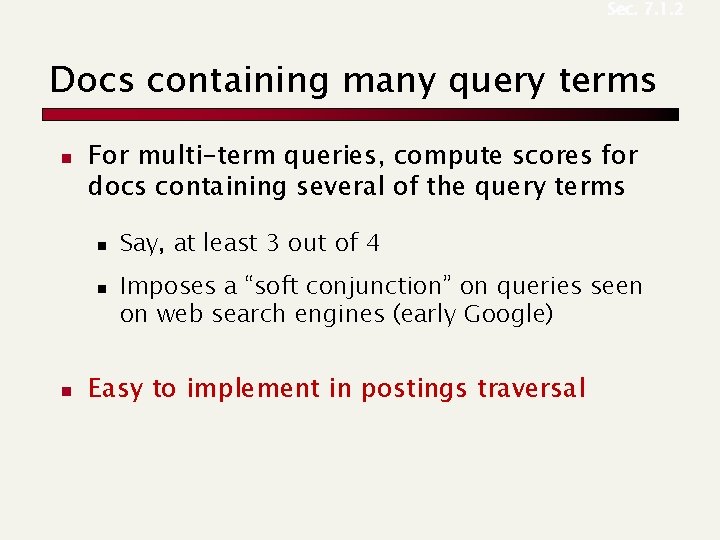

Sec. 7.1.2 Docs containing many query terms
- For multi-term queries, compute scores for docs containing several of the query terms
  - Say, at least 3 out of 4
  - Imposes a “soft conjunction” on queries seen on web search engines (early Google)
- Easy to implement in postings traversal

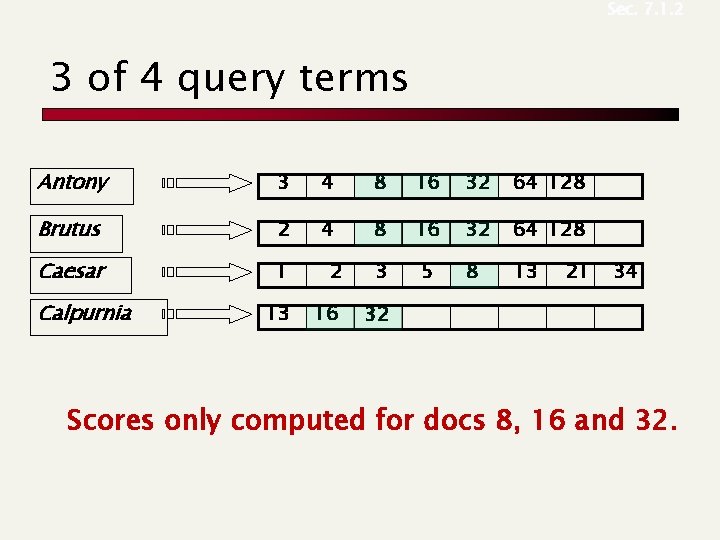

Sec. 7.1.2 3 of 4 query terms

| term | postings (docIDs) |
|---|---|
| Antony | 3, 4, 8, 16, 32, 64, 128 |
| Brutus | 2, 4, 8, 16, 32, 64, 128 |
| Caesar | 1, 2, 3, 5, 8, 13, 21, 34 |
| Calpurnia | 13, 16, 32 |

Scores only computed for docs 8, 16 and 32.
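The "3 of 4" filter can be sketched by counting, for each docID, how many query-term postings lists contain it. The postings below are the ones read from this example; `candidates` is a hypothetical helper, and a real engine would do the counting during a merged postings traversal rather than with a hash table.

```python
from collections import Counter

postings = {
    "antony": [3, 4, 8, 16, 32, 64, 128],
    "brutus": [2, 4, 8, 16, 32, 64, 128],
    "caesar": [1, 2, 3, 5, 8, 13, 21, 34],
    "calpurnia": [13, 16, 32],
}


def candidates(postings, query_terms, min_terms):
    """Return the docIDs that contain at least `min_terms` of the query terms."""
    hits = Counter()
    for term in query_terms:
        # set() guards against a docID listed twice for the same term.
        hits.update(set(postings.get(term, [])))
    return sorted(doc for doc, count in hits.items() if count >= min_terms)


query = ["antony", "brutus", "caesar", "calpurnia"]
print(candidates(postings, query, min_terms=3))  # [8, 16, 32]
```

Only these candidates go on to the (costly) cosine computation.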

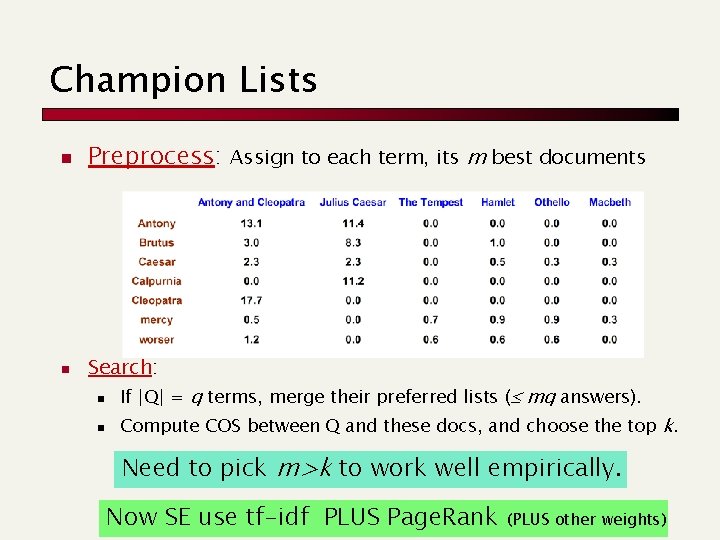

Champion Lists
- Preprocess: assign to each term its m best documents
- Search:
  - If |Q| = q terms, merge their preferred lists (≤ mq answers).
  - Compute COS between Q and these docs, and choose the top k.
- Need to pick m > k to work well empirically.

Now search engines use tf-idf PLUS PageRank (PLUS other weights)
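A minimal sketch of the two phases, under stated assumptions: `weights` maps each term to a `{doc_id: weight}` dictionary, "best" means highest weight for that term, and `score` is any callable that plays the role of the cosine between the query and a document. All names here are hypothetical.

```python
import heapq


def build_champion_lists(weights, m):
    """Preprocess: for each term keep only its m highest-weighted docs."""
    return {
        term: set(heapq.nlargest(m, docs, key=docs.get))
        for term, docs in weights.items()
    }


def champion_top_k(query_terms, champions, score, k):
    """Search: merge the champion lists (at most m*q docs), score, keep top k."""
    merged = set()
    for term in query_terms:
        merged |= champions.get(term, set())
    return heapq.nlargest(k, merged, key=score)


# Toy weights: term -> {doc_id: tf-idf weight}.
weights = {
    "rye": {1: 0.9, 2: 0.5, 3: 0.1},
    "catcher": {2: 0.8, 4: 0.7, 1: 0.2},
}
champions = build_champion_lists(weights, m=2)


def toy_score(doc):
    # Stand-in for cosine(Q, doc): sum of the doc's weights over the query terms.
    return sum(w.get(doc, 0.0) for w in weights.values())


print(champion_top_k(["catcher", "rye"], champions, toy_score, k=2))
```

Doc 3 never reaches the scoring step because it is outside every champion list, which is where the saving comes from.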

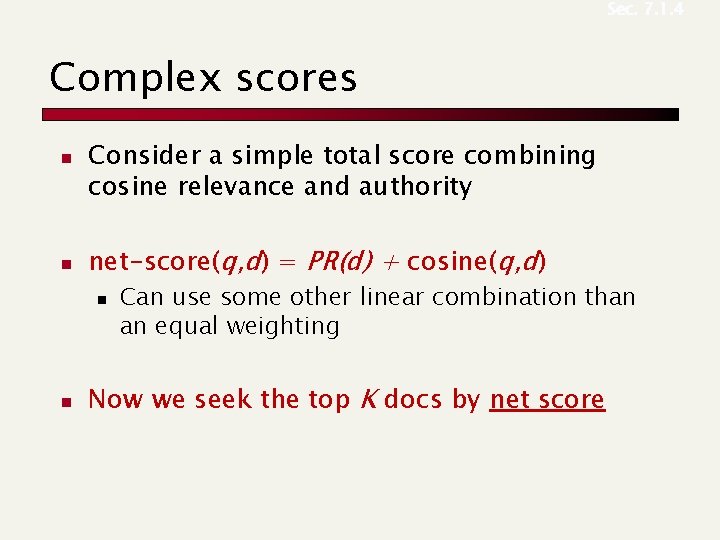

Sec. 7.1.4 Complex scores
- Consider a simple total score combining cosine relevance and authority
- net-score(q, d) = PR(d) + cosine(q, d)
  - Can use some other linear combination than an equal weighting
- Now we seek the top K docs by net score

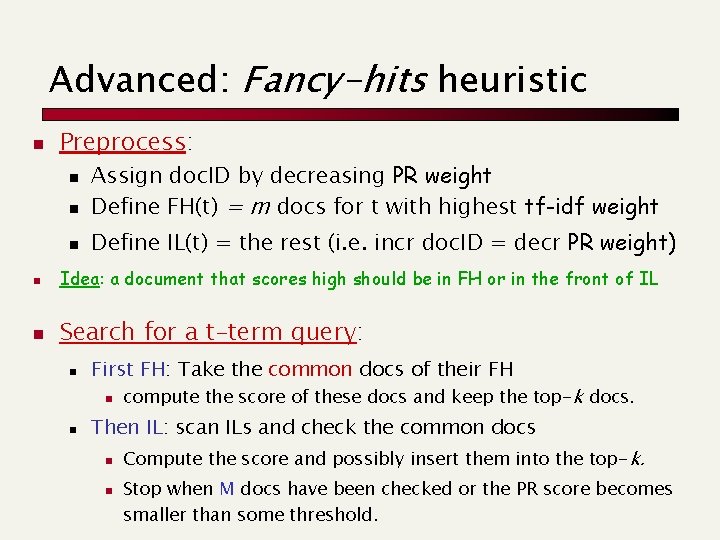

Advanced: Fancy-hits heuristic
- Preprocess:
  - Assign docID by decreasing PR weight
  - Define FH(t) = m docs for t with highest tf-idf weight
  - Define IL(t) = the rest (i.e. increasing docID = decreasing PR weight)
- Idea: a document that scores high should be in FH or in the front of IL
- Search for a t-term query:
  - First FH: take the common docs of their FH
    - Compute the score of these docs and keep the top-k docs.
  - Then IL: scan ILs and check the common docs
    - Compute the score and possibly insert them into the top-k.
    - Stop when M docs have been checked or the PR score becomes smaller than some threshold.

Algorithms for IR: Speed-up querying by clustering

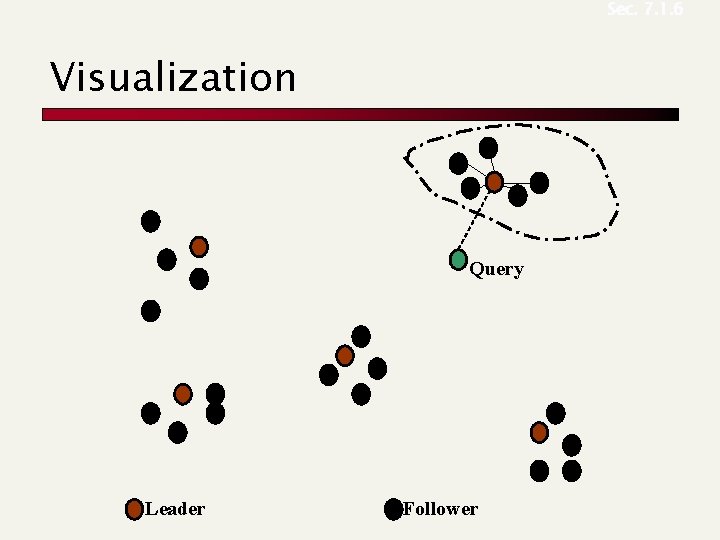

Sec. 7.1.6 Visualization

[Figure legend: Query, Leader, Follower]

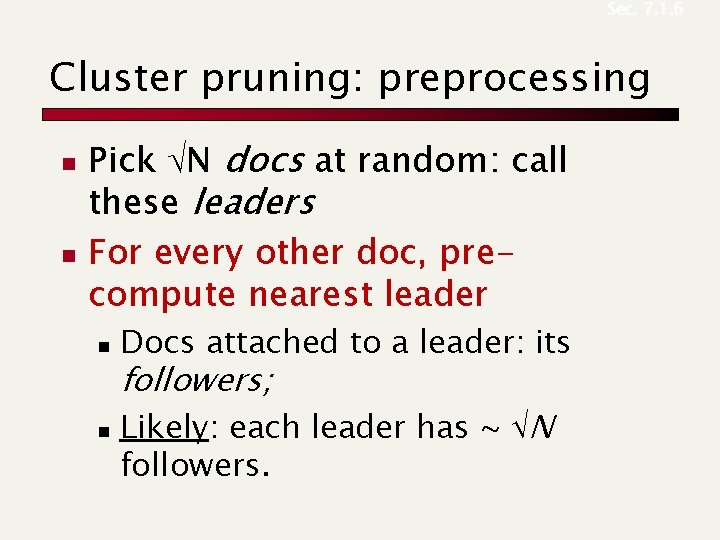

Sec. 7.1.6 Cluster pruning: preprocessing
- Pick √N docs at random: call these leaders
- For every other doc, precompute nearest leader
  - Docs attached to a leader: its followers
  - Likely: each leader has ~ √N followers.

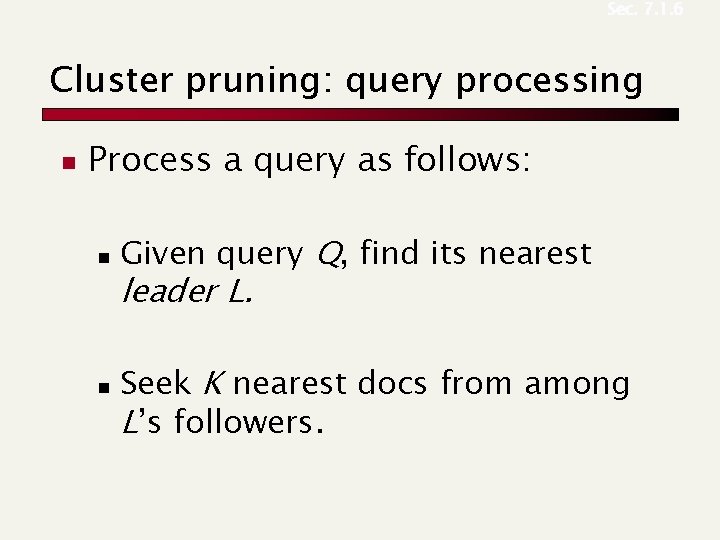

Sec. 7.1.6 Cluster pruning: query processing
- Process a query as follows:
  - Given query Q, find its nearest leader L.
  - Seek K nearest docs from among L’s followers.
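Preprocessing and query processing together fit in a short sketch. Assumptions: documents are dense, non-zero vectors, "nearest" means highest cosine, about √N leaders are sampled, and each leader is kept in its own cluster so it can also be returned; `build_clusters` and `prune_query` are hypothetical names.

```python
import heapq
import math
import random


def cosine(v, w):
    dot = sum(a * b for a, b in zip(v, w))
    return dot / (math.sqrt(sum(a * a for a in v)) * math.sqrt(sum(b * b for b in w)))


def build_clusters(docs, seed=0):
    """Pick about sqrt(N) random leaders and attach every other doc to its nearest one."""
    rng = random.Random(seed)
    n_leaders = max(1, round(math.sqrt(len(docs))))
    leaders = rng.sample(range(len(docs)), n_leaders)
    clusters = {leader: [leader] for leader in leaders}
    for i, doc in enumerate(docs):
        if i in clusters:  # leaders are already placed
            continue
        nearest = max(leaders, key=lambda l: cosine(doc, docs[l]))
        clusters[nearest].append(i)
    return clusters


def prune_query(q, docs, clusters, k):
    """Find the nearest leader, then the k nearest docs among its followers."""
    leader = max(clusters, key=lambda l: cosine(q, docs[l]))
    return heapq.nlargest(k, clusters[leader], key=lambda i: cosine(q, docs[i]))


docs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
clusters = build_clusters(docs, seed=1)
print(clusters)
print(prune_query([1.0, 0.05], docs, clusters, k=2))
```

The query is compared with about √N leaders and then about √N followers instead of all N docs; the price is that the true top K may sit in a different cluster.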

Sec. 7.1.6 Why use random sampling
- Fast
- Leaders reflect data distribution

Sec. 7.1.6 General variants
- Have each follower attached to b1 = 3 (say) nearest leaders.
- From query, find b2 = 4 (say) nearest leaders and their followers.
- Can recur on leader/follower construction.

Algorithms for IR: Relevance feedback

Sec. 9.1 Relevance Feedback
- Relevance feedback: user feedback on relevance of docs in initial set of results
  - User issues a (short, simple) query
  - The user marks some results as relevant or non-relevant.
  - The system computes a better representation of the information need based on feedback.
  - Relevance feedback can go through one or more iterations.

Sec. 9.1.1 Rocchio’s Algorithm
- The Rocchio algorithm uses the vector space model to pick a relevance feedback query
- Rocchio seeks the query $q_{opt}$ that maximizes

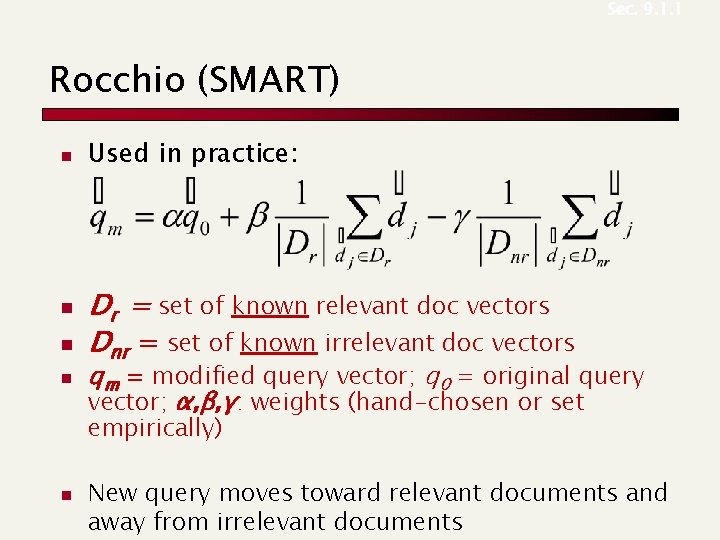

Sec. 9.1.1 Rocchio (SMART)
- Used in practice:

$$q_m = \alpha \, q_0 + \beta \, \frac{1}{|D_r|} \sum_{d_j \in D_r} d_j - \gamma \, \frac{1}{|D_{nr}|} \sum_{d_j \in D_{nr}} d_j$$

- $D_r$ = set of known relevant doc vectors
- $D_{nr}$ = set of known irrelevant doc vectors
- $q_m$ = modified query vector; $q_0$ = original query vector; α, β, γ: weights (hand-chosen or set empirically)
- New query moves toward relevant documents and away from irrelevant documents
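The modified query is a weighted sum of the original query, the centroid of the relevant docs, and (subtracted) the centroid of the irrelevant docs. The sketch below assumes dense vectors of equal length; the default weights and the clipping of negative components to 0 are common conventions chosen here for illustration, not values given on the slide.

```python
def rocchio(q0, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Return q_m = alpha*q0 + beta*centroid(relevant) - gamma*centroid(non_relevant).

    Default weights are illustrative assumptions; negative weights are
    clipped to 0, a common convention rather than part of the formula.
    """
    dims = len(q0)

    def centroid(vectors):
        if not vectors:
            return [0.0] * dims
        return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]

    c_rel = centroid(relevant)
    c_non = centroid(non_relevant)
    q_m = [alpha * q + beta * r - gamma * n for q, r, n in zip(q0, c_rel, c_non)]
    return [max(0.0, w) for w in q_m]


q0 = [1.0, 0.0, 0.0]
relevant = [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
non_relevant = [[0.0, 0.0, 1.0]]
print(rocchio(q0, relevant, non_relevant, alpha=1.0, beta=0.5, gamma=0.25))
# [1.0, 0.5, 0.0]
```

The second term gains weight because the relevant docs use it, and the third term is pushed below zero by the irrelevant doc and then clipped.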

Relevance Feedback: Problems
- Users are often reluctant to provide explicit feedback
- It’s often harder to understand why a particular document was retrieved after applying relevance feedback
- There is no clear evidence that relevance feedback is the “best use” of the user’s time.

Sec. 9.1.4 Relevance Feedback on the Web
- Some search engines offer a similar/related pages feature (this is a trivial form of relevance feedback)
  - Google (link-based)
  - Altavista
  - Stanford WebBase
- Some don’t because it’s hard to explain to users:
  - Alltheweb
  - bing
  - Yahoo
- Excite initially had true relevance feedback, but abandoned it due to lack of use.
- α/β/γ ??

Sec. 9.1.6 Pseudo relevance feedback
- Pseudo-relevance feedback automates the “manual” part of true relevance feedback.
  - Retrieve a list of hits for the user’s query
  - Assume that the top k are relevant.
  - Do relevance feedback (e.g., Rocchio)
- Works very well on average
- But can go horribly wrong for some queries.
- Several iterations can cause query drift.

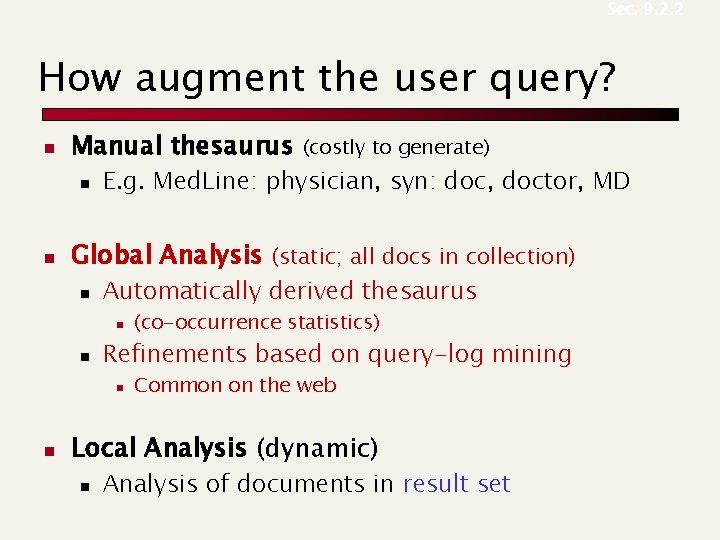

Sec. 9.2.2 Query Expansion
- In relevance feedback, users give additional input (relevant/non-relevant) on documents, which is used to reweight terms in the documents
- In query expansion, users give additional input (good/bad search term) on words or phrases

Sec. 9.2.2 How to augment the user query?
- Manual thesaurus (costly to generate)
  - E.g. MedLine: physician, syn: doc, doctor, MD
- Global Analysis (static; all docs in collection)
  - Automatically derived thesaurus (co-occurrence statistics)
  - Refinements based on query-log mining (common on the web)
- Local Analysis (dynamic)
  - Analysis of documents in result set

Query assist

Would you expect such a feature to increase the query volume at a search engine?

Algorithms for IR: Zone indexes

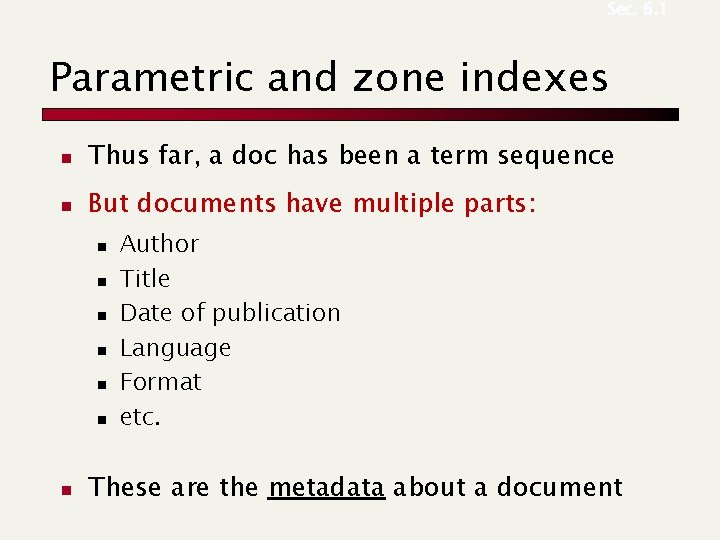

Sec. 6.1 Parametric and zone indexes
- Thus far, a doc has been a term sequence
- But documents have multiple parts:
  - Author
  - Title
  - Date of publication
  - Language
  - Format
  - etc.
- These are the metadata about a document

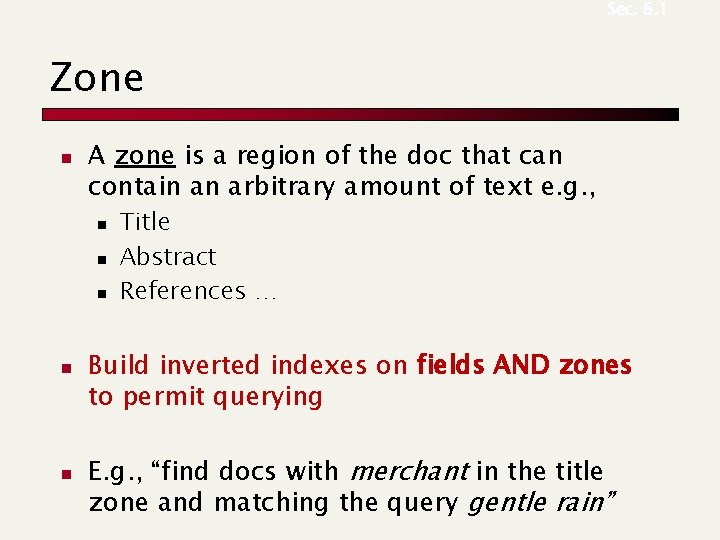

Sec. 6.1 Zone
- A zone is a region of the doc that can contain an arbitrary amount of text, e.g.,
  - Title
  - Abstract
  - References …
- Build inverted indexes on fields AND zones to permit querying
- E.g., “find docs with merchant in the title zone and matching the query gentle rain”

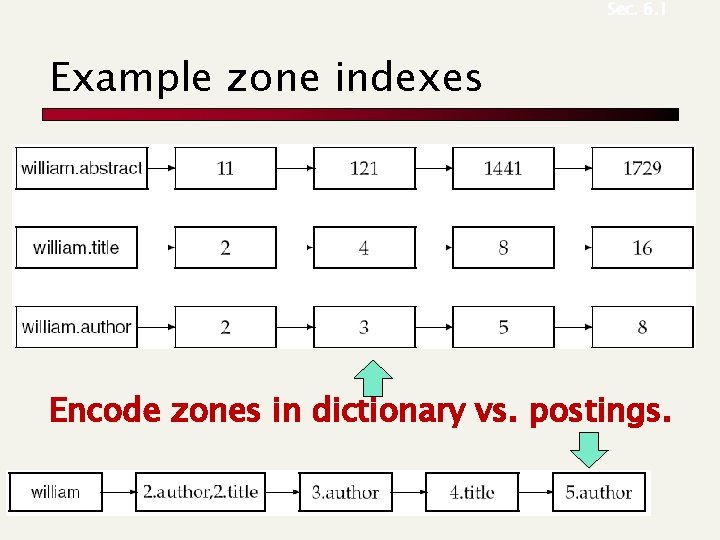

Sec. 6.1 Example zone indexes

Encode zones in dictionary vs. postings.

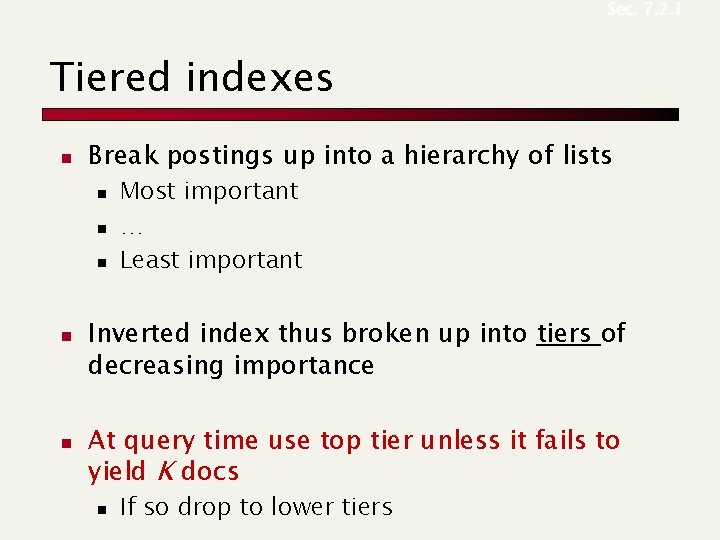

Sec. 7. 2. 1 Tiered indexes n Break postings up into a hierarchy of lists n n n Most important … Least important Inverted index thus broken up into tiers of decreasing importance At query time use top tier unless it fails to yield K docs n If so drop to lower tiers

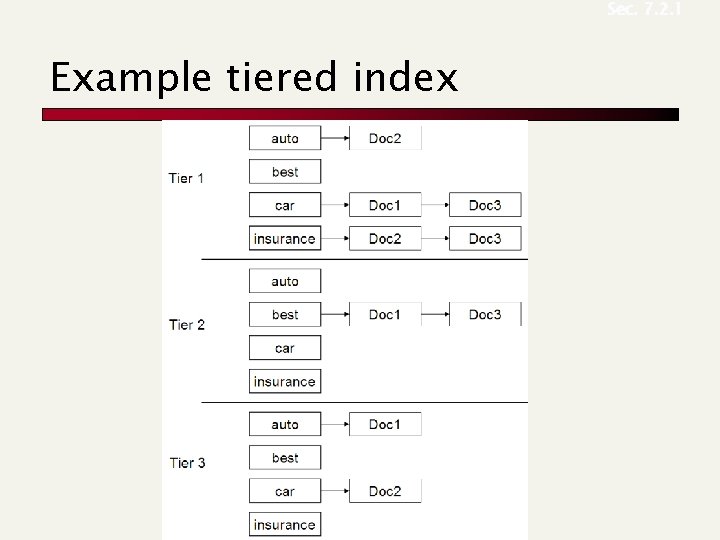

Sec. 7. 2. 1 Example tiered index

Sec. 7. 2. 2 Query term proximity n n n Free text queries: just a set of terms typed into the query box – common on the web Users prefer docs in which query terms occur within close proximity of each other Would like scoring function to take this into account – how?

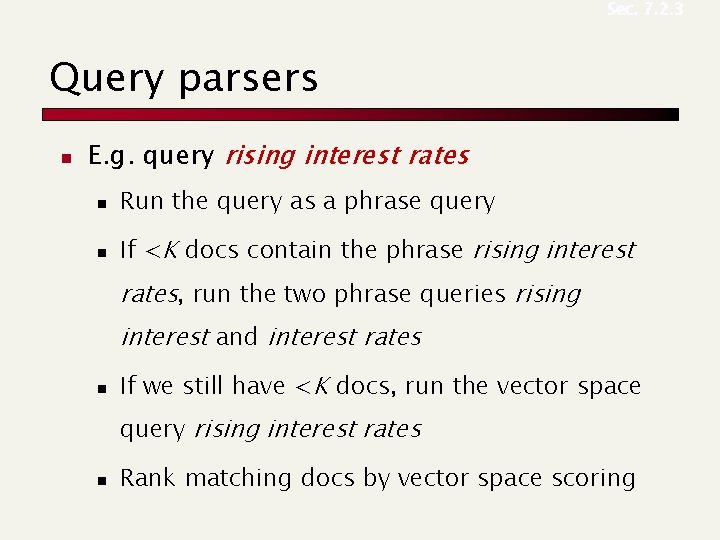

Sec. 7.2.3 Query parsers
- E.g. query rising interest rates
  - Run the query as a phrase query
  - If <K docs contain the phrase rising interest rates, run the two phrase queries rising interest and interest rates
  - If we still have <K docs, run the vector space query rising interest rates
  - Rank matching docs by vector space scoring

Algorithms for IR: Quality of a Search Engine
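The cascade above can be sketched as a function that falls back to looser queries only while fewer than K docs match. Everything here is an assumption for illustration: `phrase_search(terms)` returns the set of docIDs containing the exact phrase, `vector_scores(terms)` returns a `{doc_id: score}` dictionary for docs matching the free-text query, and queries longer than three terms are split into consecutive two-word phrases.

```python
def parse_and_run(terms, k, phrase_search, vector_scores):
    """Run phrase query -> two-word phrase queries -> vector space query."""
    # Step 1: the whole query as one phrase.
    matches = set(phrase_search(terms))
    # Step 2: fewer than k docs, so try every consecutive two-word phrase.
    if len(matches) < k:
        for i in range(len(terms) - 1):
            matches |= set(phrase_search(terms[i:i + 2]))
    # Step 3: still fewer than k docs, so fall back to the vector space query.
    scores = vector_scores(terms)
    if len(matches) < k:
        matches |= set(scores)
    # Final ranking of all matching docs by vector space score.
    return sorted(matches, key=lambda d: scores.get(d, 0.0), reverse=True)[:k]


# Toy stand-ins for a real index (hypothetical data).
phrase_hits = {
    ("rising", "interest", "rates"): {1},
    ("rising", "interest"): {1, 2},
    ("interest", "rates"): {1, 3},
}
toy_scores = {1: 0.9, 2: 0.4, 3: 0.6, 4: 0.2}


def toy_phrase_search(terms):
    return phrase_hits.get(tuple(terms), set())


def toy_vector_scores(terms):
    return toy_scores


query = ["rising", "interest", "rates"]
print(parse_and_run(query, 3, toy_phrase_search, toy_vector_scores))  # [1, 3, 2]
```

With K = 3 the full phrase yields one doc, the two shorter phrases bring the total to three, and the vector space query is used only for ranking.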

Is it good?
- How fast does it index
  - Number of documents/hour
  - (Average document size)
- How fast does it search
  - Latency as a function of index size
- Expressiveness of the query language

Measures for a search engine
- All of the preceding criteria are measurable
- The key measure: user happiness
  - …useless answers won’t make a user happy

Happiness: elusive to measure
- Commonest approach is given by the relevance of search results
  - How do we measure it?
- Requires 3 elements:
  1. A benchmark document collection
  2. A benchmark suite of queries
  3. A binary assessment of either Relevant or Irrelevant for each query-doc pair

Evaluating an IR system
- Standard benchmarks
  - TREC: the National Institute of Standards and Technology (NIST) has run a large IR testbed for many years
  - Other doc collections: marked by human experts, for each query and for each doc, Relevant or Irrelevant
- On the Web everything is more complicated, since we cannot mark the entire corpus!

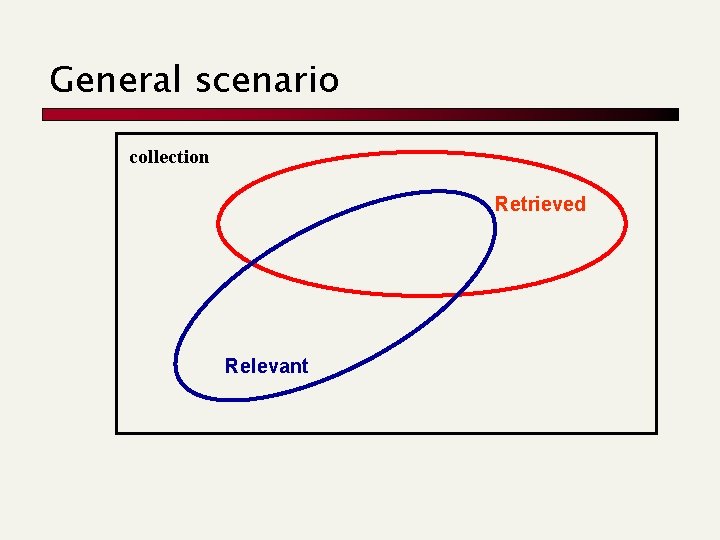

General scenario

[Figure: the collection, containing the overlapping Retrieved and Relevant sets]

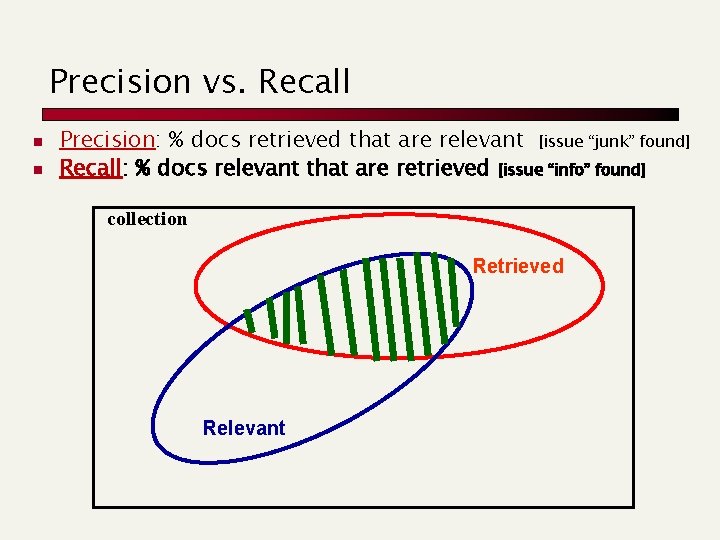

Precision vs. Recall
- Precision: % of retrieved docs that are relevant [issue: “junk” found]
- Recall: % of relevant docs that are retrieved [issue: “info” found]

[Figure: the collection, containing the overlapping Retrieved and Relevant sets]

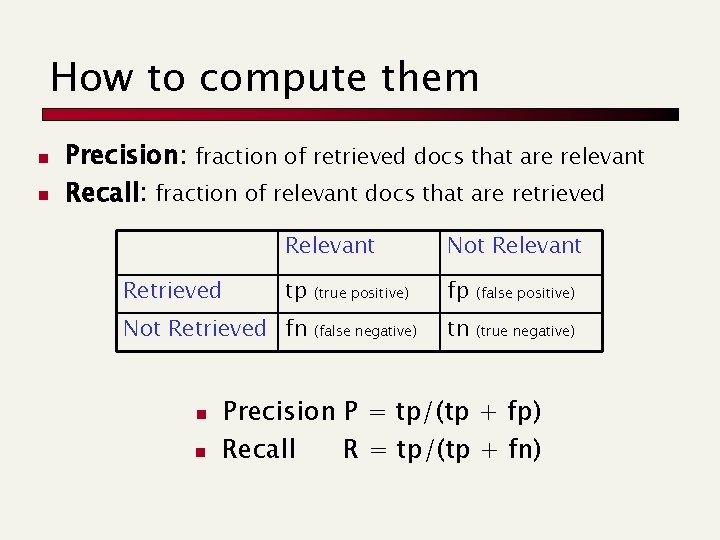

How to compute them
- Precision: fraction of retrieved docs that are relevant
- Recall: fraction of relevant docs that are retrieved

| | Relevant | Not Relevant |
|---|---|---|
| Retrieved | tp (true positive) | fp (false positive) |
| Not Retrieved | fn (false negative) | tn (true negative) |

- Precision P = tp/(tp + fp)
- Recall R = tp/(tp + fn)

Some considerations
- Can get high recall (but low precision) by retrieving all docs for all queries!
- Recall is a non-decreasing function of the number of docs retrieved
- Precision usually decreases as more docs are retrieved
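Both measures follow directly from the contingency table. A minimal sketch, assuming the retrieved results and the relevance judgments are given as collections of docIDs; returning 0.0 when a denominator is empty is a convention chosen here, not something the slide specifies.

```python
def precision_recall(retrieved, relevant):
    """Return (P, R) with P = tp/(tp + fp) and R = tp/(tp + fn)."""
    retrieved, relevant = set(retrieved), set(relevant)
    tp = len(retrieved & relevant)   # retrieved and relevant
    fp = len(retrieved - relevant)   # retrieved but not relevant ("junk")
    fn = len(relevant - retrieved)   # relevant but missed
    precision = tp / (tp + fp) if retrieved else 0.0
    recall = tp / (tp + fn) if relevant else 0.0
    return precision, recall


print(precision_recall(retrieved=[1, 2, 3, 4], relevant=[2, 4, 5, 6]))  # (0.5, 0.5)
```

Retrieving the whole collection makes fn = 0 and so R = 1, at the cost of a large fp and a low P.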

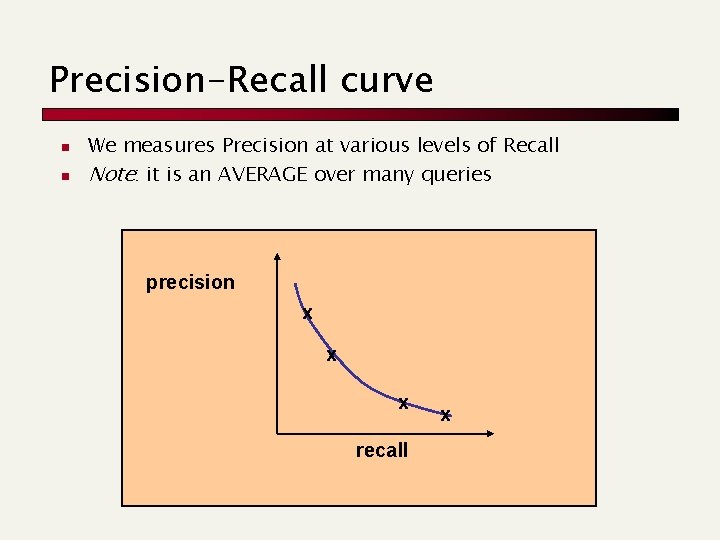

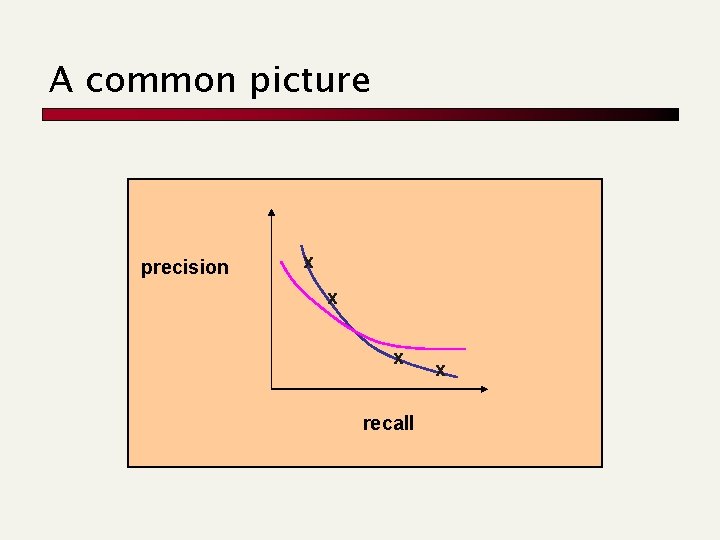

Precision-Recall curve
- We measure Precision at various levels of Recall
- Note: it is an AVERAGE over many queries

[Figure: precision (y-axis) plotted against recall (x-axis), one point per recall level]

A common picture

[Figure: precision (y-axis) plotted against recall (x-axis)]

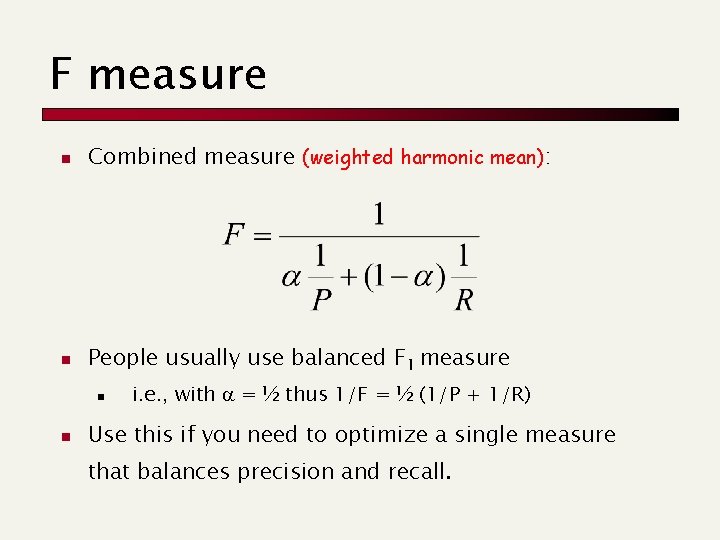

F measure
- Combined measure (weighted harmonic mean): $F = \dfrac{1}{\alpha \frac{1}{P} + (1 - \alpha) \frac{1}{R}}$
- People usually use the balanced F1 measure
  - i.e., with α = ½, thus 1/F = ½ (1/P + 1/R)
- Use this if you need to optimize a single measure that balances precision and recall.

Information Retrieval: Recommendation Systems
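A small sketch of the weighted harmonic mean, with the weight named `alpha` as an assumption about the symbol used on the slide; `alpha = 0.5` gives the balanced F1, for which 1/F = ½ (1/P + 1/R). Returning 0.0 when either input is 0 is a convention chosen here.

```python
def f_measure(precision, recall, alpha=0.5):
    """Weighted harmonic mean: F = 1 / (alpha/P + (1 - alpha)/R)."""
    if precision == 0 or recall == 0:
        return 0.0  # convention: the harmonic mean collapses to 0
    return 1.0 / (alpha / precision + (1 - alpha) / recall)


print(f_measure(0.5, 0.5))   # balanced F1 of equal P and R is that same value
print(f_measure(1.0, 0.5))   # equals 2PR / (P + R)
print(f_measure(1.0, 0.01))  # one very low value drags F down
```

Unlike the arithmetic mean, the harmonic mean stays low unless both precision and recall are high, so a system cannot score well by retrieving everything.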

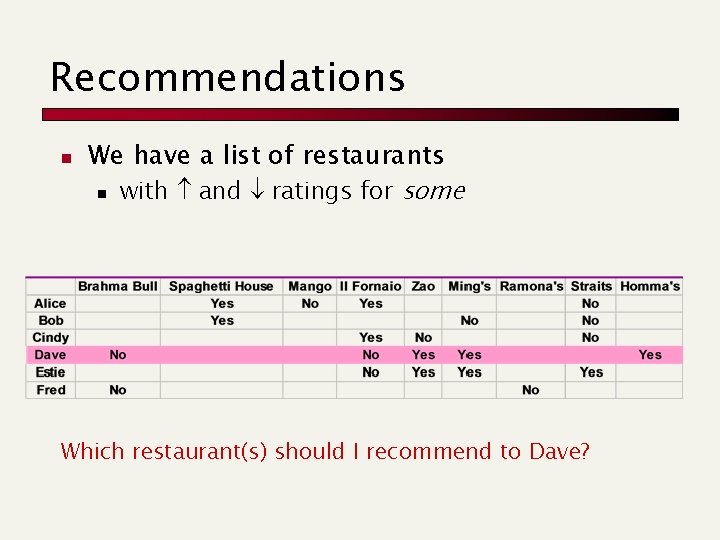

Recommendations
- We have a list of restaurants
  - with positive and negative ratings for some
- Which restaurant(s) should I recommend to Dave?

Basic Algorithm
- Recommend the most popular restaurants
  - say # positive votes minus # negative votes
- What if Dave does not like Spaghetti?

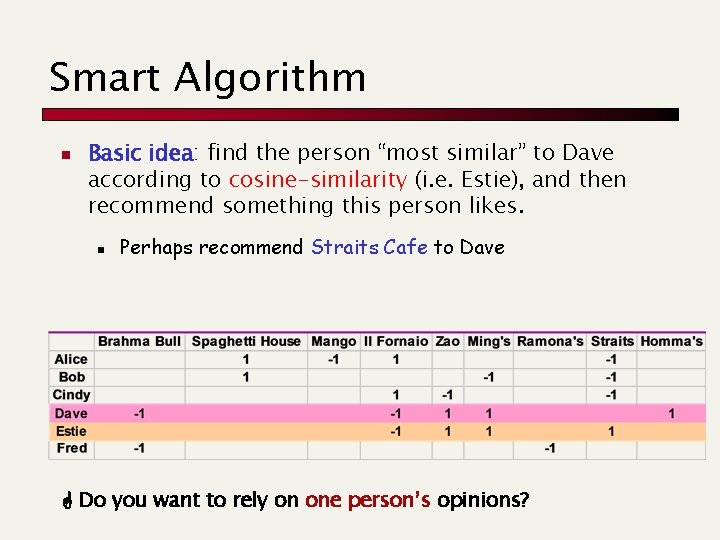

Smart Algorithm
- Basic idea: find the person “most similar” to Dave according to cosine-similarity (i.e. Estie), and then recommend something this person likes.
  - Perhaps recommend Straits Cafe to Dave
- Do you want to rely on one person’s opinions?