Algorithms Lecture 21

Dealing with NP-hardness
• Some possibilities
  – Try to solve the problem as efficiently as possible (even if not in polynomial time)
  – Try to solve special cases of the problem, or a relaxed version of the problem, in polynomial time
  – Find approximate solutions in polynomial time
• Finding approximate (rather than exact) solutions can also improve performance even when dealing with problems in P

Load balancing

Load balancing
• Given $n$ jobs with running times $t_1, \dots, t_n$, and $m$ machines
• Want an assignment $A(1), \dots, A(m)$ of jobs to machines that minimizes the makespan
  – I.e., the time for the last machine to finish, where machine $i$ finishes in time $T_i = \sum_{j \in A(i)} t_j$
• This is an NP-hard problem

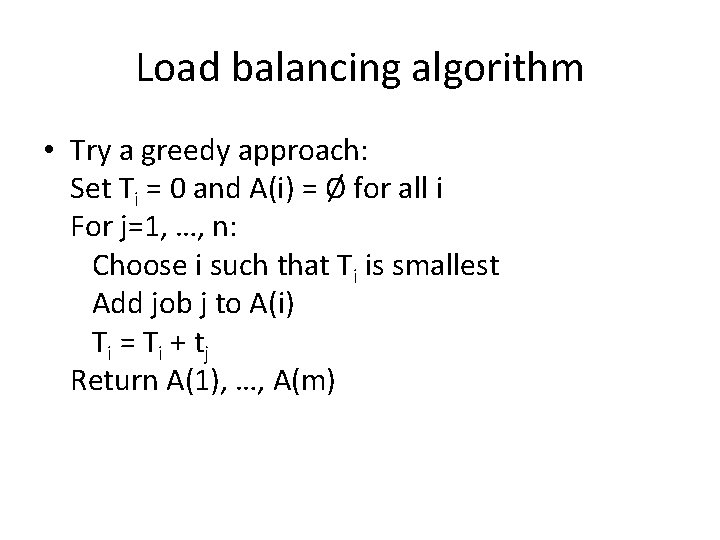

Load balancing algorithm
• Try a greedy approach:

    Set T_i = 0 and A(i) = Ø for all i
    For j = 1, …, n:
        Choose i such that T_i is smallest
        Add job j to A(i)
        T_i = T_i + t_j
    Return A(1), …, A(m)
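
The greedy pseudocode above can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the function name `greedy_load_balance`, the use of a heap to find the least-loaded machine, and the sample job times are all assumptions made for this example.

```python
import heapq

def greedy_load_balance(times, m):
    """Assign each job, in the given order, to the currently least-loaded machine."""
    # Heap of (load, machine index); the smallest load is always on top.
    heap = [(0, i) for i in range(m)]
    assignment = [[] for _ in range(m)]
    for j, t in enumerate(times):
        load, i = heapq.heappop(heap)        # machine i has the smallest load T_i
        assignment[i].append(j)              # add job j to A(i)
        heapq.heappush(heap, (load + t, i))  # T_i = T_i + t_j
    makespan = max(load for load, _ in heap)
    return assignment, makespan

# Hypothetical instance: four jobs (0-indexed) on two machines.
assignment, makespan = greedy_load_balance([2, 3, 4, 6], m=2)
print(assignment, makespan)
```

On this instance the greedy schedule has makespan 9, while splitting the jobs as {6, 2} and {3, 4} achieves 8. The heap makes each step take O(log m) time instead of scanning all machines.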

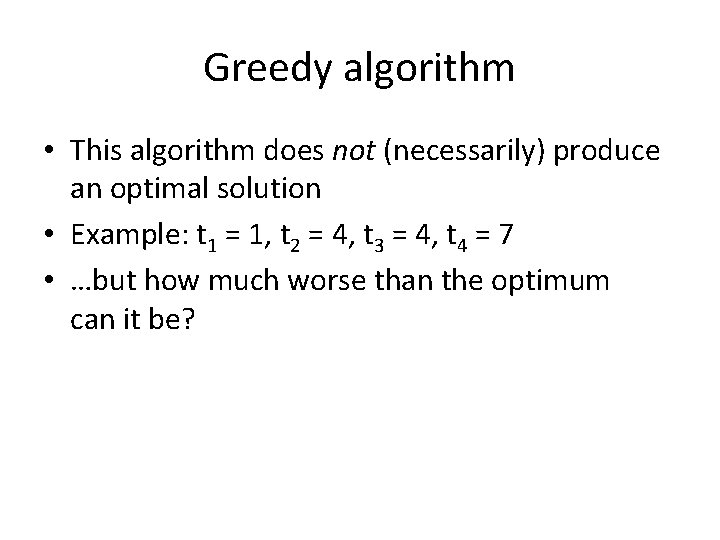

Greedy algorithm
• This algorithm does not (necessarily) produce an optimal solution
• Example: $t_1 = 1$, $t_2 = 4$, $t_3 = 4$, $t_4 = 7$
• …but how much worse than the optimum can it be?

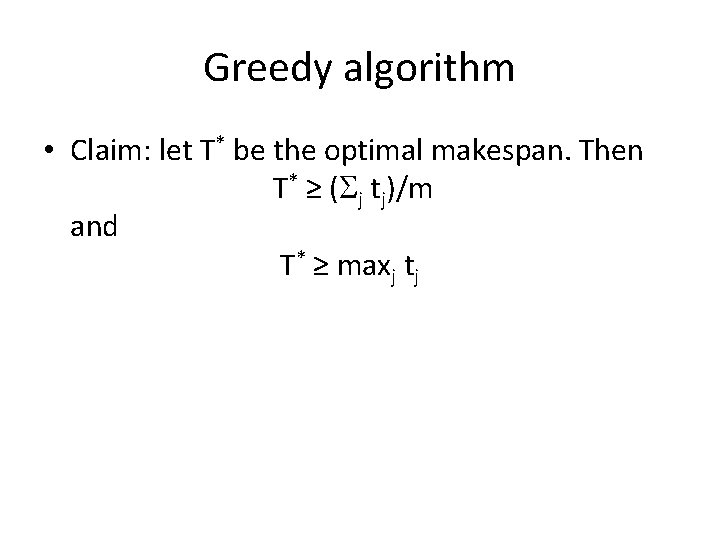

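Greedy algorithm
• Claim: let $T^*$ be the optimal makespan. Then $T^* \ge \frac{1}{m}\sum_j t_j$ and $T^* \ge \max_j t_j$
  – The total work $\sum_j t_j$ is split among $m$ machines, so some machine gets at least the average; and some machine must run the longest job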

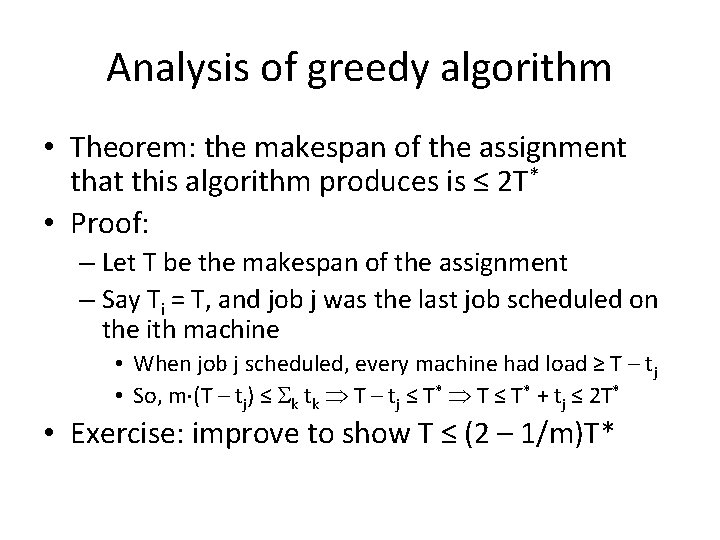

Analysis of greedy algorithm
• Theorem: the makespan of the assignment that this algorithm produces is $\le 2T^*$
• Proof:
  – Let $T$ be the makespan of the assignment
  – Say $T_i = T$, and job $j$ was the last job scheduled on the $i$th machine
    • When job $j$ was scheduled, every machine had load $\ge T - t_j$
    • So $m(T - t_j) \le \sum_k t_k$, hence $T - t_j \le \frac{1}{m}\sum_k t_k \le T^*$, and therefore $T \le T^* + t_j \le 2T^*$ (using $t_j \le \max_k t_k \le T^*$)
• Exercise: improve to show $T \le (2 - 1/m)\,T^*$

Side note
• In fact, this tells you how to find an example showing that the bound is (almost) tight
  – Make the job with the highest running time be scheduled last, when the loads on all machines are equal
  – E.g., $m$ machines; $m(m-1)$ jobs taking time 1 and one job taking time $m$
    • Optimal makespan $T^* = m$
    • Makespan from algorithm $= 2m - 1 = (2 - 1/m)\,T^*$

Improving the algorithm
• The analysis also suggests a way to improve the algorithm:
  – Sort jobs from largest to smallest before assigning them as before
• Let $t_1 \ge \dots \ge t_n$ be the jobs in sorted order (we may assume $n > m$ [why?])
• Claim: $T^* \ge 2t_j$ for any $j > m$
• Proof: the first $j$ jobs each take time $\ge t_j$, and since $j > m$ some machine must get two of them

Analysis
• Theorem: the makespan of the assignment that this algorithm produces is $\le 1.5\,T^*$
• Proof:
  – Say machine $i$ has the maximum load $T$
  – If it has one job, then the schedule is optimal
  – Otherwise, say job $j > m$ was the last job assigned to machine $i$
  – Since $t_j \le T^*/2$, modifying the proof from before gives the claimed result: $T \le T^* + t_j \le 1.5\,T^*$
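
To see the effect of sorting, the sketch below runs the greedy rule with and without sorting on the job times 1, 4, 4, 7 from the earlier example. The function names are hypothetical, and the machine count `m = 2` is an assumption, since the slide text does not state it.

```python
import heapq

def greedy_makespan(times, m):
    """Makespan when jobs are assigned in the given order to the least-loaded machine."""
    loads = [0] * m
    heapq.heapify(loads)
    for t in times:
        # Replace the smallest load with that load plus the new job's time.
        heapq.heapreplace(loads, loads[0] + t)
    return max(loads)

def sorted_greedy_makespan(times, m):
    """Same greedy rule, but jobs are first sorted from largest to smallest."""
    return greedy_makespan(sorted(times, reverse=True), m)

times = [1, 4, 4, 7]   # job times from the earlier example
m = 2                  # assumption: machine count is not given in the text
unsorted_result = greedy_makespan(times, m)
sorted_result = sorted_greedy_makespan(times, m)
print(unsorted_result, sorted_result)
```

Under this assumption the unsorted order gives makespan 11 and the sorted order gives 8, which matches the lower bound $\frac{1}{m}\sum_j t_j = 8$ and is therefore optimal for this instance.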

Center selection

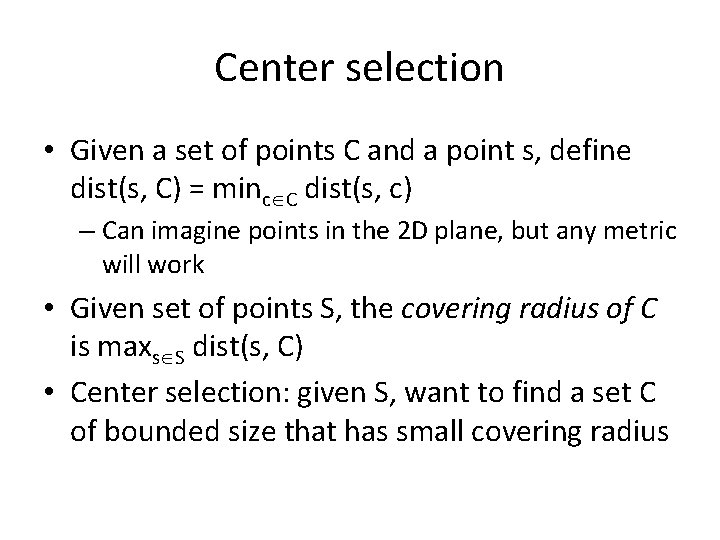

Center selection
• Given a set of points $C$ and a point $s$, define $\mathrm{dist}(s, C) = \min_{c \in C} \mathrm{dist}(s, c)$
  – Can imagine points in the 2D plane, but any metric will work
• Given a set of points $S$, the covering radius of $C$ is $\max_{s \in S} \mathrm{dist}(s, C)$
• Center selection: given $S$, want to find a set $C$ of bounded size that has small covering radius

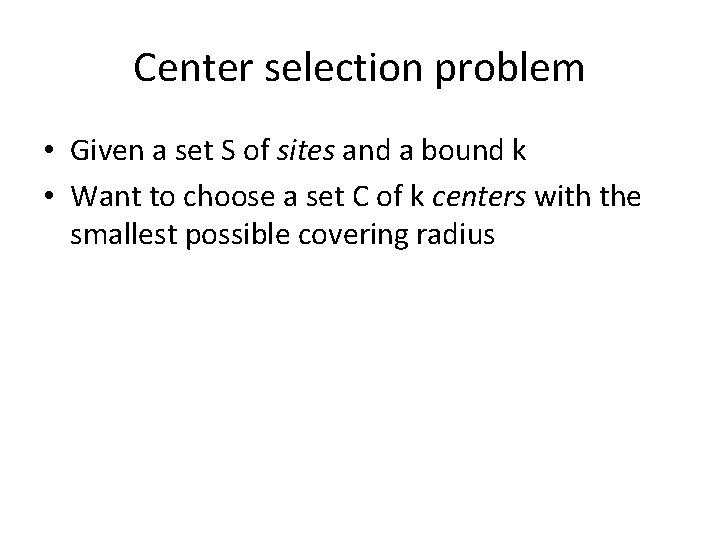

Center selection problem
• Given a set $S$ of sites and a bound $k$
• Want to choose a set $C$ of $k$ centers with the smallest possible covering radius

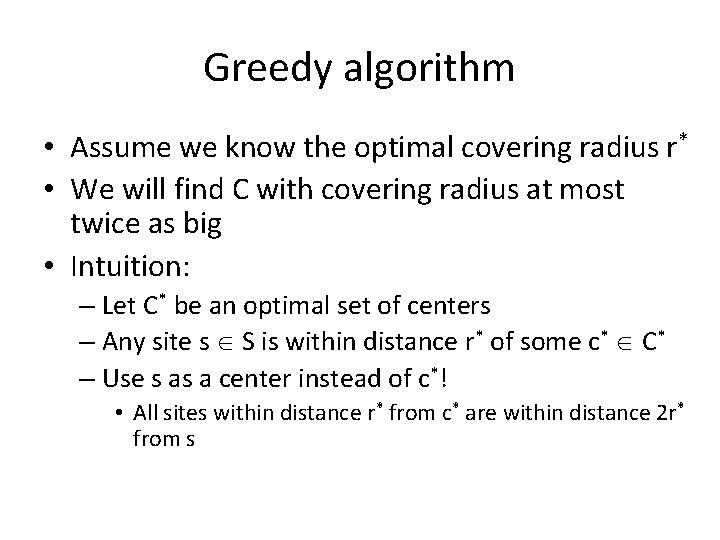

Greedy algorithm
• Assume we know the optimal covering radius $r^*$
• We will find $C$ with covering radius at most twice as big
• Intuition:
  – Let $C^*$ be an optimal set of centers
  – Any site $s \in S$ is within distance $r^*$ of some $c^* \in C^*$
  – Use $s$ as a center instead of $c^*$!
    • All sites within distance $r^*$ from $c^*$ are within distance $2r^*$ from $s$ (by the triangle inequality)

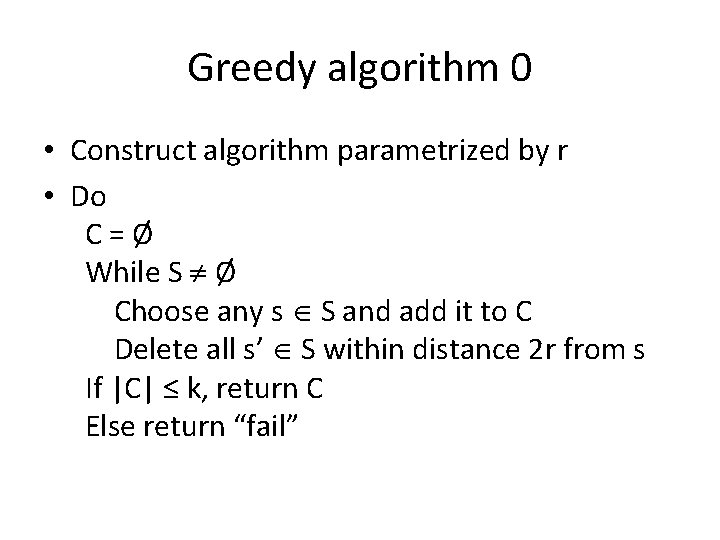

Greedy algorithm 0
• Construct algorithm parametrized by $r$
• Do:

    C = Ø
    While S ≠ Ø:
        Choose any s ∈ S and add it to C
        Delete all s' ∈ S within distance 2r from s
    If |C| ≤ k, return C
    Else return "fail"
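
A minimal Python sketch of Algorithm 0, assuming sites are points in the plane with Euclidean distance (any metric would do). The function name `greedy_centers_r`, the use of `None` for "fail", and the sample sites are assumptions made for this illustration.

```python
import math

def greedy_centers_r(sites, k, r):
    """Algorithm 0: returns a list of centers, or None for "fail"."""
    remaining = list(sites)
    centers = []
    while remaining:
        s = remaining[0]   # "choose any s": here, simply the first site left
        centers.append(s)
        # Delete every site within distance 2r of s (this includes s itself).
        remaining = [p for p in remaining if math.dist(p, s) > 2 * r]
    return centers if len(centers) <= k else None

# Hypothetical sites: two tight clusters on a line.
sites = [(0, 0), (1, 0), (10, 0), (11, 0)]
ok = greedy_centers_r(sites, k=2, r=0.5)       # succeeds with two centers
failed = greedy_centers_r(sites, k=2, r=0.25)  # needs four centers, so "fail"
print(ok, failed)
```

With `r = 0.5` every site ends up within distance `2r = 1` of a chosen center. With `r = 0.25` the algorithm fails, consistent with the fact that no two centers cover these sites within radius 0.25.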

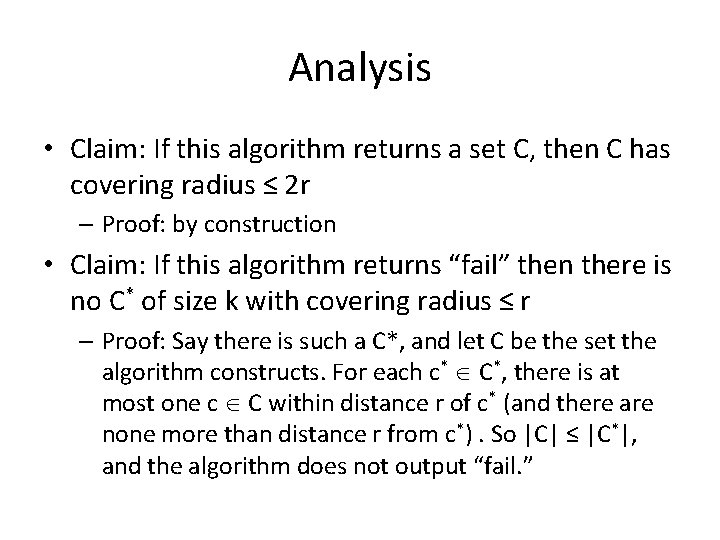

Analysis
• Claim: If this algorithm returns a set $C$, then $C$ has covering radius $\le 2r$
  – Proof: by construction
• Claim: If this algorithm returns "fail" then there is no $C^*$ of size $k$ with covering radius $\le r$
  – Proof: Say there is such a $C^*$, and let $C$ be the set the algorithm constructs. Any two points of $C$ are more than $2r$ apart, so for each $c^* \in C^*$ there is at most one $c \in C$ within distance $r$ of $c^*$; and every $c \in C$ is a site, so it is within distance $r$ of some $c^* \in C^*$. So $|C| \le |C^*|$, and the algorithm does not output "fail."

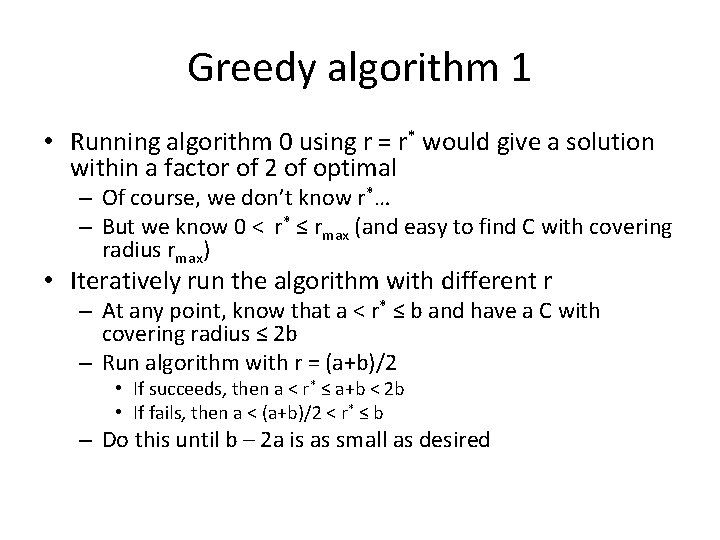

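Greedy algorithm 1
• Running algorithm 0 using $r = r^*$ would give a solution within a factor of 2 of optimal
  – Of course, we don't know $r^*$…
  – But we know $0 < r^* \le r_{\max}$ (and it is easy to find $C$ with covering radius $r_{\max}$)
• Iteratively run the algorithm with different $r$
  – At any point, know that $r^* > a$ and have a $C$ with covering radius $\le 2b$ (initially $a = 0$, $b = r_{\max}$)
  – Run algorithm with $r = (a+b)/2$
    • If it succeeds, the new $C$ has covering radius $\le 2r = a + b < 2b$, so $a < r^* \le a + b$; continue with $b = (a+b)/2$
    • If it fails, then $r^* > (a+b)/2 > a$; continue with $a = (a+b)/2$
  – Do this until $b - a$ is as small as desired; the final $C$ has covering radius $\le 2b$, which is then close to $2a < 2r^*$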
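
The bisection on $r$ described above might be sketched as follows. It keeps a lower bound `a` on $r^*$ and a solution whose covering radius is at most `2 * b`. Several details are assumptions of this sketch rather than part of the slides: Euclidean points, the stopping rule `b - a <= eps`, the choice of $r_{\max}$ as the largest distance from the first site, and all function names.

```python
import math

def algorithm0(sites, k, r):
    """Greedy algorithm 0; returns centers, or None on "fail"."""
    remaining, centers = list(sites), []
    while remaining:
        s = remaining[0]
        centers.append(s)
        remaining = [p for p in remaining if math.dist(p, s) > 2 * r]
    return centers if len(centers) <= k else None

def search_centers(sites, k, eps):
    """Bisect on r, keeping r* > a and a solution of covering radius <= 2b."""
    a = 0.0
    b = max(math.dist(p, sites[0]) for p in sites)  # r_max: sites[0] alone covers all
    best = [sites[0]]
    while b - a > eps:
        r = (a + b) / 2
        result = algorithm0(sites, k, r)
        if result is None:
            a = r                  # fail: r* > r
        else:
            best, b = result, r    # success: covering radius <= 2r
    return best, 2 * b             # centers and an upper bound on their covering radius

sites = [(0, 0), (1, 0), (10, 0), (11, 0)]   # hypothetical sites
centers, bound = search_centers(sites, k=2, eps=0.01)
print(centers, bound)
```

The sketch assumes `k >= 1` and a non-empty list of sites. The returned `bound` is only an upper bound; the true covering radius of `centers` may be smaller.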

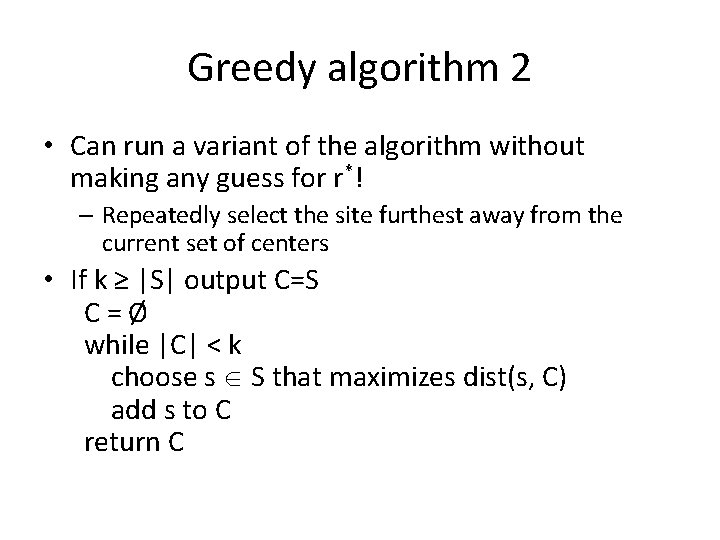

Greedy algorithm 2
• Can run a variant of the algorithm without making any guess for $r^*$!
  – Repeatedly select the site furthest away from the current set of centers

    If k ≥ |S|, output C = S
    C = Ø
    While |C| < k:
        Choose s ∈ S that maximizes dist(s, C)   (any s when C = Ø)
        Add s to C
    Return C
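
A possible Python rendering of this farthest-first rule is sketched below. It assumes Euclidean points, `k >= 1`, and that the first center may be chosen arbitrarily (here, the first site); the function name and sample sites are hypothetical.

```python
import math

def farthest_first_centers(sites, k):
    """Greedy algorithm 2: repeatedly add the site farthest from the current centers."""
    sites = list(sites)
    if k >= len(sites):
        return sites
    centers = [sites[0]]   # dist(s, Ø) is undefined, so start from an arbitrary site
    # d[i] = distance from sites[i] to its nearest chosen center
    d = [math.dist(p, centers[0]) for p in sites]
    while len(centers) < k:
        i = max(range(len(sites)), key=lambda idx: d[idx])
        centers.append(sites[i])
        # Update nearest-center distances to account for the new center.
        d = [min(d[j], math.dist(sites[j], sites[i])) for j in range(len(sites))]
    return centers

sites = [(0, 0), (1, 0), (10, 0), (11, 0)]   # hypothetical sites
centers = farthest_first_centers(sites, k=2)
print(centers)
```

Maintaining the array `d` avoids recomputing the distance to every center in each round, so the whole run takes O(k·|S|) distance evaluations.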

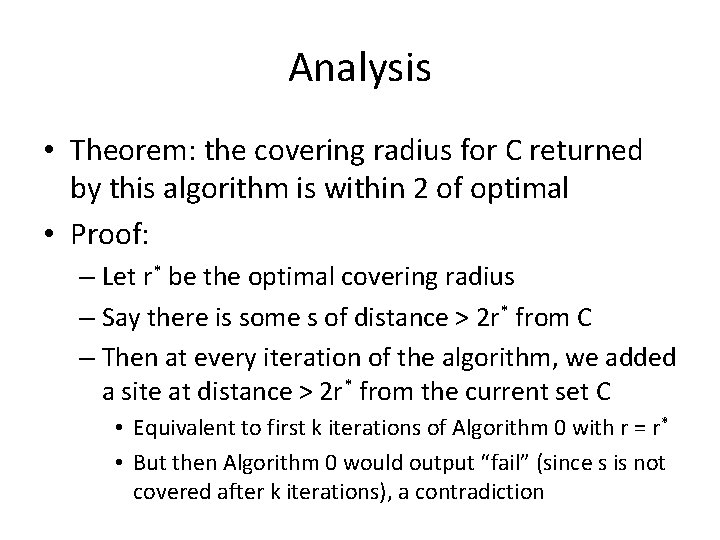

Analysis
• Theorem: the covering radius for $C$ returned by this algorithm is within a factor of 2 of optimal
• Proof:
  – Let $r^*$ be the optimal covering radius
  – Say there is some $s$ at distance $> 2r^*$ from $C$
  – Then at every iteration of the algorithm, we added a site at distance $> 2r^*$ from the current set $C$
    • Equivalent to the first $k$ iterations of Algorithm 0 with $r = r^*$
    • But then Algorithm 0 would output "fail" (since $s$ is not covered after $k$ iterations), a contradiction, because Algorithm 0 cannot fail when $r = r^*$

Vertex cover

Vertex cover
• For a graph $G$, let $\mathrm{vc}(G)$ be the size of the smallest vertex cover
• Recall: a set of edges $F$ is a matching if no vertex is incident to two edges in $F$
• Claim: if $F$ is a matching in $G$, then $|F| \le \mathrm{vc}(G)$
  – Proof: the vertex cover must cover every edge of $F$, and since no two edges of $F$ share a vertex, that requires $|F|$ vertices

Algorithm for vertex cover
• This gives a simple approximation algorithm for the vertex cover problem:
  – Find a maximal matching $F$
    • Repeatedly select edges while ensuring a matching
  – For every edge in $F$, add both vertices to a cover $C$
• $C$ is a vertex cover, since $F$ is maximal
• $C$ is a 2-approximation since $|C| = 2|F| \le 2\,\mathrm{vc}(G)$
• A more complicated version of this algorithm works for weighted vertex cover
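
The matching-based algorithm above fits in a few lines of Python. This is a sketch under the assumption that the graph is given as a list of edges without self-loops; the function name and the sample path graph are made up for illustration.

```python
def matching_vertex_cover(edges):
    """Build a maximal matching greedily and return (cover, matching)."""
    cover = set()
    matching = []
    for u, v in edges:
        # The edge can join the matching only if neither endpoint is already matched.
        if u not in cover and v not in cover:
            matching.append((u, v))
            cover.update((u, v))
    return cover, matching

# Hypothetical path a-b-c-d: the optimum cover {b, c} has size 2.
edges = [("a", "b"), ("b", "c"), ("c", "d")]
cover, matching = matching_vertex_cover(edges)
print(sorted(cover), matching)
```

On this path the matching is {a-b, c-d} and the cover contains all four vertices, exactly twice the optimum, so the factor of 2 can actually be reached.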

Reductions

Reductions
• NP-complete problems can be reduced to each other
  – If you can solve one exactly in poly-time, you can solve any exactly in poly-time
• This sometimes carries over to approximation algorithms, but sometimes does not

Reductions
• As a positive example, consider the reduction $f$ from 3SAT to independent set
• An assignment satisfying $k$ clauses of a formula $\varphi$ corresponds to an independent set of size $k$ in $f(\varphi)$
  – So any algorithm giving a $c$-approximation for 3SAT gives a $c$-approximation for independent set, and vice versa

Reductions
• As a negative example, consider the reduction from vertex cover to independent set
  – Vertex cover $C$ $\Leftrightarrow$ independent set $V \setminus C$
• Fix a graph $G$ with $\mathrm{vc}(G) = |V|/2$
  – Previous algorithm might return vertex cover $V$
    • This is within a factor of 2 of optimal
  – But $\mathrm{ind}(G) = |V|/2$ (the size of the largest independent set), while using our algorithm + the above reduction gives the empty set
    • Horrible approximation!
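
The negative example can be checked on a concrete graph. The sketch below uses a graph of `n` disjoint edges, which is one illustrative choice of a graph with $\mathrm{vc}(G) = \mathrm{ind}(G) = |V|/2$ and is not taken from the slides; the function name is hypothetical.

```python
def matching_cover(edges):
    """Vertex cover made of both endpoints of a greedily built maximal matching."""
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:
            cover.update((u, v))
    return cover

# Graph of n disjoint edges: vc(G) = ind(G) = |V|/2 = n.
n = 5
edges = [(2 * i, 2 * i + 1) for i in range(n)]
vertices = {v for e in edges for v in e}

cover = matching_cover(edges)      # every vertex ends up in the cover
independent = vertices - cover     # complement of the cover
print(len(cover), len(independent))
```

Here the cover has size `2n`, within a factor of 2 of the optimum `n`, but its complement is empty even though an independent set of size `n` exists.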
