Algorithmic Bias and Fairness

Automated Decision Making: Pros
- Handles large volumes of data (Google search, airline reservations, online markets, ...)
- Avoids certain kinds of human bias, e.g.:
  - Parole judges being more lenient after a meal
  - Making hiring decisions based on the applicant's name
  - Subjectivity in evaluations of papers, music, teaching, etc.
- Human judgment in New York City's stop-and-frisk policy:
  - About 4.4 million stops were made between 2004 and 2012
  - 88% of them led to no further action
  - 83% of the people stopped were Black or Hispanic, although these groups make up only about half of the population

Complex and Opaque Decisions
- Hard to understand and make sense of
- Values, biases, and potential discrimination are built in
- The code is opaque and often a trade secret
- Examples: Facebook's newsfeed algorithm, recidivism algorithms, genetic testing

Gatekeeping Function
- Algorithms decide what gets attention, what is published, and what is censored
- Google's search results for geopolitical queries may depend on the user's location, e.g., different maps of Pakistan or India
- Learning algorithms that make hiring decisions:
  - Pattern: a short commute predicts low turnover
  - Policy: don't hire from distant places with poor public transportation
  - Impact: people from poor, distant neighborhoods may not be hired
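
The hiring pattern above can be sketched in a few lines: a rule that never looks at neighborhood, income, or race can still filter applicants very differently depending on where they live. The applicant data, the 40-minute cutoff, and the names `hire_rule` and `selection_rates` are hypothetical, chosen only to illustrate the effect.

```python
# Hypothetical applicants: (neighborhood, commute in minutes). Illustrative only.
applicants = [
    ("downtown", 15), ("downtown", 20), ("downtown", 25), ("downtown", 30),
    ("outer", 35), ("outer", 50), ("outer", 60), ("outer", 75),
]

MAX_COMMUTE = 40  # hypothetical cutoff derived from "short commute -> low turnover"

def hire_rule(commute_minutes: int) -> bool:
    """Policy derived from the pattern: reject applicants with long commutes."""
    return commute_minutes <= MAX_COMMUTE

def selection_rates(applicants):
    """Fraction of applicants from each neighborhood who pass the rule."""
    totals, passed = {}, {}
    for hood, commute in applicants:
        totals[hood] = totals.get(hood, 0) + 1
        passed[hood] = passed.get(hood, 0) + int(hire_rule(commute))
    return {hood: passed[hood] / totals[hood] for hood in totals}

print(selection_rates(applicants))
```

The rule only uses commute time, yet every downtown applicant passes while three of the four outer-neighborhood applicants are rejected: commute time acts as a proxy for where, and therefore often how, people live.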

Subjective Decision Making
- Algorithms that understand and translate language, drive cars, pilot planes, and diagnose diseases
  - There is often no single right answer; these tasks involve judgment and values
- Detecting and removing terrorist content on social networks
  - The definitions of key terms such as "terrorist" and "extreme content" are controversial
  - The scale makes manual intervention difficult
  - Algorithmic decisions may not be as good as human ones

Machine Learning
- Programs might use protected attributes such as race and gender to make predictions
- Even if the protected attributes are not used, programs may rely on "proxy" attributes that have the same effect, e.g., zip code
- Recommendations based on earlier actions might create filter bubbles, e.g., when detecting trends on Twitter
- Example: predictive policing
  - Predicts the neighborhoods most likely to be involved in future crime based on crime statistics
  - Rational, but may be indistinguishable from racial profiling
  - More police in a neighborhood lead to more arrests there
  - This can create a positive feedback loop and become a self-fulfilling prophecy
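
The predictive-policing feedback loop can be illustrated with a deliberately simplified sketch (not a model of any real system): two neighborhoods have identical true crime rates, patrols go wherever past records show the most arrests, and arrests are only recorded where police are present. All numbers and the function name `simulate` are hypothetical.

```python
# Toy predictive-policing feedback loop (hypothetical numbers).
# Both neighborhoods have the SAME true crime rate; they differ only in
# how many arrests were recorded before the model was deployed.
TRUE_INCIDENTS_PER_DAY = 10  # identical in both neighborhoods
DETECTION_RATE = 0.5         # fraction of incidents recorded where police patrol

def simulate(recorded, days):
    """Each day, send the patrol to the neighborhood with the most recorded
    arrests; only incidents in the patrolled neighborhood get recorded."""
    recorded = dict(recorded)  # don't mutate the caller's data
    for _ in range(days):
        target = max(recorded, key=recorded.get)  # the "predicted hot spot"
        recorded[target] += TRUE_INCIDENTS_PER_DAY * DETECTION_RATE
    return recorded

print(simulate({"A": 12, "B": 10}, days=30))
```

A small initial difference in the records becomes the only signal the model ever sees: neighborhood A accumulates all new arrests, B's (equal) crime is never recorded, and the data appear to confirm the original prediction.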

Data Privacy
- Who owns your browser data?
- Can your insurance company get access to your grocery list or peek into your fridge?
- Can hospitals get access to consumer data to predict who is going to get sick?
- Can your employer access your grades?

Transparency and Notification
- If the algorithm is opaque, there is no understanding of or trust in the program, e.g., in medical or hiring decisions
  - Google's search algorithm was judged not demonstrably anticompetitive in the US
  - The European Commission has successfully pursued an antitrust investigation
- There are many points of trust: the algorithm, its input, its training data, its control surfaces, the assumptions and models it uses, etc.
- Complete transparency makes the algorithm vulnerable to hacking, and it does not guarantee scrutiny
- Consumers might demand the right to be notified when their information is used, or demand that their personal information be excluded

Algorithmic Accountability
- How do search engines censor violent or sexual search terms?
- What influences Facebook's newsfeed or Google's advertisements?
- We need causal explanations that link our digital experience with the data it is based on

Government Regulation
- The destabilizing effect of high-speed trading systems led to demands for transparency of these algorithms and for the ability to modify them
- Should search algorithms be forced to follow "search neutrality" rules?
- Such rules would require public officials to have access to the program and to modify it in the public interest
- There is no single right answer to the queries Google handles, which makes regulation difficult

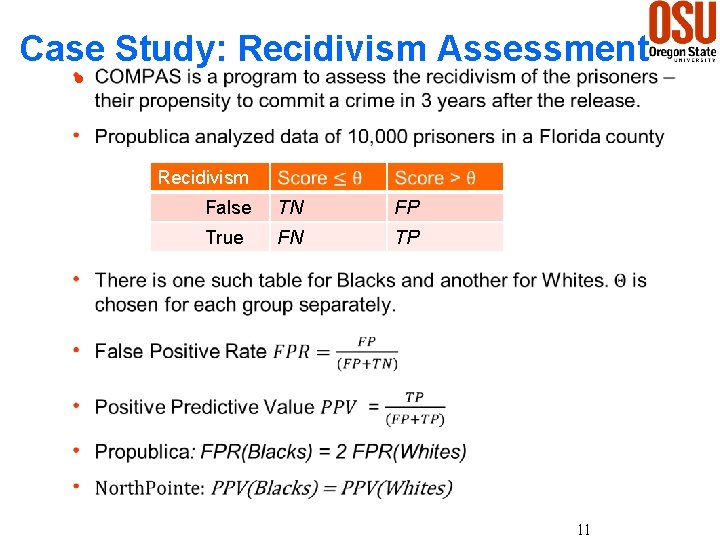

Case Study: Recidivism Assessment

| Recidivism | Prediction: Negative | Prediction: Positive |
|---|---|---|
| False | TN | FP |
| True | FN | TP |
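
The four cells of the confusion matrix (true/false negatives and positives) can be counted directly from lists of outcomes and predictions. The sketch below also computes the three rates used in the fairness discussion: false positive rate (FPR), false negative rate (FNR), and positive predictive value (PPV). The data and the function names `confusion_counts` and `rates` are hypothetical.

```python
def confusion_counts(actual, predicted):
    """Return (TN, FP, FN, TP) for boolean labels:
    actual = did the person reoffend; predicted = was the prediction positive."""
    tn = fp = fn = tp = 0
    for a, p in zip(actual, predicted):
        if not a and not p:
            tn += 1
        elif not a and p:
            fp += 1
        elif a and not p:
            fn += 1
        else:
            tp += 1
    return tn, fp, fn, tp

def rates(tn, fp, fn, tp):
    """Rates derived from the confusion matrix."""
    return {
        "FPR": fp / (fp + tn),  # non-reoffenders wrongly predicted positive
        "FNR": fn / (fn + tp),  # reoffenders wrongly predicted negative
        "PPV": tp / (tp + fp),  # positive predictions that were correct
    }

# Hypothetical outcomes and predictions for eight defendants.
actual    = [False, False, False, True, True,  True, False, True]
predicted = [False, True,  False, True, False, True, False, True]
counts = confusion_counts(actual, predicted)
print(counts, rates(*counts))
```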

Conflicting Demands on Fairness

(Figure: White vs. Black defendants; legend: Recidivism = True, Prediction = Positive.)

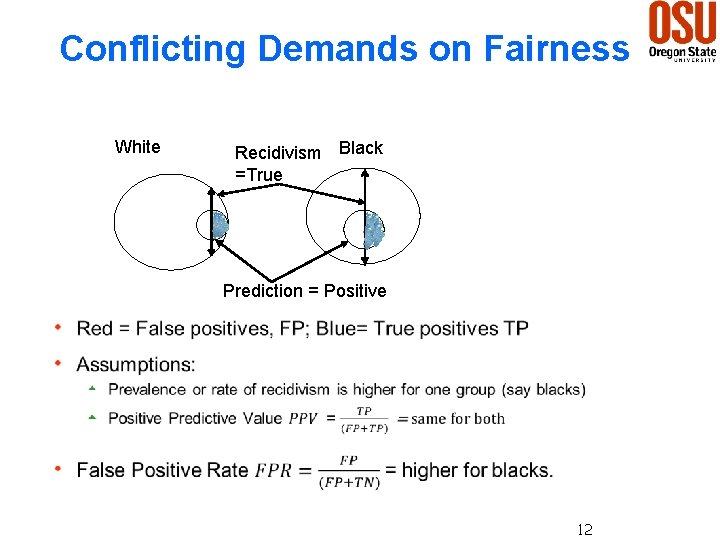

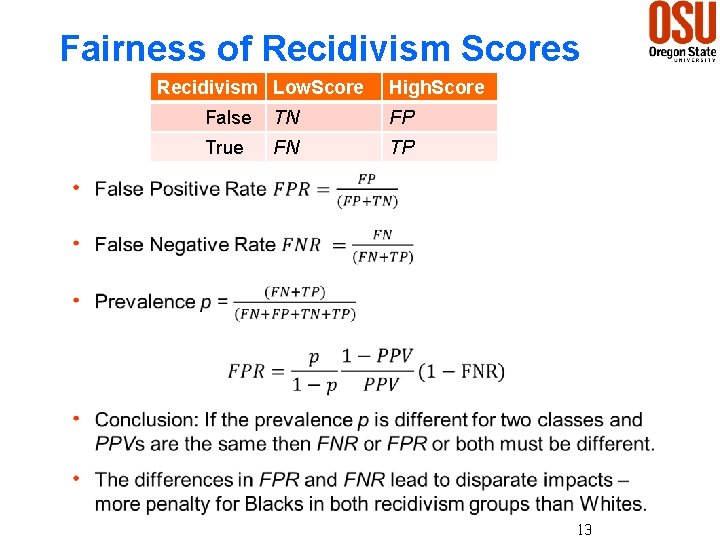

Fairness of Recidivism Scores

| Recidivism | Low Score | High Score |
|---|---|---|
| False | TN | FP |
| True | FN | TP |

Summary
- When recidivism base rates differ between groups, it is mathematically impossible for an imperfect risk score to achieve equal PPV and equal error rates (FPR and FNR) across the groups at the same time.
- The differences in FPR and FNR persist within subgroups of defendants.
- However, evidence suggests that data-driven risk assessment tools (in medicine) are more accurate than human judgment.
- Human-driven decisions are themselves prone to racial bias, e.g., in parole, sentencing, stop-and-frisk, and arrests.
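
One way to see the impossibility is through an identity that follows from the confusion-matrix definitions. For a group of N people with base rate p, TP = (1 - FNR)·p·N and FP = TP·(1 - PPV)/PPV, so FPR = FP/((1 - p)·N) = p/(1 - p) · (1 - PPV)/PPV · (1 - FNR). If two groups have the same PPV and FNR but different base rates, their FPRs must differ. The sketch below evaluates this identity; the base rates, PPV, FNR, and the name `implied_fpr` are hypothetical.

```python
def implied_fpr(base_rate, ppv, fnr):
    """FPR forced by the base rate p, PPV, and FNR:
    FPR = p / (1 - p) * (1 - PPV) / PPV * (1 - FNR)."""
    p = base_rate
    return p / (1 - p) * (1 - ppv) / ppv * (1 - fnr)

# Same PPV and FNR in both groups, different (hypothetical) base rates.
for group, p in [("group 1", 0.5), ("group 2", 0.4)]:
    print(group, round(implied_fpr(p, ppv=0.6, fnr=0.3), 3))
```

With equal PPV (0.6) and equal FNR (0.3), the group with the higher base rate necessarily has the higher false positive rate; equalizing FPR would require giving up equal PPV or equal FNR.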

Case Study: Online Marketplaces
- How do we ensure that sellers are honest about the quality of their goods?
  - Study: in the early 2000s, eBay merchants misrepresented the quality of their sports trading cards
  - The problem was largely solved by feedback and reputation systems
- New development: demand for more information
  - Study (2012): subjects rated the trustworthiness of potential borrowers from their photographs
  - People who looked trustworthy were more likely to get loans
  - They were also more likely to repay their loans
- More information leads to more freedom
  - People can now choose whom to do business with based on looks
  - A growing body of evidence suggests this leads to discrimination

Discrimination in Online Markets
- Airbnb study: 20 profiles sent requests to 6,400 hosts
  - The profiles were identical except that 10 had names common among white people and the other 10 had names common among Black people
  - Result: requests from profiles with Black-sounding names were 16% less successful
  - Discrimination was pervasive: most of the hosts who rejected these requests had never hosted a Black guest
- Other areas of discrimination: credit, labor markets, housing
- Discrimination also occurs in algorithmic decisions
- Searches for Black-sounding names on Google were more likely to bring up ads about arrest records
  - Why?
  - The system learns from past search data

Principles and Recommendations
- Don't ignore potential discrimination
  - Collect good data, including race and gender statistics
  - Do regular reports and occasional audits
  - Publicly disclose discrimination-related data
- Keep an experimental mindset to evaluate different design options
  - e.g., Airbnb withholding host pictures from its ads
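
A regular report can start as simply as acceptance rates per group plus the relative gap between them, which is how a figure like "16% less successful" is expressed. The sketch below assumes a hypothetical log of (group, accepted) pairs; the numbers were chosen so the relative gap comes out at 16%, and `audit`, `relative_gap`, and the data are illustrative, not taken from any study.

```python
from collections import Counter

def audit(requests):
    """requests: list of (group, accepted) pairs from a hypothetical log.
    Returns each group's acceptance rate."""
    total, accepted = Counter(), Counter()
    for group, ok in requests:
        total[group] += 1
        accepted[group] += int(ok)
    return {g: accepted[g] / total[g] for g in total}

def relative_gap(rates, group, reference):
    """Fraction by which `group`'s acceptance rate falls short of `reference`'s."""
    return 1 - rates[group] / rates[reference]

# Hypothetical log: 100 requests per group.
log = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 42 + [("B", False)] * 58
)
r = audit(log)
print(r, round(relative_gap(r, "B", "A"), 2))
```

Note that a 16% relative gap here corresponds to an 8-percentage-point absolute gap (50% vs. 42%); a report should state which kind of gap it uses.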

Design Decisions
- Control the information, its timing, and its salience
  - When can you see the picture of an Uber driver?
- Increase automation and charge for control
  - Make Instant Book the default on Airbnb and charge a fee if the host wants to approve the guest first
- Prioritize discrimination issues
  - Remind hosts about anti-discrimination policies at the time of the transaction
- Make algorithms discrimination-aware
  - Set explicit objectives, e.g., "I want my Black and white customers to be rejected at the same rate"
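
An explicit objective such as "reject each group at the same rate" can be met, for example, by choosing a separate score cutoff for each group. This is one possible technique, shown here as a minimal sketch: it assumes a model that outputs a score per applicant (higher means more likely to be approved), and the scores, the 30% target, and the function names are hypothetical.

```python
import math

def threshold_for_rejection_rate(scores, rate):
    """Cutoff such that about `rate` of this group's applicants score below it
    and are rejected (ties at the cutoff can lower the actual rate)."""
    ranked = sorted(scores)
    k = round(rate * len(ranked))  # number of applicants to reject
    return ranked[k] if k < len(ranked) else math.inf

def rejection_rate(scores, threshold):
    return sum(s < threshold for s in scores) / len(scores)

# Hypothetical model scores; group B's scores are shifted downward.
groups = {
    "A": [0.2, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95, 0.3, 0.65],
    "B": [0.1, 0.2, 0.3, 0.35, 0.4, 0.5, 0.55, 0.6, 0.7, 0.25],
}
TARGET = 0.3  # explicit objective: reject 30% of each group
thresholds = {g: threshold_for_rejection_rate(s, TARGET) for g, s in groups.items()}
print(thresholds, {g: rejection_rate(groups[g], t) for g, t in thresholds.items()})
```

Equalizing rejection rates this way uses a different cutoff for each group, which can conflict with other fairness criteria such as equal error rates.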

Virtual Screens
- In the mid-1960s, women made up less than 10% of the musicians in the big five orchestras
- Orchestras moved from face-to-face auditions to auditions behind a screen
- The success rate of female musicians increased by 160%
- Online markets allow virtual screens between buyers and sellers, and between employers and employees

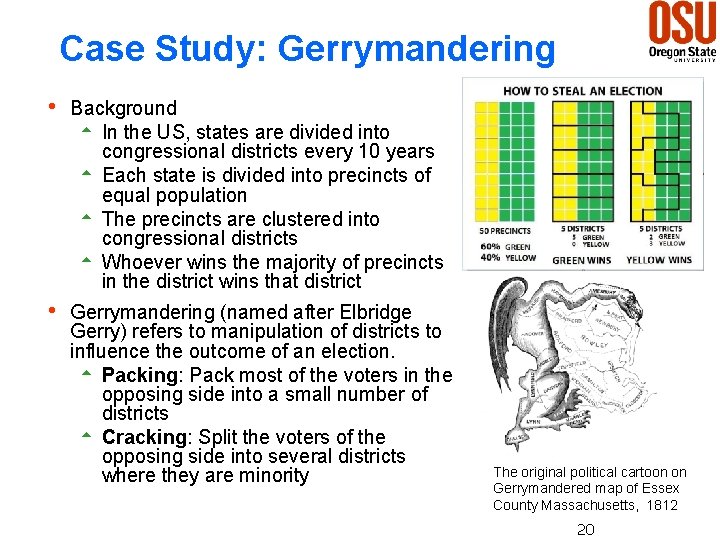

Case Study: Gerrymandering
- Background
  - In the US, each state's congressional districts are redrawn every 10 years, after the census
  - Each state is divided into precincts
  - The precincts are clustered into congressional districts of roughly equal population
  - The candidate who wins the most votes in a district wins that district
- Gerrymandering (named after Elbridge Gerry) refers to manipulating district boundaries to influence the outcome of an election
  - Packing: concentrate most of the opposing side's voters in a small number of districts
  - Cracking: split the opposing side's voters across several districts where they are a minority

(Figure: the original political cartoon of the gerrymandered map of Essex County, Massachusetts, 1812.)
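
Packing and cracking can be illustrated with a toy map. In the sketch below, the same 25 voters (15 Blue, 10 Red) are grouped into five equal-population districts in two different ways. The party names, vote counts, and the `seats` function are invented for illustration.

```python
def seats(plan):
    """plan: list of (blue_votes, red_votes) per district.
    Each district is won by the party with more votes (odd sizes, so no ties)."""
    blue = sum(1 for b, r in plan if b > r)
    red = sum(1 for b, r in plan if r > b)
    return {"Blue": blue, "Red": red}

# 25 hypothetical voters: 15 Blue (60%), 10 Red (40%), 5 districts of 5 voters.
cracked_red = [(3, 2)] * 5  # Red cracked: a minority in every district
packed_blue = [(5, 0), (5, 0), (2, 3), (2, 3), (1, 4)]  # Blue packed into two districts

for name, plan in [("cracking Red", cracked_red), ("packing Blue", packed_blue)]:
    # Sanity check: both maps contain exactly the same voters.
    assert sum(b for b, _ in plan) == 15 and sum(r for _, r in plan) == 10
    print(name, seats(plan))
```

With identical votes, one map gives Blue all five seats and the other gives Red a 3-2 majority despite having only 40% of the votes.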

Impact of Gerrymandering
- Racial gerrymandering that intentionally reduces minority representation was ruled illegal in 1960.
- In 1982, the Voting Rights Act was amended to make states redraw maps that had a racially discriminatory impact.
- Partisan gerrymandering has not been ruled illegal
  - When Republicans drew the maps (17 states), they won about 53 percent of the vote and 72 percent of the seats.
  - When Democrats drew the maps (6 states), they won about 56 percent of the vote and 71 percent of the seats.
- Proportional representation: each party wins roughly the same percentage of seats as its percentage of the votes.
- Wasted votes: votes cast for the losing side, or votes for the winner beyond the minimum needed to win.
- Efficiency gap: the difference between the two parties' wasted votes, divided by the total number of votes cast. It is intended to measure partisan bias.
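
The efficiency gap can be computed district by district. The sketch below assumes two parties, no tied districts, and the common simplification that a winner "needs" exactly half of the district's votes; the district data and function names are hypothetical.

```python
def wasted_votes(a_votes, b_votes):
    """Wasted votes (A, B) in one two-party district, assuming no tie.
    All of the loser's votes are wasted; the winner's votes above half are wasted."""
    half = (a_votes + b_votes) / 2
    if a_votes > b_votes:
        return a_votes - half, b_votes
    return a_votes, b_votes - half

def efficiency_gap(districts):
    """(wasted A - wasted B) / total votes cast. A positive value means party A
    wasted more votes, i.e. the map favors party B."""
    wasted_a = wasted_b = total = 0
    for a, b in districts:
        wa, wb = wasted_votes(a, b)
        wasted_a += wa
        wasted_b += wb
        total += a + b
    return (wasted_a - wasted_b) / total

# Hypothetical 5-district state, 100 voters per district; party A gets 55% overall.
districts = [(75, 25), (80, 20), (45, 55), (45, 55), (30, 70)]
print(efficiency_gap(districts))
```

Here party A wins 55% of the votes but only 2 of the 5 seats: it wastes 175 votes to B's 75, giving an efficiency gap of 100/500 = 0.2 (20%) in favor of party B.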

Wisconsin's Redistricting in 2011
- Wisconsin's Republican-led redistricting was struck down by a three-judge panel. The case was heard by the Supreme Court on October 3, and a decision is pending.
- The plaintiffs' arguments:
  - A large efficiency gap indicates bias, especially if it is persistent. Wisconsin's gap is the biggest ever.
  - The map violates voters' right to equal treatment
  - It discriminates against their views (a First Amendment argument)
- The defendants' arguments:
  - Efficiency gaps arise naturally, e.g., when Democrats pack into cities
  - Courts should stay out of it; states can appoint independent commissions if they are concerned
- Justice Kennedy's vote is likely to be decisive.

Discussion

Suppose you are heading an independent commission to recommend a fair redistricting approach.
- How do you define fair redistricting? Why?
- How would you go about implementing your recommendation?
- What role do computer algorithms play?
