Algorithm Efficiency: Searching and Sorting Algorithms

- Slides: 28

Algorithm Efficiency: Searching and Sorting Algorithms

- Recursion and Searching Problems
- Execution Time of Algorithms
- Algorithm Growth Rates
- Order-of-Magnitude Analysis and Big O Notation
- The Efficiency of Searching Algorithms
- Sorting Algorithms and their Efficiency

Recursion and Search Problems

- Problem: Look for the word 'vademecum' in a dictionary.
- Solution 1: Start at the beginning of the dictionary and look at every word in order until you find 'vademecum'. This is a sequential search.
- Do you want a faster way to perform the search?
- Solution 2: Open the dictionary to a point near its middle, glance at the page, and determine which "half" of the dictionary contains the desired word. This is a binary search.

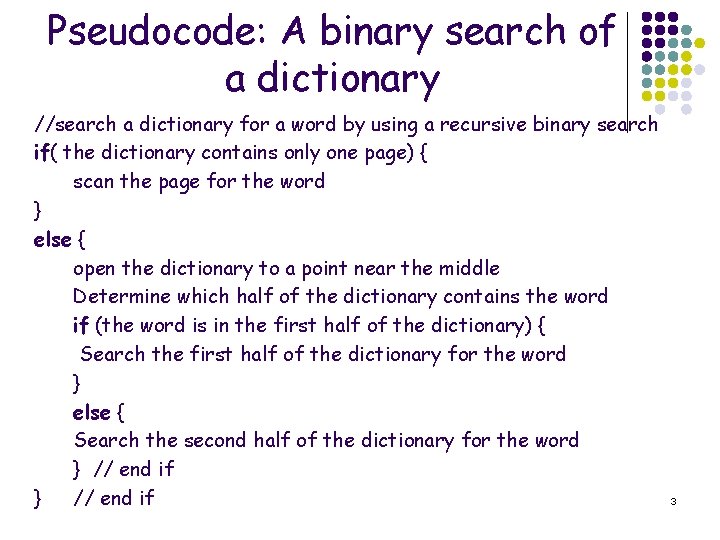

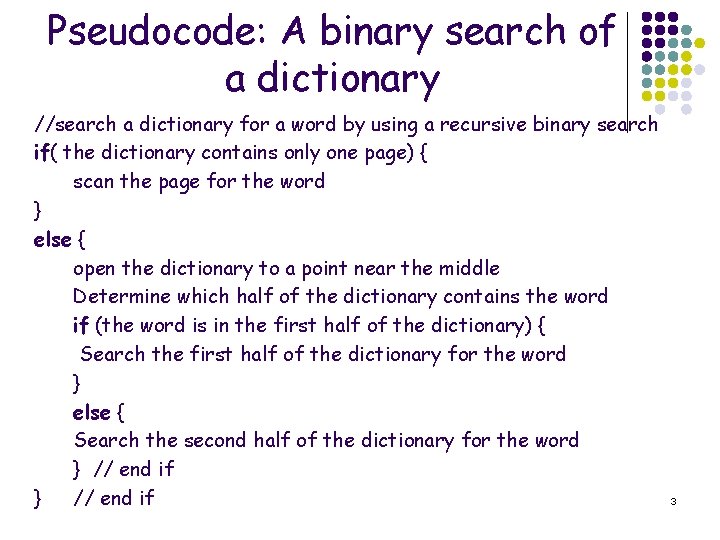

Pseudocode: A binary search of a dictionary

    // Search a dictionary for a word by using a recursive binary search
    if (the dictionary contains only one page) {
        Scan the page for the word
    } else {
        Open the dictionary to a point near the middle
        Determine which half of the dictionary contains the word
        if (the word is in the first half of the dictionary) {
            Search the first half of the dictionary for the word
        } else {
            Search the second half of the dictionary for the word
        } // end if
    } // end if

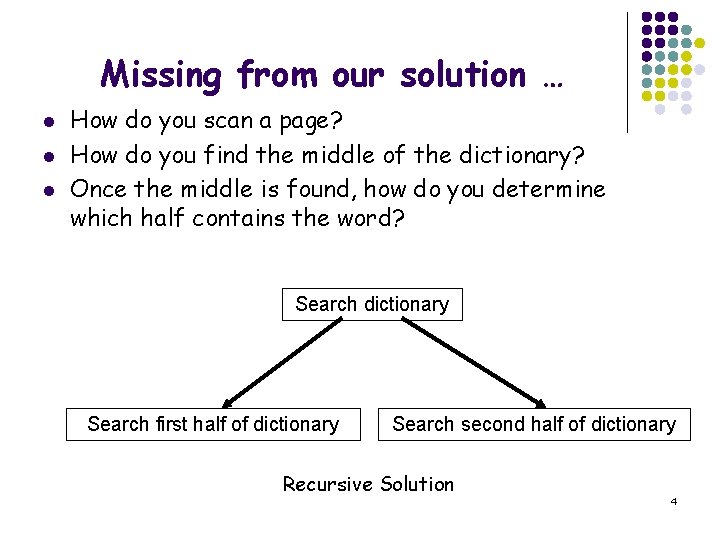

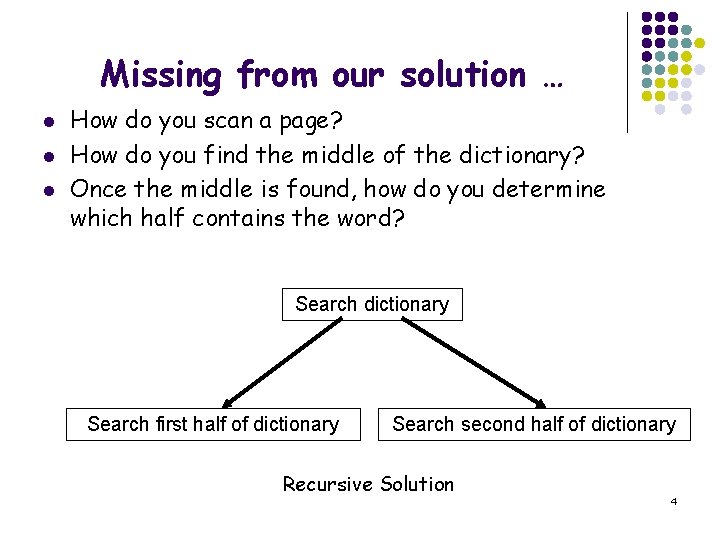

Missing from our solution …

- How do you scan a page?
- How do you find the middle of the dictionary?
- Once the middle is found, how do you determine which half contains the word?

Recursive solution (diagram): "Search dictionary" reduces to either "Search first half of dictionary" or "Search second half of dictionary".

A binary search uses a divide-and-conquer strategy

- After you have divided the dictionary so many times that you are left with only a single page, the halving ceases.
- Now the problem is sufficiently small that you can solve it directly by scanning the single page that remains for the word. This is the base case.
- This strategy is one of divide and conquer: you solve the dictionary search problem by first dividing the dictionary into two halves and then conquering the appropriate half.

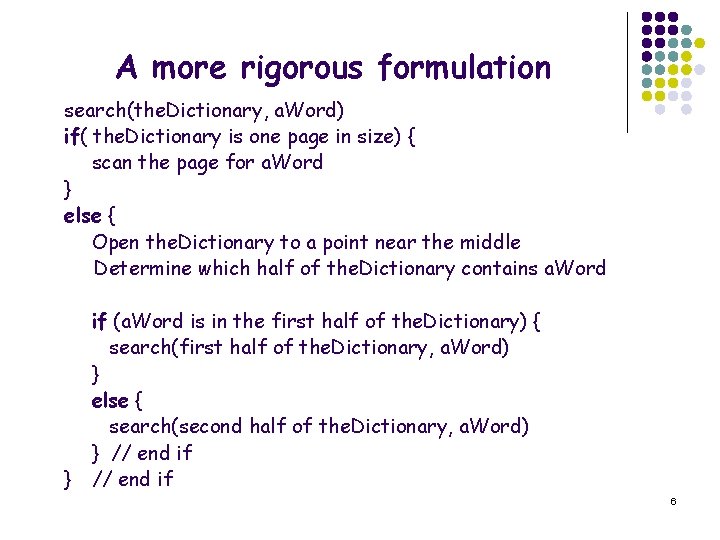

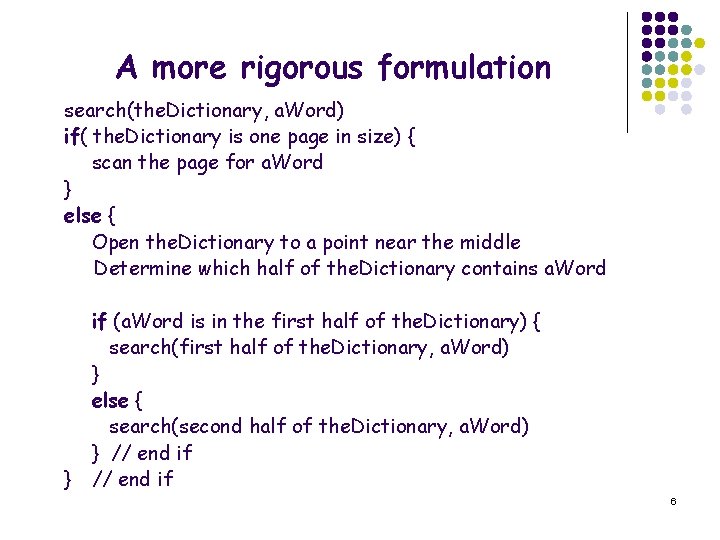

A more rigorous formulation

    search(theDictionary, aWord)
    if (theDictionary is one page in size) {
        Scan the page for aWord
    } else {
        Open theDictionary to a point near the middle
        Determine which half of theDictionary contains aWord
        if (aWord is in the first half of theDictionary) {
            search(first half of theDictionary, aWord)
        } else {
            search(second half of theDictionary, aWord)
        } // end if
    } // end if
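The recursive formulation can be sketched in Java as follows. This is only an illustration under stated assumptions: the dictionary is modeled as a sorted array of strings, a "page" is a single array element, and the names `DictionarySearch`, `search`, `first`, and `last` are hypothetical rather than taken from the slides.

```java
// Sketch only: the "dictionary" is a sorted String array (an assumption),
// and DictionarySearch / search are hypothetical names.
class DictionarySearch {

    // Returns the index of aWord in theDictionary[first..last], or -1 if absent.
    static int search(String[] theDictionary, String aWord, int first, int last) {
        if (first > last) {
            return -1;                         // empty range: the word is not present
        }
        if (first == last) {                   // base case: one "page" left, scan it
            return theDictionary[first].equals(aWord) ? first : -1;
        }
        int mid = first + (last - first) / 2;  // open near the middle
        if (aWord.compareTo(theDictionary[mid]) <= 0) {
            return search(theDictionary, aWord, first, mid);     // first half
        } else {
            return search(theDictionary, aWord, mid + 1, last);  // second half
        }
    }

    public static void main(String[] args) {
        String[] words = {"abacus", "ledger", "quorum", "vademecum", "zenith"};
        System.out.println(search(words, "vademecum", 0, words.length - 1)); // prints 3
        System.out.println(search(words, "missing", 0, words.length - 1));   // prints -1
    }
}
```

Each recursive call works on a strictly smaller range, so the recursion always reaches the one-element base case.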

Determining the Efficiency of Algorithms

- Comparison of algorithms is a topic that is central to computer science.
  - The choice of algorithm for a given application often has a great impact.
  - Responsive word processors, automatic teller machines, video games, and life support systems all depend on efficient algorithms.
- Consider efficiency when selecting algorithms.
- The analysis of algorithms is an area of computer science that provides tools for contrasting the efficiency of different methods of solution.
- An analysis should focus on gross differences in the efficiency of algorithms that are likely to dominate the overall cost of a solution.

Determining the Efficiency of Algorithms

- The efficiency of both time and memory is important, but the emphasis will be on time.
- Three difficulties with comparing programs instead of algorithms:
  - How are the algorithms coded?
  - What computer should you use?
  - What data should the programs use?
- Algorithm analysis should be independent of specific implementations, computers, and data.
  - Computer scientists employ mathematical techniques that analyze algorithms independently of specific implementations, computers, or data.
  - Begin the analysis by counting the number of significant operations in a particular solution.

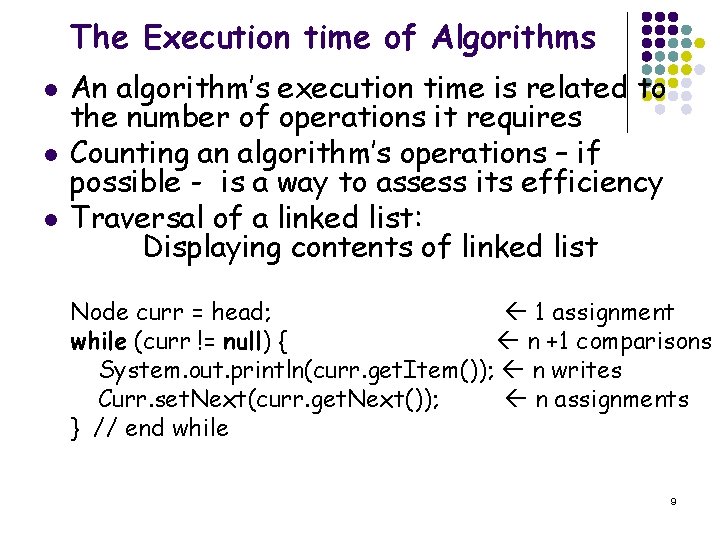

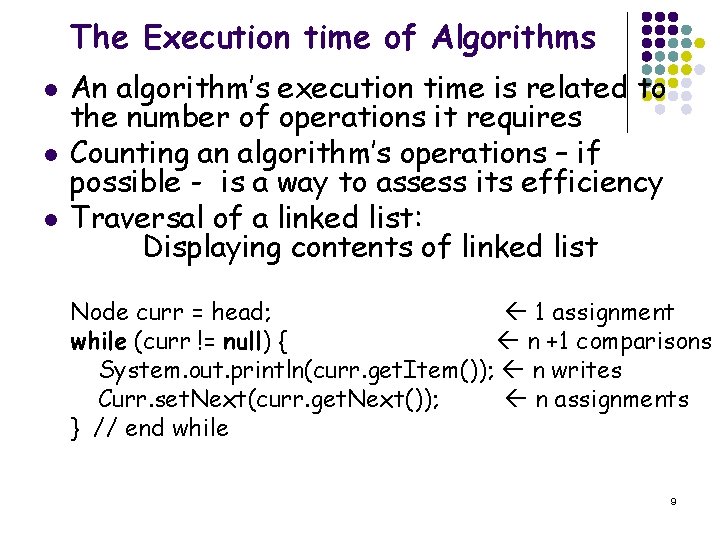

The Execution Time of Algorithms

- An algorithm's execution time is related to the number of operations it requires.
- Counting an algorithm's operations, if possible, is a way to assess its efficiency.
- Traversal of a linked list: displaying the contents of a linked list of n nodes

    Node curr = head;                          // 1 assignment
    while (curr != null) {                     // n + 1 comparisons
        System.out.println(curr.getItem());    // n writes
        curr = curr.getNext();                 // n assignments
    } // end while

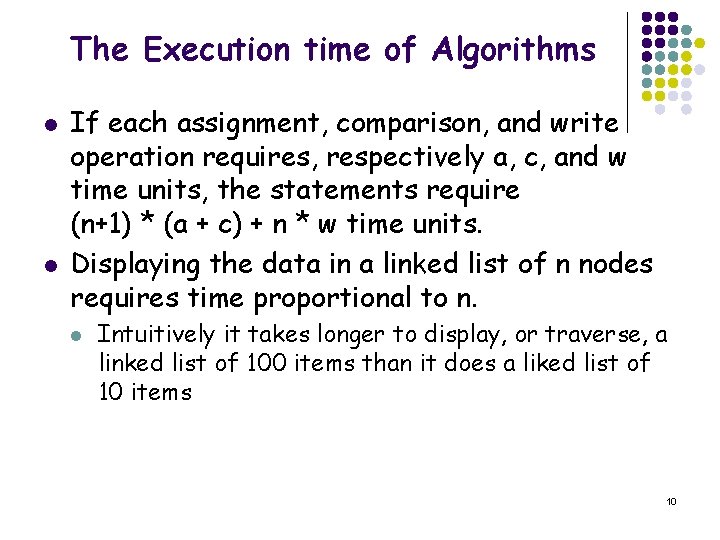

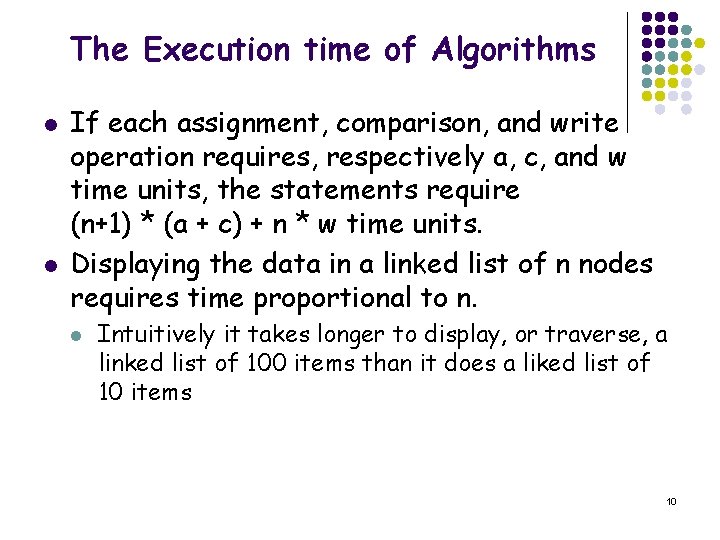

The Execution Time of Algorithms

- If each assignment, comparison, and write operation requires, respectively, a, c, and w time units, the statements require (n+1) * (a + c) + n * w time units.
- Displaying the data in a linked list of n nodes requires time proportional to n.
  - Intuitively, it takes longer to display, or traverse, a linked list of 100 items than it does a linked list of 10 items.
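The operation counts can be checked by instrumenting the traversal. The sketch below is illustrative only: `TraversalCount`, its nested `Node` class, and `buildList` are hypothetical names, and a `StringBuilder` stands in for `System.out` so that the counting run produces no output of its own.

```java
// Sketch only: Node and TraversalCount are minimal hypothetical classes.
class TraversalCount {

    static class Node {
        int item;
        Node next;
        Node(int item, Node next) { this.item = item; this.next = next; }
    }

    // Traverses the list and returns {assignments, comparisons, writes}.
    static long[] countOperations(Node head) {
        long assignments = 0, comparisons = 0, writes = 0;
        StringBuilder out = new StringBuilder();  // stands in for System.out

        Node curr = head;
        assignments++;                    // 1 initial assignment
        while (true) {
            comparisons++;                // n + 1 comparisons in total
            if (curr == null) {
                break;
            }
            out.append(curr.item).append('\n');
            writes++;                     // n writes
            curr = curr.next;
            assignments++;                // n assignments inside the loop
        }
        return new long[] {assignments, comparisons, writes};
    }

    // Builds a list holding the items 1..n.
    static Node buildList(int n) {
        Node head = null;
        for (int i = n; i >= 1; i--) {
            head = new Node(i, head);
        }
        return head;
    }

    public static void main(String[] args) {
        long[] c = countOperations(buildList(10));
        System.out.println(c[0] + " " + c[1] + " " + c[2]); // prints 11 11 10
    }
}
```

For n nodes the counts are n + 1 assignments, n + 1 comparisons, and n writes, which matches the expression (n+1) * (a + c) + n * w.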

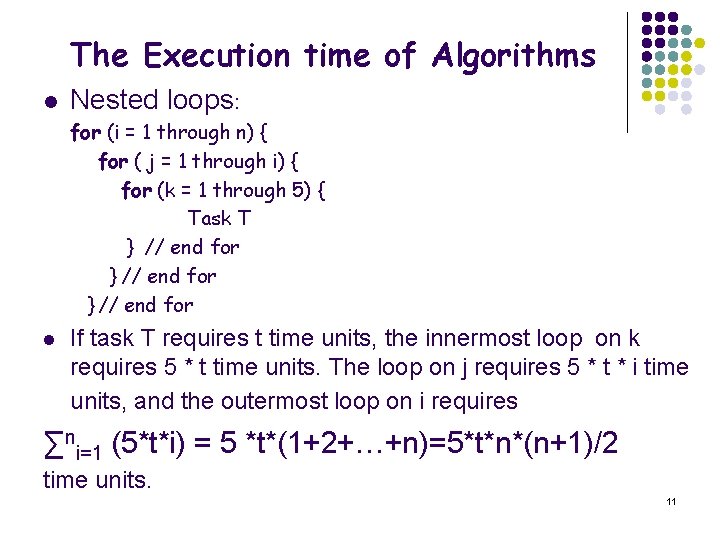

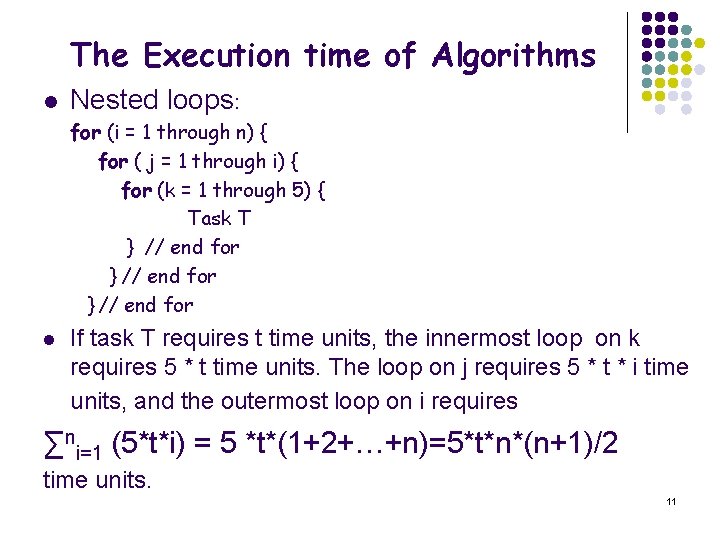

The Execution Time of Algorithms

- Nested loops:

    for (i = 1 through n) {
        for (j = 1 through i) {
            for (k = 1 through 5) {
                Task T
            } // end for
        } // end for
    } // end for

- If task T requires t time units, the innermost loop on k requires 5 * t time units. The loop on j requires 5 * t * i time units, and the outermost loop on i requires the following number of time units:

$$\sum_{i=1}^{n} (5 \cdot t \cdot i) = 5 \cdot t \cdot (1 + 2 + \cdots + n) = \frac{5 \cdot t \cdot n \cdot (n+1)}{2}$$
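A quick way to sanity-check the closed form is to count how many times Task T runs. In this sketch, incrementing a counter stands in for Task T, and `NestedLoopCount`, `countTaskT`, and `closedForm` are hypothetical names.

```java
// Sketch only: a counter increment stands in for "Task T".
class NestedLoopCount {

    // Runs the three nested loops and counts executions of Task T.
    static long countTaskT(int n) {
        long count = 0;
        for (int i = 1; i <= n; i++) {
            for (int j = 1; j <= i; j++) {
                for (int k = 1; k <= 5; k++) {
                    count++;              // Task T
                }
            }
        }
        return count;
    }

    // Closed form 5 * n * (n + 1) / 2 (with t = 1 time unit per task).
    static long closedForm(int n) {
        return 5L * n * (n + 1) / 2;
    }

    public static void main(String[] args) {
        System.out.println(countTaskT(10));  // prints 275
        System.out.println(closedForm(10));  // prints 275
    }
}
```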

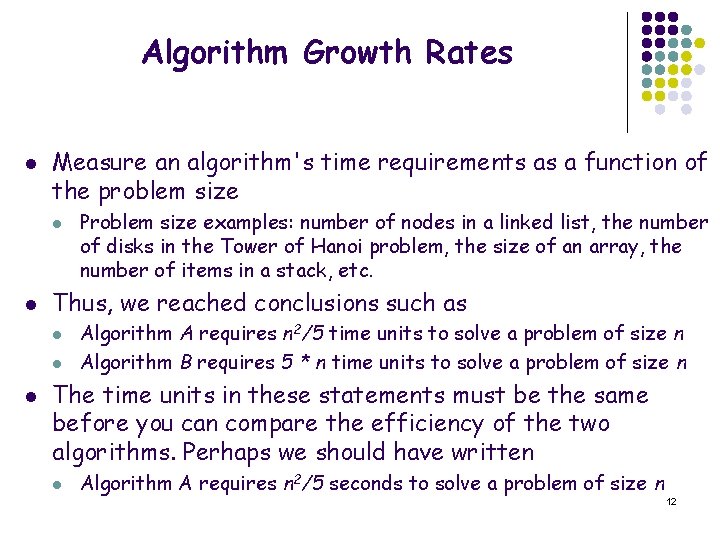

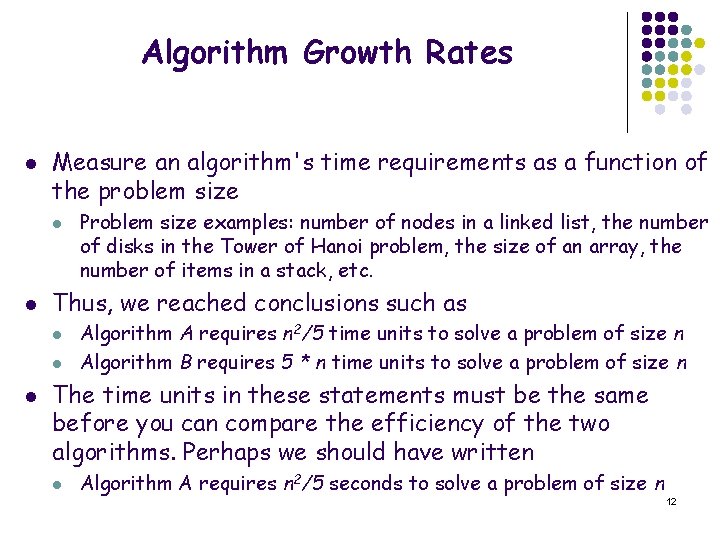

Algorithm Growth Rates

- Measure an algorithm's time requirements as a function of the problem size.
  - Problem size examples: the number of nodes in a linked list, the number of disks in the Tower of Hanoi problem, the size of an array, the number of items in a stack, etc.
- Thus, we reach conclusions such as
  - Algorithm A requires $n^2/5$ time units to solve a problem of size n.
  - Algorithm B requires $5n$ time units to solve a problem of size n.
- The time units in these statements must be the same before you can compare the efficiency of the two algorithms. Perhaps we should have written
  - Algorithm A requires $n^2/5$ seconds to solve a problem of size n.

Algorithm Growth Rates

- But the preceding statement raises the following difficulties:
  - On what computer does the algorithm require $n^2/5$ seconds?
  - What implementation of the algorithm requires $n^2/5$ seconds?
  - What data caused the algorithm to require $n^2/5$ seconds?
- The most important thing to learn is how quickly the algorithm's time requirement grows as a function of the problem size.
- Statements such as
  - Algorithm A requires time proportional to $n^2$
  - Algorithm B requires time proportional to n

  each express an algorithm's proportional time requirement, or growth rate, and enable you to compare algorithm A with another algorithm B.

Algorithm Growth Rates

- Compare algorithm efficiencies for large problems.
  - Although you cannot determine the exact time requirement for either algorithm A or algorithm B from these statements, you can determine that for large problems, B will require significantly less time than A.
  - B's time requirement as a function of the problem size n increases at a slower rate than A's time requirement, because n increases at a slower rate than $n^2$.
  - Even if B actually requires $5n$ seconds and A actually requires $n^2/5$ seconds, B eventually will require significantly less time than A as n increases.

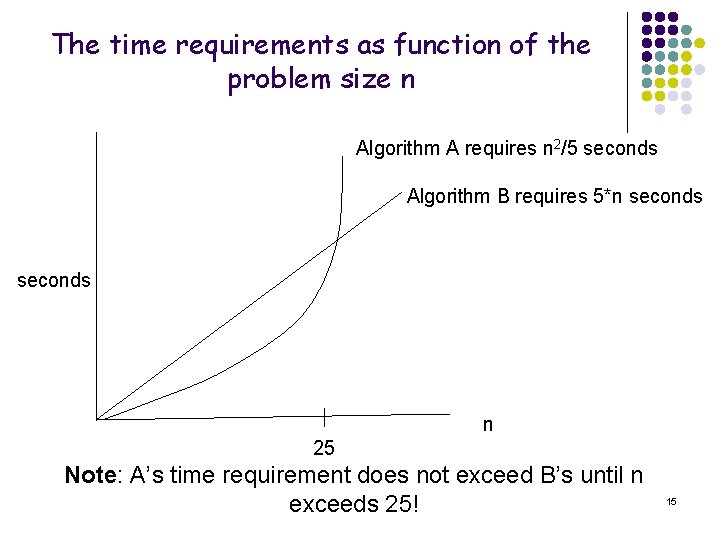

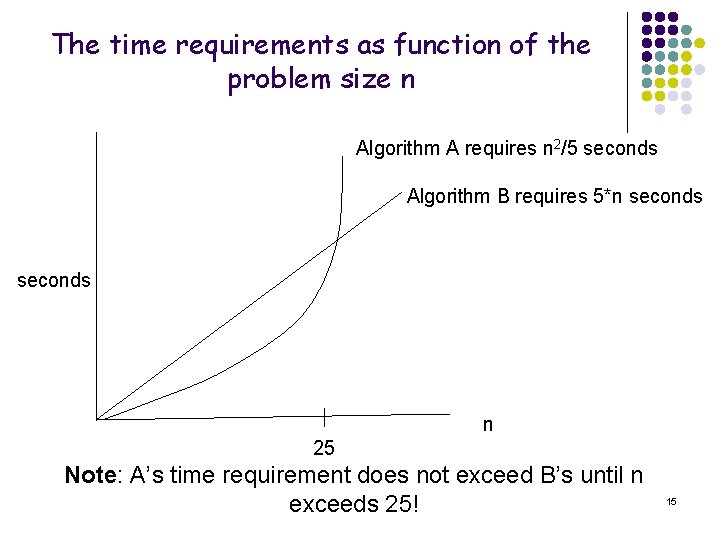

Figure: Time requirements as a function of the problem size n. Algorithm A requires $n^2/5$ seconds and algorithm B requires $5n$ seconds; the marked point on the n axis is n = 25.

Note: A's time requirement does not exceed B's until n exceeds 25.
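The crossover point can be confirmed numerically. The following sketch assumes the two time functions exactly as stated ($n^2/5$ and $5n$ seconds); `GrowthCrossover` and its method names are hypothetical.

```java
// Sketch only: compares the two stated time functions for increasing n.
class GrowthCrossover {

    static double timeA(int n) { return (double) n * n / 5.0; }  // n^2 / 5 seconds
    static double timeB(int n) { return 5.0 * n; }               // 5 * n seconds

    // Smallest n >= 1 at which A takes strictly longer than B.
    static int firstNWhereAExceedsB() {
        int n = 1;
        while (timeA(n) <= timeB(n)) {
            n++;
        }
        return n;
    }

    public static void main(String[] args) {
        System.out.println(timeA(25) == timeB(25));   // prints true (both are 125.0)
        System.out.println(firstNWhereAExceedsB());   // prints 26
    }
}
```

At n = 25 both algorithms take 125 seconds; from n = 26 onward A is slower, and the gap keeps widening.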

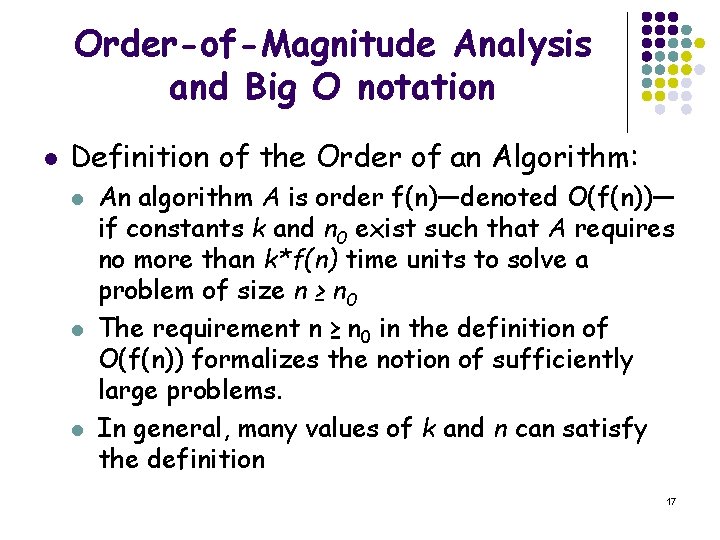

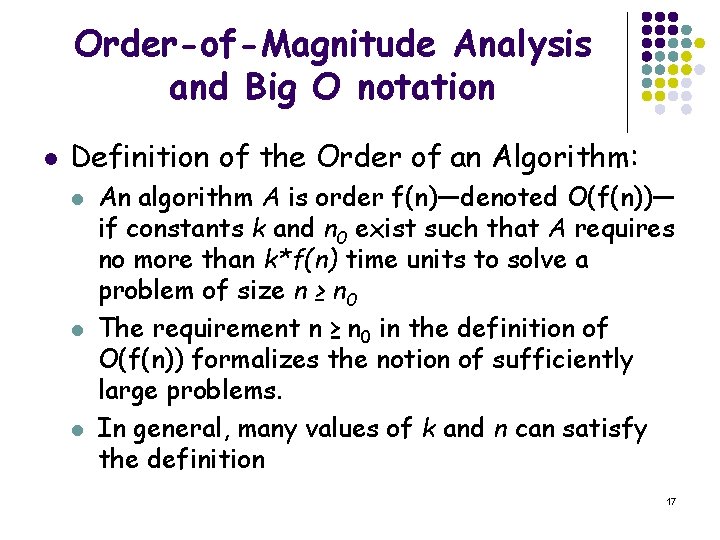

Order-of-Magnitude Analysis and Big O Notation

- If algorithm A requires time proportional to f(n), algorithm A is said to be order f(n), which is denoted as O(f(n)).
- The function f(n) is called the algorithm's growth-rate function.
- Because the notation uses the capital letter O to denote order, it is called the Big O notation.
  - If a problem of size n requires time that is directly proportional to n, the problem is O(n), that is, order n.
  - If the time requirement is directly proportional to $n^2$, the problem is $O(n^2)$, and so on.

Order-of-Magnitude Analysis and Big O Notation

- Definition of the order of an algorithm:
  - An algorithm A is order f(n), denoted O(f(n)), if constants k and $n_0$ exist such that A requires no more than k*f(n) time units to solve a problem of size $n \ge n_0$.
- The requirement $n \ge n_0$ in the definition of O(f(n)) formalizes the notion of sufficiently large problems.
- In general, many values of k and $n_0$ can satisfy the definition.

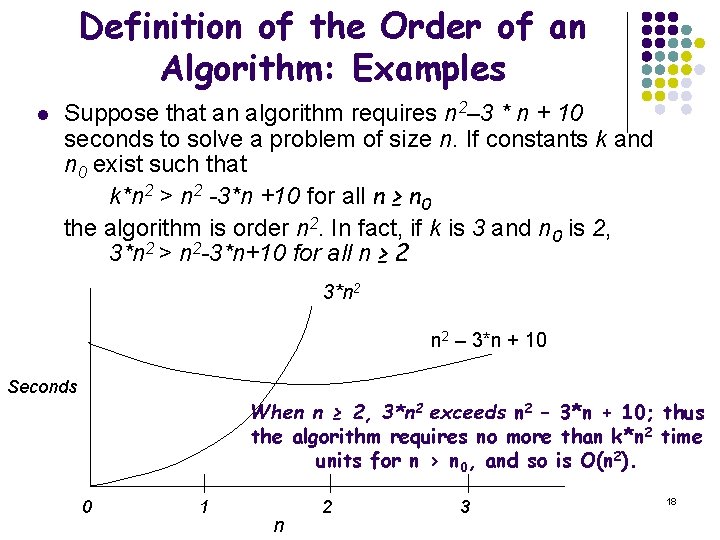

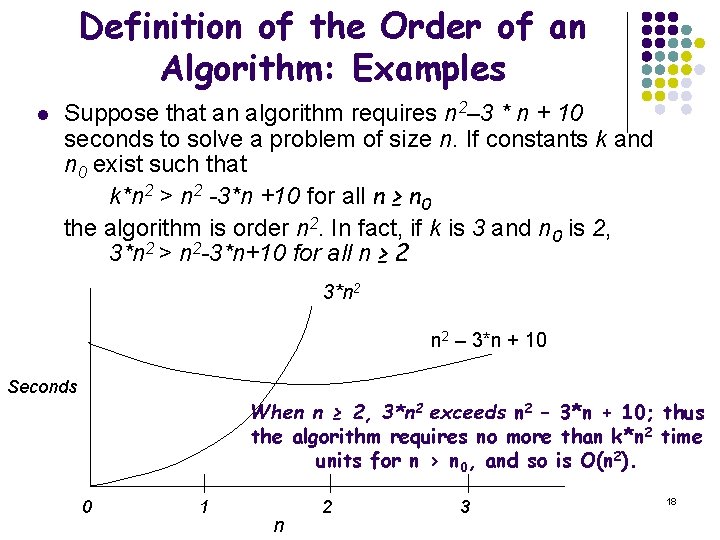

Definition of the Order of an Algorithm: Examples

- Suppose that an algorithm requires $n^2 - 3n + 10$ seconds to solve a problem of size n. If constants k and $n_0$ exist such that

  $$k \cdot n^2 > n^2 - 3n + 10 \quad \text{for all } n \ge n_0,$$

  the algorithm is order $n^2$.
- In fact, if k is 3 and $n_0$ is 2,

  $$3n^2 > n^2 - 3n + 10 \quad \text{for all } n \ge 2.$$

- When $n \ge 2$, $3n^2$ exceeds $n^2 - 3n + 10$; thus the algorithm requires no more than $k \cdot n^2$ time units for $n \ge n_0$, and so is $O(n^2)$.

Figure: plot of $3n^2$ and $n^2 - 3n + 10$ (in seconds) against n, for n from 0 to 3.
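The choice k = 3, $n_0$ = 2 can be spot-checked over a finite range. A finite check is not a proof; the general argument is that $3n^2 - (n^2 - 3n + 10) = 2n^2 + 3n - 10$, which is negative at n = 1, positive at n = 2, and increasing from there. The class and method names below are hypothetical.

```java
// Sketch only: a finite spot-check of the Big O witness constants, not a proof.
class BigOWitness {

    static long actual(long n) { return n * n - 3 * n + 10; }   // time required
    static long bound(long k, long n) { return k * n * n; }     // k * f(n), f(n) = n^2

    // True if actual(n) <= k * n^2 for every n in [n0, upTo].
    static boolean holdsOnRange(long k, long n0, long upTo) {
        for (long n = n0; n <= upTo; n++) {
            if (actual(n) > bound(k, n)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(holdsOnRange(3, 2, 100_000)); // prints true
        System.out.println(holdsOnRange(3, 1, 100_000)); // prints false (fails at n = 1)
    }
}
```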

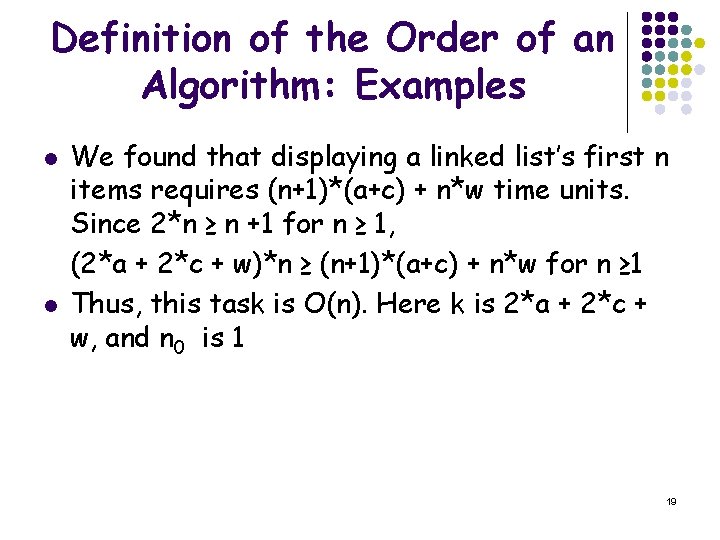

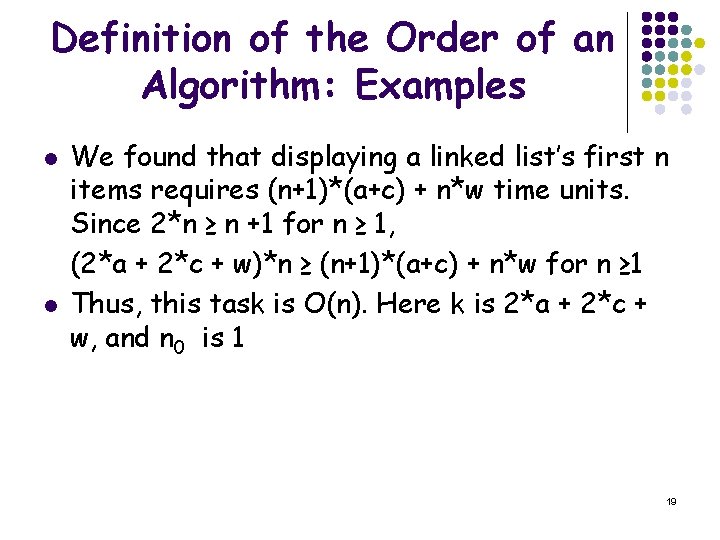

Definition of the Order of an Algorithm: Examples

- We found that displaying a linked list's first n items requires (n+1)*(a+c) + n*w time units.
- Since 2*n ≥ n+1 for n ≥ 1,
  (2*a + 2*c + w)*n ≥ (n+1)*(a+c) + n*w for n ≥ 1.
- Thus, this task is O(n). Here k is 2*a + 2*c + w, and $n_0$ is 1.

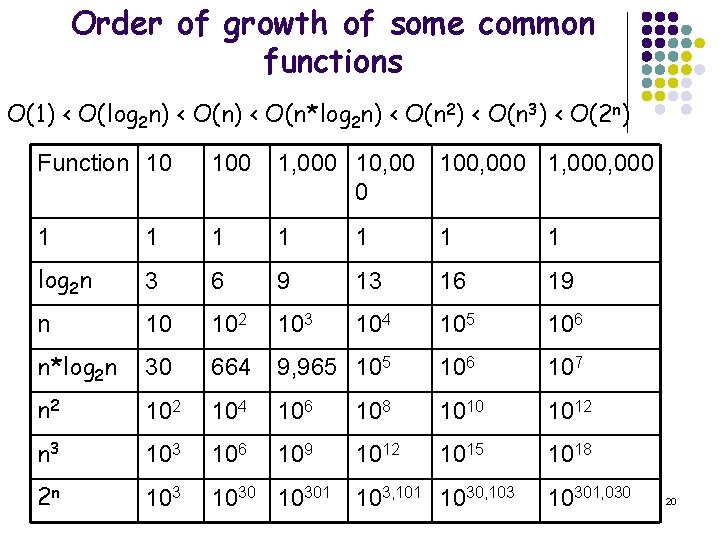

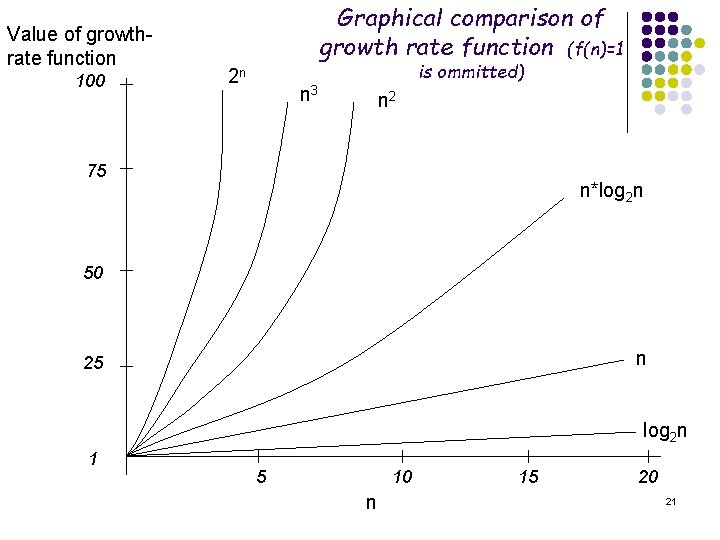

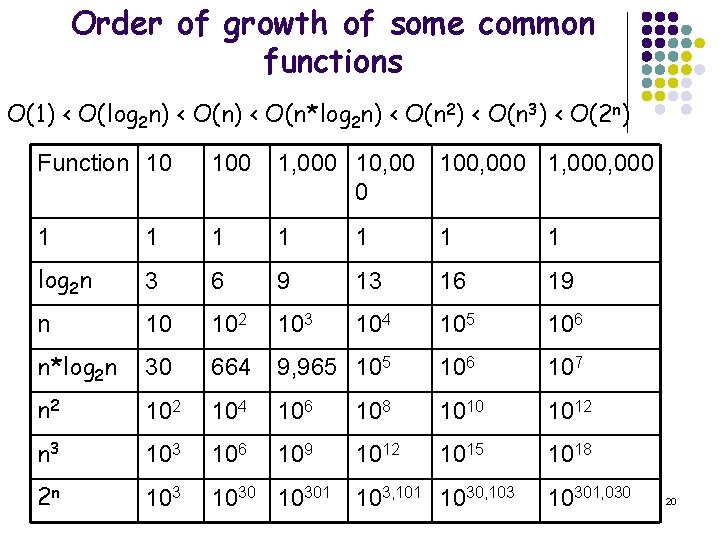

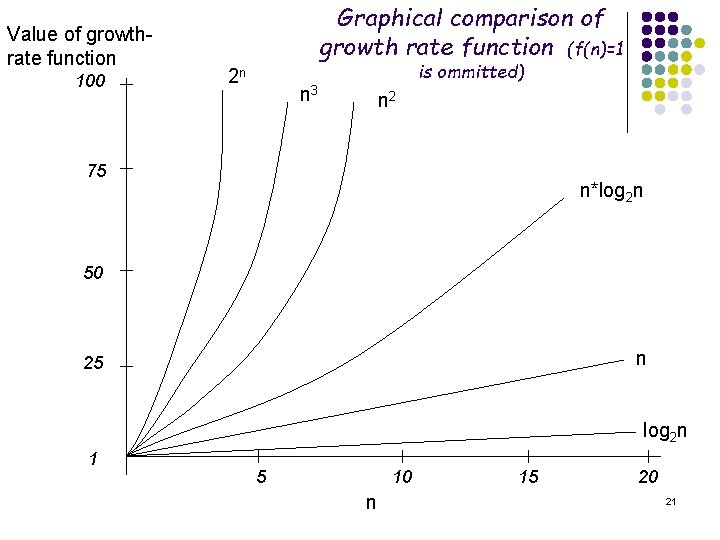

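Order of growth of some common functions

$O(1) < O(\log_2 n) < O(n) < O(n \log_2 n) < O(n^2) < O(n^3) < O(2^n)$

| Function | n = 10 | 100 | 1,000 | 10,000 | 100,000 | 1,000,000 |
|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| $\log_2 n$ | 3 | 6 | 9 | 13 | 16 | 19 |
| $n$ | 10 | $10^2$ | $10^3$ | $10^4$ | $10^5$ | $10^6$ |
| $n \log_2 n$ | 30 | 664 | 9,965 | $10^5$ | $10^6$ | $10^7$ |
| $n^2$ | $10^2$ | $10^4$ | $10^6$ | $10^8$ | $10^{10}$ | $10^{12}$ |
| $n^3$ | $10^3$ | $10^6$ | $10^9$ | $10^{12}$ | $10^{15}$ | $10^{18}$ |
| $2^n$ | $10^3$ | $10^{30}$ | $10^{301}$ | $10^{3,010}$ | $10^{30,103}$ | $10^{301,030}$ |

Values are approximate orders of magnitude.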
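Several rows of the table can be regenerated with a few lines of Java. This is a rough sketch: `GrowthTable` and its helpers are hypothetical names, logarithms are truncated to whole numbers as in the table, and $2^n$ is reported only as a rounded power of ten because the value itself is far too large for a primitive type.

```java
// Sketch only: recomputes approximate table entries for a few problem sizes.
class GrowthTable {

    static double log2(double n) {
        return Math.log(n) / Math.log(2.0);
    }

    // Exponent e such that 2^n is roughly 10^e (rounded to the nearest integer).
    static long powerOfTenFor2ToThe(long n) {
        return Math.round(n * Math.log10(2.0));
    }

    public static void main(String[] args) {
        long[] sizes = {10, 100, 1_000, 10_000, 100_000, 1_000_000};
        for (long n : sizes) {
            System.out.printf("n=%d  log2 n=%d  n*log2 n=%.0f  2^n is about 10^%d%n",
                    n, (long) log2(n), n * log2(n), powerOfTenFor2ToThe(n));
        }
    }
}
```

The exact value of $n \log_2 n$ for n = 10 is about 33; the table's entry of 30 comes from using the truncated logarithm 3.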

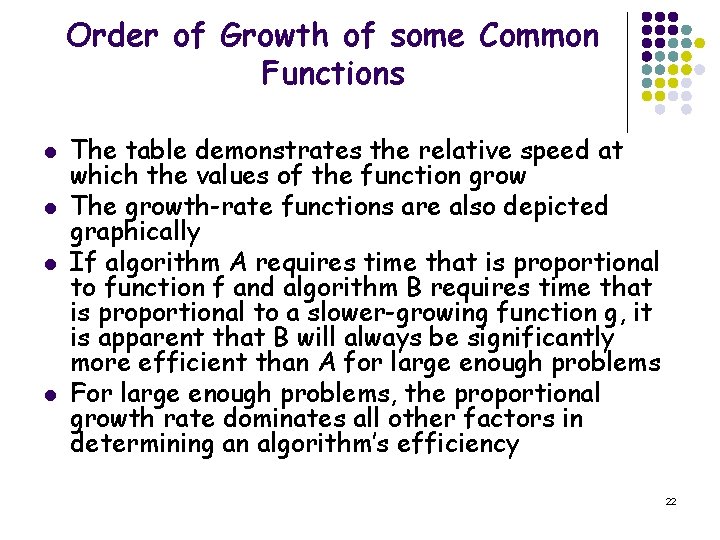

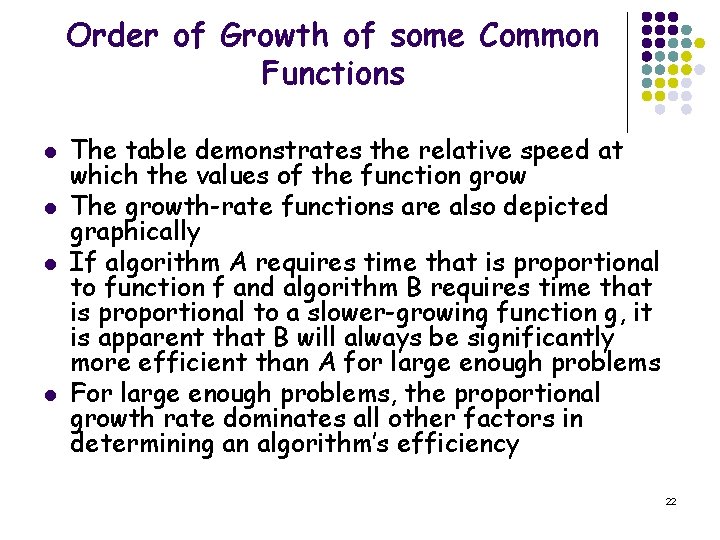

Figure: Graphical comparison of growth-rate functions ($2^n$ is omitted). Vertical axis: value of growth-rate function (25 to 100). Horizontal axis: n (5 to 20). Curves shown: $n^3$, $n^2$, $n \log_2 n$, $n$, $\log_2 n$, and 1.

Order of Growth of Some Common Functions

- The table demonstrates the relative speed at which the values of the functions grow.
- The growth-rate functions are also depicted graphically.
- If algorithm A requires time that is proportional to function f and algorithm B requires time that is proportional to a slower-growing function g, it is apparent that B will always be significantly more efficient than A for large enough problems.
- For large enough problems, the proportional growth rate dominates all other factors in determining an algorithm's efficiency.

Order of Growth of Some Common Functions

- Some properties of growth-rate functions:
  - You can ignore low-order terms in an algorithm's growth-rate function. E.g., if an algorithm is $O(n^3 + 4n^2 + 3)$, it is also $O(n^3)$.
  - You can ignore a multiplicative constant in the high-order term of an algorithm's growth-rate function. E.g., if an algorithm is $O(5n^3)$, it is also $O(n^3)$.
  - $O(f(n)) + O(g(n)) = O(f(n) + g(n))$. E.g., if an algorithm is $O(n^2) + O(n)$, it is also $O(n^2 + n)$, which is simply $O(n^2)$.
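As a worked check of the first property, using the definition of order with constants k and $n_0$, each low-order term can be bounded by the high-order term for n ≥ 1. The constants chosen here (k = 8, $n_0$ = 1) are just one valid choice among many:

```latex
\begin{align*}
n^3 + 4n^2 + 3 &\le n^3 + 4n^3 + 3n^3
  && \text{since } n^2 \le n^3 \text{ and } 1 \le n^3 \text{ for } n \ge 1 \\
               &= 8n^3 .
\end{align*}
```

So an algorithm that needs at most $n^3 + 4n^2 + 3$ time units needs at most $8n^3$ for all n ≥ 1, and is therefore $O(n^3)$. The same reasoning with k = 5 covers the $O(5n^3)$ example.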

Worst-case and Average-case Analyses

- Algorithms can require different times to solve different problems of the same size.
  - E.g., the time that an algorithm requires to search n items might depend on the nature of the items.
- A worst-case analysis determines the maximum amount of time that an algorithm can require to solve a problem of size n.
- An average-case analysis attempts to determine the average amount of time that an algorithm requires to solve problems of size n.
- Worst-case analysis is easier to calculate and is thus more common.

The Efficiency of Searching Algorithms

- Order-of-magnitude analysis: efficiency of sequential search and binary search of an array.
- Sequential search: to search an array of n items, you look at each item in turn, starting with the first one, until either you find the desired item or you reach the end of the data collection.
  - Worst case: O(n)
  - Average case: O(n)
  - Best case: O(1)
- Does the algorithm's order depend on whether or not the initial data is sorted?
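The three cases for sequential search can be observed by counting comparisons. This sketch uses hypothetical names (`SequentialSearchDemo`, `comparisons`, `averageComparisons`) and assumes, for the average case, that every stored item is equally likely to be the target.

```java
// Sketch only: counts item comparisons made by a sequential search.
class SequentialSearchDemo {

    // Number of items examined before target is found
    // (items.length if target is absent).
    static int comparisons(int[] items, int target) {
        int count = 0;
        for (int item : items) {
            count++;
            if (item == target) {
                break;
            }
        }
        return count;
    }

    // Average over successful searches, each stored item equally likely.
    static double averageComparisons(int[] items) {
        long total = 0;
        for (int target : items) {
            total += comparisons(items, target);
        }
        return (double) total / items.length;
    }

    public static void main(String[] args) {
        int[] items = {42, 7, 19, 3, 88, 61, 25, 10, 54};  // unsorted, distinct
        System.out.println(comparisons(items, 42));        // best case: prints 1
        System.out.println(comparisons(items, 99));        // worst case: prints 9
        System.out.println(averageComparisons(items));     // prints 5.0
    }
}
```

With n distinct items the average over successful searches is (n + 1) / 2 comparisons, which is still proportional to n.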

The Efficiency of Searching Algorithms

- Binary search: searches a sorted array for a particular item by repeatedly dividing the array in half. The binary search algorithm searches successively smaller arrays.
- The size of a given array is approximately one-half the size of the array previously searched.
- At each division, the algorithm makes a comparison. The number of comparisons is equal to the number of times that the algorithm divides the array in half.

The Efficiency of Searching Algorithms: Binary Search

- Suppose that $n = 2^k$ for some k.
- The search requires the following steps:
  1. Inspect the middle item of an array of size n.
  2. Inspect the middle item of an array of size n/2.
  3. Inspect the middle item of an array of size $n/2^2$, and so on, until only one item remains.
- You will have performed k divisions ($n/2^k = 1$).
- In the worst case, the algorithm performs k divisions and, therefore, k comparisons. Because $n = 2^k$, $k = \log_2 n$.
- Thus, the algorithm is $O(\log_2 n)$ in the worst case when $n = 2^k$.

The Efficiency of Searching Algorithms: Binary Search

- What if n is not a power of 2? Find the smallest k such that $2^{k-1} < n < 2^k$.
  - The algorithm still requires at most k divisions to obtain a subarray with one item.
  - It follows that
    - $k - 1 < \log_2 n < k$
    - $k < 1 + \log_2 n < k + 1$
    - $k = 1 + \log_2 n$, rounded down
- Thus the algorithm is still $O(\log_2 n)$ in the worst case when $n \ne 2^k$.
- How does binary search compare to sequential search?
  - E.g., $\log_2 1{,}000{,}000 \approx 19.9$, so k = 20: searching one million sorted items can require one million comparisons with sequential search but at most 20 with binary search.
- Note: Maintaining the array in sorted order requires an overhead cost, which can be substantial.
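The bound of 1 + $\log_2 n$ (rounded down) comparisons can be checked empirically. The sketch below uses a standard iterative binary search that counts one comparison per middle item inspected; this is an assumed implementation, not code from the slides, and `BinarySearchCount`, `bound`, and `maxComparisons` are hypothetical names.

```java
// Sketch only: counts middle-item inspections in an iterative binary search.
class BinarySearchCount {

    // Returns {index of target or -1, number of middle items inspected}.
    static int[] search(int[] sorted, int target) {
        int low = 0, high = sorted.length - 1, comparisons = 0;
        while (low <= high) {
            int mid = low + (high - low) / 2;
            comparisons++;                       // inspect the middle item
            if (sorted[mid] == target) {
                return new int[] {mid, comparisons};
            }
            if (sorted[mid] < target) {
                low = mid + 1;                   // continue in the upper half
            } else {
                high = mid - 1;                  // continue in the lower half
            }
        }
        return new int[] {-1, comparisons};
    }

    // 1 + floor(log2 n), valid for n >= 1.
    static int bound(int n) {
        return 1 + (31 - Integer.numberOfLeadingZeros(n));
    }

    // Largest count over every present and absent target in an
    // array holding the n even numbers 0, 2, ..., 2n - 2.
    static int maxComparisons(int n) {
        int[] sorted = new int[n];
        for (int i = 0; i < n; i++) {
            sorted[i] = 2 * i;
        }
        int max = 0;
        for (int target = -1; target <= 2 * n; target++) {
            max = Math.max(max, search(sorted, target)[1]);
        }
        return max;
    }

    public static void main(String[] args) {
        int n = 1_000_000;
        System.out.println(bound(n));   // prints 20
        System.out.println(maxComparisons(n) <= bound(n));
    }
}
```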