Algorithm Analysis tools Lecture 3 Algorithm Analysis Motivation

- Slides: 29


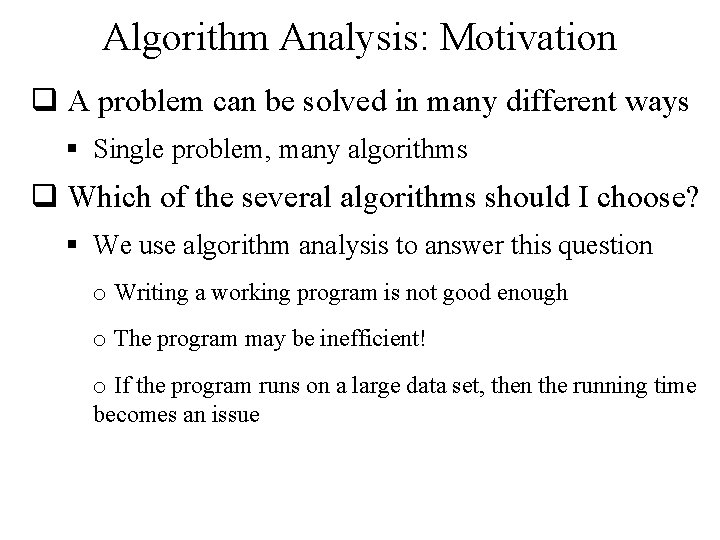

Algorithm Analysis: Motivation

- A problem can be solved in many different ways
  - Single problem, many algorithms
- Which of the several algorithms should I choose?
  - We use algorithm analysis to answer this question
    - Writing a working program is not good enough
    - The program may be inefficient!
    - If the program runs on a large data set, then the running time becomes an issue

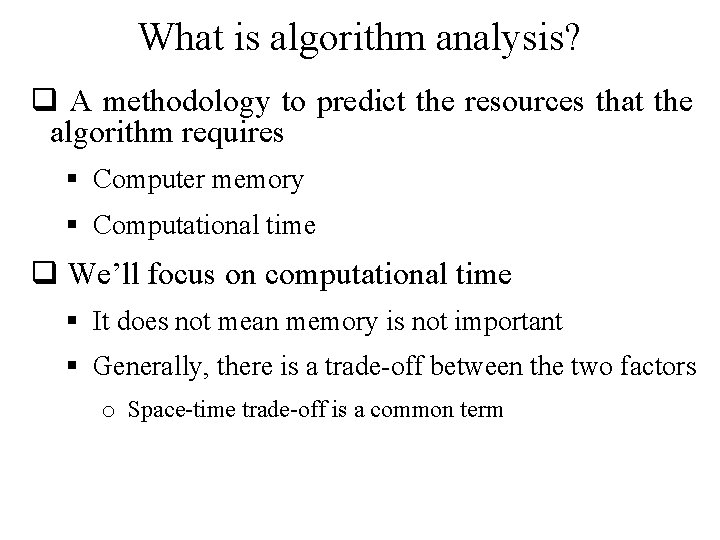

What is algorithm analysis?

- A methodology to predict the resources that an algorithm requires
  - Computer memory
  - Computational time
- We’ll focus on computational time
  - This does not mean that memory is unimportant
  - Generally, there is a trade-off between the two factors
    - "Space-time trade-off" is a common term

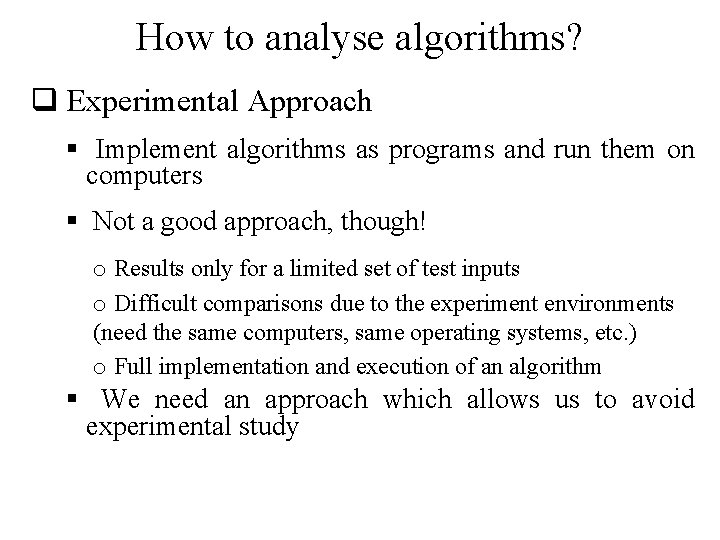

How to analyse algorithms?

- Experimental approach
  - Implement algorithms as programs and run them on computers
  - Not a good approach, though!
    - Results are available only for a limited set of test inputs
    - Comparisons are difficult because the experiment environments must match (same computers, same operating systems, etc.)
    - Requires a full implementation and execution of the algorithm
  - We need an approach which allows us to avoid experimental study

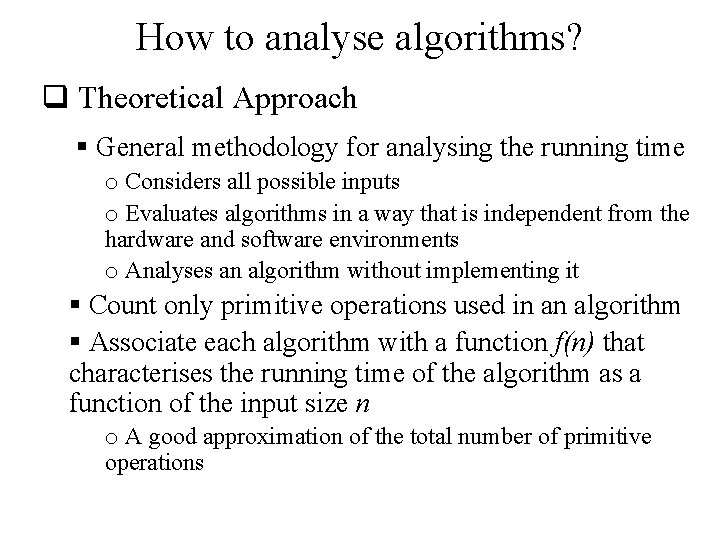

How to analyse algorithms?

- Theoretical approach
  - General methodology for analysing the running time
    - Considers all possible inputs
    - Evaluates algorithms in a way that is independent of the hardware and software environments
    - Analyses an algorithm without implementing it
  - Count only the primitive operations used in an algorithm
  - Associate each algorithm with a function $f(n)$ that characterises the running time of the algorithm as a function of the input size $n$
    - A good approximation of the total number of primitive operations

Primitive Operations

- Basic computations performed by an algorithm
- Each operation corresponds to a low-level instruction with a constant execution time
- Largely independent of the programming language
- Examples
  - Evaluating an expression (x + y)
  - Assigning a value to a variable (x ← 5)
  - Comparing two numbers (x < y)
  - Indexing into an array (A[i])
  - Calling a method (mycalculator.sum())
  - Returning from a method (return result)

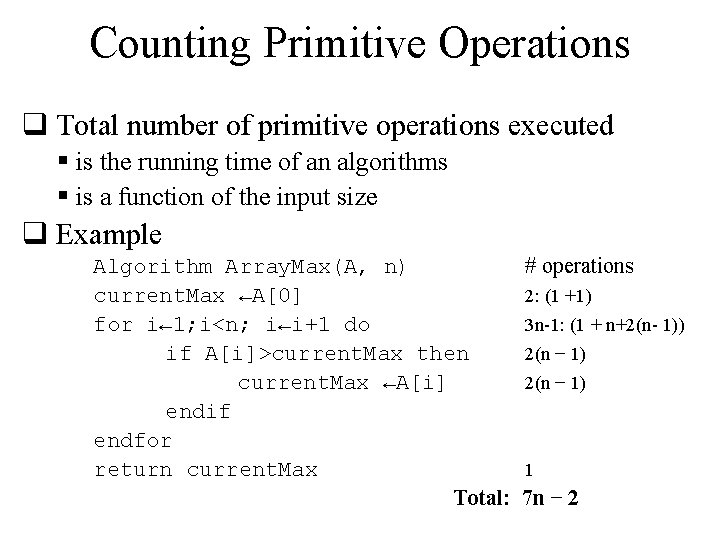

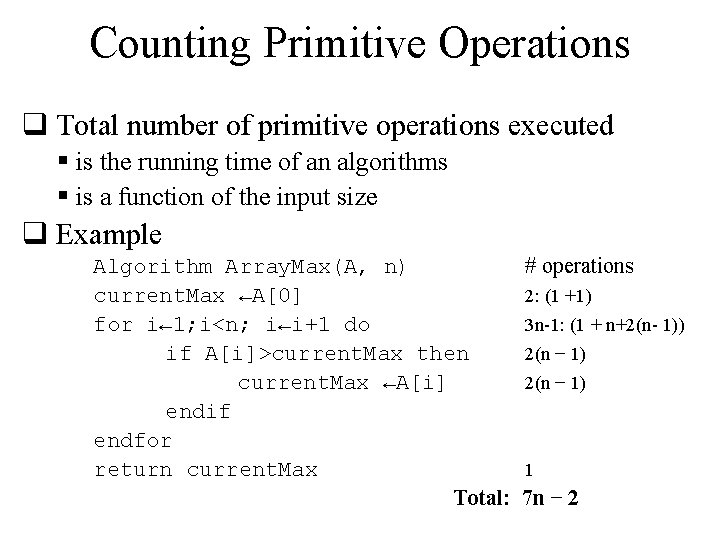

Counting Primitive Operations

- Total number of primitive operations executed
  - is the running time of an algorithm
  - is a function of the input size
- Example: Algorithm arrayMax(A, n), counted for the worst case

| Statement | # operations |
| --- | --- |
| currentMax ← A[0] | $2$ (that is, $1 + 1$) |
| for i ← 1; i < n; i ← i + 1 do | $3n - 1$ (that is, $1 + n + 2(n - 1)$) |
| if A[i] > currentMax then | $2(n - 1)$ |
| currentMax ← A[i] | $2(n - 1)$ |
| endif, endfor | $0$ |
| return currentMax | $1$ |
| Total | $7n - 2$ |
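The tally above can be reproduced mechanically. The following is a minimal Python sketch, not part of the original slides: the function name `array_max_with_count` and the `ops` counter are illustrative, and the costs follow the slide's convention that indexing, assignment, comparison, arithmetic and return each count as one operation.

```python
def array_max_with_count(values):
    """Return (maximum, primitive-operation count) for a non-empty list.

    Hypothetical helper: the counting convention mirrors the slide,
    it is not a measurement of real machine instructions.
    """
    n = len(values)
    ops = 0

    current_max = values[0]
    ops += 2                      # index A[0] + assignment

    i = 1
    ops += 1                      # loop initialisation i <- 1
    while True:
        ops += 1                  # loop test i < n (runs n times in total)
        if not i < n:
            break
        ops += 2                  # index A[i] + comparison with current_max
        if values[i] > current_max:
            current_max = values[i]
            ops += 2              # index A[i] + assignment
        i += 1
        ops += 2                  # addition + assignment for i <- i + 1

    ops += 1                      # return
    return current_max, ops
```

With a strictly increasing list (the worst case, because the assignment inside the `if` runs on every iteration) the count equals $7n - 2$. With the maximum in the first position the same convention gives $5n$ operations.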

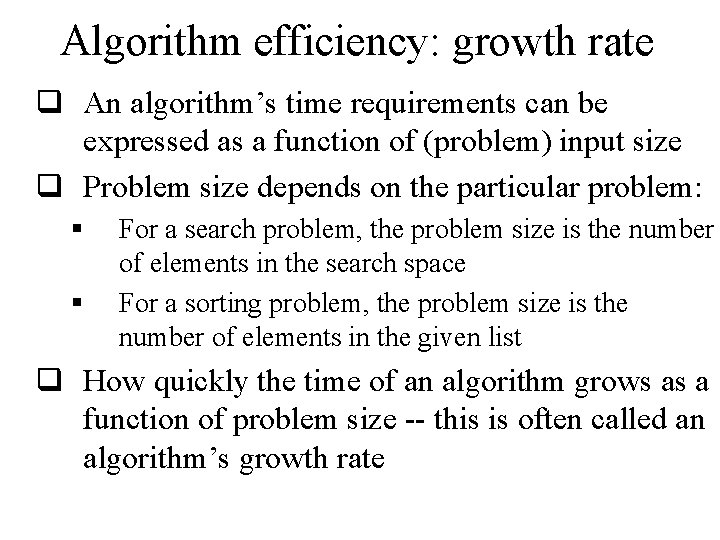

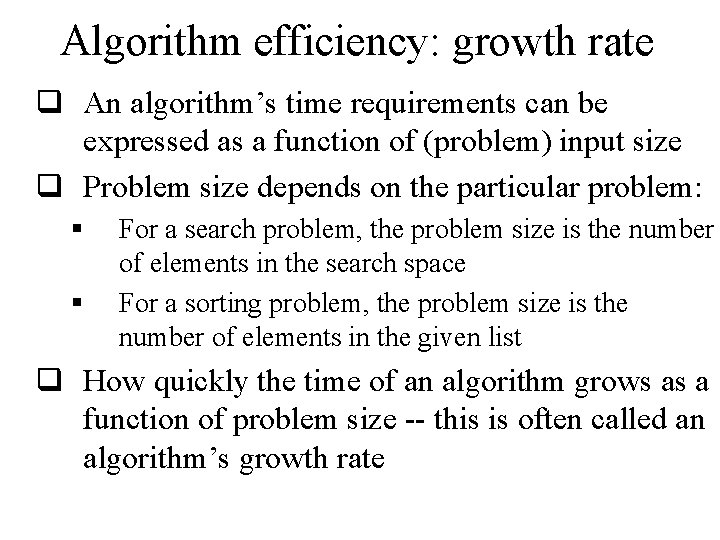

Algorithm efficiency: growth rate

- An algorithm’s time requirements can be expressed as a function of the (problem) input size
- Problem size depends on the particular problem:
  - For a search problem, the problem size is the number of elements in the search space
  - For a sorting problem, the problem size is the number of elements in the given list
- How quickly the running time of an algorithm grows as a function of problem size is often called the algorithm’s growth rate

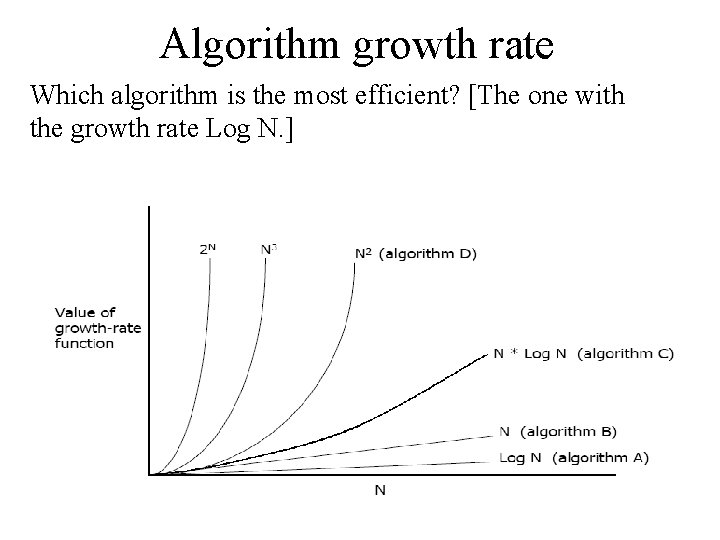

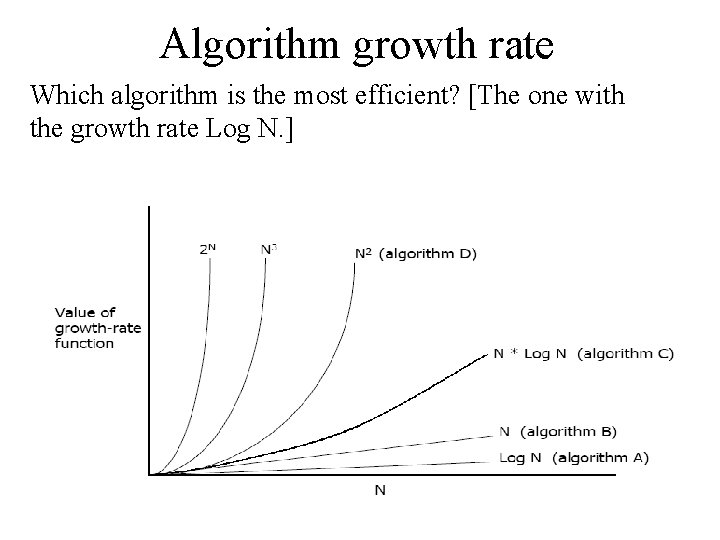

Algorithm growth rate

Which algorithm is the most efficient? [The one with the growth rate $\log N$.]

Algorithmic time complexity

- Rather than counting the exact number of primitive operations, we approximate the runtime of an algorithm as a function of data size: its time complexity
- Algorithms A, B, C and D (previous slide) belong to different complexity classes
- We’ll not cover complexity classes in detail; they will be covered in the Algorithm Analysis course, in a later semester
- We’ll briefly discuss seven basic functions which are often used in complexity analysis

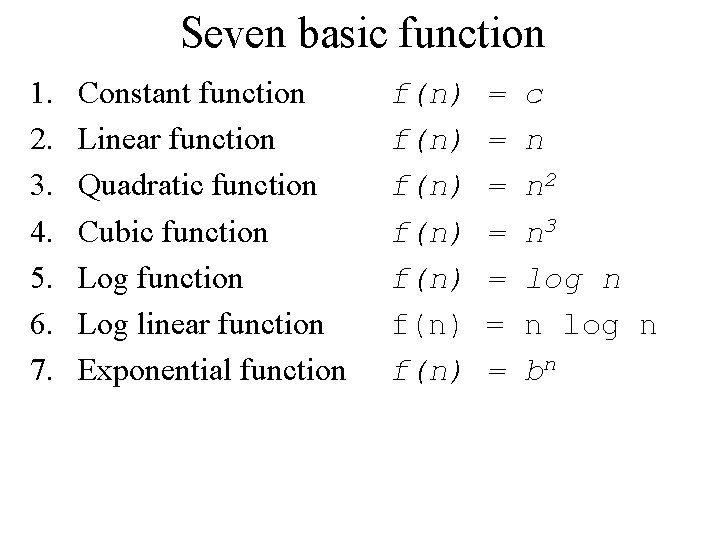

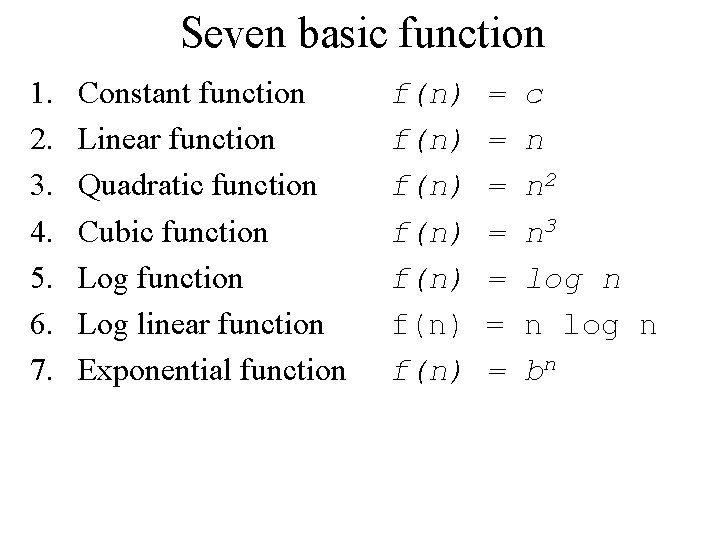

Seven basic functions

1. Constant function: $f(n) = c$
2. Linear function: $f(n) = n$
3. Quadratic function: $f(n) = n^2$
4. Cubic function: $f(n) = n^3$
5. Logarithmic function: $f(n) = \log n$
6. Log linear function: $f(n) = n \log n$
7. Exponential function: $f(n) = b^n$

Constant function

- For a given argument/variable $n$, the function always returns a constant value
- It is independent of the variable $n$
- It is commonly used to characterise the number of primitive operations in a step whose cost does not depend on the input size, such as a single assignment or comparison
- The most common constant function is $g(n) = 1$
- Any constant function $f(n) = c$ can be expressed as $f(n) = c \cdot g(n)$

Linear function

- For a given argument/variable $n$, the function always returns $n$, i.e. $f(n) = n$
- This function arises in algorithm analysis any time we have to do a single basic operation over each of $n$ elements
  - For example, finding the min/max value in a list of values
  - The time complexity of the linear/sequential search algorithm is linear

Quadratic function

- For a given argument/variable $n$, the function always returns the square of $n$, i.e. $f(n) = n^2$
- This function commonly arises in algorithm analysis when we use nested loops
  - The outer loop performs primitive operations in linear time; for each of its iterations, the inner loop also performs primitive operations in linear time
  - For example, sorting an array in ascending/descending order using Bubble Sort (more later on)
  - The time complexity of many simple algorithms, such as elementary sorting algorithms, is quadratic
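To see where the quadratic term comes from, the sketch below counts how often the body of a nested loop runs. It assumes the usual Bubble Sort loop shape (an outer loop of $n - 1$ passes and an inner loop that shrinks by one each pass), which the slides only introduce later; the function name is illustrative.

```python
def count_pair_comparisons(n):
    """Count inner-loop executions for a bubble-sort-style nested loop.

    Assumed loop shape, not taken from the slides: n - 1 passes,
    each pass comparing one fewer adjacent pair than the previous one.
    """
    count = 0
    for i in range(n - 1):            # outer loop: n - 1 passes
        for j in range(n - 1 - i):    # inner loop: n - 1 - i comparisons
            count += 1                # stands in for one comparison
    return count
```

The count works out to $n(n - 1)/2 = \tfrac{1}{2}n^2 - \tfrac{1}{2}n$, so the $n^2$ term dominates as $n$ grows.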

Cubic function

- For a given argument/variable $n$, the function always returns $n \times n \times n$, i.e. $f(n) = n^3$
- This function appears less often in algorithm analysis than the others
  - Rather, the more general class "polynomial" is often used
    - $f(n) = a_0 + a_1 n + a_2 n^2 + a_3 n^3 + \dots + a_d n^d$

Logarithmic function

- For a given argument/variable $n$, the function always returns the logarithm of $n$
- Generally, it is written as $f(n) = \log_b n$, where $b$ is the base, which is often 2
- This function is also very common in algorithm analysis
- We normally approximate $\log_b n$ by a whole number $x$: the number of times $n$ must be divided by $b$ until the result is less than or equal to 1
  - $\log_3 27$ is 3, since $27/3/3/3 = 1$
  - $\log_4 64$ is 3, since $64/4/4/4 = 1$
  - $\log_2 12$ is approximately 4, since $12/2/2/2/2 = 0.75 \le 1$
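The repeated-division rule can be written directly as a loop. This is a small illustrative sketch (the name `approx_log` is made up here); for whole numbers $n \ge 1$ and $b \ge 2$ the result is $\log_b n$ rounded up.

```python
def approx_log(n, b):
    """Count how many times n must be divided by b until the result is <= 1."""
    steps = 0
    value = float(n)
    while value > 1:
        value /= b      # one division by the base
        steps += 1
    return steps


# The three examples from the slide.
print(approx_log(27, 3), approx_log(64, 4), approx_log(12, 2))
```

Floating-point division is used for simplicity, so very large inputs with bases other than 2 could be affected by rounding.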

Log linear function

- For a given argument/variable $n$, the function always returns $n \log n$
- Generally, it is written as $f(n) = n \log_b n$, where $b$ is the base, which is often 2
- This function is also common in algorithm analysis
- The log linear function grows faster than the linear and logarithmic functions, but slower than the quadratic function

Exponential function

- For a given argument/variable $n$, the function always returns $b^n$, where $b$ is the base and $n$ is the power (exponent)
- This function is also common in algorithm analysis
- For $b > 1$, the exponential function eventually grows faster than all of the other six functions
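A quick way to get a feel for the seven functions is to evaluate them side by side. The sketch below assumes $c = 1$ for the constant function and base 2 for both the logarithm and the exponential; these choices and the names `FUNCTIONS` and `evaluate_all` are illustrative, not from the slides.

```python
import math

# Assumed parameters: c = 1, logarithms to base 2, exponential base b = 2.
FUNCTIONS = [
    ("constant", lambda n: 1),
    ("log n", lambda n: math.log2(n)),
    ("n", lambda n: n),
    ("n log n", lambda n: n * math.log2(n)),
    ("n^2", lambda n: n ** 2),
    ("n^3", lambda n: n ** 3),
    ("2^n", lambda n: 2 ** n),
]


def evaluate_all(n):
    """Return a dict mapping each function's name to its value at n."""
    return {name: f(n) for name, f in FUNCTIONS}


if __name__ == "__main__":
    for n in (8, 16, 32, 64):
        print(n, [round(v) for v in evaluate_all(n).values()])
```

For small inputs the ordering is not yet settled: at $n = 8$ the cubic value (512) is still larger than $2^8 = 256$. With base 2, the exponential overtakes the cubic from $n = 10$ onwards.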

Algorithmic runtime

- Worst-case running time
  - measures the maximum number of primitive operations executed
  - The worst case can occur fairly often
    - e.g. in searching a database for a particular piece of information that is not present
- Best-case running time
  - measures the minimum number of primitive operations executed
    - Finding a value in a list, where the value is at the first position
    - Sorting a list of values, where the values are already in the desired order
- Average-case running time
  - the efficiency averaged over all possible inputs
  - it may be difficult to define what "average" means
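The best and worst cases can be made concrete with a linear search that reports how many comparisons it used. This is an illustrative sketch; the function name and the returned pair are assumptions, not something defined in the slides.

```python
def linear_search(values, target):
    """Return (index, comparisons); index is -1 when target is absent."""
    comparisons = 0
    for index, value in enumerate(values):
        comparisons += 1              # one comparison per element inspected
        if value == target:
            return index, comparisons
    return -1, comparisons


data = list(range(10))
print(linear_search(data, 0))    # best case: target at the first position
print(linear_search(data, 42))   # worst case: target not present
```

For a list of $n$ elements the best case uses 1 comparison and the worst case uses $n$, which happens both when the target is last and when it is missing.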

Complexity classes

- Suppose the execution time of algorithm A is a quadratic function of $n$ (i.e. $an^2 + bn + c$)
- Suppose the execution time of algorithm B is a linear function of $n$ (i.e. $an + b$)
- Suppose the execution time of algorithm C is an exponential function of $n$ (i.e. $a \cdot 2^n$)
- For large problems, the higher-order terms dominate the rest
- These three algorithms belong to three different "complexity classes"

Big-O and function growth rate

- We use a convention, O-notation (also called Big-Oh), to represent different complexity classes
- The statement "$f(n)$ is $O(g(n))$" means that the growth rate of $f(n)$ is no more than the growth rate of $g(n)$
- $g(n)$ is an upper bound on $f(n)$, up to a constant factor, i.e. it bounds the maximum number of primitive operations
- We can use the big-O notation to rank functions according to their growth rate

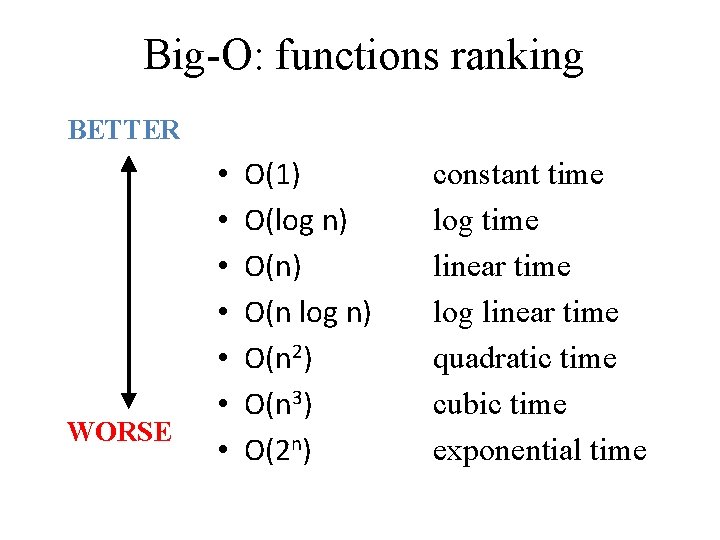

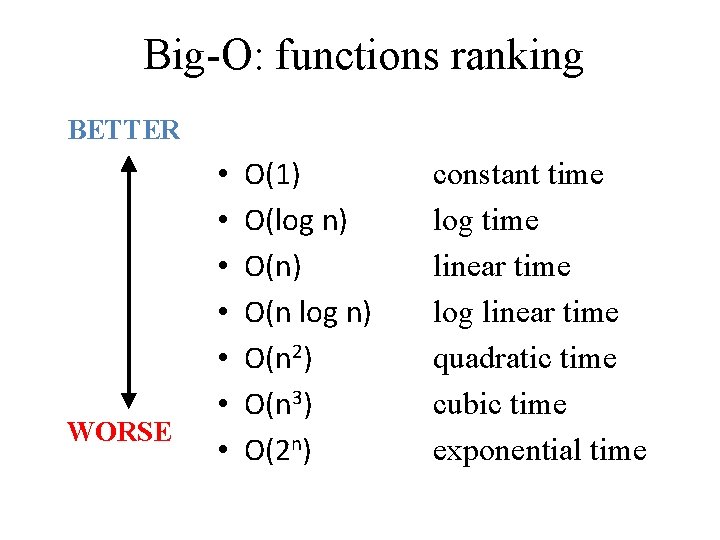

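Big-O: functions ranking

Ranked from better (top) to worse (bottom):

| Big-O | Name |
| --- | --- |
| $O(1)$ | constant time |
| $O(\log n)$ | log time |
| $O(n)$ | linear time |
| $O(n \log n)$ | log linear time |
| $O(n^2)$ | quadratic time |
| $O(n^3)$ | cubic time |
| $O(2^n)$ | exponential time |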

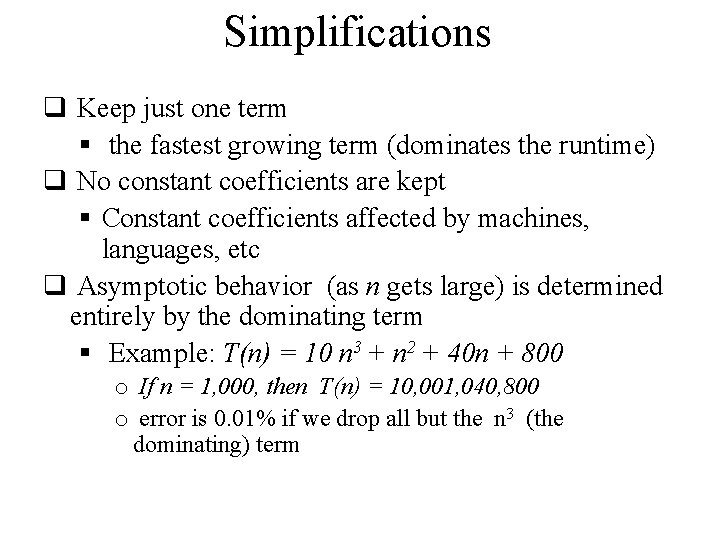

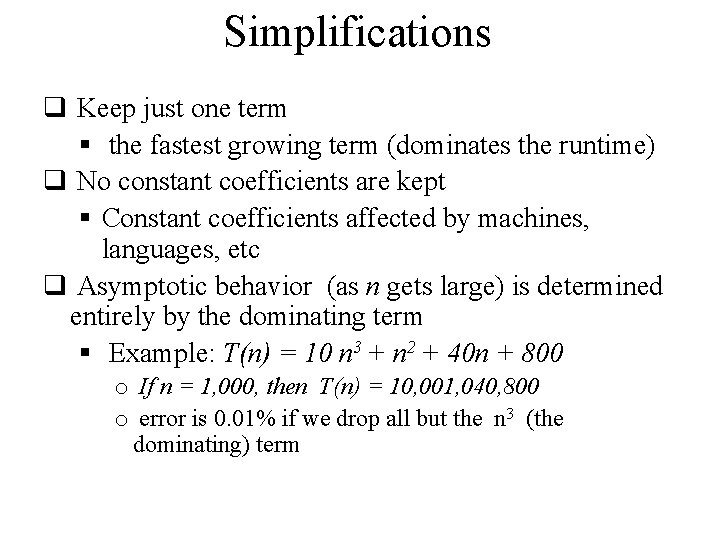

Simplifications

- Keep just one term
  - the fastest growing term (it dominates the runtime)
- No constant coefficients are kept
  - Constant coefficients are affected by machines, languages, etc.
- Asymptotic behavior (as $n$ gets large) is determined entirely by the dominating term
  - Example: $T(n) = 10n^3 + n^2 + 40n + 800$
    - If $n$ = 1,000, then $T(n)$ = 10,001,040,800
    - The error is about 0.01% if we drop all but the $n^3$ (the dominating) term
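The arithmetic in this example can be checked with a few lines of Python. The function names below are illustrative; the polynomial is the one from the slide.

```python
def total_ops(n):
    """T(n) = 10 n^3 + n^2 + 40 n + 800, as given on the slide."""
    return 10 * n**3 + n**2 + 40 * n + 800


def relative_error(n):
    """Fraction of T(n) lost when only the dominating term 10 n^3 is kept."""
    dominant = 10 * n**3
    return (total_ops(n) - dominant) / total_ops(n)


print(total_ops(1000))                  # 10001040800
print(f"{relative_error(1000):.4%}")    # about 0.0104%
```

The lower-order terms contribute 1,040,800 out of 10,001,040,800, which is where the figure of roughly 0.01% comes from.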

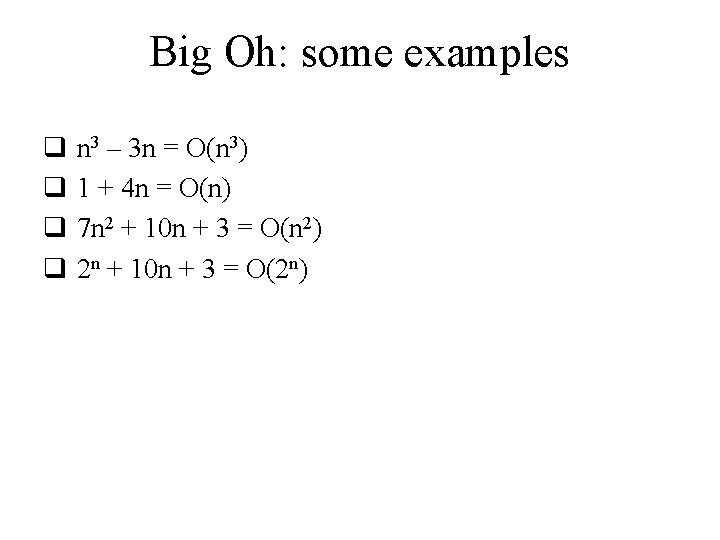

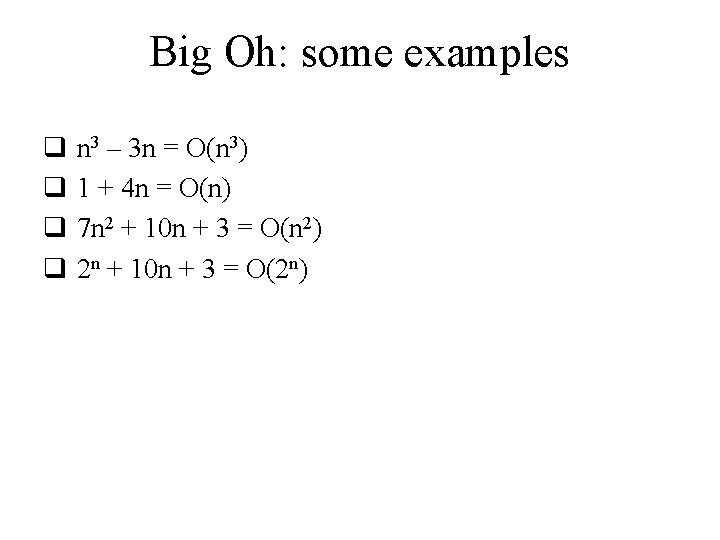

Big Oh: some examples

- $n^3 - 3n = O(n^3)$
- $1 + 4n = O(n)$
- $7n^2 + 10n + 3 = O(n^2)$
- $2^n + 10n + 3 = O(2^n)$

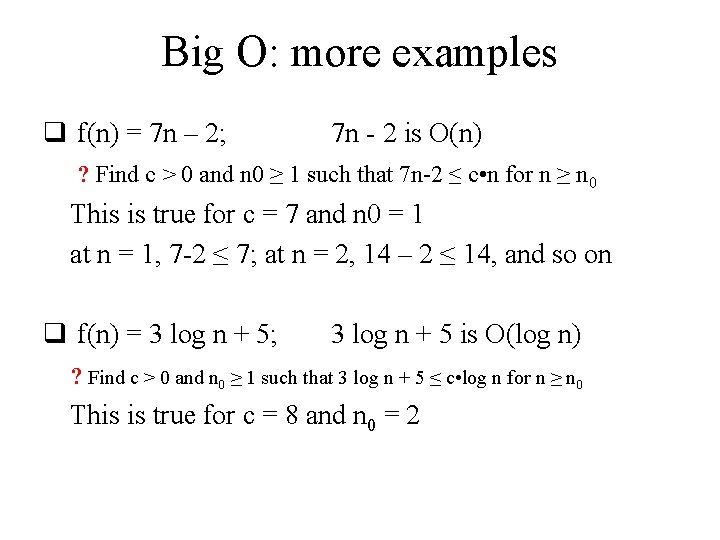

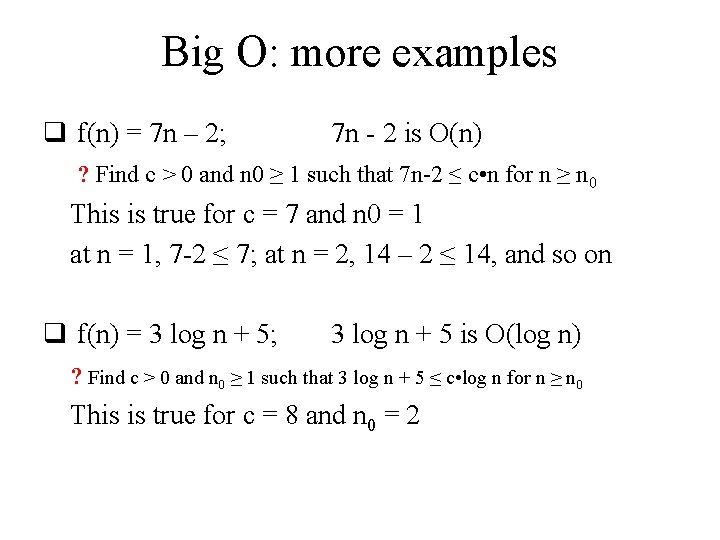

Big O: more examples

- $f(n) = 7n - 2$; is $7n - 2$ $O(n)$?
  - Find $c > 0$ and $n_0 \ge 1$ such that $7n - 2 \le c \cdot n$ for $n \ge n_0$
  - This is true for $c = 7$ and $n_0 = 1$: at $n = 1$, $7 - 2 \le 7$; at $n = 2$, $14 - 2 \le 14$; and so on
- $f(n) = 3 \log n + 5$; is $3 \log n + 5$ $O(\log n)$?
  - Find $c > 0$ and $n_0 \ge 1$ such that $3 \log n + 5 \le c \cdot \log n$ for $n \ge n_0$
  - This is true for $c = 8$ and $n_0 = 2$: with logs to base 2, $\log n \ge 1$ for $n \ge 2$, so $5 \le 5 \log n$
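The chosen constants can be sanity-checked numerically. The sketch below tests the inequality $f(n) \le c \cdot g(n)$ over a finite range only, so it can expose a bad choice of $c$ or $n_0$ but is not a proof. All names are illustrative, and logarithms are assumed to be base 2.

```python
import math


def f_linear(n):
    return 7 * n - 2


def g_linear(n):
    return n


def f_log(n):
    return 3 * math.log2(n) + 5


def g_log(n):
    return math.log2(n)


def holds_for_range(f, g, c, n0, n_max=10_000):
    """Check f(n) <= c * g(n) for every integer n with n0 <= n <= n_max."""
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


print(holds_for_range(f_linear, g_linear, c=7, n0=1))   # True
print(holds_for_range(f_log, g_log, c=8, n0=2))         # True
print(holds_for_range(f_log, g_log, c=8, n0=1))         # False: log 1 = 0
```

The last call shows why $n_0 = 2$ is needed in the second example: at $n = 1$ the right-hand side is 0, while the left-hand side is 5.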

Interpreting Big-O

- $f(n)$ is less than or equal to $g(n)$ up to a constant factor and in the asymptotic sense, as $n$ approaches infinity ($n \to \infty$)
- The big-O notation gives an upper bound on the growth rate of a function
- The big-O notation allows us to
  - ignore constant factors and lower-order terms
  - focus on the main components of a function that affect the growth
- Ignoring constants and lower-order terms does not change the "complexity class"; this is very important to remember

Asymptotic algorithm analysis

- Determines the running time in big-O notation
- Asymptotic analysis
  - find the worst-case number of primitive operations executed as a function of the input size
  - express this function with big-O notation
- Example:
  - algorithm arrayMax executes at most $7n - 2$ primitive operations
  - algorithm arrayMax runs in $O(n)$ time

Practice

Express the following functions in terms of Big-O notation ($a$, $b$ and $c$ are constants):

1. $f(n) = an^2 + bn + c$
2. f(n) = 2 n + n log n + c
3. $f(n) = n \log n + b \log n + c$

Outlook

Next week, we’ll discuss arrays and searching algorithms.