Algorithm Analysis Techniques

- Slides: 15

To review the following:

- Order analysis
- Summation techniques
- Recursion
- Recurrences

A running example: given an array of integers (positive, zero, or negative), find a sequence of contiguous locations whose sum is the largest among all such sequences.

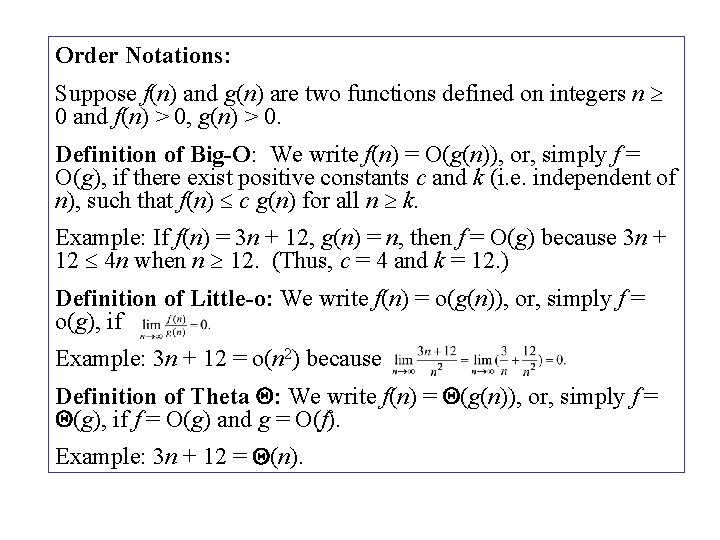

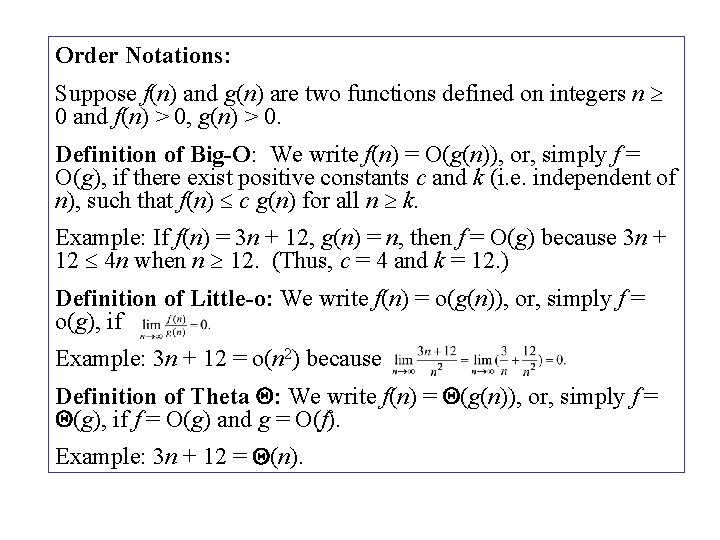

Order Notations: Suppose $f(n)$ and $g(n)$ are two functions defined on integers $n \ge 0$, with $f(n) > 0$ and $g(n) > 0$.

Definition of Big-O: We write $f(n) = O(g(n))$, or simply $f = O(g)$, if there exist positive constants $c$ and $k$ (i.e., independent of $n$) such that $f(n) \le c\,g(n)$ for all $n \ge k$. Example: if $f(n) = 3n + 12$ and $g(n) = n$, then $f = O(g)$ because $3n + 12 \le 4n$ when $n \ge 12$. (Thus, $c = 4$ and $k = 12$.)

Definition of Little-o: We write $f(n) = o(g(n))$, or simply $f = o(g)$, if $\lim_{n\to\infty} f(n)/g(n) = 0$. Example: $3n + 12 = o(n^2)$ because $\lim_{n\to\infty} (3n + 12)/n^2 = 0$.

Definition of Theta: We write $f(n) = \Theta(g(n))$, or simply $f = \Theta(g)$, if $f = O(g)$ and $g = O(f)$. Example: $3n + 12 = \Theta(n)$.

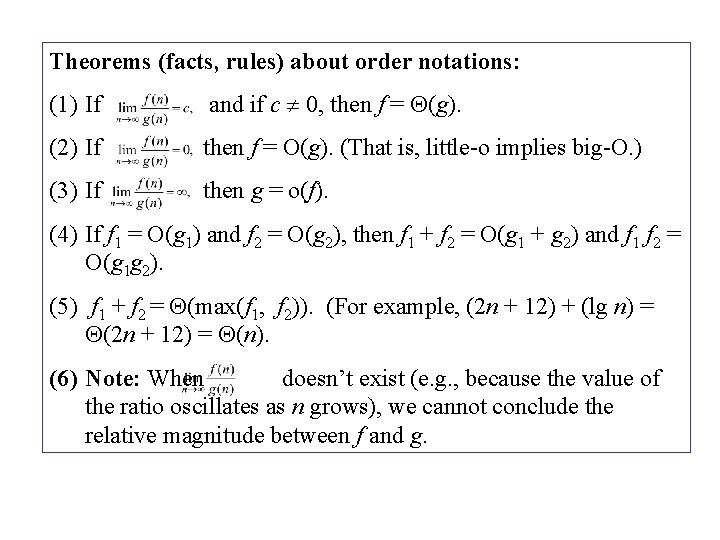

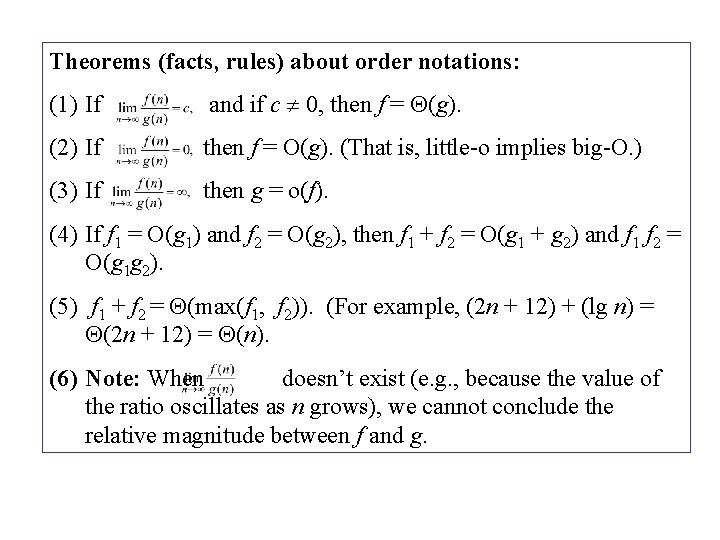
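A finite computation cannot prove an asymptotic claim, but it can make the witnesses concrete. The sketch below uses only the example $f(n) = 3n + 12$; the sampled ranges are arbitrary choices. It checks the Big-O witness $c = 4$, $k = 12$ and prints the little-o ratio $f(n)/n^2$ for growing $n$.

```python
from fractions import Fraction

def f(n: int) -> int:
    """f(n) = 3n + 12, the running example from the definitions."""
    return 3 * n + 12

# Big-O witness: c = 4, k = 12 means f(n) <= 4n for every n >= 12 (sampled here).
C, K = 4, 12
big_o_holds = all(f(n) <= C * n for n in range(K, 10_000))

# Just below k the inequality fails: f(11) = 45 > 44 = 4 * 11.
fails_below_k = f(K - 1) > C * (K - 1)

# Little-o against n^2: the exact ratio f(n) / n^2 shrinks toward 0.
ratios = [Fraction(f(n), n * n) for n in (10, 100, 1_000, 10_000)]

print(big_o_holds, fails_below_k)
print([float(r) for r in ratios])
```

The failure at $n = 11$ shows why the definition only requires the bound from $k$ onward.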

Theorems (facts, rules) about order notations:

1. If $\lim_{n\to\infty} f(n)/g(n) = c$ for some constant $c \ne 0$, then $f = \Theta(g)$.
2. If $\lim_{n\to\infty} f(n)/g(n) = 0$, then $f = O(g)$. (That is, little-o implies big-O.)
3. If $\lim_{n\to\infty} f(n)/g(n) = \infty$, then $g = o(f)$.
4. If $f_1 = O(g_1)$ and $f_2 = O(g_2)$, then $f_1 + f_2 = O(g_1 + g_2)$ and $f_1 f_2 = O(g_1 g_2)$.
5. $f_1 + f_2 = \Theta(\max(f_1, f_2))$. (For example, $(2n + 12) + \lg n = \Theta(2n + 12) = \Theta(n)$.)
6. Note: when $\lim_{n\to\infty} f(n)/g(n)$ does not exist (e.g., because the value of the ratio oscillates as $n$ grows), we cannot conclude the relative magnitude of $f$ and $g$.

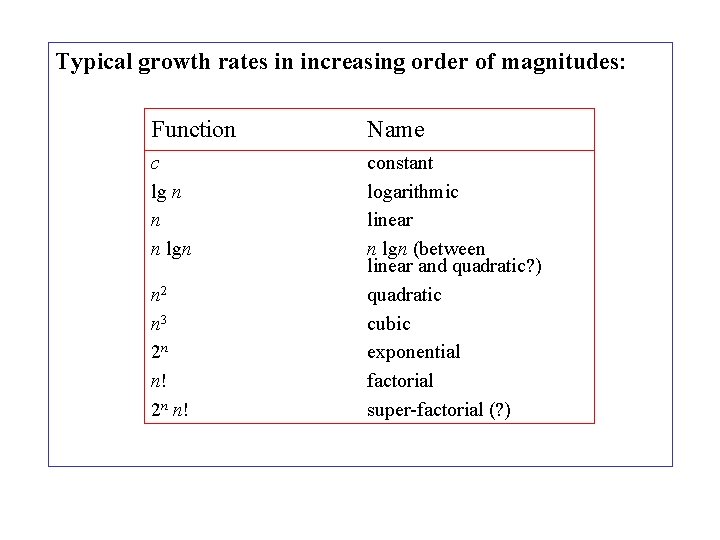

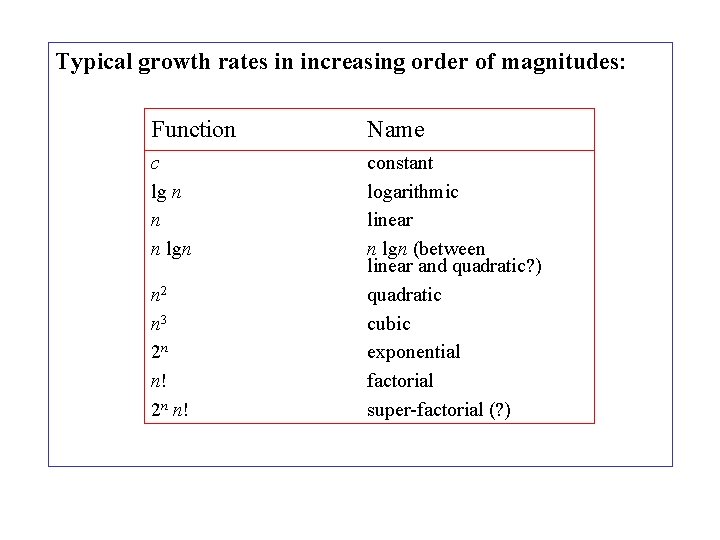
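Rule (1) can be illustrated numerically on the example in rule (5): with $g(n) = n$, the ratio $f(n)/g(n)$ should approach the nonzero constant 2, so $f = \Theta(n)$. This is only a sketch; sampled ratios suggest a limit but do not establish it.

```python
import math

def f(n: int) -> float:
    # The example from rule (5): (2n + 12) + lg n.
    return (2 * n + 12) + math.log2(n)

def ratio(n: int) -> float:
    # Rule (1) with g(n) = n: the ratio should settle near a nonzero constant (here 2).
    return f(n) / n

samples = {n: ratio(n) for n in (10, 10**3, 10**6, 10**9)}
for n, r in samples.items():
    print(f"n = {n:>10}: f(n)/n = {r:.6f}")
```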

Typical growth rates in increasing order of magnitude:

| Function | Name |
|---|---|
| $c$ | constant |
| $\lg n$ | logarithmic |
| $n$ | linear |
| $n \lg n$ | $n \lg n$ (between linear and quadratic) |
| $n^2$ | quadratic |
| $n^3$ | cubic |
| $2^n$ | exponential |
| $n!$ | factorial |
|  | super-factorial (?) |

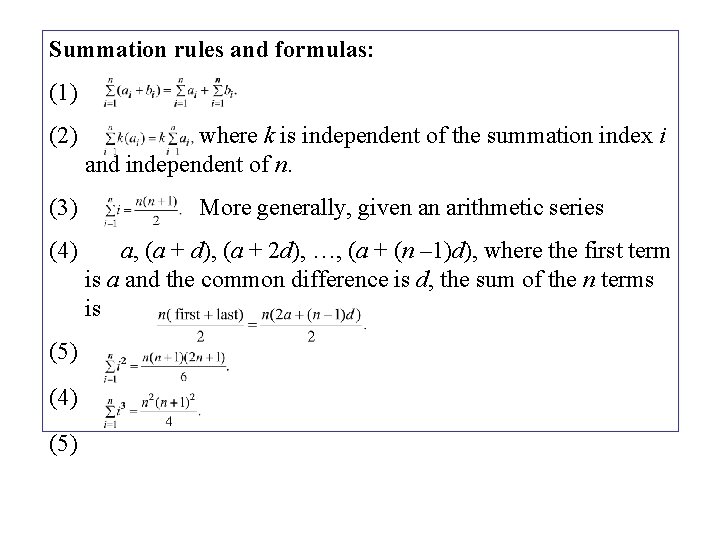

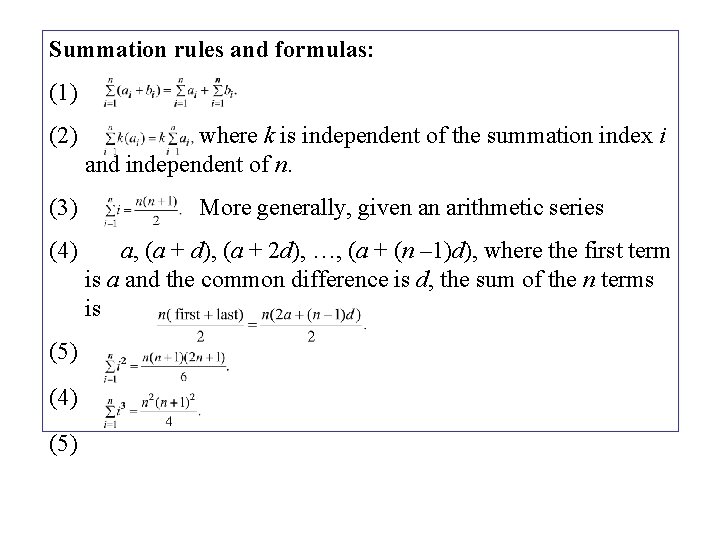
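To see the table's ordering in numbers, the sketch below evaluates each non-constant function at a few sizes; the list name `GROWTH` and the helper `values_at` are illustrative. The ordering is asymptotic, so it need not hold for every small $n$.

```python
import math

# Non-constant growth functions from the table, in increasing order of magnitude.
GROWTH = [
    ("lg n", lambda n: math.log2(n)),
    ("n", lambda n: n),
    ("n lg n", lambda n: n * math.log2(n)),
    ("n^2", lambda n: n ** 2),
    ("n^3", lambda n: n ** 3),
    ("2^n", lambda n: 2 ** n),
    ("n!", lambda n: math.factorial(n)),
]

def values_at(n: int) -> list[float]:
    """Evaluate every function in GROWTH at n, in table order."""
    return [float(fn(n)) for _, fn in GROWTH]

for n in (16, 32):
    row = ", ".join(f"{name} = {fn(n):.3g}" for name, fn in GROWTH)
    print(f"n = {n}: {row}")
```

For example, at $n = 4$ the exponential term $2^4 = 16$ is still below the cubic term $4^3 = 64$; by $n = 16$ every entry is larger than the one before it.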

Summation rules and formulas (1) to (5), in which $k$ is independent of the summation index $i$ and independent of $n$. More generally, given an arithmetic series $a, (a + d), (a + 2d), \ldots, (a + (n-1)d)$, where the first term is $a$ and the common difference is $d$, the sum of the $n$ terms is

$$\sum_{i=0}^{n-1} (a + i\,d) = n\,a + \frac{n(n-1)}{2}\,d = \frac{n\,\bigl(2a + (n-1)d\bigr)}{2}.$$

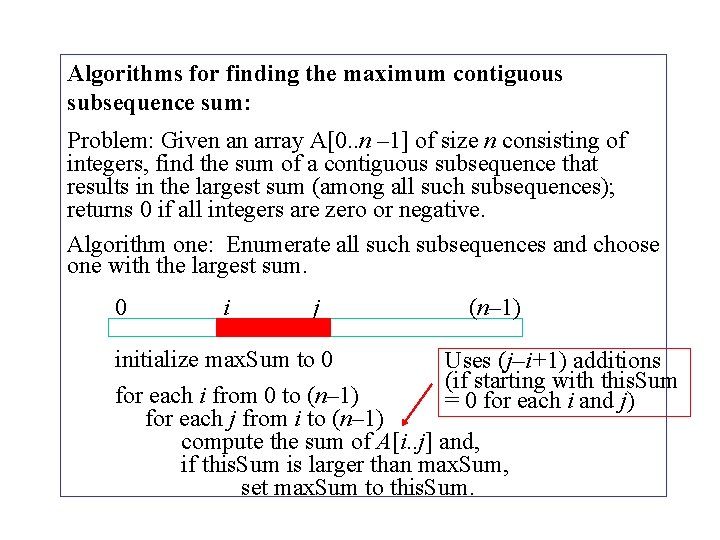

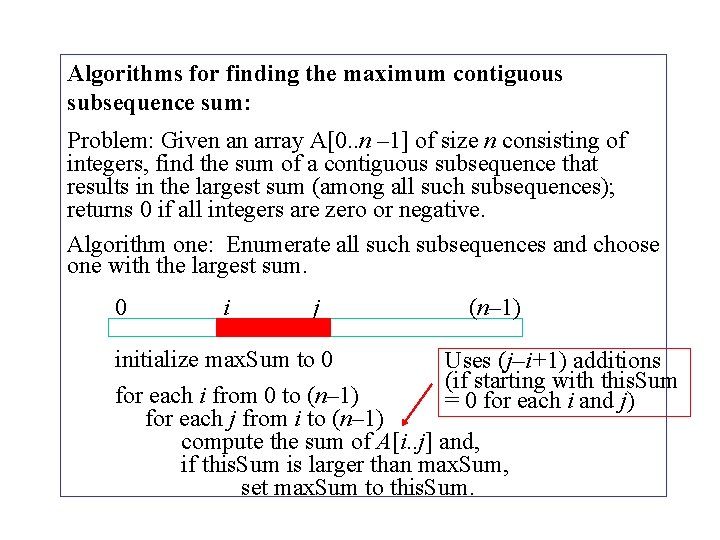
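A closed form is easy to check against direct summation. In this sketch the function names are hypothetical; `fractions.Fraction` keeps the arithmetic exact, so the two results can be compared for equality.

```python
from fractions import Fraction

def arithmetic_sum_closed(a: Fraction, d: Fraction, n: int) -> Fraction:
    """Closed form n(2a + (n - 1)d) / 2 for the terms a, a + d, ..., a + (n - 1)d."""
    return Fraction(n) * (2 * a + (n - 1) * d) / 2

def arithmetic_sum_loop(a: Fraction, d: Fraction, n: int) -> Fraction:
    """Direct summation of the same n terms, for comparison."""
    return sum((a + i * d for i in range(n)), Fraction(0))

# Special case a = 1, d = 1: 1 + 2 + ... + n = n(n + 1)/2.
print(arithmetic_sum_closed(Fraction(1), Fraction(1), 100))  # 5050
```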

Algorithms for finding the maximum contiguous subsequence sum:

Problem: Given an array $A[0..n-1]$ of $n$ integers, find the largest sum of a contiguous subsequence (among all such subsequences); return 0 if all integers are zero or negative.

Algorithm One: Enumerate all such subsequences $A[i..j]$, $0 \le i \le j \le n-1$, and choose one with the largest sum.

- initialize maxSum to 0
- for each i from 0 to (n - 1):
  - for each j from i to (n - 1):
    - compute thisSum, the sum of A[i..j] (this uses (j - i + 1) additions if thisSum starts at 0 for each pair i, j);
    - if thisSum is larger than maxSum, set maxSum to thisSum.

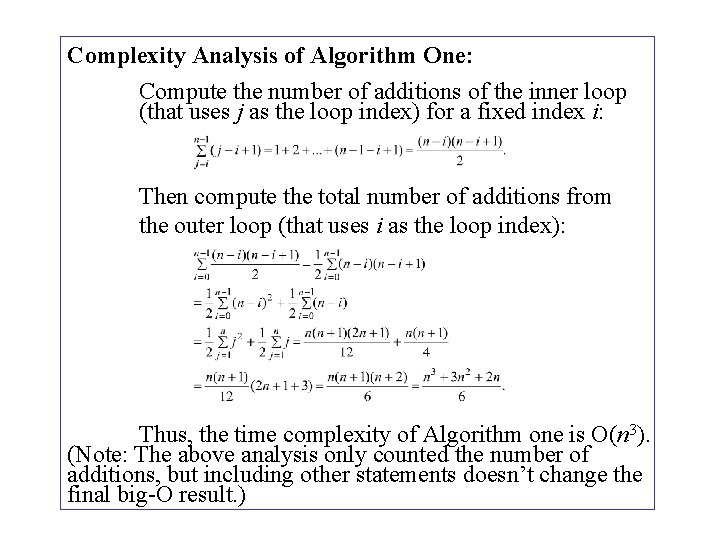

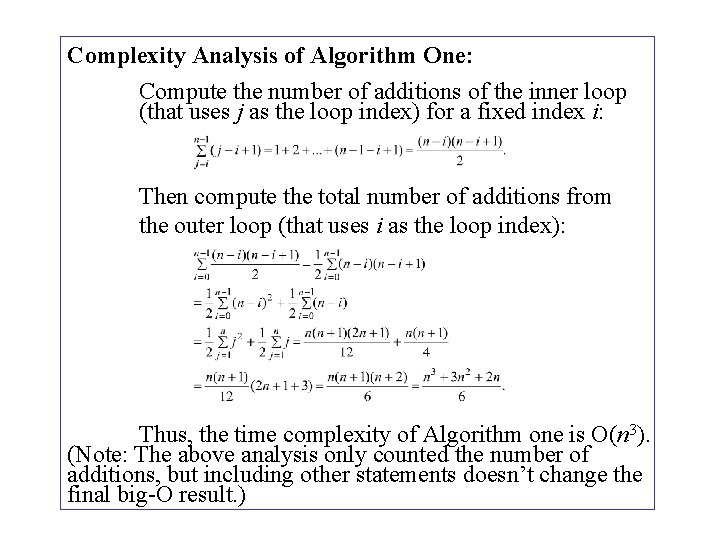
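A minimal Python rendering of Algorithm One, assuming the input is a Python list of `int`s; the function name is illustrative, and `max_sum` / `this_sum` play the roles of maxSum / thisSum. The innermost loop recomputes each sum from scratch, which is exactly what makes it cubic.

```python
def max_subsequence_sum_cubic(a: list[int]) -> int:
    """Algorithm One: try every A[i..j] and sum it from scratch (O(n^3))."""
    n = len(a)
    max_sum = 0  # answer is 0 if every value is zero or negative
    for i in range(n):
        for j in range(i, n):
            this_sum = 0
            for k in range(i, j + 1):  # j - i + 1 additions
                this_sum += a[k]
            if this_sum > max_sum:
                max_sum = this_sum
    return max_sum

print(max_subsequence_sum_cubic([-2, 11, -4, 13, -5, -2]))  # 20
```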

Complexity Analysis of Algorithm One: First compute the number of additions performed by the inner loop (the one with index j) for a fixed index i:

$$\sum_{j=i}^{n-1} (j - i + 1) = \sum_{t=1}^{n-i} t = \frac{(n-i)(n-i+1)}{2}.$$

Then compute the total number of additions over the outer loop (index i), substituting $m = n - i$:

$$\sum_{i=0}^{n-1} \frac{(n-i)(n-i+1)}{2} = \sum_{m=1}^{n} \frac{m(m+1)}{2} = \frac{n(n+1)(n+2)}{6}.$$

Thus, the time complexity of Algorithm One is $O(n^3)$. (Note: the analysis above counts only additions, but including the other statements does not change the final big-O result.)

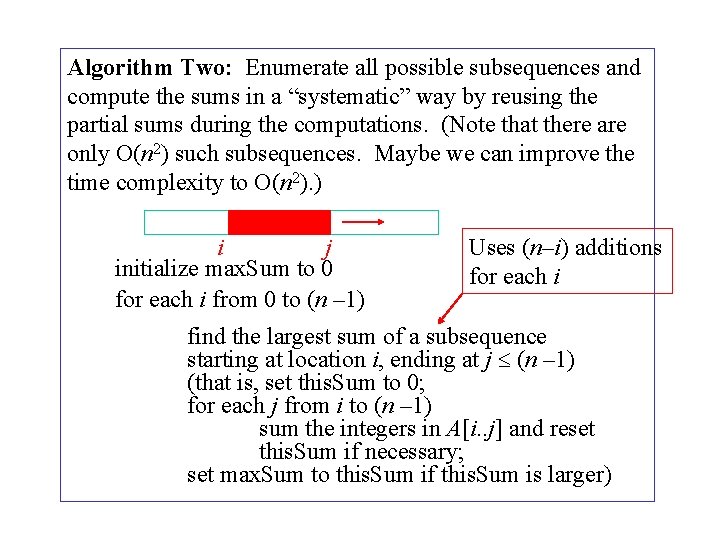

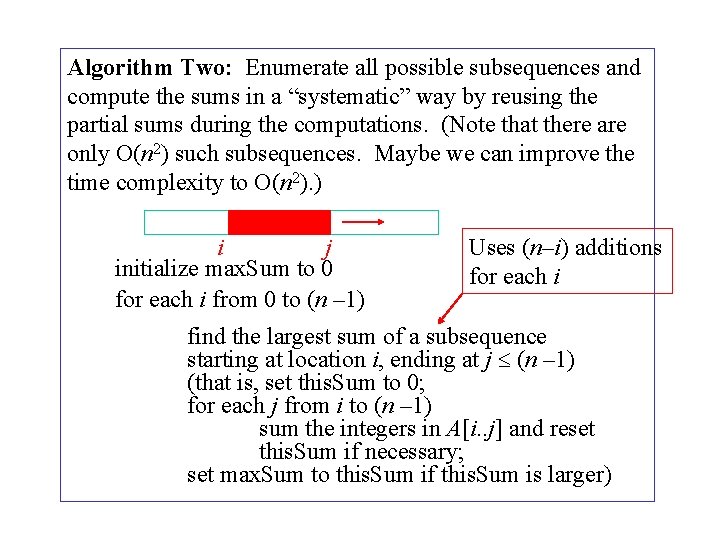
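The addition count can be checked against the closed form $n(n+1)(n+2)/6$ by simulating only the loop structure of Algorithm One (a sketch; the function name is hypothetical). The count depends on $n$ alone, not on the array values.

```python
def count_additions_algorithm_one(n: int) -> int:
    """Count the additions Algorithm One performs on an array of size n."""
    additions = 0
    for i in range(n):
        for j in range(i, n):
            additions += j - i + 1  # summing A[i..j] starting from thisSum = 0
    return additions

for n in (1, 2, 10, 100):
    closed_form = n * (n + 1) * (n + 2) // 6
    print(n, count_additions_algorithm_one(n), closed_form)
```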

Algorithm Two: Enumerate all possible subsequences, but compute their sums systematically by reusing partial sums. (Note that there are only $O(n^2)$ such subsequences, so perhaps the time complexity can be improved to $O(n^2)$.)

- initialize maxSum to 0
- for each i from 0 to (n - 1) (this uses (n - i) additions for each i):
  - find the largest sum of a subsequence that starts at location i and ends at some $j \le n - 1$. That is, set thisSum to 0; for each j from i to (n - 1), add A[j] to thisSum, so that thisSum becomes the sum of A[i..j], and set maxSum to thisSum if thisSum is larger.

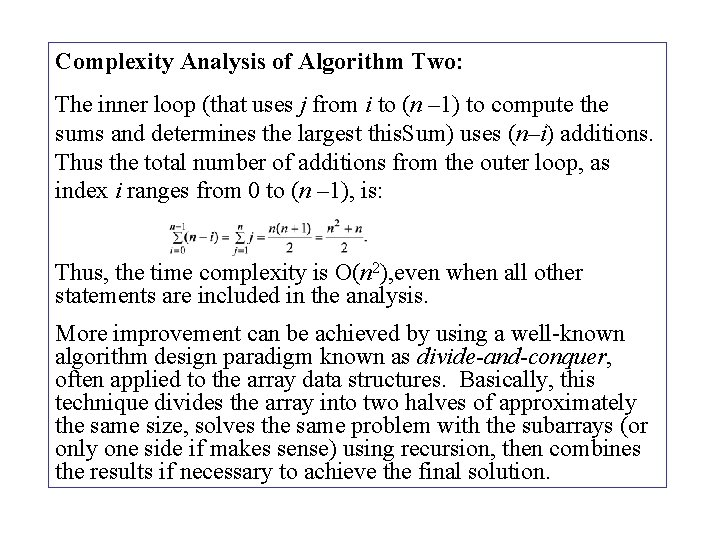

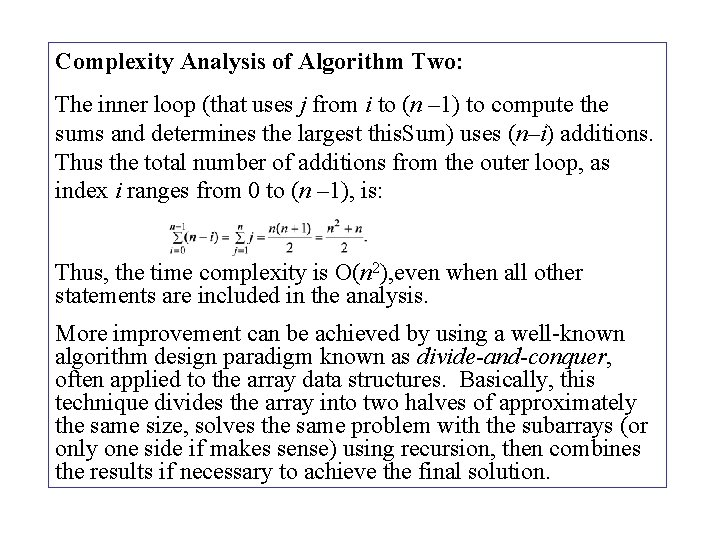
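Compared with Algorithm One, the innermost summation loop disappears: `this_sum` carries the sum of A[i..j-1] forward, so one addition per j suffices. This is again a sketch with an illustrative function name.

```python
def max_subsequence_sum_quadratic(a: list[int]) -> int:
    """Algorithm Two: reuse the running sum of A[i..j-1] to get A[i..j] (O(n^2))."""
    n = len(a)
    max_sum = 0
    for i in range(n):
        this_sum = 0
        for j in range(i, n):
            this_sum += a[j]  # one addition extends A[i..j-1] to A[i..j]
            if this_sum > max_sum:
                max_sum = this_sum
    return max_sum

print(max_subsequence_sum_quadratic([-2, 11, -4, 13, -5, -2]))  # 20
```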

Complexity Analysis of Algorithm Two: The inner loop (with j running from i to (n - 1), computing the sums and tracking the largest thisSum) uses (n - i) additions. Thus, the total number of additions over the outer loop, as i ranges from 0 to (n - 1), is

$$\sum_{i=0}^{n-1} (n - i) = \sum_{m=1}^{n} m = \frac{n(n+1)}{2}.$$

Thus, the time complexity is $O(n^2)$, even when all other statements are included in the analysis.

Further improvement can be achieved with a well-known algorithm design paradigm called divide-and-conquer, often applied to arrays. Basically, this technique divides the array into two halves of approximately the same size, solves the same problem on the subarrays recursively (or on only one side, when that suffices), and then combines the results, if necessary, to obtain the final solution.

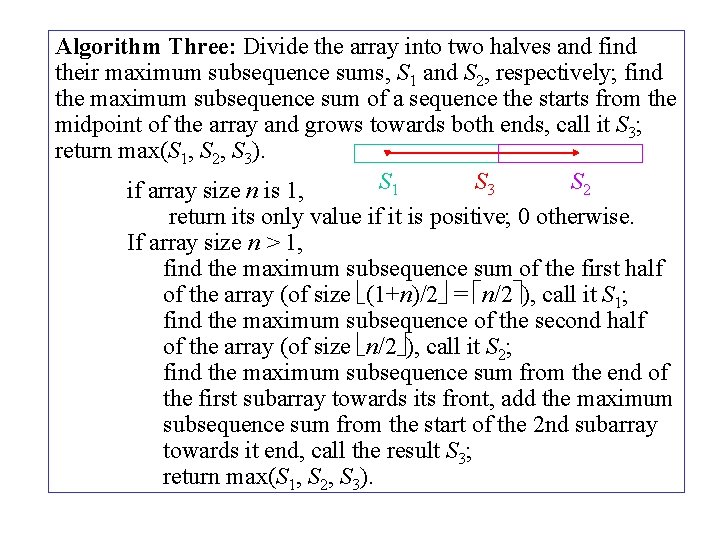

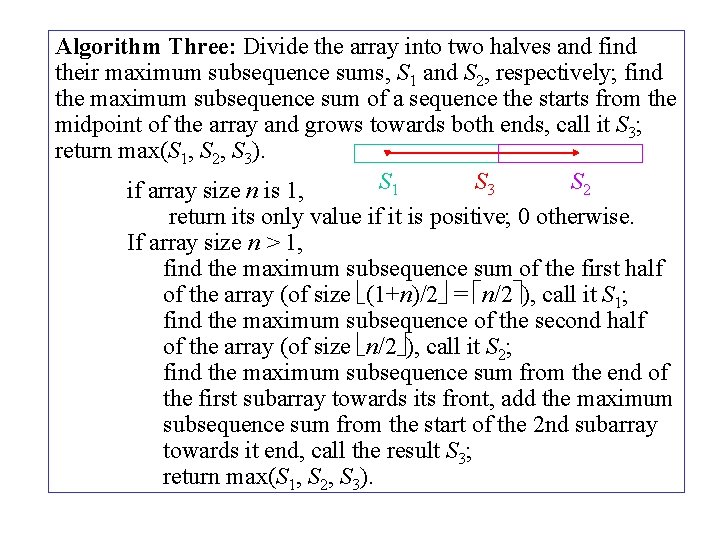

Algorithm Three: Divide the array into two halves and find their maximum subsequence sums, $S_1$ and $S_2$, respectively; find the maximum sum of a subsequence that starts at the midpoint of the array and grows towards both ends, call it $S_3$; return $\max(S_1, S_2, S_3)$.

- If the array size n is 1, return its only value if it is positive, and 0 otherwise.
- If n > 1:
  - find the maximum subsequence sum of the first half of the array (of size $\lfloor (1+n)/2 \rfloor = \lceil n/2 \rceil$), call it $S_1$;
  - find the maximum subsequence sum of the second half of the array (of size $\lfloor n/2 \rfloor$), call it $S_2$;
  - find the maximum sum running from the end of the first subarray towards its front, add the maximum sum running from the start of the second subarray towards its end, and call the result $S_3$;
  - return $\max(S_1, S_2, S_3)$.

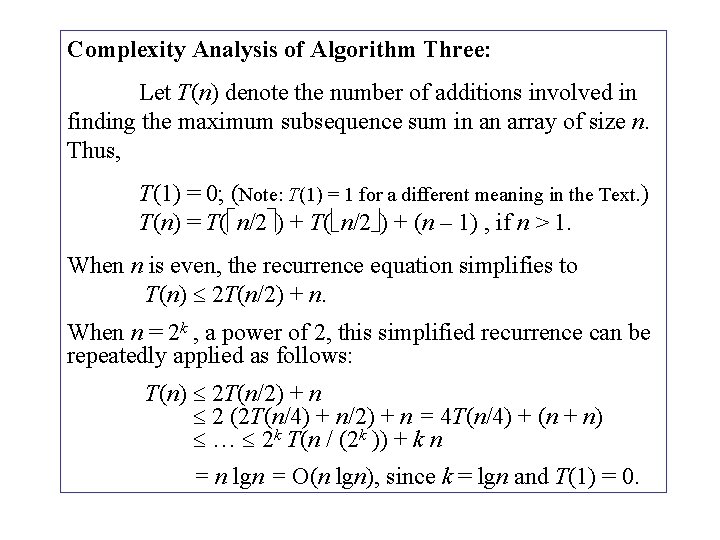

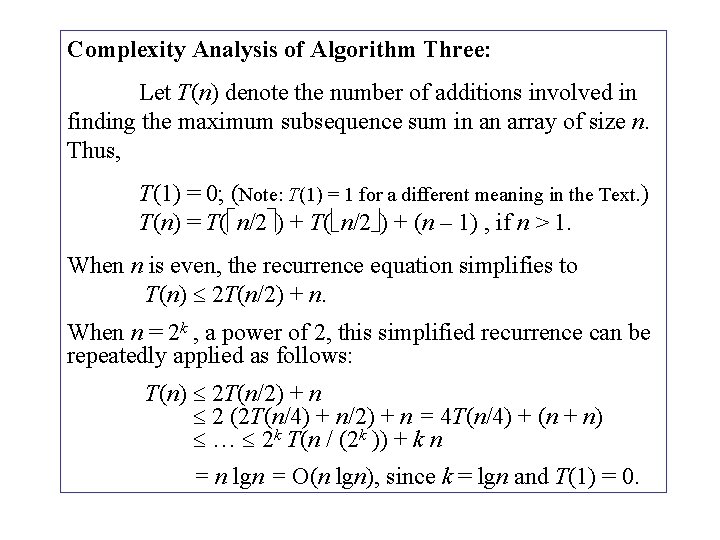
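A sketch of Algorithm Three in Python, working on index ranges rather than copying subarrays; the helper `solve` and its inclusive `lo`/`hi` bounds are implementation choices, not from the notes. The two border scans compute $S_3$ by growing outward from the midpoint, and each starts at 0 so that an empty side is allowed, matching the convention that the answer is never negative.

```python
def max_subsequence_sum_dc(a: list[int]) -> int:
    """Algorithm Three: divide-and-conquer maximum subsequence sum (O(n lg n))."""

    def solve(lo: int, hi: int) -> int:
        # Maximum subsequence sum of a[lo..hi] (inclusive); 0 if nothing is positive.
        if lo == hi:
            return a[lo] if a[lo] > 0 else 0
        mid = (lo + hi) // 2  # first half a[lo..mid] holds ceil(size / 2) items
        s1 = solve(lo, mid)
        s2 = solve(mid + 1, hi)

        # S3: best sum ending at mid (growing left) plus best sum starting at mid + 1.
        best_left, running = 0, 0
        for k in range(mid, lo - 1, -1):
            running += a[k]
            best_left = max(best_left, running)
        best_right, running = 0, 0
        for k in range(mid + 1, hi + 1):
            running += a[k]
            best_right = max(best_right, running)
        s3 = best_left + best_right

        return max(s1, s2, s3)

    return solve(0, len(a) - 1) if a else 0

print(max_subsequence_sum_dc([-2, 11, -4, 13, -5, -2]))  # 20
```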

Complexity Analysis of Algorithm Three: Let $T(n)$ denote the number of additions involved in finding the maximum subsequence sum in an array of size $n$. Thus,

$$T(1) = 0, \qquad T(n) = T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor) + (n - 1) \quad \text{if } n > 1.$$

(Note: the text uses $T(1) = 1$, with a different meaning of $T$.) When $n$ is even, the recurrence simplifies to $T(n) \le 2T(n/2) + n$. When $n = 2^k$, a power of 2, this simplified recurrence can be applied repeatedly:

$$\begin{aligned}
T(n) &\le 2T(n/2) + n \\
&\le 2\bigl(2T(n/4) + n/2\bigr) + n = 4T(n/4) + 2n \\
&\;\;\vdots \\
&\le 2^k T(n/2^k) + kn = n \lg n,
\end{aligned}$$

since $k = \lg n$ and $T(1) = 0$. Hence $T(n) = O(n \lg n)$.

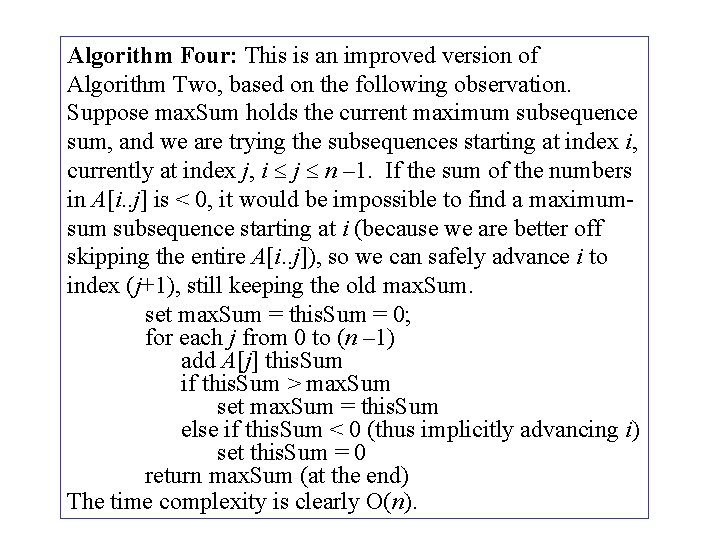
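The recurrence can be evaluated directly to compare it with the $n \lg n$ bound. This sketch assumes the split $T(n) = T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor) + (n - 1)$ with $T(1) = 0$, matching the half sizes used by Algorithm Three. For $n = 2^k$, unrolling the exact recurrence (rather than the simplified one) gives $T(n) = n \lg n - n + 1$, just under the bound.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def T(n: int) -> int:
    """T(1) = 0, T(n) = T(ceil(n/2)) + T(floor(n/2)) + (n - 1) for n > 1."""
    if n == 1:
        return 0
    return T((n + 1) // 2) + T(n // 2) + (n - 1)

# Columns: n, T(n), exact form n lg n - n + 1, and the bound n lg n (n = 2^k).
for k in range(1, 6):
    n = 2 ** k
    print(n, T(n), n * k - n + 1, n * k)
```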

Algorithm Four: This is an improved version of Algorithm Two, based on the following observation. Suppose maxSum holds the current maximum subsequence sum, and we are trying the subsequences starting at index i, currently at index j, with $i \le j \le n - 1$. If the sum of the numbers in A[i..j] is negative, then any subsequence that starts at i and extends past j has a smaller sum than the same subsequence with A[i..j] removed (we are better off skipping the entire A[i..j]), so we can safely advance i to index (j + 1), still keeping the old maxSum.

- set maxSum = thisSum = 0
- for each j from 0 to (n - 1):
  - add A[j] to thisSum
  - if thisSum > maxSum, set maxSum = thisSum
  - else if thisSum < 0, set thisSum = 0 (thus implicitly advancing i to j + 1)
- return maxSum (at the end)

Each element is processed once with a constant amount of work, so the time complexity is clearly O(n).

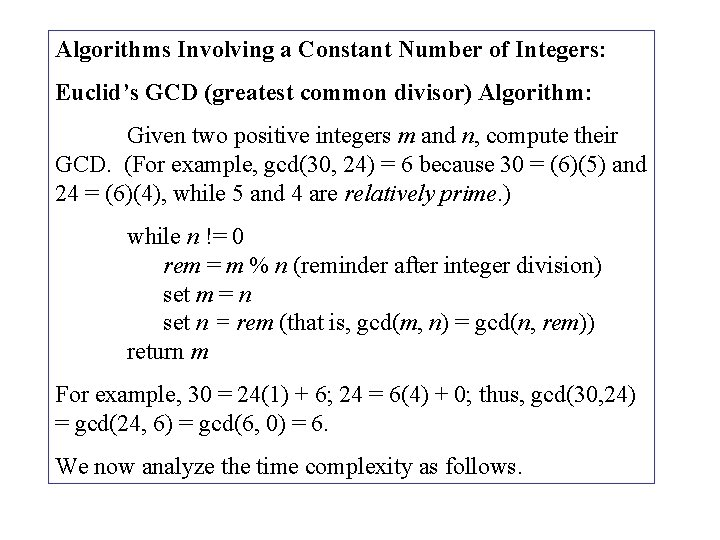

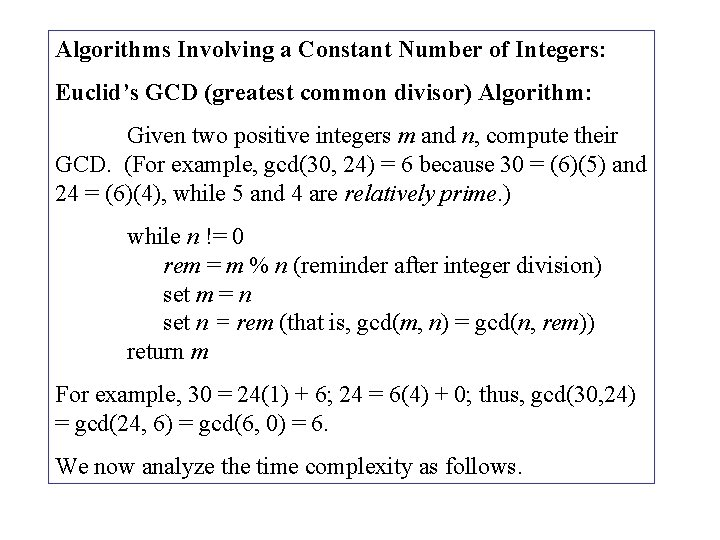
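Algorithm Four as a single Python loop (function name illustrative). Resetting `this_sum` to 0 is the only trace of the start index i, which is why no second loop is needed.

```python
def max_subsequence_sum_linear(a: list[int]) -> int:
    """Algorithm Four: one pass, dropping any prefix whose sum goes negative (O(n))."""
    max_sum = this_sum = 0
    for x in a:  # x plays the role of A[j]
        this_sum += x
        if this_sum > max_sum:
            max_sum = this_sum
        elif this_sum < 0:
            this_sum = 0  # implicitly advance i to j + 1
    return max_sum

print(max_subsequence_sum_linear([-2, 11, -4, 13, -5, -2]))  # 20
```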

Algorithms Involving a Constant Number of Integers:

Euclid's GCD (greatest common divisor) Algorithm: Given two positive integers m and n, compute their GCD. (For example, gcd(30, 24) = 6 because 30 = (6)(5) and 24 = (6)(4), while 5 and 4 are relatively prime.)

- while n != 0:
  - rem = m % n (the remainder after integer division)
  - set m = n
  - set n = rem (that is, gcd(m, n) = gcd(n, rem))
- return m

For example, 30 = 24(1) + 6 and 24 = 6(4) + 0; thus, gcd(30, 24) = gcd(24, 6) = gcd(6, 0) = 6. We now analyze the time complexity as follows.

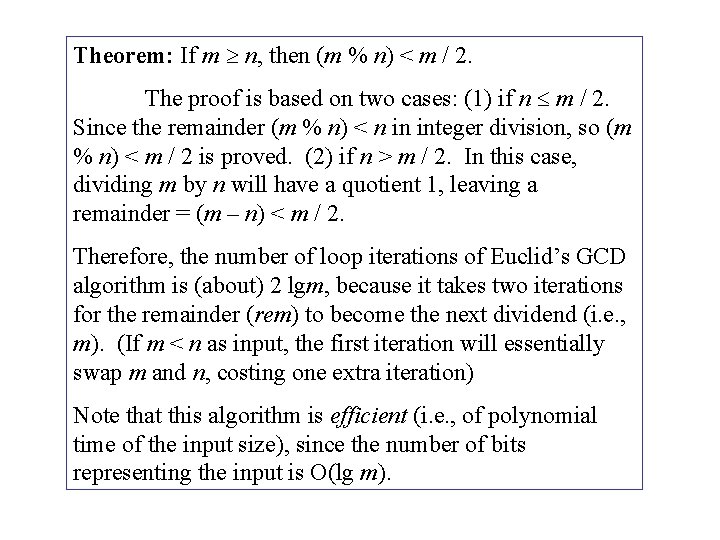
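A direct Python transcription of the loop above; as an addition for the analysis that follows, this hypothetical `euclid_gcd` also returns how many iterations it ran.

```python
def euclid_gcd(m: int, n: int) -> tuple[int, int]:
    """Euclid's algorithm for positive m, n; returns (gcd, number of loop iterations)."""
    iterations = 0
    while n != 0:
        rem = m % n    # remainder after integer division
        m, n = n, rem  # gcd(m, n) = gcd(n, rem)
        iterations += 1
    return m, iterations

print(euclid_gcd(30, 24))  # (6, 2)
```

Calling `euclid_gcd(24, 30)` returns `(6, 3)`: the first iteration only swaps the arguments, which is the extra iteration noted for the case m < n.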

Theorem: If $m \ge n$, then $(m \bmod n) < m/2$. The proof considers two cases:

1. If $n \le m/2$: since the remainder $(m \bmod n) < n$ in integer division, $(m \bmod n) < m/2$.
2. If $n > m/2$: dividing m by n gives quotient 1, leaving the remainder $m - n < m/2$.

Therefore, the number of loop iterations of Euclid's GCD algorithm is at most about $2 \lg m$: the remainder (rem) becomes the dividend (i.e., m) two iterations later, so the dividend is at least halved every two iterations. (If $m < n$ on input, the first iteration essentially swaps m and n, costing one extra iteration.) Note that this algorithm is efficient (i.e., of polynomial time in the input size): the input takes about $\lg m$ bits to represent, so an $O(\lg m)$ iteration count is linear in the input size.

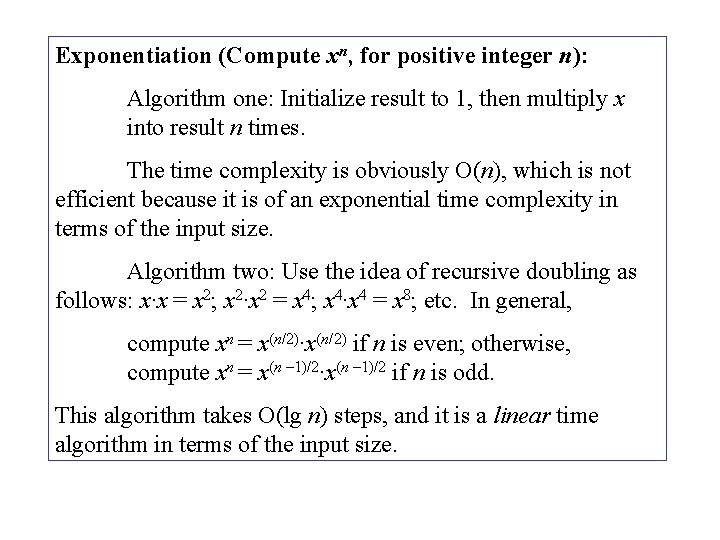
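The theorem and the iteration bound it implies can be checked exhaustively for small inputs. This sketch (helper names hypothetical, limit arbitrary) tests $2(m \bmod n) < m$ for all $1 \le n \le m < 300$, and that Euclid's loop never runs more than $2 \lg m + 1$ times, one precise form of "about $2 \lg m$" derived from the halving argument.

```python
import math

def remainder_is_small(m: int, n: int) -> bool:
    """Theorem for m >= n >= 1: (m % n) < m / 2, compared in integers as 2 * (m % n) < m."""
    return 2 * (m % n) < m

def euclid_iterations(m: int, n: int) -> int:
    """Count the loop iterations Euclid's algorithm performs on (m, n)."""
    count = 0
    while n != 0:
        m, n = n, m % n
        count += 1
    return count

def check_up_to(limit: int) -> tuple[bool, bool]:
    """Check the theorem and the iteration bound for all 1 <= n <= m < limit."""
    pairs = [(m, n) for m in range(1, limit) for n in range(1, m + 1)]
    theorem_ok = all(remainder_is_small(m, n) for m, n in pairs)
    # The dividend at least halves every two iterations, so iterations <= 2 lg m + 1.
    bound_ok = all(euclid_iterations(m, n) <= 2 * math.log2(m) + 1 for m, n in pairs)
    return theorem_ok, bound_ok

print(check_up_to(300))  # (True, True)
```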

Exponentiation (compute $x^n$ for a positive integer $n$):

Algorithm One: Initialize result to 1, then multiply x into result n times. The time complexity is obviously O(n), which is not efficient: since n is represented with about $\lg n$ bits, O(n) is exponential in terms of the input size.

Algorithm Two: Use the idea of recursive doubling: $x \cdot x = x^2$; $x^2 \cdot x^2 = x^4$; $x^4 \cdot x^4 = x^8$; and so on. In general, compute $x^n = x^{n/2} \cdot x^{n/2}$ if n is even, and $x^n = x \cdot x^{(n-1)/2} \cdot x^{(n-1)/2}$ if n is odd, computing $x^{\lfloor n/2 \rfloor}$ only once in each case. This algorithm takes $O(\lg n)$ steps (multiplications), so, counting each multiplication as one step, it is a linear-time algorithm in terms of the input size.
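A sketch of Algorithm Two (recursive doubling) for integer $x$ and $n \ge 1$; the function name and the multiplication counter are illustrative additions. The key point is that $x^{\lfloor n/2 \rfloor}$ is computed once and squared; making the recursive call twice would bring the cost back to $O(n)$.

```python
def power(x: int, n: int) -> tuple[int, int]:
    """Recursive doubling for n >= 1; returns (x**n, number of multiplications used)."""
    if n == 1:
        return x, 0
    half, mults = power(x, n // 2)  # x^(n//2) is computed once and reused
    result = half * half
    mults += 1
    if n % 2 == 1:  # odd n: x^n = x * x^((n-1)/2) * x^((n-1)/2)
        result *= x
        mults += 1
    return result, mults

print(power(3, 13))  # (1594323, 5)
```

For $n = 13$ (binary 1101) the recursion visits $n = 13, 6, 3, 1$ and uses 5 multiplications, compared with the 13 of Algorithm One.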