Airline Reservation System MSE Project Phase III Presentation

- Slides: 24

Airline Reservation System MSE Project Phase III Presentation -- Kaavya Kuppa

Committee Members: Dr. Daniel Andresen, Dr. Torben Amtoft, Dr. Mitchell L. Neilsen

Agenda for Phase III Presentation

- Project Overview
- Review of Work for Phase I and Phase II
- Action Items from Phase II
- Assessment Evaluation
- Project Evaluation
- Lessons Learned
- Demo

Project Overview

- The main objective of my MSE project was to design, code and test an Airline Reservation System website that allows users to purchase airline tickets and to book the motels and packages available to them.
- The website is intended mainly for the general public.
- General features provided by the website include registration, searching and browsing, and flight, package and hotel booking.

Review of work for phases I and II

- Phase I was mainly the requirements specification phase, in which I wrote the vision document for the Airline Reservation System.
- All the user use cases planned in the vision document have been implemented.

Review of work for phases I and II (continued)

- Phase II consisted mainly of revising the documents from Phase I.
- The first working prototype was developed in this phase.
- The Test Plan was created.

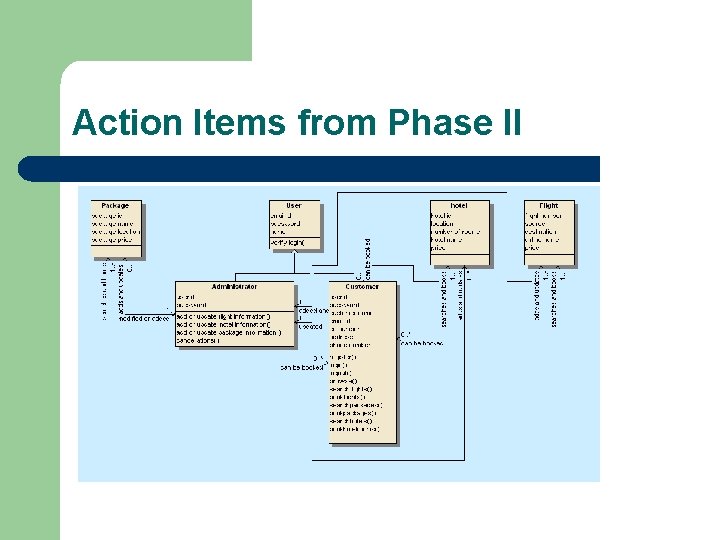

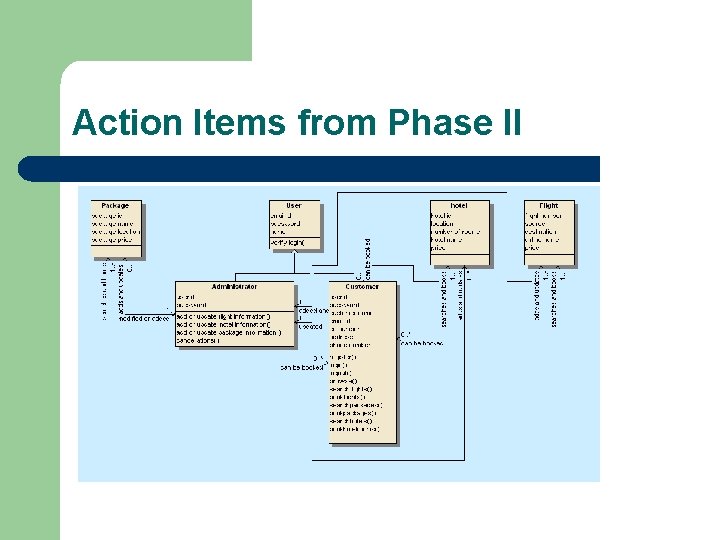

Action Items from Phase II

- The major action item from Phase II was the class diagram. I have corrected it, and the corrected diagram appears in the Component Design document.

Assessment Evaluation

- The Assessment Evaluation document is one of the major deliverables of Phase III.
- Two types of testing were done on the Airline Reservation System application:
  - Manual testing
  - JMeter performance testing

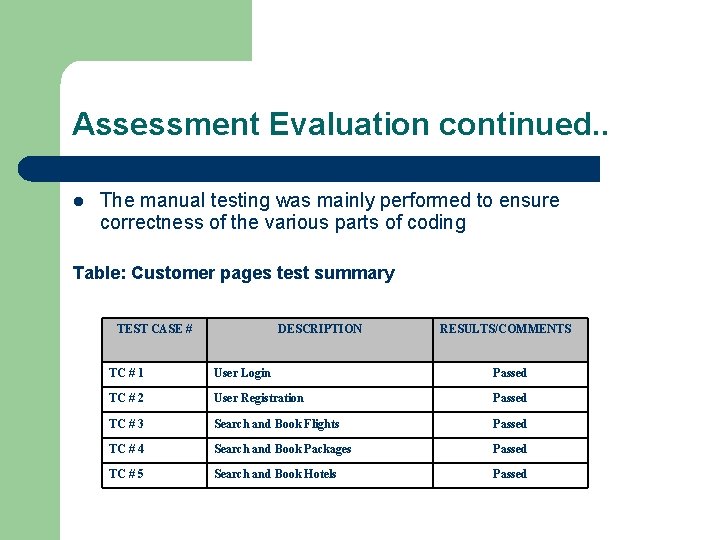

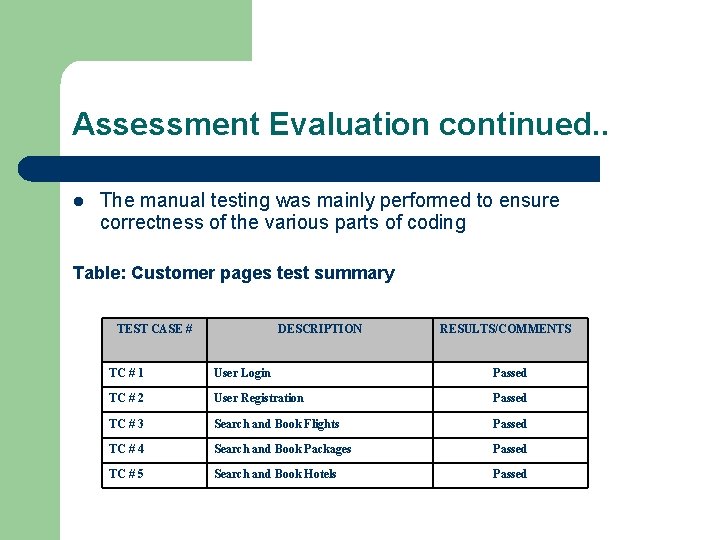

Assessment Evaluation (continued)

- The manual testing was performed mainly to ensure the correctness of the various parts of the code.

Table: Customer pages test summary

| Test case # | Description | Results/Comments |
| --- | --- | --- |
| TC # 1 | User Login | Passed |
| TC # 2 | User Registration | Passed |
| TC # 3 | Search and Book Flights | Passed |
| TC # 4 | Search and Book Packages | Passed |
| TC # 5 | Search and Book Hotels | Passed |

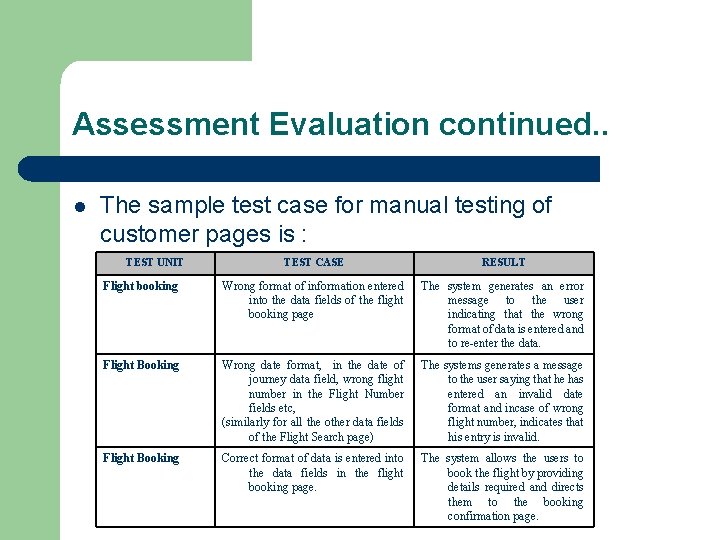

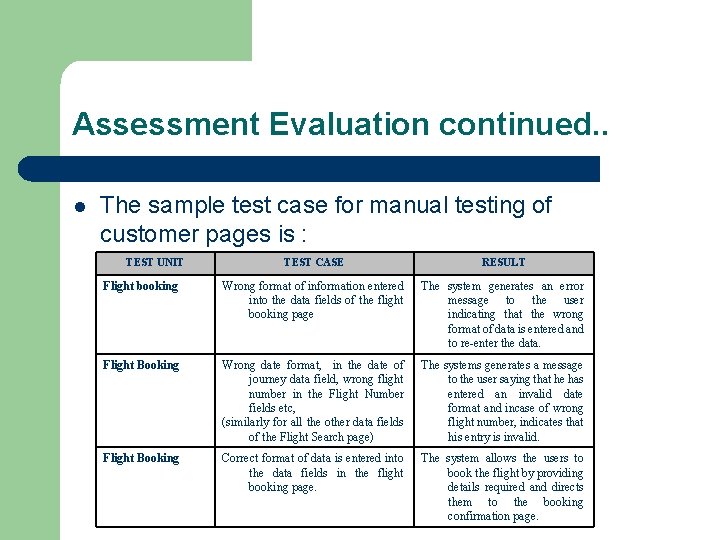

Assessment Evaluation (continued)

- Sample test cases for manual testing of the customer pages:

| Test unit | Test case | Result |
| --- | --- | --- |
| Flight booking | Information in the wrong format is entered into the data fields of the flight booking page. | The system shows an error message telling the user that the data was entered in the wrong format and asking them to re-enter it. |
| Flight booking | Wrong date format in the date-of-journey field, wrong flight number in the Flight Number field, etc. (similarly for all the other data fields of the Flight Search page). | The system tells the user that the date format is invalid and, in the case of a wrong flight number, that the entry is invalid. |
| Flight booking | Data in the correct format is entered into the data fields of the flight booking page. | The system lets the user book the flight by providing the required details and directs them to the booking confirmation page. |
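The three manual test cases above can be mirrored by a small validation routine. The following is a minimal sketch only: the date format, the flight number pattern, the message texts and the function name `validate_booking_input` are all assumptions made for illustration, since the slides do not state the formats the website actually accepts.

```python
import re
from datetime import datetime
from typing import List

# Assumed formats, for illustration only; the slides do not specify them.
DATE_FORMAT = "%m/%d/%Y"                                 # e.g. 12/31/2008
FLIGHT_NUMBER_PATTERN = re.compile(r"[A-Z]{2}\d{1,4}")   # e.g. AA123


def validate_booking_input(journey_date: str, flight_number: str) -> List[str]:
    """Return a list of error messages; an empty list means the input is valid."""
    errors = []
    try:
        # strptime raises ValueError when the text does not match the format.
        datetime.strptime(journey_date, DATE_FORMAT)
    except ValueError:
        errors.append("Invalid date format; please re-enter the date of journey.")
    if not FLIGHT_NUMBER_PATTERN.fullmatch(flight_number):
        errors.append("Invalid flight number; please re-enter it.")
    return errors
```

An empty result corresponds to the third test case (the booking proceeds), while a non-empty result corresponds to the first two (the user is asked to re-enter the data).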

Assessment Evaluation (continued)

- The performance testing was done mainly to load test the web application.
- The load is expressed here as the number of concurrent users of the website.
- The goal is to determine the response time of each web page. (Response time is the time the server takes to bring up a requested page in the browser, measured from the moment the page is requested.)
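The idea of measuring response time under a given number of concurrent users can be sketched in a few lines. This is not how JMeter works internally and it does not contact the real website: `fake_page_request` is a hypothetical stand-in that sleeps instead of fetching a page, and all function names are assumptions for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fake_page_request() -> None:
    """Hypothetical stand-in for fetching a page; sleeps instead of doing network I/O."""
    time.sleep(0.01)


def timed_call(request: Callable[[], None]) -> float:
    """Return the elapsed time of one request, in milliseconds."""
    start = time.perf_counter()
    request()
    return (time.perf_counter() - start) * 1000.0


def measure_response_times(request: Callable[[], None], concurrent_users: int) -> List[float]:
    """Submit one request per simulated user to a thread pool and time each one."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        futures = [pool.submit(timed_call, request) for _ in range(concurrent_users)]
        return [future.result() for future in futures]


timings_ms = measure_response_times(fake_page_request, concurrent_users=5)
average_ms = sum(timings_ms) / len(timings_ms)
```

Replacing the stand-in with a real HTTP call would give timings for an actual page, although the result would still exclude the time the browser spends rendering it.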

Assessment Evaluation (continued)

- The performance testing of the Airline Reservation System website was done mainly on three pages:
  - Home.aspx
  - Booking.aspx
  - Flight.Search.aspx

Assessment Evaluation (continued)

- The tool used for performance testing is JMeter.
- The inputs to JMeter consist of:
  - Number of threads (users)
  - Ramp-up period: the time in seconds taken to start the total number of users chosen
  - Loop count: the number of times the test is to be repeated

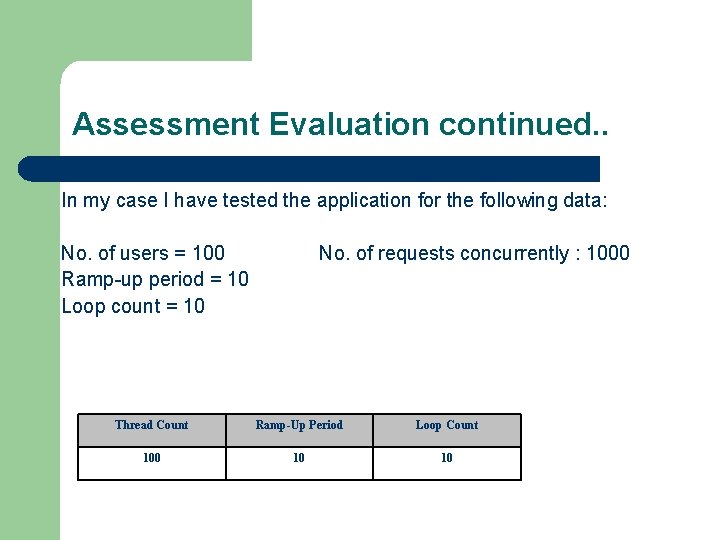

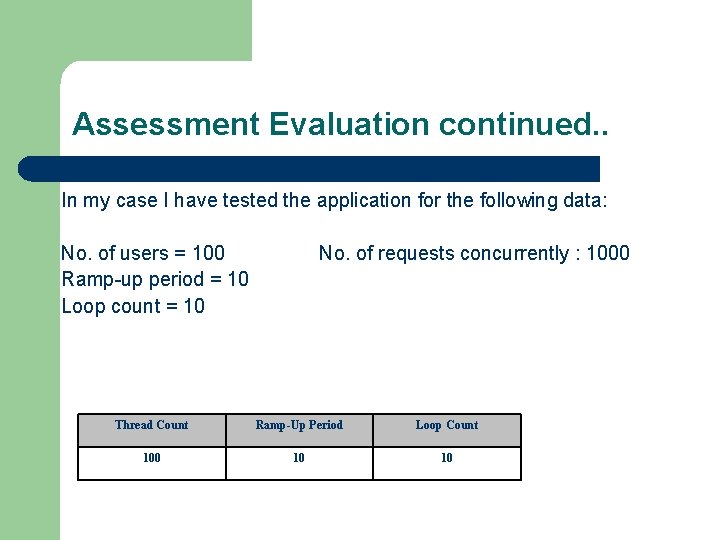

Assessment Evaluation (continued)

I tested the application with the following settings:

| Thread count (users) | Ramp-up period (s) | Loop count |
| --- | --- | --- |
| 100 | 10 | 10 |

Total number of requests: 1000 (100 users × 10 loops).
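The arithmetic behind these settings can be written out as a short sketch. It assumes that JMeter spreads thread starts evenly across the ramp-up period and that each loop issues one request per page; the function names are hypothetical and are not part of JMeter.

```python
from typing import List


def thread_start_offsets(threads: int, ramp_up_seconds: float) -> List[float]:
    """Start delay of each thread, assuming starts are spread evenly over the ramp-up."""
    interval = ramp_up_seconds / threads
    return [index * interval for index in range(threads)]


def total_samples(threads: int, loop_count: int, samplers_per_loop: int = 1) -> int:
    """Total requests issued: every thread runs every loop once per sampler."""
    return threads * loop_count * samplers_per_loop


# Settings used in the test run described above.
offsets = thread_start_offsets(threads=100, ramp_up_seconds=10)
requests_per_page = total_samples(threads=100, loop_count=10)
```

With 100 threads and a 10-second ramp-up, a new simulated user starts every 0.1 seconds, and 100 users each repeating the test 10 times give 1000 requests.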

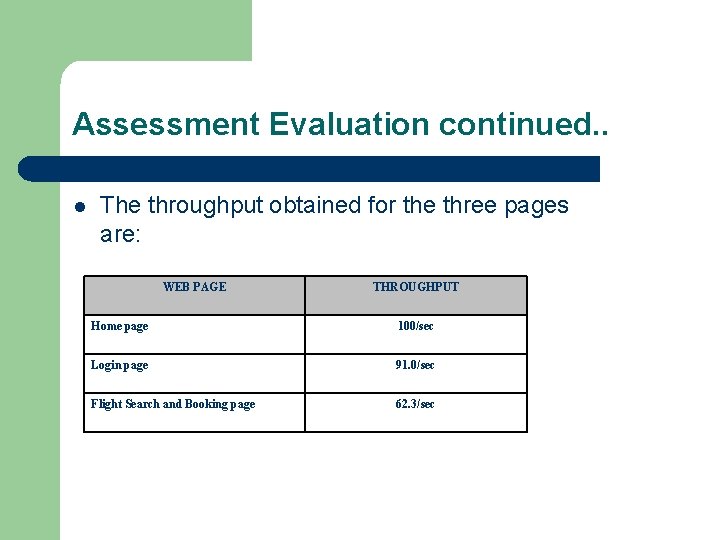

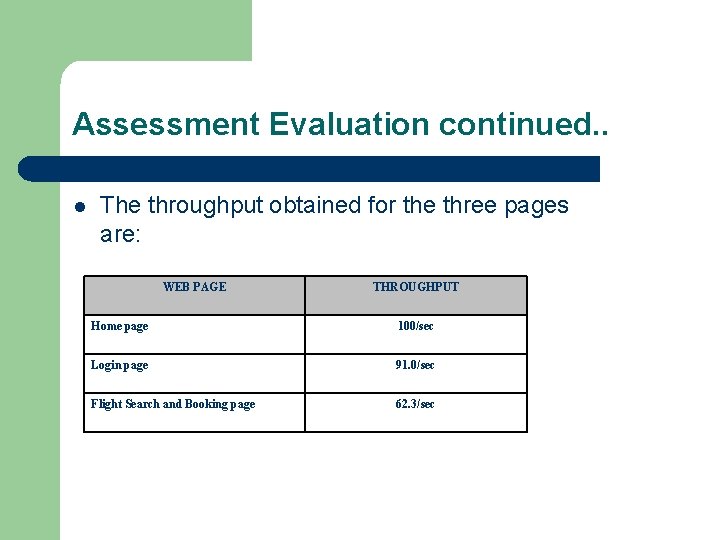

Assessment Evaluation (continued)

- The throughput obtained for the three pages is:

| Web page | Throughput |
| --- | --- |
| Home page | 100/sec |
| Login page | 91.0/sec |
| Flight Search and Booking page | 62.3/sec |
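Throughput is the number of requests divided by the time taken to serve them. The sketch below shows that relationship and inverts it to estimate how long each run would have lasted. It assumes each page received 1000 requests (100 users × 10 loops), which the slides do not state per page, so the durations are illustrative rather than measured values.

```python
def throughput_per_second(request_count: int, elapsed_seconds: float) -> float:
    """Requests served per second over the whole run."""
    return request_count / elapsed_seconds


def implied_duration_seconds(request_count: int, throughput: float) -> float:
    """Run duration implied by a reported throughput."""
    return request_count / throughput


# Reported throughputs (requests/sec) from the table above.
reported = {
    "Home page": 100.0,
    "Login page": 91.0,
    "Flight Search and Booking page": 62.3,
}

ASSUMED_REQUESTS_PER_PAGE = 1000  # assumption: 100 users x 10 loops per page

implied = {
    page: implied_duration_seconds(ASSUMED_REQUESTS_PER_PAGE, rate)
    for page, rate in reported.items()
}
```

Under that assumption the Home page run would have lasted about 10 seconds and the Flight Search and Booking page run about 16 seconds.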

Assessment Evaluation (continued)

- I also tested the application under heavy load, with about 5000 entries in the database table.
- Initially, when the table held only a few entries, the response time for the page was around 15 ms.
- After adding 5000 random values to the table and repeating the same tests with JMeter, the average response time for the Customer Details page shot up to 3699 ms.
- The throughput for the page was also very low, around 12.3 requests/sec.

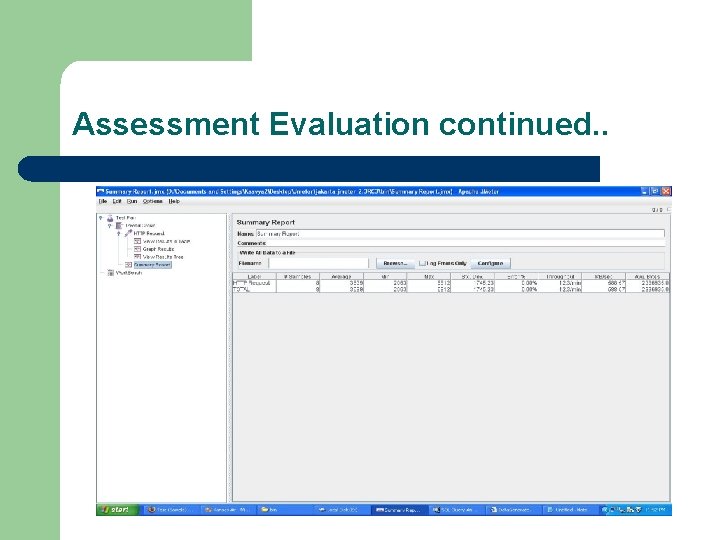

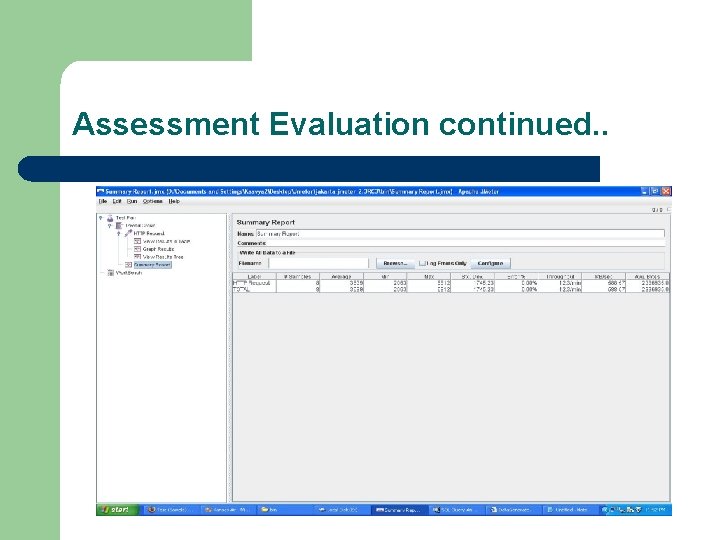

Assessment Evaluation (continued)

- The environment used to performance test the application was as follows:
  - Operating system: Windows XP Professional
  - Memory: 0.99 GB RAM
  - Hard disk: 80 GB
  - Processor: Intel® Pentium® M, 1.7 GHz

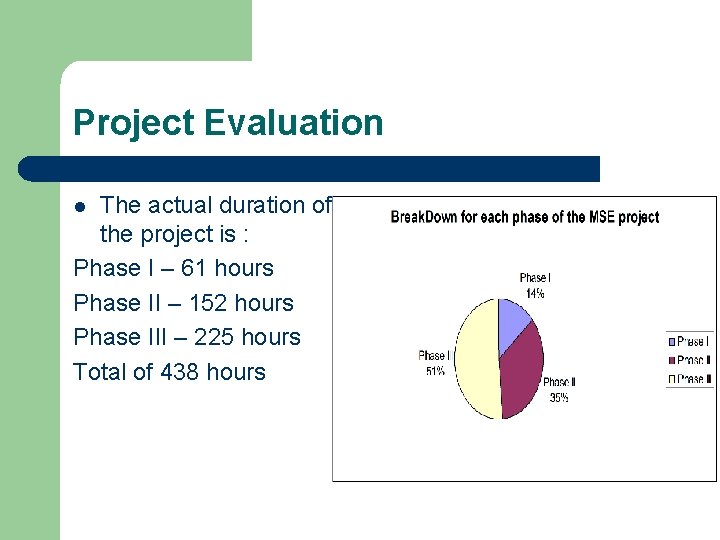

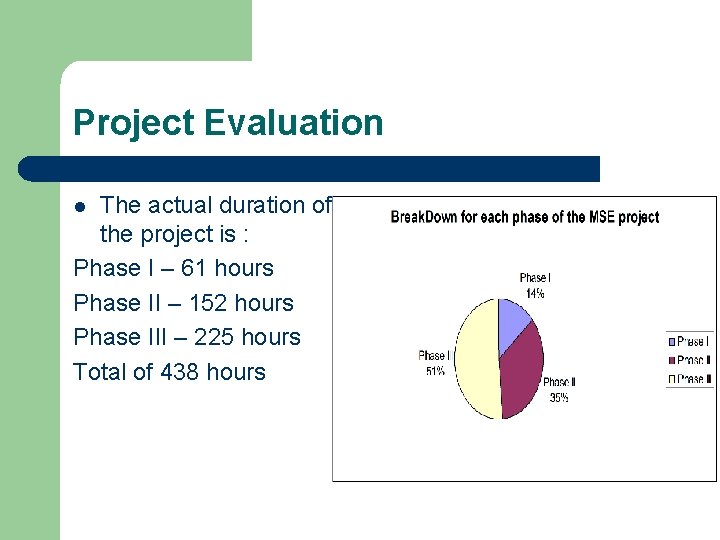

Project Evaluation

- The actual duration of the project was:
  - Phase I: 61 hours
  - Phase II: 152 hours
  - Phase III: 225 hours
  - Total: 438 hours

Project Evaluation (continued)

- Of the total hours spent on the project, nearly 190 hours, or nearly 43.3% of the whole time, were dedicated to coding.
- Nearly 80 hours, or nearly 20% of the time, were spent on the project documentation.
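The effort figures can be checked with a few lines of arithmetic. The sketch uses only the hours reported in the slides; the variable names are illustrative.

```python
# Hours per phase, as reported in the project evaluation.
phase_hours = {"Phase I": 61, "Phase II": 152, "Phase III": 225}
total_hours = sum(phase_hours.values())

# Approximate hours reported for coding and documentation.
coding_hours = 190
documentation_hours = 80

coding_share = coding_hours / total_hours * 100
documentation_share = documentation_hours / total_hours * 100

print(f"Total: {total_hours} hours")
print(f"Coding: {coding_share:.1f}% of total")
print(f"Documentation: {documentation_share:.1f}% of total")
```

The phase hours sum to 438, coding comes to roughly 43.4% of the total, and documentation to roughly 18.3%, which is consistent with the rounded figures quoted above.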

Project Evaluation (continued)

- The total lines of code for the project is 4912 LOC. Out of that

Lessons Learnt

- I had the chance to develop the project in a new technology.
- I came into contact with various tools, such as JMeter and SLOC count (for counting the number of lines of code).
- I developed a whole project, going through all of its phases.
- I learned to be ready for the unexpected.

Demo

Questions?