
- Slides: 73

Agile Testing

Introduction to Agile Testing The definition of agile testing can be described as follows: “Testing practice for projects using agile technologies, considering development as prerequisite of testing and introducing a test-first design methodology. In agile development, testing process is being integrated throughout the project life-cycle, testing the software throughout its development.” Agile is a methodology that is seeing increasingly widespread use, and its appeal is easy to understand, especially from the developers' and users' points of view. Users: They do not want to spend much of their time working out in detail the exact requirements and processes for the entire system, and then reviewing a huge specification that they know could come back to haunt them. Developers: They do not want to follow a rigid specification that leaves no room for their own creative contribution, particularly when they can see a better way of doing the work.

Agile Testing Defined • Agile testing is a software testing practice that follows the principles of Agile software development. Agile development integrates testing into the development process, rather than keeping it as a separate and distinct SDLC phase. • Agile testing involves a cross-functional Agile team actively relying on the special expertise contributed by testers. • Agile teams use a “whole-team” approach to “bake in quality” to the software product. Because testing occurs in real time, this approach allows testers to collaborate actively with the development team, helping them identify issues and turn them into executable specifications that guide coding. • Unlike in a phased process, test case creation does not wait for approval of detailed design documents; testing starts with the project and continues alongside development. • Both coding and testing are performed incrementally and iteratively in Sprints (or iterations), building each feature until it is stable and adds quality to the product.

Common Testing Challenges on an Agile Team Agile projects pose specific problems for testers that go beyond a lack of comprehensive documentation and tedious planning. Testing in an agile environment is not complex when it is planned, but certain issues are common: ✓ Environment's Complexity ✓ Nth iteration Testing ✓ Performance Bottlenecks ✓ Lack of focused testing ✓ Ownership of test automation

Testing Challenges - Environment's Complexity • Most agile teams practice continuous integration and deployment. Continuous deployment, however, also means that the environment is constantly changing. • A tester must be able to test in a stable environment whenever required, and securing one can sometimes drain effort. • This matters especially when code is promoted to staging and production, where confidence in the tested version must be high. • There is no universal solution, because every application is different and an environment often has many stakeholders.

Testing Challenges - Nth iteration Testing • The more that is delivered, the higher the probability that things go wrong in unexpected ways. Test scripts grow, and a test set that was adequate for a single iteration may be a poor choice in upcoming sprints. • Fixes might introduce unexpected issues, which can grow into larger problems and unmet expectations. • It is also common to see bugs when a fix is backed out because it caused unintended issues equal to, or worse than, the original ones. • After a couple of iterations there is little chance to test from scratch, yet the system has become complex enough that no part of it is genuinely immune to new bugs. • It may be tempting to return to code freezes, waterfall approaches and testers as bug stoppers, but that is a sign the agile project has already become unwieldy.

Testing Challenges - Performance Bottlenecks • As software matures, its complexity naturally goes up. • This complexity increases the lines of code, which can introduce performance issues if developers are not aware of how their changes affect end-user performance. • To solve this, one must first know which areas of the code are causing performance issues and how performance is changing over time.

Testing Challenges - Lack of focused testing • Testing in agile can become complex, which results in a lack of focus on testing itself. Many activities compete for the testers' time. • Testers prepare for testing in future sprints with the help of Business Analysts; discuss difficulties of the ongoing sprint with the development team and the Business Analysts; watch for problems with the builds on CI; work with the product owner on demos; help developers with test data extraction, environment set-up, unit testing, integration testing and unit review; and make sure non-functional testing is done whenever needed. • Test automation, production release support and testing in different environments also need attention to keep the agile process clean. Together these often leave very little room for the testing that was actually planned.

Testing Challenges - Ownership of test automation • Test automation is one of the most important practices of agile teams. There are many successful, and also many unsuccessful, test automation efforts. • The biggest factor in the success or failure of an automation effort is ownership. If test automation is owned by the whole team, it has a good chance of adding value. Promoting this way of working is hard and takes time. • The testing team usually does not want to risk testing going wrong and may be reluctant to give up control, while the developers may be reluctant to take on additional responsibility. • Getting everyone to work this way is difficult, but when the team owns test automation, feedback is faster, which in turn adds more value.

A Few More Changes in Agile Testing • The start of a Sprint is typically the start of testing. • Test estimates are provided as Sprint tasks in the Sprint Planning meeting. • The product owner, Scrum master, developers and testers form the Scrum team (usually a team of 5-7). • The entire Scrum team is responsible for the quality and the result of a deliverable. • A daily stand-up meeting of 15-30 minutes within the Scrum team provides the status of each task assigned to the team for the Sprint. • Maximum communication within the Scrum team. • Provide continuous feedback.

Pros of being Agile • Less risk of a compressed test period • Test all the time, not just at the end • Work together as one team towards a common goal • Defects are easy to fix • Fewer changes between fixes • Flexibly incorporate new requirements • Early and predictable delivery • Agile testing saves time and money • Less documentation compared to the waterfall model • Regular feedback from the stakeholders • Daily meetings help in addressing issues and resolving them faster

Cons of being Agile • Agile testing proves to be the best testing methodology only if the requirements are clear to the project sponsors. • If the requirements frequently change (as allowed for in Agile), the following scenarios can occur: • Difficulty in leaving the traditional understanding of the roles • The team struggles to adapt to changes because significant effort has already gone into the initial requirements development and testing process. • Challenges in estimations and sizing requirements. Sometimes QA gets short shrift since it’s logically the last task in completing the user Story. Therefore, any delay in the prior development task risks impacting QA timelines. • QA is sometimes prevented from executing a test case for the whole iteration, leaving the team struggling to finish the task. • Not asking the right questions. It is very dangerous for QA not to ask questions, especially at the point where the user Story is picked up for implementation. Daily team meetings can avoid this problem. • Addition of new user Stories into the current iteration. QA should be included in the addition of the new user Story, to build up appropriate commitments and estimations in order to avoid misalignment and protracted timeframes

Roles of tester in SCRUM • Participate in Release/Sprint Planning • Support developers in unit testing by offering the testing view rather than the development view • Test each user story when it is completed; testers are the last gate to confirm it is complete from the user's perspective • Collaborate with the customer and Product Owner to define acceptance criteria • Develop test automation

Types of Testing

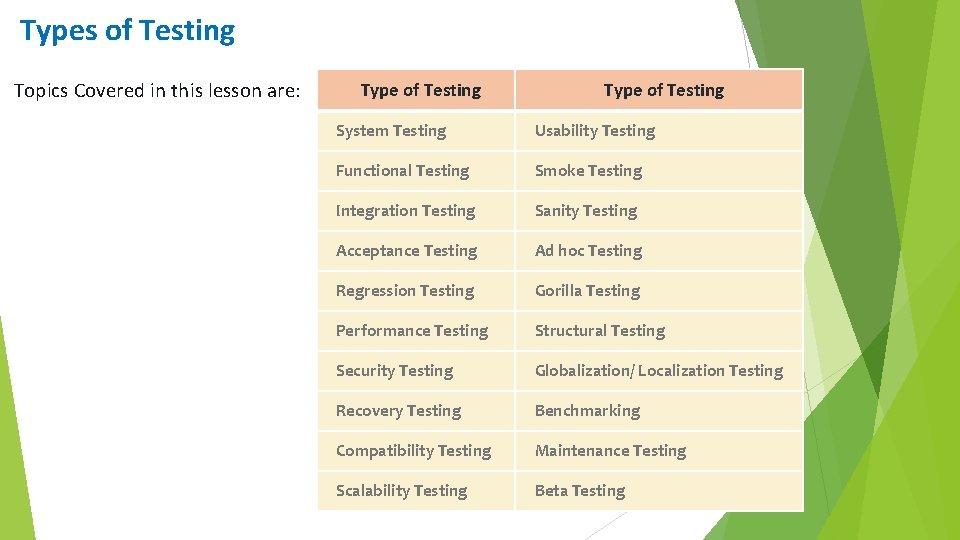

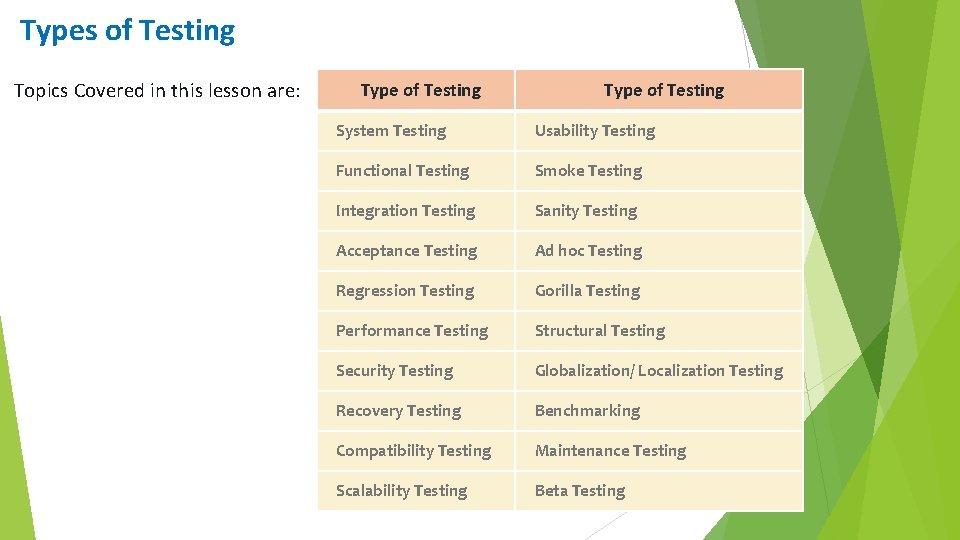

Types of Testing Topics covered in this lesson: • System Testing • Functional Testing • Integration Testing • Acceptance Testing • Regression Testing • Performance Testing • Security Testing • Recovery Testing • Compatibility Testing • Scalability Testing • Usability Testing • Smoke Testing • Sanity Testing • Ad hoc Testing • Gorilla Testing • Structural Testing • Globalization / Localization Testing • Benchmarking • Maintenance Testing • Beta Testing

System Testing • The complete, integrated system is tested to evaluate its ability to satisfy the specified system requirements. • Broadly, the system is evaluated against functional and non-functional requirements. • Non-functional tests include performance, security, compatibility, volume, load and stress tests.

Functional Testing • Functional tests are based on the functions described in documents or understood by testers, and may be performed at all test levels. ➢ E.g. tests for a component may be based on the component specification. • Testing functionality can be done from two perspectives: ➢ Requirement-based perspective ➢ Business-process-based perspective • Specification-based techniques may be used to derive test conditions and test cases from the functionality of the software or system. • Experience-based techniques can also be used. • Functional testing considers the external behavior of the software. • Test conditions and test cases are derived from the functionality of the component or system.

Integration Testing • Focuses on verifying the component interfaces when the units are combined to function as a single subsystem. • The goal is to see whether the modules can be integrated properly. • The test activity can be considered as testing the design. • An intermediate level of testing. • One of the most difficult aspects of software development is the integration and testing of large, untested sub-systems. The integrated system frequently fails in significant and mysterious ways that are difficult to fix. • Unit-tested software components are progressively integrated and tested until the software works as a whole. • Tests evaluate the interaction and consistency of interacting components.

Acceptance Testing • The process of comparing a program to its requirements. • Testing the system with the intent of confirming readiness of the product and customer acceptance. • The performance and reliability of the system are tested and confirmed. • Formal testing conducted to enable a user, customer or other authorized entity to determine whether to accept a system or component. • An acceptance test is a test that the user defines, to tell whether the system as a whole works the way the user expects.

Regression Testing • Regression testing tests for changes. • Typically a regression test compares new output from a program against old output run using the same data.
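The comparison of new output against old output can be sketched as follows. This is a minimal illustration, not a full regression framework: `format_invoice`, `BASELINE` and `SAME_DATA` are hypothetical names invented for the example, and the baseline string stands in for output recorded from a previously accepted run.

```python
def format_invoice(items):
    """Hypothetical function under test: renders an invoice as text."""
    lines = [f"{name}: {qty} x {price:.2f}" for name, qty, price in items]
    total = sum(qty * price for _, qty, price in items)
    lines.append(f"TOTAL: {total:.2f}")
    return "\n".join(lines)


# Output recorded from an earlier, accepted run (the "old output").
BASELINE = "widget: 2 x 1.50\ngadget: 1 x 4.00\nTOTAL: 7.00"

# The same input data that produced the baseline.
SAME_DATA = [("widget", 2, 1.50), ("gadget", 1, 4.00)]


def regression_check(func, data, baseline):
    """Return True when the new output matches the recorded baseline."""
    return func(data) == baseline


if __name__ == "__main__":
    print("no regression:", regression_check(format_invoice, SAME_DATA, BASELINE))
```

If a later change alters the formatting or the arithmetic, the check returns False and points the tester at the changed behaviour.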

Performance Testing • Determine which performance factors are most critical to this application, and to what degree: • Throughput • Response time • Handling peak loads • Determine a good strategy to evaluate the performance capability.

Load and Stress Testing • Load Testing: Evaluate the system’s capacity to handle large amounts of data during short time periods. • Stress Testing: Evaluate the system’s capacity to handle large numbers of processing transactions during peak periods.

Load and Stress Testing (Cont…) • Eliminates surprises and helps ensure that a system will be able to perform under load. • Without testing, it is impossible to know what might happen when a site has to function under a heavy load. • Will response time degrade slowly or drop off precipitously? • At what point might a Web site crash completely? To conduct load testing on a Web site: • Apply stress to the Web site by simulating real users and real activity. • Monitor response time as load is increased. • Perform capacity testing to determine the maximum load a Web site can handle before failing. • Capacity testing reveals a system's ultimate limit.

Security Testing • Systems are protected at different levels based on the security requirements of the organization. • Security testing attempts to verify that the protection mechanisms built into a system will protect it from improper penetration. • The tester plays the role of an individual who wants to penetrate the system: • Acquire passwords through external clerical means. • Overwhelm the system, thereby denying service to others. • Purposely cause system errors, hoping to penetrate. • Browse through insecure data, hoping to find the key to system entry. • Given enough time and resources, good security testing will ultimately penetrate a system.

Recovery Testing • Recovery testing is a system test that forces the software to fail in a variety of ways and verifies that recovery is properly performed. • Automatic recovery: data recovery, checkpointing mechanisms and restart are evaluated for correctness. • Human intervention: mean time to repair is evaluated to determine whether it is within acceptable limits.

Compatibility Testing • Testing a Web site, or Web-delivered application for compatibility with a range of the leading browsers and desktop hardware platforms • Testing an application on a range of different operating systems, and in combination with other applications • Verifying the compatibility and interoperability of a set of enterprise-class applications in a complex hardware and software environment designed to meet your specific business needs • Testing a peripheral with a wide range of PCs • Testing a server with different add-in cards, applications, and operating systems

Scalability Testing Scalability testing differs from simple load testing in that it focuses on the performance of your Web sites, hardware and software products, and internal applications at all the stages from minimum to maximum load.

Usability Testing • “Usability is a quality attribute that assesses how easy user interfaces are to use. The word 'usability' also refers to methods for improving ease-of-use during the design process”. • “Usability is the measure of the quality of a user's experience when interacting with a product or system”

Mutation Testing • Mutation testing is based upon seeding the implementation with a fault (mutating it). • Closely related to error seeding. • A method used to measure the effectiveness of testing. • When the tester finds a certain percentage of the seeded defects, it is an indication of that effectiveness. • Determine a testing technique that identifies the seeded fault. • The mutated program is called a mutant. • If the testing technique (test case) identifies the mutant, it is said to kill the mutant. • The idea behind this approach is that if the test cases find these small differences, they are likely to be good at finding real faults.
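Killing a mutant can be sketched in a few lines. The functions below are hypothetical and written only for this illustration: the seeded fault changes `>=` to `>`, and two small test suites are compared by whether they pass on the original and fail on the mutant.

```python
def is_adult(age):
    """Original implementation (hypothetical example)."""
    return age >= 18


def is_adult_mutant(age):
    """Mutant: the seeded fault replaces >= with >."""
    return age > 18


def weak_suite(func):
    """Passes if func is correct on inputs far away from the boundary."""
    return func(30) is True and func(5) is False


def strong_suite(func):
    """Adds the boundary input 18, which distinguishes the mutant."""
    return weak_suite(func) and func(18) is True


def kills(suite, original, mutant):
    """A suite kills a mutant if it passes on the original and fails on the mutant."""
    return suite(original) and not suite(mutant)


if __name__ == "__main__":
    print("weak suite kills mutant:", kills(weak_suite, is_adult, is_adult_mutant))
    print("strong suite kills mutant:", kills(strong_suite, is_adult, is_adult_mutant))
```

The weak suite lets the mutant survive, which signals that the suite is missing a boundary test; the strong suite kills it.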

Smoke Testing • Verify the major functionality of the software without concern for finer details. • Non-exhaustive software testing, ascertaining that the most crucial functions of a program work. • In a retailing application, core processes of Order Entry, Credit Card processing and Invoicing could be tested quickly.

Sanity Testing • Performed when cursory testing is sufficient to prove the application is functioning. • Normally includes a set of core tests of basic GUI functionality, connectivity to the database, application servers, printers. • Used prior to release as final inspection and after applying minor patches.

Ad hoc Testing • Testing done without any defined process. • No specified rules are required. • It is up to the tester to test the software in his or her own way. • May be done by “end-users” with no knowledge of formal testing or the specific product. • Will generate new test scenarios that exercise the product along new code paths.

Beta Testing • Carried out on a software product at the user’s site, in the absence of the development team. • About 400,000 beta test sites were used for testing the Windows 95 operating system. • Enables the development team to fix several defects in a short time, before the official launch of a product.

Gorilla Testing • Gorilla Testing is used to test the GUI of the software package. • Assume that the software is given to the Gorilla, what does it do? • It presses the key randomly! • While we are testing GUI (particularly for game software), this type of testing is very useful.

Structural Testing: Testing of Software Structure / Architecture • A third target of testing is the structure of the system or component. • Structural testing assumes that the procedural design is known to us. • Test cases are designed to test the internal logic of the program. • Disadvantages: ➢ Does not ensure that the user’s requirements are met ➢ Does not establish whether the decisions / conditions / paths / statements themselves are sufficient

Testing Related to Changes • Confirmation / Retesting: ➢ The process of re-executing all the failed test cases to check whether the development team has really fixed the defect. • Regression Testing: ➢ The re-execution of some or all of the test cases to check that 1) any addition to the software, 2) any deletion from the software, or 3) any update or defect fix does not affect the functionality of unchanged modules.

Globalization / Localization Testing • Globalization testing checks proper functionality of the product with any of the culture / locale settings, using every type of international input possible. • The localization process also includes translating any help content associated with the application. • Most localization teams use specialized tools that aid the localization process by recycling translations of recurring text and resizing application UI elements to accommodate localized text and graphics. • Localization testing checks the quality of a product’s localization for a particular target culture / locale.

Benchmarking Testing • Benchmarking involves activities that compare an organization's product with a similar market-leading one. • The comparison is based on the following factors: ➢ Performance parameters ➢ User friendliness ➢ Richness of features

Maintenance Testing • Why maintain software? ➢ Model of reality: As the reality changes, the software must either adapt or die. ➢ Pressure from satisfied users to extend the functionality of the product. ➢ Software is much easier to change than hardware. As a result, changes are often made to the software whenever possible. ➢ Successful software survives well beyond the lifetime of the environment for which it was written.

Maintenance Testing (Cont.) • Types of maintenance: ➢ Corrective maintenance is maintenance performed to correct faults in hardware or software [IEEE 1990]. ➢ Adaptive maintenance is software maintenance performed to make a computer program usable in a changed environment [IEEE 1990]. ➢ Perfective maintenance is software maintenance performed to improve the performance, maintainability, or other attributes of a computer program [IEEE 1990]. ➢ Preventive maintenance is maintenance performed for the purpose of preventing problems before they occur [IEEE 1990]. • Determining how the existing system may be affected by changes is called impact analysis, and is used to help decide how much regression testing to do.

Black-box Testing

Test Case Design • Beyond the psychological and economic aspects of testing, the most important aspect in software testing is the design of effective test cases. • Complete testing is impossible and a test of any program must be necessarily incomplete. • Obvious strategy is to try to reduce this incompleteness as much as possible.

Design Strategy • Given the constraints on time, cost, etc., the key question is: ➢ What subset of all possible test cases has the highest probability of detecting the most errors?

What is a test case? • A set of inputs, execution preconditions, and expected outcomes developed for a particular objective, such as to exercise a particular program path or to verify compliance with a specific requirement. • Main Approaches • Two main approaches to designing test cases are • Black-box testing • White-box testing

Black-box testing • Tester views the program as a Black-box. • Tester is completely unconcerned about the internal behaviour or structure of the program. • Tester is only interested in finding circumstances in which the program does not behave according to its specifications. • Test data are solely derived from the specifications.

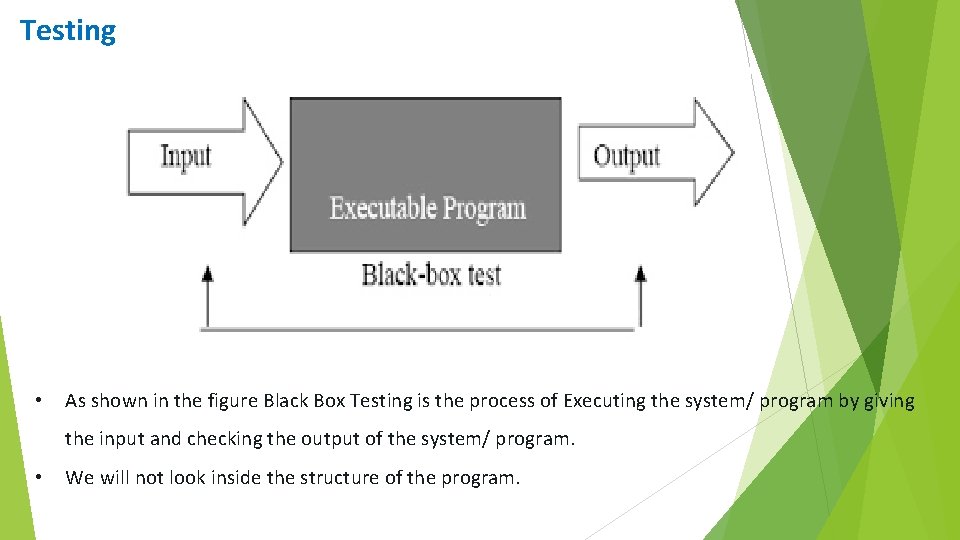

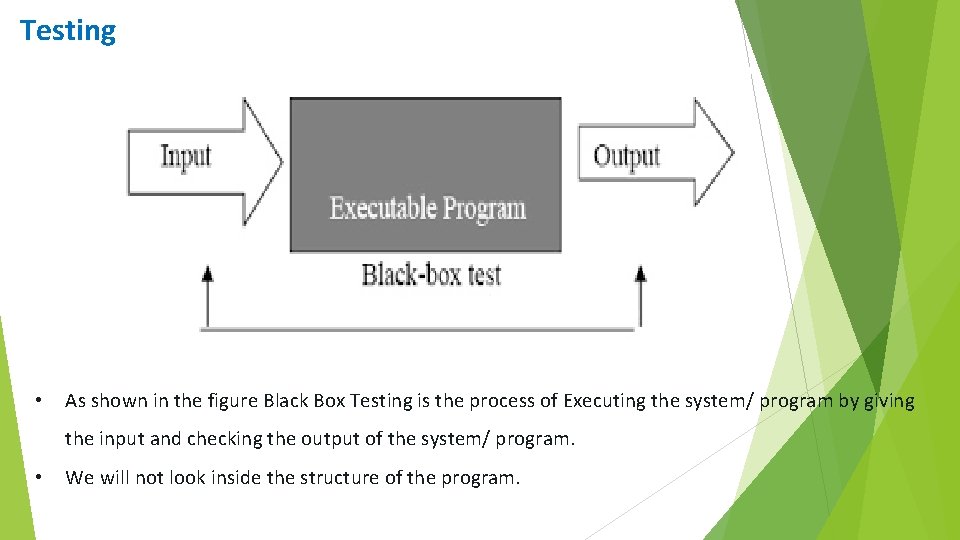

Testing • As shown in the figure Black Box Testing is the process of Executing the system/ program by giving the input and checking the output of the system/ program. • We will not look inside the structure of the program.

Why Black Box Testing…. ? • Purpose of Black Box Testing is to cause failures in order to make the faults visible. • Another purpose is to assess the overall quality level of the code.

Why the name Black Box…? • The software tester does not have access to the source code. • The code is considered to be a “Black Box” to the tester, who cannot see inside the box. • Based on the specifications, the tester knows what outcomes to expect from the black box.

Black Box Testing • Black Box Testing is also called Functionality Testing, Data-Driven Testing or Closed Box Testing. • It treats a system or component as something whose inputs, outputs and general functions are known, but whose contents or implementation are unknown. • The tester only knows the inputs and what the expected outcomes should be, not how the program arrives at those outputs. • The tester never examines the programming code and does not need any knowledge of the program other than its specifications.

Testing Strategies / Techniques • Black box testing should make use of randomly generated inputs (only a test range should be specified by the tester), to eliminate any guess work by the tester as to the methods of the function. • Data outside of the specified input range should be tested to check the robustness of the program. • Boundary cases should be tested (top and bottom of specified range) to make sure the highest and lowest allowable inputs produce proper output. • Number ZERO should be tested when numerical data is to be input. • Stress testing should be performed (try to overload the program with inputs to see where it reaches its maximum capacity), especially with real time systems. • Crash testing should be performed to see what it takes to bring the system down.

Advantages • More effective on larger units of code than glass box testing. • Tester needs no knowledge of implementation, including specific programming languages. • Tester and Programmer are independent of each other. • Tests are done from a user's point of view. • Test cases can be designed as soon as the specifications are complete.

Disadvantages • Only a small number of possible inputs can actually be tested, to test every possible input stream would take nearly forever. • Without clear and concise specifications, test cases are hard to design. • Could result in unnecessary repetition of test inputs if the tester is not informed of test cases already tried. • May leave many program paths untested.

Black-Box testing Methods • Equivalence partitioning • Boundary-Value Analysis • Cause-effect graphing • Error-Guessing

Equivalence Partitioning • It is the process of dividing the input domain into classes (valid and invalid) such that all values in a class are expected to be treated the same way, so that one representative per class reduces the number of test cases. • This technique partitions the data into equivalent sets. It optimizes the testing required and helps to avoid redundancy. • Where a deposit rate is input, it may have a valid range of 0% to 15%. • There is one positive test, represented by the ‘valid’ equivalent set: ➢ 0 <= percentage <= 15 • There are two negative tests, represented by the two ‘invalid’ equivalent sets: ➢ percentage < 0 ➢ percentage > 15
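The deposit-rate partitions above can be written down directly. This is a minimal sketch: `classify_rate` and the representative values are assumptions chosen for illustration, with one value picked from each of the three classes.

```python
def classify_rate(percentage):
    """Return the name of the equivalence class a deposit rate falls into."""
    if percentage < 0:
        return "invalid_low"
    if percentage > 15:
        return "invalid_high"
    return "valid"


# One representative per class is assumed to stand in for the whole class.
REPRESENTATIVES = {"invalid_low": -5, "valid": 7.5, "invalid_high": 20}


def run_partition_tests():
    """Check that every representative lands in its expected class."""
    return all(classify_rate(value) == expected
               for expected, value in REPRESENTATIVES.items())


if __name__ == "__main__":
    print("all partitions behave as expected:", run_partition_tests())
```

Three test inputs cover the whole input domain at the class level, which is the reduction in test cases the technique aims for.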

Boundary value analysis • It is the process of checking inputs at the boundaries, one step below each boundary and one step above it. • This technique ensures that minimum, borderline, and maximum data values for a particular variable or equivalence class are taken into account.
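As a sketch, boundary values can be generated mechanically from a range. The example reuses the 0% to 15% deposit-rate range from the equivalence partitioning slide; `boundary_values`, `accepts_rate` and the step size of 1 are assumptions made for the illustration.

```python
def boundary_values(low, high, step=1):
    """Return each boundary plus one step below and one step above it."""
    return [low - step, low, low + step, high - step, high, high + step]


def accepts_rate(percentage):
    """Hypothetical validator for the 0 to 15 percent range."""
    return 0 <= percentage <= 15


CASES = boundary_values(0, 15)
RESULTS = {value: accepts_rate(value) for value in CASES}

if __name__ == "__main__":
    for value, accepted in RESULTS.items():
        print(f"{value:>3} -> {'accepted' if accepted else 'rejected'}")
```

Only the two values just outside the range should be rejected; an off-by-one mistake in the validator would show up as a wrong answer at 0 or 15.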

Cause effect Graph • A functional or specification-based technique: a systematic approach to selecting a set of high-yield test cases that explore combinations of input conditions. What is required to drive the self to meet the requirement? • A Boolean graph linking causes and effects. The graph is actually a digital-logic circuit (a combinatorial logic network) using a simpler notation than standard electronics notation. • Cause Effect Graphing: This is a test data selection technique. The input and output domains are partitioned into classes, and analysis is performed to determine which input classes cause which effect. A minimal set of inputs is chosen which will cover the entire effect set. It is a systematic method of generating test cases representing combinations of conditions.
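The idea of linking causes to effects with Boolean logic and then choosing inputs that cover every effect can be sketched as below. The cash-withdrawal causes and effects are hypothetical, and the greedy selection is a simple stand-in for the analysis step: it returns a small covering set, not a provably minimal one in general.

```python
from itertools import product

CAUSES = ("card_valid", "pin_correct", "balance_sufficient")


def effects(card_valid, pin_correct, balance_sufficient):
    """Boolean cause-to-effect rules for a hypothetical cash withdrawal."""
    authenticated = card_valid and pin_correct  # intermediate node of the graph
    return {
        "dispense_cash": authenticated and balance_sufficient,
        "reject_login": not authenticated,
        "show_insufficient_funds": authenticated and not balance_sufficient,
    }


# Enumerate every combination of causes to build the full decision table.
DECISION_TABLE = [(combo, effects(*combo))
                  for combo in product([True, False], repeat=len(CAUSES))]


def covering_rows(table):
    """Greedy pick: keep a row only if it triggers an effect not yet covered."""
    covered, chosen = set(), []
    for combo, result in table:
        new_effects = {name for name, fired in result.items() if fired} - covered
        if new_effects:
            chosen.append(combo)
            covered |= new_effects
    return chosen


if __name__ == "__main__":
    for combo in covering_rows(DECISION_TABLE):
        print(dict(zip(CAUSES, combo)))
```

Eight combinations of causes collapse to three test cases, one per effect.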

Error Guessing • Error guessing is an ad hoc approach, based on intuition and experience, to identify tests that are likely to expose errors. • This is a Test data selection technique. The selection criterion is to pick values that seem likely to cause errors.

Exploratory testing • Exploratory testing is an approach to software testing that is concisely described as simultaneous learning, test design and test execution. • Cem Kaner, who coined the term in 1983, now defines exploratory testing as "a style of software testing that emphasizes the personal freedom and responsibility of the individual tester to continually optimize the quality of his/her work by treating test-related learning, test design, test execution, and test result interpretation as mutually supportive activities that run in parallel throughout the project." • Exploratory testing is often thought of as a black box testing technique. Instead, those who have studied it consider it a test approach that can be applied to any test technique, at any stage in the development process. • The key is neither the test technique nor the item being tested or reviewed; the key is the cognitive engagement of the tester, and the tester's responsibility for managing his or her time.

Specification-based testing • Specification-based testing aims to test the functionality of software according to the applicable requirements. Thus, the tester inputs data into, and only sees the output from, the test object. This level of testing usually requires thorough test cases to be provided to the tester, who then can simply verify that for a given input, the output value (or behavior), either "is" or "is not" the same as the expected value specified in the test case. • Specification-based testing is necessary, but it is insufficient to guard against certain risks.

State Transition testing • State transition testing is used where some aspect of the system can be described in what is called a “finite state machine”. This simply means that the system can be in a (finite) number of different states, and the transitions from one state to another are determined by the rules of the “machine”. This is the model on which the system and the tests are based. Any system where you get a different output for the same input, depending on what has happened before, is a finite state system. • Models each state a system can exist in • Models each state transition • Defines for each state transition • start state • input • output • finish state
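A state transition model can be captured as a table that records, for each transition, the start state, input, output and finish state. The turnstile below is a hypothetical example chosen for illustration; the test sequence is picked so that every transition is exercised at least once.

```python
# Each entry maps (start state, input) to (output, finish state).
TRANSITIONS = {
    ("locked", "coin"): ("unlock", "unlocked"),
    ("locked", "push"): ("alarm", "locked"),
    ("unlocked", "push"): ("lock", "locked"),
    ("unlocked", "coin"): ("refund", "unlocked"),
}


def run(start, inputs):
    """Feed inputs to the machine; return the final state and the outputs seen."""
    state, outputs = start, []
    for symbol in inputs:
        output, state = TRANSITIONS[(state, symbol)]
        outputs.append(output)
    return state, outputs


def transitions_covered(start, inputs):
    """Return the set of (state, input) pairs exercised by a test sequence."""
    state, seen = start, set()
    for symbol in inputs:
        seen.add((state, symbol))
        state = TRANSITIONS[(state, symbol)][1]
    return seen


TEST_SEQUENCE = ["push", "coin", "coin", "push"]

if __name__ == "__main__":
    print(run("locked", TEST_SEQUENCE))
    print(transitions_covered("locked", TEST_SEQUENCE) == set(TRANSITIONS))
```

The same input, `push`, produces `alarm` in the locked state and `lock` in the unlocked state, which is the history-dependent behaviour that marks a finite state system.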

Grey box testing • Grey box testing involves having knowledge of internal data structures and algorithms for purposes of designing the test cases, but testing at the user, or black-box level. • Manipulating input data and formatting output do not qualify as grey box, because the input and output are clearly outside of the "black-box" that we are calling the system under test. • This distinction is particularly important when conducting integration testing between two modules of code written by two different developers, where only the interfaces are exposed for test. • However, modifying a data repository does qualify as grey box, as the user would not normally be able to change the data outside of the system under test. • Grey box testing may also include reverse engineering to determine, for instance, boundary values or error messages.

White-box Testing

White-Box Testing • Tester is permitted to examine the internal structure of the program. • Tester derives test data from an examination of the program logic. • Common methods are statement coverage, decision coverage and condition coverage.

White-Box Testing is… … an approach that examines the program structure and derives test data from the program logic. White Box Testing is also known as … • Logic Driven Testing • Structured Testing • Glass Box Testing

White Box Testing is to Derive test cases: • Based on the program structure. • To guarantee that all the independent paths within a program module have been tested.

White-Box Testing Methods • Statement Coverage. • Decision/Condition-Coverage. • Path Coverage.

White Box Testing: other names • White-box testing is also known as clear box testing, glass box testing, transparent box testing, and structural testing.

Branch Testing • A testing technique to satisfy coverage criteria which require that, for each decision point, each possible branch (outcome) is executed at least once. • Contrast with path testing and statement testing. • Branch Coverage: A test coverage criterion which requires that, for each decision point, each possible branch be executed at least once.

Control Flow testing • Control flow (or alternatively, flow of control) refers to the order in which the individual statements, instructions, or function calls of an imperative or a declarative program are executed or evaluated. • Within an imperative programming language, a control flow statement is a statement whose execution results in a choice being made as to which of two or more paths should be followed. For non-strict functional languages, functions and language constructs exist to achieve the same result, but they are not necessarily called control flow statements. • The kinds of control flow statements supported by different languages vary, but can be categorized by their effect: • continuation at a different statement (unconditional branch or jump), • executing a set of statements only if some condition is met (choice, i.e. conditional branch), • executing a set of statements zero or more times, until some condition is met (i.e. loop, the same as conditional branch), • executing a set of distant statements, after which the flow of control usually returns (subroutines, coroutines, and continuations), • stopping the program, preventing any further execution (unconditional halt).

Control flow testing • Statement Coverage: Executing each statement at least once gives statement coverage. • Decision Coverage: Executing each decision so that it evaluates to both true and false at least once gives decision coverage. • Condition Coverage: Executing each individual condition so that it evaluates to both true and false at least once gives condition coverage. • Path Coverage: Executing every possible path within the code at least once gives path coverage.
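The difference between these criteria shows up on even a tiny function. In the sketch below, `discount` is a hypothetical function with one decision made of two conditions, and `outcomes` records which decision and condition outcomes a test set exercises. The conditions are evaluated independently of short-circuiting, which is an assumption of this illustration.

```python
def discount(is_member, total):
    """Hypothetical function: one decision built from two conditions."""
    rate = 0
    if is_member and total > 100:
        rate = 10
    return rate


def outcomes(tests):
    """Collect the decision and condition outcomes exercised by a test set."""
    decisions, cond_member, cond_total = set(), set(), set()
    for is_member, total in tests:
        cond_member.add(is_member)                       # condition 1 outcome
        cond_total.add(total > 100)                      # condition 2 outcome
        decisions.add(discount(is_member, total) == 10)  # whole decision outcome
    return decisions, cond_member, cond_total


FULL = {True, False}

STATEMENT_SUITE = [(True, 150)]               # runs every statement
DECISION_SUITE = [(True, 150), (False, 150)]  # decision is both true and false
CONDITION_SUITE = [(True, 50), (False, 150)]  # each condition is both true and false

if __name__ == "__main__":
    for name, suite in [("statement", STATEMENT_SUITE),
                        ("decision", DECISION_SUITE),
                        ("condition", CONDITION_SUITE)]:
        print(name, outcomes(suite))
```

The statement suite never takes the false branch, the decision suite never makes `total > 100` false, and the condition suite never makes the whole decision true, so none of the three criteria implies the others here.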

Path Testing • Testing to satisfy coverage criteria that each logical path through the program be tested. Often, paths through the program are grouped into a finite set of classes. • One path from each class is then tested. • Paths are derived from some graph construct. • When a test case executes, it takes a path. • The huge number of paths implies some simplification is needed. • Big problem: infeasible paths. • By itself, path testing can lead to a false sense of security. Path Coverage: Execute every possible path of a program • Strongest white box criterion.
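Deriving paths from a graph, and seeing how quickly their number grows, can be sketched as follows. The control-flow graph is hypothetical: two if/else decisions in sequence, with node names invented for the example.

```python
# Hypothetical control-flow graph: node -> list of successor nodes.
GRAPH = {
    "entry": ["a", "b"],   # first decision
    "a": ["join"],
    "b": ["join"],
    "join": ["c", "d"],    # second decision
    "c": ["exit"],
    "d": ["exit"],
    "exit": [],
}


def all_paths(graph, node, end):
    """Enumerate every path from node to end in an acyclic graph."""
    if node == end:
        return [[node]]
    return [[node] + rest
            for successor in graph[node]
            for rest in all_paths(graph, successor, end)]


PATHS = all_paths(GRAPH, "entry", "exit")

if __name__ == "__main__":
    for path in PATHS:
        print(" -> ".join(path))
    print("number of paths:", len(PATHS))
```

Two sequential decisions give 2 x 2 = 4 paths, and k sequential decisions give 2^k, which is why paths are usually grouped into classes. The enumeration is purely structural, so some listed paths may be infeasible in a real program.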

Statement Testing: Testing to satisfy the criterion that each statement in a program be executed at least once during program Testing. Statement Coverage: Execute every statement of a program • Weakest White Box criterion

White Box, Black Box and Grey/Gray Box Software testing methods are traditionally divided into white-box and black-box testing. These two categories describe the point of view that a test engineer takes when designing test cases. In addition, there is gray box testing, which is a combination of both. ➢ Black-box testing - Behavior-oriented. ➢ White-box testing - Structure-oriented. ➢ Gray box testing - Projects use a combination of both approaches.