AGI Architectures & Control Mechanisms

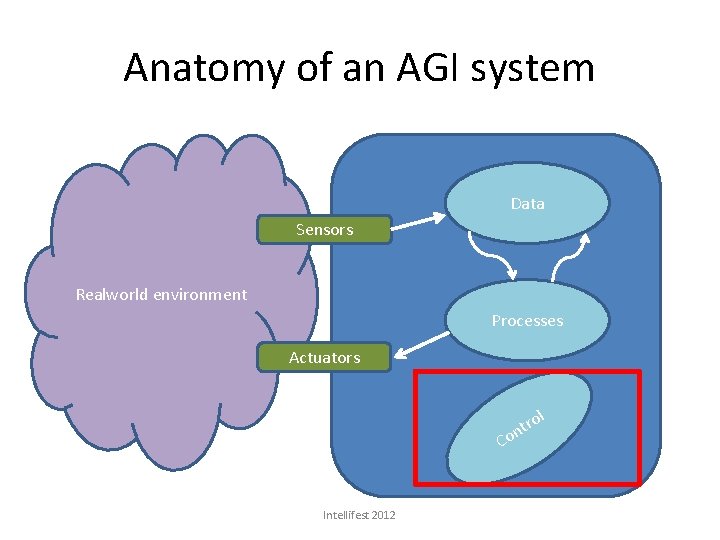

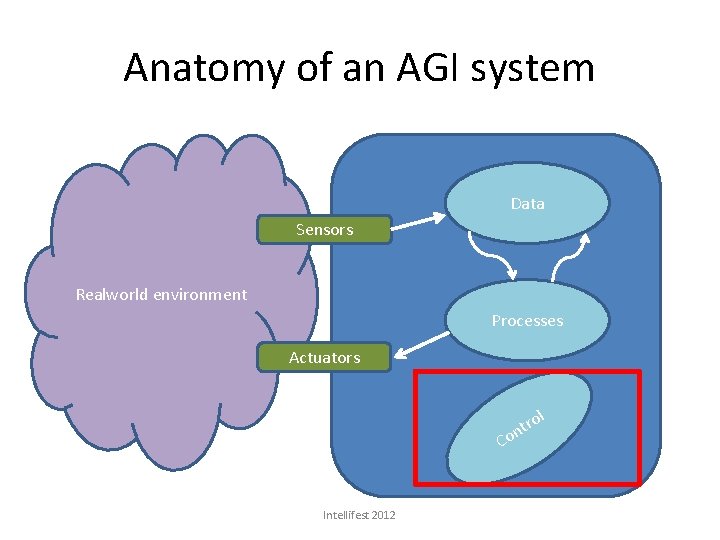

Anatomy of an AGI system

Diagram labels: real-world environment, sensors, data, processes, control, actuators.

Intellifest 2012

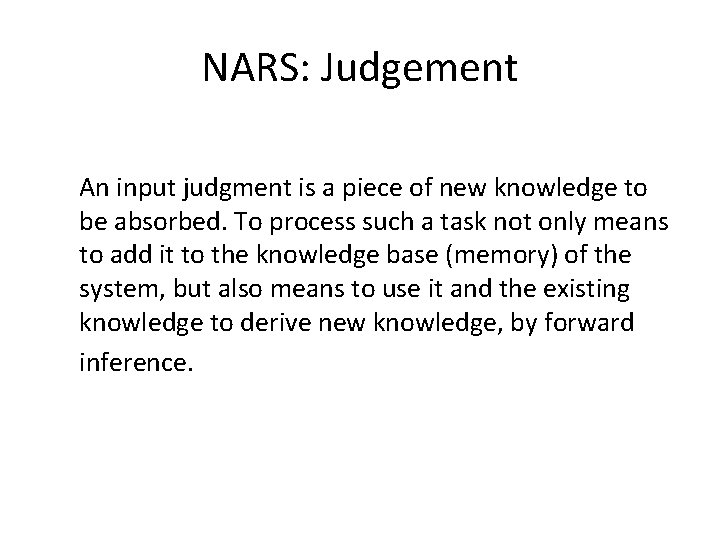

NARS: Judgment

An input judgment is a piece of new knowledge to be absorbed. Processing such a task means not only adding it to the system's knowledge base (memory), but also using it together with existing knowledge to derive new knowledge by forward inference.

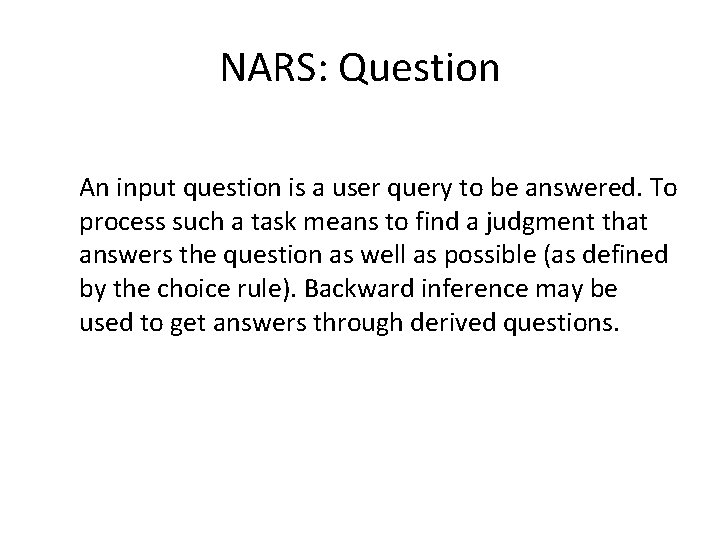

NARS: Question

An input question is a user query to be answered. Processing such a task means finding the judgment that best answers the question, as defined by the choice rule. Backward inference may be used to obtain answers through derived questions.

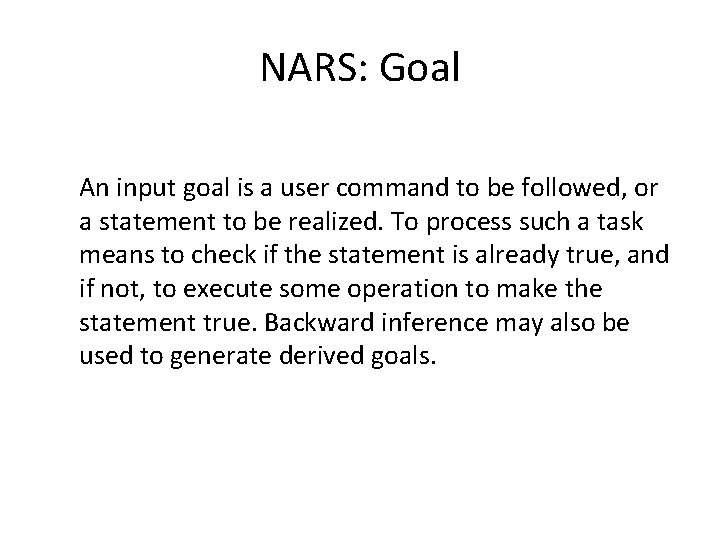

NARS: Goal

An input goal is a user command to be followed, or a statement to be realized. Processing such a task means checking whether the statement is already true and, if it is not, executing an operation to make it true. Backward inference may also be used to generate derived goals.

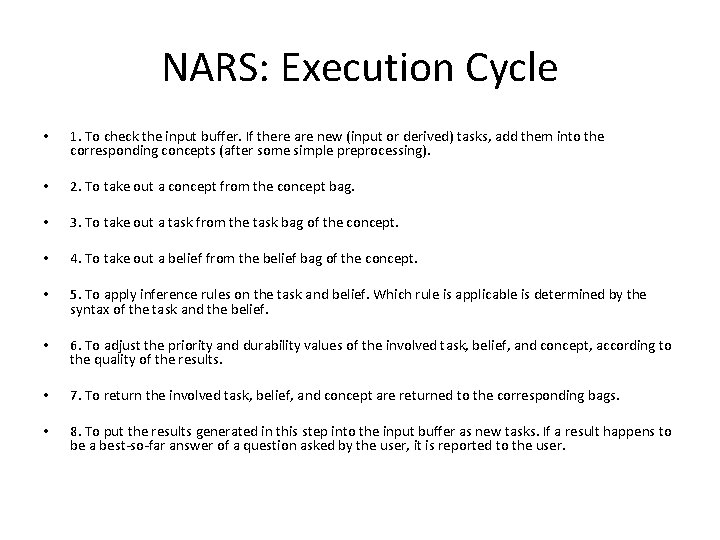

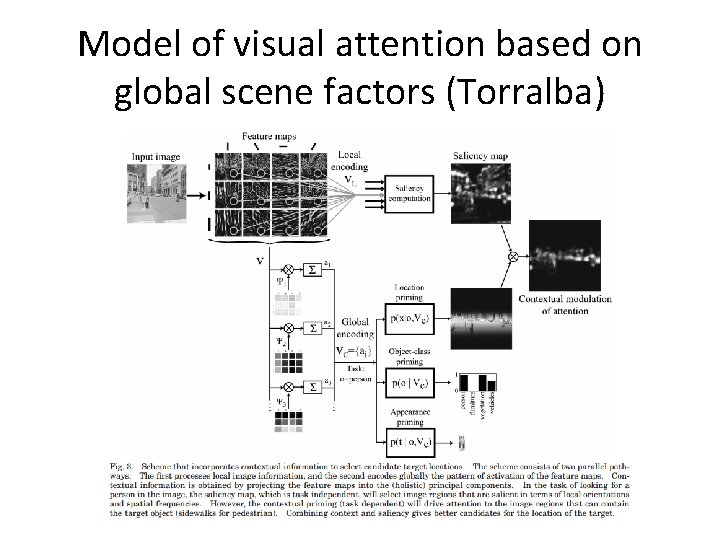

NARS: Control Mechanism

- Responsible for resource management
  - Choosing premises and inference rules in each inference step
  - Memory allocation

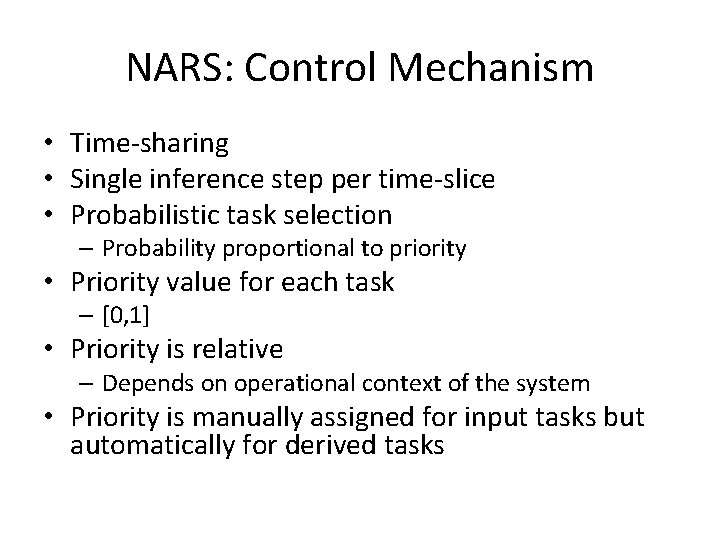

NARS: Control Mechanism

- Time-sharing
- Single inference step per time slice
- Probabilistic task selection
  - Probability proportional to priority
- Priority value for each task
  - In the range [0, 1]
- Priority is relative
  - Depends on the operational context of the system
- Priority is assigned manually for input tasks but automatically for derived tasks
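
The selection rule on this slide can be illustrated with a minimal Python sketch. It assumes tasks are held in a plain dictionary mapping a task name to its priority; `select_task` and the example priorities are hypothetical names and values chosen for illustration, not part of NARS itself.

```python
import random

def select_task(priorities, rng=random):
    """Pick one task name with probability proportional to its priority."""
    names = list(priorities)
    weights = [priorities[name] for name in names]
    if not names or sum(weights) <= 0:
        raise ValueError("need at least one task with positive priority")
    return rng.choices(names, weights=weights, k=1)[0]

# One inference step per time slice: every slice selects a single task.
rng = random.Random(0)
tasks = {"t1": 0.8, "t2": 0.2}  # illustrative priorities in [0, 1]
counts = {"t1": 0, "t2": 0}
for _ in range(10_000):
    counts[select_task(tasks, rng)] += 1
```

Over many time slices the selection counts should approach the 0.8 : 0.2 ratio of the priorities, although any single slice may pick either task.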

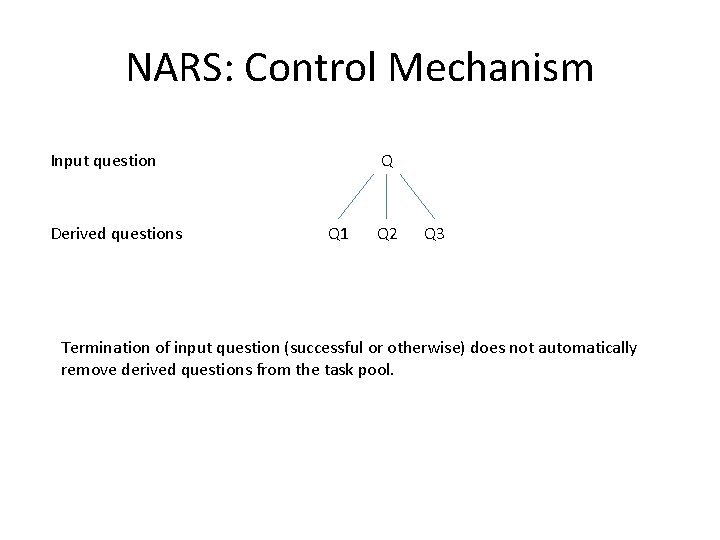

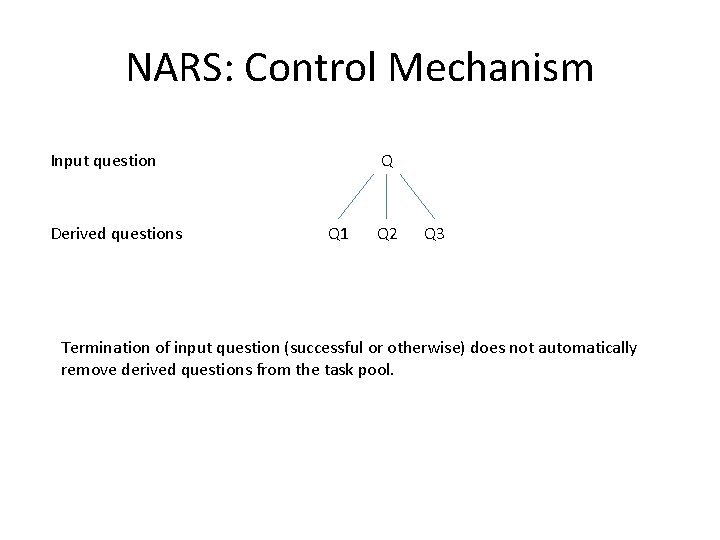

NARS: Control Mechanism

Diagram: an input question Q and its derived questions Q1, Q2, and Q3.

Termination of the input question (successful or otherwise) does not automatically remove derived questions from the task pool.

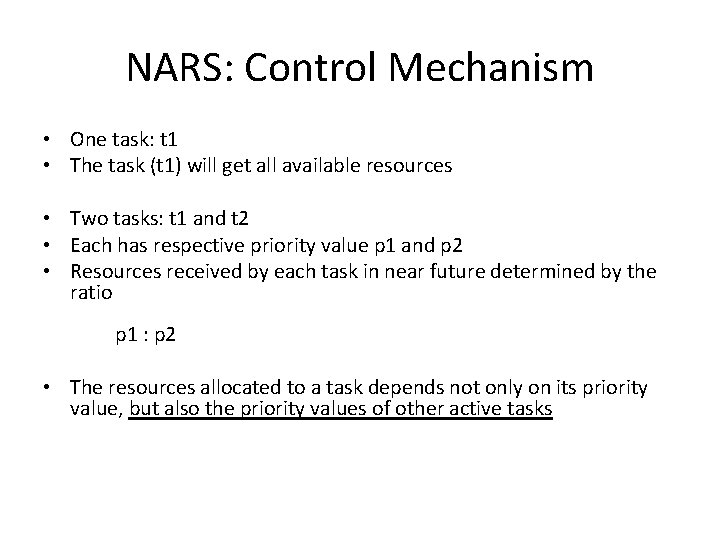

NARS: Control Mechanism

- One task: t1
  - The task (t1) gets all available resources
- Two tasks: t1 and t2
  - They have priority values p1 and p2, respectively
  - The resources each task receives in the near future are determined by the ratio p1 : p2
- The resources allocated to a task depend not only on its own priority value, but also on the priority values of the other active tasks
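
The ratio-based allocation can be written down as expected resource shares. This is a small sketch under the assumption that a task's long-run share is simply its priority divided by the sum of all active priorities; `resource_shares` is a hypothetical helper, not a NARS function.

```python
def resource_shares(priorities):
    """Return each task's expected share of resources: p_i / sum of all p."""
    total = sum(priorities.values())
    if total <= 0:
        raise ValueError("need at least one task with positive priority")
    return {name: p / total for name, p in priorities.items()}

alone = resource_shares({"t1": 0.6})                            # t1 gets everything
pair = resource_shares({"t1": 0.6, "t2": 0.3})                  # split 2 : 1
crowded = resource_shares({"t1": 0.6, "t2": 0.3, "t3": 0.9})    # t1's share shrinks
```

The priority of t1 is 0.6 in all three calls, yet its share differs each time, which is the sense in which priority is relative.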

NARS: Control Mechanism

- A task aging factor: durability
  - In the range [0, 1]
- Makes priority values decay gradually
- Task priority: Durability * Priority
- Durability and priority are constantly re-evaluated based on context
  - If a good solution has been found, priority is decreased
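
A minimal sketch of the decay rule, assuming the product Durability * Priority is applied once per aging step. The `Task` class, the `age` method, and the `solution_found` rule (which scales priority down by a hypothetical solution quality in [0, 1]) are illustrative assumptions, not the actual NARS budget functions.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    priority: float    # relative urgency, in [0, 1]
    durability: float  # aging factor, in [0, 1]

    def age(self):
        """Apply one decay step: priority <- durability * priority."""
        self.priority *= self.durability
        return self.priority

    def solution_found(self, quality):
        """Hypothetical rule: the better the solution, the lower the priority."""
        self.priority *= (1.0 - quality)
        return self.priority

task = Task("Q1", priority=0.8, durability=0.5)
history = [task.age() for _ in range(3)]       # roughly 0.4, 0.2, 0.1
after_solution = task.solution_found(0.75)     # priority drops further
```

A durability close to 1 keeps a task competitive for a long time, while a durability close to 0 makes it fade after a few steps.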

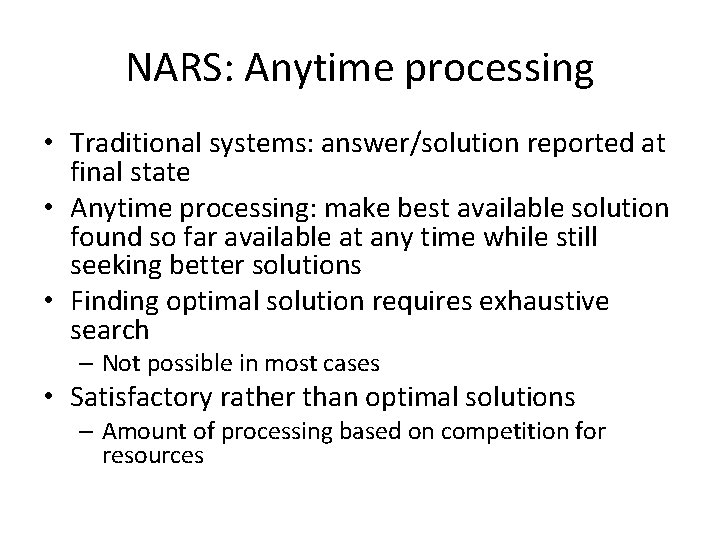

NARS: Anytime processing

- Traditional systems: the answer or solution is reported only at the final state
- Anytime processing: the best solution found so far is available at any time, while the system keeps seeking better ones
- Finding an optimal solution requires exhaustive search
  - Not possible in most cases
- Satisfactory rather than optimal solutions
  - The amount of processing is determined by competition for resources
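
The anytime idea can be sketched with a Python generator that exposes the best-so-far answer after every step. This is a generic illustration, not NARS code: `anytime_best`, `run_with_budget`, and the numeric candidates are assumptions, and the step budget stands in for whatever resources the task wins in competition.

```python
from itertools import islice

def anytime_best(candidates, score):
    """Yield the best candidate seen so far after examining each candidate."""
    best, best_score = None, float("-inf")
    for candidate in candidates:
        s = score(candidate)
        if s > best_score:
            best, best_score = candidate, s
        yield best, best_score  # an answer is available at every step

def run_with_budget(candidates, score, budget):
    """Stop after `budget` steps and report whatever is best by then."""
    answer = None
    for answer in islice(anytime_best(candidates, score), budget):
        pass
    return answer

candidates = [3, 9, 4, 12, 7]
quick = run_with_budget(candidates, lambda x: x, budget=2)     # (9, 9)
thorough = run_with_budget(candidates, lambda x: x, budget=5)  # (12, 12)
```

With a small budget the caller receives a satisfactory answer; only an exhaustive pass over all candidates is guaranteed to return the optimal one.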

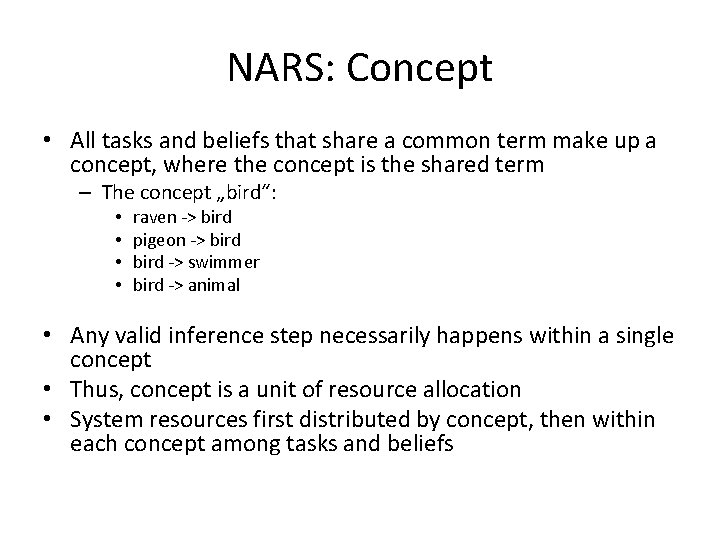

NARS: Memory Structure

- Special data structure for a system with insufficient resources: the bag
- Probabilistic priority queue
- Contains items, each with a priority value
- Two major operations:
  - Put in: inserts the item into the bag; if the bag is full, the existing item with the lowest priority is removed
  - Take out: returns one item from a non-empty bag, chosen probabilistically based on priority
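
The two bag operations can be sketched as a small dictionary-backed class. This is a simplified illustration under stated assumptions: the names `Bag`, `put_in`, and `take_out` mirror the slide, but the linear scans used here are chosen for clarity, and an actual implementation may use a more efficient internal layout.

```python
import random

class Bag:
    """Probabilistic priority queue with a fixed capacity."""

    def __init__(self, capacity, rng=None):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self.items = {}  # item -> priority in [0, 1]
        self.rng = rng or random.Random()

    def put_in(self, item, priority):
        """Insert an item; if the bag is full, evict the lowest-priority
        existing item first. Returns the evicted item, or None."""
        evicted = None
        if item not in self.items and len(self.items) >= self.capacity:
            evicted = min(self.items, key=self.items.get)
            del self.items[evicted]
        self.items[item] = priority
        return evicted

    def take_out(self):
        """Remove and return (item, priority), chosen with probability
        proportional to priority."""
        if not self.items:
            raise KeyError("bag is empty")
        keys = list(self.items)
        weights = [self.items[k] for k in keys]
        if sum(weights) <= 0:
            key = self.rng.choice(keys)  # all priorities zero: pick uniformly
        else:
            key = self.rng.choices(keys, weights=weights, k=1)[0]
        return key, self.items.pop(key)

bag = Bag(capacity=2, rng=random.Random(1))
bag.put_in("a", 0.9)
bag.put_in("b", 0.1)
evicted = bag.put_in("c", 0.5)   # bag is full, so "b" (lowest priority) is removed
item, priority = bag.take_out()  # "a" is more likely than "c", but either may come out
```

Unlike a strict priority queue, low-priority items still get an occasional turn, and eviction bounds the memory used.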

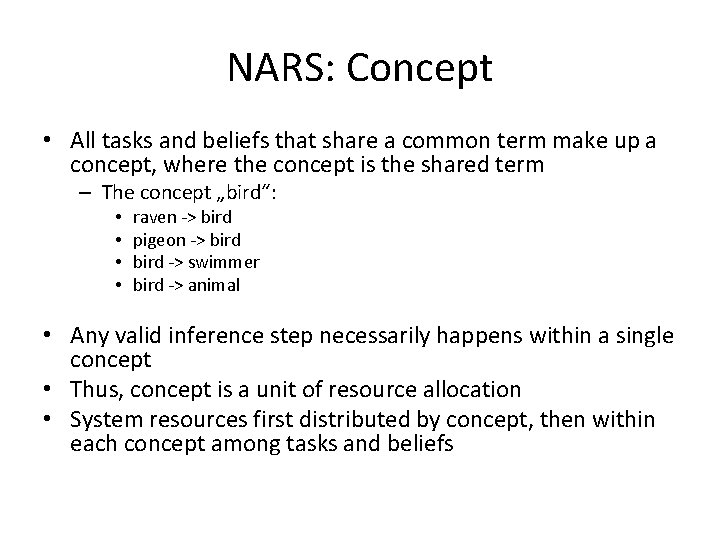

NARS: Concept

- All tasks and beliefs that share a common term make up a concept, where the concept is named by the shared term
  - The concept "bird":
    - raven -> bird
    - pigeon -> bird
    - bird -> swimmer
    - bird -> animal
- Any valid inference step necessarily happens within a single concept
- Thus, a concept is a unit of resource allocation
- System resources are first distributed among concepts, then within each concept among its tasks and beliefs
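
Grouping statements by shared term can be sketched as a simple index. The sketch assumes each statement is a (subject, predicate) pair and uses one reading of the bird example; `build_concepts` is a hypothetical helper for illustration.

```python
from collections import defaultdict

def build_concepts(statements):
    """Index every statement under each term it mentions."""
    concepts = defaultdict(list)
    for subject, predicate in statements:
        concepts[subject].append((subject, predicate))
        if predicate != subject:
            concepts[predicate].append((subject, predicate))
    return dict(concepts)

statements = [
    ("raven", "bird"),
    ("pigeon", "bird"),
    ("bird", "swimmer"),
    ("bird", "animal"),
]
concepts = build_concepts(statements)
bird = concepts["bird"]    # all four statements mention "bird"
raven = concepts["raven"]  # only ("raven", "bird")
```

Because two premises must share a term to be combined, every candidate pair of premises can be found inside one entry of this index, which is why a concept works as a unit of resource allocation.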

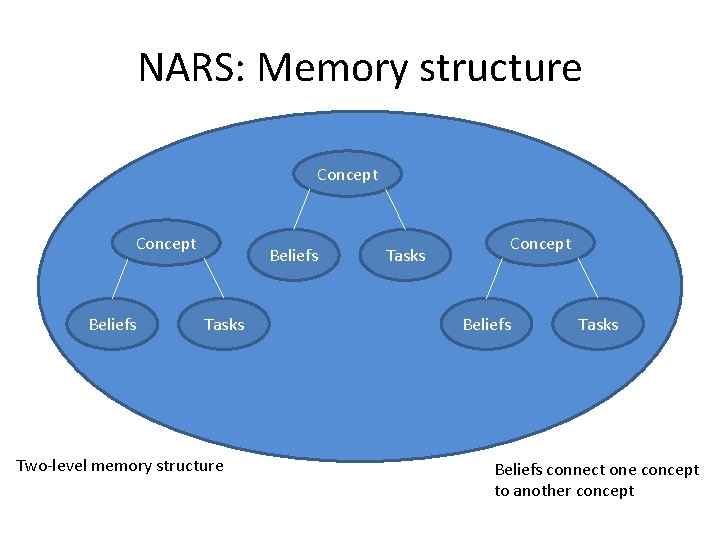

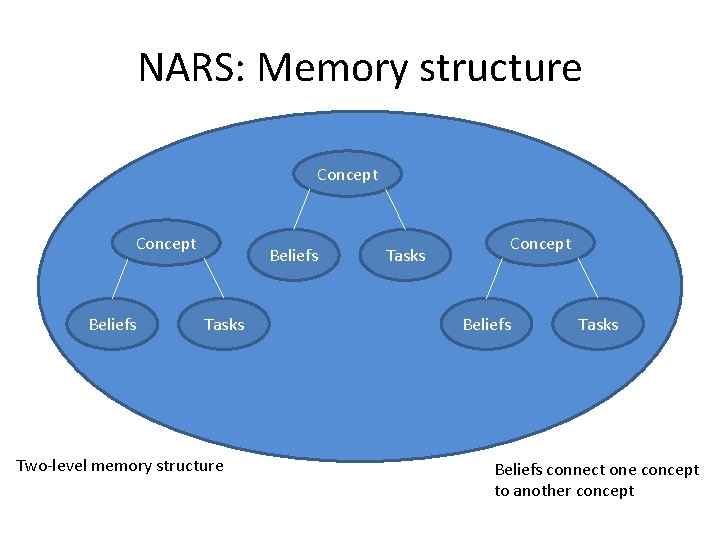

NARS: Memory structure

Diagram: two-level memory structure in which each concept contains tasks and beliefs.

Beliefs connect one concept to another concept.

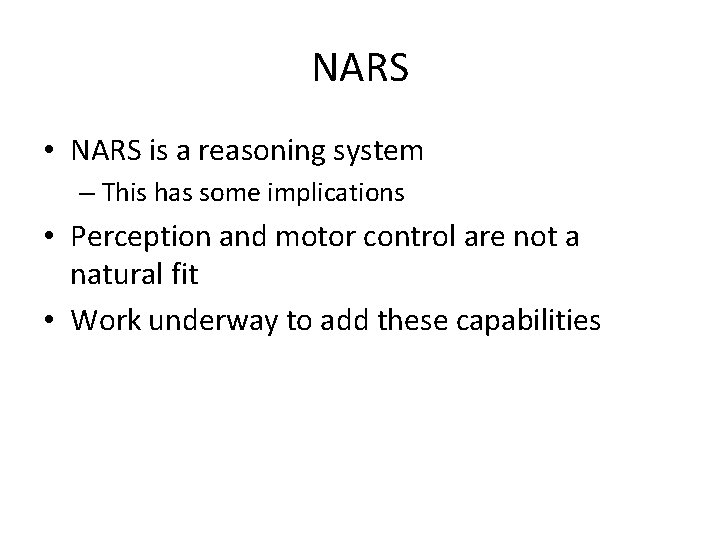

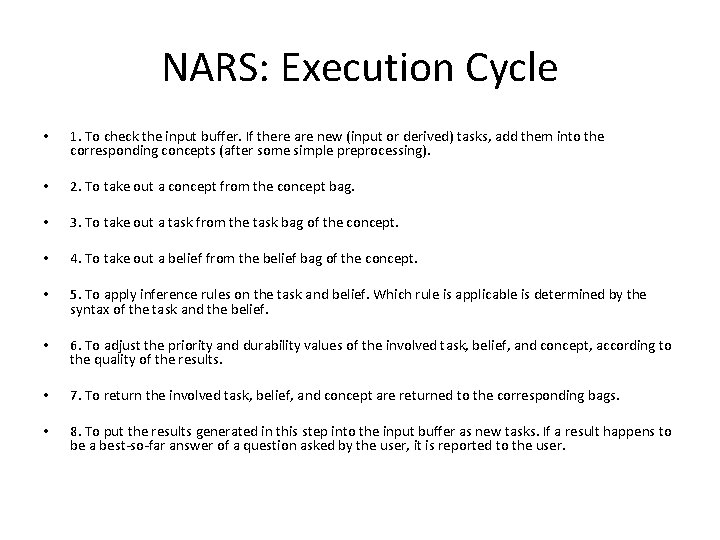

NARS: Execution Cycle

1. Check the input buffer. If there are new (input or derived) tasks, add them to the corresponding concepts (after some simple preprocessing).
2. Take out a concept from the concept bag.
3. Take out a task from the task bag of the concept.
4. Take out a belief from the belief bag of the concept.
5. Apply inference rules to the task and belief. Which rule is applicable is determined by the syntax of the task and the belief.
6. Adjust the priority and durability values of the involved task, belief, and concept, according to the quality of the results.
7. Return the involved task, belief, and concept to the corresponding bags.
8. Put the results generated in this step into the input buffer as new tasks. If a result happens to be the best-so-far answer to a question asked by the user, report it to the user.
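
The eight steps can be sketched end to end in a toy form. This is a heavily simplified illustration under several assumptions: statements are (subject, predicate) pairs, every statement is stored as both a task and a belief, the only inference rule is a hypothetical `deduce`, step 6 is reduced to a fixed decay factor, and questions and user reporting are left out. None of the names below come from an actual NARS implementation.

```python
import random

class Bag:
    """Minimal probabilistic priority queue: maps items to priorities."""
    def __init__(self, capacity, rng):
        self.capacity, self.rng, self.items = capacity, rng, {}

    def __len__(self):
        return len(self.items)

    def put_in(self, item, priority):
        # If full, drop the existing lowest-priority item to make room.
        if item not in self.items and len(self.items) >= self.capacity:
            del self.items[min(self.items, key=self.items.get)]
        self.items[item] = priority

    def take_out(self):
        keys = list(self.items)
        # Floor the weights so a fully decayed item can still be drawn.
        weights = [max(self.items[k], 1e-9) for k in keys]
        key = self.rng.choices(keys, weights=weights, k=1)[0]
        return key, self.items.pop(key)

class Concept:
    def __init__(self, rng, capacity=16):
        self.tasks = Bag(capacity, rng)
        self.beliefs = Bag(capacity, rng)

def deduce(task, belief):
    """Hypothetical rule: (a -> b) and (b -> c) yield (a -> c)."""
    (a, b), (b2, c) = task, belief
    return [(a, c)] if b == b2 and a != c else []

def cycle(memory, concept_bag, buffer, rng, decay=0.9):
    # Step 1: file buffered tasks under the concept of each term they mention.
    while buffer:
        stmt, priority = buffer.pop()
        for term in dict.fromkeys(stmt):
            if term not in memory:
                memory[term] = Concept(rng)
            memory[term].tasks.put_in(stmt, priority)
            memory[term].beliefs.put_in(stmt, priority)
            concept_bag.put_in(term, max(priority, concept_bag.items.get(term, 0.0)))
    if not len(concept_bag):
        return []
    # Steps 2-4: take out a concept, then a task and a belief from it.
    term, c_pri = concept_bag.take_out()
    task, t_pri = memory[term].tasks.take_out()
    belief, b_pri = memory[term].beliefs.take_out()
    # Step 5: apply the (single) inference rule.
    results = deduce(task, belief)
    # Steps 6-7: simplified budget adjustment (fixed decay), then return to bags.
    memory[term].tasks.put_in(task, t_pri * decay)
    memory[term].beliefs.put_in(belief, b_pri)
    concept_bag.put_in(term, c_pri * decay)
    # Step 8: derived results re-enter through the input buffer.
    buffer.extend((result, t_pri * b_pri) for result in results)
    return results

rng = random.Random(0)
memory, concept_bag = {}, Bag(16, rng)
buffer = [(("raven", "bird"), 0.9), (("bird", "animal"), 0.9)]
derived = set()
for _ in range(1000):
    derived.update(cycle(memory, concept_bag, buffer, rng))
```

The derivation of raven -> animal only happens in a cycle that selects the "bird" concept together with the right task and belief, so how soon it appears depends on the priorities rather than on a fixed search order.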

NARS

- NARS is a reasoning system
  - This has some implications
- Perception and motor control are not a natural fit
- Work is underway to add these capabilities

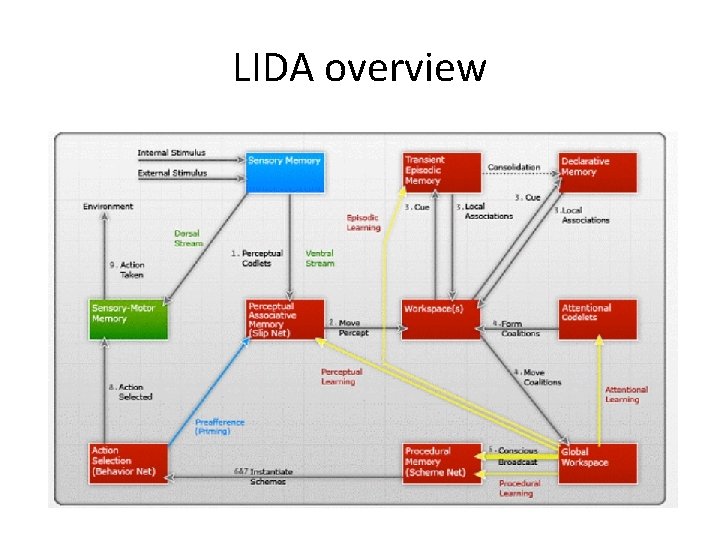

The LIDA cognitive architecture

- Biologically and psychologically inspired
- Hybrid (symbolic/subsymbolic)
- Implementation of global workspace theory

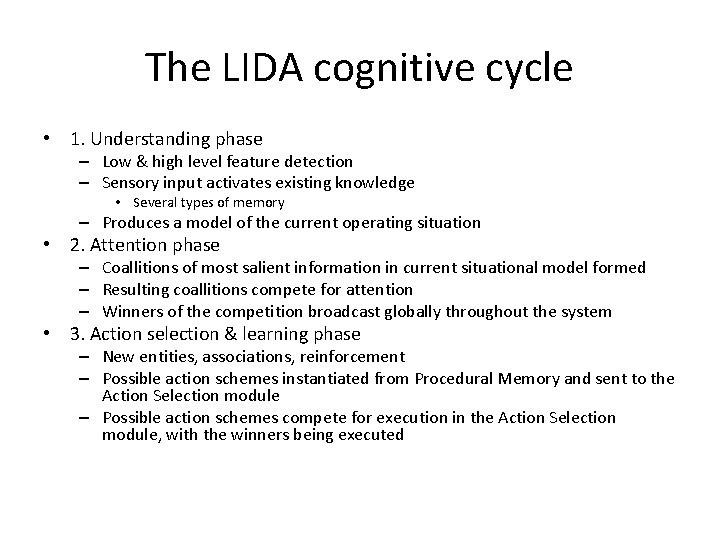

LIDA overview

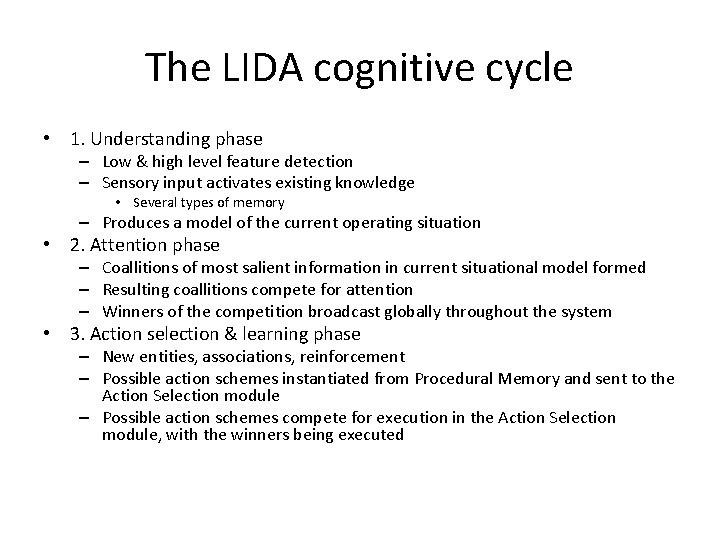

The LIDA cognitive cycle

1. Understanding phase
   - Low- and high-level feature detection
   - Sensory input activates existing knowledge
     - Several types of memory
   - Produces a model of the current operating situation
2. Attention phase
   - Coalitions of the most salient information in the current situational model are formed
   - The resulting coalitions compete for attention
   - Winners of the competition are broadcast globally throughout the system
3. Action selection and learning phase
   - New entities, associations, reinforcement
   - Possible action schemes are instantiated from Procedural Memory and sent to the Action Selection module
   - Possible action schemes compete for execution in the Action Selection module, with the winners being executed
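
The three phases can be pictured with a toy Python sketch. Everything in it is an assumption made for illustration: the salience numbers, the way coalitions are formed by grouping ranked items, and the (context, action, activation) scheme format are hypothetical, learning is omitted, and none of the functions correspond to the LIDA framework's actual API.

```python
def understanding(percepts, memory):
    """Phase 1: sensory input activates existing knowledge, giving a
    situational model of item -> salience."""
    return {item: s for item, s in percepts.items() if item in memory}

def attention(model, coalition_size=2):
    """Phase 2: group the most salient items into coalitions, let them
    compete, and return the winner for global broadcast."""
    ranked = sorted(model, key=model.get, reverse=True)
    coalitions = [tuple(ranked[i:i + coalition_size])
                  for i in range(0, len(ranked), coalition_size)]
    return max(coalitions, key=lambda c: sum(model[x] for x in c), default=())

def action_selection(broadcast, procedural_memory):
    """Phase 3: schemes whose context appears in the broadcast compete;
    the one with the highest activation is executed."""
    candidates = [(action, activation)
                  for context, action, activation in procedural_memory
                  if context in broadcast]
    return max(candidates, key=lambda c: c[1])[0] if candidates else None

percepts = {"ball": 0.9, "red": 0.6, "noise": 0.2, "unknown": 0.8}
memory = {"ball", "red", "noise"}
procedural_memory = [("ball", "grasp", 0.7),
                     ("noise", "turn_head", 0.9),
                     ("red", "look", 0.4)]

model = understanding(percepts, memory)
broadcast = attention(model)
action = action_selection(broadcast, procedural_memory)
```

In this run the "turn_head" scheme has the highest activation but never competes, because its context ("noise") did not win the attention phase and was therefore not broadcast.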

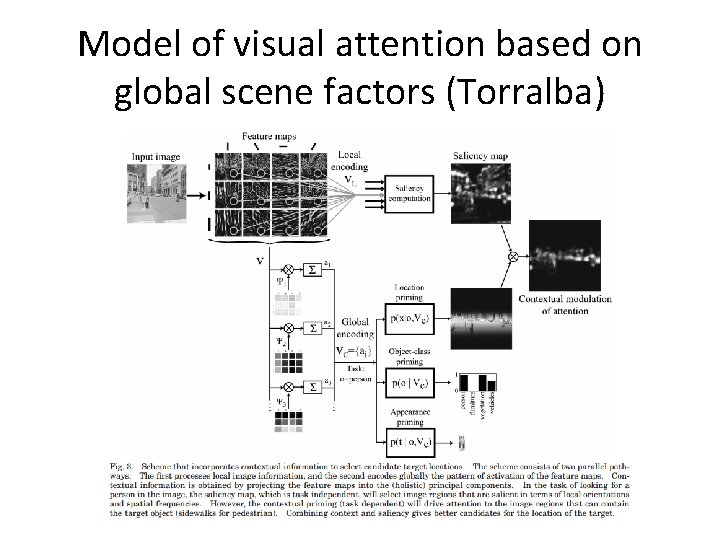

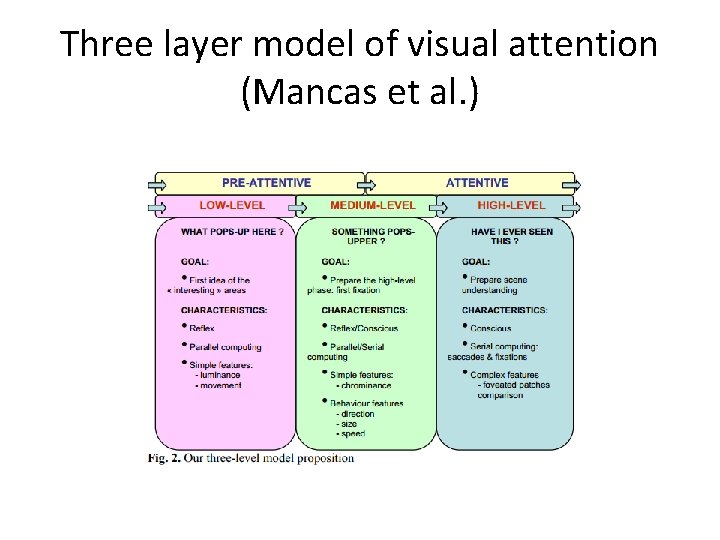

Model of visual attention based on global scene factors (Torralba)

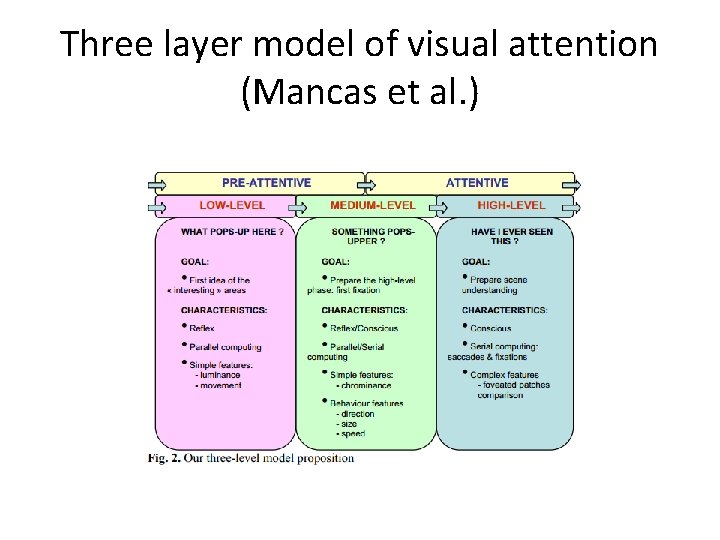

Three-layer model of visual attention (Mancas et al.)