Aggregation of Parallel Computing and Hardware/Software Co-Design Techniques


Aggregation of Parallel Computing and Hardware/Software Co-Design Techniques for High-Performance Remote Sensing Applications

Presenter: Dr. Alejandro Castillo Atoche

2011/07/25, IGARSS'11

School of Engineering, Autonomous University of Yucatan, Merida, Mexico.

Outline

- Introduction
- Previous Work
- HW/SW Co-design Methodology
  - Case Study: DEDR-related RSF/RASF Algorithms
- Systolic Architectures (SAs) as Co-processors
  - Integration in a Co-design scheme
- New design Perspective: Super-Systolic Arrays and VLSI architectures
- Hardware Implementation Results
  - Performance Analysis
- Conclusions

Introduction: Radar Imagery, Facts

- The advanced high-resolution operations of remote sensing (RS) are computationally complex.
- The recently developed RS image reconstruction/enhancement techniques are definitively unacceptable for a (near) real-time implementation.
- In previous works, the algorithms were implemented as conventional simulations on personal computers (normally in MATLAB), on digital signal processing (DSP) platforms, or on clusters of PCs.

Introduction: HW/SW co-design, Facts

- Why hardware/software (HW/SW) co-design? HW/SW co-design is a hybrid method aimed at increasing the flexibility of the implementations and improving the overall design process.
- Why systolic arrays? They are extremely fast and form an easily scalable architecture.
- Why parallel techniques? They optimize and improve the performance of the loops that generally take most of the time in RS algorithms.

MOTIVATION

- First, novel RS imaging applications now require a response in (near) real time in areas such as target detection for military purposes, tracking wildfires, and monitoring oil spills.
- Also, virtual remote sensing laboratories had been developed in previous works. Now, we intend to design efficient HW architectures pursuing the real-time mode.

CONTRIBUTIONS

- First, the application of parallel computing techniques using loop optimization transformations generates efficient super-systolic array (SSA)-based co-processor units for the selected reconstructive SP subtasks.
- Second, the addressed HW/SW co-design methodology is aimed at an efficient HW implementation of the enhancement/reconstruction regularization methods using the proposed SSA-based co-processor architectures.

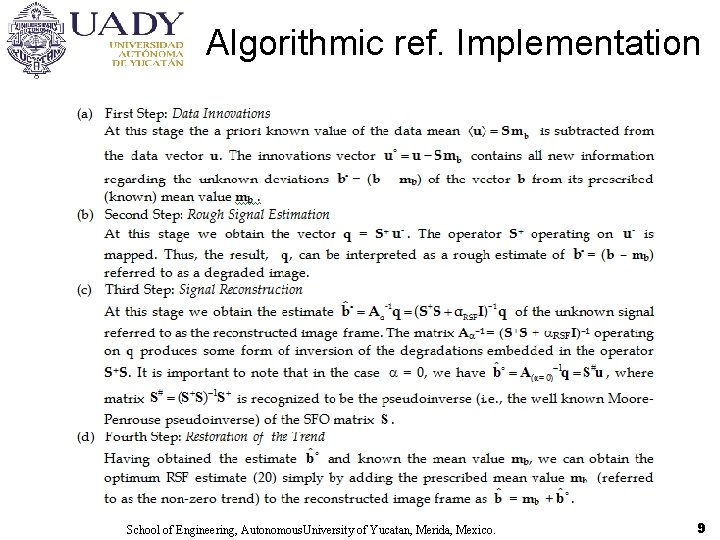

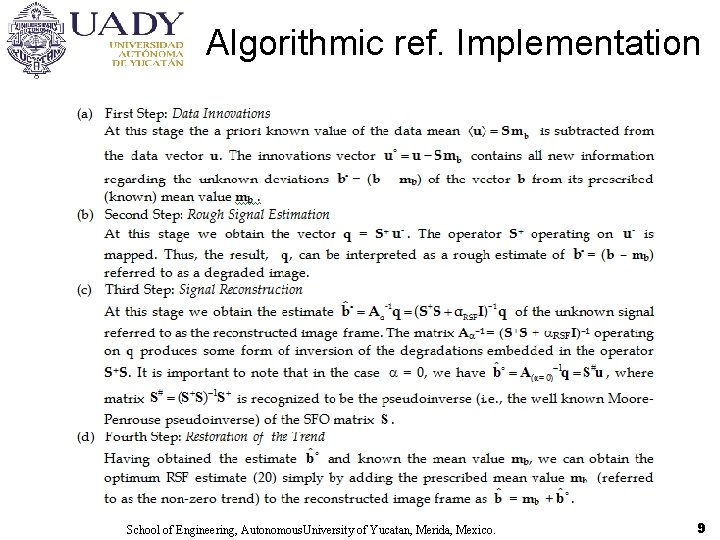

Algorithmic ref. Implementation

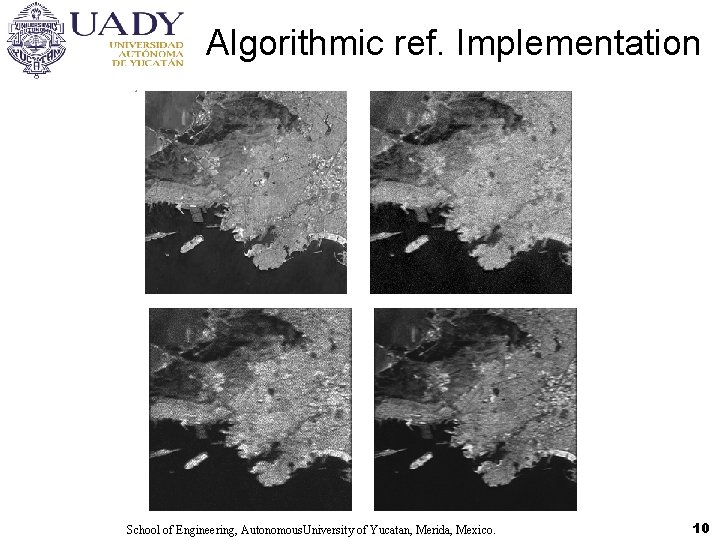

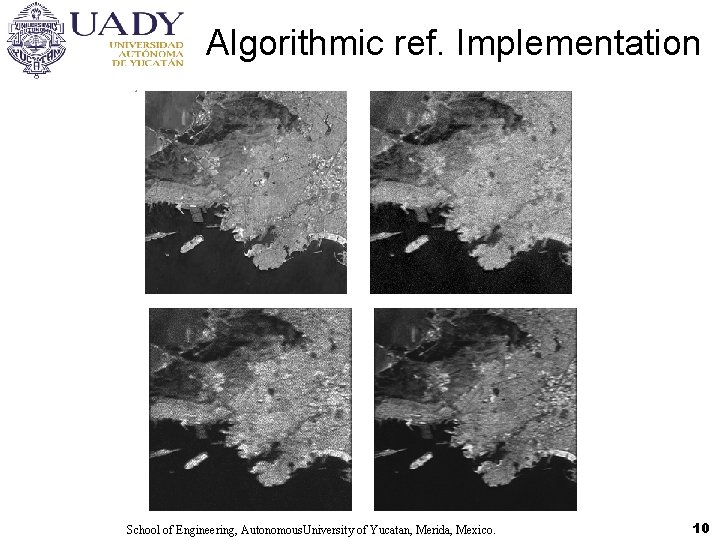

Algorithmic ref. Implementation

![Algorithmic ref. Implementation: IOSNR [dB] of the RSF and RASF methods versus SNR [dB]](https://slidetodoc.com/presentation_image_h2/7eadb3ae2c71ef05e54f86e09b1bca1a/image-9.jpg)

Algorithmic ref. Implementation

| SNR [dB] | RSF Method IOSNR [dB] | RASF Method IOSNR [dB] |
|---|---|---|
| 5 | 4.36 | 7.94 |
| 10 | 6.92 | 9.75 |
| 15 | 7.67 | 11.36 |
| 20 | 9.48 | 12.72 |

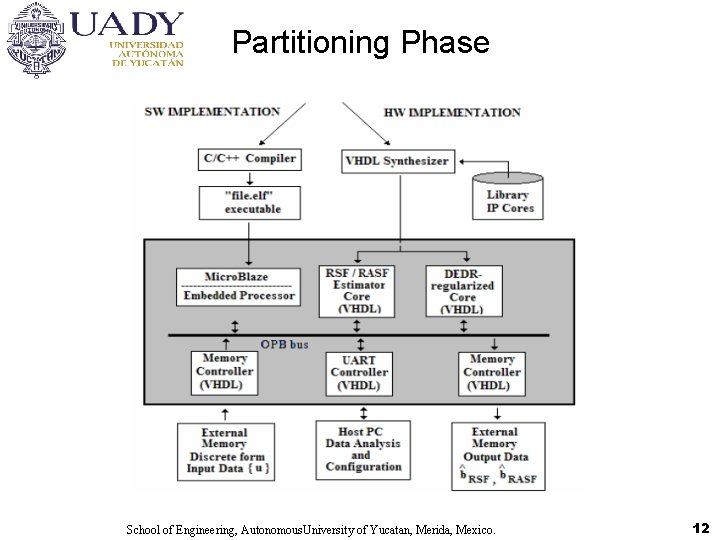

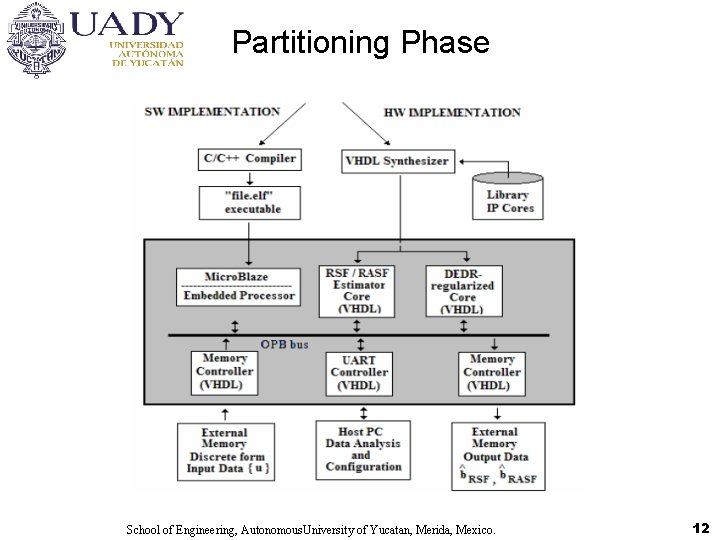

Partitioning Phase

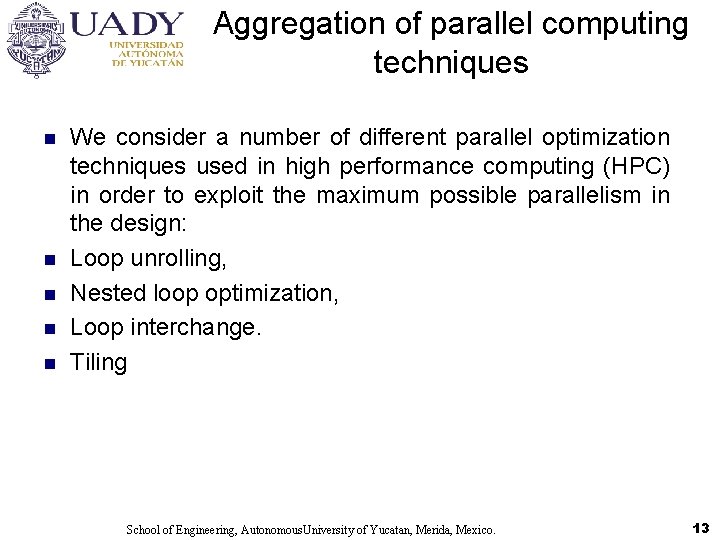

Aggregation of parallel computing techniques

We consider a number of different parallel optimization techniques used in high-performance computing (HPC) in order to exploit the maximum possible parallelism in the design:

- Loop unrolling
- Nested loop optimization
- Loop interchange
- Tiling
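As a minimal sketch of two of the transformations listed above, the following C++ applies loop interchange and loop unrolling (by a factor of 2) to a plain matrix-vector product. The function names, the `Matrix`/`Vector` aliases, and the unroll factor are illustrative assumptions, not taken from the slides.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // hypothetical alias
using Vector = std::vector<double>;               // hypothetical alias

// Reference loop nest: i outer, j inner.
Vector matvec_reference(const Matrix& a, const Vector& v) {
    Vector u(a.size(), 0.0);
    for (std::size_t i = 0; i < a.size(); ++i) {
        assert(a[i].size() == v.size());  // every row must have n columns
        for (std::size_t j = 0; j < v.size(); ++j)
            u[i] += a[i][j] * v[j];
    }
    return u;
}

// Loop interchange: j outer, i inner. Each u[i] still accumulates
// the same terms in the same j order, so the result is unchanged.
Vector matvec_interchanged(const Matrix& a, const Vector& v) {
    Vector u(a.size(), 0.0);
    for (std::size_t j = 0; j < v.size(); ++j)
        for (std::size_t i = 0; i < a.size(); ++i)
            u[i] += a[i][j] * v[j];
    return u;
}

// Loop unrolling: the inner loop body is replicated twice per
// iteration, with a remainder step when n is odd.
Vector matvec_unrolled2(const Matrix& a, const Vector& v) {
    const std::size_t n = v.size();
    Vector u(a.size(), 0.0);
    for (std::size_t i = 0; i < a.size(); ++i) {
        std::size_t j = 0;
        for (; j + 1 < n; j += 2) {
            u[i] += a[i][j] * v[j];
            u[i] += a[i][j + 1] * v[j + 1];
        }
        if (j < n)  // leftover element for odd n
            u[i] += a[i][j] * v[j];
    }
    return u;
}
```

All three functions should return the same vector for the same inputs; the transformations only change the order or grouping of the loop iterations.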

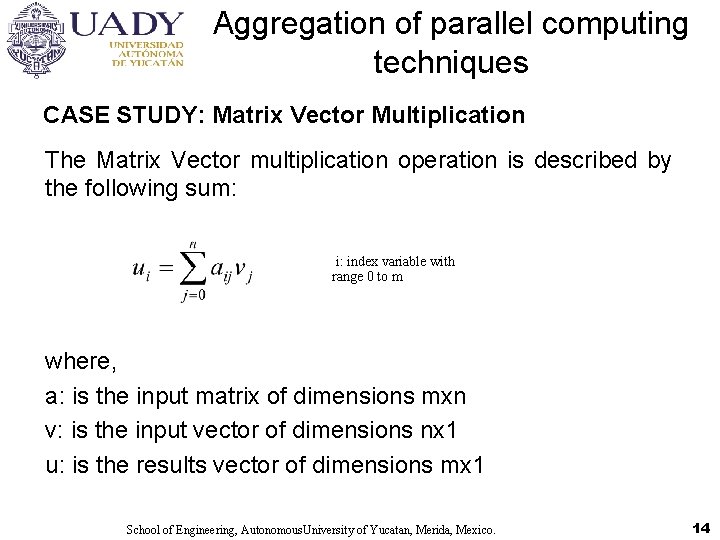

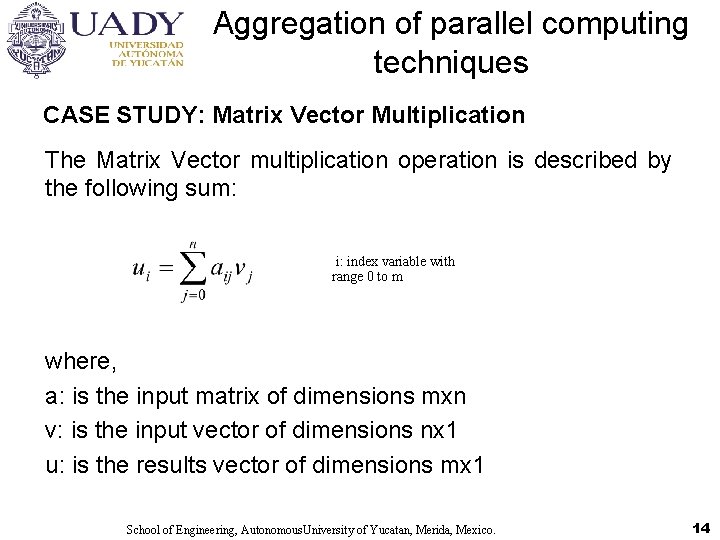

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication

The matrix-vector multiplication operation is described by the following sum:

$$u_i = \sum_{j=0}^{n-1} a_{ij}\, v_j, \qquad 0 \le i < m$$

where

- i is the index variable, with range 0 <= i < m
- a is the input matrix of dimensions m x n
- v is the input vector of dimensions n x 1
- u is the result vector of dimensions m x 1

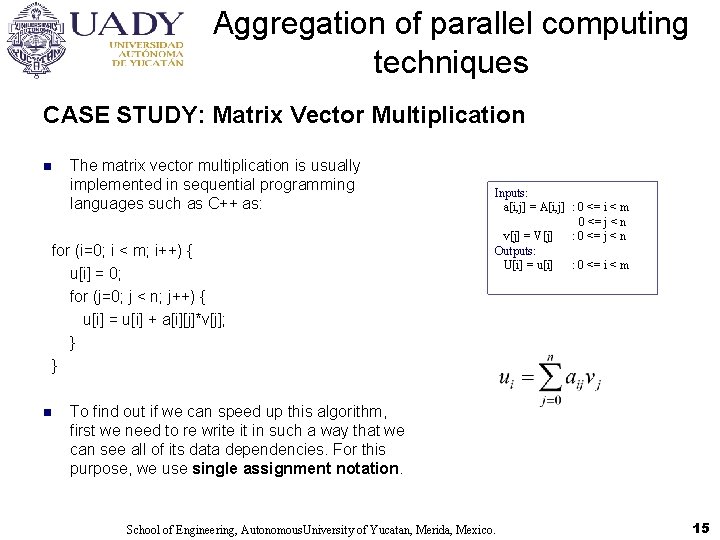

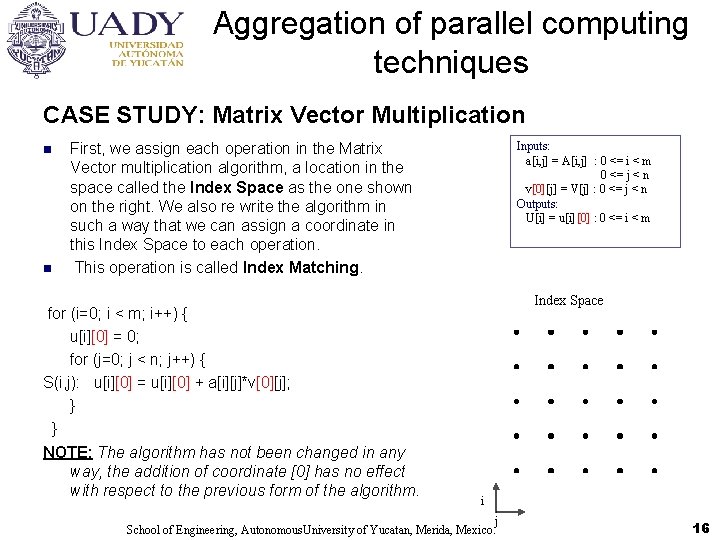

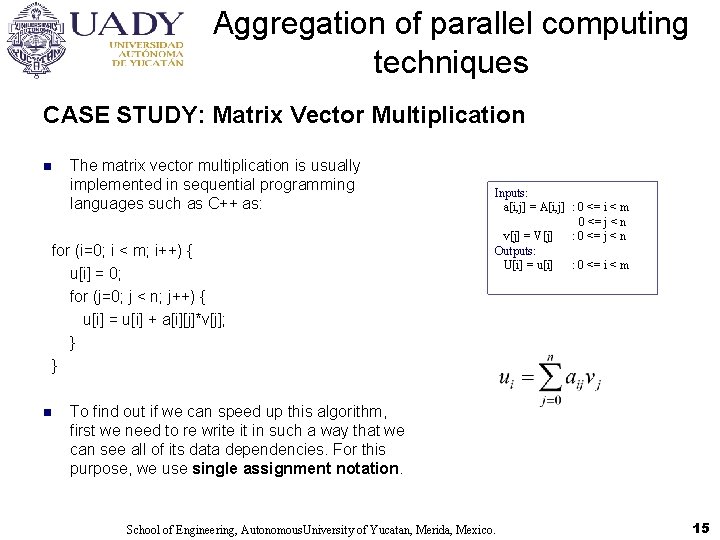

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication

The matrix-vector multiplication is usually implemented in sequential programming languages such as C++ as:

    for (i = 0; i < m; i++) {
        u[i] = 0;
        for (j = 0; j < n; j++) {
            u[i] = u[i] + a[i][j] * v[j];
        }
    }

Inputs:

    a[i, j] = A[i, j] : 0 <= i < m, 0 <= j < n
    v[j] = V[j] : 0 <= j < n

Outputs:

    U[i] = u[i] : 0 <= i < m

To find out if we can speed up this algorithm, we first need to rewrite it in such a way that we can see all of its data dependencies. For this purpose, we use single assignment notation.

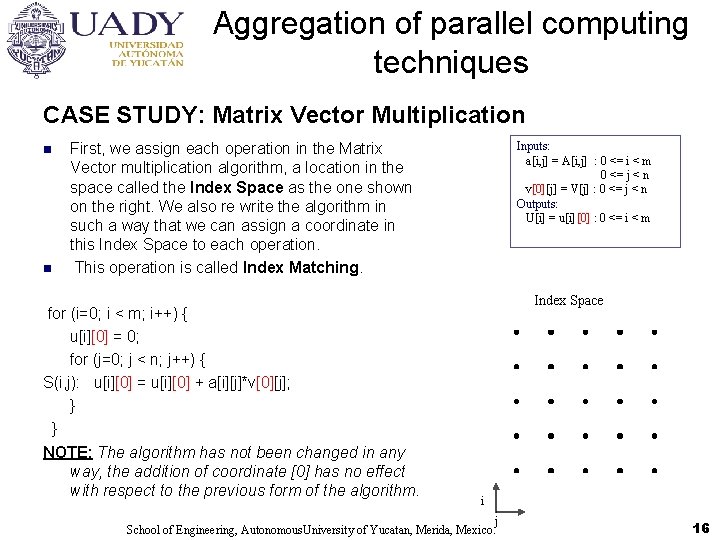

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication

First, we assign each operation in the matrix-vector multiplication algorithm a location in a space called the Index Space (axes i and j), like the one shown on the right. We also rewrite the algorithm in such a way that we can assign a coordinate in this Index Space to each operation. This operation is called Index Matching.

    for (i = 0; i < m; i++) {
        u[i][0] = 0;
        for (j = 0; j < n; j++) {
            S(i, j): u[i][0] = u[i][0] + a[i][j] * v[0][j];
        }
    }

Inputs:

    a[i, j] = A[i, j] : 0 <= i < m, 0 <= j < n
    v[0][j] = V[j] : 0 <= j < n

Outputs:

    U[i] = u[i][0] : 0 <= i < m

NOTE: The algorithm has not been changed in any way; the addition of the coordinate [0] has no effect with respect to the previous form of the algorithm.

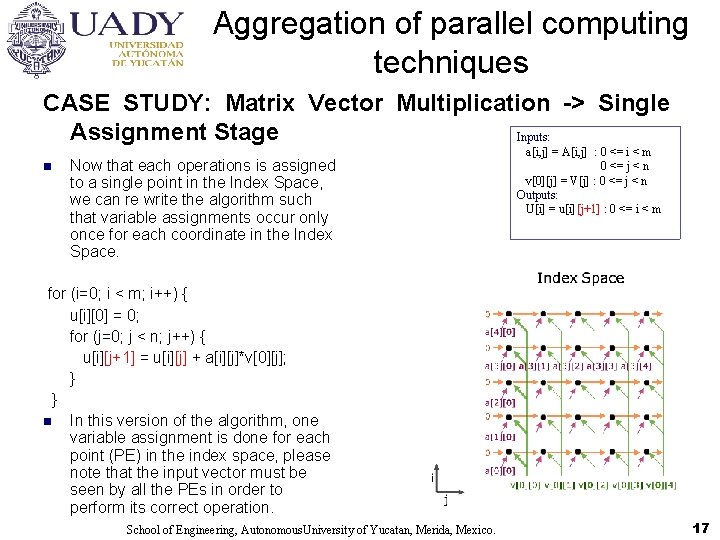

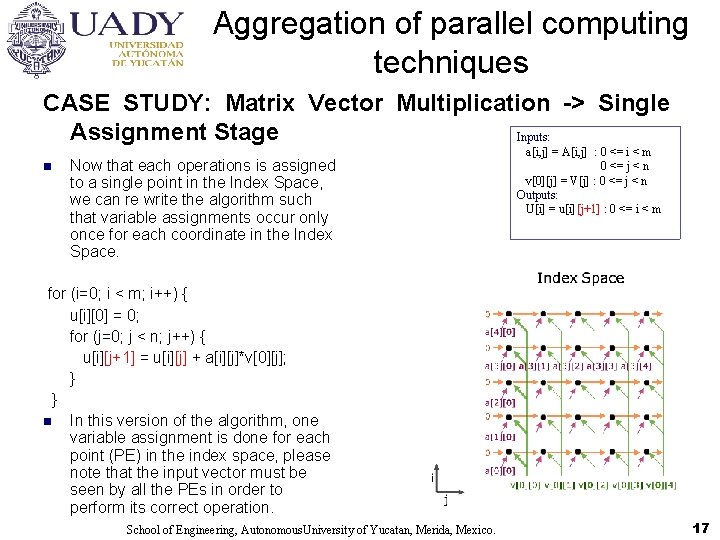

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Single Assignment Stage

Now that each operation is assigned to a single point in the Index Space, we can rewrite the algorithm such that variable assignments occur only once for each coordinate in the Index Space.

    for (i = 0; i < m; i++) {
        u[i][0] = 0;
        for (j = 0; j < n; j++) {
            u[i][j+1] = u[i][j] + a[i][j] * v[0][j];
        }
    }

Inputs:

    a[i, j] = A[i, j] : 0 <= i < m, 0 <= j < n
    v[0][j] = V[j] : 0 <= j < n

Outputs:

    U[i] = u[i][n] : 0 <= i < m    (the last u[i][j+1], written at j = n-1)

In this version of the algorithm, one variable assignment is done for each point (PE) in the Index Space. Note that the input vector must be seen by all the PEs in order for them to operate correctly.

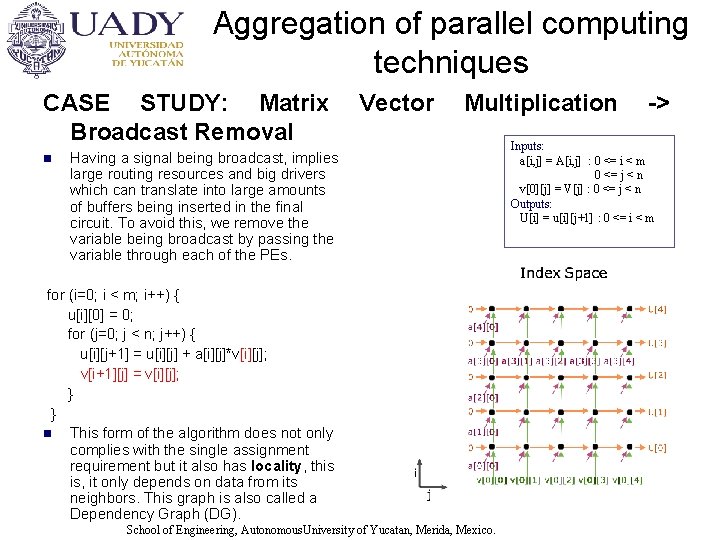

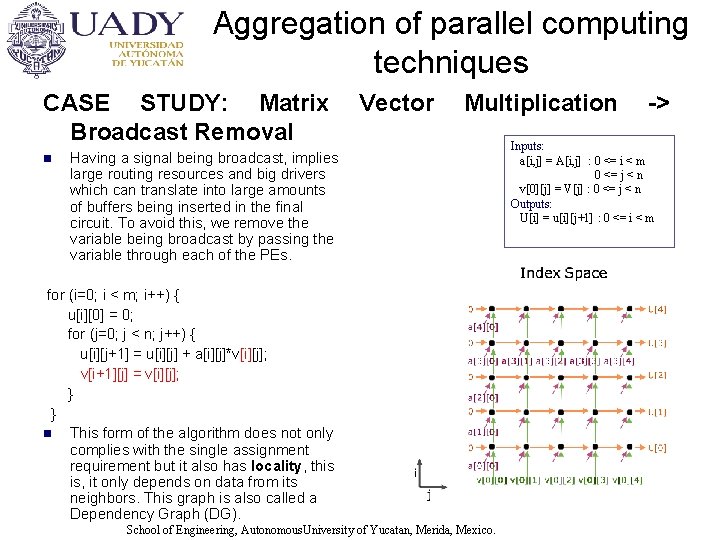

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Broadcast Removal

Broadcasting a signal implies large routing resources and big drivers, which can translate into large numbers of buffers being inserted in the final circuit. To avoid this, we remove the broadcast by passing the variable through each of the PEs.

    for (i = 0; i < m; i++) {
        u[i][0] = 0;
        for (j = 0; j < n; j++) {
            u[i][j+1] = u[i][j] + a[i][j] * v[i][j];
            v[i+1][j] = v[i][j];
        }
    }

Inputs:

    a[i, j] = A[i, j] : 0 <= i < m, 0 <= j < n
    v[0][j] = V[j] : 0 <= j < n

Outputs:

    U[i] = u[i][n] : 0 <= i < m    (the last u[i][j+1], written at j = n-1)

This form of the algorithm not only complies with the single assignment requirement but also has locality; that is, each operation depends only on data from its neighbors. The corresponding graph is called a Dependency Graph (DG).
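The localized, single-assignment form can be checked in software by materializing the full `u` and `v` arrays. The sketch below is only an illustration under that assumption: the function name `matvec_local` and the type aliases are hypothetical, and a real systolic array would hold these values in PE registers rather than in memory.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // hypothetical alias
using Vector = std::vector<double>;               // hypothetical alias

// Single-assignment matrix-vector product without broadcast:
// every u[i][j+1] and v[i+1][j] is written exactly once, and each
// operation reads only values produced by its neighbors.
Vector matvec_local(const Matrix& a, const Vector& V) {
    const std::size_t m = a.size();
    const std::size_t n = V.size();

    Matrix u(m, Vector(n + 1, 0.0));  // u[i][0] = 0 is the initial value
    Matrix v(m + 1, Vector(n, 0.0));  // one extra row for v[i+1][j]
    v[0] = V;                         // input: v[0][j] = V[j]

    for (std::size_t i = 0; i < m; ++i) {
        assert(a[i].size() == n);
        for (std::size_t j = 0; j < n; ++j) {
            u[i][j + 1] = u[i][j] + a[i][j] * v[i][j];
            v[i + 1][j] = v[i][j];    // pass v on to the next PE
        }
    }

    Vector U(m);
    for (std::size_t i = 0; i < m; ++i)
        U[i] = u[i][n];               // output: last partial sum of row i
    return U;
}
```

For a 2 x 3 matrix with rows (1, 2, 3) and (4, 5, 6) and the vector (1, 0, -1), the function returns (-2, -2), the same as the sequential loop.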

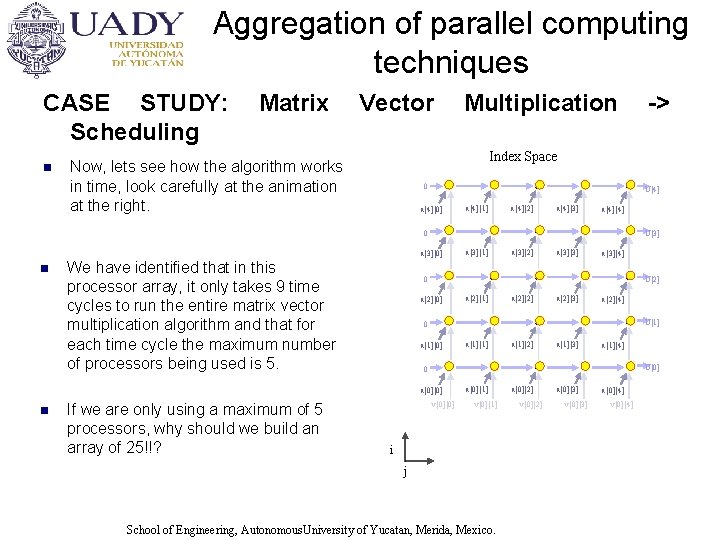

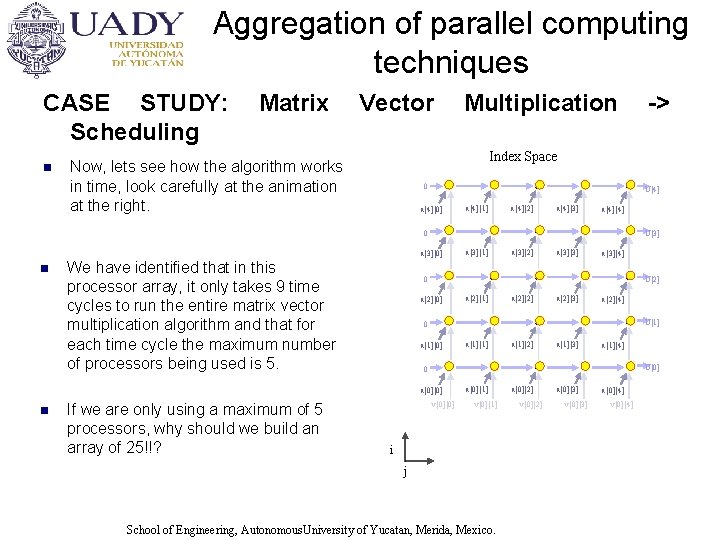

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Scheduling

Now, let's see how the algorithm works in time; look carefully at the animation at the right.

[Animation: Index Space (axes i, j) for m = n = 5, showing the matrix elements a[0][0] ... a[4][4], the inputs v[0][0] ... v[0][4], the initial values 0, and the outputs U[0] ... U[4].]

We have identified that in this processor array it takes only 9 time cycles to run the entire matrix-vector multiplication algorithm, and that in each time cycle the maximum number of processors being used is 5. If we are only using a maximum of 5 processors, why should we build an array of 25?
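The cycle and processor counts quoted here can be reproduced with a small sketch. It assumes the schedule t = i + j (the one implied by the space-time mapped loop later in the deck) and simply counts how many index points fire in each time step; `ScheduleStats` and `analyze_schedule` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Summary of a schedule over an m x n index space.
struct ScheduleStats {
    std::size_t cycles;      // number of distinct time steps
    std::size_t max_active;  // most index points active in one step
};

// Assumes operation (i, j) executes at time t = i + j.
ScheduleStats analyze_schedule(std::size_t m, std::size_t n) {
    assert(m > 0 && n > 0);
    std::vector<std::size_t> active(m + n - 1, 0);  // t ranges over 0 .. m+n-2
    for (std::size_t i = 0; i < m; ++i)
        for (std::size_t j = 0; j < n; ++j)
            ++active[i + j];
    return {active.size(), *std::max_element(active.begin(), active.end())};
}
```

For the 5 x 5 case, `analyze_schedule(5, 5)` gives 9 cycles and at most 5 simultaneously active points, matching the figures on the slide.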

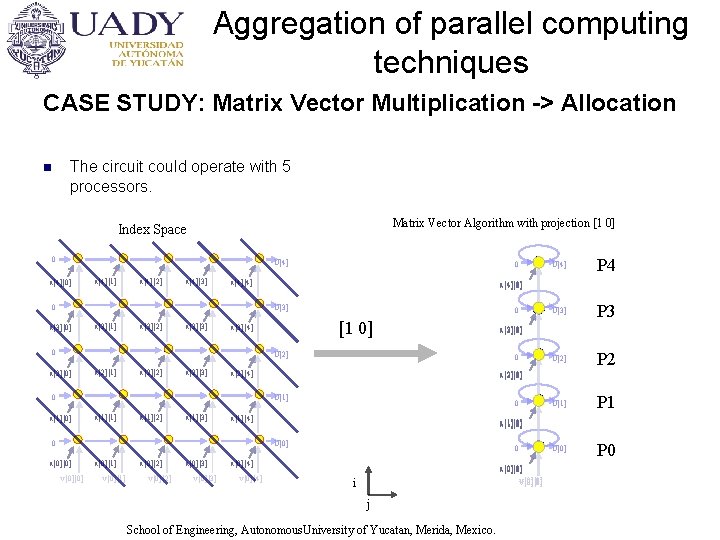

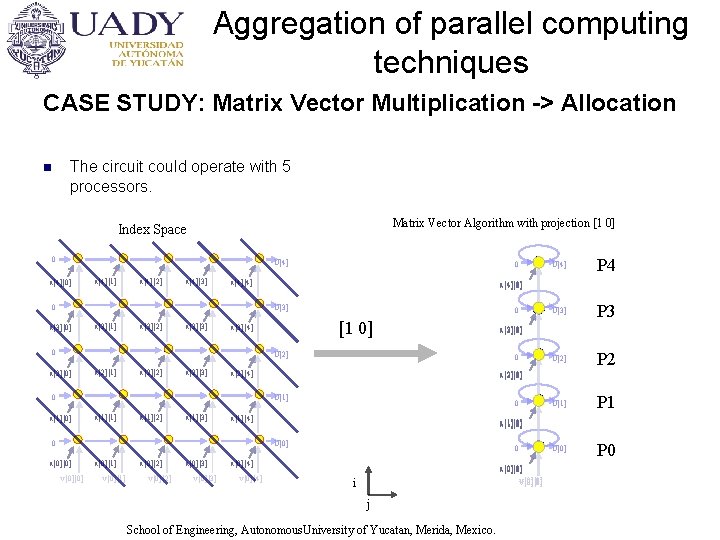

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Allocation

The circuit could operate with 5 processors.

[Figure: Matrix Vector Algorithm with projection [1 0]. The Index Space (axes i, j) is projected onto a linear array of processors P0 ... P4, shown with the matrix elements a[i][j], the inputs v[0][0] ... v[0][4], and the outputs U[0] ... U[4].]

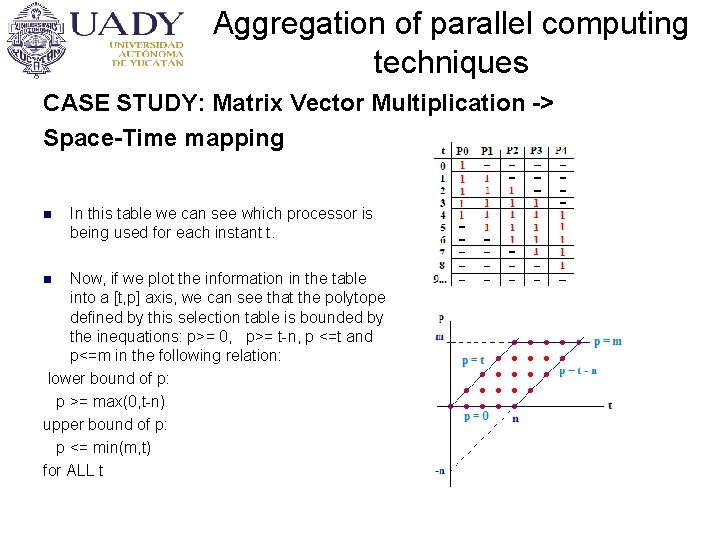

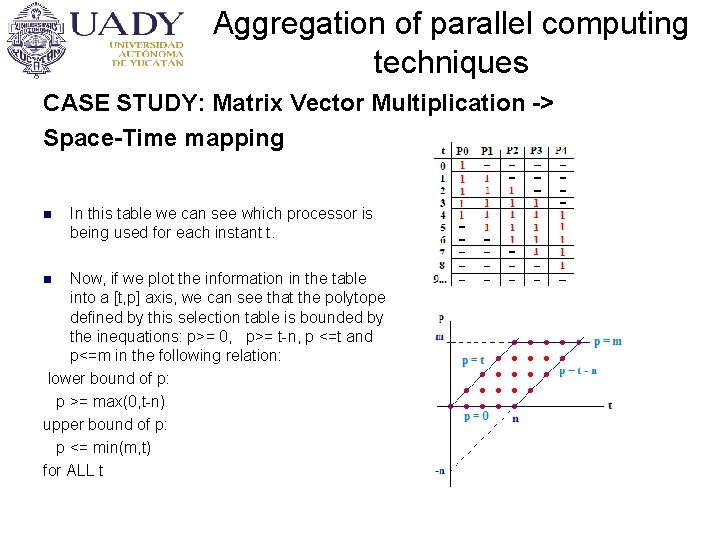

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Space-Time mapping

In this table we can see which processor is being used at each instant t. If we plot the information in the table on [t, p] axes, we can see that the polytope defined by this selection table is bounded by the inequalities p >= 0, p >= t-(n-1), p <= t and p <= m-1 (for indices 0 <= i < m and 0 <= j < n). For ALL t, this gives:

- lower bound of p: p >= max(0, t-(n-1))
- upper bound of p: p <= min(m-1, t)

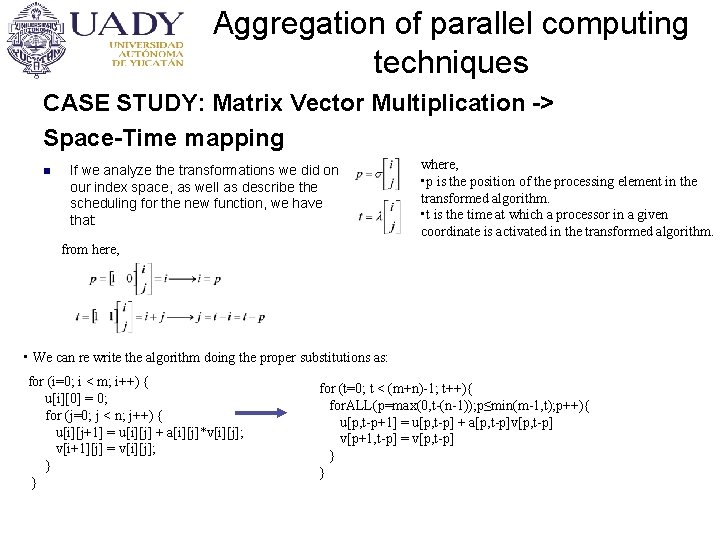

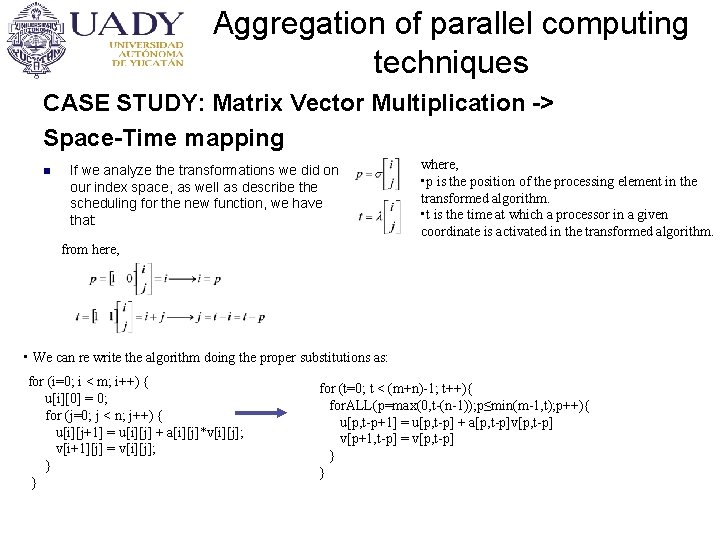

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Space-Time mapping

If we analyze the transformations we did on our index space, and describe the scheduling for the new function, we have that:

$$p = i, \qquad t = i + j$$

where

- p is the position of the processing element in the transformed algorithm.
- t is the time at which a processor in a given coordinate is activated in the transformed algorithm.

From here, i = p and j = t - p, so we can rewrite the algorithm by making the proper substitutions. The localized form

    for (i = 0; i < m; i++) {
        u[i][0] = 0;
        for (j = 0; j < n; j++) {
            u[i][j+1] = u[i][j] + a[i][j] * v[i][j];
            v[i+1][j] = v[i][j];
        }
    }

becomes

    // u[p, 0] = 0 for every p, as in the localized form
    for (t = 0; t < (m + n) - 1; t++) {
        forALL (p = max(0, t - (n - 1)); p <= min(m - 1, t); p++) {
            u[p, t-p+1] = u[p, t-p] + a[p, t-p] * v[p, t-p];
            v[p+1, t-p] = v[p, t-p];
        }
    }
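To check that the space-time mapped loop computes the same product, it can be simulated sequentially. In the sketch below the `forALL` over p is replaced by an ordinary `for` loop, which is valid here because every value read at time t was produced at time t-1. The function name and type aliases are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // hypothetical alias
using Vector = std::vector<double>;               // hypothetical alias

// Sequential simulation of the space-time mapped loop
// (processor p = i, time t = i + j, hence j = t - p).
Vector matvec_space_time(const Matrix& a, const Vector& V) {
    const std::size_t m = a.size();
    const std::size_t n = V.size();
    assert(m > 0 && n > 0);
    for (const Vector& row : a)
        assert(row.size() == n);

    Matrix u(m, Vector(n + 1, 0.0));  // u[p][0] = 0
    Matrix v(m + 1, Vector(n, 0.0));
    v[0] = V;                         // v[0][j] = V[j]

    for (std::size_t t = 0; t < m + n - 1; ++t) {
        // p_lo = max(0, t - (n - 1)), written to avoid unsigned underflow
        const std::size_t p_lo = (t + 1 > n) ? t + 1 - n : 0;
        const std::size_t p_hi = std::min(m - 1, t);
        for (std::size_t p = p_lo; p <= p_hi; ++p) {  // stands in for forALL
            const std::size_t j = t - p;
            u[p][j + 1] = u[p][j] + a[p][j] * v[p][j];
            v[p + 1][j] = v[p][j];
        }
    }

    Vector U(m);
    for (std::size_t p = 0; p < m; ++p)
        U[p] = u[p][n];
    return U;
}
```

On hardware, each iteration of the inner loop would run on its own PE in the same clock cycle; the outer loop over t is the only sequential dimension.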

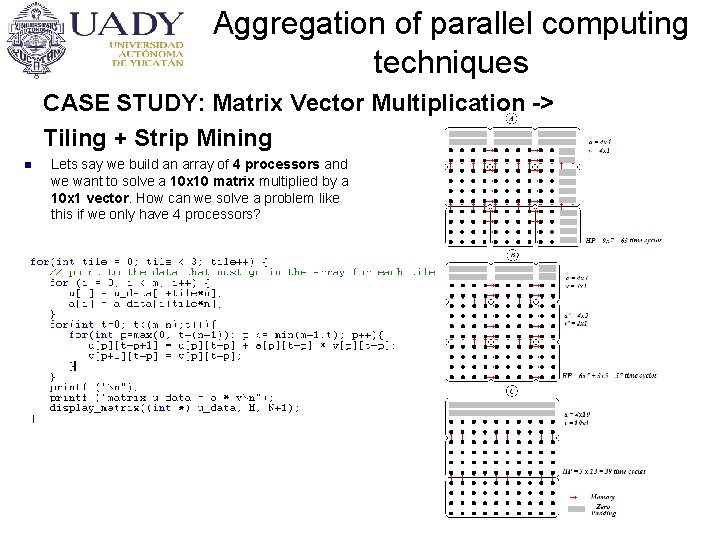

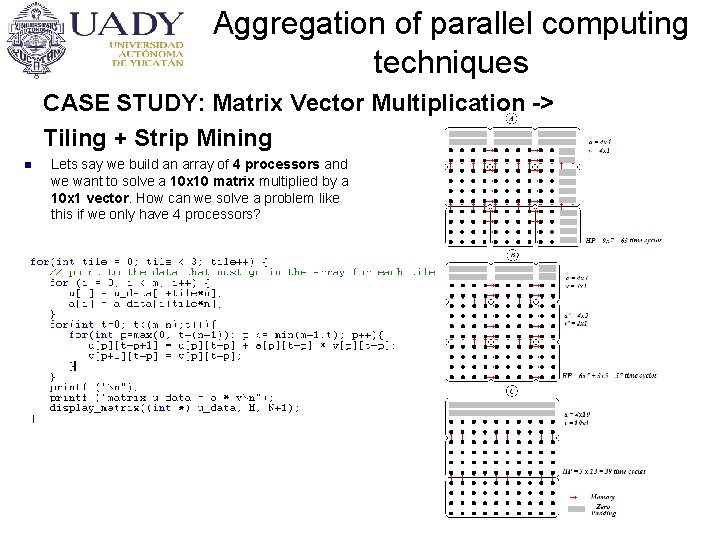

Aggregation of parallel computing techniques

CASE STUDY: Matrix Vector Multiplication -> Tiling + Strip Mining

Let's say we build an array of 4 processors and we want to multiply a 10 x 10 matrix by a 10 x 1 vector. How can we solve a problem like this if we only have 4 processors?
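One possible answer, sketched here under stated assumptions, is to strip-mine the row loop: the rows are cut into strips of at most `num_pes` rows, and each strip is mapped onto the available PEs in a separate pass. The partitioning along rows, the names `TiledResult` and `matvec_strip_mined`, and the pass counter are illustrative choices; the slides' own partitioning is shown only in figures that are not reproduced here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // hypothetical alias
using Vector = std::vector<double>;               // hypothetical alias

struct TiledResult {
    Vector u;            // result vector
    std::size_t passes;  // how many times the PE array was reused
};

// Strip-mined matrix-vector product: rows are processed in strips of
// at most num_pes rows, one row per (simulated) processing element.
TiledResult matvec_strip_mined(const Matrix& a, const Vector& v,
                               std::size_t num_pes) {
    assert(num_pes > 0);
    const std::size_t m = a.size();
    Vector u(m, 0.0);
    std::size_t passes = 0;

    for (std::size_t row0 = 0; row0 < m; row0 += num_pes) {   // strip loop
        const std::size_t rows = std::min(num_pes, m - row0); // last strip may be short
        for (std::size_t pe = 0; pe < rows; ++pe) {           // one row per PE
            const std::size_t i = row0 + pe;
            assert(a[i].size() == v.size());
            for (std::size_t j = 0; j < v.size(); ++j)
                u[i] += a[i][j] * v[j];
        }
        ++passes;
    }
    return {u, passes};
}
```

With 10 rows and 4 PEs this sketch needs 3 passes (4 + 4 + 2 rows); in the last pass two of the PEs would sit idle.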

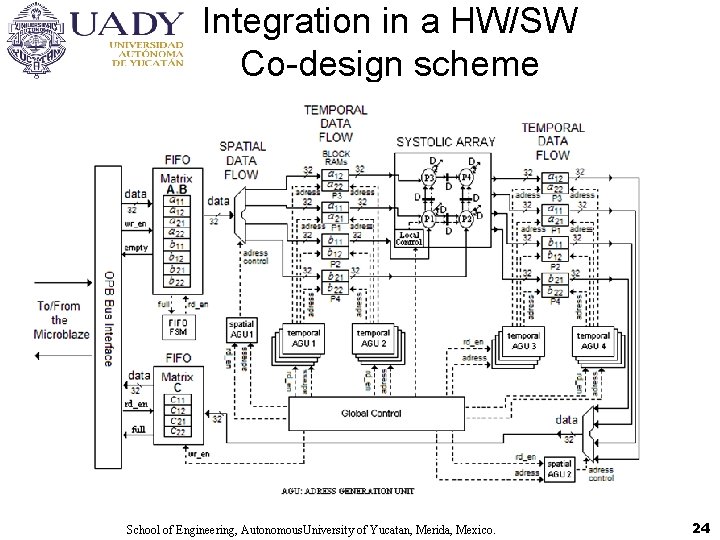

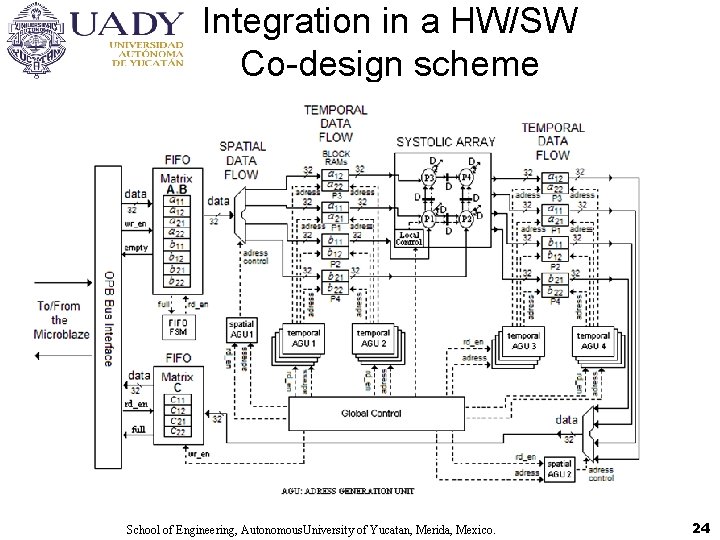

Integration in a HW/SW Co-design scheme

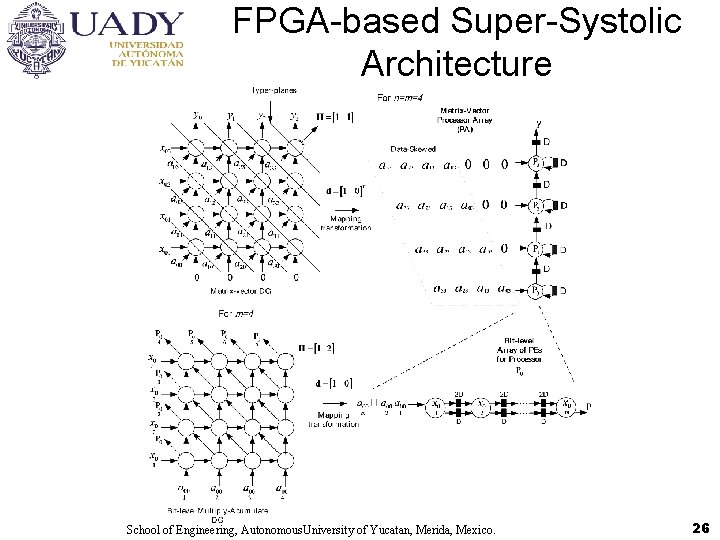

New Perspective: Super-Systolic Arrays

- A Super-Systolic Array is a network of systolic cells in which each cell is itself conceptualized as another systolic array at the bit level.
- The bit-level Super-Systolic architecture represents a high-speed, highly pipelined structure that can be implemented as a co-processor unit or even as a stand-alone VLSI ASIC architecture.

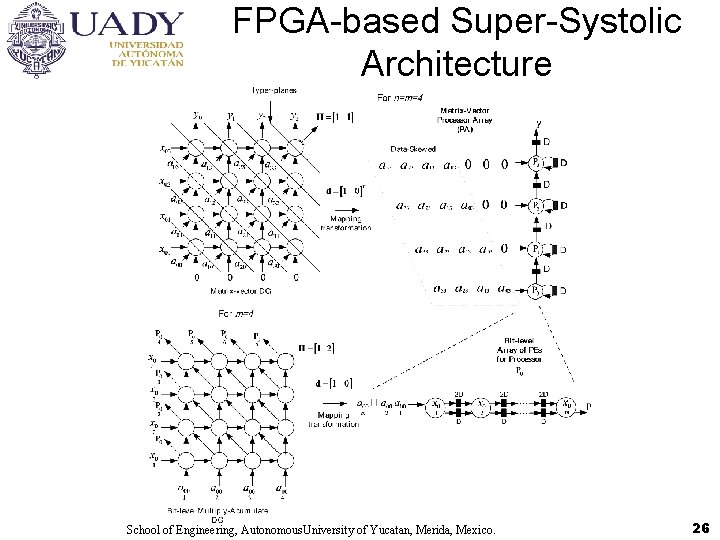

FPGA-based Super-Systolic Architecture

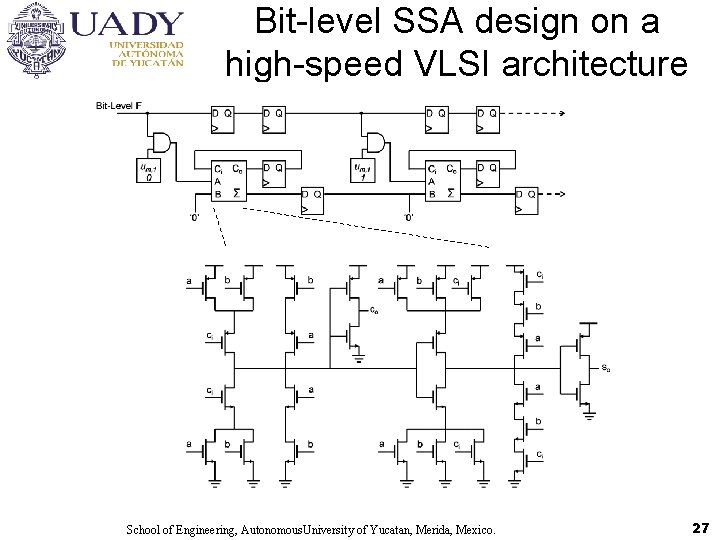

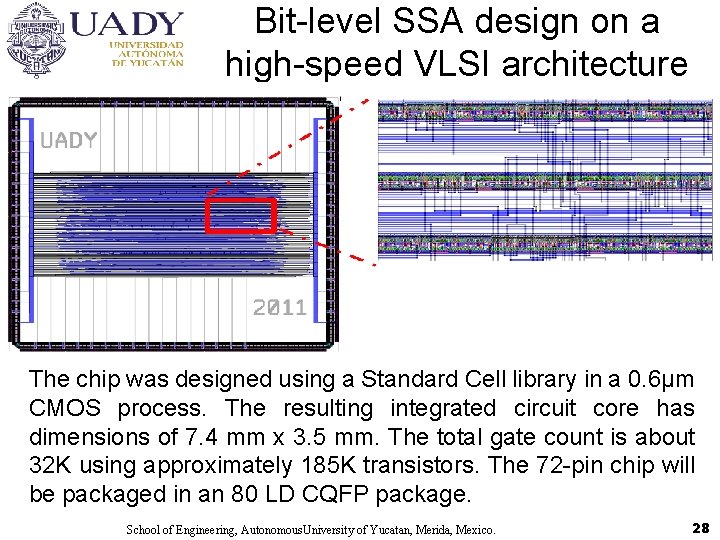

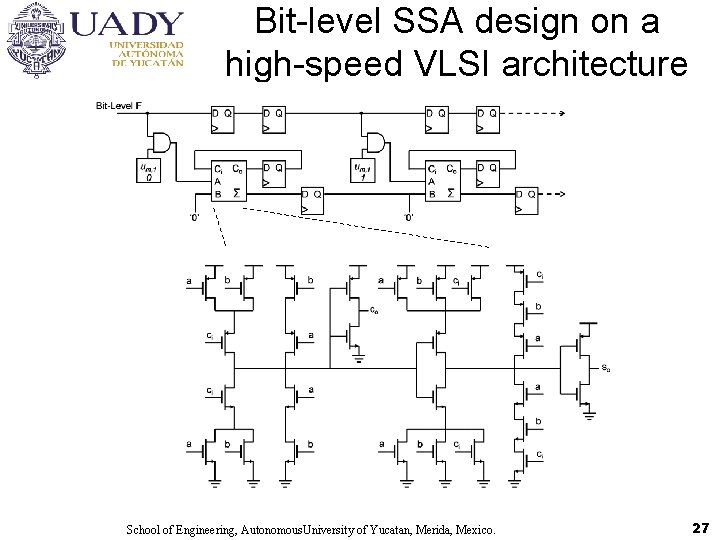

Bit-level SSA design on a high-speed VLSI architecture

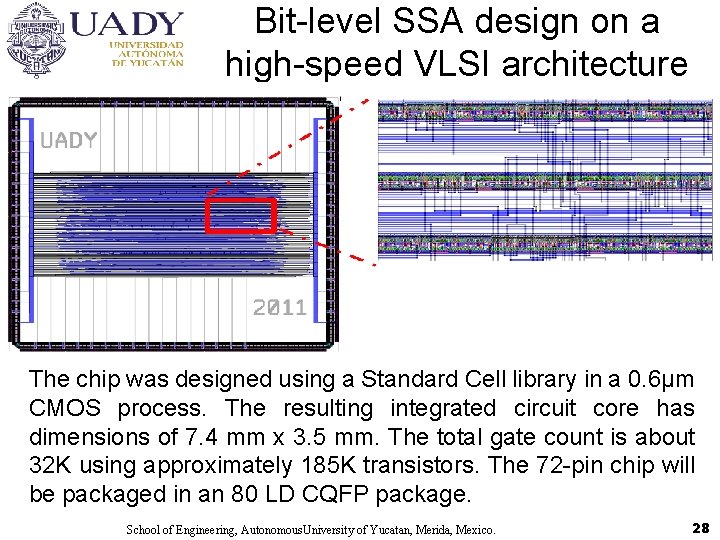

Bit-level SSA design on a high-speed VLSI architecture

The chip was designed using a Standard Cell library in a 0.6 µm CMOS process. The resulting integrated circuit core has dimensions of 7.4 mm x 3.5 mm. The total gate count is about 32 K, using approximately 185 K transistors. The 72-pin chip will be packaged in an 80 LD CQFP package.

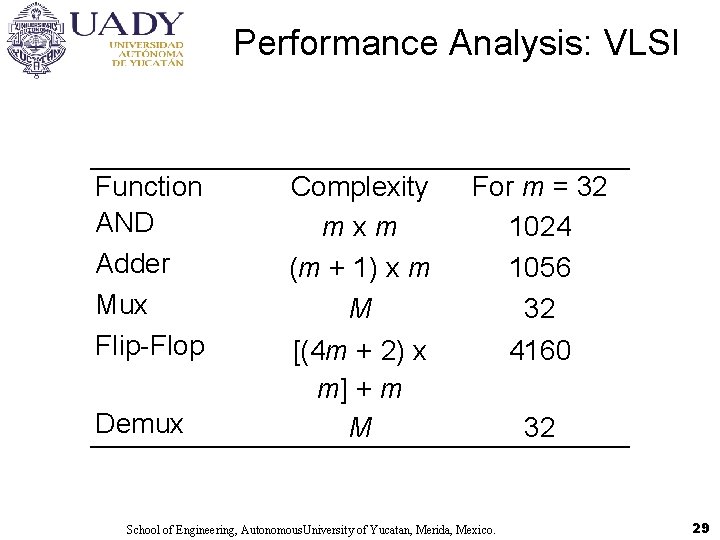

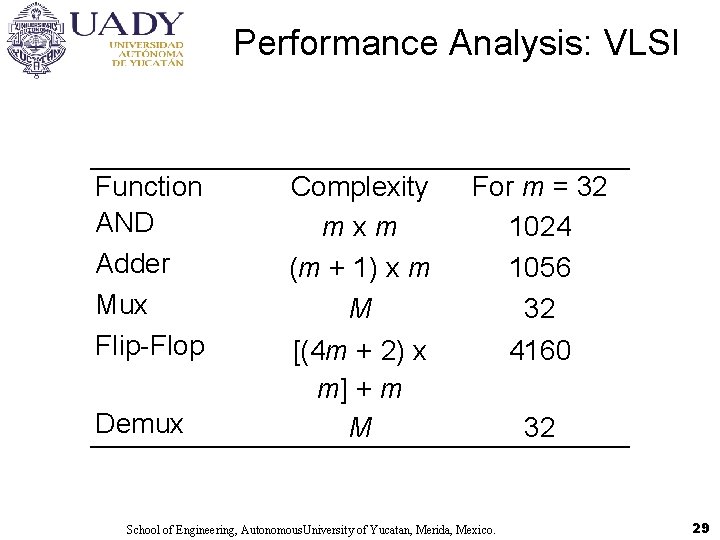

Performance Analysis: VLSI

| Function | Complexity | For m = 32 |
|---|---|---|
| AND | m x m | 1024 |
| Adder | (m + 1) x m | 1056 |
| Mux | m | 32 |
| Flip-Flop | [(4m + 2) x m] + m | 4160 |
| Demux | m | 32 |

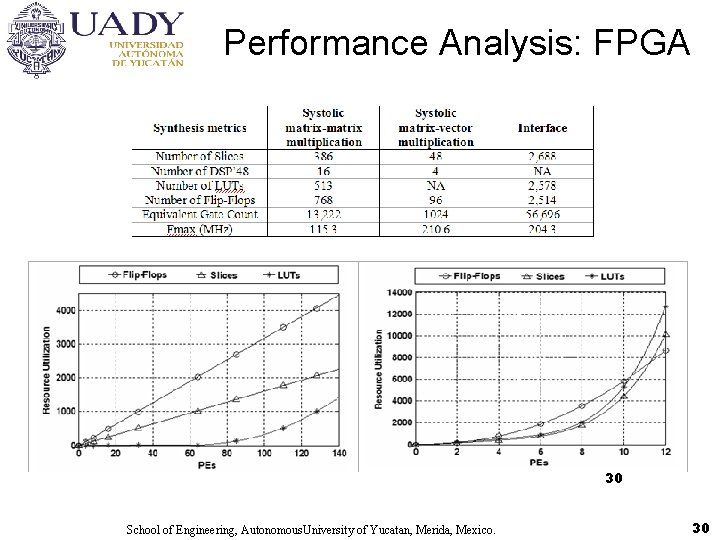

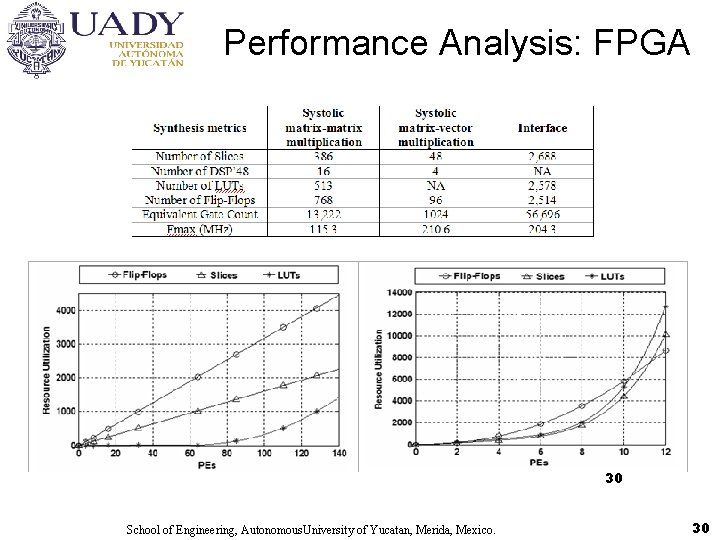

Performance Analysis: FPGA

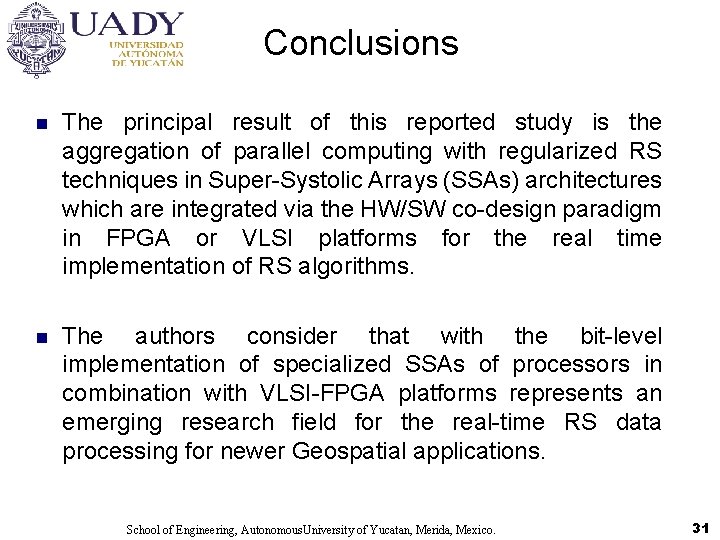

Conclusions

- The principal result of the reported study is the aggregation of parallel computing with regularized RS techniques in Super-Systolic Array (SSA) architectures, which are integrated via the HW/SW co-design paradigm in FPGA or VLSI platforms for the real-time implementation of RS algorithms.
- The authors consider that the bit-level implementation of specialized SSAs of processors, in combination with VLSI-FPGA platforms, represents an emerging research field for the real-time processing of RS data in newer geospatial applications.

Recent Selected Journal Papers

- A. Castillo Atoche, D. Torres, Yuriy V. Shkvarko, “Towards Real Time Implementation of Reconstructive Signal Processing Algorithms Using Systolic Arrays Coprocessors”, JOURNAL OF SYSTEMS ARCHITECTURE (JSA), Edit. ELSEVIER, Volume 56, Issue 8, August 2010, Pages 327-339, ISSN: 1383-7621, doi: 10.1016/j.sysarc.2010.05.004. JCR.
- A. Castillo Atoche, D. Torres, Yuriy V. Shkvarko, “Descriptive Regularization-Based Hardware/Software Co-Design for Real-Time Enhanced Imaging in Uncertain Remote Sensing Environment”, EURASIP JOURNAL ON ADVANCES IN SIGNAL PROCESSING (JASP), Edit. HINDAWI, Volume 2010, 31 pages, 2010. ISSN: 1687-6172, e-ISSN: 1687-6180, doi: 10.1155/ASP. JCR.
- Yuriy V. Shkvarko, A. Castillo Atoche, D. Torres, “Near Real Time Enhancement of Geospatial Imagery via Systolic Implementation of Neural Network-Adapted Convex Regularization Techniques”, JOURNAL OF PATTERN RECOGNITION LETTERS, Edit. ELSEVIER, 2011. JCR. In Press.

Thanks for your attention.

Dr. Alejandro Castillo Atoche

Email: acastill@uady.mx