Adversarially Learned One-Classifier for Novelty Detection



Adversarially Learned One-Classifier for Novelty Detection

Computer Vision and Pattern Recognition (CVPR) 2018

eadeli@cs.stanford.edu

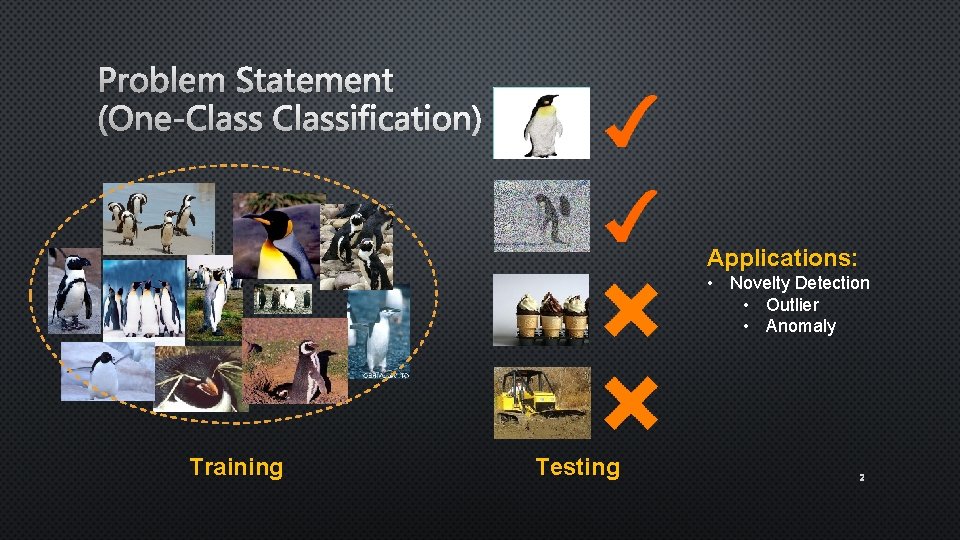

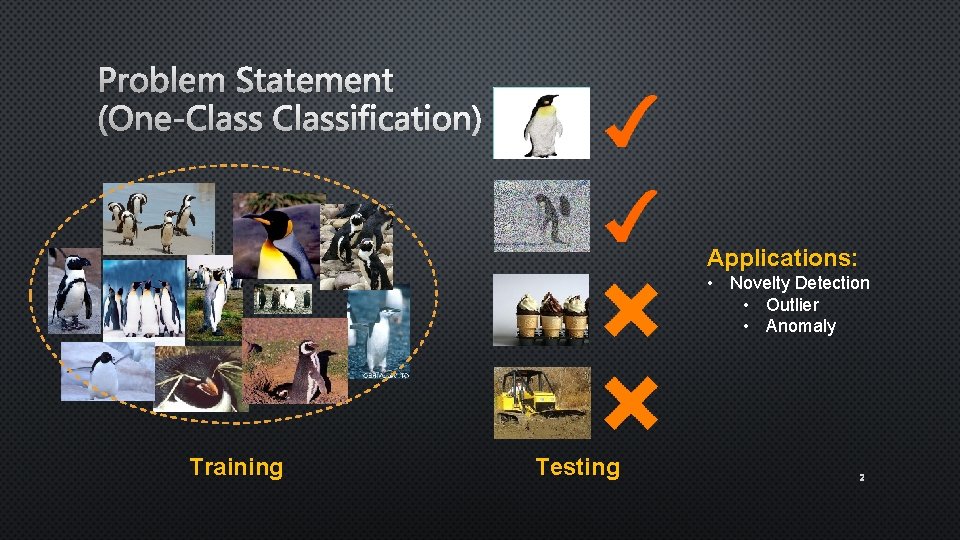

Problem Statement (One-Class Classification)

Applications:

- Novelty detection
- Outlier detection
- Anomaly detection

(Figure panels: Training, Testing)

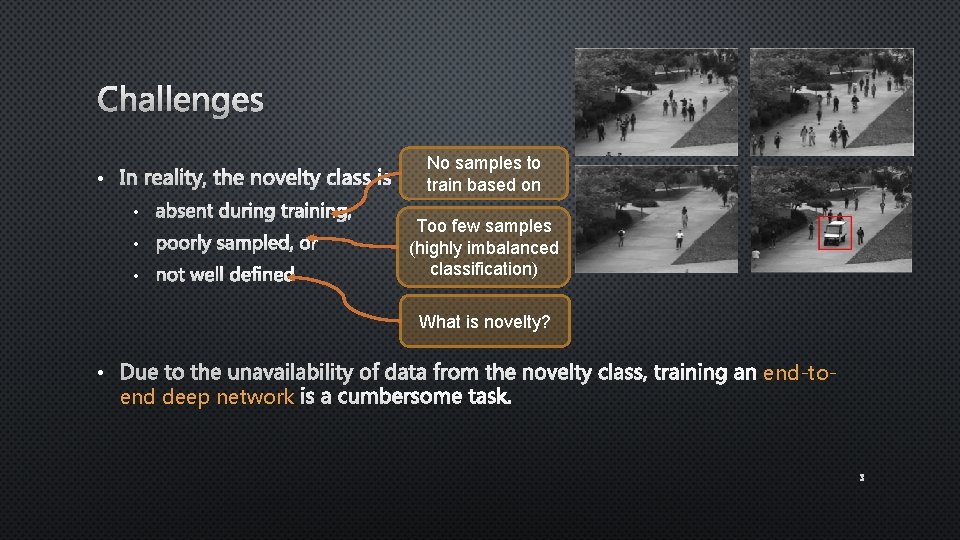

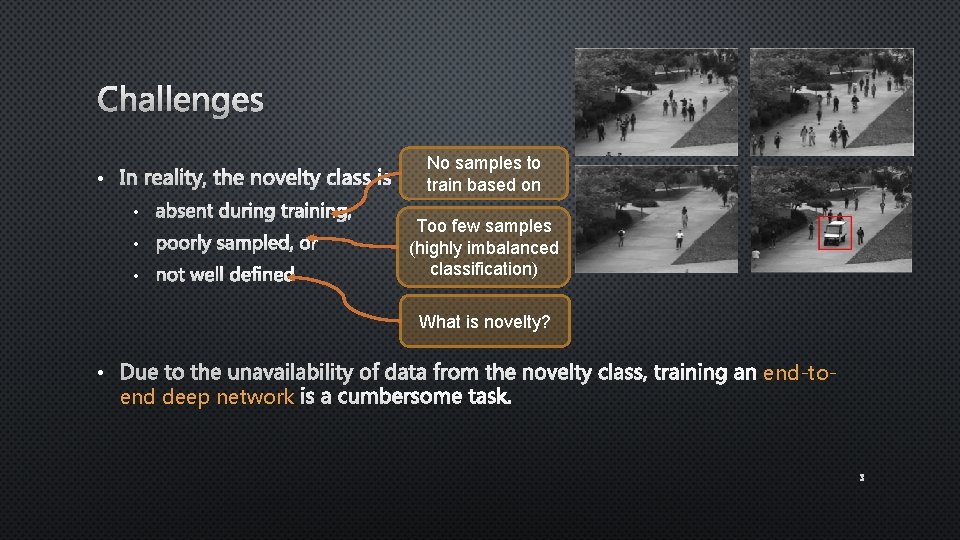

Challenges

- No samples of the novelty class to train on
- Too few samples (highly imbalanced classification)
- What is novelty?
- End-to-end deep network

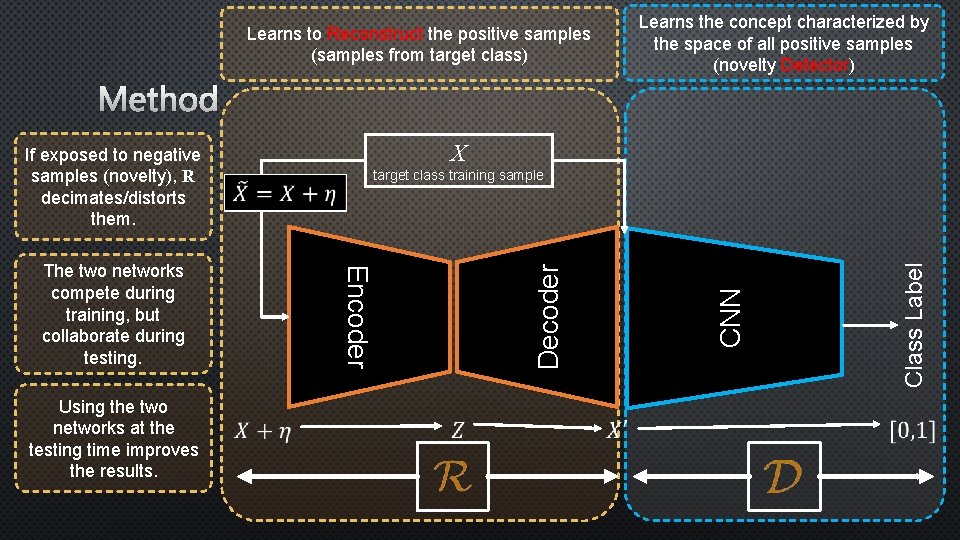

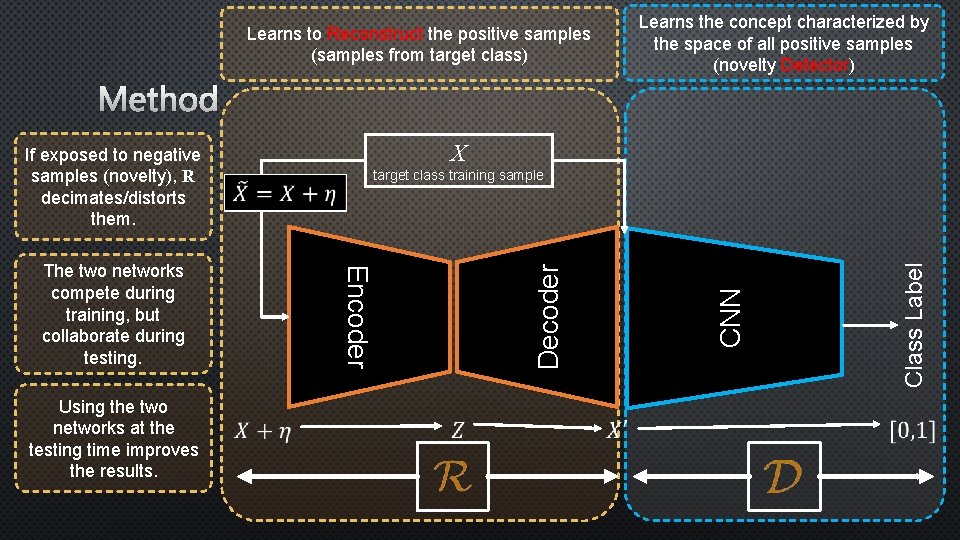

Method

- R (Encoder + Decoder): learns to reconstruct the positive samples (samples from the target class). If exposed to negative samples (novelty), R decimates/distorts them.
- D (CNN, the novelty detector): learns the concept characterized by the space of all positive samples and outputs a class label.
- The two networks compete during training but collaborate during testing; using both networks at test time improves the results.

(Figure labels: X, target-class training sample, Encoder, Decoder, CNN, Class Label)
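To make "the two networks compete during training" concrete, here is a minimal sketch of the two adversarial losses, assuming D outputs a probability that its input is a target-class sample and that both players use binary cross-entropy. The function names are hypothetical, and R's loss is written in the common non-saturating GAN form, which is our substitution rather than something stated on the slide.

```python
import math

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def d_loss(p_real, p_fake):
    """Binary cross-entropy loss for D (hypothetical helper).

    p_real: D's probabilities on clean target-class samples X (D wants these near 1).
    p_fake: D's probabilities on reconstructions R(X~) (D wants these near 0).
    """
    real_term = -sum(math.log(p + EPS) for p in p_real) / len(p_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in p_fake) / len(p_fake)
    return real_term + fake_term


def r_adversarial_loss(p_fake):
    """Adversarial loss for R in the non-saturating form (an assumption):
    R is rewarded when D labels its reconstructions as target samples."""
    return -sum(math.log(p + EPS) for p in p_fake) / len(p_fake)


# D separates real samples from reconstructions well: low D loss, high R loss.
print(d_loss([0.9, 0.8], [0.2, 0.1]))
print(r_adversarial_loss([0.2, 0.1]))
```

Minimizing `d_loss` pushes D toward better discrimination, while minimizing `r_adversarial_loss` pushes R toward reconstructions that D accepts; this tug-of-war is the competition the slide refers to.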

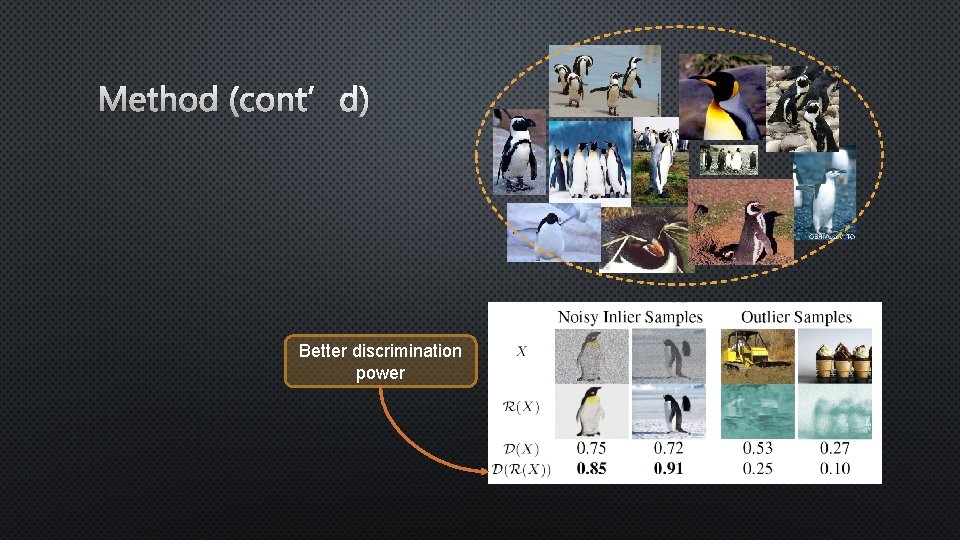

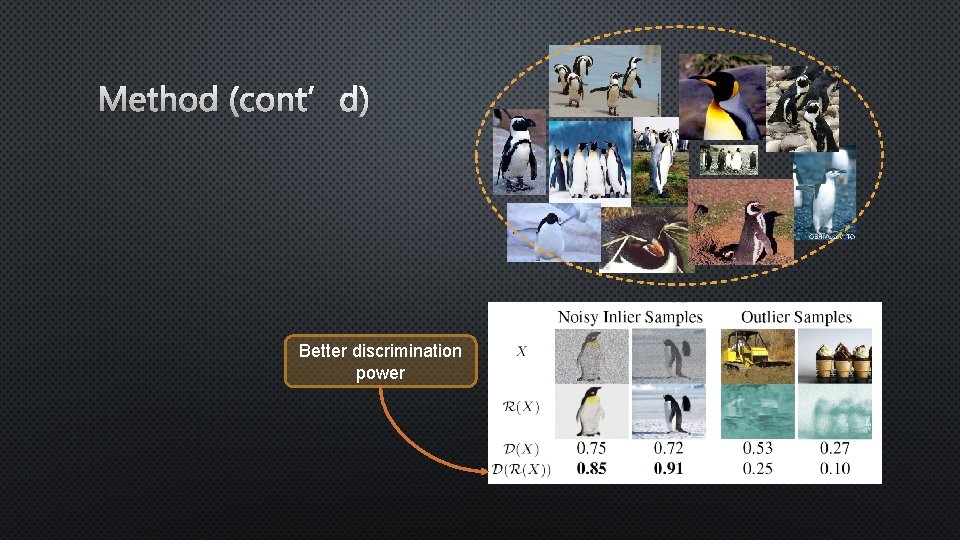

Method (cont’d): better discrimination power

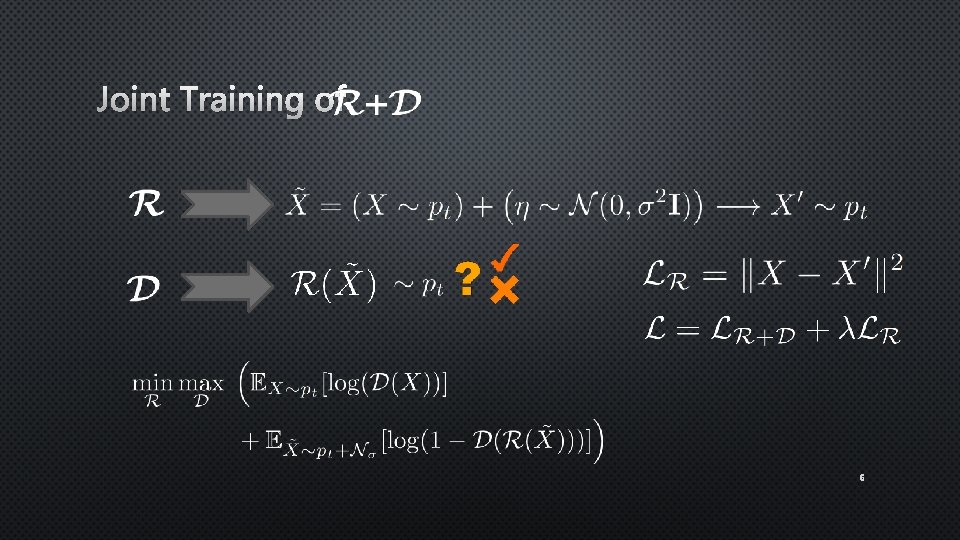

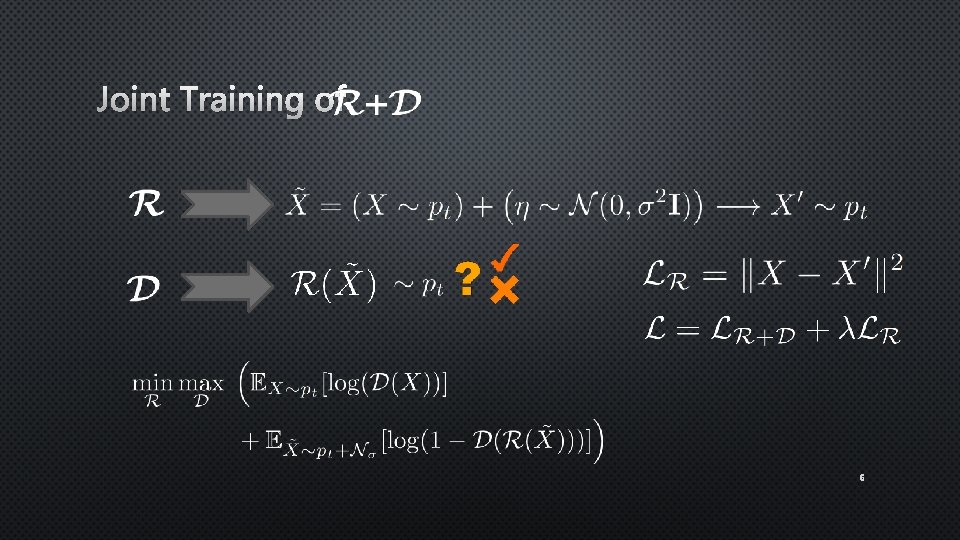

Joint Training of
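As we recall it from the ALOCC paper, the joint objective combines an adversarial term for R and D with a reconstruction term for R. The notation below ($p_t$ for the target-class distribution, $\mathcal{N}_{\sigma}$ for Gaussian noise with standard deviation $\sigma$, $\tilde{X} = X + \eta$ for the noisy input, $\lambda$ for the weighting factor) is our reconstruction and should be checked against the paper.

```latex
\begin{aligned}
\mathcal{L}_{R+D} &= \min_{R}\max_{D}\Big(\mathbb{E}_{X\sim p_t}\big[\log D(X)\big]
  + \mathbb{E}_{\tilde{X}\sim p_t+\mathcal{N}_{\sigma}}\big[\log\big(1 - D(R(\tilde{X}))\big)\big]\Big),\\
\mathcal{L}_{R} &= \lVert X - X' \rVert^{2}, \qquad X' = R(\tilde{X}),\\
\mathcal{L} &= \mathcal{L}_{R+D} + \lambda\,\mathcal{L}_{R}.
\end{aligned}
```

D maximizes the first line by scoring clean target samples high and reconstructions low; R minimizes it by producing reconstructions D accepts, while $\mathcal{L}_{R}$ keeps those reconstructions close to the clean input.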

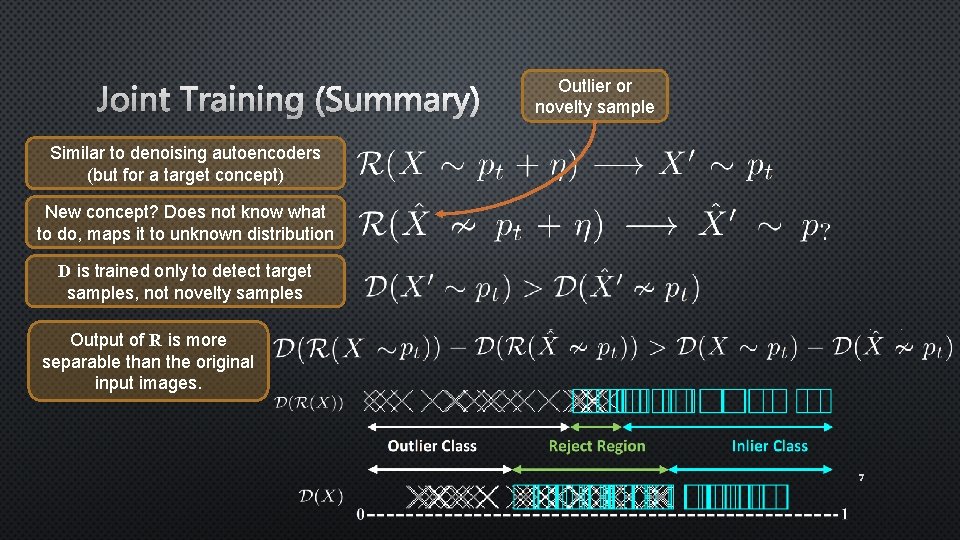

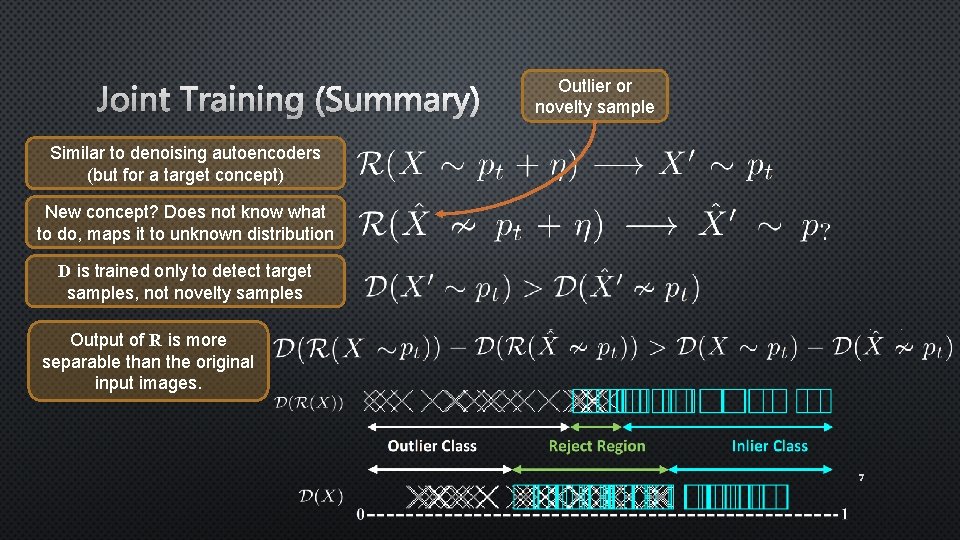

Joint Training (Summary)

- R is similar to a denoising autoencoder, but for a target concept.
- Given an outlier or novelty sample (a new concept), R does not know what to do and maps it to an unknown distribution.
- D is trained only to detect target samples, not novelty samples.
- The output of R is more separable than the original input images.
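The denoising-autoencoder analogy can be sketched as follows, assuming (as we understand the method) that R receives a Gaussian-corrupted input $\tilde{X} = X + \eta$ and is scored against the clean $X$. Images are flattened to plain lists here, and the function names and `sigma` value are hypothetical.

```python
import random


def add_gaussian_noise(x, sigma, rng=None):
    """Return X~ = X + eta, with eta ~ N(0, sigma^2) drawn independently per pixel.

    x: flat list of pixel intensities; sigma: noise standard deviation.
    """
    if rng is None:
        rng = random.Random()
    return [v + rng.gauss(0.0, sigma) for v in x]


def reconstruction_loss(x, x_rec):
    """Squared L2 error ||X - X'||^2 between the clean input X and R's output X'."""
    if len(x) != len(x_rec):
        raise ValueError("input and reconstruction must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_rec))


# Like a denoising autoencoder: R sees the noisy X~ but is scored against the clean X.
clean = [0.0, 0.5, 1.0]
noisy = add_gaussian_noise(clean, sigma=0.1, rng=random.Random(0))
print(reconstruction_loss(clean, noisy))
```

Because R only ever learns to undo noise on target-class inputs, it has no learned mapping for a novel concept, which is one way to read "maps it to unknown distribution".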

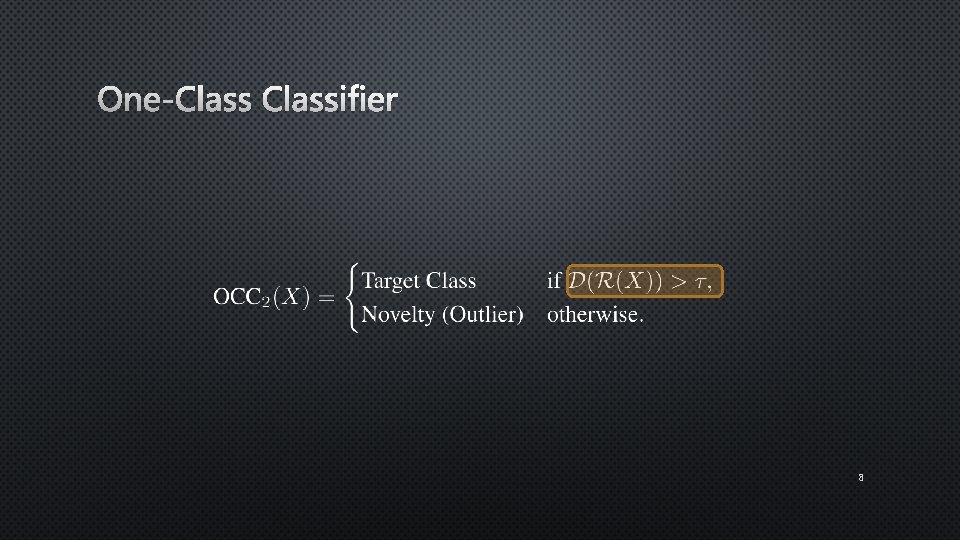

One-Classifier
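As we recall from the paper, the one-classifier labels an input as the target class when $D(R(X)) > \tau$ and as novelty otherwise. The sketch below encodes that rule with the two networks passed in as plain callables; `identity` and `mean_score` are toy stand-ins, not trained networks, and the default `tau=0.5` is an assumption.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def one_class_decision(
    x: Vector,
    reconstruct: Callable[[Vector], Vector],
    detect: Callable[[Vector], float],
    tau: float = 0.5,
) -> str:
    """Return 'target' if D(R(x)) > tau, else 'novelty'.

    reconstruct stands in for R, detect for D (a probability that its input
    belongs to the target class); tau is the decision threshold.
    """
    score = detect(reconstruct(x))
    return "target" if score > tau else "novelty"


def identity(v: Vector) -> Vector:
    """Toy stand-in for R (a trained R would be an encoder-decoder network)."""
    return v


def mean_score(v: Vector) -> float:
    """Toy stand-in for D: average intensity, assumed to lie in [0, 1]."""
    return sum(v) / len(v)


print(one_class_decision([0.9, 0.7], identity, mean_score))
print(one_class_decision([0.1, 0.2], identity, mean_score))
```

Passing R and D separately mirrors the "compete during training, collaborate during testing" idea: at test time D scores R's output rather than the raw input.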

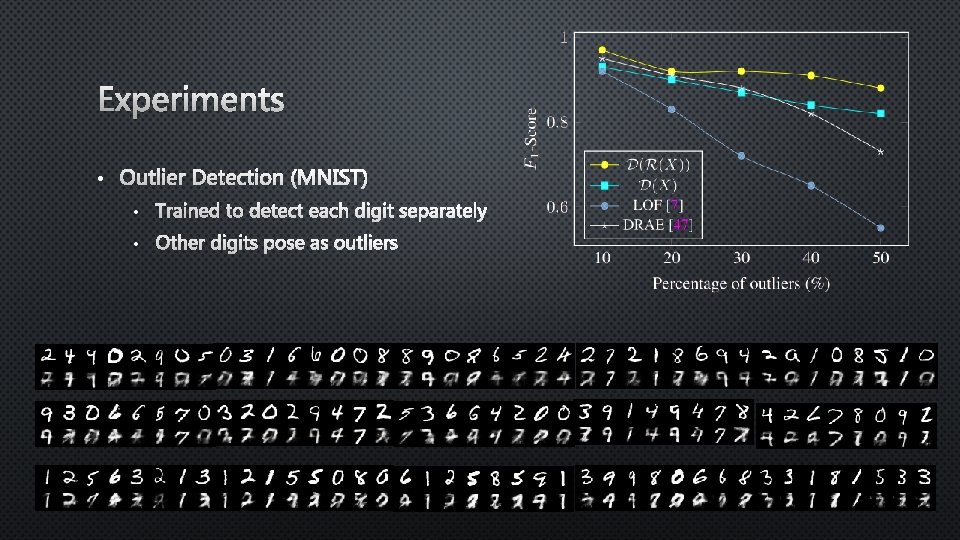

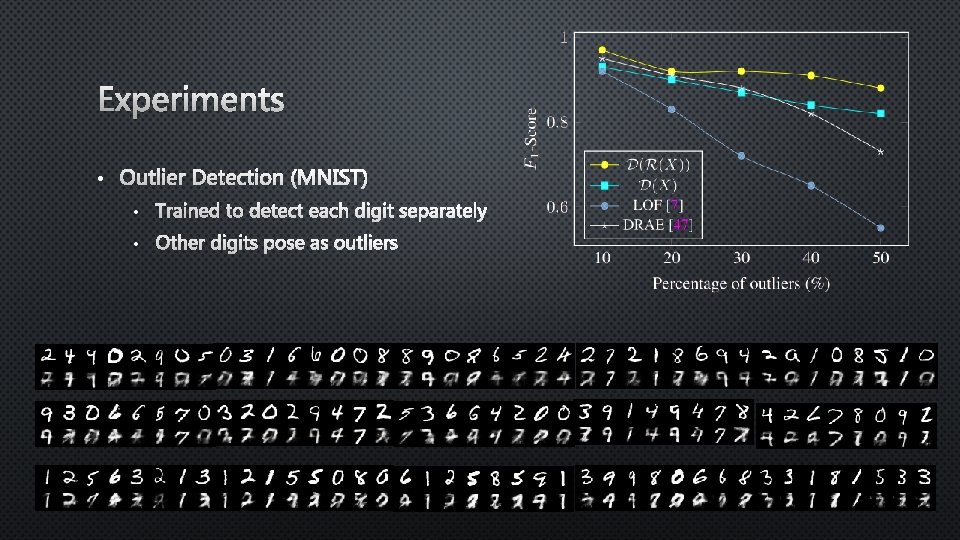

Experiments

- Outlier Detection (MNIST)
- Trained to detect each digit separately
- Other digits pose as outliers
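The per-digit protocol can be sketched as one task per digit: train only on the target digit, then label every other digit as an outlier at test time. The helper names and the placeholder image values are hypothetical; loading MNIST itself is out of scope here.

```python
def one_vs_rest_labels(digit_labels, target_digit):
    """Test-time labels: 1 for inliers (the target digit), 0 for outliers (any other digit)."""
    return [1 if y == target_digit else 0 for y in digit_labels]


def training_subset(images, digit_labels, target_digit):
    """One-class training set: only samples of the target digit, no outliers at all."""
    return [img for img, y in zip(images, digit_labels) if y == target_digit]


def per_digit_tasks(digit_labels):
    """One separate detection task per digit 0-9."""
    return {d: one_vs_rest_labels(digit_labels, d) for d in range(10)}


labels = [1, 7, 1, 3]
print(one_vs_rest_labels(labels, target_digit=1))
print(training_subset(["img_a", "img_b", "img_c", "img_d"], labels, target_digit=1))
```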

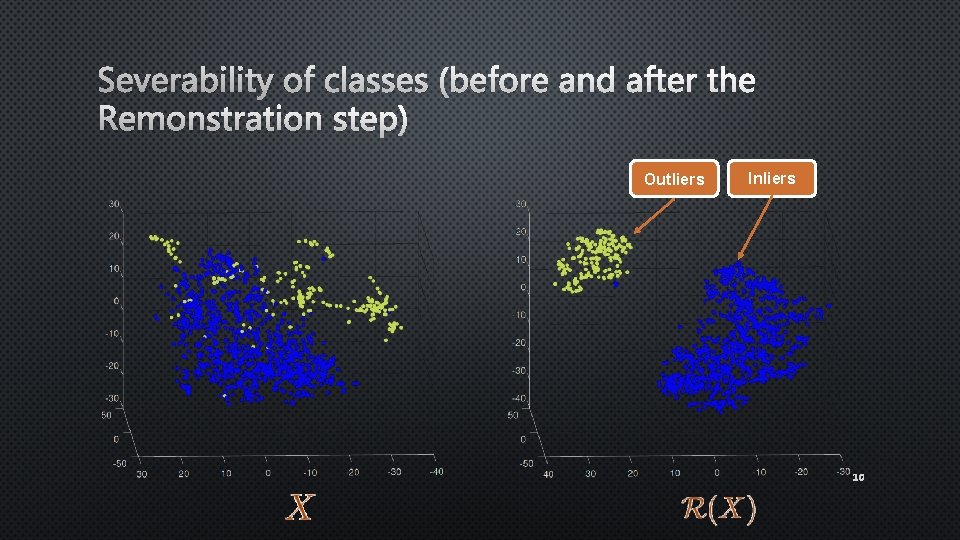

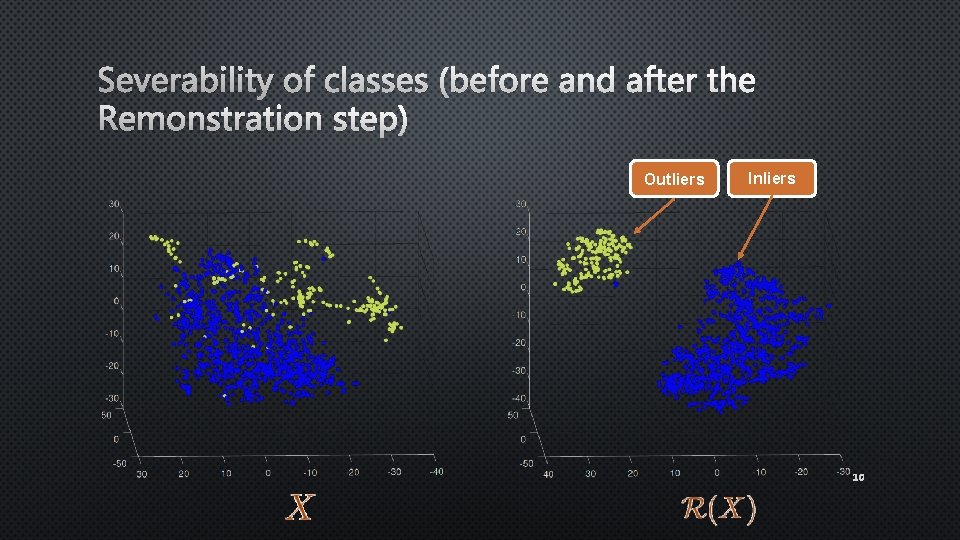

Separability of classes (before and after the reconstruction step)

(Figure legend: Outliers, Inliers)

![Experiments (cont’d): Outlier Detection (Caltech-256)](https://slidetodoc.com/presentation_image/0bf8036022b8a09b2792b9c8b58ae8b3/image-11.jpg)

Experiments (cont’d)

- Outlier Detection (Caltech-256)
- Similar to previous works [52], we repeat the procedure three times and use images from n ∈ {1, 3, 5} randomly chosen categories as inliers (i.e., target).
- Outliers are randomly selected from the “clutter” category, such that each experiment has exactly 50% outliers.

(Figure panels: 1 inlier category, 3 inlier categories, 5 inlier categories)
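A minimal sketch of how one such experiment could be assembled, assuming the dataset is already loaded as a mapping from category name to image identifiers. The key `"clutter"` and the function name are hypothetical; outliers are sampled to match the inlier count so they make up exactly 50% of the data.

```python
import random


def caltech_outlier_split(images_by_category, n_inlier_categories, rng, clutter_key="clutter"):
    """Build one experiment: inliers (label 1) from n randomly chosen categories, plus an
    equal number of outliers (label 0) sampled from the clutter category."""
    candidates = [c for c in images_by_category if c != clutter_key]
    chosen = rng.sample(candidates, n_inlier_categories)
    inliers = [img for c in chosen for img in images_by_category[c]]
    clutter = images_by_category[clutter_key]
    if len(clutter) < len(inliers):
        raise ValueError("not enough clutter images to reach 50% outliers")
    outliers = rng.sample(clutter, len(inliers))
    data = [(img, 1) for img in inliers] + [(img, 0) for img in outliers]
    return chosen, data


toy = {
    "cat": ["cat_0", "cat_1"],
    "dog": ["dog_0"],
    "car": ["car_0", "car_1", "car_2"],
    "clutter": [f"clutter_{i}" for i in range(20)],
}
# Repeat the procedure three times with different random draws (here with n = 1).
for trial in range(3):
    chosen, data = caltech_outlier_split(toy, n_inlier_categories=1, rng=random.Random(trial))
    print(trial, chosen, len(data))
```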

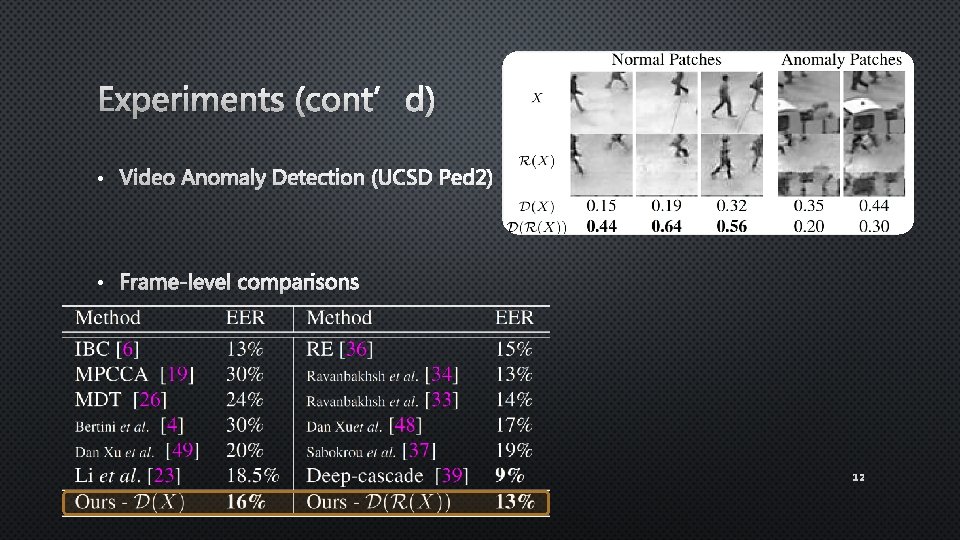

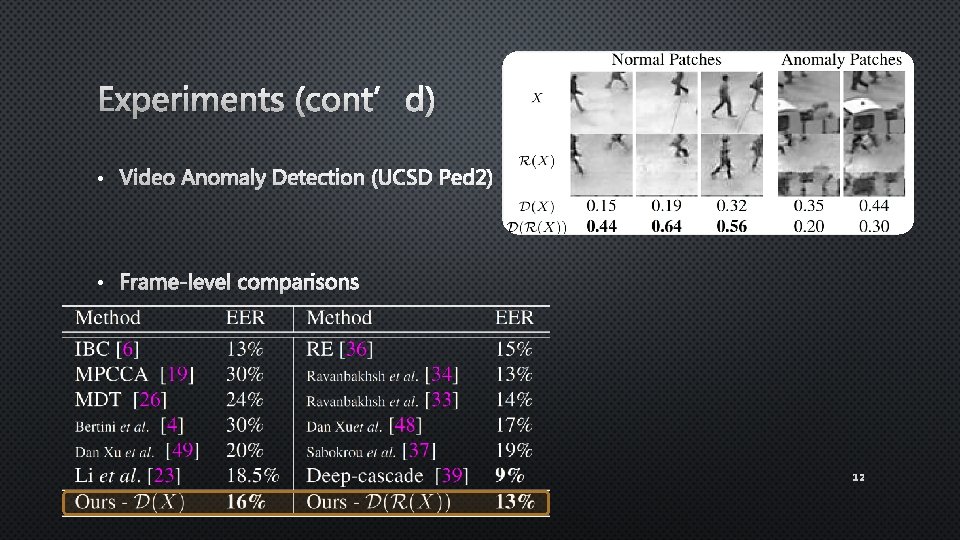

Experiments (cont’d)

- Video Anomaly Detection (UCSD Ped 2)
- Frame-level comparisons
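Frame-level results on UCSD Ped 2 are commonly summarized by the equal error rate (EER); the slide does not name its metric, so treat this as an assumption. The sketch below sweeps thresholds over per-frame anomaly scores; deriving a score such as 1 − D(R(frame)) from the one-classifier is likewise our assumption, and the scores shown are made up.

```python
def frame_level_eer(scores, labels):
    """Approximate the equal error rate (EER) for frame-level anomaly detection.

    scores: per-frame anomaly scores (higher = more anomalous).
    labels: per-frame ground truth, 1 = anomalous frame, 0 = normal frame.
    Tries every observed score as a threshold and returns the mean of the false
    positive rate (FPR) and false negative rate (FNR) where the two are closest.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need both normal and anomalous frames")
    best_gap, best_eer = float("inf"), None
    for t in sorted(set(scores)):
        # A frame is flagged anomalous when its score is >= the threshold t.
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        fn = sum(1 for s, y in zip(scores, labels) if s < t and y == 1)
        fpr, fnr = fp / negatives, fn / positives
        if abs(fpr - fnr) < best_gap:
            best_gap, best_eer = abs(fpr - fnr), (fpr + fnr) / 2
    return best_eer


# Hypothetical scores for four frames, two normal (0) and two anomalous (1).
print(frame_level_eer([0.1, 0.6, 0.4, 0.9], [0, 0, 1, 1]))
```

Lower EER is better; a perfectly separating score gives 0.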

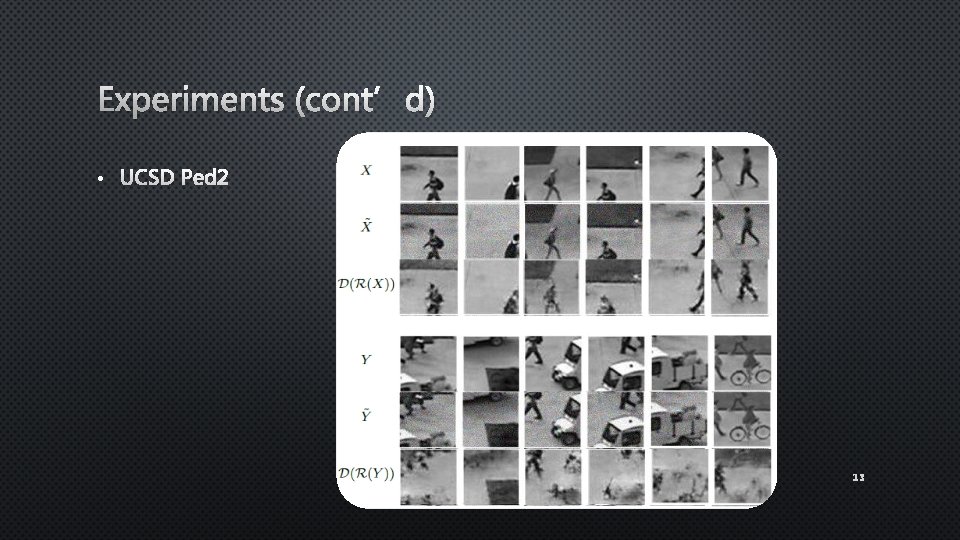

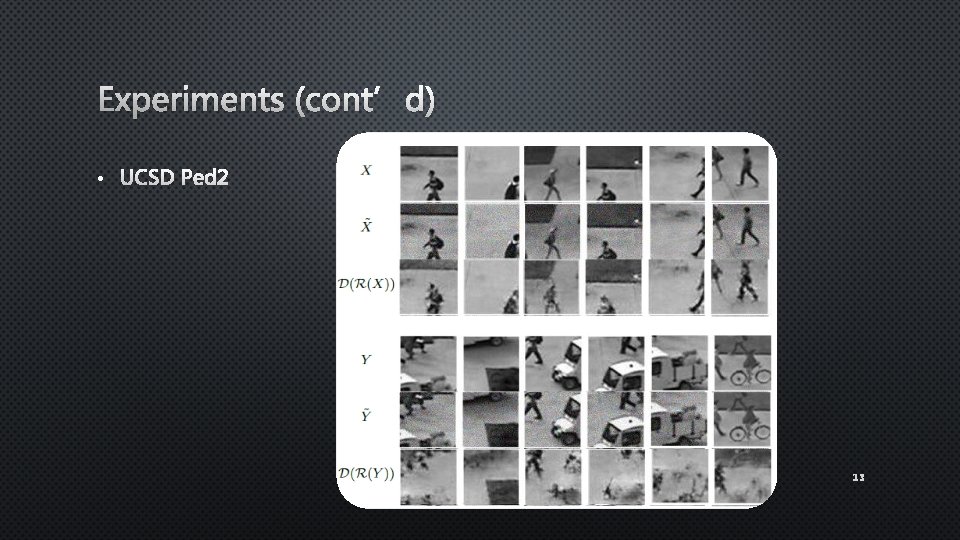

Experiments (cont’d)

- UCSD Ped 2

Results (cont’d)

- UMN Video Anomaly Detection Dataset

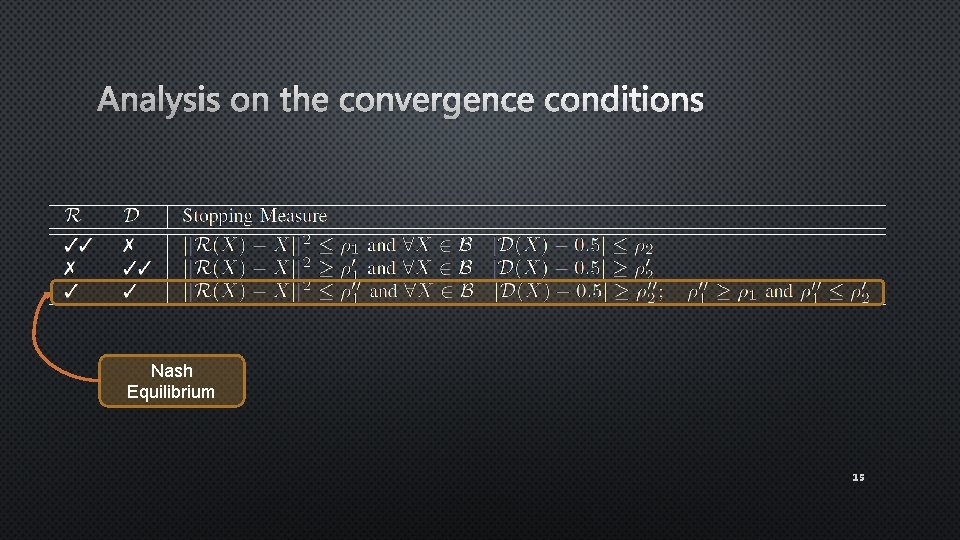

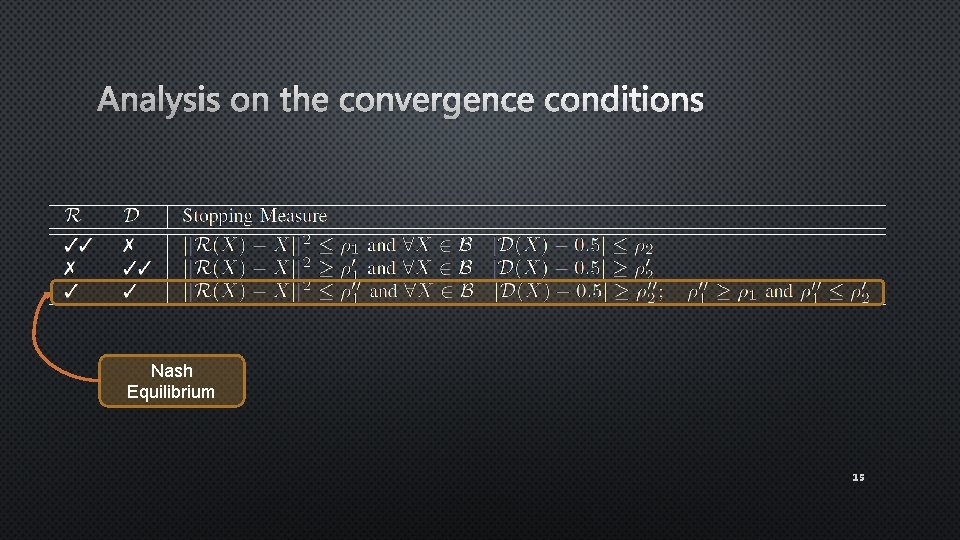

Analysis of the Convergence Conditions (Nash Equilibrium)

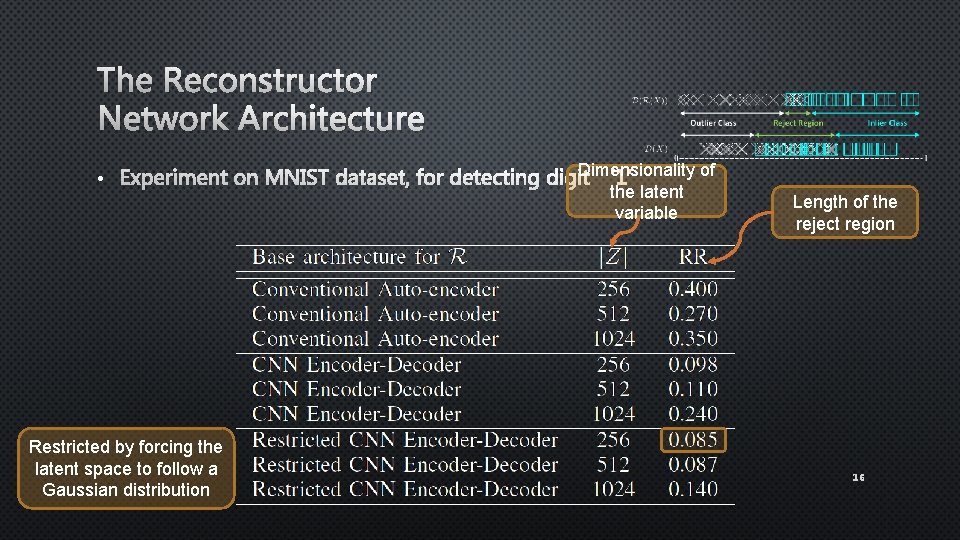

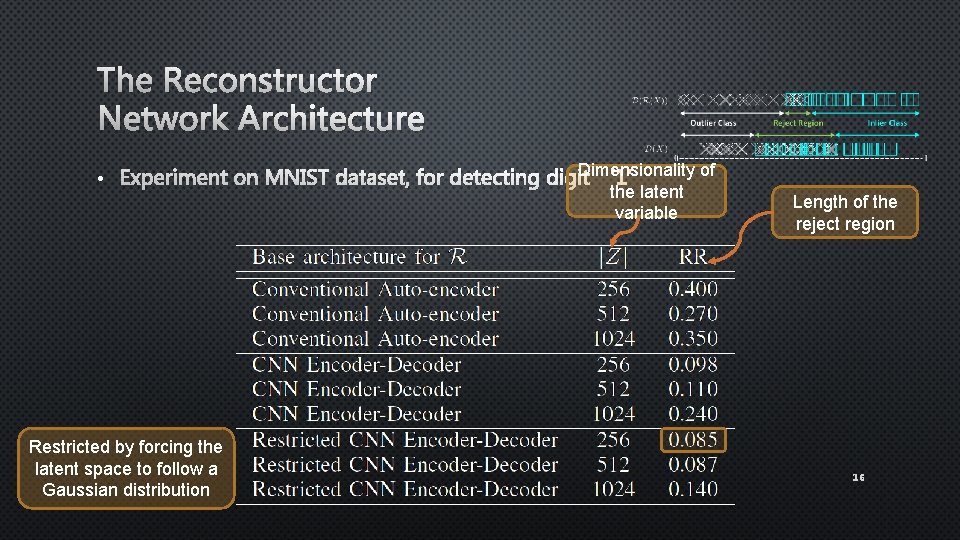

The Reconstructor Network Architecture

- Dimensionality of the latent variable: experiment on the MNIST dataset, detecting digit ‘1’
- Restricted by forcing the latent space to follow a Gaussian distribution
- Length of the reject region

Conclusion

- We trained the first end-to-end trainable deep network for anomaly detection in images and videos.
- We jointly trained two networks that compete during training but collaborate when evaluating any test sample.
- Future directions:
  - Exploring other types of noise in the reconstructor network
  - Gaining insights into the latent representation
  - The networks help each other learn better; are there other applications?

Thank you! Any questions?