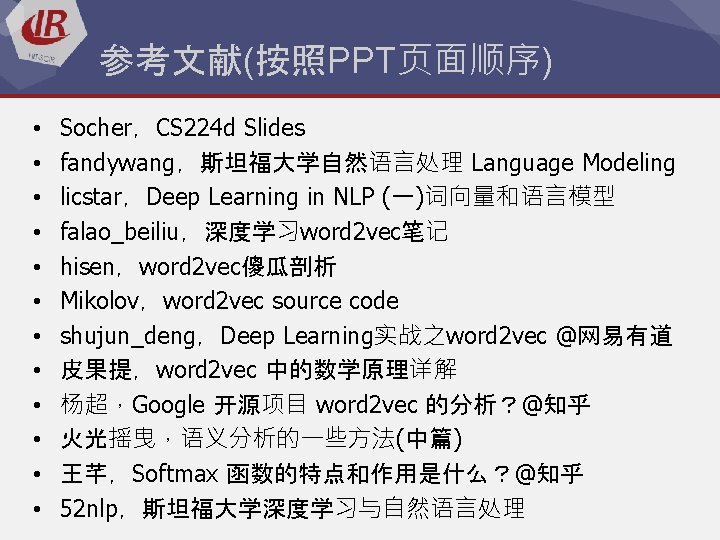

Advanced word vector representations

- Slides: 54

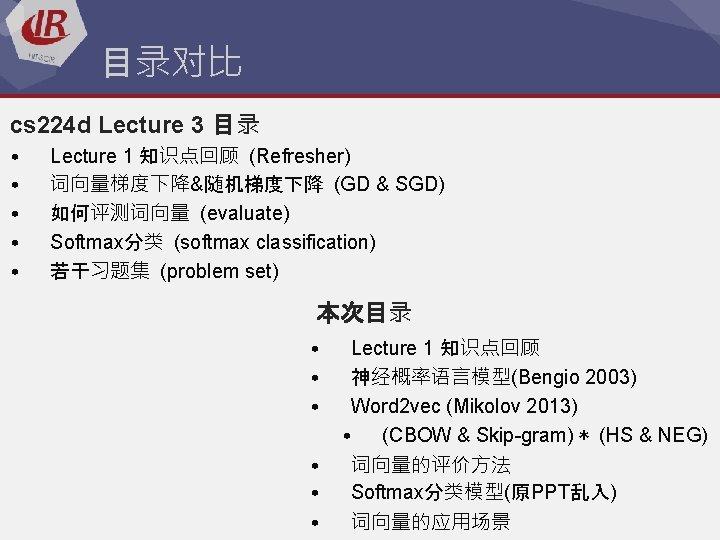

Revisiting deep learning word vector representations (Advanced word vector representations)

Speaker: 李泽魁

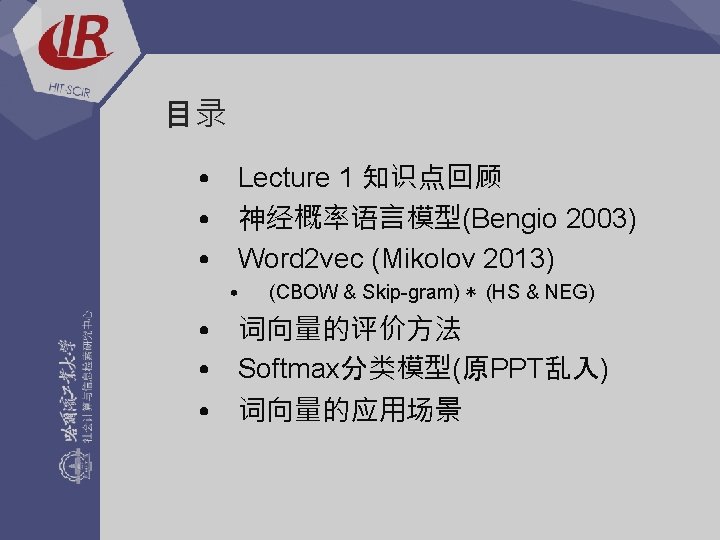

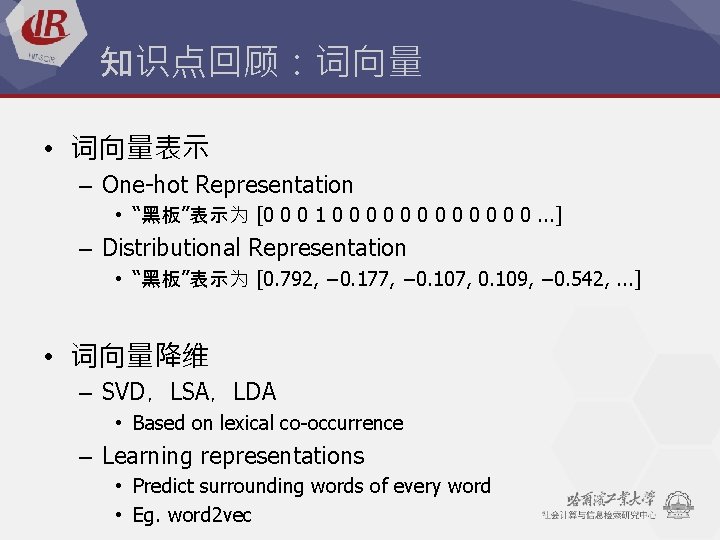

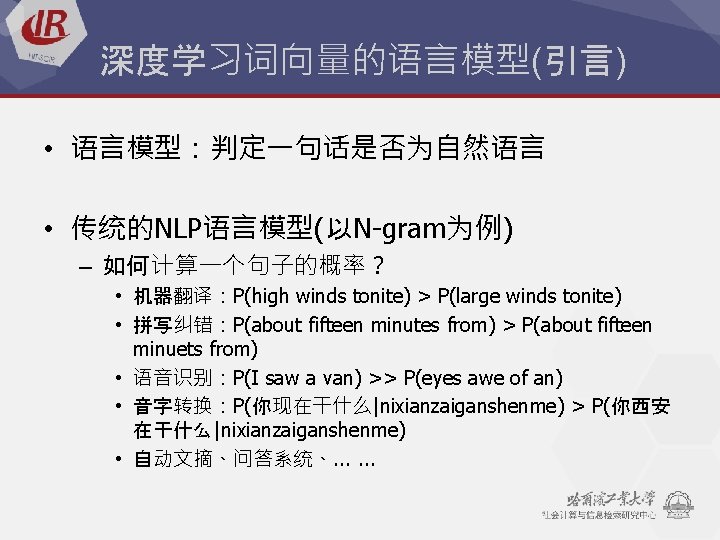

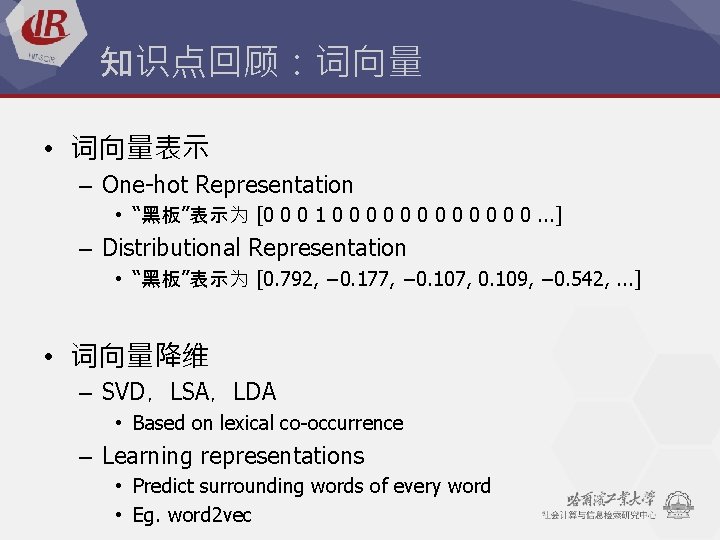

Review: word vectors

- Word vector representations
  - One-hot representation
    - "黑板" (blackboard) is represented as [0 0 0 1 0 0 0 ...]
  - Distributional representation
    - "黑板" is represented as [0.792, −0.177, −0.107, 0.109, −0.542, ...]
- Dimensionality reduction for word vectors
  - SVD, LSA, LDA
    - Based on lexical co-occurrence
  - Learning representations
    - Predict the surrounding words of every word
    - E.g. word2vec
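To make the contrast between the two representations concrete, here is a minimal sketch. The three-word vocabulary and the dense values are made up for illustration and are not taken from the slides. It shows that one-hot vectors of different words always have cosine similarity 0, while dense vectors can place related words close together.

```python
import math

def one_hot(index, size):
    """Return a one-hot vector with a single 1.0 at `index`."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical vocabulary, for illustration only.
vocab = ["blackboard", "whiteboard", "banana"]
one_hot_vectors = {w: one_hot(i, len(vocab)) for i, w in enumerate(vocab)}

# Made-up dense vectors; real ones would be learned from a corpus.
dense_vectors = {
    "blackboard": [0.79, -0.18, -0.11],
    "whiteboard": [0.75, -0.20, -0.05],
    "banana": [-0.30, 0.60, 0.40],
}

sim_one_hot = cosine(one_hot_vectors["blackboard"], one_hot_vectors["whiteboard"])
sim_dense_close = cosine(dense_vectors["blackboard"], dense_vectors["whiteboard"])
sim_dense_far = cosine(dense_vectors["blackboard"], dense_vectors["banana"])

print(sim_one_hot, sim_dense_close, sim_dense_far)
```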

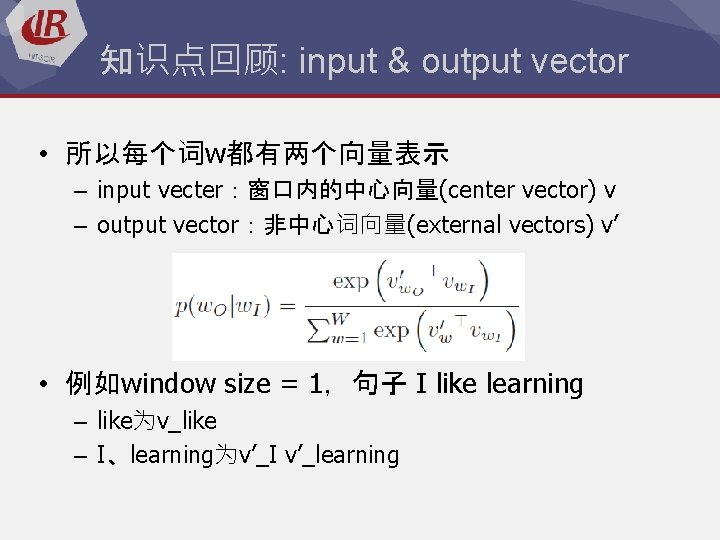

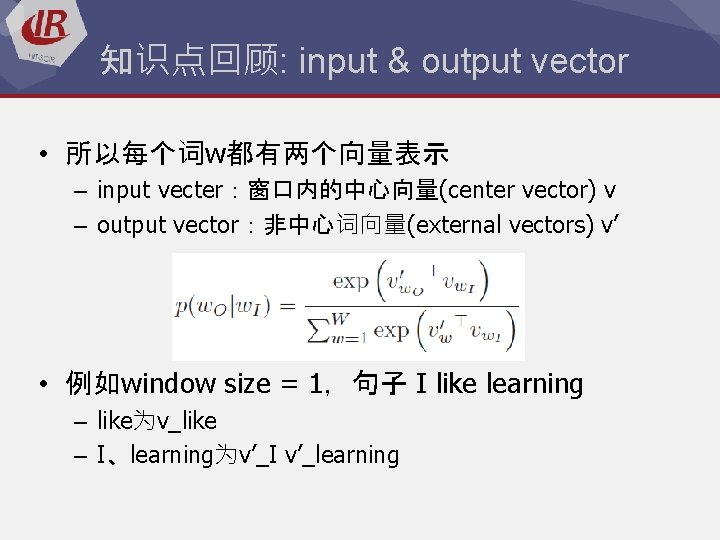

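Review: input & output vectors

- Every word w therefore has two vector representations
  - Input vector: the vector used when the word is the center of the window (center vector), v
  - Output vector: the vector used when the word is not the center word (external vector), v'
- For example, with window size = 1 and the sentence "I like learning"
  - "like" uses v_like
  - "I" and "learning" use v'_I and v'_learning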

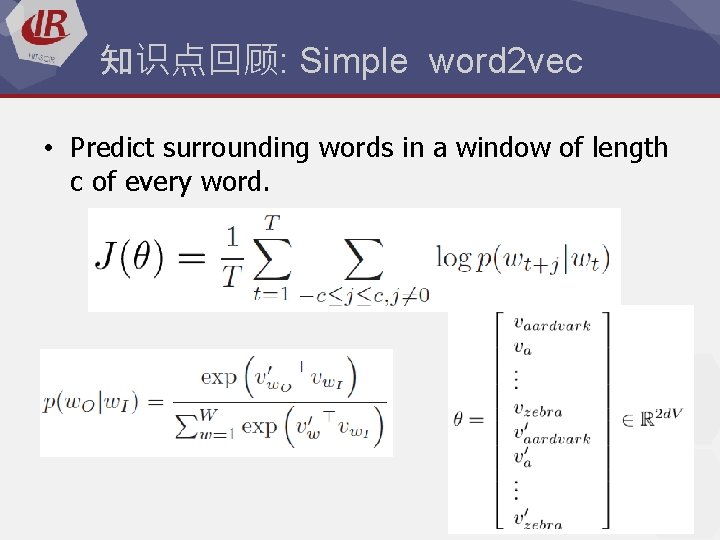

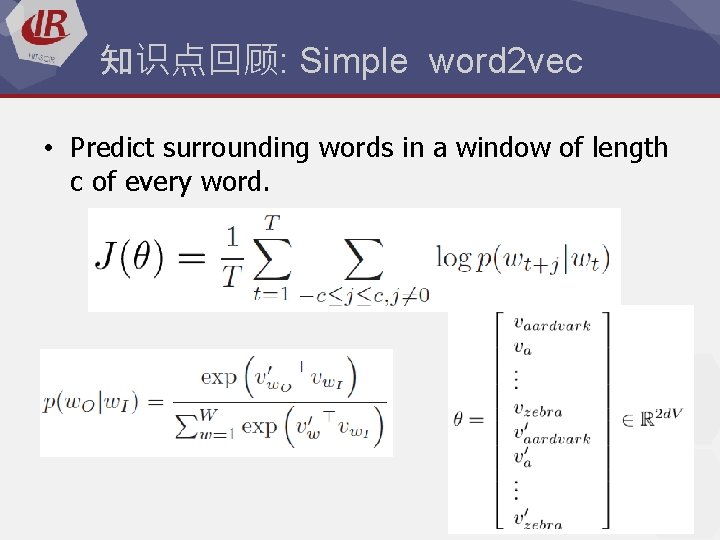

Review: simple word2vec

- For every word, predict the surrounding words in a window of length c.
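The windowing step can be sketched as follows. This is an illustrative helper, not code from the slides: the name `window_pairs` is hypothetical, and it assumes that c counts the words taken on each side of the center word.

```python
def window_pairs(tokens, c):
    """List (center, context) pairs for every word, using c words on each side."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - c)
        hi = min(len(tokens), i + c + 1)
        for j in range(lo, hi):
            if j != i:  # the center word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

pairs = window_pairs(["I", "like", "learning"], c=1)
for center, context in pairs:
    print(center, "->", context)
```

With window size 1, "like" is paired with both "I" and "learning", while each edge word is paired only with "like".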

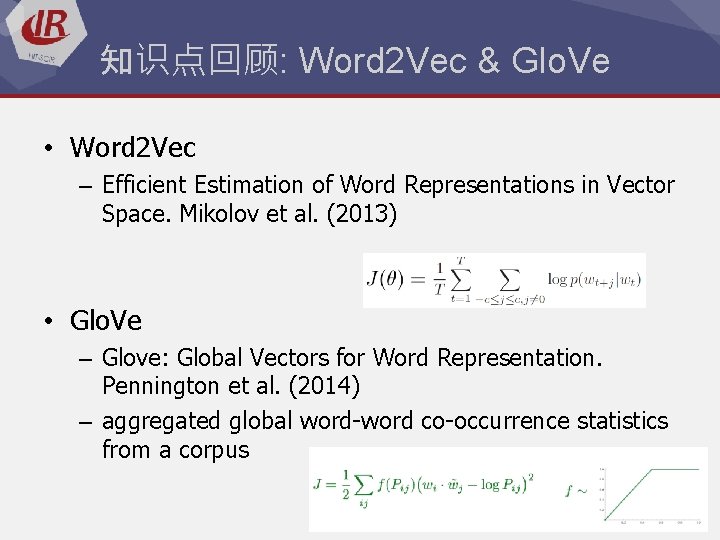

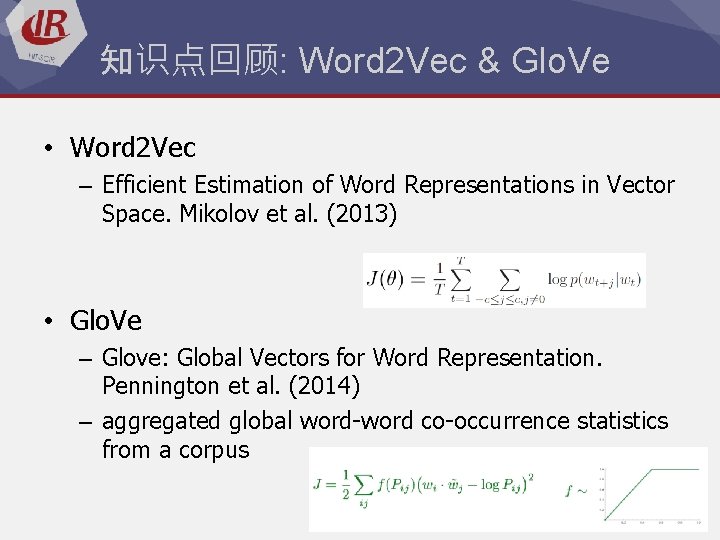

Review: Word2Vec & GloVe

- Word2Vec
  - Efficient Estimation of Word Representations in Vector Space. Mikolov et al. (2013)
- GloVe
  - GloVe: Global Vectors for Word Representation. Pennington et al. (2014)
  - Trained on aggregated global word-word co-occurrence statistics from a corpus
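As a rough illustration of what "aggregated global word-word co-occurrence statistics" means, the sketch below counts how often two words appear within a fixed window across a whole corpus. The two-sentence corpus and the function name are assumptions for this example. The counts are unweighted for simplicity, whereas GloVe itself down-weights more distant pairs.

```python
from collections import Counter

def cooccurrence_counts(sentences, window):
    """Count (word, context) co-occurrences within `window` words on each side."""
    counts = Counter()
    for tokens in sentences:
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts

# Hypothetical toy corpus, for illustration only.
corpus = [["I", "like", "learning"], ["I", "like", "NLP"]]
counts = cooccurrence_counts(corpus, window=1)

for pair, n in sorted(counts.items()):
    print(pair, n)
```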

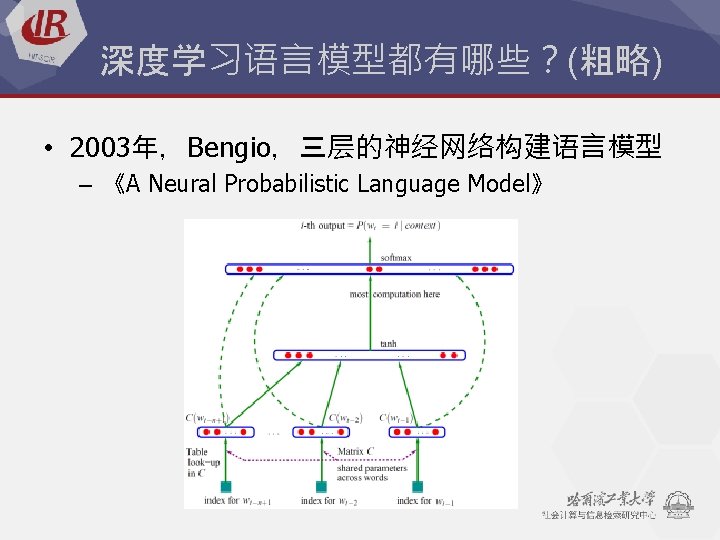

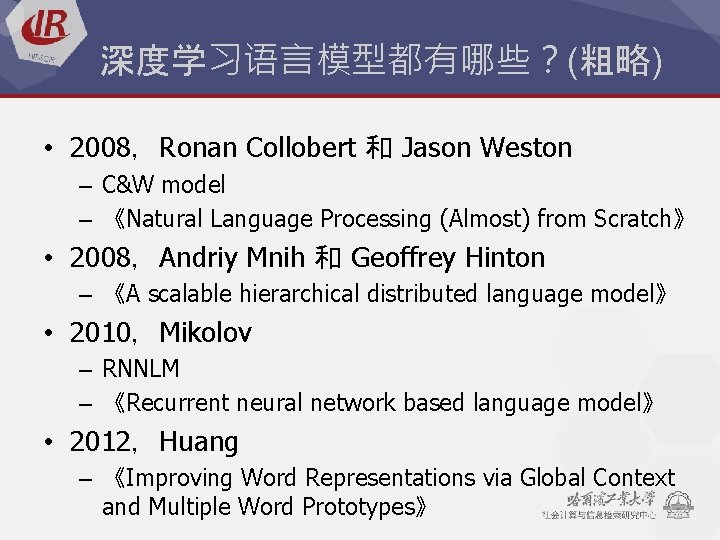

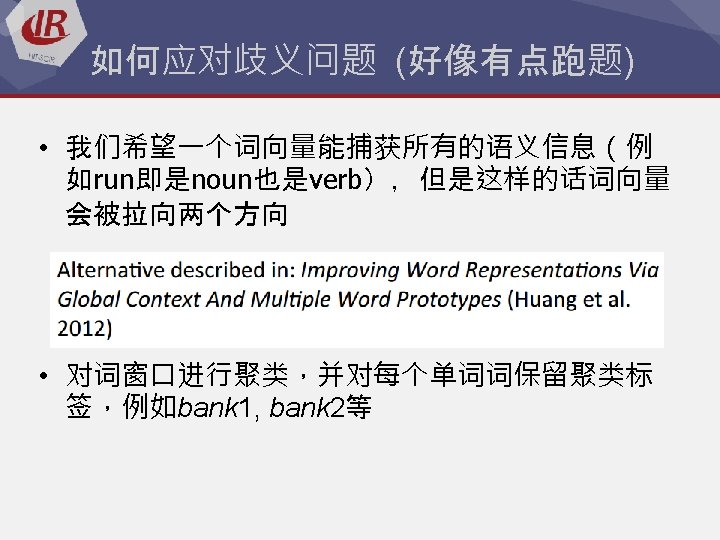

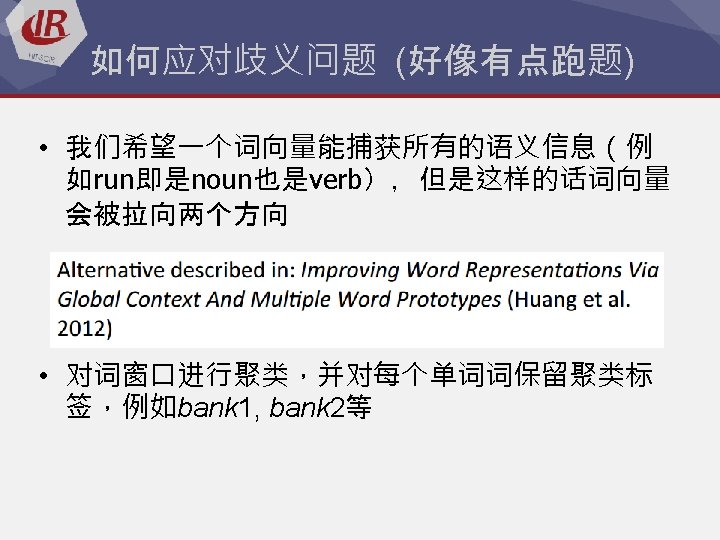

Which deep learning language models are there? (a rough list)

- 2008, Ronan Collobert and Jason Weston
  - C&W model
  - "Natural Language Processing (Almost) from Scratch"
- 2008, Andriy Mnih and Geoffrey Hinton
  - "A Scalable Hierarchical Distributed Language Model"
- 2010, Mikolov
  - RNNLM
  - "Recurrent neural network based language model"
- 2012, Huang
  - "Improving Word Representations via Global Context and Multiple Word Prototypes"

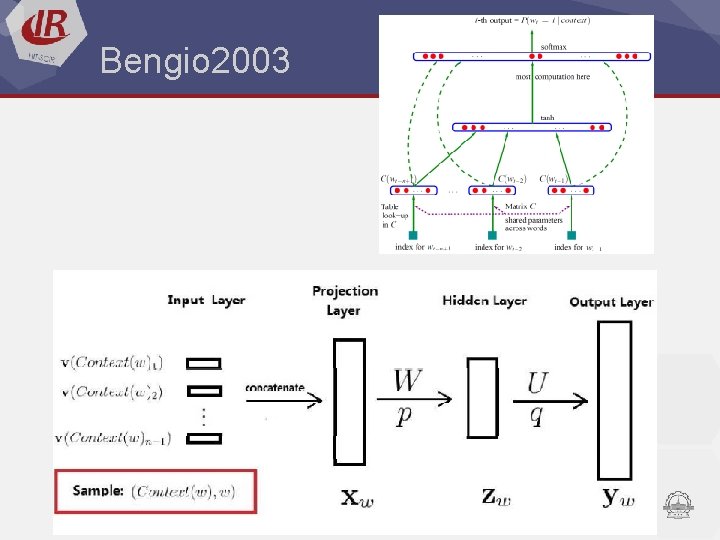

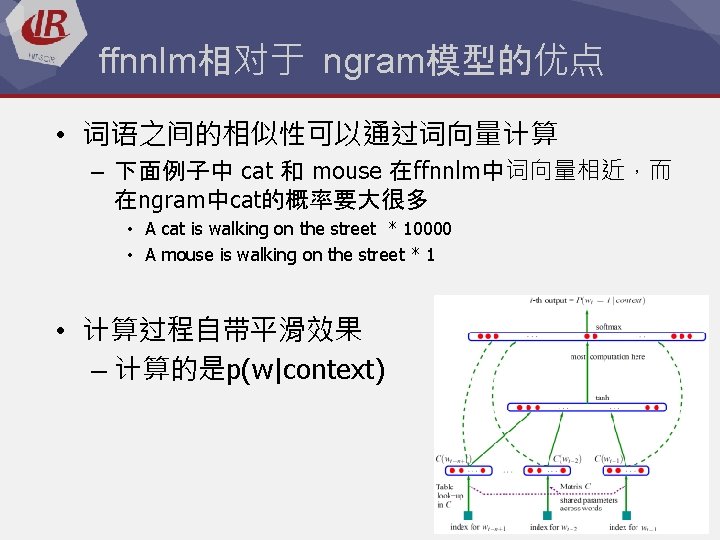

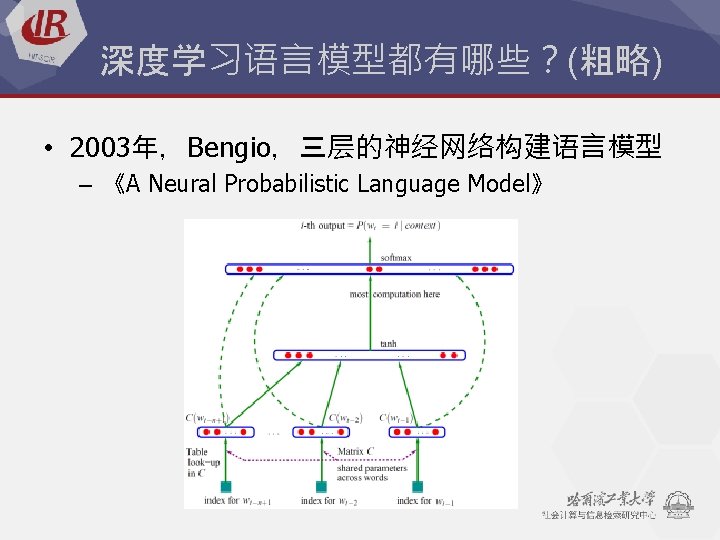

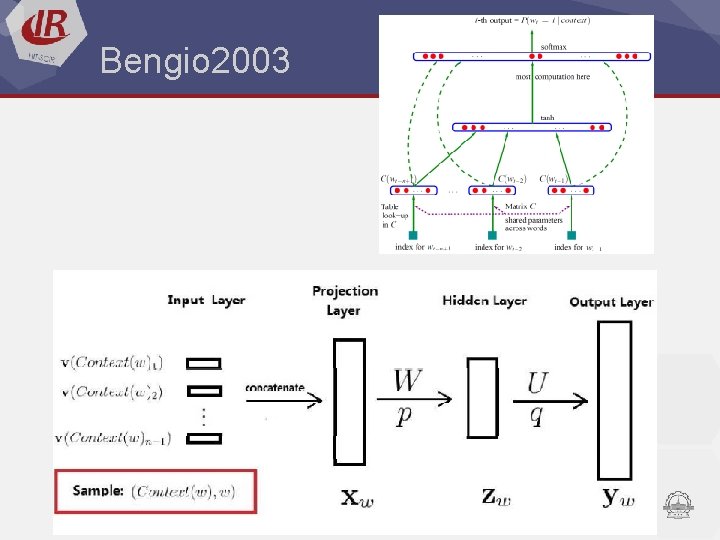

Bengio 2003

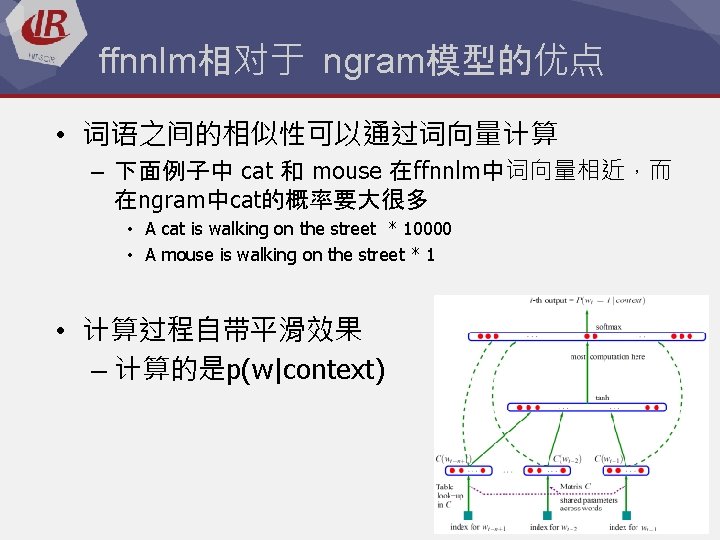

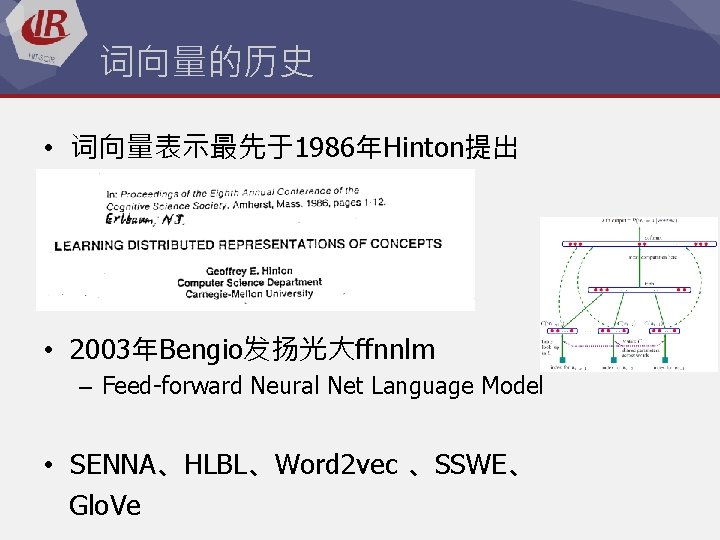

History of word vectors

- Word vector representations were first proposed by Hinton in 1986
- In 2003, Bengio developed the idea further with the FFNNLM
  - Feed-forward Neural Net Language Model
- SENNA, HLBL, Word2vec, SSWE, GloVe

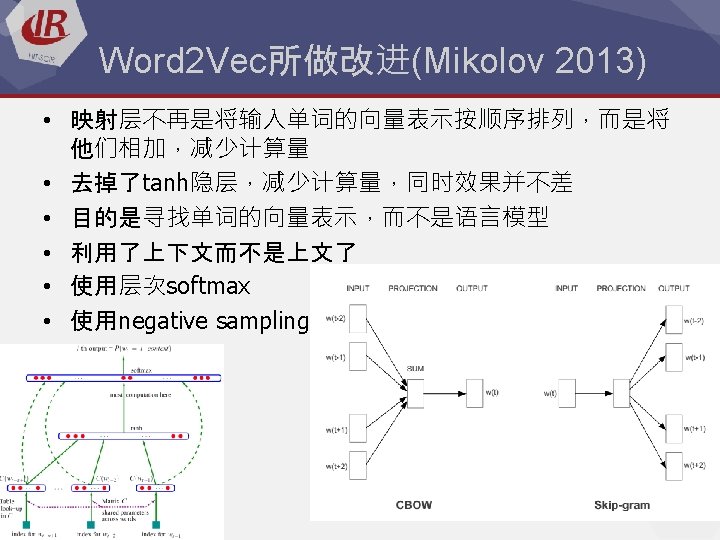

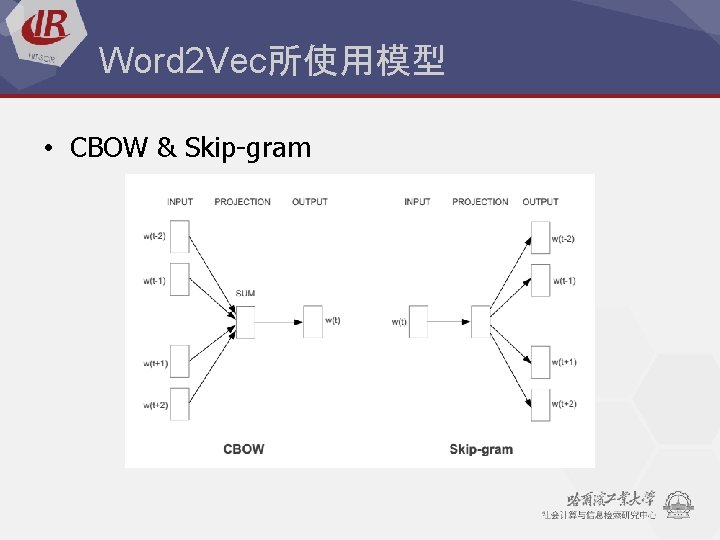

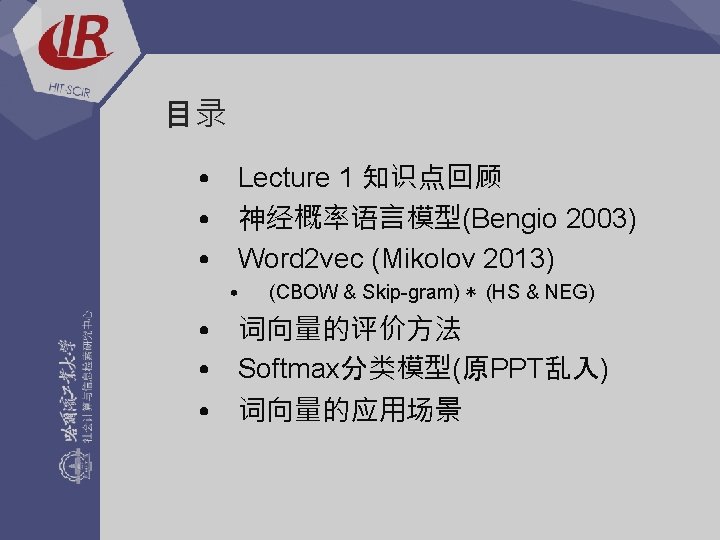

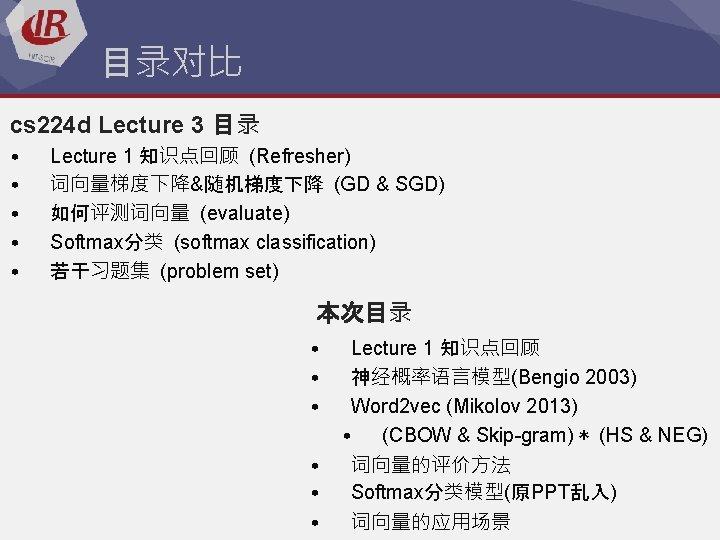

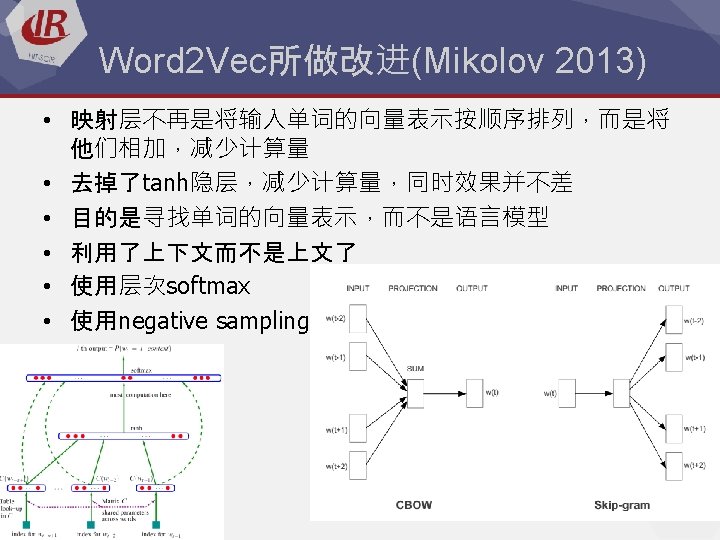

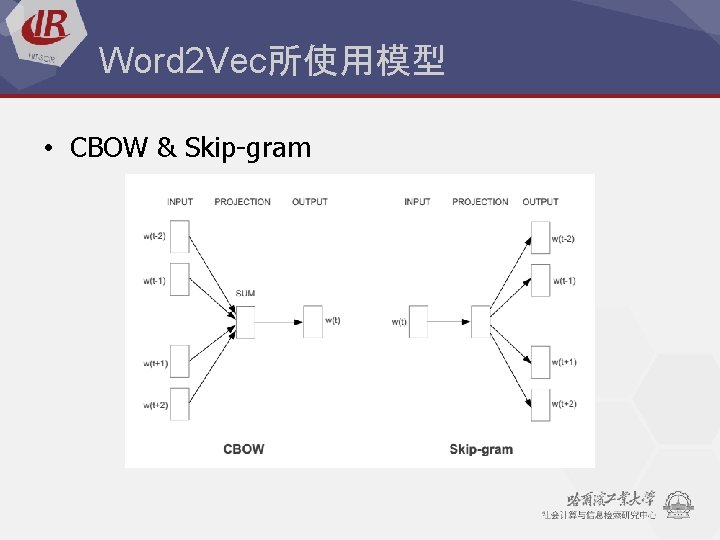

Models used by Word2Vec

- CBOW & Skip-gram

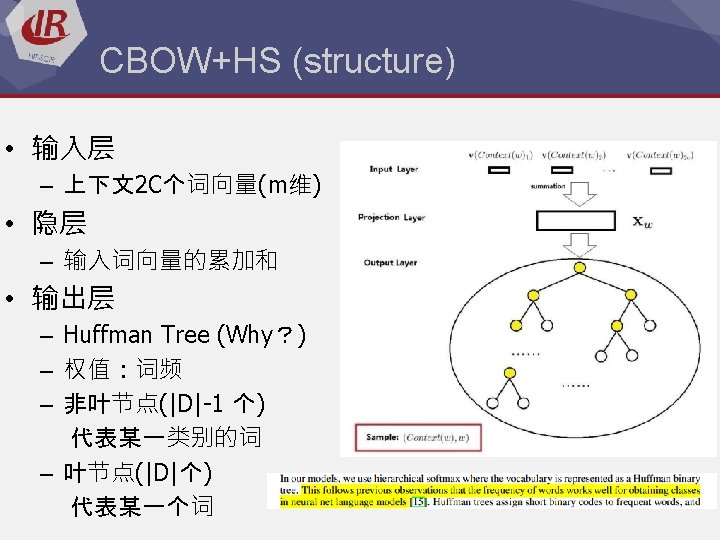

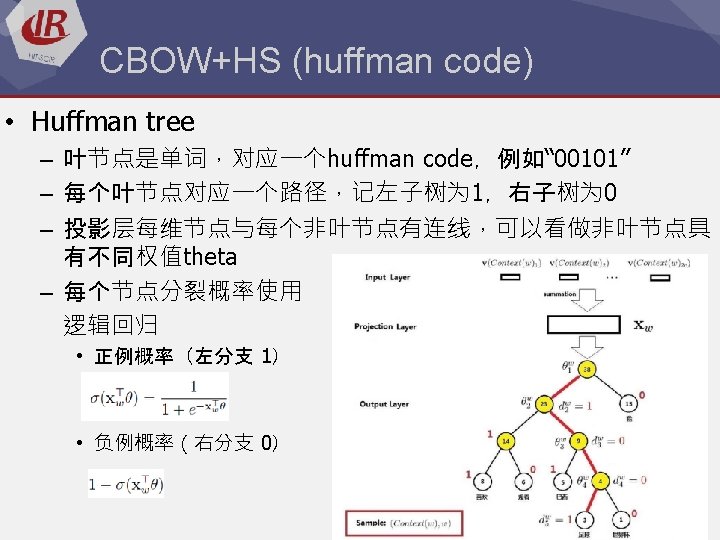

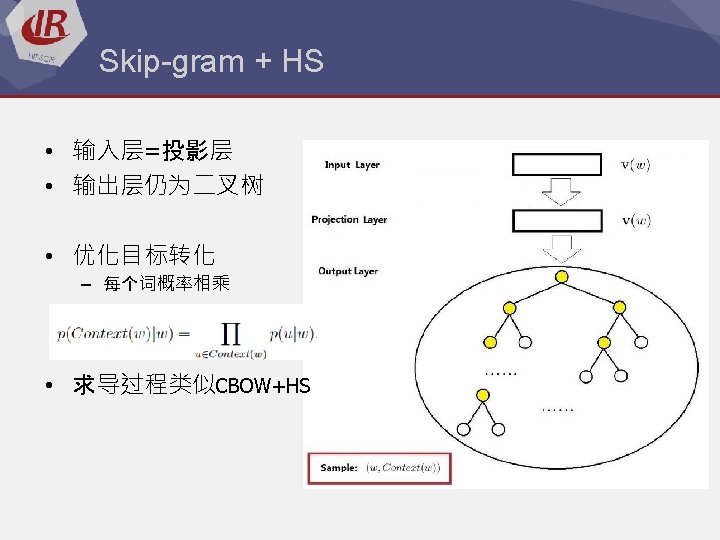

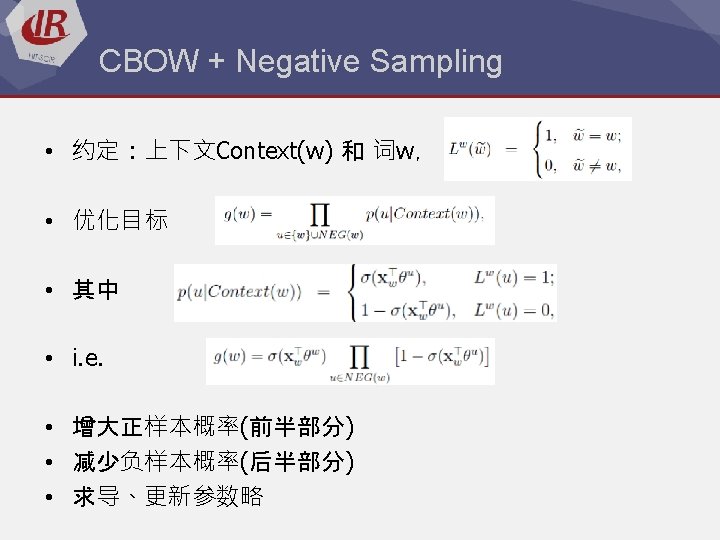

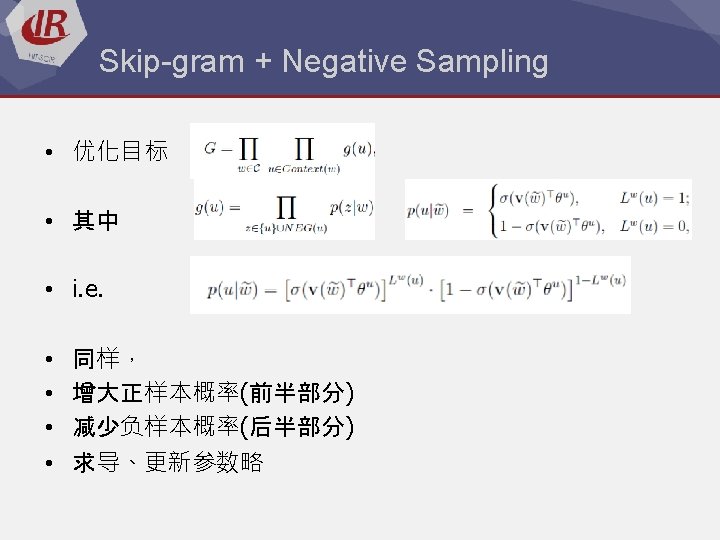

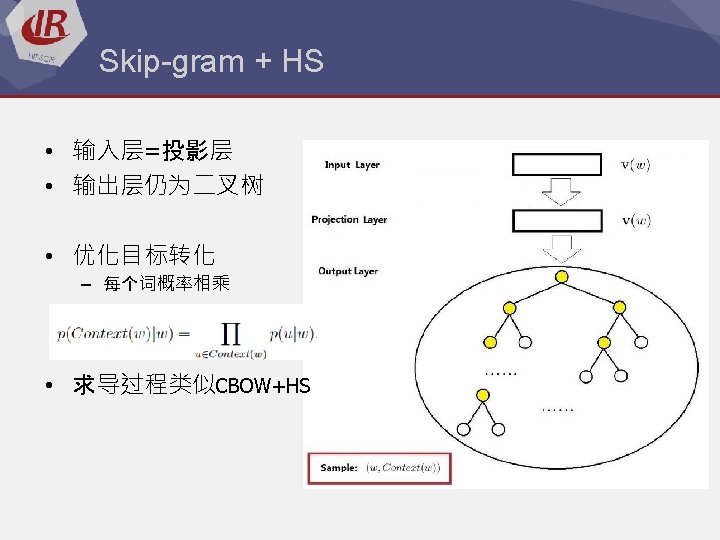

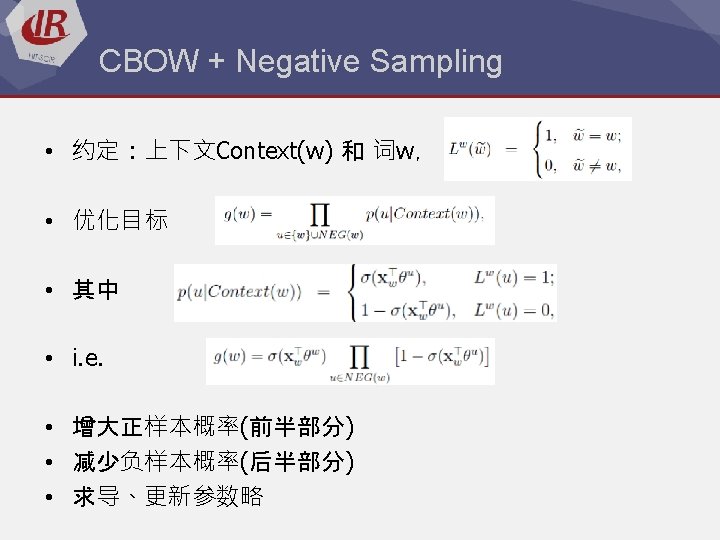

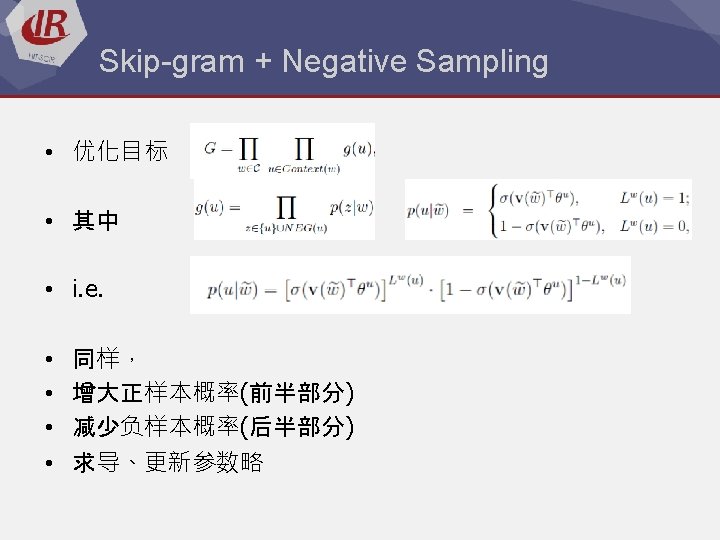

(CBOW & Skip-gram) × 2

- Two models (choose one)
  - CBOW (Continuous Bag-of-Words Model)
  - Skip-gram (Continuous Skip-gram Model)
- Two training frameworks (choose one)
  - Hierarchical Softmax
  - Negative Sampling

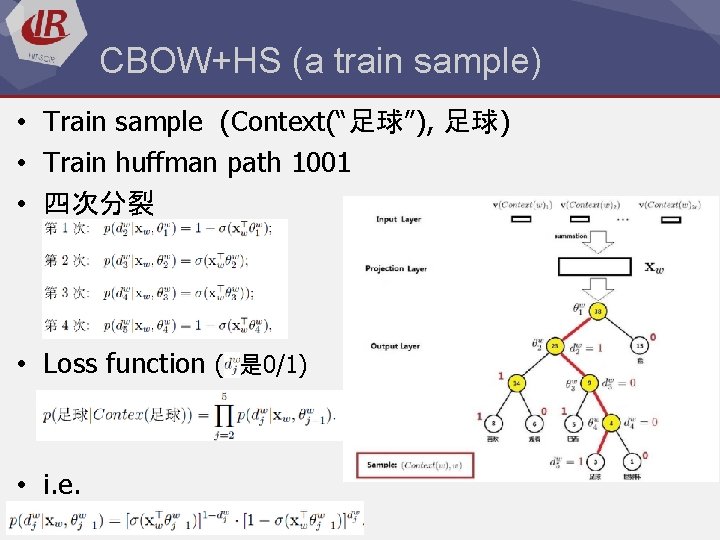

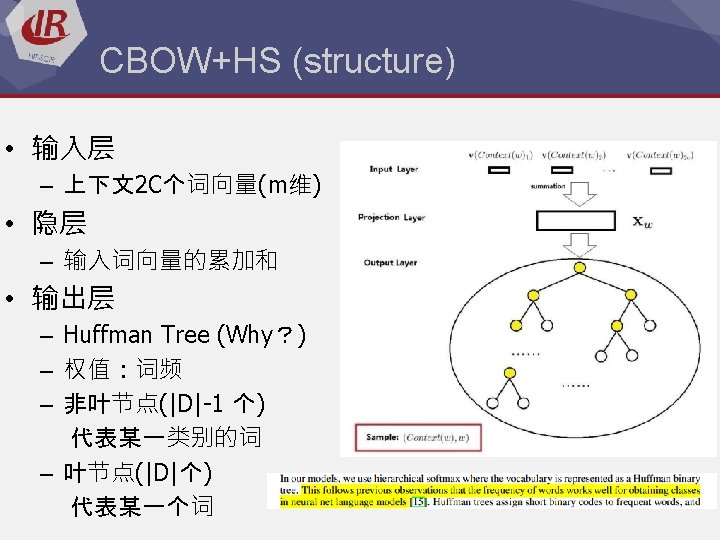

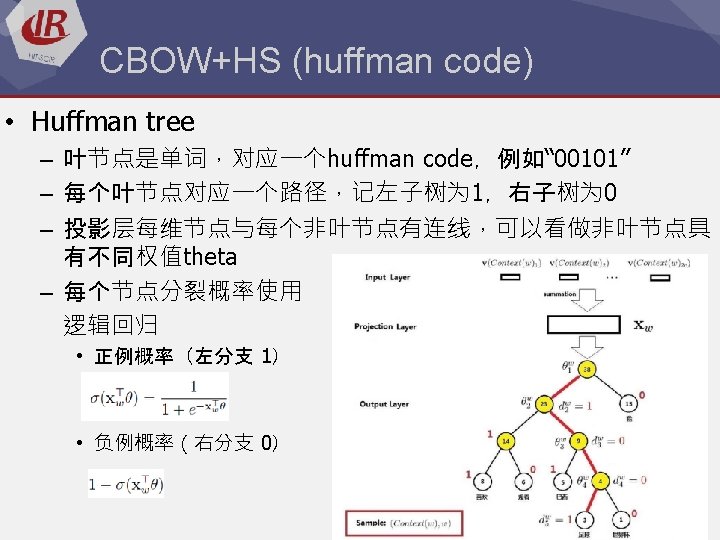

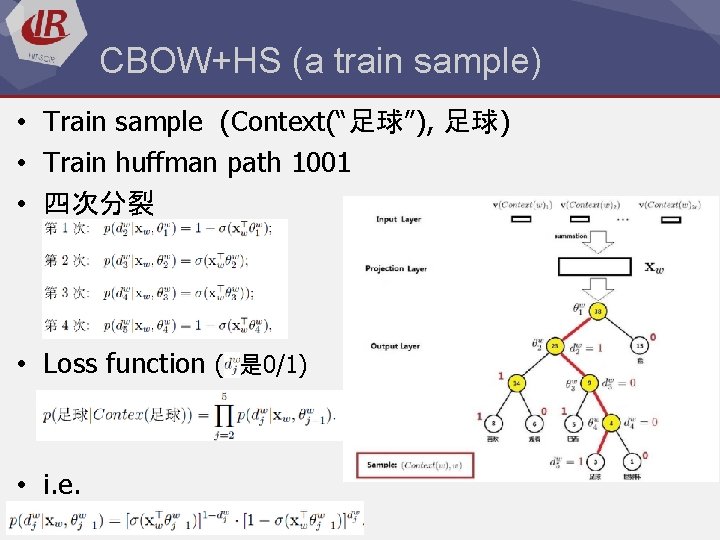

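CBOW+HS (one training sample)

- Training sample: (Context("足球"), 足球), where 足球 means "football"
- Huffman path to train: 1001
- Four branchings, one at each inner node on the path
- Loss function (each Huffman code bit is 0/1)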
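The per-sample objective can be sketched as the sum of four binary log-probabilities, one per inner node on the path 1001. The sketch makes several assumptions that the extracted slide text does not confirm: a code bit of 0 is scored with σ(x·θ) and a bit of 1 with 1 − σ(x·θ), `x_w` stands for the summed context vectors, and all numeric values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def path_log_likelihood(x_w, thetas, code):
    """Log-probability of reaching a leaf: one binary decision per inner node.

    Assumed convention: bit 0 -> sigmoid(score), bit 1 -> 1 - sigmoid(score).
    """
    total = 0.0
    for theta, bit in zip(thetas, code):
        score = sum(a * b for a, b in zip(x_w, theta))
        p_bit = sigmoid(score) if bit == 0 else 1.0 - sigmoid(score)
        total += math.log(p_bit)
    return total

x_w = [0.1, -0.2, 0.3]                      # hypothetical context vector
thetas = [[0.0, 0.0, 0.0] for _ in range(4)]  # one parameter vector per inner node
code = [1, 0, 0, 1]                          # Huffman code of the target word

log_likelihood = path_log_likelihood(x_w, thetas, code)
print(log_likelihood)
```

With all node parameters at zero, every decision has probability 0.5, so the value is simply 4 · log(0.5).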

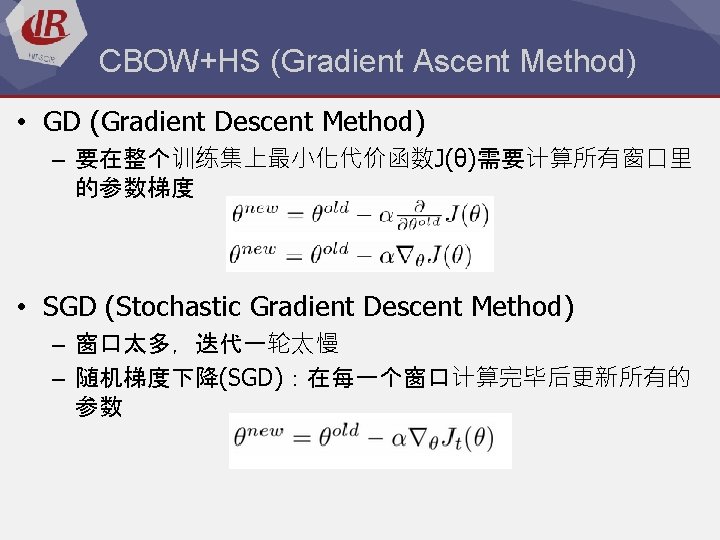

CBOW+HS (gradient ascent method)

- theta update (gradient with respect to theta)
- word_vector update (gradient with respect to word_vector)
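A minimal sketch of one gradient-ascent step, under assumptions: the update form follows the commonly used word2vec-style rule g = lr · (1 − bit − σ(x·θ)), and the learning rate, vectors and helper names are hypothetical rather than taken from the slides. Each inner-node vector theta is moved along x_w, and the gradient for the word vectors is accumulated in `e`, which would then be added to every context word vector.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def path_log_likelihood(x_w, thetas, code):
    """Assumed convention: bit 0 -> sigmoid(score), bit 1 -> 1 - sigmoid(score)."""
    total = 0.0
    for theta, bit in zip(thetas, code):
        s = sigmoid(dot(x_w, theta))
        total += math.log(s if bit == 0 else 1.0 - s)
    return total

def hs_update(x_w, thetas, code, lr):
    """Update each theta in place; return the accumulated word-vector gradient."""
    e = [0.0] * len(x_w)
    for theta, bit in zip(thetas, code):
        g = lr * (1 - bit - sigmoid(dot(x_w, theta)))
        for k in range(len(x_w)):
            e[k] += g * theta[k]      # word-vector gradient uses theta before its update
        for k in range(len(x_w)):
            theta[k] += g * x_w[k]    # theta update
    return e

x_w = [0.1, -0.2, 0.3]  # hypothetical summed context vector
thetas = [[0.1, 0.0, -0.1], [0.0, 0.2, 0.0], [-0.1, 0.1, 0.0], [0.05, 0.05, 0.05]]
code = [1, 0, 0, 1]

ll_before = path_log_likelihood(x_w, thetas, code)
e = hs_update(x_w, thetas, code, lr=0.1)
ll_after = path_log_likelihood(x_w, thetas, code)
print(ll_before, ll_after, e)
```

Holding x_w fixed, the log-likelihood after the theta update is higher than before, which is what one ascent step is expected to achieve.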

CBOW+HS (hierarchical)

- No hierarchical structure
  - Every word in the output layer has to be scored, so the time complexity is O(|V|)
- Binary tree
  - O(log_2 |V|)
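To illustrate why a binary tree reduces the per-word cost, the sketch below builds a Huffman tree over a toy vocabulary and reports each word's code length, that is, the number of binary decisions needed to reach it. The word frequencies are hypothetical, and the helper is an illustrative construction with `heapq`, not the implementation used by word2vec.

```python
import heapq
import itertools
import math

def huffman_code_lengths(freqs):
    """Return the Huffman code length (tree depth) of every word."""
    tie_breaker = itertools.count()  # keeps heap entries comparable on equal frequency
    heap = [(f, next(tie_breaker), [w]) for w, f in freqs.items()]
    heapq.heapify(heap)
    lengths = {w: 0 for w in freqs}
    while len(heap) > 1:
        f1, _, words1 = heapq.heappop(heap)
        f2, _, words2 = heapq.heappop(heap)
        for w in words1 + words2:
            lengths[w] += 1  # every word under the merged node gets one level deeper
        heapq.heappush(heap, (f1 + f2, next(tie_breaker), words1 + words2))
    return lengths

# Hypothetical word counts, for illustration only.
freqs = {"the": 50, "of": 30, "football": 10, "huffman": 5, "zebra": 5}
lengths = huffman_code_lengths(freqs)
balanced_depth = math.ceil(math.log2(len(freqs)))

print(lengths)
print("balanced tree depth:", balanced_depth)
```

Frequent words end up with short codes, so the average number of decisions per training word stays small even though rare words sit deeper in the tree.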

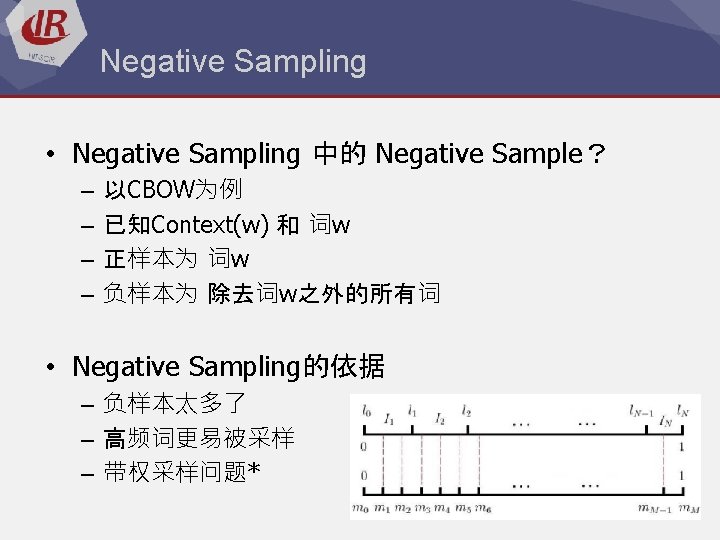

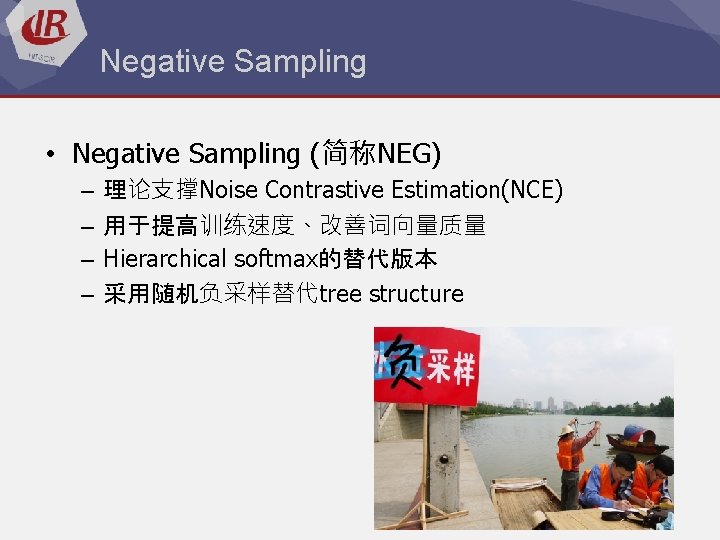

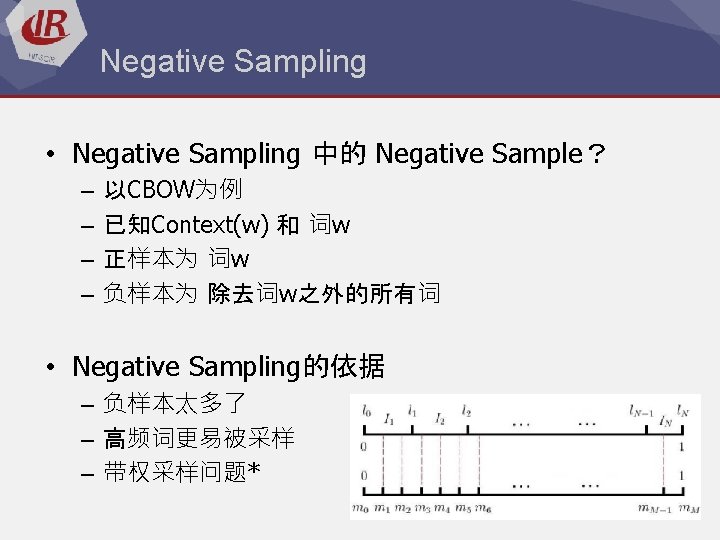

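Negative Sampling

- Negative Sampling (NEG for short)
  - Theoretical basis: Noise Contrastive Estimation (NCE)
  - Used to speed up training and improve the quality of the word vectors
  - An alternative to hierarchical softmax
  - Uses random negative sampling in place of the tree structure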
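A minimal sketch of the idea, under stated assumptions: negatives are drawn from the unigram distribution raised to the power 0.75 (the choice reported by Mikolov et al., not stated on this slide), and the objective for one sample is log σ(v'_pos · v) plus the sum of log σ(−v'_neg · v) over the sampled negatives. Word counts, vectors and function names are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sample_negatives(freqs, positive, k, rng):
    """Draw k negative words with probability proportional to count ** 0.75."""
    candidates = [w for w in freqs if w != positive]
    weights = [freqs[w] ** 0.75 for w in candidates]
    return rng.choices(candidates, weights=weights, k=k)

def neg_objective(v_in, out_vectors, positive, negatives):
    """NEG objective for one sample (to be maximised)."""
    value = math.log(sigmoid(dot(out_vectors[positive], v_in)))
    for w in negatives:
        value += math.log(sigmoid(-dot(out_vectors[w], v_in)))
    return value

# Hypothetical counts and vectors, for illustration only.
freqs = {"the": 50, "of": 30, "football": 10, "huffman": 5, "zebra": 5}
out_vectors = {w: [0.0, 0.0, 0.0] for w in freqs}
v_in = [0.1, -0.2, 0.3]

rng = random.Random(0)
negatives = sample_negatives(freqs, positive="football", k=2, rng=rng)
objective = neg_objective(v_in, out_vectors, "football", negatives)
print(negatives, objective)
```

Only k + 1 output vectors are touched per sample, instead of all |V| words or a whole tree path.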

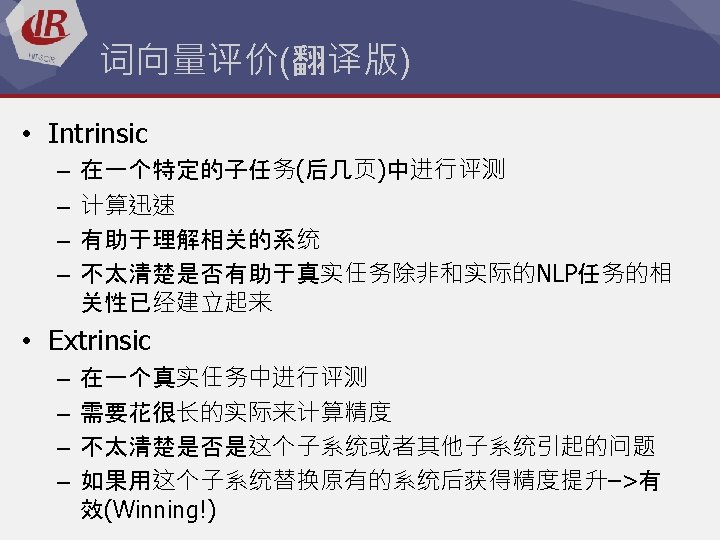

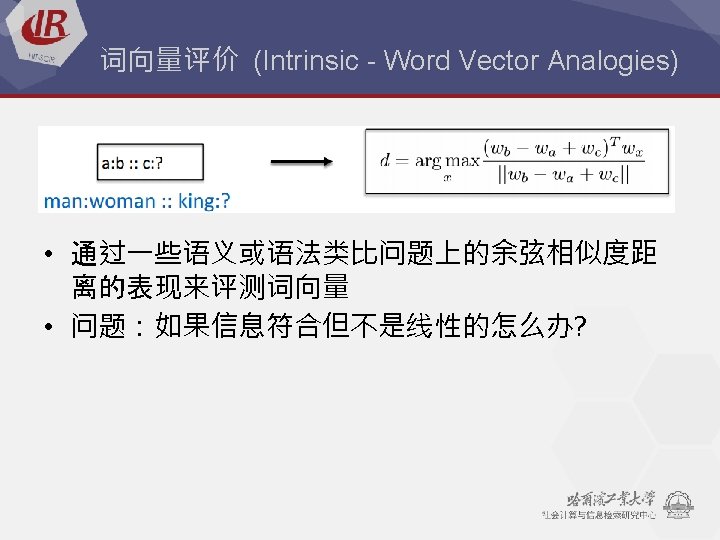

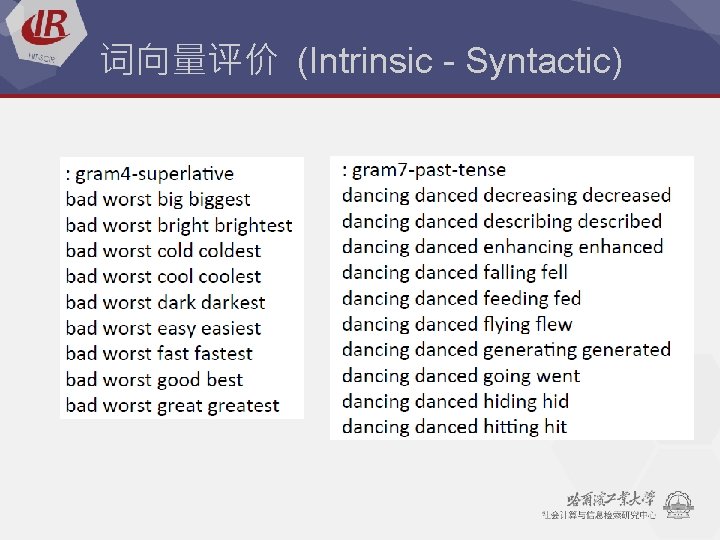

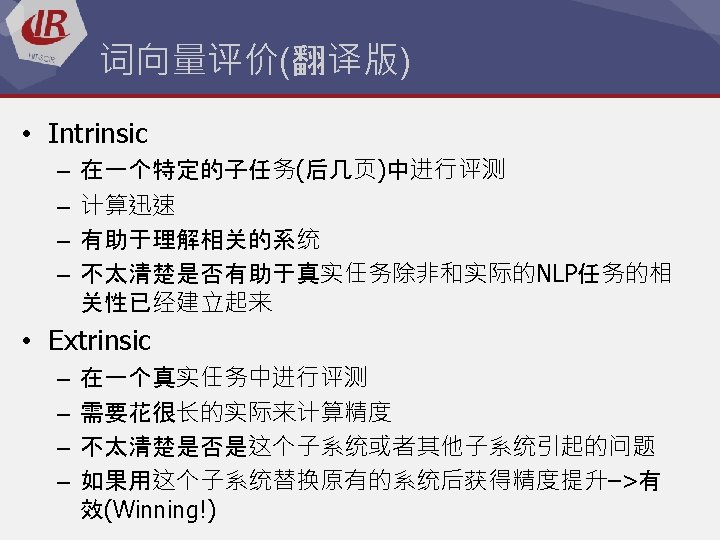

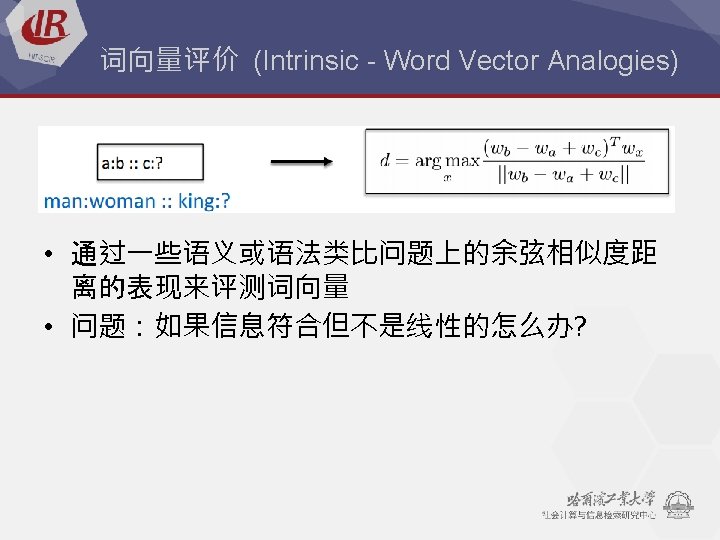

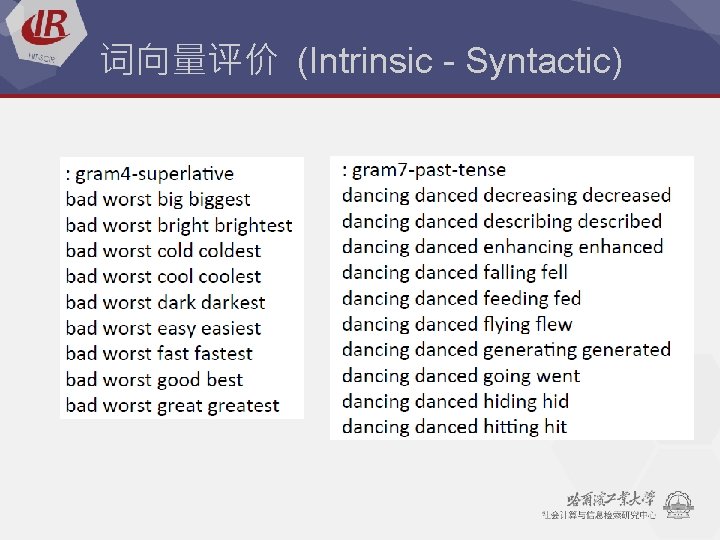

Word vector evaluation (Intrinsic - Syntactic)

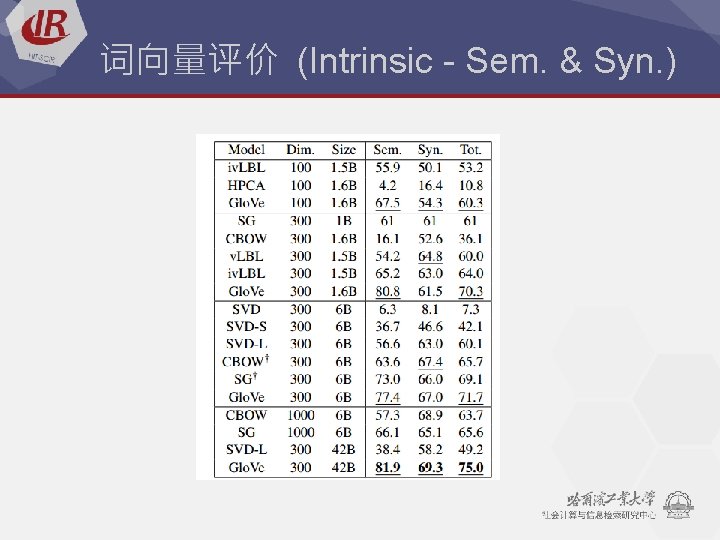

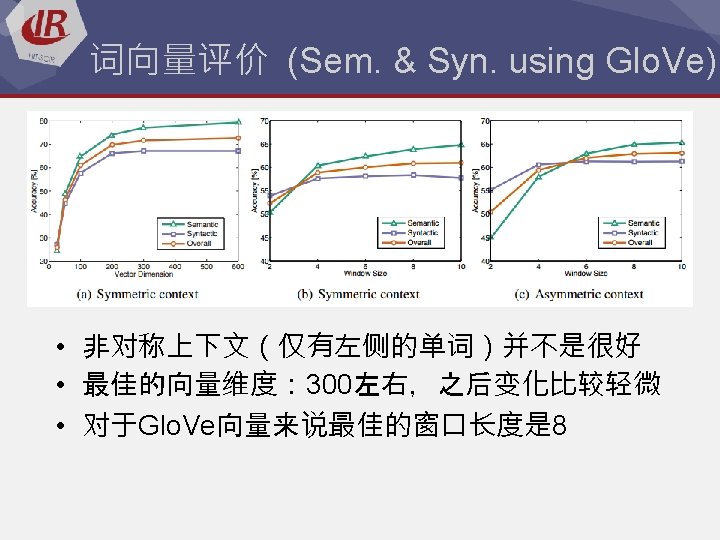

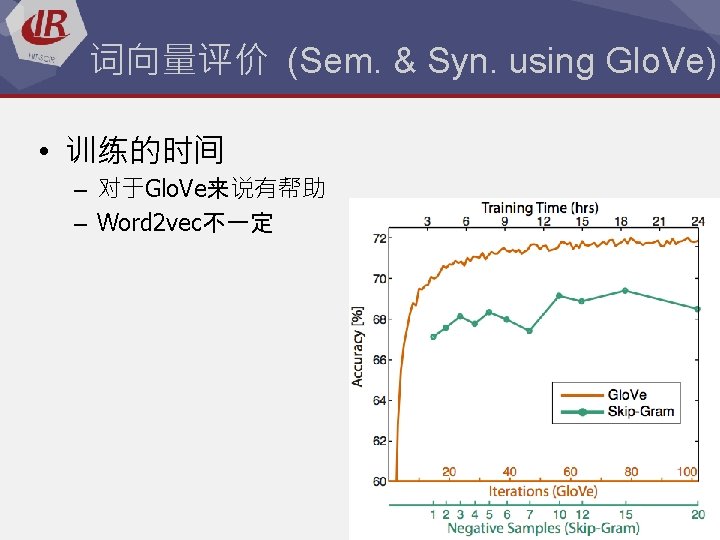

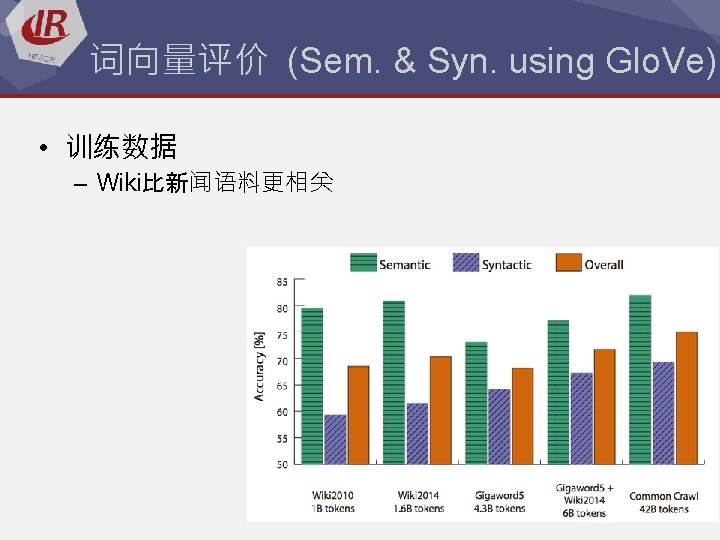

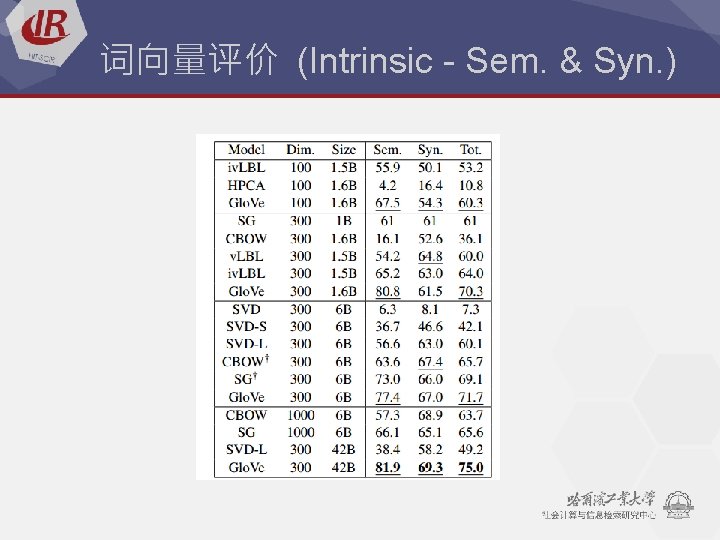

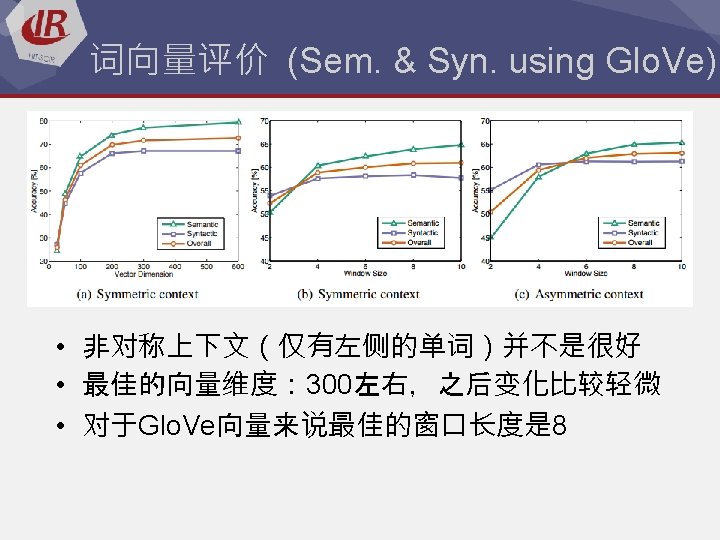

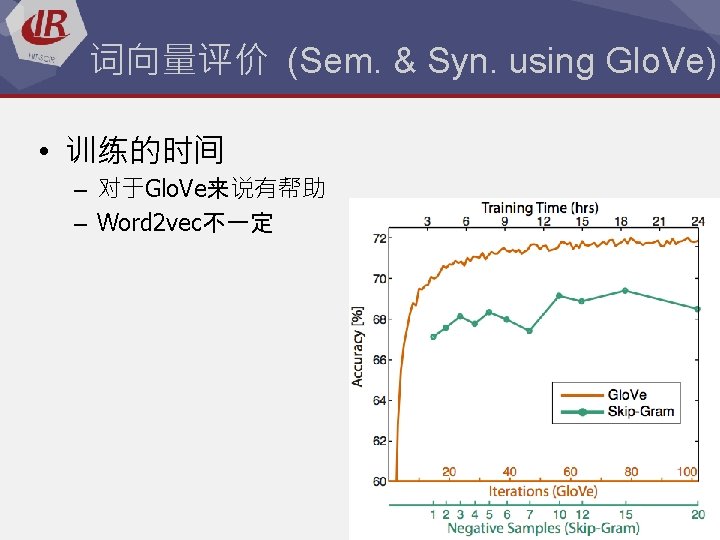

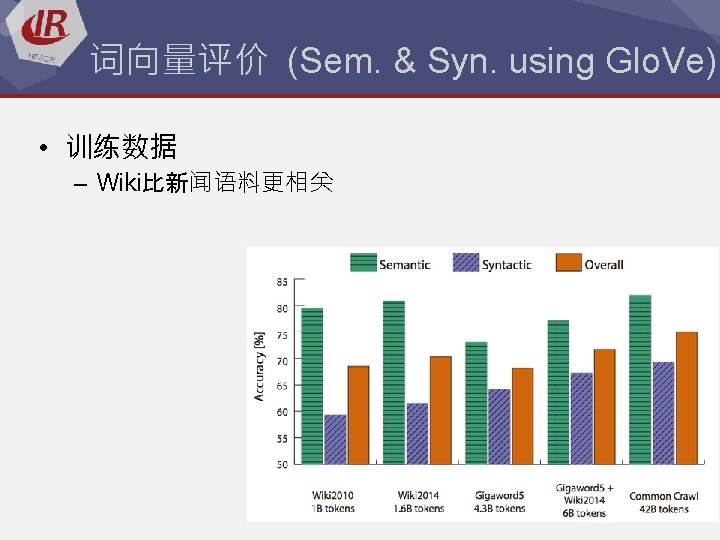

Word vector evaluation (Intrinsic - Sem. & Syn.)
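Semantic and syntactic intrinsic evaluations of this kind are commonly run as word analogy tasks ("a is to b as c is to ?"). The sketch below assumes the usual vector-offset method with cosine similarity. The two-dimensional vectors are made up so that the arithmetic is easy to follow and do not come from any trained model.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def analogy(vectors, a, b, c):
    """Return the word closest to vec(b) - vec(a) + vec(c), excluding a, b and c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

# Made-up 2-d vectors: first axis ~ "royalty", second axis ~ "gender".
vectors = {
    "man": [0.0, 1.0],
    "woman": [0.0, -1.0],
    "king": [1.0, 1.0],
    "queen": [1.0, -1.0],
    "apple": [-1.0, 0.0],
}

answer = analogy(vectors, "man", "king", "woman")
print(answer)
```

Accuracy on such a task is the fraction of analogy questions for which the returned word matches the expected one.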

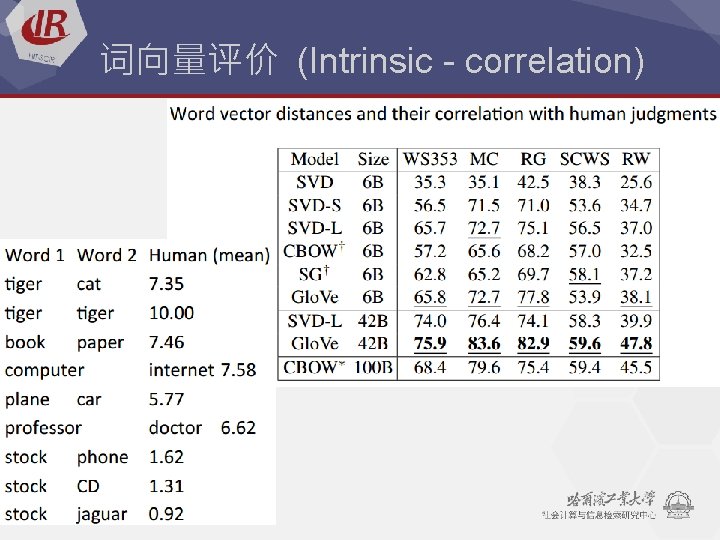

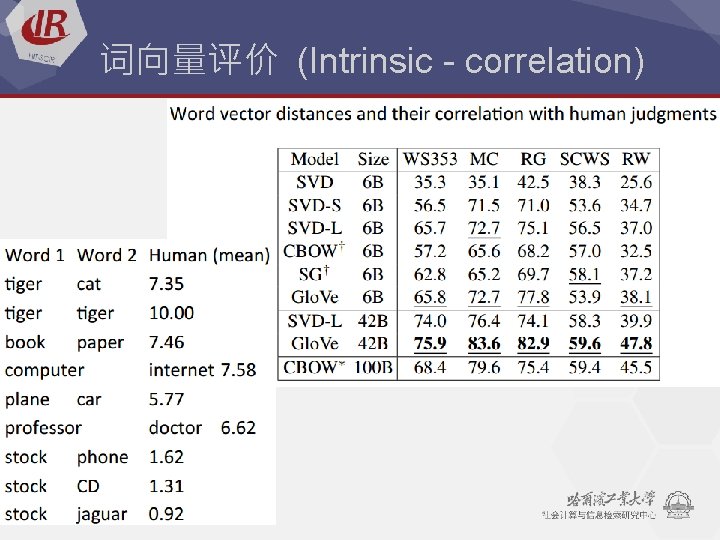

Word vector evaluation (Intrinsic - correlation)
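Correlation-based evaluation typically compares the model's similarity scores for word pairs with human similarity ratings, often via Spearman rank correlation. The sketch below assumes that setup. Both score lists are hypothetical, and the simple rank formula used here is only valid when there are no tied values.

```python
def ranks(values):
    """Rank values from 1 (smallest) to n (largest); assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result

def spearman(xs, ys):
    """Spearman rank correlation for tie-free data."""
    rx, ry = ranks(xs), ranks(ys)
    n = len(xs)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d2 / (n * (n * n - 1))

# Hypothetical scores for four word pairs, for illustration only.
human_ratings = [9.0, 7.5, 3.0, 1.0]
model_cosines = [0.8, 0.9, 0.2, 0.1]

rho = spearman(human_ratings, model_cosines)
print(rho)
```

A value close to 1 means the model orders the word pairs by similarity in nearly the same way as the human raters.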

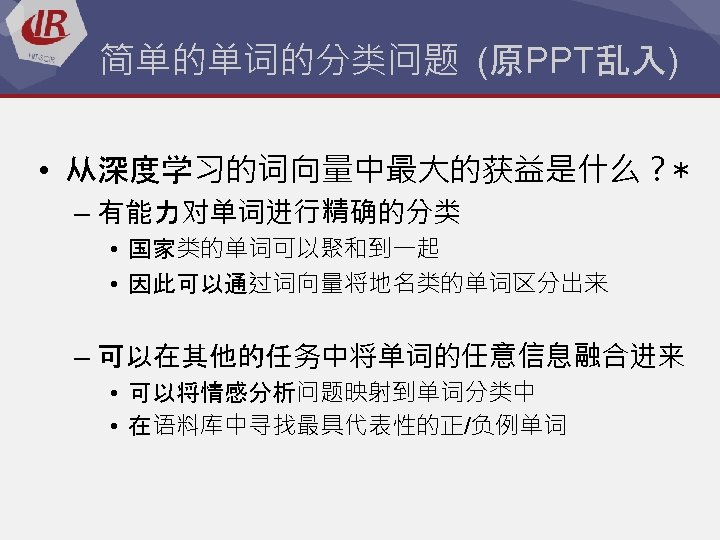

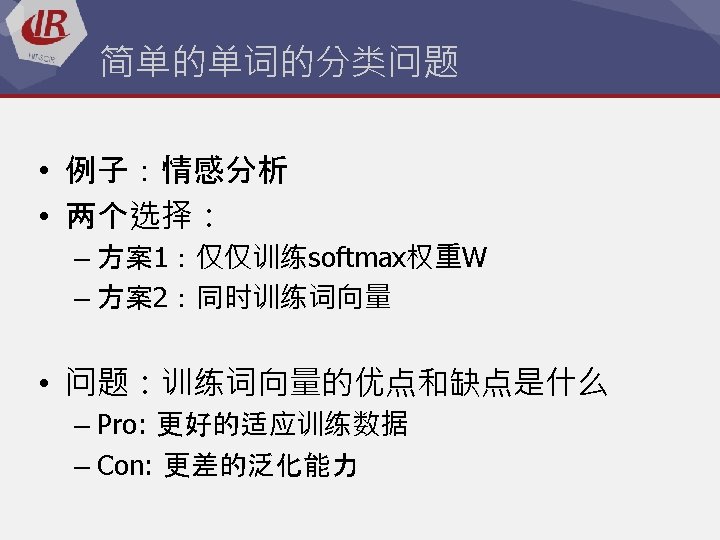

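A simple word classification problem: visualizing the sentiment distribution of the trained word vectors

Words shown in the plot: Fun, Enjoyable, Worth, Right, Blarblar, dull, boring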

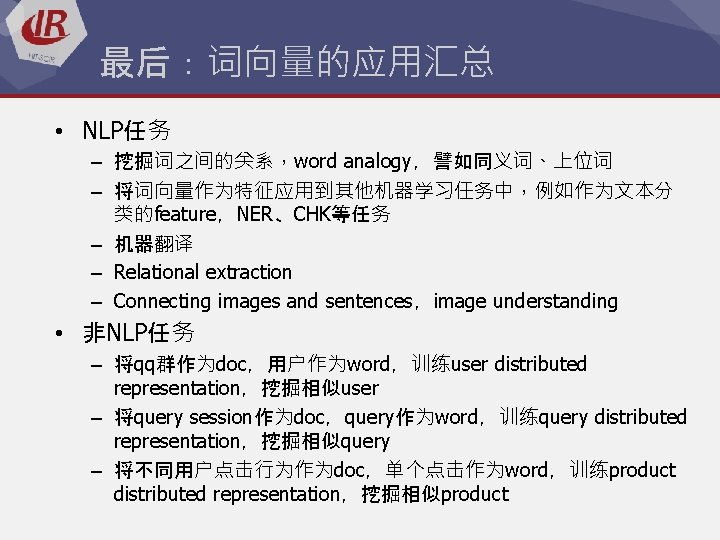

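Finally: a summary of word vector applications

- NLP tasks
  - Mining relations between words (word analogy), such as synonyms and hypernyms
  - Using word vectors as features in other machine learning tasks, e.g. as features for text classification, NER, chunking (CHK), and similar tasks
  - Machine translation
  - Relation extraction
  - Connecting images and sentences, image understanding
- Non-NLP tasks
  - Treat each QQ group as a doc and each user as a word, train user distributed representations, and find similar users
  - Treat each query session as a doc and each query as a word, train query distributed representations, and find similar queries
  - Treat each user's click history as a doc and each click as a word, train product distributed representations, and find similar products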

Thanks Q&A