- Slides: 52

Advanced topics Andrew Ng

Outline
- Self-taught learning
- Learning feature hierarchies (Deep learning)
- Scaling up

Self-taught learning

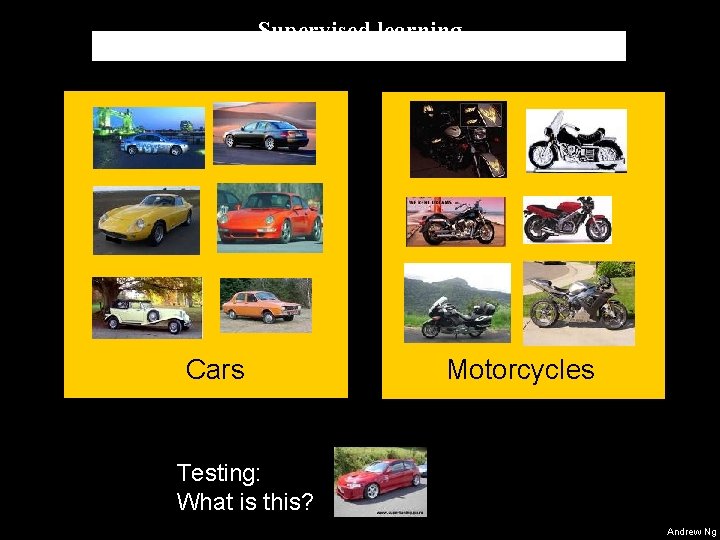

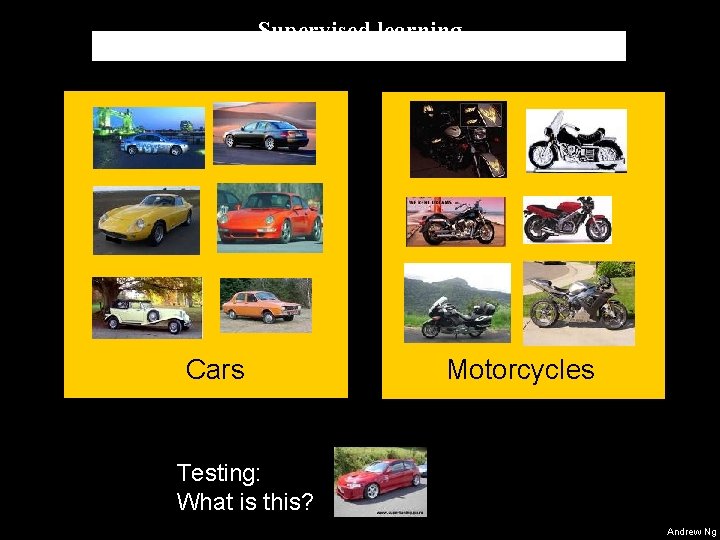

Supervised learning
Labeled training images: Cars, Motorcycles. Testing: What is this?

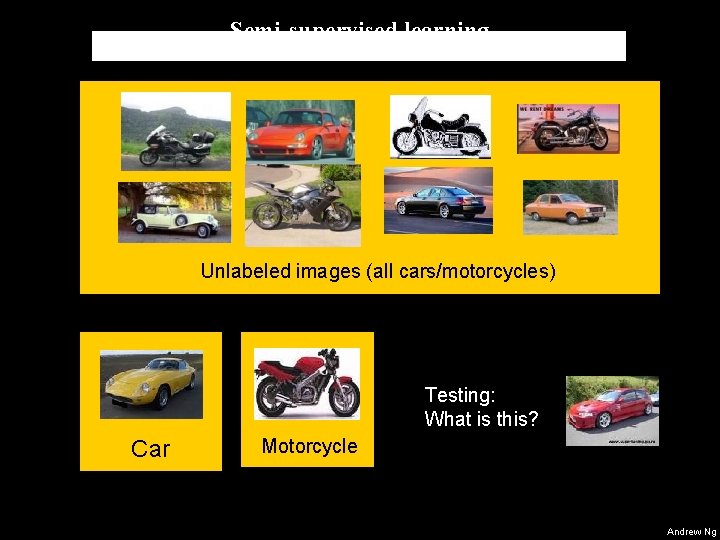

Semi-supervised learning
Unlabeled images (all cars/motorcycles), plus labeled examples: Car, Motorcycle. Testing: What is this?

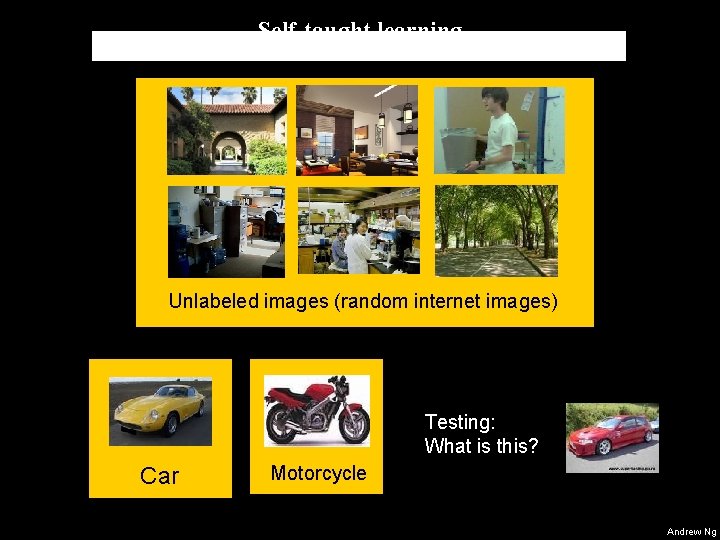

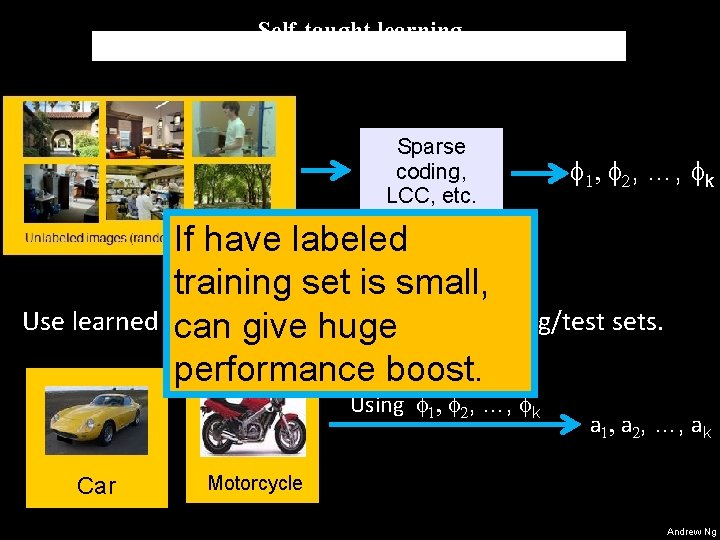

Self-taught learning
Unlabeled images (random internet images), plus labeled examples: Car, Motorcycle. Testing: What is this?

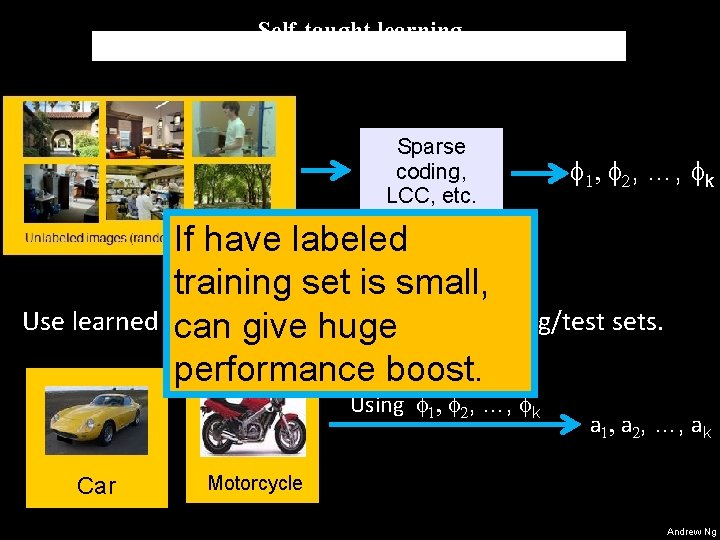

Self-taught learning
Sparse coding, LCC, etc. learn features $f_1, f_2, \ldots, f_k$ from the unlabeled images.
Use learned $f_1, f_2, \ldots, f_k$ to represent training/test sets: using $f_1, f_2, \ldots, f_k$, each labeled image (Car, Motorcycle) is represented by activations $a_1, a_2, \ldots, a_k$.
If the labeled training set is small, this can give a huge performance boost.
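The encoding step can be sketched as follows. This is a minimal illustration, not the tutorial's code: it assumes the features are the columns of a matrix `F`, uses random unit-norm columns as a stand-in for learned bases, and computes the activations with ISTA (iterative soft-thresholding), which is one common solver for the L1-penalized objective. The names `sparse_code`, `F`, and `lam` are hypothetical.

```python
import numpy as np

def sparse_code(x, F, lam=0.1, n_iter=200):
    """Approximately solve min_a 0.5*||x - F a||^2 + lam*||a||_1 with ISTA.

    F has shape (n_inputs, k): one basis f_j per column.
    """
    step = 1.0 / (np.linalg.norm(F, 2) ** 2)   # 1 / Lipschitz constant of the gradient
    a = np.zeros(F.shape[1])
    for _ in range(n_iter):
        grad = F.T @ (F @ a - x)               # gradient of the reconstruction term
        z = a - step * grad
        a = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft-threshold
    return a

rng = np.random.default_rng(0)
F = rng.standard_normal((16, 32))
F /= np.linalg.norm(F, axis=0)        # unit-norm bases (stand-in for learned ones)
x = 1.5 * F[:, 3] - 0.8 * F[:, 20]    # an input built from two bases
a = sparse_code(x, F)                 # activations a_1..a_k used as the new features
```

The vector `a` would then replace the raw input `x` when training a classifier on the small labeled set.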

Learning feature hierarchies/Deep learning

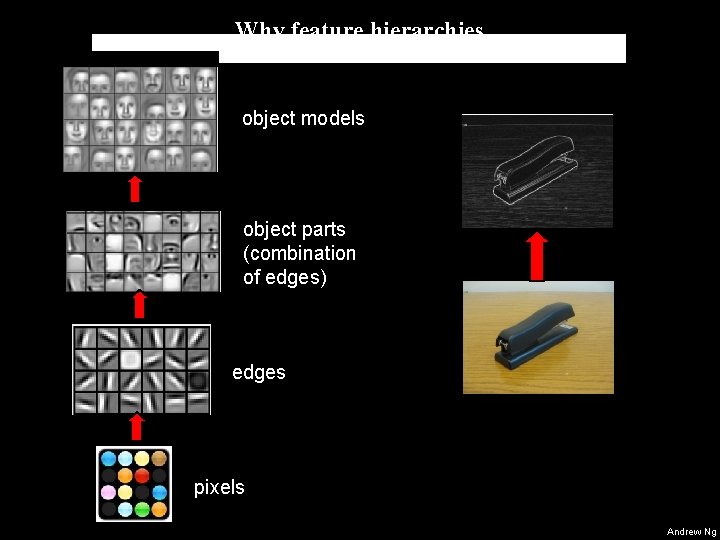

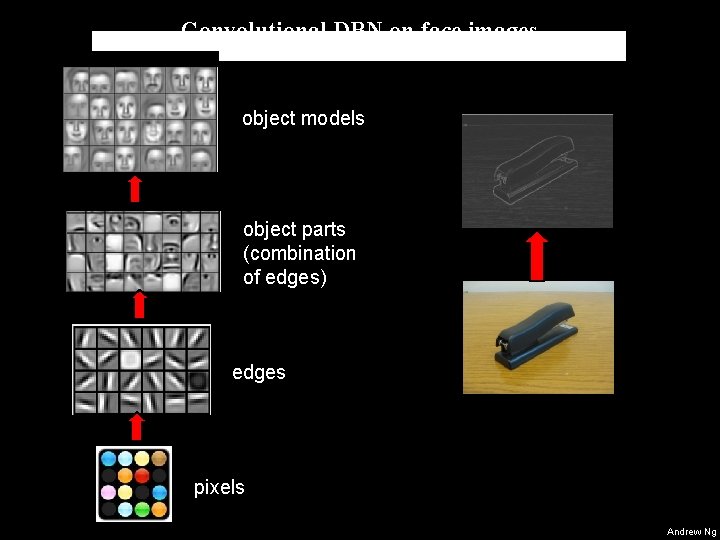

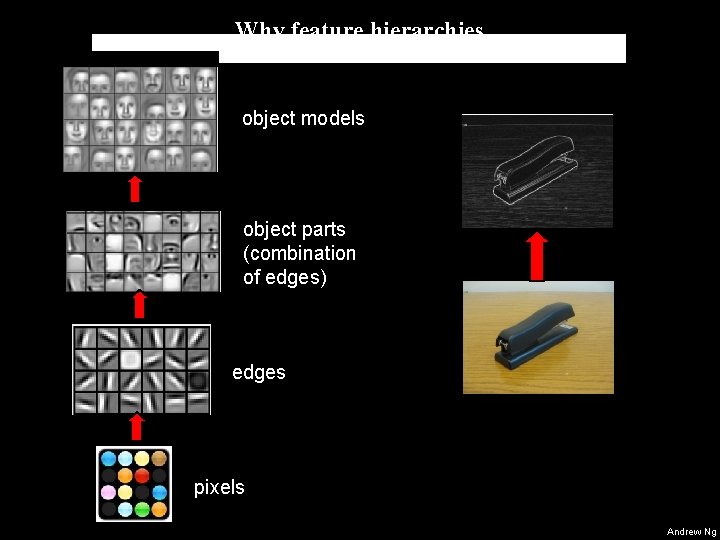

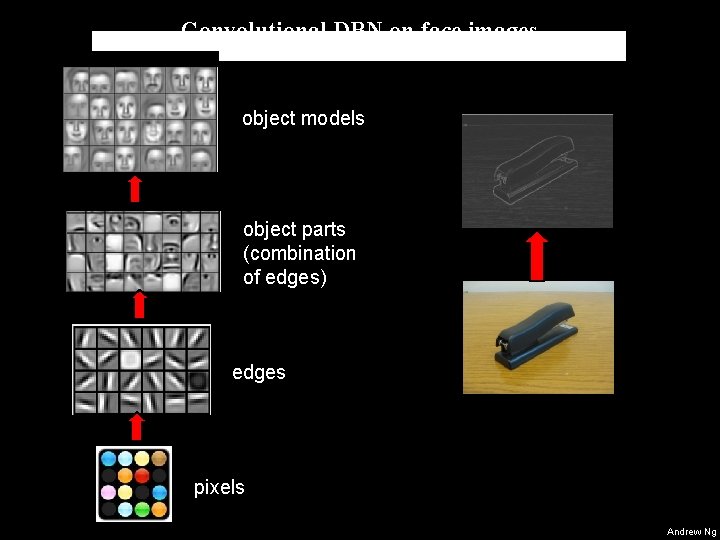

Why feature hierarchies
From top to bottom: object models, object parts (combination of edges), edges, pixels.

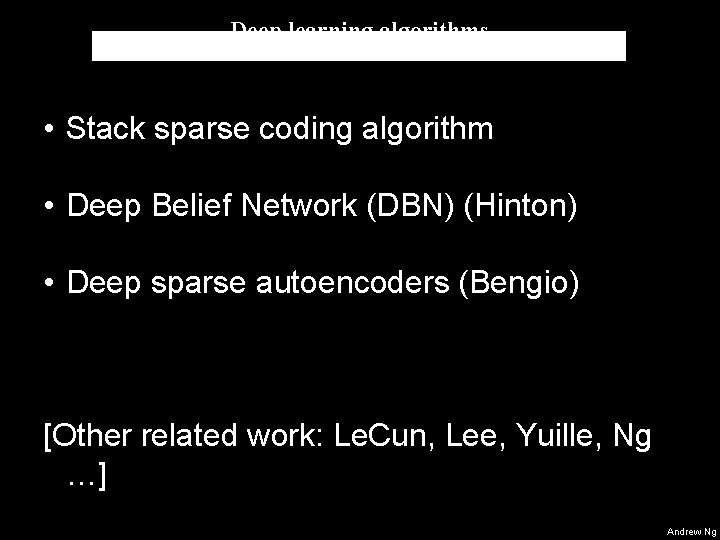

Deep learning algorithms
- Stack sparse coding algorithm
- Deep Belief Network (DBN) (Hinton)
- Deep sparse autoencoders (Bengio)

[Other related work: LeCun, Lee, Yuille, Ng …]

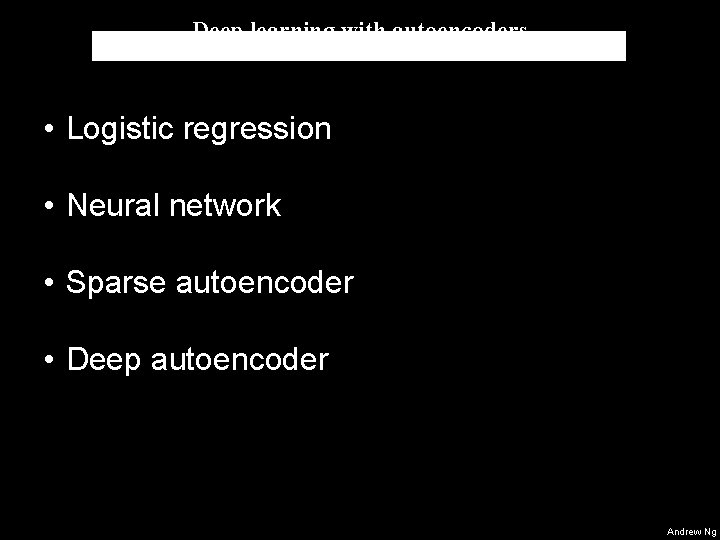

Deep learning with autoencoders
- Logistic regression
- Neural network
- Sparse autoencoder
- Deep autoencoder

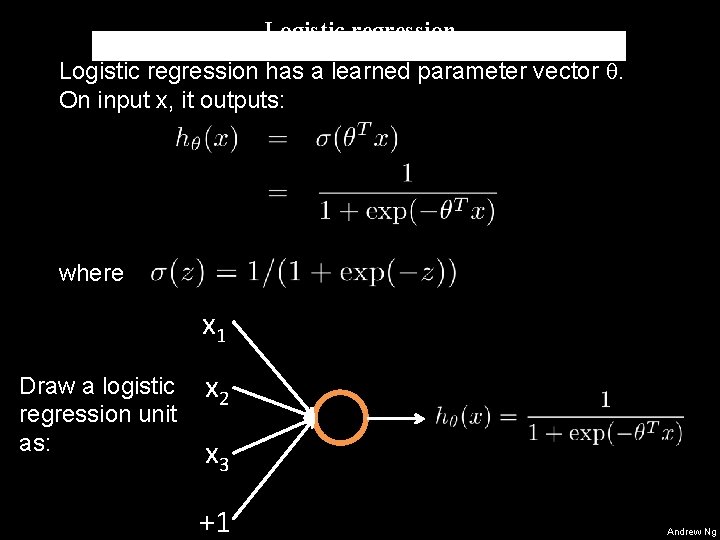

Logistic regression has a learned parameter vector $\theta$. On input $x$, it outputs:
$$h_\theta(x) = \frac{1}{1 + \exp(-\theta^T x)}$$
Draw a logistic regression unit as a single node with inputs $x_1, x_2, x_3$ and the intercept input $+1$.
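A single logistic unit can be sketched in a few lines. The sketch assumes the standard sigmoid form of logistic regression and folds the $+1$ intercept input into the last entry of the parameter vector; the function name and the example values are illustrative only.

```python
import numpy as np

def logistic_unit(theta, x):
    """Output of one logistic unit: 1 / (1 + exp(-theta^T [x, 1]))."""
    x_with_bias = np.append(x, 1.0)   # the "+1" input from the diagram
    return 1.0 / (1.0 + np.exp(-theta @ x_with_bias))

theta = np.array([0.5, -1.0, 2.0, 0.0])   # last entry multiplies the +1 input
x = np.array([1.0, 2.0, 0.75])
h = logistic_unit(theta, x)               # theta^T [x, 1] = 0 here, so h = 0.5
```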

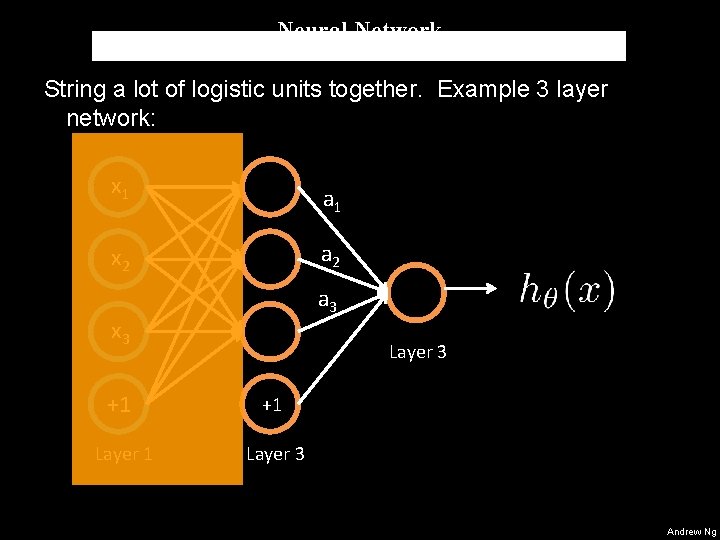

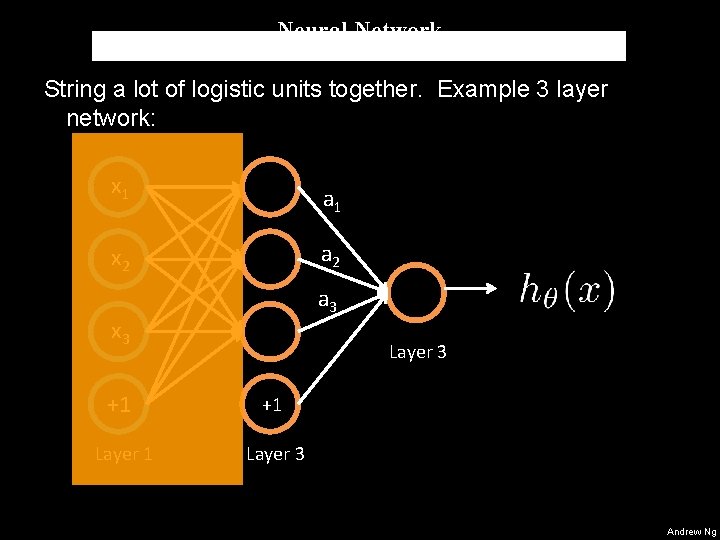

Neural Network
String a lot of logistic units together. Example 3-layer network:
[Diagram: inputs $x_1, x_2, x_3$ and bias $+1$ (Layer 1); hidden units $a_1, a_2, a_3$ and bias $+1$ (Layer 2); output unit (Layer 3).]

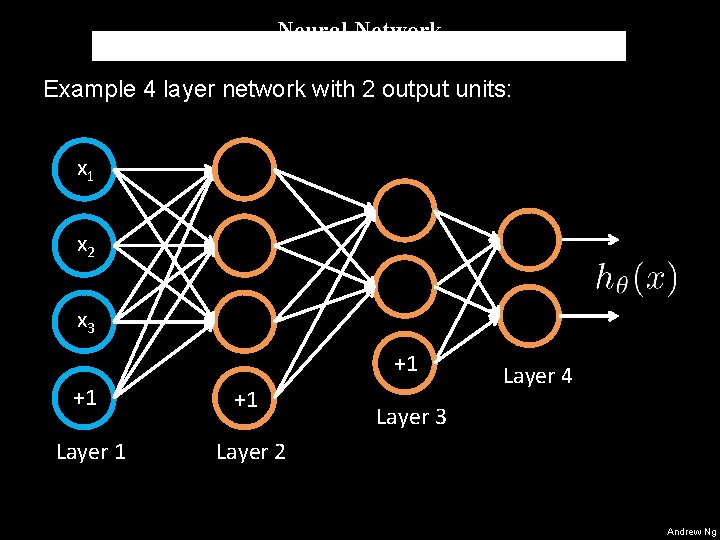

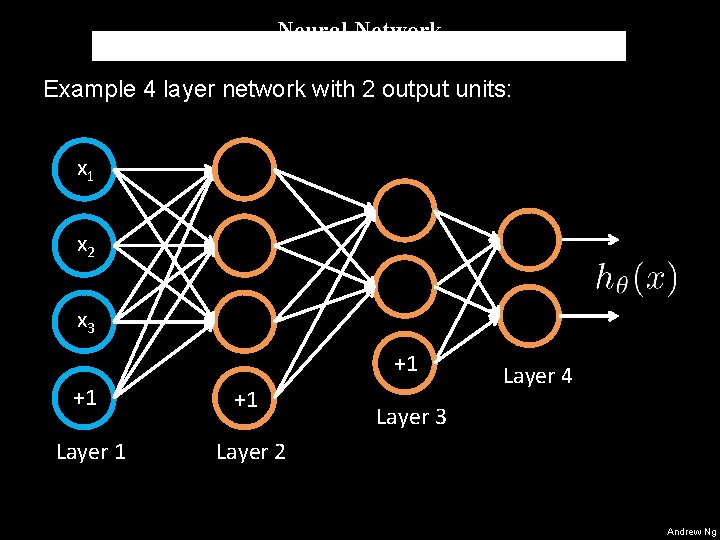

Neural Network
Example 4-layer network with 2 output units:
[Diagram: inputs $x_1, x_2, x_3$ and bias $+1$; hidden Layers 2 and 3, each with a bias $+1$; two output units in Layer 4.]
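Stringing logistic units together amounts to repeating the same matrix-vector step layer by layer. The forward pass below is a sketch for a 4-layer network with 2 output units; the hidden layer sizes and the random weights are assumptions made only so the example runs.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, weights, biases):
    """Return the activations of every layer for input x."""
    activations = [x]
    for W, b in zip(weights, biases):
        # Each row of W is the parameter vector of one logistic unit;
        # b plays the role of the weights on the +1 bias inputs.
        activations.append(sigmoid(W @ activations[-1] + b))
    return activations

rng = np.random.default_rng(0)
sizes = [3, 3, 3, 2]   # 3 inputs, two hidden layers (sizes assumed), 2 outputs
weights = [rng.standard_normal((m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(m) for m in sizes[1:]]
acts = forward(np.array([0.2, -0.4, 1.0]), weights, biases)
```

`acts[-1]` holds the two output units; `acts[1]` and `acts[2]` hold the hidden layers.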

![Neural Network example Courtesy of Yann Le Cun Andrew Ng Neural Network example [Courtesy of Yann Le. Cun] Andrew Ng](https://slidetodoc.com/presentation_image_h/cca58a4533294ba40bed18bd6b24d197/image-15.jpg)

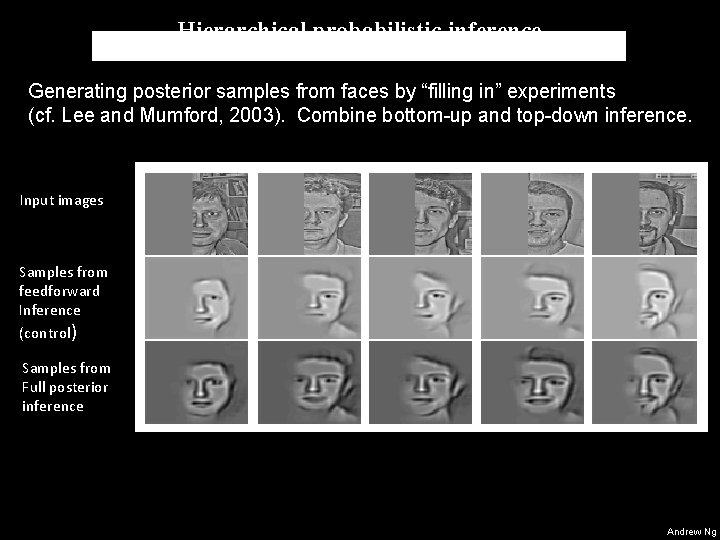

Neural Network example [Courtesy of Yann Le. Cun] Andrew Ng

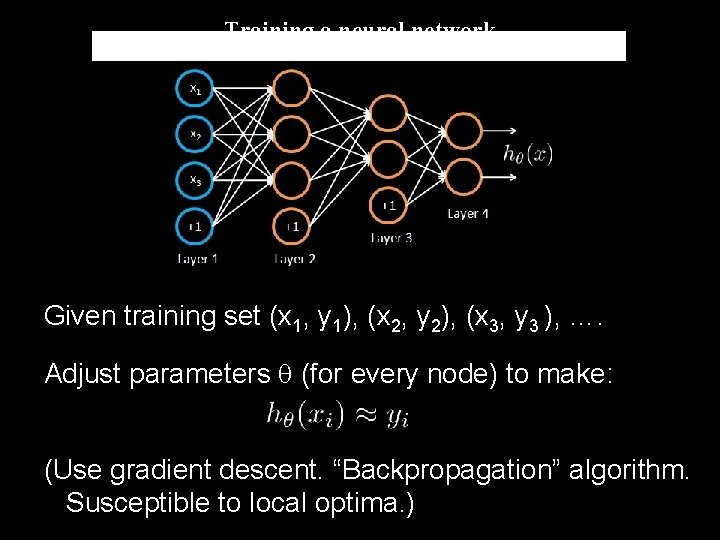

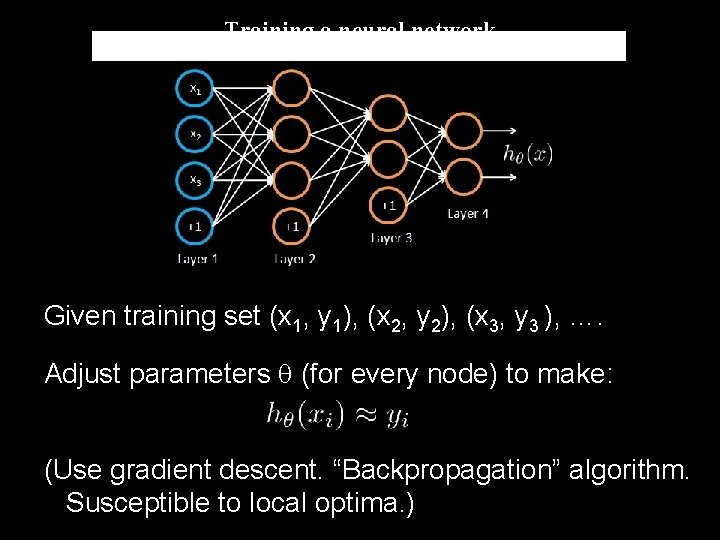

Training a neural network
Given training set $(x_1, y_1), (x_2, y_2), (x_3, y_3), \ldots$, adjust parameters $\theta$ (for every node) to make:
$$h_\theta(x_i) \approx y_i$$
(Use gradient descent. "Backpropagation" algorithm. Susceptible to local optima.)
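Gradient descent with backpropagation can be sketched for a small 3-layer network. The squared-error loss, the toy dataset, the hidden layer size, and the learning rate are all assumptions chosen for illustration; the slide does not specify them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_and_grads(params, X, y):
    """Squared-error loss of a 3-layer network and its backpropagated gradients."""
    W1, b1, W2, b2 = params
    A = sigmoid(X @ W1 + b1)                    # hidden activations, shape (m, hidden)
    h = sigmoid(A @ W2 + b2)                    # network outputs, shape (m,)
    m = len(y)
    loss = 0.5 * np.mean((h - y) ** 2)
    d_out = (h - y) * h * (1 - h) / m           # error signal at the output unit
    d_hid = np.outer(d_out, W2) * A * (1 - A)   # error propagated back to hidden units
    grads = (X.T @ d_hid, d_hid.sum(axis=0), A.T @ d_out, d_out.sum())
    return loss, grads

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)       # toy labels (assumed task)
params = [0.1 * rng.standard_normal((2, 3)), np.zeros(3),
          0.1 * rng.standard_normal(3), 0.0]

initial_loss, _ = loss_and_grads(params, X, y)
for _ in range(500):                            # plain batch gradient descent
    _, grads = loss_and_grads(params, X, y)
    params = [p - 1.0 * g for p, g in zip(params, grads)]
final_loss, _ = loss_and_grads(params, X, y)
```

Because the loss is non-convex in the parameters, a different initialization can end in a different local optimum.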

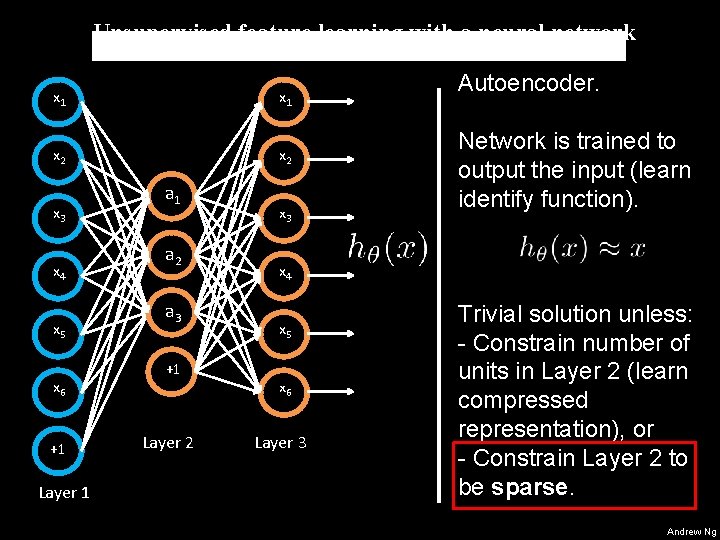

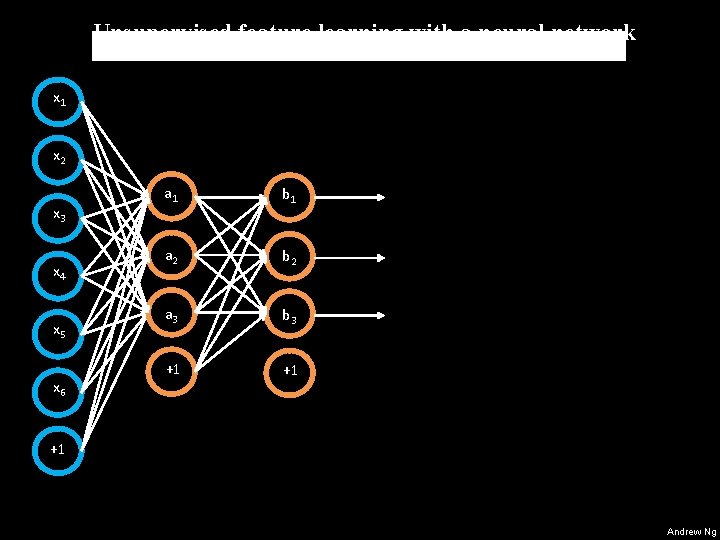

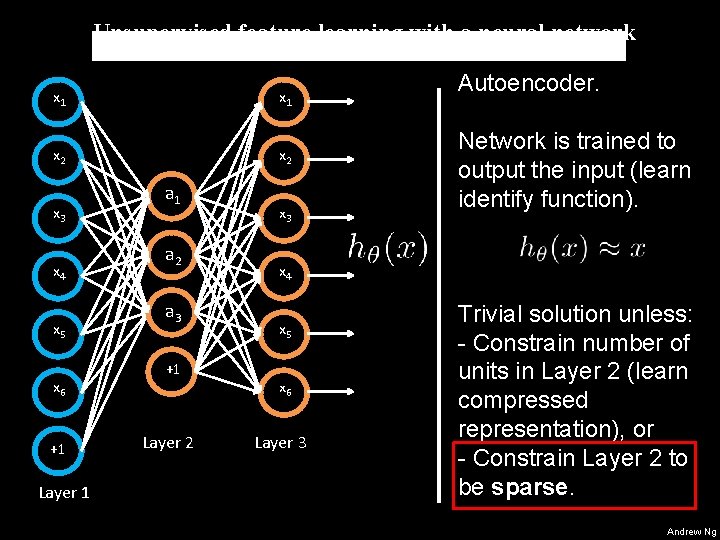

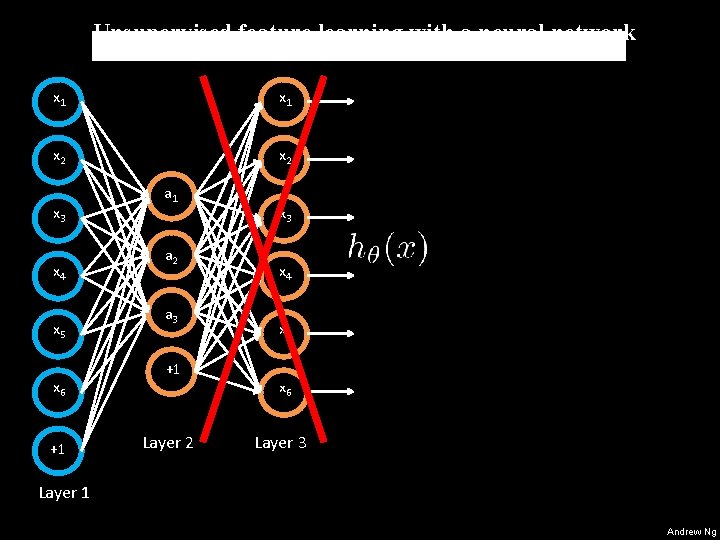

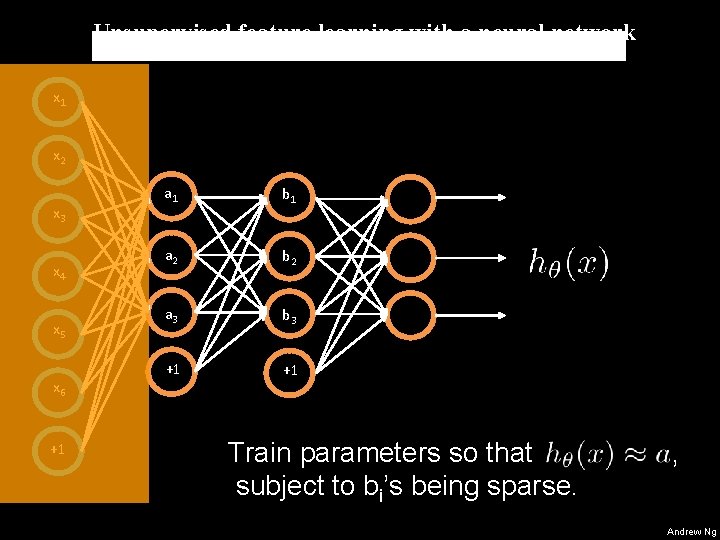

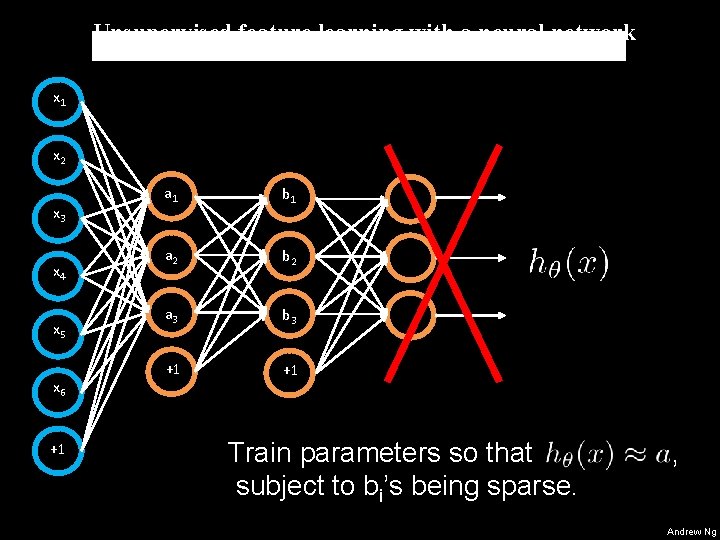

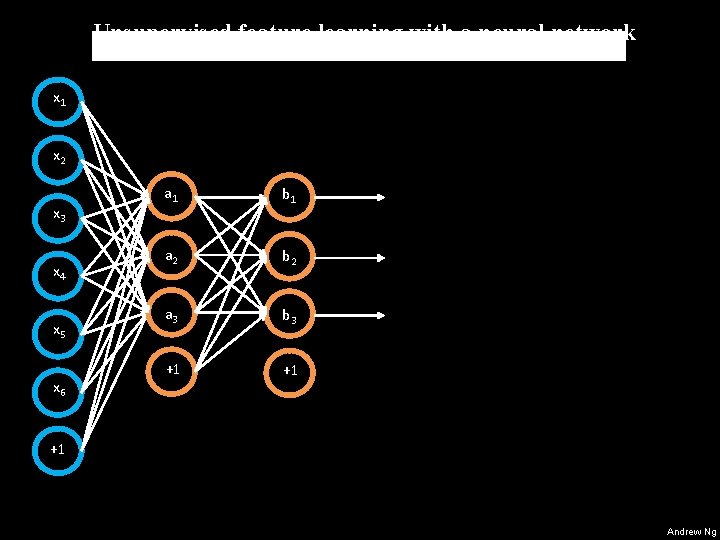

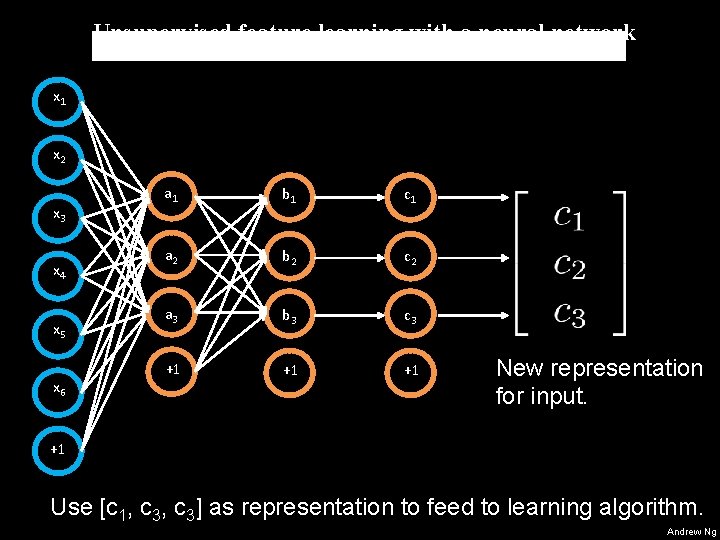

Unsupervised feature learning with a neural network
Autoencoder. Network is trained to output the input (learn identity function).
[Diagram: inputs $x_1, \ldots, x_6$ and bias $+1$ (Layer 1); hidden units $a_1, a_2, a_3$ and bias $+1$ (Layer 2); outputs $x_1, \ldots, x_6$ (Layer 3).]
Trivial solution unless:
- Constrain number of units in Layer 2 (learn compressed representation), or
- Constrain Layer 2 to be sparse.

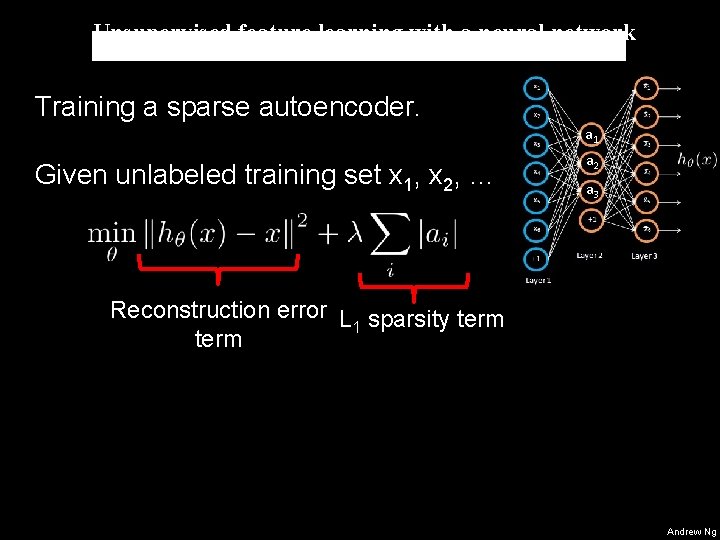

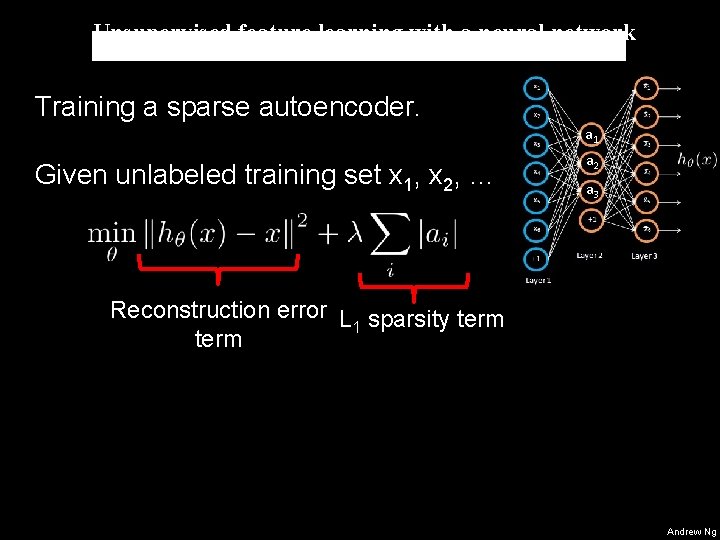

Unsupervised feature learning with a neural network
Training a sparse autoencoder. Given unlabeled training set $x_1, x_2, \ldots$, the objective is a reconstruction error term plus an $L_1$ sparsity term on the hidden activations $a_1, a_2, a_3$.
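One way to write the two terms of the sparse autoencoder objective is sketched below. The exact form (squared reconstruction error, linear output layer, weight `lam` on the L1 term) is an assumption, since the slide's formula did not survive extraction; all names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sparse_autoencoder_cost(X, W1, b1, W2, b2, lam=0.1):
    """Mean reconstruction error plus lam times the L1 norm of the hidden activations."""
    A = sigmoid(X @ W1 + b1)          # hidden activations a (Layer 2)
    X_hat = A @ W2 + b2               # reconstruction of the input (Layer 3)
    reconstruction = np.mean(np.sum((X_hat - X) ** 2, axis=1))
    sparsity = lam * np.mean(np.sum(np.abs(A), axis=1))
    return reconstruction + sparsity, A

rng = np.random.default_rng(0)
X = rng.random((5, 6))                               # 5 unlabeled inputs, 6 dims each
W1, b1 = 0.1 * rng.standard_normal((6, 3)), np.zeros(3)
W2, b2 = 0.1 * rng.standard_normal((3, 6)), np.zeros(6)
cost, A = sparse_autoencoder_cost(X, W1, b1, W2, b2, lam=0.1)
```

Minimizing this cost over `W1, b1, W2, b2` trades reconstruction quality against how many hidden units are active.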

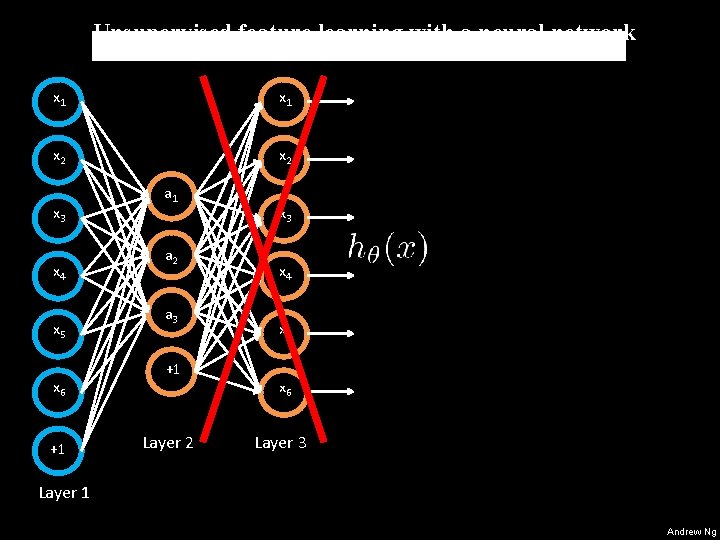

Unsupervised feature learning with a neural network x 1 x 2 x 3 x 4 x 5 x 6 +1 a 2 a 3 +1 Layer 2 x 3 x 4 x 5 x 6 Layer 3 Layer 1 Andrew Ng

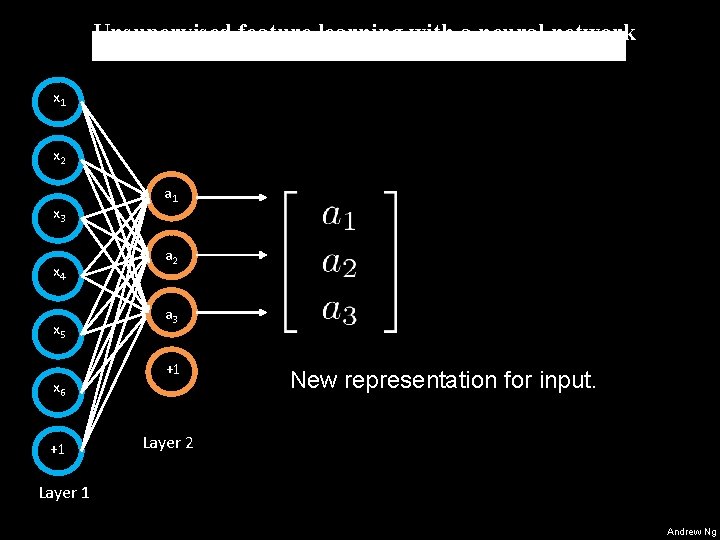

Unsupervised feature learning with a neural network x 1 x 2 x 3 x 4 x 5 x 6 +1 a 2 a 3 +1 New representation for input. Layer 2 Layer 1 Andrew Ng

Unsupervised feature learning with a neural network x 1 x 2 x 3 x 4 x 5 x 6 +1 a 2 a 3 +1 Layer 2 Layer 1 Andrew Ng

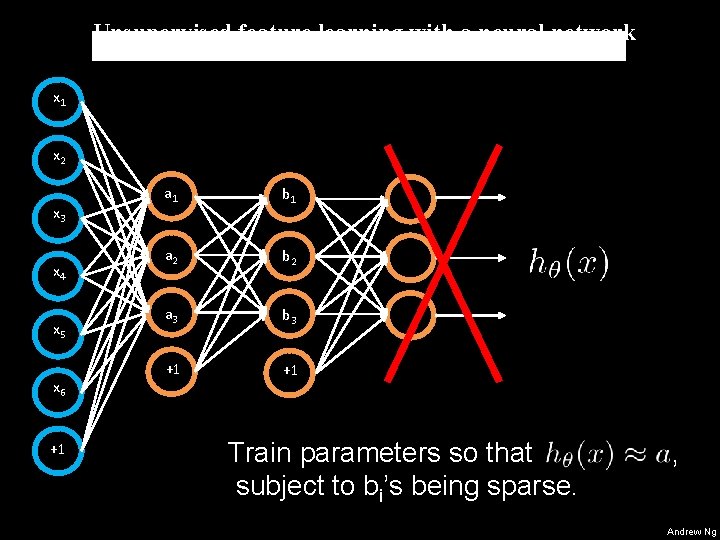

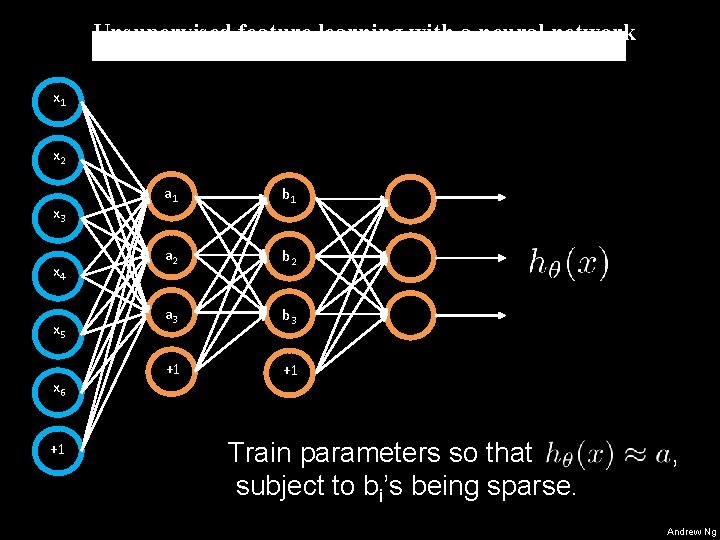

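Unsupervised feature learning with a neural network
[Diagram: inputs $x_1, \ldots, x_6$; first hidden layer $a_1, a_2, a_3$; second hidden layer $b_1, b_2, b_3$; bias units $+1$.]
Train parameters so that the output reconstructs $[a_1, a_2, a_3]$, subject to $b_i$'s being sparse.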

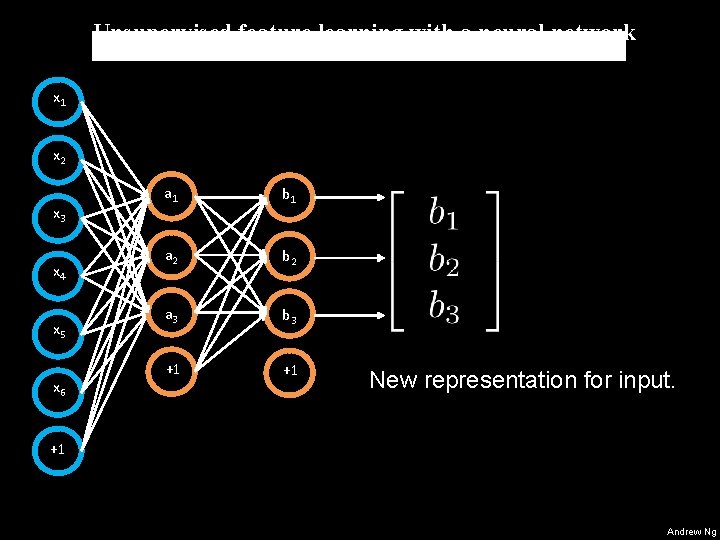

Unsupervised feature learning with a neural network
New representation for input: the second hidden layer activations $b_1, b_2, b_3$.
[Diagram: inputs $x_1, \ldots, x_6$; hidden layers $a_1, a_2, a_3$ and $b_1, b_2, b_3$; bias units $+1$.]

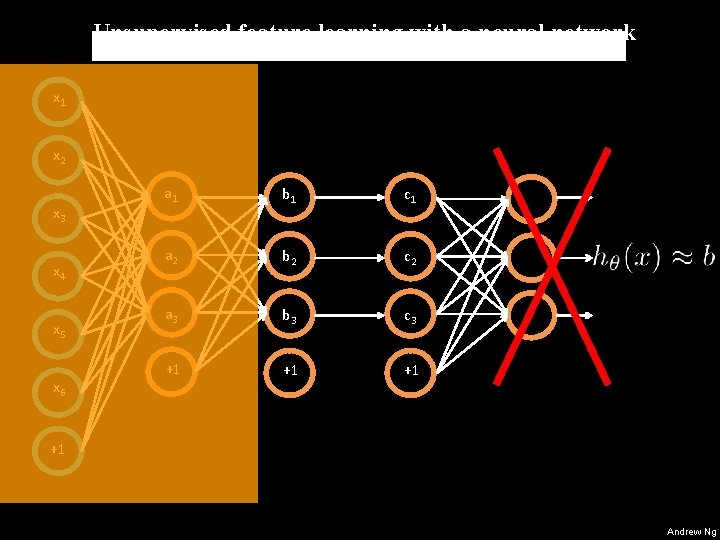

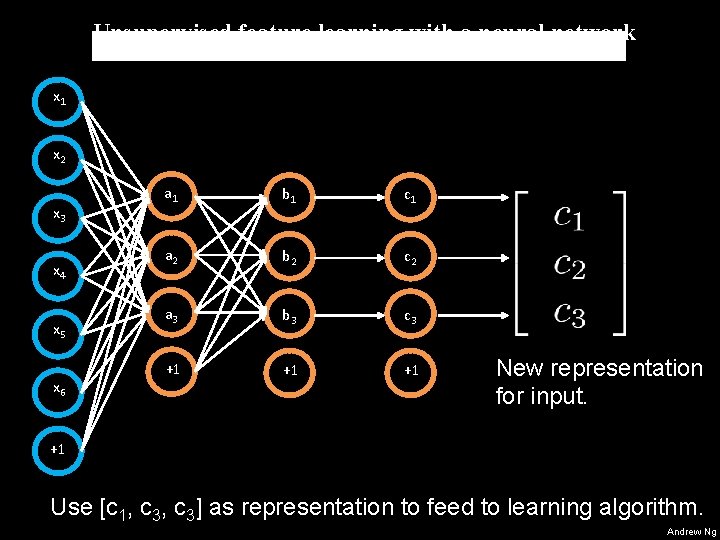

Unsupervised feature learning with a neural network
[Diagram: inputs $x_1, \ldots, x_6$; hidden layers $a_1, a_2, a_3$, $b_1, b_2, b_3$, and $c_1, c_2, c_3$; bias units $+1$.]
New representation for input. Use $[c_1, c_2, c_3]$ as representation to feed to learning algorithm.
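The layer-by-layer procedure can be sketched as a loop: train one sparse autoencoder, keep its encoder, feed its hidden activations to the next one. The cost function, optimizer settings, and layer sizes below are assumptions for illustration, and `train_autoencoder` is a hypothetical helper, not code from the tutorial.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_autoencoder(X, n_hidden, lam=1e-3, lr=0.1, n_steps=300, seed=0):
    """Fit one sparse autoencoder by gradient descent; return its encoder (W, b)."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W1, b1 = 0.1 * rng.standard_normal((n, n_hidden)), np.zeros(n_hidden)
    W2, b2 = 0.1 * rng.standard_normal((n_hidden, n)), np.zeros(n)
    for _ in range(n_steps):
        A = sigmoid(X @ W1 + b1)                      # hidden activations
        R = A @ W2 + b2 - X                           # reconstruction residual
        dA = (2 * R @ W2.T + lam * np.sign(A)) / m    # error term + L1 sparsity term
        dZ = dA * A * (1 - A)
        W2 -= lr * (A.T @ (2 * R / m))
        b2 -= lr * (2 * R / m).sum(axis=0)
        W1 -= lr * (X.T @ dZ)
        b1 -= lr * dZ.sum(axis=0)
    return W1, b1

def encode(X, layers):
    """Push inputs through every learned encoder in order."""
    for W, b in layers:
        X = sigmoid(X @ W + b)
    return X

rng = np.random.default_rng(1)
X = rng.random((50, 6))                  # unlabeled inputs x_1..x_6
layers, inputs = [], X
for _ in range(3):                       # learn the a layer, then b, then c
    W, b = train_autoencoder(inputs, n_hidden=3)
    layers.append((W, b))
    inputs = sigmoid(inputs @ W + b)     # new representation feeds the next layer
C = encode(X, layers)                    # [c_1, c_2, c_3] for each input
```

The rows of `C` are what would be handed to a supervised learning algorithm.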

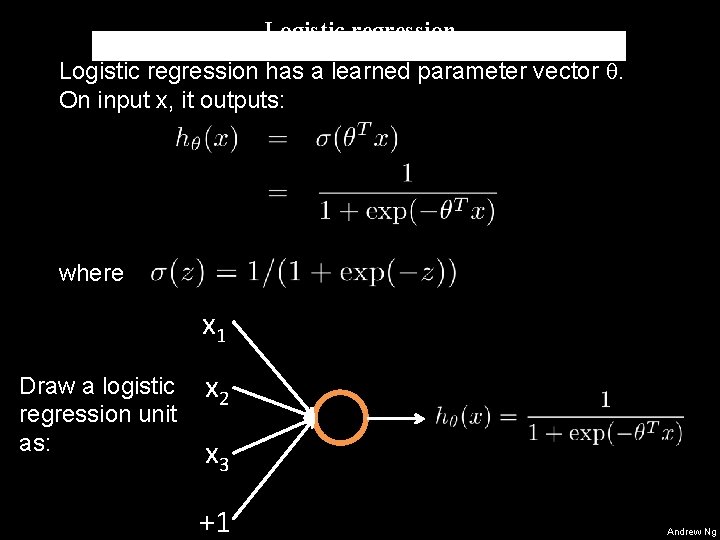

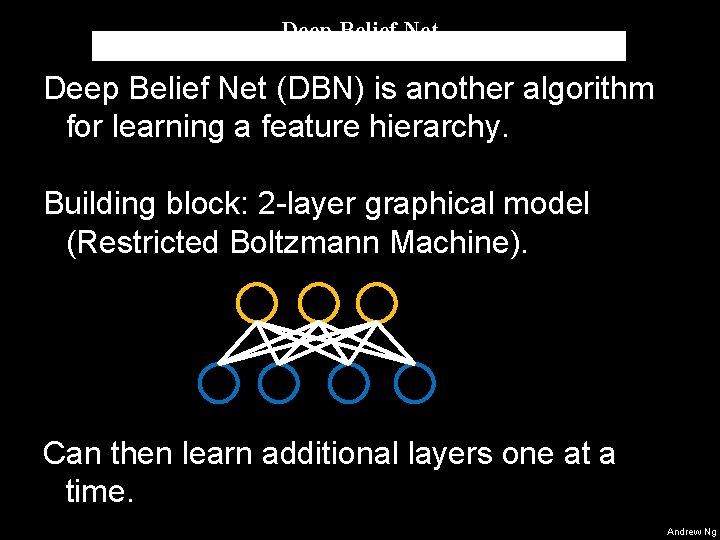

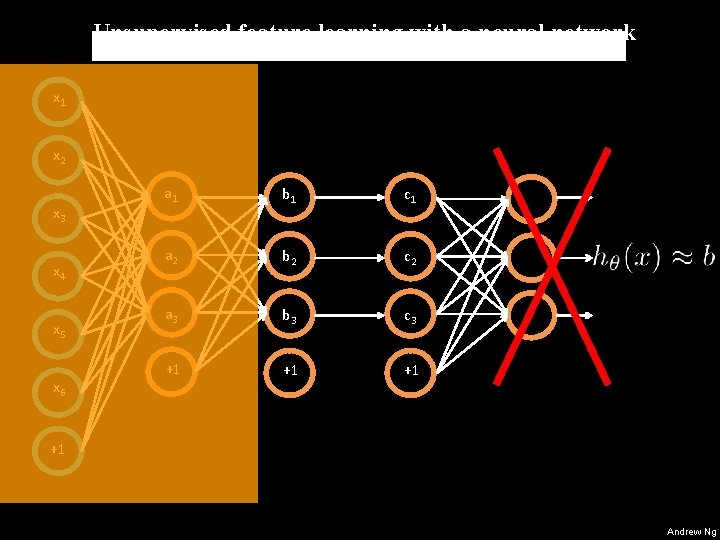

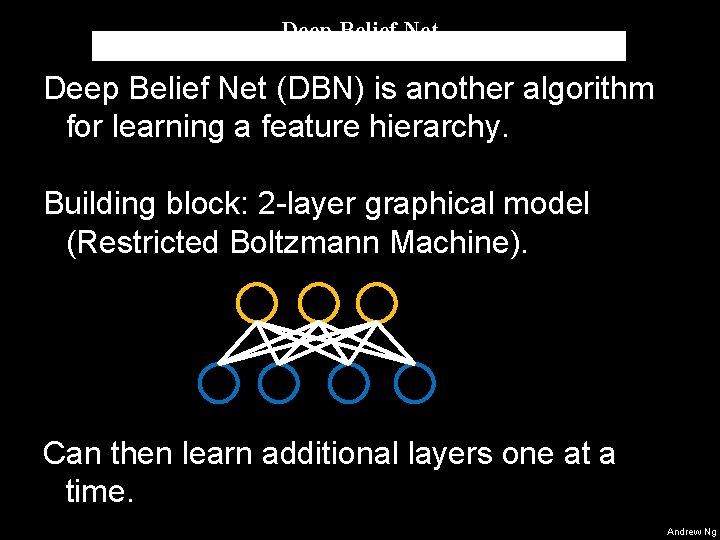

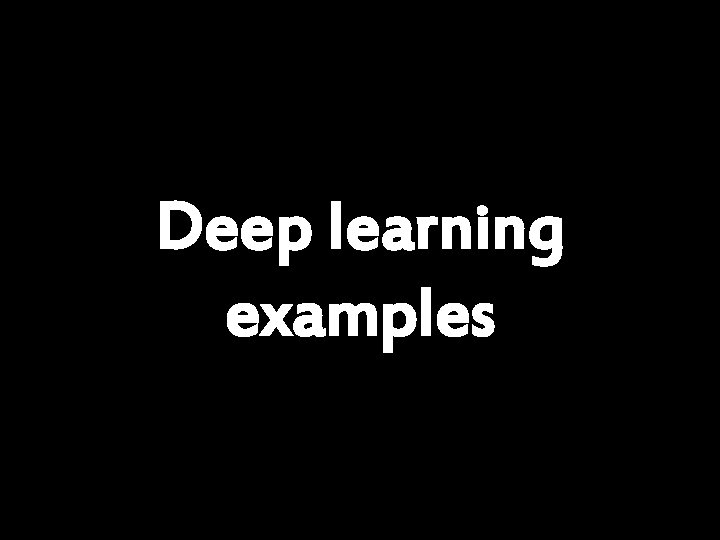

Deep Belief Net (DBN) is another algorithm for learning a feature hierarchy.
Building block: 2-layer graphical model (Restricted Boltzmann Machine).
Can then learn additional layers one at a time.

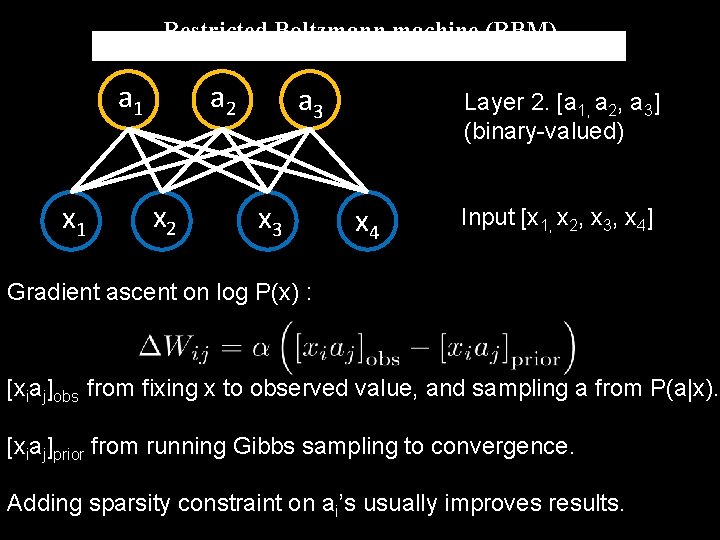

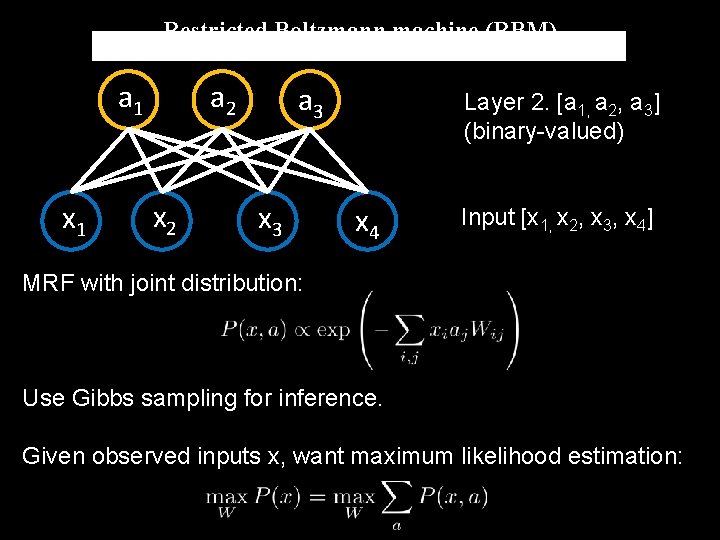

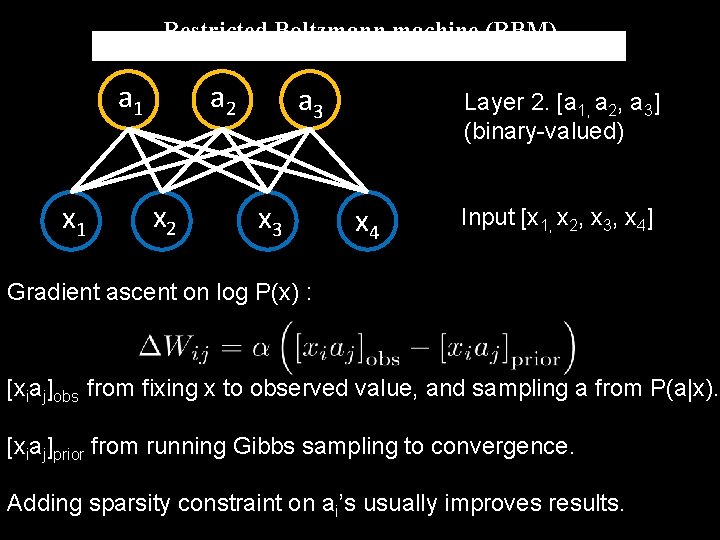

Restricted Boltzmann machine (RBM)
[Diagram: Layer 2 $[a_1, a_2, a_3]$ (binary-valued) connected to the input $[x_1, x_2, x_3, x_4]$.]
MRF with a joint distribution over $x$ and $a$. Use Gibbs sampling for inference. Given observed inputs $x$, want maximum likelihood estimation of the parameters.

Restricted Boltzmann machine (RBM)
Gradient ascent on $\log P(x)$ uses two statistics:
- $[x_i a_j]_{\text{obs}}$ from fixing $x$ to observed value, and sampling $a$ from $P(a|x)$.
- $[x_i a_j]_{\text{prior}}$ from running Gibbs sampling to convergence.

Adding sparsity constraint on $a_i$'s usually improves results.
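A single weight update can be sketched as below. Two assumptions are swapped in and should be read as such: the model is a bias-free binary RBM with $P(x, a) \propto \exp(\sum_{i,j} x_i W_{ij} a_j)$, and the prior statistic is estimated with one Gibbs step (contrastive divergence, CD-1) rather than by running Gibbs sampling to convergence as the slide describes.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cd1_update(X, W, lr, rng):
    """One CD-1 step: W <- W + lr * ([x_i a_j]_obs - [x_i a_j]_prior estimate)."""
    p_a = sigmoid(X @ W)                               # P(a_j = 1 | x)
    a = (rng.random(p_a.shape) < p_a).astype(float)    # sample a from P(a | x)
    obs = X.T @ a / len(X)                             # [x_i a_j]_obs
    p_x = sigmoid(a @ W.T)                             # P(x_i = 1 | a)
    x_neg = (rng.random(p_x.shape) < p_x).astype(float)
    p_a_neg = sigmoid(x_neg @ W)
    prior = x_neg.T @ p_a_neg / len(X)                 # one-step stand-in for [x_i a_j]_prior
    return W + lr * (obs - prior)

rng = np.random.default_rng(0)
X = (rng.random((20, 4)) < 0.5).astype(float)          # 20 binary inputs [x_1..x_4]
W = 0.01 * rng.standard_normal((4, 3))                 # 3 hidden units [a_1..a_3]
W_new = cd1_update(X, W, lr=0.1, rng=rng)
```

Running more Gibbs steps before computing `prior` moves the estimate closer to the converged statistic at higher cost.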

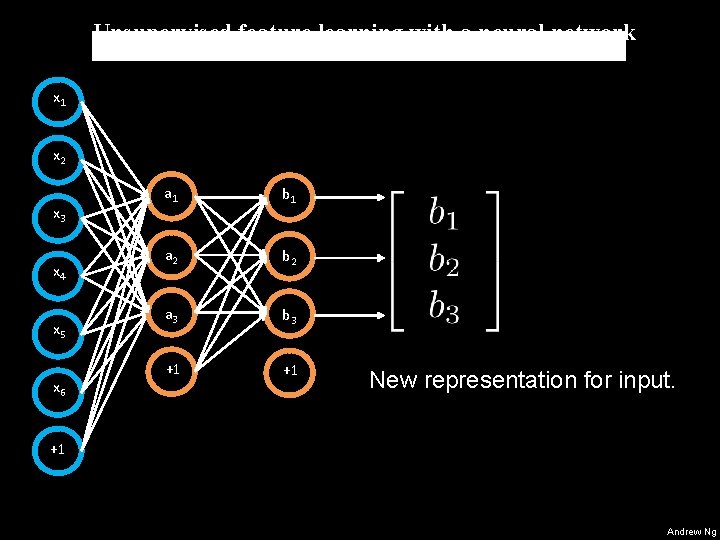

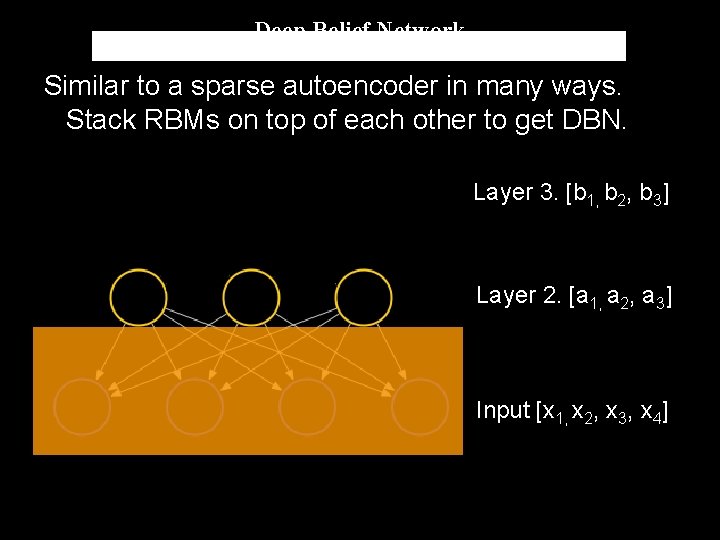

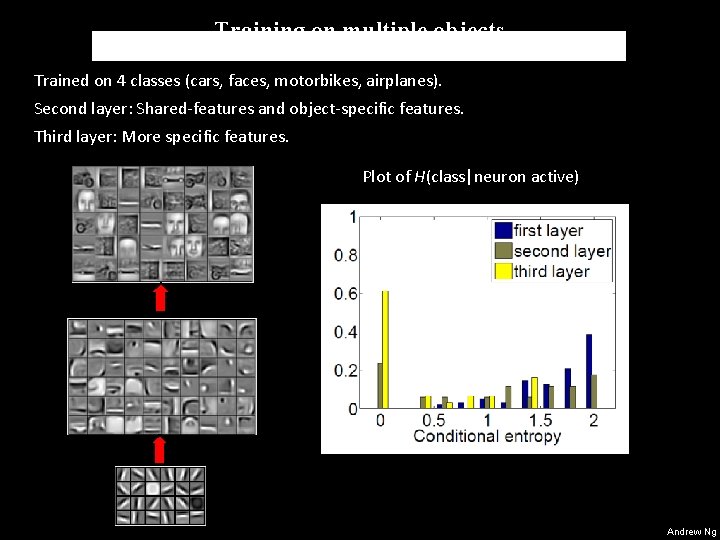

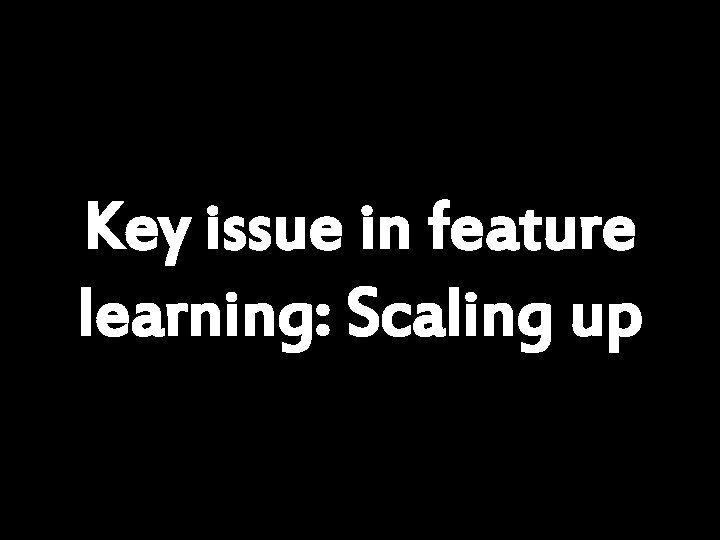

Deep Belief Network
Similar to a sparse autoencoder in many ways. Stack RBMs on top of each other to get DBN.
- Layer 3: $[b_1, b_2, b_3]$
- Layer 2: $[a_1, a_2, a_3]$
- Input: $[x_1, x_2, x_3, x_4]$

![Deep Belief Network: Layer 4, Layer 3, Layer 2, Input](https://slidetodoc.com/presentation_image_h/cca58a4533294ba40bed18bd6b24d197/image-33.jpg)

Deep Belief Network
- Layer 4: $[c_1, c_2, c_3]$
- Layer 3: $[b_1, b_2, b_3]$
- Layer 2: $[a_1, a_2, a_3]$
- Input: $[x_1, x_2, x_3, x_4]$

Deep learning examples

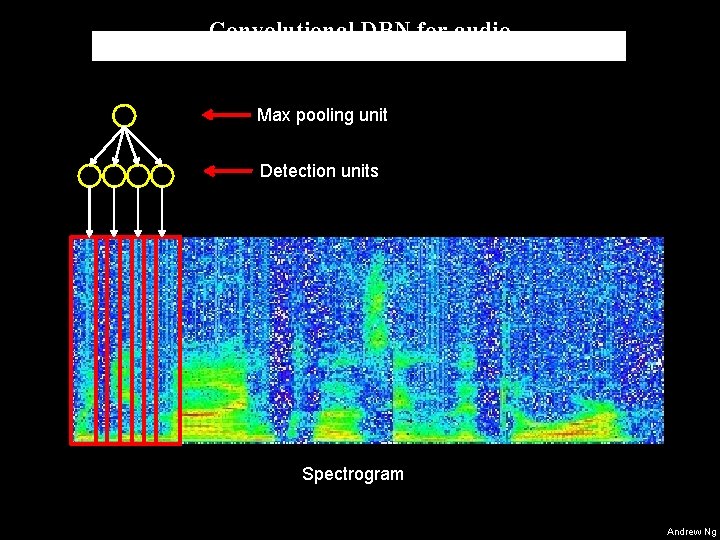

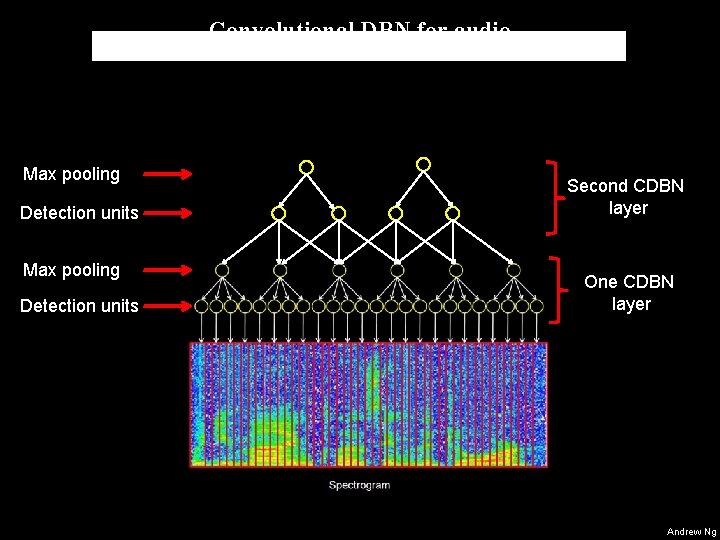

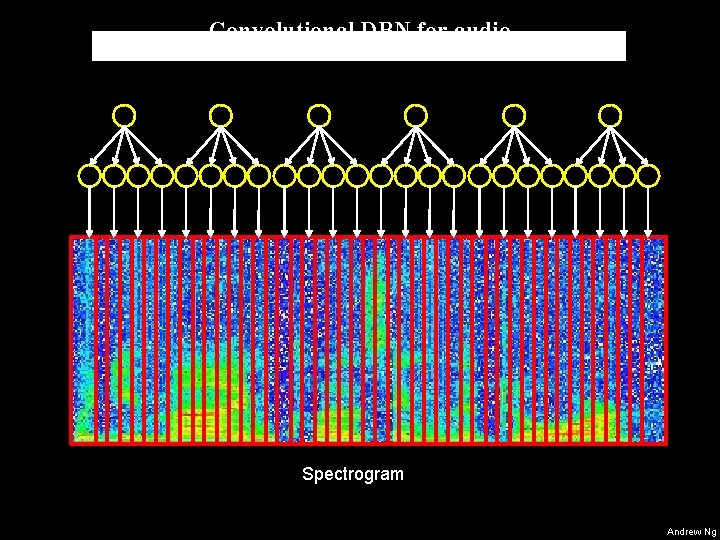

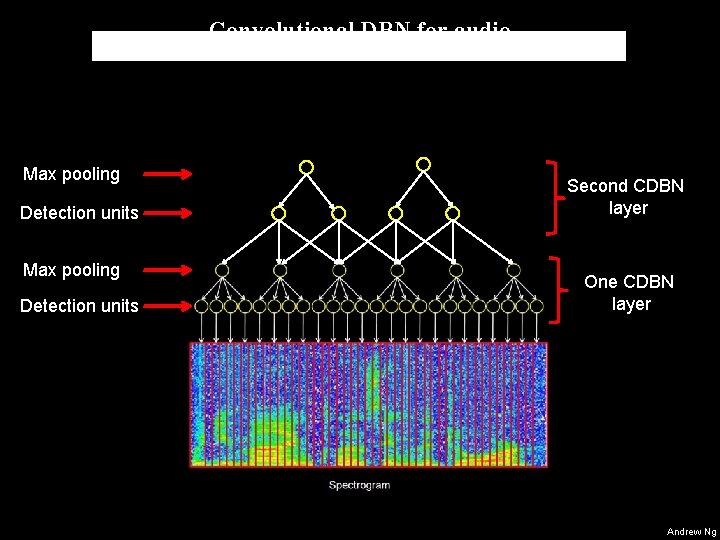

Convolutional DBN for audio
[Diagram: spectrogram input, detection units, max pooling unit.]

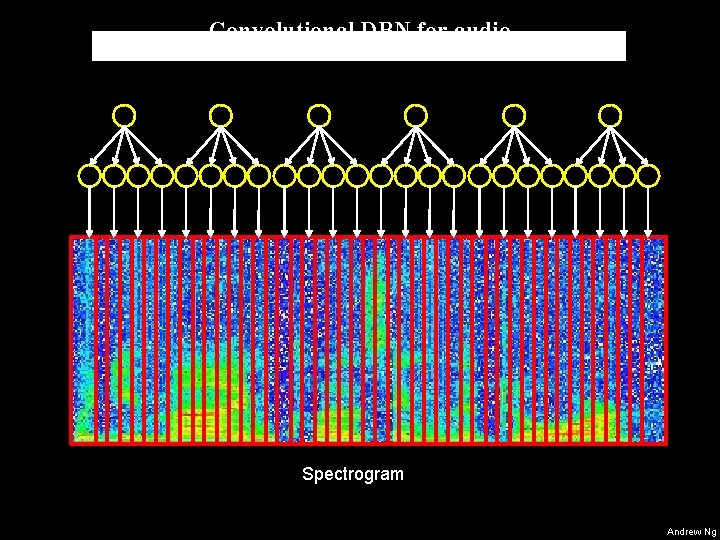

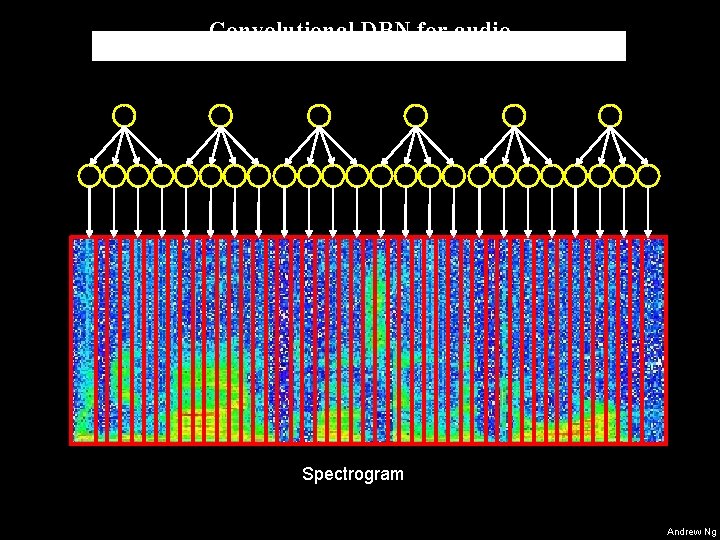

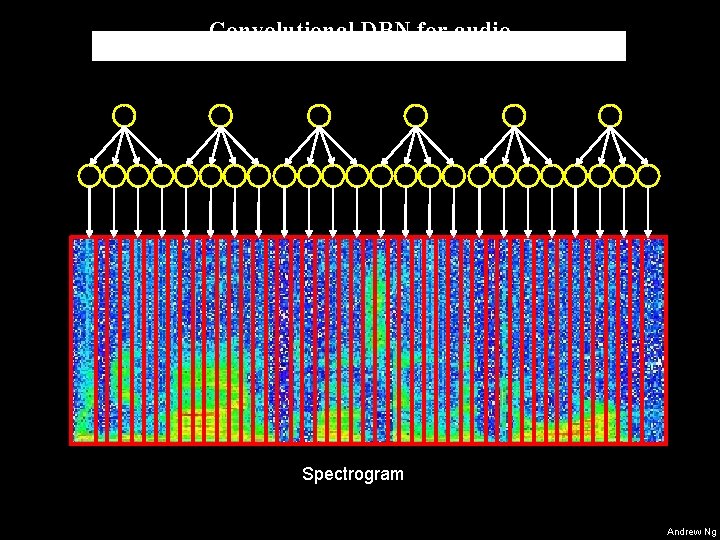

Convolutional DBN for audio
[Figure: spectrogram.]

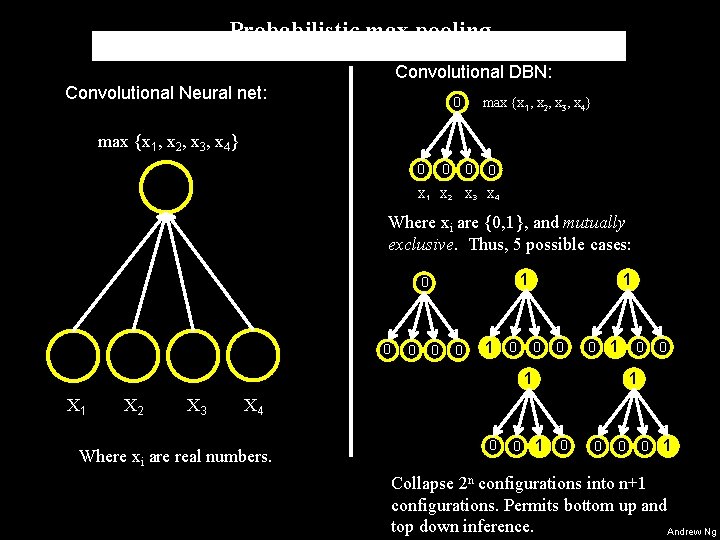

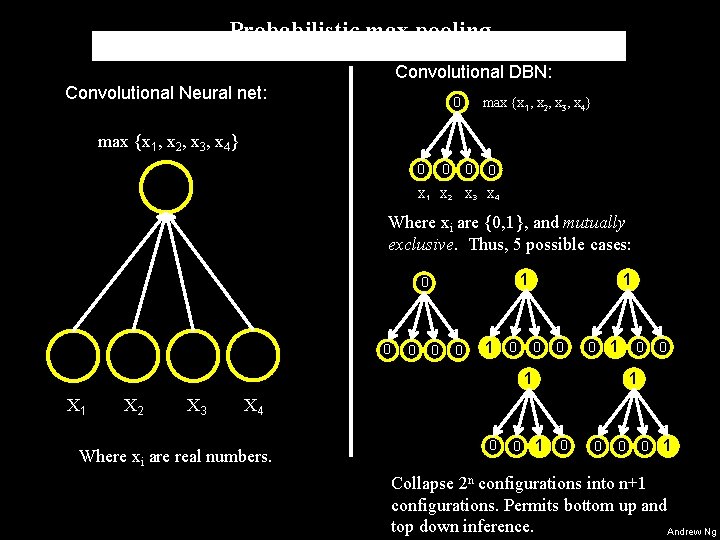

Probabilistic max pooling
Convolutional neural net: the pooled output is $\max\{x_1, x_2, x_3, x_4\}$, where $x_i$ are real numbers.
Convolutional DBN: the pooling unit sits on top of detection units $X_1, X_2, X_3, X_4$, where $x_i$ are $\{0, 1\}$ and mutually exclusive. Thus, 5 possible cases: all $x_i$ are 0 (pooling unit is 0), or exactly one $x_i$ is 1 (pooling unit is 1).
Collapse $2^n$ configurations into $n+1$ configurations. Permits bottom-up and top-down inference.
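The $n+1$ configurations can be turned into probabilities with a softmax that has one extra "all off" option. The sketch below assumes that form, in which each detection unit receives a bottom-up input and the all-off case has energy 0; the slide itself does not give the formula, and `prob_max_pool` is a hypothetical name.

```python
import numpy as np

def prob_max_pool(inputs):
    """Return (P(x_i = 1) for each detection unit, P(pooling unit = 1)).

    At most one detection unit in the block is on, so the n "one unit on"
    cases and the single "all off" case together sum to probability 1.
    """
    inputs = np.asarray(inputs, dtype=float)
    shift = max(inputs.max(), 0.0)          # subtract for numerical stability
    e = np.exp(inputs - shift)
    z = np.exp(-shift) + e.sum()            # exp(0 - shift) is the "all off" case
    p_on = e / z
    return p_on, p_on.sum()

# With zero bottom-up input, all 5 cases are equally likely.
p_on, p_pool = prob_max_pool([0.0, 0.0, 0.0, 0.0])   # p_on = 0.2 each, p_pool = 0.8
```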

Convolutional DBN for audio
[Diagram: one CDBN layer (detection units, max pooling) with a second CDBN layer stacked on top.]

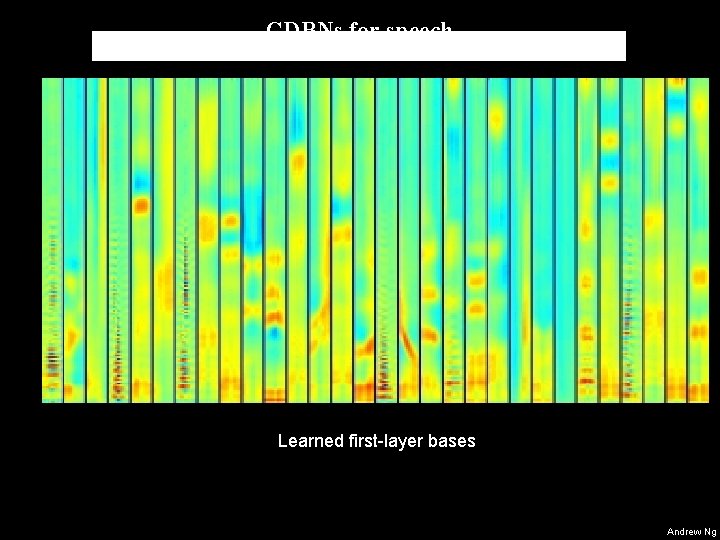

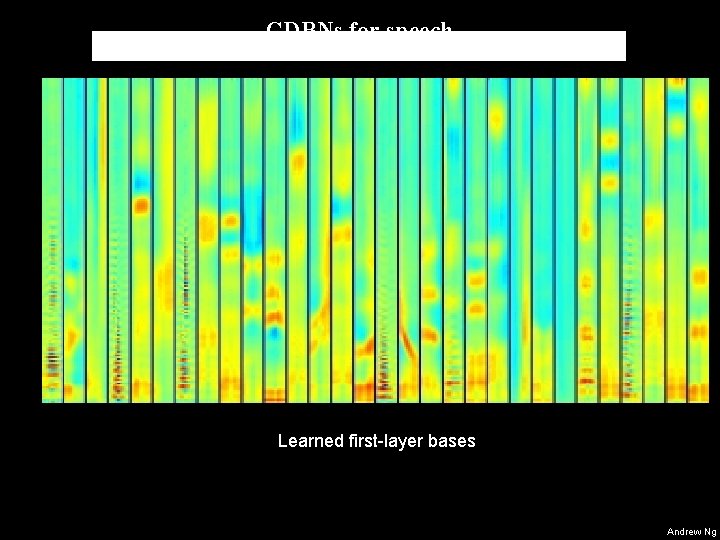

CDBNs for speech
Learned first-layer bases

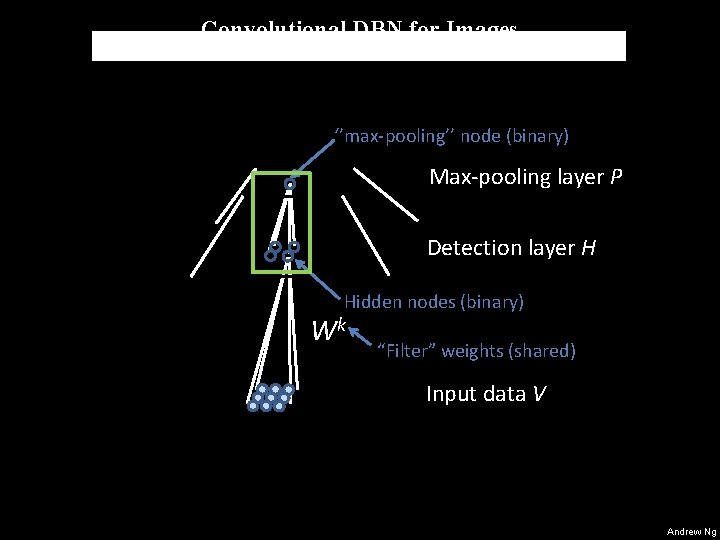

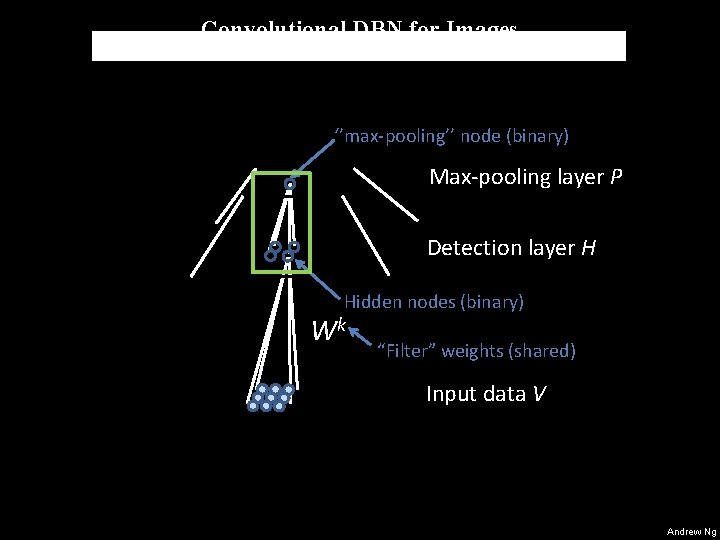

Convolutional DBN for Images
- Max-pooling layer P: "max-pooling" node (binary)
- Detection layer H: hidden nodes (binary)
- Wk: "filter" weights (shared)
- Input data V

Convolutional DBN on face images
From top to bottom: object models, object parts (combination of edges), edges, pixels.

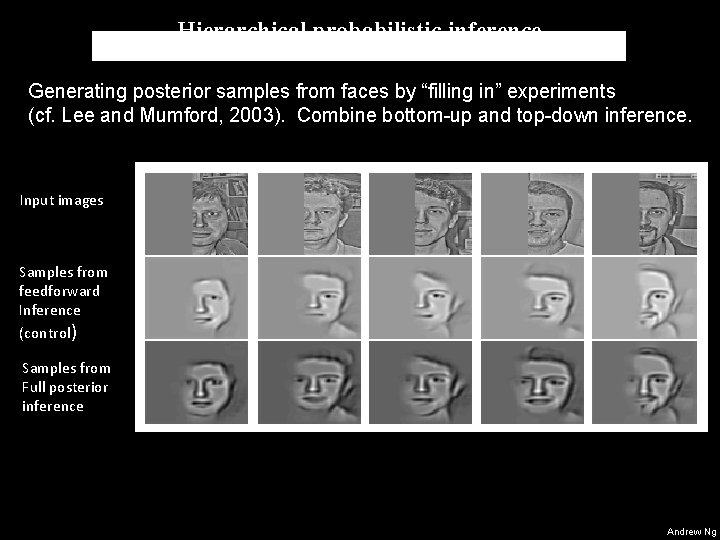

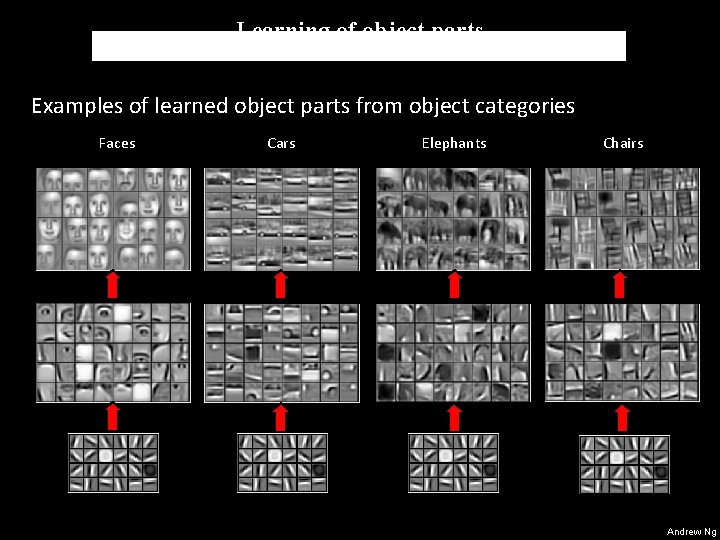

Learning of object parts
Examples of learned object parts from object categories: Faces, Cars, Elephants, Chairs.

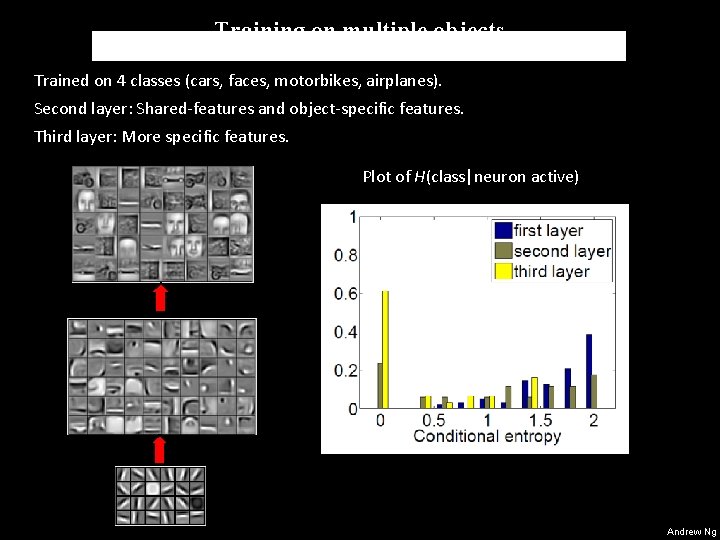

Training on multiple objects
Trained on 4 classes (cars, faces, motorbikes, airplanes).
Second layer: shared features and object-specific features.
Third layer: more specific features.
[Plot of H(class|neuron active).]
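The quantity H(class|neuron active) can be estimated from counts: take the images on which a unit fires and compute the entropy of their class labels. The sketch below uses made-up activation patterns to show the two extremes; the function name and data are illustrative only.

```python
import numpy as np

def class_entropy_given_active(active, labels, n_classes):
    """Entropy (in bits) of the class label over the examples where the unit is active."""
    counts = np.bincount(labels[active], minlength=n_classes).astype(float)
    p = counts / counts.sum()
    p = p[p > 0]                         # 0 * log(0) is treated as 0
    return -np.sum(p * np.log2(p))

labels = np.array([0, 0, 1, 1, 2, 2, 3, 3])          # 4 classes, 2 images each
specific = labels == 0                               # fires only on one class
shared = np.ones(len(labels), dtype=bool)            # fires on every class equally

h_specific = class_entropy_given_active(specific, labels, 4)   # 0 bits
h_shared = class_entropy_given_active(shared, labels, 4)       # 2 bits
```

Low entropy marks an object-specific feature; entropy near $\log_2$ of the number of classes marks a feature shared across classes.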

Hierarchical probabilistic inference
Generating posterior samples from faces by "filling in" experiments (cf. Lee and Mumford, 2003). Combine bottom-up and top-down inference.
[Figure rows: input images; samples from feedforward inference (control); samples from full posterior inference.]

Key issue in feature learning: Scaling up

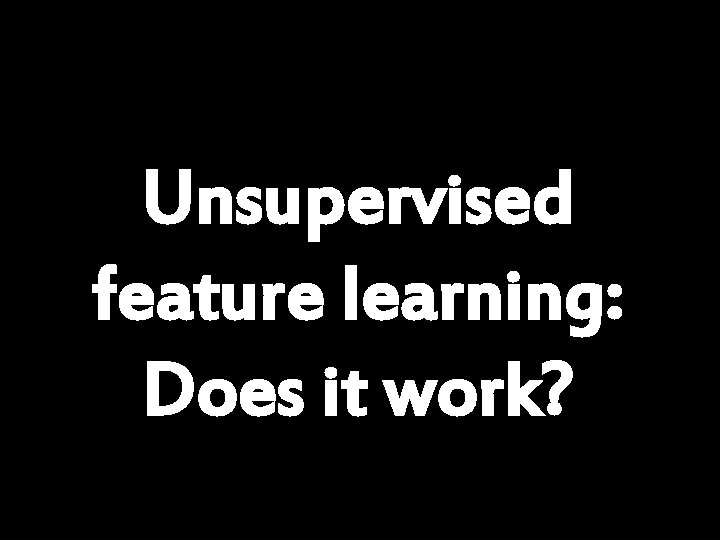

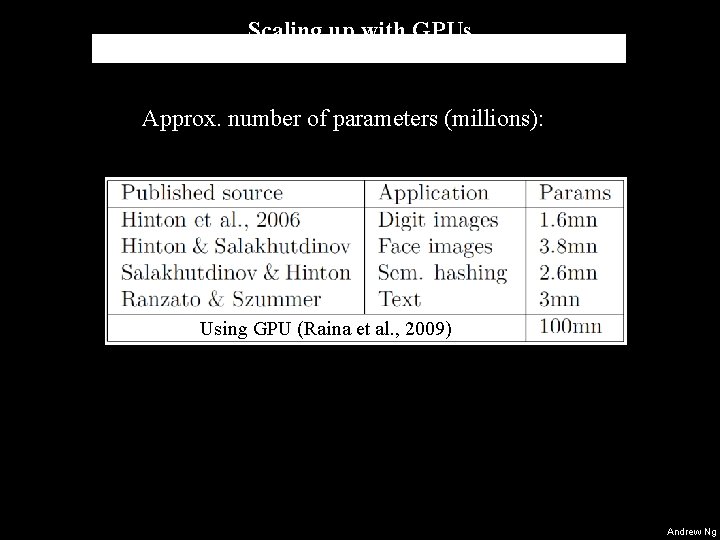

Scaling up with graphics processors
[Plot: peak GFlops by year, 2003 to 2008. Labels: US$ 250, NVIDIA GPU, Intel CPU. Source: NVIDIA CUDA Programming Guide.]

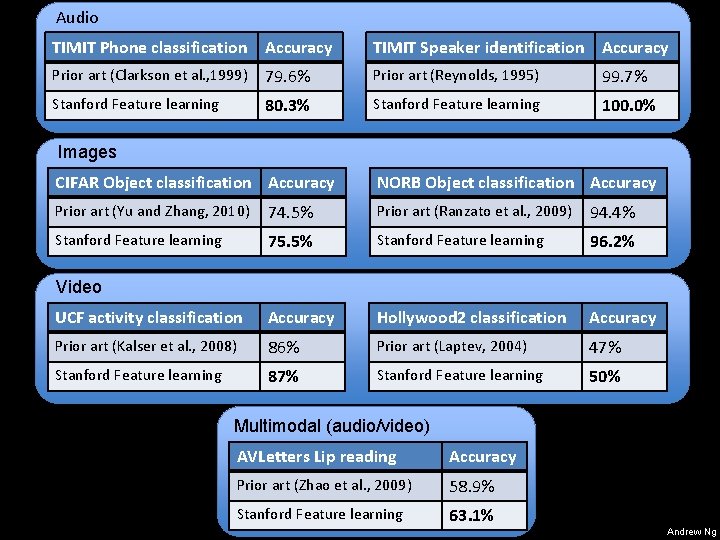

Scaling up with GPUs
[Plot: approx. number of parameters (millions); using GPU (Raina et al., 2009).]

Unsupervised feature learning: Does it work?

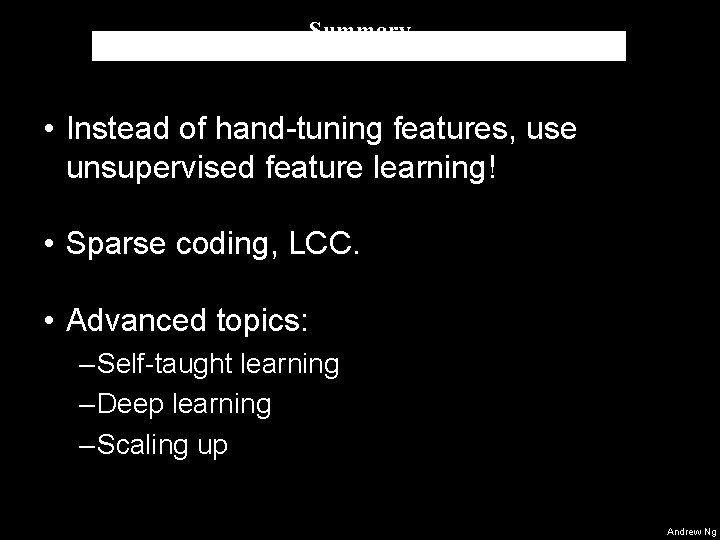

State-of-the-art task performance

| Modality | Task | Prior art | Prior art accuracy | Stanford feature learning accuracy |
| --- | --- | --- | --- | --- |
| Audio | TIMIT phone classification | Clarkson et al., 1999 | 79.6% | 80.3% |
| Audio | TIMIT speaker identification | Reynolds, 1995 | 99.7% | 100.0% |
| Images | CIFAR object classification | Yu and Zhang, 2010 | 74.5% | 75.5% |
| Images | NORB object classification | Ranzato et al., 2009 | 94.4% | 96.2% |
| Video | UCF activity classification | Kalser et al., 2008 | 86% | 87% |
| Video | Hollywood 2 classification | Laptev, 2004 | 47% | 50% |
| Multimodal (audio/video) | AVLetters lip reading | Zhao et al., 2009 | 58.9% | 63.1% |

Summary
- Instead of hand-tuning features, use unsupervised feature learning!
- Sparse coding, LCC.
- Advanced topics:
  - Self-taught learning
  - Deep learning
  - Scaling up

Other resources
Workshop page: http://ufldl.stanford.edu/eccv10-tutorial/
- Code for Sparse coding, LCC.
- References.
- Full online tutorial.