Advanced Smoothing, Evaluation of Language Models

Witten-Bell Discounting

A zero n-gram is just an n-gram you haven't seen yet. But every n-gram in the corpus was unseen once, so how many times did we see an n-gram for the first time? Once for each n-gram type (T).

View the training corpus as a series of events: one for each token (N) and one for each new type (T). The total probability of unseen bigrams is then estimated as $\frac{T}{N+T}$.

We can divide this probability mass equally among the unseen bigrams, or we can condition the probability of an unseen bigram on the first word of the bigram. The discount values for Witten-Bell are much more reasonable than those of Add-One smoothing.

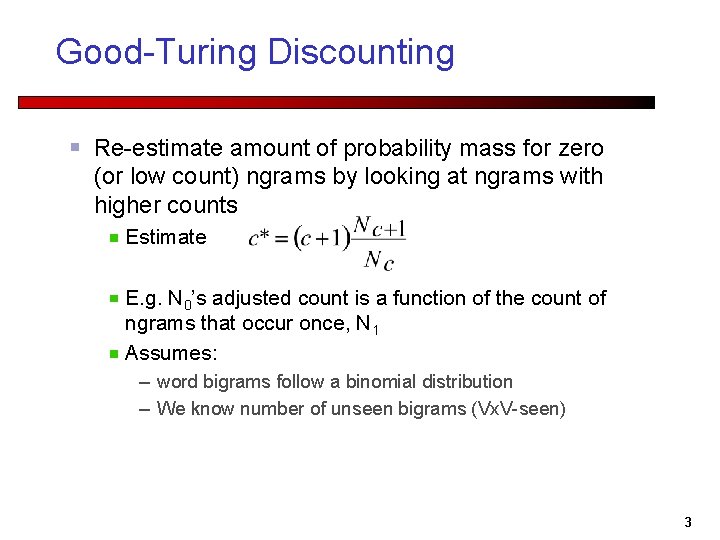
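The conditioned variant of Witten-Bell can be sketched in Python as follows. This is a minimal illustration, assuming the vocabulary is supplied explicitly and that every history word was seen at least once; the function name and the toy corpus are hypothetical. For a history $w$ with $N(w)$ bigram tokens, $T(w)$ distinct followers and $Z(w)$ vocabulary words never seen after it, a seen bigram gets $c(w, v)/(N(w)+T(w))$ and each unseen one gets $T(w)/(Z(w)(N(w)+T(w)))$.

```python
from collections import Counter, defaultdict


def witten_bell_bigram(tokens, vocab):
    """Return prob(prev, word), a Witten-Bell estimate of P(word | prev).

    Sketch only: assumes every history `prev` occurs in `tokens` and that
    `vocab` contains every word that may follow it.
    """
    bigrams = Counter(zip(tokens, tokens[1:]))
    followers = defaultdict(set)   # distinct word types seen after each history
    n_tokens = Counter()           # N(w): bigram tokens starting with w
    for (prev, word), count in bigrams.items():
        followers[prev].add(word)
        n_tokens[prev] += count

    def prob(prev, word):
        n = n_tokens[prev]
        t = len(followers[prev])   # T(w): types seen after w
        z = len(vocab) - t         # Z(w): types never seen after w
        if word in followers[prev]:
            return bigrams[(prev, word)] / (n + t)
        # Unseen mass T/(N+T), shared equally by the Z unseen types.
        return t / (z * (n + t))

    return prob


tokens = "the cat sat on the mat the cat ran".split()
p = witten_bell_bigram(tokens, set(tokens))
print(p("the", "cat"), p("the", "sat"))
```

In the toy corpus, "the" is followed by "cat" twice and "mat" once, so $N = 3$, $T = 2$ and $Z = 4$: "cat" gets $2/5$, and each of the four unseen followers gets $2/20$, so the distribution still sums to one.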

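Good-Turing Discounting

Re-estimate the amount of probability mass for zero-count (or low-count) n-grams by looking at n-grams with higher counts. Estimate the adjusted count $c^* = (c+1)\frac{N_{c+1}}{N_c}$, where $N_c$ is the number of n-gram types that occur exactly $c$ times. E.g. the adjusted count of the unseen n-grams, $c_0^* = N_1 / N_0$, is a function of $N_1$, the number of n-grams that occur once.

Assumes:

- word bigrams follow a binomial distribution
- we know the number of unseen bigrams ($V \times V$ minus the number of seen bigrams)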
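A small Python sketch of the Good-Turing re-estimate $c^* = (c+1)N_{c+1}/N_c$. It assumes, as the slide does, that the caller knows $N_0$ (the number of unseen n-grams); the function name and the toy counts are hypothetical. Counts whose $N_{c+1}$ is zero are simply left out here; practical systems usually smooth the $N_c$ values first or only adjust small counts.

```python
from collections import Counter


def good_turing_counts(ngram_counts, n_unseen):
    """Return {c: c*} using c* = (c + 1) * N_{c+1} / N_c.

    `ngram_counts` maps each observed n-gram to its count; `n_unseen` is N_0,
    the number of possible n-grams never observed (e.g. V*V minus seen bigrams).
    """
    freq_of_freq = Counter(ngram_counts.values())  # N_c for c >= 1
    freq_of_freq[0] = n_unseen                      # N_0 must be known in advance
    adjusted = {}
    for c in sorted(freq_of_freq):
        # The formula is only defined when both N_c and N_{c+1} are non-zero.
        if freq_of_freq[c] > 0 and freq_of_freq[c + 1] > 0:
            adjusted[c] = (c + 1) * freq_of_freq[c + 1] / freq_of_freq[c]
    return adjusted


counts = {"a b": 1, "b c": 1, "c d": 1, "d e": 2, "e f": 3}
print(good_turing_counts(counts, n_unseen=6))
```

Here $N_1 = 3$ and $N_0 = 6$, so each unseen bigram receives an adjusted count of $1 \times 3/6 = 0.5$ instead of zero.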

Interpolation and Backoff

Typically used in addition to smoothing/discounting techniques. Example: trigrams. Smoothing gives some probability mass to all the trigram types not observed in the training data, but we could make a more informed decision! How? If backoff finds an unobserved trigram in the test data, it will “back off” to bigrams (and ultimately to unigrams):

- Backoff doesn't treat all unseen trigrams alike.
- When we have observed a trigram, we will rely solely on the trigram counts.

Backoff methods (e.g. Katz '87)

For a trigram model, for example, compute unigram, bigram and trigram probabilities. In use:

- Where the trigram is unavailable, back off to the bigram if available, otherwise to the unigram probability.
- E.g. "An omnivorous unicorn"

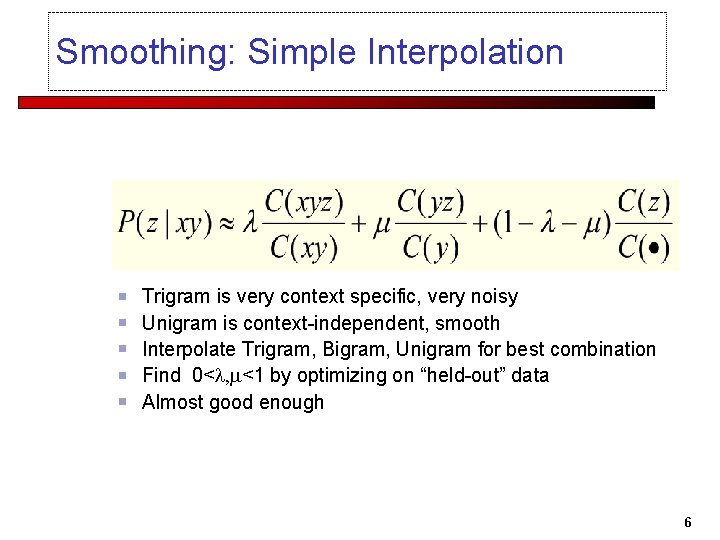
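The backoff rule above can be sketched in Python as follows. This is a deliberately simplified version, assuming plain relative-frequency (maximum-likelihood) estimates at each order; real Katz backoff also discounts the higher-order counts and scales the lower-order estimate by a backoff weight so that the probabilities sum to one. The function names and toy corpus are hypothetical.

```python
from collections import Counter


def train_counts(tokens):
    """Collect unigram, bigram and trigram counts from a token list."""
    uni = Counter(tokens)
    bi = Counter(zip(tokens, tokens[1:]))
    tri = Counter(zip(tokens, tokens[1:], tokens[2:]))
    return uni, bi, tri


def backoff_prob(w1, w2, w3, uni, bi, tri):
    """P(w3 | w1 w2): trigram estimate if seen, else bigram, else unigram.

    Simplified: no discounting and no backoff weight (alpha), so unlike
    Katz backoff the result is not a normalized distribution.
    """
    if tri[(w1, w2, w3)] > 0:
        return tri[(w1, w2, w3)] / bi[(w1, w2)]
    if bi[(w2, w3)] > 0:
        return bi[(w2, w3)] / uni[w2]
    return uni[w3] / sum(uni.values())


uni, bi, tri = train_counts("a b c a b d a c".split())
print(backoff_prob("a", "b", "c", uni, bi, tri))  # trigram "a b c" was seen
print(backoff_prob("x", "a", "c", uni, bi, tri))  # backs off to bigram "a c"
print(backoff_prob("d", "d", "b", uni, bi, tri))  # backs off to unigram "b"
```

Because `Counter` returns 0 for missing keys, an unseen n-gram simply fails the `> 0` test and the lookup falls through to the next order.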

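Smoothing: Simple Interpolation

- The trigram is very context-specific and very noisy.
- The unigram is context-independent and smooth.
- Interpolate trigram, bigram and unigram for the best combination:

$$\hat{P}(w_i \mid w_{i-2} w_{i-1}) = \lambda_1 P(w_i \mid w_{i-2} w_{i-1}) + \lambda_2 P(w_i \mid w_{i-1}) + \lambda_3 P(w_i)$$

Find $0 < \lambda_j < 1$ (with $\sum_j \lambda_j = 1$) by optimizing on "held-out" data. Almost good enough.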
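A minimal sketch of simple linear interpolation in Python, $\lambda_1 P_{tri} + \lambda_2 P_{bi} + \lambda_3 P_{uni}$. It assumes the three component probabilities have already been estimated by some other method; the function name and the $\lambda$ values in the usage line are placeholders, not tuned weights.

```python
def interpolate(p_tri, p_bi, p_uni, lambdas):
    """Linear interpolation: l1 * P_tri + l2 * P_bi + l3 * P_uni.

    `lambdas` = (l1, l2, l3) must be non-negative and sum to 1 so that the
    result is still a probability distribution.
    """
    l1, l2, l3 = lambdas
    if min(lambdas) < 0 or abs(l1 + l2 + l3 - 1.0) > 1e-9:
        raise ValueError("lambdas must be non-negative and sum to 1")
    return l1 * p_tri + l2 * p_bi + l3 * p_uni


# An unseen trigram (P_tri = 0) still gets probability from the lower orders.
print(interpolate(0.0, 0.2, 0.05, (0.6, 0.3, 0.1)))
```

Unlike backoff, every order contributes to every estimate, so a noisy trigram estimate is always tempered by the smoother bigram and unigram ones.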

Smoothing: Held-out estimation

Finding parameter values:

- Split the data into training, "held-out" and test sets.
- Try lots of different values for $\lambda$ on the held-out data and pick the best.
- Test on the test data.

Sometimes we can use tricks like EM (expectation maximization) to find the values. How much data should go to training, held-out and test? Answer: enough test data to be statistically significant (perhaps thousands of words).
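The "try lots of values" strategy can be sketched as a grid search over $(\lambda_1, \lambda_2, \lambda_3)$ that maximizes the log-likelihood of the held-out tokens. This sketch assumes the trigram, bigram and unigram probabilities of each held-out token have already been computed; the helper names, the 0.1 grid step and the sample numbers are made up for illustration. EM is the usual, more efficient alternative.

```python
import itertools
import math


def heldout_log_likelihood(components, lambdas):
    """Sum of ln P(w) over held-out tokens under the interpolated model.

    `components` holds one (p_tri, p_bi, p_uni) triple per held-out token.
    """
    total = 0.0
    for p_tri, p_bi, p_uni in components:
        p = lambdas[0] * p_tri + lambdas[1] * p_bi + lambdas[2] * p_uni
        total += math.log(p) if p > 0 else -math.inf
    return total


def grid_search_lambdas(components, step=0.1):
    """Try every (l1, l2, l3) on a grid with l1 + l2 + l3 = 1; keep the best."""
    n = round(1 / step)
    best, best_ll = None, -math.inf
    for i, j in itertools.product(range(n + 1), repeat=2):
        if i + j > n:
            continue
        lambdas = (i / n, j / n, (n - i - j) / n)
        ll = heldout_log_likelihood(components, lambdas)
        if ll > best_ll:
            best, best_ll = lambdas, ll
    return best, best_ll


# Made-up component probabilities for three held-out tokens.
heldout = [(0.5, 0.3, 0.1), (0.0, 0.2, 0.05), (0.4, 0.4, 0.1)]
print(grid_search_lambdas(heldout))
```

The second held-out token has an unseen trigram, so any setting with $\lambda_2 = \lambda_3 = 0$ scores $-\infty$; this is exactly why the lower orders keep some weight.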

Summary

N-gram probabilities can be used to estimate the likelihood:

- of a word occurring in a context (of N-1 words)
- of a sentence occurring at all

Smoothing techniques deal with the problem of unseen words and n-grams in a corpus.

Practical Issues

Represent and compute language model probabilities in log format, since multiplying many small probabilities quickly underflows:

$$p_1 p_2 p_3 p_4 = \exp(\log p_1 + \log p_2 + \log p_3 + \log p_4)$$
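A short Python illustration of why log format matters, assuming a made-up sentence of 400 words that each have probability 0.1. The direct product falls below the smallest positive double-precision number and becomes exactly zero, while the sum of log probabilities stays a perfectly ordinary number.

```python
import math

# 400 word probabilities of 0.1 each (made-up values for illustration).
probs = [0.1] * 400

product = 1.0
for p in probs:
    product *= p  # underflows: 1e-400 is below the smallest float64

log_sum = sum(math.log(p) for p in probs)  # stays representable, about -921.03

print(product)  # 0.0
print(log_sum)
```

Comparing two sentences by their log sums gives the same ranking as comparing their probabilities, because the logarithm is monotonic.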

Class-based n-grams

$P(w_i \mid w_{i-1}) = P(c_i \mid c_{i-1}) \times P(w_i \mid c_i)$, where $c_i$ is the class of word $w_i$.

Factored Language Models
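A minimal Python sketch of the class-based bigram $P(c_i \mid c_{i-1}) \times P(w_i \mid c_i)$ with relative-frequency estimates. The word-to-class mapping is a hypothetical hand-made one; in practice classes are often induced automatically (e.g. by clustering), which this sketch does not attempt.

```python
from collections import Counter


def class_bigram_prob(prev, word, tokens, word_class):
    """P(word | prev) = P(c_word | c_prev) * P(word | c_word), MLE estimates.

    `word_class` is a hypothetical mapping from each word to a class label.
    """
    classes = [word_class[w] for w in tokens]
    class_bigrams = Counter(zip(classes, classes[1:]))
    class_counts = Counter(classes)
    word_counts = Counter(tokens)

    c_prev, c_word = word_class[prev], word_class[word]
    p_class = class_bigrams[(c_prev, c_word)] / class_counts[c_prev]
    p_word_given_class = word_counts[word] / class_counts[c_word]
    return p_class * p_word_given_class


tokens = "the cat ran the dog sat".split()
word_class = {"the": "DET", "cat": "NOUN", "dog": "NOUN", "ran": "VERB", "sat": "VERB"}
print(class_bigram_prob("cat", "sat", tokens, word_class))
```

The word bigram "cat sat" never occurs in the toy corpus, yet it gets probability 0.5 because the class bigram NOUN VERB is well attested; this sharing across words is the point of class-based models.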

Evaluating language models

We need evaluation metrics to determine how well our language models predict the next word. Intuition: one should average over the probability of new words.

Some basic information theory

- Evaluation metrics for language models
- Information theory: measures of information
  - Entropy
  - Perplexity

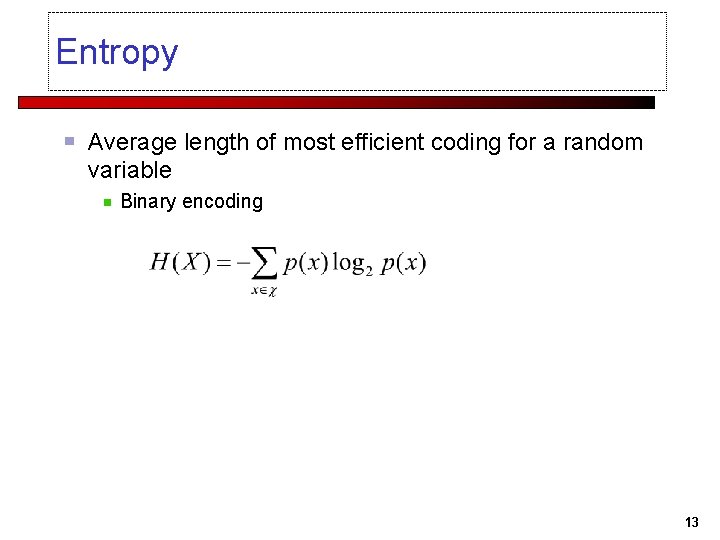

Entropy

Average length of the most efficient coding for a random variable (binary encoding).

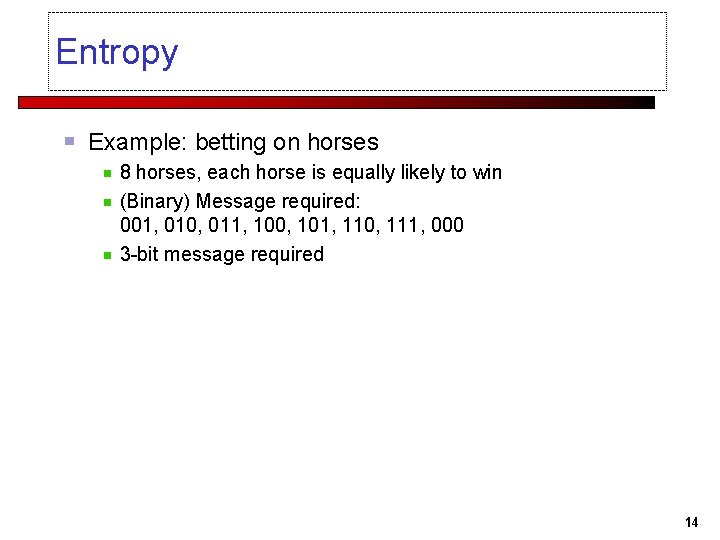

Entropy

Example: betting on horses. 8 horses, each equally likely to win. Binary messages required: 001, 010, 011, 100, 101, 110, 111, 000, i.e. a 3-bit message is required.

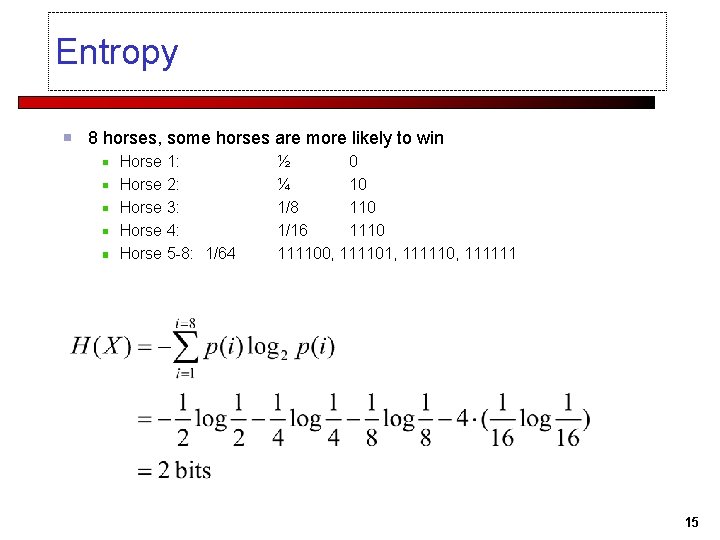

Entropy

8 horses, some more likely to win than others:

| Horse | Probability | Code |
|---|---|---|
| 1 | 1/2 | 0 |
| 2 | 1/4 | 10 |
| 3 | 1/8 | 110 |
| 4 | 1/16 | 1110 |
| 5–8 | 1/64 each | 111100, 111101, 111110, 111111 |

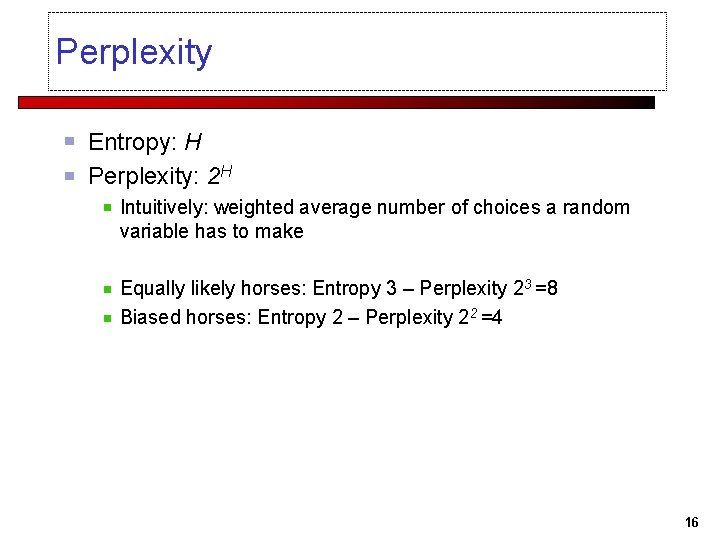
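The two horse races can be checked in Python: for each distribution, the entropy $H = -\sum_i p_i \log_2 p_i$ equals the average length of the binary code listed for it. The helper names are illustrative; the probabilities and codes are the ones from the two horse examples.

```python
import math


def entropy(probs):
    """Shannon entropy in bits: H = -sum p * log2(p), skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_code_length(probs, codes):
    """Average number of bits per message for a given prefix code."""
    return sum(p * len(code) for p, code in zip(probs, codes))


uniform = [1 / 8] * 8
uniform_codes = ["001", "010", "011", "100", "101", "110", "111", "000"]

biased = [1 / 2, 1 / 4, 1 / 8, 1 / 16, 1 / 64, 1 / 64, 1 / 64, 1 / 64]
biased_codes = ["0", "10", "110", "1110", "111100", "111101", "111110", "111111"]

print(entropy(uniform), expected_code_length(uniform, uniform_codes))  # 3.0 3.0
print(entropy(biased), expected_code_length(biased, biased_codes))     # 2.0 2.0
```

Giving shorter codes to likelier horses brings the average message length down from 3 bits to 2 bits, which matches the entropy exactly because every probability is a power of 1/2.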

Perplexity

Entropy: $H$. Perplexity: $2^H$. Intuitively: the weighted average number of choices a random variable has to make.

- Equally likely horses: entropy 3, perplexity $2^3 = 8$
- Biased horses: entropy 2, perplexity $2^2 = 4$

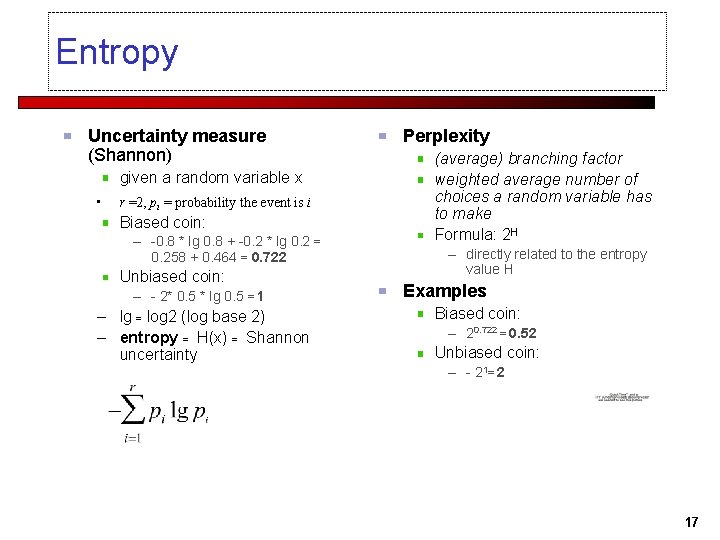
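Perplexity as an "effective number of choices" can be seen in a short Python sketch comparing the two 8-horse races with a hypothetical fair race between 4 horses. The function name is illustrative.

```python
import math


def perplexity(probs):
    """Perplexity 2**H, where H is the entropy in bits of the distribution."""
    h = -sum(p * math.log2(p) for p in probs if p > 0)
    return 2 ** h


fair_8 = [1 / 8] * 8
biased_8 = [1 / 2, 1 / 4, 1 / 8, 1 / 16, 1 / 64, 1 / 64, 1 / 64, 1 / 64]
fair_4 = [1 / 4] * 4

print(perplexity(fair_8))    # 8.0
print(perplexity(biased_8))  # 4.0
print(perplexity(fair_4))    # 4.0
```

The biased race still has 8 runners, but it is exactly as hard to predict as a fair race between 4 horses.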

Entropy

Uncertainty measure (Shannon): given a random variable $x$ with $r$ possible outcomes,

$$H(x) = -\sum_{i=1}^{r} p_i \lg p_i$$

where $p_i$ is the probability that the outcome is $i$, $\lg = \log_2$ (log base 2), and the entropy $H(x)$ is the Shannon uncertainty. For a coin, $r = 2$.

- Biased coin: $-0.8 \lg 0.8 - 0.2 \lg 0.2 = 0.258 + 0.464 = 0.722$
- Unbiased coin: $-2 \times 0.5 \lg 0.5 = 1$

Perplexity

The (average) branching factor: the weighted average number of choices a random variable has to make. Formula: $2^H$, directly related to the entropy value $H$.

- Biased coin: $2^{0.722} \approx 1.65$
- Unbiased coin: $2^1 = 2$

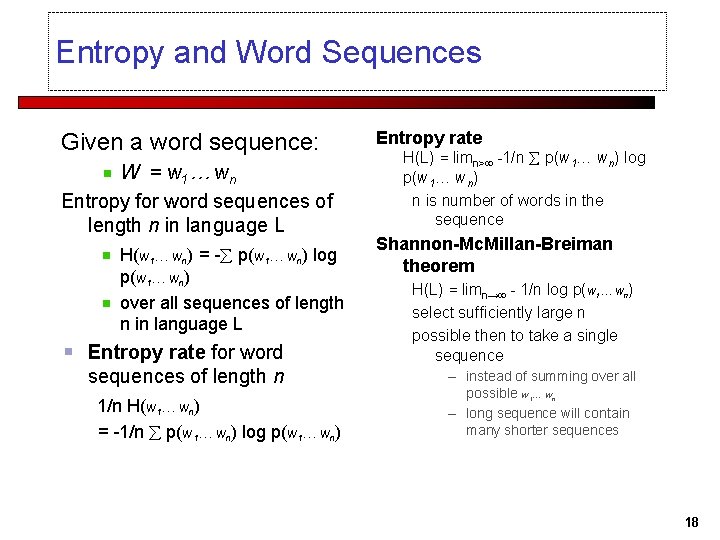
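The coin figures can be reproduced in a few lines of Python; the helper name is illustrative, and the output comments reflect the formatting used in the `print` call.

```python
import math


def entropy(probs):
    """Shannon entropy in bits (lg = log base 2)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


for name, probs in [("biased", [0.8, 0.2]), ("unbiased", [0.5, 0.5])]:
    h = entropy(probs)
    print(f"{name}: H = {h:.3f} bits, perplexity = {2 ** h:.2f}")
# biased: H = 0.722 bits, perplexity = 1.65
# unbiased: H = 1.000 bits, perplexity = 2.00
```

A perplexity of about 1.65 says the biased coin behaves like a choice between fewer than two equally likely outcomes, which is why it is easier to predict than the fair coin.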

Entropy and Word Sequences

Given a word sequence $W = w_1 \ldots w_n$:

- Entropy for word sequences of length $n$ in language $L$, summed over all sequences of length $n$ in $L$: $H(w_1 \ldots w_n) = -\sum p(w_1 \ldots w_n) \log p(w_1 \ldots w_n)$
- Entropy rate for word sequences of length $n$: $\frac{1}{n} H(w_1 \ldots w_n) = -\frac{1}{n} \sum p(w_1 \ldots w_n) \log p(w_1 \ldots w_n)$
- Entropy rate: $H(L) = \lim_{n \to \infty} -\frac{1}{n} \sum p(w_1 \ldots w_n) \log p(w_1 \ldots w_n)$, where $n$ is the number of words in the sequence

Shannon-McMillan-Breiman theorem: $H(L) = \lim_{n \to \infty} -\frac{1}{n} \log p(w_1 \ldots w_n)$. If we select a sufficiently large $n$, it is possible to take a single sequence instead of summing over all possible $w_1 \ldots w_n$, since a long sequence will contain many shorter sequences.

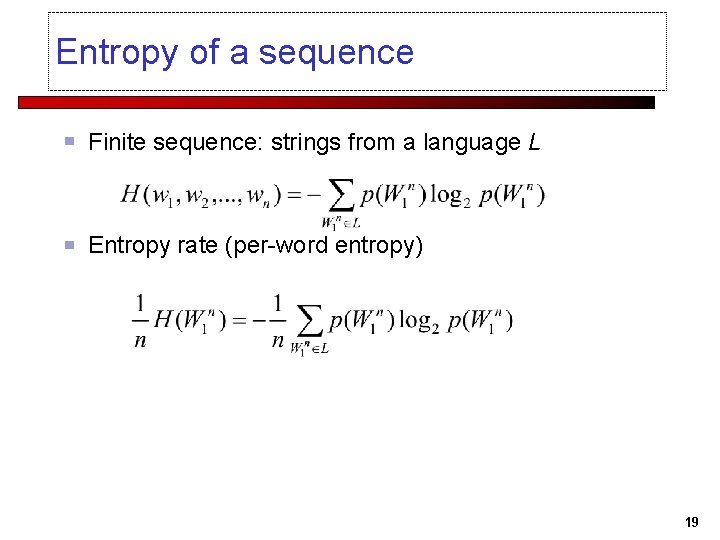
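Following the Shannon-McMillan-Breiman idea, the per-word entropy can be estimated from one long sequence as $-\frac{1}{n}\log_2 p(w_1 \ldots w_n)$, with the joint probability built by the chain rule. The sketch below assumes a hypothetical unigram model and a made-up repeated text; because the probabilities come from a model rather than the true distribution of the language, the number it produces is the model's estimate on that text, not the entropy of $L$ itself.

```python
import math


def per_word_entropy(sequence, prob):
    """Estimate -1/n * log2 p(w_1 ... w_n) from a single long sequence.

    `prob(history, word)` is any conditional model P(word | history); the
    joint probability is built with the chain rule by summing log2 terms.
    """
    log_p = 0.0
    for i, word in enumerate(sequence):
        log_p += math.log2(prob(sequence[:i], word))
    return -log_p / len(sequence)


# Hypothetical unigram model that ignores the history.
UNIGRAM = {"a": 0.5, "b": 0.25, "c": 0.25}


def unigram_model(history, word):
    return UNIGRAM[word]


text = list("aabcabacab" * 100)  # made-up sample sequence of 1000 words
h = per_word_entropy(text, unigram_model)
print(h, 2 ** h)  # per-word entropy in bits, and the corresponding perplexity
```

Each 10-word block contains five "a" (1 bit each) and five "b" or "c" (2 bits each), so the estimate is 1.5 bits per word and the perplexity is $2^{1.5} \approx 2.83$.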

Entropy of a sequence

Finite sequence: strings from a language $L$. Entropy rate (per-word entropy).

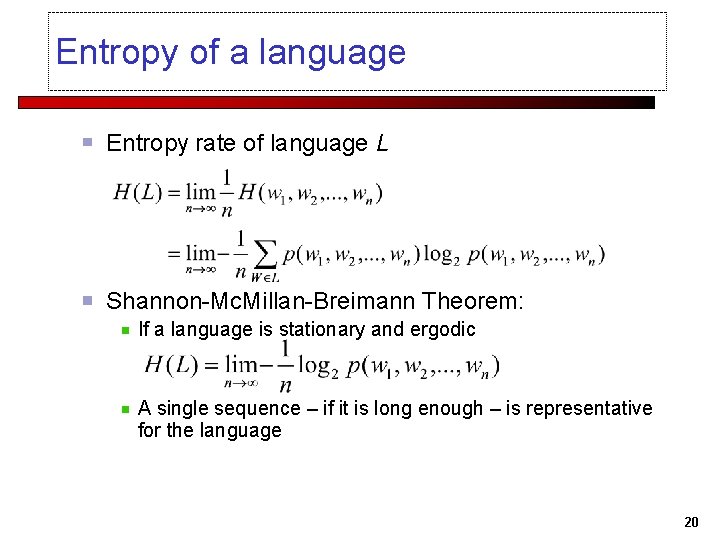

Entropy of a language

Entropy rate of language $L$. Shannon-McMillan-Breiman theorem: if a language is stationary and ergodic, a single sequence, if it is long enough, is representative of the language.