Advanced Programming in Parallel Environment CPU and system

- Slides: 26

Advanced Programming in Parallel Environment: CPU and system architectures

Jakub Yaghob

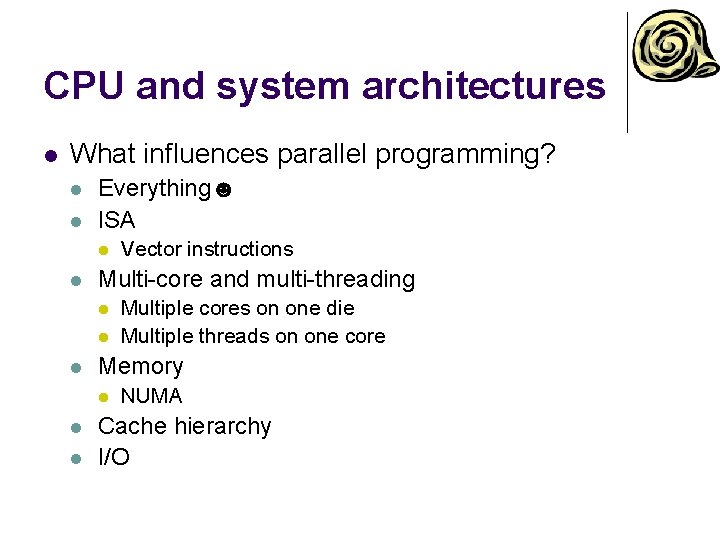

CPU and system architectures

- What influences parallel programming?
  - Everything ☻
  - ISA
    - Vector instructions
  - Multi-core and multi-threading
    - Multiple cores on one die
    - Multiple threads on one core
  - Memory
    - NUMA
    - Cache hierarchy
  - I/O

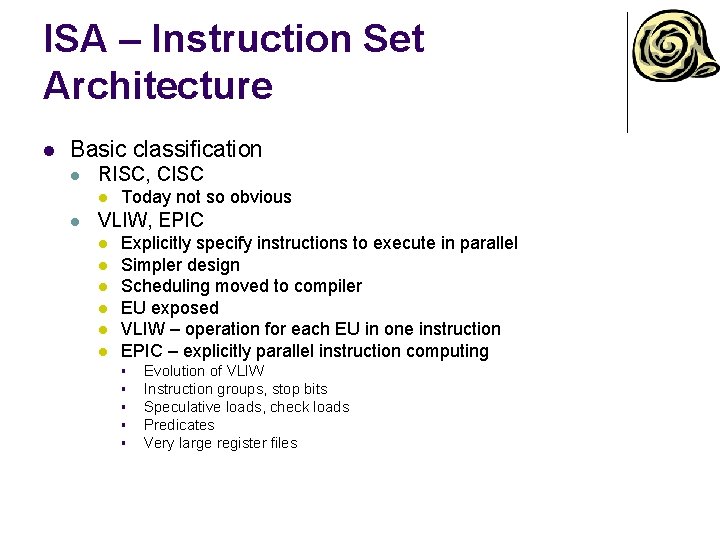

ISA – Instruction Set Architecture

- Basic classification
  - RISC, CISC
    - Today not so obvious
  - VLIW, EPIC
    - Explicitly specify instructions to execute in parallel
    - Simpler design
    - Scheduling moved to compiler
    - EU exposed
    - VLIW – operation for each EU in one instruction
    - EPIC – explicitly parallel instruction computing
      - Evolution of VLIW
      - Instruction groups, stop bits
      - Speculative loads, check loads
      - Predicates
      - Very large register files

ISA

- Factors influencing parallel programming
  - Register pressure
    - Register renaming
      - Intel Skylake has 180 entries in integer PRF
  - Load-Execute-Store
  - Vector instructions
  - Execution units

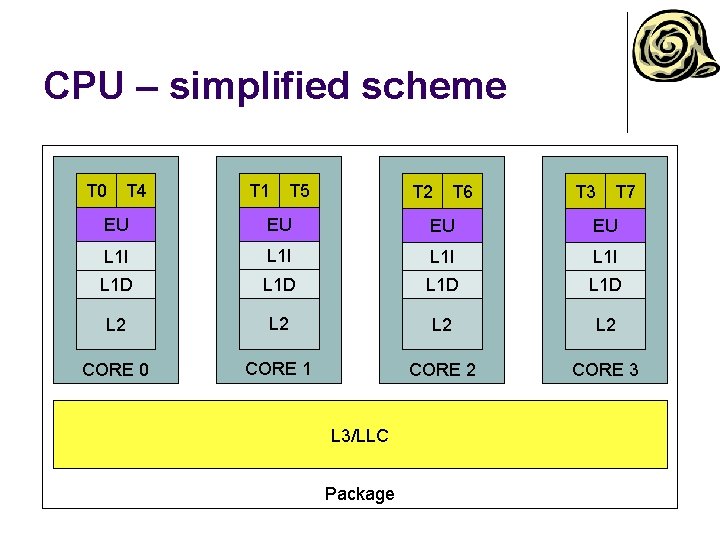

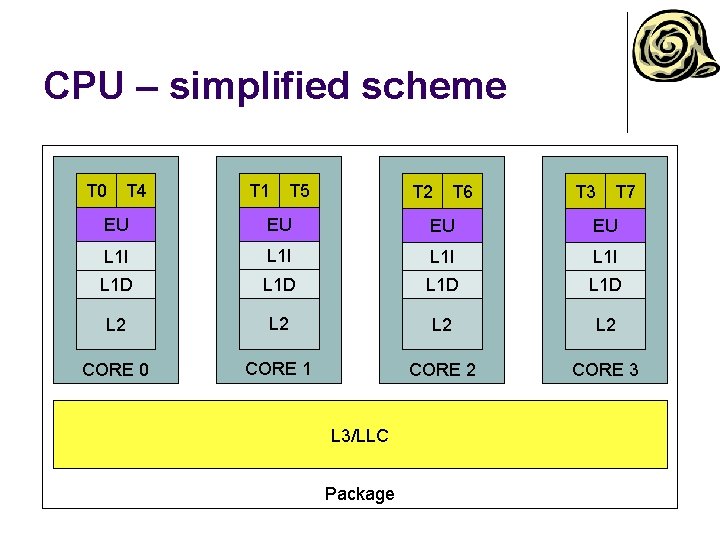

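CPU – simplified scheme

[Diagram: one package with four cores (CORE 0 to CORE 3); hardware threads T0 to T7, paired as T0/T4, T1/T5, T2/T6, T3/T7; execution units (EU), L1I, L1D and L2 caches inside the cores; a shared L3/LLC.]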

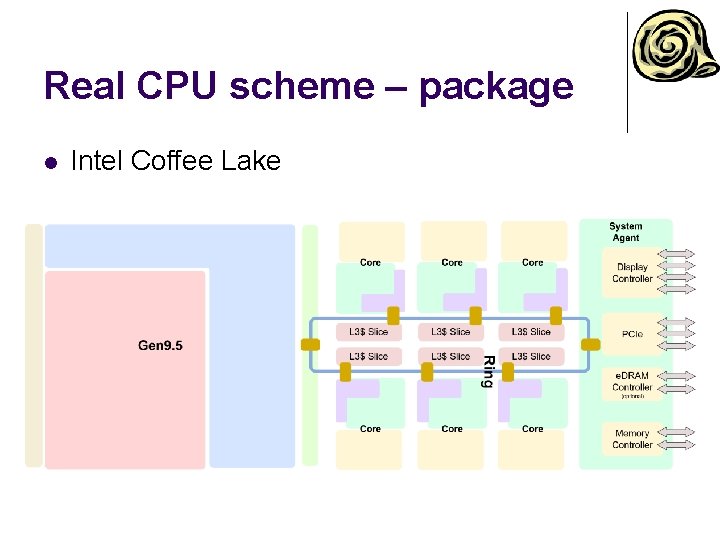

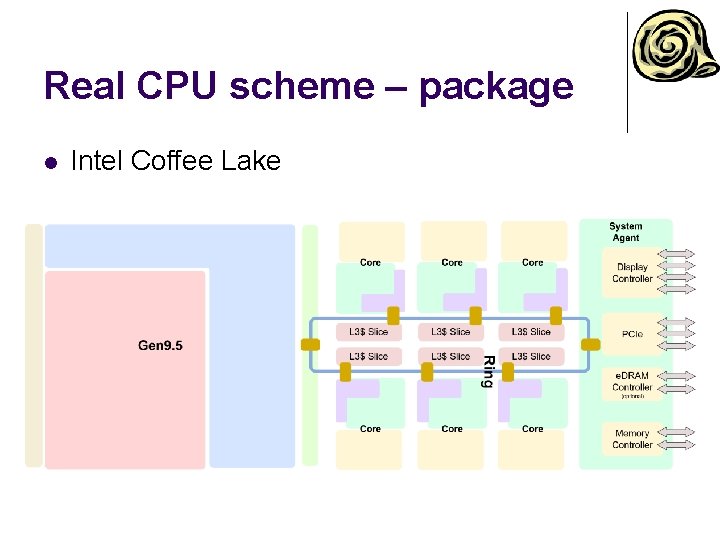

Real CPU scheme – package

- Intel Coffee Lake

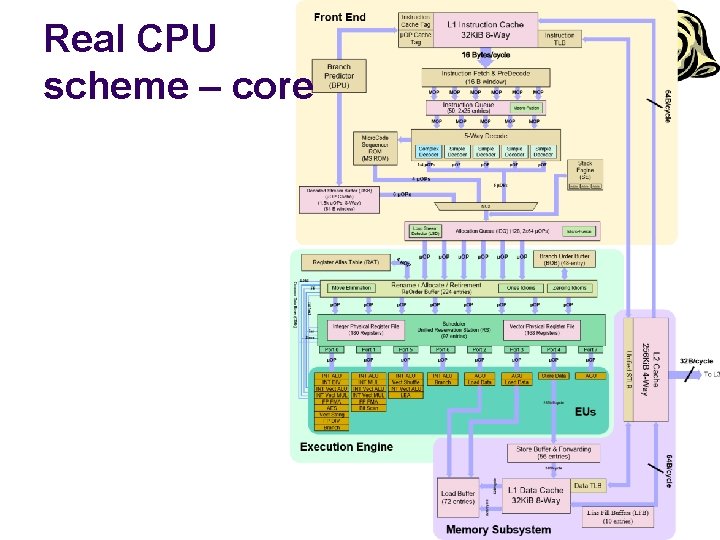

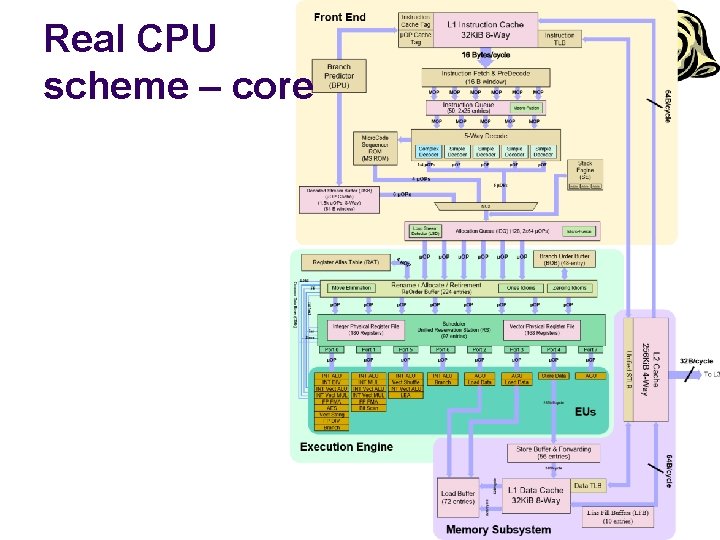

Real CPU scheme – core

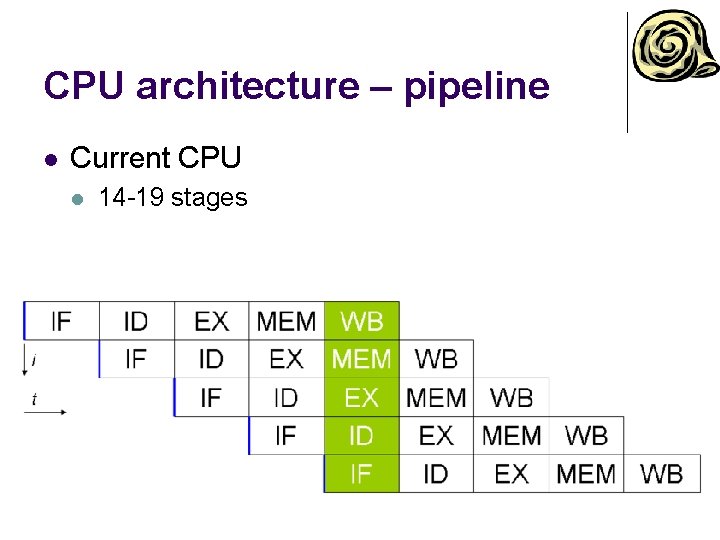

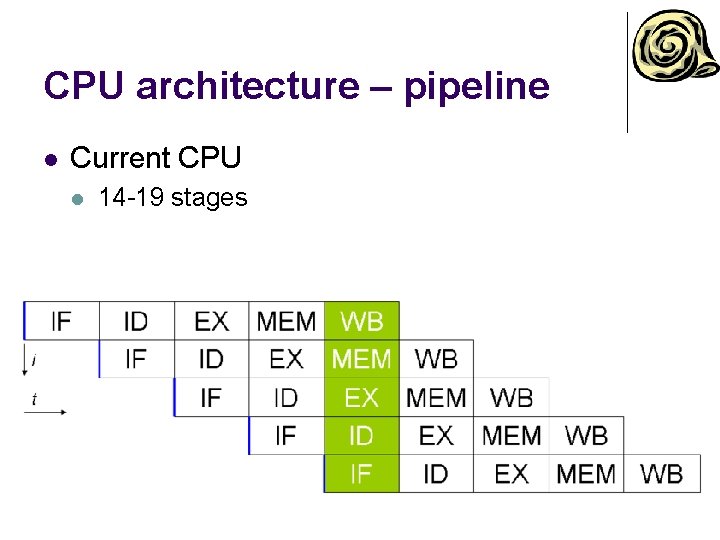

CPU architecture – pipeline

- Current CPU
  - 14–19 stages

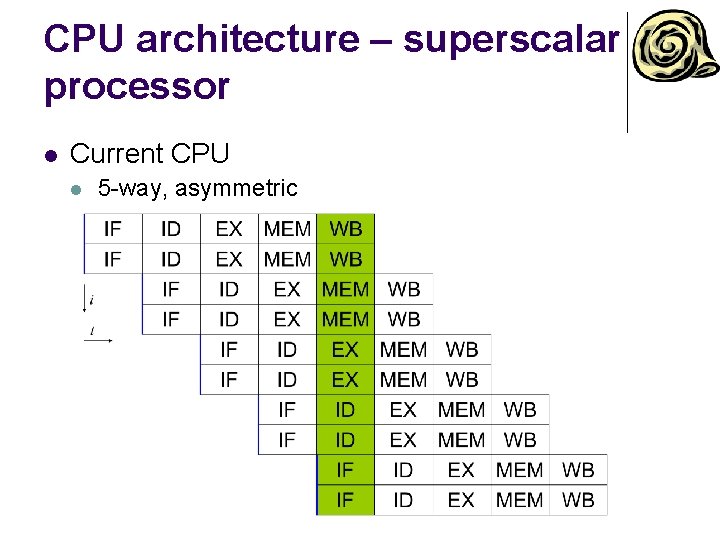

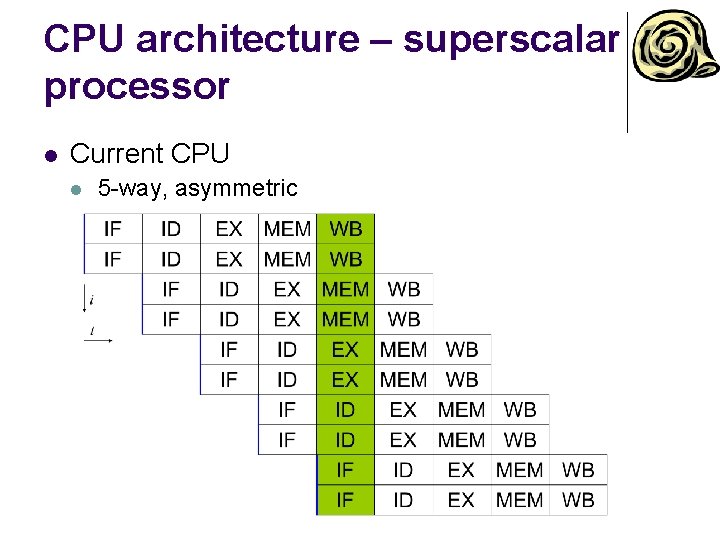

CPU architecture – superscalar processor

- Current CPU
  - 5-way, asymmetric
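A superscalar core can only issue several instructions per cycle when those instructions are independent. The following is a minimal sketch of that idea, not a benchmark: the function names are hypothetical, and an optimizing compiler may already perform a similar transformation on its own. The first function forms one long dependency chain; the second splits the work into four independent chains.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Single accumulator: every addition depends on the previous one,
// so the additions form one long dependency chain.
std::uint64_t sum_single(const std::vector<std::uint64_t>& v) {
    std::uint64_t s = 0;
    for (std::uint64_t x : v) s += x;
    return s;
}

// Four independent accumulators: the four chains do not depend on each
// other, so a superscalar out-of-order core may overlap their additions.
std::uint64_t sum_four_chains(const std::vector<std::uint64_t>& v) {
    std::uint64_t s0 = 0, s1 = 0, s2 = 0, s3 = 0;
    const std::size_t n = v.size();
    std::size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        s0 += v[i];
        s1 += v[i + 1];
        s2 += v[i + 2];
        s3 += v[i + 3];
    }
    for (; i < n; ++i) s0 += v[i];  // leftover elements
    return s0 + s1 + s2 + s3;
}
```

Unsigned integers are used so that both variants return exactly the same value; with floating-point data the reordering could change the rounding of the result.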

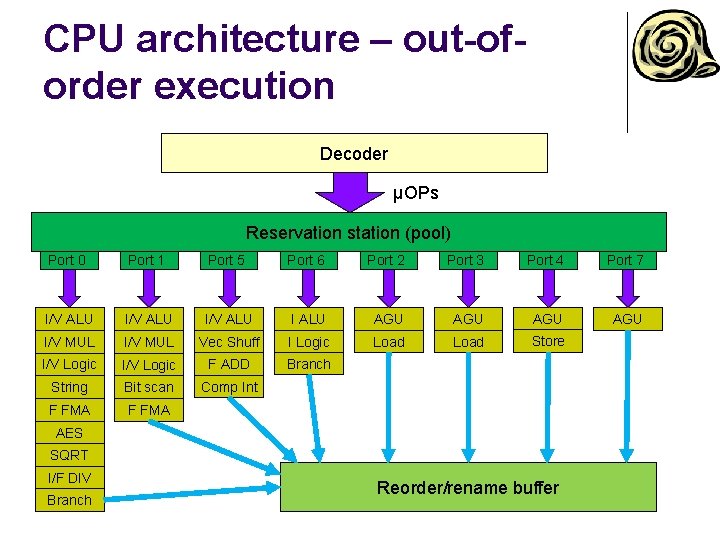

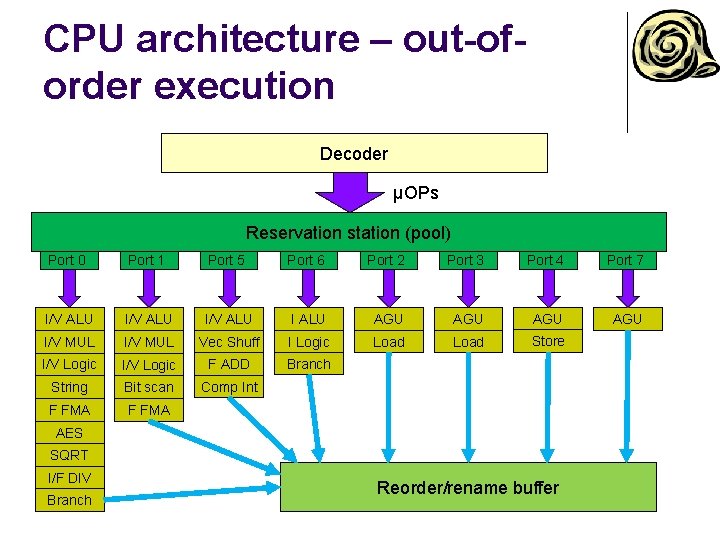

CPU architecture – out-of-order execution

[Diagram labels: Decoder; µOPs; Reservation station (pool); Ports 0, 1, 5, 6, 2, 3, 4, 7; execution units: I/V ALU, I ALU, AGU, I/V MUL, Vec Shuff, I Logic, Load, Store, I/V Logic, F ADD, Branch, String, Bit scan, Comp Int, F FMA, AES, SQRT, I/F DIV; Reorder/rename buffer.]

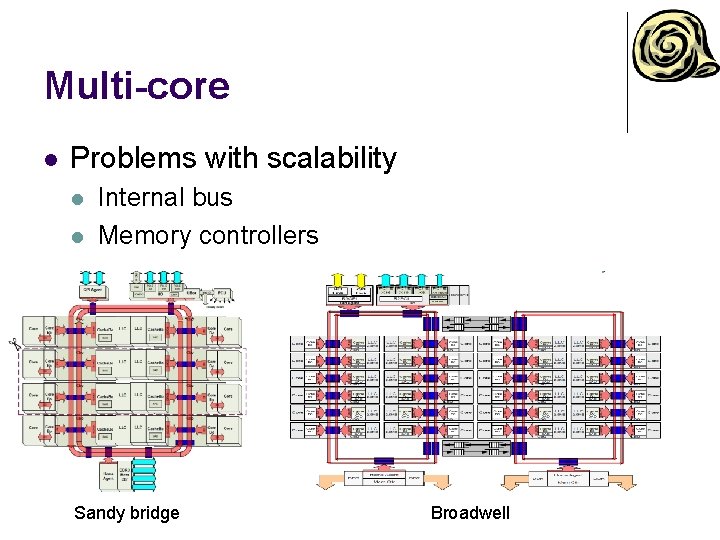

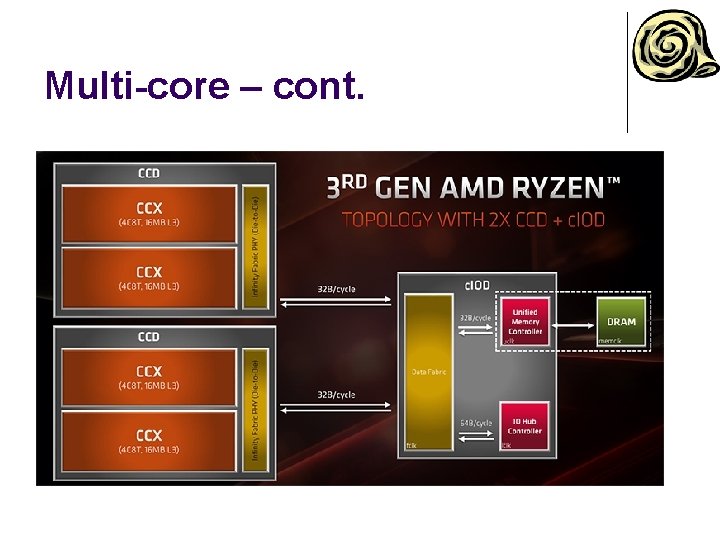

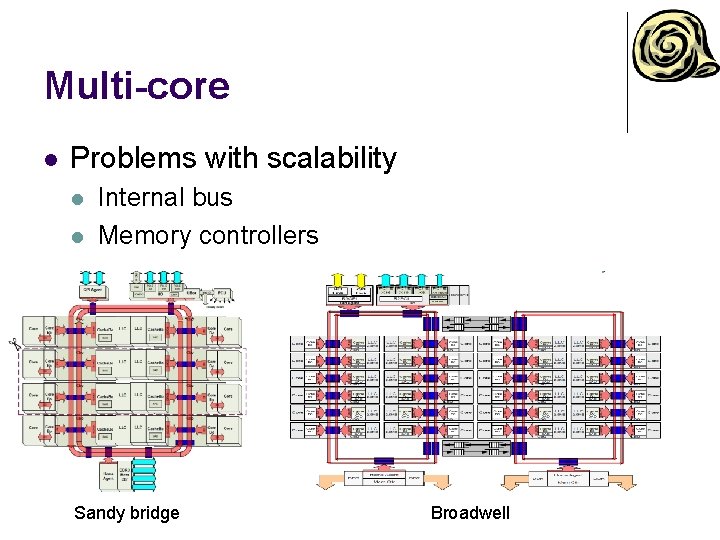

Multi-core

- Problems with scalability
  - Internal bus
  - Memory controllers

[Figures: Sandy Bridge, Broadwell]

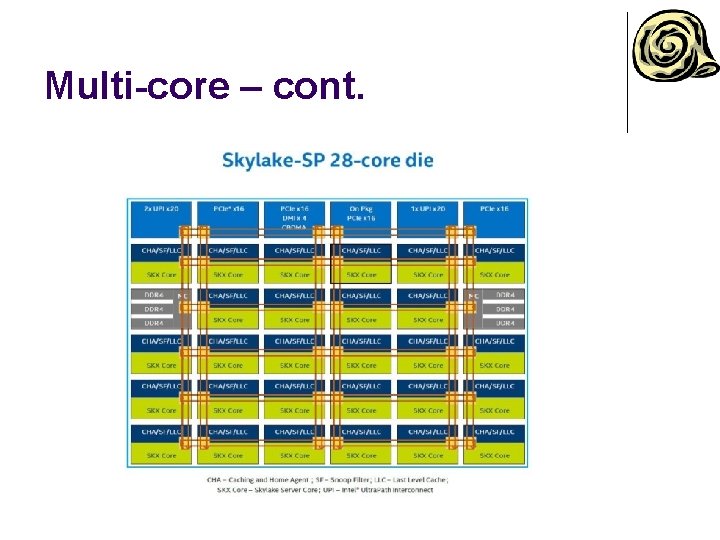

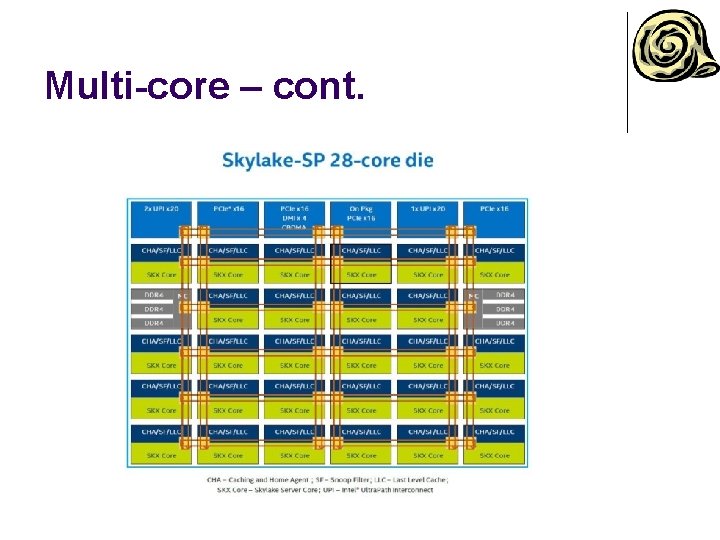

Multi-core – cont.

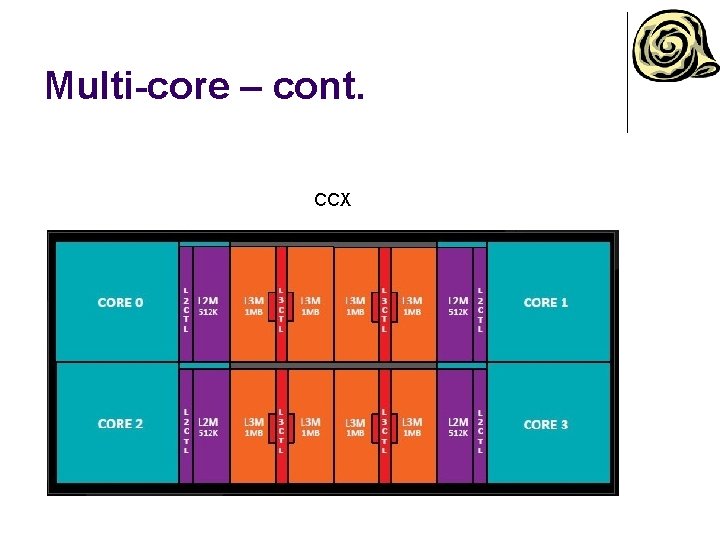

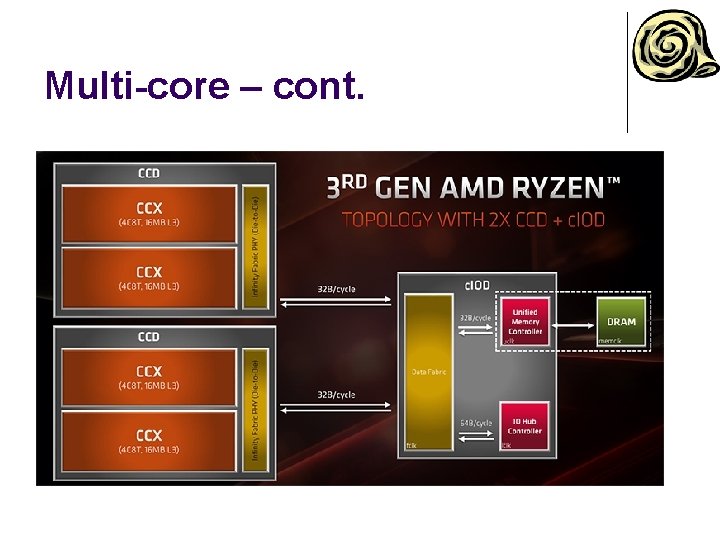

Multi-core – cont.

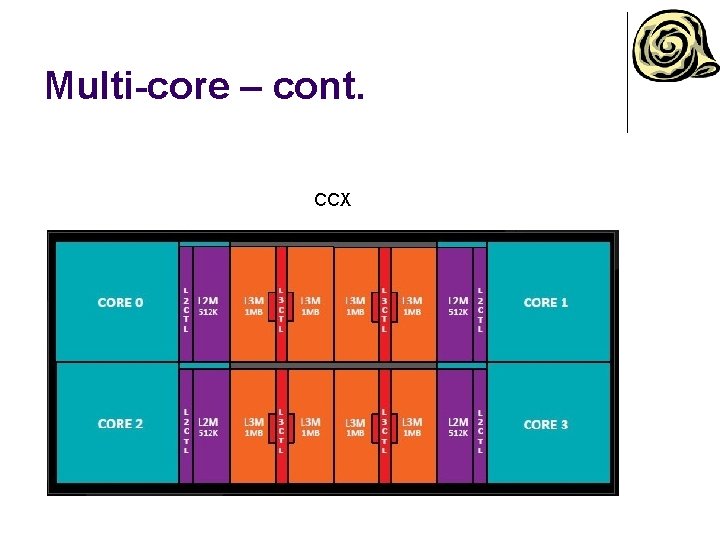

Multi-core – cont. CCX

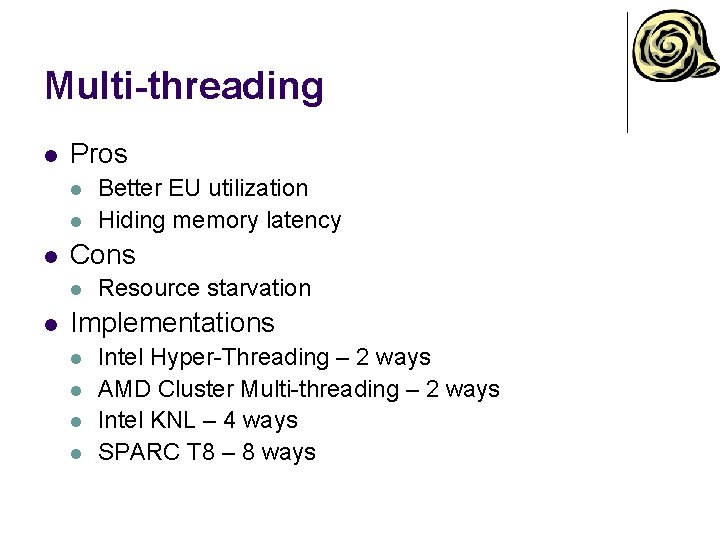

Multi-threading

- Pros
  - Better EU utilization
  - Hiding memory latency
- Cons
  - Resource starvation
- Implementations
  - Intel Hyper-Threading – 2 ways
  - AMD Cluster Multi-threading – 2 ways
  - Intel KNL – 4 ways
  - SPARC T8 – 8 ways
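A program usually sees hardware threads, not cores. As a small sketch (the helper name `worker_count` is hypothetical), the standard library reports the number of hardware threads, so on a CPU with 2-way multi-threading the value is typically twice the number of cores:

```cpp
#include <cassert>
#include <thread>

// Number of worker threads to start: the hardware thread count reported by
// the standard library, or 1 when the value is not available (reported as 0).
unsigned worker_count() {
    const unsigned hw = std::thread::hardware_concurrency();
    return hw == 0 ? 1u : hw;
}
```

Whether using all hardware threads pays off depends on the workload: threads sharing a core also share its execution units and caches.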

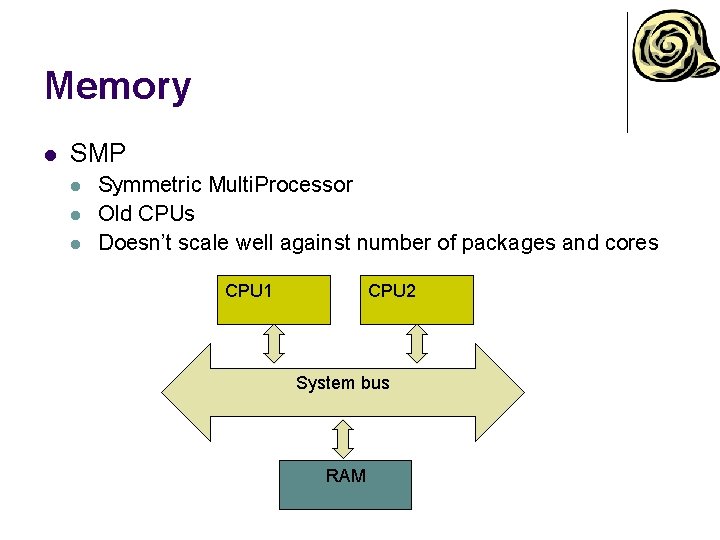

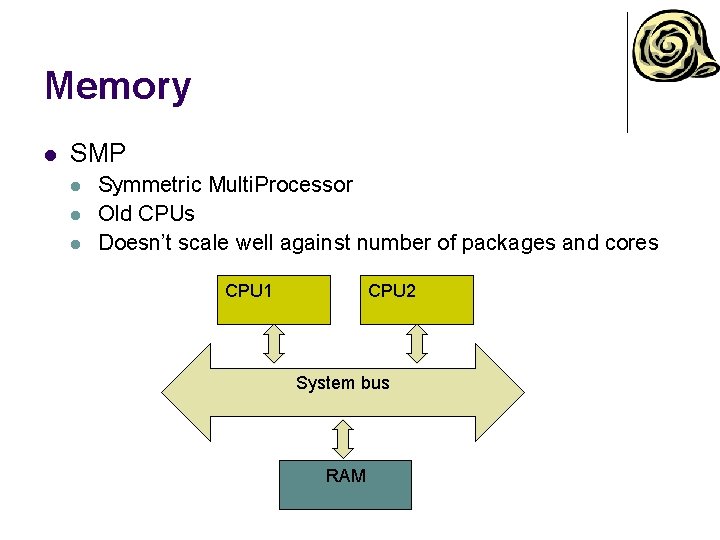

Memory

- SMP
  - Symmetric multiprocessor
  - Old CPUs
  - Doesn't scale well with the number of packages and cores

[Diagram labels: CPU 1, CPU 2, system bus, RAM]

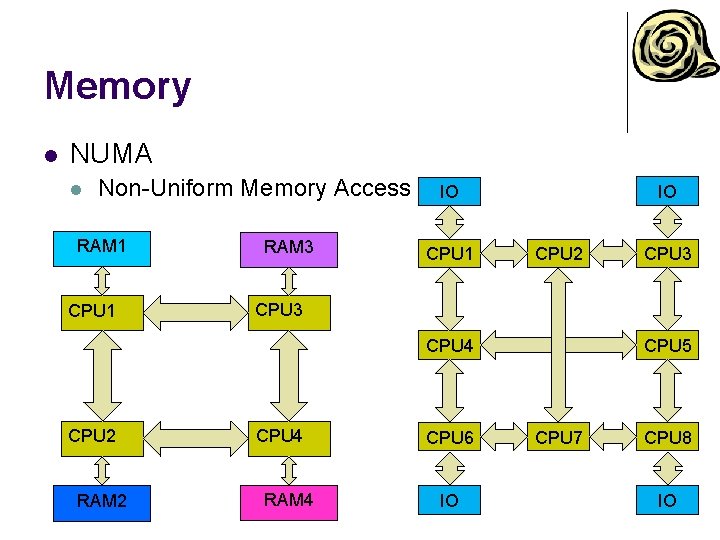

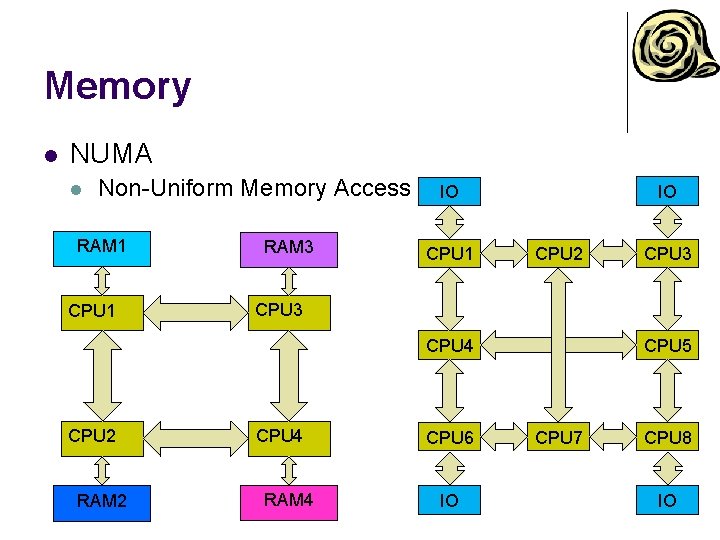

Memory

- NUMA
  - Non-Uniform Memory Access

[Diagram labels: CPU 1 to CPU 8, RAM 1 to RAM 4, IO]

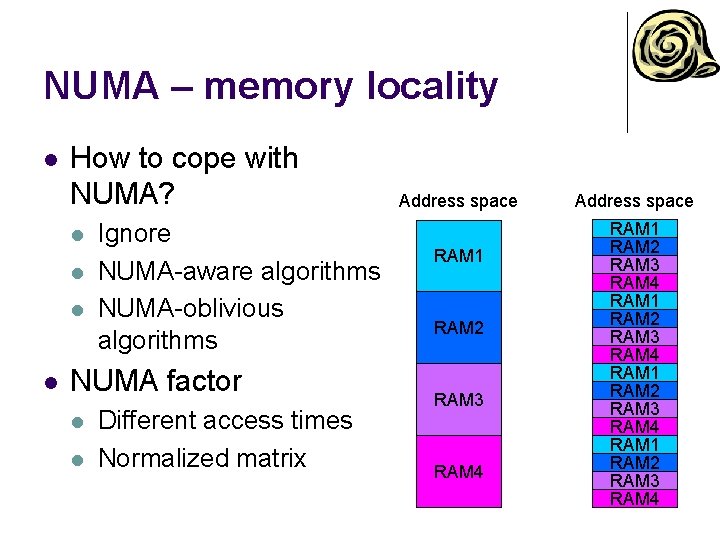

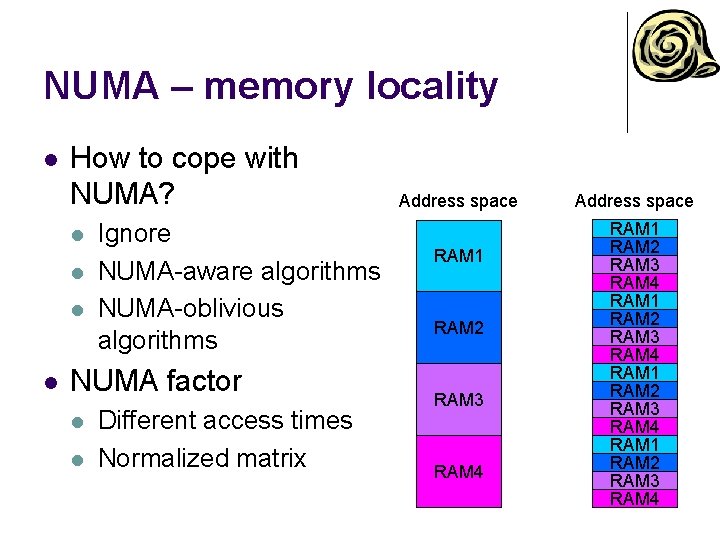

NUMA – memory locality

- How to cope with NUMA?
  - Ignore
  - NUMA-aware algorithms
  - NUMA-oblivious algorithms
- NUMA factor
  - Different access times
  - Normalized matrix

[Diagram labels: address space; RAM 1, RAM 2, RAM 3, RAM 4 (shown in two layouts)]
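The normalized matrix can be sketched as follows, assuming a measured matrix of access times in which row `from` holds the times seen by node `from` and the diagonal holds local accesses. The node count, the names and all latency values used with it are hypothetical, chosen only for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

constexpr std::size_t kNodes = 4;  // assumption: four NUMA nodes
using Matrix = std::array<std::array<double, kNodes>, kNodes>;

// Divide every entry by the local access time of the accessing node
// (the diagonal entry of its row), so that local access becomes 1.0.
Matrix normalize(const Matrix& latency_ns) {
    Matrix factor{};
    for (std::size_t from = 0; from < kNodes; ++from) {
        const double local = latency_ns[from][from];
        assert(local > 0.0);
        for (std::size_t to = 0; to < kNodes; ++to)
            factor[from][to] = latency_ns[from][to] / local;
    }
    return factor;
}
```

With a made-up local time of 100 ns and a remote time of 150 ns, the corresponding entry of the normalized matrix is 1.5, which is the NUMA factor for that pair of nodes.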

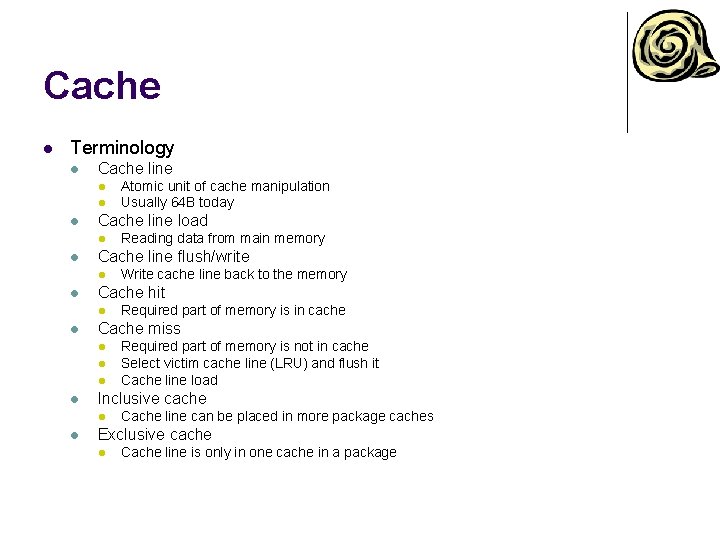

Cache

- Terminology
  - Cache line
    - Atomic unit of cache manipulation
    - Usually 64 B today
  - Cache line load
    - Reading data from main memory
  - Cache line flush/write
    - Write cache line back to the memory
  - Cache hit
    - Required part of memory is in cache
  - Cache miss
    - Required part of memory is not in cache
    - Select victim cache line (LRU) and flush it
    - Cache line load
  - Inclusive cache
    - Cache line can be placed in more package caches
  - Exclusive cache
    - Cache line is only in one cache in a package
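The hit and miss handling described above can be modelled in a few lines. This is only a sketch under simplifying assumptions: the class name `LruCacheModel` is hypothetical, the cache is fully associative (real caches are usually set-associative), and only addresses are tracked, not data or dirty state.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <list>
#include <unordered_map>

class LruCacheModel {
public:
    LruCacheModel(std::size_t line_size, std::size_t line_count)
        : line_size_(line_size), line_count_(line_count) {
        assert(line_size > 0 && line_count > 0);
    }

    // Returns true on a cache hit, false on a cache miss.
    bool access(std::uint64_t address) {
        const std::uint64_t line = address / line_size_;
        auto it = where_.find(line);
        if (it != where_.end()) {
            // Hit: move the line to the most-recently-used position.
            lru_.splice(lru_.begin(), lru_, it->second);
            ++hits_;
            return true;
        }
        // Miss: if the cache is full, evict the least recently used line...
        if (lru_.size() == line_count_) {
            where_.erase(lru_.back());
            lru_.pop_back();
        }
        // ...then "load" the requested line.
        lru_.push_front(line);
        where_[line] = lru_.begin();
        ++misses_;
        return false;
    }

    std::size_t hits() const { return hits_; }
    std::size_t misses() const { return misses_; }

private:
    std::size_t line_size_;
    std::size_t line_count_;
    std::list<std::uint64_t> lru_;  // front = most recently used
    std::unordered_map<std::uint64_t, std::list<std::uint64_t>::iterator> where_;
    std::size_t hits_ = 0;
    std::size_t misses_ = 0;
};
```

For example, with 64-byte lines, addresses 0 and 8 fall into the same cache line, so the second access is a hit even though that address was never touched before.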

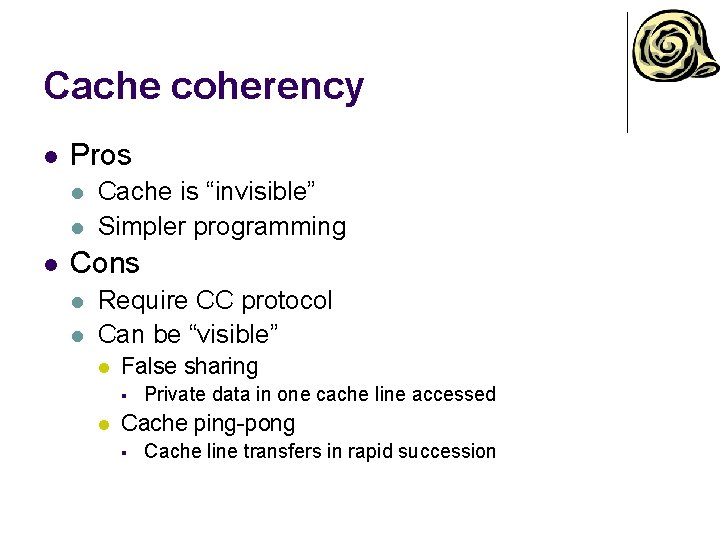

Cache coherency

- Pros
  - Cache is “invisible”
  - Simpler programming
- Cons
  - Requires a CC protocol
  - Can be “visible”
    - False sharing
      - Private data of different threads accessed in one cache line
    - Cache ping-pong
      - Cache line transfers in rapid succession
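One common way to avoid false sharing is to give each thread's private data its own cache line. The sketch below assumes 64-byte cache lines and C++17 (for over-aligned allocation in `std::vector`); the names `PaddedCounter` and `count_in_parallel` are hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

constexpr std::size_t kCacheLine = 64;  // assumption: 64-byte cache lines

// Each counter occupies its own cache line, so threads updating different
// counters do not invalidate each other's lines.
struct alignas(kCacheLine) PaddedCounter {
    std::atomic<unsigned long> value{0};
};

static_assert(sizeof(PaddedCounter) == kCacheLine);
static_assert(alignof(PaddedCounter) == kCacheLine);

// Starts `threads` threads; thread t increments only counters[t].
unsigned long count_in_parallel(unsigned threads, unsigned long per_thread) {
    std::vector<PaddedCounter> counters(threads);
    std::vector<std::thread> pool;
    for (unsigned t = 0; t < threads; ++t)
        pool.emplace_back([&counters, t, per_thread] {
            for (unsigned long i = 0; i < per_thread; ++i)
                counters[t].value.fetch_add(1, std::memory_order_relaxed);
        });
    for (auto& th : pool) th.join();
    unsigned long total = 0;
    for (const auto& c : counters) total += c.value.load();
    return total;
}
```

Removing `alignas(kCacheLine)` keeps the result correct but packs several counters into one cache line, which is the situation in which cache ping-pong can appear.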

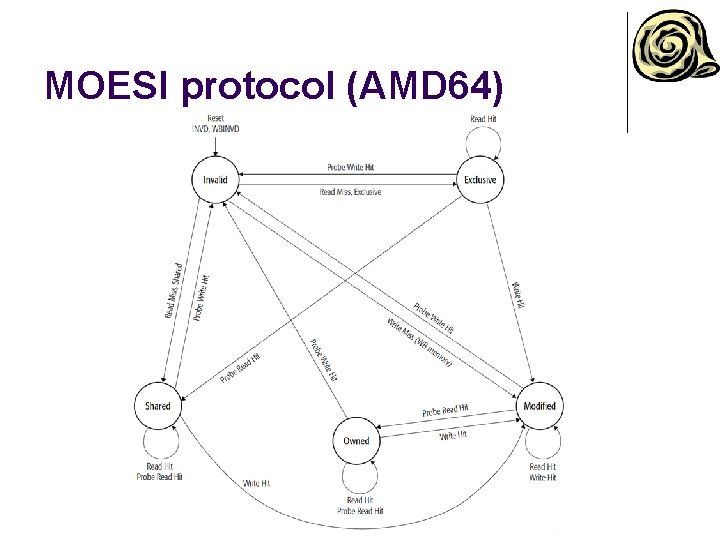

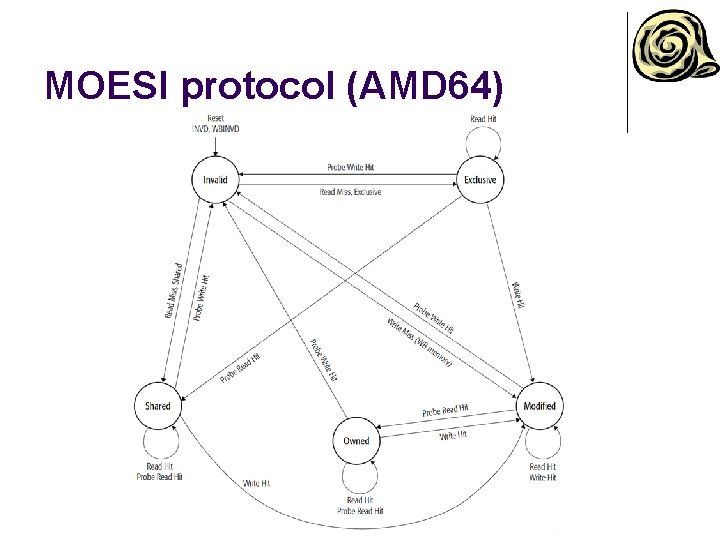

Cache coherency protocol

- CC protocol
  - “Invisible”
  - Each cache line has a state
  - Finite automaton with state transitions depending on different events
- Many implementations
  - MESI, MOESI, MERSI, Firefly, Dragon, …
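The finite automaton can be illustrated with MESI, the simplest of the listed protocols. This is a textbook-style sketch, not the behavior of any particular CPU: it tracks only the state of one line in one cache and ignores bus transactions and write-backs. The event names are hypothetical.

```cpp
#include <cassert>

enum class State { Modified, Exclusive, Shared, Invalid };
enum class Event { LocalRead, LocalWrite, RemoteRead, RemoteWrite };

// Next state of one cache line in one cache.
// `others_have_copy` matters only for a local read of an Invalid line.
State next_state(State s, Event e, bool others_have_copy) {
    switch (e) {
    case Event::LocalRead:
        if (s == State::Invalid)
            return others_have_copy ? State::Shared : State::Exclusive;
        return s;  // M, E and S already satisfy reads
    case Event::LocalWrite:
        return State::Modified;  // other copies, if any, get invalidated
    case Event::RemoteRead:
        // A valid line becomes shared; an invalid line stays invalid.
        return s == State::Invalid ? State::Invalid : State::Shared;
    case Event::RemoteWrite:
        return State::Invalid;
    }
    return s;  // unreachable; keeps compilers quiet
}
```

MOESI differs from this sketch mainly by adding an Owned state, which lets a cache share a modified line without first writing it back to memory.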

MOESI protocol (AMD64)

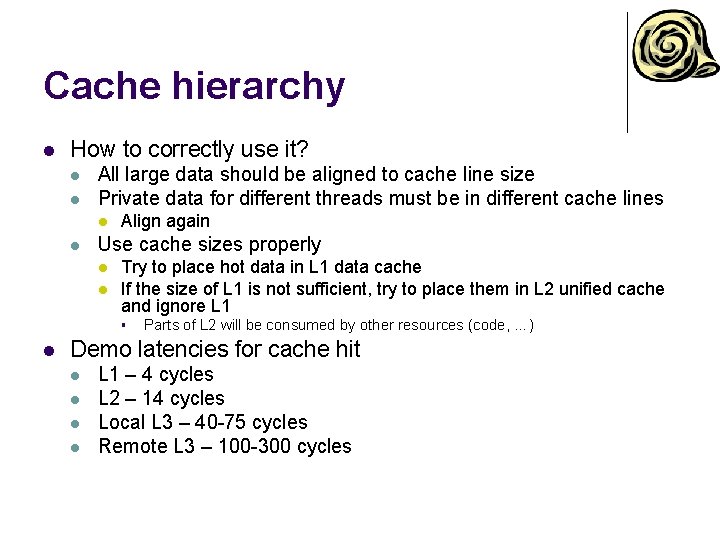

Cache hierarchy

- How to correctly use it?
  - All large data should be aligned to cache line size
  - Private data for different threads must be in different cache lines
    - Align again
  - Use cache sizes properly
    - Try to place hot data in L1 data cache
    - If the size of L1 is not sufficient, try to place them in L2 unified cache and ignore L1
      - Parts of L2 will be consumed by other resources (code, …)
- Demo latencies for cache hit
  - L1 – 4 cycles
  - L2 – 14 cycles
  - Local L3 – 40–75 cycles
  - Remote L3 – 100–300 cycles
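Keeping hot data in L1 often means processing a large structure in blocks that fit the cache. The following sketch shows a blocked matrix transpose; the function name and the default tile size are hypothetical, and the tile size assumes a 32 KiB L1 data cache (two 32 × 32 tiles of `double` take 16 KiB).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Transposes a row-major n x n matrix tile by tile. `tile` is a tuning
// parameter: two tile x tile blocks of doubles should fit in the L1 data
// cache (the default of 32 is an assumption, not a measured optimum).
std::vector<double> transpose_blocked(const std::vector<double>& a,
                                      std::size_t n, std::size_t tile = 32) {
    assert(a.size() == n * n && tile > 0);
    std::vector<double> out(n * n);
    for (std::size_t ii = 0; ii < n; ii += tile)
        for (std::size_t jj = 0; jj < n; jj += tile) {
            const std::size_t i_end = std::min(ii + tile, n);
            const std::size_t j_end = std::min(jj + tile, n);
            // Both the source tile and the destination tile stay hot
            // while this pair of inner loops runs.
            for (std::size_t i = ii; i < i_end; ++i)
                for (std::size_t j = jj; j < j_end; ++j)
                    out[j * n + i] = a[i * n + j];
        }
    return out;
}
```

The best tile size depends on the actual cache sizes and should be measured on the target machine.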

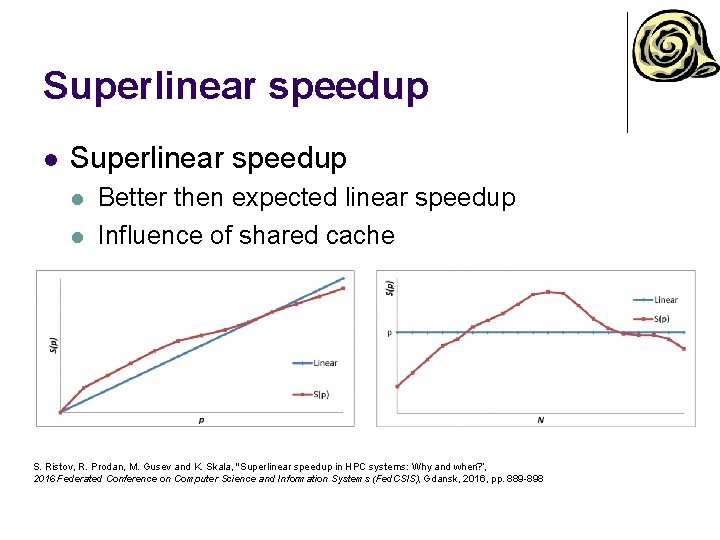

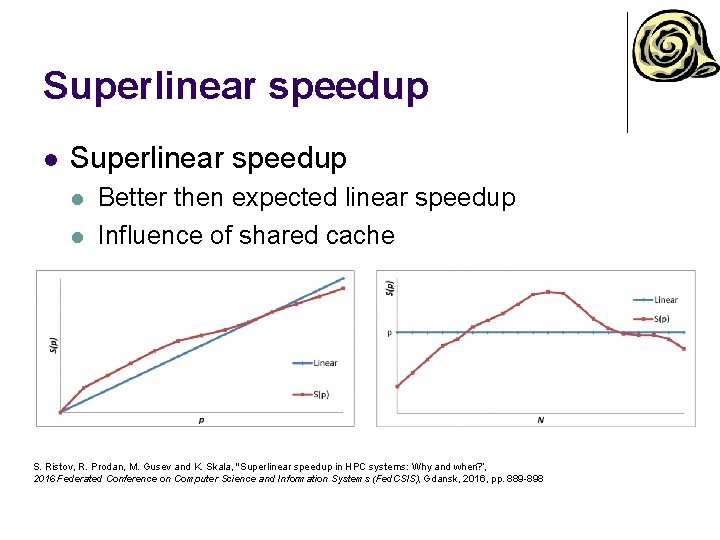

Superlinear speedup

- Better than expected linear speedup
- Influence of shared cache

S. Ristov, R. Prodan, M. Gusev and K. Skala, "Superlinear speedup in HPC systems: Why and when?", 2016 Federated Conference on Computer Science and Information Systems (FedCSIS), Gdansk, 2016, pp. 889–898

I/O

- I/O as a bottleneck
  - Program speedup measured for the whole program execution time, including reading and writing data
  - Good algorithm parallelization is not enough
  - Amdahl’s law lurks in the darkness
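Amdahl's law makes the I/O bottleneck concrete. If a fraction p of the run time can be parallelized and the rest (for example, reading and writing data) stays serial, the speedup on n workers is 1 / ((1 − p) + p / n). A small sketch, with a hypothetical function name:

```cpp
#include <cassert>

// Amdahl's law: speedup on n workers when a fraction p of the original
// run time is parallelizable and the rest (1 - p) stays serial.
double amdahl_speedup(double p, double n) {
    assert(p >= 0.0 && p <= 1.0 && n >= 1.0);
    return 1.0 / ((1.0 - p) + p / n);
}
```

For instance, if serial I/O takes 20 % of the run time (p = 0.8), the speedup stays below 5 no matter how many cores are used.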

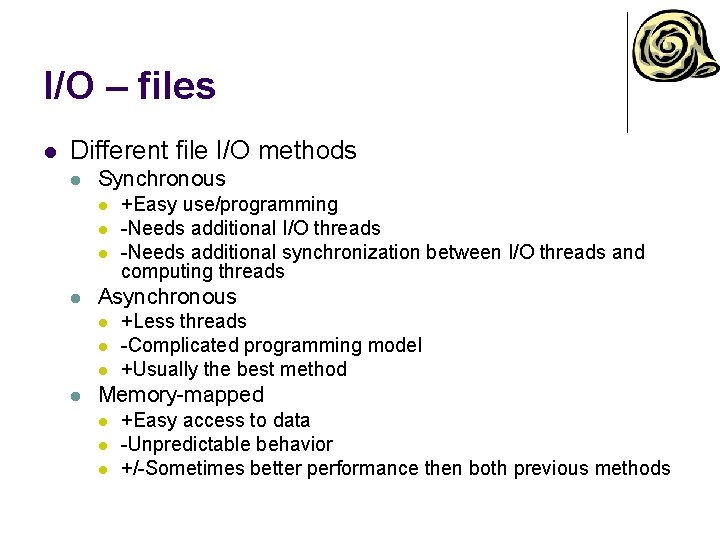

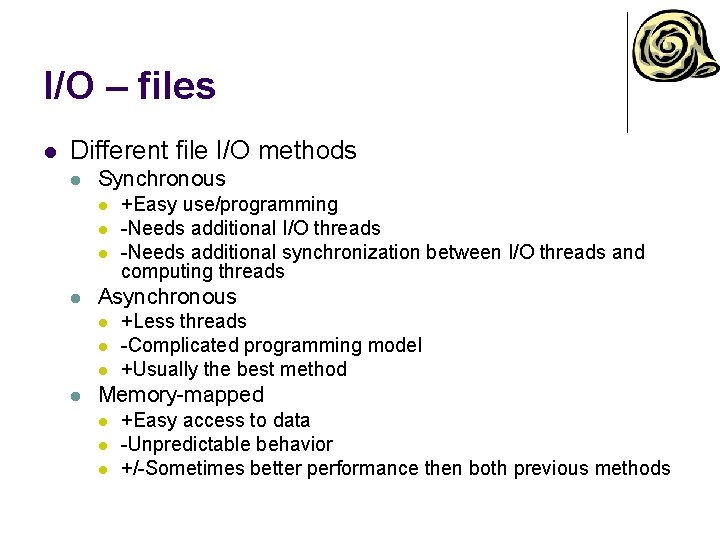

I/O – files

- Different file I/O methods
  - Synchronous
    - + Easy use/programming
    - − Needs additional I/O threads
    - − Needs additional synchronization between I/O threads and computing threads
  - Asynchronous
    - + Fewer threads
    - − Complicated programming model
    - + Usually the best method
  - Memory-mapped
    - + Easy access to data
    - − Unpredictable behavior
    - +/− Sometimes better performance than both previous methods
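The synchronous variant with an additional I/O thread can be sketched with standard C++ only. The function names are hypothetical, and the example reads a whole file at once, which is reasonable only for files that fit in memory. The blocking read runs on an extra thread, and the `std::future` is the synchronization point between the I/O thread and the computing thread.

```cpp
#include <cassert>
#include <fstream>
#include <future>
#include <iterator>
#include <string>
#include <utility>
#include <vector>

// Plain synchronous read of a whole file (empty result if it cannot be opened).
std::vector<char> read_file(const std::string& path) {
    std::ifstream in(path, std::ios::binary);
    return std::vector<char>(std::istreambuf_iterator<char>(in),
                             std::istreambuf_iterator<char>());
}

// Runs the blocking read on an extra thread; the caller keeps computing
// and synchronizes with the I/O thread only when it calls get().
std::future<std::vector<char>> read_file_in_background(std::string path) {
    return std::async(std::launch::async, read_file, std::move(path));
}
```

A caller would start `read_file_in_background(...)`, continue with computation that does not need the data, and call `get()` on the returned future once the data is required. True asynchronous I/O and memory-mapped files rely on operating-system interfaces and are not shown here.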