Advanced Operating Systems: Course Reading Material

Course Reading Material
- Lecture notes and class discussions will be the material for examination purposes.
- Reading the appropriate reference papers for the different algorithms is recommended.
- Reference text (may be out of print): “Advanced Concepts in Operating Systems: Distributed, Database, & Multiprocessor Operating Systems” by Mukesh Singhal and Niranjan G. Shivaratri, McGraw-Hill.
- Other books discussing the algorithms will help too.

B. Prabhakaran

Project Reference Text
- “Unix Network Programming”, Richard Stevens, Prentice Hall.

Contact Information
- B. Prabhakaran, Department of Computer Science, University of Texas at Dallas
- Mail Station EC 31, PO Box 830688, Richardson, TX 75083
- Email: praba@utdallas.edu
- Fax: 972 883 2349
- URL: http://www.utdallas.edu/~praba/cs6378.html
- Phone: 972 883 4680
- Office: ES 3.706
- Office Hours: 11:45 am-12:30 pm, Mondays & Wednesdays. Other times by appointment through email.
- Announcements: made in class and on the course web page.
- TA: TBA.

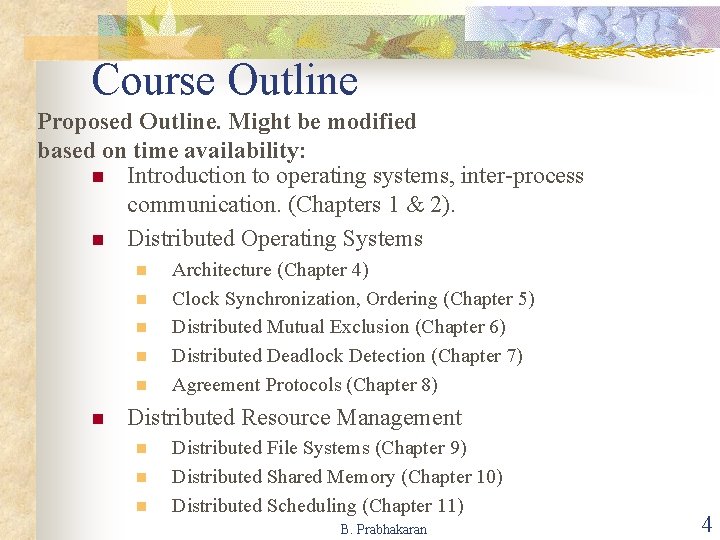

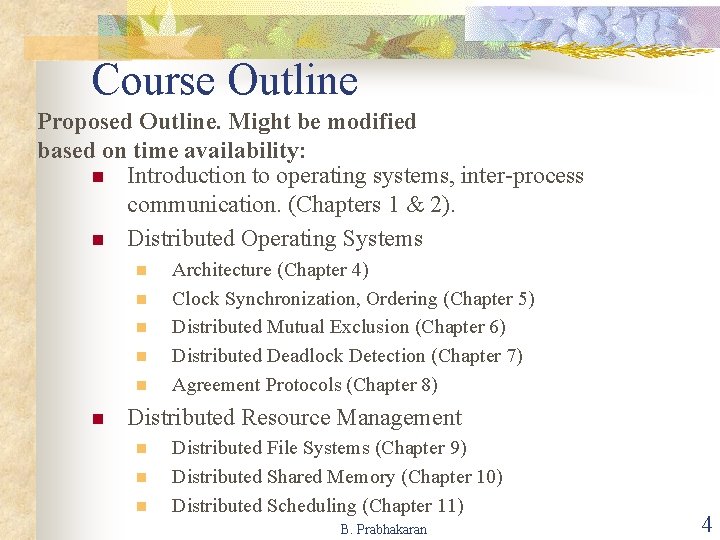

Course Outline
Proposed outline; might be modified based on time availability:
- Introduction to operating systems, inter-process communication (Chapters 1 & 2).
- Distributed Operating Systems
  - Architecture (Chapter 4)
  - Clock Synchronization, Ordering (Chapter 5)
  - Distributed Mutual Exclusion (Chapter 6)
  - Distributed Deadlock Detection (Chapter 7)
  - Agreement Protocols (Chapter 8)
- Distributed Resource Management
  - Distributed File Systems (Chapter 9)
  - Distributed Shared Memory (Chapter 10)
  - Distributed Scheduling (Chapter 11)

Course Outline (continued)
- Recovery & Fault Tolerance
  - Chapters 12 and 13
- Concurrency Control/Security
  - Depending on time availability

Discussions will generally follow the main text. However, additional or modified topics might be introduced from other texts and/or papers. References to those materials will be given at the appropriate time.

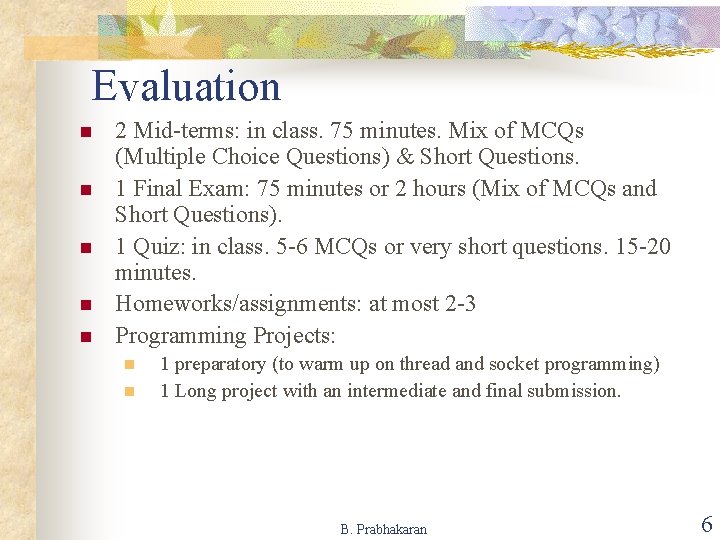

Evaluation
- 2 mid-terms: in class, 75 minutes. Mix of MCQs (Multiple Choice Questions) and short questions.
- 1 final exam: 75 minutes or 2 hours (mix of MCQs and short questions).
- 1 quiz: in class. 5-6 MCQs or very short questions. 15-20 minutes.
- Homeworks/assignments: at most 2-3.
- Programming projects:
  - 1 preparatory project (to warm up on thread and socket programming)
  - 1 long project with an intermediate and a final submission

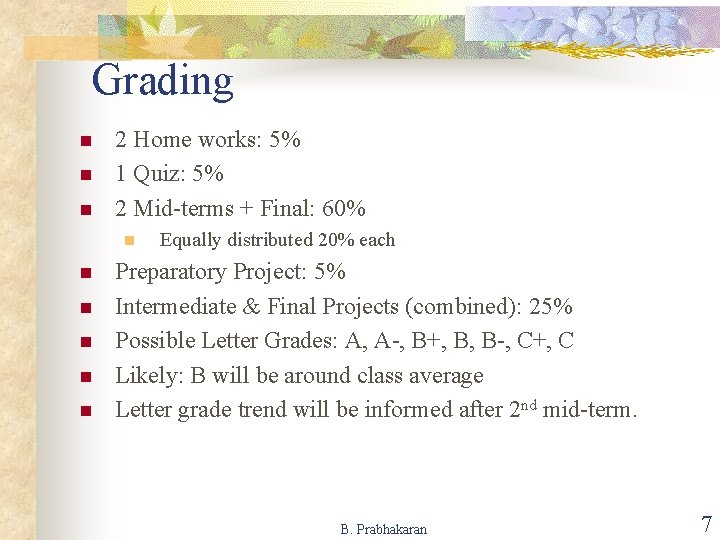

Grading
- 2 homeworks: 5%
- 1 quiz: 5%
- 2 mid-terms + final: 60%
  - Equally distributed, 20% each
- Preparatory project: 5%
- Intermediate & final projects (combined): 25%
- Possible letter grades: A, A-, B+, B, B-, C+, C
- Likely: B will be around the class average.
- The letter grade trend will be announced after the 2nd mid-term.

Schedule
- Quiz: September 21, 2009
- 1st Mid-term: September 30, 2009
- 2nd Mid-term: November 9, 2009
- Final Exam: December 11, 2009 (as per UTD schedule)
- Subject to minor changes.
- Homework schedules will be announced in class and on the course web page, giving sufficient time for submission. Tutorial: September 16, 2009; 1st homework due: September 20, 2009.
- Likely project deadlines:
  - Preparatory project: September 13, 2009
  - Intermediate project: October 25, 2009
  - Final project: December 6, 2009

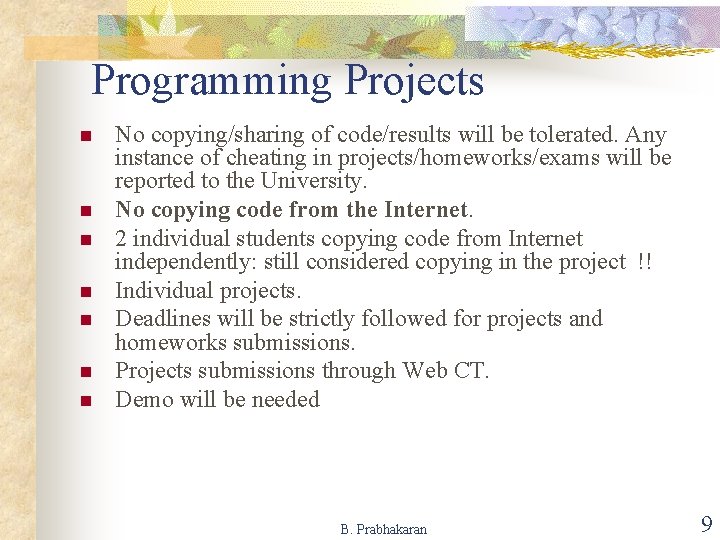

Programming Projects
- No copying/sharing of code/results will be tolerated. Any instance of cheating in projects/homeworks/exams will be reported to the University.
- No copying code from the Internet. Two students who independently copy the same code from the Internet are still considered to have copied in the project.
- Individual projects.
- Deadlines will be strictly followed for project and homework submissions.
- Project submissions through WebCT. A demo will be needed.

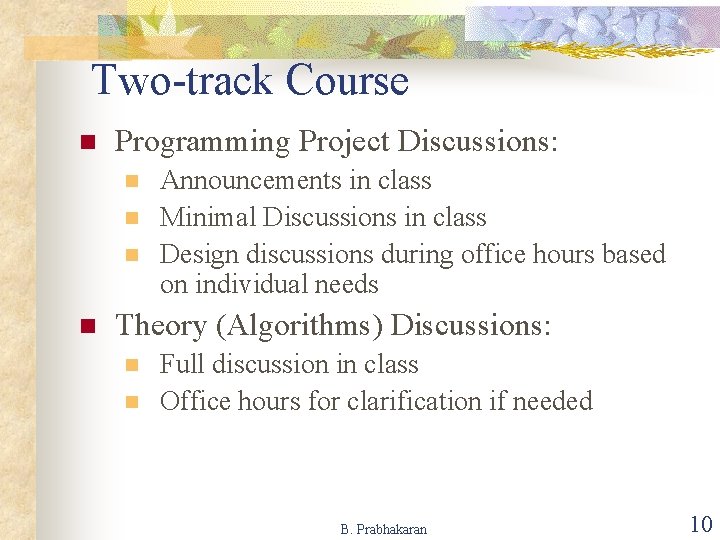

Two-track Course
- Programming project discussions:
  - Announcements in class
  - Minimal discussions in class
  - Design discussions during office hours based on individual needs
- Theory (algorithms) discussions:
  - Full discussion in class
  - Office hours for clarification if needed

eLearning
- Go to: https://elearning.utdallas.edu/webct/entryPageIns.dowebct
- It has a discussion group that can be used for project and other course discussions.

Cheating
- Academic dishonesty will be taken seriously.
- Cheating students will be handed over to the Head/Dean for further action.
- Remember: homeworks and projects (exams too!) are to be done individually.
- Any kind of cheating in homeworks/projects/exams will be dealt with as per UTD guidelines.
- Cheating in any stage of the projects will result in 0 for the entire set of projects.

Projects
- Involve exercises such as ordering, deadlock detection, load balancing, message passing, and implementing distributed algorithms (e.g., for scheduling).
- Platform: Linux/Windows, C/C++. Network programming will be needed. Multiple systems will be used.
- Specific details and deadlines will be announced in class and on the course web page.
- Suggestion: learn network socket programming and threads, if you do not know them already. Try simple programs for file transfer, talk, etc.
- Sample programs and tutorials available at:
  - http://www.utdallas.edu/~praba/projects.html

Homeworks
- At most 2-3 homeworks, announced in class and on the course web page.
- Homework submission:
  - Submit on paper to the TA/instructor.

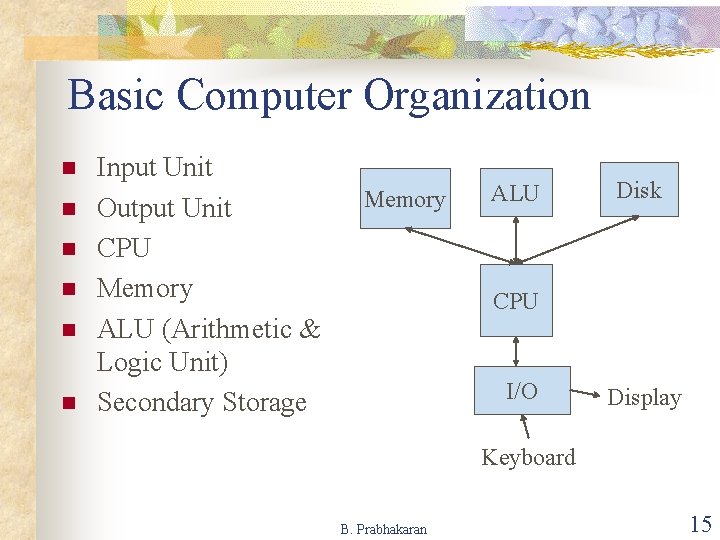

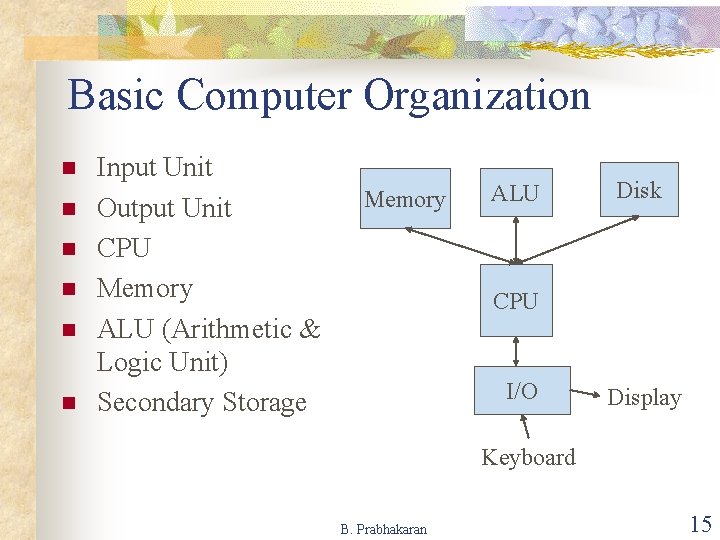

Basic Computer Organization
- Input Unit
- Output Unit
- CPU
- Memory
- ALU (Arithmetic & Logic Unit)
- Secondary Storage

[Figure labels: Memory, ALU, Disk, CPU, I/O, Display, Keyboard]

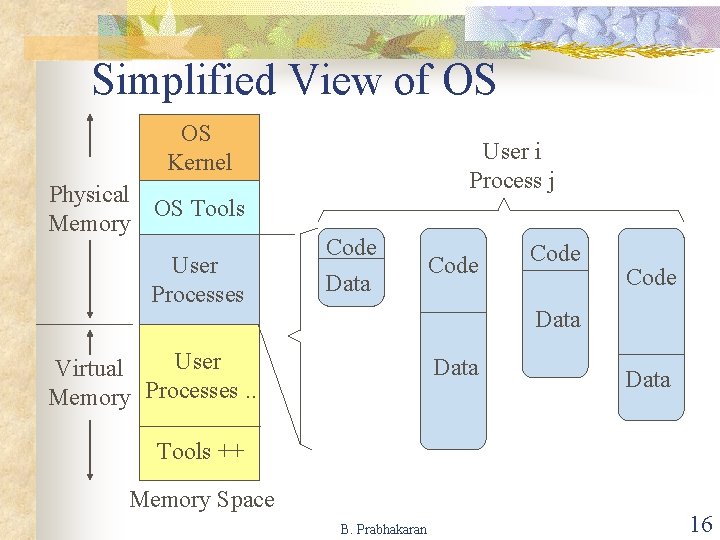

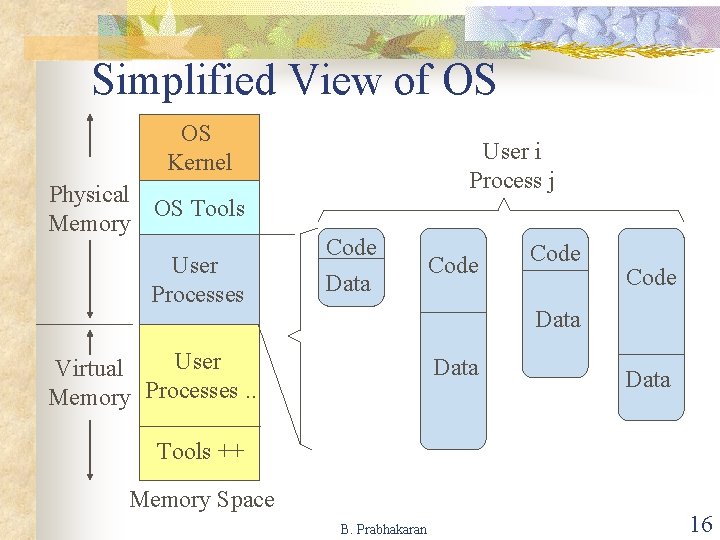

Simplified View of OS

[Figure labels: OS Kernel, OS Tools, User Processes (User i, Process j, each with Code and Data), Physical Memory, Virtual Memory, Memory Space]

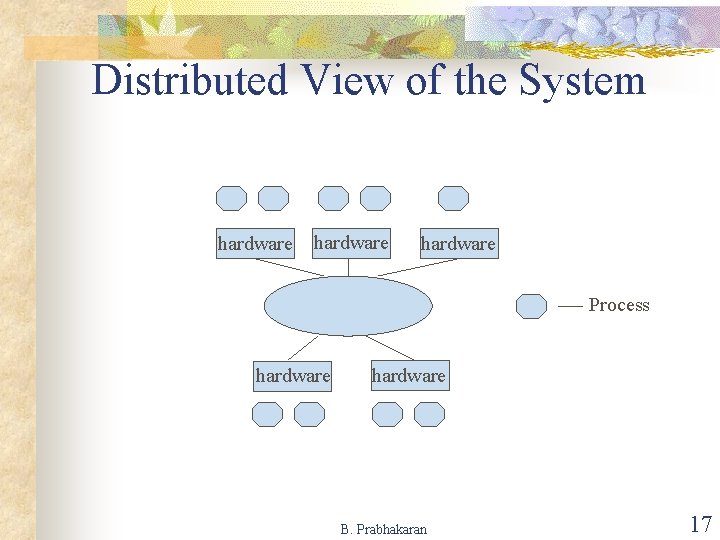

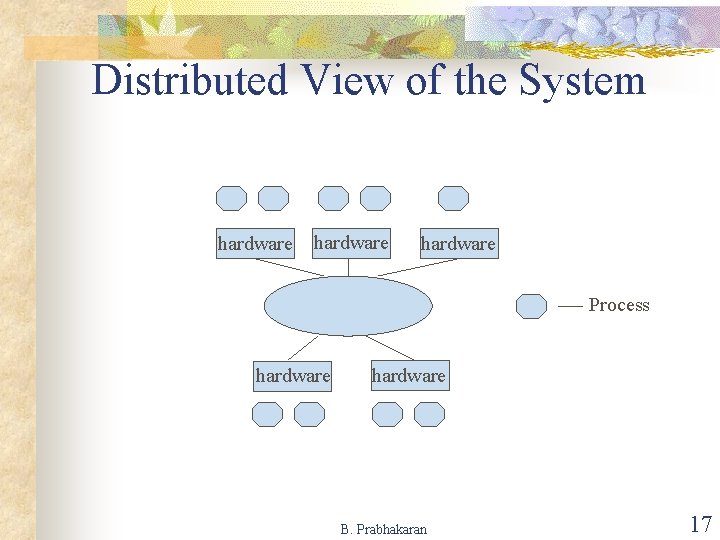

Distributed View of the System

[Figure labels: hardware, Process]

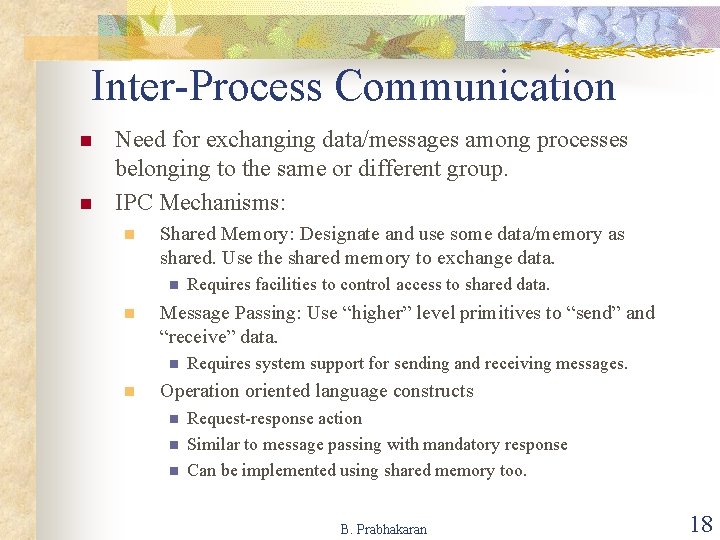

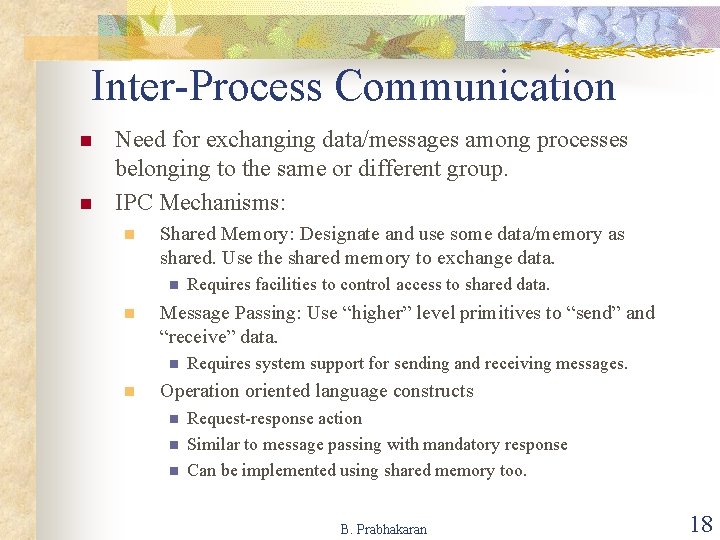

Inter-Process Communication
- Need for exchanging data/messages among processes belonging to the same or different groups.
- IPC mechanisms:
  - Shared memory: designate and use some data/memory as shared. Use the shared memory to exchange data.
    - Requires facilities to control access to shared data.
  - Message passing: use “higher”-level primitives to “send” and “receive” data.
    - Requires system support for sending and receiving messages.
  - Operation-oriented language constructs
    - Request-response action
    - Similar to message passing with a mandatory response
    - Can be implemented using shared memory too.

IPC Examples
- Parallel/distributed computation such as sorting: shared memory is more apt.
  - Using message passing/RPC might need an array/data manager of some sort.
- Client-server type: message passing or RPC may suit better.
  - Shared memory may be useful, but the program is clearer with the other types of IPC.
- RPC vs. message passing: if a response is not required, or at least not immediately, simple message passing should suffice.

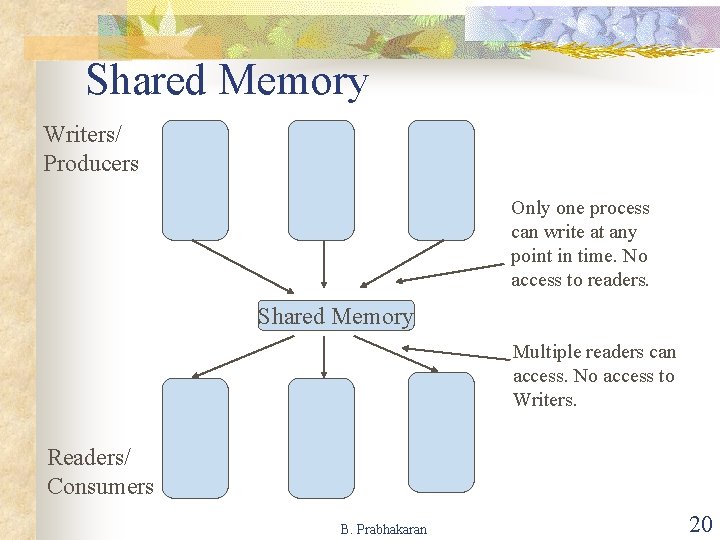

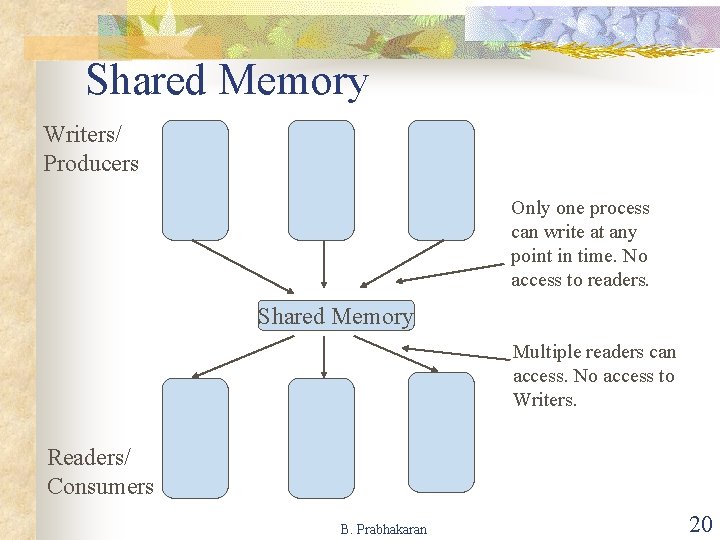

Shared Memory
- Writers/producers: only one process can write at any point in time. No access to readers.
- Readers/consumers: multiple readers can access the shared memory. No access to writers.
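The access rule above (one writer at a time, or many readers, never both) can be sketched with a small readers-writer lock. This is a minimal illustration, not the course's implementation: the class name `ReadWriteLock`, the `shared` dictionary, and `run_demo` are hypothetical, and the sketch makes no attempt to prevent writer starvation.

```python
import threading

class ReadWriteLock:
    """Hypothetical helper: many concurrent readers OR exactly one writer."""

    def __init__(self):
        self._cond = threading.Condition()
        self._readers = 0        # number of readers currently inside
        self._writing = False    # True while a writer is inside

    def acquire_read(self):
        with self._cond:
            while self._writing:             # readers wait only for a writer
                self._cond.wait()
            self._readers += 1

    def release_read(self):
        with self._cond:
            self._readers -= 1
            if self._readers == 0:
                self._cond.notify_all()      # a waiting writer may proceed

    def acquire_write(self):
        with self._cond:
            while self._writing or self._readers > 0:
                self._cond.wait()            # writers need exclusive access
            self._writing = True

    def release_write(self):
        with self._cond:
            self._writing = False
            self._cond.notify_all()

shared = {"value": 0}     # the "shared memory" in this sketch
lock = ReadWriteLock()

def writer(times):
    for _ in range(times):
        lock.acquire_write()
        try:
            shared["value"] += 1
        finally:
            lock.release_write()

def reader(seen):
    lock.acquire_read()
    try:
        seen.append(shared["value"])
    finally:
        lock.release_read()

def run_demo():
    seen = []
    threads = [threading.Thread(target=writer, args=(100,)) for _ in range(4)]
    threads += [threading.Thread(target=reader, args=(seen,)) for _ in range(4)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["value"], seen
```

Calling `run_demo()` runs four writers and four readers against the same dictionary; the final count is deterministic because every increment happens under the write lock, while the values the readers observe depend on scheduling.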

Shared Memory: Possibilities
- Locks (unlocks)
- Semaphores
- Monitors
- Serializers
- Path expressions

Message Passing
- Blocked send/receive: both the sending and the receiving process are blocked until the message is completely received. Synchronous.
- Unblocked send/receive: neither sender nor receiver is blocked. Asynchronous.
- Unblocked send/blocked receive: the sender is not blocked. The receiver waits until the message is received.
- Blocked send/unblocked receive: useful?
- Can be implemented using shared memory.
- Message passing: a language paradigm for human ease.

Un/blocked
- Blocked message exchange
  - Easy to understand, implement, and verify for correctness
  - Less powerful; may be inefficient, as sender/receiver might waste time waiting
- Unblocked message exchange
  - More efficient; no time wasted on waiting
  - Needs queues, i.e., memory to store messages
  - Difficult to verify correctness of programs
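The difference between blocked and unblocked exchange can be sketched with Python's standard `queue.Queue` standing in for the message channel. The function names `unblocked_exchange` and `blocked_exchange` are made up for this illustration, and using `task_done()`/`join()` as the "message received" acknowledgement is an assumption of the sketch rather than something the slides prescribe.

```python
import queue
import threading

def unblocked_exchange():
    """Unblocked send/receive: messages are buffered, nobody waits."""
    mailbox = queue.Queue()            # unbounded, so put() returns at once
    for i in range(3):
        mailbox.put(i)                 # sender continues immediately
    received = []
    while True:
        try:
            received.append(mailbox.get_nowait())   # receiver never waits
        except queue.Empty:
            break                      # nothing pending; receiver moves on
    return received

def blocked_exchange():
    """Blocked send/receive: the sender waits until each message is taken."""
    mailbox = queue.Queue(maxsize=1)
    received = []

    def receiver():
        for _ in range(3):
            received.append(mailbox.get())   # blocks until a message arrives
            mailbox.task_done()              # acknowledge receipt

    t = threading.Thread(target=receiver)
    t.start()
    for i in range(3):
        mailbox.put(i)
        mailbox.join()                 # sender blocks until the receiver acknowledged
    t.join()
    return received
```

Both functions deliver the same three messages in order. The unblocked version needs the queue's memory to hold pending messages, while the blocked version keeps sender and receiver in lock step.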

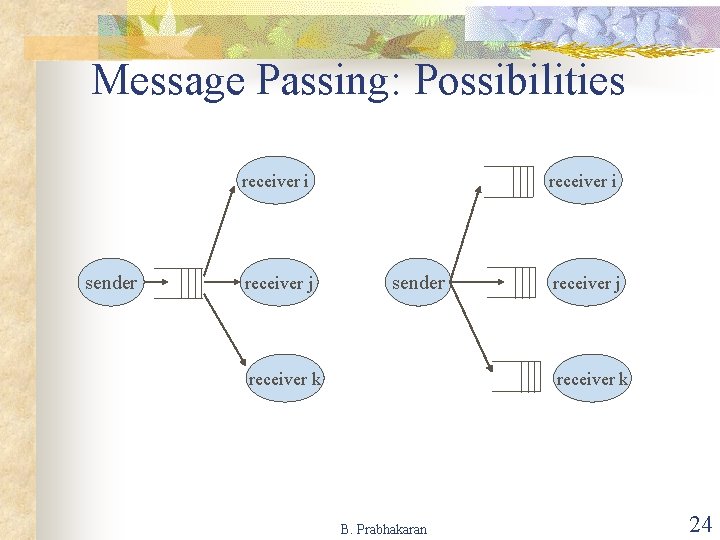

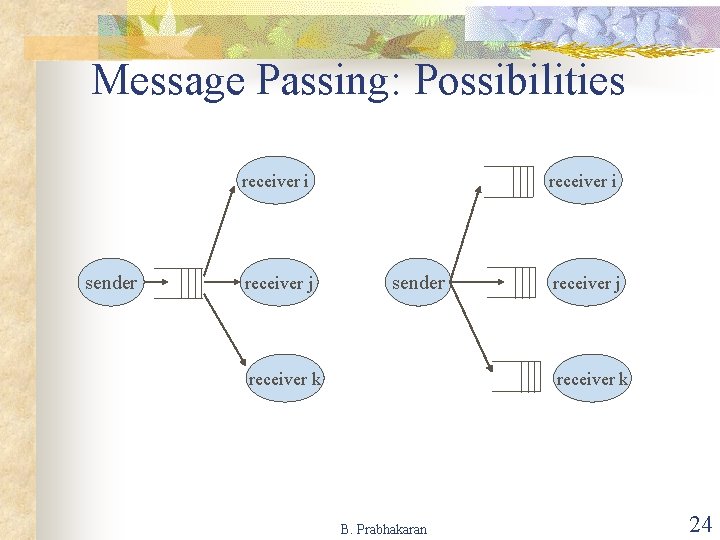

Message Passing: Possibilities

[Figure: two diagrams, each showing one sender and three receivers (receiver i, receiver j, receiver k)]

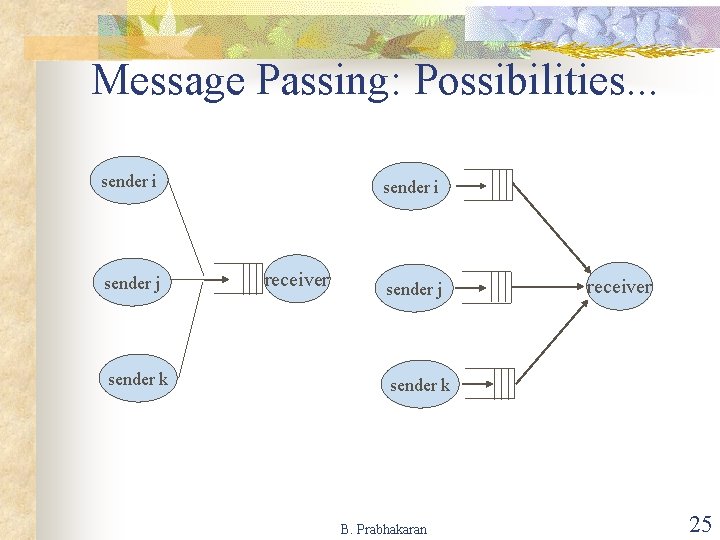

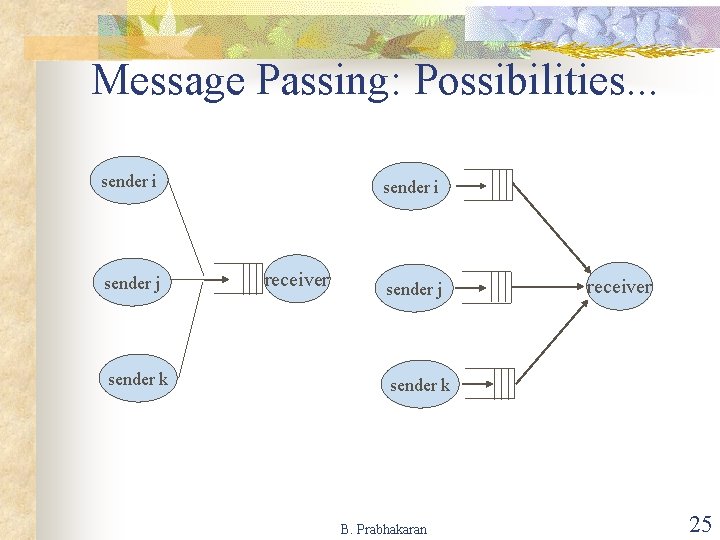

Message Passing: Possibilities (continued)

[Figure: two diagrams, each showing three senders (sender i, sender j, sender k) and one receiver]

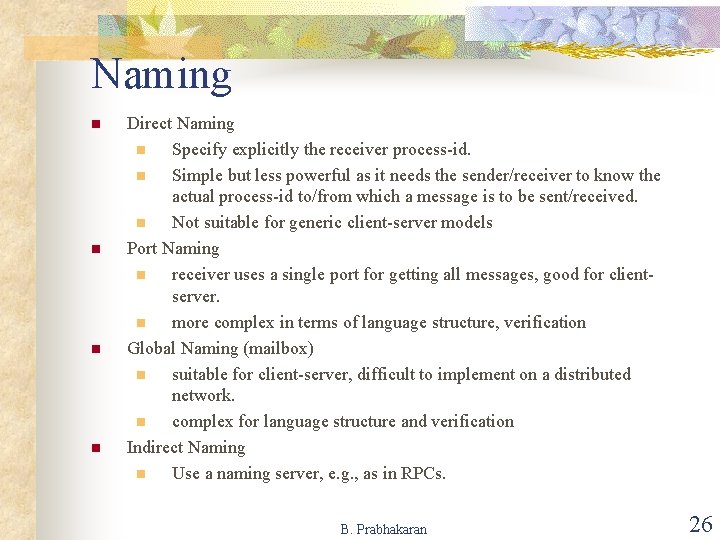

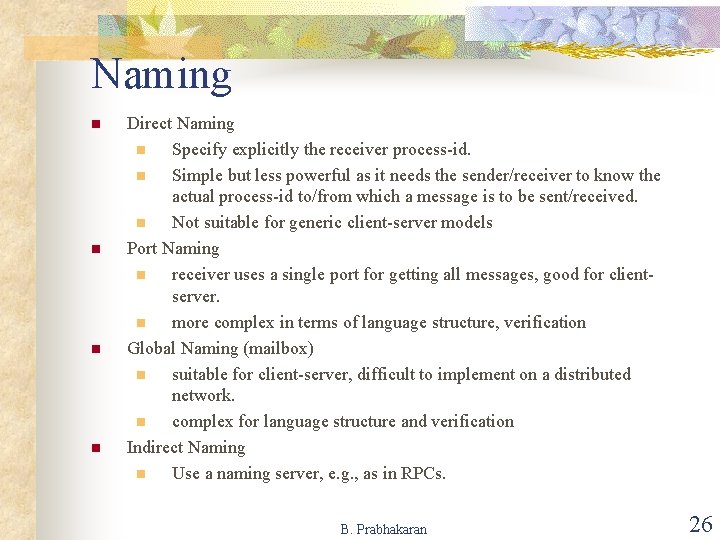

Naming
- Direct naming
  - Specify explicitly the receiver process-id.
  - Simple but less powerful, as it needs the sender/receiver to know the actual process-id to/from which a message is to be sent/received.
  - Not suitable for generic client-server models.
- Port naming
  - Receiver uses a single port for getting all messages; good for client-server.
  - More complex in terms of language structure and verification.
- Global naming (mailbox)
  - Suitable for client-server; difficult to implement on a distributed network.
  - Complex for language structure and verification.
- Indirect naming
  - Use a naming server, e.g., as in RPCs.
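As a rough illustration of port naming combined with indirect naming, the sketch below lets senders address a service by name while a naming server maps that name to the receiver's single inbox. `NameServer`, `send`, and the `"printer"` service are hypothetical names chosen for the example; a real naming server would run as a separate process reachable over the network.

```python
import queue

class NameServer:
    """Hypothetical in-process naming server: service name -> receiver port."""

    def __init__(self):
        self._table = {}

    def register(self, service, port):
        self._table[service] = port

    def lookup(self, service):
        return self._table[service]

names = NameServer()
printer_port = queue.Queue()            # port: the receiver's one inbox for all senders
names.register("printer", printer_port)

def send(service, sender, body):
    # The sender knows only the service name, not the receiver's process-id.
    names.lookup(service).put((sender, body))

send("printer", "client-1", "page A")
send("printer", "client-2", "page B")
```

With direct naming, each client would have to hold the receiver's process-id; here the receiver can be replaced by re-registering the name without changing any client.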

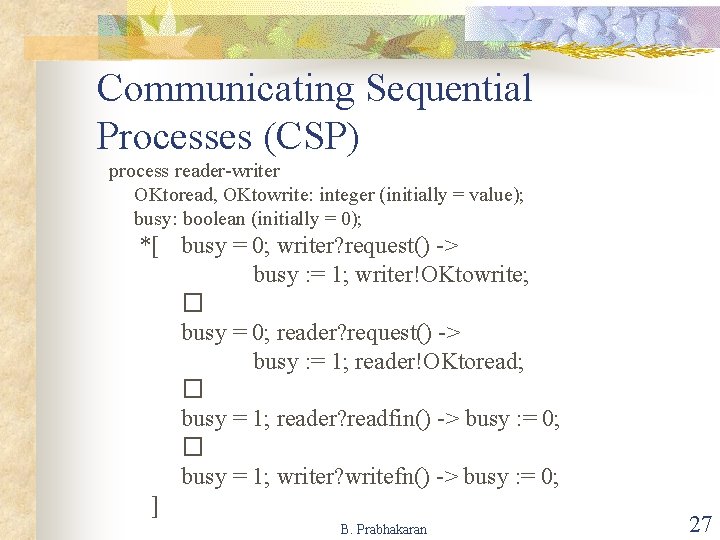

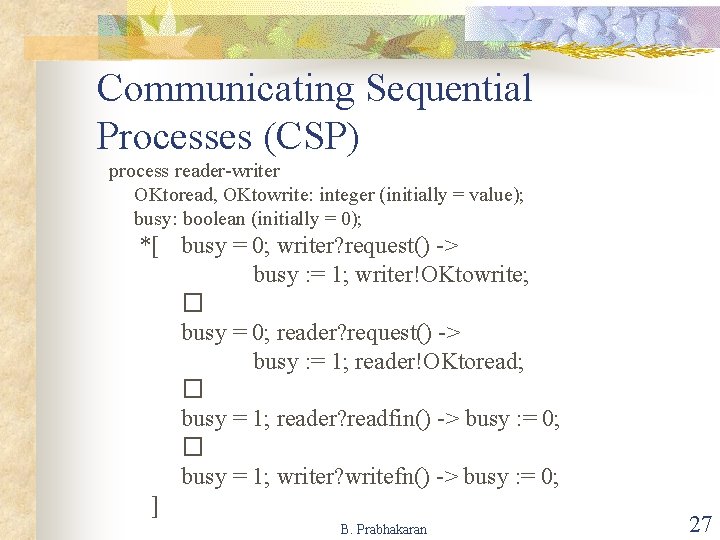

Communicating Sequential Processes (CSP)

    process reader-writer
      OKtoread, OKtowrite: integer (initially = value);
      busy: boolean (initially = 0);
      *[ busy = 0; writer?request() -> busy := 1; writer!OKtowrite;
       □ busy = 0; reader?request() -> busy := 1; reader!OKtoread;
       □ busy = 1; reader?readfin() -> busy := 0;
       □ busy = 1; writer?writefn() -> busy := 0;
       ]
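The guarded loop above can be imitated in Python to see how the guards serialize access. This is only a replay of a fixed list of messages, not real CSP: the function `reader_writer`, the tuple encoding of messages, and the log strings are assumptions of the sketch. A message whose guard is false stays pending, which mimics a CSP sender that remains blocked.

```python
from collections import deque

# (required value of busy, sender, message) -> value of busy afterwards
GUARDS = {
    (False, "writer", "request"): True,
    (False, "reader", "request"): True,
    (True, "reader", "readfin"): False,
    (True, "writer", "writefn"): False,
}

def reader_writer(events):
    """Replay (sender, message) pairs through the four guarded alternatives."""
    busy = False
    pending = deque(events)
    log = []
    progress = True
    while pending and progress:
        progress = False
        for _ in range(len(pending)):        # one pass over the waiting messages
            sender, msg = pending.popleft()
            key = (busy, sender, msg)
            if key in GUARDS:                # guard true: accept the input
                busy = GUARDS[key]
                if msg == "request":
                    reply = "OKtowrite" if sender == "writer" else "OKtoread"
                    log.append(f"{sender}!{reply}")
                else:
                    log.append(f"{sender} finished")
                progress = True
            else:
                pending.append((sender, msg))  # guard false: sender stays blocked
    return log

trace = reader_writer([
    ("writer", "request"),
    ("reader", "request"),
    ("writer", "writefn"),
    ("reader", "readfin"),
])
```

In this replay the reader's request is held back until the writer reports completion, because both request guards require `busy = 0`.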

CSP: Drawbacks
- Requires explicit naming of processes in I/O commands.
- No message buffering; an input/output command gets blocked (or the guards become false), which can introduce delay and inefficiency.

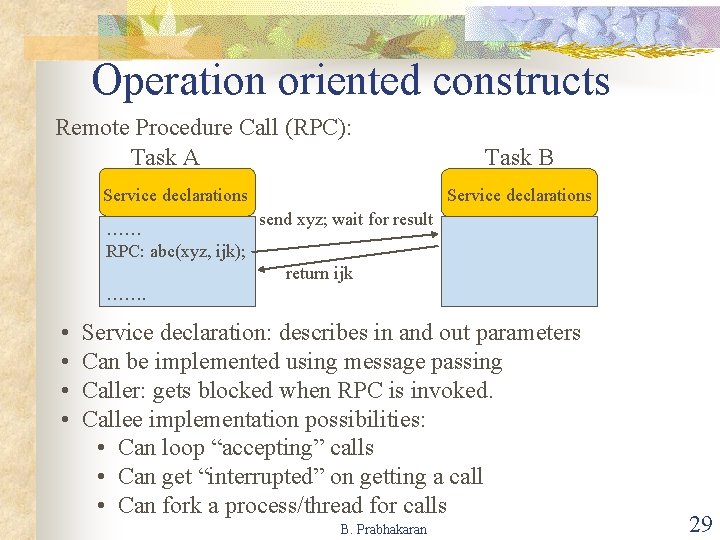

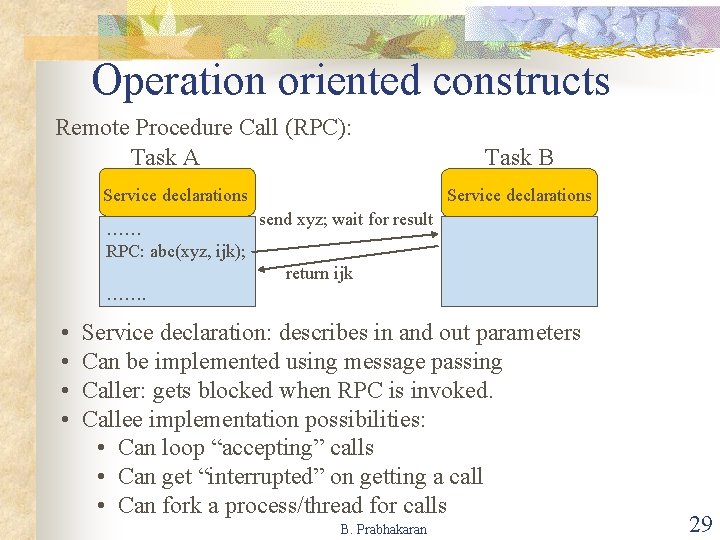

Operation-oriented constructs
Remote Procedure Call (RPC):

[Figure: Task A, after its service declarations, invokes RPC: abc(xyz, ijk); xyz is sent to Task B, Task A waits for the result, and Task B returns ijk]

- Service declaration: describes in and out parameters.
- Can be implemented using message passing.
- Caller: gets blocked when the RPC is invoked.
- Callee implementation possibilities:
  - Can loop “accepting” calls
  - Can get “interrupted” on getting a call
  - Can fork a process/thread for calls
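A minimal sketch of RPC built on message passing, with the callee looping to accept calls (the first of the three callee options). Everything named here is hypothetical: `rpc` plays the caller-side stub, `callee` the server loop, `"add"` the only registered service, and `None` is used as a shutdown message for the demo. Both ends live in one process, so no parameter conversion is shown.

```python
import queue
import threading

requests = queue.Queue()     # channel carrying (service, args, reply_box) messages

def callee():
    """Server loop that accepts one call at a time."""
    services = {"add": lambda a, b: a + b}
    while True:
        call = requests.get()
        if call is None:                 # shutdown message used by this demo
            break
        name, args, reply_box = call
        reply_box.put(services[name](*args))   # send the result back

def rpc(name, *args):
    """Caller-side stub: send the parameters, then block until the result arrives."""
    reply_box = queue.Queue(maxsize=1)
    requests.put((name, args, reply_box))
    return reply_box.get()               # the caller is blocked here

server = threading.Thread(target=callee)
server.start()
result = rpc("add", 2, 3)
requests.put(None)
server.join()
```

To the caller, `rpc("add", 2, 3)` reads like a local call, yet underneath it is one send followed by one blocking receive.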

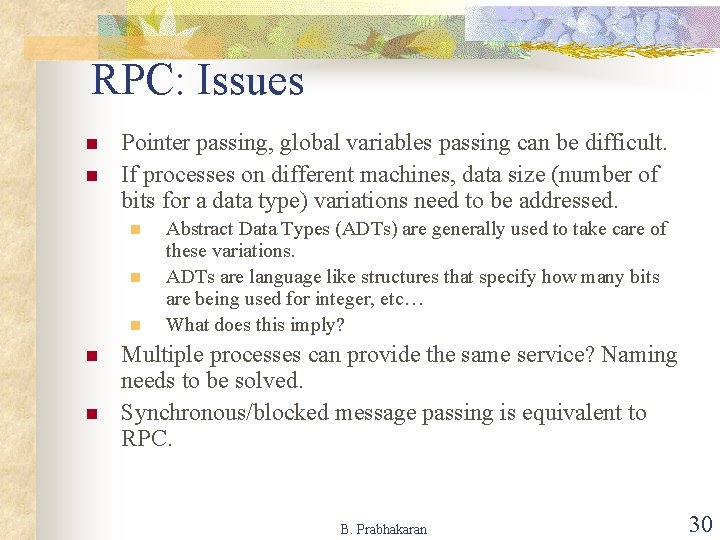

RPC: Issues
- Pointer passing and global variable passing can be difficult.
- If processes are on different machines, data size (number of bits for a data type) variations need to be addressed.
  - Abstract Data Types (ADTs) are generally used to take care of these variations.
  - ADTs are language-like structures that specify how many bits are being used for integer, etc.
  - What does this imply?
- Multiple processes can provide the same service? Naming needs to be solved.
- Synchronous/blocked message passing is equivalent to RPC.

![Ada task declaration and task body](https://slidetodoc.com/presentation_image_h/2b276242288077bb8184e018c2f8d091/image-31.jpg)

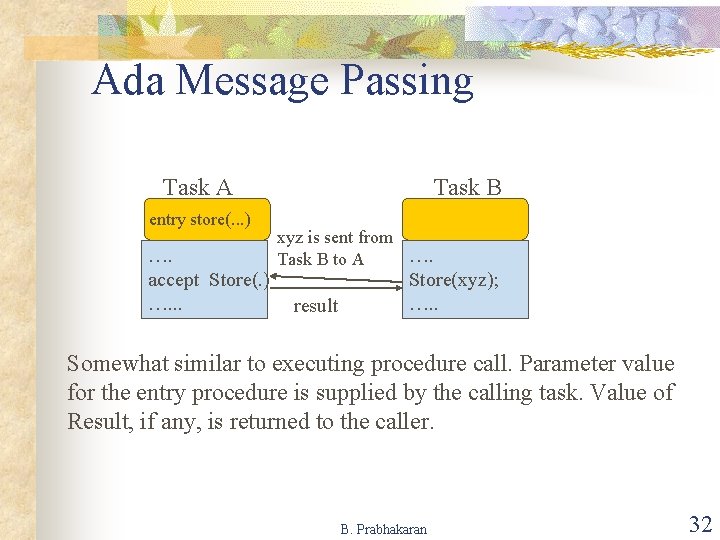

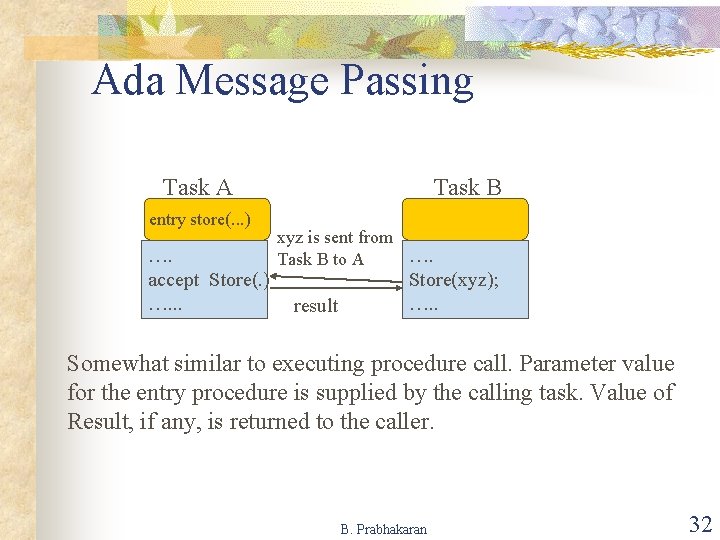

Ada

Task syntax:

    task [type] <name> is
      entry specifications
    end
    task body <name> is
      declaration of local variables
    begin
      list of statements
      ...
      accept <entry id> (<formal parameters>) do
        body of the accept statement
      end <entry id>
    exceptions
      exception handlers
    end;

Example:

    task proc-buffer is
      entry store(x: buffer);
      remove(y: buffer);
    end;
    task body proc-buffer is
      temp: buffer;
    begin
      loop
        when flag accept store(x: bu
          temp := x; flag := 0;
        end store;
        when !flag accept remove(y:
          y := temp; flag := 1;
        end remove;
      end loop
    end proc-buffer.
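For comparison, the `proc-buffer` task can be approximated in Python with a condition variable, where each method waits for the same guard that the Ada `when` clause tests. This is a sketch under assumptions: the class `ProcBuffer` and the helper `run_demo` are invented for illustration, and `flag` is assumed to start true (buffer empty), which the slide does not state.

```python
import threading

class ProcBuffer:
    """One-slot buffer: store and remove must alternate, as in the Ada task."""

    def __init__(self):
        self._cond = threading.Condition()
        self._flag = True     # assumption: starts true, so a store is accepted first
        self._temp = None

    def store(self, x):
        with self._cond:
            self._cond.wait_for(lambda: self._flag)       # "when flag accept store"
            self._temp = x
            self._flag = False
            self._cond.notify_all()

    def remove(self):
        with self._cond:
            self._cond.wait_for(lambda: not self._flag)   # "when !flag accept remove"
            y = self._temp
            self._flag = True
            self._cond.notify_all()
            return y

def run_demo(items):
    buf = ProcBuffer()
    out = []
    consumer = threading.Thread(
        target=lambda: out.extend(buf.remove() for _ in items)
    )
    consumer.start()
    for item in items:
        buf.store(item)       # blocks until the previous item has been removed
    consumer.join()
    return out
```

A caller of `store` is held until the slot is free, much as an Ada caller waits until the task reaches a matching open `accept`.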

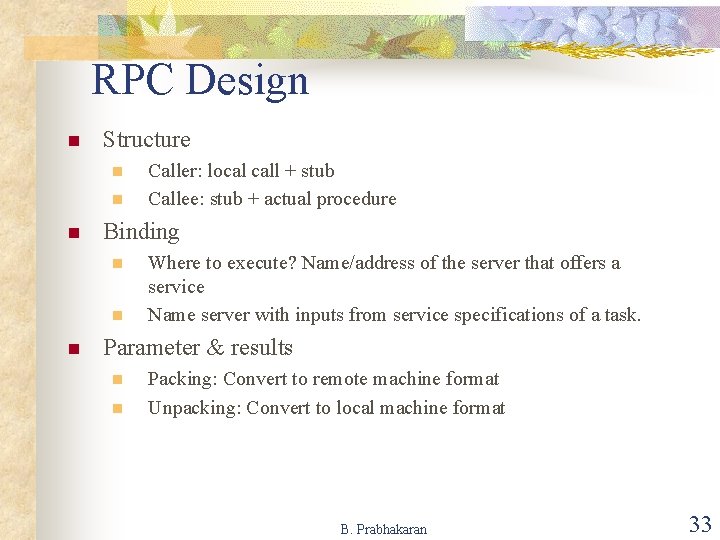

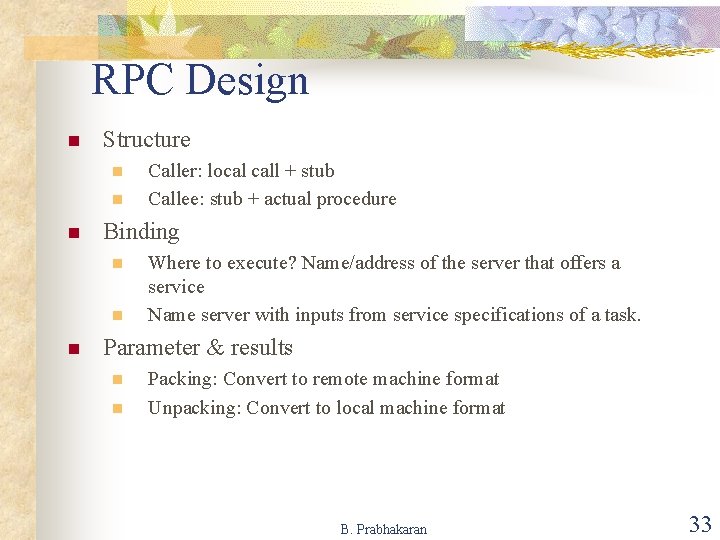

Ada Message Passing

[Figure: Task A declares entry store(...) and executes accept Store(.); Task B calls Store(xyz); xyz is sent from Task B to A, and the result is returned]

- Somewhat similar to executing a procedure call.
- The parameter value for the entry procedure is supplied by the calling task.
- The value of the result, if any, is returned to the caller.

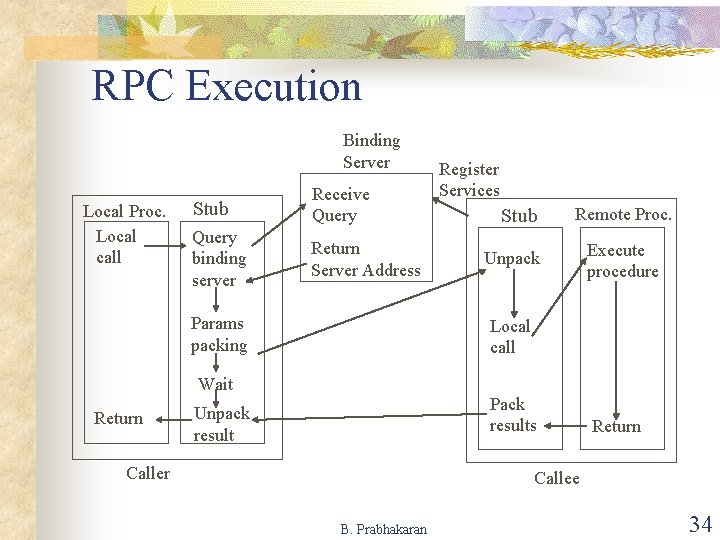

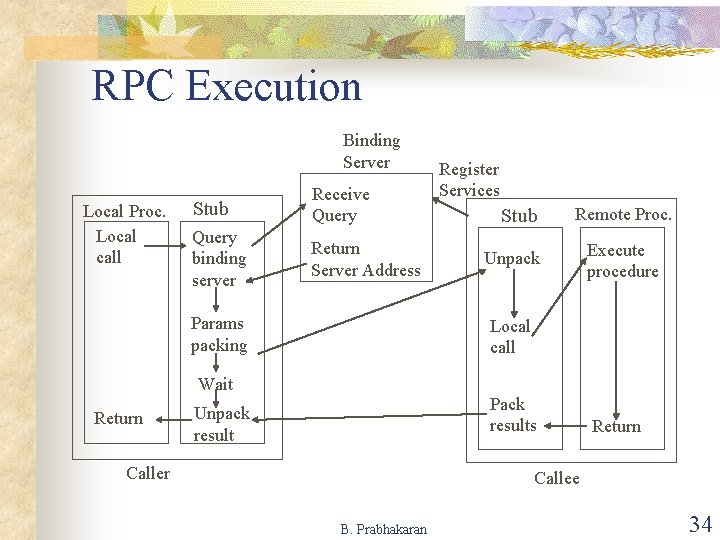

RPC Design
- Structure
  - Caller: local call + stub
  - Callee: stub + actual procedure
- Binding
  - Where to execute? Name/address of the server that offers a service.
  - Name server with inputs from the service specifications of a task.
- Parameters & results
  - Packing: convert to remote machine format
  - Unpacking: convert to local machine format
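Packing and unpacking can be sketched with Python's standard `struct` module, which converts values to and from a fixed byte layout. The wire format chosen here (one 32-bit signed integer followed by one 64-bit float, in network byte order) and the function names are assumptions for illustration; the slides do not specify a format.

```python
import struct

# Hypothetical wire format: "!" = network (big-endian) byte order, no padding;
# "i" = 32-bit signed int, "d" = 64-bit float.
WIRE_FORMAT = "!id"

def pack_params(count, ratio):
    """Caller stub: convert local values to the agreed wire format."""
    return struct.pack(WIRE_FORMAT, count, ratio)

def unpack_params(payload):
    """Callee stub: convert wire bytes back to local values."""
    return struct.unpack(WIRE_FORMAT, payload)

payload = pack_params(42, 0.5)
```

Because both stubs agree on sizes and byte order, a machine with a different native integer width or endianness still decodes the same values.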

RPC Execution

[Figure labels. Caller: Local Proc., Local call, Stub, Query binding server, Params packing, Wait, Unpack result, Return. Binding Server: Receive Query, Return Server Address, Register Services. Callee: Stub, Unpack, Local call, Remote Proc., Execute procedure, Return, Pack results]

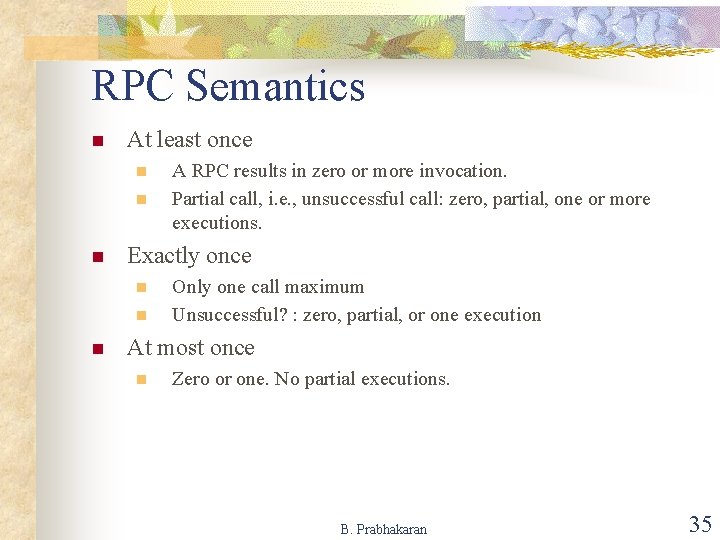

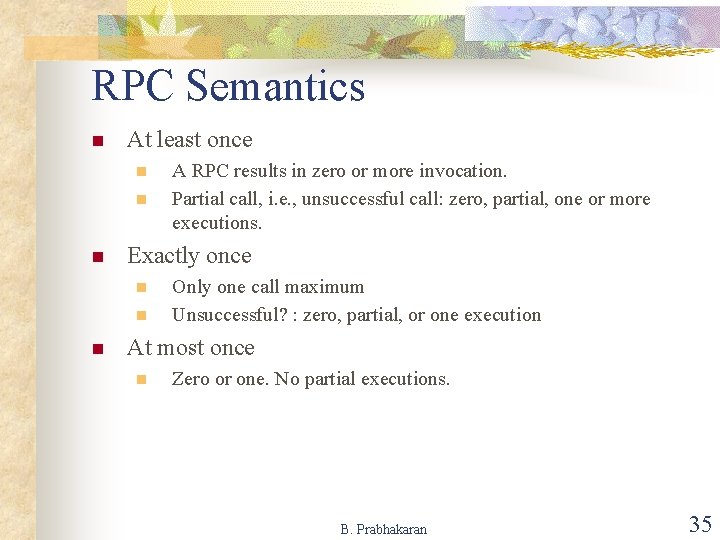

RPC Semantics
- At least once
  - An RPC results in zero or more invocations.
  - Partial call, i.e., unsuccessful call: zero, partial, one or more executions.
- Exactly once
  - Only one call maximum.
  - Unsuccessful?: zero, partial, or one execution.
- At most once
  - Zero or one. No partial executions.
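One common way to avoid repeated executions when a client retransmits a request is to tag each request with an id and cache the reply. The sketch below shows only that duplicate-suppression step; it does not handle crashes or partial executions, so it is not a full at-most-once implementation. `AtMostOnceServer`, `withdraw`, and the request ids are hypothetical.

```python
class AtMostOnceServer:
    """Hypothetical server wrapper that executes each request id at most once."""

    def __init__(self, procedure):
        self._procedure = procedure
        self._replies = {}        # request id -> cached result
        self.executions = 0       # how many times the procedure really ran

    def handle(self, request_id, *args):
        if request_id in self._replies:      # retransmission: do not re-execute
            return self._replies[request_id]
        self.executions += 1
        result = self._procedure(*args)
        self._replies[request_id] = result
        return result

balance = {"amount": 100}

def withdraw(n):
    # Not idempotent: running it twice would withdraw twice.
    balance["amount"] -= n
    return balance["amount"]

server = AtMostOnceServer(withdraw)
first = server.handle("req-1", 30)
retry = server.handle("req-1", 30)   # client timed out and resent the same request
```

Without the reply cache, the retry would run `withdraw` a second time, which is the behaviour a scheme that permits repeated executions has to tolerate.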

RPC Implementation
- Sending/receiving parameters:
  - Use reliable communication?
  - Use datagrams/unreliable communication?
  - Implies the choice of semantics: how many times an RPC may be invoked.

RPC Disadvantage
- Incremental communication of results is not possible: e.g., a response from a database cannot return the first few matches immediately. The caller has to wait until all responses have been determined.

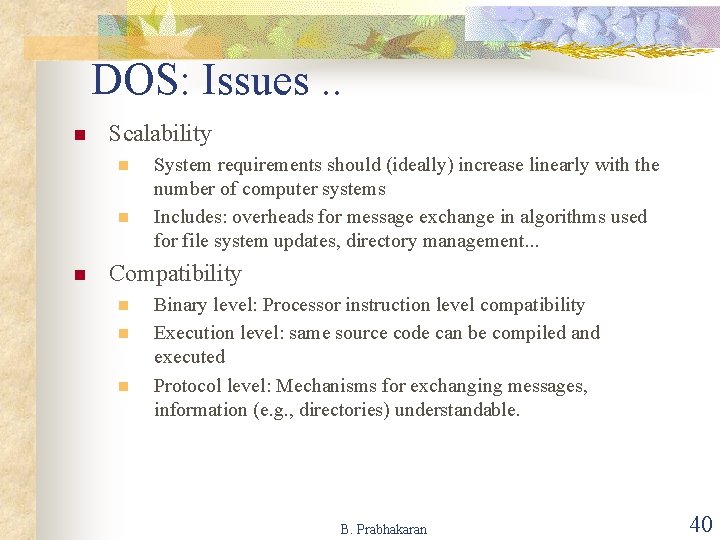

Distributed Operating Systems
Issues:
- Global Knowledge
- Naming
- Scalability
- Compatibility
- Process Synchronization
- Resource Management
- Security
- Structuring
- Client-Server Model

DOS: Issues (continued)
- Global Knowledge
  - Lack of global shared memory, lack of a global clock, unpredictable message delays.
  - These lead to an unpredictable global state and make it difficult to order events (A sends to B, C sends to D: may be related).
- Naming
  - Need for a name service: to identify objects (files, databases), users, services (RPCs).
  - Replicated directories?: updates may be a problem.
  - Need for name to (IP) address resolution.
  - Distributed directory: algorithms for update, search, ...

DOS: Issues (continued)
- Scalability
  - System requirements should (ideally) increase linearly with the number of computer systems.
  - Includes: overheads for message exchange in algorithms used for file system updates, directory management, ...
- Compatibility
  - Binary level: processor instruction level compatibility
  - Execution level: the same source code can be compiled and executed
  - Protocol level: mechanisms for exchanging messages and information (e.g., directories) are mutually understandable

DOS: Issues (continued)
- Process Synchronization
  - Distributed shared memory: difficult.
- Resource Management
  - Data/object management: handling migration of files, memory values.
  - To achieve a transparent view of the distributed system.
  - Main issues: consistency, minimization of delays, ...
- Security
  - Authentication and authorization

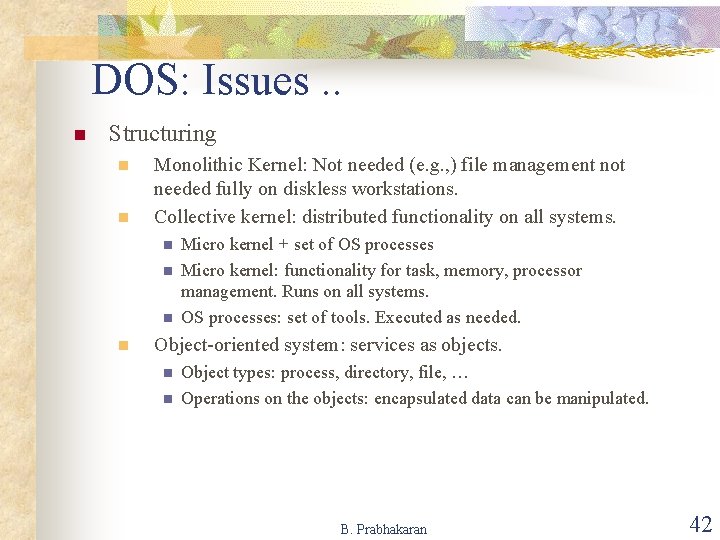

DOS: Issues (continued)
- Structuring
  - Monolithic kernel: not needed, e.g., file management is not needed fully on diskless workstations.
  - Collective kernel: distributed functionality on all systems.
    - Micro kernel + set of OS processes
    - Micro kernel: functionality for task, memory, processor management. Runs on all systems.
    - OS processes: set of tools. Executed as needed.
  - Object-oriented system: services as objects.
    - Object types: process, directory, file, …
    - Operations on the objects: encapsulated data can be manipulated.

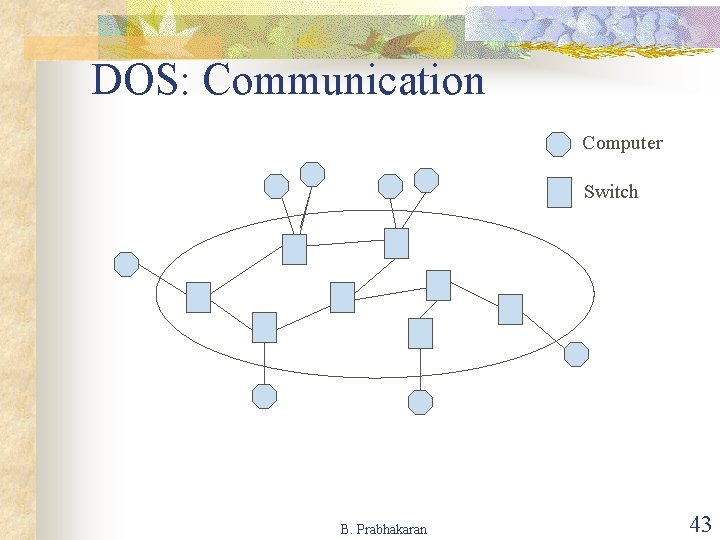

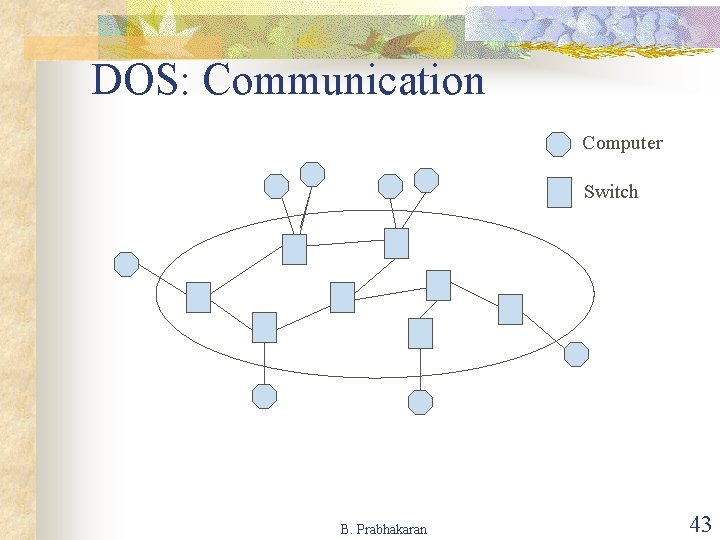

DOS: Communication

[Figure labels: Computer, Switch]

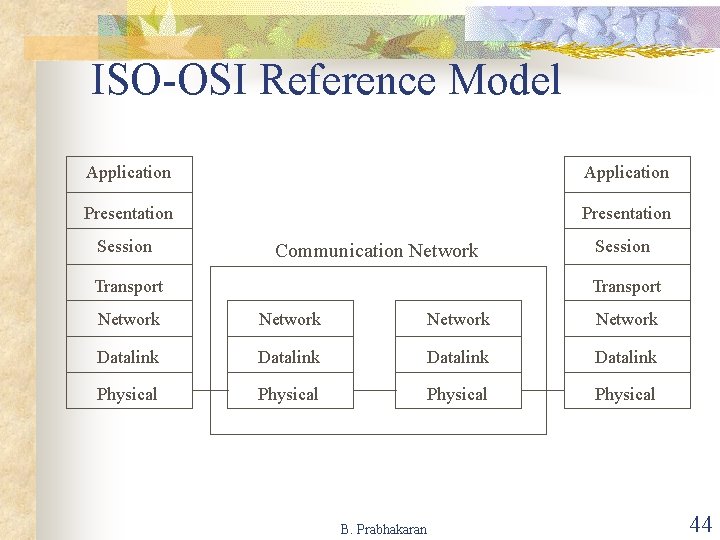

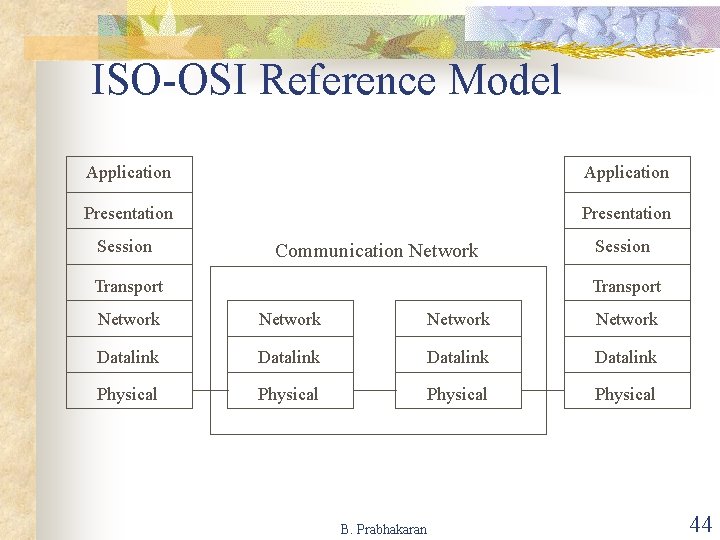

ISO-OSI Reference Model

[Figure: two protocol stacks (Application, Presentation, Session, Transport, Network, Datalink, Physical) connected through a Communication Network]

Un/reliable Communication
- Reliable communication
  - Virtual circuit: one path between sender and receiver. All packets are sent through the path.
  - Data is received in the same order as it is sent.
  - TCP (Transmission Control Protocol) provides reliable communication.
- Unreliable communication
  - Datagrams: different packets are sent through different paths.
  - Data might be lost or arrive out of sequence.
  - UDP (User Datagram Protocol) provides unreliable communication.
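The two styles map onto the two socket types in Python's standard `socket` module. The sketch below sends one message over the loopback interface with each; the function names are made up for the example, and binding to port 0 (let the OS choose a free port) is a convenience of the sketch. On loopback, datagram loss is unlikely, so the UDP call normally succeeds, but nothing in UDP guarantees it.

```python
import socket

def udp_roundtrip(message):
    """Datagram socket: no connection; each sendto() is an independent packet."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))      # port 0: the OS picks a free port
    receiver.settimeout(2.0)             # a lost datagram would otherwise block forever
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sender.sendto(message, receiver.getsockname())
        data, _addr = receiver.recvfrom(1024)
        return data
    finally:
        sender.close()
        receiver.close()

def tcp_roundtrip(message):
    """Stream socket: connect first, then an ordered, reliable byte stream."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        client.connect(listener.getsockname())   # handshake completes via the backlog
        conn, _addr = listener.accept()
        with conn:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)      # signal end of data
            chunks = []
            while True:
                chunk = conn.recv(1024)
                if not chunk:                    # empty read: sender finished
                    break
                chunks.append(chunk)
        return b"".join(chunks)
    finally:
        client.close()
        listener.close()
```

The TCP receiver reads until end-of-stream because a stream has no message boundaries, whereas the UDP receiver gets the whole datagram in a single `recvfrom` call.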