Advanced Operating Systems Lecture notes Dr Clifford Neuman

- Slides: 83

Advanced Operating Systems Lecture Notes

Dr. Clifford Neuman, University of Southern California, Information Sciences Institute

Copyright © 1995-2008 Clifford Neuman - UNIVERSITY OF SOUTHERN CALIFORNIA - INFORMATION SCIENCES INSTITUTE

Administration

- Assignment 1 has been posted.
  - Due 17 September 2008.

CSci 555: Advanced Operating Systems

Lecture 3 – Distributed Concurrency, Transactions, Deadlock

12 September 2008

Dr. Clifford Neuman, University of Southern California, Information Sciences Institute (lecture slides written by Dr. Katia Obraczka)

COVERED LAST LECTURE: Concurrency Control and Synchronization

- How to control and synchronize possibly conflicting operations on shared data by concurrent processes?
- First, some terminology:
  - Processes.
  - Light-weight processes.
  - Threads.
  - Tasks.

COVERED LAST LECTURE: Processes

- Textbook:
  - Processing activity associated with an execution environment, i.e., address space and resources (such as communication and synchronization resources).

COVERED LAST LECTURE: Threads

- OS abstraction of an activity/task.
  - Execution environment is expensive to create and manage.
  - Multiple threads share a single execution environment.
  - A single process may spawn multiple threads.
  - Maximize degree of concurrency among related activities.
  - Example: multi-threaded servers allow concurrent processing of client requests.

COVERED LAST LECTURE: Other Terminology

- Process versus task/thread.
  - Process: heavy-weight unit of execution.
  - Task/thread: light-weight unit of execution.

[Figure: processes P1 and P2 with threads t11, t12, and t2.]

COVERED LAST LECTURE: Threads Case Study 1

- Hauser et al.
- Examine use of user-level threads in 2 OSs:
  - Xerox PARC’s Cedar (research).
  - GVX (commercial version of Cedar).
- Study dynamic thread behavior:
  - Classes of threads (eternal, worker, transient).
  - Number of threads.
  - Thread lifetime.

COVERED LAST LECTURE: Thread Paradigms

- Different categories of usage:
  - Defer work: thread does work not vital to the main activity.
    - Examples: printing a document, sending mail.
  - Pumps: used in pipelining; take the output of one thread as input and produce output to be consumed by another task.
  - Sleepers: tasks that repeatedly wait for an event before executing; e.g., check for network connectivity every x seconds.

COVERED LAST LECTURE: Synchronization

- So far, how one defines/activates concurrent activities.
- But how to control access to shared data and still get work done?
- Synchronization via:
  - Shared data [DSM model].
  - Communication [MP model].

COVERED LAST LECTURE: Synchronization by Shared Data

- Primitives:
  - Semaphores.
  - Conditional critical regions.
  - Monitors.

[Figure annotation: labels “flexibility” and “structure” alongside the list of primitives.]

COVERED LAST LECTURE: Synchronization by MP

- Explicit communication.
- Primitives: send and receive.
  - Blocking send, blocking receive: sender and receiver are blocked until the message is delivered (rendezvous).
  - Nonblocking send, blocking receive: sender continues processing; receiver is blocked until the requested message arrives.
  - Nonblocking send, nonblocking receive: messages are sent to a shared data structure consisting of queues (mailboxes). Deadlocks?
  - Mailboxes: one process sends a message to the mailbox and the other process picks up the message from the mailbox.
    - Example: Send(mailbox, msg), Receive(mailbox, msg).

COVERED LAST LECTURE: Transactions

- Database term.
  - Execution of a program that accesses a database.
- In distributed systems:
  - Concurrency control in the client/server model.
  - From the client’s point of view, the sequence of operations executed by the server in servicing the client’s request appears as a single step.

COVERED LAST LECTURE: Transaction Properties

- ACID:
  - Atomicity: a transaction is an atomic unit of processing; it is either performed entirely or not at all.
  - Consistency: a transaction's correct execution must take the database from one correct state to another.
  - Isolation: the updates of a transaction must not be made visible to other transactions until it is committed.
  - Durability: if a transaction commits, the results must never be lost because of a subsequent failure.

COVERED LAST LECTURE: Transaction Atomicity

- “All or nothing”.
- The sequence of operations to service a client’s request is performed in one step, i.e., either all of them are executed or none are.
- Start of a transaction is a continuation point to which it can roll back.
- Issues:
  - Multiple concurrent clients: “isolation”.
    1. Each transaction accesses resources as if there were no other concurrent transactions.
    2. Modifications of the transaction are not visible to other transactions before it finishes.
    3. Modifications of other transactions are not visible during the transaction at all.
  - Server failures: “failure atomicity”.

COVERED LAST LECTURE: Transaction Features

- Recoverability: server should be able to “roll back” to the state before transaction execution.
- Serializability: transactions executing concurrently must be interleaved in such a way that the resulting state is equal to some serial execution of the transactions.
- Durability: effects of transactions are permanent.
  - A completed transaction is always persistent (though values may be changed by later transactions).
  - Modified resources must be held on persistent storage before the transaction can complete. This need not be just disk; it can include battery-backed RAM.

COVERED LAST LECTURE: Concurrency Control

- Maintain transaction serializability:
  - Establish order of concurrent transaction execution.
  - Interleave execution of operations to ensure serializability.
- Basic server operations: read or write.
- 3 mechanisms:
  - Locks.
  - Optimistic concurrency control.
  - Timestamp ordering.

COVERED LAST LECTURE: Locks

- Lock granularity: affects level of concurrency.
  - 1 lock per shared data item.
  - Shared Read
    - Exists when concurrent transactions are granted READ access.
    - Issued when a transaction wants to read and no exclusive lock is held on the item.
  - Exclusive Write
    - Exists when access is reserved for the locking transaction.
    - Used when potential for conflict exists.
    - Issued when a transaction wants to update unlocked data.
- Many Read locks are simultaneously possible for a given item, but only one Write lock.
- A transaction that requests a lock that cannot be granted must wait.

COVERED LAST LECTURE: Lock Implementation

- Server lock manager
  - Maintains a table of locks for server data items.
  - Lock and unlock operations.
  - Clients wait on a lock for given data until the data is released; then the client is signalled.
  - Each client’s request runs as a separate server thread.
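
The lock table and the shared-read / exclusive-write rules can be sketched as below. This is a minimal, non-blocking illustration, not the lecture's implementation: the class `LockTable` and its methods `try_lock` and `unlock_all` are hypothetical names, and instead of suspending a client thread the sketch simply returns `False` when the caller would have to wait.

```python
class LockTable:
    """Toy lock table: one lock per data item (hypothetical sketch)."""

    def __init__(self):
        # item -> {"mode": "R" or "W", "holders": set of transaction ids}
        self._locks = {}

    def try_lock(self, txn, item, mode):
        """Return True if the lock is granted, False if txn must wait."""
        entry = self._locks.get(item)
        if entry is None:
            # Unlocked item: grant whatever was requested.
            self._locks[item] = {"mode": mode, "holders": {txn}}
            return True
        holders = entry["holders"]
        if mode == "R" and entry["mode"] == "R":
            holders.add(txn)  # many readers may share the item
            return True
        if holders == {txn}:
            # The sole holder may re-request, or upgrade from R to W.
            if mode == "W":
                entry["mode"] = "W"
            return True
        return False  # conflicting request: the caller has to wait

    def unlock_all(self, txn):
        """Release every lock held by txn (e.g. at commit or abort)."""
        for item in list(self._locks):
            entry = self._locks[item]
            entry["holders"].discard(txn)
            if not entry["holders"]:
                del self._locks[item]
```

A real lock manager would block the requesting server thread and signal it on release; returning a boolean keeps the compatibility rules visible without any threading.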

COVERED LAST LECTURE: Deadlock

- Use of locks can lead to deadlock.
- Deadlock: each transaction waits for another transaction to release a lock, forming a wait cycle.
- Deadlock condition: cycle in the wait-for graph.
- Deadlock prevention and detection.
  - Require all locks to be acquired at once. Problems?
  - Ordering of data items: once a transaction locks an item, it cannot lock anything occurring earlier in the ordering.
- Deadlock resolution: lock timeout.

[Figure: wait-for graph over transactions T1, T2, and T3.]
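
Detecting the deadlock condition amounts to finding a cycle in the wait-for graph. The following is a minimal sketch using depth-first search; the function name `find_cycle` and the dictionary representation (transaction -> list of transactions it waits for) are assumptions made for illustration.

```python
def find_cycle(wait_for):
    """Return a list of transactions forming a wait cycle, or None.

    wait_for maps each transaction to the transactions it is waiting on.
    """
    WHITE, GREY, BLACK = 0, 1, 2   # unvisited, on current path, finished
    color = {}
    path = []

    def visit(t):
        color[t] = GREY
        path.append(t)
        for u in wait_for.get(t, ()):
            state = color.get(u, WHITE)
            if state == GREY:
                # u is already on the current path: we closed a cycle.
                return path[path.index(u):] + [u]
            if state == WHITE:
                found = visit(u)
                if found:
                    return found
        path.pop()
        color[t] = BLACK
        return None

    for t in list(wait_for):
        if color.get(t, WHITE) == WHITE:
            cycle = visit(t)
            if cycle:
                return cycle
    return None
```

For the three-transaction wait cycle, `find_cycle({"T1": ["T2"], "T2": ["T3"], "T3": ["T1"]})` returns `["T1", "T2", "T3", "T1"]`; any transaction on that list is a candidate to abort.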

COVERED LAST LECTURE: Optimistic Concurrency Control 1

- Assume that, most of the time, the probability of conflict is low.
- Transactions are allowed to proceed in parallel until the close transaction request from the client.
- Upon close transaction, check for conflict; if there is one, some transactions are aborted.

COVERED LAST LECTURE: Optimistic Concurrency 2

- Read phase
  - Each transaction has a tentative version of the data items it accesses.
    - Transaction reads data and stores it in local variables.
    - Any writes are made to local variables without updating the actual data.
  - Tentative versions allow transactions to abort without making their effects permanent.
- Validation phase
  - Executed upon close transaction.
  - Checks serial equivalence.
  - If validation fails, conflict resolution decides which transaction(s) to abort.

COVERED LAST LECTURE: Optimistic Concurrency 3

- Write phase
  - If a transaction is validated, all of its tentative versions are made permanent.
  - Read-only transactions commit immediately.
  - Write transactions commit only after their tentative versions are recorded in permanent storage.
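
The read, validation, and write phases can be sketched as follows. This is one simple validation rule (abort if any item the transaction read was overwritten by a transaction that committed after it started), chosen for illustration; the slides do not prescribe a specific rule, and the names `Store`, `Txn`, and `close` are hypothetical.

```python
class Store:
    """Committed data plus bookkeeping for validation (hypothetical)."""

    def __init__(self):
        self.data = {}
        self.commit_no = 0     # number of committed write transactions
        self.last_write = {}   # item -> commit number of its last write


class Txn:
    def __init__(self, store):
        self.store = store
        self.start_no = store.commit_no  # commits seen when we started
        self.reads = set()
        self.tentative = {}              # tentative versions (local only)

    # Read phase: work on tentative versions only.
    def read(self, key):
        if key in self.tentative:
            return self.tentative[key]
        self.reads.add(key)
        return self.store.data.get(key)

    def write(self, key, value):
        self.tentative[key] = value

    def close(self):
        """Validate, then write. Returns True on commit, False on abort."""
        # Validation phase: did anyone commit a write to something we read?
        for key in self.reads:
            if self.store.last_write.get(key, 0) > self.start_no:
                return False  # conflict: abort, tentative versions vanish
        # Write phase: make tentative versions permanent.
        if self.tentative:
            self.store.commit_no += 1
            for key, value in self.tentative.items():
                self.store.data[key] = value
                self.store.last_write[key] = self.store.commit_no
        return True
```

Two transactions that both read and then update the same item illustrate the outcome: the first to close commits, and the second fails validation and must be retried.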

COVERED LAST LECTURE: Timestamp Ordering

- Uses timestamps to order transactions accessing the same data items according to their starting times.
- Assigning timestamps:
  - Clock based: assign a globally unique timestamp to each transaction.
  - Monotonically increasing counter.
- Some timestamping is necessary to avoid “livelock”, where a transaction cannot acquire any locks because of an unfair waiting algorithm.

COVERED LAST LECTURE: Local versus Distributed Transactions

- Local transactions:
  - All transaction operations executed by a single server.
- Distributed transactions:
  - Involve multiple servers.
- Both local and distributed transactions can be simple or nested.
  - Nesting: increases the level of concurrency.

COVERED LAST LECTURE: Distributed Transactions 1

[Figure: two panels, “Simple Distributed Transaction” and “Nested Distributed Transaction”. Labels include client c, transactions T1, T2, and T3, and servers s1, s3, s11, s12, s111, s112, and s121.]

COVERED LAST LECTURE: Distributed Transactions 2

- Transaction coordinator
  - First server contacted by the client.
  - Responsible for aborting/committing.
  - Adding workers.
- Workers
  - Other servers involved report their results to the coordinator and follow its decisions.

COVERED LAST LECTURE: Atomicity in Distributed Transactions

- Harder: several servers involved.
- Atomic commit protocols
  - 1-phase commit
    - Example: coordinator sends “commit” or “abort” to workers; keeps re-broadcasting until it gets an ACK from all of them that the request was performed.
    - Inefficient.
    - How to ensure that all of the servers vote and that they all reach the same decision? It is simple if no errors occur, but the protocol must work correctly even when servers fail, messages are lost, etc.

COVERED LAST LECTURE: 2-Phase Commit 1

- First phase: voting
  - Each server votes to commit or abort the transaction.
- Second phase: carrying out the joint decision.
  - If any server votes to abort, the joint decision is to abort.

COVERED LAST LECTURE: 2-Phase Commit 2

- Phase I: Each participant votes for the transaction to be committed or aborted.
  - A participant must ensure that it can carry out its part of the commit protocol (prepared state).
  - Each participant saves in permanent storage all of the objects that it has altered in the transaction, so as to be in the “prepared state”.
- Phase II:
  - Every participant in the transaction carries out the joint decision.
  - If any one participant votes to abort, then the decision is to abort.
  - If all participants vote to commit, then the decision is to commit.

[Figure: message exchange between the coordinator and the workers.]

1. Coordinator: prepared to commit; sends “Can commit?”.
2. Workers: prepared to commit/abort; reply “Yes/No”.
3. Coordinator: committed; sends “Do commit/abort”.
4. Workers: committed/aborted; reply “Have committed/aborted”.
5. Coordinator: end.
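
The two phases can be sketched in a few lines. This is a failure-free illustration only: the `Worker` class, its method names (`can_commit`, `do_commit`, `do_abort`), and the function `two_phase_commit` are hypothetical, and timeouts, crashes, lost messages, and the writes to permanent storage that a real protocol needs are deliberately left out.

```python
class Worker:
    """A participant that votes and then follows the joint decision."""

    def __init__(self, name, vote_yes=True):
        self.name = name
        self.vote_yes = vote_yes
        self.state = "active"

    def can_commit(self):
        # Phase I: a real worker would first save its altered objects
        # to permanent storage before entering the prepared state.
        self.state = "prepared" if self.vote_yes else "aborted"
        return self.vote_yes

    def do_commit(self):
        self.state = "committed"

    def do_abort(self):
        self.state = "aborted"


def two_phase_commit(workers):
    """Run both phases as the coordinator; return the joint decision."""
    # Phase I: collect a vote from every worker.
    votes = [w.can_commit() for w in workers]
    decision = "commit" if all(votes) else "abort"
    # Phase II: every worker carries out the joint decision.
    for w in workers:
        if decision == "commit":
            w.do_commit()
        else:
            w.do_abort()
    return decision
```

A single “No” vote is enough to abort everywhere, which is exactly the rule stated for Phase II.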

Concurrency Control in Distributed Transactions 1

- Locks
  - Each server manages locks for its own data.
  - Locks cannot be released until the transaction has committed or aborted on all servers involved.
  - Lock managers in different servers set their locks independently, so different servers may impose different transaction orderings.
  - The different orderings can lead to cyclic dependencies between transactions and a distributed deadlock situation.
  - When a deadlock is detected, a transaction is aborted to resolve the deadlock.

Concurrency Control in Distributed Transactions 2

- Timestamp ordering
  - Globally unique timestamps.
    - Coordinator issues a globally unique TS and passes it around.
    - TS: <server id, local TS>
  - Servers are jointly responsible for ensuring that transactions are performed in a serially equivalent manner.
  - Clock synchronization issues.

Concurrency Control in Distributed Transactions 3

- Optimistic concurrency control
  - Each transaction should be validated before it is allowed to commit.
  - The validation at all servers takes place during the first phase of the 2-Phase Commit Protocol.

![Camelot [Spector et al.]](https://slidetodoc.com/presentation_image_h2/fac3d64fcf47651d2beeed4859535ce6/image-34.jpg)

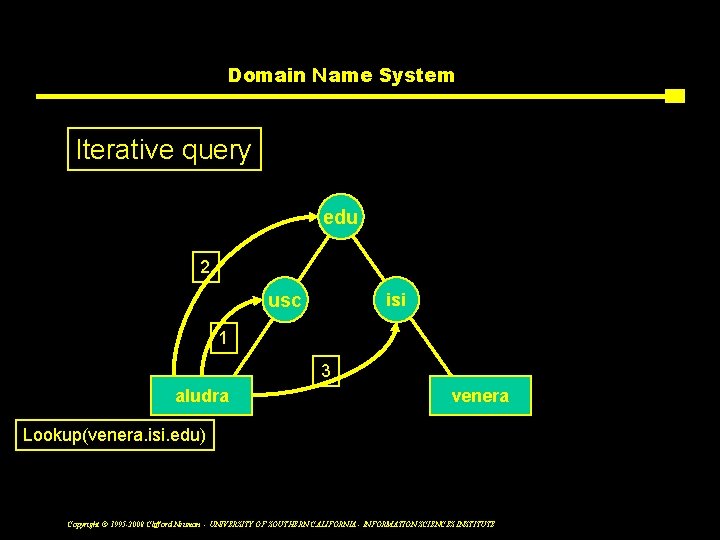

Camelot [Spector et al.]

- Supports execution of distributed transactions.
- Specialized functions:
  - Disk management
    - Allocation of large contiguous chunks.
  - Recovery management
    - Transaction abort and failure recovery.
  - Transaction management
    - Abort, commit, and nest transactions.

Distributed Deadlock 1

- When locks are used, deadlock can occur.
- Circular wait in the wait-for graph means deadlock.
- Centralized deadlock detection, prevention, and resolution schemes.
  - Examples:
    - Detection of a cycle in the wait-for graph.
    - Lock timeouts: the timeout value is hard to set, and transactions may be aborted unnecessarily.

Distributed Deadlock 2

- Much harder to detect, prevent, and resolve. Why?
  - No global view.
  - No central agent.
  - Communication-related problems
    - Unreliability.
    - Delay.
    - Cost.

Distributed Deadlock Detection

- Cycle in the global wait-for graph.
- Global graph can be constructed from local graphs: hard!
  - Servers need to communicate to find cycles.

![Distributed Deadlock Detection Algorithms 1 [Chandy et al.]](https://slidetodoc.com/presentation_image_h2/fac3d64fcf47651d2beeed4859535ce6/image-38.jpg)

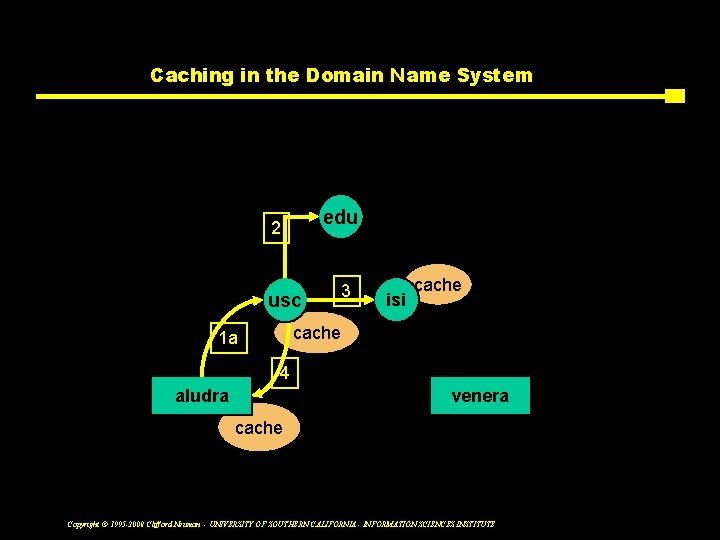

Distributed Deadlock Detection Algorithms 1

- [Chandy et al.]
- Message sequencing is preserved.
- Resource versus communication models.
  - Resource model
    - Processes, resources, and controllers.
    - Process requests resource from controller.
  - Communication model
    - Processes communicate directly via messages (request, grant, etc.) requesting resources.

Resource versus Communication Models

- In the resource model, controllers are the deadlock detection agents; in the communication model, processes are.
- In the resource model, a process cannot continue until all requested resources are granted; in the communication model, a process cannot proceed until it can communicate with at least one process it’s waiting for.
- Different models, different detection algorithms.

Distributed Deadlock Detection Schemes

- Graph-theory based.
- Resource model: deadlock when there is a cycle among dependent processes.
- Communication model: deadlock when there is a knot (all vertices that can be reached from i can also reach i) of waiting processes.

Deadlock Detection in Resource Model

- Use probe messages to follow edges of the wait-for graph (aka edge chasing).
- Probe carries transaction wait-for relations representing a path in the global wait-for graph.

Deadlock Detection Example

1. Server 1 detects that transaction T is waiting for U, which is waiting for data from server 2.
2. Server 1 sends probe T->U to server 2.
3. Server 2 gets the probe and checks if U is also waiting; if so (say for V), it adds V to the probe: T->U->V. If V is waiting for data from server 3, server 2 forwards the probe.
4. Paths are built one edge at a time. Before forwarding the probe, a server checks for a cycle (e.g., T->U->V->T).
5. If a cycle is detected, a transaction is aborted.
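
The probe-forwarding steps above can be sketched as a loop in which each iteration stands for one server extending the probe by one edge. The sketch assumes each waiting transaction waits for exactly one other transaction, and it collapses all servers' local knowledge into a single `waits_for` dictionary; the function name `chase` is hypothetical, and no real message passing is modeled.

```python
def chase(probe, waits_for):
    """Extend a probe one edge at a time; return the cycle or None.

    probe     -- initial path, e.g. ["T", "U"] for the probe T->U
    waits_for -- maps a transaction to the one it is waiting for
    """
    path = list(probe)
    while True:
        # The server holding the data that path[-1] wants checks
        # whether the current lock holder is itself waiting.
        nxt = waits_for.get(path[-1])
        if nxt is None:
            return None  # end of the chain: no deadlock on this path
        if nxt in path:
            # Adding this edge closes a cycle, e.g. T->U->V->T.
            return path[path.index(nxt):] + [nxt]
        path.append(nxt)  # forward the longer probe to the next server
```

With `waits_for = {"T": "U", "U": "V", "V": "T"}`, `chase(["T", "U"], waits_for)` returns `["T", "U", "V", "T"]`, matching the cycle in step 4.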

Replication requires synchronization

- Keep more than one copy of a data item.
- Technique for improving performance in distributed systems.
- In the context of concurrent access to data, replicate data for increased availability.
  - Improved response time.
  - Improved availability.
  - Improved fault tolerance.

Replication 2

- But nothing comes for free.
- What’s the tradeoff?
  - Consistency maintenance.
- Consistency maintenance approaches:
  - Lazy consistency (gossip approach).
    - An operation call is executed at just one replica; updating of other replicas happens by lazy exchange of “gossip” messages.
  - Quorum consensus: based on voting techniques.
  - Process group.

[Figure annotation: arrow labeled “Stronger consistency” alongside the list of approaches.]

Quorum Consensus

- Goal: prevent partitions from producing inconsistent results.
- Quorum: subgroup of replicas whose size gives it the right to carry out operations.
- Quorum consensus replication:
  - Update will propagate successfully to a subgroup of replicas.
  - Other replicas will have outdated copies but will be updated off-line.

![Weighted Voting [Gifford] 1](https://slidetodoc.com/presentation_image_h2/fac3d64fcf47651d2beeed4859535ce6/image-46.jpg)

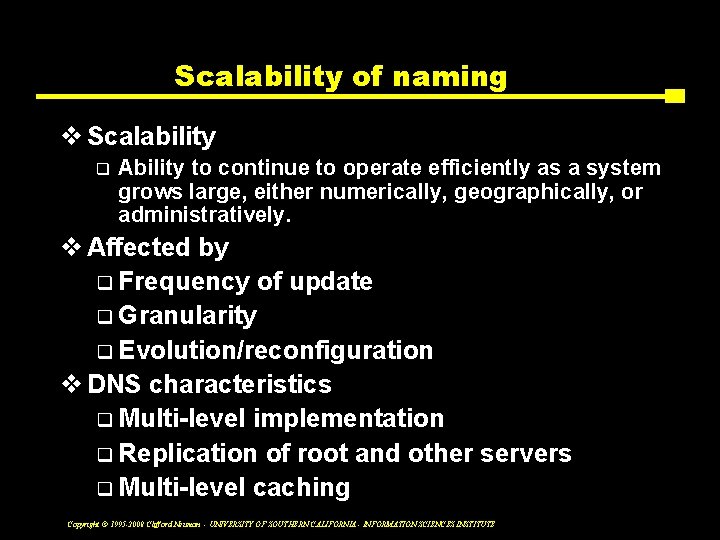

Weighted Voting [Gifford] 1

- Every copy is assigned a number of votes (weight assigned to a particular replica).
- Read: must obtain R votes to read from any up-to-date copy.
- Write: must obtain a write quorum of W before performing an update.

Weighted Voting 2

- W > 1/2 total votes, R + W > total votes.
- Ensures non-null intersection between every read quorum and write quorum.
- Read quorum is guaranteed to have a current copy.
- Freshness is determined by version numbers.

Weighted Voting 3

- On read:
  - Try to find enough copies, i.e., total votes no less than R. Not all copies need to be current.
  - Since the read quorum overlaps with every write quorum, at least one copy is current.
- On write:
  - Try to find a set of up-to-date replicas whose votes total no less than W.
  - If there is no sufficient quorum of up-to-date replicas, current copies replace the out-of-date ones, and then the update is applied.
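
The two vote constraints and the read rule can be checked with a short sketch. The function names `valid_quorums` and `read_quorum`, the replica names, and the vote assignment are all made up for illustration; each reachable replica is represented as a `(version, value)` pair.

```python
def valid_quorums(votes, r, w):
    """Check W > 1/2 total votes and R + W > total votes."""
    total = sum(votes.values())
    return 2 * w > total and r + w > total


def read_quorum(reachable, votes, r):
    """Return the freshest value if the reachable replicas hold >= R votes.

    reachable maps replica name -> (version number, value).
    Returns None when a read quorum cannot be assembled.
    """
    gathered = sum(votes[name] for name in reachable)
    if gathered < r:
        return None
    # Freshness is determined by version numbers: take the highest.
    version, value = max(reachable.values(), key=lambda vv: vv[0])
    return value
```

For example, with votes `{"a": 2, "b": 1, "c": 1}` (4 in total), R = 2 and W = 3 satisfy both constraints. If the last write reached replicas a and c, a read that can only reach b and c still gathers 2 votes and sees the current copy on c.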

Time in Distributed Systems

- Notion of time is critical.
- “Happened before” notion.
  - Example: concurrency control using TSs.
  - “Happened before” notion is not straightforward in distributed systems.
    - No guarantees of synchronized clocks.
    - Communication latency.

Event Ordering

- Lamport defines a partial ordering (→):
  1. If X and Y are events in the same process, and X comes before Y, then X→Y.
  2. If X is the sending of a message and Y is the receipt of that message, then X→Y.
  3. If X→Y and Y→Z, then X→Z.
  4. X and Y are concurrent if neither X→Y nor Y→X.

Causal Ordering

- “Happened before” is also called causal ordering.
- In summary, it is possible to draw a happened-before relationship between events if they happen in the same process or there’s a chain of messages between them.

Logical Clocks

- Monotonically increasing counter.
- No relation with real clock.
- Each process keeps its own logical clock Cp, used to timestamp events.

Causal Ordering and Logical Clocks

1. Cp is incremented before each event: Cp = Cp + 1.
2. When p sends message m, it piggybacks t = Cp.
3. When q receives (m, t), it computes Cq = max(Cq, t) before timestamping the message receipt event.

Example: textbook page 398.
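
The three rules translate almost directly into code. In this minimal sketch the class name `Process` and its methods are hypothetical, and sending and receiving are each treated as an event, so rule 1 applies to both.

```python
class Process:
    """A process with a Lamport logical clock (illustrative sketch)."""

    def __init__(self, pid):
        self.pid = pid
        self.clock = 0  # Cp

    def local_event(self):
        self.clock += 1          # rule 1: increment before each event
        return self.clock

    def send(self):
        self.clock += 1          # sending is itself an event (rule 1)
        return self.clock        # rule 2: piggyback t = Cp on the message

    def receive(self, t):
        self.clock = max(self.clock, t)  # rule 3: Cq = max(Cq, t)
        self.clock += 1                  # then timestamp the receipt event
        return self.clock
```

If p performs one local event and then sends, the message carries t = 2; a fresh process q that receives it timestamps the receipt with 3, so the send is ordered before the receive.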

Total Ordering

- Extending partial to total order.
- Global timestamps:
  - (Ta, pa), where Ta is the local TS and pa is the process id.
  - (Ta, pa) < (Tb, pb) iff Ta < Tb, or Ta = Tb and pa < pb.
  - Total order is consistent with the partial order.
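
The comparison rule for global timestamps is a lexicographic comparison, which Python tuples already provide. The sketch below spells the rule out in a hypothetical helper `before` and checks it against the built-in tuple ordering; the sample timestamps are made up.

```python
def before(a, b):
    """(Ta, pa) < (Tb, pb) iff Ta < Tb, or Ta = Tb and pa < pb."""
    ta, pa = a
    tb, pb = b
    return ta < tb or (ta == tb and pa < pb)


# Hypothetical events as (local timestamp, process id) pairs.
events = [(3, 2), (3, 1), (2, 5)]

# Built-in tuple comparison is lexicographic, so sorting the pairs
# yields the same total order that the rule defines.
ordered = sorted(events)
```

Ties in the local timestamp are broken by process id, so no two distinct events compare as equal.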

![Virtual Time [Jefferson]](https://slidetodoc.com/presentation_image_h2/fac3d64fcf47651d2beeed4859535ce6/image-55.jpg)

Virtual Time [Jefferson]

- Time warp mechanism.
- May or may not have connection with real time.
- Uses optimistic approach, i.e., events and messages are processed in the order received: “look-ahead”.

Local Virtual Clock

- Process virtual clock is set to the TS of the next message in the input queue.
- If the next message’s TS is in the past, rollback!
  - Can happen due to different computation rates, communication latency, and unsynchronized clocks.

Rolling Back

- Process goes back to TS(last message).
- Cancels all intermediate effects of events whose TS > TS(last message).
- Then, executes forward.
- Rolling back is expensive!
  - Messages may have been sent to other processes, causing them to send messages, etc.

Anti-Messages 1

- For every message, there is an anti-message with the same content but different sign.
- When sending a message, the message goes to the receiver’s input queue and a copy with “-” sign is enqueued in the sender’s output queue.
- Message is retained for use in case of roll back.

Anti-Messages 2

- Message + its anti-message = 0 when in the same queue.
- Processes must keep a log to “undo” operations.
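
The annihilation rule (message + anti-message = 0 in the same queue) can be sketched as an enqueue operation. The tuple layout `(sign, timestamp, payload)` and the function name `enqueue` are assumptions made for this illustration; rollback of the receiver's state is not modeled.

```python
def enqueue(queue, msg):
    """Add msg to queue, annihilating it against its opposite if present.

    msg is (sign, timestamp, payload) with sign +1 (message)
    or -1 (anti-message).
    """
    sign, ts, payload = msg
    opposite = (-sign, ts, payload)
    if opposite in queue:
        queue.remove(opposite)  # the pair cancels out and both disappear
    else:
        queue.append(msg)       # no partner yet: keep it in the queue
```

An anti-message that arrives before its positive partner simply waits in the queue and cancels the message when it shows up.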

Implementation

- Local control.
- Global control
  - How to make sure the system as a whole progresses.
  - “Committing” errors and I/O.
  - Avoid running out of memory.

Global Virtual Clock

- Snapshot of the system at a given real time.
- Minimum of all local virtual times.
- Lower bound on how far processes roll back.
- Purge state before GVT.
- GVT is computed concurrently with the rest of the time warp mechanism.
  - Tradeoff?
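
A minimal sketch of the GVT computation and the purge step follows. The function names are hypothetical. The optional `in_transit_timestamps` argument goes beyond the slide: Jefferson's definition also takes the minimum over timestamps of messages that have been sent but not yet processed, and it is included here as a labeled extension. The purge follows the slide literally and drops every saved state before GVT.

```python
def global_virtual_time(local_virtual_times, in_transit_timestamps=()):
    """Minimum of all local virtual times (and unprocessed message TSs)."""
    candidates = list(local_virtual_times) + list(in_transit_timestamps)
    return min(candidates)


def purge_before(saved_states, gvt):
    """Keep only saved (virtual_time, state) pairs at or after GVT.

    No process can roll back earlier than GVT, so older state can go.
    """
    return [(ts, state) for ts, state in saved_states if ts >= gvt]
```

Because GVT is a lower bound on any future rollback, purging state before it is how the mechanism avoids running out of memory.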

ISIS 1

- Goal: provide a programming environment for development of distributed systems.
- Assumptions:
  - DS as a set of processes with disjoint address spaces, communicating over a LAN via MP.
  - Processes and nodes can crash.
  - Partitions may occur.

ISIS 2

- Distinguishing feature: group communication mechanisms
  - Process group: processes cooperating in implementing a task.
  - A process can belong to multiple groups.
  - Dynamic group membership.

Virtual Synchrony

- Real synchronous systems
  - Events (e.g., message delivery) occur in the same order everywhere.
  - Expensive and not very efficient.
- Virtual synchronous systems
  - Illusion of synchrony.
  - Weaker ordering guarantees when applications allow it.

Atomic Multicast 1

- All destinations receive a message or none.
- Primitives:
  - ABCAST: delivers messages atomically and in the same order everywhere.
  - CBCAST: causally ordered multicast.
    - “Happened before” order.
    - Messages from a given process are delivered in order.
  - GBCAST
    - Used by the system to manage group addressing.

Other Features

- Process groups
  - Group membership management.
- Broadcast and group RPC
  - RPC-like interface to CBCAST, ABCAST, and GBCAST protocols.
  - Delivery guarantees
    - Caller indicates how many responses are required.
      - No responses: asynchronous.
      - 1 or more: synchronous.

Implementation

- Set of library calls on top of UNIX.
- Commercially available.
- In the paper, example of a distributed DB implementation using ISIS.
- HORUS: extension to WANs.

CSci 555: Advanced Operating Systems

Lecture 5 – September 19 2008

Naming and Binding

PRELIMINARY SLIDES

Dr. Clifford Neuman, University of Southern California, Information Sciences Institute

Naming Concepts

- Name
  - What you call something.
- Address
  - Where it is located.
- Route
  - How one gets to it.

What is http://www.isi.edu/~bcn ?

- But it is not that clear anymore; it depends on perspective. A name from one perspective may be an address from another.
  - Perspective means layer of abstraction.

What are things we name

- Users
  - To direct, and to identify.
- Hosts (computers)
  - High level and low level.
- Services
  - Service and instance.
- Files and other “objects”
  - Content and repository.
- Groups
  - Of any of the above.

How we name things

- Host-Based Naming
  - Host name is a required part of the object name.
- Global Naming
  - Must look up the name in a global database to find the address.
  - Name transparency.
- User/Object Centered Naming
  - Namespace is centered around the user or object.
- Attribute-Based Naming
  - Object identified by unique characteristics.
  - Related to resource discovery / search / indexes.

Namespace

- A name space maps $\Sigma^{*} \rightarrow X \in O$ at a particular point in time.
- The rest of the definition, and even some of the above, is open to discussion/debate.
- What is a “flat namespace”?
  - Implementation issue.

Case Studies

- Host Table
  - Flat namespace (?)
  - Global namespace (?)
  - Lookup as shown on the slide: GetHostByName(usc.arpa) { scan(host file); return(matching entry); }
- Grapevine
  - Two-level, iterative lookup.
  - Clearinghouse: 3 level.
  - Lookup as shown on the slide: GetHostByName(host.sc)
- Domain name system
  - Arbitrary depth.
  - Iterative or recursive (chained) lookup.
  - Multi-level caching.

[Figure: Grapevine lookup diagram with nodes labeled gv, es, and sc.]

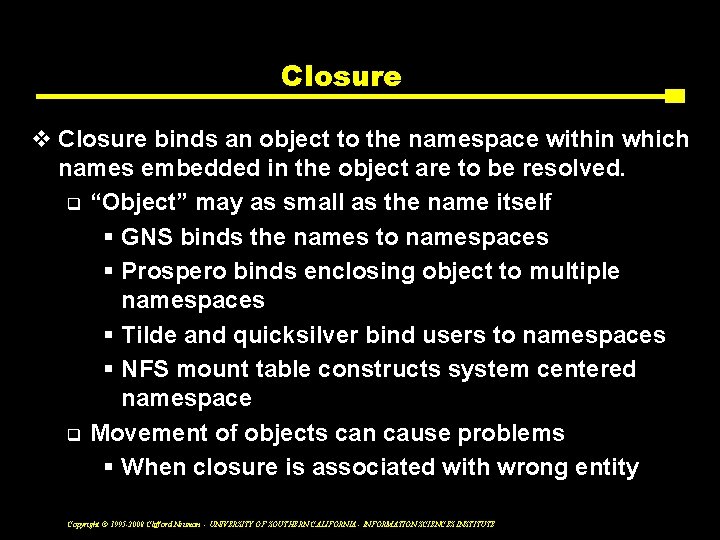

Domain Name System
- Iterative query
- [Figure: Lookup(venera.isi.edu) over a tree with nodes edu, usc, isi, aludra, venera; steps numbered 1 to 3]
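In an iterative query the client does the walking: each server either answers or refers the client to the next server. The sketch below models that with in-memory tables; the server names, the table layout, and the addresses are assumptions for illustration, not real DNS data or a real resolver API.

```python
# Hypothetical zone data: each server maps a label to a referral or an address.
SERVERS = {
    "root":       {"edu": ("referral", "edu-server")},
    "edu-server": {"isi": ("referral", "isi-server"),
                   "usc": ("referral", "usc-server")},
    "isi-server": {"venera": ("address", "192.0.2.10")},
    "usc-server": {"aludra": ("address", "192.0.2.20")},
}

def iterative_lookup(name, start="root"):
    """Resolve name by following referrals; return (address, servers contacted)."""
    labels = list(reversed(name.split(".")))   # venera.isi.edu -> edu, isi, venera
    server, contacted = start, []
    for i, label in enumerate(labels):
        contacted.append(server)               # the client contacts this server itself
        entry = SERVERS.get(server, {}).get(label)
        if entry is None:
            return None, contacted             # name does not exist
        kind, value = entry
        if kind == "address":
            is_last = (i == len(labels) - 1)
            return (value if is_last else None), contacted
        server = value                         # referral: ask the next server
    return None, contacted                     # ran out of labels without an answer

print(iterative_lookup("venera.isi.edu"))
```

The list of contacted servers makes the cost visible: one round trip from the client per level of the hierarchy.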

Caching in the Domain Name System
- Chained query
- [Figure: Lookup(venera.isi.edu) over nodes edu, usc, isi, aludra, venera, with caches shown at two points; steps labeled 1 through 4 (one label reads “1 a”)]
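In a chained (recursive) query each server forwards the request on the client's behalf, and any server along the chain can cache the answer on the way back. A rough sketch under those assumptions follows; the `NameServer` class, its fields, and the address are hypothetical, and real DNS caches also expire entries after a TTL, which is omitted here.

```python
class NameServer:
    def __init__(self, name, records=None, children=None):
        self.name = name
        self.records = records or {}     # leaf label -> address
        self.children = children or {}   # label -> NameServer for the sub-domain
        self.cache = {}                  # full name -> address learned earlier
        self.queries = 0                 # how many requests reached this server

    def resolve(self, labels, full_name):
        """Answer from cache, from local records, or by forwarding down the chain."""
        self.queries += 1
        if full_name in self.cache:
            return self.cache[full_name]
        label, rest = labels[0], labels[1:]
        if not rest:
            answer = self.records.get(label)
        elif label in self.children:
            answer = self.children[label].resolve(rest, full_name)
        else:
            answer = None
        if answer is not None:
            self.cache[full_name] = answer   # cache on the way back
        return answer

isi = NameServer("isi", records={"venera": "192.0.2.10"})
edu = NameServer("edu", children={"isi": isi})
root = NameServer("root", children={"edu": edu})

def lookup(name):
    return root.resolve(list(reversed(name.split("."))), name)

first = lookup("venera.isi.edu")
second = lookup("venera.isi.edu")   # answered from the first server's cache
```

After the second call only the first server's query count has increased, which is the effect multi-level caching is meant to produce.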

Scalability of naming
- Scalability
  - Ability to continue to operate efficiently as a system grows large, either numerically, geographically, or administratively.
- Affected by
  - Frequency of update
  - Granularity
  - Evolution/reconfiguration
- DNS characteristics
  - Multi-level implementation
  - Replication of root and other servers
  - Multi-level caching

Closure
- Closure binds an object to the namespace within which names embedded in the object are to be resolved.
  - “Object” may be as small as the name itself
    - GNS binds the names to namespaces
    - Prospero binds enclosing object to multiple namespaces
    - Tilde and Quicksilver bind users to namespaces
    - NFS mount table constructs system centered namespace
  - Movement of objects can cause problems
    - When closure is associated with the wrong entity
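The difference between binding the closure to the object and binding it to the user shows up once an object moves. The sketch below is only an illustration of that point; the `Obj` class, the two namespaces, and the paths are invented for the example and do not model any of the systems listed above.

```python
from dataclasses import dataclass, field

@dataclass
class Obj:
    """An object holding embedded names plus the namespace they resolve in."""
    embedded_names: list
    closure: dict = field(default_factory=dict)

    def resolve(self, name):
        # The closure travels with the object.
        return self.closure.get(name)

# Two hypothetical namespaces in which the same name means different things.
NS_SITE_A = {"bin/cc": "site-a:/usr/bin/cc"}
NS_SITE_B = {"bin/cc": "site-b:/opt/bin/cc"}

script = Obj(["bin/cc"], closure=NS_SITE_A)

def resolve_in_user_namespace(name, user_namespace):
    """Alternative: the closure belongs to whoever is using the object."""
    return user_namespace.get(name)

object_bound = script.resolve("bin/cc")                        # same answer anywhere
user_bound = resolve_in_user_namespace("bin/cc", NS_SITE_B)    # depends on the user
```

If the script was written against site A's namespace, resolving its embedded name in a site B user's namespace silently picks a different target, which is the kind of problem the last bullet describes.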

Other implementations of naming
- Broadcast
  - Limited scalability, but faster local response
- Prefix tables
  - Essentially a form of caching
- Capabilities
  - Combines security and naming
  - Traditional name service built over capability based addresses
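A prefix table can be pictured as a small client-side map from path prefixes to the servers that hold them, consulted by longest match. The following sketch assumes that picture; the table contents and server names are hypothetical.

```python
# Hypothetical client-side table: path prefix -> server believed to hold it.
PREFIX_TABLE = {
    "/": "server-root",
    "/usr": "server-a",
    "/usr/local": "server-b",
}

def locate(path, table=PREFIX_TABLE):
    """Return (server, remaining path) for the longest matching prefix."""
    best = None
    for prefix in table:
        # Match whole path components only, so "/usr" does not match "/usrx".
        if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    if best is None:
        return None
    remainder = path[len(best):].lstrip("/")
    return table[best], remainder

print(locate("/usr/local/bin/ls"))
```

Because the table only remembers where prefixes were found before, a stale entry is handled like any cache miss: the client falls back to a slower lookup and updates the entry.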

Advanced Name Systems
- DEC’s Global Naming
  - Support for reorganization is the key idea
  - Little coordination needed in advance
- Half Closure
  - Names are all tagged with namespace identifiers
    - DID - Directory Identifier
    - Hidden part of name - makes it global
    - Upon reorganization, new DID assigned
    - Old names relative to old root
  - But the DIDs must be unique - how do we assign?
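To see why tagging names with a DID helps reorganization, consider two independently built name trees that are later merged under a new root. The sketch below is an illustration of the idea only, not the actual DEC design: the `NameService` class, the `merge` helper, the DID strings, and the directory contents are all assumptions.

```python
class NameService:
    def __init__(self, root_did, tree):
        self.root_did = root_did
        self.tree = tree                  # nested dicts; leaves are addresses
        self.did_paths = {root_did: ()}   # DID -> path from the current root

    def resolve(self, did, path):
        """Resolve a name given as (DID, path relative to that directory)."""
        node = self.tree
        for label in self.did_paths[did] + tuple(path):
            node = node[label]
        return node

def merge(new_did, a, a_label, b, b_label):
    """Reorganize: place two old roots under a new root with a fresh DID."""
    merged = NameService(new_did, {a_label: a.tree, b_label: b.tree})
    for did, p in a.did_paths.items():
        merged.did_paths[did] = (a_label,) + p   # old DID now names a subtree
    for did, p in b.did_paths.items():
        merged.did_paths[did] = (b_label,) + p
    return merged

org_a = NameService("did-311", {"src": {"alice": "addr-1"}})
org_b = NameService("did-552", {"lab": {"bob": "addr-2"}})
merged = merge("did-999", org_a, "org-a", org_b, "org-b")

old_name = merged.resolve("did-311", ["src", "alice"])          # old name still works
new_name = merged.resolve("did-999", ["org-b", "lab", "bob"])   # name from new root
```

Old names keep resolving because they are interpreted relative to the directory their DID identifies, which only works if no two directories ever receive the same DID.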

Prospero Directory Service
- Multiple namespaces, each centered around a “root” node that is specific to that namespace.
  - Closure binds objects to this “root” node.
- Layers of naming
  - User level names are “object” centered
  - Objects still have an address which is global
  - Namespaces also have global addresses
- Customization in Prospero
  - Filters create user level derived namespaces on the fly
  - Union links support merging of views
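The two customization mechanisms can be pictured with plain dictionaries standing in for directories. This is a loose sketch, not Prospero's interface: the function names, the directory contents, and the rule that earlier directories win on a name clash are all assumptions made for the example.

```python
def union_view(*directories):
    """Merge several directories into one view (union-link style)."""
    view = {}
    for directory in directories:
        for name, target in directory.items():
            view.setdefault(name, target)   # assumed rule: earlier directories win
    return view

def apply_filter(directory, predicate):
    """Derive a new view on the fly by keeping only the matching entries."""
    return {n: t for n, t in directory.items() if predicate(n, t)}

# Hypothetical directories; targets stand in for global object addresses.
my_papers = {"naming.ps": "host-a:/papers/naming.ps"}
group_papers = {"naming.ps": "host-b:/pub/naming.ps",
                "caching.ps": "host-b:/pub/caching.ps",
                "notes.txt": "host-b:/pub/notes.txt"}

merged = union_view(my_papers, group_papers)
only_ps = apply_filter(merged, lambda name, target: name.endswith(".ps"))
```

Neither operation copies or moves any object; both only build a different user-level view over the same global addresses.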

Resource Discovery
- Similar to naming
  - Browsing related to directory services
  - Indexing and search similar to attribute based naming
- Attribute based naming
  - Profile
  - Multi-structured naming
- Search engines
- Computing resource discovery

The Web
- Object handles
  - Uniform Resource Identifiers (URIs)
  - Uniform Resource Locators (URLs)
  - Uniform Resource Names (URNs)
- XML
  - Definitions provide a form of closure
    - Conceptual level rather than the “namespace” level.

LDAP
- Manage information about users, services
  - Lighter weight than X.500 DAP
    - Heavier than DNS
  - Applications have conventions on where to look
    - Often data is duplicated because of multiple conventions
  - Performance enhancements not as well defined
    - Caching harder because of less constrained patterns of access