Advanced .NET Programming I, 12th Lecture

- Slides: 45

Advanced .NET Programming I, 12th Lecture
http://d3s.mff.cuni.cz/~jezek
Pavel Ježek, pavel.jezek@d3s.mff.cuni.cz
Charles University in Prague, Faculty of Mathematics and Physics

Some of the slides are based on University of Linz .NET presentations. © University of Linz, Institute for System Software, 2004; published under the Microsoft Curriculum License (http://www.msdnaa.net/curriculum/license_curriculum.aspx).


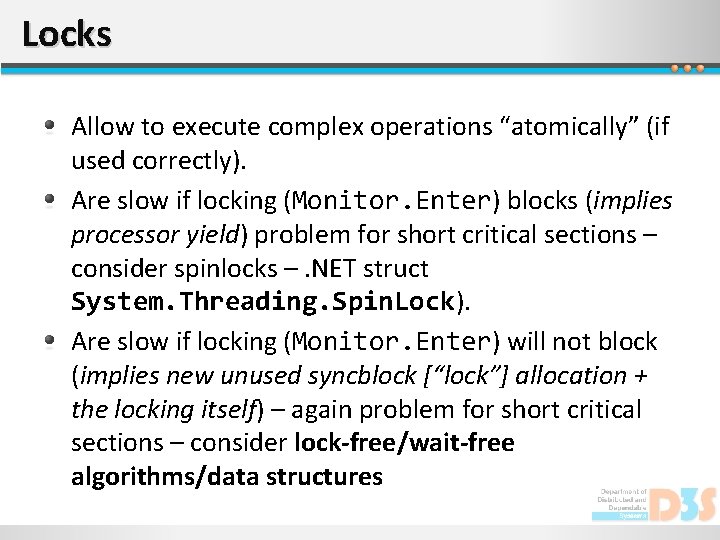

Locks

- Allow complex operations to be executed "atomically" (if used correctly).
- Are slow if locking (Monitor.Enter) blocks, because blocking implies a processor yield. This is a problem for short critical sections; consider spinlocks (.NET struct System.Threading.SpinLock).
- Are slow even if locking (Monitor.Enter) does not block, because it implies allocating a new, previously unused syncblock ("lock") plus the locking itself. This is again a problem for short critical sections; consider lock-free/wait-free algorithms and data structures.
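As a rough illustration of the spinlock suggestion, the sketch below guards a very short critical section with System.Threading.SpinLock. The class name SpinLockSketch, the counter, and the thread counts are hypothetical and chosen only for this example; it is a minimal sketch, not a recommendation for any particular workload.

```csharp
using System;
using System.Threading;

static class SpinLockSketch
{
    // SpinLock is a struct: keep it in a non-readonly field and never copy it.
    private static SpinLock spin = new SpinLock(enableThreadOwnerTracking: false);
    private static int counter;

    // Adds 1 to the counter inside a very short critical section.
    private static void Increment()
    {
        bool lockTaken = false;
        try
        {
            spin.Enter(ref lockTaken);   // busy-waits instead of blocking in Monitor.Enter
            counter++;
        }
        finally
        {
            if (lockTaken) spin.Exit();
        }
    }

    // Runs `threads` workers, each incrementing `perThread` times; returns the final count.
    public static int Run(int threads, int perThread)
    {
        counter = 0;
        var workers = new Thread[threads];
        for (int i = 0; i < threads; i++)
        {
            workers[i] = new Thread(() =>
            {
                for (int j = 0; j < perThread; j++) Increment();
            });
            workers[i].Start();
        }
        foreach (var w in workers) w.Join();
        return counter;
    }

    public static void Main()
    {
        Console.WriteLine(Run(4, 100000));   // prints 400000
    }
}
```

Spinning only pays off when the protected region is a handful of instructions; for longer sections a blocking lock is usually the safer choice.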

Journey to the Lock-free/Wait-free World

What is the C#/.NET memory model? Are there any guarantees about a thread's behavior (operation atomicity and ordering) from the point of view of other threads?

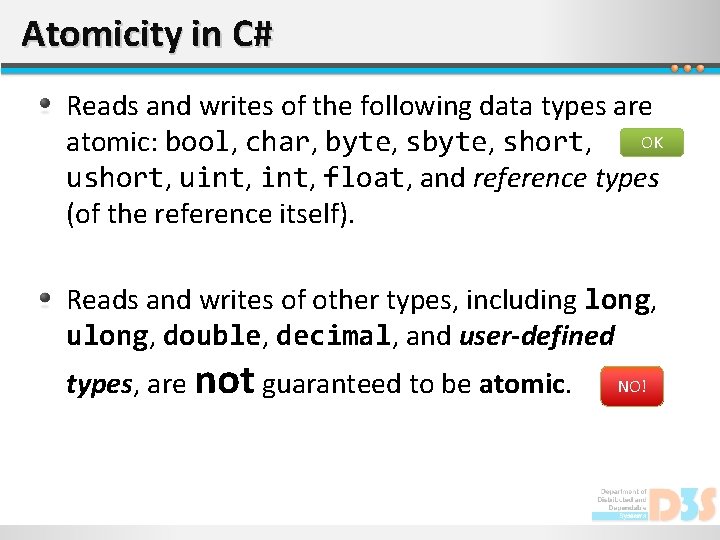

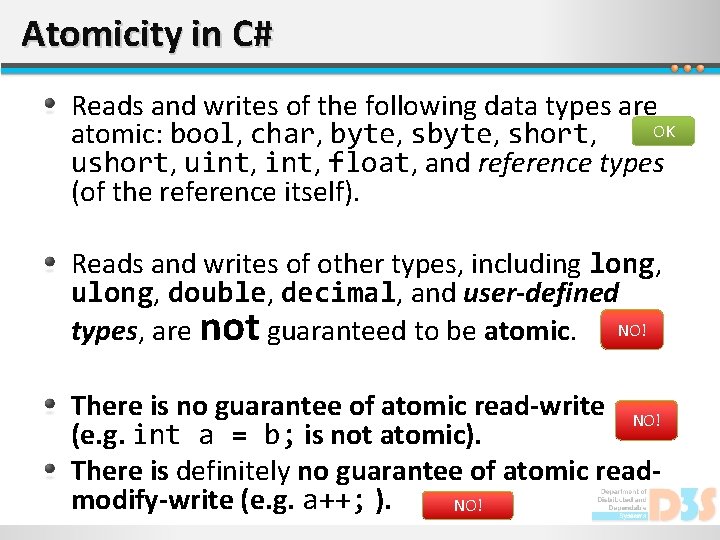

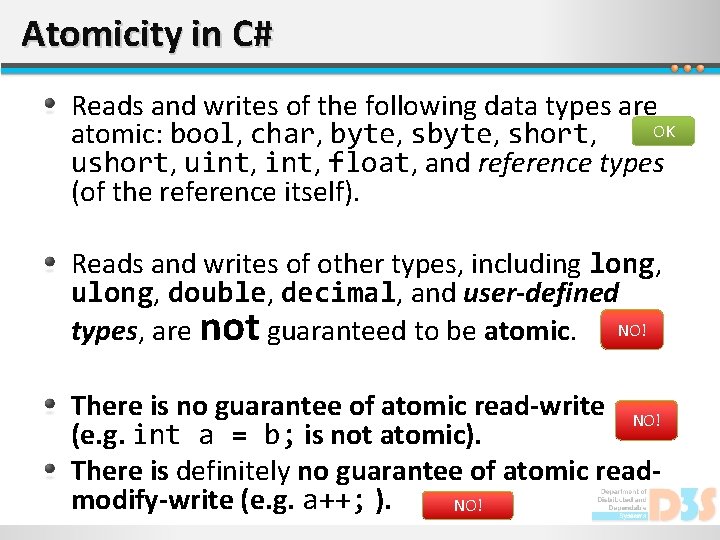



Atomicity in C#

- OK: Reads and writes of the following data types are atomic: bool, char, byte, sbyte, short, ushort, uint, int, float, and reference types (the reference itself).
- NO: Reads and writes of other types, including long, ulong, double, decimal, and user-defined types, are not guaranteed to be atomic.
- NO: There is no guarantee of an atomic read-write (e.g. int a = b; is not atomic as a whole).
- NO: There is definitely no guarantee of an atomic read-modify-write (e.g. a++;).

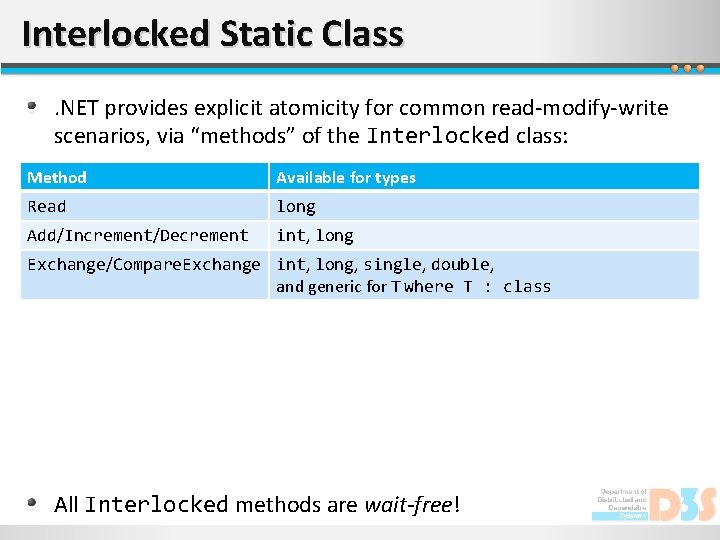

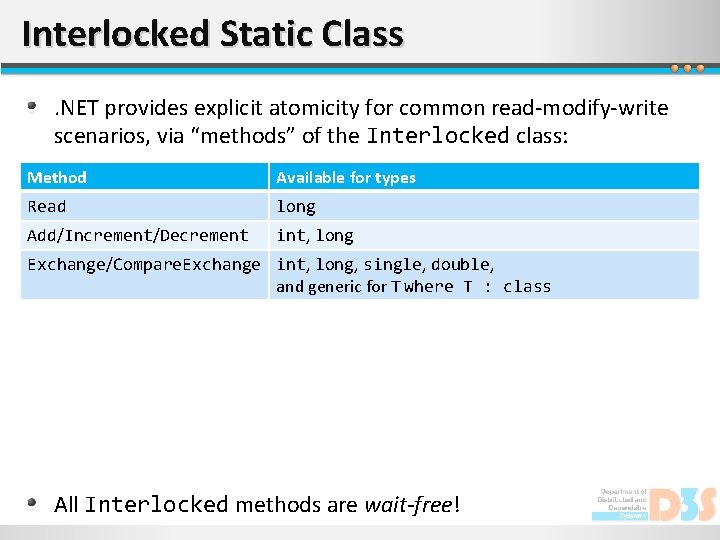

Interlocked Static Class

.NET provides explicit atomicity for common read-modify-write scenarios via "methods" of the Interlocked class:

| Method | Available for types |
|---|---|
| Read | long |
| Add/Increment/Decrement | int, long |
| Exchange/CompareExchange | int, long, float (Single), double, and generic for T where T : class |

All Interlocked methods are wait-free!
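A minimal sketch of how the Interlocked methods listed above might replace a racy read-modify-write. The class InterlockedSketch, its fields, and the workload are hypothetical names made up for this illustration. Note that the individual Interlocked calls are atomic, while the retry loop built around CompareExchange is only lock-free, because a thread may have to retry.

```csharp
using System;
using System.Threading;

static class InterlockedSketch
{
    private static int hits;      // updated with Interlocked.Increment
    private static int maximum;   // updated with a CompareExchange retry loop

    // Atomically raises `maximum` to `candidate` if candidate is larger.
    private static void UpdateMax(int candidate)
    {
        int seen = maximum;
        while (candidate > seen)
        {
            // Writes candidate only if maximum still equals `seen`;
            // always returns the value that was actually stored before the call.
            int previous = Interlocked.CompareExchange(ref maximum, candidate, seen);
            if (previous == seen) return;   // our write succeeded
            seen = previous;                // someone else changed it: re-check and retry
        }
    }

    public static (int Hits, int Max) Run(int threads, int perThread)
    {
        hits = 0;
        maximum = 0;
        var workers = new Thread[threads];
        for (int i = 0; i < threads; i++)
        {
            int id = i;   // private copy for the closure
            workers[i] = new Thread(() =>
            {
                for (int j = 1; j <= perThread; j++)
                {
                    Interlocked.Increment(ref hits);   // replaces the racy hits++
                    UpdateMax(id * perThread + j);
                }
            });
            workers[i].Start();
        }
        foreach (var w in workers) w.Join();
        return (hits, maximum);
    }

    public static void Main()
    {
        var r = Run(4, 100000);
        Console.WriteLine($"{r.Hits} {r.Max}");   // prints "400000 400000"
    }
}
```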

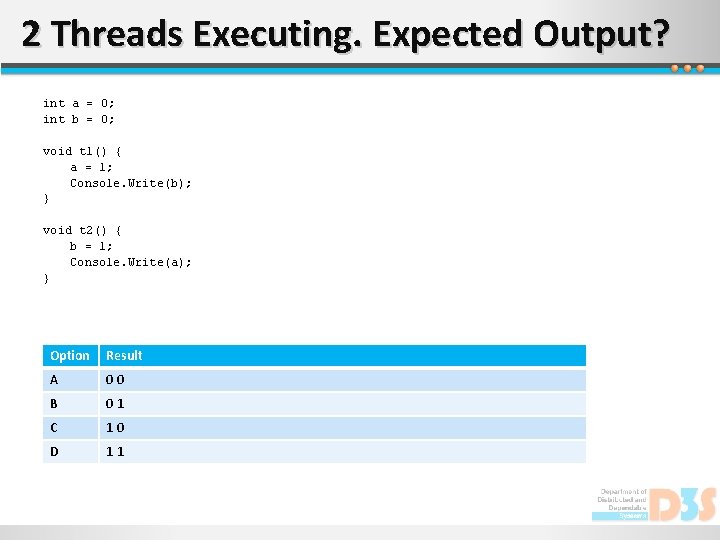

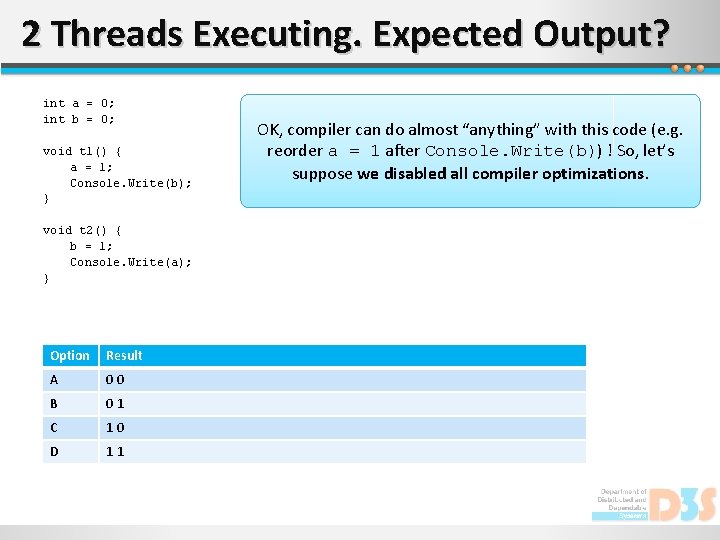

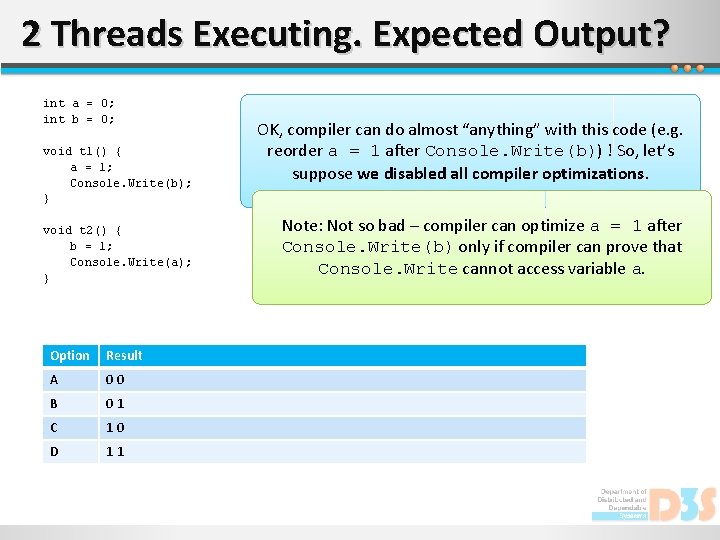

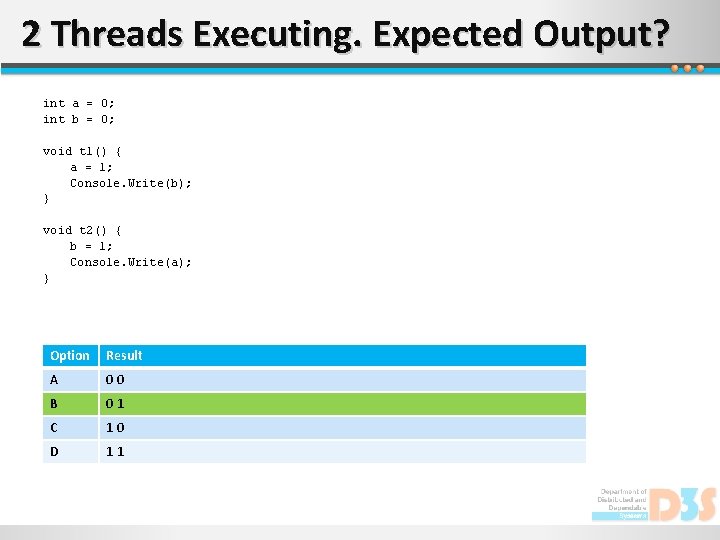

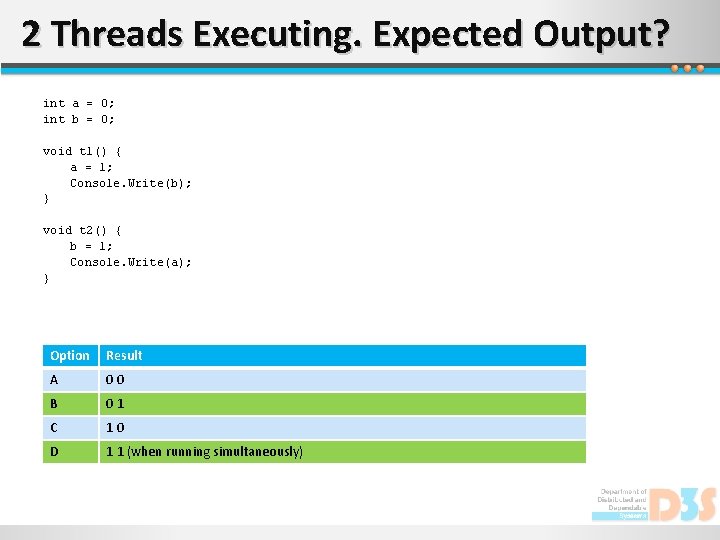

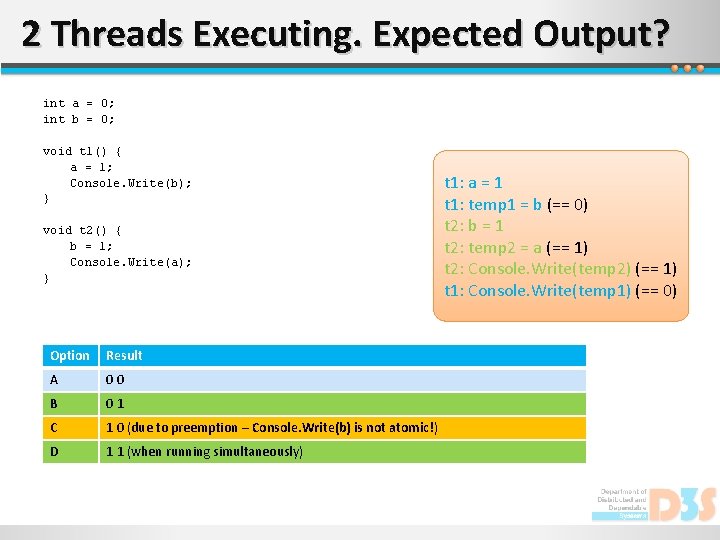

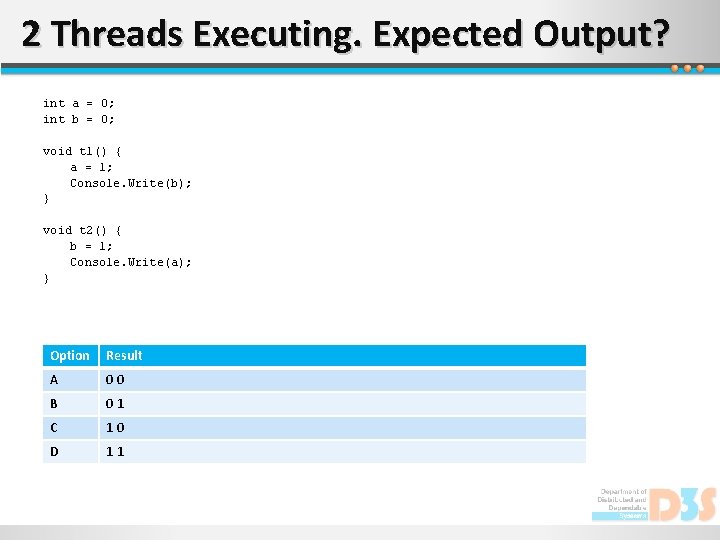

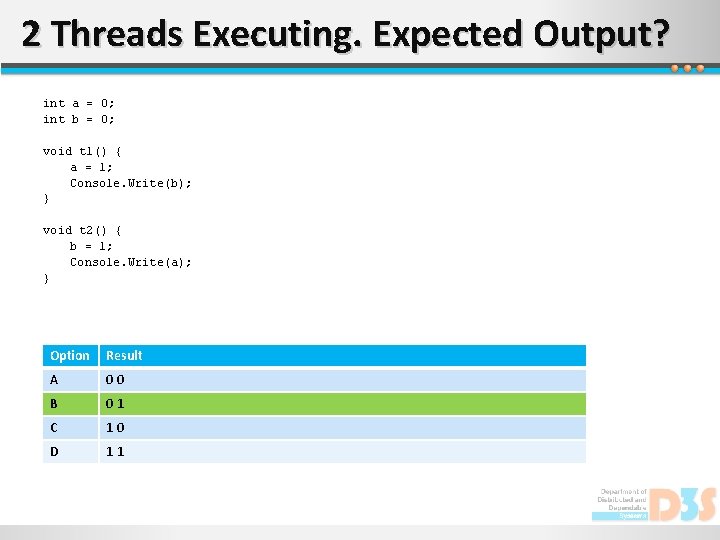

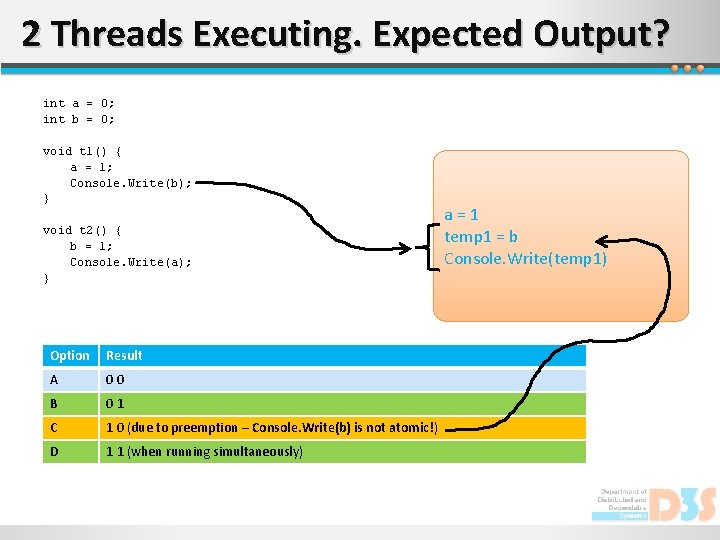

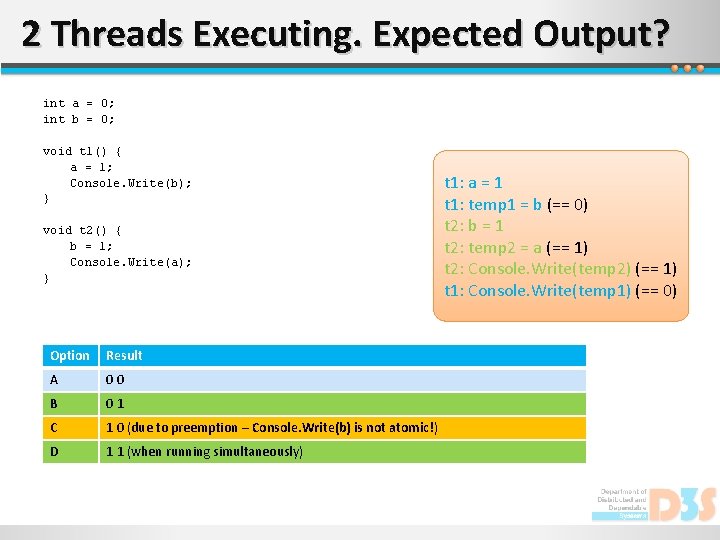

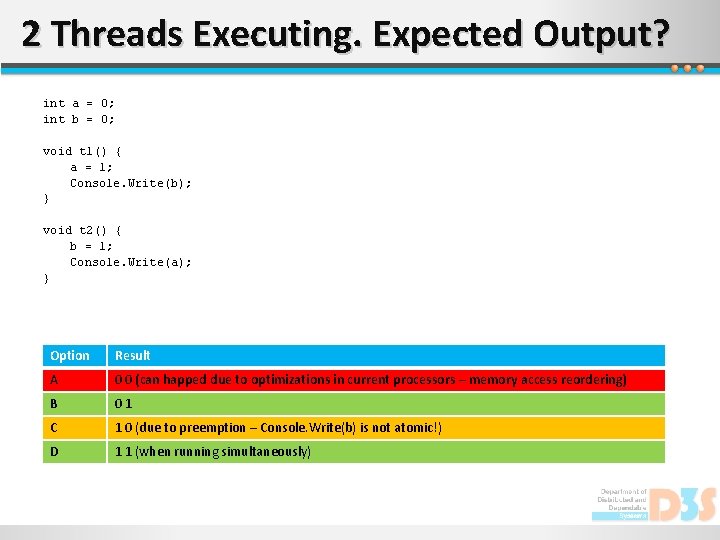

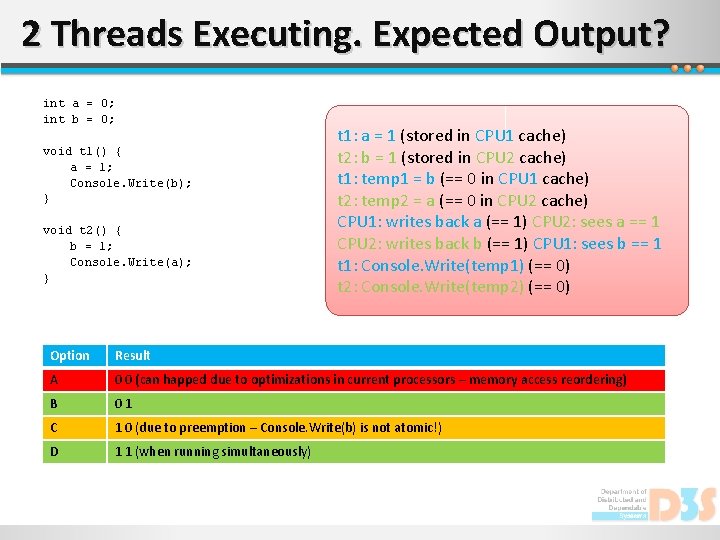

2 Threads Executing. Expected Output?

    int a = 0;
    int b = 0;

    void t1() { a = 1; Console.Write(b); }
    void t2() { b = 1; Console.Write(a); }

| Option | Result |
|---|---|
| A | 00 |
| B | 01 |
| C | 10 |
| D | 11 |

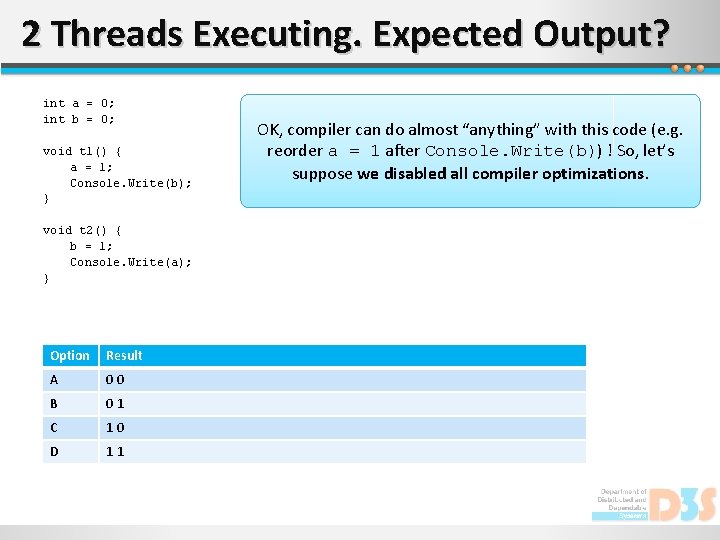

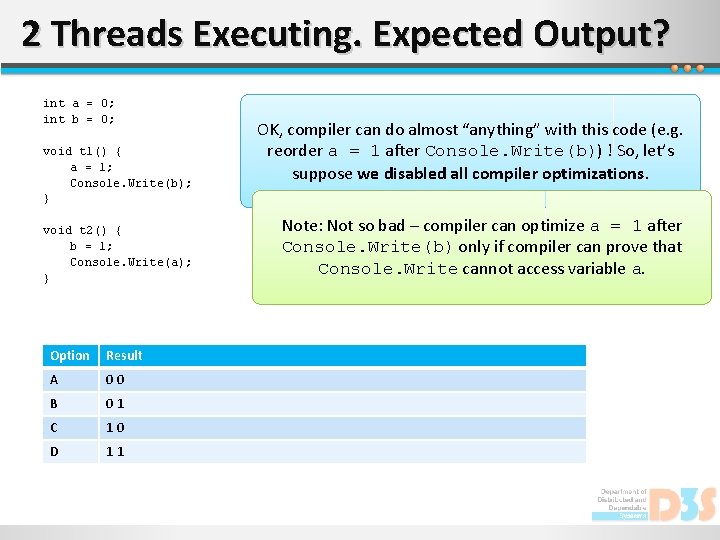


2 Threads Executing. Expected Output?

    int a = 0;
    int b = 0;

    void t1() { a = 1; Console.Write(b); }
    void t2() { b = 1; Console.Write(a); }

| Option | Result |
|---|---|
| A | 00 |
| B | 01 |
| C | 10 |
| D | 11 |

OK, the compiler can do almost "anything" with this code (e.g. reorder a = 1 after Console.Write(b))! So let's suppose we have disabled all compiler optimizations.

Note: it is not so bad. The compiler can move a = 1 after Console.Write(b) only if it can prove that Console.Write cannot access the variable a.


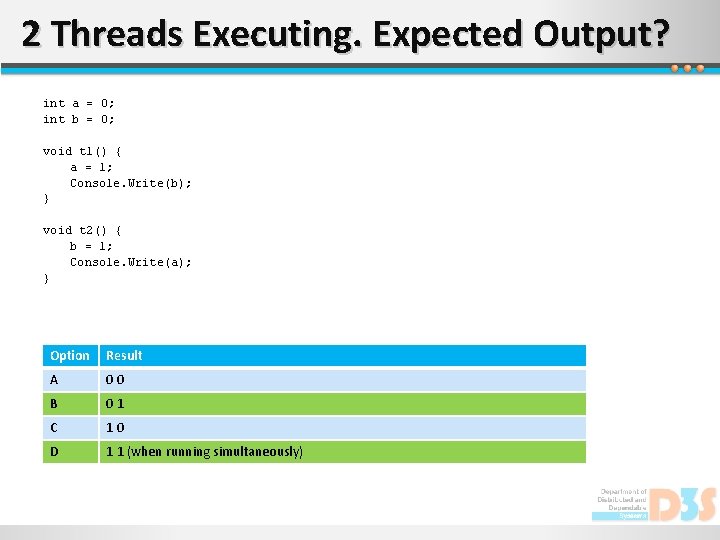


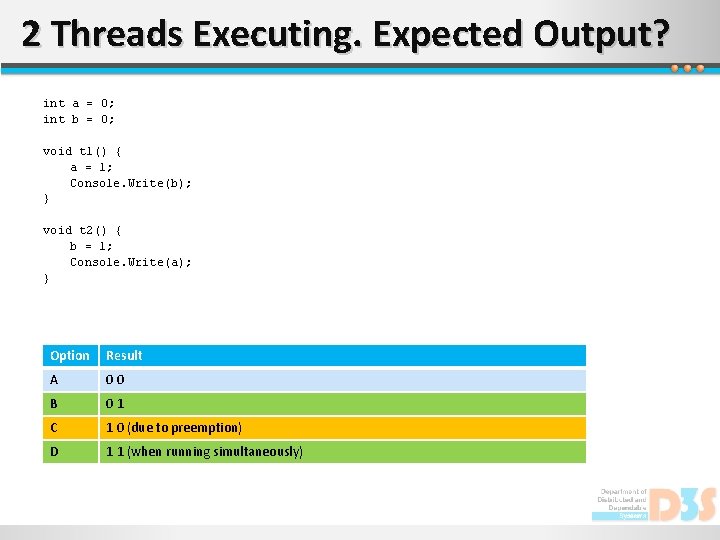

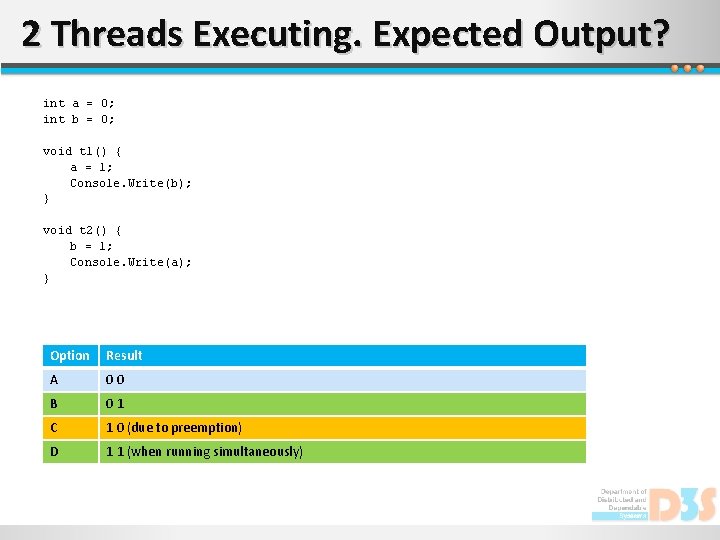


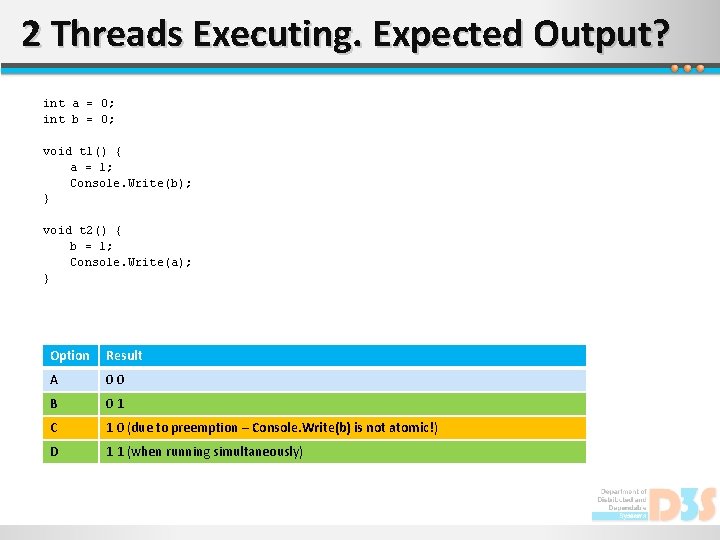

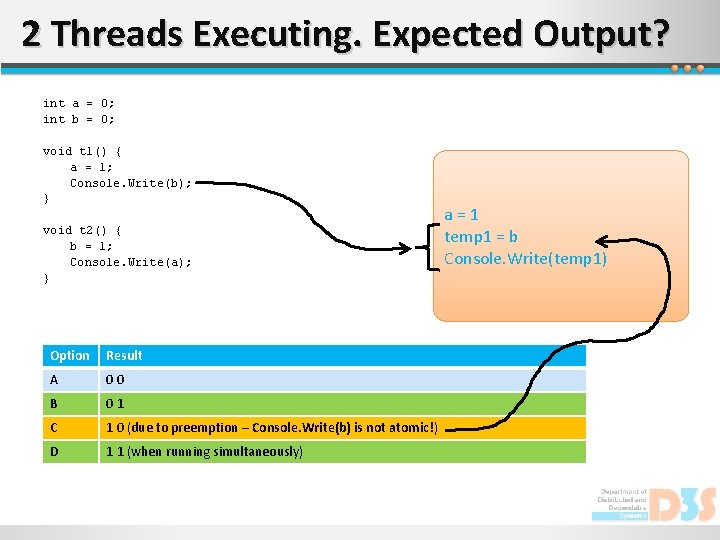



2 Threads Executing. Expected Output?

    int a = 0;
    int b = 0;

    void t1() { a = 1; Console.Write(b); }
    void t2() { b = 1; Console.Write(a); }

| Option | Result |
|---|---|
| A | 00 |
| B | 01 |
| C | 10 (due to preemption: Console.Write(b) is not atomic!) |
| D | 11 (when running simultaneously) |

Console.Write(b) is executed as temp1 = b; Console.Write(temp1), so the following interleaving prints 10:

1. t1: a = 1
2. t1: temp1 = b (== 0)
3. t2: b = 1
4. t2: temp2 = a (== 1)
5. t2: Console.Write(temp2) (== 1)
6. t1: Console.Write(temp1) (== 0)

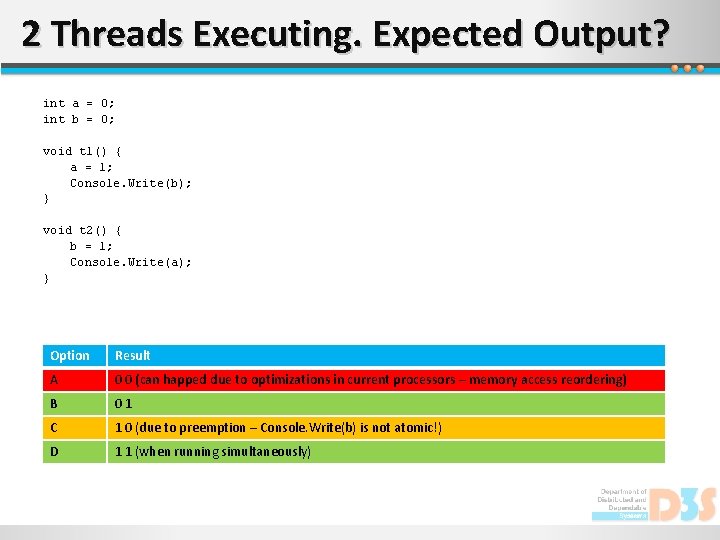

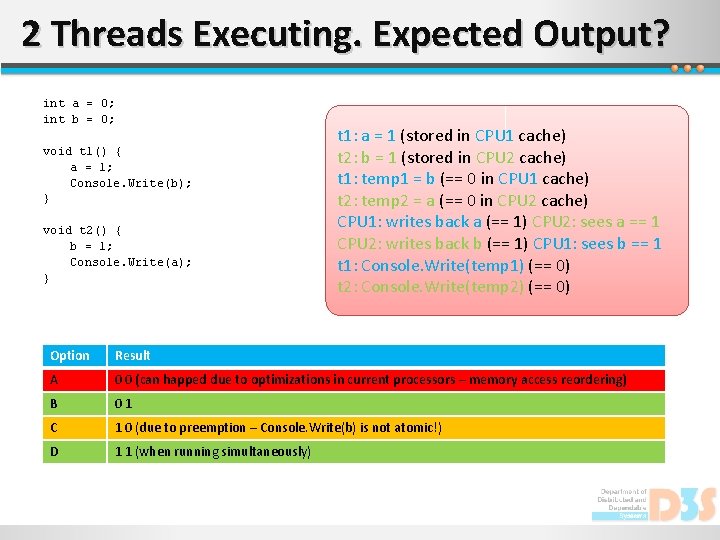

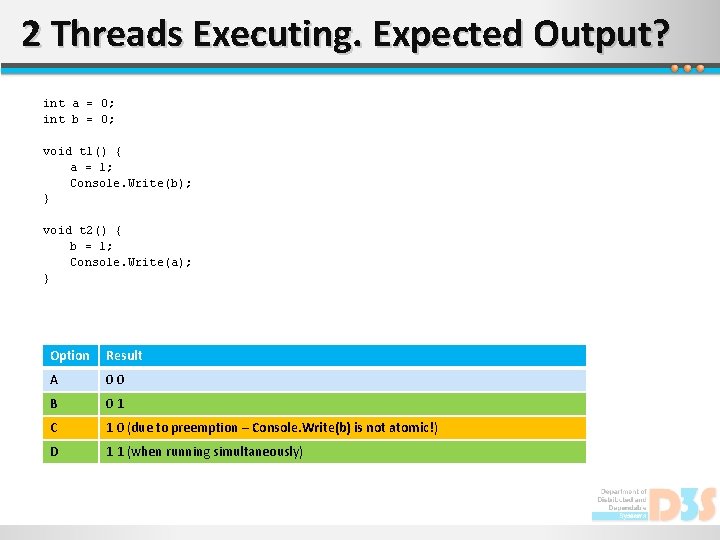


2 Threads Executing. Expected Output?

    int a = 0;
    int b = 0;

    void t1() { a = 1; Console.Write(b); }
    void t2() { b = 1; Console.Write(a); }

| Option | Result |
|---|---|
| A | 00 (can happen due to optimizations in current processors: memory access reordering) |
| B | 01 |
| C | 10 (due to preemption: Console.Write(b) is not atomic!) |
| D | 11 (when running simultaneously) |

A sequence that prints 00:

1. t1: a = 1 (stored in CPU 1 cache)
2. t2: b = 1 (stored in CPU 2 cache)
3. t1: temp1 = b (== 0 in CPU 1 cache)
4. t2: temp2 = a (== 0 in CPU 2 cache)
5. CPU 1: writes back a (== 1)
6. CPU 2: sees a == 1
7. CPU 2: writes back b (== 1)
8. CPU 1: sees b == 1
9. t1: Console.Write(temp1) (== 0)
10. t2: Console.Write(temp2) (== 0)

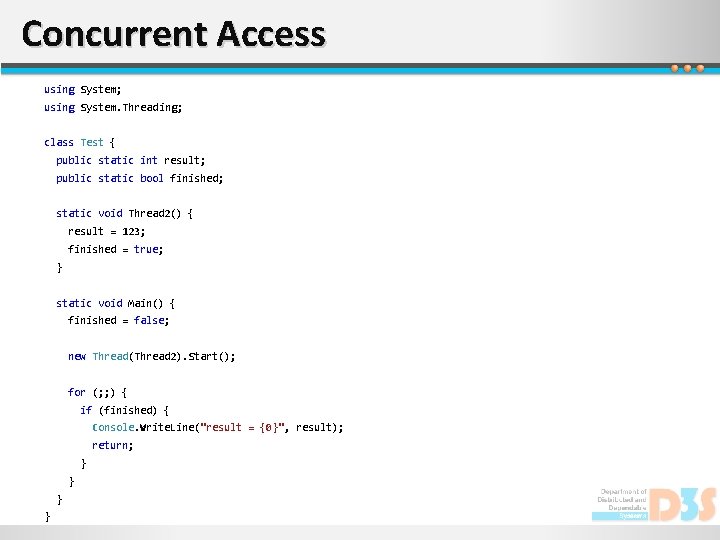

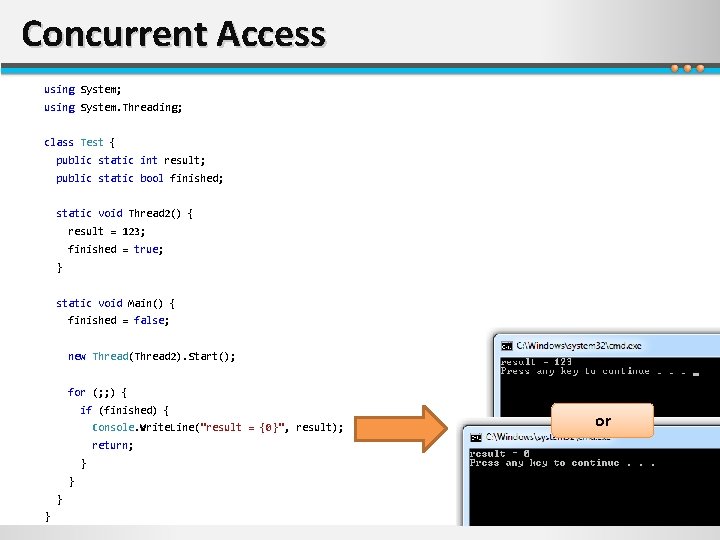

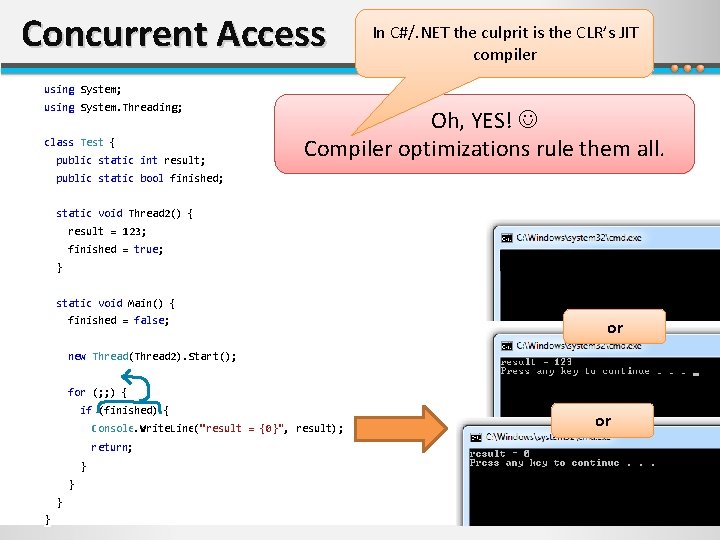

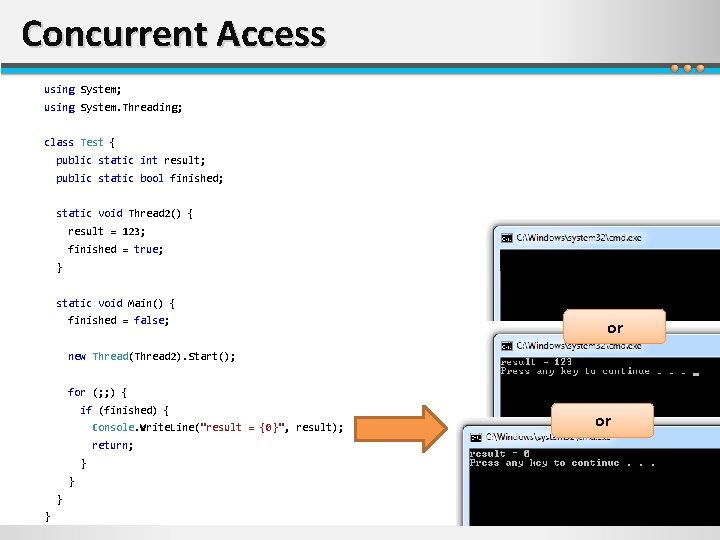

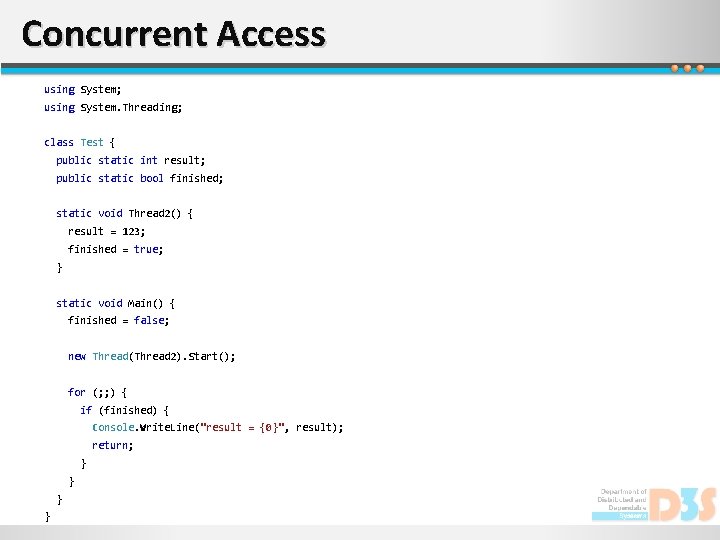

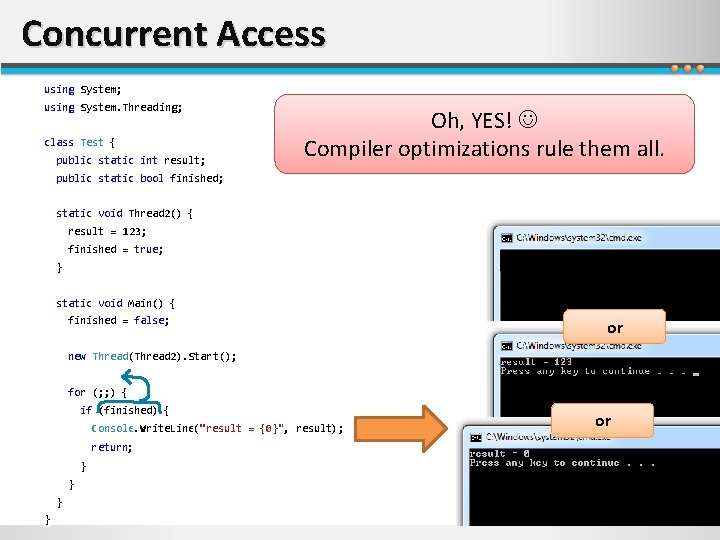

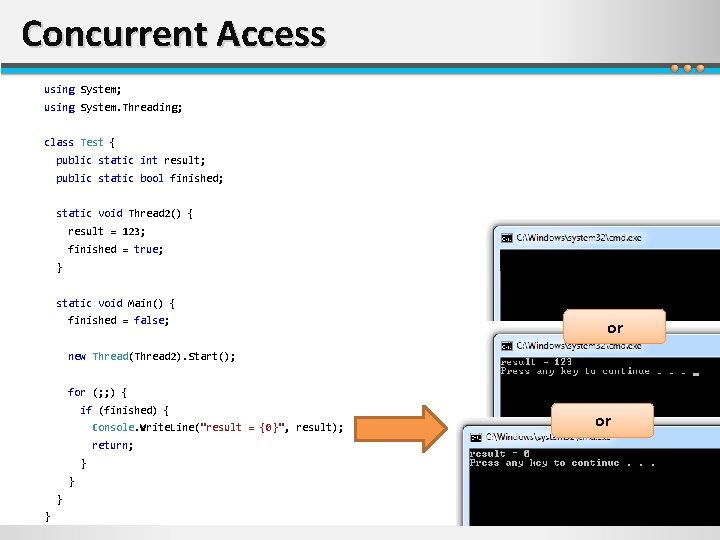

Concurrent Access

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static bool finished;

        static void Thread2() {
            result = 123;
            finished = true;
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                if (finished) {
                    Console.WriteLine("result = {0}", result);
                    return;
                }
            }
        }
    }

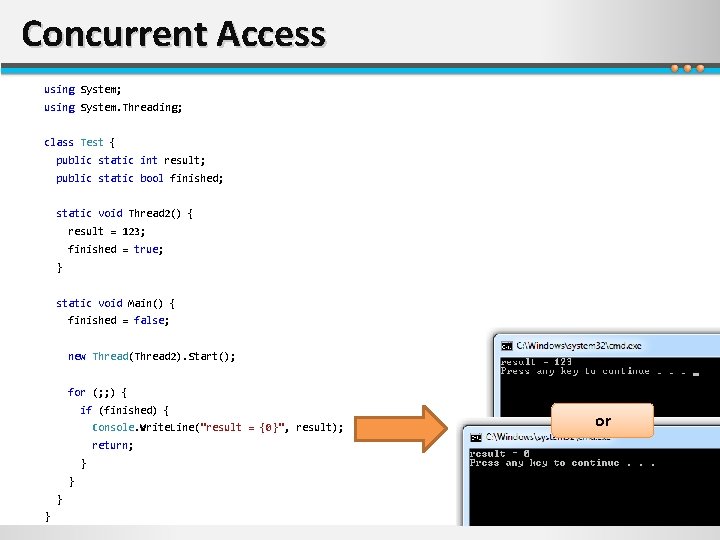


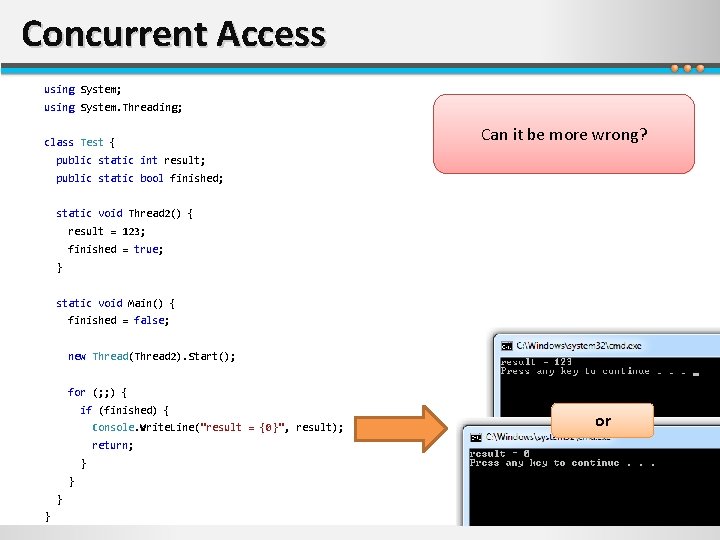

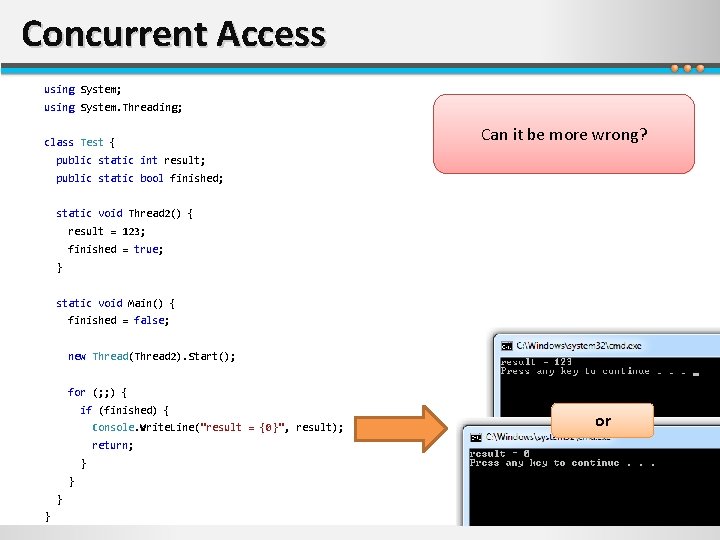


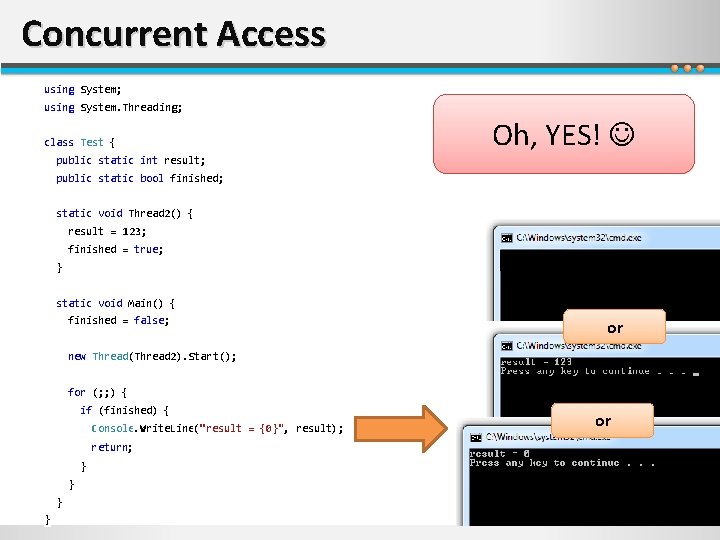

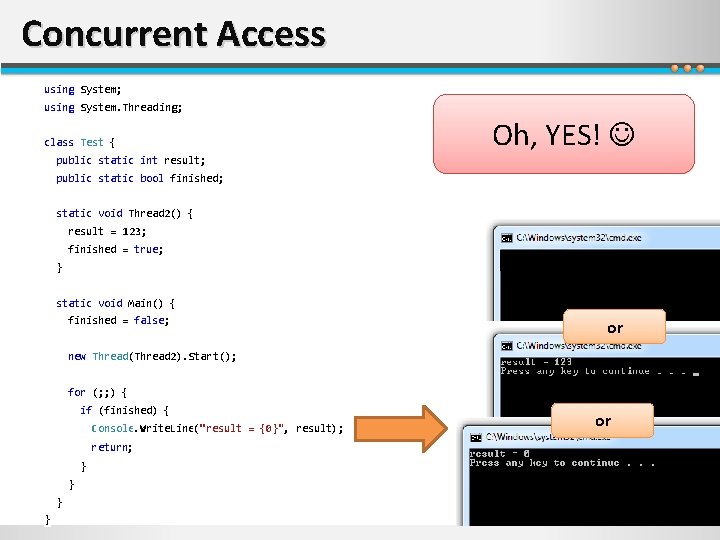


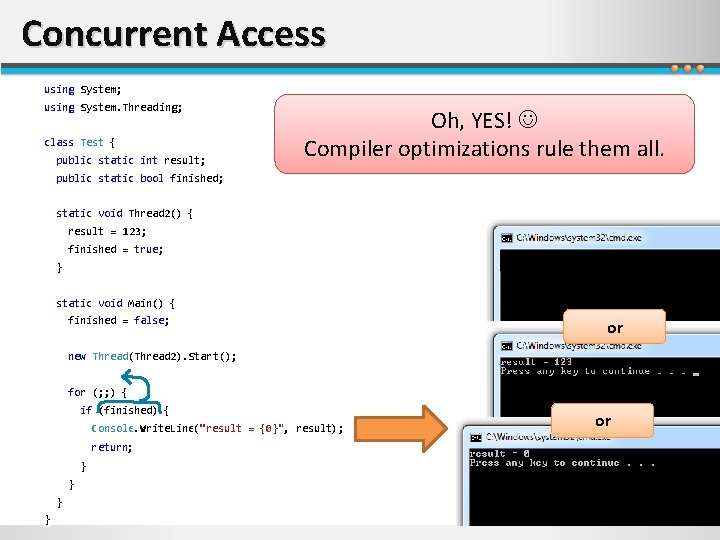

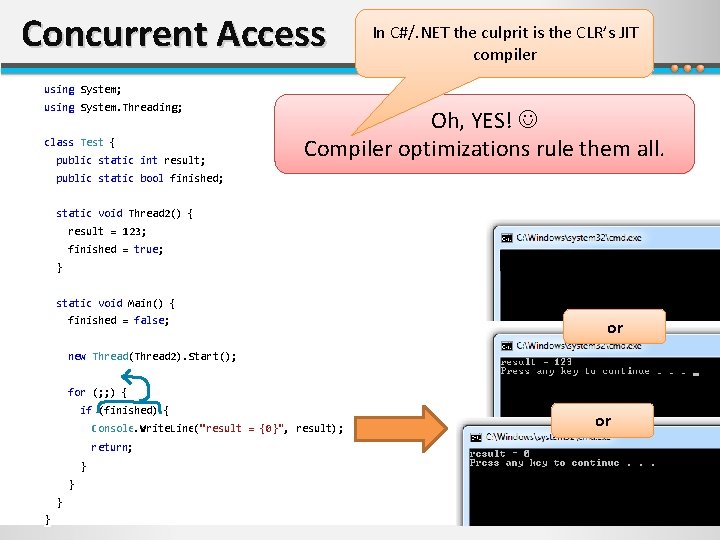


Concurrent Access

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static bool finished;

        static void Thread2() {
            result = 123;
            finished = true;
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                if (finished) {
                    Console.WriteLine("result = {0}", result);
                    return;
                }
            }
        }
    }

Can it be more wrong? Oh, YES! Compiler optimizations rule them all. In C#/.NET the culprit is the CLR's JIT compiler.

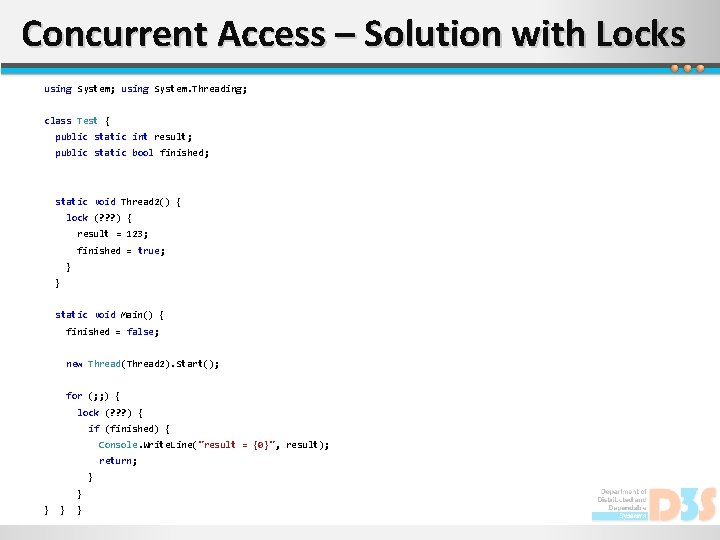

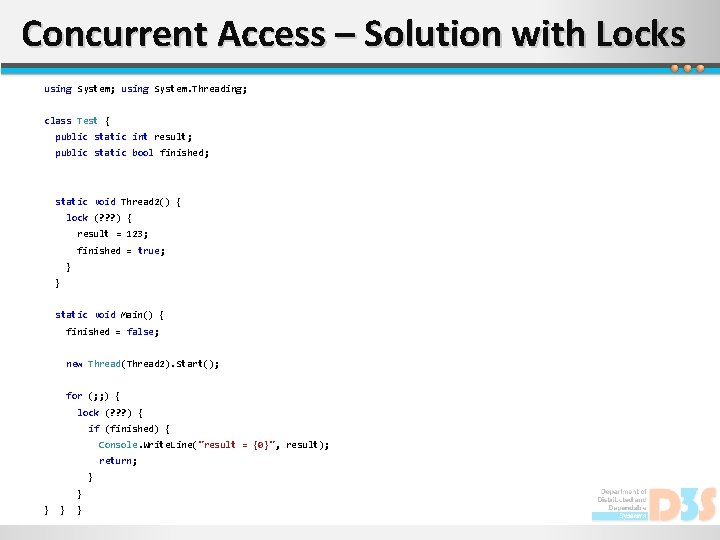

Concurrent Access – Solution with Locks

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static bool finished;

        static void Thread2() {
            lock (???) {
                result = 123;
                finished = true;
            }
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                lock (???) {
                    if (finished) {
                        Console.WriteLine("result = {0}", result);
                        return;
                    }
                }
            }
        }
    }

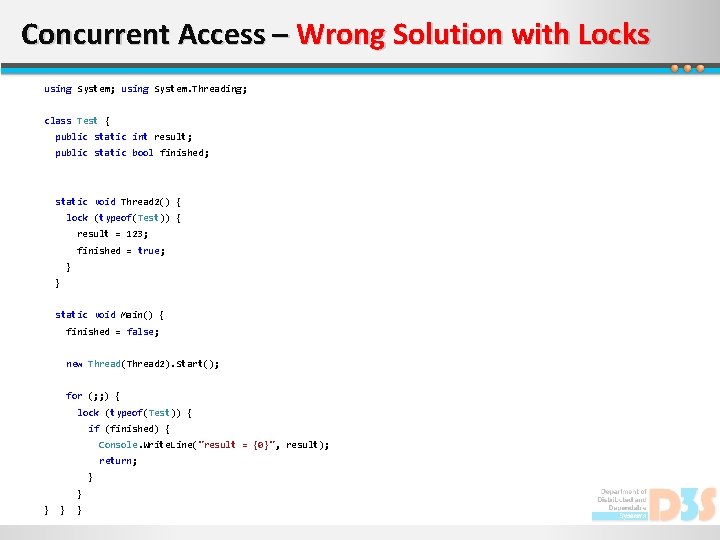

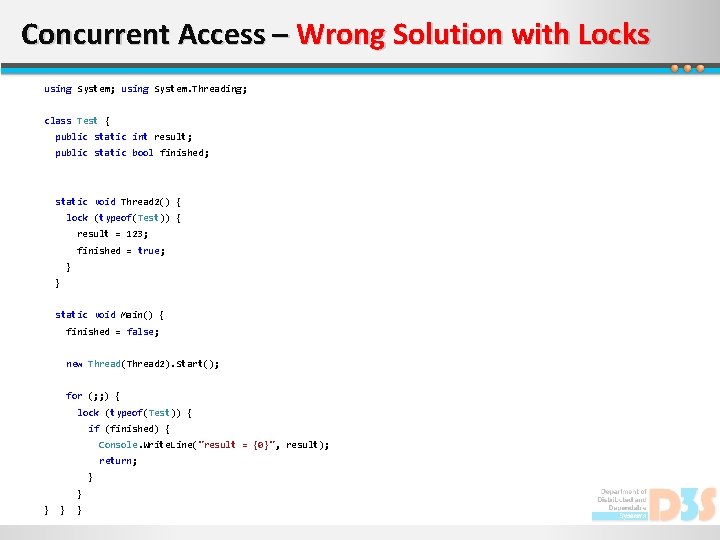

Concurrent Access – Wrong Solution with Locks

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static bool finished;

        static void Thread2() {
            // Wrong: the Type object is publicly reachable,
            // so unrelated code can lock on it too.
            lock (typeof(Test)) {
                result = 123;
                finished = true;
            }
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                lock (typeof(Test)) {
                    if (finished) {
                        Console.WriteLine("result = {0}", result);
                        return;
                    }
                }
            }
        }
    }

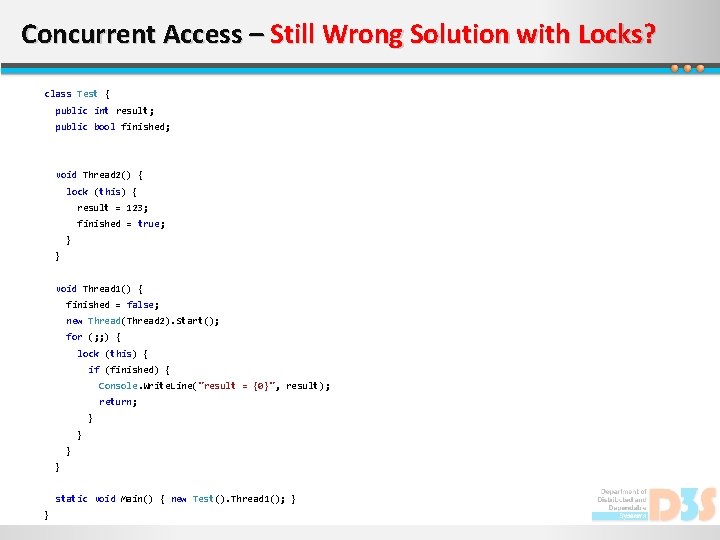

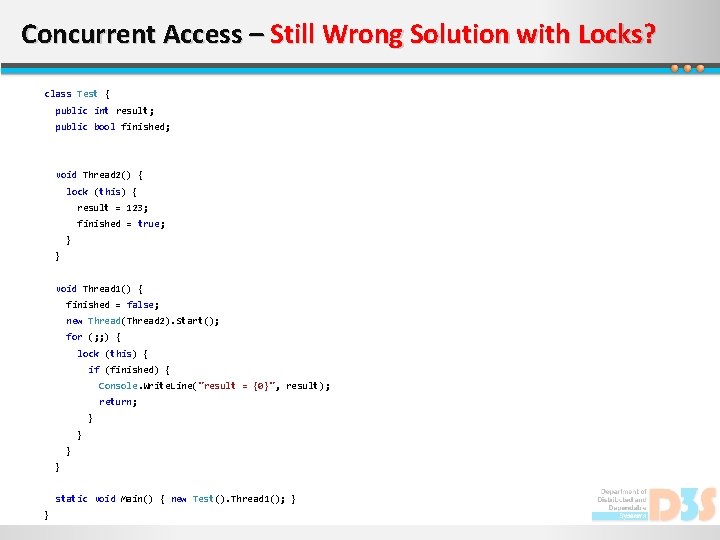

Concurrent Access – Still Wrong Solution with Locks?

    class Test {
        public int result;
        public bool finished;

        void Thread2() {
            // Still questionable: any code holding a reference
            // to this instance can lock on it as well.
            lock (this) {
                result = 123;
                finished = true;
            }
        }

        void Thread1() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                lock (this) {
                    if (finished) {
                        Console.WriteLine("result = {0}", result);
                        return;
                    }
                }
            }
        }

        static void Main() {
            new Test().Thread1();
        }
    }

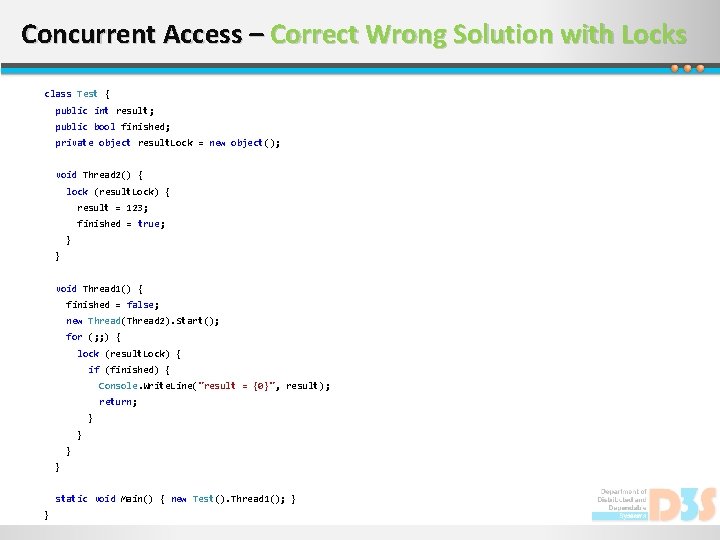

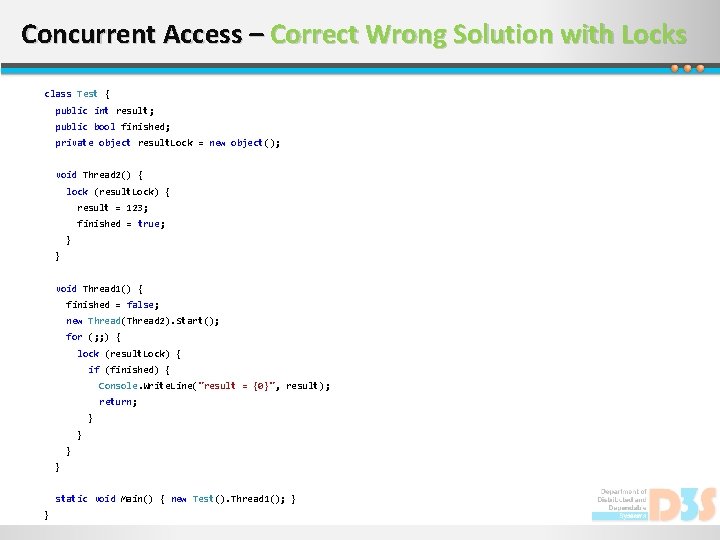

Concurrent Access – Correct Solution with Locks

    class Test {
        public int result;
        public bool finished;
        // Private lock object: no outside code can lock on it.
        private object resultLock = new object();

        void Thread2() {
            lock (resultLock) {
                result = 123;
                finished = true;
            }
        }

        void Thread1() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                lock (resultLock) {
                    if (finished) {
                        Console.WriteLine("result = {0}", result);
                        return;
                    }
                }
            }
        }

        static void Main() {
            new Test().Thread1();
        }
    }


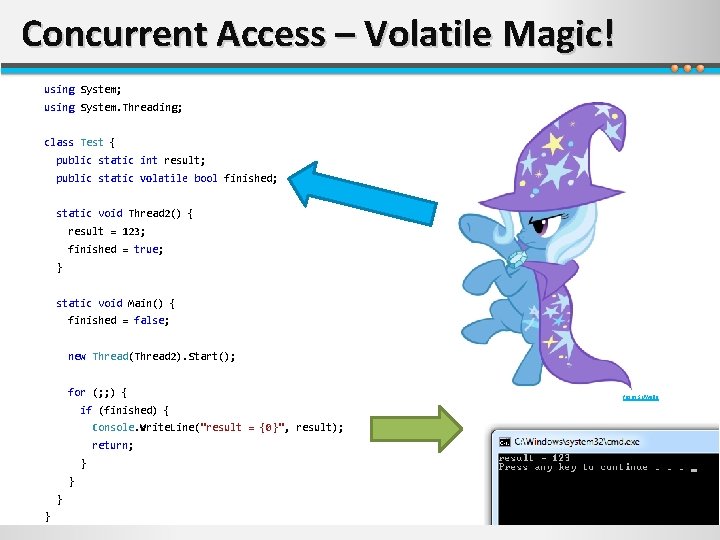

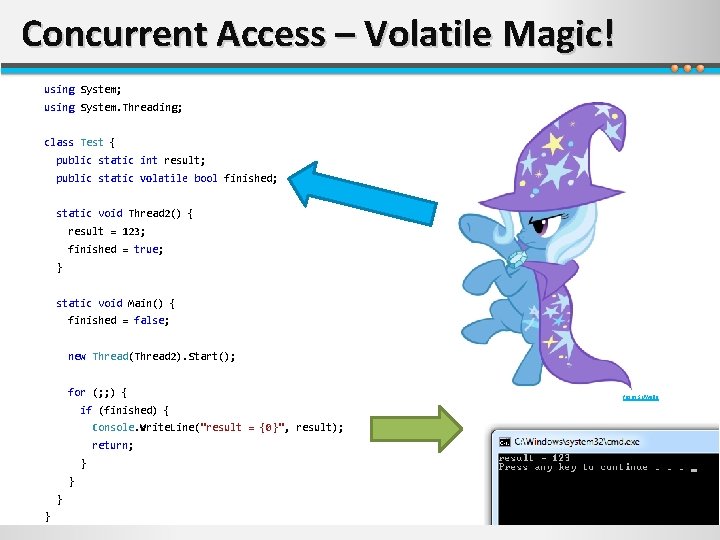

Concurrent Access – Volatile Magic!

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static volatile bool finished;

        static void Thread2() {
            result = 123;
            finished = true;
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                if (finished) {
                    Console.WriteLine("result = {0}", result);
                    return;
                }
            }
        }
    }

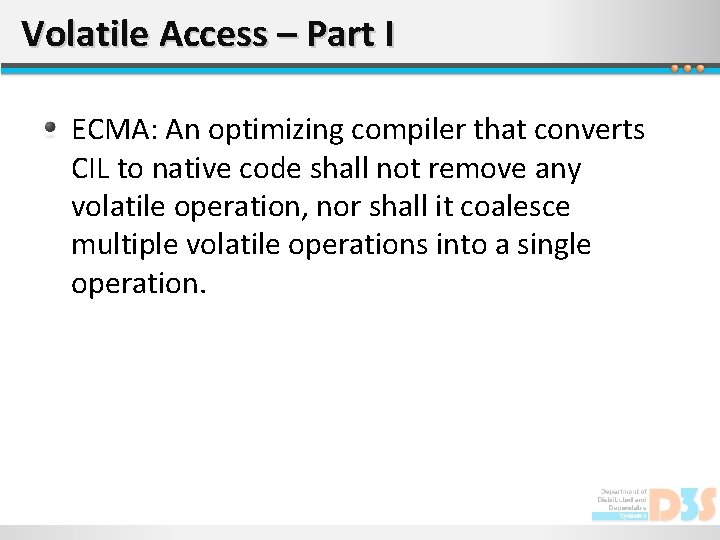

Volatile Access – Part I ECMA: An optimizing compiler that converts CIL to native code shall not remove any volatile operation, nor shall it coalesce multiple volatile operations into a single operation.

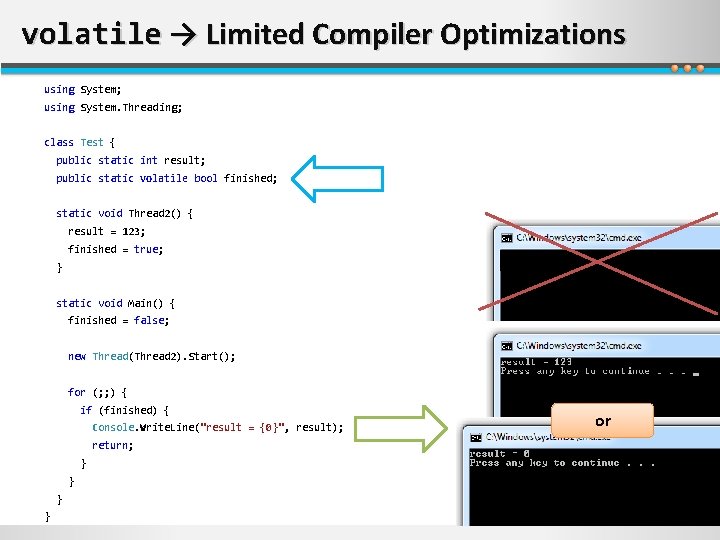

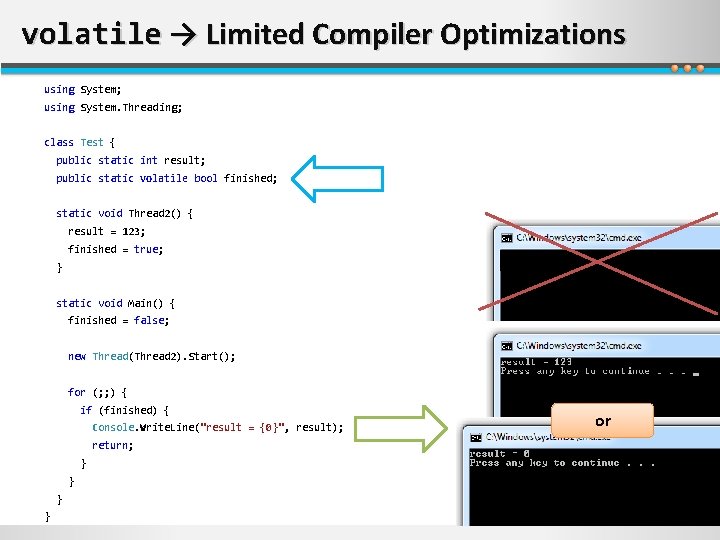

volatile → Limited Compiler Optimizations

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static volatile bool finished;

        static void Thread2() {
            result = 123;
            finished = true;
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                if (finished) {
                    Console.WriteLine("result = {0}", result);
                    return;
                }
            }
        }
    }

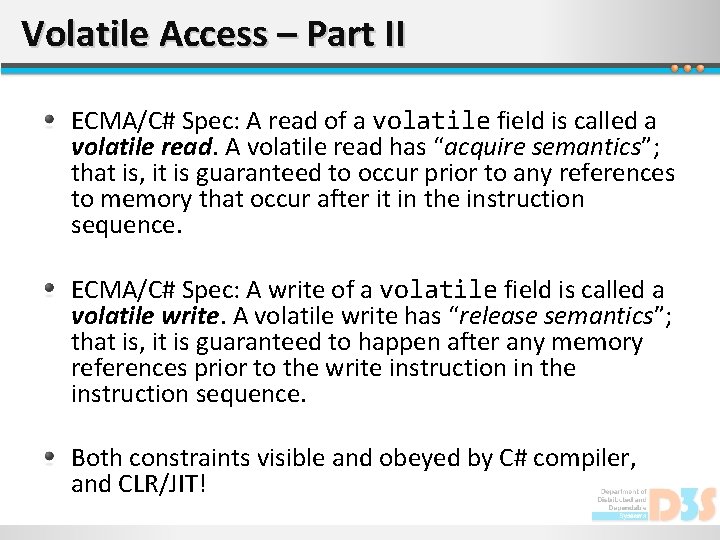

Volatile Access – Part II

ECMA/C# Spec: A read of a volatile field is called a volatile read. A volatile read has "acquire semantics"; that is, it is guaranteed to occur prior to any references to memory that occur after it in the instruction sequence.

ECMA/C# Spec: A write of a volatile field is called a volatile write. A volatile write has "release semantics"; that is, it is guaranteed to happen after any memory references prior to the write instruction in the instruction sequence.

Both constraints are visible to and obeyed by the C# compiler and the CLR/JIT!

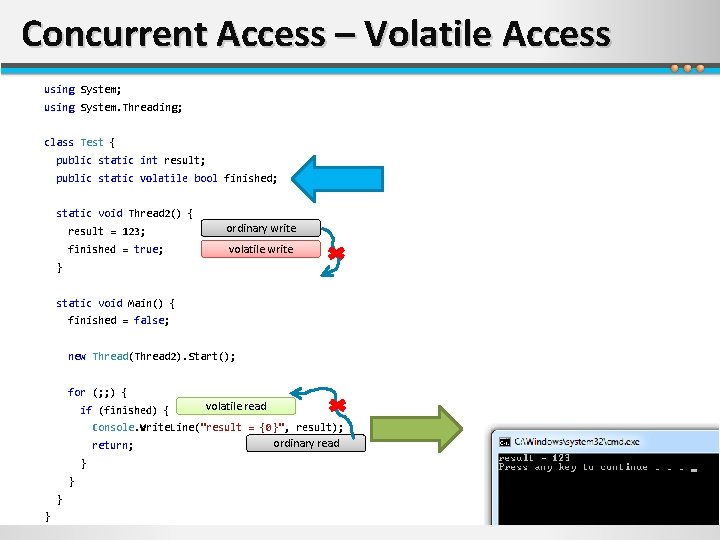

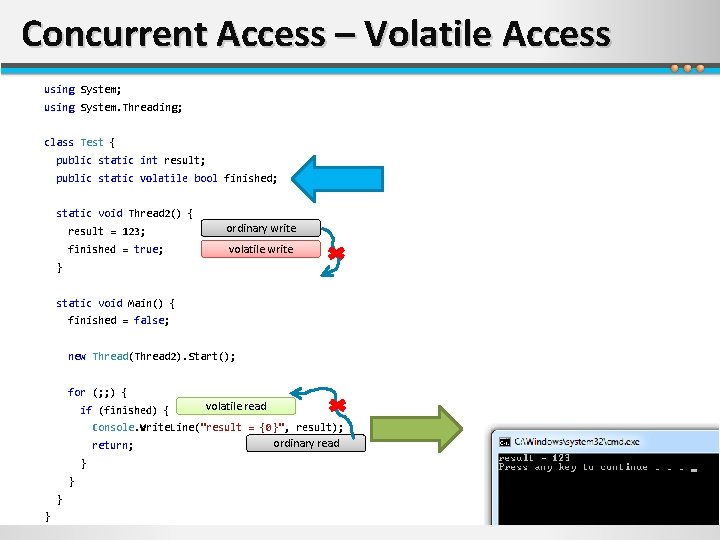

Concurrent Access – Volatile Access

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static volatile bool finished;

        static void Thread2() {
            result = 123;       // ordinary write
            finished = true;    // volatile write
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                if (finished) {                                   // volatile read
                    Console.WriteLine("result = {0}", result);    // ordinary read
                    return;
                }
            }
        }
    }

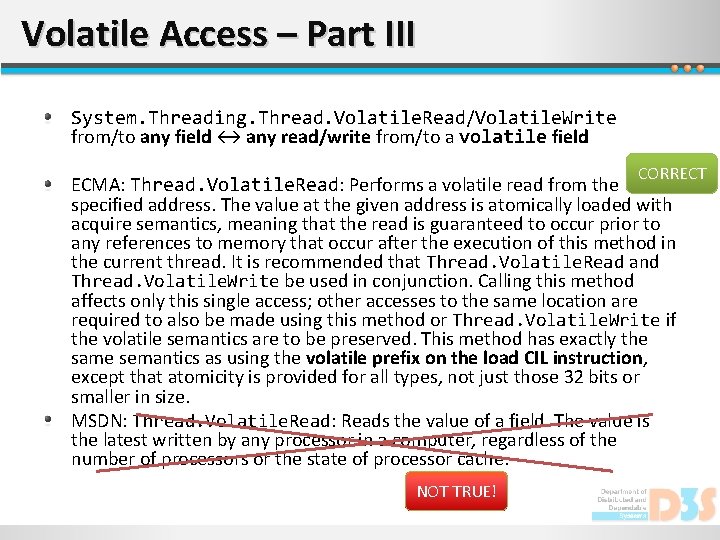

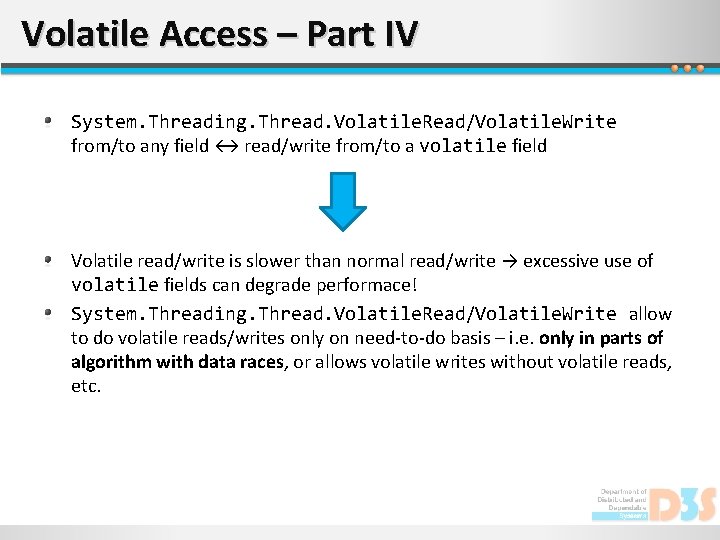

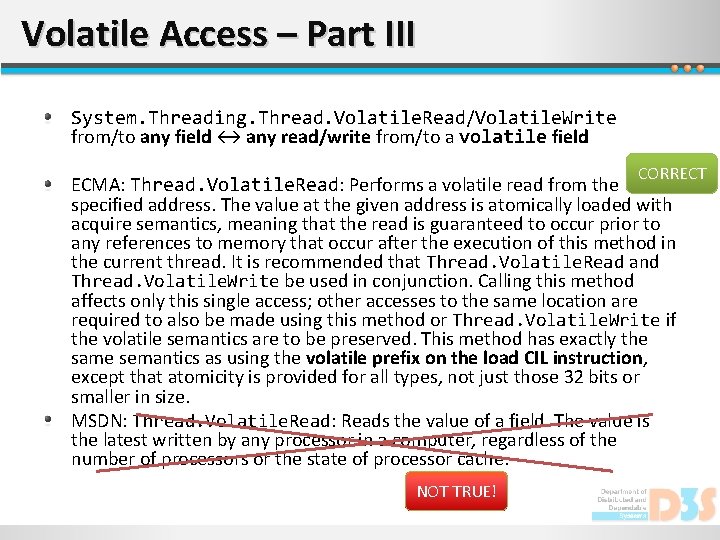

Volatile Access – Part III

System.Threading.Thread.VolatileRead/VolatileWrite from/to any field ↔ any read/write from/to a volatile field

ECMA (CORRECT): Thread.VolatileRead: Performs a volatile read from the specified address. The value at the given address is atomically loaded with acquire semantics, meaning that the read is guaranteed to occur prior to any references to memory that occur after the execution of this method in the current thread. It is recommended that Thread.VolatileRead and Thread.VolatileWrite be used in conjunction. Calling this method affects only this single access; other accesses to the same location are required to also be made using this method or Thread.VolatileWrite if the volatile semantics are to be preserved. This method has exactly the same semantics as using the volatile prefix on the load CIL instruction, except that atomicity is provided for all types, not just those 32 bits or smaller in size.

MSDN (NOT TRUE!): Thread.VolatileRead: Reads the value of a field. The value is the latest written by any processor in a computer, regardless of the number of processors or the state of processor cache.

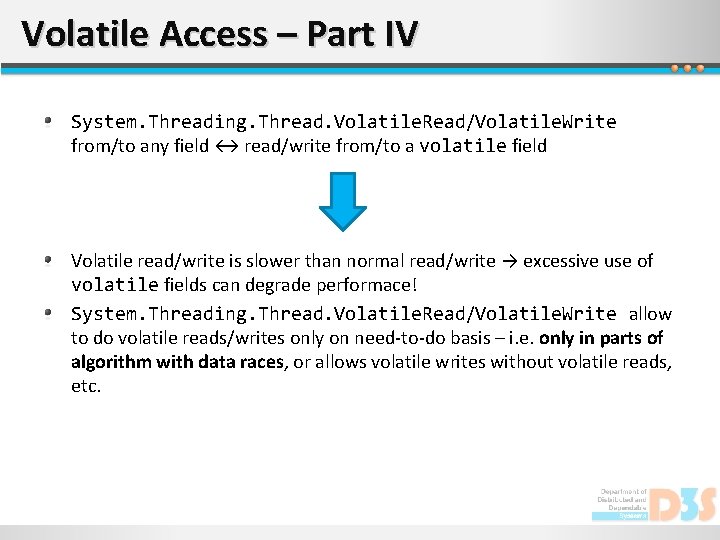

Volatile Access – Part IV

System.Threading.Thread.VolatileRead/VolatileWrite from/to any field ↔ read/write from/to a volatile field

A volatile read/write is slower than a normal read/write → excessive use of volatile fields can degrade performance!

System.Threading.Thread.VolatileRead/VolatileWrite make it possible to do volatile reads/writes only on a need-to-do basis, i.e. only in the parts of an algorithm that contain data races, or to do volatile writes without volatile reads, etc.
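The need-to-do idea can be sketched on the result/finished example from the earlier slides, with both fields left non-volatile. As a substitution made for this sketch, it uses the static System.Threading.Volatile class (Volatile.Read/Volatile.Write), which offers bool overloads, rather than the Thread.VolatileRead/VolatileWrite methods named above. The class name VolatileSketch and the Run helper are hypothetical.

```csharp
using System;
using System.Threading;

static class VolatileSketch
{
    private static int result;      // ordinary field
    private static bool finished;   // ordinary field, accessed only through Volatile.*

    private static void Producer()
    {
        result = 123;                          // ordinary write
        Volatile.Write(ref finished, true);    // release: the write above cannot move after it
    }

    // Starts the producer, spins until the flag is set, and returns the observed result.
    public static int Run()
    {
        result = 0;
        finished = false;
        var t = new Thread(Producer);
        t.Start();
        while (!Volatile.Read(ref finished))   // acquire: really re-read on every iteration
        {
            Thread.Yield();
        }
        int observed = result;                 // ordinary read, ordered after the volatile read
        t.Join();
        return observed;
    }

    public static void Main()
    {
        Console.WriteLine("result = {0}", Run());   // prints "result = 123"
    }
}
```

Only the two accesses that form the handshake pay the volatile cost; any other use of these fields elsewhere in a program could remain an ordinary access.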

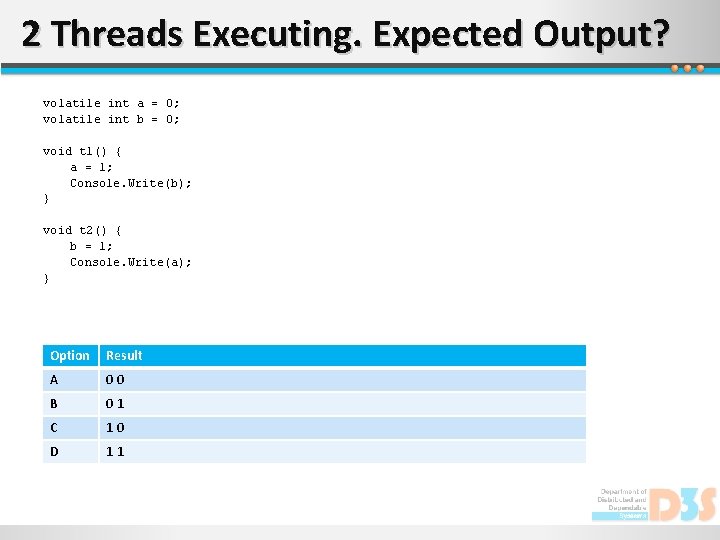

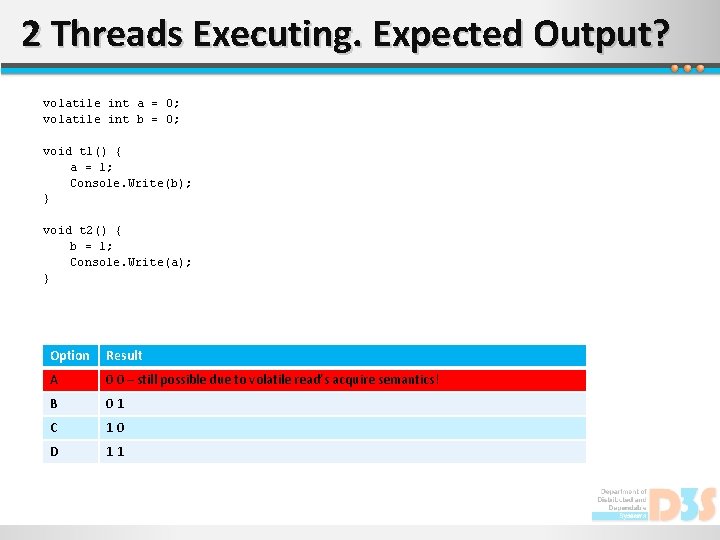

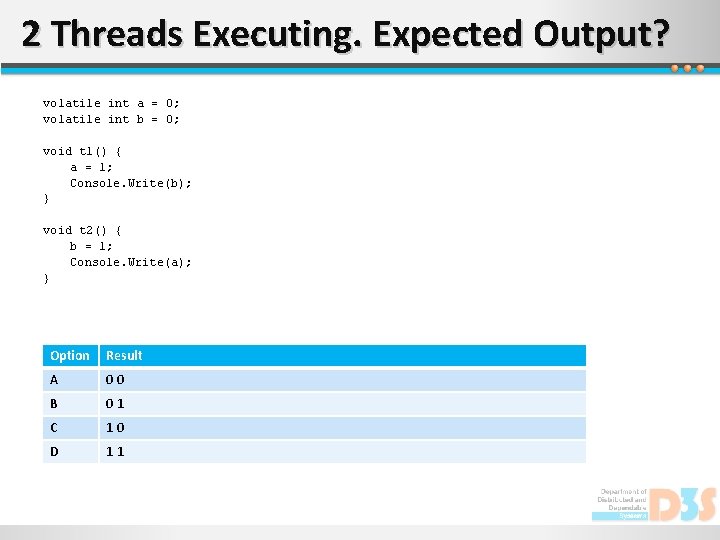

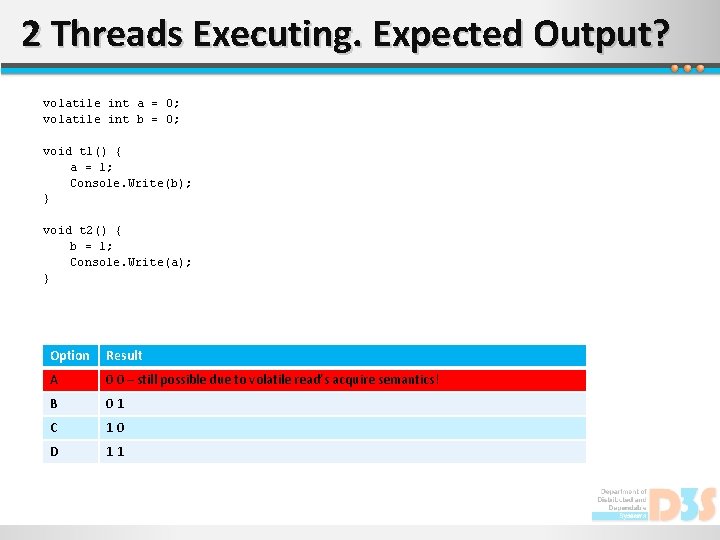


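2 Threads Executing. Expected Output?

    volatile int a = 0;
    volatile int b = 0;

    void t1() { a = 1; Console.Write(b); }
    void t2() { b = 1; Console.Write(a); }

| Option | Result |
|---|---|
| A | 00: still possible due to the volatile read's acquire semantics! (Acquire/release semantics do not prevent a volatile write from being reordered after a later volatile read of a different variable.) |
| B | 01 |
| C | 10 |
| D | 11 |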

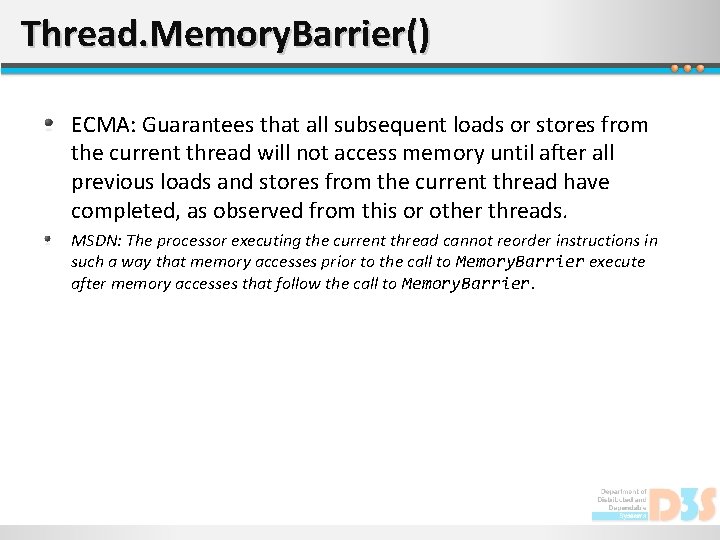

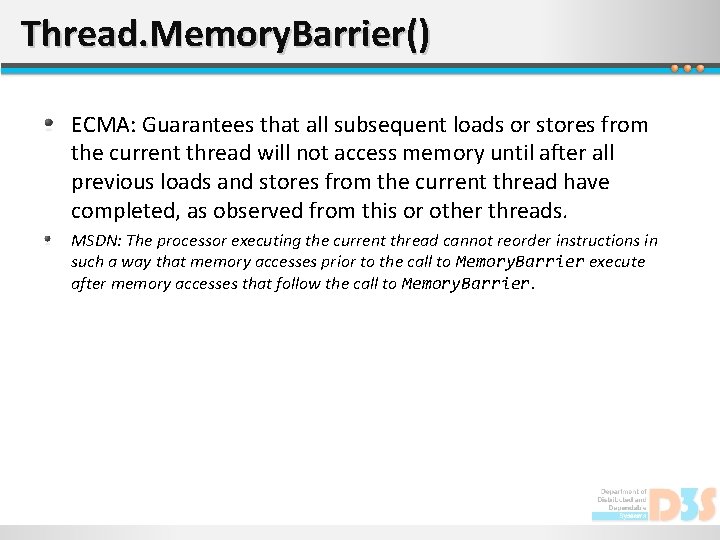

Thread.MemoryBarrier()

ECMA: Guarantees that all subsequent loads or stores from the current thread will not access memory until after all previous loads and stores from the current thread have completed, as observed from this or other threads.

MSDN: The processor executing the current thread cannot reorder instructions in such a way that memory accesses prior to the call to MemoryBarrier execute after memory accesses that follow the call to MemoryBarrier.

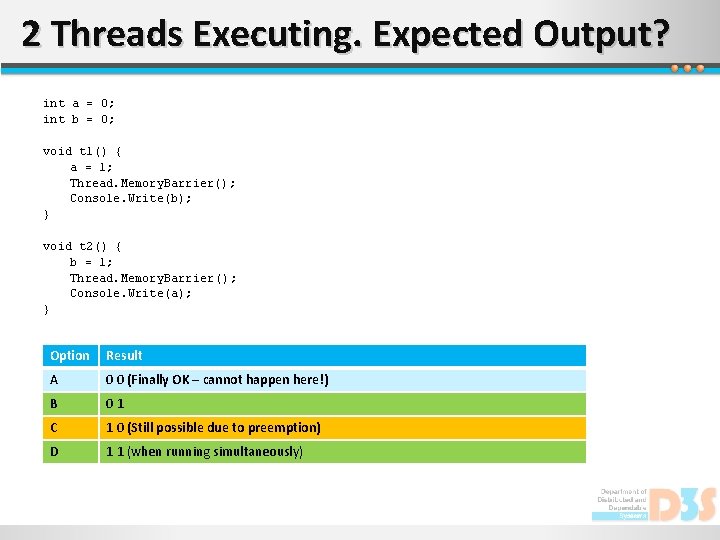

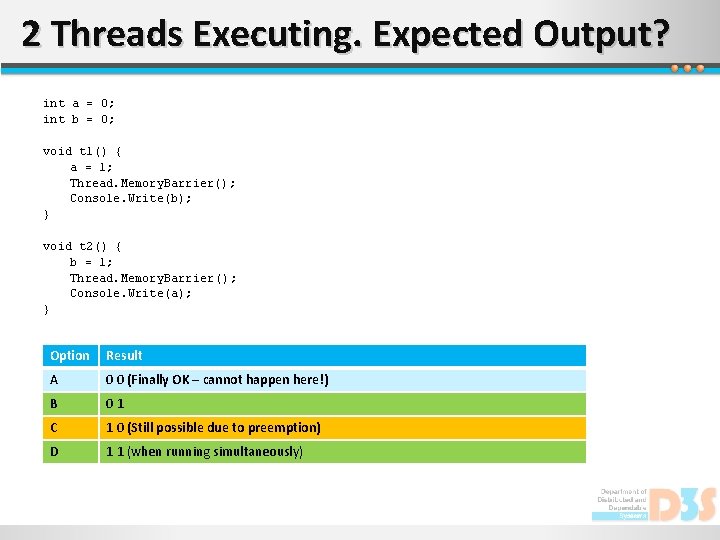

2 Threads Executing. Expected Output?

    int a = 0;
    int b = 0;

    void t1() { a = 1; Thread.MemoryBarrier(); Console.Write(b); }
    void t2() { b = 1; Thread.MemoryBarrier(); Console.Write(a); }

| Option | Result |
|---|---|
| A | 00 (Finally OK: cannot happen here!) |
| B | 01 |
| C | 10 (still possible due to preemption) |
| D | 11 (when running simultaneously) |
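The claim that 00 cannot happen with full barriers can be exercised with a small harness. This is only a sketch under assumptions: the class BarrierSketch and the fields seenByT1/seenByT2 are hypothetical, and the two observed values are stored in fields instead of being printed so that the outcome can be counted.

```csharp
using System;
using System.Threading;

static class BarrierSketch
{
    private static int a, b;
    private static int seenByT1, seenByT2;

    private static void T1()
    {
        a = 1;
        Thread.MemoryBarrier();   // full fence: the store to a completes before the load of b
        seenByT1 = b;
    }

    private static void T2()
    {
        b = 1;
        Thread.MemoryBarrier();   // full fence: the store to b completes before the load of a
        seenByT2 = a;
    }

    // Runs the two threads `rounds` times and returns how often both observed 0.
    public static int CountBothZero(int rounds)
    {
        int bothZero = 0;
        for (int i = 0; i < rounds; i++)
        {
            a = 0;
            b = 0;
            var t1 = new Thread(T1);
            var t2 = new Thread(T2);
            t1.Start();
            t2.Start();
            t1.Join();
            t2.Join();
            if (seenByT1 == 0 && seenByT2 == 0) bothZero++;
        }
        return bothZero;
    }

    public static void Main()
    {
        Console.WriteLine(CountBothZero(1000));   // prints 0
    }
}
```

Removing the two Thread.MemoryBarrier() calls makes a non-zero count possible, although how often it shows up in practice depends on the hardware and on timing.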

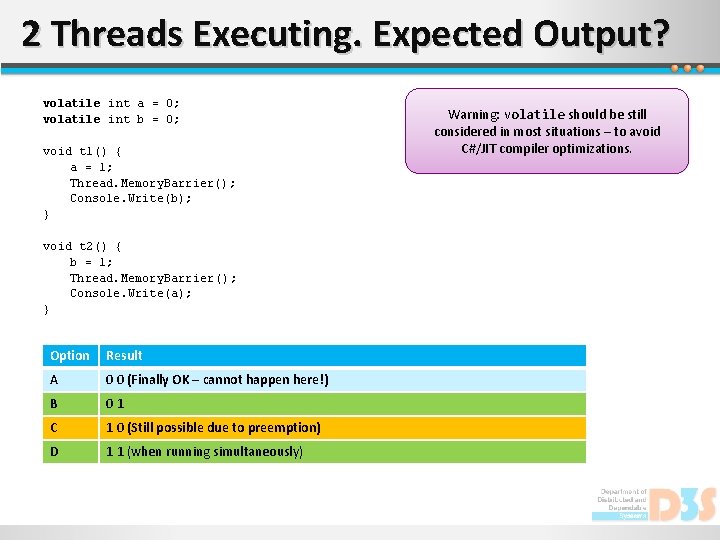

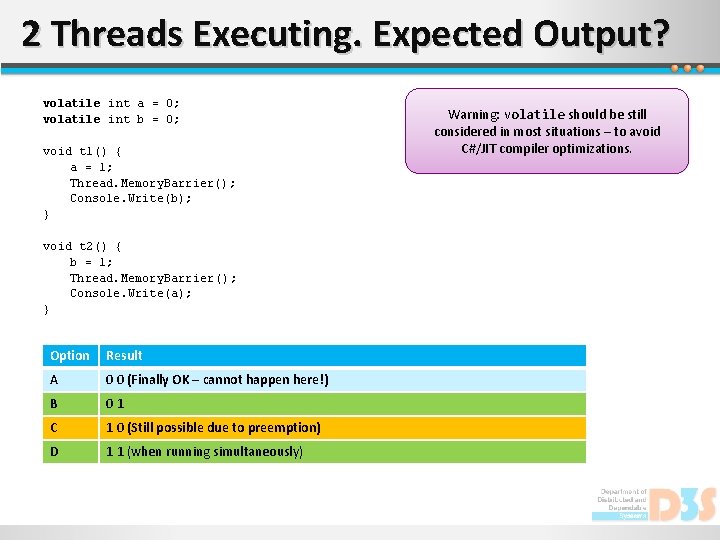

2 Threads Executing. Expected Output?

    volatile int a = 0;
    volatile int b = 0;

    void t1() { a = 1; Thread.MemoryBarrier(); Console.Write(b); }
    void t2() { b = 1; Thread.MemoryBarrier(); Console.Write(a); }

| Option | Result |
|---|---|
| A | 00 (Finally OK: cannot happen here!) |
| B | 01 |
| C | 10 (still possible due to preemption) |
| D | 11 (when running simultaneously) |

Warning: volatile should still be considered in most situations, to avoid C#/JIT compiler optimizations.

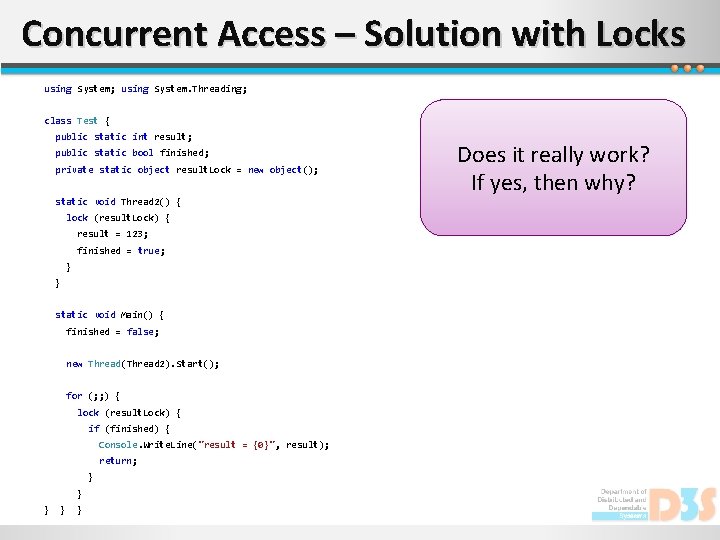

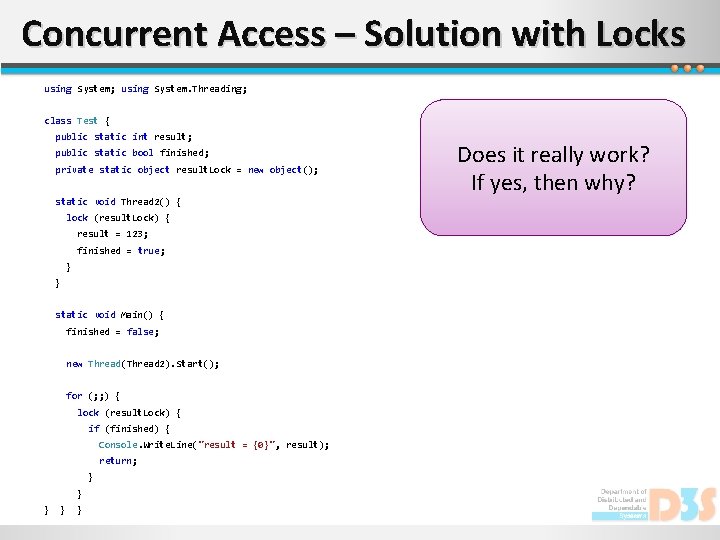

Concurrent Access – Solution with Locks

    using System;
    using System.Threading;

    class Test {
        public static int result;
        public static bool finished;
        private static object resultLock = new object();

        static void Thread2() {
            lock (resultLock) {
                result = 123;
                finished = true;
            }
        }

        static void Main() {
            finished = false;
            new Thread(Thread2).Start();
            for (;;) {
                lock (resultLock) {
                    if (finished) {
                        Console.WriteLine("result = {0}", result);
                        return;
                    }
                }
            }
        }
    }

Does it really work? If yes, then why?

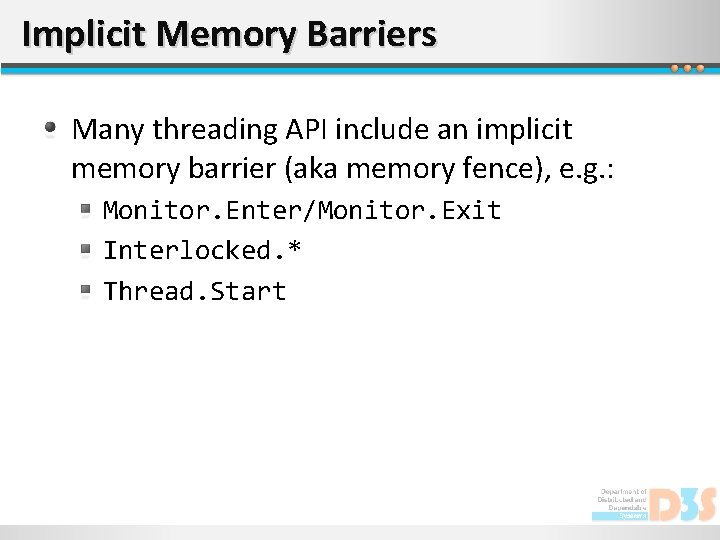

Implicit Memory Barriers

Many threading APIs include an implicit memory barrier (aka memory fence), e.g.:

- Monitor.Enter/Monitor.Exit
- Interlocked.*
- Thread.Start
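The list above suggests that the result/finished handshake could also be written with Interlocked calls alone, relying on their implicit barriers. The following is a minimal sketch under that assumption. The class ImplicitBarrierSketch is hypothetical, and the flag is an int (0 or 1) because the Exchange/CompareExchange overloads used here work on int.

```csharp
using System;
using System.Threading;

static class ImplicitBarrierSketch
{
    private static int result;
    private static int finished;   // 0 = not finished, 1 = finished

    private static void Producer()
    {
        result = 123;
        Interlocked.Exchange(ref finished, 1);   // atomic write with an implicit barrier
    }

    // Starts the producer, waits for the flag, and returns the observed result.
    public static int Run()
    {
        result = 0;
        finished = 0;
        var t = new Thread(Producer);
        t.Start();                               // Thread.Start also implies a barrier
        // CompareExchange(ref x, 0, 0) never changes the value; it serves as an
        // atomic read of the flag that includes an implicit barrier.
        while (Interlocked.CompareExchange(ref finished, 0, 0) == 0)
        {
            Thread.Yield();
        }
        int observed = result;
        t.Join();
        return observed;
    }

    public static void Main()
    {
        Console.WriteLine("result = {0}", Run());   // prints "result = 123"
    }
}
```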

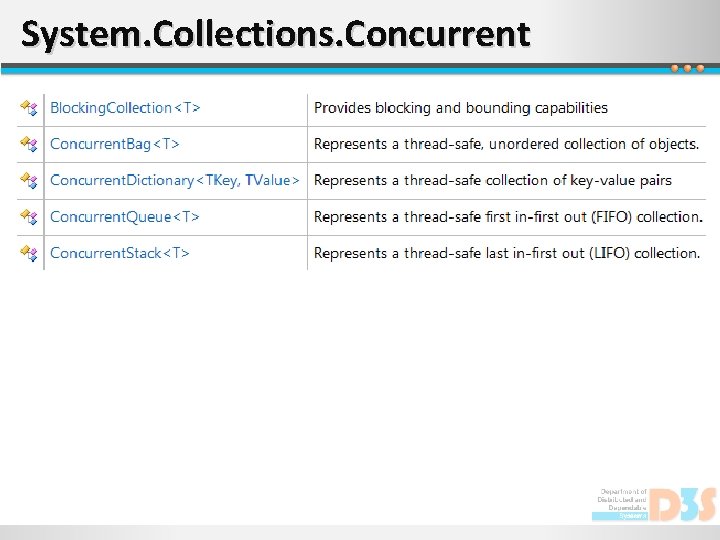

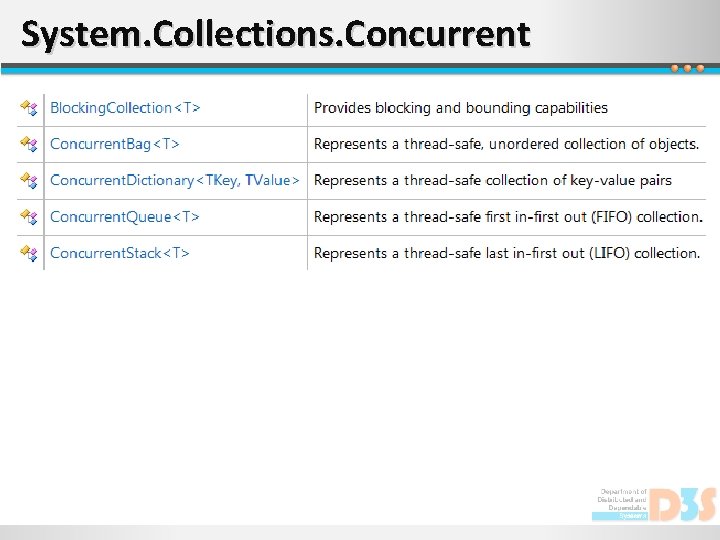

System.Collections.Concurrent