ADVANCED COMPUTER ARCHITECTURE Fundamental Concepts: Computing Models, Samira Khan

- Slides: 48

ADVANCED COMPUTER ARCHITECTURE
Fundamental Concepts: Computing Models
Samira Khan
University of Virginia
Jan 28, 2019
The content and concept of this course are adapted from CMU ECE 740

AGENDA
• Review from last lecture
• Data flow architecture
• Flynn’s taxonomy of computers

ANNOUNCEMENT
• The class will meet at Rice Hall from now on
  – Rice 340
  – Mon-Wed 2:00-3:15 pm

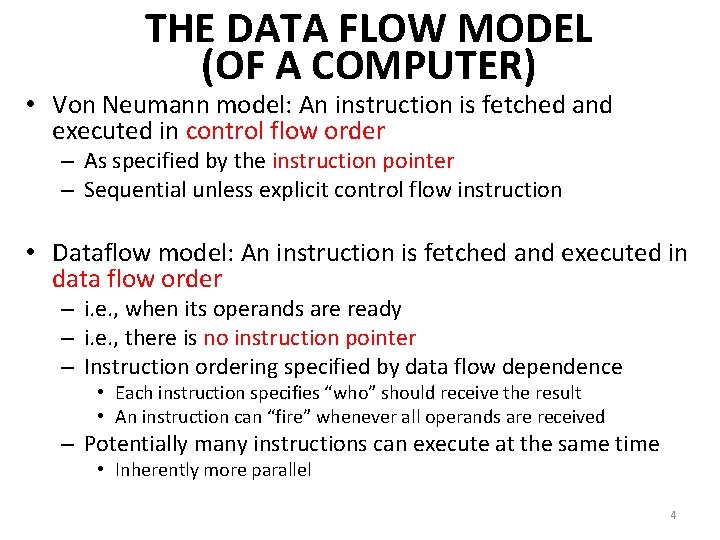

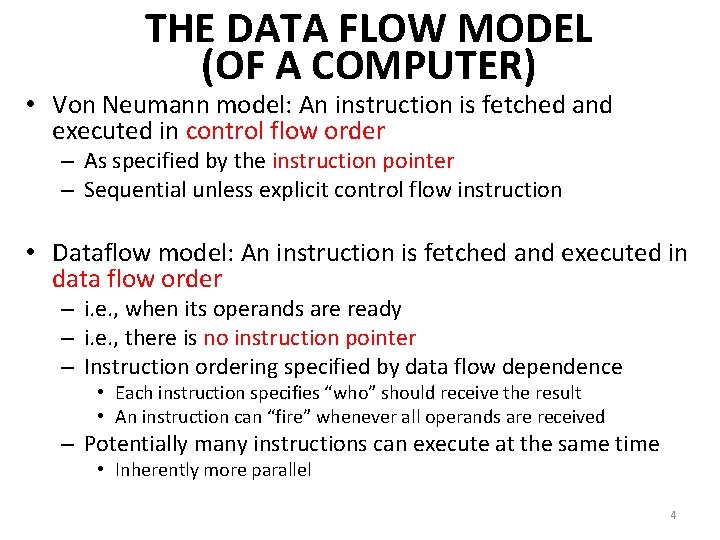

THE DATA FLOW MODEL (OF A COMPUTER)
• Von Neumann model: An instruction is fetched and executed in control flow order
  – As specified by the instruction pointer
  – Sequential unless there is an explicit control flow instruction
• Dataflow model: An instruction is fetched and executed in data flow order
  – i.e., when its operands are ready
  – i.e., there is no instruction pointer
  – Instruction ordering is specified by data flow dependence
    • Each instruction specifies “who” should receive the result
    • An instruction can “fire” whenever all operands are received
  – Potentially many instructions can execute at the same time
    • Inherently more parallel

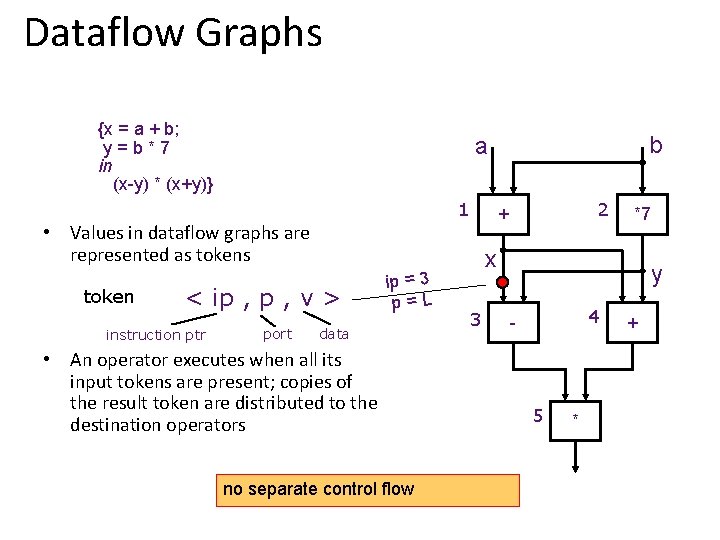

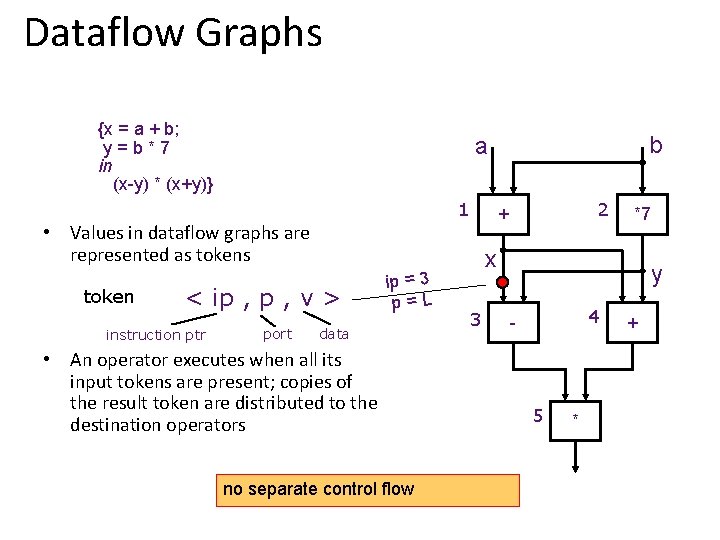

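Dataflow Graphs
{x = a + b; y = b * 7 in (x - y) * (x + y)}
• Values in dataflow graphs are represented as tokens <ip, p, v>: instruction pointer, port, and data (e.g., ip = 3, p = L)
• An operator executes when all its input tokens are present; copies of the result token are distributed to the destination operators
• There is no separate control flow
[Figure: dataflow graph with inputs a and b; node 1 (+) produces x, node 2 (*7) produces y, nodes 3 (-) and 4 (+) each consume x and y, and node 5 (*) multiplies their results]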
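The firing rule can be sketched in Python as a small token interpreter for the example graph {x = a + b; y = b * 7 in (x - y) * (x + y)}. This is a minimal illustration, not a model of any real machine: the names `PROGRAM`, `ARITY`, and `run`, and the grouping of firings into synchronous "waves", are assumptions made for clarity. There is no instruction pointer; a node fires once all its input tokens are present, and copies of its result go to every destination.

```python
import operator

# Node id -> (operation, destinations as (node, port)).
# Numbering follows the graph: 1: +, 2: *7, 3: -, 4: +, 5: *.
PROGRAM = {
    1: (operator.add, [(3, "L"), (4, "L")]),     # x = a + b
    2: (lambda v: v * 7, [(3, "R"), (4, "R")]),  # y = b * 7
    3: (operator.sub, [(5, "L")]),               # x - y
    4: (operator.add, [(5, "R")]),               # x + y
    5: (operator.mul, [("out", None)]),          # (x - y) * (x + y)
}
ARITY = {1: 2, 2: 1, 3: 2, 4: 2, 5: 2}


def run(a, b):
    """Evaluate the graph; return the result and the nodes that fired in each wave."""
    tokens = [(1, "L", a), (1, "R", b), (2, "L", b)]  # initial <node, port, value> tokens
    operands = {}  # node -> {port: value} received so far
    waves, result = [], None
    while tokens:
        for node, port, value in tokens:
            operands.setdefault(node, {})[port] = value
        # A node fires as soon as all of its operands are present
        ready = sorted(n for n, ops in operands.items() if len(ops) == ARITY[n])
        tokens = []
        for node in ready:
            ops = operands.pop(node)
            fn, dests = PROGRAM[node]
            value = fn(*(ops[p] for p in ("L", "R") if p in ops))
            for dest, port in dests:  # a copy of the result goes to every destination
                if dest == "out":
                    result = value
                else:
                    tokens.append((dest, port, value))
        waves.append(ready)
    return result, waves


print(run(3, 2))  # (-171, [[1, 2], [3, 4], [5]])
```

Nodes 1 and 2 fire together in the first wave and nodes 3 and 4 in the second, which is the parallelism the dataflow model exposes without any explicit control flow.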

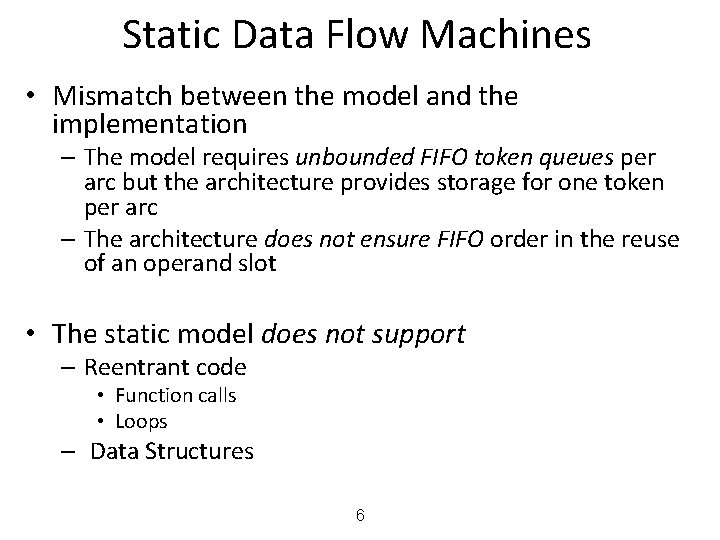

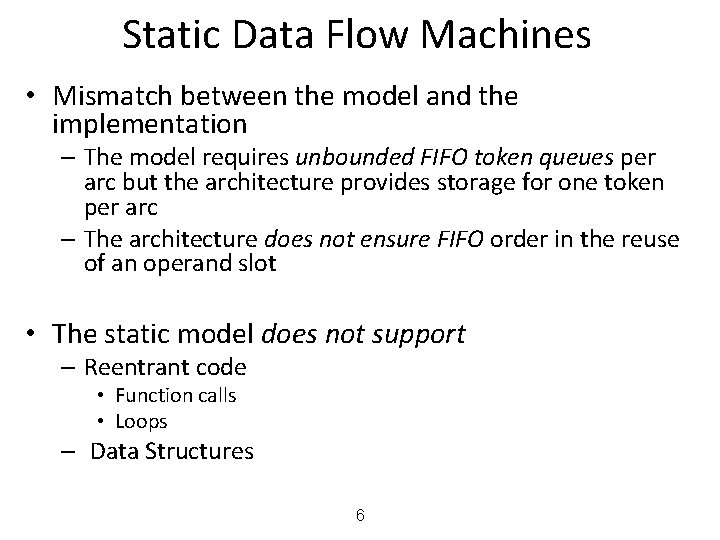

Static Data Flow Machines
• Mismatch between the model and the implementation
  – The model requires unbounded FIFO token queues per arc, but the architecture provides storage for only one token per arc
  – The architecture does not ensure FIFO order in the reuse of an operand slot
• The static model does not support
  – Reentrant code
    • Function calls
    • Loops
  – Data structures

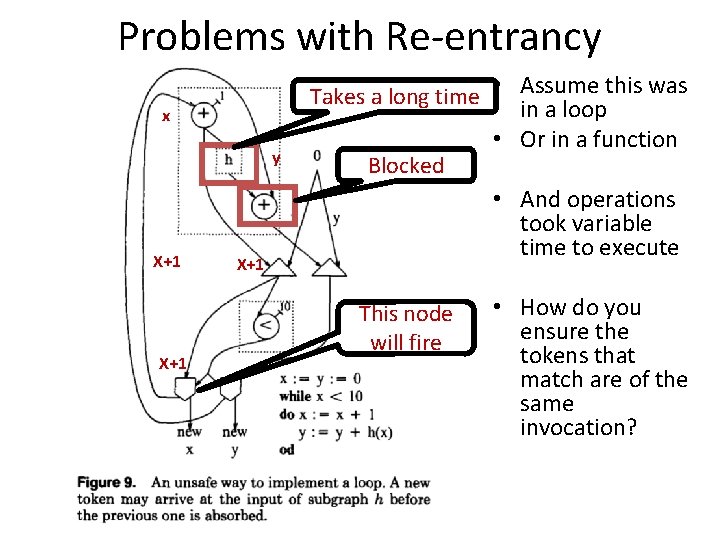

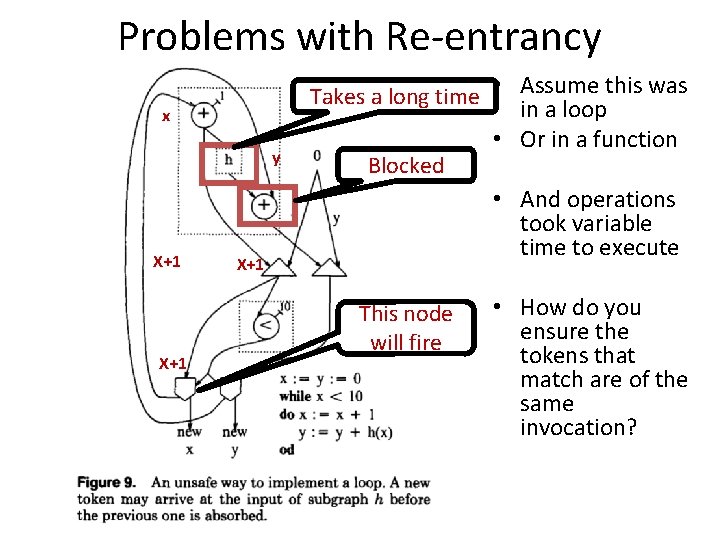

Problems with Re-entrancy
[Figure: two X+1 operators feed a node with inputs x and y; one X+1 takes a long time and is blocked, yet the downstream node will fire]
• Assume this was in a loop
• Or in a function
• And operations took variable time to execute
• How do you ensure the tokens that match are of the same invocation?
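The question above can be made concrete with a toy experiment. The sketch assumes a two-input node inside a loop whose right-hand operand for iteration 0 is delayed, so iteration 1's operand arrives first (the arrival order is hypothetical). Pairing operands purely by arrival order, as per-arc FIFO queues without tags would, combines values from different iterations; pairing them by an invocation tag, the idea dynamic dataflow machines build on, restores the correct matches. The function names are illustrative only.

```python
from collections import deque


def match_untagged(arrivals):
    """Per-port FIFO queues with no tags: operands pair up in arrival order."""
    queues = {"L": deque(), "R": deque()}
    fired = []
    for _tag, port, value in arrivals:  # the invocation tag is ignored
        queues[port].append(value)
        if queues["L"] and queues["R"]:
            fired.append((queues["L"].popleft(), queues["R"].popleft()))
    return fired


def match_tagged(arrivals):
    """Operands pair up only when their invocation tags are equal."""
    waiting, fired = {}, []
    for tag, port, value in arrivals:
        slot = waiting.setdefault(tag, {})
        slot[port] = value
        if len(slot) == 2:  # both operands of this invocation are present
            del waiting[tag]
            fired.append((slot["L"], slot["R"]))
    return fired


# Hypothetical arrival order: iteration 0's right operand is slow.
arrivals = [(0, "L", "x0"), (1, "L", "x1"), (1, "R", "y1"), (0, "R", "y0")]
print(match_untagged(arrivals))  # [('x0', 'y1'), ('x1', 'y0')]
print(match_tagged(arrivals))    # [('x1', 'y1'), ('x0', 'y0')]
```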

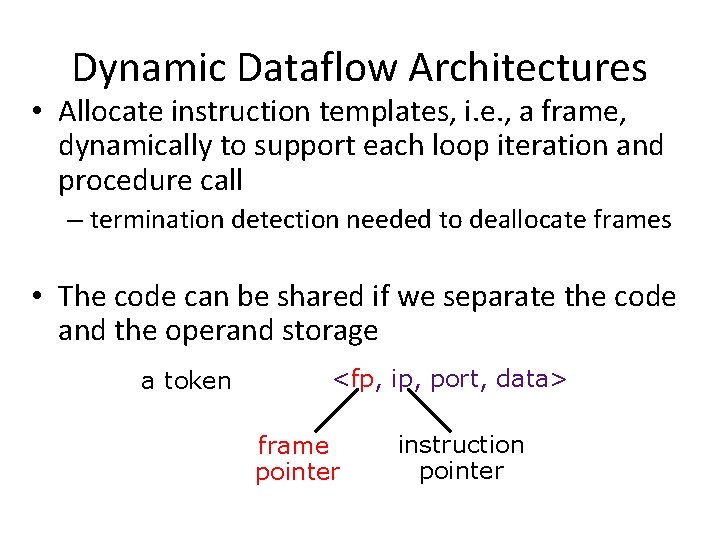

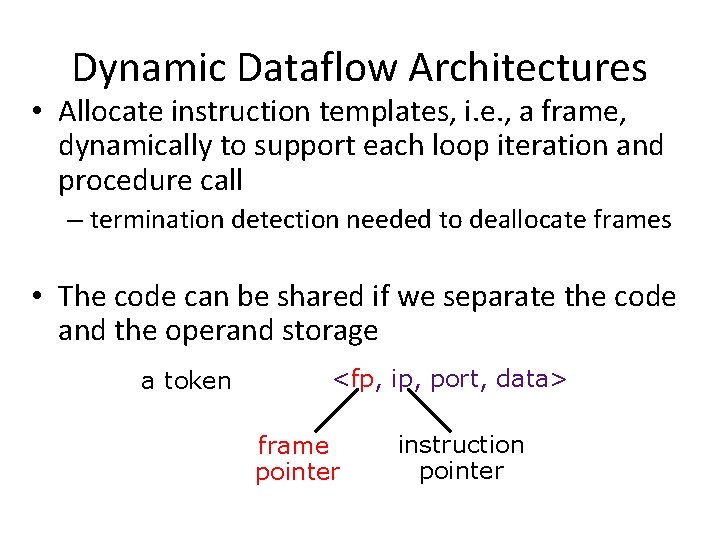

Dynamic Dataflow Architectures
• Allocate instruction templates, i.e., a frame, dynamically to support each loop iteration and procedure call
  – Termination detection is needed to deallocate frames
• The code can be shared if we separate the code and the operand storage
• A token is <fp, ip, port, data>: frame pointer, instruction pointer, port, and data

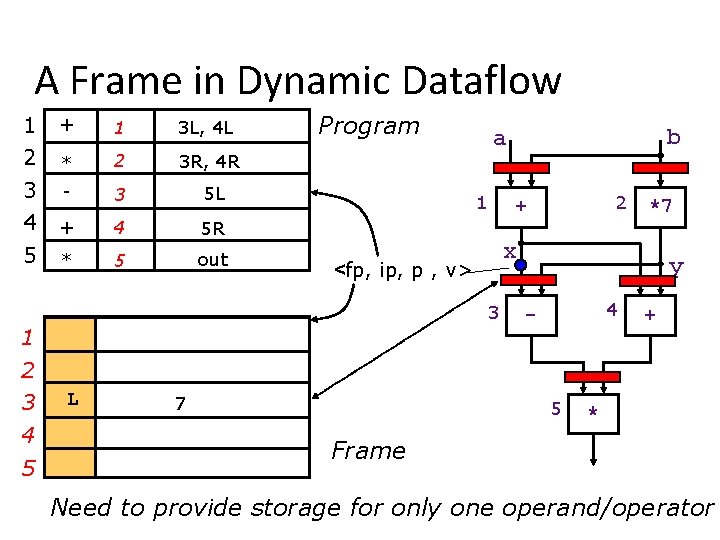

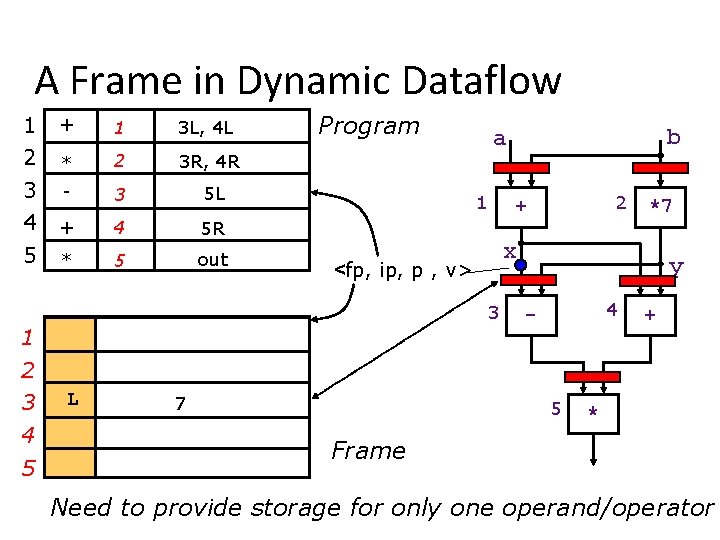

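A Frame in Dynamic Dataflow
Program (ip: operation → destinations):
  1: +  → 3L, 4L
  2: *7 → 3R, 4R
  3: -  → 5L
  4: +  → 5R
  5: *  → out
[Figure: the dataflow graph for inputs a and b with nodes 1-5, and a frame holding operand slots for the same instructions; tokens are <fp, ip, p, v>]
• Need to provide storage for only one operand per operator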
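A minimal sketch of frame-based matching, assuming the program on this slide (1: + → 3L, 4L; 2: *7 → 3R, 4R; 3: - → 5L; 4: + → 5R; 5: * → out). Each invocation gets its own frame with one operand slot per instruction, tokens carry <fp, ip, port, data>, and all invocations share the same code. The token queue, its FIFO order, and the names `CODE` and `run_invocations` are assumptions for illustration, not details of a specific machine.

```python
import operator
from collections import deque

# Code shared by every invocation: ip -> (operation, operand count, destinations).
CODE = {
    1: (operator.add, 2, [(3, "L"), (4, "L")]),
    2: (lambda b: b * 7, 1, [(3, "R"), (4, "R")]),
    3: (operator.sub, 2, [(5, "L")]),
    4: (operator.add, 2, [(5, "R")]),
    5: (operator.mul, 2, [("out", None)]),
}


def run_invocations(inputs):
    """Run one invocation per (a, b) pair; each gets its own frame but shares CODE."""
    # One frame per invocation, with a single operand slot per instruction.
    frames = {fp: {ip: None for ip in CODE} for fp in range(len(inputs))}
    queue = deque()  # tokens <fp, ip, port, data>
    for fp, (a, b) in enumerate(inputs):
        queue.extend([(fp, 1, "L", a), (fp, 1, "R", b), (fp, 2, "L", b)])
    results = {}
    while queue:
        fp, ip, port, value = queue.popleft()
        op, arity, dests = CODE[ip]
        if arity == 1:
            result = op(value)
        elif frames[fp][ip] is None:
            frames[fp][ip] = (port, value)  # first operand waits in the frame slot
            continue
        else:
            other_port, other_value = frames[fp][ip]
            frames[fp][ip] = None  # slot is free again once the pair has matched
            operands = {other_port: other_value, port: value}
            result = op(operands["L"], operands["R"])
        for dest_ip, dest_port in dests:
            if dest_ip == "out":
                results[fp] = result
            else:
                # The result token keeps the frame pointer of its invocation
                queue.append((fp, dest_ip, dest_port, result))
    return results


print(run_invocations([(3, 2), (1, 1)]))  # {0: -171, 1: -45}
```

Both invocations are in flight at once and their tokens interleave in the queue, yet each pair of operands meets in its own frame slot, so a token from one invocation never matches a token from the other.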

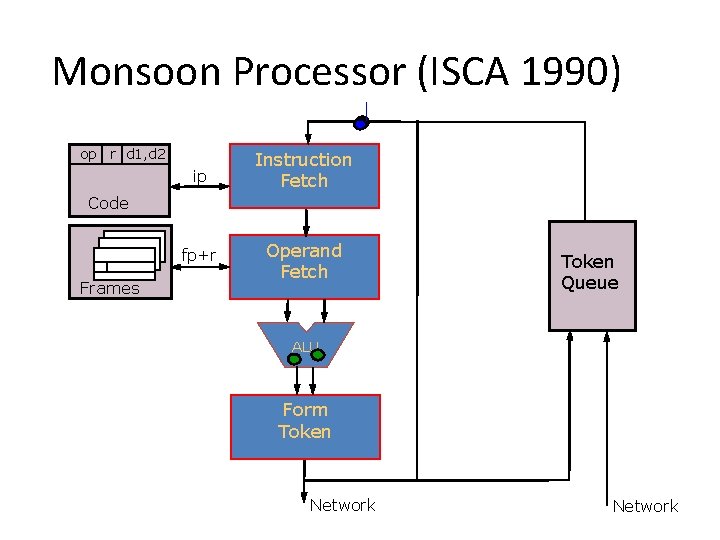

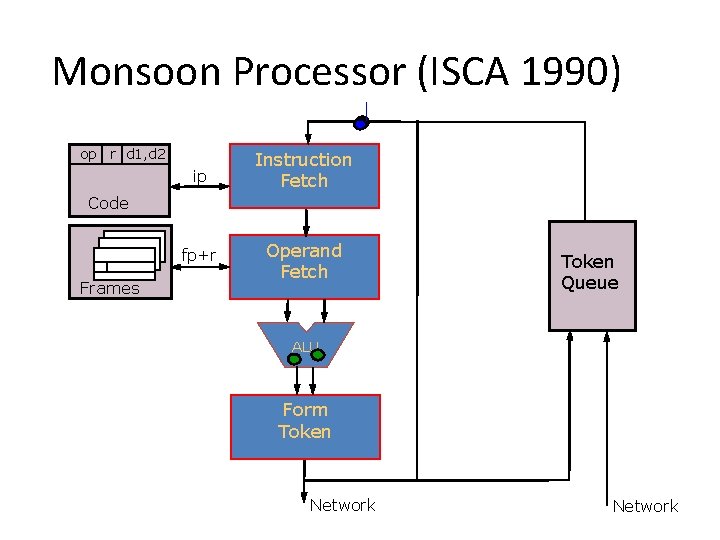

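Monsoon Processor (ISCA 1990)
• Instruction format: op r d1, d2
[Figure: pipeline in which the token's ip drives Instruction Fetch from Code, fp+r drives Operand Fetch from Frames, followed by the ALU and Form Token stages, with new tokens going to the Token Queue or the Network]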

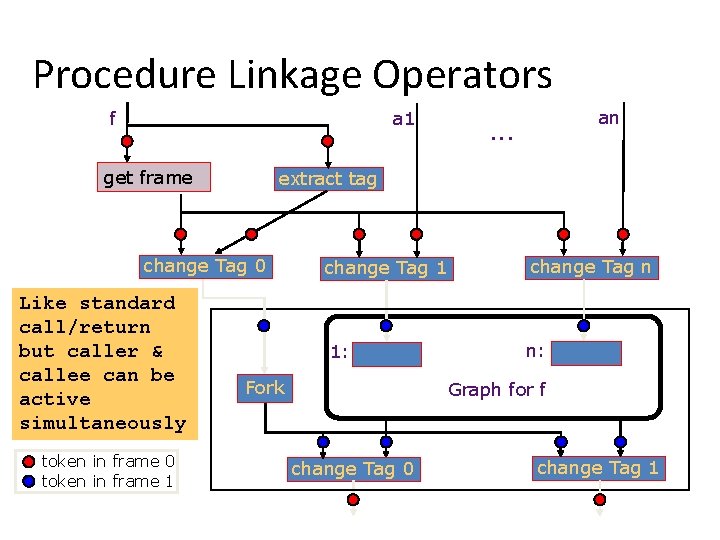

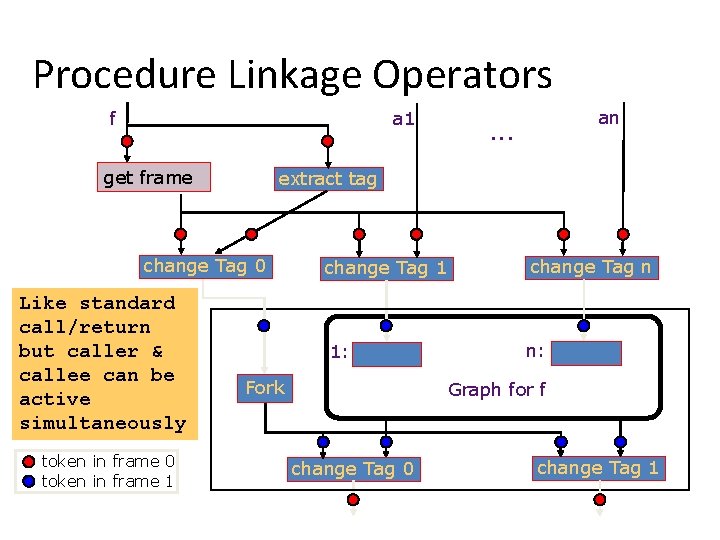

Procedure Linkage Operators
• Like standard call/return, but caller and callee can be active simultaneously
[Figure: a call of f with arguments a1 … an via get frame, extract tag, Fork, and change Tag 0 … change Tag n operators; tokens in frame 0 (caller) become tokens in frame 1 (callee) at entry points 1: … n: of the graph for f]

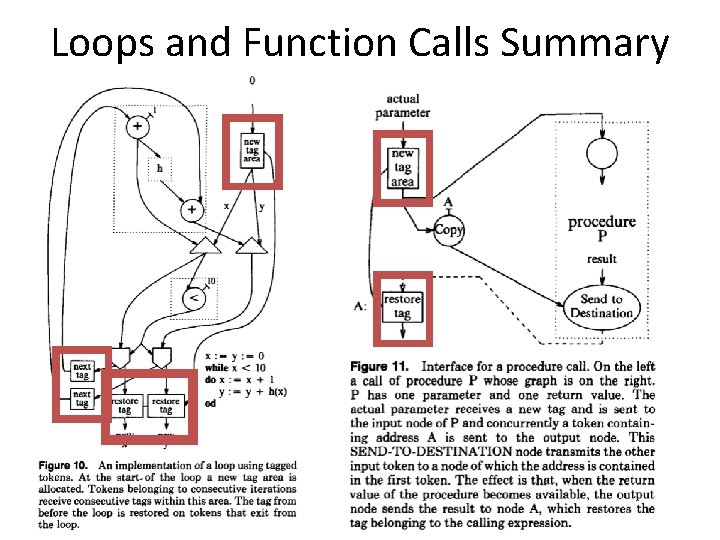

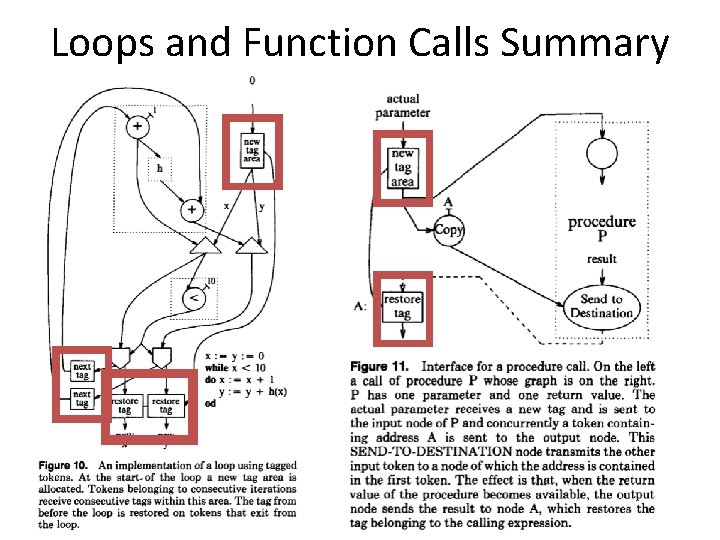

Loops and Function Calls Summary

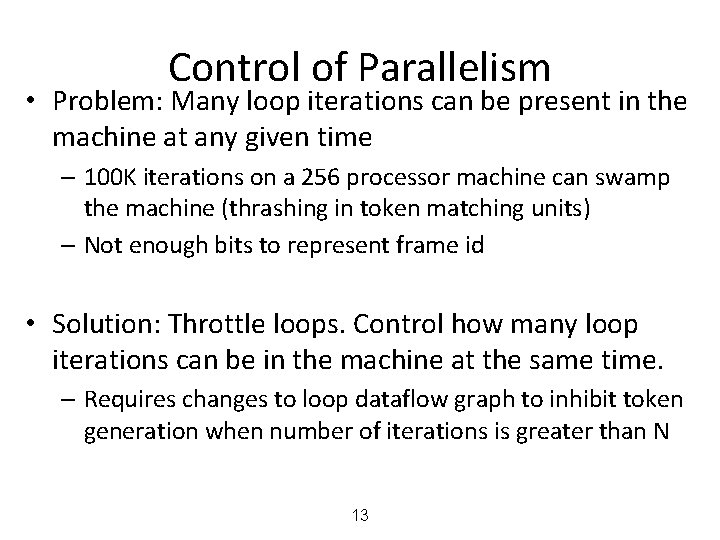

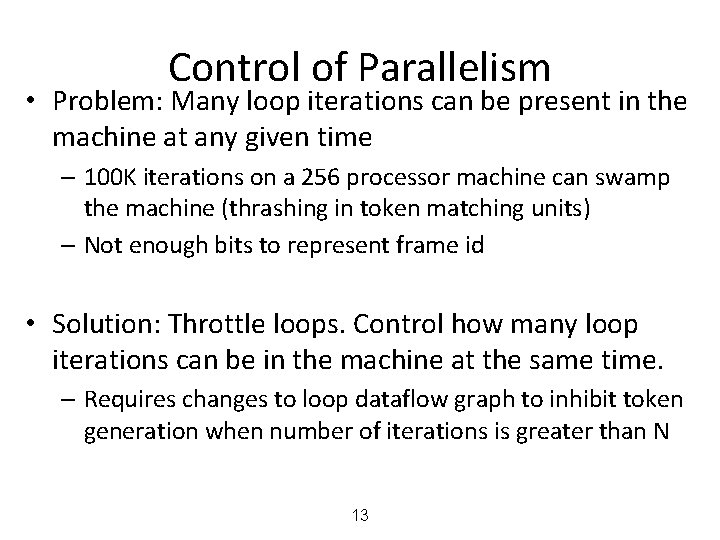

Control of Parallelism
• Problem: Many loop iterations can be present in the machine at any given time
  – 100K iterations on a 256-processor machine can swamp the machine (thrashing in token matching units)
  – Not enough bits to represent frame ids
• Solution: Throttle loops. Control how many loop iterations can be in the machine at the same time.
  – Requires changes to the loop dataflow graph to inhibit token generation when the number of iterations is greater than N
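The effect of throttling can be illustrated with a small scheduling simulation. It does not model the modified loop graph itself; it assumes each iteration occupies one frame from the moment it starts until it finishes, uses made-up iteration durations, assumes unlimited processors, and simply refuses to start a new iteration while `max_in_flight` (the N above) iterations are active. `throttled_loop` is a hypothetical name.

```python
import heapq


def throttled_loop(durations, max_in_flight):
    """Start iterations in order while keeping at most max_in_flight active.

    durations[i] is a hypothetical execution time for iteration i.
    Returns (finish_time, peak_iterations_in_flight). Requires max_in_flight >= 1.
    """
    active = []  # min-heap of finish times of iterations currently in the machine
    now = peak = 0
    for duration in durations:
        if len(active) == max_in_flight:
            # Throttle: hold back the next iteration until one iteration retires
            now = max(now, heapq.heappop(active))
        heapq.heappush(active, now + duration)
        peak = max(peak, len(active))
    return max(active, default=now), peak


print(throttled_loop([3] * 10, max_in_flight=4))   # (9, 4)
print(throttled_loop([3] * 10, max_in_flight=10))  # (3, 10)
```

The throttle caps the number of live frames at N, which bounds token-matching state and allows frame ids to be recycled; in this idealized model the price is a longer finish time than an unthrottled run would achieve.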

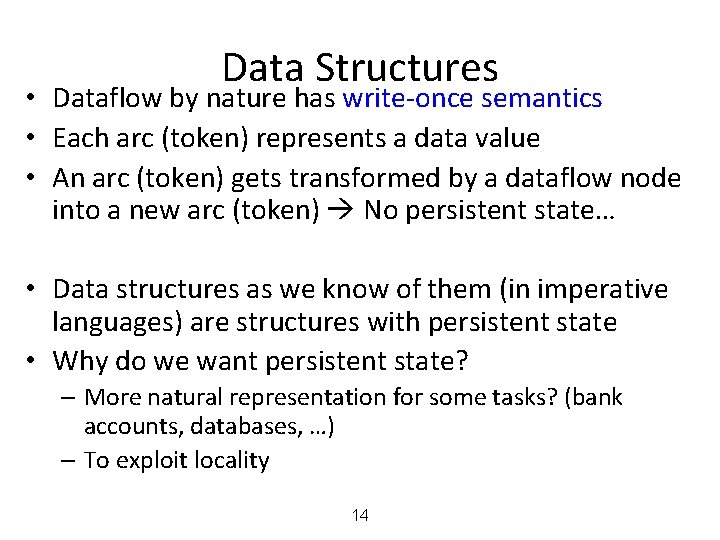

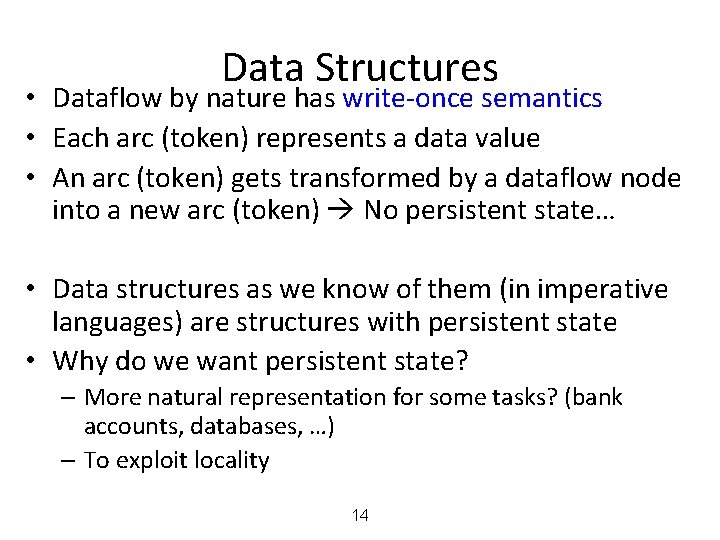

Data Structures
• Dataflow by nature has write-once semantics
• Each arc (token) represents a data value
• An arc (token) gets transformed by a dataflow node into a new arc (token), so there is no persistent state…
• Data structures as we know them (in imperative languages) are structures with persistent state
• Why do we want persistent state?
  – More natural representation for some tasks? (bank accounts, databases, …)
  – To exploit locality

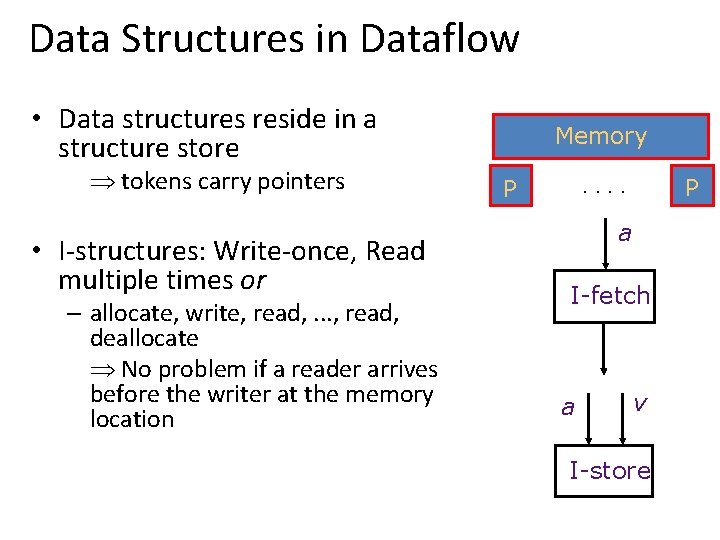

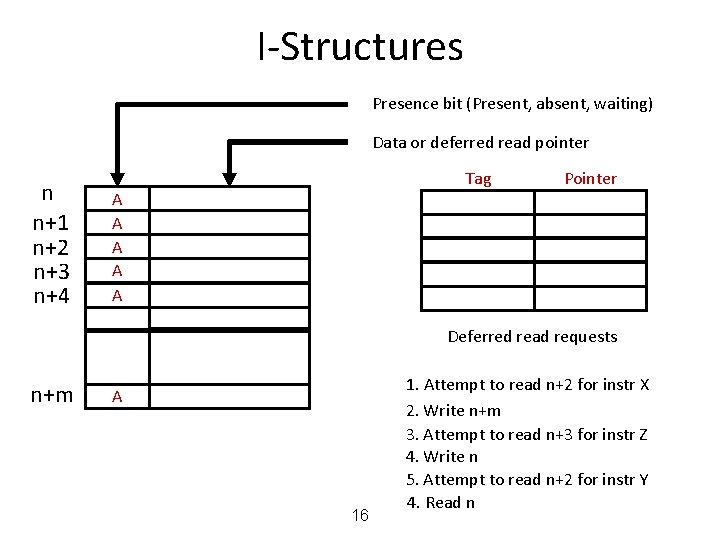

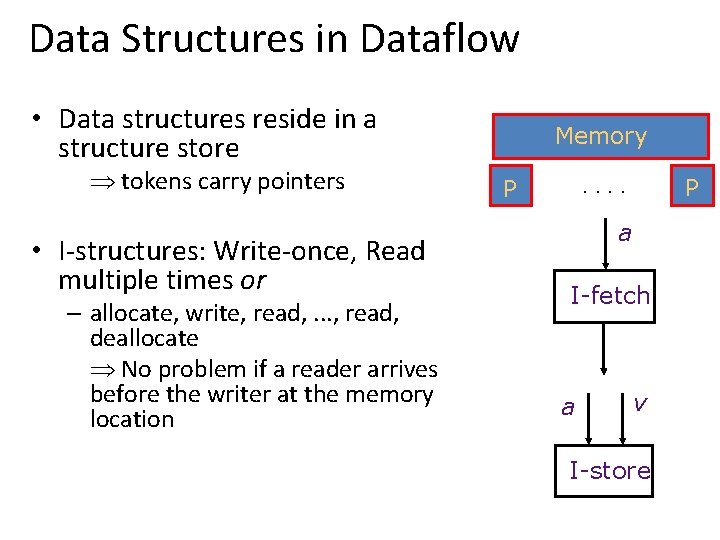

Data Structures in Dataflow
• Data structures reside in a structure store ⇒ tokens carry pointers
• I-structures: write once, read multiple times, or
  – allocate, write, read, ..., read, deallocate
  ⇒ No problem if a reader arrives before the writer at the memory location
[Figure: processors (P) send I-fetch (address a) and I-store (address a, value v) requests to the structure memory]

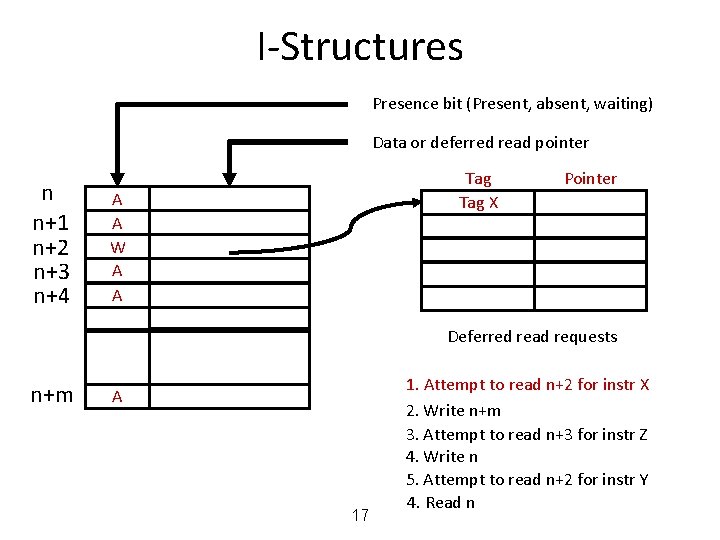

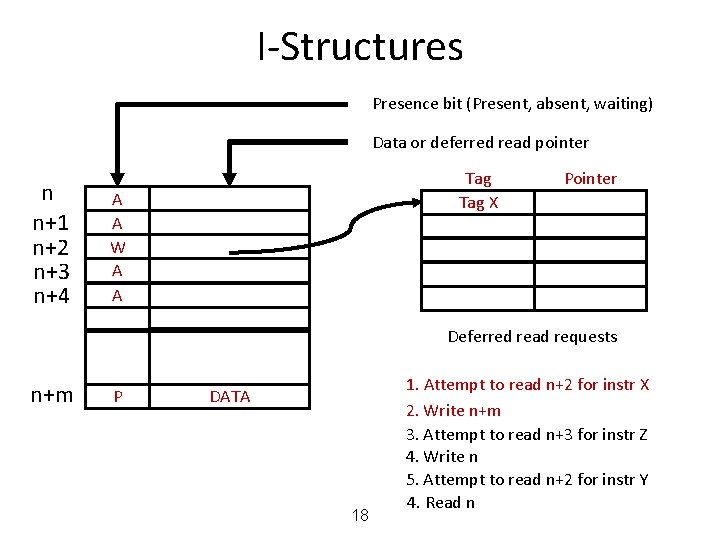

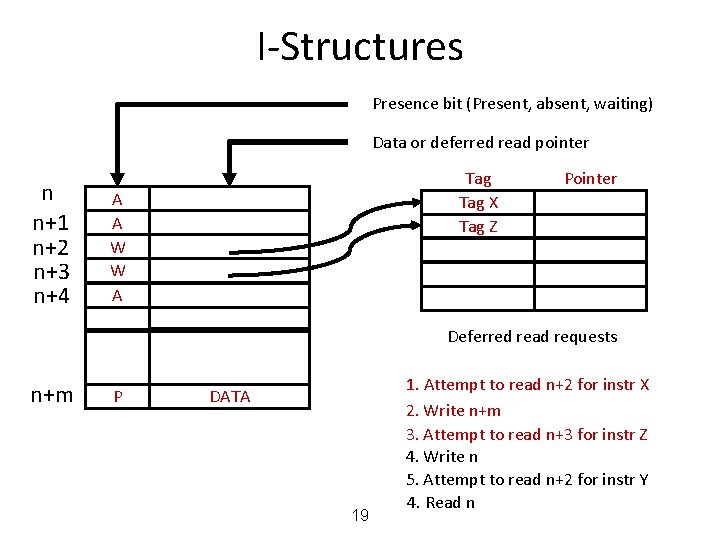

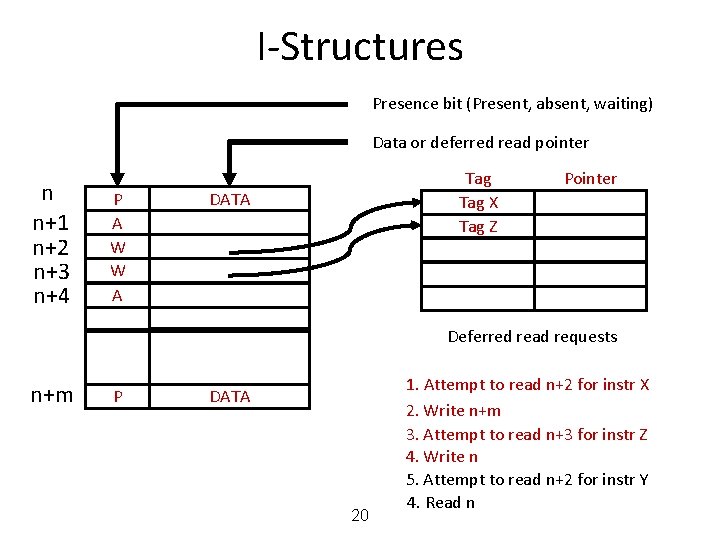

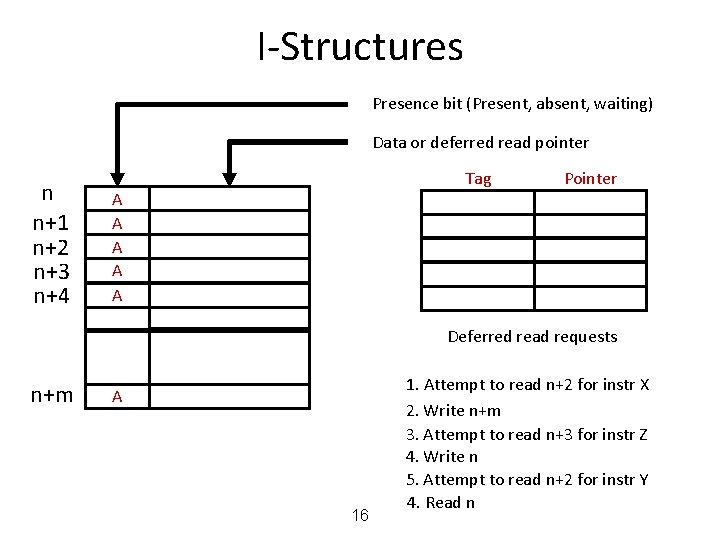

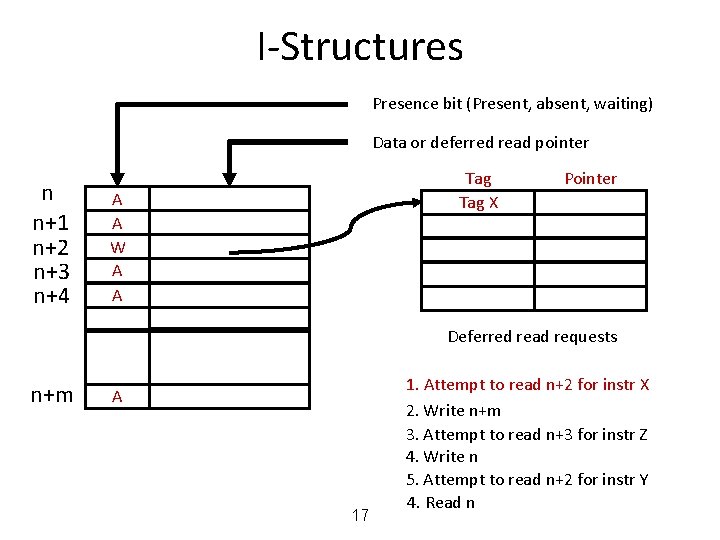

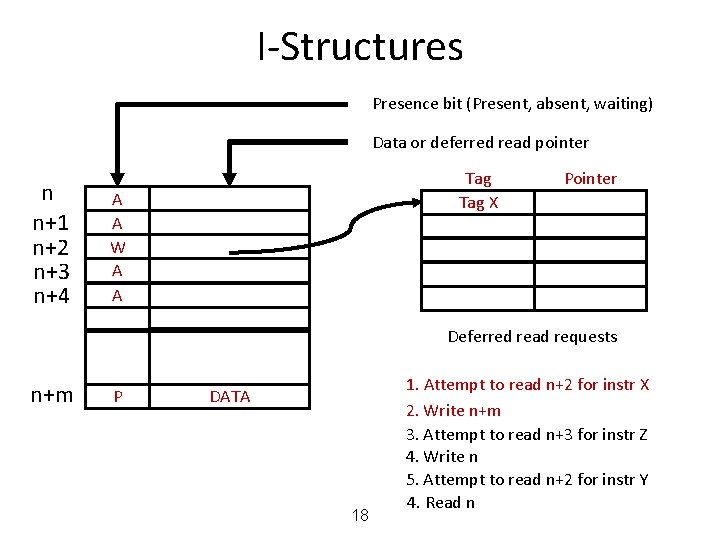

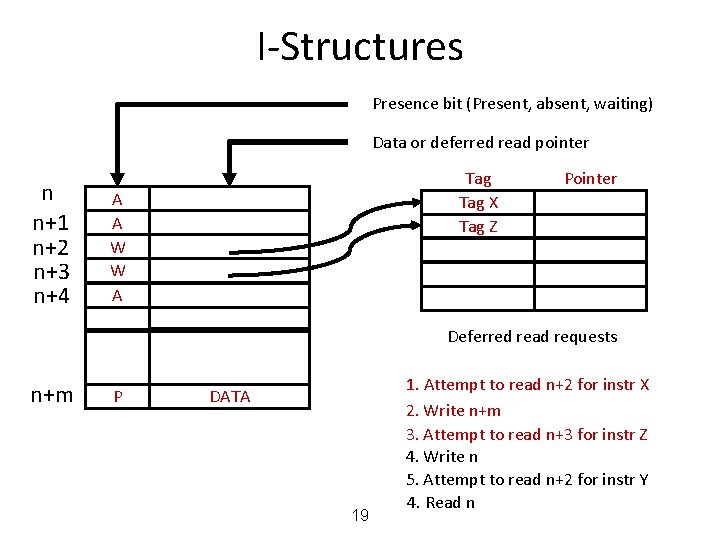

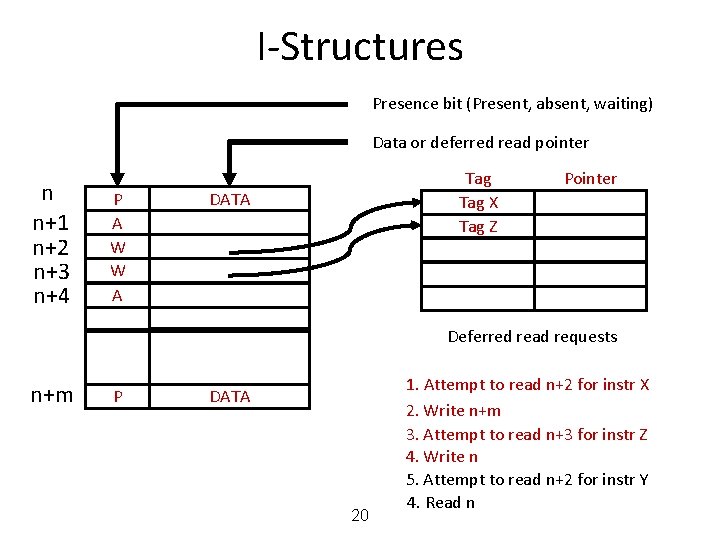

I-Structures
• Each element has a presence bit (Present, Absent, or Waiting) and holds either data or a pointer to its deferred read requests, each tagged with the requesting instruction
• Example sequence:
  1. Attempt to read n+2 for instr X
  2. Write n+m
  3. Attempt to read n+3 for instr Z
  4. Write n
  5. Attempt to read n+2 for instr Y
  6. Read n
• Initial state: n A, n+1 A, n+2 A, n+3 A, n+4 A, n+m A

I-Structures (after step 1)
• State: n A, n+1 A, n+2 W (deferred: X), n+3 A, n+4 A, n+m A

I-Structures (after step 2)
• State: n A, n+1 A, n+2 W (deferred: X), n+3 A, n+4 A, n+m P (DATA)

I-Structures (after step 3)
• State: n A, n+1 A, n+2 W (deferred: X), n+3 W (deferred: Z), n+4 A, n+m P (DATA)

I-Structures (after step 4)
• State: n P (DATA), n+1 A, n+2 W (deferred: X), n+3 W (deferred: Z), n+4 A, n+m P (DATA)

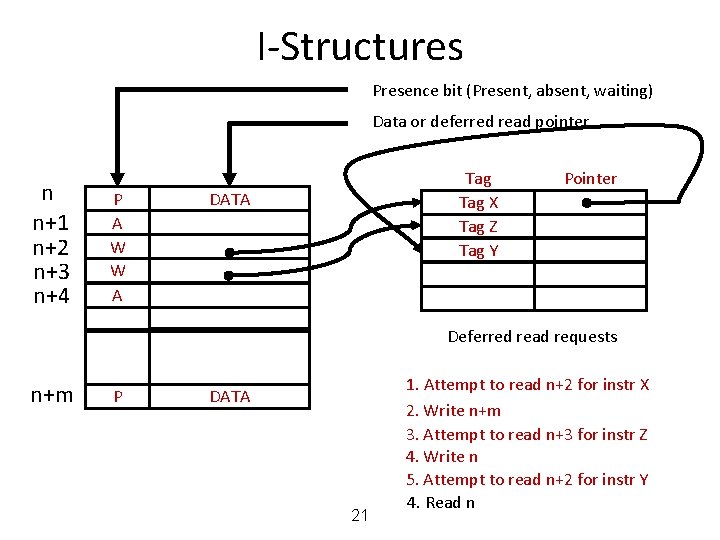

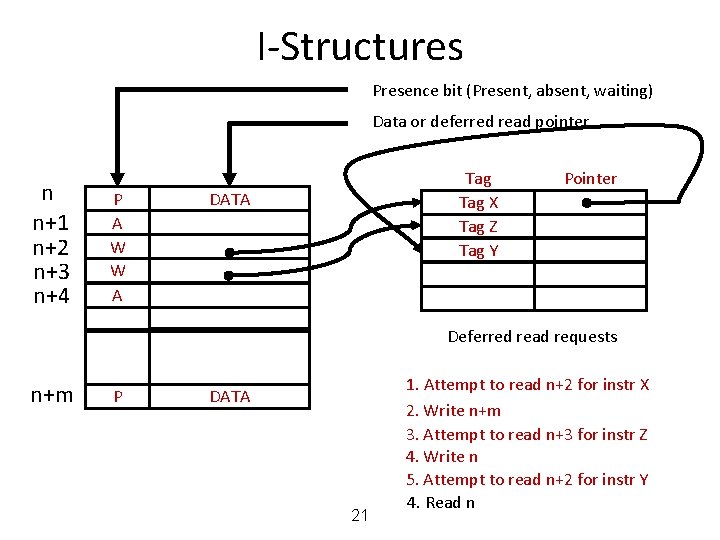

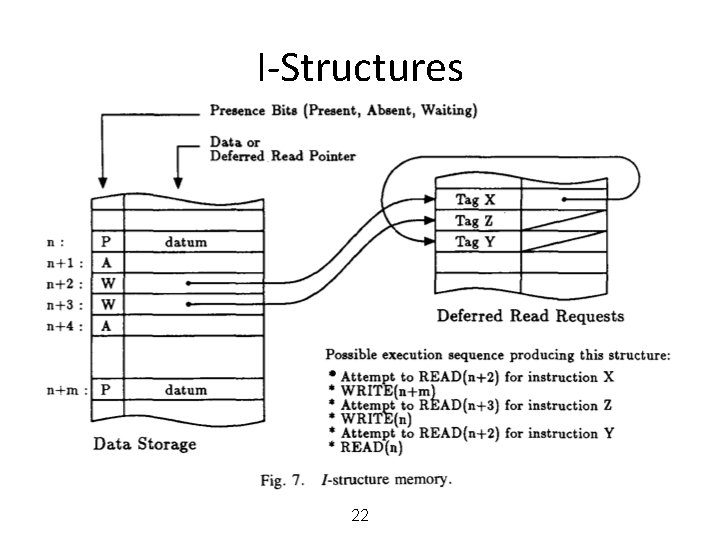

I-Structures (after step 5)
• State: n P (DATA), n+1 A, n+2 W (deferred: X, Y), n+3 W (deferred: Z), n+4 A, n+m P (DATA)
• Step 6 (read n) finds n Present and returns its DATA immediately
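The presence-bit protocol walked through in these slides can be sketched as a small Python class. Assumptions: elements are indexed from n = 0, m is taken to be 5 so that n+m is index 5, deferred reads are kept as a Python list per element rather than a linked list in memory, the requester in step 6 is called "R", and writing an element twice raises an error. `IStructure`, `fetch`, and `store` are illustrative names standing in for the I-fetch and I-store operations.

```python
from enum import Enum


class Presence(Enum):
    ABSENT = "A"
    PRESENT = "P"
    WAITING = "W"


class IStructure:
    """Write-once array: each element has a presence bit plus data or deferred reads."""

    def __init__(self, size):
        self.presence = [Presence.ABSENT] * size
        self.data = [None] * size
        self.deferred = [[] for _ in range(size)]  # tags of readers waiting per element

    def fetch(self, i, reader):
        """I-fetch: answer immediately if present, otherwise defer the read."""
        if self.presence[i] is Presence.PRESENT:
            return [(reader, self.data[i])]
        self.presence[i] = Presence.WAITING
        self.deferred[i].append(reader)
        return []

    def store(self, i, value):
        """I-store: write once and release every deferred read of element i."""
        if self.presence[i] is Presence.PRESENT:
            raise ValueError(f"element {i} written twice")
        readers = self.deferred[i]
        self.presence[i], self.data[i], self.deferred[i] = Presence.PRESENT, value, []
        return [(reader, value) for reader in readers]


# Replay the slides' sequence with n = 0 and a hypothetical m = 5 (n+m = 5).
s = IStructure(6)
s.fetch(2, "X")   # 1. read n+2 for X: deferred
s.store(5, "D5")  # 2. write n+m
s.fetch(3, "Z")   # 3. read n+3 for Z: deferred
s.store(0, "D0")  # 4. write n
s.fetch(2, "Y")   # 5. read n+2 for Y: deferred behind X
print(s.fetch(0, "R"))                # 6. read n -> [('R', 'D0')]
print([p.value for p in s.presence])  # ['P', 'A', 'W', 'W', 'A', 'P']
print(s.store(2, "D2"))               # [('X', 'D2'), ('Y', 'D2')]
```

The final `store` goes one step beyond the slides: writing n+2 releases both deferred readers, X and Y, in the order they arrived.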

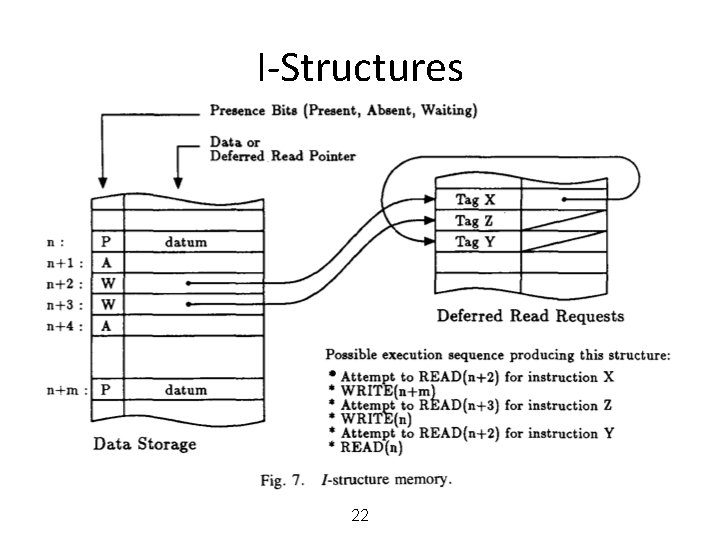

I-Structures

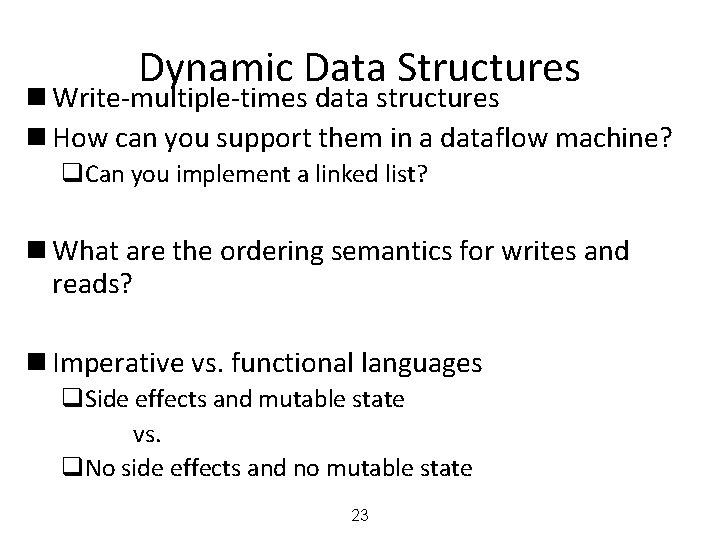

Dynamic Data Structures
• Write-multiple-times data structures
• How can you support them in a dataflow machine?
  – Can you implement a linked list?
• What are the ordering semantics for writes and reads?
• Imperative vs. functional languages
  – Side effects and mutable state vs.
  – No side effects and no mutable state

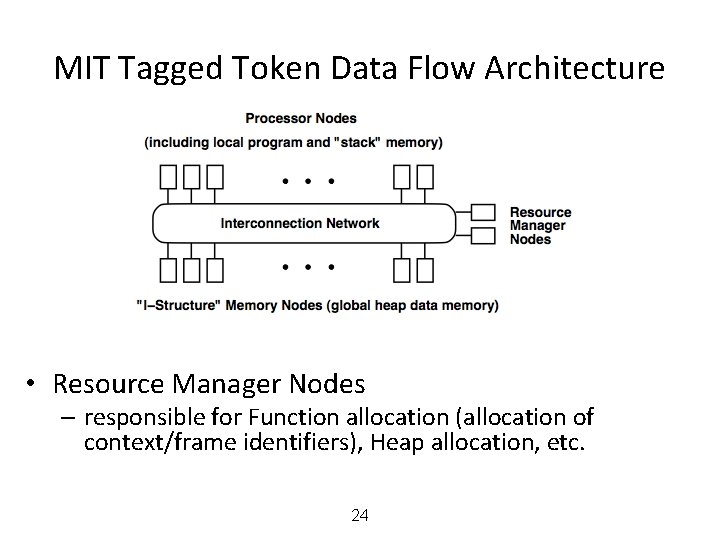

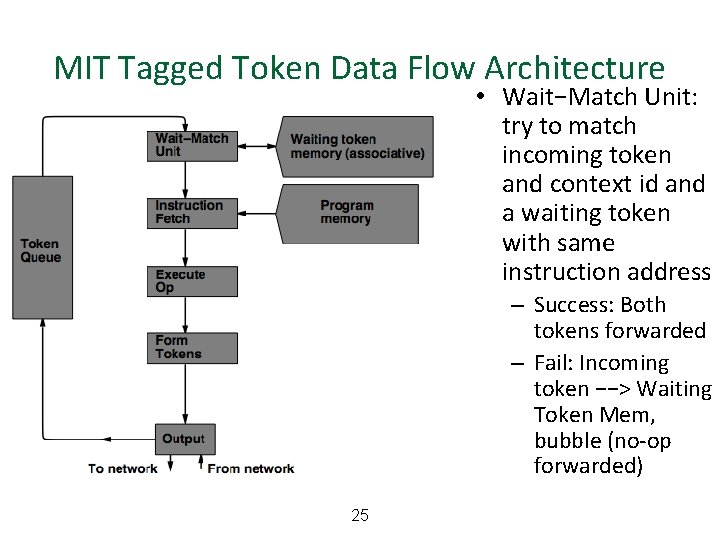

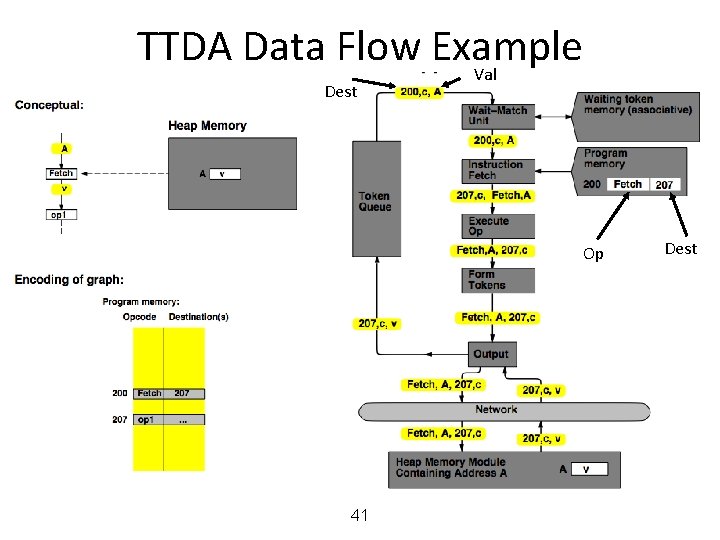

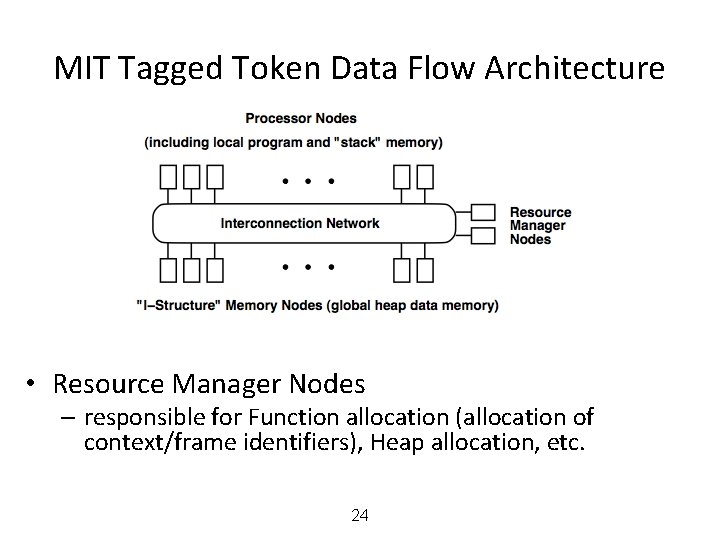

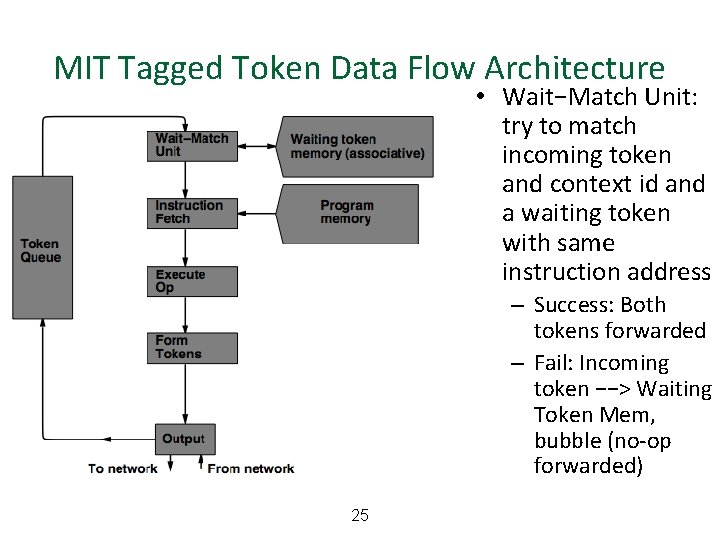

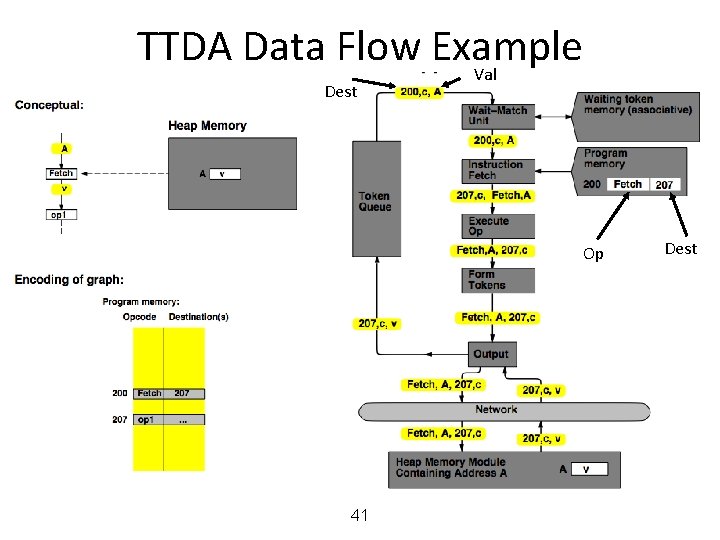

MIT Tagged Token Data Flow Architecture
• Resource Manager Nodes
  – Responsible for function allocation (allocation of context/frame identifiers), heap allocation, etc.

MIT Tagged Token Data Flow Architecture
• Wait-Match Unit: tries to match an incoming token with a waiting token that has the same context id and instruction address
  – Success: both tokens are forwarded
  – Fail: the incoming token is stored in the Waiting Token Memory, and a bubble (no-op) is forwarded
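The wait-match behavior can be sketched as follows, assuming every instruction reaching this stage has exactly two operands and using a Python dictionary keyed by (context id, instruction address) in place of the associative Waiting Token Memory. The tuple formats for tokens and outputs and the name `wait_match` are assumptions.

```python
def wait_match(tokens):
    """Model of a wait-match stage for two-operand instructions.

    tokens: iterable of (context_id, instr_addr, port, value).
    Returns the per-token outputs and whatever is left in waiting-token memory.
    """
    waiting = {}  # stands in for the associative Waiting Token Memory
    outputs = []
    for context, addr, port, value in tokens:
        key = (context, addr)
        if key in waiting:
            # Success: forward both tokens to the next stage
            outputs.append(("fire", key, waiting.pop(key), (port, value)))
        else:
            # Fail: park the incoming token and forward a bubble (no-op)
            waiting[key] = (port, value)
            outputs.append(("bubble",))
    return outputs, waiting


out, left = wait_match([(0, 7, "L", 1), (1, 7, "L", 2), (0, 7, "R", 3)])
print(out)   # [('bubble',), ('bubble',), ('fire', (0, 7), ('L', 1), ('R', 3))]
print(left)  # {(1, 7): ('L', 2)}
```

The second token targets the same instruction address as the first but carries a different context id, so it does not match and waits instead.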

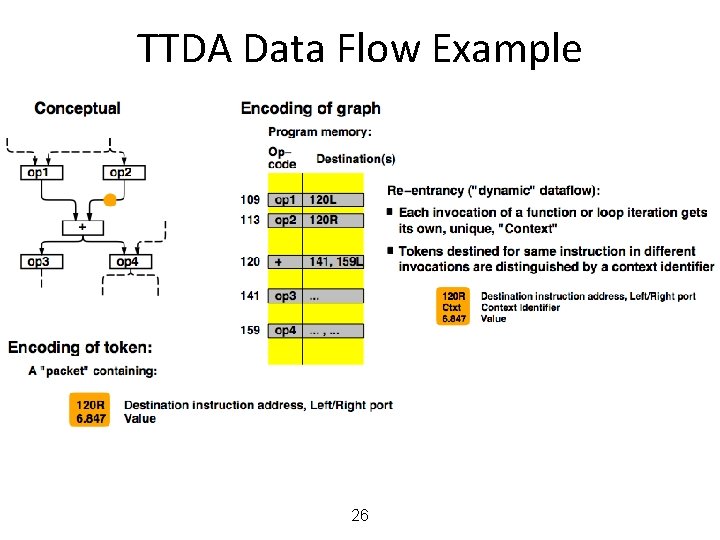

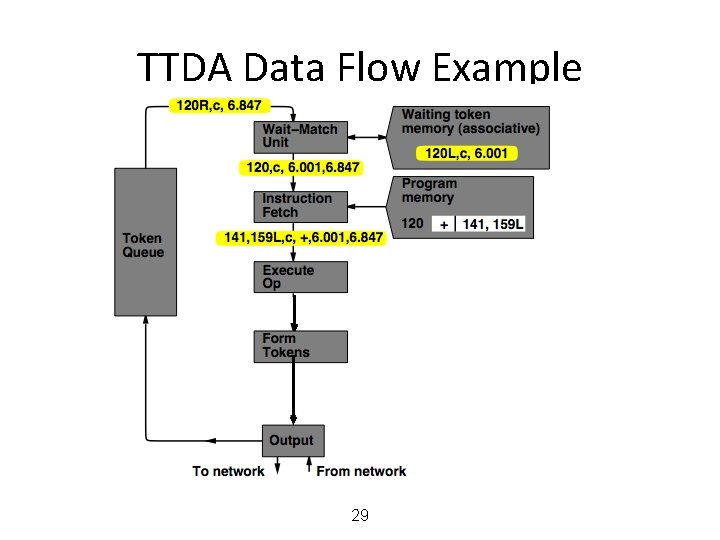

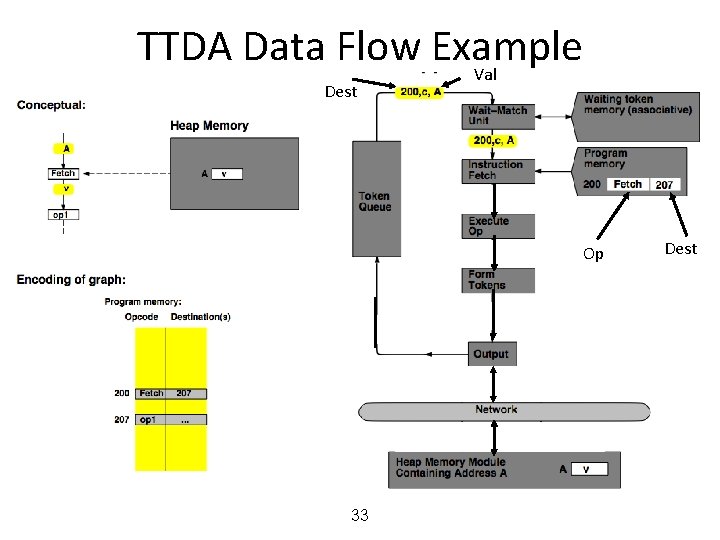

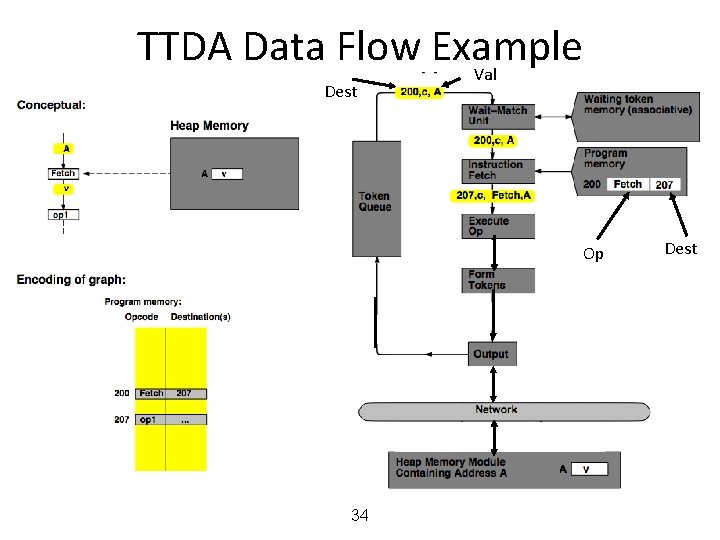

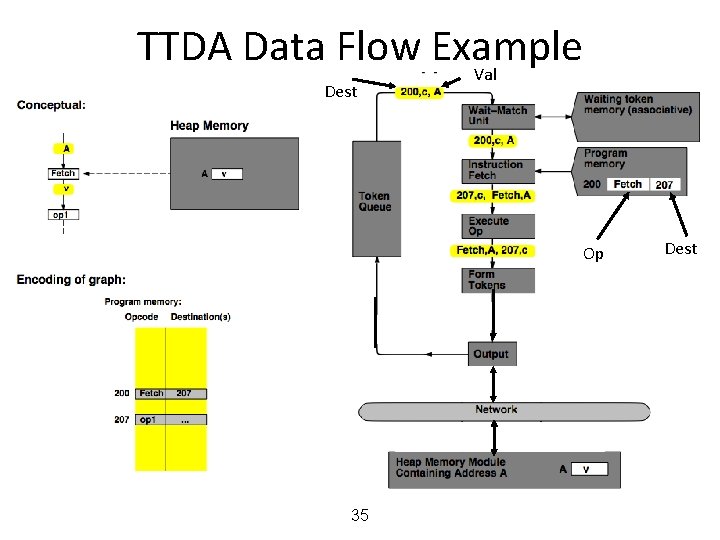

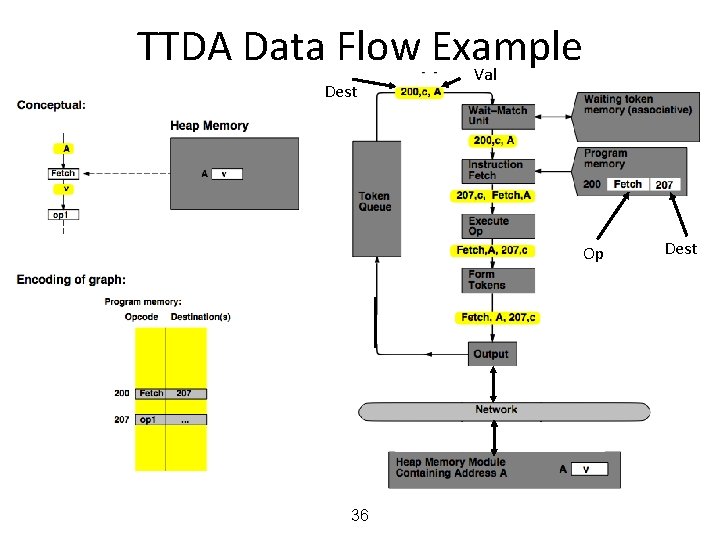

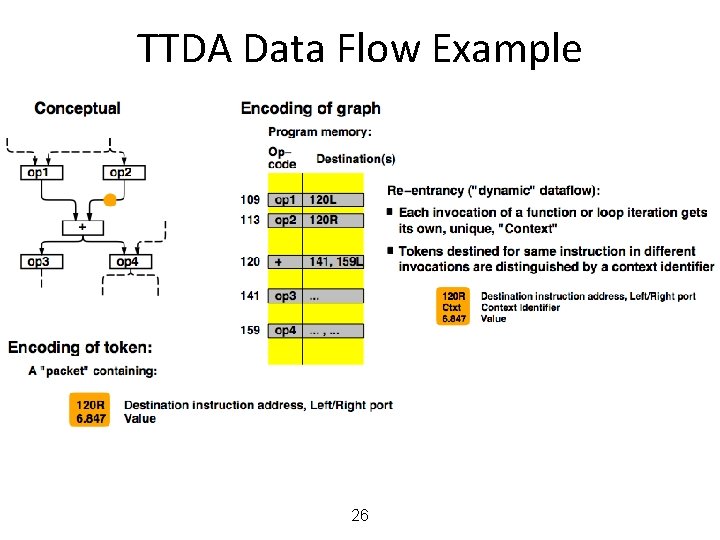

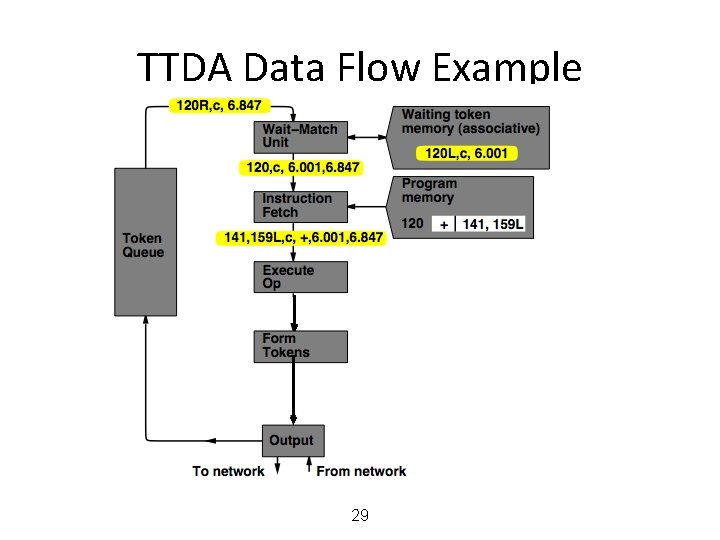

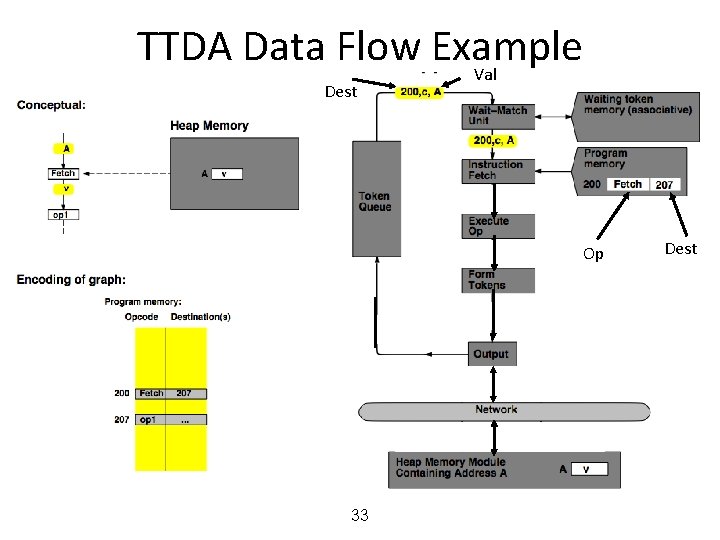

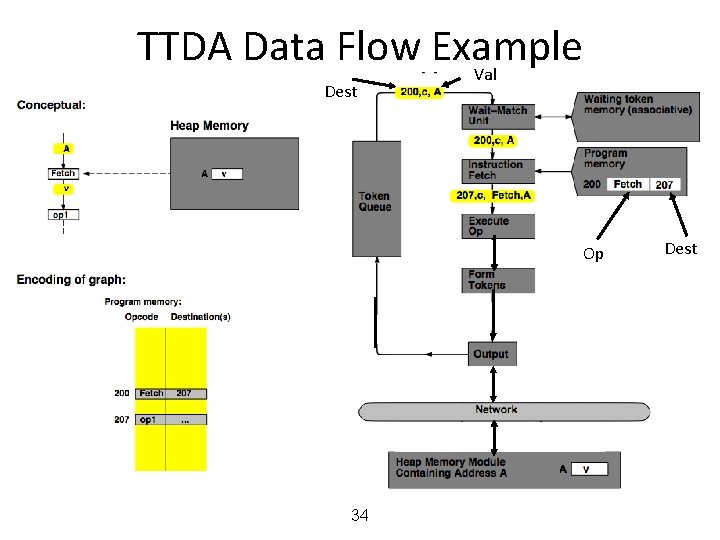

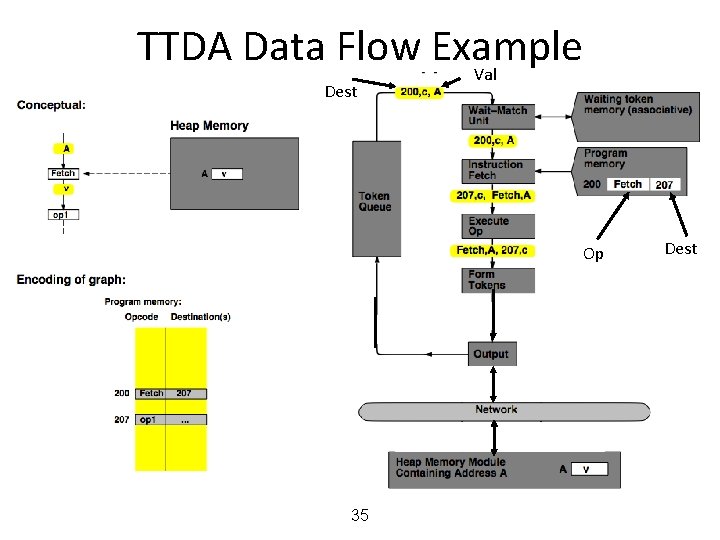

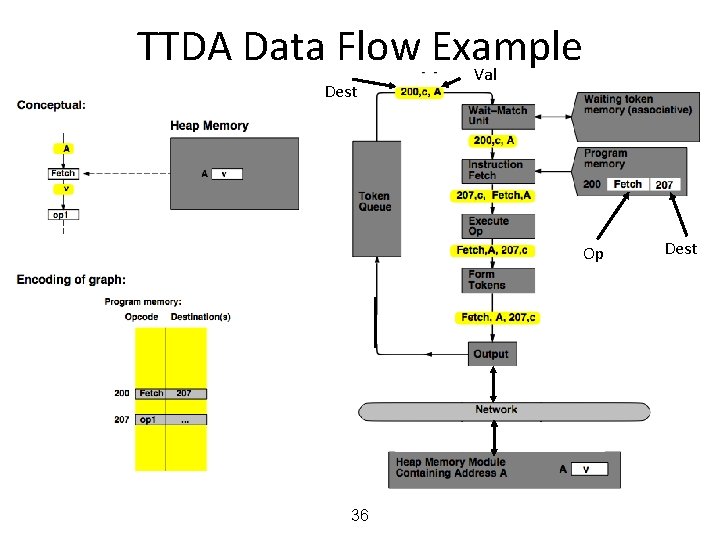

TTDA Data Flow Example

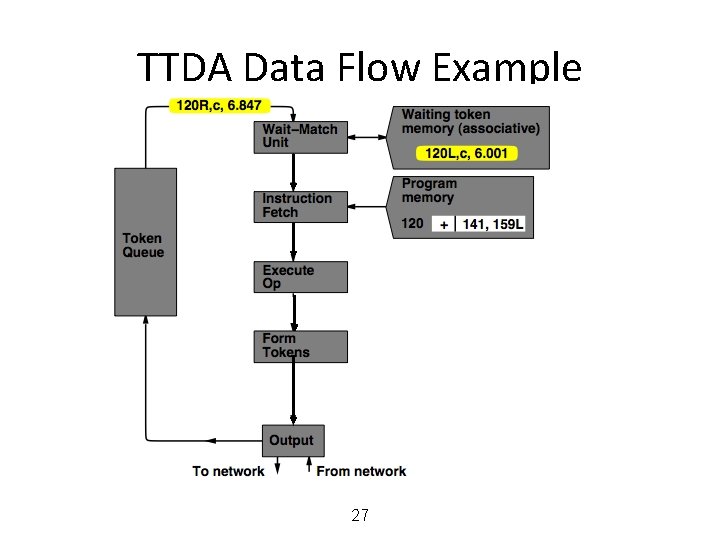

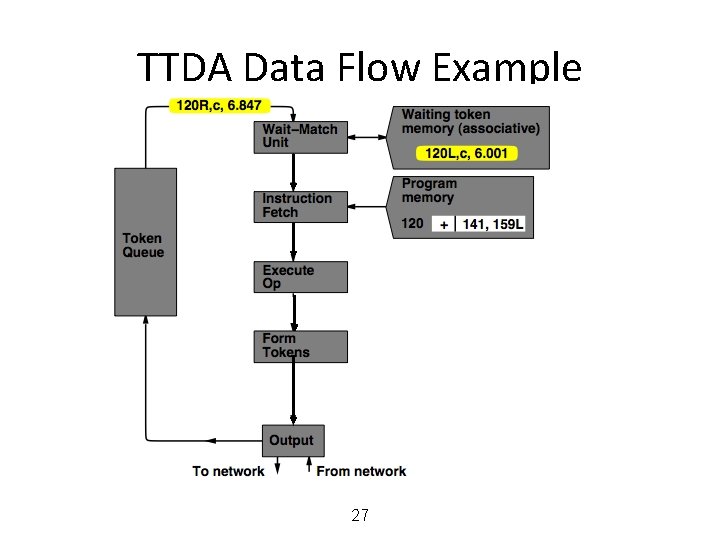


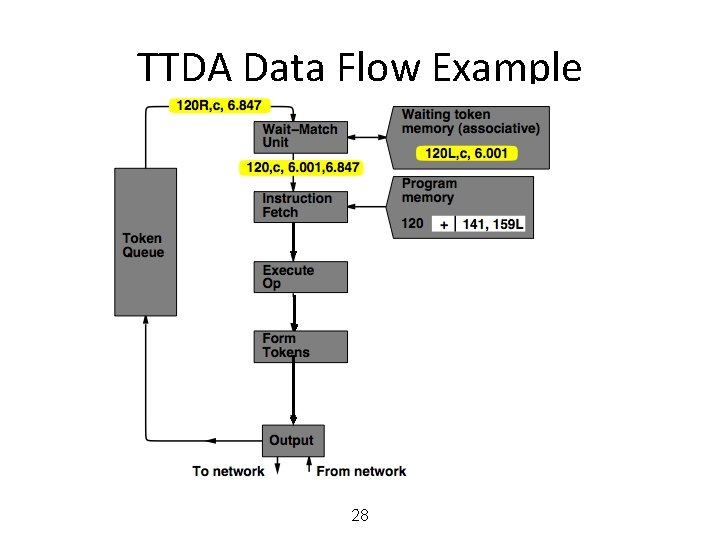

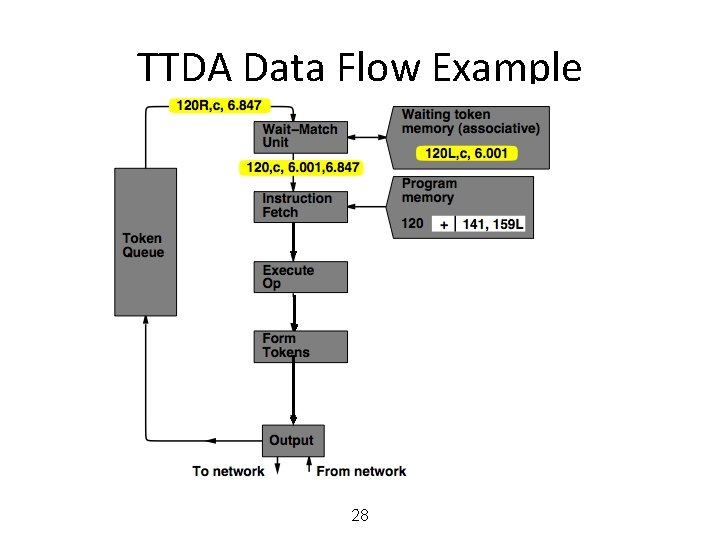


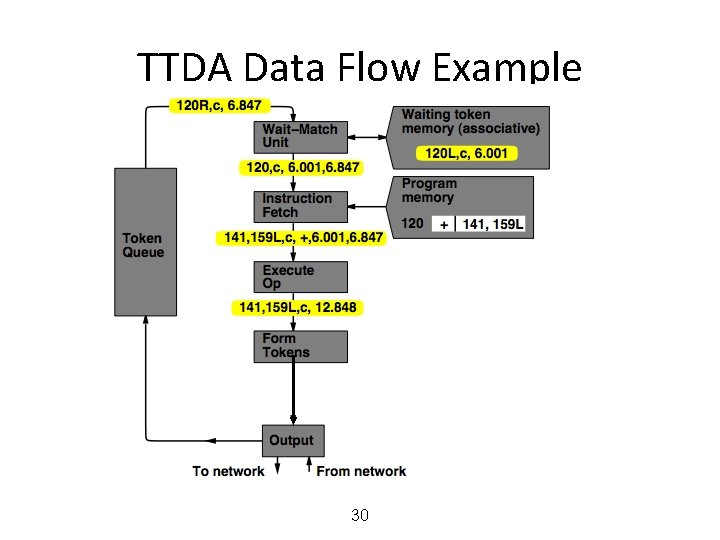

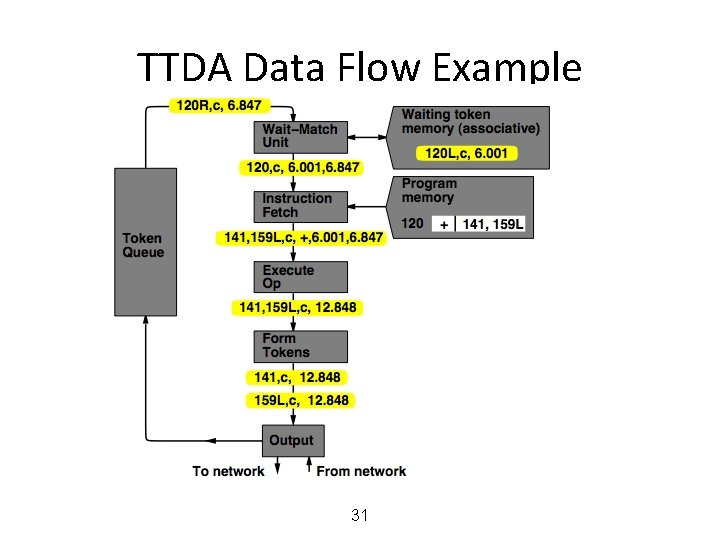

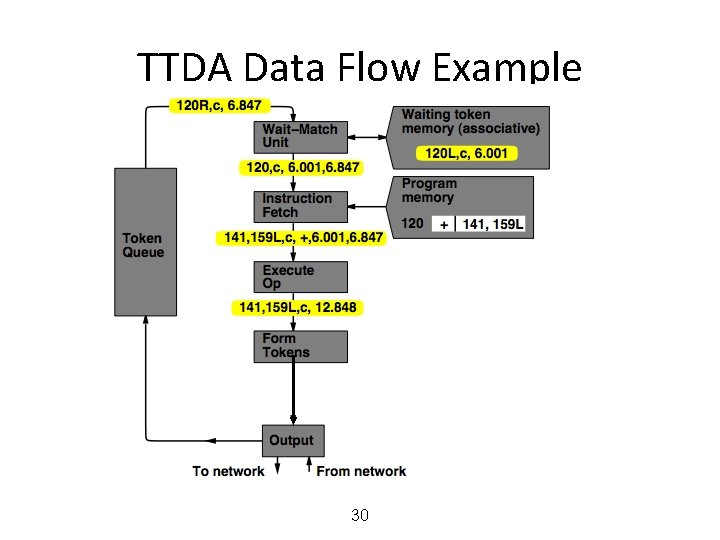


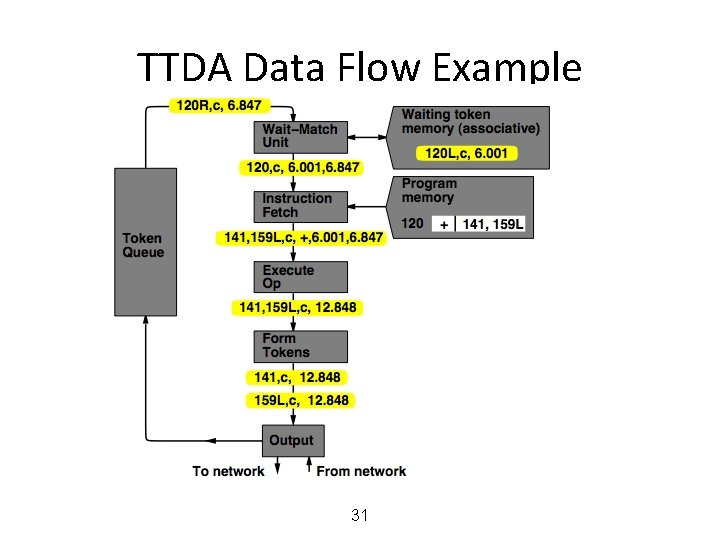


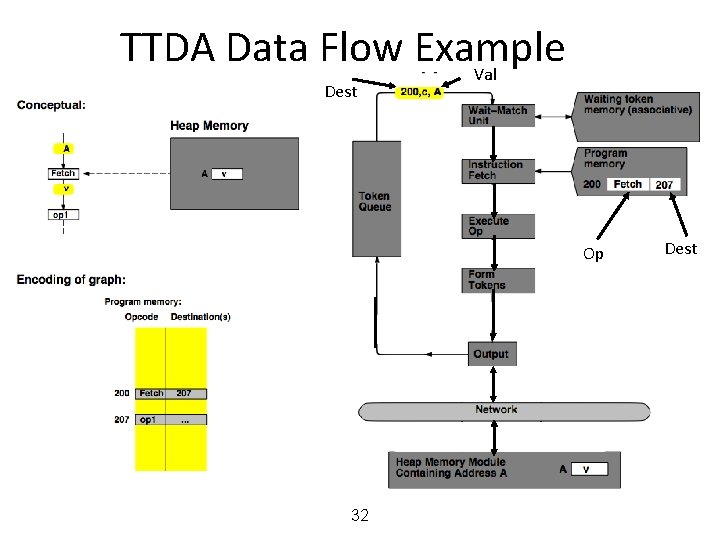

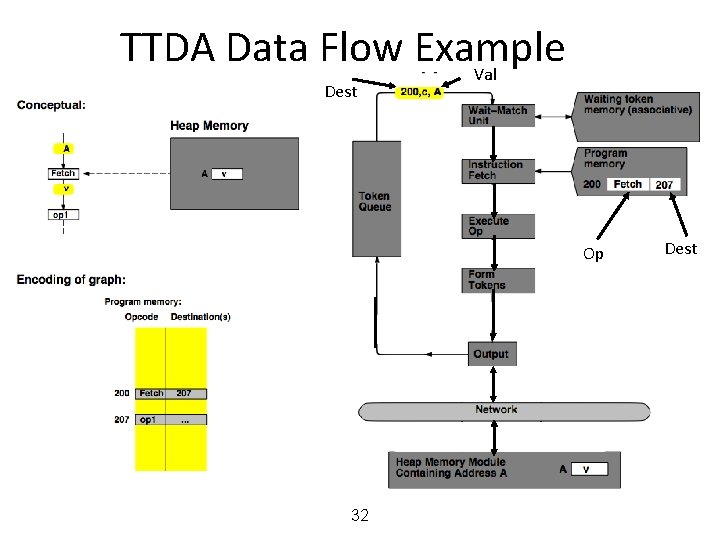


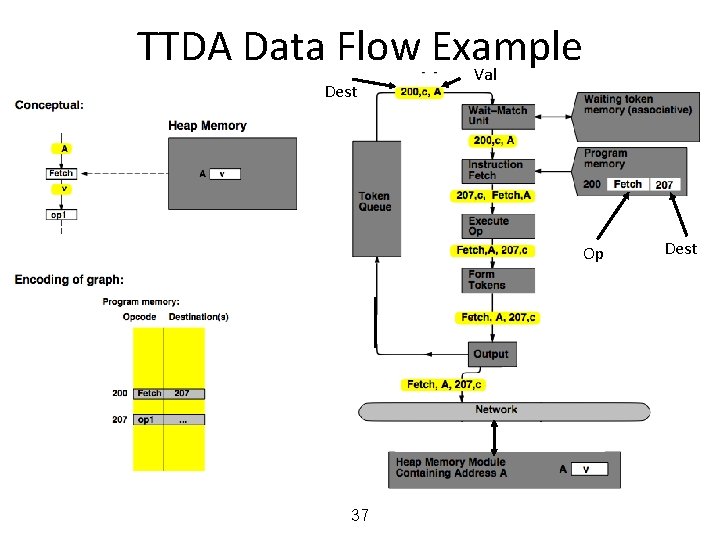

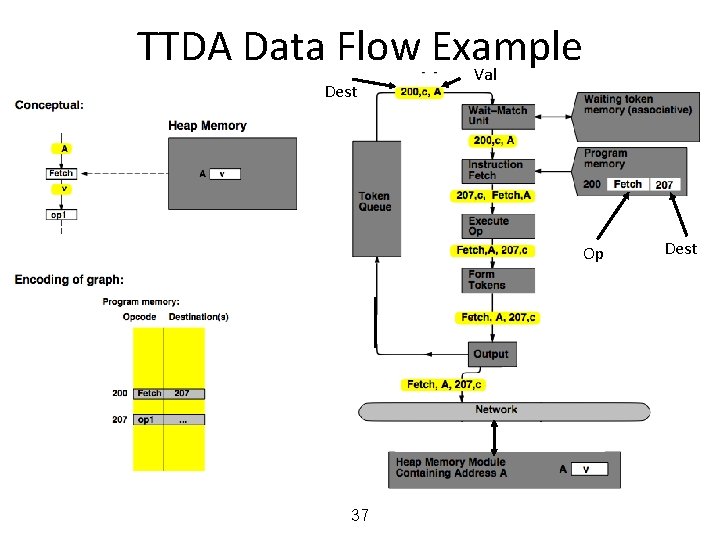


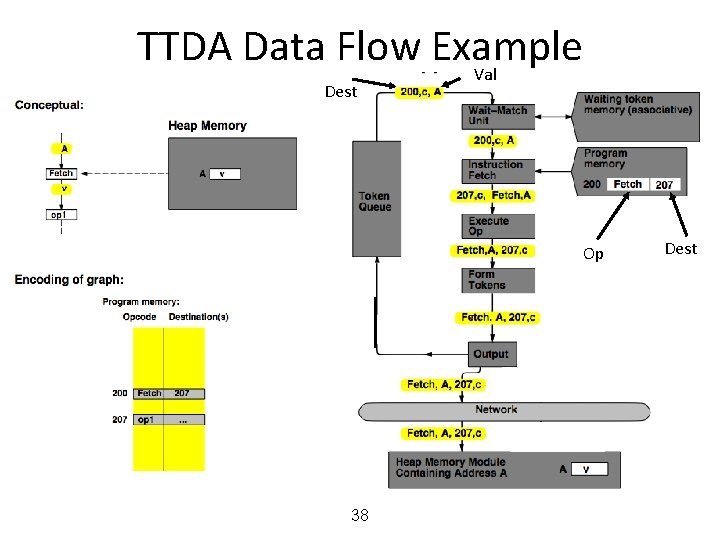

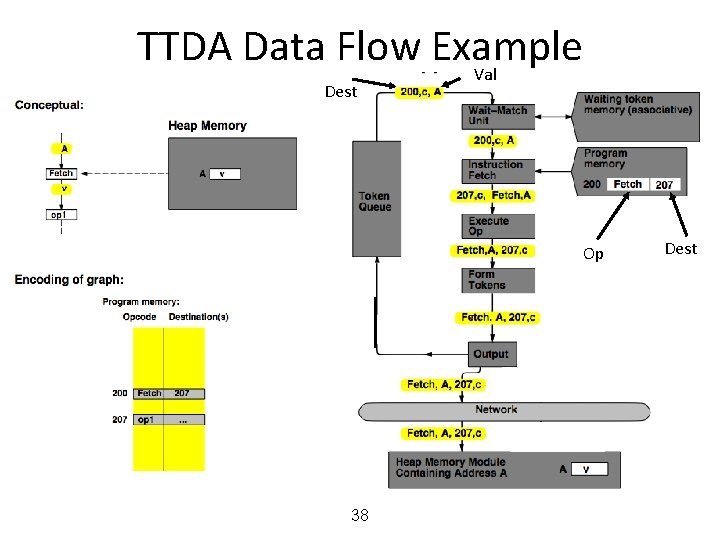


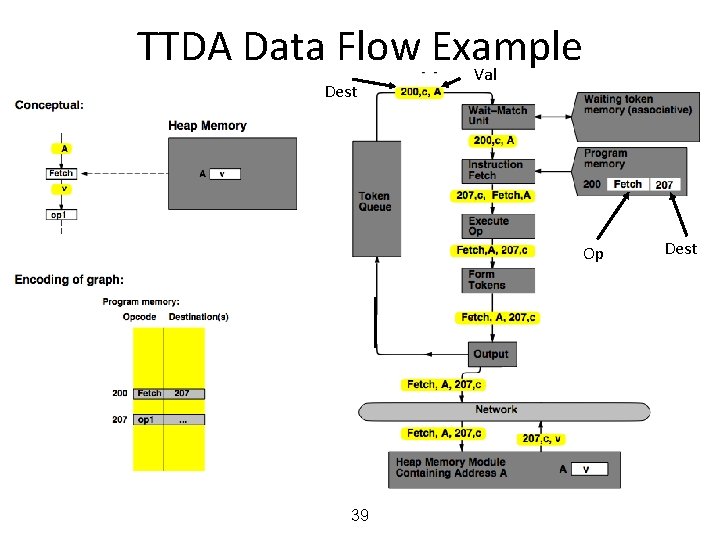

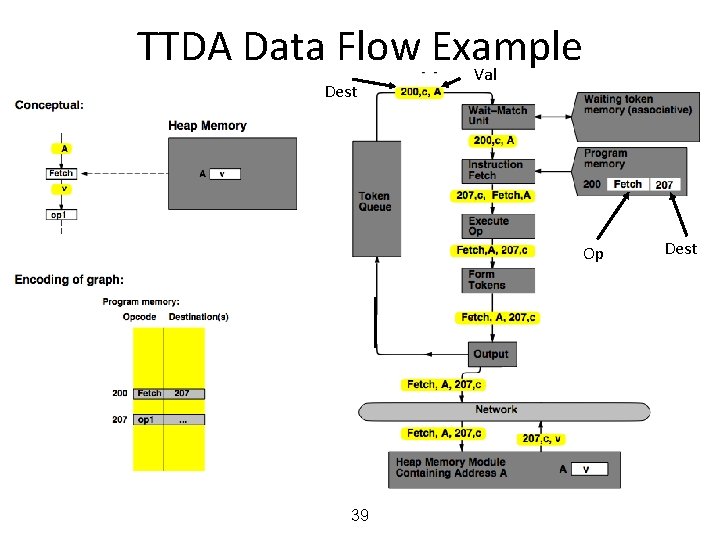


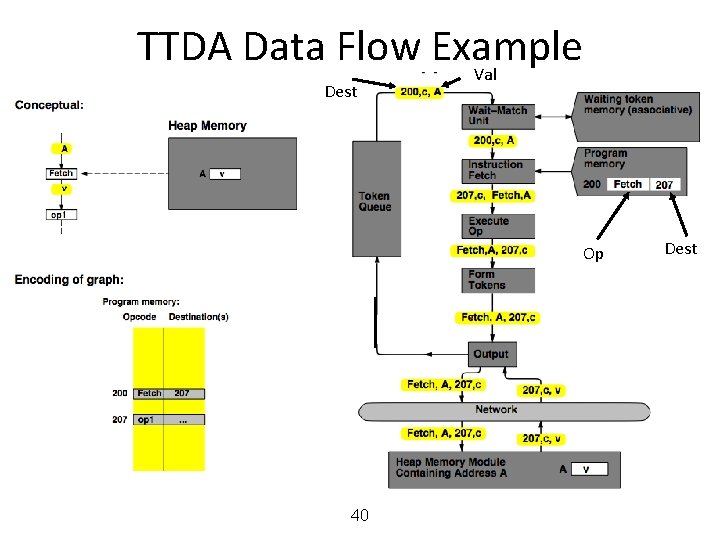

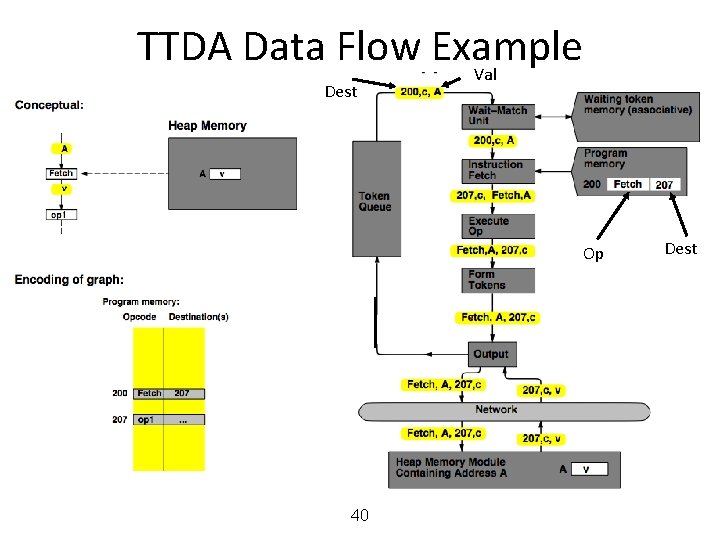


Data Flow Summary
• Availability of data determines order of execution
• A data flow node fires when its sources are ready
• Programs are represented as data flow graphs (of nodes)
• Data flow at the ISA level has not been (as) successful
• Data flow implementations under the hood (while preserving sequential ISA semantics) have been successful
  – Out-of-order execution
  – Hwu and Patt, “HPSm, a high performance restricted data flow architecture having minimal functionality,” ISCA 1986.

Data Flow Characteristics
• Data-driven execution of instruction-level graphical code
  – Nodes are operators
  – Arcs are data (I/O)
  – As opposed to control-driven execution
• Only real dependencies constrain processing
• No sequential I-stream
  – No program counter
• Operations execute asynchronously
• Execution is triggered by the presence of data
• Single assignment languages and functional programming
  – e.g., SISAL in the Manchester Data Flow Computer
  – No mutable state

Data Flow Advantages/Disadvantages
• Advantages
  – Very good at exploiting irregular parallelism
  – Only real dependencies constrain processing
• Disadvantages
  – Debugging is difficult (no precise state)
    • Interrupt/exception handling is difficult (what are precise state semantics?)
  – Implementing dynamic data structures is difficult in pure data flow models
  – Too much parallelism? (Parallelism control needed)
  – High bookkeeping overhead (tag matching, data storage)
  – The instruction cycle is inefficient (delay between dependent instructions), and memory locality is not exploited

Combining Data Flow and Control Flow
• Can we get the best of both worlds?
• Two possibilities
  – Model 1: Keep control flow at the ISA level, do dataflow underneath, preserving sequential semantics
  – Model 2: Keep the dataflow model, but incorporate control flow at the ISA level to improve efficiency, exploit locality, and ease resource management
    • Incorporate threads into dataflow: statically ordered instructions; when the first instruction is fired, the remaining instructions execute without interruption

OOO EXECUTION: RESTRICTED DATAFLOW
• An out-of-order engine dynamically builds the dataflow graph of a piece of the program
  – Which piece?
• The dataflow graph is limited to the instruction window
  – Instruction window: all decoded but not yet retired instructions
• Can we do it for the whole program?
• Why would we like to?
• In other words, how can we have a large instruction window?
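As a rough sketch of how an out-of-order engine recovers a dataflow graph, the function below walks an instruction window in program order, maps each source register to its latest producer inside the window (a simple form of renaming), and keeps only read-after-write edges. It then computes each instruction's earliest dataflow level under idealized assumptions: unit latency, unlimited functional units, and values produced before the window already available. The register-tuple encoding and the name `dataflow_levels` are hypothetical.

```python
def dataflow_levels(window):
    """Earliest dataflow 'level' of each instruction in an instruction window.

    window: list of (dest_reg, [src_regs]) in program order. Assumes unit latency,
    unlimited functional units, and that values produced before the window are ready.
    """
    producer = {}  # register -> index of the latest instruction writing it (renaming)
    levels = []
    for i, (dest, srcs) in enumerate(window):
        # Only true (read-after-write) dependencies become graph edges
        deps = [producer[r] for r in srcs if r in producer]
        levels.append(1 + max((levels[d] for d in deps), default=0))
        producer[dest] = i  # later readers of dest now depend on instruction i
    return levels


window = [
    ("r1", ["r2", "r3"]),  # r1 <- r2 op r3
    ("r4", ["r1"]),        # needs the r1 just produced
    ("r1", ["r5", "r6"]),  # reuses r1: WAR/WAW conflicts vanish after renaming
    ("r7", ["r1", "r4"]),  # needs the new r1 and r4
]
print(dataflow_levels(window))  # [1, 2, 1, 3]
```

The third instruction overwrites r1 but lands on level 1 alongside the first, because renaming removes the false dependencies; a larger window would simply expose more such independent work.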

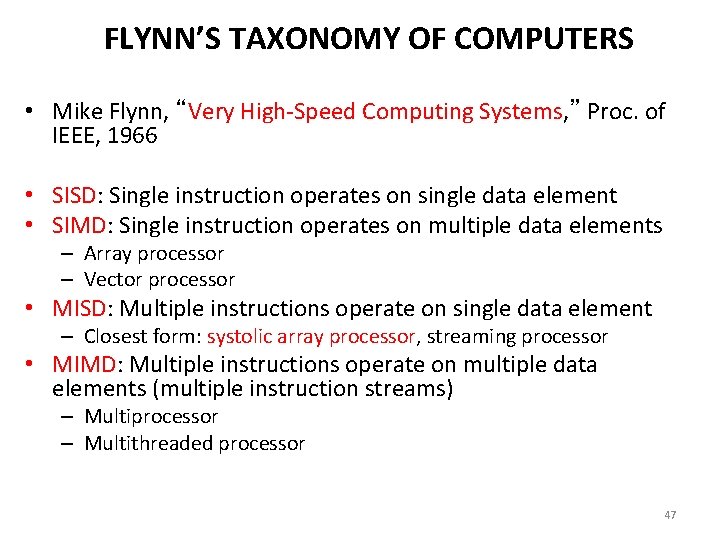

FLYNN’S TAXONOMY OF COMPUTERS
• Mike Flynn, “Very High-Speed Computing Systems,” Proc. of IEEE, 1966
• SISD: Single instruction operates on single data element
• SIMD: Single instruction operates on multiple data elements
  – Array processor
  – Vector processor
• MISD: Multiple instructions operate on single data element
  – Closest form: systolic array processor, streaming processor
• MIMD: Multiple instructions operate on multiple data elements (multiple instruction streams)
  – Multiprocessor
  – Multithreaded processor
