Advanced Computer Architecture 5MD00: Exploiting ILP


Advanced Computer Architecture 5MD00: Exploiting ILP with SW approaches. Henk Corporaal, www.ics.ele.tue.nl/~heco, TU Eindhoven, December 2012

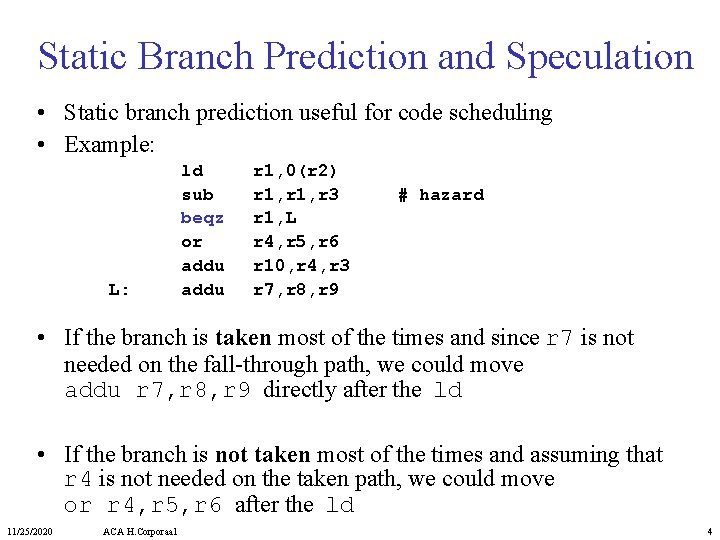

Topics

- Static branch prediction and speculation
- Basic compiler techniques
- Multiple issue architectures
- Advanced compiler support techniques
  - Loop-level parallelism
  - Software pipelining
- Hardware support for compile-time scheduling

11/25/2020 ACA H. Corporaal 2

We previously discussed dynamic branch prediction. This does not help the compiler! Should the compiler speculate operations (i.e., move operations above a branch) from the branch target or from the fall-through path?

- We need static branch prediction.

Static Branch Prediction and Speculation

- Static branch prediction is useful for code scheduling
- Example:

```
    ld   r1, 0(r2)
    sub  r1, r1, r3     # hazard
    beqz r1, L
    or   r4, r5, r6
    addu r10, r4, r3
L:  addu r7, r8, r9
```

- If the branch is taken most of the time, and since r7 is not needed on the fall-through path, we could move `addu r7, r8, r9` directly after the `ld`
- If the branch is not taken most of the time, and assuming that r4 is not needed on the taken path, we could move `or r4, r5, r6` after the `ld`

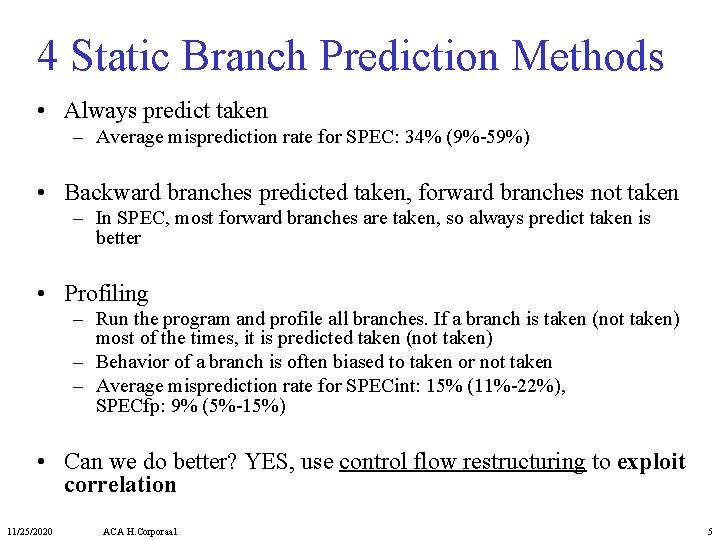

4 Static Branch Prediction Methods

- Always predict taken
  - Average misprediction rate for SPEC: 34% (9%-59%)
- Backward branches predicted taken, forward branches predicted not taken
  - In SPEC, most forward branches are taken, so always predicting taken is better
- Profiling
  - Run the program and profile all branches. If a branch is taken (not taken) most of the time, it is predicted taken (not taken)
  - The behavior of a branch is often biased towards taken or not taken
  - Average misprediction rate for SPECint: 15% (11%-22%), SPECfp: 9% (5%-15%)
- Can we do better? Yes: use control flow restructuring to exploit correlation
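To make the three static schemes concrete, the sketch below counts mispredictions on a branch trace. It is a minimal sketch under stated assumptions: the trace format (`BranchEvent`) and the function names are hypothetical, each event records whether the branch jumps backward, and the profile predictor is trained on the same trace it is scored on, so its result is optimistic.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* One dynamic branch outcome from a hypothetical profile trace. */
typedef struct {
    int  id;        /* static branch identifier */
    bool backward;  /* target lies before the branch (e.g. a loop branch) */
    bool taken;     /* actual outcome */
} BranchEvent;

/* "Always predict taken": every not-taken outcome is a miss. */
size_t mispredict_always_taken(const BranchEvent *t, size_t n) {
    size_t miss = 0;
    for (size_t i = 0; i < n; i++)
        if (!t[i].taken) miss++;
    return miss;
}

/* Backward taken, forward not taken (BTFN). */
size_t mispredict_btfn(const BranchEvent *t, size_t n) {
    size_t miss = 0;
    for (size_t i = 0; i < n; i++)
        if (t[i].taken != t[i].backward) miss++;
    return miss;
}

/* Profile-based: each static branch is predicted in its majority direction
   (ties predict taken), using counts gathered from the same trace. */
#define MAX_BRANCH_IDS 64
size_t mispredict_profile(const BranchEvent *t, size_t n) {
    size_t taken[MAX_BRANCH_IDS] = {0}, total[MAX_BRANCH_IDS] = {0};
    for (size_t i = 0; i < n; i++) {
        assert(t[i].id >= 0 && t[i].id < MAX_BRANCH_IDS);
        total[t[i].id]++;
        if (t[i].taken) taken[t[i].id]++;
    }
    size_t miss = 0;
    for (size_t i = 0; i < n; i++) {
        bool predict_taken = 2 * taken[t[i].id] >= total[t[i].id];
        if (predict_taken != t[i].taken) miss++;
    }
    return miss;
}
```

On a toy trace with one loop branch (taken 3 of 4 times) and one mostly-taken forward branch (taken 2 of 3 times), always-taken and profiling each miss 2 outcomes while BTFN misses 3, because the forward branch is usually taken.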

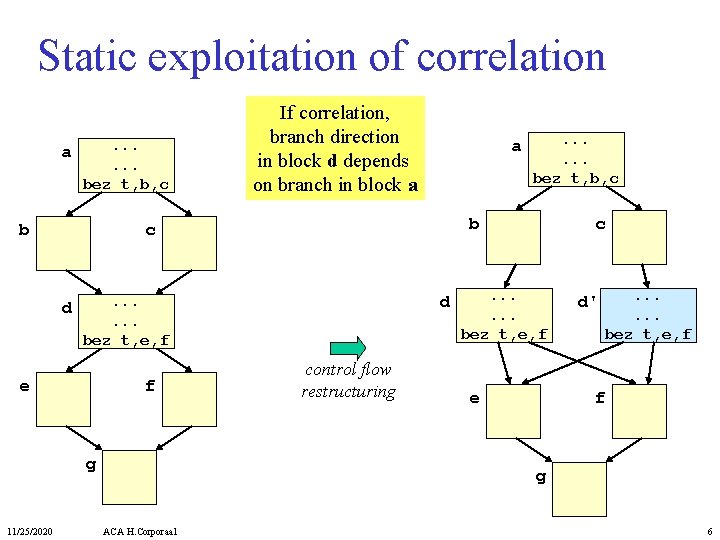

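Static exploitation of correlation

(Figure: blocks a and d both end with a `bez t, ...` branch on the same t. Before restructuring, a branches to b or c, which join in d; d branches to e or f, which join in g. If the branches are correlated, the branch direction in block d depends on the branch in block a. Control flow restructuring duplicates d into d and d', so the path through b continues in d and the path through c in d', and each copy of the branch in d can then be predicted statically.)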
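In source terms, the restructuring can be sketched as below, assuming (hypothetically) that blocks b, c, d, e and f are simple integer updates and that d does not modify t. Duplicating d gives each path its own copy of the second test; here the correlation is perfect, so each copy's outcome is known in advance and the test can even be dropped.

```c
#include <assert.h>

/* Original: two branches on the same condition t, separated by a join (block d).
   The block bodies are hypothetical placeholders. */
int original(int t, int x) {
    if (t == 0) x += 1;      /* block b */
    else        x -= 1;      /* block c */
    x *= 2;                  /* block d (join) */
    if (t == 0) x += 10;     /* block e */
    else        x -= 10;     /* block f */
    return x;                /* block g */
}

/* Restructured: d is duplicated (d and d') so each copy lies on a path where
   the second test's outcome is already determined by the first one. */
int restructured(int t, int x) {
    if (t == 0) { x += 1; x *= 2; x += 10; }   /* b, d,  e */
    else        { x -= 1; x *= 2; x -= 10; }   /* c, d', f */
    return x;                                   /* g */
}

/* Exhaustively compares both versions over a small input range. */
void check_equivalence(void) {
    for (int t = 0; t <= 1; t++)
        for (int x = -100; x <= 100; x++)
            assert(original(t, x) == restructured(t, x));
}
```

The price is code size: block d now exists twice, which is the same trade-off as the tail duplication used later for superblocks.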

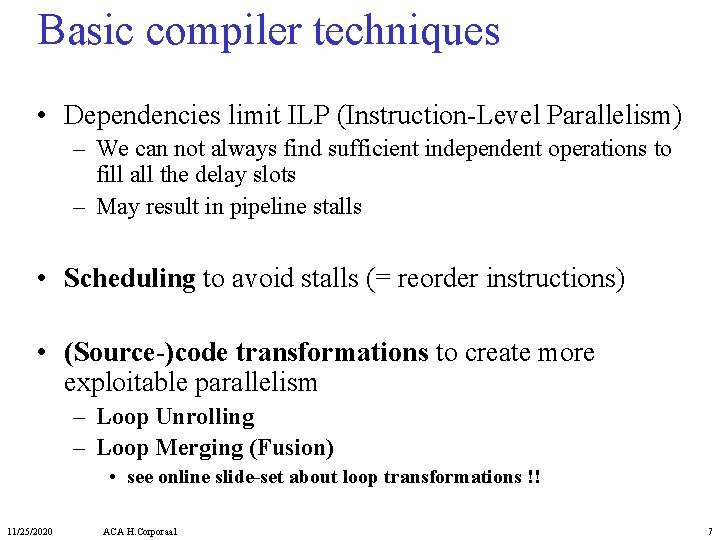

Basic compiler techniques

- Dependencies limit ILP (Instruction-Level Parallelism)
  - We cannot always find sufficient independent operations to fill all the delay slots
  - This may result in pipeline stalls
- Scheduling to avoid stalls (= reorder instructions)
- (Source-)code transformations to create more exploitable parallelism
  - Loop unrolling
  - Loop merging (fusion)
  - See the online slide set about loop transformations!

![Slide: Dependencies Limit ILP, example C loop for (i=1; i<=1000; i++) x[i] = x[i] + s;](https://slidetodoc.com/presentation_image_h/9e100290554bb2e73130704886e28cd7/image-8.jpg)

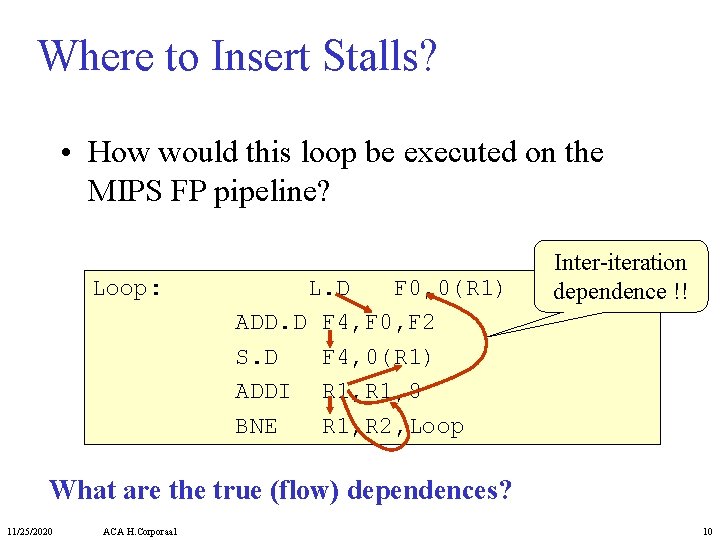

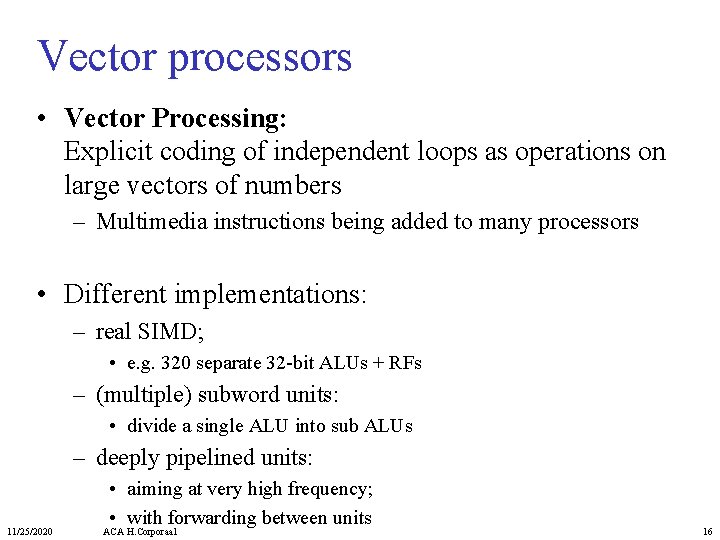

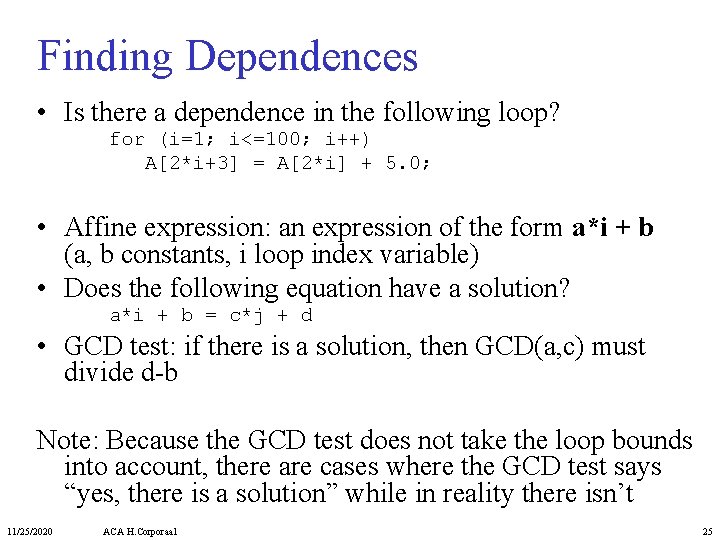

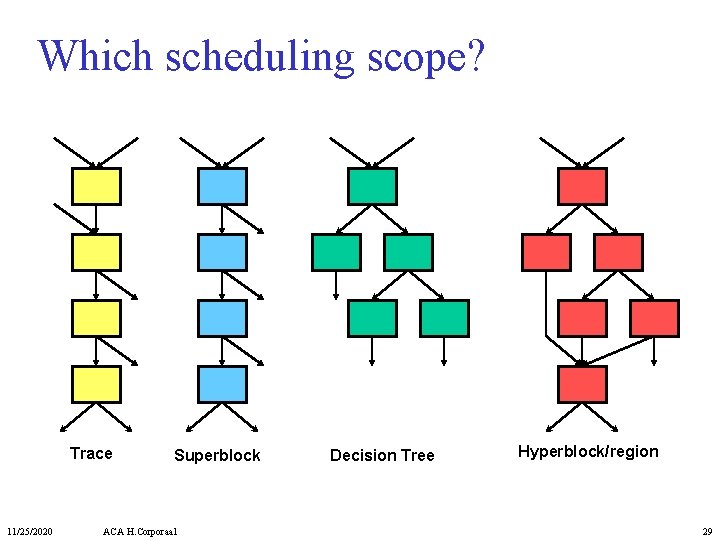

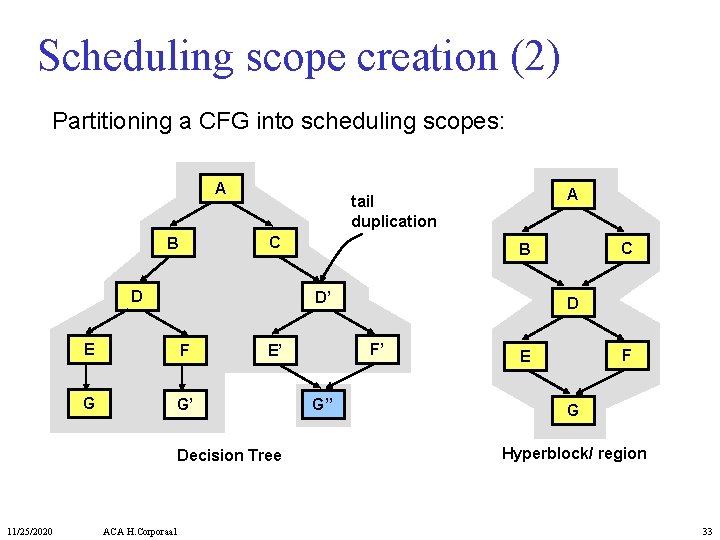

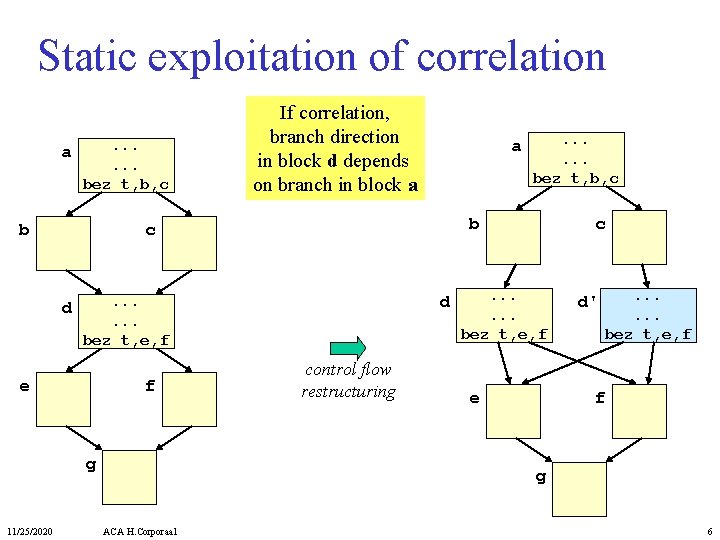

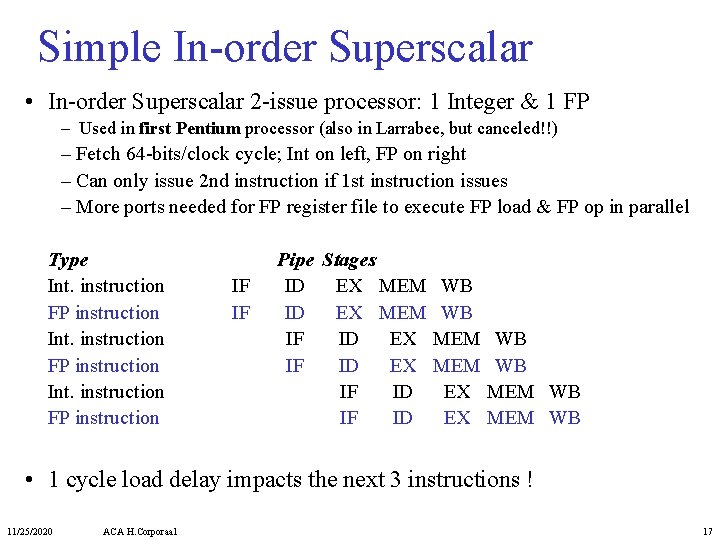

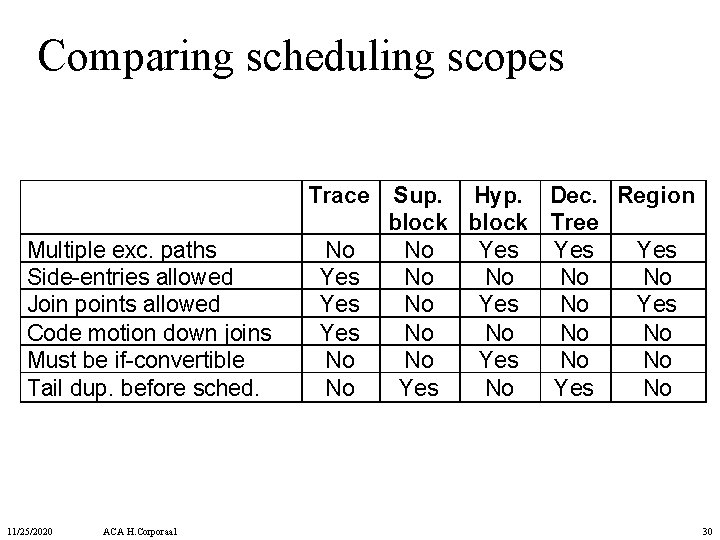

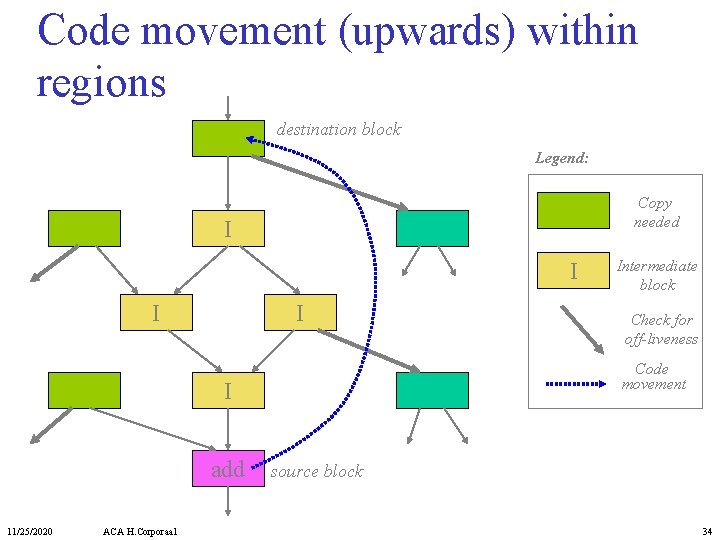

Dependencies Limit ILP: Example

C loop:

```c
for (i=1; i<=1000; i++)
    x[i] = x[i] + s;
```

MIPS assembly code:

```
; R1 = &x[1]
; R2 = &x[1000]+8
; F2 = s
Loop: L.D   F0, 0(R1)      ; F0 = x[i]
      ADD.D F4, F0, F2     ; F4 = x[i]+s
      S.D   0(R1), F4      ; x[i] = F4
      ADDI  R1, R1, 8      ; R1 = &x[i+1]
      BNE   R1, R2, Loop   ; branch if R1 != &x[1000]+8
```
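The assembly walks a pointer rather than an index. A C rendering of that exact structure (a bottom-tested loop, R1 as a `double *`, R2 one element past the end) could look like the sketch below; the function name and the `last` parameter are hypothetical, and `x` must have `last + 1` elements because the loop starts at index 1, as in the C source.

```c
#include <assert.h>
#include <stddef.h>

/* Mirrors the MIPS loop: r1 walks from &x[1] to one past &x[last];
   one pointer step of a double is the 8-byte ADDI of the assembly. */
void add_scalar(double *x, size_t last, double s) {
    assert(x != NULL && last >= 1);
    double *r1 = &x[1];                /* R1 = &x[1]                      */
    double *const r2 = &x[last] + 1;   /* R2 = &x[last] + 8 bytes          */
    do {
        double f0 = *r1;               /* L.D   F0, 0(R1)                  */
        double f4 = f0 + s;            /* ADD.D F4, F0, F2   (F2 holds s)  */
        *r1 = f4;                      /* S.D   0(R1), F4                  */
        r1 += 1;                       /* ADDI  R1, R1, 8                  */
    } while (r1 != r2);                /* BNE   R1, R2, Loop               */
}
```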

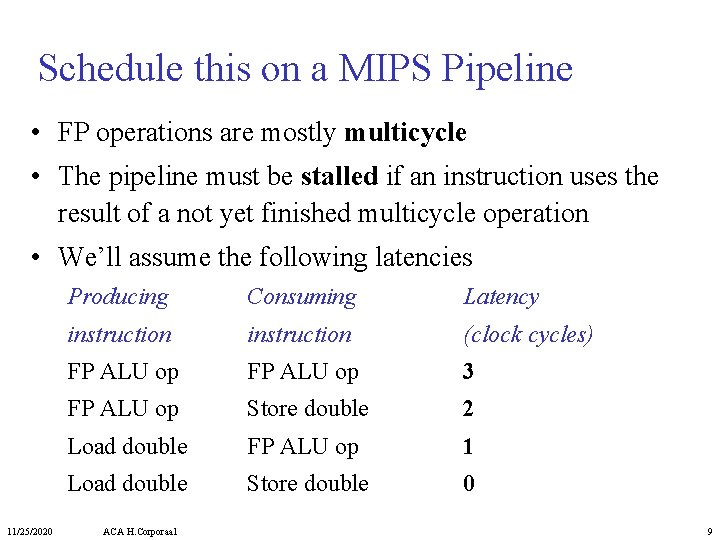

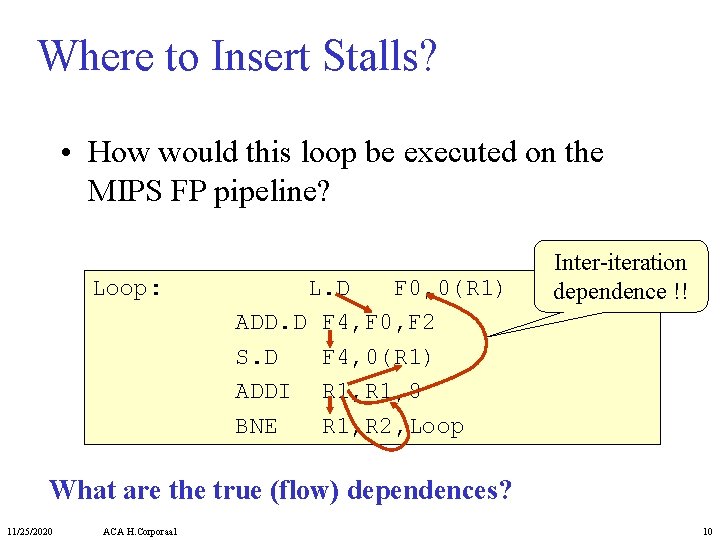

Schedule this on a MIPS Pipeline

- FP operations are mostly multicycle
- The pipeline must be stalled if an instruction uses the result of a not yet finished multicycle operation
- We'll assume the following latencies (the number of clock cycles that must separate the producing and the consuming instruction to avoid a stall):

| Producing instruction | Consuming instruction | Latency (clock cycles) |
|---|---|---|
| FP ALU op | FP ALU op | 3 |
| FP ALU op | Store double | 2 |
| Load double | FP ALU op | 1 |
| Load double | Store double | 0 |

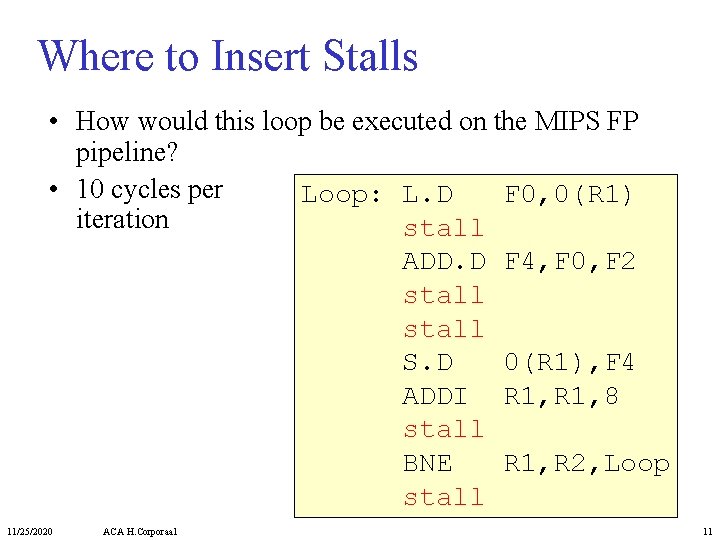

Where to Insert Stalls?

- How would this loop be executed on the MIPS FP pipeline?

```
Loop: L.D   F0, 0(R1)
      ADD.D F4, F0, F2
      S.D   F4, 0(R1)
      ADDI  R1, R1, 8
      BNE   R1, R2, Loop
```

- Inter-iteration dependence!! (R1 is updated by ADDI and used by the next iteration)
- What are the true (flow) dependences?

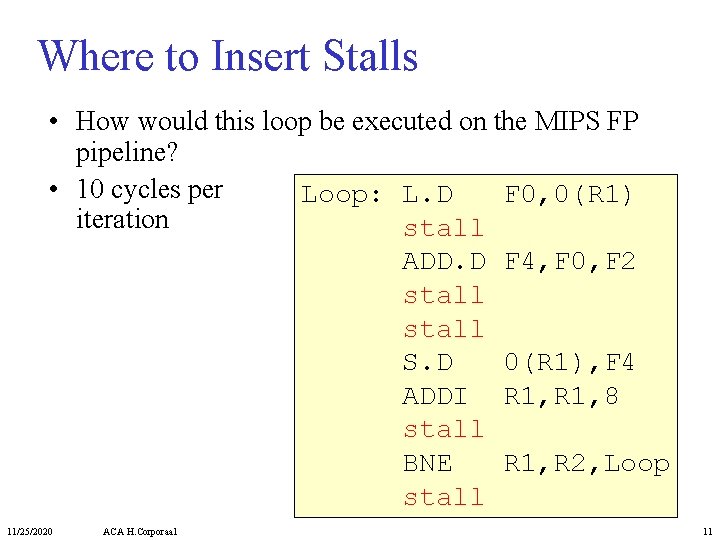

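Where to Insert Stalls

- How would this loop be executed on the MIPS FP pipeline?
- 10 cycles per iteration:

```
Loop: L.D   F0, 0(R1)
      stall
      ADD.D F4, F0, F2
      stall
      stall
      S.D   0(R1), F4
      ADDI  R1, R1, 8
      stall
      BNE   R1, R2, Loop
      stall
```

- The stalls after L.D and ADD.D follow the latency table; the stall before BNE waits for R1 from ADDI, and the last stall is the unfilled branch delay slot.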
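The stall count can be reproduced with a tiny in-order, single-issue model: an instruction issues one cycle after its predecessor, or later if its producer's latency has not yet elapsed. This is a sketch under assumptions: each instruction tracks only one producer, the INT ALU to branch latency of 1 is not in the table above and is assumed here, and an unfilled branch delay slot costs one extra cycle. The type and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Instruction classes that appear in the latency table. */
typedef enum { LOAD_D, STORE_D, FP_ALU, INT_ALU, BRANCH } Kind;

typedef struct {
    Kind kind;
    int  src;   /* index of the producing instruction in this sequence, or -1 */
} Instr;

/* Cycles that must separate producer and consumer. The first three entries
   come from the table (load -> store is 0); INT_ALU -> BRANCH = 1 is an
   assumption; every other pair is 0. */
static int latency(Kind prod, Kind cons) {
    if (prod == FP_ALU  && cons == FP_ALU)  return 3;
    if (prod == FP_ALU  && cons == STORE_D) return 2;
    if (prod == LOAD_D  && cons == FP_ALU)  return 1;
    if (prod == INT_ALU && cons == BRANCH)  return 1;
    return 0;
}

/* In-order single issue: returns the issue cycle of the last instruction,
   plus one if the branch delay slot is left empty. */
int cycles_per_iteration(const Instr *code, size_t n, int delay_slot_filled) {
    assert(n > 0 && n <= 64);
    int issue[64];
    for (size_t i = 0; i < n; i++) {
        int t = (i == 0) ? 1 : issue[i - 1] + 1;
        if (code[i].src >= 0) {
            int p = code[i].src;
            assert(p < (int)i);
            int ready = issue[p] + 1 + latency(code[p].kind, code[i].kind);
            if (ready > t) t = ready;
        }
        issue[i] = t;
    }
    return issue[n - 1] + (delay_slot_filled ? 0 : 1);
}
```

With the loop in its original order the model gives 10 cycles; with the reordered loop of "Code Scheduling to Avoid Stalls" (L.D, ADDI, ADD.D, BNE, and S.D in the delay slot) it gives 6.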

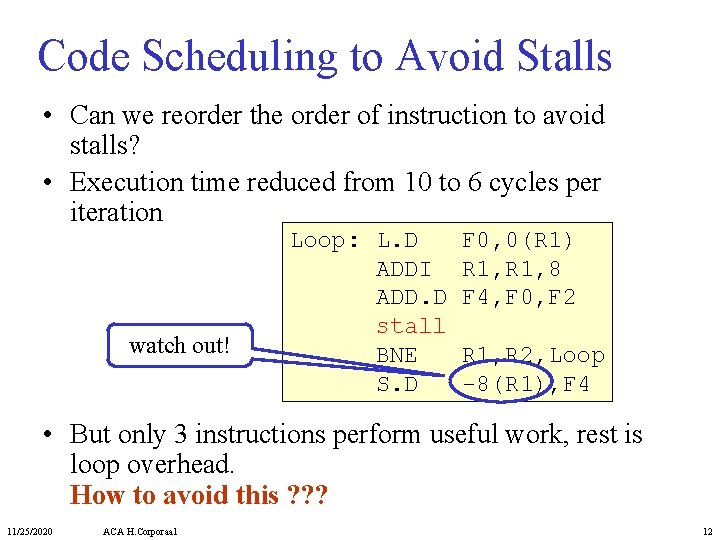

Code Scheduling to Avoid Stalls

- Can we reorder the instructions to avoid stalls?
- Execution time is reduced from 10 to 6 cycles per iteration:

```
Loop: L.D   F0, 0(R1)
      ADDI  R1, R1, 8
      ADD.D F4, F0, F2
      stall
      BNE   R1, R2, Loop
      S.D   -8(R1), F4
```

- Watch out! The S.D now executes after the ADDI, so its offset changes from 0 to -8.
- But only 3 instructions perform useful work; the rest is loop overhead. How can we avoid this?

![Slide: Loop Unrolling: increasing ILP, at source level for (i=1; i<=1000; i++) x[i] = x[i] + s;](https://slidetodoc.com/presentation_image_h/9e100290554bb2e73130704886e28cd7/image-13.jpg)

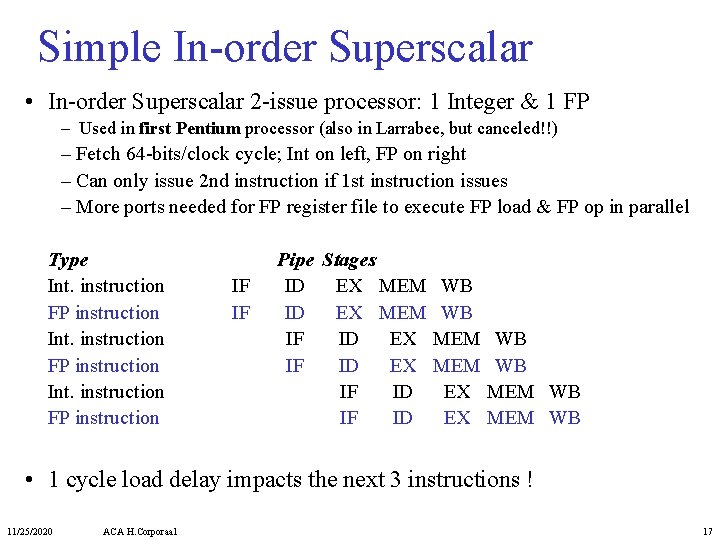

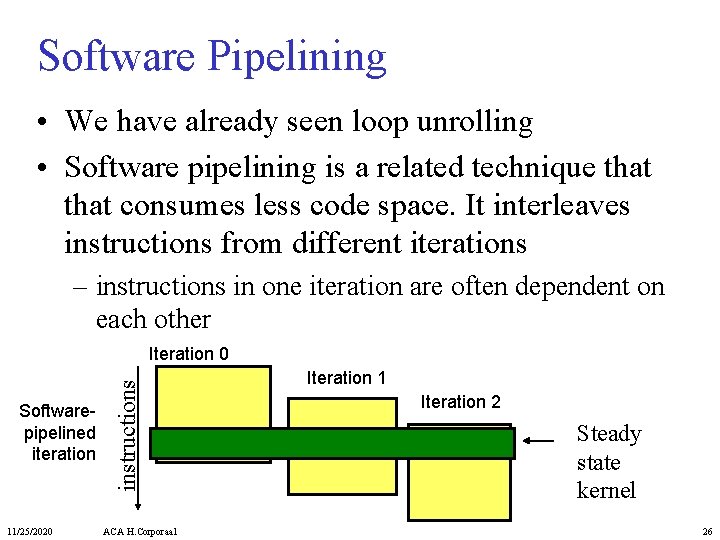

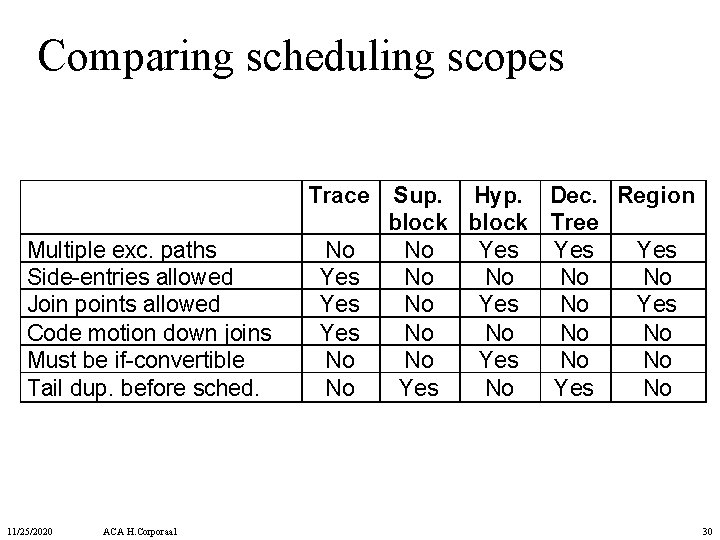

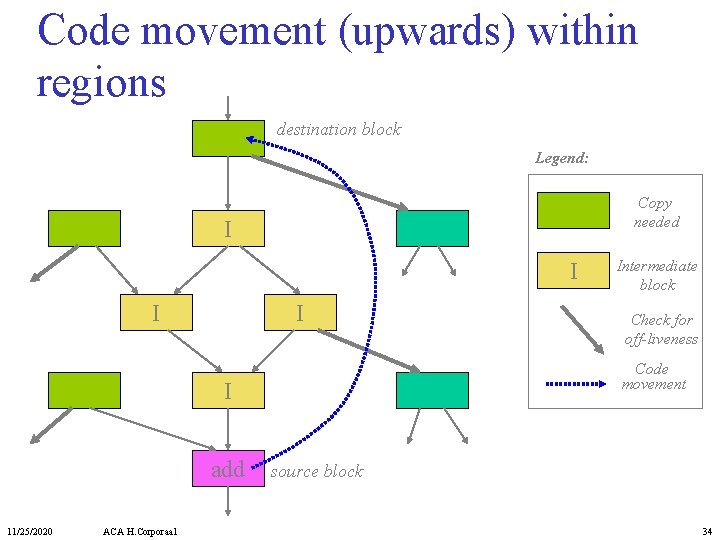

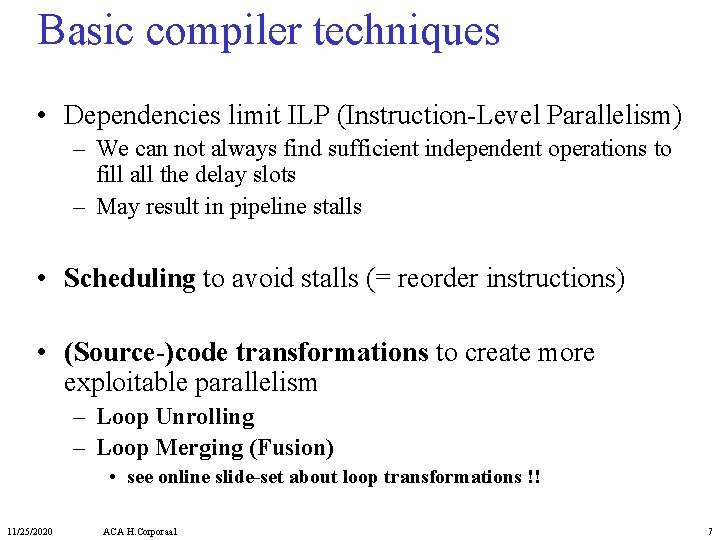

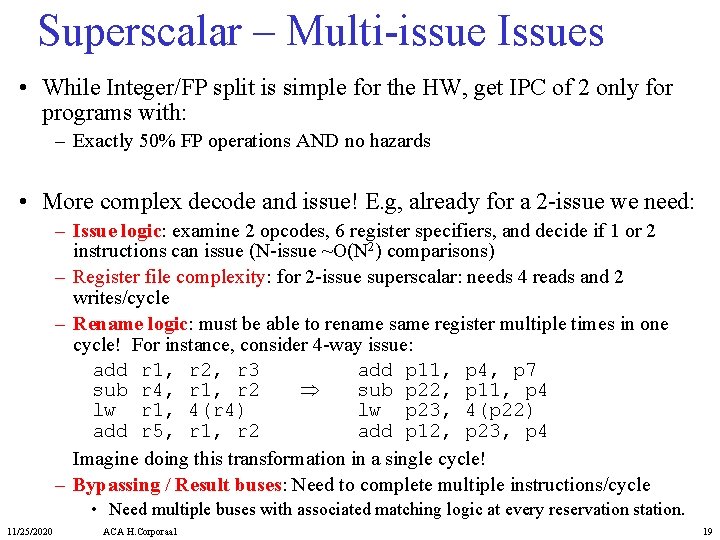

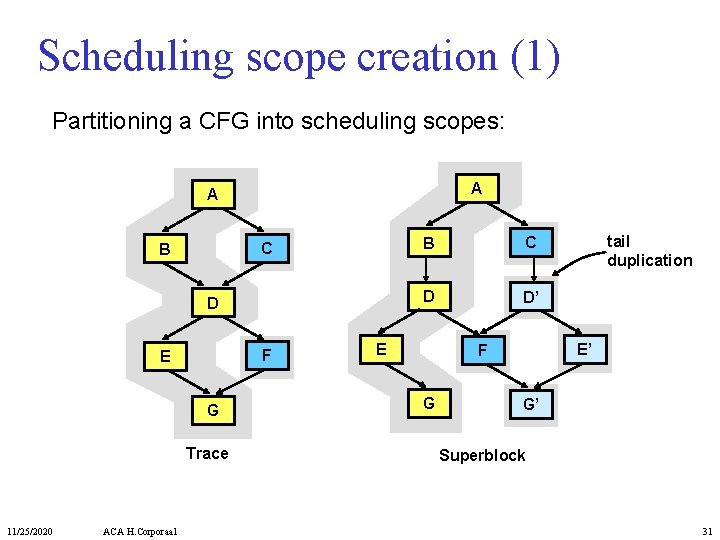

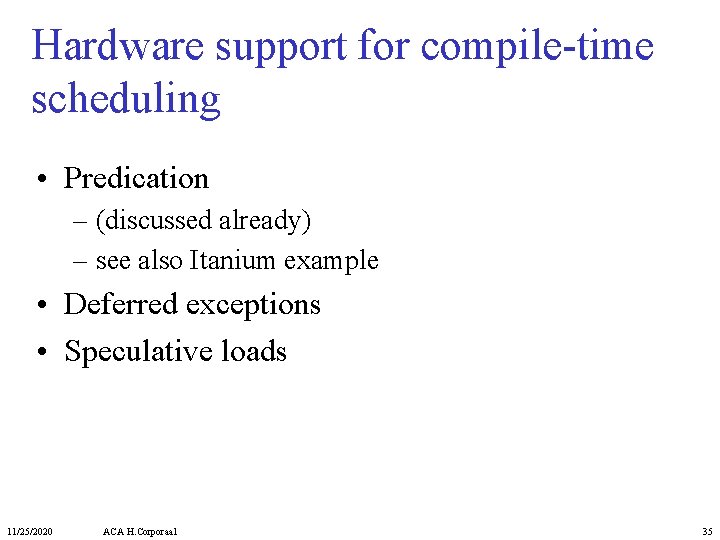

Loop Unrolling: increasing ILP

At source level:

```c
for (i=1; i<=1000; i++)
    x[i] = x[i] + s;
```

becomes

```c
for (i=1; i<=1000; i=i+4) {
    x[i]   = x[i]   + s;
    x[i+1] = x[i+1] + s;
    x[i+2] = x[i+2] + s;
    x[i+3] = x[i+3] + s;
}
```

MIPS code after scheduling (no stalls: 14 cycles for 4 elements, i.e. 3.5 cycles per element):

```
Loop: L.D   F0, 0(R1)
      L.D   F6, 8(R1)
      L.D   F10, 16(R1)
      L.D   F14, 24(R1)
      ADD.D F4, F0, F2
      ADD.D F8, F6, F2
      ADD.D F12, F10, F2
      ADD.D F16, F14, F2
      S.D   0(R1), F4
      S.D   8(R1), F8
      ADDI  R1, R1, 32
      S.D   -16(R1), F12
      BNE   R1, R2, Loop
      S.D   -8(R1), F16
```

Any drawbacks?

- loop unrolling increases code size
- more registers are needed
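At source level, the same transformation for an arbitrary trip count needs a clean-up loop; the slide's 1000 iterations happen to be divisible by 4. A minimal sketch with 0-based indexing and a hypothetical function name:

```c
#include <assert.h>
#include <stddef.h>

/* x[i] += s for i in [0, n), unrolled by 4. The four updates in the body are
   independent, which gives the scheduler room to interleave loads, adds and
   stores; the trailing loop handles the (at most 3) leftover elements. */
void add_scalar_unrolled4(double *x, size_t n, double s) {
    assert(x != NULL || n == 0);
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        x[i]     = x[i]     + s;
        x[i + 1] = x[i + 1] + s;
        x[i + 2] = x[i + 2] + s;
        x[i + 3] = x[i + 3] + s;
    }
    for (; i < n; i++)          /* remainder loop */
        x[i] = x[i] + s;
}
```

Writing `i + 4 <= n` instead of `i < n - 3` avoids unsigned wrap-around when `n < 3`.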

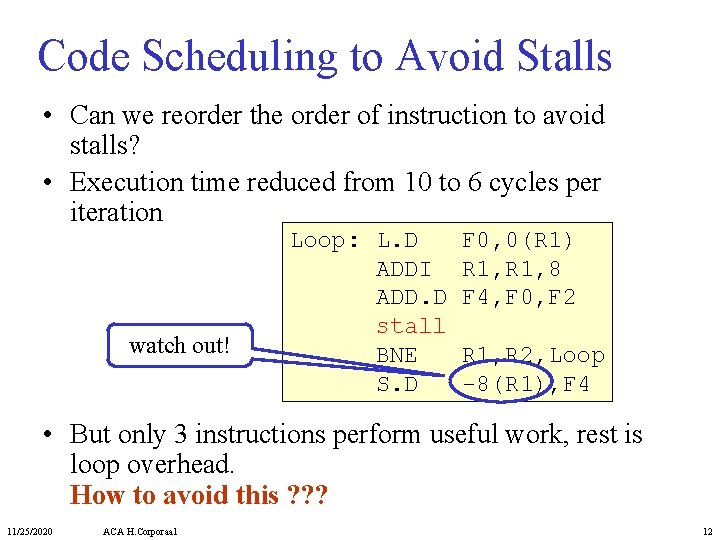

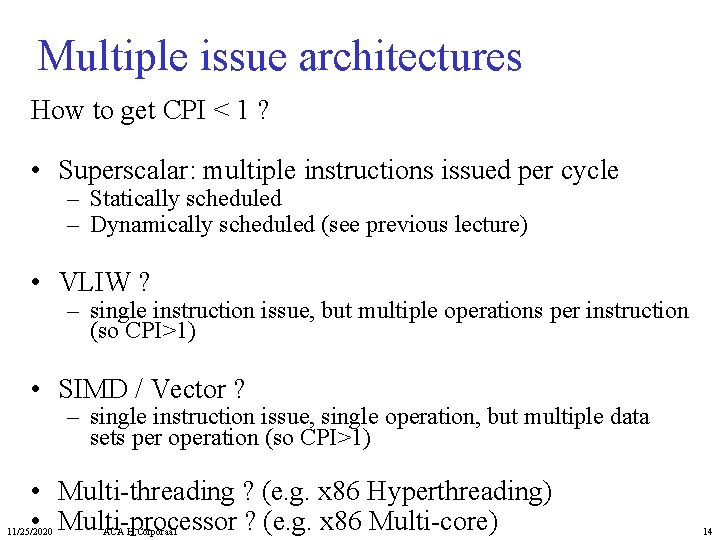

Multiple issue architectures

How to get CPI < 1?

- Superscalar: multiple instructions issued per cycle
  - Statically scheduled
  - Dynamically scheduled (see previous lecture)
- VLIW?
  - single instruction issue, but multiple operations per instruction (so CPI ≥ 1, but more than one operation per cycle)
- SIMD / Vector?
  - single instruction issue, single operation, but multiple data sets per operation (so CPI ≥ 1, but more than one data element per cycle)
- Multi-threading? (e.g. x86 Hyperthreading)
- Multi-processor? (e.g. x86 multi-core)

Instruction-Level Parallel (ILP) Processors

The name ILP is used for:

- Multiple-issue processors
  - Superscalar: varying number of instructions/cycle (0 to 8), scheduled by HW (dynamic issue capability)
    - IBM PowerPC, Sun UltraSparc, DEC Alpha, Pentium III/4, etc.
  - VLIW (very long instruction word): fixed number of instructions (4-16), scheduled by the compiler (static issue capability)
    - Intel Architecture-64 (IA-64, Itanium), TriMedia, TI C6x
- (Super-)pipelined processors

The anticipated success of multiple issue led to the Instructions Per Cycle (IPC) metric instead of CPI.

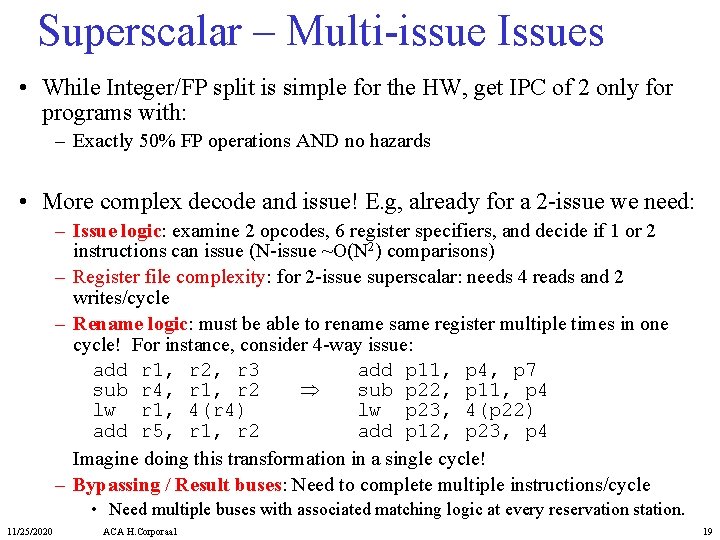

Vector processors

- Vector processing: explicit coding of independent loops as operations on large vectors of numbers
  - Multimedia instructions are being added to many processors
- Different implementations:
  - real SIMD
    - e.g. 320 separate 32-bit ALUs + RFs
  - (multiple) subword units
    - divide a single ALU into sub-ALUs
  - deeply pipelined units
    - aiming at very high frequency
    - with forwarding between units
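A subword unit can be imitated in software, a trick often called SWAR (SIMD within a register). The sketch below illustrates the idea only, not how any particular processor implements it: it adds four unsigned 8-bit lanes with a single 32-bit addition while keeping carries from crossing lane boundaries. The function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Adds four unsigned 8-bit lanes packed in 32-bit words, each lane wrapping
   modulo 256. Clearing the top bit of every lane first means the low 7 bits
   of two lanes sum to at most 0xFE, so no carry can leave a lane; the top
   bits are then added without carry-out via XOR. */
uint32_t add_u8x4(uint32_t a, uint32_t b) {
    const uint32_t low7 = 0x7F7F7F7Fu;   /* low 7 bits of each lane */
    const uint32_t high = 0x80808080u;   /* top bit of each lane    */
    uint32_t sum_low = (a & low7) + (b & low7);
    return sum_low ^ ((a ^ b) & high);
}

/* Lane-by-lane reference implementation, for comparison. */
uint32_t add_u8x4_ref(uint32_t a, uint32_t b) {
    uint32_t r = 0;
    for (int lane = 0; lane < 4; lane++) {
        uint32_t sa = (a >> (8 * lane)) & 0xFFu;
        uint32_t sb = (b >> (8 * lane)) & 0xFFu;
        r |= ((sa + sb) & 0xFFu) << (8 * lane);
    }
    return r;
}
```

Hardware subword units get the same effect more directly by cutting the carry chain at lane boundaries.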

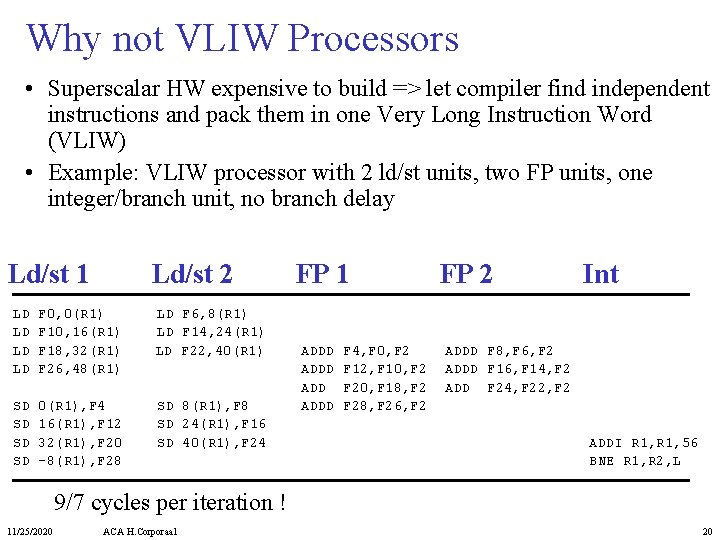

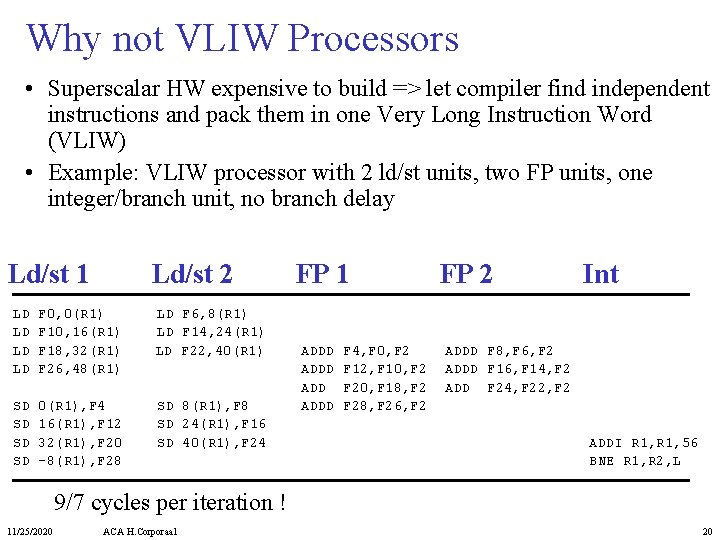

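Simple In-order Superscalar

- In-order superscalar 2-issue processor: 1 integer & 1 FP instruction per cycle
  - Used in the first Pentium processor (also in Larrabee, which was canceled!)
  - Fetch 64 bits/clock cycle; Int on left, FP on right
  - Can only issue the 2nd instruction if the 1st instruction issues
  - More ports are needed for the FP register file to execute an FP load & an FP op in parallel

Pipe stages (columns are clock cycles):

| Type | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Int. instruction | IF | ID | EX | MEM | WB | |
| FP instruction | IF | ID | EX | MEM | WB | |
| Int. instruction | | IF | ID | EX | MEM | WB |
| FP instruction | | IF | ID | EX | MEM | WB |

- A 1-cycle load delay impacts the next 3 instructions (the other instruction of the load's pair and both instructions of the next pair)!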
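The pairing rule can be sketched as a cycle counter. Assumptions, repeated in the code: data hazards are ignored, and two instructions issue together only when an integer instruction is directly followed by an FP instruction (integer slot left, FP slot right); real dual-issue machines add further constraints, such as alignment within the 64-bit fetch. The names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { INT_OP, FP_OP } Slot;

/* Issue cycles for an in-order 2-issue machine with one integer (left) and
   one FP (right) slot. Assumption: data hazards are ignored, and a pair
   issues only as INT followed by FP in program order; anything else issues
   alone, and the 2nd instruction never issues ahead of the 1st. */
size_t issue_cycles(const Slot *prog, size_t n) {
    assert(prog != NULL || n == 0);
    size_t cycles = 0, i = 0;
    while (i < n) {
        if (i + 1 < n && prog[i] == INT_OP && prog[i + 1] == FP_OP)
            i += 2;        /* INT + FP issue in the same cycle */
        else
            i += 1;        /* issue alone */
        cycles++;
    }
    return cycles;
}
```

This also shows why the integer/FP mix matters: a run of integer-only code never pairs and runs at one instruction per cycle.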

![Slide: Dynamic trace for unrolled code for (i=1; i<=1000; i++) a[i] = a[i]+s;](https://slidetodoc.com/presentation_image_h/9e100290554bb2e73130704886e28cd7/image-18.jpg)

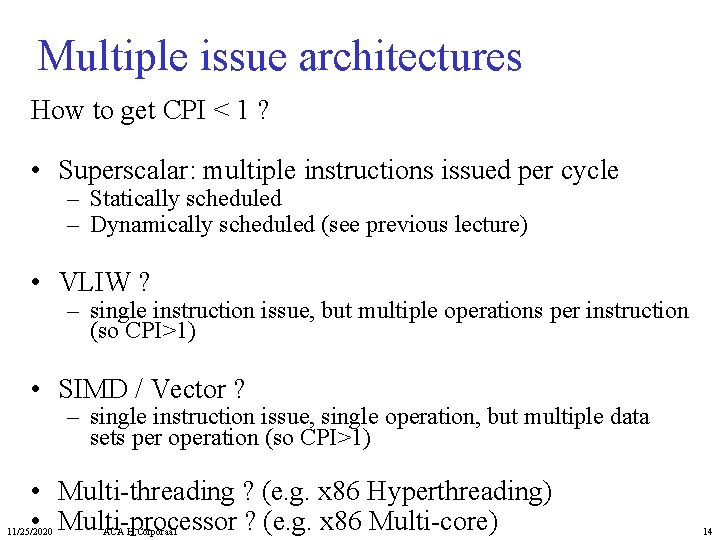

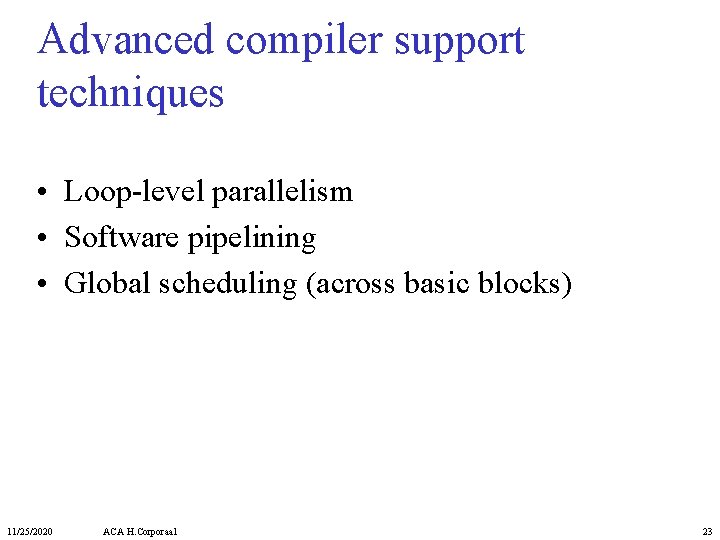

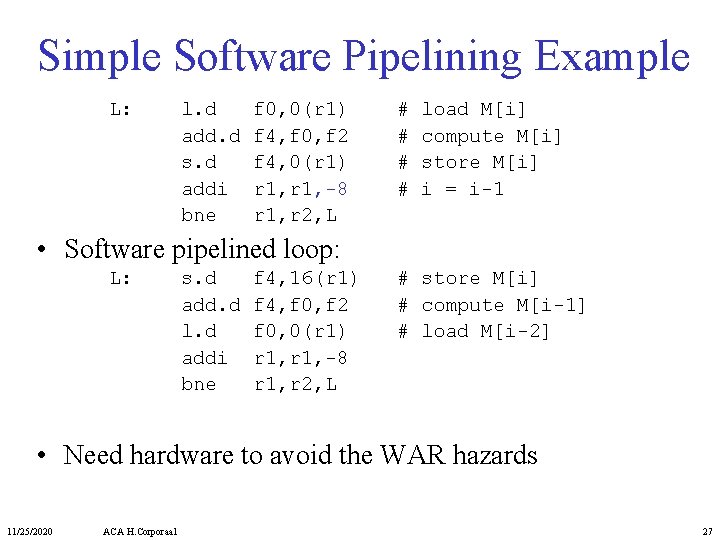

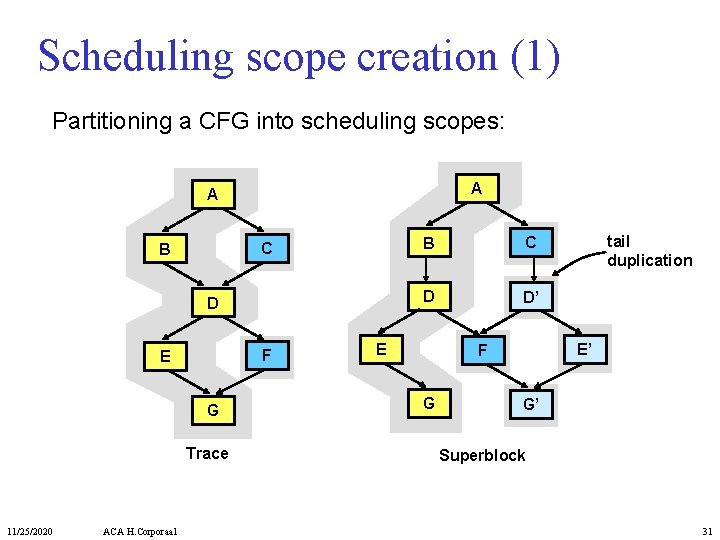

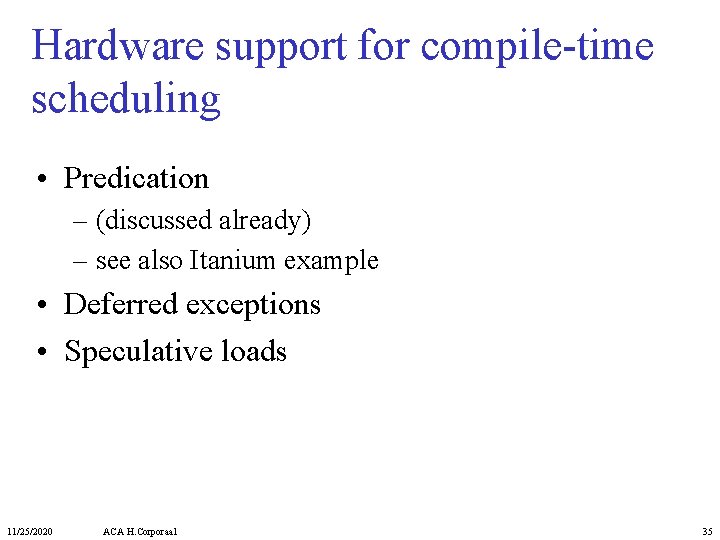

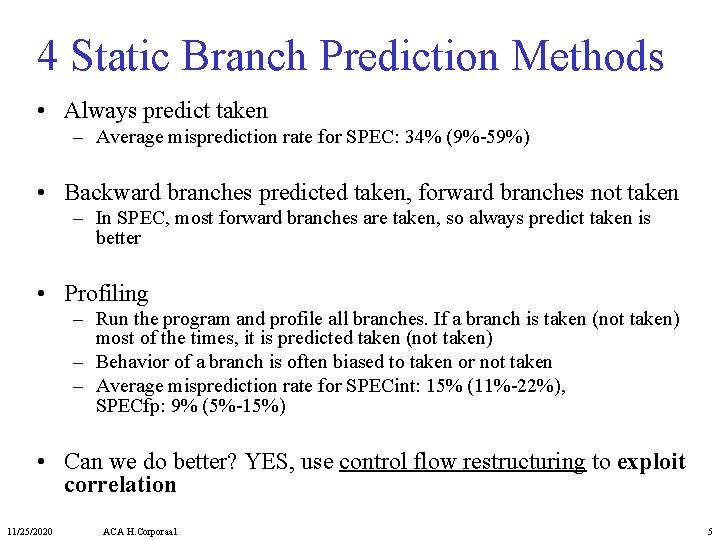

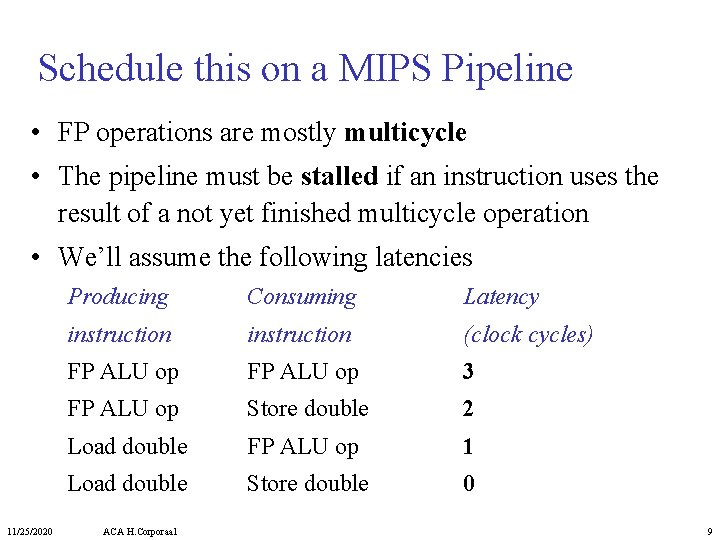

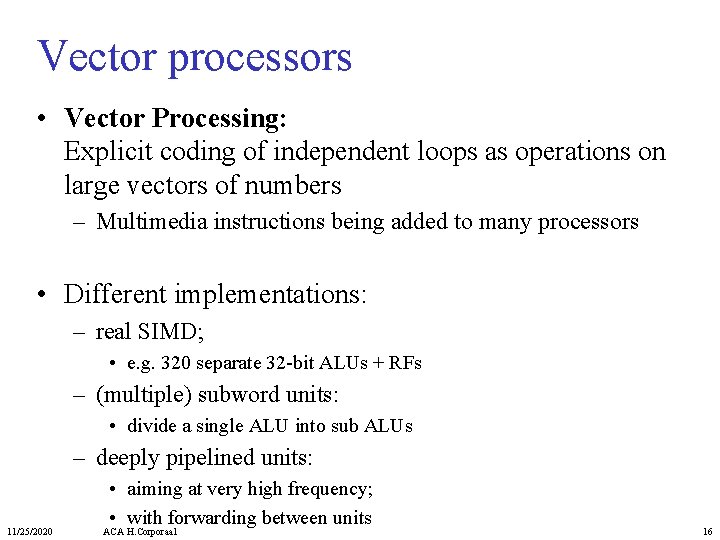

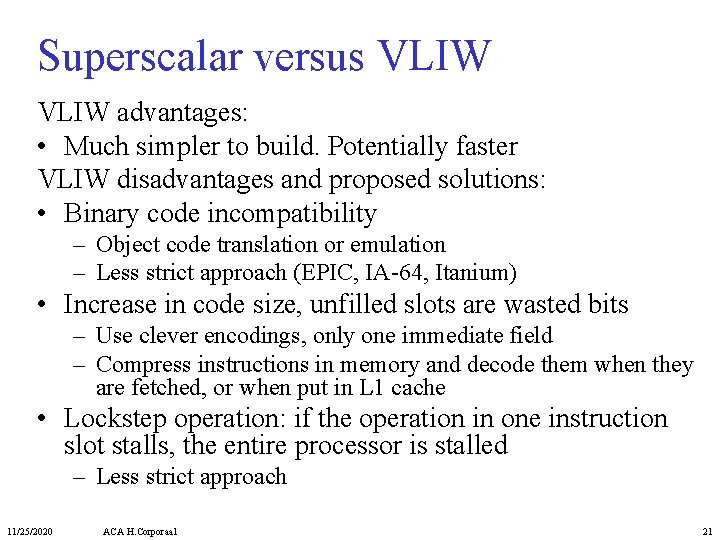

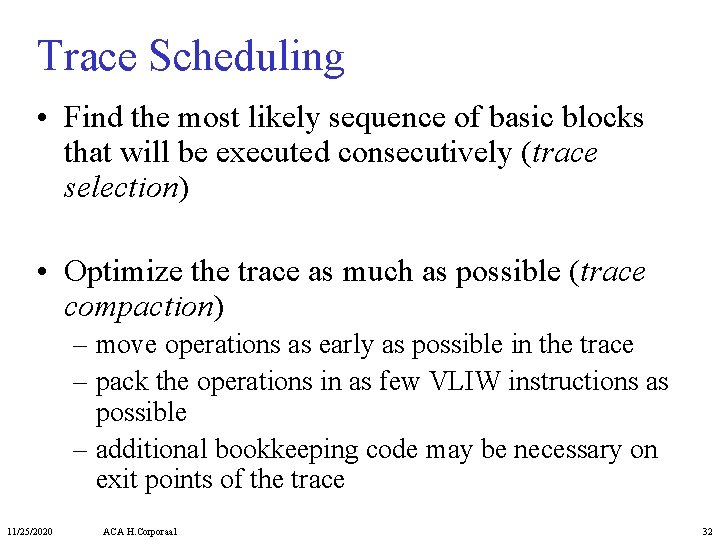

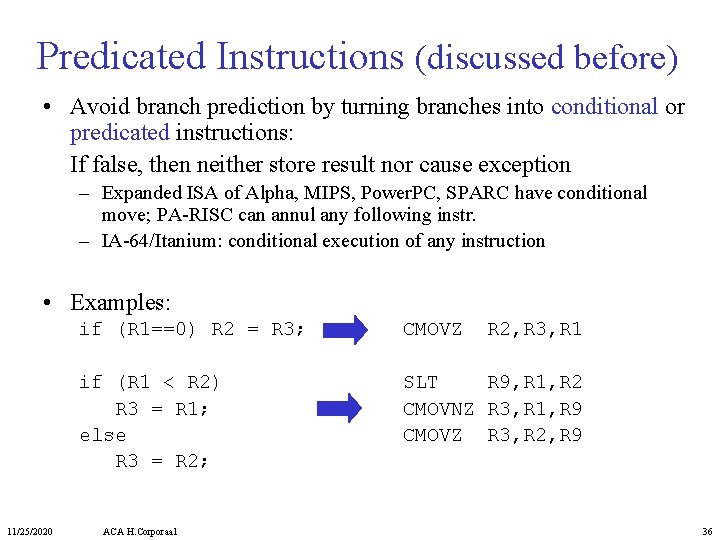

Dynamic trace for unrolled code

`for (i=1; i<=1000; i++) a[i] = a[i]+s;` (unrolled 5 times)

Assumed latencies: Load: 1 cycle; FP ALU op: 2 cycles.

| Integer instruction | FP instruction | Cycle |
|---|---|---|
| L: LD F0, 0(R1) | | 1 |
| LD F6, 8(R1) | | 2 |
| LD F10, 16(R1) | ADDD F4, F0, F2 | 3 |
| LD F14, 24(R1) | ADDD F8, F6, F2 | 4 |
| LD F18, 32(R1) | ADDD F12, F10, F2 | 5 |
| SD 0(R1), F4 | ADDD F16, F14, F2 | 6 |
| SD 8(R1), F8 | ADDD F20, F18, F2 | 7 |
| SD 16(R1), F12 | | 8 |
| ADDI R1, R1, 40 | | 9 |
| SD -16(R1), F16 | | 10 |
| BNE R1, R2, L | | 11 |
| SD -8(R1), F20 | | 12 |

- 2.4 cycles per element (12 cycles / 5 elements) vs. 3.5 for the ordinary MIPS pipeline
- Int and FP instructions are not perfectly balanced

Superscalar: Multi-issue Issues

- While the Integer/FP split is simple for the HW, we get an IPC of 2 only for programs with:
  - exactly 50% FP operations AND no hazards
- More complex decode and issue! E.g., already for 2-issue we need:
  - Issue logic: examine 2 opcodes and 6 register specifiers, and decide whether 1 or 2 instructions can issue (N-issue needs ~O(N²) comparisons)
  - Register file complexity: a 2-issue superscalar needs 4 reads and 2 writes per cycle
  - Rename logic: must be able to rename the same register multiple times in one cycle! For instance, consider 4-way issue:

| Before renaming | After renaming |
|---|---|
| add r1, r2, r3 | add p11, p4, p7 |
| sub r4, r1, r2 | sub p22, p11, p4 |
| lw r1, 4(r4) | lw p23, 4(p22) |
| add r5, r1, r2 | add p12, p23, p4 |

Imagine doing this transformation in a single cycle!

- Bypassing / result buses: need to complete multiple instructions per cycle
  - Need multiple buses with associated matching logic at every reservation station.
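The rename step for one issue group can be sketched sequentially: each source reads the map as already updated by earlier instructions in the group, and each destination takes the next free physical register. Hardware must produce the same result within one cycle, which is where the pairwise comparisons between instructions in the group come from. The data structures are hypothetical, and the free-list order is chosen to reproduce the p-numbers in the example above.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_ARCH 32

/* dst = op(src1, src2) with architectural registers; -1 marks an unused source. */
typedef struct { int dst, src1, src2; } ArchInstr;
/* The same instruction expressed in physical registers. */
typedef struct { int dst, src1, src2; } PhysInstr;

/* Renames a group of n instructions in program order. Sources are looked up
   before the instruction's own destination is remapped, so "add r1, r1, r2"
   still reads the old r1. */
void rename_group(const ArchInstr *in, PhysInstr *out, size_t n,
                  int map[NUM_ARCH], const int *free_list, size_t free_len) {
    assert(n <= free_len);
    for (size_t i = 0; i < n; i++) {
        assert(in[i].dst >= 0 && in[i].dst < NUM_ARCH);
        out[i].src1 = in[i].src1 >= 0 ? map[in[i].src1] : -1;
        out[i].src2 = in[i].src2 >= 0 ? map[in[i].src2] : -1;
        out[i].dst  = free_list[i];
        map[in[i].dst] = free_list[i];   /* later instructions see the new name */
    }
}
```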

Why not VLIW Processors

- Superscalar HW is expensive to build => let the compiler find independent instructions and pack them into one Very Long Instruction Word (VLIW)
- Example: VLIW processor with 2 ld/st units, two FP units, one integer/branch unit, no branch delay

| Cycle | Ld/st 1 | Ld/st 2 | FP 1 | FP 2 | Int |
|---|---|---|---|---|---|
| 1 | LD F0, 0(R1) | LD F6, 8(R1) | | | |
| 2 | LD F10, 16(R1) | LD F14, 24(R1) | | | |
| 3 | LD F18, 32(R1) | LD F22, 40(R1) | ADDD F4, F0, F2 | ADDD F8, F6, F2 | |
| 4 | LD F26, 48(R1) | | ADDD F12, F10, F2 | ADDD F16, F14, F2 | |
| 5 | | | ADDD F20, F18, F2 | ADDD F24, F22, F2 | |
| 6 | SD 0(R1), F4 | SD 8(R1), F8 | ADDD F28, F26, F2 | | |
| 7 | SD 16(R1), F12 | SD 24(R1), F16 | | | |
| 8 | SD 32(R1), F20 | SD 40(R1), F24 | | | ADDI R1, R1, 56 |
| 9 | SD -8(R1), F28 | | | | BNE R1, R2, L |

9 cycles for 7 iterations: 9/7 cycles per iteration!
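How a compiler might arrive at this packing can be sketched with a greedy scheduler that places each operation in the earliest cycle where its input is ready and a unit of the right kind is free. Assumptions: the latencies from the earlier table (load to FP op 1, FP op to store 2), loads and stores share the two ld/st units, and the ADDI/BNE overhead fits in the otherwise idle integer slot, so it is not modeled. The function names are hypothetical.

```c
#include <assert.h>

#define MAX_UNROLL 32
#define MAX_CYCLES 128

/* Cycles that must separate producer and consumer (from the latency table). */
enum { LOAD_TO_FP = 1, FP_TO_STORE = 2 };

/* Reserves one unit in the first cycle >= earliest that still has one free. */
static int place(int used[MAX_CYCLES], int capacity, int earliest) {
    int c = earliest;
    while (c < MAX_CYCLES && used[c] >= capacity) c++;
    assert(c < MAX_CYCLES);
    used[c]++;
    return c;
}

/* Schedules k unrolled copies of "LD f,x ; ADDD f,f,s ; SD x,f" onto 2 ld/st
   and 2 FP units and returns the schedule length in cycles (cycle 1 first). */
int vliw_schedule_length(int k) {
    assert(k > 0 && k <= MAX_UNROLL);
    int mem_used[MAX_CYCLES] = {0}, fp_used[MAX_CYCLES] = {0};
    int ld[MAX_UNROLL], add[MAX_UNROLL], last = 0;
    for (int j = 0; j < k; j++)       /* loads first: they start every chain */
        ld[j] = place(mem_used, 2, 1);
    for (int j = 0; j < k; j++)
        add[j] = place(fp_used, 2, ld[j] + 1 + LOAD_TO_FP);
    for (int j = 0; j < k; j++) {
        int sd = place(mem_used, 2, add[j] + 1 + FP_TO_STORE);
        if (sd > last) last = sd;
    }
    return last;
}
```

For 7 unrolled copies it returns 9 cycles, the same length as the table above; for a single copy it returns 6, which shows how much of the machine a non-unrolled loop leaves idle.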

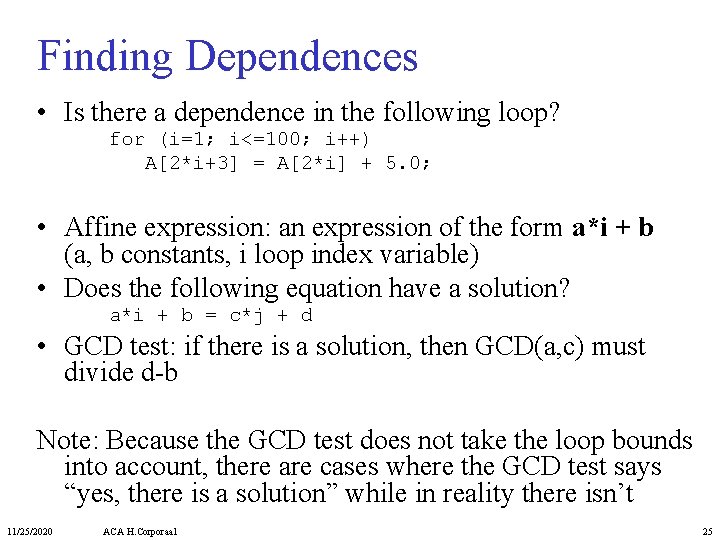

Superscalar versus VLIW

VLIW advantages:

- Much simpler to build; potentially faster

VLIW disadvantages and proposed solutions:

- Binary code incompatibility
  - Object code translation or emulation
  - Less strict approach (EPIC, IA-64, Itanium)
- Increase in code size; unfilled slots are wasted bits
  - Use clever encodings, e.g. only one immediate field
  - Compress instructions in memory and decode them when they are fetched, or when they are put in the L1 cache
- Lockstep operation: if the operation in one instruction slot stalls, the entire processor is stalled
  - Less strict approach
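One way to avoid storing unfilled slots is to keep, per instruction word, a mask of occupied slots and store only the real operations. The encoding below is hypothetical (five 32-bit slots as in the earlier VLIW example, NOP encoded as 0, the mask in its own 32-bit word); a real format would pack the mask into far fewer bits.

```c
#include <assert.h>
#include <stdint.h>
#include <stddef.h>

#define SLOTS 5      /* e.g. ld/st, ld/st, FP, FP, int/branch */
#define NOP   0u     /* hypothetical encoding of an empty slot */

/* Compresses one VLIW instruction word: bit s of the mask is set when slot s
   holds a real operation, and only those operations are stored after it.
   Returns the number of 32-bit words written (mask word + operations). */
size_t compress_bundle(const uint32_t ops[SLOTS], uint32_t *out) {
    assert(out != NULL);
    uint32_t mask = 0;
    size_t n = 1;
    for (int s = 0; s < SLOTS; s++) {
        if (ops[s] != NOP) {
            mask |= 1u << s;
            out[n++] = ops[s];
        }
    }
    out[0] = mask;
    return n;
}

/* Expands a compressed word back into SLOTS operations, re-inserting NOPs.
   Returns the number of 32-bit words consumed. */
size_t decompress_bundle(const uint32_t *in, uint32_t ops[SLOTS]) {
    assert(in != NULL);
    uint32_t mask = in[0];
    size_t n = 1;
    for (int s = 0; s < SLOTS; s++)
        ops[s] = (mask & (1u << s)) ? in[n++] : NOP;
    return n;
}
```

Because compressed words have variable length, the decompressor must find where each word starts, which is one reason the choice of where to decompress (next slide) matters.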

Use compressed instructions

(Figure: Memory → L1 instruction cache → CPU, with the instructions stored compressed in memory. Decompress between memory and the L1 cache, or between the L1 cache and the CPU?)

Q: What are the pros and cons?

Advanced compiler support techniques

- Loop-level parallelism
- Software pipelining
- Global scheduling (across basic blocks)

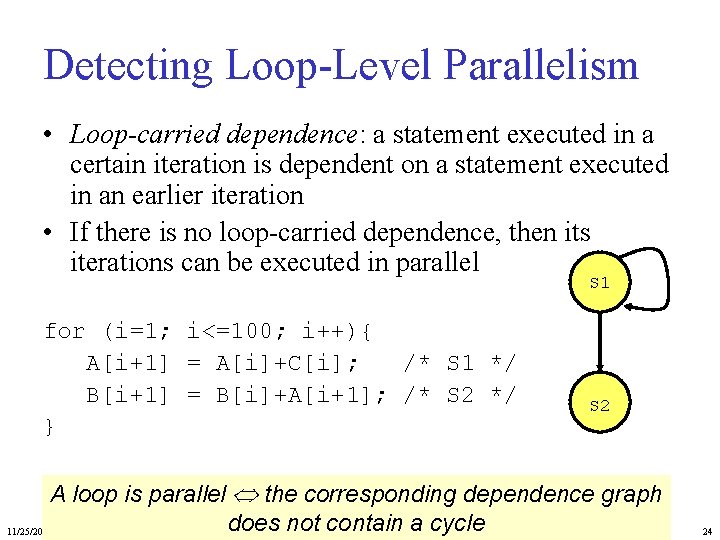

Detecting Loop-Level Parallelism

- Loop-carried dependence: a statement executed in a certain iteration depends on a statement executed in an earlier iteration
- If there is no loop-carried dependence, the loop's iterations can be executed in parallel

```c
for (i=1; i<=100; i++){
    A[i+1] = A[i]+C[i];    /* S1 */
    B[i+1] = B[i]+A[i+1];  /* S2 */
}
```

(Figure: dependence graph with nodes S1 and S2.)

A loop is parallel if the corresponding dependence graph does not contain a cycle. Here S1 and S2 each use a value they produced in the previous iteration (A[i] and B[i]), so the graph has cycles and the iterations must run in order.
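For accesses whose index is an affine function of i, a loop-carried flow dependence can be checked exactly, if slowly, by enumerating iteration pairs. A sketch under assumptions: one write A[wa*i + wb] and one read A[ra*i + rb], a unit-stride loop over [lo, hi], and only flow (write, then later read) dependences are checked. The function name is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Does a write to A[wa*i + wb] in iteration i1 feed a read of A[ra*i + rb]
   in a later iteration i2, with lo <= i1 < i2 <= hi? Exact for these bounds,
   but O(n^2) in the number of iterations. */
bool loop_carried_flow_dep(int wa, int wb, int ra, int rb, int lo, int hi) {
    assert(lo <= hi);
    for (int i1 = lo; i1 <= hi; i1++)
        for (int i2 = i1 + 1; i2 <= hi; i2++)
            if (wa * i1 + wb == ra * i2 + rb)
                return true;
    return false;
}
```

For S1 (write A[i+1], read A[i]) it reports a carried dependence; for a statement such as A[i] = A[i] + C[i], where each iteration only reads what it writes itself, it does not.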

Finding Dependences

- Is there a dependence in the following loop?

```c
for (i=1; i<=100; i++)
    A[2*i+3] = A[2*i] + 5.0;
```

- Affine expression: an expression of the form a*i + b (a, b constants, i the loop index variable)
- Does the following equation have a solution (i.e., do a write to A[a*i + b] in iteration i and a read of A[c*j + d] in iteration j touch the same element)?
  a*i + b = c*j + d
- GCD test: if there is a solution, then GCD(a, c) must divide d - b
  - For the loop above: a = 2, b = 3, c = 2, d = 0. GCD(2, 2) = 2 does not divide d - b = -3, so there is no dependence.

Note: because the GCD test does not take the loop bounds into account, there are cases where the GCD test says “yes, there may be a solution” while in reality there isn’t.
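The GCD test itself is a few lines. A sketch with hypothetical names; it can only answer "no dependence" or "a dependence may exist", precisely because it ignores the loop bounds.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Euclid's algorithm on absolute values. */
static int gcd(int a, int b) {
    a = abs(a);
    b = abs(b);
    while (b != 0) {
        int t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* GCD test for a write to A[a*i + b] and a read of A[c*j + d].
   false: a*i + b = c*j + d has no integer solution, so no dependence.
   true:  a dependence may exist (loop bounds are not considered). */
bool gcd_test_may_depend(int a, int b, int c, int d) {
    assert(a != 0 || c != 0);
    int g = gcd(a, c);
    return (d - b) % g == 0;   /* zero remainder regardless of sign */
}
```

For the loop above, `gcd_test_may_depend(2, 3, 2, 0)` is false. A write to A[i] and a read of A[i+200] gives true, even though for i = 1..100 the written and read ranges never overlap: an example of the imprecision mentioned in the note.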

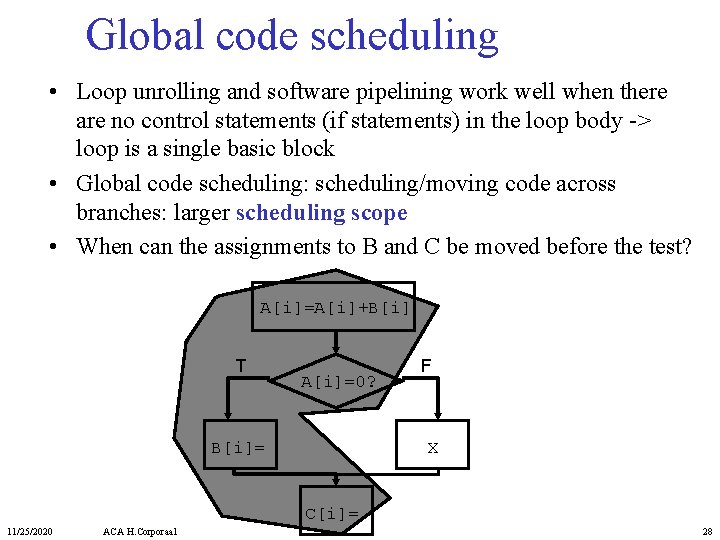

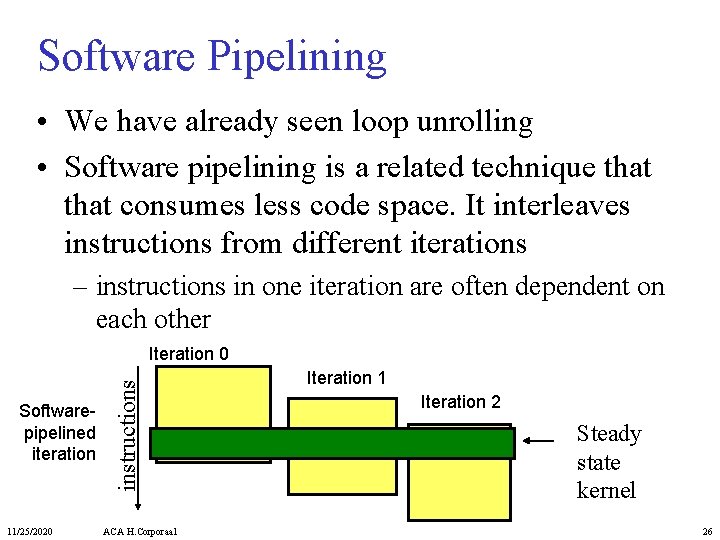

Software Pipelining

- We have already seen loop unrolling
- Software pipelining is a related technique that consumes less code space. It interleaves instructions from different iterations
  - instructions within one iteration are often dependent on each other

(Figure: the instructions of iterations 0, 1 and 2 overlapped in time; the software-pipelined iteration is the steady-state kernel.)

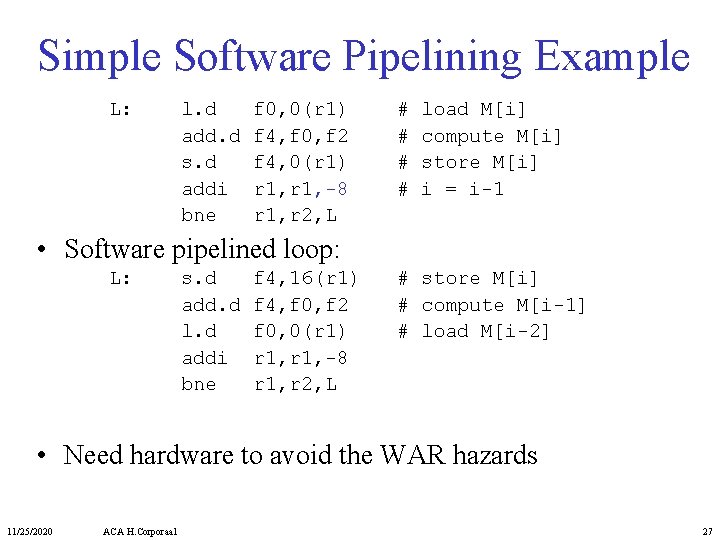

Simple Software Pipelining Example

```
L: l.d   f0, 0(r1)     # load M[i]
   add.d f4, f0, f2    # compute M[i]
   s.d   f4, 0(r1)     # store M[i]
   addi  r1, r1, -8    # i = i-1
   bne   r1, r2, L
```

- Software pipelined loop:

```
L: s.d   f4, 16(r1)    # store M[i]
   add.d f4, f0, f2    # compute M[i-1]
   l.d   f0, 0(r1)     # load M[i-2]
   addi  r1, r1, -8
   bne   r1, r2, L
```

- Need hardware to avoid the WAR hazards (s.d reads f4 before add.d overwrites it; add.d reads f0 before l.d overwrites it)
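The same idea written by hand in C, including the prologue and epilogue that the slide's kernel leaves out. This is a sketch: it walks the array upwards with 0-based indices (the slide walks downwards), and the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* x[i] += s, software-pipelined: each kernel iteration stores element i,
   computes element i+1 and loads element i+2, like the s.d / add.d / l.d
   kernel above. */
void add_scalar_swp(double *x, size_t n, double s) {
    assert(x != NULL || n == 0);
    if (n < 2) {                       /* too short to pipeline */
        for (size_t i = 0; i < n; i++) x[i] += s;
        return;
    }
    double loaded = x[0];              /* prologue: load 0    */
    double sum = loaded + s;           /*           compute 0 */
    loaded = x[1];                     /*           load 1    */
    size_t i = 0;
    for (; i + 2 < n; i++) {           /* kernel (steady state) */
        x[i] = sum;                    /* store   i   */
        sum = loaded + s;              /* compute i+1 */
        loaded = x[i + 2];             /* load    i+2 */
    }
    x[i] = sum;                        /* epilogue: store n-2 (i == n-2 here) */
    x[i + 1] = loaded + s;             /*           compute and store n-1     */
}
```

Inside the kernel, `sum` is stored before it is overwritten and `loaded` is consumed before the next load replaces it: the same WAR orderings the hardware has to respect in the assembly version.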

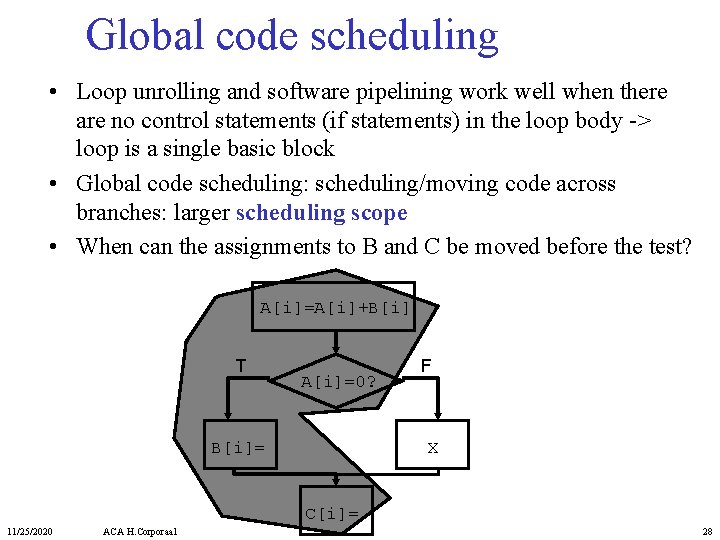

Global code scheduling

- Loop unrolling and software pipelining work well when there are no control statements (if statements) in the loop body -> the loop is a single basic block
- Global code scheduling: scheduling/moving code across branches gives a larger scheduling scope
- When can the assignments to B and C be moved before the test?

(Figure: flow graph. A[i] = A[i] + B[i], followed by the test A[i] = 0?; the T path assigns B[i] = ..., the F path executes X; both paths join at C[i] = ...)
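A concrete instance helps answer the question. The bodies below are hypothetical stand-ins for the elided `B[i] = ...`, `X` and `C[i] = ...`. C[i] can move above the test because both paths reach it and neither path touches C[i] or A[i]. The new value for B[i] can be computed early into a temporary, but the store stays on the then-path, because the old B[i] must survive on the else-path. The sketch also assumes that the arrays do not alias.

```c
#include <assert.h>

/* Original order; hypothetical bodies: then-path B[i] = 2*i,
   else-path X is Y[i] = 1, join C[i] = A[i] + 1. */
void body_original(int *A, int *B, int *C, int *Y, int i) {
    A[i] = A[i] + B[i];
    if (A[i] == 0)
        B[i] = 2 * i;          /* then path */
    else
        Y[i] = 1;              /* X: else path */
    C[i] = A[i] + 1;           /* after the join */
}

/* After global scheduling: C[i] is hoisted above the test, and the then-path
   value of B[i] is computed speculatively (side-effect free) but committed
   only on that path. Correct only if the arrays do not overlap. */
void body_scheduled(int *A, int *B, int *C, int *Y, int i) {
    assert(A != B && A != C && A != Y && B != C && B != Y && C != Y);
    int a = A[i] + B[i];
    int b_new = 2 * i;         /* speculative computation */
    A[i] = a;
    C[i] = a + 1;              /* moved up across the branch */
    if (a == 0)
        B[i] = b_new;
    else
        Y[i] = 1;
}
```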

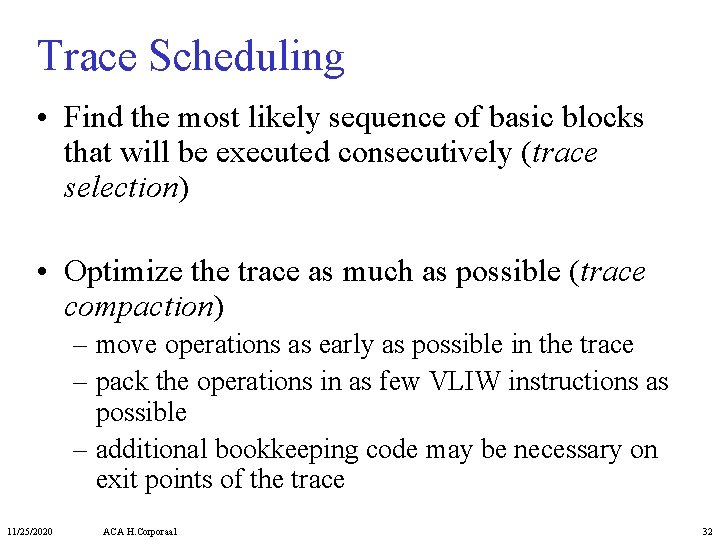

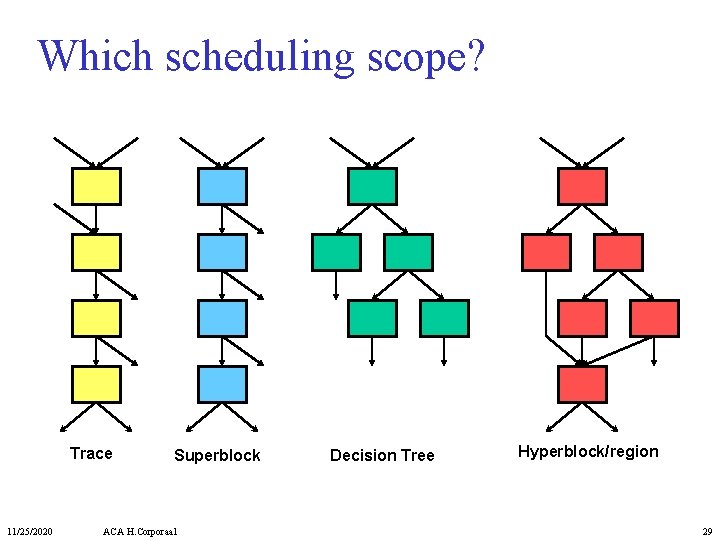

Which scheduling scope?

(Figure panels: Trace, Superblock, Decision Tree, Hyperblock/region.)

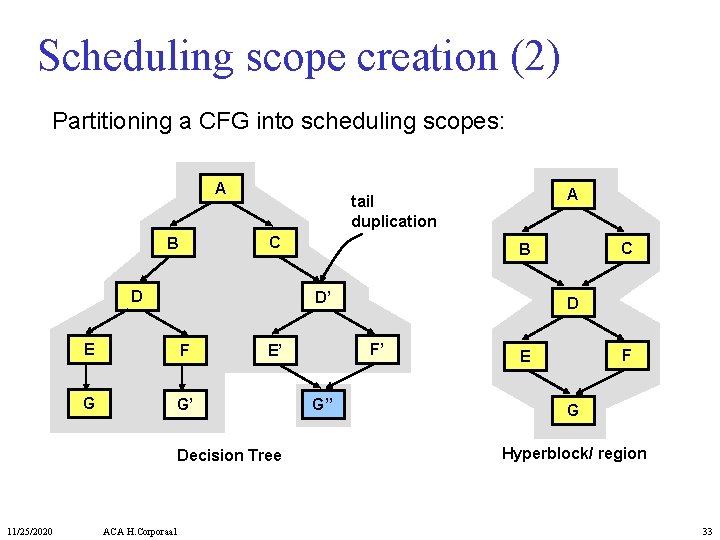

Comparing scheduling scopes

(Table: Trace, Superblock, Hyperblock, Decision Tree and Region compared on: multiple execution paths, side entries allowed, join points allowed, code motion down joins, must be if-convertible, and tail duplication before scheduling.)

Scheduling scope creation (1)

Partitioning a CFG into scheduling scopes:

(Figure: a CFG with blocks A to G partitioned into a Trace, and into a Superblock after tail duplication creates the copies D', E' and G'.)

Trace Scheduling

- Find the most likely sequence of basic blocks that will be executed consecutively (trace selection)
- Optimize the trace as much as possible (trace compaction)
  - move operations as early as possible in the trace
  - pack the operations into as few VLIW instructions as possible
  - additional bookkeeping code may be necessary at the exit points of the trace
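Trace selection can be sketched as a greedy walk over a profiled CFG: start at a seed block and keep following the most frequently executed edge to a block that is not yet in any trace. This is a simplified, forward-only version with assumed data structures (edge counts in a matrix) and hypothetical names; practical trace selectors typically also grow the trace backwards from the seed and stop at loop back edges.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_BLOCKS 16

/* Profiled CFG: count[u][v] = number of times edge u -> v executed (0 = no edge). */
typedef struct {
    int n;
    int count[MAX_BLOCKS][MAX_BLOCKS];
} Cfg;

/* Grows a trace from `seed` along the hottest outgoing edge to a block not yet
   in any trace. Marks the blocks in `in_trace`, writes them to `trace`, and
   returns the trace length. */
int select_trace(const Cfg *g, int seed, bool in_trace[MAX_BLOCKS], int trace[MAX_BLOCKS]) {
    assert(seed >= 0 && seed < g->n && !in_trace[seed]);
    int len = 0, cur = seed;
    while (cur >= 0) {
        in_trace[cur] = true;
        trace[len++] = cur;
        int best = -1, best_count = 0;
        for (int v = 0; v < g->n; v++)
            if (!in_trace[v] && g->count[cur][v] > best_count) {
                best = v;
                best_count = g->count[cur][v];
            }
        cur = best;                   /* -1 ends the trace */
    }
    return len;
}
```

For a diamond A -> {B (90), C (10)} -> D -> E, the first trace is A, B, D, E; block C then forms a trace of its own, and an edge from C into the middle of the first trace (at D) is a side entrance that trace compaction must handle with bookkeeping code.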

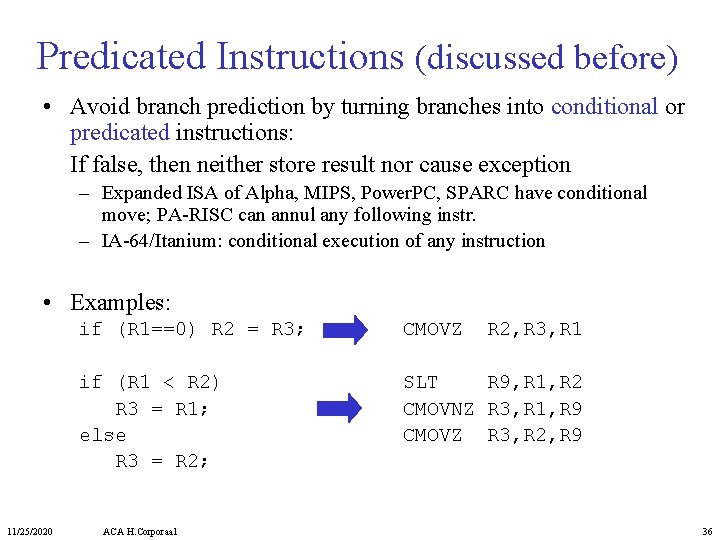

Scheduling scope creation (2)

Partitioning a CFG into scheduling scopes:

(Figure: the same CFG partitioned into Decision Trees, using tail duplication to create D', E', F', G' and G'', and into Hyperblocks/regions.)

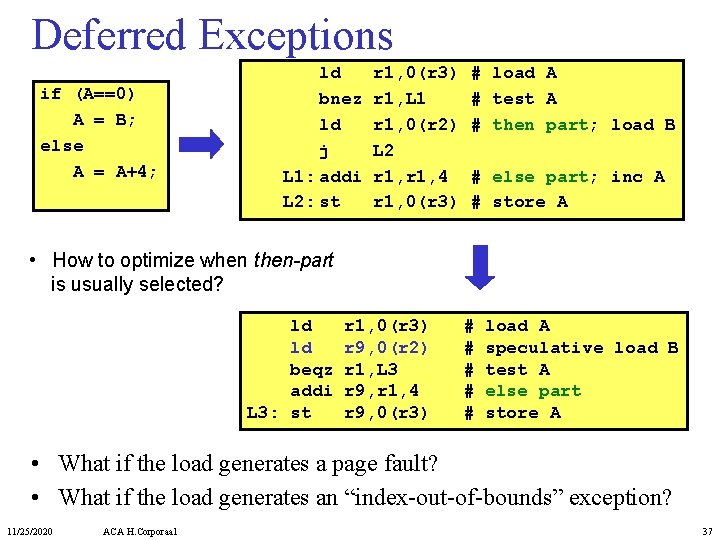

Code movement (upwards) within regions

(Figure: an operation I moves upward from the source block through intermediate blocks to the destination block. Legend: copy needed; check for off-liveness; code movement.)

Hardware support for compile-time scheduling

- Predication
  - (discussed already)
  - see also the Itanium example
- Deferred exceptions
- Speculative loads

Predicated Instructions (discussed before)

- Avoid branch prediction by turning branches into conditional or predicated instructions: if the condition is false, the instruction neither stores its result nor causes an exception
  - The expanded ISAs of Alpha, MIPS, PowerPC and SPARC have a conditional move; PA-RISC can annul any following instruction
  - IA-64/Itanium: conditional execution of any instruction
- Examples:

```
if (R1==0) R2 = R3;          CMOVZ  R2, R3, R1

if (R1 < R2) R3 = R1;        SLT    R9, R1, R2
else         R3 = R2;        CMOVNZ R3, R1, R9
                             CMOVZ  R3, R2, R9
```
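The conditional-move examples can be modeled in C as pure functions on register values. This is a semantic sketch with hypothetical names: a C conditional expression may itself be compiled to a branch or to a conditional move, so it illustrates the data flow rather than the generated code.

```c
#include <assert.h>

/* Conditional moves as value transformers: the destination keeps its old
   value unless the condition register is zero (cmovz) or non-zero (cmovnz). */
static int cmovz(int rd, int rs, int rt)  { return rt == 0 ? rs : rd; }
static int cmovnz(int rd, int rs, int rt) { return rt != 0 ? rs : rd; }
static int slt(int rs, int rt)            { return rs < rt; }   /* 1 or 0 */

/* if (R1 == 0) R2 = R3;   ->   CMOVZ R2, R3, R1 */
int select_if_zero(int r1, int r2, int r3) {
    return cmovz(r2, r3, r1);
}

/* R3 = (R1 < R2) ? R1 : R2 without a branch:
   SLT R9, R1, R2; CMOVNZ R3, R1, R9; CMOVZ R3, R2, R9 */
int min_branchless(int r1, int r2) {
    int r3 = 0;
    int r9 = slt(r1, r2);
    r3 = cmovnz(r3, r1, r9);   /* taken when R1 < R2 */
    r3 = cmovz(r3, r2, r9);    /* taken otherwise    */
    return r3;
}
```

Exactly one of the two conditional moves writes R3, so the sequence needs no branch and leaves nothing for the branch predictor to guess.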

Deferred Exceptions

`if (A==0) A = B; else A = A+4;`

```
      ld   r1, 0(r3)    # load A
      bnez r1, L1       # test A
      ld   r1, 0(r2)    # then part; load B
      j    L2
L1:   addi r1, r1, 4    # else part; inc A
L2:   st   r1, 0(r3)    # store A
```

- How can we optimize when the then-part is usually selected?

```
      ld   r1, 0(r3)    # load A
      ld   r9, 0(r2)    # speculative load B
      beqz r1, L3       # test A
      addi r9, r1, 4    # else part
L3:   st   r9, 0(r3)    # store A
```

- What if the load generates a page fault?
- What if the load generates an “index-out-of-bounds” exception?

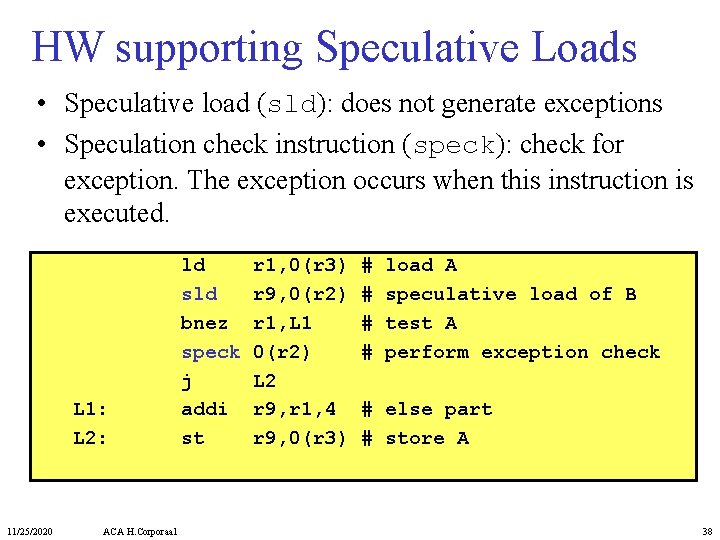

HW supporting Speculative Loads

- Speculative load (sld): does not generate exceptions
- Speculation check instruction (speck): checks for an exception. The exception occurs when this instruction is executed.

```
      ld    r1, 0(r3)   # load A
      sld   r9, 0(r2)   # speculative load of B
      bnez  r1, L1      # test A
      speck 0(r2)       # perform exception check
      j     L2
L1:   addi  r9, r1, 4   # else part
L2:   st    r9, 0(r3)   # store A
```
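The effect of sld and speck can be modeled with a poison bit attached to the loaded value, similar in spirit to Itanium's NaT bits. This is a sketch with hypothetical names in which an out-of-bounds index stands in for a page fault: the speculative load never traps, and the fault is reported only on the path that actually uses B.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* A register value with a poison bit: the result of a speculative load. */
typedef struct {
    long value;
    bool poisoned;     /* set instead of raising an exception */
} SpecReg;

/* sld: load mem[idx] but never trap; an out-of-bounds index (standing in
   for a page fault) only sets the poison bit. */
SpecReg sld(const long *mem, size_t len, size_t idx) {
    SpecReg r = { 0, false };
    if (idx < len) r.value = mem[idx];
    else           r.poisoned = true;
    return r;
}

/* speck: on the path that really uses the value, report the deferred
   exception. Returns false where real hardware would trap. */
bool speck(SpecReg r) { return !r.poisoned; }

/* if (A == 0) A = B; else A = A + 4;  with B loaded speculatively above the
   test. Returns false if the original program would have faulted. */
bool update_a(long *A, const long *mem, size_t len, size_t b_idx) {
    assert(A != NULL);
    SpecReg r9 = sld(mem, len, b_idx);   /* speculative load of B */
    if (*A == 0) {                       /* then part: B is really needed */
        if (!speck(r9)) return false;    /* deferred exception raised here */
        *A = r9.value;
    } else {
        *A = *A + 4;                     /* else part: poisoned r9 is ignored */
    }
    return true;
}
```

The else path runs normally even when B's address is bad, which is exactly the behavior the non-speculative code had.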

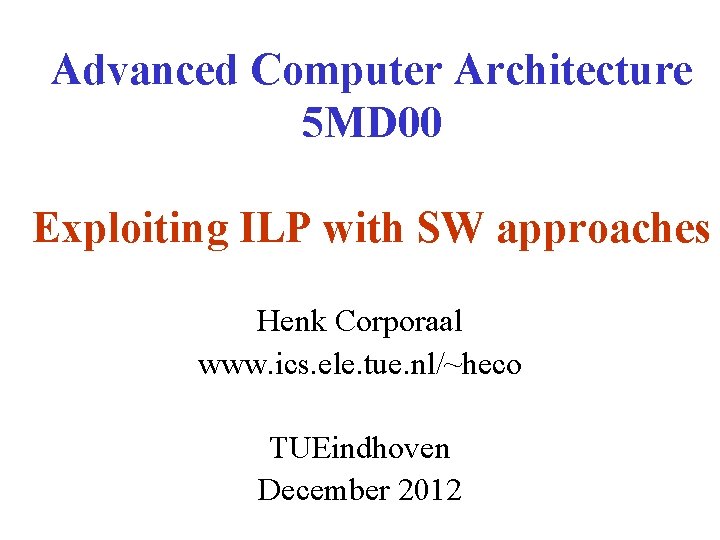

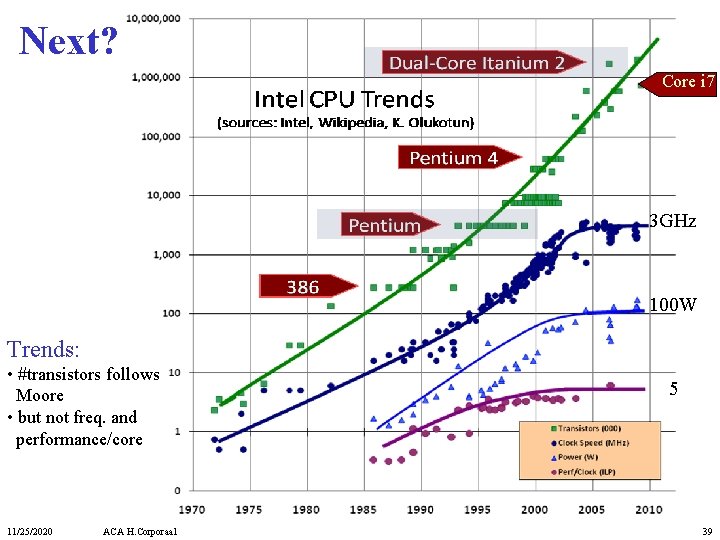

Next?

(Figure: Core i7, 3 GHz, 100 W.)

Trends:

- #transistors follows Moore's law
- but frequency and performance per core do not