Advanced Compilers CMPSCI 710 Spring 2003 Instruction Scheduling

Advanced Compilers
CMPSCI 710, Spring 2003
Instruction Scheduling
Emery Berger
University of Massachusetts, Amherst
UNIVERSITY OF MASSACHUSETTS, AMHERST • Department of Computer Science

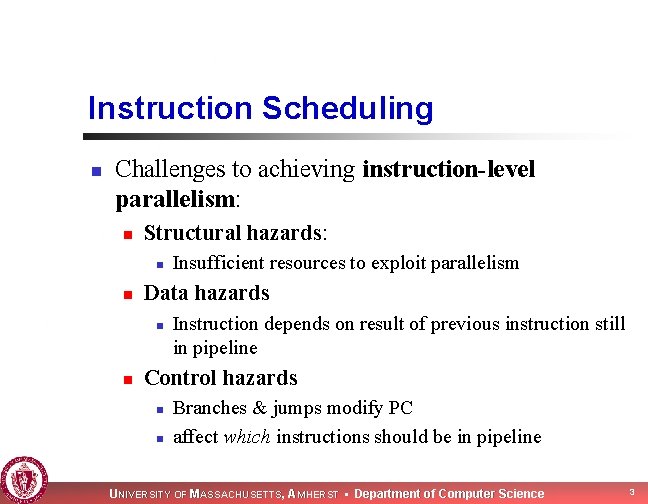

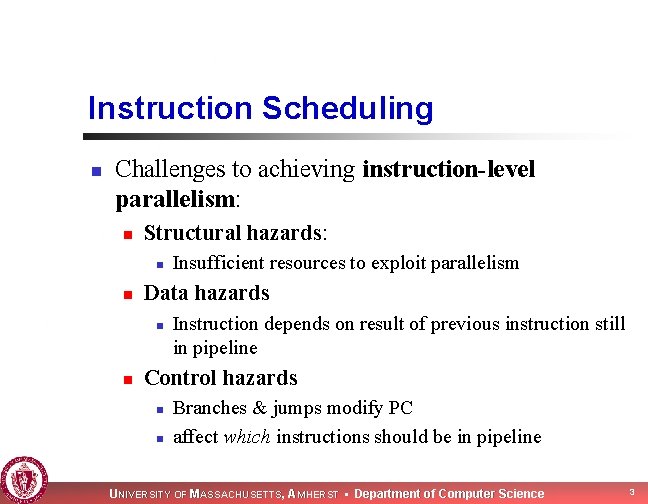

Modern Architectures
- Lots of features to increase performance and hide memory latency
  - Superscalar
    - Multiple logic units
  - Multiple issue
    - 2 or more instructions issued per cycle
  - Speculative execution
    - Branch predictors
    - Speculative loads
  - Deep pipelines

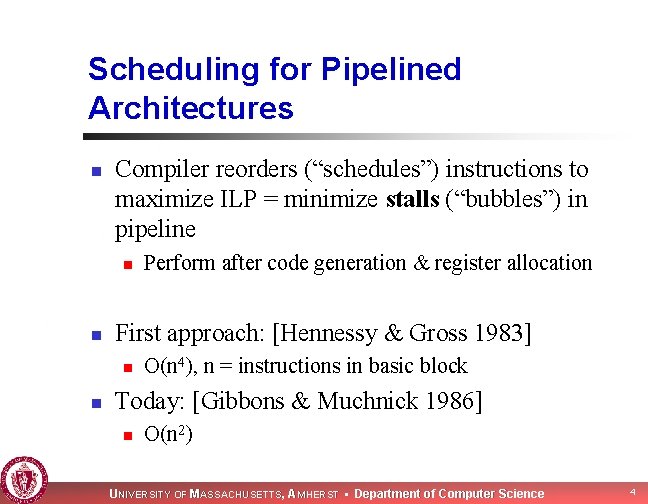

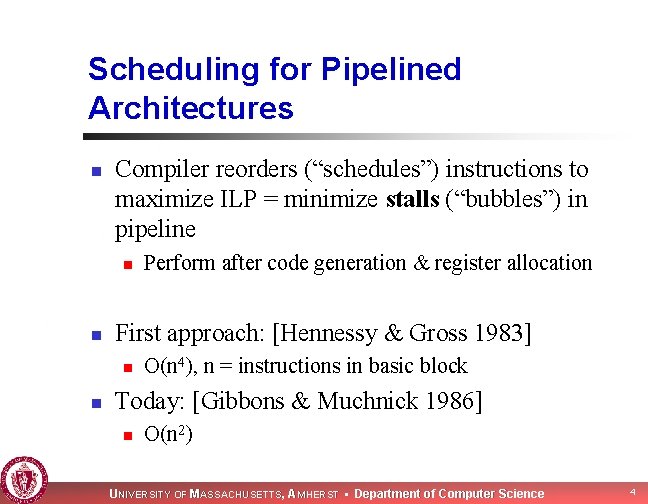

Instruction Scheduling
- Challenges to achieving instruction-level parallelism:
  - Structural hazards
    - Insufficient resources to exploit parallelism
  - Data hazards
    - Instruction depends on result of previous instruction still in pipeline
  - Control hazards
    - Branches & jumps modify the PC, which affects which instructions should be in the pipeline

Scheduling for Pipelined Architectures
- Compiler reorders ("schedules") instructions to maximize ILP = minimize stalls ("bubbles") in pipeline
  - Perform after code generation & register allocation
- First approach: [Hennessy & Gross 1983]
  - $O(n^4)$, n = instructions in basic block
- Today: [Gibbons & Muchnick 1986]
  - $O(n^2)$

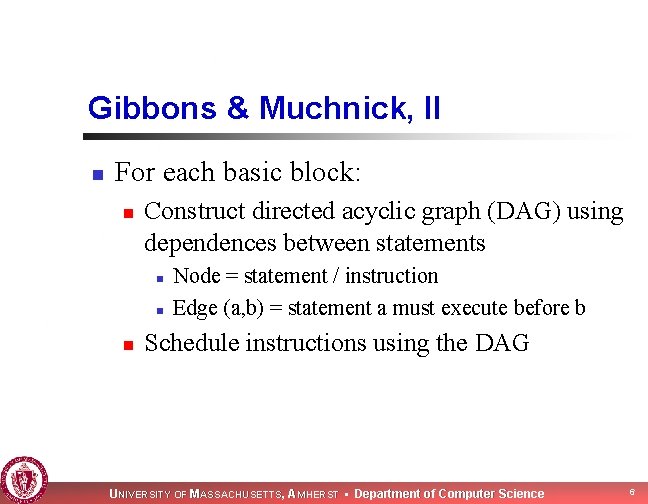

Gibbons & Muchnick, I
- Assumptions:
  - Hardware hazard detection
    - Algorithm not required to introduce nops
  - Each memory location referenced via offset of single base register
  - Pointer may reference all of memory
  - Load followed by add creates interlock (stall)
  - Hazards only take single cycle
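The interlock assumption above can be made concrete with a small stall counter. This is a minimal sketch, not the paper's code: the `Instr` record, the opcode names, and the three-instruction block are hypothetical, and the model covers only the single-cycle load-use interlock named in the assumptions.

```python
from typing import List, NamedTuple, Optional, Tuple


class Instr(NamedTuple):
    op: str                  # hypothetical opcode name, e.g. "load" or "add"
    dest: Optional[str]      # register written, or None
    srcs: Tuple[str, ...]    # registers read


def count_load_use_stalls(block: List[Instr]) -> int:
    """Count one-cycle interlocks: a load immediately followed by a use of its result."""
    stalls = 0
    for prev, cur in zip(block, block[1:]):
        if prev.op == "load" and prev.dest in cur.srcs:
            stalls += 1
    return stalls


# Hypothetical block: a load, a dependent add, and an independent constant load.
original = [
    Instr("load", "r8", ("r12",)),
    Instr("add", "r1", ("r8",)),
    Instr("li", "r2", ()),
]
# Moving the independent instruction between the load and its use hides the stall.
reordered = [original[0], original[2], original[1]]

print(count_load_use_stalls(original))   # 1
print(count_load_use_stalls(reordered))  # 0
```

Because the hardware detects the hazard, both orders are correct; the scheduler only changes how many cycles are lost.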

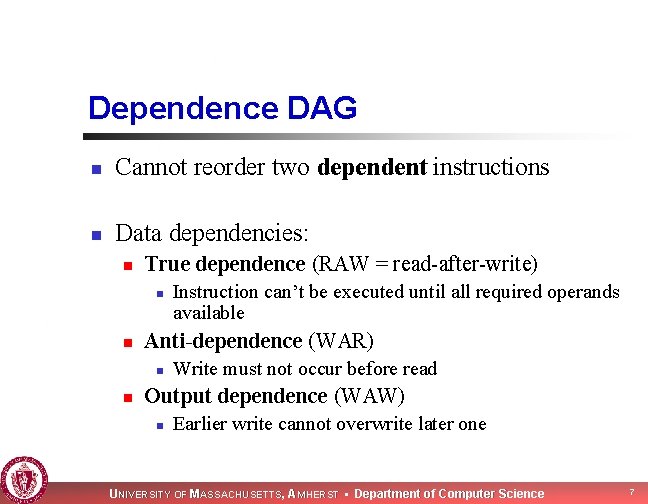

Gibbons & Muchnick, II
- For each basic block:
  - Construct directed acyclic graph (DAG) using dependences between statements
    - Node = statement / instruction
    - Edge (a, b) = statement a must execute before b
  - Schedule instructions using the DAG

Dependence DAG
- Cannot reorder two dependent instructions
- Data dependencies:
  - True dependence (RAW = read-after-write)
    - Instruction can't be executed until all required operands available
  - Anti-dependence (WAR)
    - Write must not occur before read
  - Output dependence (WAW)
    - Earlier write cannot overwrite later one
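The three dependence kinds can be sketched as a pairwise test over the registers each instruction reads and writes. This is an illustrative sketch under simplifying assumptions: the `Instr` record and the sample block are hypothetical, only register dependences are tracked (memory references are ignored), and every dependent pair gets an edge, including transitive ones.

```python
from typing import Dict, List, NamedTuple, Optional, Set, Tuple


class Instr(NamedTuple):
    dest: Optional[str]      # register written, or None
    srcs: Tuple[str, ...]    # registers read


def dependences(first: Instr, second: Instr) -> Set[str]:
    """Kinds of dependence that force `first` to stay before `second`."""
    kinds: Set[str] = set()
    if first.dest is not None and first.dest in second.srcs:
        kinds.add("RAW")     # second reads what first writes
    if second.dest is not None and second.dest in first.srcs:
        kinds.add("WAR")     # second overwrites what first reads
    if first.dest is not None and first.dest == second.dest:
        kinds.add("WAW")     # both write the same register
    return kinds


def build_dag(block: List[Instr]) -> Dict[Tuple[int, int], Set[str]]:
    """Compare every ordered pair (i < j) and keep the dependent ones as edges."""
    edges: Dict[Tuple[int, int], Set[str]] = {}
    for i in range(len(block)):
        for j in range(i + 1, len(block)):
            kinds = dependences(block[i], block[j])
            if kinds:
                edges[(i, j)] = kinds
    return edges


block = [
    Instr("r8", ("r12",)),       # 0: r8 = load [r12]
    Instr("r1", ("r8",)),        # 1: r1 = r8 + 1
    Instr("r8", ("r2", "r3")),   # 2: r8 = r2 + r3
    Instr("r2", ()),             # 3: r2 = 2
]
for edge, kinds in sorted(build_dag(block).items()):
    print(edge, sorted(kinds))
```

The doubly nested loop compares each instruction with every later one, which is where a quadratic worst case for DAG construction comes from.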

Scheduling Example
1. `r8 = [r12+8](4)`
2. `r1 = r8 + 1`
3. `r2 = 2`
4. `call r14, r31`
5. `nop`
6. `r9 = r1 + 1`

- We can reschedule to remove the nop in the delay slot: (1, 3, 4, 2, 6)

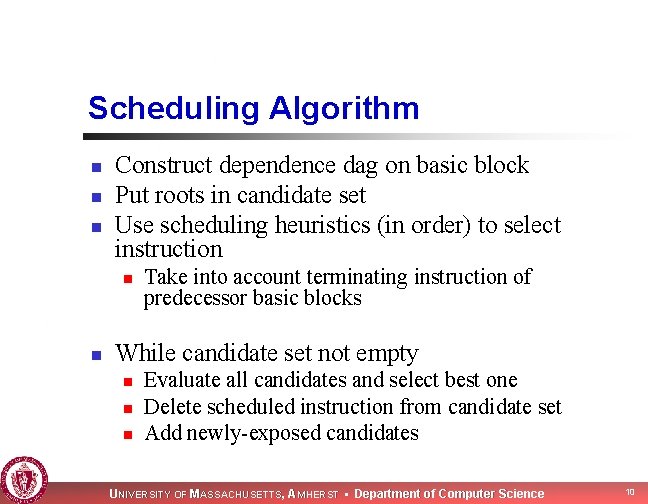

Scheduling Algorithm
- Construct dependence dag on basic block
- Put roots in candidate set
- Use scheduling heuristics (in order) to select instruction
  - Take into account terminating instruction of predecessor basic blocks
- While candidate set not empty
  - Evaluate all candidates and select best one
  - Delete scheduled instruction from candidate set
  - Add newly-exposed candidates
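The loop above can be sketched as a generic list scheduler. This is a simplified illustration rather than the Gibbons & Muchnick algorithm itself: the function name, the five-node dag, and the "most successors first" priority are assumptions, and the sketch ignores timing and the terminating instruction of predecessor blocks.

```python
from typing import Callable, Dict, List


def list_schedule(succs: Dict[str, List[str]],
                  priority: Callable[[str], int]) -> List[str]:
    """Repeatedly pick the highest-priority candidate whose predecessors are all scheduled."""
    # Count unscheduled predecessors of every node.
    npreds = {n: 0 for n in succs}
    for n in succs:
        for m in succs[n]:
            npreds[m] += 1

    cands = {n for n, k in npreds.items() if k == 0}   # roots of the dag
    schedule: List[str] = []
    while cands:
        # sorted() makes tie-breaking deterministic: max() keeps the first maximum.
        best = max(sorted(cands), key=priority)
        cands.remove(best)
        schedule.append(best)
        for m in succs[best]:
            npreds[m] -= 1
            if npreds[m] == 0:                         # newly exposed candidate
                cands.add(m)
    return schedule


# Hypothetical dag: a and b feed c, which feeds d and e.
succs = {"a": ["c"], "b": ["c"], "c": ["d", "e"], "d": [], "e": []}
order = list_schedule(succs, priority=lambda n: len(succs[n]))
print(order)  # ['a', 'b', 'c', 'd', 'e']
```

Swapping in a different `priority` function is how the individual heuristics would be tried out in this sketch.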

Instruction Scheduling Heuristics
- NP-complete ⇒ we need heuristics
- Bias scheduler to prefer instructions that:
  - Interlock with dag successors
    - Allows other operations to proceed
  - Have many successors
    - More flexibility in scheduling
  - Progress along critical path
  - Free registers
    - Reduce register pressure
  - etc. (see ACDI p. 542)

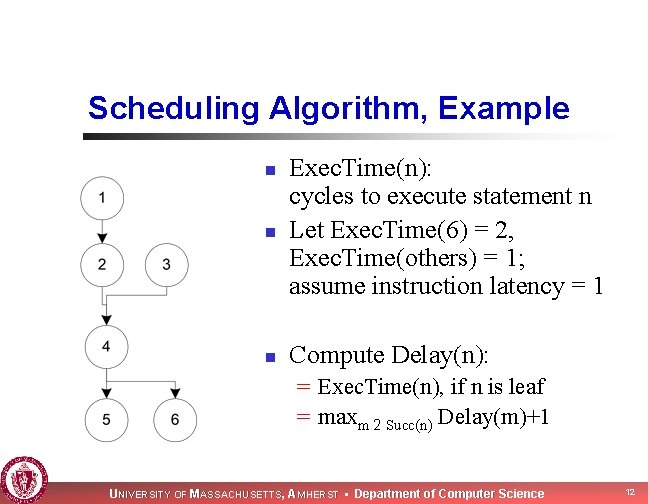

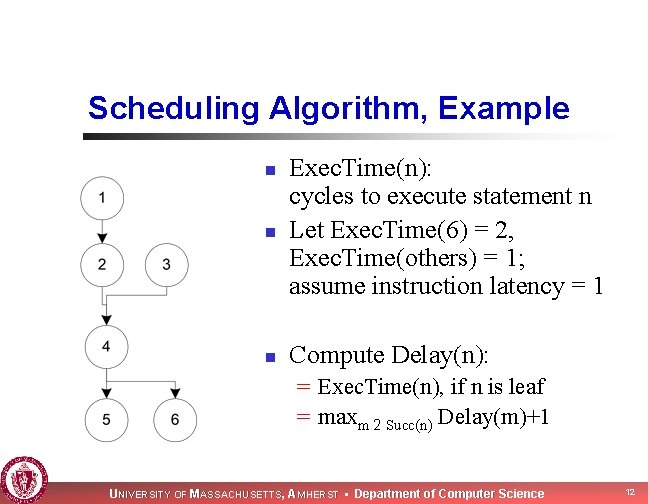

Scheduling Algorithm, Example
- ExecTime(n): cycles to execute statement n
  - Let ExecTime(6) = 2, ExecTime(others) = 1; assume instruction latency = 1
- Compute Delay(n):

$$
\mathrm{Delay}(n) =
\begin{cases}
\mathrm{ExecTime}(n) & \text{if } n \text{ is a leaf} \\
\max_{m \in \mathrm{Succ}(n)} \bigl(\mathrm{Delay}(m) + 1\bigr) & \text{otherwise}
\end{cases}
$$
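The Delay recurrence can be evaluated bottom-up with a memoized traversal. The sketch below uses a hypothetical five-node dag (not the one pictured on the slide) and assumes a uniform latency of 1 between dependent instructions; `compute_delay` and its parameter names are illustrative.

```python
from typing import Dict, List


def compute_delay(succs: Dict[str, List[str]],
                  exec_time: Dict[str, int],
                  latency: int = 1) -> Dict[str, int]:
    """Delay(n) = ExecTime(n) for a leaf, else max over successors of Delay(m) + latency."""
    delay: Dict[str, int] = {}

    def visit(n: str) -> int:
        if n not in delay:
            if not succs[n]:
                delay[n] = exec_time[n]
            else:
                delay[n] = max(visit(m) + latency for m in succs[n])
        return delay[n]

    for n in succs:
        visit(n)
    return delay


# Hypothetical dag: a and b feed c, which feeds d and e; only e takes 2 cycles.
succs = {"a": ["c"], "b": ["c"], "c": ["d", "e"], "d": [], "e": []}
exec_time = {"a": 1, "b": 1, "c": 1, "d": 1, "e": 2}
delay = compute_delay(succs, exec_time)
print(delay)  # {'d': 1, 'e': 2, 'c': 3, 'a': 4, 'b': 4}
```

Nodes with the largest Delay lie on the critical path to the end of the block, which is why the scheduler favors them.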

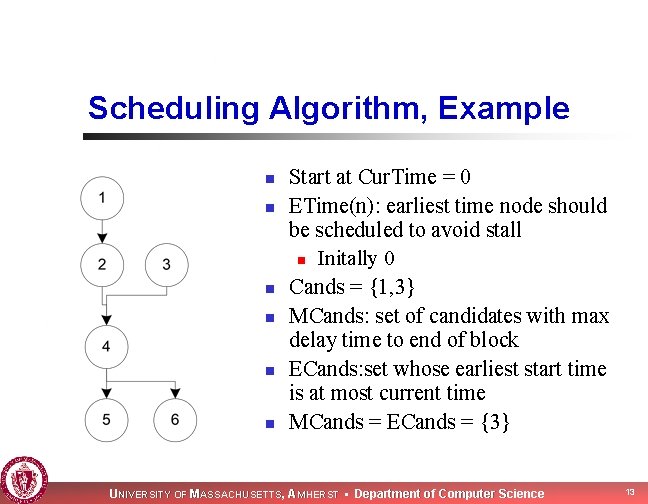

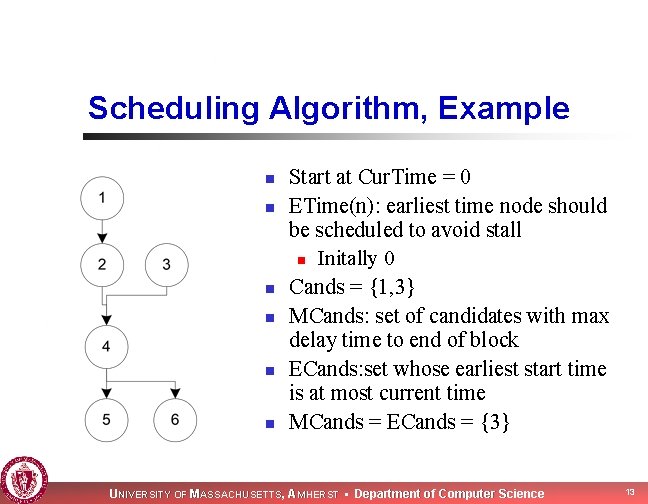

Scheduling Algorithm, Example
- Start at CurTime = 0
- ETime(n): earliest time node should be scheduled to avoid stall
  - Initially 0
- Cands = {1, 3}
- MCands: set of candidates with max delay time to end of block
- ECands: set whose earliest start time is at most current time
- MCands = ECands = {3}
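The two candidate subsets follow directly from their definitions. The sketch below computes them for one scheduling step; the function name and the sample values are hypothetical and are not taken from the slide's dag. How a final instruction is chosen from the two sets is left out, since that depends on the heuristics.

```python
from typing import Dict, Set, Tuple


def select_candidates(cands: Set[str],
                      delay: Dict[str, int],
                      etime: Dict[str, int],
                      cur_time: int) -> Tuple[Set[str], Set[str]]:
    """Return (MCands, ECands) for the current candidate set."""
    max_delay = max(delay[n] for n in cands)
    mcands = {n for n in cands if delay[n] == max_delay}   # longest delay to end of block
    ecands = {n for n in cands if etime[n] <= cur_time}    # can issue now without stalling
    return mcands, ecands


# Hypothetical step: x is on the critical path but would stall; y is ready now.
mcands, ecands = select_candidates(
    cands={"x", "y"},
    delay={"x": 4, "y": 3},
    etime={"x": 2, "y": 0},
    cur_time=0,
)
print(mcands, ecands)  # {'x'} {'y'}
```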

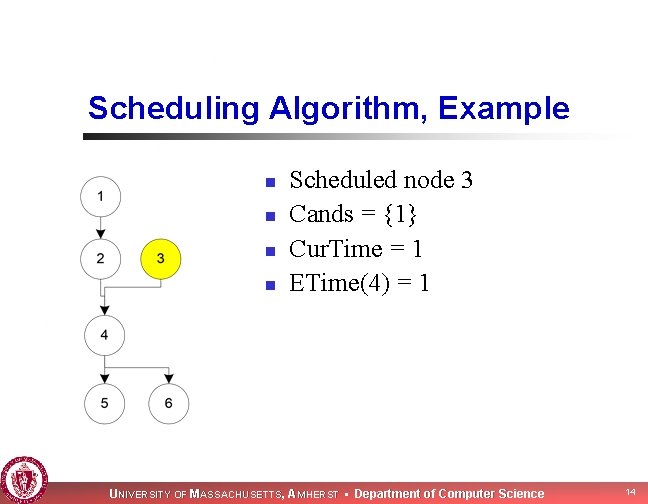

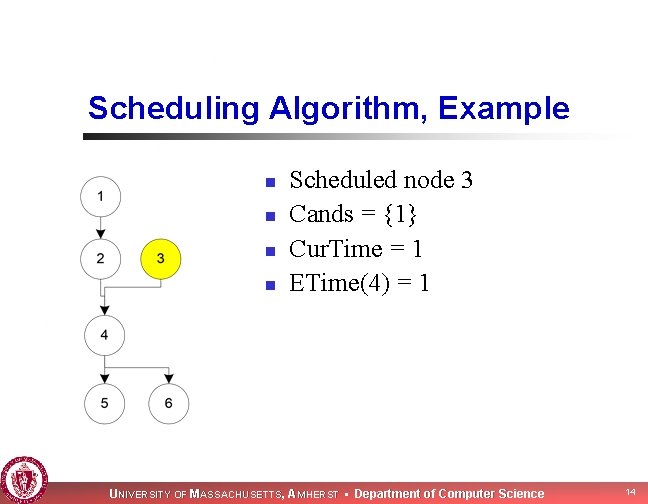

Scheduling Algorithm, Example
- Scheduled node 3
- Cands = {1}
- CurTime = 1
- ETime(4) = 1

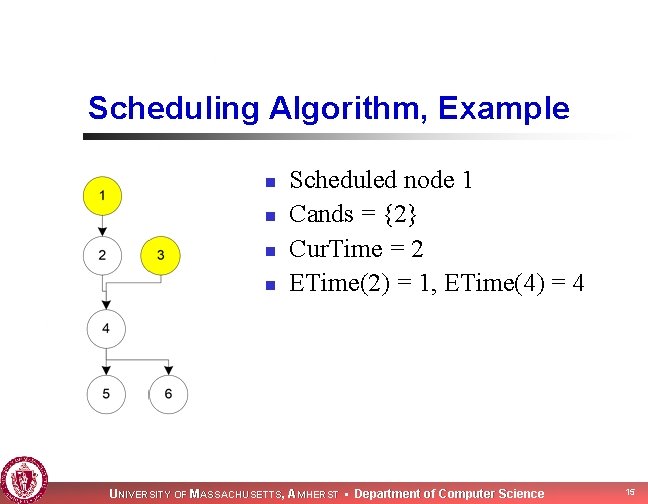

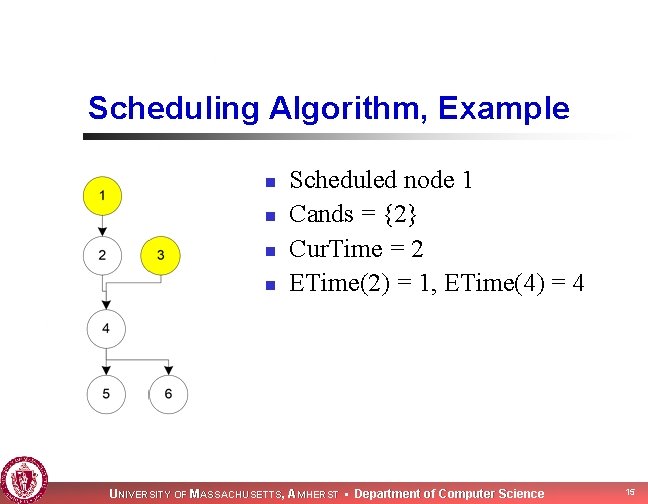

Scheduling Algorithm, Example
- Scheduled node 1
- Cands = {2}
- CurTime = 2
- ETime(2) = 1, ETime(4) = 4

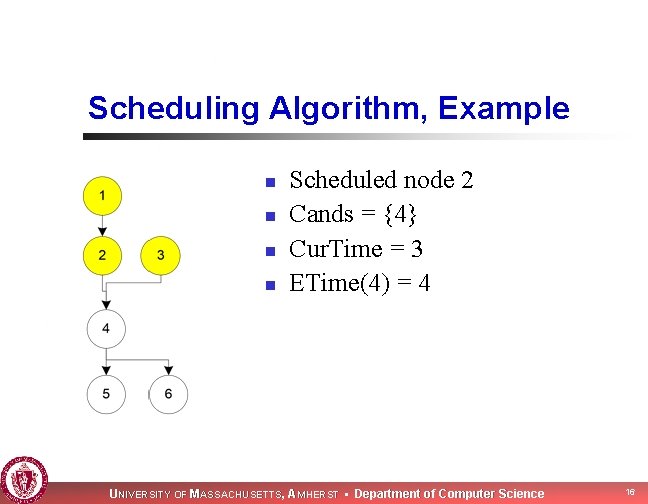

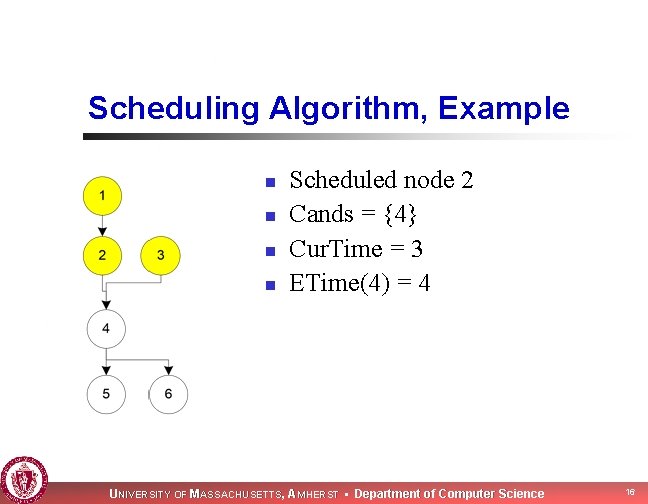

Scheduling Algorithm, Example
- Scheduled node 2
- Cands = {4}
- CurTime = 3
- ETime(4) = 4

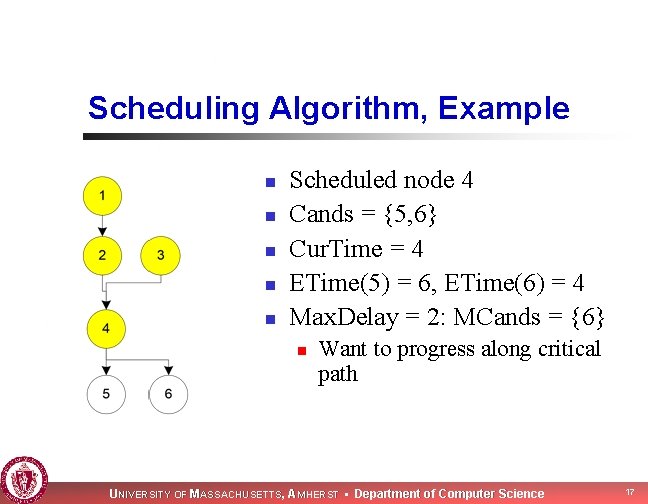

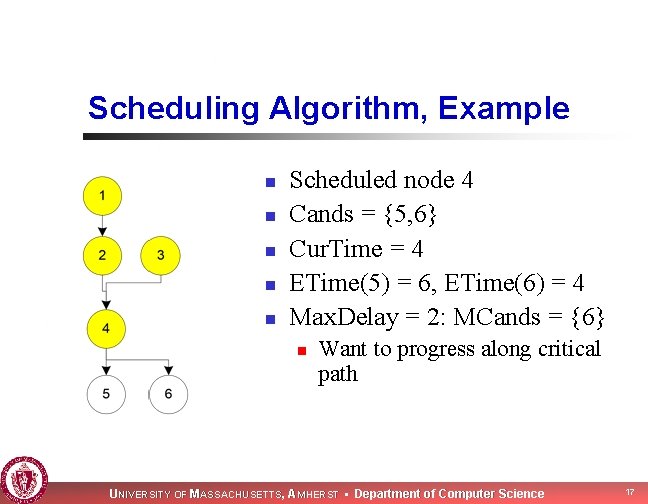

Scheduling Algorithm, Example
- Scheduled node 4
- Cands = {5, 6}
- CurTime = 4
- ETime(5) = 6, ETime(6) = 4
- MaxDelay = 2: MCands = {6}
  - Want to progress along critical path

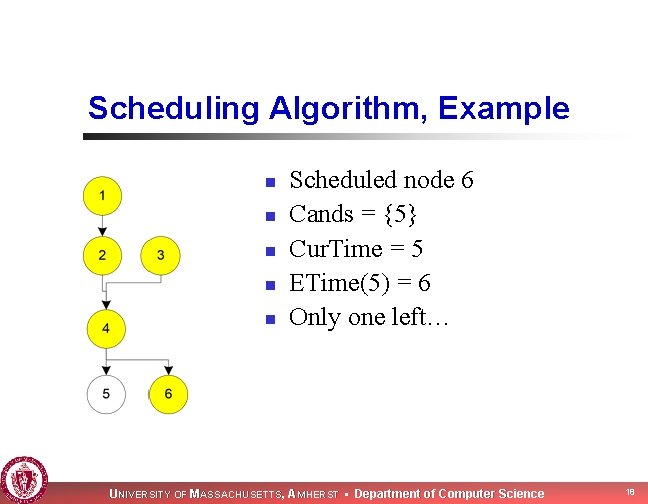

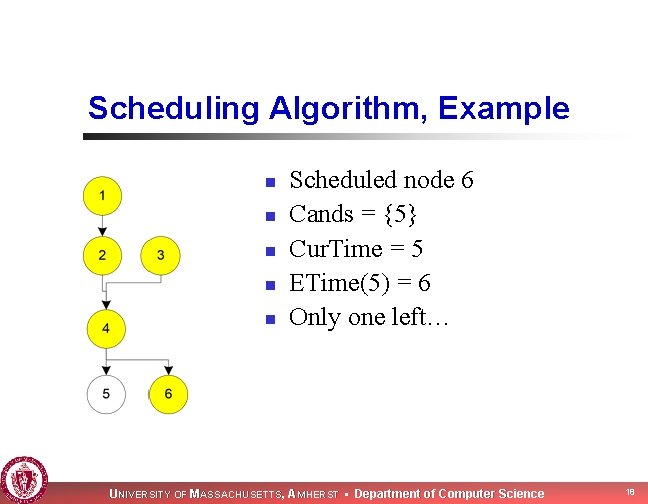

Scheduling Algorithm, Example
- Scheduled node 6
- Cands = {5}
- CurTime = 5
- ETime(5) = 6
- Only one left…

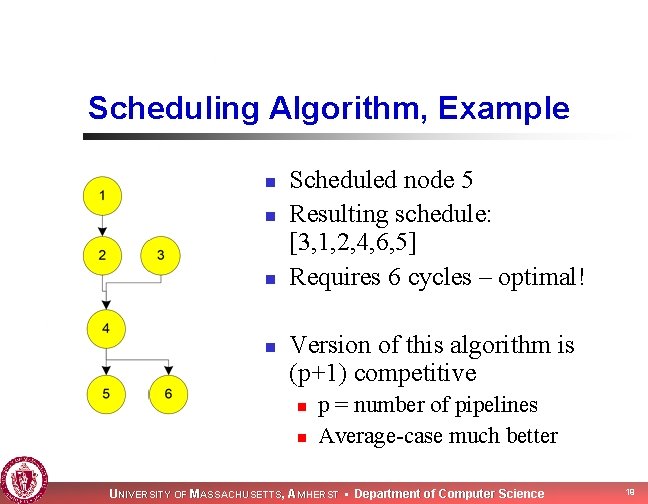

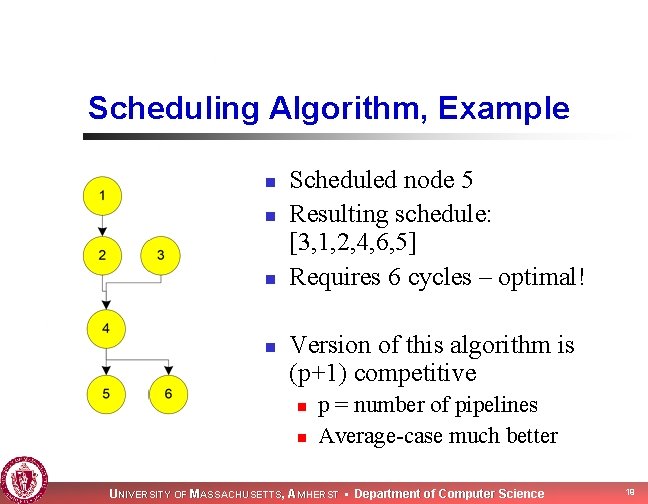

Scheduling Algorithm, Example
- Scheduled node 5
- Resulting schedule: [3, 1, 2, 4, 6, 5]
- Requires 6 cycles: optimal!
- Version of this algorithm is (p+1)-competitive
  - p = number of pipelines
  - Average-case much better

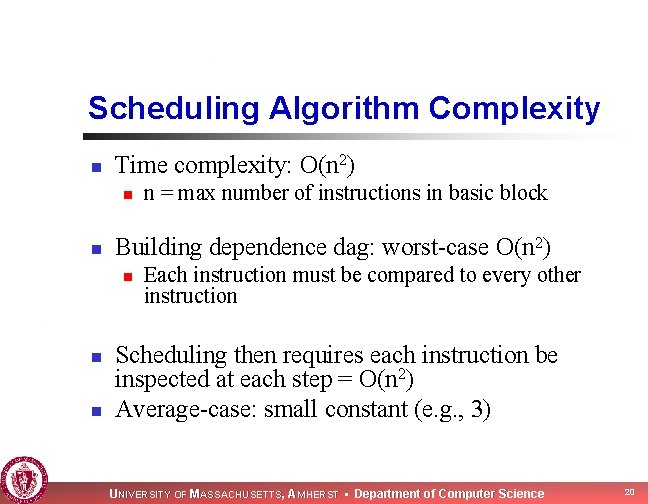

Scheduling Algorithm Complexity
- Time complexity: $O(n^2)$
  - n = max number of instructions in basic block
- Building dependence dag: worst-case $O(n^2)$
  - Each instruction must be compared to every other instruction
- Scheduling then requires each instruction be inspected at each step = $O(n^2)$
- Average-case: small constant (e.g., 3)

Empirical Results
- Scheduling: always a win (1-13% on PA-RISC)
- Results same as Hennessy & Gross for most benchmarks
- However: removes only 5/16 stalls in sieve, at most 10/16 with better alias information

Next Time
- We've assumed no cache misses!
- Next time: balanced scheduling
  - Read Kerns & Eggers