Advanced Artificial Intelligence, Lecture 4: Neural Networks

- Slides: 60


Outline

- Perceptron Introduction
- Deep Neural Network Structure
- Backpropagation

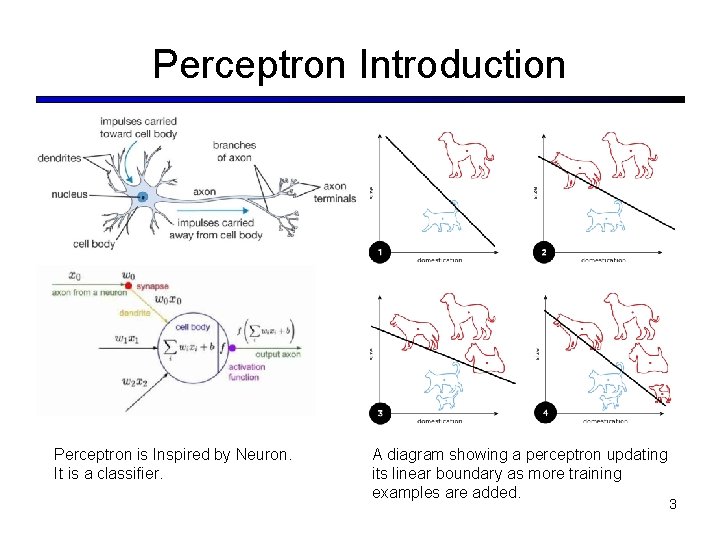

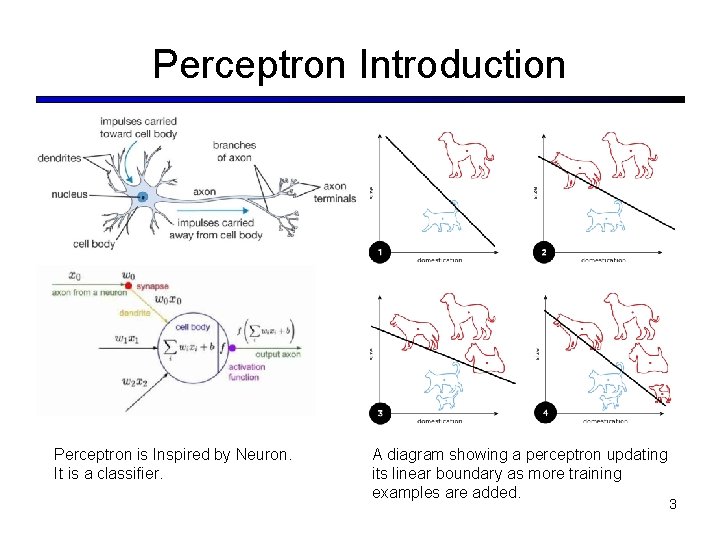

Perceptron Introduction

The perceptron is inspired by the biological neuron. It is a classifier. The figure shows a perceptron updating its linear decision boundary as more training examples are added.
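The boundary updates in the figure come from the perceptron learning rule. Below is a minimal C sketch, assuming a step activation and the classic update w ← w + η(t − y)x. The function names and the AND training set are illustrative choices, not taken from the slides.

```c
/* Step activation: fire (1) when the weighted sum is positive. */
static int step(double z) { return z > 0.0 ? 1 : 0; }

/* Perceptron output for a 2-input example. */
static int perceptron_predict(const double w[2], double b, const double x[2])
{
    return step(w[0] * x[0] + w[1] * x[1] + b);
}

/* Perceptron learning rule: w += eta * (t - y) * x, b += eta * (t - y).
   Returns the epoch at which every example is classified correctly,
   or -1 if max_epochs is reached first. */
static int perceptron_train(double w[2], double *b, const double X[][2],
                            const int t[], int n, double eta, int max_epochs)
{
    for (int epoch = 1; epoch <= max_epochs; epoch++) {
        int errors = 0;
        for (int i = 0; i < n; i++) {
            int err = t[i] - perceptron_predict(w, *b, X[i]);
            if (err != 0) {               /* move the boundary toward x */
                w[0] += eta * err * X[i][0];
                w[1] += eta * err * X[i][1];
                *b   += eta * err;
                errors++;
            }
        }
        if (errors == 0)
            return epoch;
    }
    return -1;
}
```

If you start from zero weights with η = 1, training on the four AND examples converges after a few epochs. This follows from the perceptron convergence theorem, because AND is linearly separable.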

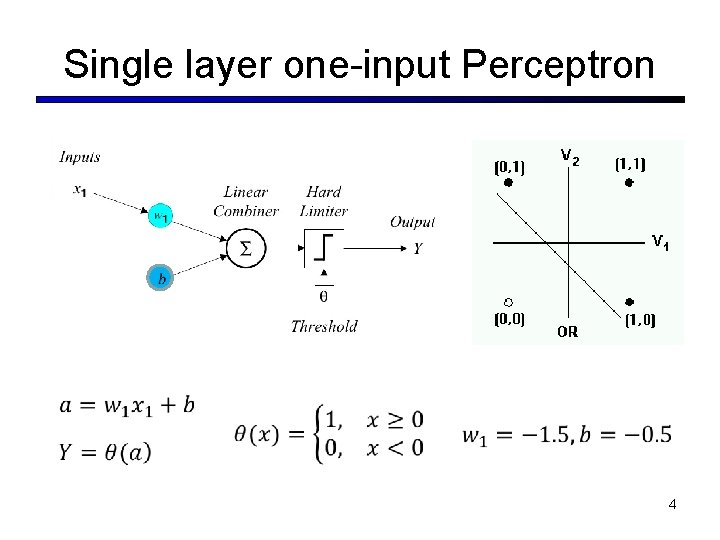

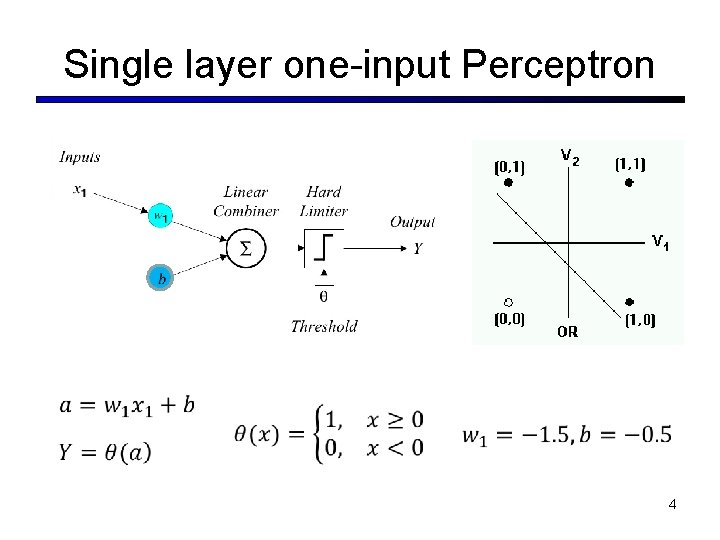

Single-layer, one-input perceptron

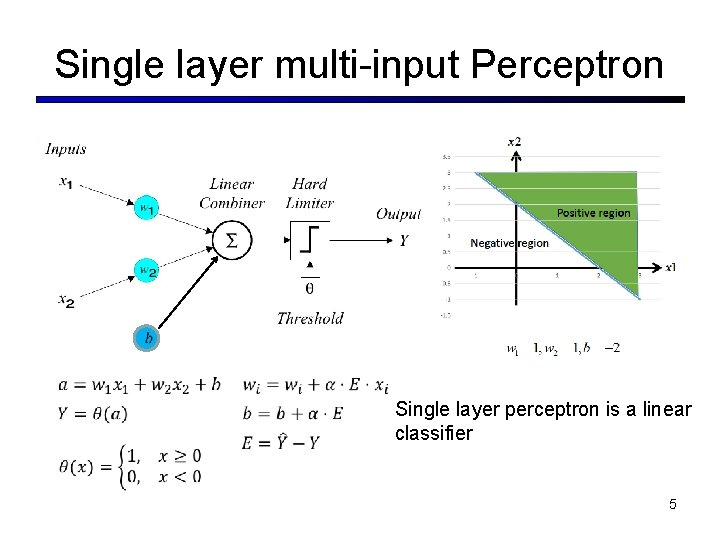

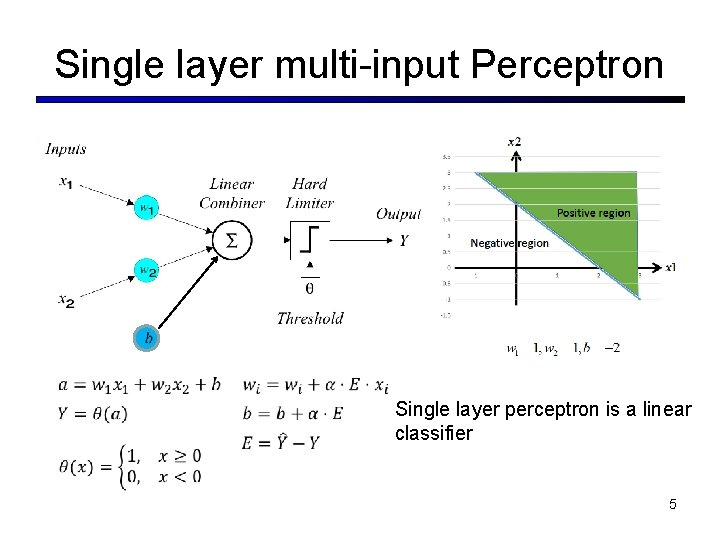

Single-layer, multi-input perceptron

A single-layer perceptron is a linear classifier.
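One way to see the limitation is an exhaustive search over a small integer grid of weights. This grid is an illustrative assumption and is not a proof. A 2-input perceptron can reproduce AND, but no weights at all can reproduce XOR, because XOR is not linearly separable.

```c
/* Count integer triples (w1, w2, b) in [-range, range] for which the
   single-layer perceptron y = step(w1*x1 + w2*x2 + b) reproduces all four
   targets t[] on the inputs (0,0), (0,1), (1,0), (1,1). */
static int count_linear_solutions(const int t[4], int range)
{
    static const int X[4][2] = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
    int count = 0;
    for (int w1 = -range; w1 <= range; w1++)
        for (int w2 = -range; w2 <= range; w2++)
            for (int b = -range; b <= range; b++) {
                int ok = 1;
                for (int i = 0; i < 4 && ok; i++) {
                    int z = w1 * X[i][0] + w2 * X[i][1] + b;
                    ok = ((z > 0) ? 1 : 0) == t[i];   /* step activation */
                }
                count += ok;
            }
    return count;
}
```

For AND, the triple (1, 1, −1) is one solution. For XOR, the count is zero for any range.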

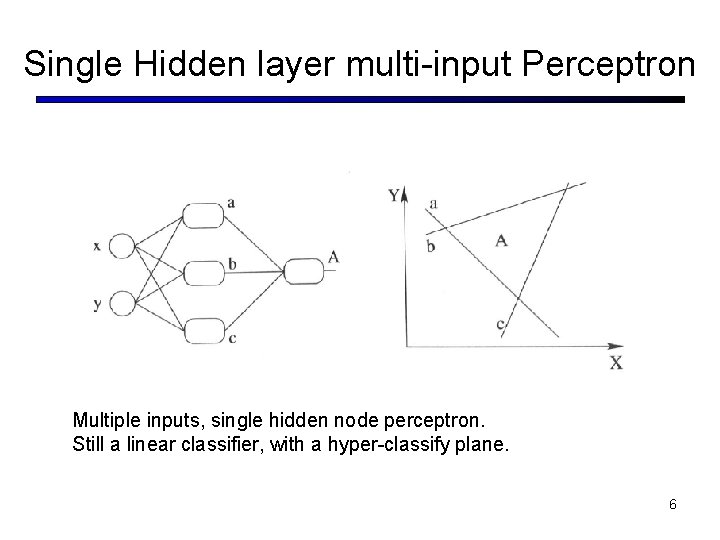

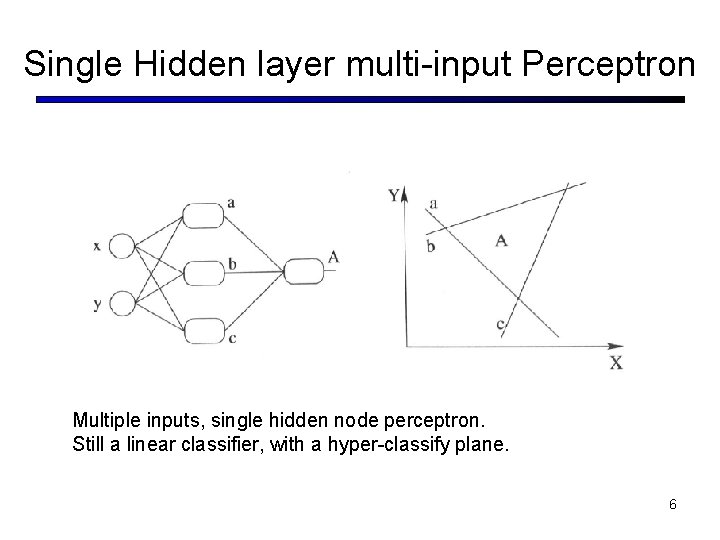

Single hidden layer, multi-input perceptron

With multiple inputs and a single hidden node, the perceptron is still a linear classifier: its decision boundary is a hyperplane.
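In the case where the hidden node uses an identity (linear) activation, the two stages collapse algebraically into one linear unit. The sketch below shows this. All parameter names are hypothetical.

```c
/* One hidden node with identity activation feeding one output:
     h = v1*x1 + v2*x2 + c,   y = u*h + d.
   Substituting h gives y = (u*v1)*x1 + (u*v2)*x2 + (u*c + d): a single linear
   unit, so the boundary y = 0 is still a hyperplane (a line in 2-D). */
typedef struct { double w1, w2, b; } Linear2;

static double two_stage(double v1, double v2, double c, double u, double d,
                        double x1, double x2)
{
    double h = v1 * x1 + v2 * x2 + c;   /* hidden node, linear activation */
    return u * h + d;                   /* output node */
}

/* Equivalent single-layer weights. */
static Linear2 collapse(double v1, double v2, double c, double u, double d)
{
    Linear2 L = { u * v1, u * v2, u * c + d };
    return L;
}

static double one_stage(Linear2 L, double x1, double x2)
{
    return L.w1 * x1 + L.w2 * x2 + L.b;
}
```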

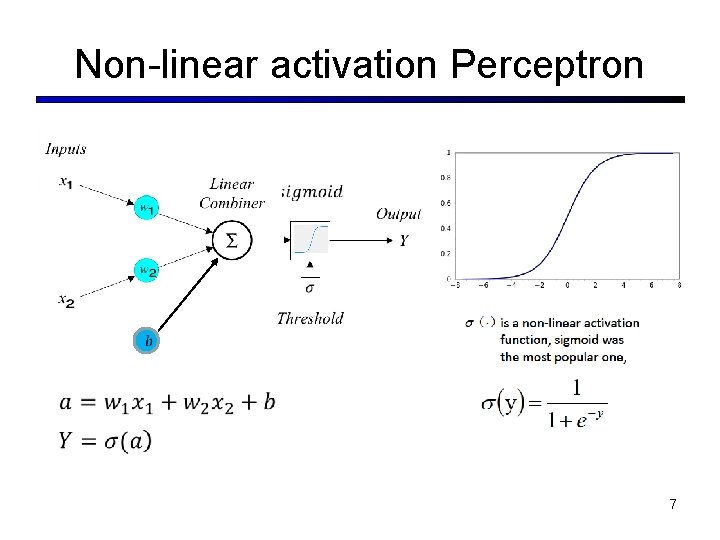

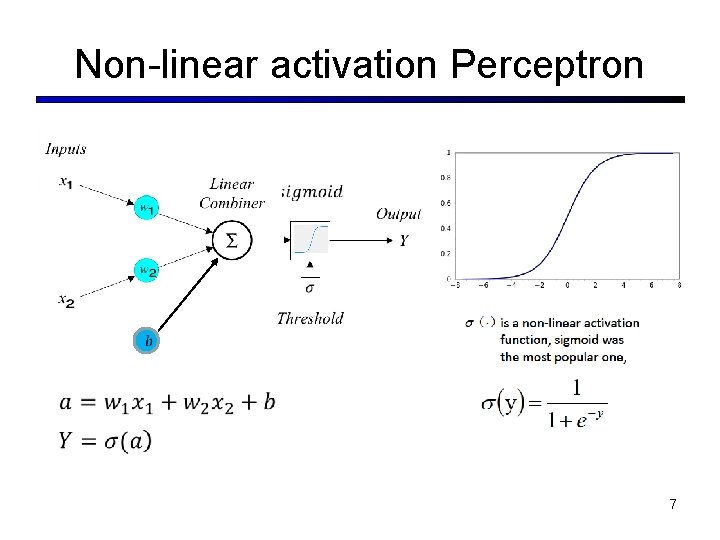

Perceptron with non-linear activation

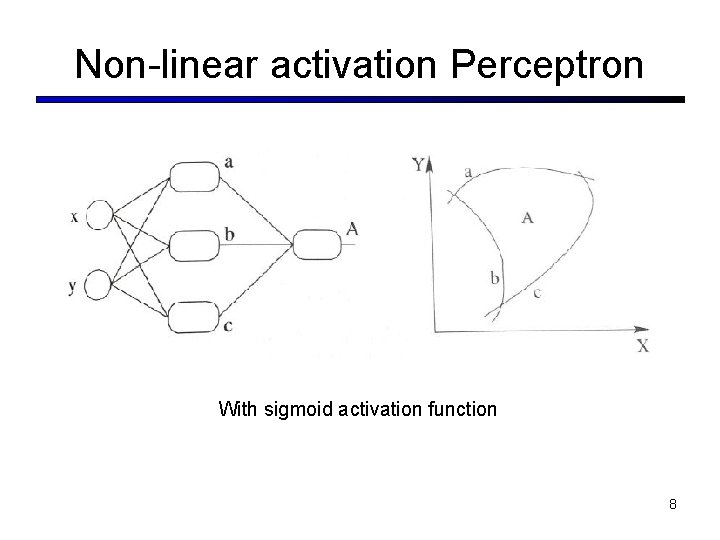

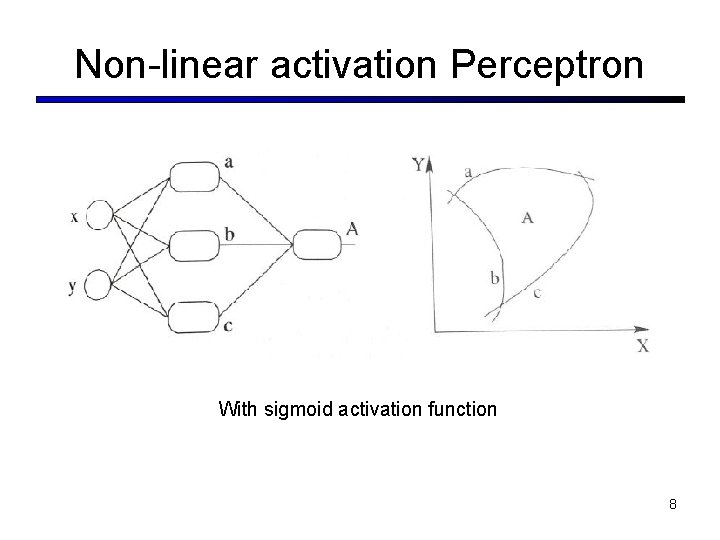

Perceptron with non-linear activation

Here, the activation function is the sigmoid.


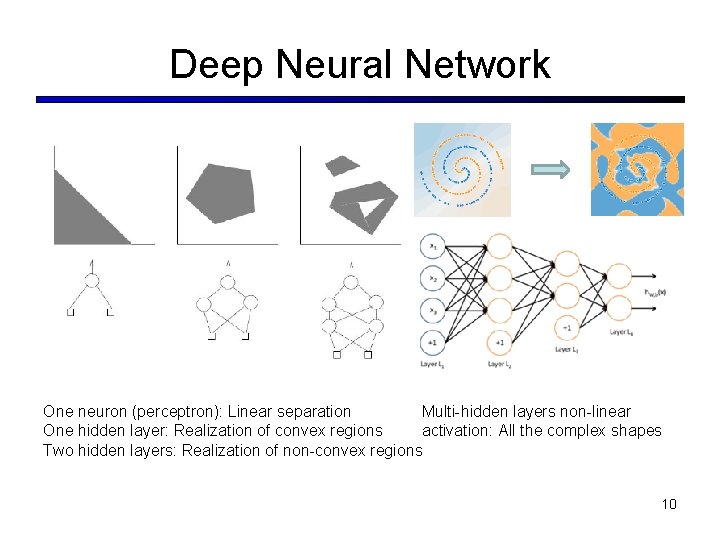

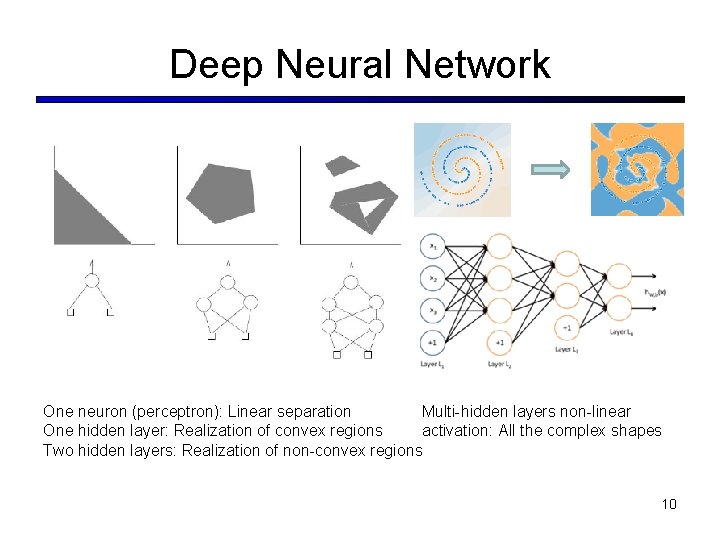

Deep Neural Network

- One neuron (perceptron): linear separation
- One hidden layer: realization of convex regions
- Two hidden layers: realization of non-convex regions
- Multiple hidden layers with non-linear activation: arbitrarily complex shapes
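To illustrate the "one hidden layer: convex regions" case, consider XOR, which a single perceptron cannot represent. A network with one hidden layer of two threshold units solves it. The weights below are hand-picked for illustration. The positive region is the strip 0.5 < x1 + x2 < 1.5, which is the intersection of two half-planes and is therefore convex.

```c
/* Threshold unit. */
static int step(double z) { return z > 0.0 ? 1 : 0; }

/* Hand-set 2-2-1 network (illustrative weights):
   h1 = step(x1 + x2 - 0.5)   -> OR
   h2 = step(x1 + x2 - 1.5)   -> AND
   y  = step(h1 - h2 - 0.5)   -> OR and not AND, i.e. XOR */
static int xor_net(int x1, int x2)
{
    int h1 = step(x1 + x2 - 0.5);
    int h2 = step(x1 + x2 - 1.5);
    return step(h1 - h2 - 0.5);
}
```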

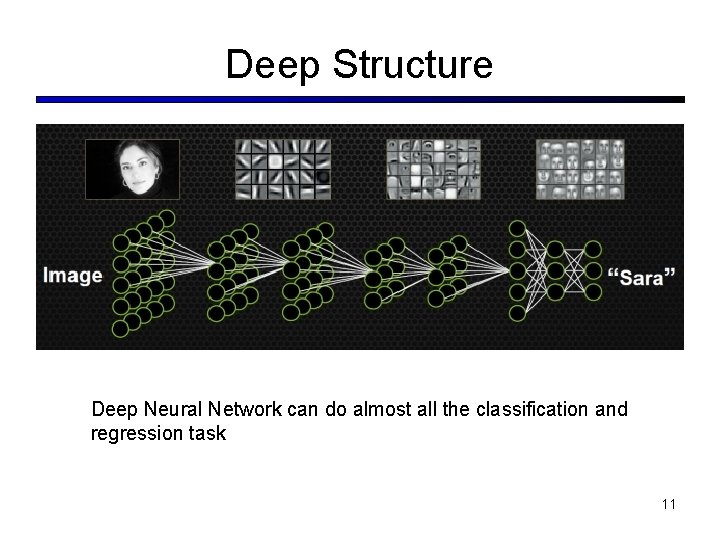

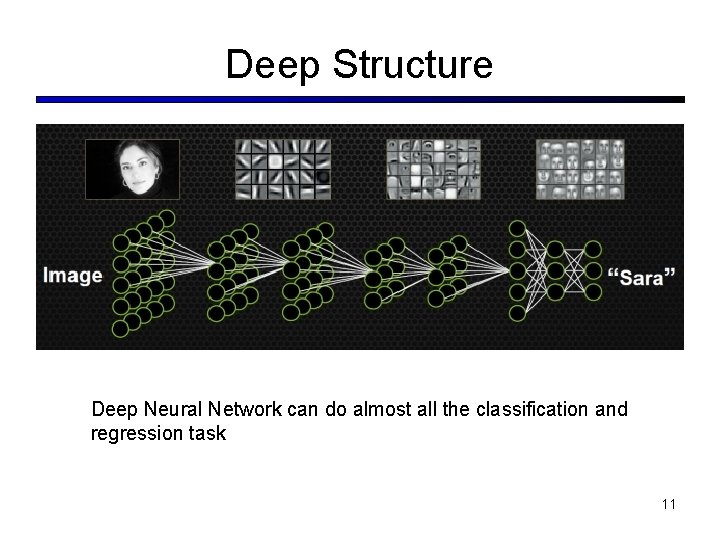

Deep Structure

Deep neural networks can be applied to almost any classification or regression task.

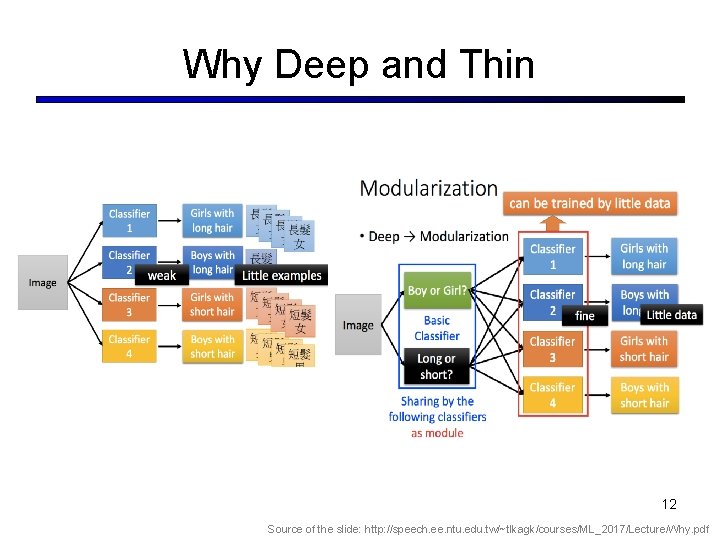

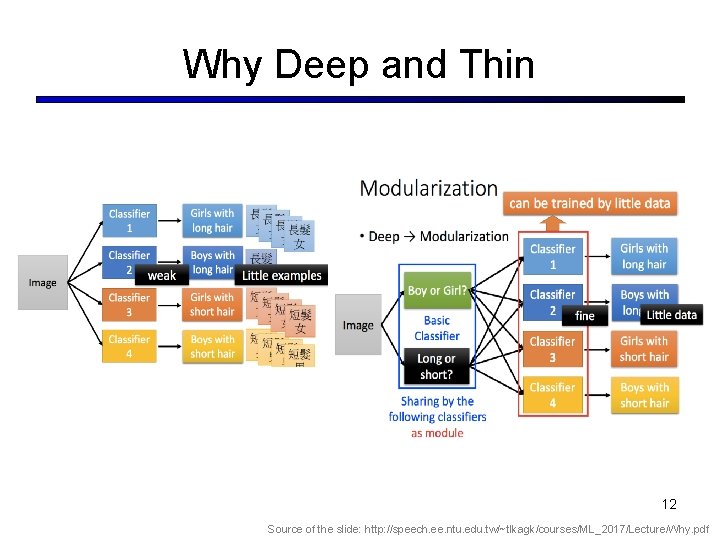

Why Deep and Thin

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/Why.pdf

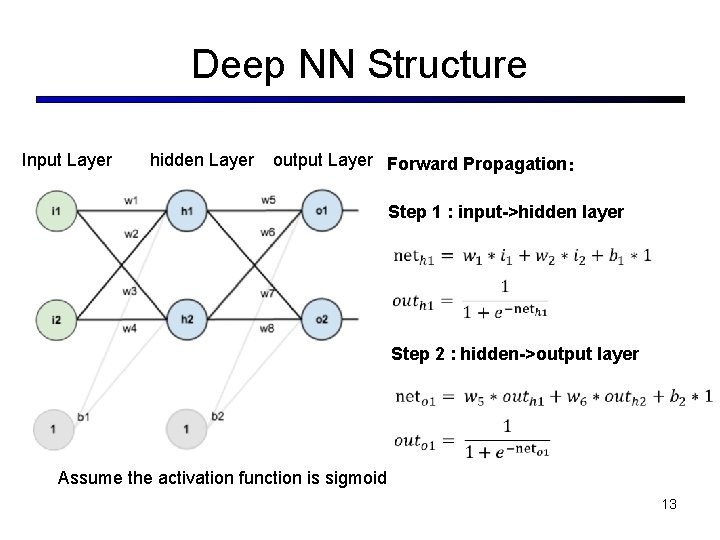

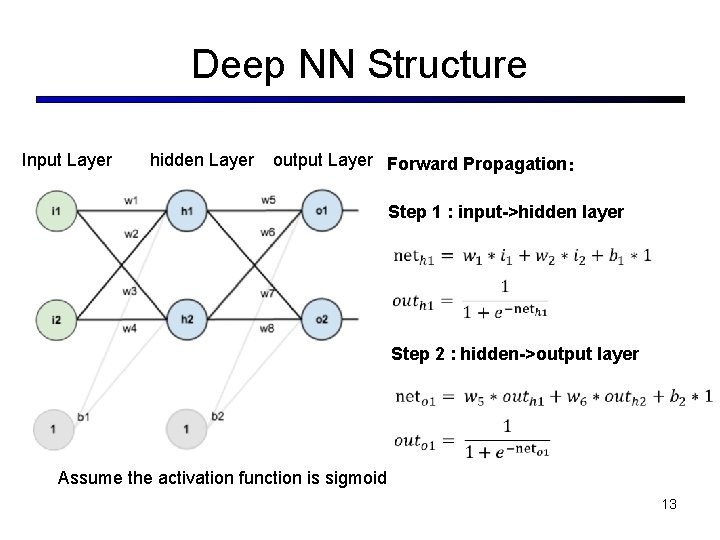

Deep NN Structure

A deep neural network consists of an input layer, hidden layers, and an output layer. Forward propagation, assuming the activation function is sigmoid:

- Step 1: input layer -> hidden layer
- Step 2: hidden layer -> output layer


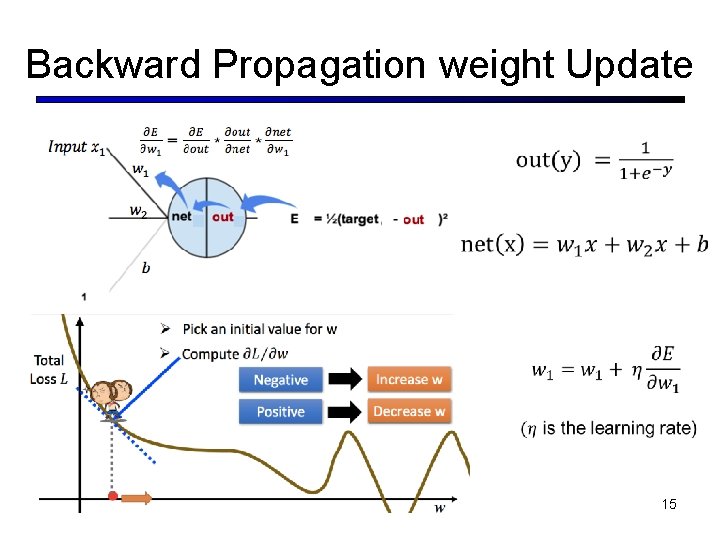

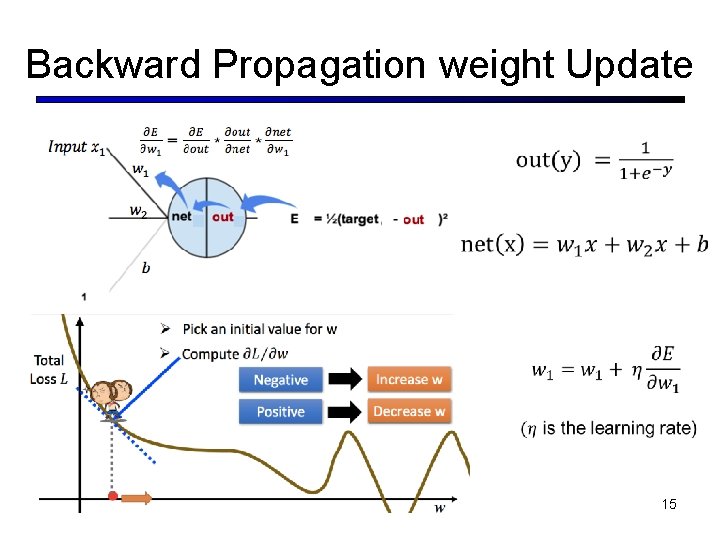

Backward Propagation: Weight Update

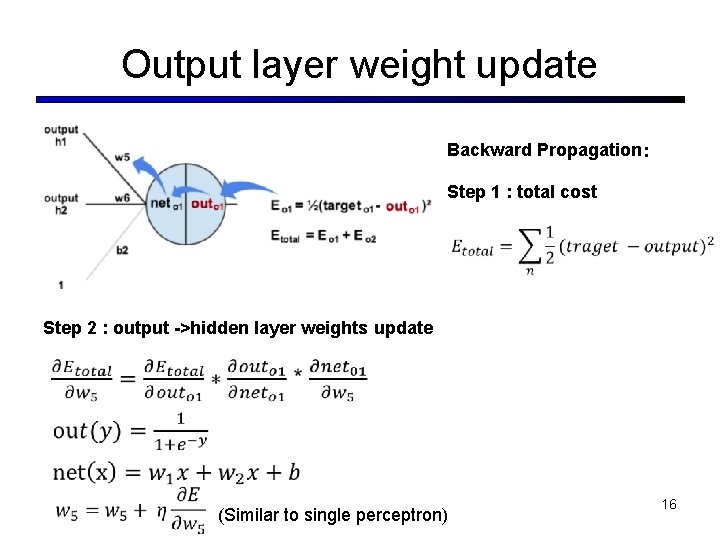

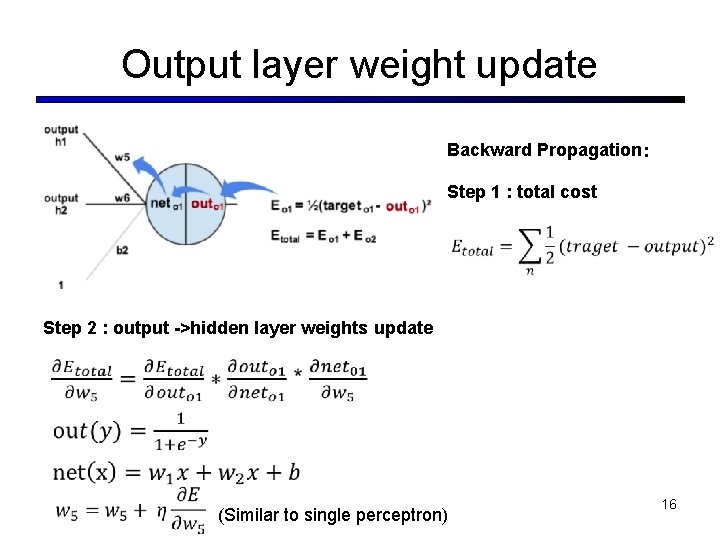

Output layer weight update

Backward propagation:

- Step 1: compute the total cost
- Step 2: update the output -> hidden layer weights (similar to a single perceptron)

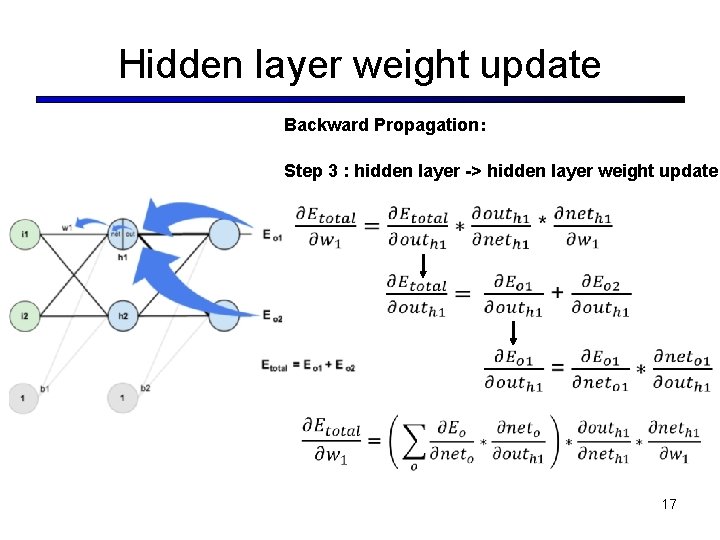

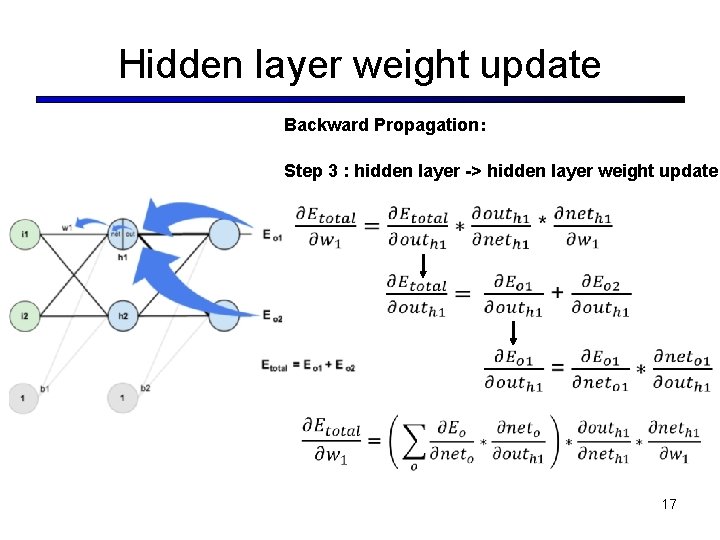

Hidden layer weight update

Backward propagation:

- Step 3: update the hidden layer -> hidden layer weights
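For a sigmoid network with squared-error cost, the usual form of Step 3 sends each output error back through the weights that connect it to the hidden node. This is the same rule that the later C implementation applies (Equations 8.6 and 8.7 in its comments). The notation is assumed here: $h_j$ is a hidden activation, $x_i$ an input to that layer, $w_{kj}$ the weight from hidden node $j$ to output node $k$, $t_k$ and $o_k$ the target and actual outputs, and $\eta$ the learning rate.

$$
\delta_k = (t_k - o_k)\, o_k (1 - o_k),
\qquad
\delta_j = h_j (1 - h_j) \sum_k \delta_k \, w_{kj},
\qquad
\Delta w_{ji} = \eta \, \delta_j \, x_i .
$$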

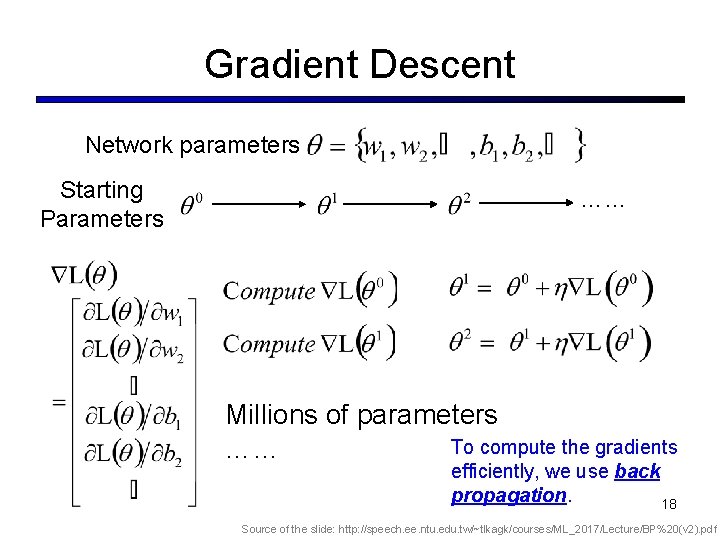

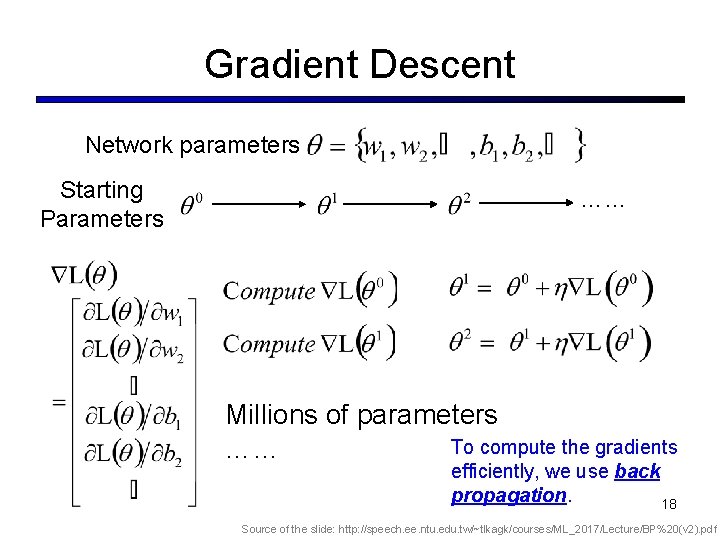

Gradient Descent

Starting from initial network parameters, gradient descent repeatedly moves every parameter against its gradient. A network can have millions of parameters, so we use backpropagation to compute the gradients efficiently.

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
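The update loop itself is simple. Below is a one-parameter C sketch on a hypothetical loss L(w) = (w − 3)², whose gradient is 2(w − 3). In a real network, w is a vector of parameters, and backpropagation supplies the gradient instead of a closed form.

```c
/* Hypothetical one-parameter loss L(w) = (w - 3)^2 and its gradient. */
static double loss_grad(double w) { return 2.0 * (w - 3.0); }

/* Plain gradient descent from the starting parameter w0:
   w <- w - eta * dL/dw, repeated `steps` times. */
static double gradient_descent(double w0, double eta, int steps)
{
    double w = w0;
    for (int k = 0; k < steps; k++)
        w -= eta * loss_grad(w);
    return w;
}
```

With η = 0.1, each step multiplies the distance to the minimum by 0.8. Starting from w = 0, 100 steps therefore land within about 1e-9 of w = 3.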

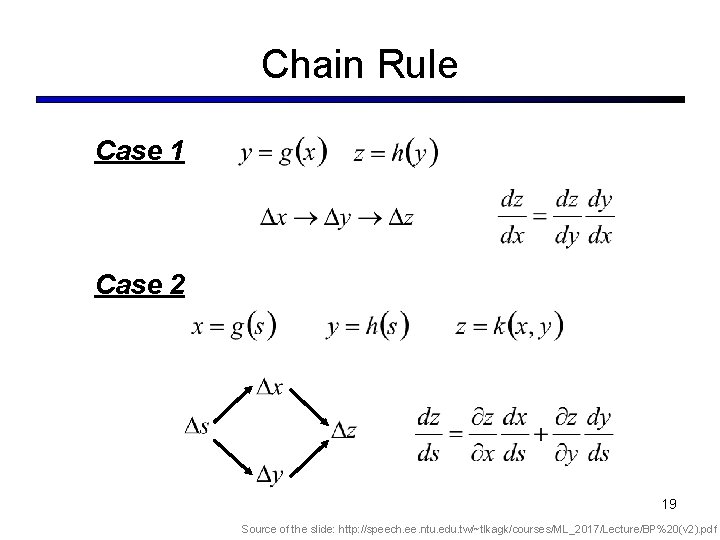

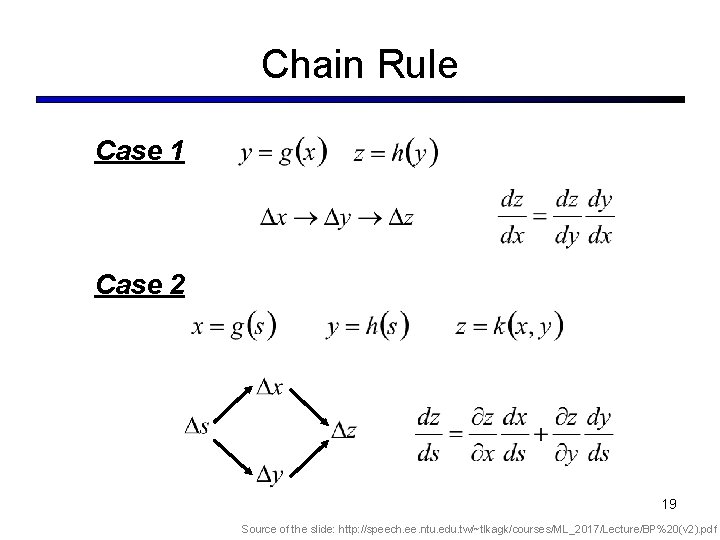

Chain Rule: Case 1 and Case 2

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
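The two cases are the single-variable and the multivariable chain rule. Written out, with generic functions $g$, $h$, $k$:

$$
\text{Case 1: } y = g(x),\; z = h(y) \;\Rightarrow\; \frac{dz}{dx} = \frac{dz}{dy}\,\frac{dy}{dx}
$$

$$
\text{Case 2: } x = g(s),\; y = h(s),\; z = k(x, y) \;\Rightarrow\; \frac{dz}{ds} = \frac{\partial z}{\partial x}\,\frac{dx}{ds} + \frac{\partial z}{\partial y}\,\frac{dy}{ds}
$$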

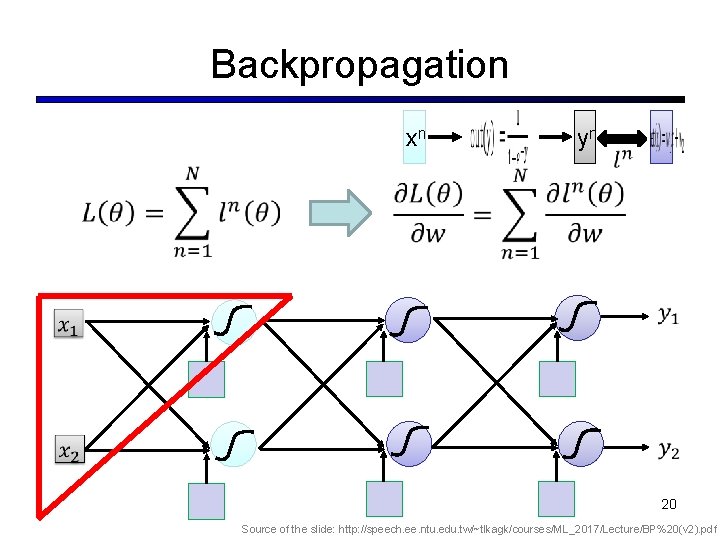

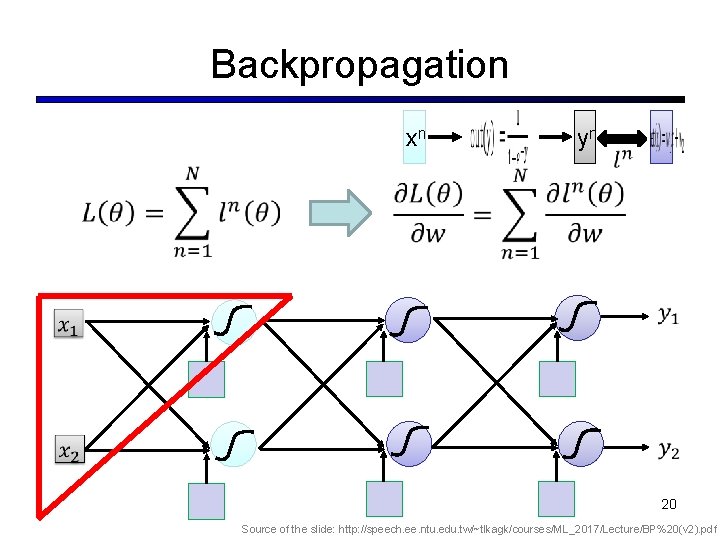

Backpropagation

(Figure: the network maps the n-th training input x^n to the output y^n.)

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
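Suppose there are $N$ training examples, $C^n$ is the cost between the network output $y^n$ for input $x^n$ and its target, and $\theta$ collects all parameters (this notation is assumed here). Then the total loss and its gradient are sums over examples, so it is enough to work out $\partial C / \partial w$ for a single example:

$$
L(\theta) = \sum_{n=1}^{N} C^n(\theta),
\qquad
\frac{\partial L(\theta)}{\partial w} = \sum_{n=1}^{N} \frac{\partial C^n(\theta)}{\partial w}
$$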

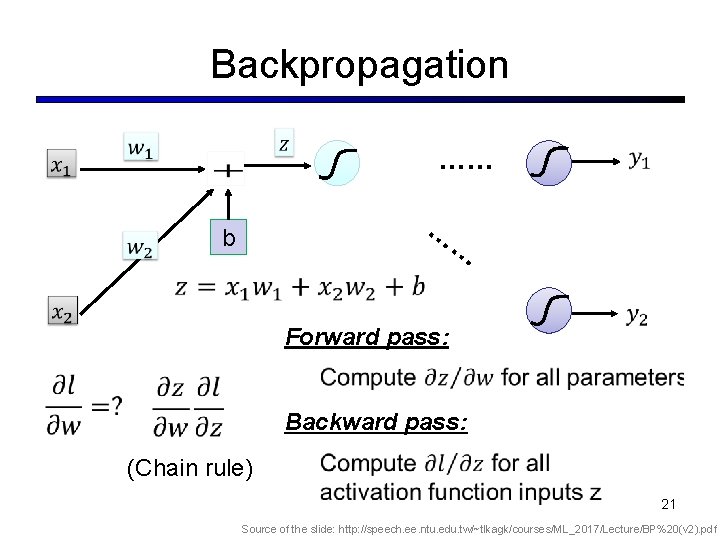

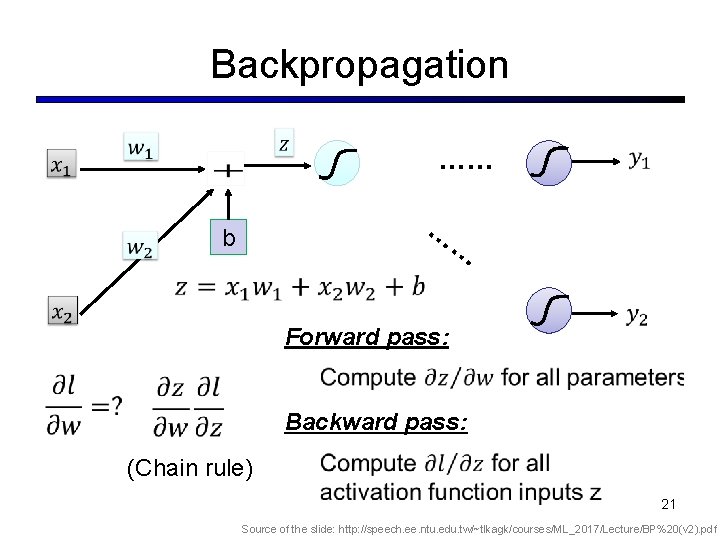

Backpropagation

The gradient is computed in two passes: a forward pass and a backward pass (chain rule).

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
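Take a neuron whose weighted input is $z = x_1 w_1 + x_2 w_2 + b$ (symbols assumed for illustration). Case 1 of the chain rule splits each weight's gradient into two factors. The forward pass computes $\partial z / \partial w$ for every weight, and the backward pass computes $\partial C / \partial z$ for every neuron:

$$
\frac{\partial C}{\partial w} = \frac{\partial z}{\partial w}\,\frac{\partial C}{\partial z}
$$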

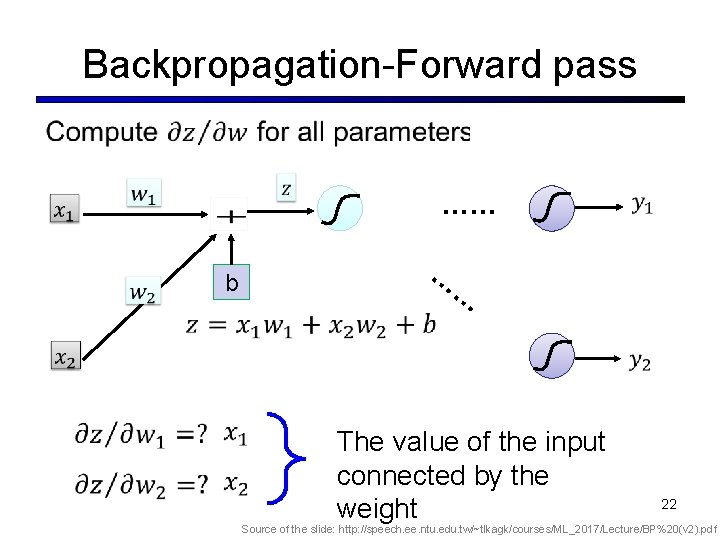

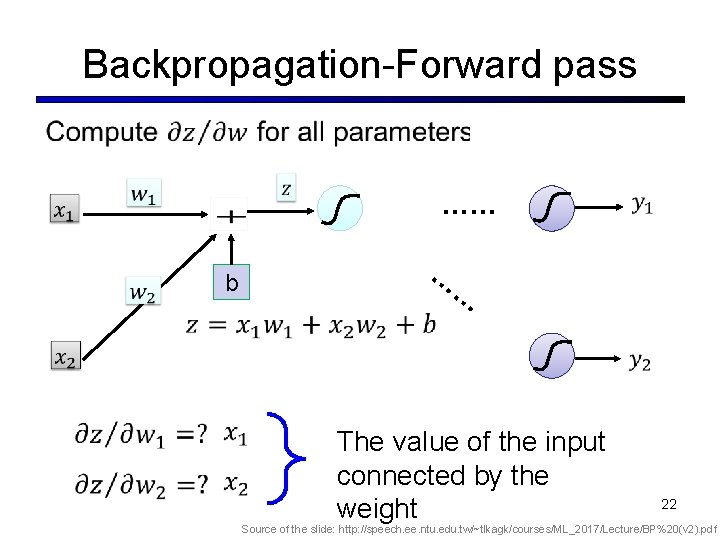

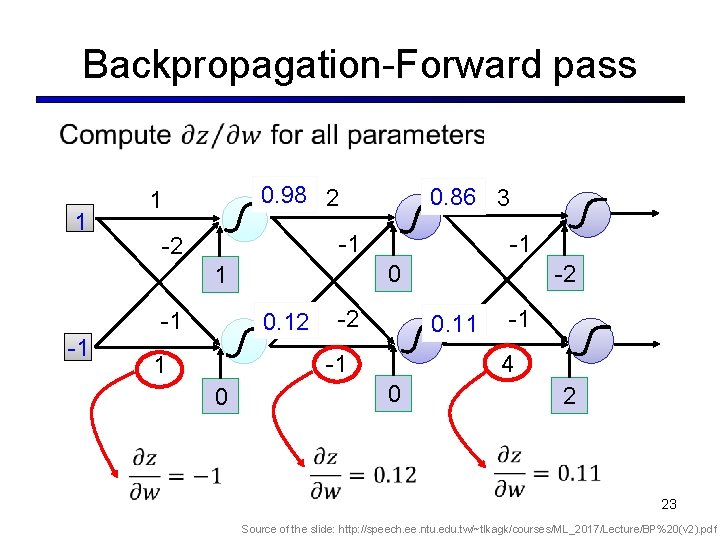

Backpropagation: Forward pass

The derivative of a neuron's weighted input z with respect to a weight is the value of the input connected by that weight.

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
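A quick numerical check of this claim: perturb one weight, measure the change in z, and the central finite difference recovers the connected input. The function names and values are illustrative.

```c
/* Weighted input of a neuron: z = x1*w1 + x2*w2 + b. */
static double weighted_input(const double x[2], const double w[2], double b)
{
    return x[0] * w[0] + x[1] * w[1] + b;
}

/* Central finite-difference estimate of dz/dw_i. Because z is linear in
   w_i, the estimate equals x[i], the input connected by weight w_i. */
static double dz_dw(const double x[2], const double w[2], double b, int i)
{
    const double eps = 1e-6;
    double wp[2] = { w[0], w[1] };
    double wm[2] = { w[0], w[1] };
    wp[i] += eps;
    wm[i] -= eps;
    return (weighted_input(x, wp, b) - weighted_input(x, wm, b)) / (2.0 * eps);
}
```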

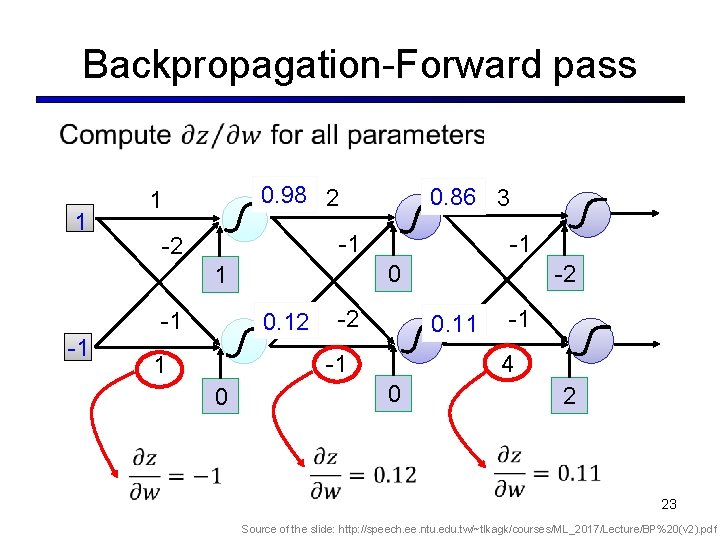

Backpropagation: Forward pass

(Figure: worked numerical example of the forward pass.)

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

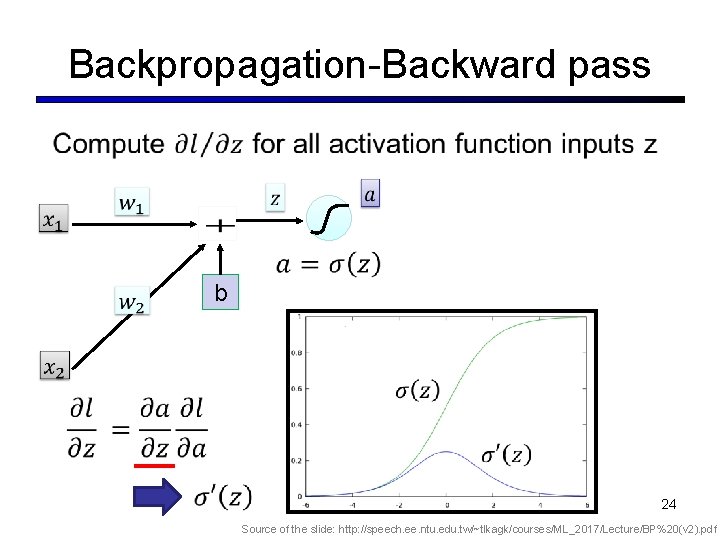

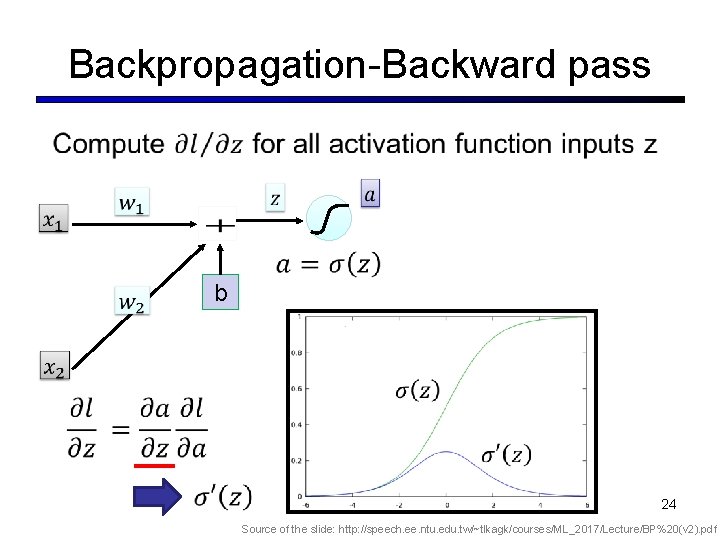

Backpropagation: Backward pass

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

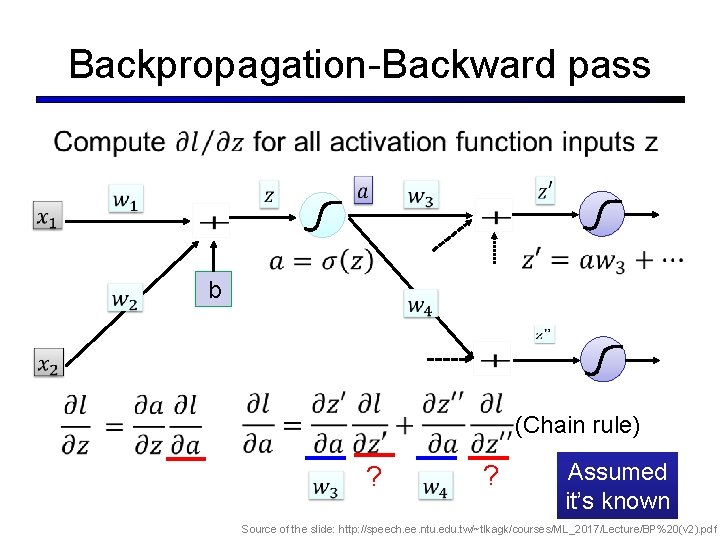

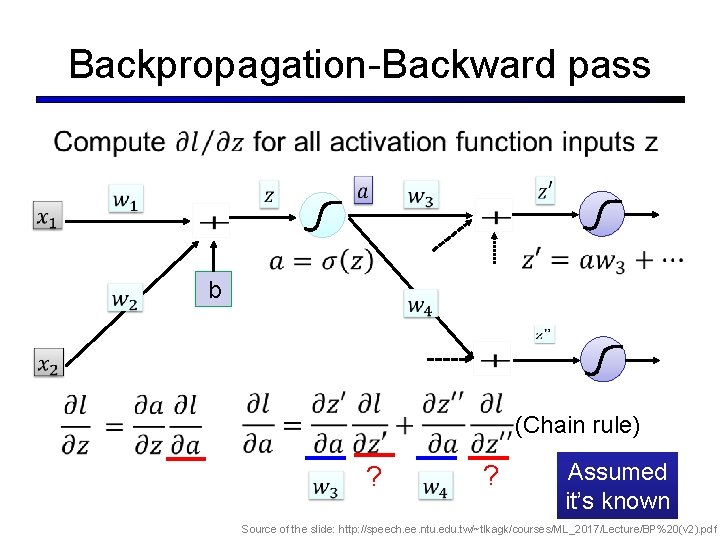

Backpropagation: Backward pass

Apply the chain rule. The two unknown terms (marked "?" in the figure) are assumed to be known for now.

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
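Spelled out, assume a neuron with weighted input $z$ and output $a = \sigma(z)$ that feeds two neurons in the next layer. Call their weighted inputs $z'$ and $z''$, reached through weights $w_3$ and $w_4$ (names assumed). Case 2 of the chain rule then gives the expression below. The two terms $\partial C / \partial z'$ and $\partial C / \partial z''$ are the ones assumed known:

$$
\frac{\partial C}{\partial z}
= \frac{\partial a}{\partial z}\,\frac{\partial C}{\partial a}
= \sigma'(z)\left[\, w_3 \frac{\partial C}{\partial z'} + w_4 \frac{\partial C}{\partial z''} \,\right],
\qquad
\frac{\partial z'}{\partial a} = w_3,\;\; \frac{\partial z''}{\partial a} = w_4 .
$$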

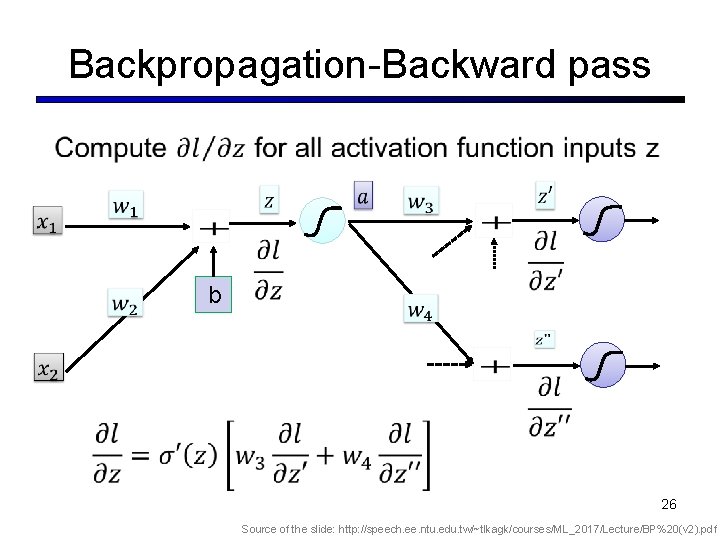

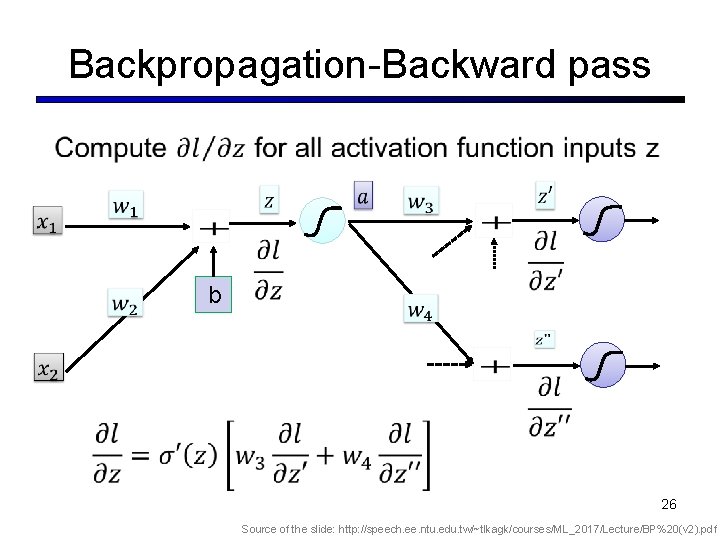

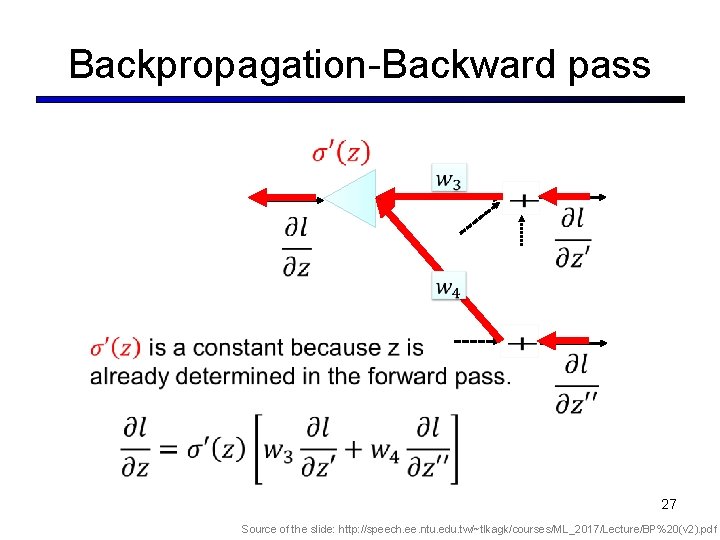

Backpropagation: Backward pass

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

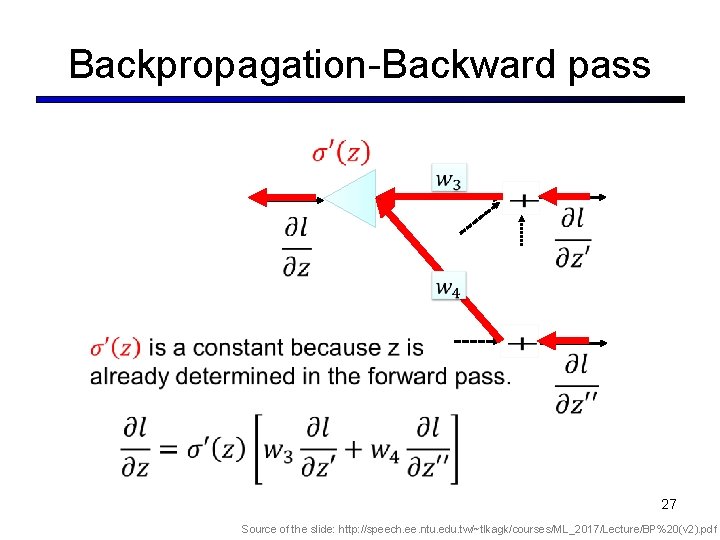

Backpropagation: Backward pass

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

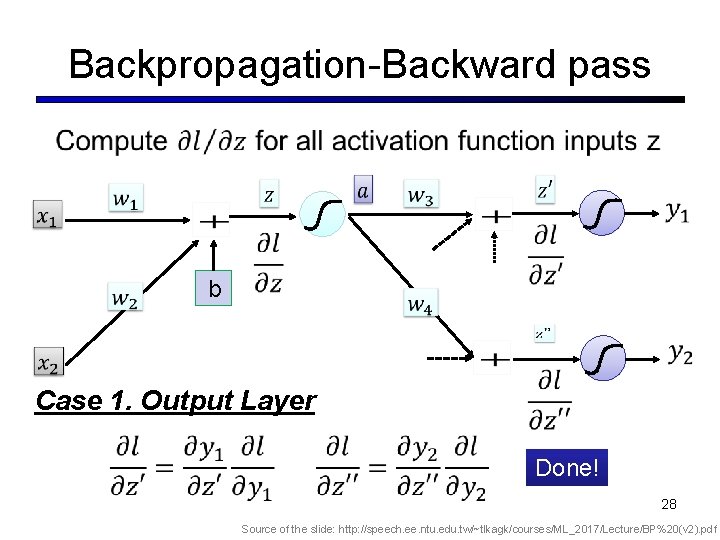

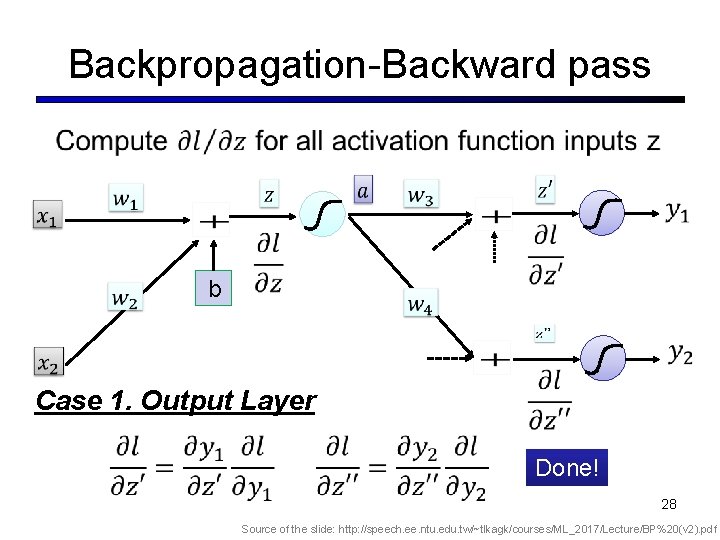

Backpropagation: Backward pass

Case 1. Output layer: the remaining derivatives can be computed directly from the network output and the cost. Done!

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf
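As an illustration, suppose $z'$ and $z''$ are the weighted inputs of two output neurons with outputs $y_1 = \sigma(z')$ and $y_2 = \sigma(z'')$ (notation assumed). Both factors on the right are then directly computable. $\partial y / \partial z$ is the sigmoid derivative, and $\partial C / \partial y$ follows from the chosen cost:

$$
\frac{\partial C}{\partial z'} = \frac{\partial y_1}{\partial z'}\,\frac{\partial C}{\partial y_1},
\qquad
\frac{\partial C}{\partial z''} = \frac{\partial y_2}{\partial z''}\,\frac{\partial C}{\partial y_2}
$$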

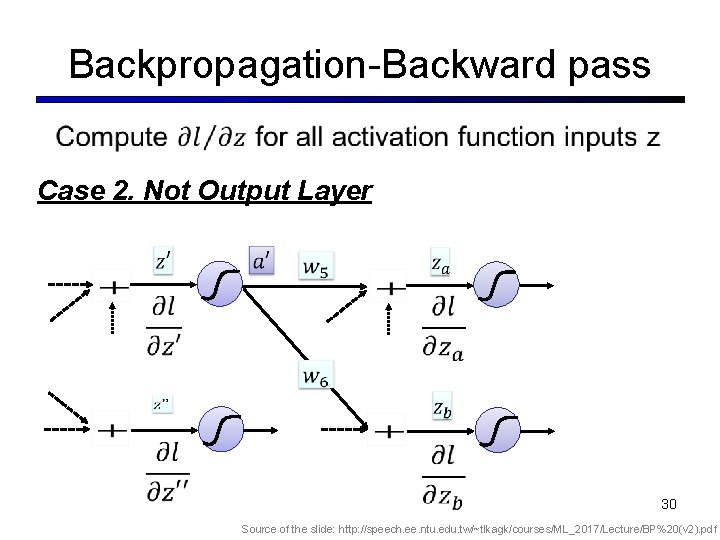

Backpropagation: Backward pass

Case 2. Not the output layer

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

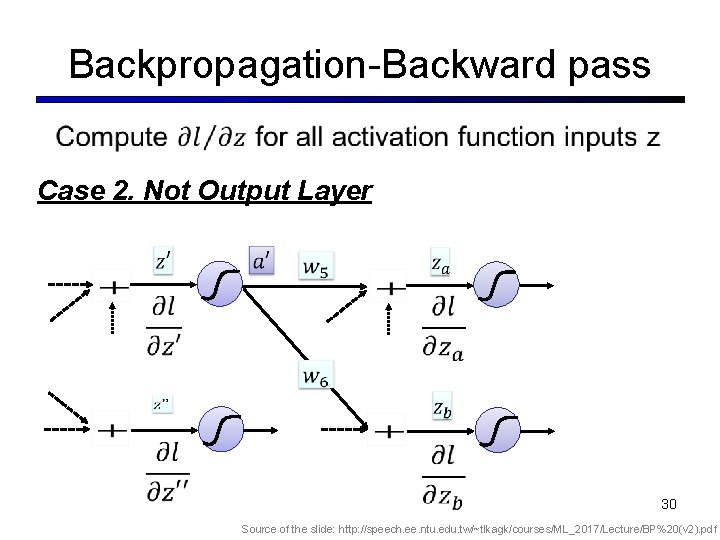

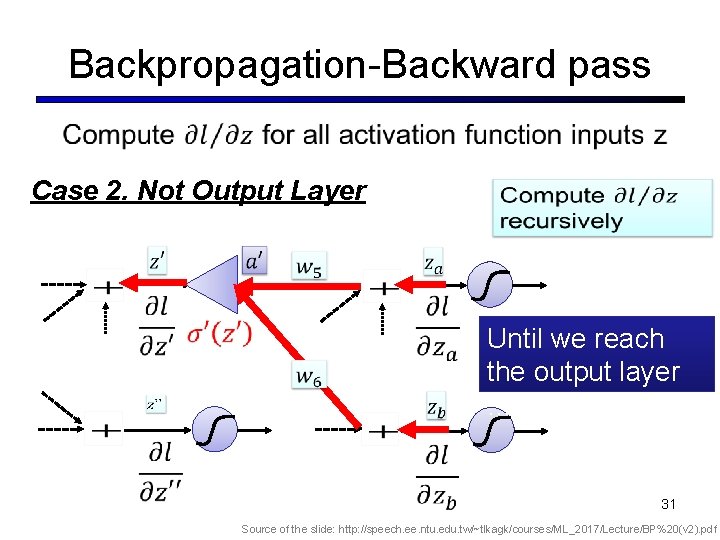

Backpropagation: Backward pass

Case 2. Not the output layer

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

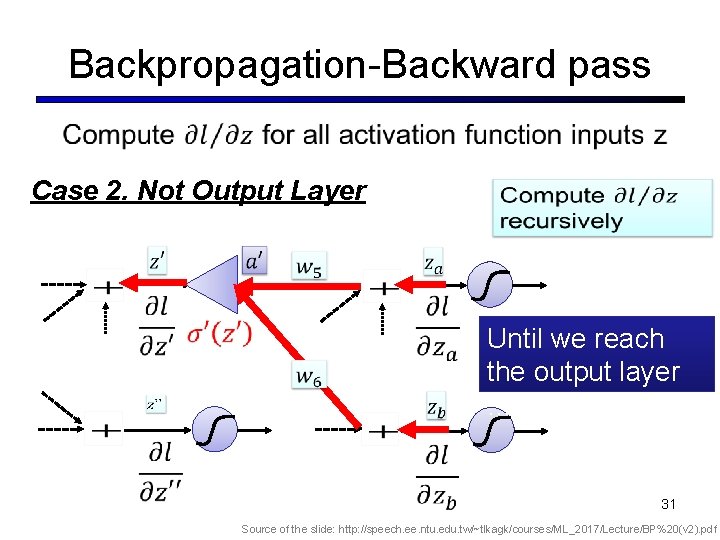

Backpropagation: Backward pass

Case 2. Not the output layer: apply the same step recursively to the next layer until we reach the output layer.

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

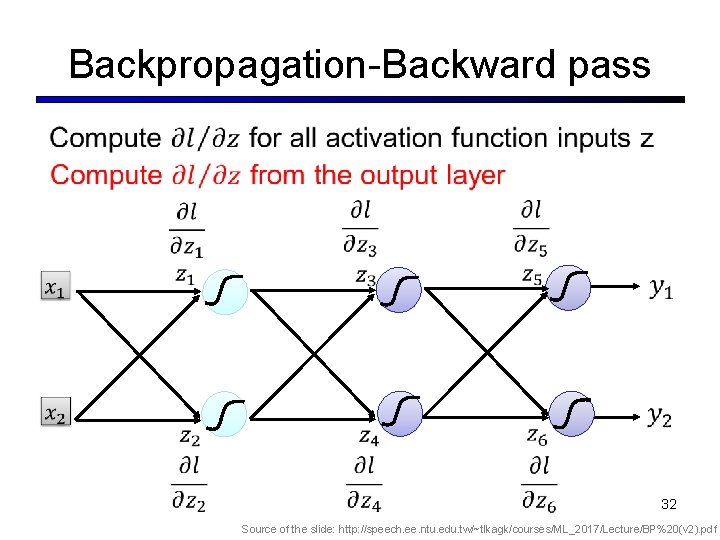

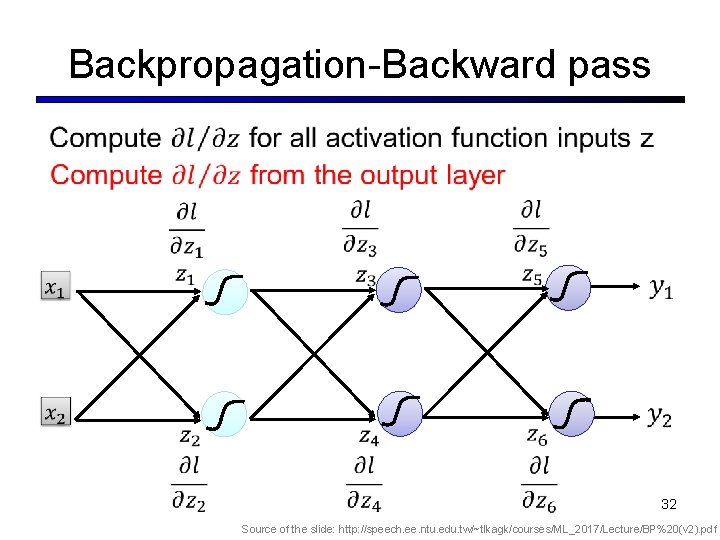

Backpropagation: Backward pass

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

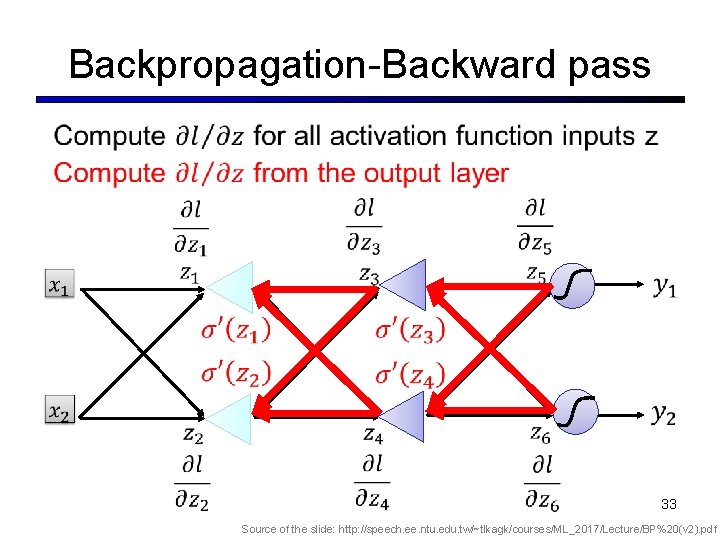

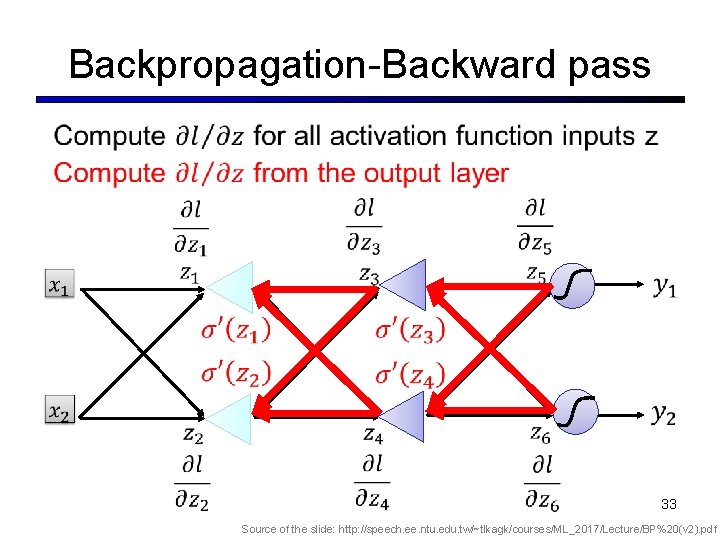

Backpropagation: Backward pass

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

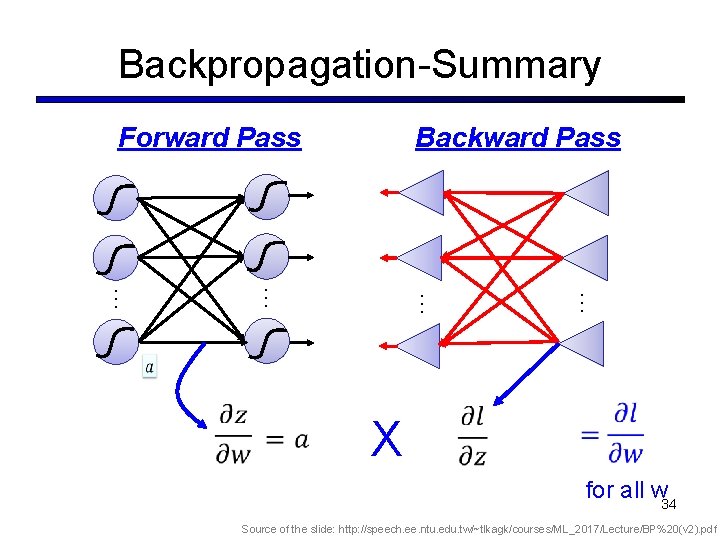

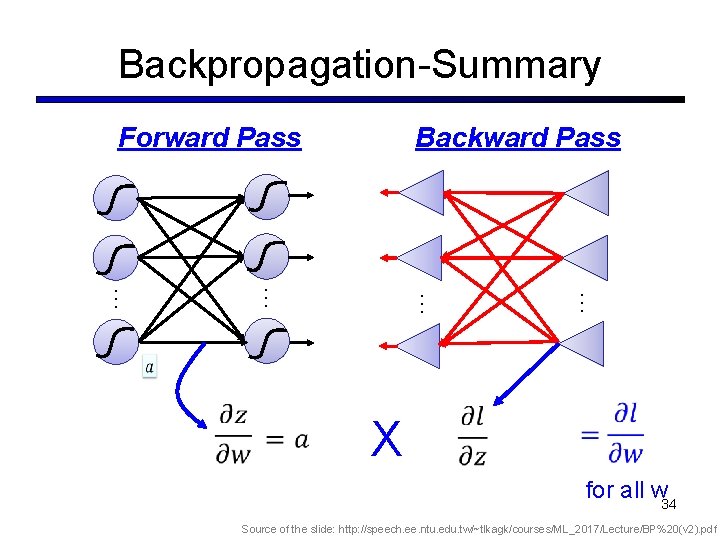

Backpropagation: Summary

For every weight w, ∂C/∂w is the product of two factors. The forward pass gives ∂z/∂w (the input connected by w), and the backward pass gives ∂C/∂z.

Source of the slide: http://speech.ee.ntu.edu.tw/~tlkagk/courses/ML_2017/Lecture/BP%20(v2).pdf

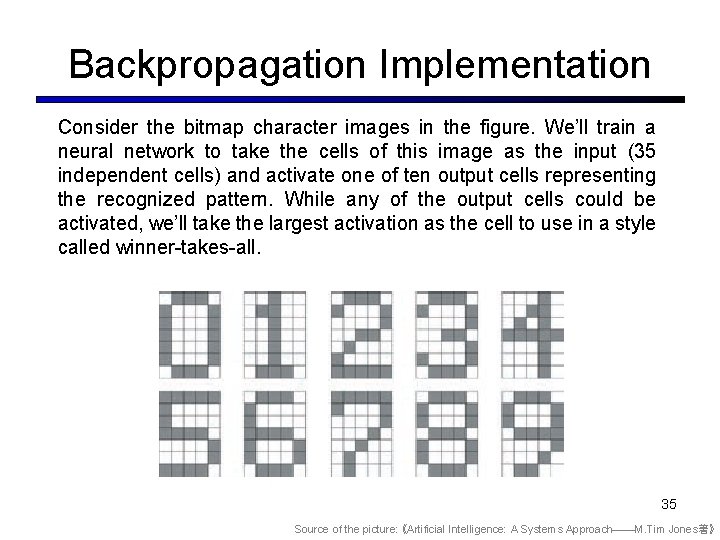

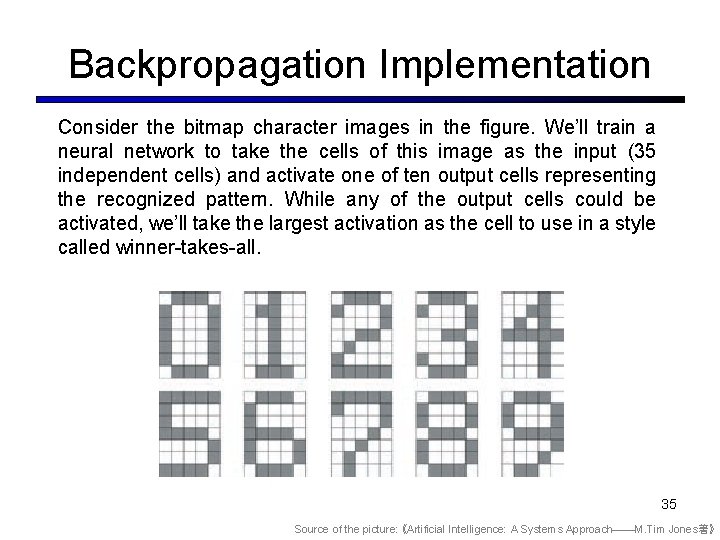

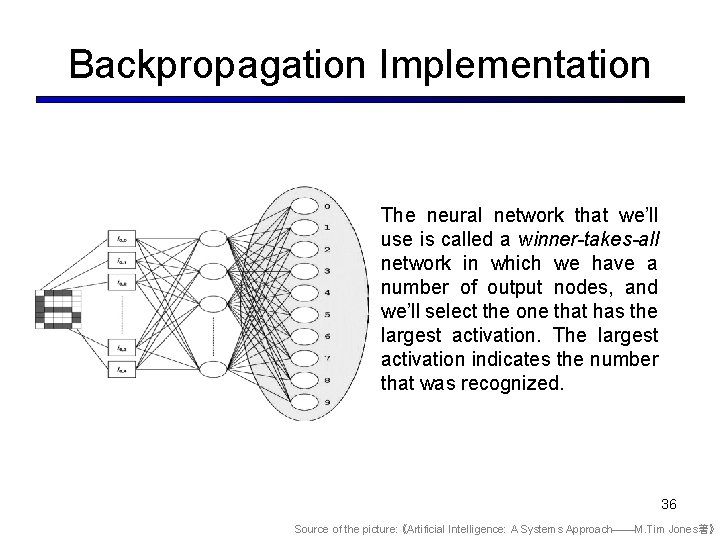

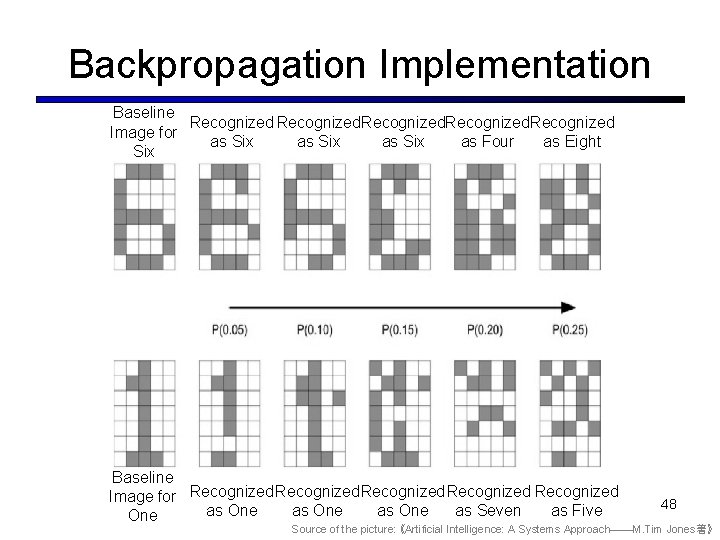

Backpropagation Implementation

Consider the bitmap character images in the figure. We'll train a neural network that takes the cells of each image as input (35 independent cells) and activates one of ten output cells representing the recognized pattern. Any of the output cells could be activated, but we take the one with the largest activation, a scheme called winner-takes-all.

Source of the picture: M. Tim Jones, Artificial Intelligence: A Systems Approach.

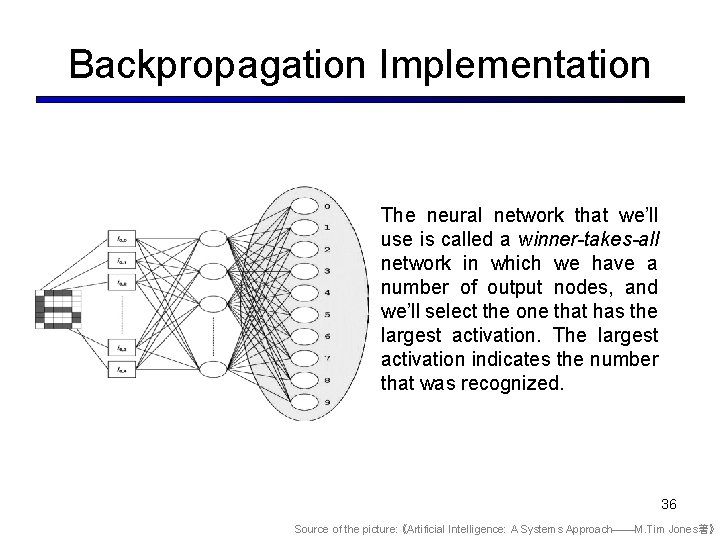

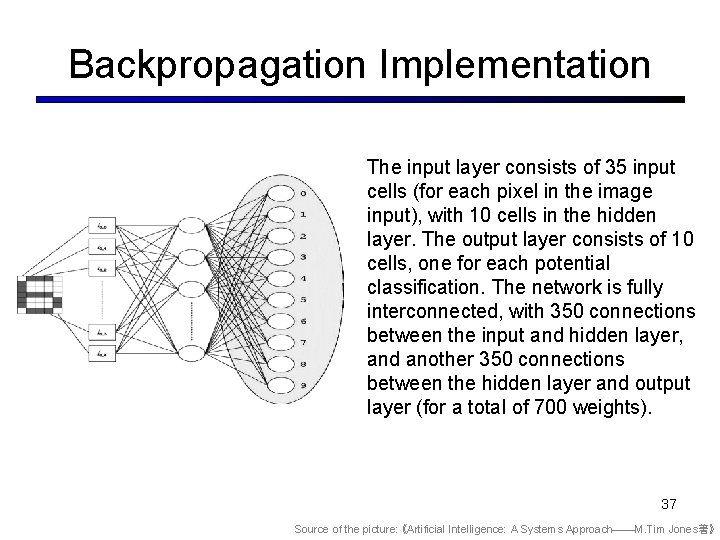

Backpropagation Implementation

The network we'll use is called a winner-takes-all network. It has several output nodes, and we select the one with the largest activation. That node indicates the digit that was recognized.

Source of the picture: M. Tim Jones, Artificial Intelligence: A Systems Approach.
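Winner-takes-all selection is an argmax over the output activations. The slides call a `classifier()` function in `main` but do not show its body. The sketch below is a hypothetical stand-in that takes the output array explicitly.

```c
/* Winner-takes-all: index of the output node with the largest activation.
   Ties go to the lowest index. Hypothetical stand-in for classifier(). */
static int winner_takes_all(const double *outputs, int n)
{
    int best = 0;
    for (int i = 1; i < n; i++)
        if (outputs[i] > outputs[best])
            best = i;
    return best;
}
```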

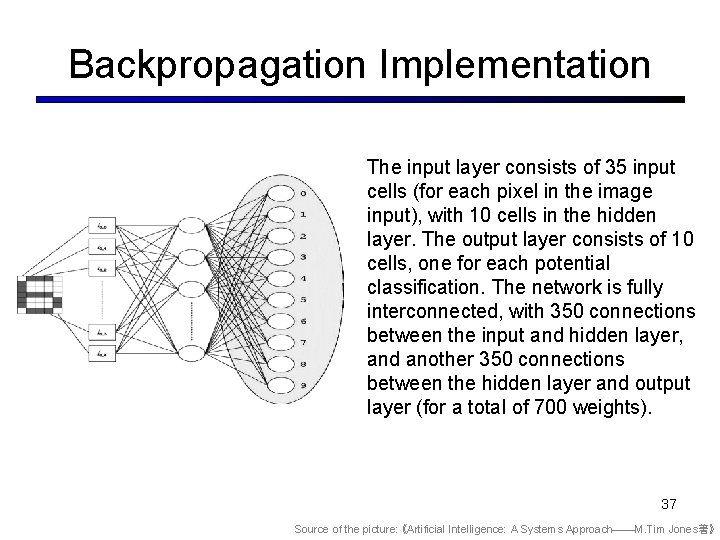

Backpropagation Implementation

The input layer consists of 35 input cells (one for each pixel of the input image), and the hidden layer has 10 cells. The output layer consists of 10 cells, one for each possible classification. The network is fully interconnected. There are 350 connections between the input and hidden layers and another 100 connections between the hidden and output layers. That makes 450 weights in total, or 470 when the bias weights are included.

Source of the picture: M. Tim Jones, Artificial Intelligence: A Systems Approach.

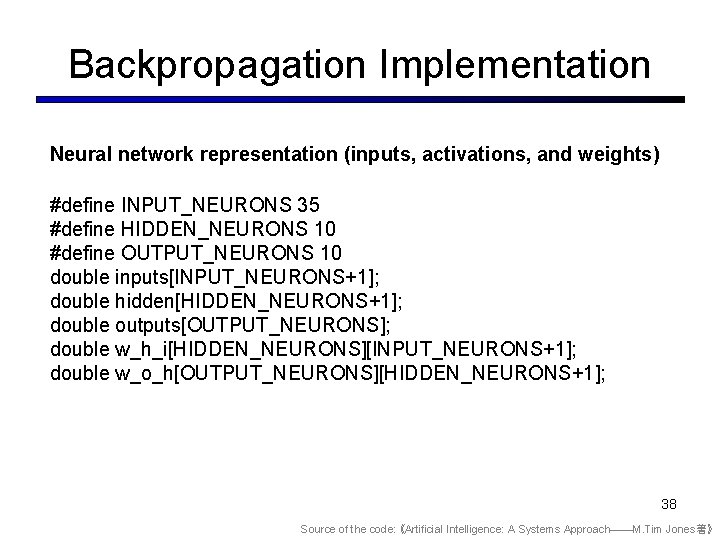

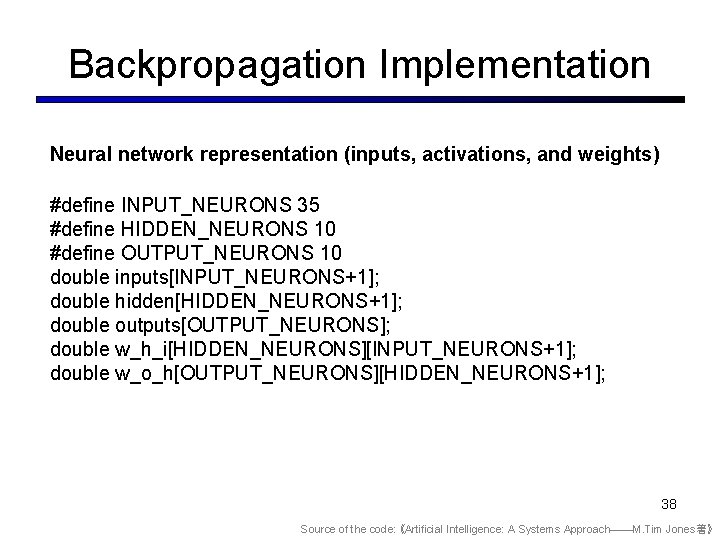

Backpropagation Implementation

Neural network representation (inputs, activations, and weights):

```c
#define INPUT_NEURONS   35
#define HIDDEN_NEURONS  10
#define OUTPUT_NEURONS  10

/* The extra (+1) element of inputs[] and hidden[] is the bias input,
   expected to be held at 1.0 (initialization not shown). */
double inputs[INPUT_NEURONS+1];
double hidden[HIDDEN_NEURONS+1];
double outputs[OUTPUT_NEURONS];

/* Weights: w_h_i[hidden][input] and w_o_h[output][hidden] */
double w_h_i[HIDDEN_NEURONS][INPUT_NEURONS+1];
double w_o_h[OUTPUT_NEURONS][HIDDEN_NEURONS+1];
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

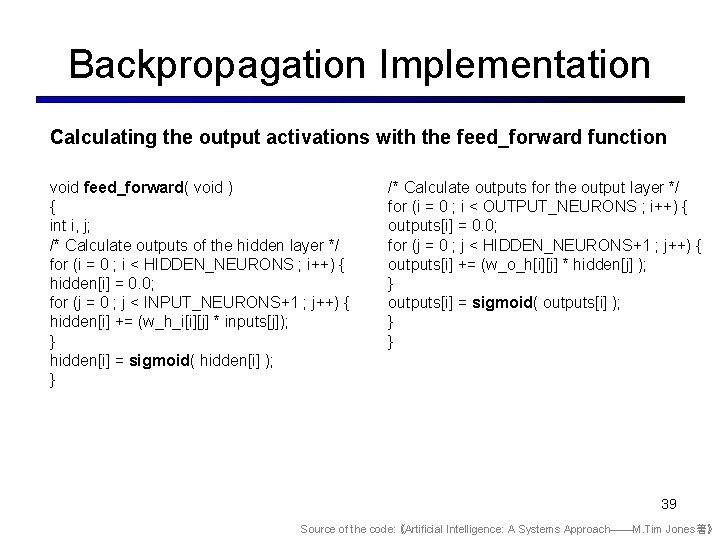

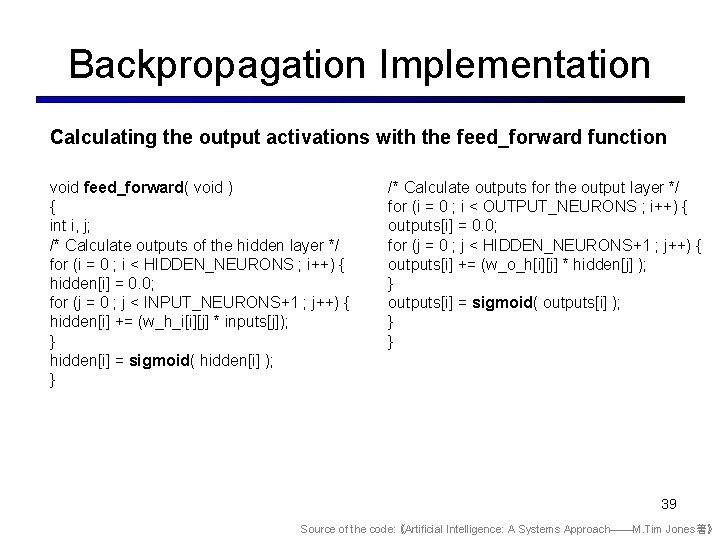

Backpropagation Implementation

Calculating the output activations with the feed_forward function:

```c
void feed_forward( void )
{
  int i, j;

  /* Calculate outputs of the hidden layer: weighted sum over all inputs
     (including the bias input), then the sigmoid */
  for (i = 0 ; i < HIDDEN_NEURONS ; i++) {
    hidden[i] = 0.0;
    for (j = 0 ; j < INPUT_NEURONS+1 ; j++) {
      hidden[i] += (w_h_i[i][j] * inputs[j]);
    }
    hidden[i] = sigmoid( hidden[i] );
  }

  /* Calculate outputs for the output layer
     (hidden[HIDDEN_NEURONS] serves as the bias input) */
  for (i = 0 ; i < OUTPUT_NEURONS ; i++) {
    outputs[i] = 0.0;
    for (j = 0 ; j < HIDDEN_NEURONS+1 ; j++) {
      outputs[i] += (w_o_h[i][j] * hidden[j] );
    }
    outputs[i] = sigmoid( outputs[i] );
  }
}
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

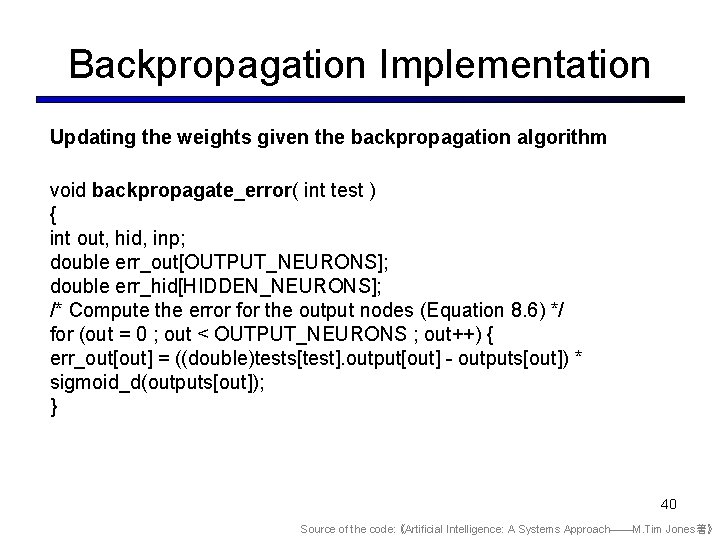

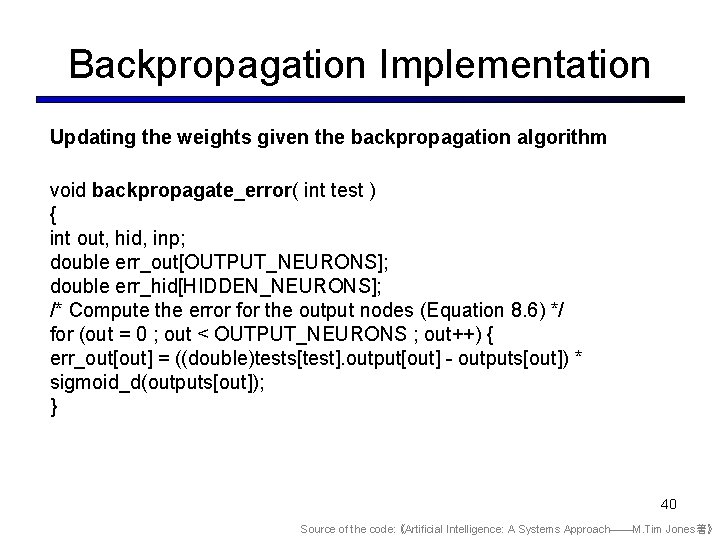

Backpropagation Implementation

Updating the weights with the backpropagation algorithm:

```c
void backpropagate_error( int test )
{
  int out, hid, inp;
  double err_out[OUTPUT_NEURONS];
  double err_hid[HIDDEN_NEURONS];

  /* Compute the error for the output nodes (Equation 8.6):
     (target - actual output) times the sigmoid derivative */
  for (out = 0 ; out < OUTPUT_NEURONS ; out++) {
    err_out[out] = ((double)tests[test].output[out] - outputs[out]) *
                     sigmoid_d(outputs[out]);
  }
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

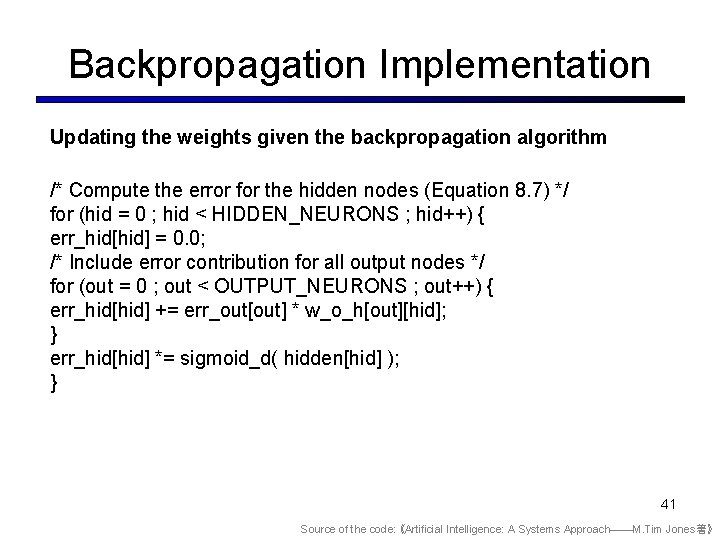

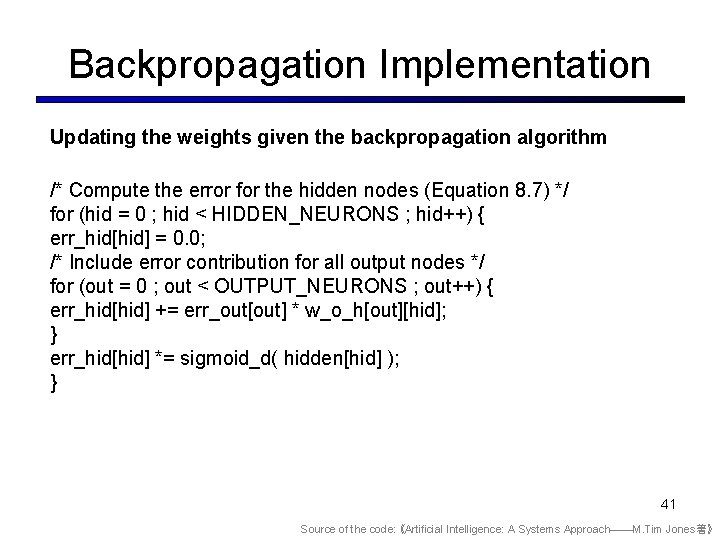

Backpropagation Implementation

Updating the weights with the backpropagation algorithm (continued):

```c
  /* Compute the error for the hidden nodes (Equation 8.7) */
  for (hid = 0 ; hid < HIDDEN_NEURONS ; hid++) {
    err_hid[hid] = 0.0;
    /* Include error contribution for all output nodes */
    for (out = 0 ; out < OUTPUT_NEURONS ; out++) {
      err_hid[hid] += err_out[out] * w_o_h[out][hid];
    }
    err_hid[hid] *= sigmoid_d( hidden[hid] );
  }
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

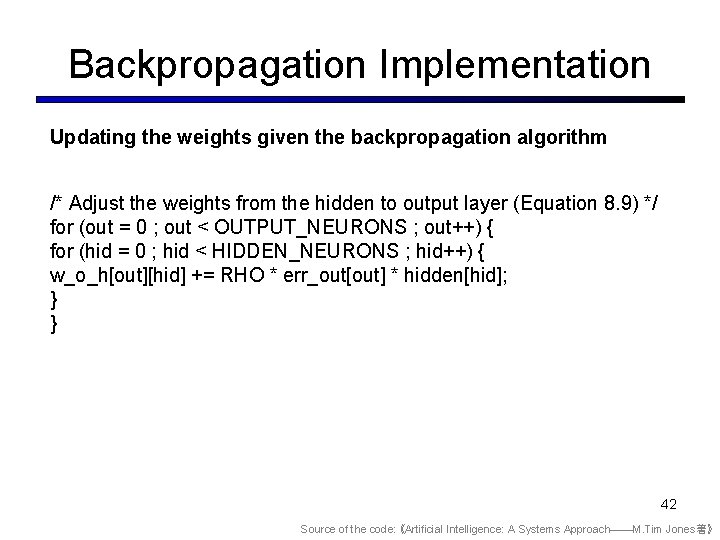

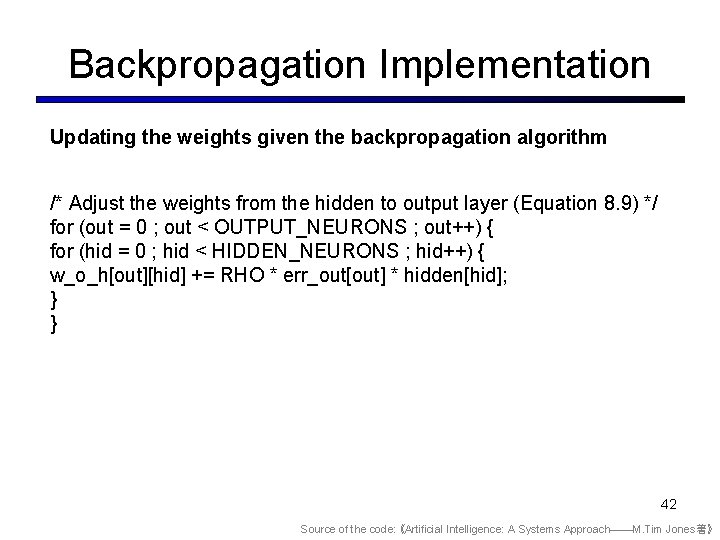

Backpropagation Implementation

Updating the weights with the backpropagation algorithm (continued):

```c
  /* Adjust the weights from the hidden to output layer (Equation 8.9).
     The loop runs to HIDDEN_NEURONS+1 so that the bias weight
     w_o_h[out][HIDDEN_NEURONS] is trained as well. */
  for (out = 0 ; out < OUTPUT_NEURONS ; out++) {
    for (hid = 0 ; hid < HIDDEN_NEURONS+1 ; hid++) {
      w_o_h[out][hid] += RHO * err_out[out] * hidden[hid];
    }
  }
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

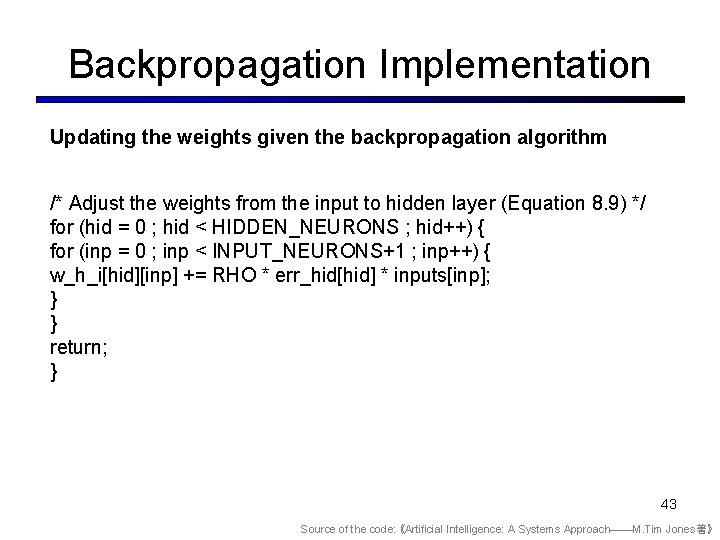

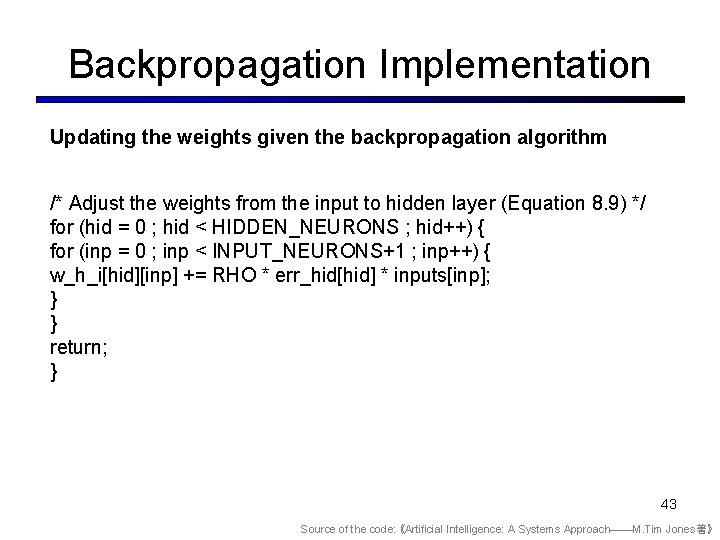

Backpropagation Implementation

Updating the weights with the backpropagation algorithm (continued):

```c
  /* Adjust the weights from the input to hidden layer (Equation 8.9) */
  for (hid = 0 ; hid < HIDDEN_NEURONS ; hid++) {
    for (inp = 0 ; inp < INPUT_NEURONS+1 ; inp++) {
      w_h_i[hid][inp] += RHO * err_hid[hid] * inputs[inp];
    }
  }

  return;
}
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

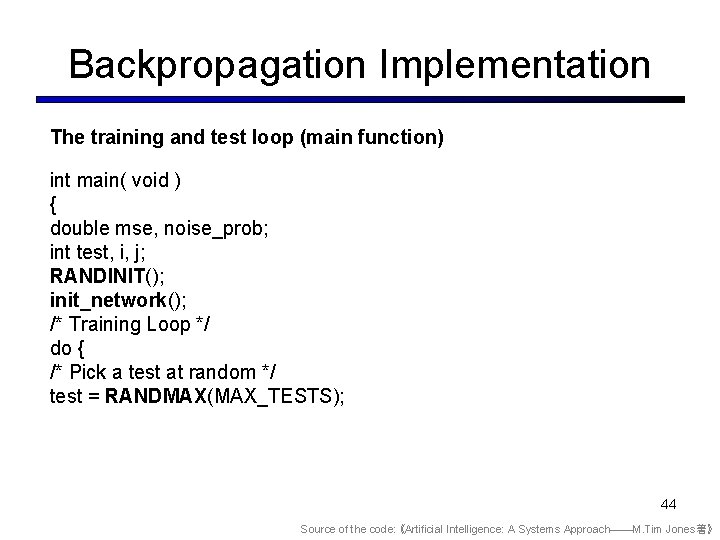

Backpropagation Implementation

The training and test loop (main function):

```c
int main( void )
{
  double mse, noise_prob;
  int test, i, j;

  RANDINIT();
  init_network();

  /* Training loop */
  do {
    /* Pick a test at random */
    test = RANDMAX(MAX_TESTS);
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

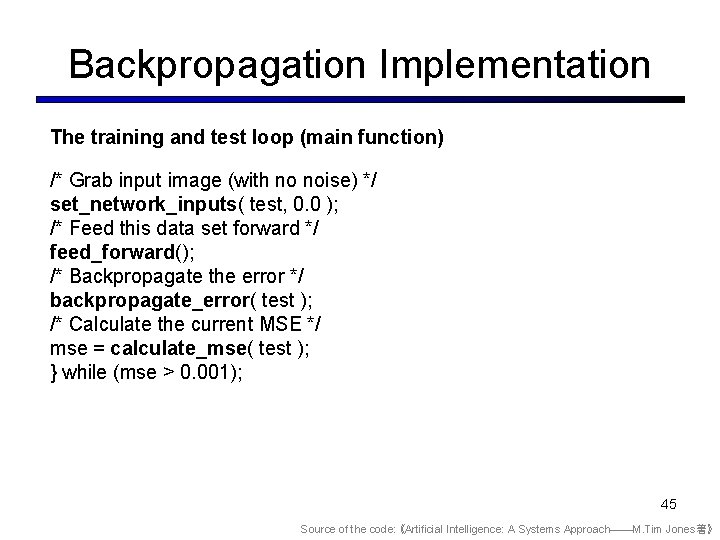

Backpropagation Implementation

The training and test loop (main function, continued):

```c
    /* Grab input image (with no noise) */
    set_network_inputs( test, 0.0 );

    /* Feed this data set forward */
    feed_forward();

    /* Backpropagate the error */
    backpropagate_error( test );

    /* Calculate the current MSE */
    mse = calculate_mse( test );

  } while (mse > 0.001);
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.

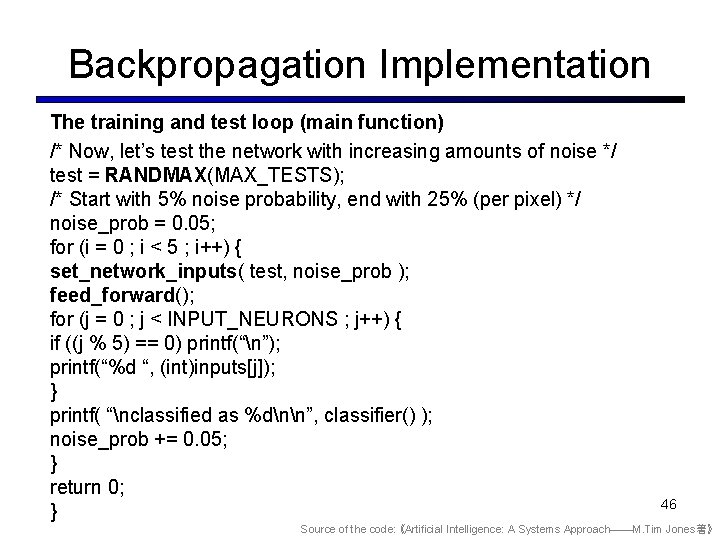

Backpropagation Implementation

The training and test loop (main function, continued):

```c
  /* Now, let's test the network with increasing amounts of noise */
  test = RANDMAX(MAX_TESTS);

  /* Start with 5% noise probability, end with 25% (per pixel) */
  noise_prob = 0.05;
  for (i = 0 ; i < 5 ; i++) {
    set_network_inputs( test, noise_prob );
    feed_forward();

    /* Print the (noisy) input image, five pixels per row */
    for (j = 0 ; j < INPUT_NEURONS ; j++) {
      if ((j % 5) == 0) printf("\n");
      printf("%d ", (int)inputs[j]);
    }
    printf( "\nclassified as %d\n\n", classifier() );

    noise_prob += 0.05;
  }

  return 0;
}
```

Source of the code: M. Tim Jones, Artificial Intelligence: A Systems Approach.
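The slides do not show how `set_network_inputs()` applies noise. One plausible scheme flips each binary pixel independently with probability `noise_prob`. The helper below is hypothetical and sketches that assumption using the C standard library's `rand()`.

```c
#include <stdlib.h>

/* Copy a binary image (pixels 0 or 1) and flip each pixel independently
   with probability noise_prob. Hypothetical helper, not from the book. */
static void add_noise(const int *in, int *out, int n, double noise_prob)
{
    for (int i = 0; i < n; i++) {
        double r = (double)rand() / ((double)RAND_MAX + 1.0);   /* r in [0, 1) */
        out[i] = (r < noise_prob) ? 1 - in[i] : in[i];
    }
}
```

At `noise_prob = 0.0` the image is copied unchanged, and at `1.0` every pixel is inverted. In between, the expected number of flipped pixels is `n * noise_prob`, for example about 7 of 35 pixels at 20%.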

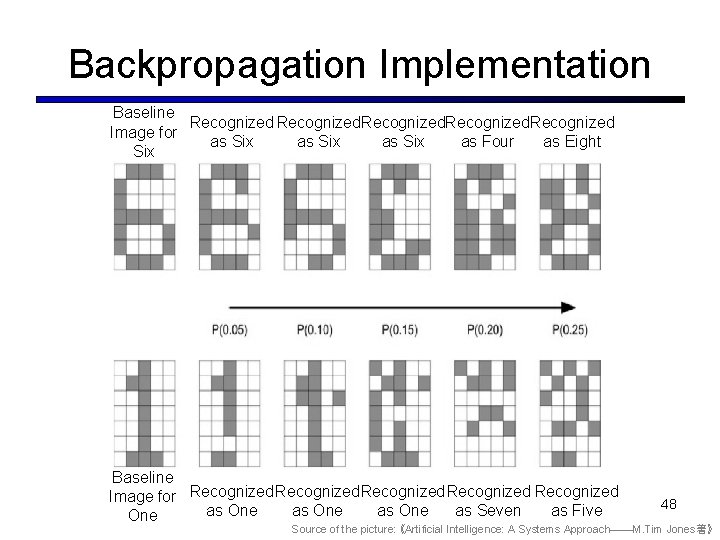

Backpropagation Implementation

The figure illustrates the generalization capability of the network trained with error backpropagation. In both cases, once the per-pixel noise rate reaches 20%, the image is no longer recognizable. The structure of main is a common pattern for training and using neural networks. Once a network has been trained, its weights can be saved and reused in the target application.

Source of the text: M. Tim Jones, Artificial Intelligence: A Systems Approach.

Backpropagation Implementation

(Figure: the baseline image for Six, with noisy versions recognized as Six, Four, and Eight; and the baseline image for One, with noisy versions recognized as One, Seven, and Five.)

Source of the picture: M. Tim Jones, Artificial Intelligence: A Systems Approach.

MNIST Based on Keras

Import the required libraries.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

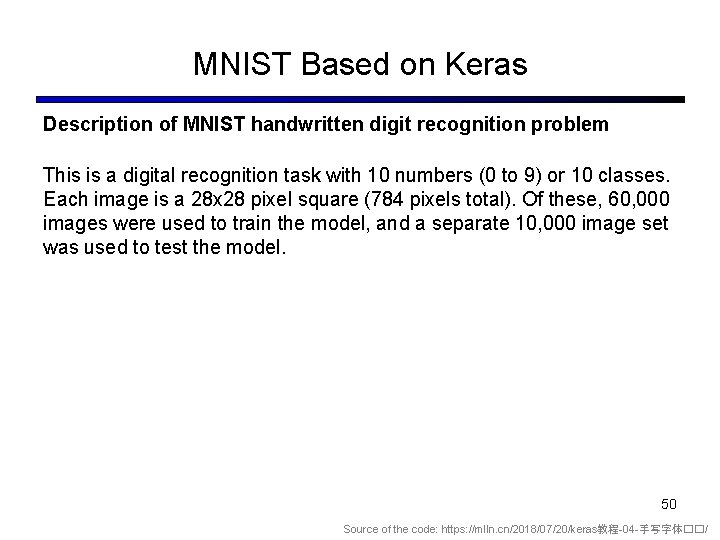

MNIST Based on Keras

Description of the MNIST handwritten digit recognition problem: this is a digit recognition task with 10 digits (0 to 9), that is, 10 classes. Each image is a 28 x 28 pixel square (784 pixels in total). 60,000 images are used to train the model, and a separate set of 10,000 images is used to test it.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

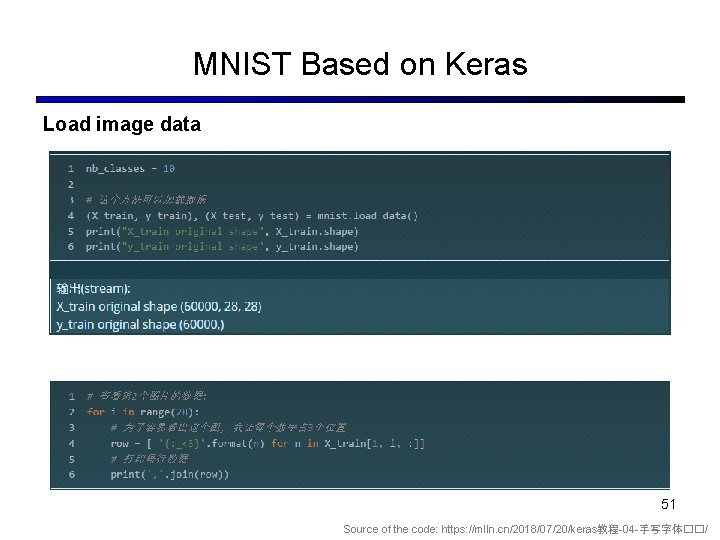

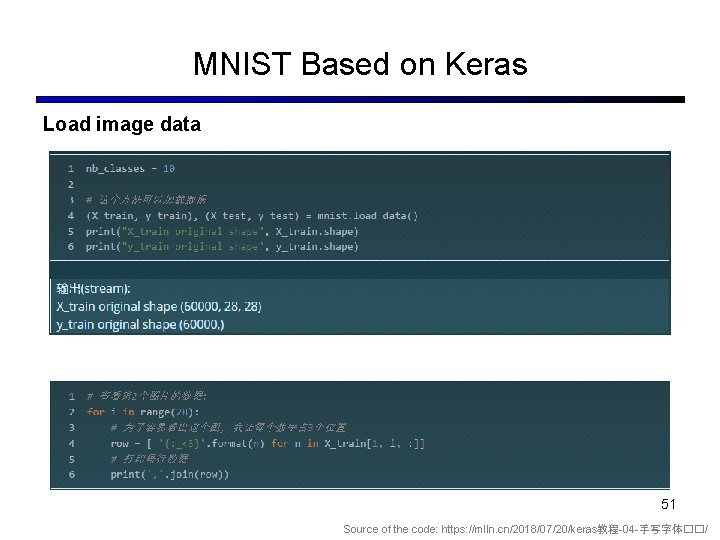

MNIST Based on Keras

Load the image data.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

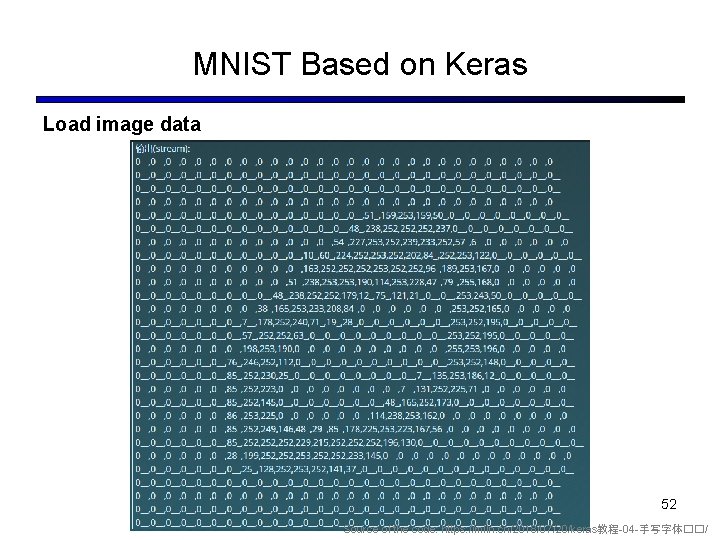

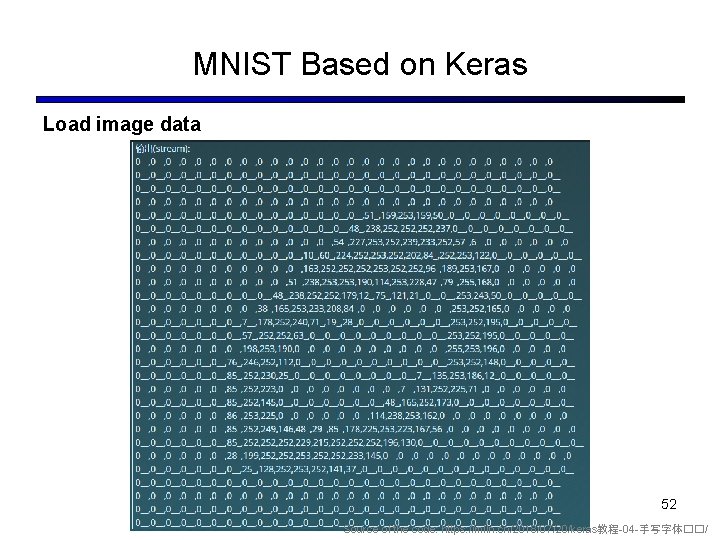

MNIST Based on Keras

Load the image data.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

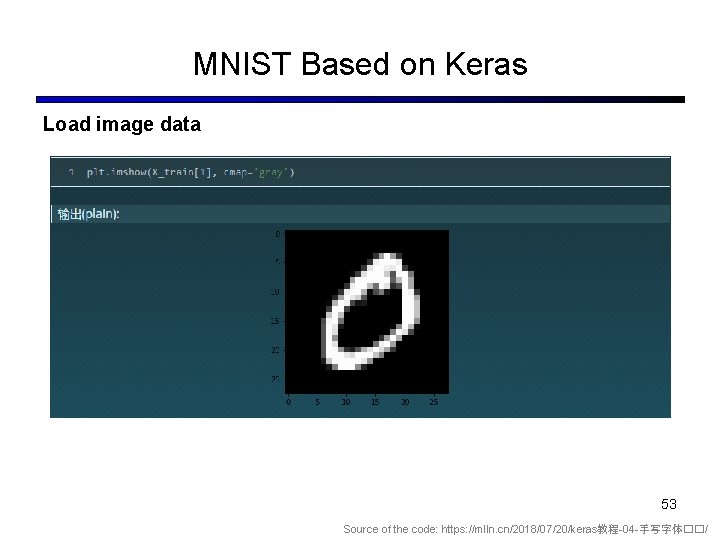

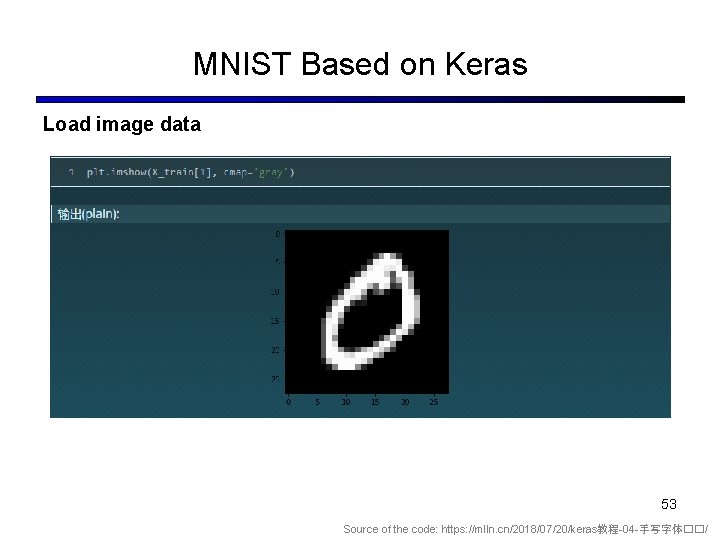

MNIST Based on Keras

Load the image data.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

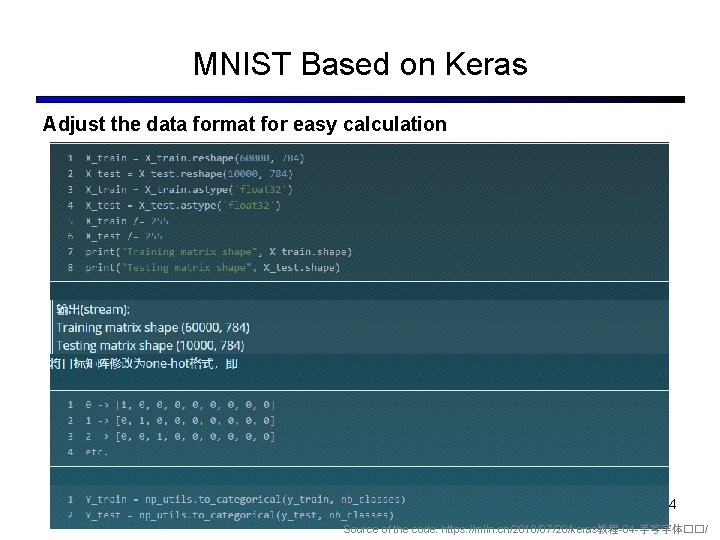

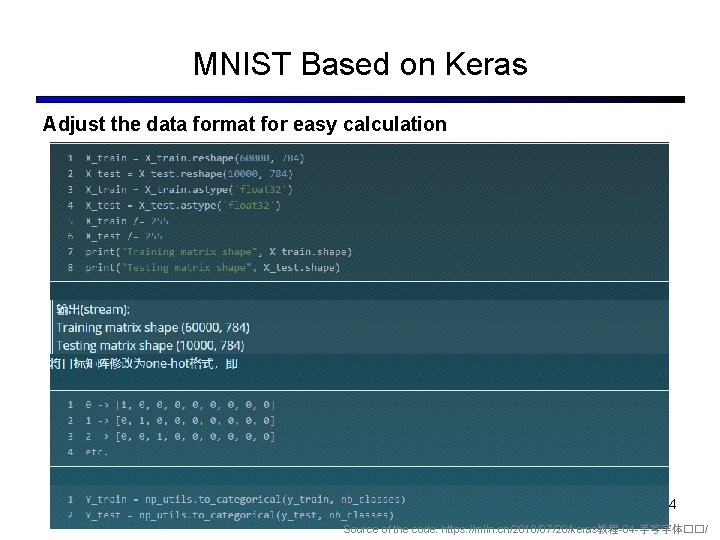

MNIST Based on Keras

Adjust the data format to simplify computation.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/
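A common MNIST preprocessing step has three parts: flatten each 28 x 28 image into a 784-element vector, scale pixel values from 0..255 into [0, 1], and one-hot encode the labels. Whether the Keras tutorial does exactly this is an assumption, since its code appears only in the screenshots. The C sketch below keeps to the lecture's implementation language, and its function names are hypothetical.

```c
#define IMG_ROWS  28
#define IMG_COLS  28
#define N_PIXELS  (IMG_ROWS * IMG_COLS)   /* 784 */
#define N_CLASSES 10

/* Flatten a 28 x 28 grayscale image (0..255) row by row into a 784-element
   vector scaled to [0, 1]. */
static void flatten_and_scale(unsigned char img[IMG_ROWS][IMG_COLS],
                              float out[N_PIXELS])
{
    for (int r = 0; r < IMG_ROWS; r++)
        for (int c = 0; c < IMG_COLS; c++)
            out[r * IMG_COLS + c] = img[r][c] / 255.0f;
}

/* One-hot encode a digit label 0..9 into a 10-element target vector. */
static void one_hot(int label, float out[N_CLASSES])
{
    for (int k = 0; k < N_CLASSES; k++)
        out[k] = (k == label) ? 1.0f : 0.0f;
}
```

The one-hot targets match a 10-node output layer, one node per digit, just as in the winner-takes-all network earlier in the lecture.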

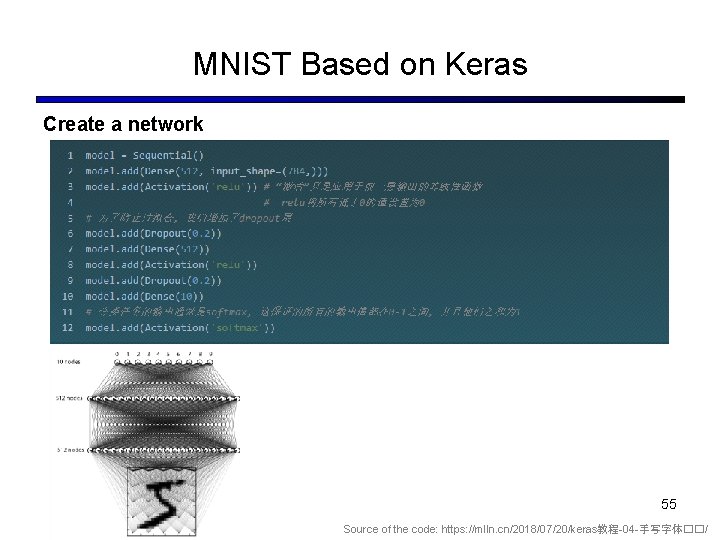

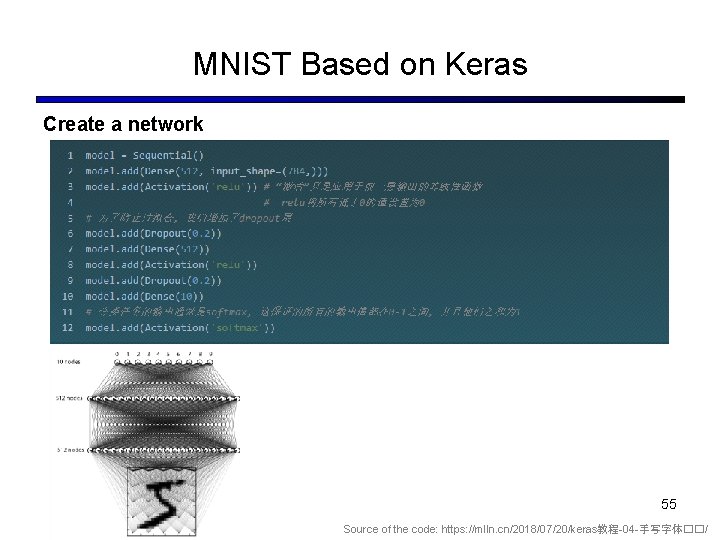

MNIST Based on Keras

Create the network.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

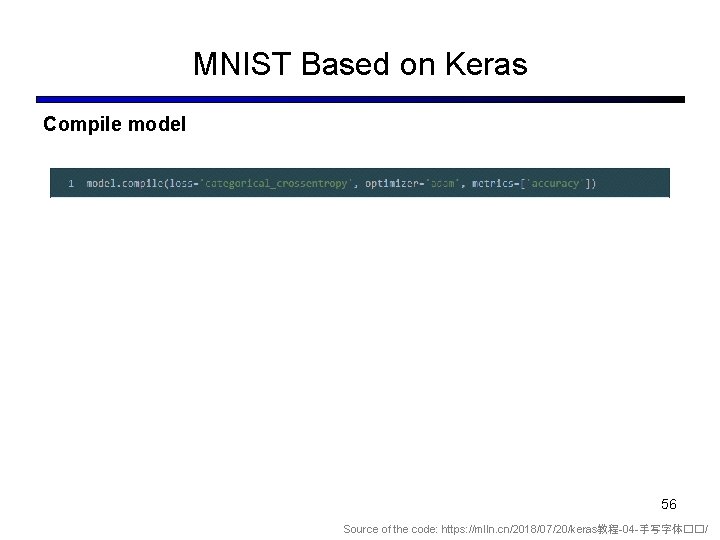

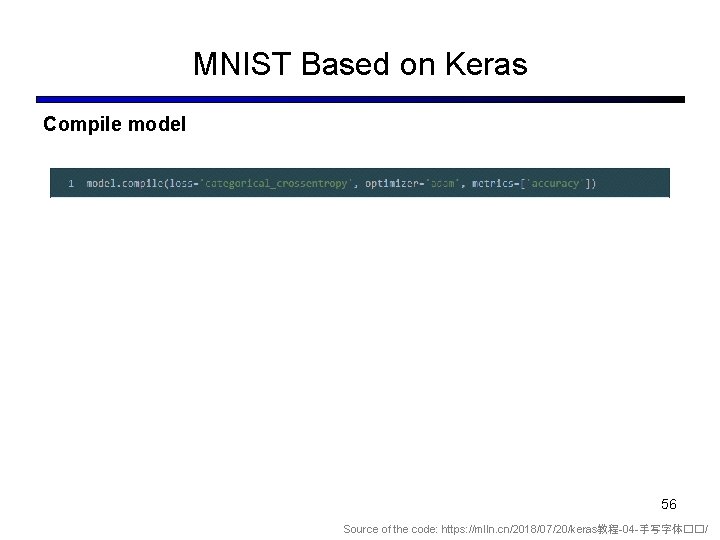

MNIST Based on Keras

Compile the model.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

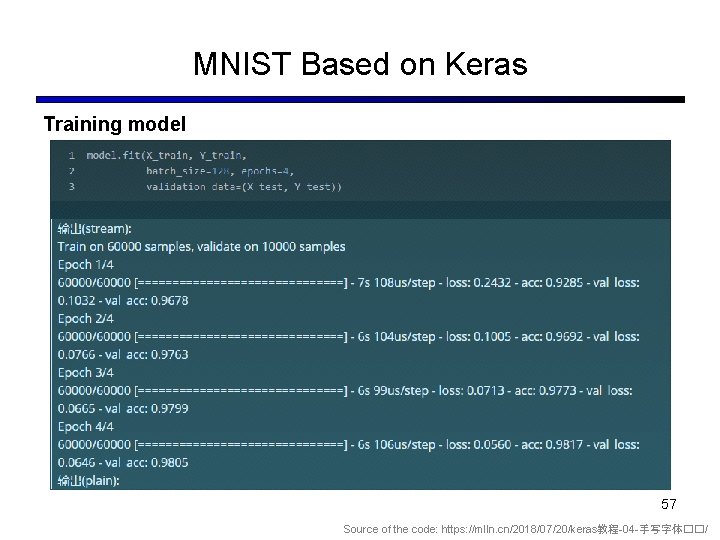

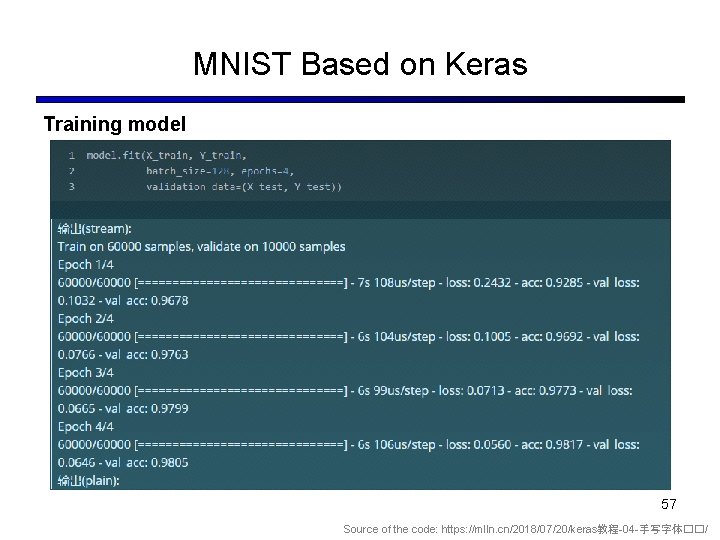

MNIST Based on Keras

Train the model.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

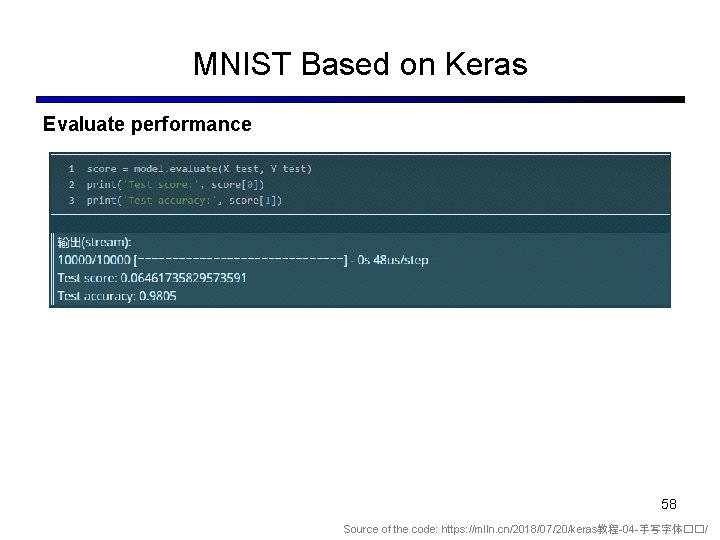

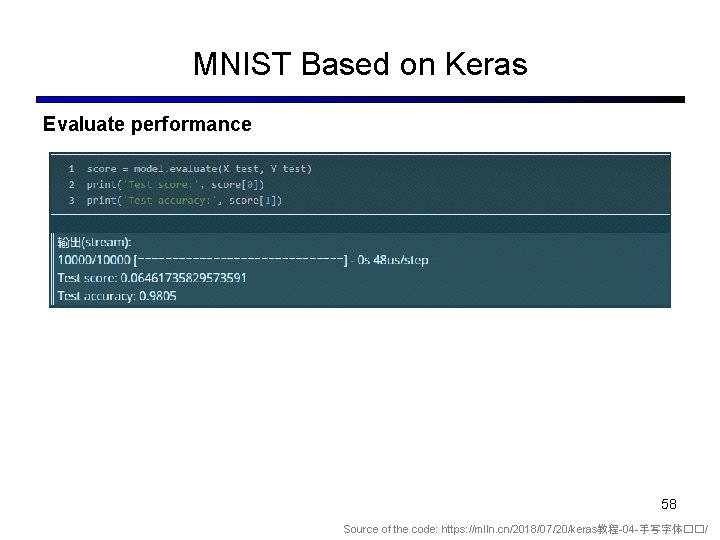

MNIST Based on Keras

Evaluate performance.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

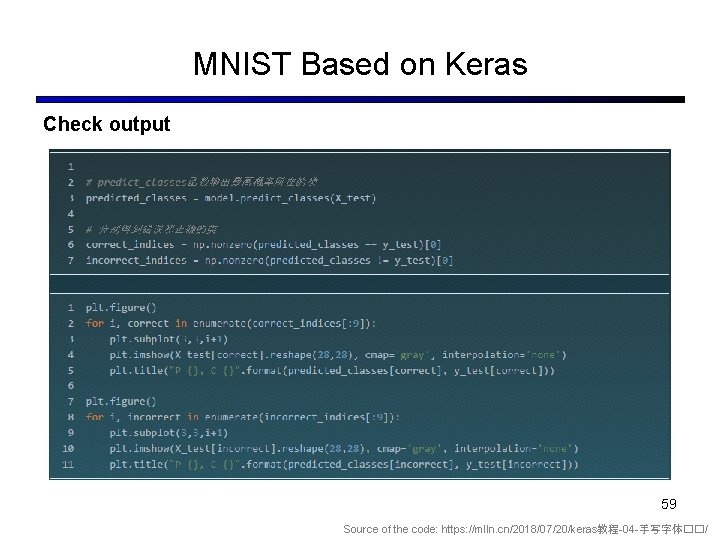

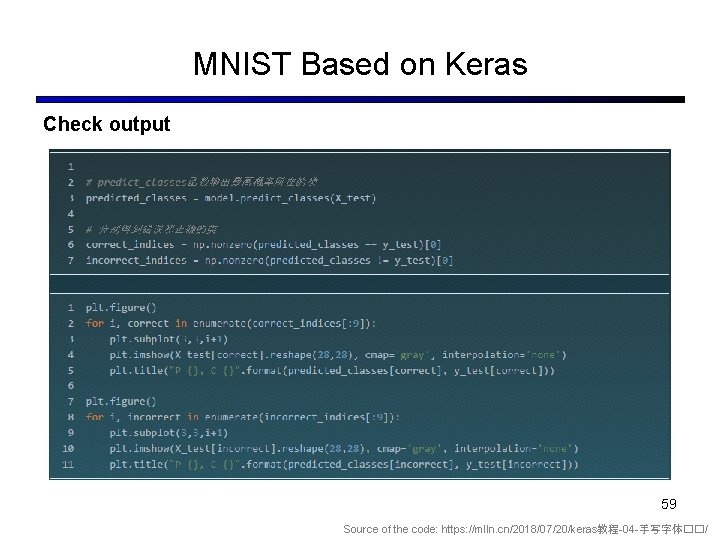

MNIST Based on Keras

Check the output.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/

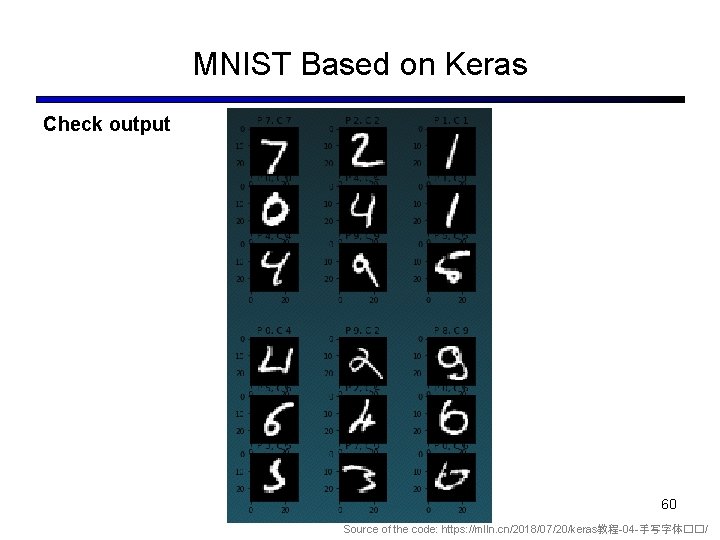

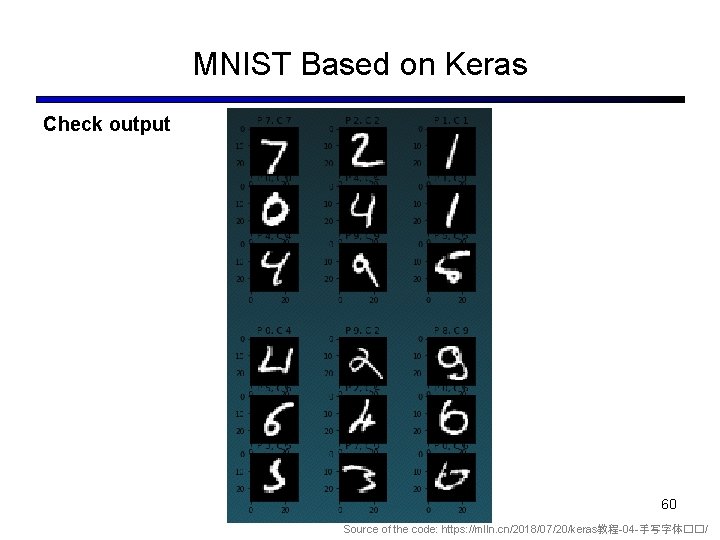

MNIST Based on Keras

Check the output.

Source of the code: https://mlln.cn/2018/07/20/keras教程-04-手写字体识别/