Adopting OpenCAPI for High Bandwidth Database Accelerators

Authors: Jian Fang (1), Yvo T. B. Mulder (1), Kangli Huang (1), Yang Qiao (1), Xianwei Zeng (1), Jan Hidders (2), Jinho Lee (3), H. Peter Hofstee (1, 3)
(1) TU Delft, (2) Vrije Universiteit Brussel, (3) IBM Research
H2RC @ SC'17, Denver, USA
Speaker: Jian Fang (j.fang-1@tudelft.nl)
November 17th, 2017

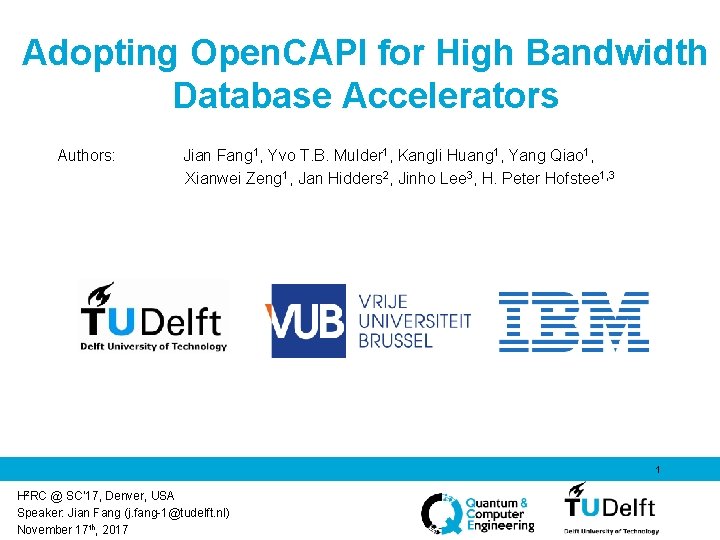

Netezza Data Appliance Architecture
• Netezza
Source: The Netezza data appliance architecture: A platform for high performance data warehousing and analytics

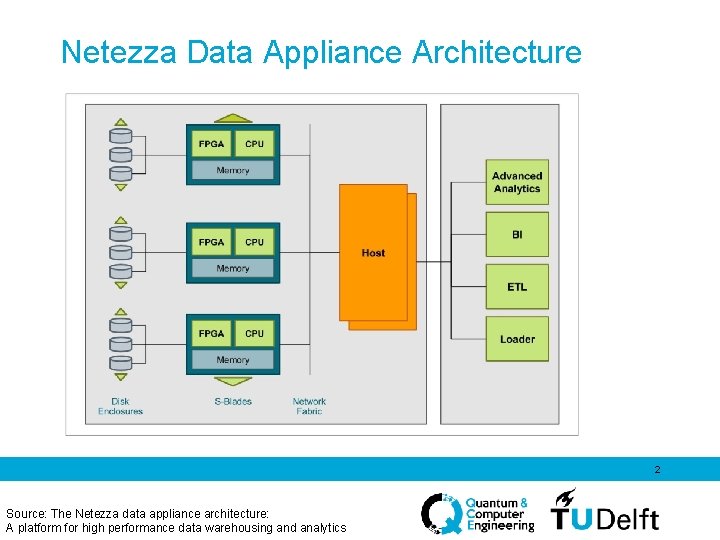

S-Blade in Netezza
Figure (labels: Compressed Data, Filtered, Decompressed Data)
Source: The Netezza data appliance architecture: A platform for high performance data warehousing and analytics

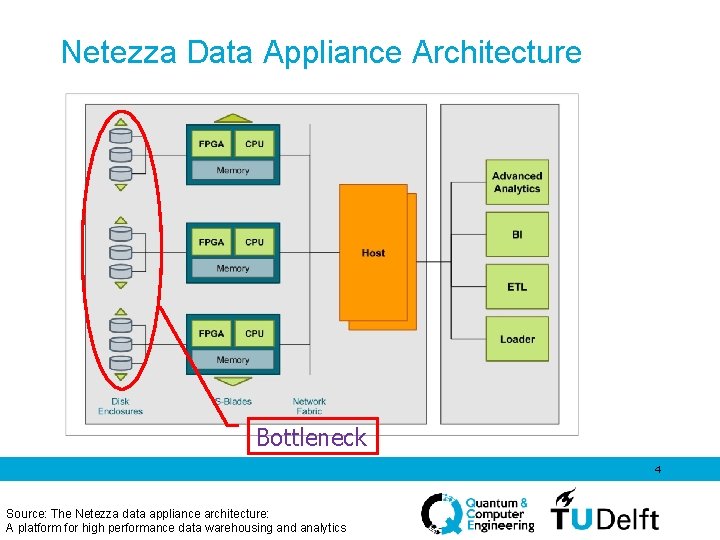

Netezza Data Appliance Architecture
• Netezza bottleneck
Source: The Netezza data appliance architecture: A platform for high performance data warehousing and analytics

DB with FPGAs: What is new?
• Databases move from disk to memory
• Databases move from disk to flash
Can FPGAs still help? Through faster data movement.
Source: https://www.datanami.com/2015/10/21/neo4j-touts-10x-performance-boost-of-graphs-on-ibm-power-fpgas/

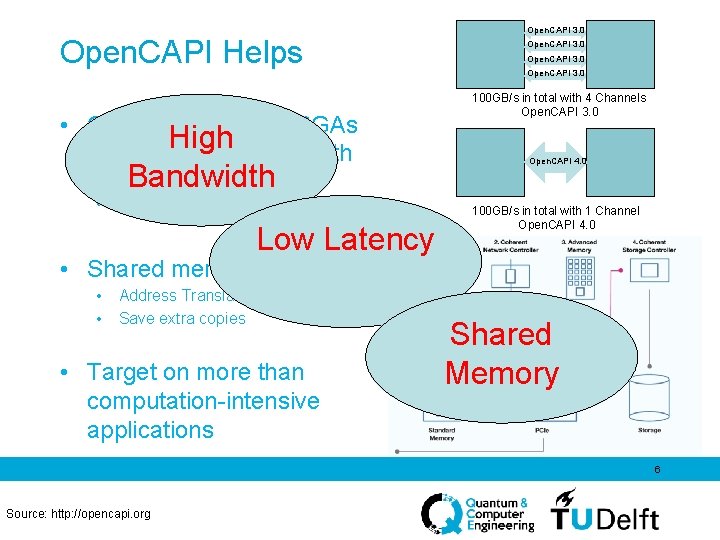

OpenCAPI Helps
• OpenCAPI brings FPGAs high, memory-scale bandwidth
  • Bandwidth: OpenCAPI 3.0 (x8) -> 25 GB/s; OpenCAPI 4.0 (x32) -> 100 GB/s
  • Low latency
• Shared memory
  • Address translation
  • Saves extra copies
• Targets more than computation-intensive applications
Figure: OpenCAPI 3.0 reaches 100 GB/s in total with 4 channels; OpenCAPI 4.0 reaches 100 GB/s in total with 1 channel; shared memory.
Source: http://opencapi.org
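The bandwidth numbers on this slide follow from lane count times per-lane rate. A minimal sketch, assuming a 25 Gb/s signalling rate per lane and ignoring protocol overhead (neither is stated on the slide); `engines_needed` and its per-engine rate are hypothetical, included only to show how link bandwidth translates into the number of parallel accelerator engines a design must provide.

```python
import math

# Assumption: 25 Gb/s signalling rate per lane, ignoring encoding/protocol overhead.
LANE_RATE_GBIT_S = 25


def link_bandwidth_gb_s(lanes: int, lane_rate_gbit_s: float = LANE_RATE_GBIT_S) -> float:
    """Raw bandwidth of one link in GB/s (8 bits per byte)."""
    return lanes * lane_rate_gbit_s / 8


def aggregate_bandwidth_gb_s(channels: int, lanes_per_channel: int) -> float:
    """Total bandwidth when several links (channels) are used in parallel."""
    return channels * link_bandwidth_gb_s(lanes_per_channel)


def engines_needed(target_gb_s: float, engine_gb_s: float) -> int:
    """Hypothetical helper: engines required so their combined rate meets the link rate."""
    return math.ceil(target_gb_s / engine_gb_s)


if __name__ == "__main__":
    print(link_bandwidth_gb_s(8))           # OpenCAPI 3.0, x8 -> 25.0
    print(link_bandwidth_gb_s(32))          # OpenCAPI 4.0, x32 -> 100.0
    print(aggregate_bandwidth_gb_s(4, 8))   # 4 channels of OpenCAPI 3.0 -> 100.0
    print(aggregate_bandwidth_gb_s(1, 32))  # 1 channel of OpenCAPI 4.0 -> 100.0
```

For example, if one engine sustained a hypothetical 2 GB/s, `engines_needed(25.0, 2.0)` returns 13, which is the kind of count that drives the "more, simpler engines" trade-off.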

Accelerating DBs with OpenCAPI
• Decompress-Filter
• Hash-Join
• Merge-Sorter

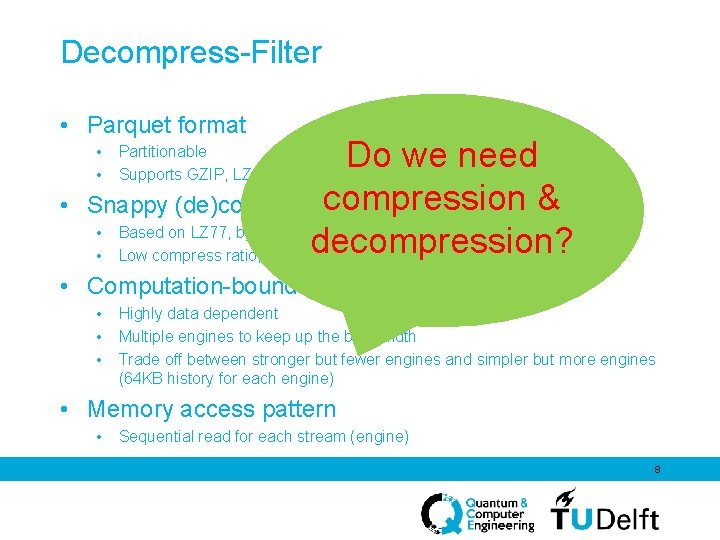

Decompress-Filter
Do we need decompression?
• Parquet format & Snappy (de)compression algorithm
  • Parquet: partitionable; supports GZIP, LZO, Snappy, ...
  • Snappy: based on LZ77, byte-oriented; low compression ratio, but fast (de)compression speed
• Computation-bound
  • Highly data dependent
  • Multiple engines are needed to keep up with the bandwidth
  • Trade-off between fewer, stronger engines and more, simpler engines (64 KB history for each engine)
• Memory access pattern
  • Sequential read for each stream (engine)
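To illustrate why Snappy decompression is data dependent, here is a minimal, unoptimized Python sketch of a decoder for the raw Snappy block format (a varint length preamble followed by literal and back-reference copy elements). It is written from the public format description, not taken from the authors' hardware. Element boundaries are only known after the previous tag is decoded, and each copy reads bytes the decoder produced earlier, so a single stream is inherently serial; parallelism comes from running many engines on independent streams, each with its own history buffer. The 64 KB history limit is not enforced here, and the function names are hypothetical.

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a little-endian base-128 varint; return (value, next_position)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if byte < 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7


def snappy_decompress(buf: bytes) -> bytes:
    """Decode one raw Snappy block (no framing format, no checksums)."""
    expected_len, pos = read_varint(buf, 0)
    out = bytearray()  # the output doubles as the LZ77 history window
    while pos < len(buf):
        tag = buf[pos]
        pos += 1
        kind = tag & 0x03
        if kind == 0:  # literal: copy bytes straight from the input
            length = tag >> 2
            if length >= 60:  # 60..63: length-1 is stored in the next 1..4 bytes
                nbytes = length - 59
                length = int.from_bytes(buf[pos:pos + nbytes], "little")
                pos += nbytes
            length += 1
            out += buf[pos:pos + length]
            pos += length
            continue
        if kind == 1:  # copy, length 4..11, 11-bit offset
            length = 4 + ((tag >> 2) & 0x07)
            offset = ((tag >> 5) << 8) | buf[pos]
            pos += 1
        elif kind == 2:  # copy, length 1..64, 2-byte offset
            length = (tag >> 2) + 1
            offset = int.from_bytes(buf[pos:pos + 2], "little")
            pos += 2
        else:  # copy, length 1..64, 4-byte offset
            length = (tag >> 2) + 1
            offset = int.from_bytes(buf[pos:pos + 4], "little")
            pos += 4
        if offset == 0 or offset > len(out):
            raise ValueError("copy offset points outside the history")
        start = len(out) - offset
        for i in range(length):  # byte by byte: a copy may overlap its own output
            out.append(out[start + i])
    if len(out) != expected_len:
        raise ValueError("decoded length does not match the preamble")
    return bytes(out)


if __name__ == "__main__":
    # Preamble 12, literal "abcd", then copy 8 bytes from offset 4.
    block = bytes([0x0C, 0x0C]) + b"abcd" + bytes([0x11, 0x04])
    print(snappy_decompress(block))  # b'abcdabcdabcd'
```

The overlapping copy in the usage example (8 bytes from only 4 bytes back) is exactly the case that forces sequential, byte-granular handling inside one engine.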

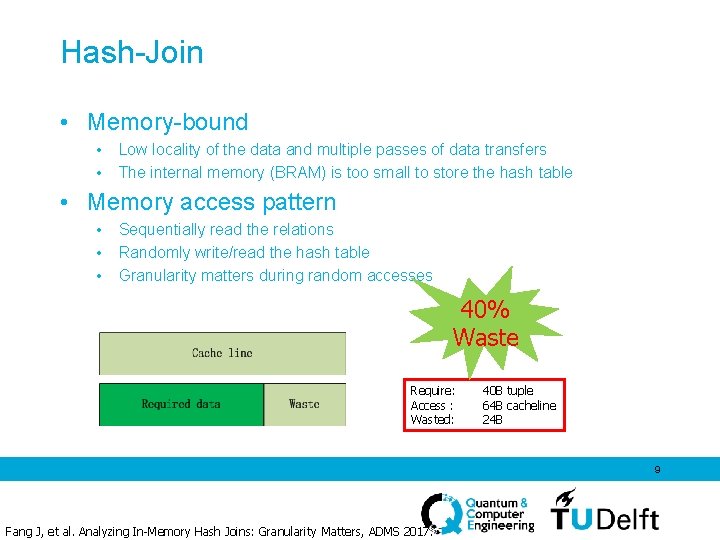

Hash-Join
• Memory-bound
  • Low data locality and multiple passes of data transfers
  • The internal memory (BRAM) is too small to store the hash table
• Memory access pattern
  • Sequentially read the relations
  • Randomly write/read the hash table
  • Granularity matters during random accesses: a 40 B tuple is required, but a 64 B cacheline is accessed, so 24 B is wasted (24/64 = 37.5%, roughly 40%)
Fang J, et al. Analyzing In-Memory Hash Joins: Granularity Matters, ADMS 2017.
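The granularity argument can be made concrete with a small sketch. It assumes every tuple starts on an access boundary (a misaligned 40 B tuple can straddle two cachelines and waste even more) and pairs that arithmetic with a textbook in-memory hash join, only to mark where the sequential and random accesses happen. This is a software illustration, not the FPGA design, and all names are hypothetical.

```python
import math
from collections import defaultdict


def bytes_fetched(tuple_bytes: int, access_bytes: int) -> int:
    """Bytes moved for one random access, assuming the tuple is access-aligned."""
    return math.ceil(tuple_bytes / access_bytes) * access_bytes


def wasted_fraction(tuple_bytes: int, access_bytes: int) -> float:
    """Share of the fetched bytes that the join never uses."""
    fetched = bytes_fetched(tuple_bytes, access_bytes)
    return (fetched - tuple_bytes) / fetched


def hash_join(build, probe, build_key=0, probe_key=0):
    """Equi-join two lists of tuples with a build phase and a probe phase."""
    table = defaultdict(list)
    for row in build:  # sequential scan of the build relation
        table[row[build_key]].append(row)  # random write into the hash table
    result = []
    for row in probe:  # sequential scan of the probe relation
        for match in table.get(row[probe_key], []):  # random read of the hash table
            result.append(match + row)
    return result


if __name__ == "__main__":
    print(bytes_fetched(40, 64) - 40)  # 24 bytes wasted per access
    print(wasted_fraction(40, 64))     # 0.375
    r = [(1, "a"), (2, "b")]
    s = [(2, "x"), (3, "y"), (1, "z")]
    print(hash_join(r, s))  # [(2, 'b', 2, 'x'), (1, 'a', 1, 'z')]
```

In a hardware hash join every probe in the second loop becomes an independent memory request, so the per-access waste computed above is paid once per tuple rather than amortized over a sequential burst.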

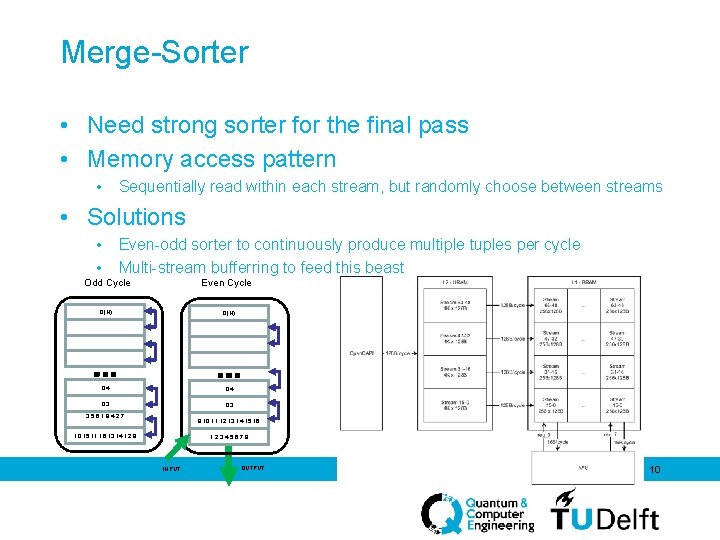

Merge-Sorter
• A strong sorter is needed for the final pass
• Memory access pattern
  • Sequential reads within each stream, but a random choice between streams
• Solutions
  • An even-odd sorter that continuously produces multiple tuples per cycle
  • Multi-stream buffering to feed this beast
Figure (odd and even cycles; input queues Q3, Q4, ..., Q(N)): input 3, 5, 6, 1, 8, 4, 2, 7 and 10, 15, 11, 16, 13, 14, 12, 9; output 1, 2, 3, 4, 5, 6, 7, 8 and 9, 10, 11, 12, 13, 14, 15, 16.
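The two ideas on this slide can be sketched in software. The slide does not say which even-odd network is used; this sketch assumes odd-even transposition sort, whose alternating even and odd phases of independent compare-exchanges resemble the odd/even cycles in the figure, and applies it to one 8-tuple batch from the figure. The second function models the memory access pattern: each stream is consumed sequentially, but which stream is read next depends on the data, which is why per-stream buffering is needed. Function names are hypothetical.

```python
import heapq


def odd_even_transposition_sort(batch):
    """Sort a small batch with alternating even and odd compare-exchange phases.

    The pairs within one phase are disjoint, so hardware can compare them all in
    parallel; n phases are sufficient to sort n elements.
    """
    v = list(batch)
    n = len(v)
    for phase in range(n):
        start = phase % 2  # even phase: (0,1), (2,3), ...; odd phase: (1,2), (3,4), ...
        for i in range(start, n - 1, 2):
            if v[i] > v[i + 1]:
                v[i], v[i + 1] = v[i + 1], v[i]
    return v


def merge_streams(streams):
    """k-way merge of sorted streams; also records which stream fed each output."""
    heap = [(s[0], sid, 0) for sid, s in enumerate(streams) if s]
    heapq.heapify(heap)
    merged, order = [], []
    while heap:
        value, sid, idx = heapq.heappop(heap)
        merged.append(value)
        order.append(sid)  # data-dependent choice of the next stream to read
        if idx + 1 < len(streams[sid]):  # advance sequentially within that stream
            heapq.heappush(heap, (streams[sid][idx + 1], sid, idx + 1))
    return merged, order


if __name__ == "__main__":
    print(odd_even_transposition_sort([3, 5, 6, 1, 8, 4, 2, 7]))
    # [1, 2, 3, 4, 5, 6, 7, 8]
    print(merge_streams([[1, 4, 7], [2, 5, 8], [3, 6, 9]]))
    # ([1, 2, 3, 4, 5, 6, 7, 8, 9], [0, 1, 2, 0, 1, 2, 0, 1, 2])
```

The `order` list shows the access pattern the slide describes: within each stream the reads are sequential, but the merger hops between streams from one output to the next.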

Summary
• Databases have, and need, faster data movement
• With OpenCAPI, FPGAs can help DBs more
• Challenges of high-bandwidth accelerator design
• Three examples: Decompress-Filter, Hash-Join, Merge-Sorter

Authors
• Jian Fang, TU Delft
• Yvo T. B. Mulder, TU Delft
• Kangli Huang, TU Delft
• Yang Qiao, TU Delft
• Xianwei Zeng, TU Delft
• Jan Hidders, Vrije Universiteit Brussel
• Jinho Lee, IBM Research
• H. Peter Hofstee, TU Delft & IBM Research

Thank You
More details:
• Progress with Power Systems and CAPI: https://ibm.ent.box.com/v/OpenPOWERWorkshopMicro50/file/239719608792
• Leveraging the bandwidth of OpenCAPI with reconfigurable logic: https://indico-jsc.fz-juelich.de/event/55/other-view?view=standard
Contact me: j.fang-1@tudelft.nl

- Slides: 13